Deep Feature Migration for Real-Time Mapping of Urban Street Shading Coverage Index Based on Street-Level Panorama Images

Abstract

1. Introduction

2. Materials and Methods

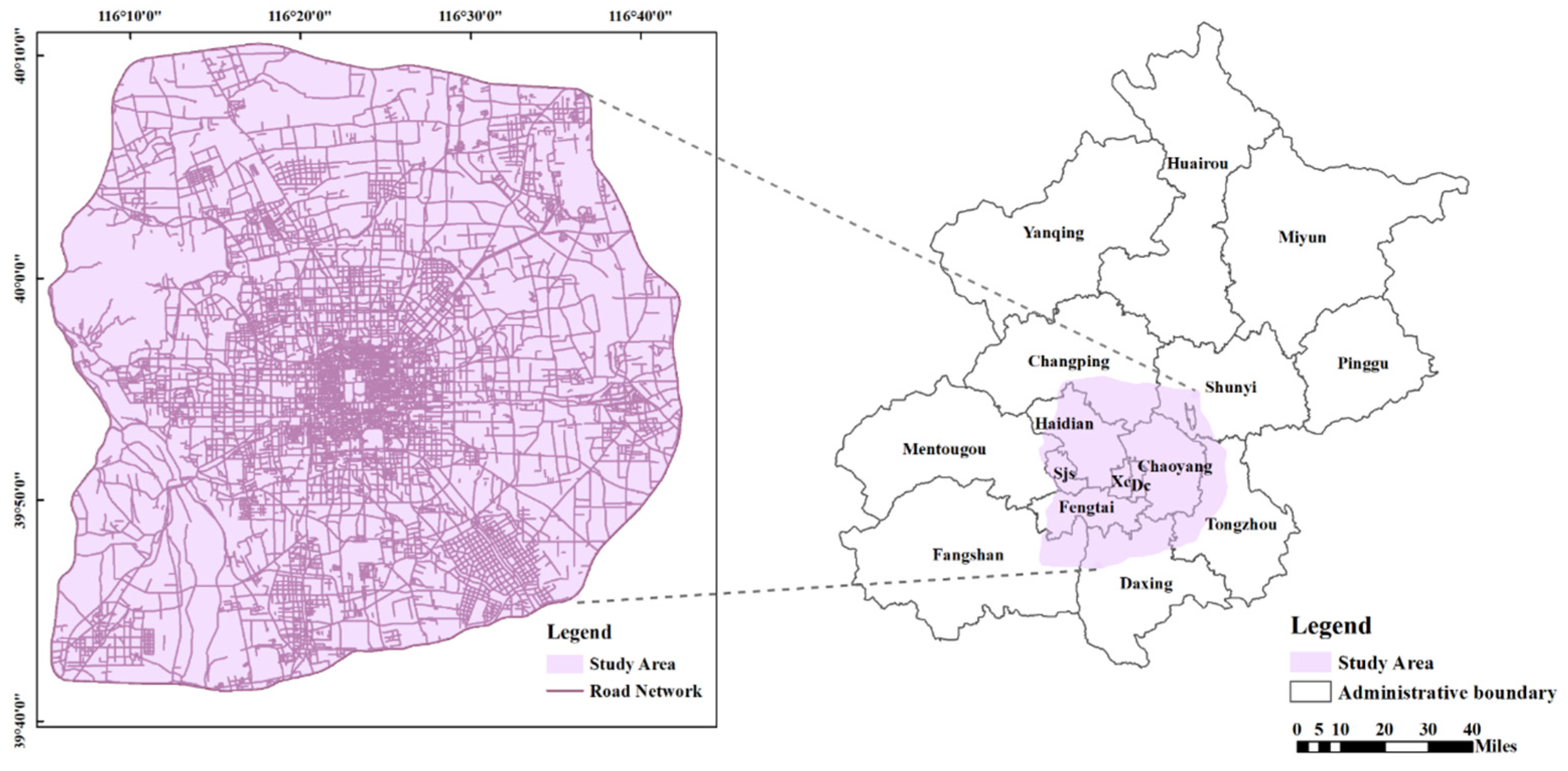

2.1. Study Area

2.2. Data Source

2.3. Methods

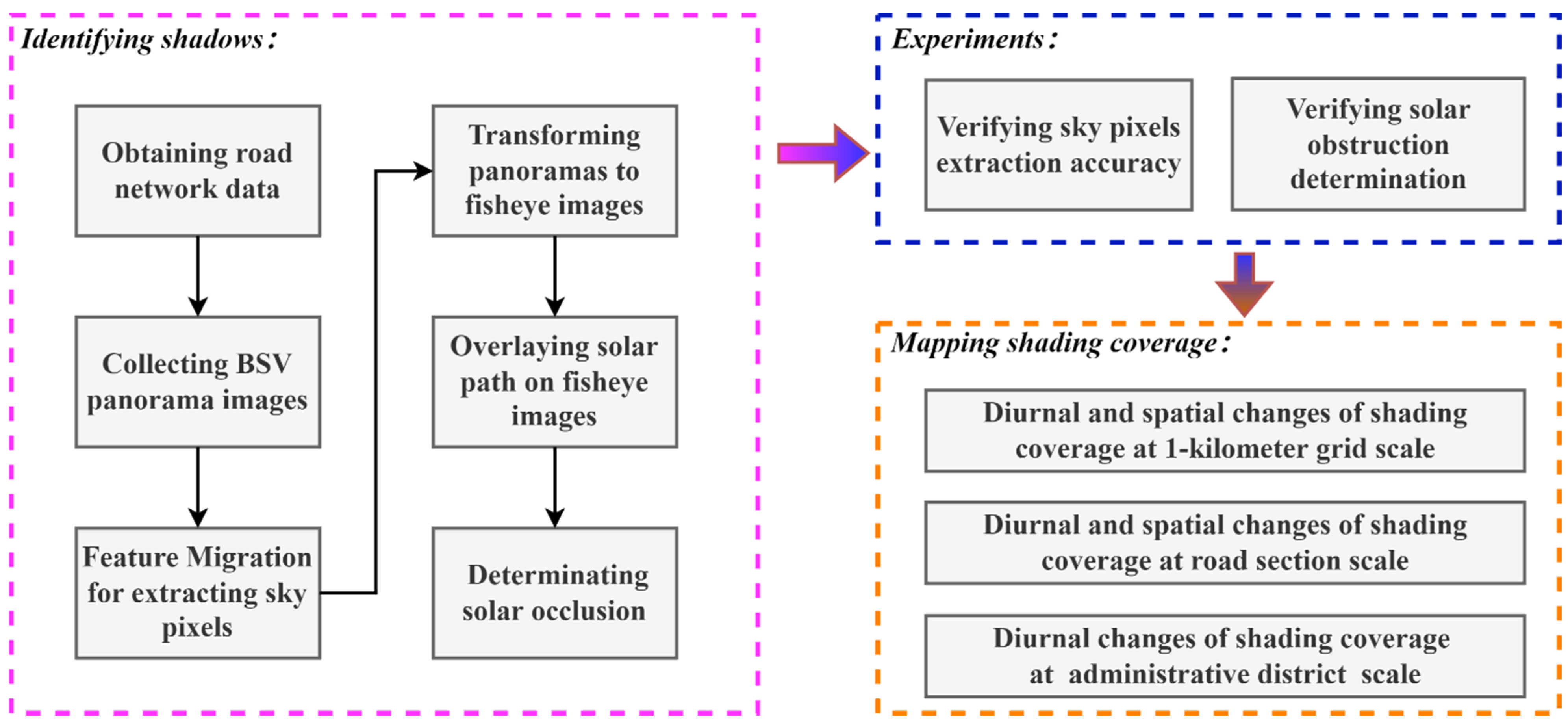

2.3.1. Research Framework

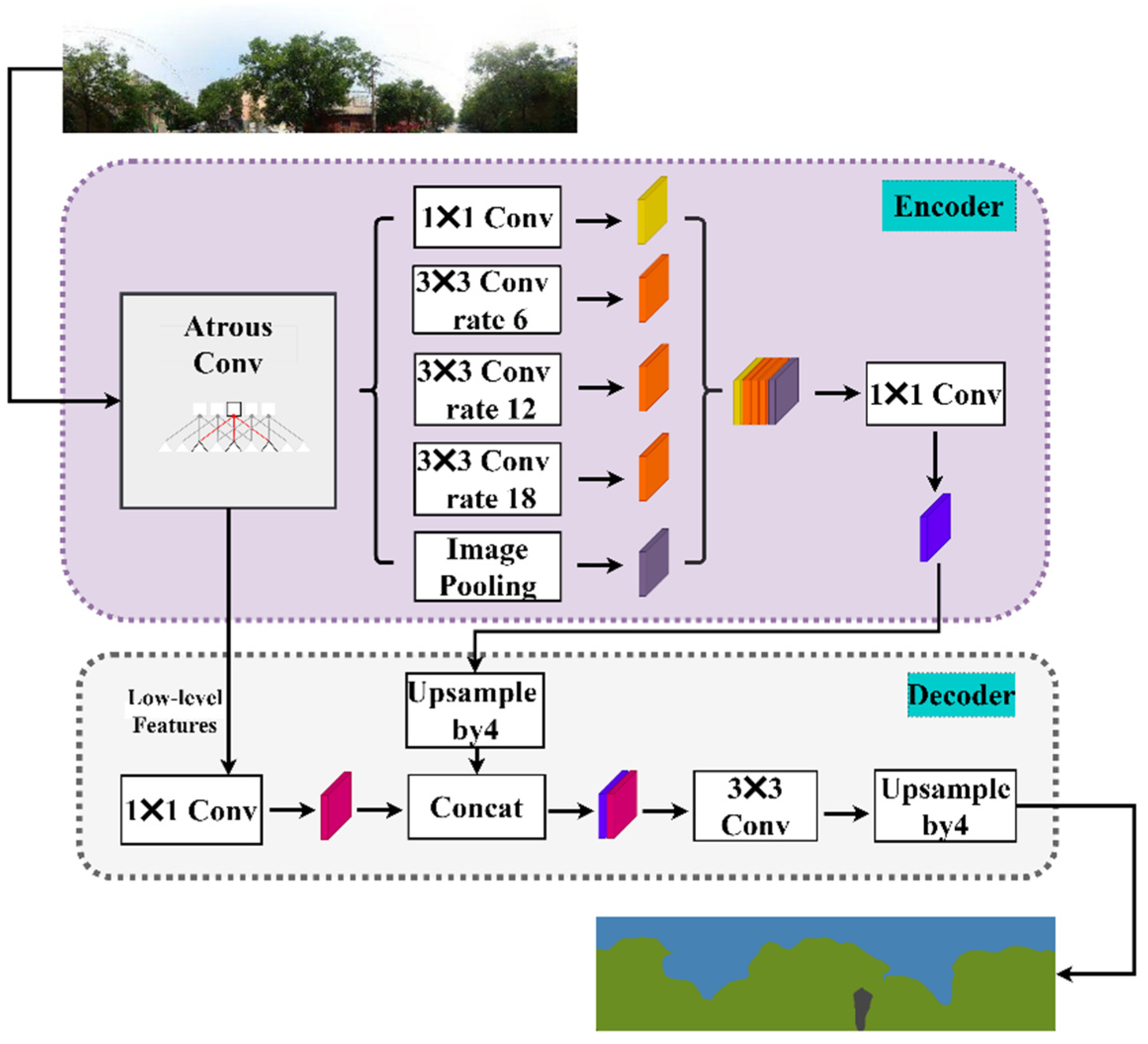

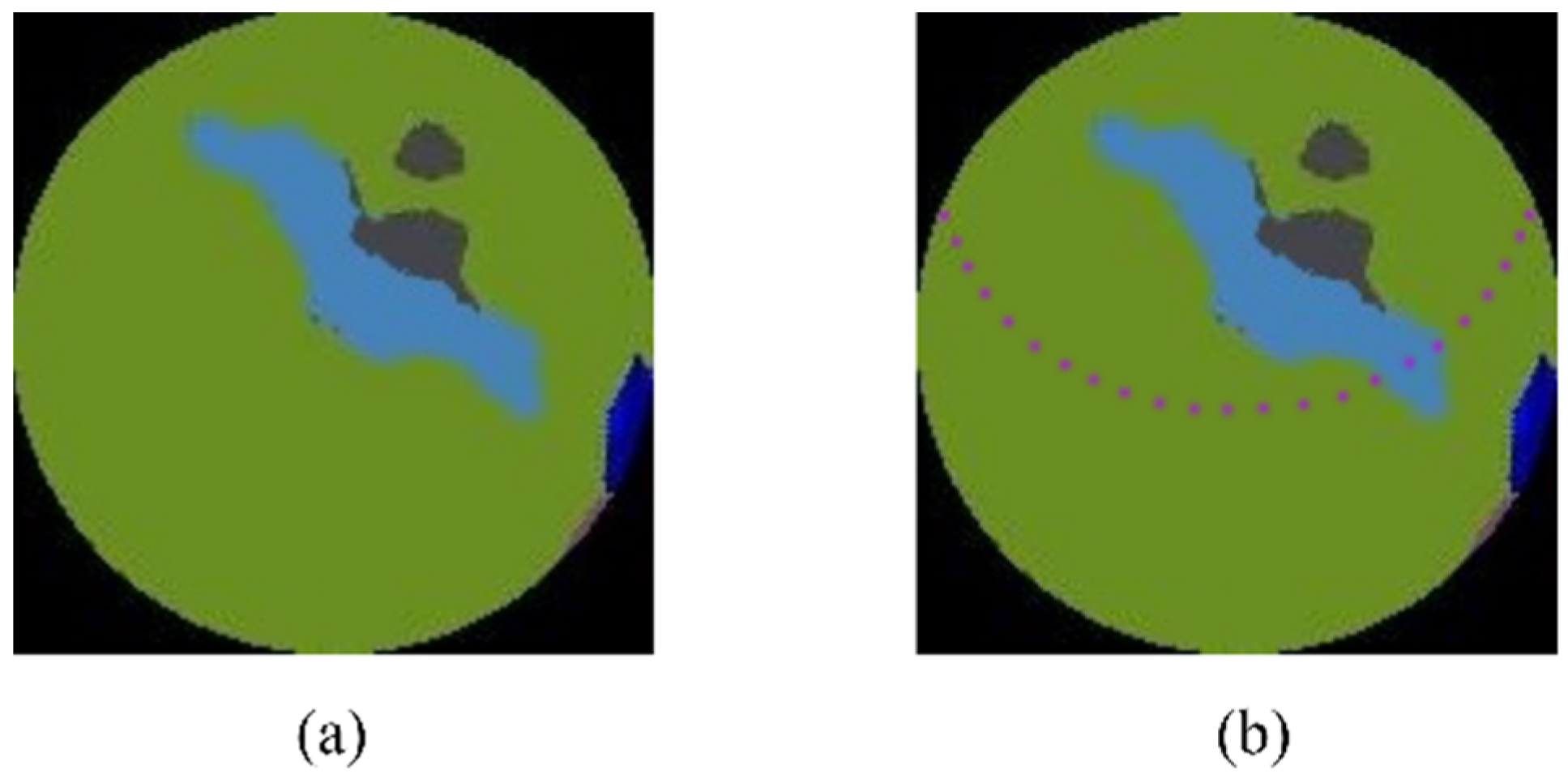

2.3.2. Identifying Shadows

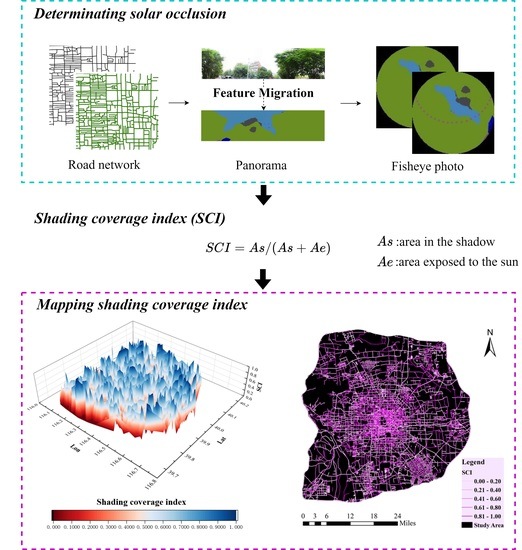

2.3.3. Shading Coverage Index

3. Experiments and Results

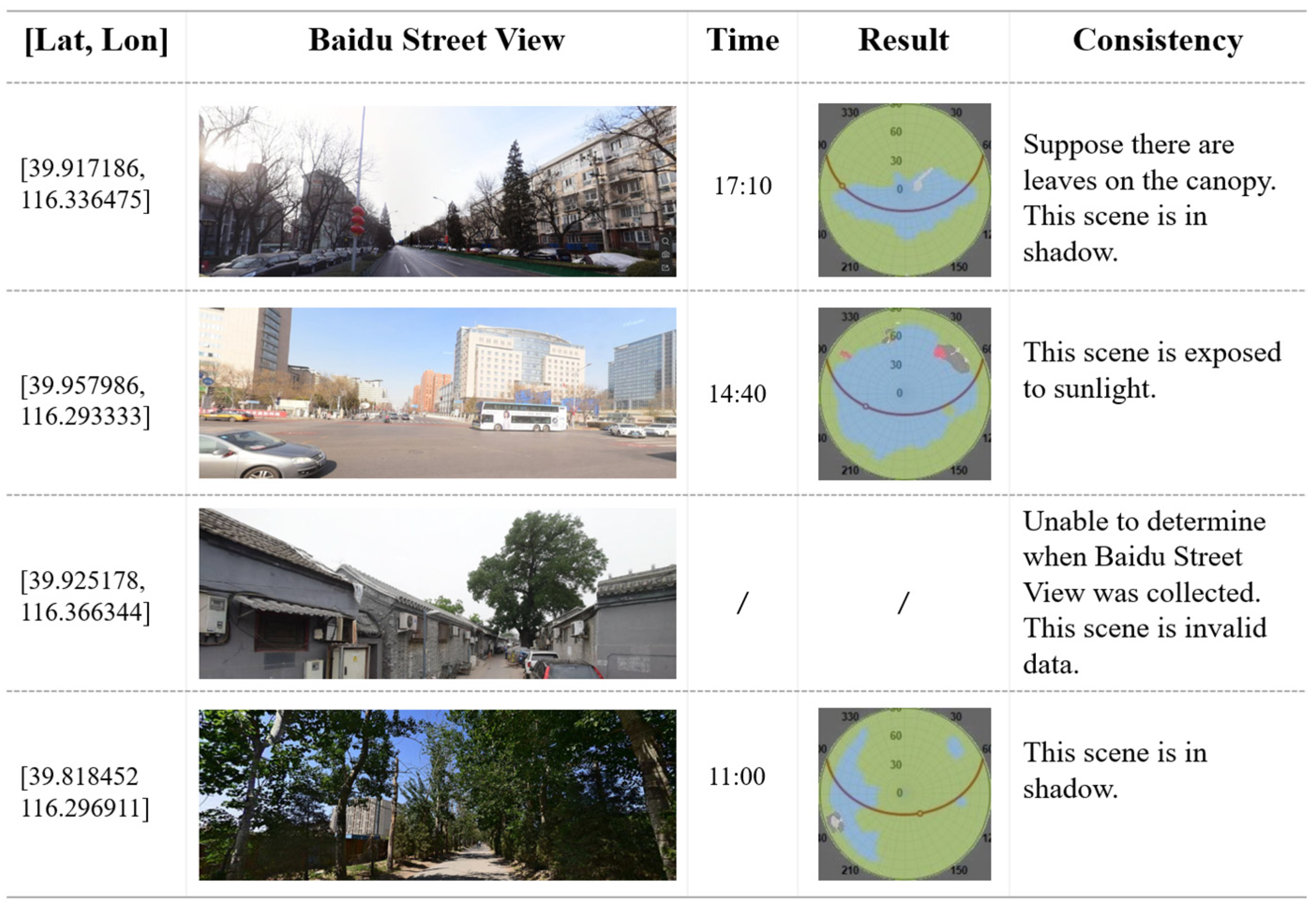

3.1. Experiments

3.2. Mapping Shading Coverage

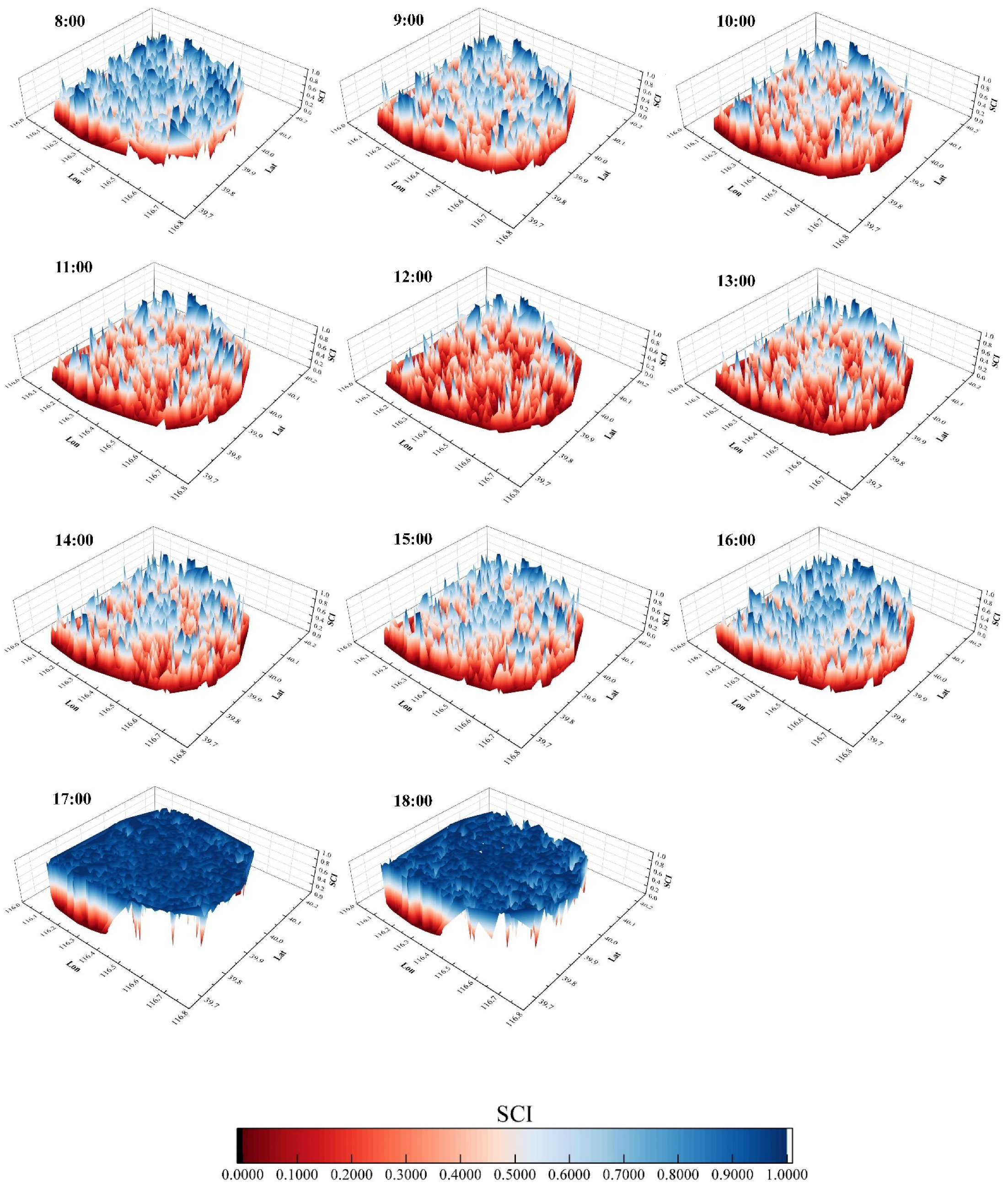

3.2.1. Diurnal and Spatial Distribution of Shading Coverage Index at 1-km Grid

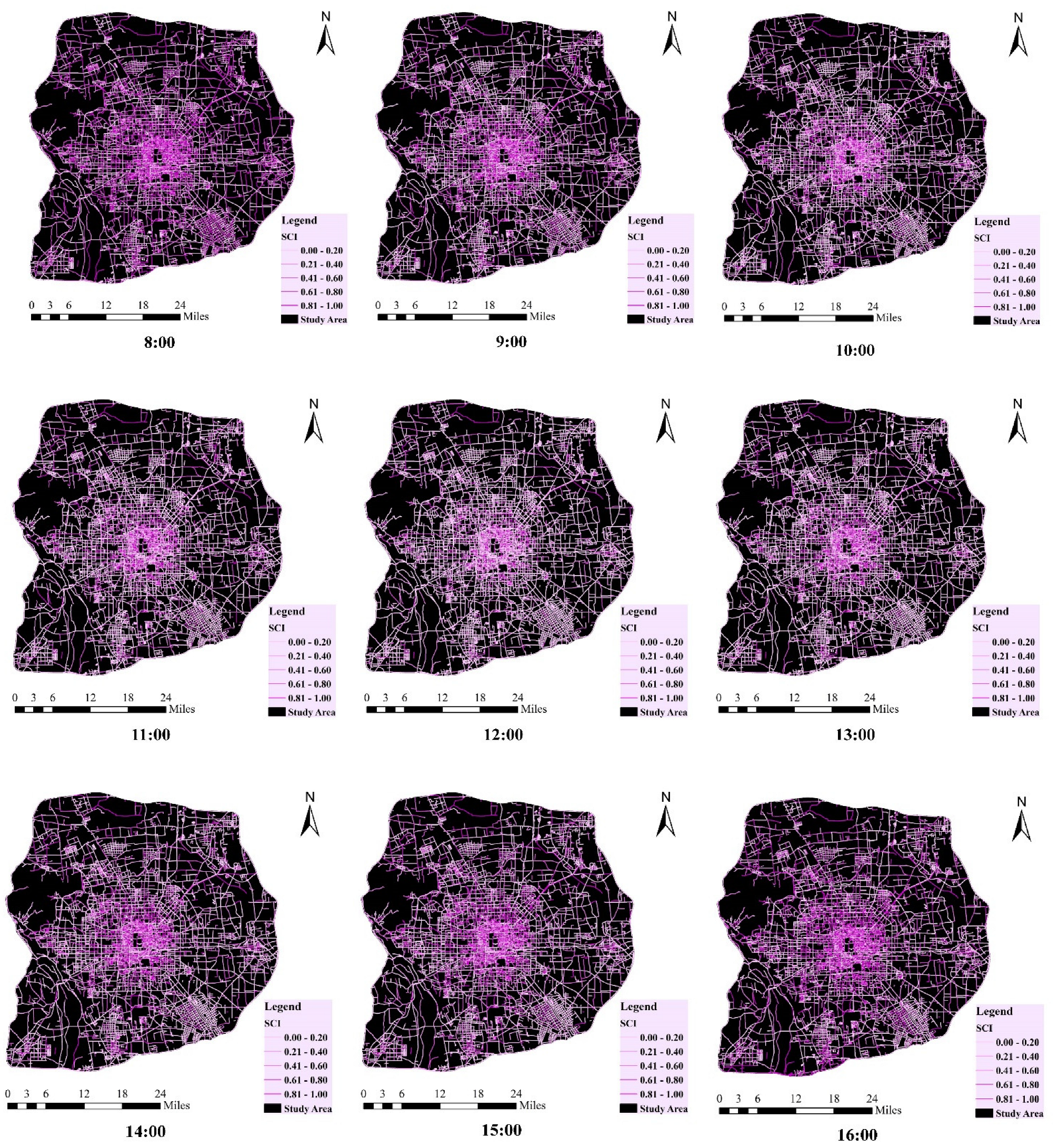

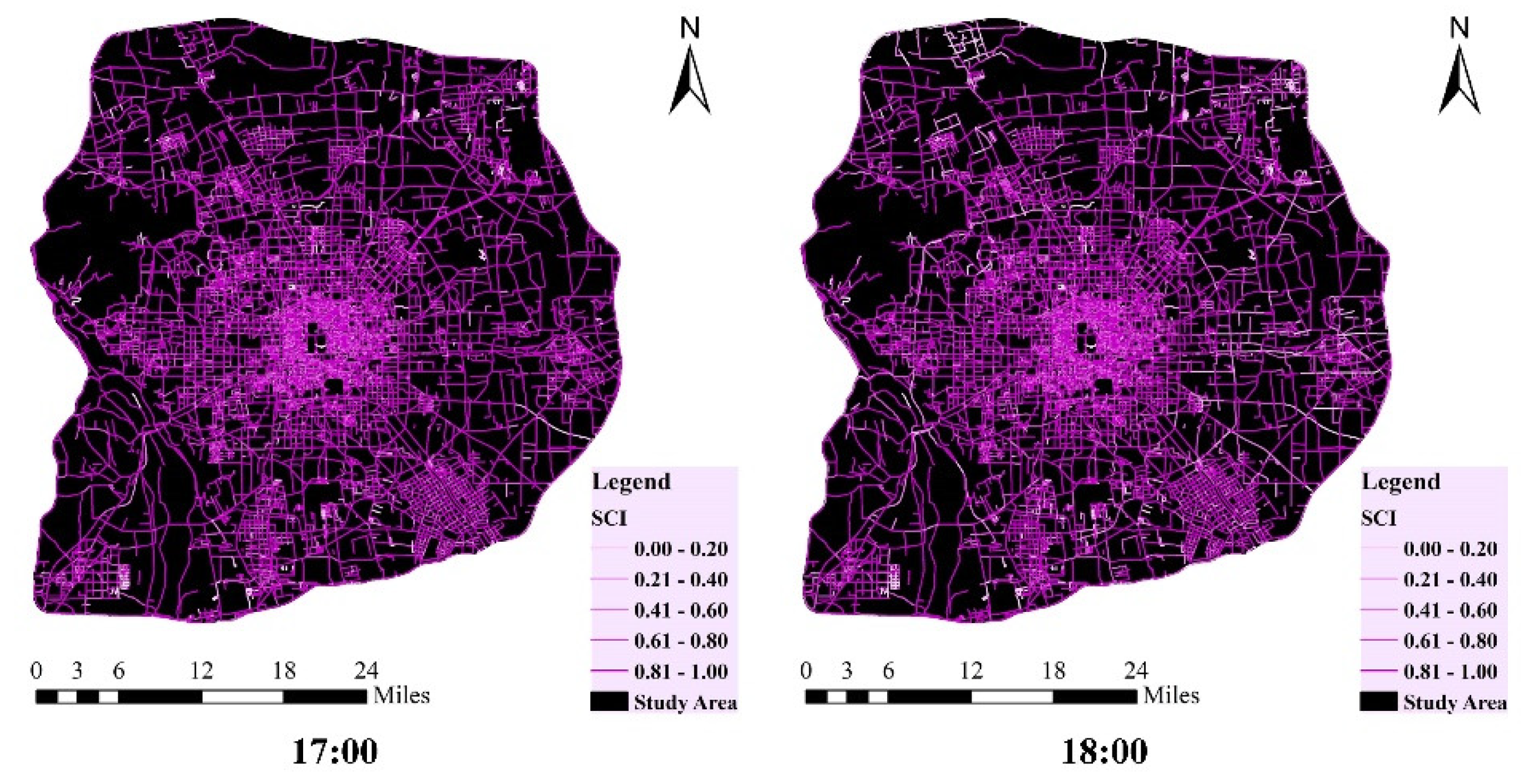

3.2.2. Diurnal and Spatial Distribution of Shading Coverage Index at the Scale of Road Section

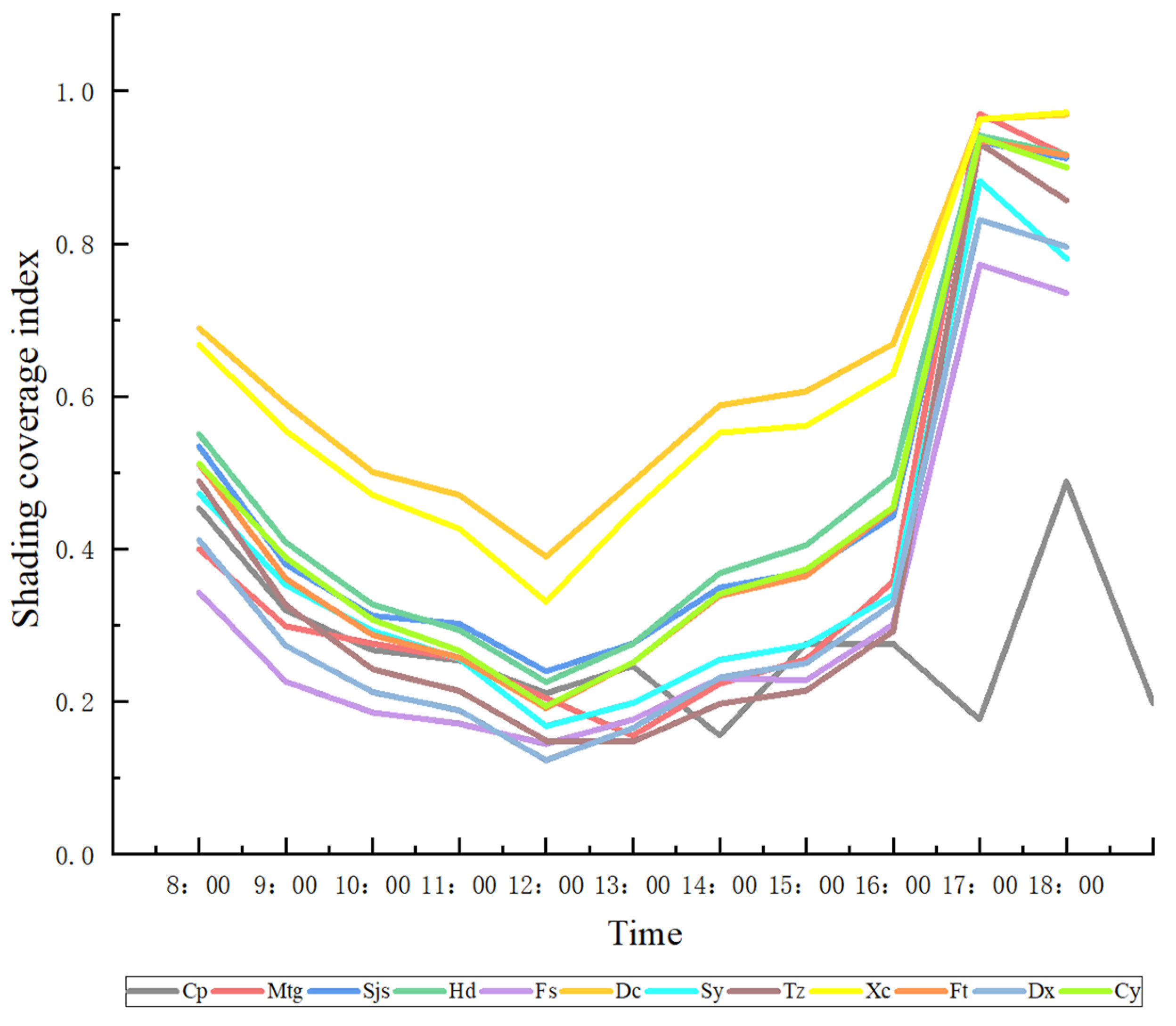

3.2.3. Shading Coverage Index at the Scale of Administrative District

4. Discussion

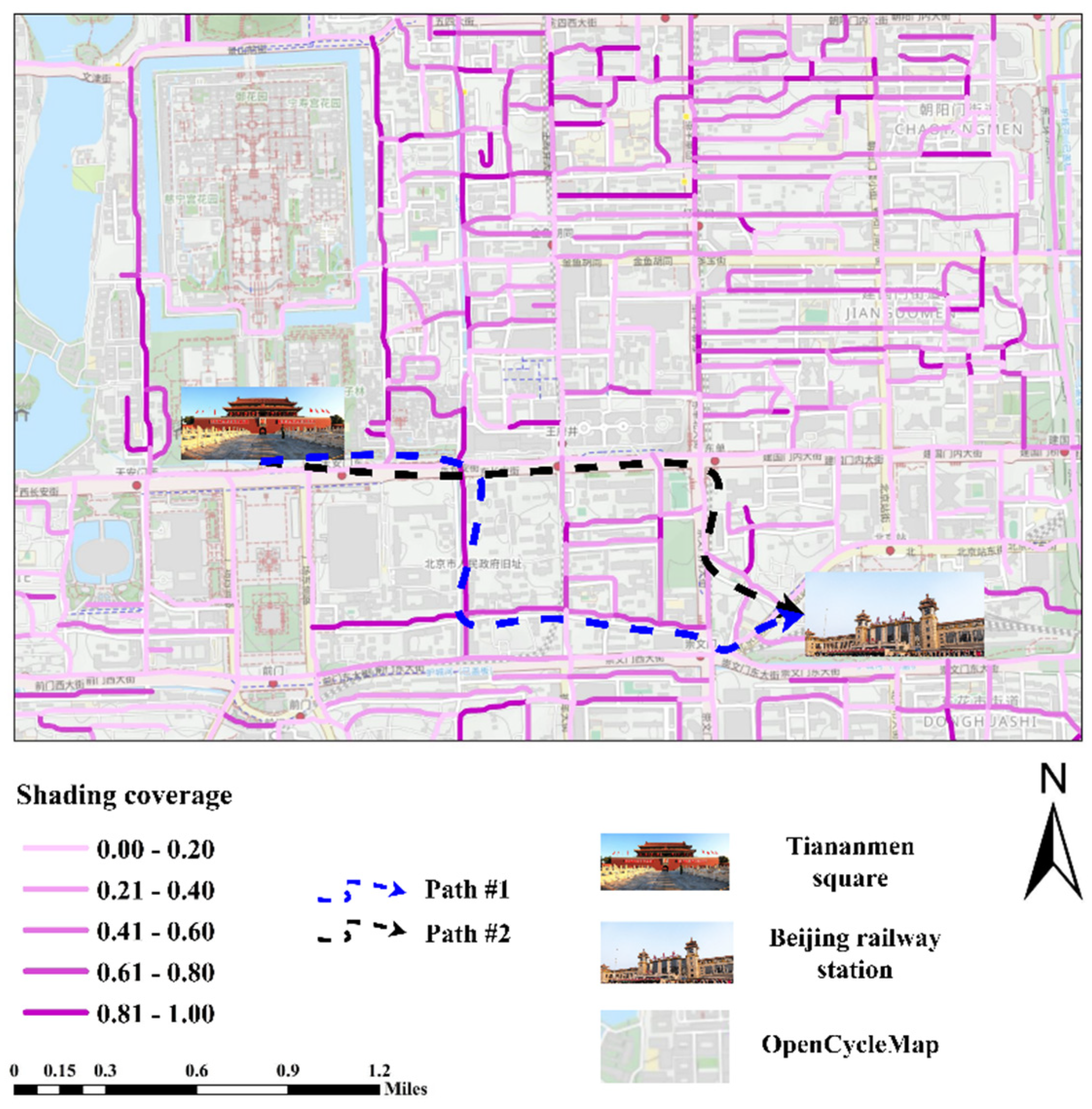

4.1. Shading Coverage Index Applications

4.2. Limitations and Future Consideration

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Dataset | Backbone | CPA (Sky) | CPA (Tree) | CPA (Building) | CPA (Background) | IoU (Sky) | IoU (Tree) | IoU (Building) | IoU (Background) |
|---|---|---|---|---|---|---|---|---|---|
| Our dataset | MobileNet-v2 | 0.93 | 0.96 | 0.47 | 0.03 | 0.90 | 0.64 | 0.45 | 0.01 |
| Our dataset | MobileNet-v3 | 0.26 | 0.48 | 0.06 | 0.77 | 0.26 | 0.45 | 0.06 | 0.02 |
| Our dataset | Xception65 | 0.68 | 0.98 | 0.26 | 0.07 | 0.67 | 0.53 | 0.24 | 0.01 |
| Our dataset | Xception71 | 0.55 | 0.97 | 0.44 | 0.15 | 0.55 | 0.72 | 0.40 | 0.01 |
| CamVid | MobileNet-v2 | 0.70 | 0.95 | 0.95 | 0.89 | 0.69 | 0.65 | 0.74 | 0.88 |
| CamVid | MobileNet-v3 | 0.65 | 0.42 | 0.94 | 0.83 | 0.64 | 0.34 | 0.60 | 0.78 |
| CamVid | Xception65 | 0.75 | 0.94 | 0.96 | 0.91 | 0.75 | 0.70 | 0.79 | 0.90 |
| CamVid | Xception71 | 0.81 | 0.94 | 0.98 | 0.91 | 0.81 | 0.67 | 0.82 | 0.90 |

| Time | Road Section: Average | Road Section: Median | Road Section: Standard Deviation | 1 km Grid: Average | 1 km Grid: Median | 1 km Grid: Standard Deviation |
|---|---|---|---|---|---|---|
| 8:00 | 0.379 | 0.368 | 0.250 | 0.482 | 0.500 | 0.250 |
| 9:00 | 0.292 | 0.500 | 0.247 | 0.352 | 0.326 | 0.250 |
| 10:00 | 0.236 | 0.179 | 0.231 | 0.287 | 0.243 | 0.238 |
| 11:00 | 0.216 | 0.142 | 0.223 | 0.258 | 0.208 | 0.227 |
| 12:00 | 0.164 | 0.084 | 0.201 | 0.187 | 0.137 | 0.191 |
| 13:00 | 0.208 | 0.130 | 0.225 | 0.232 | 0.175 | 0.245 |
| 14:00 | 0.270 | 0.222 | 0.243 | 0.304 | 0.259 | 0.245 |
| 15:00 | 0.286 | 0.250 | 0.247 | 0.327 | 0.294 | 0.247 |
| 16:00 | 0.337 | 0.333 | 0.257 | 0.403 | 0.395 | 0.261 |
| 17:00 | 0.627 | 0.650 | 0.233 | 0.905 | 0.955 | 0.178 |
| 18:00 | 0.609 | 0.631 | 0.240 | 0.848 | 0.938 | 0.221 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yue, N.; Zhang, Z.; Jiang, S.; Chen, S. Deep Feature Migration for Real-Time Mapping of Urban Street Shading Coverage Index Based on Street-Level Panorama Images. Remote Sens. 2022, 14, 1796. https://doi.org/10.3390/rs14081796