Transforming 2D Radar Remote Sensor Information from a UAV into a 3D World-View

Abstract

1. Introduction

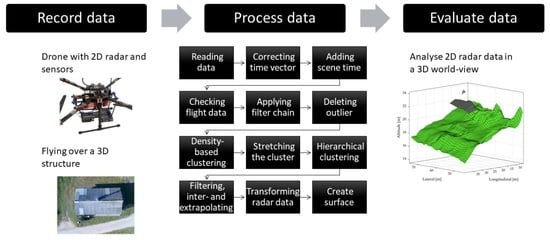

2. Materials and Methods

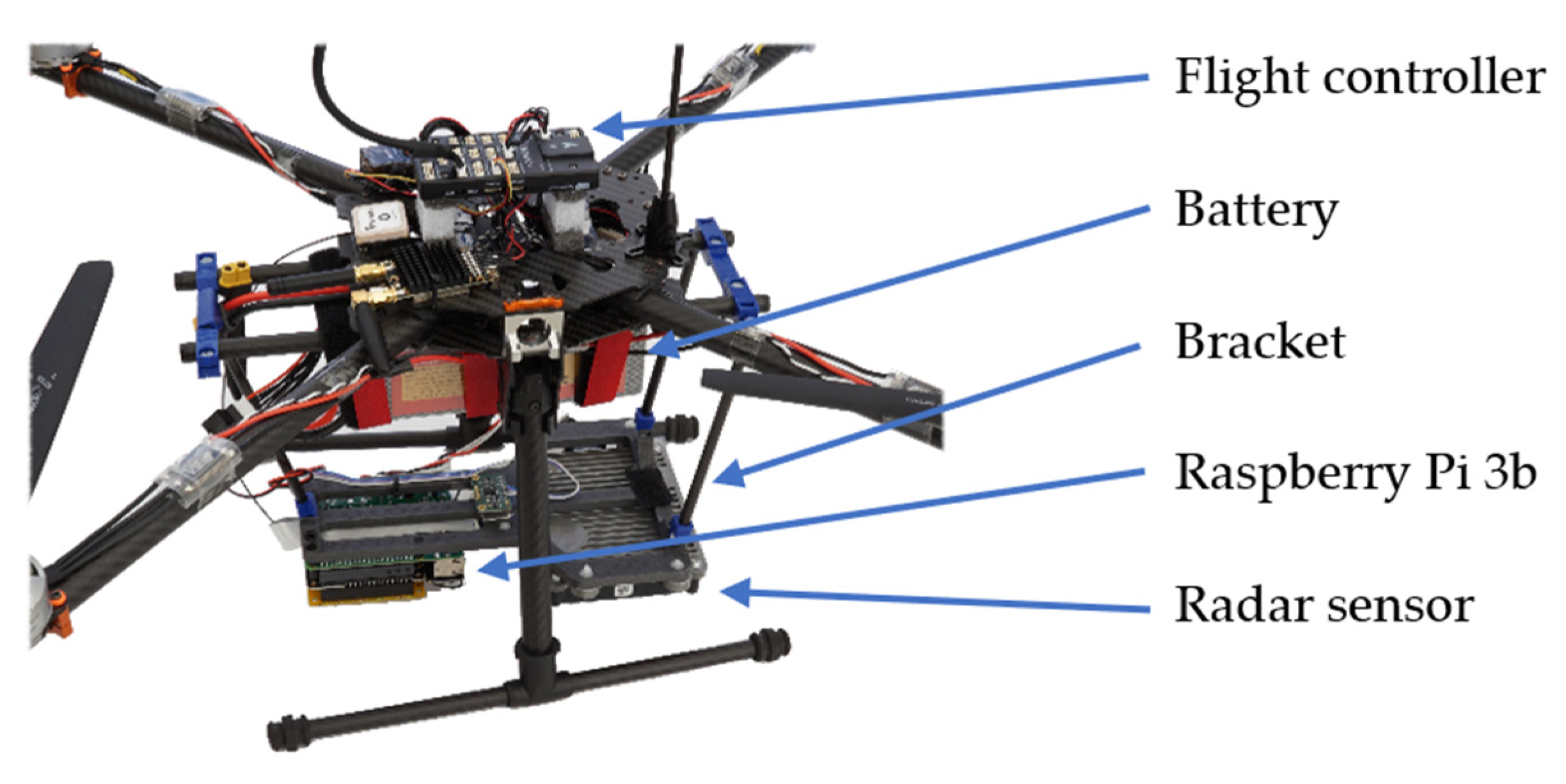

2.1. Measurement System and Location

2.2. Data Acquisition

2.3. Data Processing

- Reading data from CSV file;

- Correcting time vector and adding scene time;

- Checking if the flight is suitable for evaluation;

- Applying filter chain;

- Deleting outlier radar data;

- Applying density-based clustering;

- Stretching the cluster perpendicular to the ground plane;

- Applying hierarchical clustering;

- Filtering, inter- and extrapolating sensor data;

- Transforming 2D radar data into 3D;

- Fitting each cluster to a surface.

2.3.1. Reading Data

- Radar sensor (RAD)—signals listed in Table 2;

- RPi sensors—GNSS, IMU, temperature and pressure;

- UAV sensors—GNSS, IMU and pressure.
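The reading step can be sketched with Python's standard `csv` module. This is a minimal illustration under stated assumptions, not the authors' code. The column names (`time`, `id`, `longitudinal`, `lateral`, `velocity_longitudinal`, `rcs`) are hypothetical placeholders for the signals of Table 2. A logged `time` column is also assumed. The always-zero lateral velocity and the dynamic property are left out.

```python
import csv
import io
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class RadarCluster:
    """One radar cluster detection; fields follow the signals in Table 2."""
    time: float                   # logging timestamp in s (assumed column)
    cluster_id: int               # increasing ID of the cluster
    longitudinal: float           # x-position in m
    lateral: float                # y-position in m
    velocity_longitudinal: float  # velocity along the x-axis in m/s
    rcs: float                    # radar cross-section in dBm²


def read_radar_csv(lines: Iterable[str]) -> List[RadarCluster]:
    """Parse radar clusters from CSV text that starts with a header row.

    The column names used here are hypothetical placeholders.
    """
    clusters = []
    for row in csv.DictReader(lines):
        clusters.append(RadarCluster(
            time=float(row["time"]),
            cluster_id=int(row["id"]),
            longitudinal=float(row["longitudinal"]),
            lateral=float(row["lateral"]),
            velocity_longitudinal=float(row["velocity_longitudinal"]),
            rcs=float(row["rcs"]),
        ))
    return clusters


# Made-up sample rows, only to exercise the parser.
sample = io.StringIO(
    "time,id,longitudinal,lateral,velocity_longitudinal,rcs\n"
    "0.00,0,21.4,-1.2,-0.9,5.5\n"
    "0.07,1,22.0,0.8,-1.0,3.0\n"
)
clusters = read_radar_csv(sample)
```

The RPi and UAV logs could be read the same way, each with its own record type.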

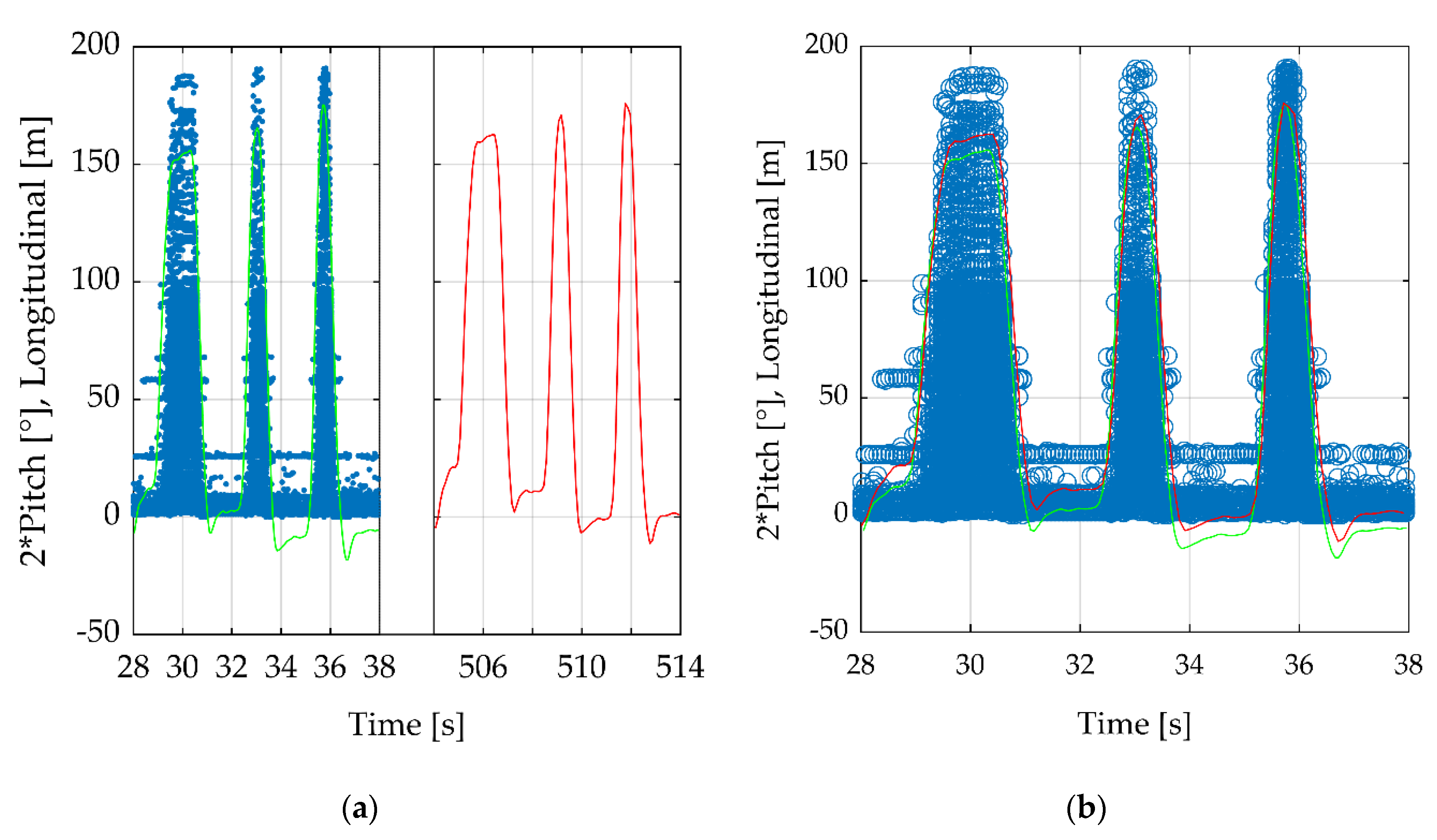

2.3.2. Correcting and Adding Time

- Radar = 35.8109 s;

- RPi = 35.6997 s;

- UAV = 511.761 s.
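These values can be read as the first timestamps of each sensor's raw time vector. That reading is an assumption. Under it, a minimal sketch of the correction shifts every vector to start at zero and then adds a shared scene time. The function name `to_scene_time` and the `scene_start` parameter are hypothetical.

```python
from typing import Dict, List

# Start values of the raw time vectors listed above; treating them as
# per-sensor offsets is an assumption made for this sketch.
START_OFFSETS_S: Dict[str, float] = {
    "radar": 35.8109,
    "rpi": 35.6997,
    "uav": 511.761,
}


def to_scene_time(times: List[float], sensor: str, scene_start: float = 0.0) -> List[float]:
    """Shift one sensor's raw time vector onto a common scene time axis.

    `scene_start` is a hypothetical shared origin for all sensors.
    """
    offset = START_OFFSETS_S[sensor]
    return [t - offset + scene_start for t in times]


radar_t = to_scene_time([35.8109, 35.8809, 35.9509], "radar")
uav_t = to_scene_time([511.761, 511.861], "uav")
```

After this shift, all three streams share one time axis and can be compared sample by sample.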

2.3.3. Checking Flight Data

2.3.4. Filter Chain

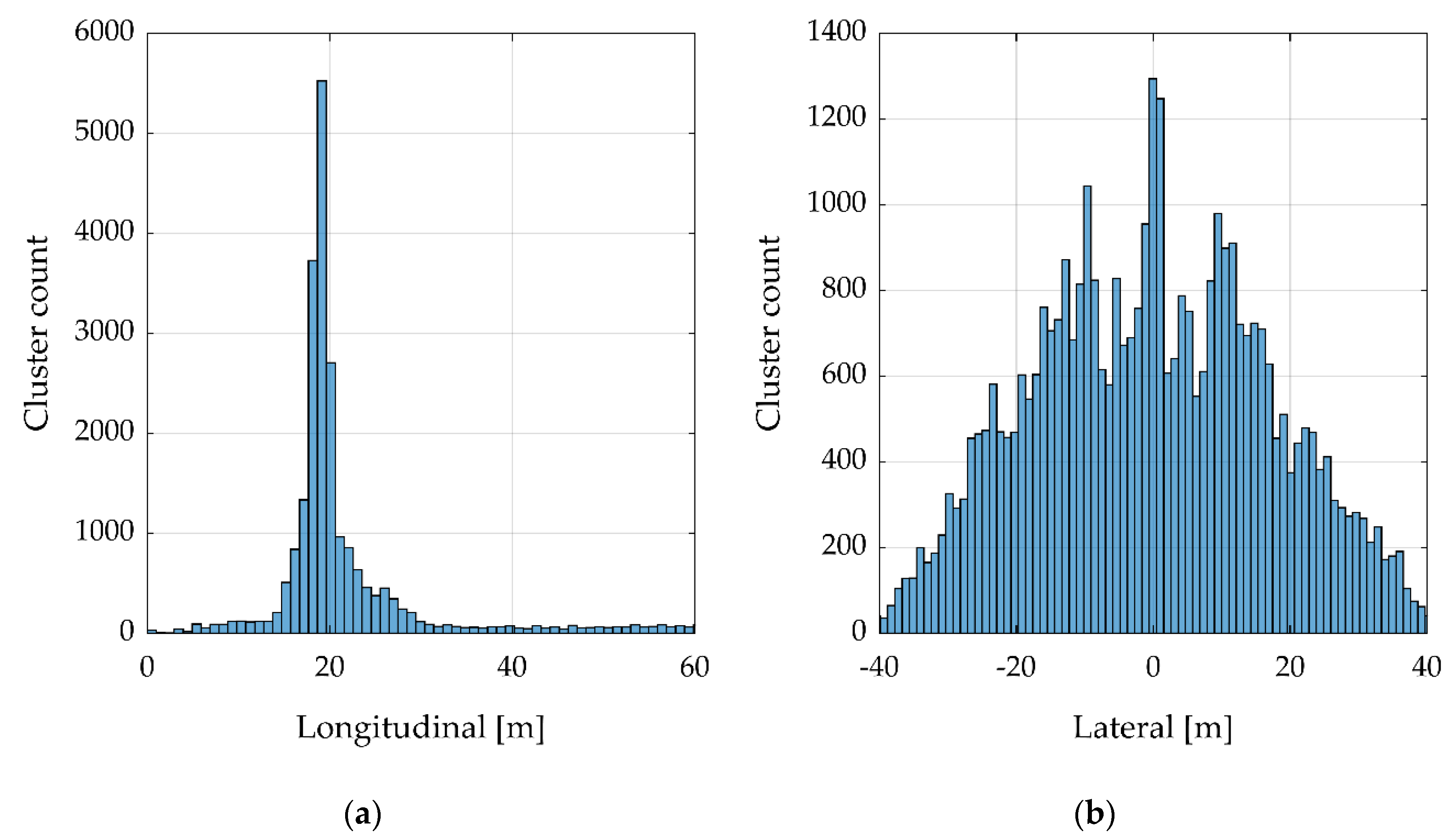

2.3.5. Delete Outlier Radar Data

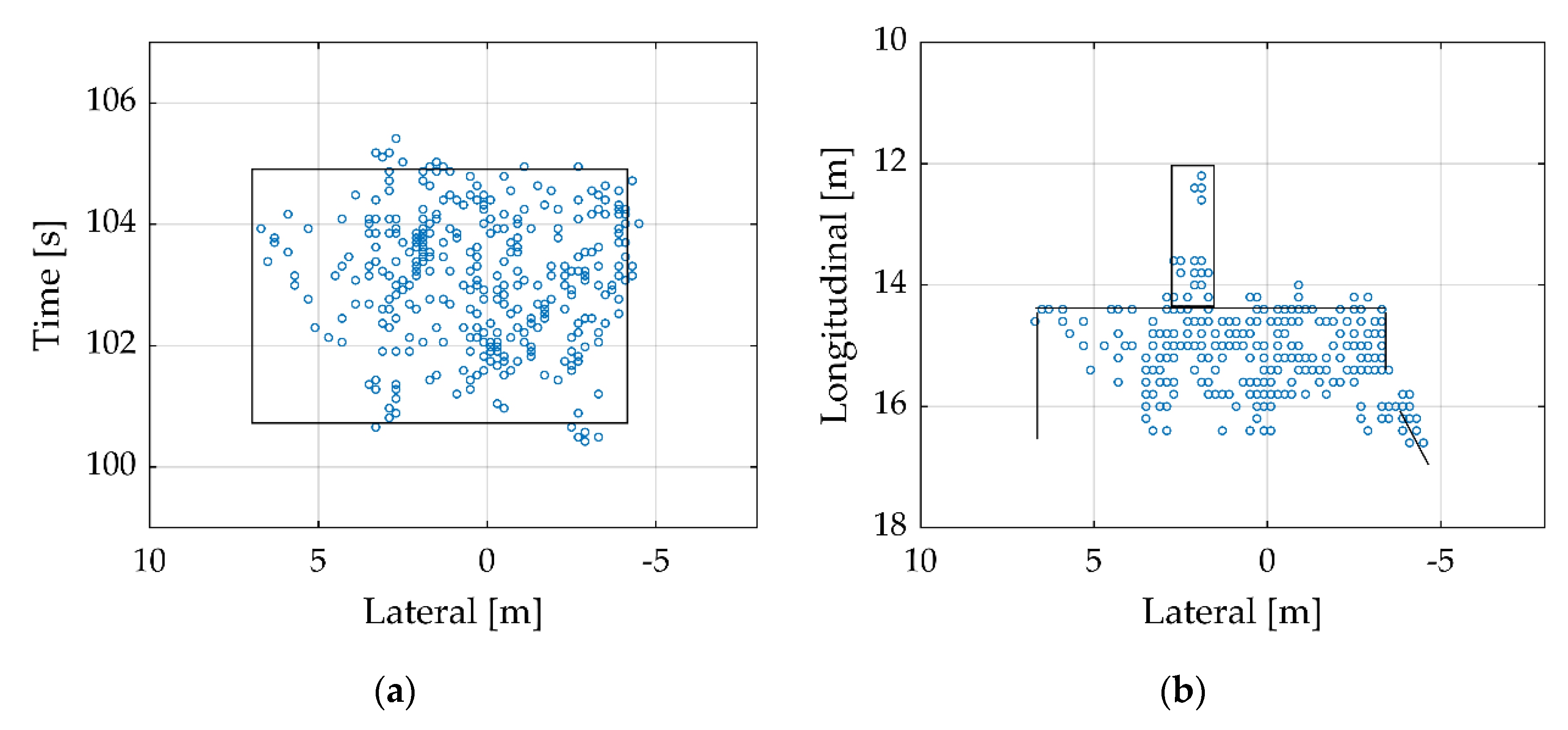

1. Define the cuboid by time, longitude and latitude;

2. Define the minimum number of clusters;

3. Set the cuboid to the first cluster, so that it lies in its center;

4. Count all clusters in the cuboid;

5. If the minimum number of clusters is not reached, mark the current cluster;

6. Iterate over all clusters, centering the cuboid on each one in turn, and repeat steps 4 and 5;

7. Delete all marked clusters.
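The seven steps above translate into a brute-force sketch. Two choices here are assumptions for illustration:

- the centre cluster counts towards the minimum;
- the cuboid half-widths and the minimum count take the values in the example.

The values actually used in the study are not restated here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Cluster:
    time: float  # scene time in s
    lon: float   # longitude in degrees
    lat: float   # latitude in degrees


def delete_outliers(clusters: List[Cluster], half_time: float, half_lon: float,
                    half_lat: float, min_clusters: int) -> List[Cluster]:
    """Remove clusters with too few neighbours in a cuboid centred on them.

    The cuboid spans +/- half_time, +/- half_lon and +/- half_lat (step 1);
    the centre cluster itself is included in the count (an assumption).
    """
    marked = set()
    for i, centre in enumerate(clusters):           # steps 3 and 6
        count = sum(                                # step 4
            1 for other in clusters
            if abs(other.time - centre.time) <= half_time
            and abs(other.lon - centre.lon) <= half_lon
            and abs(other.lat - centre.lat) <= half_lat
        )
        if count < min_clusters:                    # steps 2 and 5
            marked.add(i)
    return [c for i, c in enumerate(clusters) if i not in marked]  # step 7


# Illustrative data: three nearby clusters and one isolated one.
pts = [Cluster(0.0, 6.0, 49.0), Cluster(0.1, 6.00001, 49.00001),
       Cluster(0.2, 6.00002, 49.0), Cluster(5.0, 6.01, 49.01)]
kept = delete_outliers(pts, half_time=0.5, half_lon=0.0001,
                       half_lat=0.0001, min_clusters=3)
```

The double loop is quadratic in the number of clusters. For long flights, a spatial index or sorting by time would reduce the cost.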

2.3.6. Density-Based Clustering
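DBSCAN (Ester et al., 1996) is the classic density-based clustering algorithm. It works as follows:

- A point with at least `min_pts` neighbours within radius `eps` is a core point and seeds a cluster.
- The cluster grows through further core points in its neighbourhood.
- Points not reachable from any core point are labelled noise.

The sketch below is plain DBSCAN on 2D points with Euclidean distance. The features being clustered and the values of `eps` and `min_pts` are assumptions, not the parameters used in this study.

```python
import math
from typing import List, Optional, Sequence, Tuple

NOISE = -1  # label for points that belong to no cluster


def region_query(points: Sequence[Tuple[float, float]], idx: int, eps: float) -> List[int]:
    """Indices of all points within distance eps of points[idx] (itself included)."""
    px, py = points[idx]
    return [j for j, (qx, qy) in enumerate(points)
            if math.hypot(qx - px, qy - py) <= eps]


def dbscan(points: Sequence[Tuple[float, float]], eps: float,
           min_pts: int) -> List[Optional[int]]:
    """Label each point with a cluster index (0, 1, ...) or NOISE."""
    labels: List[Optional[int]] = [None] * len(points)
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue  # already assigned to a cluster or marked as noise
        neighbours = region_query(points, i, eps)
        if len(neighbours) < min_pts:
            labels[i] = NOISE  # may later be relabelled as a border point
            continue
        labels[i] = cluster  # i is a core point: start a new cluster
        seeds = list(neighbours)
        k = 0
        while k < len(seeds):
            j = seeds[k]
            k += 1
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: join, but do not expand
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbours = region_query(points, j, eps)
            if len(j_neighbours) >= min_pts:
                seeds.extend(j_neighbours)  # j is a core point: keep expanding
        cluster += 1
    return labels


points = [(0.0, 0.0), (0.0, 0.5), (0.5, 0.0),
          (10.0, 10.0), (10.0, 10.4), (10.4, 10.0),
          (50.0, 50.0)]
labels = dbscan(points, eps=1.0, min_pts=3)
```

In the example, the two tight groups become clusters 0 and 1 and the isolated point is labelled `NOISE`.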

2.3.7. Cluster Stretching

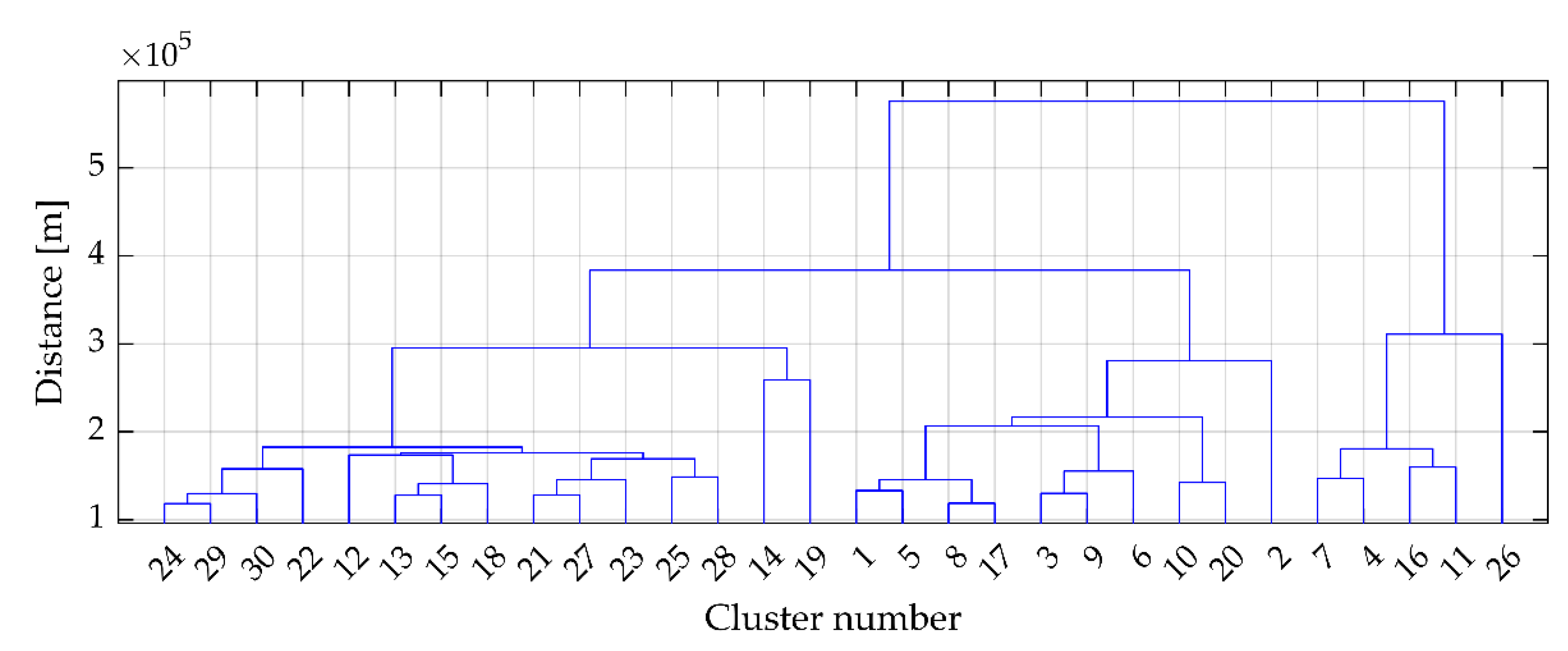

2.3.8. Hierarchical Agglomerative Distance-Based Clustering
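The following is a minimal sketch of agglomerative, distance-based clustering. Every point starts as its own cluster, and the two closest clusters are merged until no pair is closer than a threshold. Single linkage and the `threshold` value are assumptions made for illustration. This naive loop is cubic in the number of points.

```python
import math
from typing import List, Sequence


def agglomerative(points: Sequence[Sequence[float]], threshold: float) -> List[List[int]]:
    """Single-linkage agglomerative clustering with a distance cut-off.

    Returns the clusters as lists of point indices.
    """
    clusters: List[List[int]] = [[i] for i in range(len(points))]

    def linkage(a: List[int], b: List[int]) -> float:
        # Single linkage: distance between the closest pair of members.
        return min(math.dist(points[i], points[j]) for i in a for j in b)

    while len(clusters) > 1:
        best = None
        for x in range(len(clusters)):
            for y in range(x + 1, len(clusters)):
                d = linkage(clusters[x], clusters[y])
                if best is None or d < best[0]:
                    best = (d, x, y)
        d, x, y = best
        if d > threshold:
            break  # no pair of clusters is close enough to merge
        clusters[x].extend(clusters[y])
        del clusters[y]  # y > x, so index x stays valid
    return clusters


pts = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (1.0, 0.0, 0.0),
       (5.0, 0.0, 0.0), (5.4, 0.0, 0.0)]
groups = agglomerative(pts, threshold=0.6)
```

Single linkage can chain elongated structures together, which suits clusters stretched along one axis. Complete or average linkage would be the alternatives if compact groups are preferred.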

2.3.9. Filter and Interpolate Sensor Data

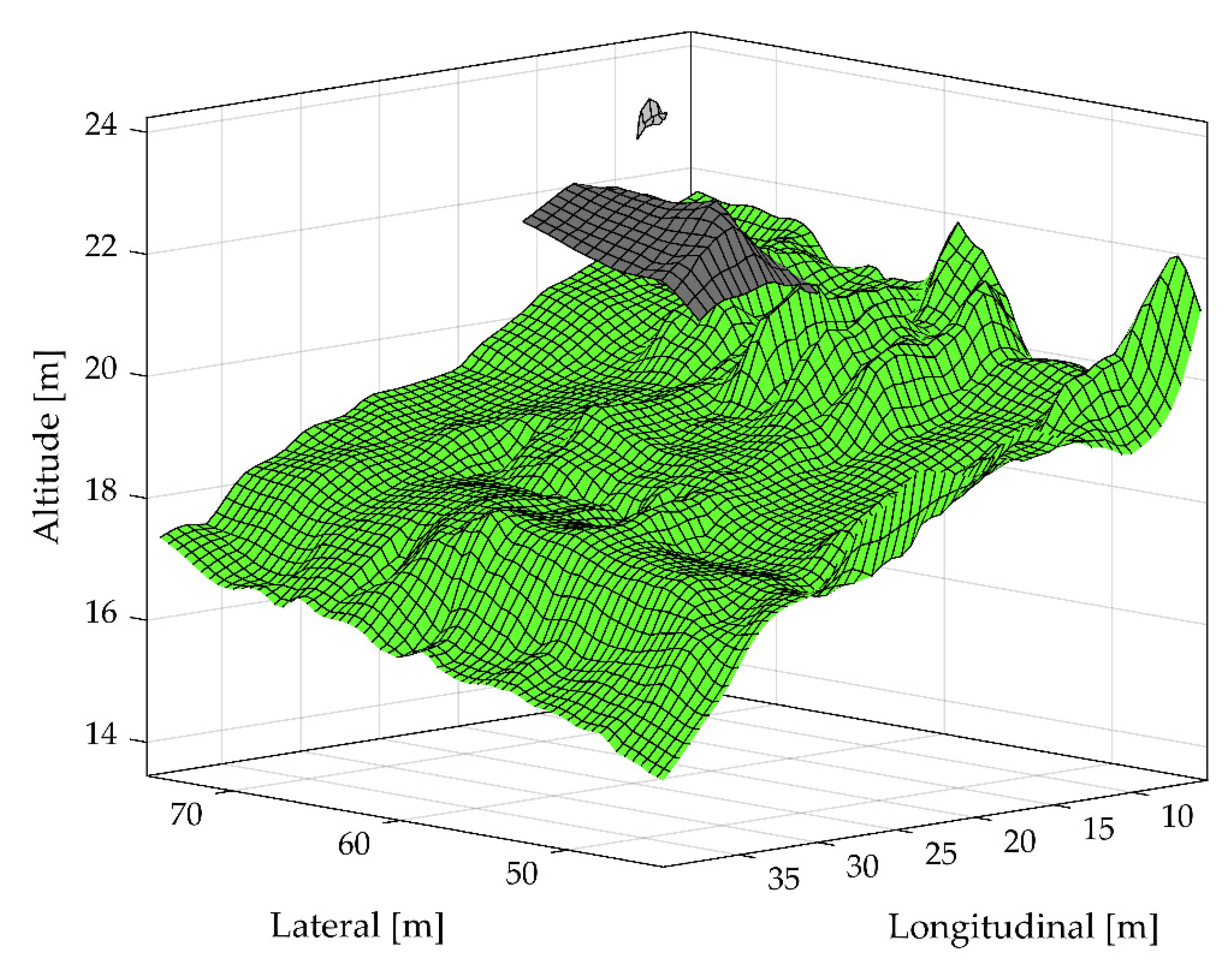
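The UAV and RPi sensor streams must be aligned with the radar timestamps. One simple option is linear interpolation inside the measured interval and linear extrapolation beyond it. The sketch below assumes strictly increasing sample times and at least two samples. The filtering part of this step is not shown. Linear inter- and extrapolation is an assumption, not the method confirmed for this study.

```python
from bisect import bisect_right
from typing import List, Sequence


def interp_extrap(t_query: Sequence[float], t: Sequence[float],
                  v: Sequence[float]) -> List[float]:
    """Evaluate samples (t, v) at t_query by linear interpolation; outside
    [t[0], t[-1]] the first or last segment is extended linearly.
    """
    out = []
    for tq in t_query:
        # Segment [t[k], t[k+1]] containing tq, clamped to the end segments
        # so that queries outside the range are extrapolated.
        k = min(max(bisect_right(t, tq) - 1, 0), len(t) - 2)
        slope = (v[k + 1] - v[k]) / (t[k + 1] - t[k])
        out.append(v[k] + slope * (tq - t[k]))
    return out


uav_t = [0.0, 1.0, 2.0]         # illustrative UAV timestamps in s
altitude = [20.0, 21.0, 23.0]   # illustrative altitudes in m
radar_t = [-0.5, 0.5, 1.5, 2.5]
altitude_at_radar = interp_extrap(radar_t, uav_t, altitude)
```

Extrapolation should only cover short gaps at the start or end of a flight, since errors grow with the distance from the last sample.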

2.3.10. Transform 2D Radar Data into 3D
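Conceptually, this step places each radar detection, which has only a longitudinal and a lateral coordinate, into the world. It uses the UAV position and attitude at the detection time. The sketch below is one way to do this under explicit assumptions:

- a local north-east-down (NED) frame in metres, with the conversion from GNSS latitude and longitude not shown;
- Z-Y-X (yaw-pitch-roll) Euler angles;
- a hypothetical fixed `mount` rotation from the radar frame into the UAV body frame.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def rot_zyx(roll: float, pitch: float, yaw: float) -> Mat3:
    """Rotation matrix Rz(yaw) @ Ry(pitch) @ Rx(roll); angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def mat_vec(m: Mat3, v: Sequence[float]) -> Vec3:
    """Multiply a 3x3 matrix by a 3-vector."""
    return (
        m[0][0] * v[0] + m[0][1] * v[1] + m[0][2] * v[2],
        m[1][0] * v[0] + m[1][1] * v[1] + m[1][2] * v[2],
        m[2][0] * v[0] + m[2][1] * v[1] + m[2][2] * v[2],
    )


def radar_to_world(longitudinal: float, lateral: float,
                   mount: Mat3, attitude: Mat3, position: Vec3) -> Vec3:
    """Map a 2D radar detection into the local NED frame.

    The detection lies in the radar's own plane (z = 0); it is rotated into
    the body frame by `mount`, into NED by the UAV `attitude`, and shifted
    by the UAV `position`.
    """
    p_radar = (longitudinal, lateral, 0.0)
    p_body = mat_vec(mount, p_radar)
    p_ned = mat_vec(attitude, p_body)
    return (p_ned[0] + position[0], p_ned[1] + position[1], p_ned[2] + position[2])


# Assumed example: level UAV heading north, 20 m above the origin (NED z = -20),
# radar boresight pointing straight down (mount pitch of -90 degrees).
mount = rot_zyx(0.0, -math.pi / 2, 0.0)
attitude = rot_zyx(0.0, 0.0, 0.0)
point = radar_to_world(20.0, 2.0, mount, attitude, (0.0, 0.0, -20.0))
```

In the example, a detection 20 m along the boresight lands on the ground plane (z close to 0), offset 2 m to the east by its lateral coordinate.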

2.3.11. Fit Cluster to a Surface
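As an illustration of surface fitting, the sketch below fits a plane z = a*x + b*y + c to the 3D points of one cluster by ordinary least squares. It solves the 3x3 normal equations with Cramer's rule. The planar model is an assumption, since the surface type used in the study is not stated here. A plane of this form also cannot represent vertical walls; those would need a different parametrisation, for example a total-least-squares fit.

```python
from typing import List, Sequence, Tuple


def det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points: Sequence[Tuple[float, float, float]]) -> Tuple[float, float, float]:
    """Least-squares fit of z = a*x + b*y + c; returns (a, b, c)."""
    sxx = sum(x * x for x, _, _ in points)
    sxy = sum(x * y for x, y, _ in points)
    syy = sum(y * y for _, y, _ in points)
    sx = sum(x for x, _, _ in points)
    sy = sum(y for _, y, _ in points)
    sxz = sum(x * z for x, _, z in points)
    syz = sum(y * z for _, y, z in points)
    sz = sum(z for _, _, z in points)
    n = float(len(points))
    # Normal equations A @ (a, b, c) = rhs of the least-squares problem.
    a_mat = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    d = det3(a_mat)
    if abs(d) < 1e-12:
        raise ValueError("degenerate points (e.g. collinear); plane is undetermined")
    coeffs = []
    for col in range(3):
        # Cramer's rule: replace column `col` with the right-hand side.
        m = [row[:] for row in a_mat]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(det3(m) / d)
    return (coeffs[0], coeffs[1], coeffs[2])


# Illustrative points lying exactly on z = 0.5*x - 0.2*y + 3.
cluster_pts = [(0.0, 0.0, 3.0), (1.0, 0.0, 3.5), (0.0, 1.0, 2.8),
               (1.0, 1.0, 3.3), (2.0, 1.0, 3.8)]
a, b, c = fit_plane(cluster_pts)
```

For noisy radar clusters, the residuals z - (a*x + b*y + c) indicate how well a plane describes the surface.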

3. Results

4. Discussion and Further Challenges

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Value |
|---|---|
| Output type | Cluster |
| Send quality data | On |
| Maximum distance | 196 m |
| RCS threshold | Normal/high sensitivity |

| Signal | Description | Unit |
|---|---|---|
| ID | Increasing ID of the cluster | - |
| Longitudinal | X-position | m |
| Lateral | Y-position | m |
| Velocity longitudinal | Velocity x-axis | m/s |
| Velocity lateral | Always zero | - |
| Dynamic property | Dynamic cluster state | - |
| Radar cross-section | Reflection strength | dBm² |

| Flight Number | Altitude [m] | Flight Velocity [m/s] | Radar Sensitivity Mode |
|---|---|---|---|
| 1 | 20 | 1 | High |
| 2 | 20 | 1 | Normal |
| 3 | 30 | 1 | High |
| 4 | 30 | 5 | Normal |

| | Building | 1. Flight | 2. Flight | 3. Flight | 4. Flight |
|---|---|---|---|---|---|
| Length [m] | 10.2 | 10.6 | 10.4 | 10.4 | 10.4 |
| Width [m] | 6.2 | 7.2 | 6.9 | 7.9 | 6.3 |
| Height left/right [m] | 3.2/4.0 | 3.4/4.2 | 3.8/4.6 | 4.0/4.7 | 4.0/4.6 |

| | Building | 1. Flight | 2. Flight | 3. Flight | 4. Flight |
|---|---|---|---|---|---|
| Length [m] | 10.2 | 10.7 | 10.6 | 10.5 | 10.7 |
| Width [m] | 6.2 | 6.1 | 6.5 | 5.9 | 6.5 |
| Height left/right [m] | 3.2/4.0 | 3.7/4.6 | 3.6/4.4 | 3.7/4.7 | 3.7/4.4 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Weber, C.; Eggert, M.; Rodrigo-Comino, J.; Udelhoven, T. Transforming 2D Radar Remote Sensor Information from a UAV into a 3D World-View. Remote Sens. 2022, 14, 1633. https://doi.org/10.3390/rs14071633
