Smartphone–Camera–Based Water Reflectance Measurement and Typical Water Quality Parameter Inversion

Abstract

1. Introduction

2. Study Area and Datasets

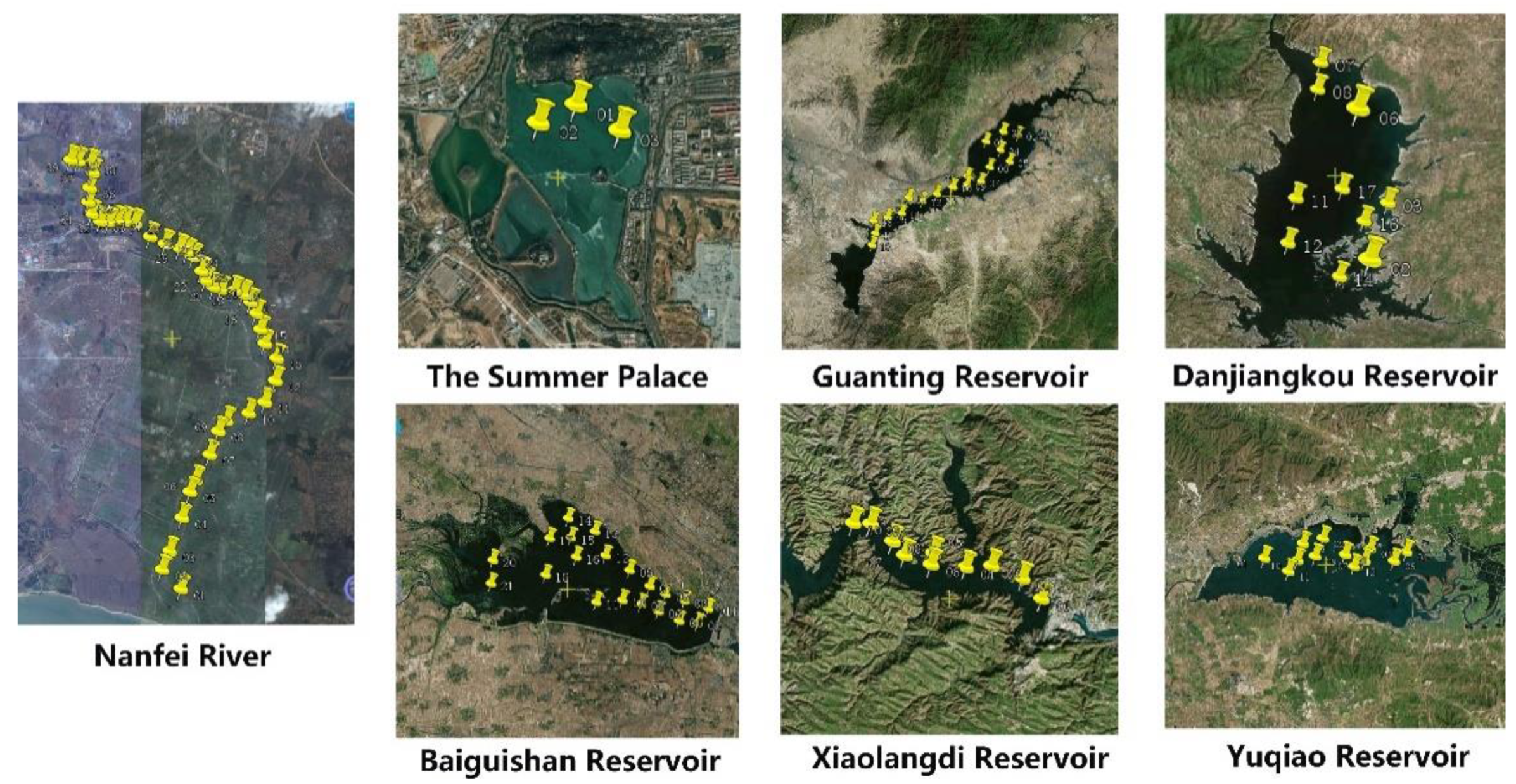

2.1. Study Area

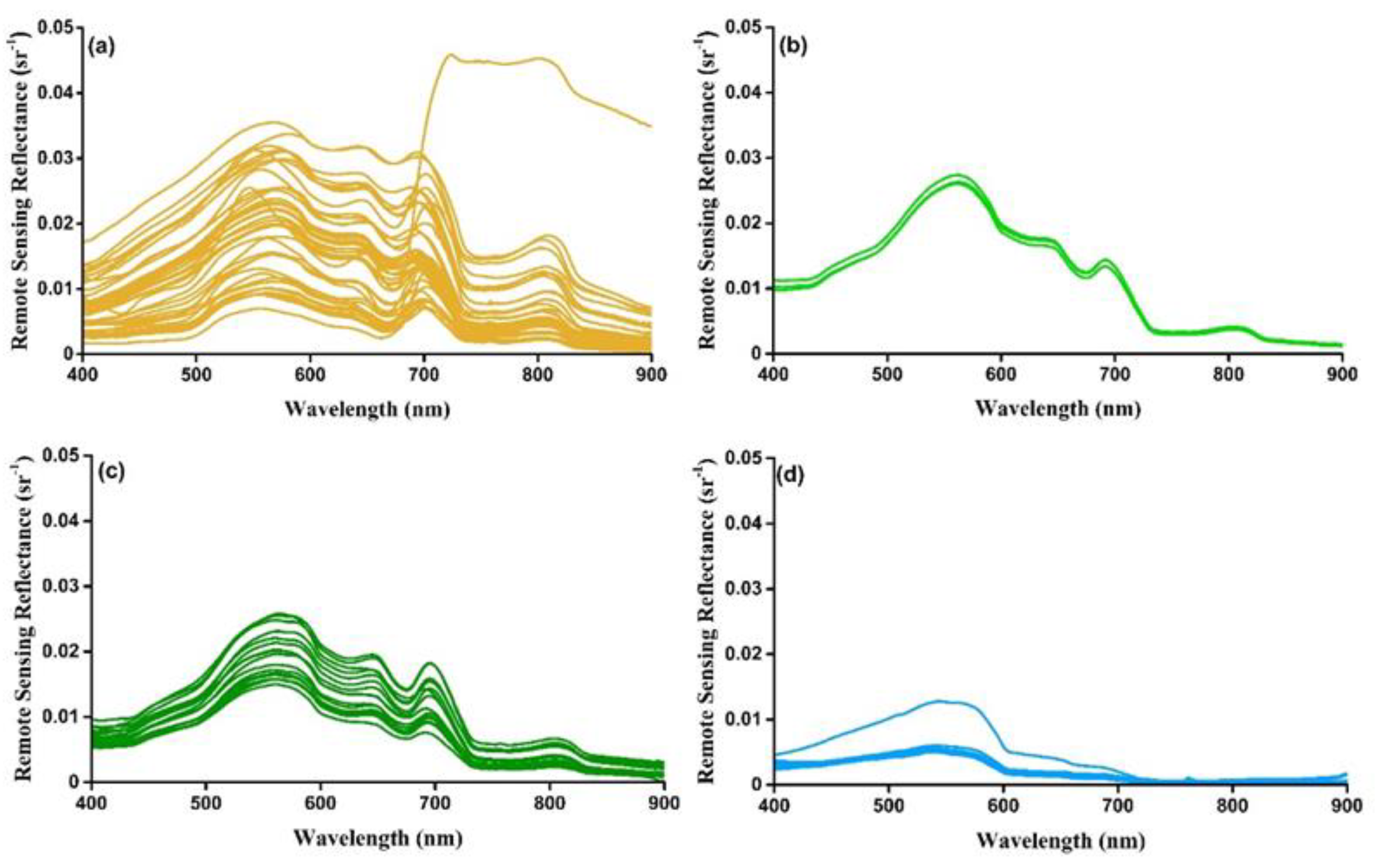

2.2. In Situ Dataset

3. Methods

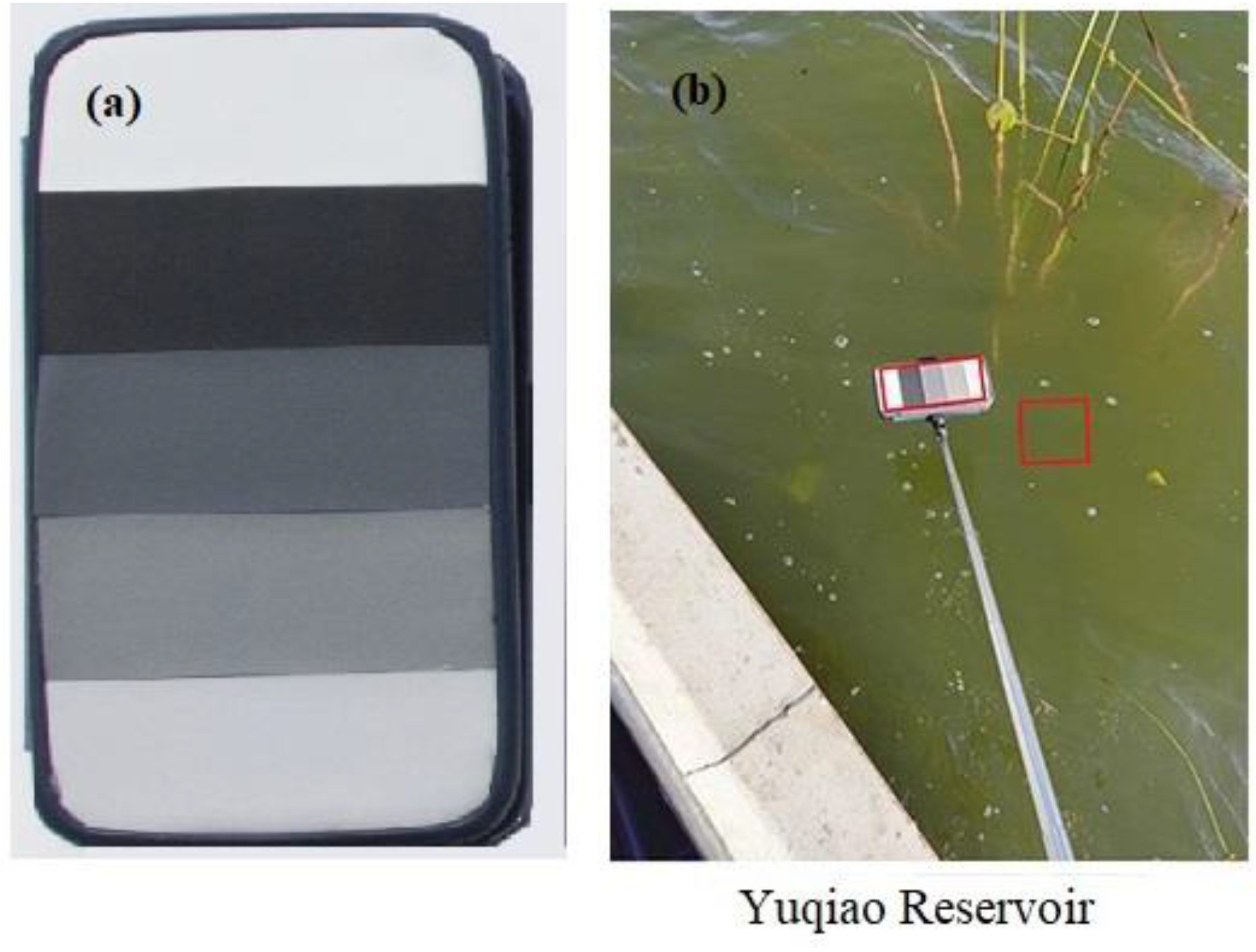

3.1. Water Surface Photographs Taken by Smartphone Camera

3.1.1. Observation Geometry Design

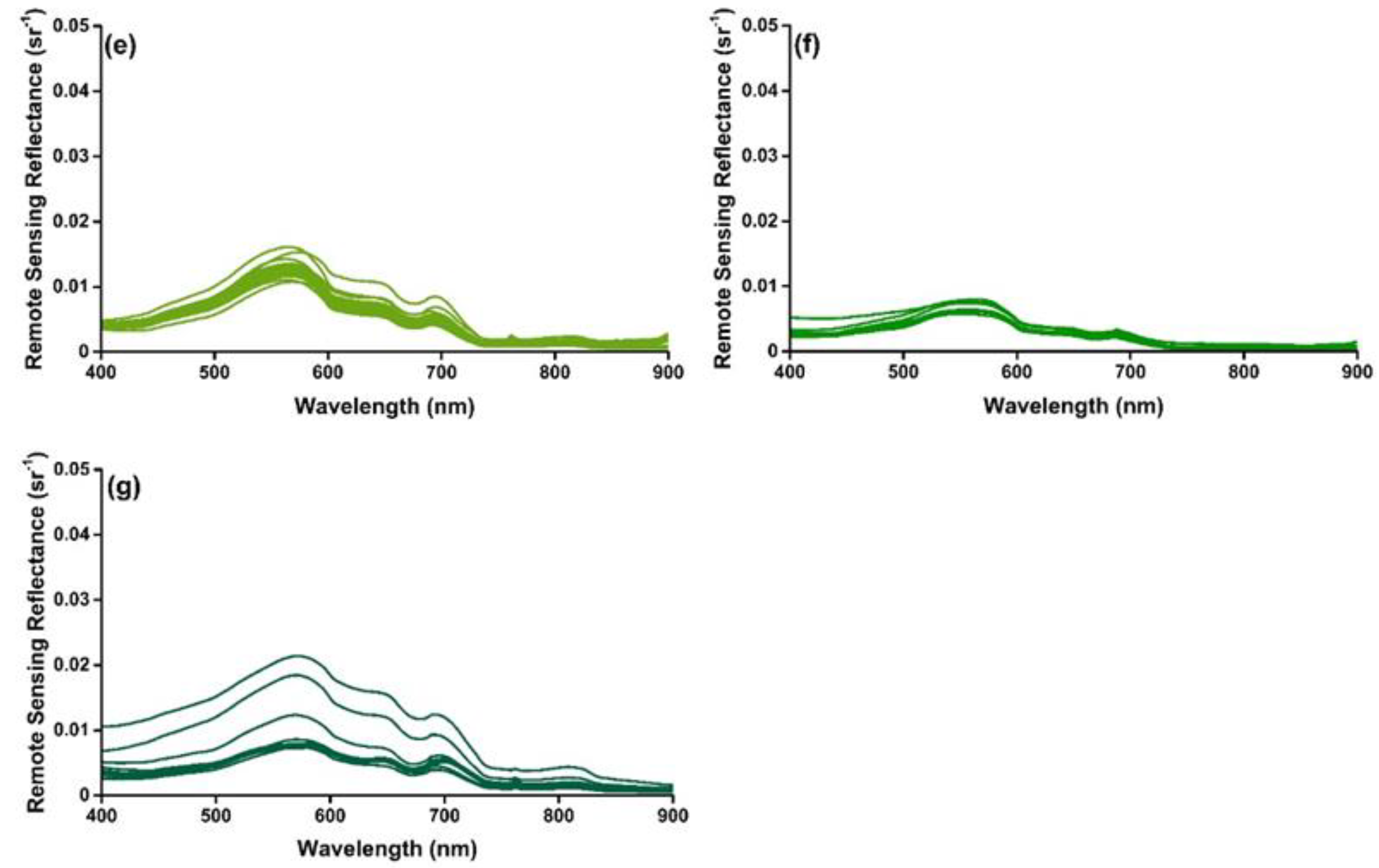

3.1.2. Five-Color Reference Card

3.2. Rrs Derivation from Water Surface Photograph

3.2.1. Reference Card and Water Body Photograph Clipping
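Clipping the card and water regions comes down, in practice, to averaging pixel values inside chosen crops. The sketch below assumes an H×W×3 array of linear digital numbers (DNs). The function name `roi_mean`, the rectangular crop and the optional rejection of the brightest pixels (a crude sun-glint filter) are hypothetical illustrations, not the paper's documented procedure.

```python
import numpy as np


def roi_mean(image, rows, cols, drop_top_percent=0.0):
    """Mean DN per channel inside image[rows, cols].

    image: H x W x C array of linear DNs (assumed already linearized).
    rows, cols: slice objects defining the rectangular crop.
    drop_top_percent: if > 0, discard pixels whose summed brightness lies
    above this upper percentile (hypothetical sun-glint filter).
    """
    crop = np.asarray(image, dtype=float)[rows, cols]
    # Flatten the crop to a (number_of_pixels, C) table
    pixels = crop.reshape(-1, crop.shape[-1])
    if drop_top_percent > 0:
        brightness = pixels.sum(axis=1)
        threshold = np.percentile(brightness, 100.0 - drop_top_percent)
        pixels = pixels[brightness <= threshold]
    return pixels.mean(axis=0)
```

For example, `roi_mean(photo, slice(100, 200), slice(300, 400), drop_top_percent=5.0)` would average a 100×100 pixel water patch after removing its brightest 5% of pixels.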

3.2.2. Water Reflectance Calculation
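Card-based remote sensing reflectance (Rrs) calculations are commonly built on the above-water relation of Mobley (1999). Assuming a Lambertian reference card of known reflectance $R_{card}$ photographed under the same illumination, the downwelling irradiance $E_d$ can be expressed through the card radiance. The sketch below shows this; the symbols, and the assumption that this exact form applies here, are not taken from the text:

$$
R_{rs} = \frac{L_t - \rho\,L_{sky}}{E_d}, \qquad E_d = \frac{\pi\,L_{card}}{R_{card}}
\quad\Longrightarrow\quad
R_{rs} = \frac{R_{card}}{\pi}\,\frac{L_t - \rho\,L_{sky}}{L_{card}}
$$

Here $L_t$ is the radiance viewed from the water surface, $L_{sky}$ is the sky radiance from the specularly reflected direction, and $\rho$ is the air-water surface reflectance factor. Mobley (1999) suggests $\rho \approx 0.028$ for a viewing angle of 40° from nadir and a relative azimuth of 135° from the sun at low wind speed. With a camera, each radiance is replaced by a linear, band-averaged pixel value.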

3.3. Water Quality Retrieval

3.4. Accuracy Evaluation

4. Results

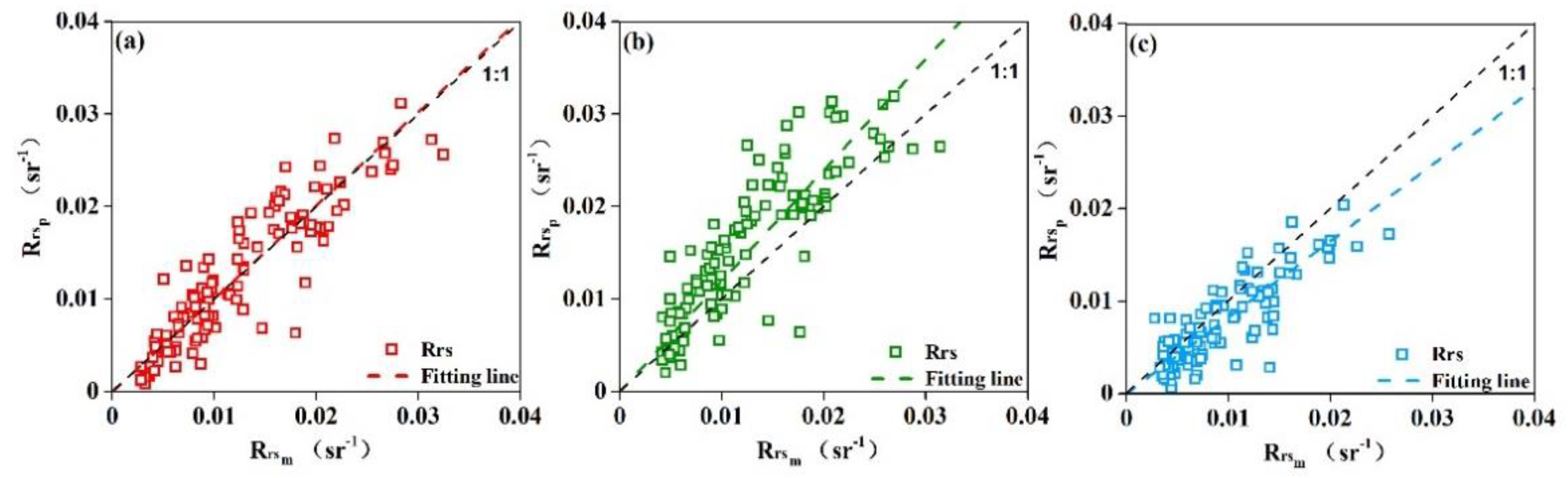

4.1. Validation of Smartphone Photograph–Derived Rrs

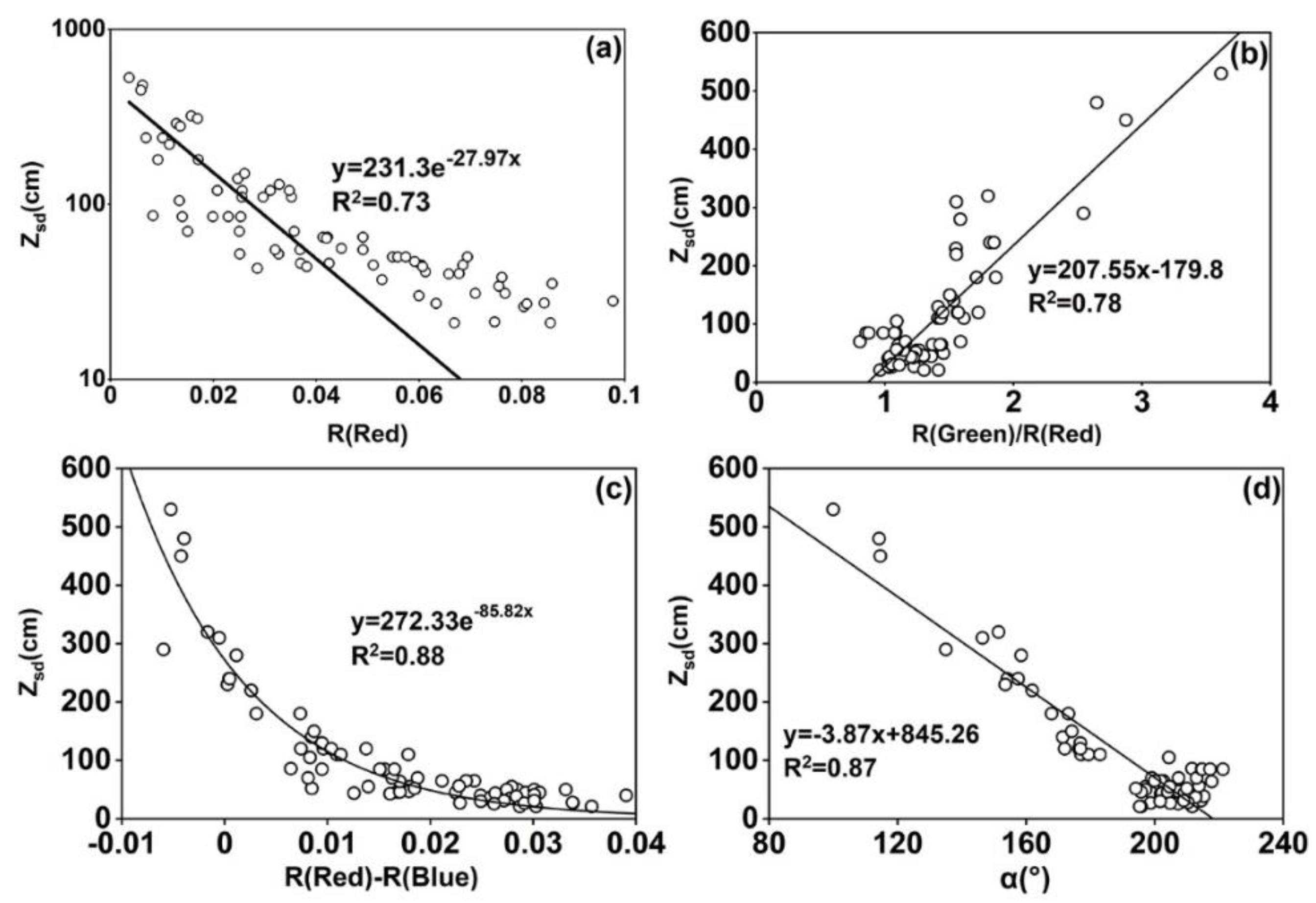

4.2. Secchi–Disk Depth Estimation Based on Smartphone Photography

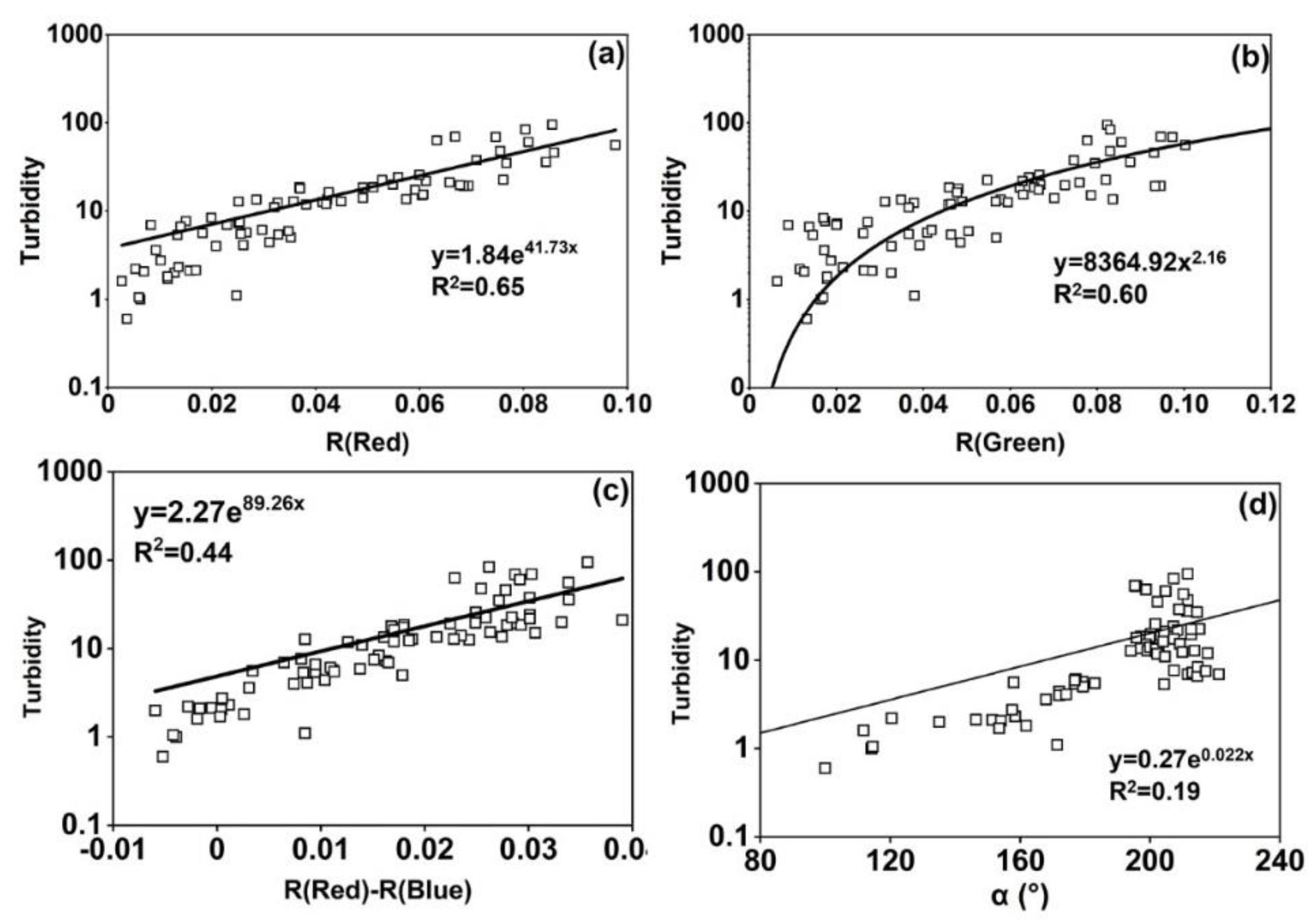

4.3. Turbidity Estimation Based on Smartphone Photography

5. Discussion on the Uncertainties Caused by Smartphone Parameter Settings

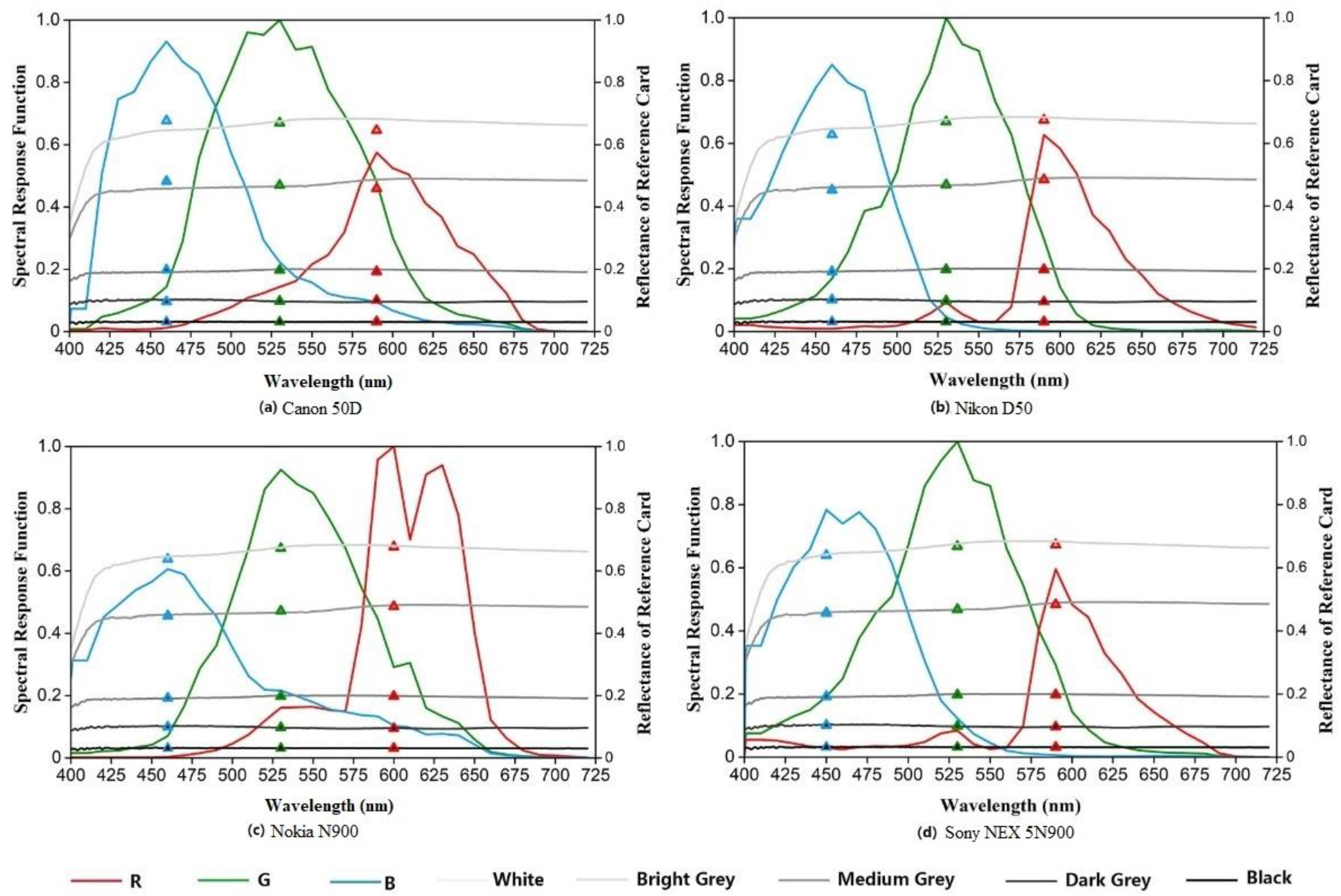

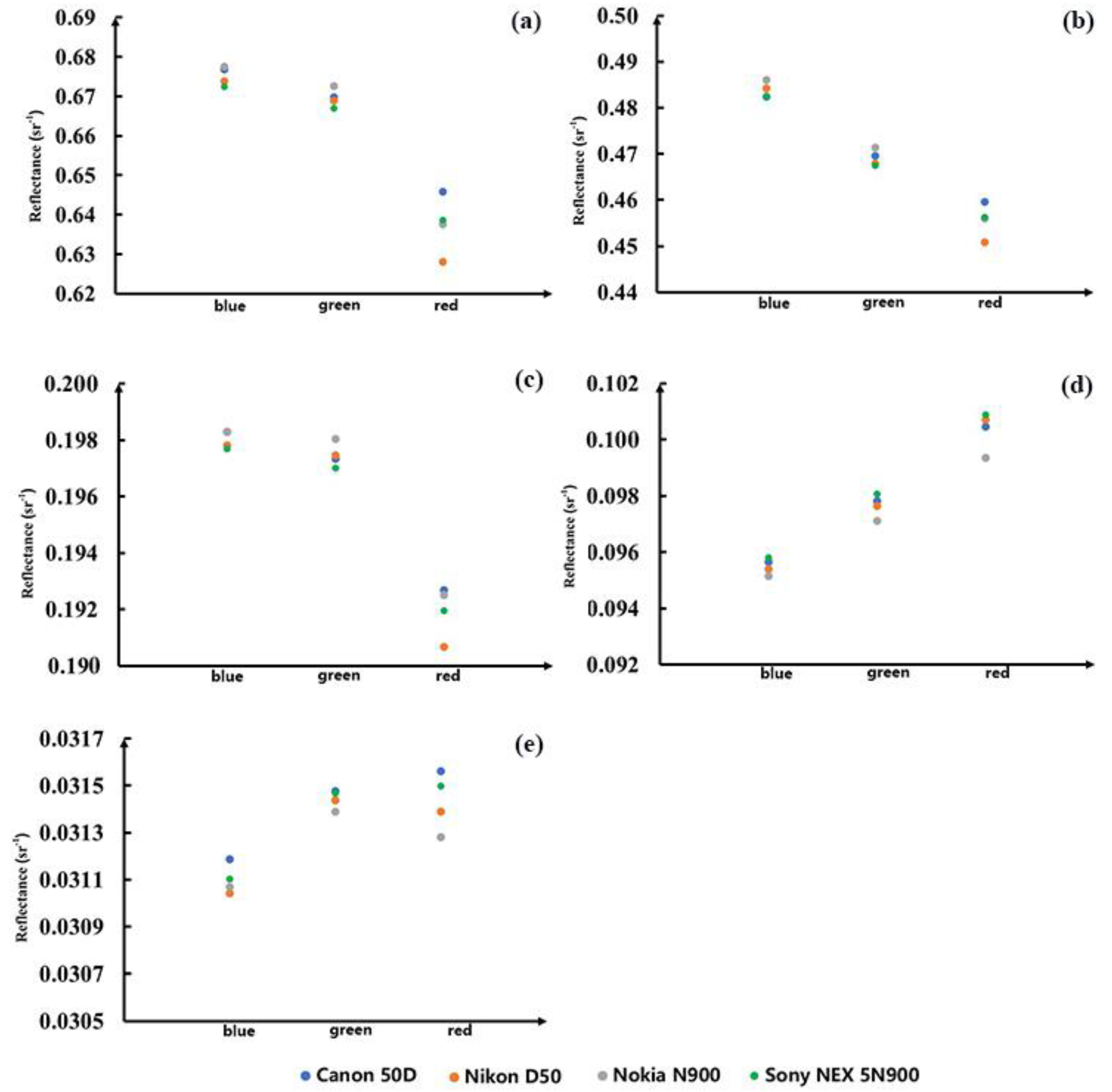

5.1. Uncertainties Caused by the Spectral Response Functions of Different Digital Cameras
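Different cameras integrate the same water spectrum through different spectral response functions (SRFs). As a result, the same water body yields different band values on different phones. A minimal sketch of the usual SRF-weighted band average, $\int \rho(\lambda) S(\lambda)\,d\lambda / \int S(\lambda)\,d\lambda$, appears below. The Gaussian SRF shape, the band centers and widths, and the example spectrum are hypothetical; real phone SRFs would need to be measured (for example, following Burggraaff et al., 2019).

```python
import numpy as np


def gaussian_srf(wavelength_nm, center_nm, fwhm_nm):
    """Hypothetical Gaussian spectral response, peak-normalized to 1."""
    wl = np.asarray(wavelength_nm, dtype=float)
    sigma = fwhm_nm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    return np.exp(-0.5 * ((wl - center_nm) / sigma) ** 2)


def band_equivalent(wavelength_nm, spectrum, srf):
    """SRF-weighted band value: sum(spectrum * srf) / sum(srf).

    Assumes a uniform wavelength grid, so the grid spacing cancels and the
    ratio of integrals reduces to a ratio of sums.
    """
    wl = np.asarray(wavelength_nm, dtype=float)
    step = np.diff(wl)
    if not np.allclose(step, step[0]):
        raise ValueError("wavelength grid must be uniform")
    s = np.asarray(spectrum, dtype=float)
    w = np.asarray(srf, dtype=float)
    return float(np.sum(s * w) / np.sum(w))


# Illustrative only: one made-up spectrum seen through two hypothetical green bands
wl = np.arange(400.0, 701.0, 1.0)
rrs = 0.01 * np.exp(-0.5 * ((wl - 560.0) / 40.0) ** 2)
phone_a = band_equivalent(wl, rrs, gaussian_srf(wl, 530.0, 80.0))
phone_b = band_equivalent(wl, rrs, gaussian_srf(wl, 560.0, 80.0))
```

The two "green" values differ even though the water is identical. This is why a camera-specific SRF matters when comparing smartphone-derived Rrs with spectroradiometer data.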

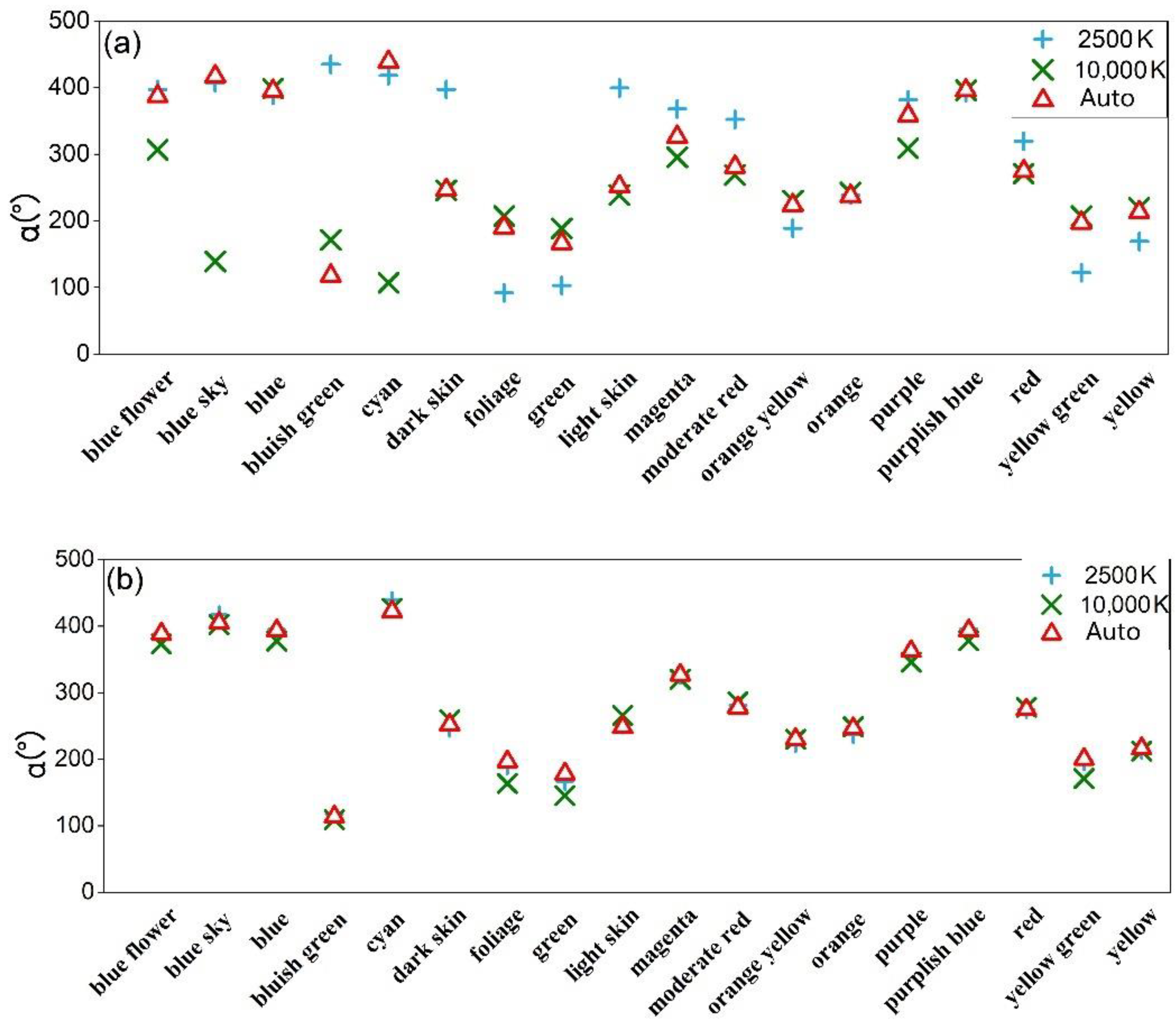

5.2. Uncertainties Caused by the Automatic White Balance
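An idealized argument shows why an in-frame reference card can partly absorb white-balance changes. It assumes that automatic white balance acts as a single multiplicative gain $g_c$ on each linear channel $c$, applied uniformly across the frame, and that the card and water appear in the same photograph:

$$
DN'_c = g_c\,DN_c
\quad\Longrightarrow\quad
R_{c,w} = R_{c,card}\,\frac{DN'_{c,w}}{DN'_{c,card}}
= R_{c,card}\,\frac{g_c\,DN_{c,w}}{g_c\,DN_{c,card}}
= R_{c,card}\,\frac{DN_{c,w}}{DN_{c,card}}
$$

Under these assumptions the gain cancels. Real camera pipelines also apply nonlinear tone curves and scene-dependent processing, however, so the cancellation is only approximate. White balance therefore remains a source of uncertainty.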

6. Conclusions

6.1. Calculation of Water Reflectance Based on Digital Photographs of the Water Surface

6.2. Inversion of Water Quality Parameters by Calculating Water Reflectance Based on Smartphone Water Surface Photographs

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Study Area Name | Center Longitude | Center Latitude | Sampling Date | Time Range (Local Time) | Number of Samples |
|---|---|---|---|---|---|
| Nanfei River | 117.40°E | 31.77°N | 22 May 2020 | 9:30–15:45 | 40 |
| The Summer Palace | 116.27°E | 39.99°N | 19 June 2020 | 10:15–14:15 | 3 |
| Guanting Reservoir | 115.73°E | 40.34°N | 13 August 2020 | 9:55–13:50 | 18 |
| Danjiangkou Reservoir | 110.58°E | 32.74°N | 2 September 2020 | 9:30–15:45 | 9 |
| Baiguishan Reservoir | 113.19°E | 33.73°N | 4 September 2020 | 10:15–14:15 | 20 |
| Xiaolangdi Reservoir | 112.33°E | 34.94°N | 22 October 2020 | 9:55–13:50 | 10 |
| Yuqiao Reservoir | 117.53°E | 40.04°N | 8 November 2020 | 9:30–15:45 | 12 |

| Study Area Name | Smartphone Model (Operating System) | Average Zsd (cm) | Standard Deviation of Zsd (cm) | Average Turbidity (NTU) | Standard Deviation of Turbidity (NTU) |
|---|---|---|---|---|---|
| Nanfei River | Mi 8 (Android) | 38.4 | 9.8 | 30.1 | 21.3 |
| The Summer Palace | Mi 8 (Android) | 46.7 | 2.4 | 20.3 | 1.6 |
| Guanting Reservoir | Mi 8 (Android) | 57.7 | 9.2 | 15.6 | 3.7 |
| Danjiangkou Reservoir | iPhone XS (iOS) | 455.7 | 85.5 | 1.2 | 0.6 |
| Baiguishan Reservoir | iPhone XS (iOS) | 122.2 | 29.0 | 5.4 | 1.9 |
| Xiaolangdi Reservoir | iPhone XS (iOS) | 245.0 | 42.0 | 2.2 | 0.3 |
| Yuqiao Reservoir | Honor 9 (Android) | 85.5 | 14.2 | 8.4 | 3.7 |

| Reference Card | Red Band Reflectance | Green Band Reflectance | Blue Band Reflectance |
|---|---|---|---|
| White card | 0.6769 | 0.6697 | 0.6458 |
| Bright gray card | 0.4824 | 0.4695 | 0.4596 |
| Medium gray card | 0.1983 | 0.1973 | 0.1927 |
| Dark gray card | 0.0957 | 0.0978 | 0.1005 |
| Black card | 0.0312 | 0.0315 | 0.0316 |
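
The five card reflectances above are enough to fit a per-band empirical line between pixel values and reflectance. That line can then be applied to water and sky pixels. The sketch below is only one possible implementation. It assumes linear DNs (RAW data, or JPEG values with the sRGB gamma removed), a Lambertian card, identical exposure and white-balance settings for the card, water and sky shots, and a surface reflectance factor of 0.028 taken from Mobley (1999). All function names are hypothetical.

```python
import numpy as np

# Nominal reflectance of the five cards (white, bright gray, medium gray,
# dark gray, black) in the red, green and blue bands, from the table above.
CARD_REFLECTANCE = np.array([
    [0.6769, 0.6697, 0.6458],
    [0.4824, 0.4695, 0.4596],
    [0.1983, 0.1973, 0.1927],
    [0.0957, 0.0978, 0.1005],
    [0.0312, 0.0315, 0.0316],
])


def fit_empirical_line(card_dn):
    """Fit DN = gain * reflectance + offset separately for each band.

    card_dn: (5, 3) array of mean linear DNs of the five cards (R, G, B).
    Returns (gain, offset), each of shape (3,).
    """
    card_dn = np.asarray(card_dn, dtype=float)
    gain = np.empty(3)
    offset = np.empty(3)
    for band in range(3):
        # np.polyfit with deg=1 returns [slope, intercept]
        gain[band], offset[band] = np.polyfit(
            CARD_REFLECTANCE[:, band], card_dn[:, band], 1)
    return gain, offset


def dn_to_reflectance(dn, gain, offset):
    """Invert the per-band line to get a reflectance-equivalent value (pi * L / E_d)."""
    return (np.asarray(dn, dtype=float) - offset) / gain


def remote_sensing_reflectance(water_dn, sky_dn, card_dn, rho_sky=0.028):
    """Per-band Rrs (sr^-1) = (R_t - rho_sky * R_sky) / pi.

    R_t and R_sky are the card-calibrated reflectance-equivalent values of
    the water and sky pixels; rho_sky is an assumed surface reflectance factor.
    """
    gain, offset = fit_empirical_line(card_dn)
    r_water = dn_to_reflectance(water_dn, gain, offset)
    r_sky = dn_to_reflectance(sky_dn, gain, offset)
    return (r_water - rho_sky * r_sky) / np.pi
```

Fitting all five cards, rather than scaling by one card, also absorbs a dark offset in the DNs. Dividing by π converts the reflectance-equivalent value into Rrs.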

| Band | RMSE (sr⁻¹) | AURE (%) | R² | Accuracy Ratio |
|---|---|---|---|---|
| Red | 0.0032 | 25.7 | 0.98 | 0.98 |
| Green | 0.0051 | 29.5 | 0.94 | 1.20 |
| Blue | 0.0031 | 35.2 | 0.92 | 0.80 |
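
The accuracy statistics in the table above can be computed as sketched below. The formulas are assumptions. AURE is taken as the mean of |estimated − measured| / measured, in percent. R² is taken as the squared Pearson correlation between estimates and in situ measurements, a common convention in validation studies. The accuracy ratio is not sketched because its definition is not stated in this text.

```python
import numpy as np


def rmse(estimated, measured):
    """Root-mean-square error, in the units of the inputs (sr^-1 for Rrs)."""
    e = np.asarray(estimated, dtype=float)
    m = np.asarray(measured, dtype=float)
    return float(np.sqrt(np.mean((e - m) ** 2)))


def aure(estimated, measured):
    """Assumed definition: mean of |estimated - measured| / measured, in percent."""
    e = np.asarray(estimated, dtype=float)
    m = np.asarray(measured, dtype=float)
    return float(100.0 * np.mean(np.abs(e - m) / m))


def r_squared(estimated, measured):
    """Assumed definition: squared Pearson correlation coefficient."""
    e = np.asarray(estimated, dtype=float)
    m = np.asarray(measured, dtype=float)
    return float(np.corrcoef(e, m)[0, 1] ** 2)
```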

| Zsd Inversion Model Name | AURE (%) | Accuracy Ratio | R² |
|---|---|---|---|
| (a) Red band model | 25.5 | 0.74 | 0.76 |
| (b) Green:red band ratio model | 37.5 | 0.75 | 0.89 |
| (c) Red and blue band difference model | 27.6 | 0.90 | 0.94 |
| (d) Chromaticity angle model | 27.0 | 0.85 | 0.92 |
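
The candidate predictors named in the Zsd table, several of which also appear in the turbidity table, can be derived from band Rrs as sketched below. The chromaticity angle is implemented here as a simple RGB analogue of the Forel-Ule hue angle: the angle of the (r, g) chromaticity point about the white point (1/3, 1/3). The paper's exact definition is not given in this text. The model form ln(Zsd) = a + b·x is likewise a hypothetical choice for illustration, and no fitted coefficients from the paper are reproduced.

```python
import numpy as np


def band_features(rrs_r, rrs_g, rrs_b):
    """Candidate predictors: red band, G/R ratio, R - B difference, chromaticity angle."""
    r, g, b = (np.asarray(x, dtype=float) for x in (rrs_r, rrs_g, rrs_b))
    total = r + g + b
    # RGB chromaticity coordinates (assumed stand-in for CIE x, y)
    x, y = r / total, g / total
    # Angle about the equal-energy white point, in degrees within [0, 360)
    angle = np.degrees(np.arctan2(y - 1.0 / 3.0, x - 1.0 / 3.0)) % 360.0
    return {
        "red": r,
        "green_red_ratio": g / r,
        "red_minus_blue": r - b,
        "chromaticity_angle": angle,
    }


def fit_log_linear(feature, target):
    """Hypothetical model ln(target) = a + b * feature, fitted by least squares."""
    b, a = np.polyfit(np.asarray(feature, dtype=float),
                      np.log(np.asarray(target, dtype=float)), 1)
    return a, b


def predict_log_linear(feature, a, b):
    """Apply the fitted model to new feature values."""
    return np.exp(a + b * np.asarray(feature, dtype=float))
```

Fitting in log space keeps predicted Zsd and turbidity positive. This matters because the study areas span more than an order of magnitude in both quantities.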

| Turbidity Inversion Model Name | AURE (%) | Accuracy Ratio | R² |
|---|---|---|---|
| (a) Red band model | 39.8 | 0.99 | 0.61 |
| (b) Green band model | 50.5 | 0.91 | 0.32 |
| (c) Red and blue band difference model | 35.7 | 0.82 | 0.57 |
| (d) Chromaticity angle model | 31.9 | 0.62 | 0.61 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, M.; Li, J.; Wang, S.; Zhang, F.; Yan, K.; Yin, Z.; Xie, Y.; Shen, W. Smartphone–Camera–Based Water Reflectance Measurement and Typical Water Quality Parameter Inversion. Remote Sens. 2022, 14, 1371. https://doi.org/10.3390/rs14061371
