Abstract

Land Surface Phenology is an important characteristic of vegetation, which can be informative of its response to climate change. However, satellite-based identification of vegetation transition dates is hindered by inconsistencies in different observation platforms, including band settings, viewing angles, and scale effects. Therefore, time-series data with high consistency are necessary for monitoring vegetation phenology. This study proposes a data harmonization approach that involves band conversion and bidirectional reflectance distribution function (BRDF) correction to create normalized reflectance from Landsat-8, Sentinel-2A, and Gaofen-1 (GF-1) satellite data, characterized by the same spectral and illumination-viewing angles as the Moderate-Resolution Imaging Spectroradiometer (MODIS) and Nadir BRDF Adjusted Reflectance (NBAR). The harmonized data are then subjected to the spatial and temporal adaptive reflectance fusion model (STARFM) to produce time-series data with high spatio–temporal resolution. Finally, the transition date of typical vegetation was estimated using regular 30 m spatial resolution data. The results show that the data harmonization method proposed in this study assists in improving the consistency of different observations under different viewing angles. The fusion result of STARFM was improved after eliminating differences in the input data, and the accuracy of the remote-sensing-based vegetation transition date was improved by the fused time-series curve with the input of harmonized data. The root mean square error (RMSE) estimation of the vegetation transition date decreased by 9.58 days. We concluded that data harmonization eliminates the viewing-angle effect and is essential for time-series vegetation monitoring through improved data fusion.

1. Introduction

Land surface phenology (LSP) is an important indicator of climate change [1]. In the past few decades, affected by global climate change, the phenology of terrestrial vegetation has undergone significant changes. European deciduous forest leaf unfolding is 4.2 days earlier every ten years on average [2]. In China, the time of leaf unfolding in deciduous forests is an average of 5.5 days earlier every ten years, which is a greater rate of phenological change than in Europe [3]. Vegetation monitoring can clarify the dynamics of different vegetation types and then reveal the spatial and temporal characteristics of climate change [2]. Therefore, accurate and detailed phenological information is of great value for regional and global climate change studies [4].

Many studies have obtained satellite-based remote-sensing datasets for vegetation monitoring. Data from the Moderate-Resolution Imaging Spectroradiometer (MODIS) have been widely used to obtain phenological information from different types of vegetation [5,6]. Long-term Data Record (LTDR) Advanced Very High-Resolution Radiometer (AVHRR) observations have been successfully used to monitor distinct phenological events on a global scale [7,8]. The Visible Infrared Imaging Radiometer Suite (VIIRS) instrument onboard the Suomi National Polar-orbiting Partnership (Suomi-NPP) was also used to extend the long-term LSP data records that began with AVHRR and MODIS [9,10]. Although the data have a relatively coarse spatial resolution (≥250 m), the short revisit time gives the data an advantage in LSP retrieval [11,12].

However, owing to the comparatively coarse spatial resolution of these various satellite sensors, detecting phenological dynamics in heterogeneous landscapes can be difficult [10,13]. For a simulation analysis, the variance of the finer pixels in one coarse pixel for the start of the season can be as high as 40 days [14]. The coarse spatial resolution of remote-sensing data makes it difficult to capture the phenology in regions with multiple types of vegetation owing to the mixed-pixel problem, and the ground-measured data cannot be well-matched with the remote-sensing LSP derived by the coarse spatial resolution data [15].

High-resolution data have more detailed spatial information and have been used widely in vegetation phenology mapping [16]. However, high-spatial-resolution data often have a long revisit period, which cannot effectively capture the rapid changes in vegetation [11]. Furthermore, optical remote-sensing systems would face constant data loss in vegetation regions that are often clouded during the growing season. In the mid-latitude region, the average yearly cloud-free probability was 21.3% [17]. The above two points make the use of high-spatial-resolution data for phenological monitoring ineffective.

Integrating imagery from various remote-sensing platforms is a feasible method to compensate for a lack of data, which increases the frequency of observations and therefore collects more data [18,19]. In addition, it uses a variety of data types, including spatial detail [20], temporal information [9], and spectral ranges [21]. Harmonized Landsat Sentinel-2 (HLS) data have been widely used for monitoring vegetation [18,22,23]. Through the combination of the two sensors, a data-set with a repeat cycle of 3.2 days and a 30 m spatial resolution can be formed, which provides a stable data source for high-spatial-resolution LSP monitoring [24]. However, using different types of data in terms of spectral, spatial resolution, and illumination-viewing geometries will likely introduce uncertainties, which will eventually be passed onto vegetation monitoring based on the vegetation index (VI) [10,25]. Therefore, different remote-sensing datasets cannot be combined directly, and the inconsistencies between different datasets should be taken into consideration [26].

Spatio–temporal data fusion is another methodology for integrating imagery from various remote-sensing platforms [27]. Fusion models, such as the spatial and temporal adaptive reflectance fusion model (STARFM), use the spatial and temporal information provided by different satellite data to reconstruct high spatio–temporal resolution data, resulting in higher spatial resolutions and time sampling frequencies for remote-sensing data [28]. However, most applications of STARFM do not consider the difference in the illumination-viewing geometries of the input data [29]. Therefore, the MODIS nadir bidirectional reflectance distribution function adjusted reflectance (NBAR) and Landsat series data provide the major input data for the similar spectral settings and illumination-viewing geometry of these two datasets [28,30]. However, owing to the influence of cloud cover, high-spatial-resolution data are often unavailable. Therefore, the input data sources of the fusion model need to be enhanced to improve the stability of the output results of the model [31,32]. However, the difference between different high-spatial-resolution data in terms of illumination-view geometries and spectral characteristics will increase the uncertainty in the fused result [28].

VI is a useful reference for vegetation monitoring [33], and constructing a time-series VI curve is the main step in phenological retrieval. However, time-series VI is heavily influenced by the differences in the spectral and illumination-viewing geometries of the observations [34]. Different satellite observations may vary significantly owing to differences in spectral and illumination-viewing geometry. The difference caused by the spectrum is determined by the spectral range and relative spectral response (RSR) [35], and the difference caused by the illumination-view geometry is determined by the surface bidirectional reflectance distribution function (BRDF) [36]. Nagol et al. (2015) [37] revealed that the difference in reflectance before and after the vegetation growing season in the red and near-infrared (NIR) bands of Landsat-5 can exceed 30% caused by the variation in the solar zenith angle (SZA). Moreover, the VI in a nadir viewing usually increases with an increase in the SZA [38]. Yang et al. (2017b) [39] showed that the reflectance of the MODIS red band under the same SZA and relative azimuth angle (RAA) differed by up to 15% with different view zenith angles (VZA).

In this article, we proposed a data harmonization method for normalizing different high-spatial-resolution reflectances with similar spectral and illumination-viewing properties. The STARFM was then applied to the harmonized data to produce time-series and high-spatio–temporal-resolution reflectance data. Finally, the transition date of the typical vegetation types was determined using the fused 30 m spatial resolution reflectance data. The objectives of this study are as follows: (1) to propose a data harmonization algorithm to eliminate the spectral and angular variations among different remote-sensing data, (2) reconstruct the time-series and fine-resolution reflectance data, and (3) investigate the viewing-angle effects in remote-sensing data with different VZAs and improve the accuracy of vegetation phenology detection.

2. Materials and Methods

2.1. Materials

2.1.1. In Situ Measurement

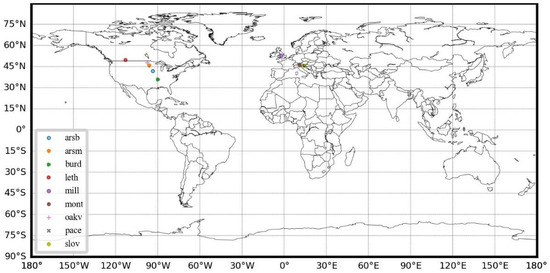

Near-surface digital repeat photography is an important data source for vegetation monitoring, and can also provide an assessment of phenological information obtained from satellite data [40,41]. The PhenoCam tracks vegetation status every 30 min from dawn to dusk using digital photographs [42] (https://phenocam.sr.unh.edu/webcam/, accessed on 10 February 2022). To ensure the reliability of the conclusions, we monitored different vegetation types across various climatic regions. The in situ measurements were collected from nine PhenoCam sites with different climate and vegetation types (Table 1), and the spatial distribution of in situ sites is shown in Figure 1. Digital photographs were used to provide in situ measurements to evaluate satellite-derived vegetation phenological information. The digital images were acquired between 10:00 a.m. and local solar noon from 1 January to 31 December 2020, every day, which was consistent with the acquisition time of the corresponding remote-sensing data in situ.

Table 1.

Overview of the nine PhenoCam study sites.

Figure 1.

The locations of the selected in situ PhenoCam sites.

2.1.2. Satellite Data

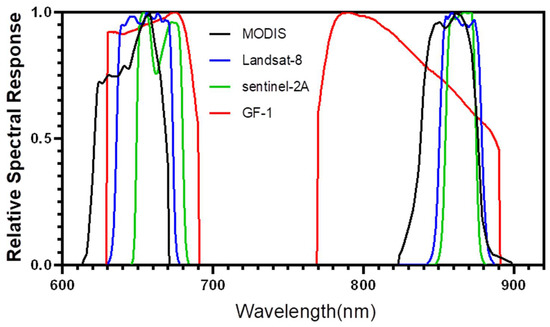

Multiple types of satellite data were used in this study. The Landsat-8 data used in our study are the atmospherically corrected surface reflectance data downloaded from the United States Geological Survey (USGS) archive (http://earthexplorer.usgs.gov/, accessed on 10 February 2022), generated from the Landsat Ecosystem Disturbance Adaptive Processing System (LEDAPS) [43,44]. The Sentinel-2A Multi-Spectral Instrument (MSI) L2A data were distributed by the European Space Agency Open Access Hub (https://scihub.copernicus.eu/, accessed on 10 February 2022). The Gaofen-1 (GF-1) wide-field viewer (WFV) L1A data were collected from the China Center for Resources Satellite Data and Application (CRESDA) (http://www.cresda.com/CN/, accessed on 10 February 2022), and the surface reflectance of GF-1 was obtained by 6S atmospheric correction model [45]. Detailed information on the above three kinds of data is shown in Table 2, and the red (R) and NIR bands RSR of the different sensors are shown in Figure 2. The auxiliary satellite-based data include the MODIS collection V006 500 m spatial resolution daily gridded NBAR [46] and Ross-Li kernel-driven parameter product (MCD43A1) [36,47], and MODIS aerosol products (MOD04_L2) [48]. NBAR, MCD43A1, and MOD04_L2 were downloaded from NASA’s official website (https://search.earthdata.nasa.gov/, accessed on 10 February 2022).

Table 2.

Basic information about the three fine-resolution satellite sensors.

Figure 2.

Comparison of RSRs for MODIS, OLI, MSI, and WFV.

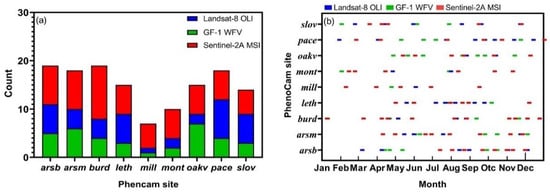

In this study, the image acquisition time ranged from 1 January to 31 December 2020, and only images without cloud cover in the study area were used. Each PhenoCam site is defined by its center and a 45 × 45 km square (i.e., 1500 × 1500 pixels of Landsat-8 OLI) is taken as the spatial range. The cloudless observations in this range are used as effective observations for vegetation monitoring. The count of effective observations of Landsat-8 OLI, Sentinel-2 MSI, and GF-1 WFV at nine PhenoCam sites is shown in Figure 3a. The day of the year of the three datasets is shown in Figure 3b. It can be seen from Figure 2 that under different climate types, the number of effective observations in each PhenoCam is quite different. The average number of effective observations at the nine stations was 15 in 2020. From the perspective of time distribution, most PhenoCam sites lack effective observations during the start of the season (i.e., March and April). For example, arsb has only one effective observation, while the leth and oakv sites do not have effective observations. Moreover, during the middle of the season (i.e., June and July), some PhenoCam sites lacked effective observations. There were no observations at arsb and mont and only one observation at the burd. The lack of effective observations during the growing season creates uncertainty in remote-sensing vegetation phenology mapping [12]. Therefore, it is critical to reconstruct the intensive observation data.

Figure 3.

Count of effective observations (a) and the DOY (b) of Landsat-8 OLI, Sentinel-2 MSI, and GF-1 WFV at nine PhenoCam sites. The red is Sentinel-2 MSI, the blue is Landsat-8 OLI, and the green is GF-1 WFV. The name of the site is abbreviated corresponding to the name in Table 1.

2.2. Methods

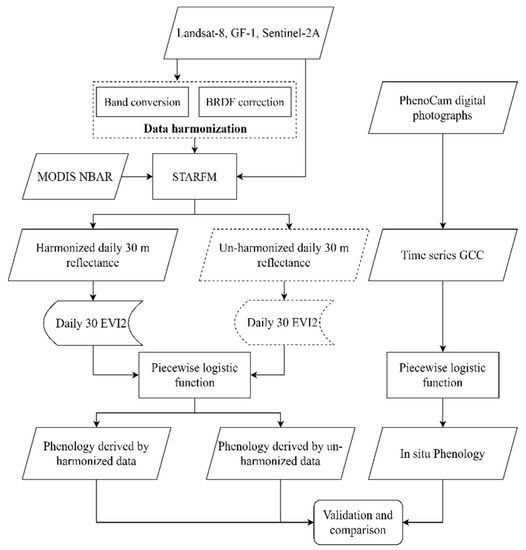

First, the PhenoCam digital photographs were processed to produce time-series ground measurements. Second, a data harmonization method involving band conversion and BRDF correction was proposed to normalize the multisource 30 m satellite data. Daily 30 m reflectance data were further derived by applying the STARFM algorithm to the harmonized data and MODIS NBAR. Third, we extracted the vegetation phenology by applying a piecewise linear fitting algorithm to the daily 30 m VI data and time-series in situ measurements, respectively. Finally, we validated the vegetation transition date derived from the harmonized and unharmonized 30 m data respectively using phenological information extracted from digital repeat photographs. The workflow is shown in Figure 4 and each step is described in the following sections.

Figure 4.

Land surface phenology retrieval workflow chart.

2.2.1. Processing In Situ PhenoCam Data

The objective of digital photograph preprocessing is to obtain the green chromatic coordinates (GCC), which is a commonly used index for measuring green vegetation in near-sensing images [42]. The digital number (DN) of the digital photograph was converted to the adjusted DN value using Equation:

where DNA is the adjusted DN value, DN is the pixel value of the digital photograph, and E is the exposure time, which can be obtained from the metadata of the photo. To eliminate the influence of mixed pixels, we selected the vegetation pixels in the photograph and manually determined the region of interest (ROI).

When the ROI is determined, the GCC of all pixels of the phenological camera photos in the ROI can be calculated using Equation:

where GCC represents the green chromatic coordinates, and DNr, DNg, DNb are the red, green, and blue components of the adjusted DN value. As the phenology camera made observations every half an hour, and the selected time of the photograph data is 2 h, there will be multiple GCC values for a day. Therefore, the average GCC value for each day is selected as the GCC value of the day.

2.2.2. Harmonizing Satellite Data

The inconsistent observations registered by different sensors are mainly due to the different characteristics of the spectral and illumination-viewing geometries [24]. The objective of data harmonization is to solve the problem of inconsistent observations from the input data, which is not addressed in STARFM. Since the Landsat-8 and Sentinel-2A have similar characteristics on overpass time and observation angles [49,50], this study focused on the harmonization of MODIS NBAR and GF-1. The data harmonization includes (1) adjusting data to represent the response from a common spectral band, and (2) normalizing illumination-viewing geometry via BRDF correction. In this study, the MODIS NBAR was chosen as the reference to harmonize the Landsat-8 OLI, Sentinel-2A MSI, and GF-1 WFV. Each substep was elaborated on below.

- Band conversion

The differences in the observations of different sensors that arise from different RSRs need to be considered. Even for wavelengths designed to have similar coverage, the spectral reflectance can be substantially different because of the RSR [51]. Thus, the sensor signal difference arising from the RSR difference must be considered. As shown in Figure 1, the GF-1 WFV has a broad spectral coverage among the red and NIR bands when compared with the other sensors, and has a significant variation in RSR, especially in the NIR band. Thus, spectral matching should be conducted to mitigate the differences in RSRs. The reflectance for an object in a certain band can be estimated using:

where is ρ the simulated reflectance of a certain band, λ is the wavelength, Γ(λ) is the relative spectral response, a and b are the spectral range of a specific band, and f(λ) is the in situ measured spectrum. In this paper, 245 surface reflectance spectrum samples were collected from the United States Geological Survey (USGS) and advanced spaceborne thermal emission and reflection radiometer (ASTER) spectral libraries [52]. These samples included vegetation, soil, rock, water, snow, and ice [35,53]. The surface spectrum was then used to establish the relationship between the reference sensor and target sensor using a linear regression model:

where ρMODIS is the simulated reflectance of MODIS, i is the band index of MODIS including red and NIR bands, k and ε are the band conversion coefficients, and j is the band index corresponding to MODIS red and NIR bands of the high-spatial-resolution data.

- BRDF correction

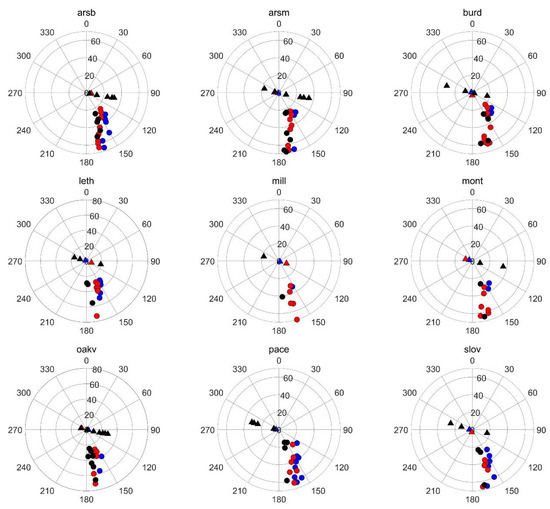

The illumination-viewing geometries of the center pixels of each PhenoCam site are plotted in Figure 5. It was observed that the satellite overpass times of all PhenoCam sites were close, and the time difference was approximately 2 h. Among the effective observations of all PhenoCam sites in 2020, the VZAs of Landsat-8 OLI and Sentinel-2A MSI are relatively concentrated, and the values are fixed within 7° and 9°, which can be considered to be nadir viewing sensors. However, the VZAs of GF-1 WFV vary greatly, and the maximum value can exceed 35°. The average difference in the VZAs of the images obtained by the three sensors at all PhenoCam sites was 26.20°. Such a high amplitude of variation in VZAs of the remote-sensing data will inevitably affect the consistency of the observation results, so the viewing-angle effect between different observations needs to be eliminated.

Figure 5.

Illumination-viewing geometries of different satellite data used in this study. The triangles represent the location of the sensor, and the circles represent the location of the sun. The blue represents Landsat-8 OLI, the red represents Sentinel-2 MSI, and the black represents GF-1 WFV.

To implement the BRDF correction of different data, the following steps were performed: First, MCD43A1 was used to estimate the surface reflectance of the two instruments. The theoretical basis of this product is that the surface reflectance observed from a certain direction can be simulated as a sum of three kernels representing basic scattering types: isotropic, volumetric, and geometric-optical surface [54,55,56]. The equation is as follows:

where R is the surface directional reflectance; fiso, fvol, and fgeo are the coefficients; θs, θv, and φ are the SZA, VZA, and RAA of the sensor and the sun, respectively. The simulation of surface reflectance in any specific illumination-view geometry can be performed using the MODIS BRDF parameters MCD43A1 (fiso, fvol, and fgeo), when the SZA, VZA, and RAA were determined.

We then established the relationship between R and NBAR. The spatial resolution of MCD43A1 is 500 m, and considering the “mixed-pixel” effect, we chose the homogeneous pixels in the R and NBAR to build the BRDF correction model. An objective and automatic method for selecting homogeneous pixels was proposed in this study. First, a 30 × 30 window was built on the GF-1 WFV surface reflectance based on the selected pixels on R. The homogeneous pixels were chosen by the coefficient of variation (CV), which was calculated as follows:

where σ and μ are the standard deviation and average value of surface reflectance in the window, respectively. If the CV of the pixels within the window is less than 1%, then the coarse MODIS pixel related to the pixels in this window could be considered a homogeneous pixel pair [57].

Due to the non-linear relationship between the directional reflectances under different illumination-viewing geometries of GF-1 WFV and MODIS NBAR, we built a piecewise linear model to calculate the BRDF correction coefficient between R and NBAR. It was assumed that the same land cover type has the same BRDF shape, but the magnitude of reflectance may vary [34,58,59]. The normalized difference vegetation index (NDVI) was used as an index to distinguish different land cover types (e.g., bare soils, vegetation, snow/ice, and water). For the same land cover type, variations in the BRDF shape throughout the year were limited and linked to the NDVI [60,61]. The homogeneous pixels were divided into several classes with an interval of 0.2 (>0, 0–0.2, 0.2–0.4, 0.4–0.6, and >0.6) based on NDVI [34]. Then, a linear model was built on each class for each band in R and NBAR, as shown in Equation (7). Equation (8) shows a combined set of regression coefficients for all NDVI intervals for a spectral band:

where R′ is the surface reflectance of MODIS NBAR, P and Q are the BRDF correction coefficients for a specific spectral band and NDVI interval, i is the class index, and j is the band index. After the linear models of all classes were built, the piecewise linear BRDF correction model for a spectral band in a scene was obtained.

To apply the BRDF correction coefficients derived by the R and R′ to the true observations of GF-1 WFV, we assumed that the BRDF shape is dependent on land cover type and independent of the spatial resolution [62]. Then, we calculated the NDVI of the surface reflectance of GF-1 WFV and divided it into several classes with an interval of 0.2. We applied P and Q to the same class in each band derived from the surface reflectance of the GF-1 WFV:

where p and q are the BRDF correction coefficients for the surface reflectance of GF-1 WFV. Therefore, the directional reflectance under the illumination-viewing geometry of MODIS NBAR can be simulated by the surface reflectance of GF-1 WFV as follows:

where ρ (θs, θv = 0, φ) is the 16 m spatial resolution harmonized directional reflectance under the illumination-viewing geometry of MODIS NBAR, and ρ (θs′, θv′, φ′) is the true surface reflectance of the GF-1 WFV. For a more detailed description of the data harmonization algorithm, we refer to Lu et al. (2022) [26].

2.2.3. Generating Time-Series Vegetation Index Data

In this study, the daily 30 m spatial resolution surface reflectance data were reconstructed using STARFM. STARFM is the earliest developed data fusion method based on the weight function which determines how much each neighboring pixel contributes to the estimated reflectance of the central pixel [27]. STARFM assumes that changes in reflectance are consistent and comparable at different spatial scales over a homogeneous surface. This means that fluctuations existed in coarse spatial resolution data can be introduced directly to the estimation at high resolution.

STARFM uses a linear model to establish the relationship between high-spatial-resolution data (e.g., Landsat) and coarse spatial resolution data (e.g., MODIS). First, MODIS data were reprojected and resampled to fit the Landsat data format. Second, a moving window was built on Landsat data to identify similar neighboring pixels. Third, a weight was assigned to each similar neighbor based on the difference between the surface reflectances of the Landsat-MODIS image pair, which includes the spectral difference, the temporal difference, and the spatial Euclidean distance between the neighbor and the central pixel. Finally, the central pixel can be predicted at a high spatial resolution by the equation below:

where L is the estimated Landsat surface reflectance, (xi, yi) is the pixel location, ω is the window, t0 is the prediction date, Wijk is the weight function, M is the MODIS true observation, and tk is the true observation date. For a more detailed description of the STARFM algorithm, we refer to Gao et al. (2006) [27].

Several VIs have been implemented to monitor the dynamics of vegetation, including the normalized difference vegetation index (NDVI) [5], enhanced vegetation index (EVI) [63], and soil adjusted vegetation index (SAVI) [64]. The two-band enhanced vegetation index (EVI2) has the capability to reduce background noise and has enhanced sensitivity over dense vegetation canopies. In addition, EVI2 has been shown to have advantages for LSP detection over the commonly used NDVI [65,66]. Therefore, after reconstructing the daily 30 m spatial resolution reflectance data through STARFM, we calculated EVI2 using Equation (12) and constructed the time-series EVI2 data as an indicator of vegetation dynamics.

where ρNIR and ρred are the surface reflectance of the NIR and red bands, respectively. The EVI2 time-series usually fluctuates and presents missing values owing to the interference of atmospheric factors such as aerosols, dust, clouds, and snow. Therefore, it was temporally filtered before the phenology extraction. A Savitzky–Golay (S–G) filter is applied first to remove outliers in the EVI2 series. This was performed twice. The local window size was set to 65, 45 and the polynomial degree was set to 2 and 4, respectively. Finally, a gap-filling method based on inverse distance weighted using the two nearest EVI2 values was performed.

2.2.4. Vegetation Phenology Detection and Validation

In this study, we estimated the following transition date of the different vegetation types over different regions: (1) greenup, the date photosynthesis started; (2) maturity, the date when the plant has the largest green leaf area, (3) senescence, the date when photosynthetic activity and green leaf area begin to decrease rapidly; and (4) dormancy, the date when physical activity approaches zero [5]. The piecewise logistic function is a widely used method for VI data fitting, and can be modeled using a function of the form:

where t is time in days, y(t) is the VI data at time t, a and b are fitting parameters, c + d is the maximum VI value, and d is the initial background VI value. After curve fitting using the piecewise logistic model, the vegetation transition date can be identified by the curvature and rate of change of curvature. For calculation of the two values, we refer to Zhang et al. (2003) [5]. For each growth cycle, the rate of change in the curvature of the fitted logistic model was calculated. Four transition dates were then extracted. Greenup and maturity were identified during the growth phase, and senescence and dormancy were identified during the senescence phase.

We calculated the bias and root mean square error (RMSE) to evaluate the vegetation transition date detection results based on multisource remote-sensing data. The vegetation transition dates retrieved by PhenoCam data were taken as the true value, and a comparison of in situ vegetation transition dates with the corresponding pixel in the image was made. The calculation formulas of bias and RMSE are given by Equations (14) and (15):

where xi and yi are the transition dates of the validation site derived from in situ PhenoCam data and remote-sensing data, respectively, is the average value of the transition dates, and n is the number of the transition date.

3. Results

3.1. Data Harmonization Result

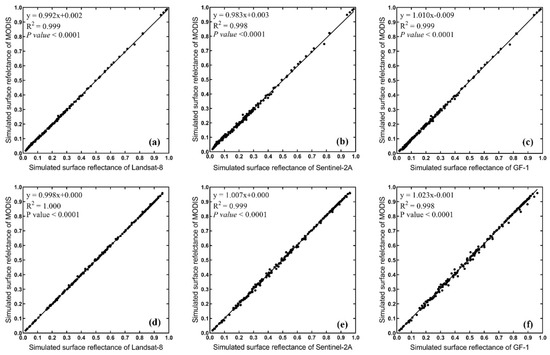

The calculation of EVI2 requires information on the reflectance in the red and NIR bands. We used simulated surface reflectance of MODIS as the standard to calculate the conversion coefficients of the red and NIR bands of Landsat-8, Sentinel-2A, and GF-1WFV. As shown in Figure 6, the spectral differences between the red and NIR bands of MODIS and Landsat-8 OLI are relatively small, with the slope of the linear model close to 1, while the spectral differences between the corresponding bands of Sentinel-2A MSI and GF-1 WFV are relatively high.

Figure 6.

Band conversion coefficients of red (a–c) and NIR (d–f) bands of different sensors with the corresponding MODIS bands. The x-axis is the simulated reflectance of the 245 ground types of each sensor, and the y-axis is the simulated reflectance of MODIS.

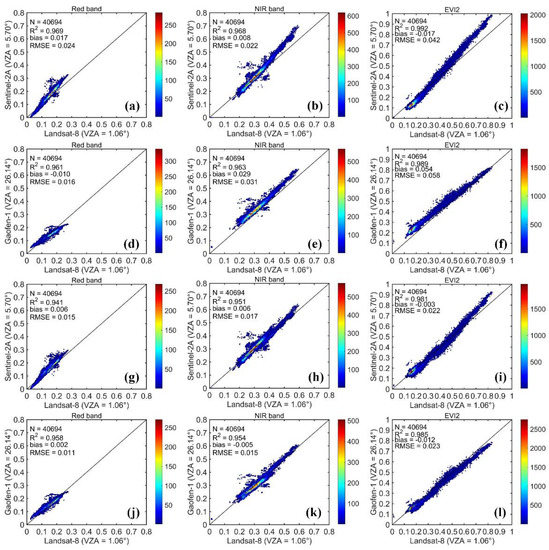

The input data of STARFM include MODIS NBAR and high-spatial-resolution data, and the VZA of all pixels was 0°. Therefore, in this study, the reflectance of Landsat-8 OLI, Sentinel-2A MSI, and GF-1 WFV was harmonized to the reflectance under the nadir view. We used the Landsat-8 OLI image as the reference to evaluate the data harmonization results of Sentinel-2A MSI and GF-1 WFV as the Landsat-8 OLI is considered a nadir view sensor. To investigate whether data harmonization is beneficial to the accuracy of VI data, we calculated the EVI2 using the Sentinel-2A MSI and GF-1 WFV reflectance with and without BRDF correction and compared it to the EVI2 derived from Landsat-8 OLI. The results are shown in Figure 7.

Figure 7.

Comparison of the surface reflectance and EVI2 without (a–f) and with (g–l) BRDF correction. The GF-1 WFV data (center latitude and longitude: 38.716, −97.021) was obtained on day 323 of 2020. The Landsat-8 OLI data (path/row: 029/034) was obtained on day 325 of 2020, and the Sentinel-2A MSI data (Tile: T14SNG) was obtained on day 323 of 2020.

As shown in Figure 6, the RMSE of the red band reflectance between Sentinel-2A MSI and Landsat-8 OLI decreased by 37.5% while the NIR band decreased by 22.7% after BRDF correction. The RMSE of the red band reflectance between GF-1 WFV and Landsat-8 OLI decreased by 31.3%, while the NIR band decreased by 51.6% after BRDF correction. Moreover, the RMSE of EVI2 between Sentinel-2A MSI and Landsat-8 OLI decreased by 47.6% while the GF-1 WFV dropped by 60.3% after BRDF correction. The improvement of Sentinel-2A MSI was not as obvious as that of GF-1, and the improvement of EVI2 was better than that of reflectance. Therefore, as shown in Figure 6, the data harmonization method proposed in this study can help improve the consistency between the high-spatial-resolution data and can eliminate the inconsistency in VI caused by the huge difference in illumination-viewing geometries.

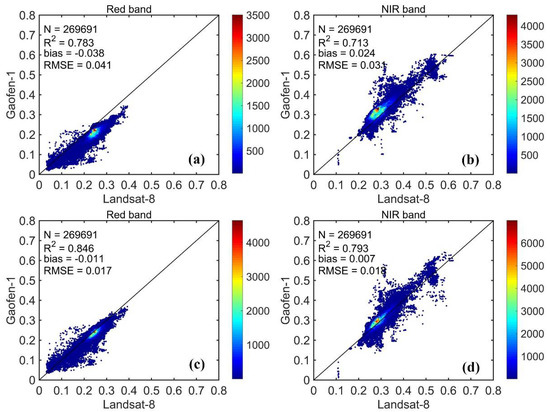

To demonstrate the contribution of harmonization to data fusion, we randomly selected a scene of GF-1 WFV data and compared the fused GF-1 reflectance with and without data harmonization to Landsat-8 OLI data. The difference in data acquisition time between GF-1 and Landsat-8 was 4 days, allowing an assumption of no change to the ground. It can be seen from Figure 8 that the R2 of the red and NIR bands are improved by 8.0% and 11.2%, respectively, the bias decreased by 71.1% and 70.8%, and the RMSE decreased by 58.5% and 41.9%, respectively. By inputting the harmonized data into STARFM, the output results on the predicted date could be improved compared with the true reflectance of the predicted date. The improvement of the NIR band was higher than that of the red band. Data harmonization improved the consistency of the fusion result.

Figure 8.

Comparison of the fusion result without (a,b) and with (c,d) data harmonization. The x-axis (ρOLI) is the reflectance of Landsat-8 OLI on day 237, 2020, and the y-axis is the fused reflectance. The ρWFV is the fused reflectance with the input of original GF-1 WFV reflectance on day 233, 2020, and the is the fused reflectance with the input of harmonized GF-1 reflectance.

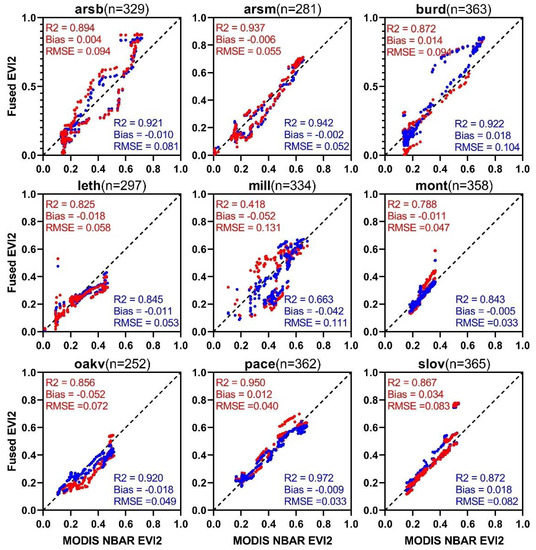

To explore whether data harmonization is beneficial for the consistency of the EVI2 curve calculated by the output of STARFM, the high-spatial-resolution data (Landsat-8 OLI, Sentinel-2A MSI, and GF-1 WFV) and the harmonized data were fused with MODIS NBAR to generate two different time-series EVI2 curves. Meanwhile, we calculated the standard time-series EVI2 using the MODIS EVI2 data. As the missing values of the original MODIS NBAR will cause the missing of fusion results, we chose the fusion results of the nine PhenoCam sites to obtain the time-series EVI2 curve in 2020, and the two different types of time-series EVI2 were compared to the MODIS NBAR EVI2 curve. The results are presented in Figure 9. As shown in Figure 9, the R2 of the EVI2 time-series increased by 6.7%, the bias decreased by 18.7%, and the RMSE decreased by 11.3%. Therefore, with the input of the harmonized high-spatial-resolution data, the consistency of the EVI2 curves is improved. Moreover, specific to different vegetation types, the decrease in bias and RMSE of cropland was 50.0% and 2.5%, respectively, and the improvement in R2 was 3.0%. The decrease in bias and RMSE of grassland was 58.6% and 21.5%, respectively, and the improvement in R2 was 5.0%. As for the deciduous forest, the decrease in bias and RMSE was 123.5% and 10.8%, respectively, and the improvement in R2 was 14.0%. The improvement of the statistical indicators reveals that the consistency of the EVI2 curves was improved with the input of the harmonized data, and this is especially obvious in deciduous forests.

Figure 9.

Comparison of fused EVI2 in time-series with the input of harmonized and unharmonized data in the nine PhenoCam sites. The red points and the statistical indicators are the fused EVI2 with the input of unharmonized data. The blue points and the statistical indicators are the fused EVI2 with the input of harmonized data. n is the count of available fusion results in 2020.

3.2. Vegetation Phenology Retrieval Result

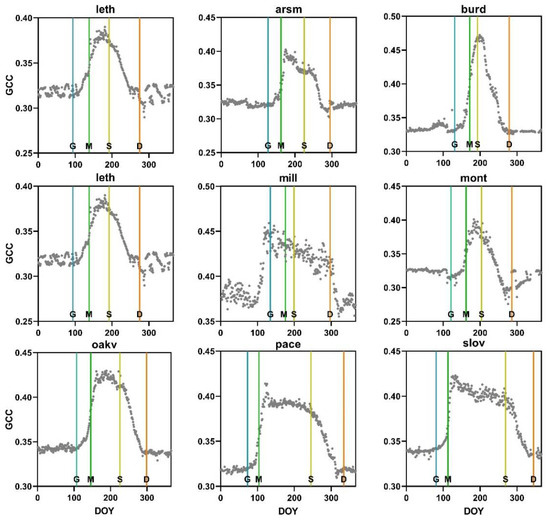

The vegetation phenology detection results in this study include in situ and remote-sensing LSP datasets. PhenoCam data are first converted to GCC, and then the transition dates within the year are confirmed by the time-series in situ GCC through the transition date detection method proposed in this study. The in situ phenology detection results are shown in Figure 10.

Figure 10.

Phenological extraction results of the 9 PhenoCam sites. The gray points are daily in situ GCC. The vertical lines are the transition dates. The letters on the vertical lines are G: greenup; M: maturity; S: senescence; D: dormancy.

The single-point phenology extraction algorithm was applied to the daily 30 m spatial resolution fused EVI2 data to achieve high-spatial-resolution phenological mapping in the study area. To investigate the viewing-angle effects on vegetation monitoring using remote-sensing data with large differences in VZAs, we extracted the vegetation phenology using the fused EVI2 constructed by the harmonized and unharmonized data, respectively.

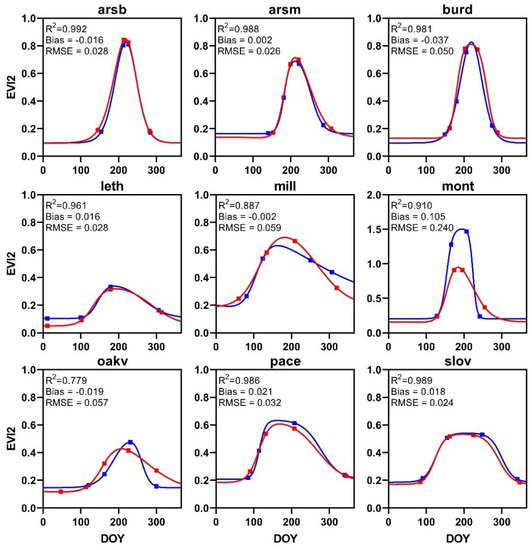

As shown in Figure 11, the filtered EVI2 curve derived from the fused data with and without data harmonization shows a significant difference. The average R2 of the nine PhenoCam sites was 0.94, the average bias was 0.01, and the average RMSE was 0.06. Additionally, the transition dates of the two filtered EVI2 curves also have a large difference, and there is a significant misalignment in the time of peak appearance in the mill and oakv datasets.

Figure 11.

Filtered EVI2 curve and the transition date of the nine PhenoCam sites. The red and blue lines are the filtered EVI2 curves derived by the fused data without and with data harmonization, respectively. The red and blue squares are the vegetation transition dates derived by the corresponding EVI2 curves.

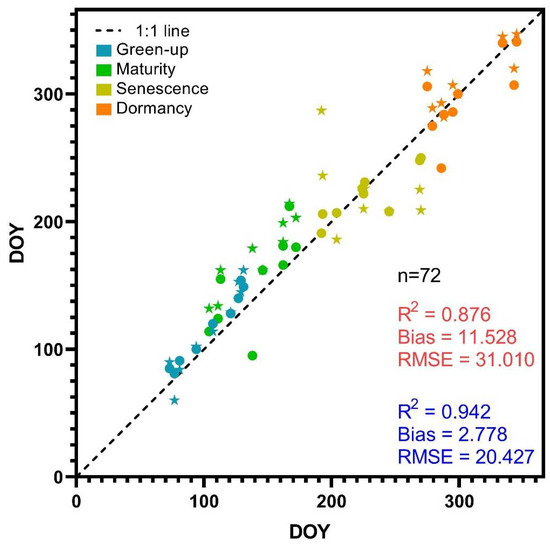

In this study, in situ phenology was also used to evaluate the accuracy of phenology data extracted from remote-sensing images. To explore the impact of data normalization on the accuracy of phenology extraction, we compared the two sets of transition dates based on different data in Figure 11 with the results of the in situ measurements. As shown in Figure 12, the R2 improved by 7.5%, the bias decreased by 8.75 days, and the RMSE decreased by 9.58 days. Therefore, with the input of harmonized high-spatial-resolution data, the accuracy of vegetation monitoring was improved.

Figure 12.

Evaluation result based on the in situ transition date. The x-axis is the in situ transition date. The y-axis is the transition date derived by fused data. The stars and circles are the transition date derived by the fused data without and with data harmonization. The red and blue statistical indicators represent the evaluation results of the transition date derived by the fused data without and with data harmonization.

4. Discussion

Different illumination-viewing geometries result in inconsistencies in the observation results of the same target introduced by the BRDF effect [34]. When the two viewing angles differ greatly, the BRDF effect in the NIR band is more obvious than that in the red band. In Figure 7, the RMSE of the NIR band of GF-1 WFV and Landsat-8 OLI is higher than that of the red band, and the RMSE of the NIR band of GF-1 WFV is also higher than that of Sentinel-2A MSI. In addition, the difference caused by the BRDF effect is propagated on to the calculation of VI. The RMSEs of EVI2 of Sentinel-2A MSI and GF-1 WFV were higher than the surface reflectance of the red and NIR bands; thus, the difference in EVI2 at different viewing angles even exceeds the band reflectivity (Figure 7). Thus, VI increases the BRDF effect.

Since its establishment, STARFM has been widely implemented [28]. However, when designing the weight function, the variations in the spectral and illumination-viewing geometries between different input data sources were not considered. The input data of STARFM mainly concentrated on the Landsat-8 and MODIS NBAR, owing to the high consistency of the two datasets. In this study, STARFM was implemented on images from two different satellite sensors. These images are different in terms of spatial resolution, bandwidth, spectral response function, atmospheric conditions, and illumination-viewing geometry [24]. Here, we focused on the harmonization of spectral and illumination-viewing geometries between different images. After data harmonization, the consistency between the high-spatial-resolution data and the MODIS NBAR was improved (Figure 6).

The data harmonization method proposed in this paper eliminated the influence of different viewing angles on the VI data. However, the solar angle also affects phenological monitoring [25]. We only harmonized the reflectance of different sensors to a nadir viewing condition and ignored the influence of SZA variation on vegetation monitoring during the year. In the study area, the variation of SZA over the entire year can exceed 60°; therefore, this factor must be taken into consideration for data harmonization.

By comparing the fused time-series EVI2 derived from harmonized and unharmonized data (Figure 9), results showed that in nine PhenoCam sites, the improvement of forest LSP data was greater than that of grassland and cultivated land. This is mainly because the BRDF effect of forest land is more obvious than that of grassland and cultivated land [67]. The data harmonization eliminated the BRDF effect, so the improvement of forest land was more obvious than that of grassland and cultivated land.

In this study, only pure pixels containing a single land cover type were selected to implement and evaluate the BRDF correction. This effectively reduced the impact of the difference in spatial resolution of different sensors. However, the correction effect of pixels was not evaluated in heterogeneous areas. We also assumed that the PhenoCam site and the corresponding pixels were homogeneous, and therefore the transition date retrieval by the PhenoCam site and the corresponding pixels could be compared directly. However, the scale effect of the PhenoCam in situ measurement has not been fully addressed [15]; therefore, an important issue for future research is the spatial representativeness of the PhenoCam in situ measurements as well as the spatio–temporal matching to the remote-sensing data.

5. Conclusions

This research proposed a harmonization method to normalize the variations in spectral and illumination-viewing characteristics of high-spatial-resolution reflectance data derived from various satellite sensors. A data fusion method was then performed on the harmonized reflectance data to generate time-series reflectance with high spatio–temporal resolutions. Finally, the daily 30-m reflectance data were used to detect the transition dates of different vegetation types across multiple climate types. It was observed that the viewing angle could affect the accuracy of vegetation phenology monitoring. The proposed data harmonization method can eliminate the inconsistency between fine-resolution reflectance data acquired by different sensors under various illumination-viewing geometries, which further improved the consistency of VI data. The data fusion result of STARFM also benefited from the data harmonization, and the R2 increased by 8.0% and 11.2% for the red and NIR band, respectively. The time-series VI derived from the fused reflectance showed less fluctuations. By using the harmonized reflectance and fused VI data, the accuracy of the vegetation phenology monitoring was improved, with the R2 increased by 7.5% and the RMSE decreased by 9.58 days. We concluded that the proposed data harmonization method should be adopted when using multisource satellite data to monitor vegetation phenology over a large spatial extent.

Author Contributions

T.H. and D.-X.S. designed the study; J.L. and C.-Q.W. collected the data, processed the satellite images, and performed the experiments; J.L. conducted the analysis and drafted the manuscript; and T.H., D.-X.S. and C.-Q.W. revised the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under Grants 42090012 and 41901300, the Hubei Natural Science Grant 2021CFA082, the Open Fund of Key Laboratory of National Geographical Census and Monitoring, Ministry of Natural Resources (2022NGCM07), and the Fundamental Research Funds for the Central University through Wuhan University under Grant 2042021kf1063.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The PhenoCam digital photographs can be accessed at: https://phenocam.sr.unh.edu/webcam/, accessed on 10 February 2022. The Landsat-8 surface reflectance data can be accessed at the United States Geological Survey (USGS): http://landsat.usgs.gov (accessed on 10 February 2022). The Sentinel-2A Multi-Spectral Instrument (MSI) L2A data can be accessed at the European Space Agency Open Access Hub: https://scihub.copernicus.eu/ (accessed on 10 February 2022). The Gaofen-1 (GF-1) wide-field viewer (WFV) L1A data can be accessed at the China Center for Resources Satellite Data and Application (CRESDA): http://www.cresda.com/CN/ (accessed on 10 February 2022).

Acknowledgments

The authors would like to thank the Moderate-Resolution Imaging Spectroradiometer (MODIS) Land Team for providing the aerosol and BRDF products and the three anonymous reviewers whose constructive comments and suggestions have helped significantly improve the quality of this article. We gratefully acknowledge data support from the National Earth System Science Data Center, National Science & Technology Infrastructure of China: http://www.geodata.cn (accessed on 10 February 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Piao, S.; Liu, Q.; Chen, A.; Janssens, I.A.; Fu, Y.; Dai, J.; Liu, L.; Lian, X.; Shen, M.; Zhu, X. Plant phenology and global climate change: Current progresses and challenges. Glob. Chang. Biol. 2019, 25, 1922–1940. [Google Scholar] [CrossRef] [PubMed]

- Fu, Y.H.; Zhao, H.; Piao, S.; Peaucelle, M.; Peng, S.; Zhou, G.; Ciais, P.; Huang, M.; Menzel, A.; Uelas, J.P.; et al. Declining global warming effects on the phenology of spring leaf unfolding. Nature 2015, 526, 104–118. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Ge, Q.; Wang, H.; Rutishauser, T.; Dai, J. Phenological response to climate change in China: A meta-analysis. Glob. Chang. Biol. 2015, 21, 265–274. [Google Scholar] [CrossRef] [PubMed]

- Gao, M.; Wang, X.; Meng, F.; Liu, Q.; Li, X.; Zhang, Y.; Piao, S. Three-dimensional change in temperature sensitivity of northern vegetation phenology. Glob. Chang. Biol. 2020, 26, 5189–5201. [Google Scholar] [CrossRef]

- Zhang, X.Y.; Friedl, M.A.; Schaaf, C.B.; Strahler, A.H.; Hodges, J.C.F.; Gao, F.; Reed, B.C.; Huete, A. Monitoring vegetation phenology using MODIS. Remote Sens. Environ. 2003, 84, 471–475. [Google Scholar] [CrossRef]

- Tan, B.; Morisette, J.T.; Wolfe, R.E.; Gao, F.; Ederer, G.A.; Nightingale, J.; Pedelty, J.A. An Enhanced TIMESAT Algorithm for Estimating Vegetation Phenology Metrics from MODIS Data. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2011, 4, 361–371. [Google Scholar] [CrossRef]

- Pouliot, D.; Latifovic, R.; Fernandes, R.; Olthof, I. Evaluation of compositing period and AVHRR and MERIS combination for improvement of spring phenology detection in deciduous forests. Remote Sens. Environ. 2011, 115, 158–166. [Google Scholar] [CrossRef]

- Zhang, X. Reconstruction of a complete global time series of daily vegetation index trajectory from long-term AVHRR data. Remote Sens. Environ. 2015, 156, 457–472. [Google Scholar] [CrossRef]

- Zhang, X.; Wang, J.; Henebry, G.M.; Gao, F. Development and evaluation of a new algorithm for detecting 30 m land surface phenology from VIIRS and HLS time series. ISPRS-J. Photogramm. Remote Sens. 2020, 161, 37–51. [Google Scholar] [CrossRef]

- Peng, D.; Wang, Y.; Xian, G.; Huete, A.R.; Huang, W.; Shen, M.; Wang, F.; Yu, L.; Liu, L.; Xie, Q.; et al. Investigation of land surface phenology detections in shrublands using multiple scale satellite data. Remote Sens. Environ. 2021, 252, 1–17. [Google Scholar] [CrossRef]

- Zhang, X.; Friedl, M.A.; Schaaf, C.B. Sensitivity of vegetation phenology detection to the temporal resolution of satellite data. Int. J. Remote Sens. 2009, 30, 2061–2074. [Google Scholar] [CrossRef]

- Tian, J.; Zhu, X.; Wan, L.; Collin, M. Impacts of Satellite Revisit Frequency on Spring Phenology Monitoring of Deciduous Broad-Leaved Forests Based on Vegetation Index Time Series. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2021, 14, 10500–10508. [Google Scholar] [CrossRef]

- Zhang, X.; Wang, J.; Gao, F.; Liu, Y.; Schaaf, C.; Friedl, M.; Yu, Y.; Jayavelu, S.; Gray, J.; Liu, L.; et al. Exploration of scaling effects on coarse resolution land surface phenology. Remote Sens. Environ. 2017, 190, 318–330. [Google Scholar] [CrossRef] [Green Version]

- Chen, X.; Wang, D.; Chen, J.; Wang, C.; Shen, M. The mixed pixel effect in land surface phenology: A simulation study. Remote Sens. Environ. 2018, 211, 338–344. [Google Scholar] [CrossRef]

- Burke, M.W.V.; Rundquist, B.C. Scaling Phenocam GCC, NDVI, and EVI2 with Harmonized Landsat-Sentinel using Gaussian Processes. Agric. For. Meteorol. 2021, 300, 1–25. [Google Scholar] [CrossRef]

- Moon, M.; Richardson, A.D.; Friedl, M.A. Multiscale assessment of land surface phenology from harmonized Landsat 8 and Sentinel-2, PlanetScope, and PhenoCam imagery. Remote Sens. Environ. 2021, 266, 1–14. [Google Scholar] [CrossRef]

- Armitage, R.P.; Ramirez, F.A.; Danson, F.M.; Ogunbadewa, E.Y. Probability of cloud-free observation conditions across Great Britain estimated using MODIS cloud mask. Remote Sens. Lett. 2013, 4, 427–435. [Google Scholar] [CrossRef]

- Kowalski, K.; Senf, C.; Hostert, P.; Pflugmacher, D. Characterizing spring phenology of temperate broadleaf forests using Landsat and Sentinel-2 time series. Int. J. Appl. Earth Obs. Geoinf. 2020, 92, 1–8. [Google Scholar] [CrossRef]

- An, S.; Zhang, X.; Chen, X.; Yan, D.; Henebry, G.M. An Exploration of Terrain Effects on Land Surface Phenology across the Qinghai-Tibet Plateau Using Landsat ETM plus and OLI Data. Remote Sens. 2018, 10, 1069. [Google Scholar] [CrossRef] [Green Version]

- Cheng, Y.; Vrieling, A.; Fava, F.; Meroni, M.; Marshall, M.; Gachoki, S. Phenology of short vegetation cycles in a Kenyan rangeland from PlanetScope and Sentinel-2. Remote Sens. Environ. 2020, 248, 1–20. [Google Scholar] [CrossRef]

- Veloso, A.; Mermoz, S.; Bouvet, A.; Thuy Le, T.; Planells, M.; Dejoux, J.-F.; Ceschia, E. Understanding the temporal behavior of crops using Sentinel-1 and Sentinel-2-like data for agricultural applications. Remote Sens. Environ. 2017, 199, 415–426. [Google Scholar] [CrossRef]

- Bolton, D.K.; Gray, J.M.; Melaas, E.K.; Moon, M.; Eklundh, L.; Friedl, M.A. Continental-scale land surface phenology from harmonized Landsat 8 and Sentinel-2 imagery. Remote Sens. Environ. 2020, 240, 1–16. [Google Scholar] [CrossRef]

- Babcock, C.; Finley, A.O.; Looker, N. A Bayesian model to estimate land surface phenology parameters with harmonized Landsat 8 and Sentinel-2 images. Remote Sens. Environ. 2021, 261, 1–13. [Google Scholar] [CrossRef]

- Claverie, M.; Ju, J.; Masek, J.G.; Dungan, J.L.; Vermote, E.F.; Roger, J.C.; Skakun, S.V.; Justice, C. The Harmonized Landsat and Sentinel-2 surface reflectance data set. Remote Sens. Environ. 2018, 219, 145–161. [Google Scholar] [CrossRef]

- Ma, X.; Huete, A.; Tran, N.; Bi, J.; Gao, S.; Zeng, Y. Sun-Angle Effects on Remote-Sensing Phenology Observed and Modelled Using Himawari-8. Remote Sens. 2020, 12, 1339. [Google Scholar] [CrossRef] [Green Version]

- Lu, J.; He, T.; Liang, S.; Zhang, Y. An Automatic Radiometric Cross-Calibration Method for Wide-Angle Medium-Resolution Multispectral Satellite Sensor Using Landsat Data. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–11. [Google Scholar] [CrossRef]

- Gao, F.; Masek, J.; Schwaller, M.; Hall, F. On the blending of the Landsat and MODIS surface reflectance: Predicting daily Landsat surface reflectance. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2207–2218. [Google Scholar]

- Zhu, X.; Cai, F.; Tian, J.; Williams, T.K.-A. Spatiotemporal Fusion of Multisource Remote Sensing Data: Literature Survey, Taxonomy, Principles, Applications, and Future Directions. Remote Sens. 2018, 10, 1–23. [Google Scholar] [CrossRef]

- Zheng, Y.; Wu, B.; Zhang, M.; Zeng, H. Crop Phenology Detection Using High Spatio-Temporal Resolution Data Fused from SPOT5 and MODIS Products. Sensors 2016, 16, 2099. [Google Scholar] [CrossRef] [Green Version]

- Gao, F.; Hilker, T.; Zhu, X.; Anderson, M.C.; Masek, J.G.; Wang, P.; Yang, Y. Fusing Landsat and MODIS Data for Vegetation Monitoring. IEEE Geosci. Remote Sens. Mag. 2015, 3, 47–60. [Google Scholar] [CrossRef]

- Gevaert, C.M.; Javier Garcia-Haro, F. A comparison of STARFM and an unmixing-based algorithm for Landsat and MODIS data fusion. Remote Sens. Environ. 2015, 156, 34–44. [Google Scholar] [CrossRef]

- Hilker, T.; Wulder, M.A.; Coops, N.C.; Linke, J.; McDermid, G.; Masek, J.G.; Gao, F.; White, J.C. A new data fusion model for high spatial- and temporal-resolution mapping of forest disturbance based on Landsat and MODIS. Remote Sens. Environ. 2009, 113, 1613–1627. [Google Scholar] [CrossRef]

- Pisek, J.; Rautiainen, M.; Nikopensius, M.; Raabe, K. Estimation of seasonal dynamics of understory NDVI in northern forests using MODIS BRDF data: Semi-empirical versus physically-based approach. Remote Sens. Environ. 2015, 163, 42–47. [Google Scholar] [CrossRef] [Green Version]

- Gao, F.; He, T.; Masek, J.G.; Shuai, Y.; Schaaf, C.B.; Wang, Z. Angular Effects and Correction for Medium Resolution Sensors to Support Crop Monitoring. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2014, 7, 4480–4489. [Google Scholar] [CrossRef]

- He, T.; Liang, S.; Wang, D.; Cao, Y.; Gao, F.; Yu, Y.; Feng, M. Evaluating land surface albedo estimation from Landsat MSS, TM, ETM plus, and OLI data based on the unified direct estimation approach. Remote Sens. Environ. 2018, 204, 181–196. [Google Scholar] [CrossRef]

- Schaaf, C.B.; Gao, F.; Strahler, A.H.; Lucht, W.; Li, X.W.; Tsang, T.; Strugnell, N.C.; Zhang, X.Y.; Jin, Y.F.; Muller, J.P.; et al. First operational BRDF, albedo nadir reflectance products from MODIS. Remote Sens. Environ. 2002, 83, 135–148. [Google Scholar] [CrossRef] [Green Version]

- Nagol, J.R.; Sexton, J.; Kim, D.-H.; Anand, A.; Morton, D.; Vermote, E.; Townshend, J.R. Bidirectional effects in Landsat reflectance estimates: Is there a problem to solve? ISPRS-J. Photogramm. Remote Sens. 2015, 103, 129–135. [Google Scholar] [CrossRef]

- Ishihara, M.; Inoue, Y.; Ono, K.; Shimizu, M.; Matsuura, S. The Impact of Sunlight Conditions on the Consistency of Vegetation Indices in Croplands-Effective Usage of Vegetation Indices from Continuous Ground-Based Spectral Measurements. Remote Sens. 2015, 7, 14079–14098. [Google Scholar] [CrossRef] [Green Version]

- Yang, A.; Zhong, B.; Wu, S.; Liu, Q. Radiometric Cross-Calibration of GF-4 in Multispectral Bands. Remote Sens. 2017, 9, 232. [Google Scholar] [CrossRef] [Green Version]

- Hufkens, K.; Melaas, E.K.; Mann, M.L.; Foster, T.; Ceballos, F.; Robles, M.; Kramer, B. Monitoring crop phenology using a smartphone based near-surface remote sensing approach. Agric. For. Meteorol. 2019, 265, 327–337. [Google Scholar] [CrossRef]

- Julitta, T.; Cremonese, E.; Migliavacca, M.; Colombo, R.; Galvagno, M.; Siniscalco, C.; Rossini, M.; Fava, F.; Cogliati, S.; di Cella, U.M.; et al. Using digital camera images to analyse snowmelt and phenology of a subalpine grassland. Agric. For. Meteorol. 2014, 198, 116–125. [Google Scholar] [CrossRef]

- Sonnentag, O.; Hufkens, K.; Teshera-Sterne, C.; Young, A.M.; Friedl, M.; Braswell, B.H.; Milliman, T.; O’Keefe, J.; Richardson, A.D. Digital repeat photography for phenological research in forest ecosystems. Agric. For. Meteorol. 2012, 152, 159–177. [Google Scholar] [CrossRef]

- Masek, J.G.; Vermote, E.F.; Saleous, N.E.; Wolfe, R.; Hall, F.G.; Huemmrich, K.F.; Gao, F.; Kutler, J.; Lim, T.K. A Landsat surface reflectance dataset for North America, 1990–2000. IEEE Geosci. Remote Sens. Lett. 2006, 3, 68–72. [Google Scholar] [CrossRef]

- Vermote, E.; Justice, C.; Claverie, M.; Franch, B. Preliminary analysis of the performance of the Landsat 8/OLI land surface reflectance product. Remote Sens. Environ. 2016, 185, 46–56. [Google Scholar] [CrossRef] [PubMed]

- Vermote, E.F.; Tanre, D.; Deuze, J.L.; Herman, M.; Morcrette, J.J. Second Simulation of the Satellite Signal in the Solar Spectrum, 6S: An overview. IEEE Trans. Geosci. Remote Sens. 1997, 35, 675–686. [Google Scholar] [CrossRef] [Green Version]

- Wang, Z.; Schaaf, C.B.; Sun, Q.; Shuai, Y.; Roman, M.O. Capturing rapid land surface dynamics with Collection V006 MODIS BRDF/NBAR/Albedo (MCD43) products. Remote Sens. Environ. 2018, 207, 50–64. [Google Scholar] [CrossRef]

- Wang, Z.; Schaaf, C.B.; Strahler, A.H.; Chopping, M.J.; Roman, M.O.; Shuai, Y.; Woodcock, C.E.; Hollinger, D.Y.; Fitzjarrald, D.R. Evaluation of MODIS albedo product (MCD43A) over grassland, agriculture and forest surface types during dormant and snow-covered periods. Remote Sens. Environ. 2014, 140, 60–77. [Google Scholar] [CrossRef] [Green Version]

- Gupta, P.; Remer, L.A.; Levy, R.C.; Mattoo, S. Validation of MODIS 3 km land aerosol optical depth from NASA’s EOS Terra and Aqua missions. Atmos. Meas. Technol. 2018, 11, 3145–3159. [Google Scholar] [CrossRef] [Green Version]

- Roy, D.P.; Li, J.; Zhang, H.K.; Yan, L.; Huang, H.; Li, Z. Examination of Sentinel 2A multi-spectral instrument (MSI) reflectance anisotropy and the suitability of a general method to normalize MSI reflectance to nadir BRDF adjusted reflectance. Remote Sens. Environ. 2017, 199, 25–38. [Google Scholar] [CrossRef]

- Zhang, H.K.; Roy, D.P.; Yan, L.; Li, Z.; Huang, H.; Vermote, E.; Skakun, S.; Roger, J.-C. Characterization of Sentinel-2A and Landsat-8 top of atmosphere, surface, and nadir BRDF adjusted reflectance and NDVI differences. Remote Sens. Environ. 2018, 215, 482–494. [Google Scholar] [CrossRef]

- Chander, G.; Hewison, T.J.; Fox, N.; Wu, X.; Xiong, X.; Blackwell, W.J. Overview of Intercalibration of Satellite Instruments. IEEE Trans. Geosci. Remote Sens. 2013, 51, 1056–1080. [Google Scholar] [CrossRef]

- Baldridge, A.M.; Hook, S.J.; Grove, C.I.; Rivera, G. The ASTER spectral library version 2.0. Remote Sens. Environ. 2009, 113, 711–715. [Google Scholar] [CrossRef]

- He, T.; Liang, S.; Wang, D.; Wu, H.; Yu, Y.; Wang, J. Estimation of surface albedo and directional reflectance from Moderate Resolution Imaging Spectroradiometer (MODIS) observations. Remote Sens. Environ. 2012, 119, 286–300. [Google Scholar] [CrossRef] [Green Version]

- Lucht, W.; Schaaf, C.B.; Strahler, A.H. An algorithm for the retrieval of albedo from space using semiempirical BRDF models. IEEE Trans. Geosci. Remote Sens. 2000, 38, 977–998. [Google Scholar] [CrossRef] [Green Version]

- Roujean, J.L.; Leroy, M.; Deschamps, P.Y. A Bidirectional Reflectance Model of the Earth’s Surface for the Correction of Remote Sensing Data. J. Geophys. Res. Atmos. 1992, 97, 20455–20468. [Google Scholar] [CrossRef]

- Wanner, W.; Li, X.; Strahler, A.H. On the derivation of kernels for kernel-driven models of bidirectional reflectance. J. Geophys. Res. Atmos. 1995, 100, 21077–21089. [Google Scholar] [CrossRef]

- Li, J.; Feng, L.; Pang, X.; Gong, W.; Zhao, X. Radiometric cross Calibration of Gaofen-1 WFV Cameras Using Landsat-8 OLI Images: A Simple Image-Based Method. Remote Sens. 2016, 8, 411. [Google Scholar] [CrossRef] [Green Version]

- Shuai, Y.; Masek, J.G.; Gao, F.; Schaaf, C.B. An algorithm for the retrieval of 30-m snow-free albedo from Landsat surface reflectance and MODIS BRDF. Remote Sens. Environ. 2011, 115, 2204–2216. [Google Scholar] [CrossRef]

- Shuai, Y.; Masek, J.G.; Gao, F.; Schaaf, C.B.; He, T. An approach for the long-term 30-m land surface snow-free albedo retrieval from historic Landsat surface reflectance and MODIS-based a priori anisotropy knowledge. Remote Sens. Environ. 2014, 152, 467–479. [Google Scholar] [CrossRef]

- Vermote, E.; Justice, C.O.; Breon, F.-M. Towards a Generalized Approach for Correction of the BRDF Effect in MODIS Directional Reflectances. IEEE Trans. Geosci. Remote Sens. 2009, 47, 898–908. [Google Scholar] [CrossRef]

- Franch, B.; Vermote, E.F.; Claverie, M. Intercomparison of Landsat albedo retrieval techniques and evaluation against in situ measurements across the US SURFRAD network. Remote Sens. Environ. 2014, 152, 627–637. [Google Scholar] [CrossRef]

- Roy, D.P.; Ju, J.; Lewis, P.; Schaaf, C.; Gao, F.; Hansen, M.; Lindquist, E. Multi-temporal MODIS-Landsat data fusion for relative radiometric normalization, gap filling, and prediction of Landsat data. Remote Sens. Environ. 2008, 112, 3112–3130. [Google Scholar] [CrossRef]

- Wang, C.; Li, J.; Liu, Q.; Zhong, B.; Wu, S.; Xia, C. Analysis of Differences in Phenology Extracted from the Enhanced Vegetation Index and the Leaf Area Index. Sensors 2017, 17, 1982. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Wu, C.; Gonsamo, A.; Gough, C.M.; Chen, J.M.; Xu, S. Modeling growing season phenology in North American forests using seasonal mean vegetation indices from MODIS. Remote Sens. Environ. 2014, 147, 79–88. [Google Scholar] [CrossRef]

- Yan, D.; Zhang, X.; Nagai, S.; Yu, Y.; Akitsu, T.; Nasahara, K.N.; Ide, R.; Maeda, T. Evaluating land surface phenology from the Advanced Himawari Imager using observations from MODIS and the Phenological Eyes Network. Int. J. Appl. Earth Obs. Geoinf. 2019, 79, 71–83. [Google Scholar] [CrossRef]

- Zhang, X.; Liu, L.; Liu, Y.; Jayavelu, S.; Wang, J.; Moon, M.; Henebry, G.M.; Friedl, M.A.; Schaaf, C.B. Generation and evaluation of the VIIRS land surface phenology product. Remote Sens. Environ. 2018, 216, 212–229. [Google Scholar] [CrossRef]

- Gerard, F.F.; North, P.R.J. Analyzing the effect of structural variability and canopy gaps on forest BRDF using a geometric-optical model. Remote Sens. Environ. 1997, 62, 46–62. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).