Abstract

In this paper, an algorithm based on the local binary pattern (LBP) is proposed to obtain clear remote sensing images when the cause of blurring is unknown. We observe that LBP fully records the texture features of an image and that these features change little when the image is blurred. We therefore propose an LBP prior, which uses a mapping relationship to select the pixels in the blurry image that contain important textures. Different types of pixels are processed in different ways to cope with the rich textures and details of remote sensing images and to prevent over-sharpening. Because the LBP prior makes the model harder to solve, we construct a projected alternating minimization (PAM) algorithm that involves the construction of a mapping matrix, the fast iterative shrinkage-thresholding algorithm (FISTA) and the half-quadratic splitting method. Experiments on the AID dataset show that the proposed method achieves highly competitive results on remote sensing images.

1. Introduction

Affected by many factors, such as the imaging environment and camera shake, an acquired image may suffer from quality degradation and loss of important details caused by blurring during imaging. It is therefore necessary to study how to restore the image without prior knowledge of the blur, i.e., blind image deblurring. Ideally, the image degradation model can be expressed as:

$$ G = H * U + N \qquad (1) $$

where G, H, U, and N represent the blurry image, the blur kernel, the original clear image, and noise, respectively, and * denotes the convolution operator. This is a typical ill-posed problem with infinitely many solutions. To find the optimal solution, image feature information must be used to turn the ill-posed problem into a well-posed one. Traditional image deblurring algorithms generally consist of two stages: blind image deblurring, which estimates an accurate blur kernel, and non-blind image deblurring, which uses the estimated kernel to obtain a clear image.
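To make the degradation model concrete, the sketch below simulates G = H * U + N in NumPy. It assumes periodic (circular) boundary conditions so that the convolution can be computed with the FFT, and additive white Gaussian noise. Real imaging blur is not periodic, and the function name `degrade` and the kernel-centring convention are our own illustrative choices. They are not part of the method.

```python
import numpy as np


def degrade(u, h, noise_sigma=0.0, seed=0):
    """Hypothetical helper: simulate G = H * U + N with circular 2-D convolution."""
    u = np.asarray(u, dtype=float)
    h = np.asarray(h, dtype=float)
    # Zero-pad the kernel to the image size ...
    h_pad = np.zeros_like(u)
    h_pad[: h.shape[0], : h.shape[1]] = h
    # ... and move its centre to the origin so the blurred image is not shifted.
    h_pad = np.roll(h_pad, (-(h.shape[0] // 2), -(h.shape[1] // 2)), axis=(0, 1))
    # Convolution theorem: H * U = F^{-1}(F(H) . F(U)).
    blurred = np.real(np.fft.ifft2(np.fft.fft2(h_pad) * np.fft.fft2(u)))
    # Additive white Gaussian noise N (assumed noise model).
    rng = np.random.default_rng(seed)
    return blurred + noise_sigma * rng.standard_normal(u.shape)


rng = np.random.default_rng(1)
u = rng.random((64, 64))          # stand-in for a clear image U
box = np.ones((5, 5)) / 25.0      # normalized 5 x 5 box kernel H
g = degrade(u, box, noise_sigma=0.01)
```

Because the kernel is normalized, blurring preserves the mean intensity. A 1 × 1 kernel equal to 1 leaves the image unchanged.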

Early image restoration algorithms used parameterized models to estimate blur kernels [1,2]. However, real blur kernels rarely follow parameterized models, which limits the generality of such methods. After Rudin et al. [3] proposed the total variation model in 1992, partial differential equation theory became increasingly popular in blind image restoration. Researchers then designed two algorithmic frameworks based on probability and statistics: maximum a posteriori (MAP) [4,5,6,7] and variational Bayes (VB) [8,9,10,11,12]. Although VB-based algorithms are stable, their development has been limited by their complexity and heavy computational cost. In contrast, MAP-based methods are relatively simple. However, Levin et al. [13] pointed out that the naive MAP approach based on the sparse derivative prior cannot achieve the expected results. It is therefore necessary to introduce an appropriate blur kernel prior and to delay the normalization of the blur kernel. From a probabilistic perspective, the task is to recover the clear image U and the blur kernel H simultaneously, which amounts to the standard MAP estimation:

$$ (U, H) = \arg\max_{U,H} p(U, H \mid G) = \arg\max_{U,H} p(G \mid U, H)\, p(U)\, p(H) \qquad (2) $$

where $p(G \mid U, H)$ is the noise distribution, and $p(U)$ and $p(H)$ are the prior distributions of the latent clear image and the blur kernel, respectively. Taking the negative logarithm of each term in (2) yields the equivalent regularized model:

$$ \min_{U,H} \|H * U - G\|_2^2 + \lambda\, \varphi(U) + \gamma\, \psi(H) \qquad (3) $$

where $\|H * U - G\|_2^2$ is the fidelity term, $\varphi(U)$ and $\psi(H)$ are the regularization functions of U and H, and $\lambda$ and $\gamma$ are the corresponding weights.
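The step from the MAP estimate (2) to the regularized model can be made explicit under two standard assumptions, which are ours: i.i.d. Gaussian noise with variance $\sigma^2$, and Gibbs-form priors $p(U) \propto e^{-\lambda' \varphi(U)}$ and $p(H) \propto e^{-\gamma' \psi(H)}$. Here $\varphi$ and $\psi$ denote the image and kernel regularizers, and $\lambda', \gamma' > 0$ are constants. The notation is our own.

```latex
\begin{aligned}
-\log p(U,H \mid G)
  &= -\log p(G \mid U,H) - \log p(U) - \log p(H) + \text{const} \\
  &= \frac{1}{2\sigma^{2}}\,\|H * U - G\|_2^2 + \lambda'\,\varphi(U) + \gamma'\,\psi(H) + \text{const}.
\end{aligned}
```

Multiplying by $2\sigma^2$ does not change the minimizer. The problem therefore reduces to minimizing $\|H * U - G\|_2^2 + \lambda\varphi(U) + \gamma\psi(H)$ with $\lambda = 2\sigma^2\lambda'$ and $\gamma = 2\sigma^2\gamma'$.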

Under the MAP framework, some algorithms seek the optimal solution by exploiting sharp edges. However, when an image lacks sharp edges, solving the problem requires prior knowledge that can distinguish clear images from blurry ones. Pan et al. [14] noted the difference in the distribution of dark channel pixels between clear and blurry images. They proposed the dark channel prior, which performs well on natural, text, face and low-light images. To avoid failures caused by insufficient dark channel pixels, Yan et al. [15] introduced both dark and bright channels into the blind image deblurring model. Ge et al. [16] pointed out that these two methods fail when there are insufficient extreme pixels. They therefore constructed a non-linear channel (NLC) prior and introduced it into a blind image deblurring algorithm. However, these methods may not perform well on remote sensing images.

In this paper, we observe that the similarity between the LBPs of clear and blurry images can be exploited for blind image deblurring. Inspired by algorithms based on extreme channels and local priors, we propose a new optimization algorithm. We use the local binary pattern (LBP) [17] as a threshold to select the critical pixels that contain texture features, and we establish a mapping relationship between these pixels and the image. The intensities of the selected pixels are accumulated by the convex ℓ1-norm. The gradients of the different types of pixels are constrained with different norms. Because the restoration model is difficult to solve directly, we decompose the original problem into several sub-problems with the half-quadratic splitting method. In addition, we optimize the LBP prior indirectly by constructing a linear operator, and we adopt the fast iterative shrinkage-thresholding algorithm (FISTA) [18] to solve the related equations. The contributions of this paper are as follows:

- (1) We observe that LBP fully extracts the texture features of an image and that these features do not change significantly in the presence of blur. Therefore, the LBP of an image can be used, through a mapping, to locate the pixels that contain important texture information.

- (2) A new remote sensing image deblurring algorithm based on the LBP prior is proposed. It removes blur and prevents over-sharpening by classifying all pixels and processing each class differently.

- (3) Experimental results show that the proposed method has good stability and convergence and achieves highly competitive results on remote sensing images.

The rest of the paper is organized as follows. Section 2 summarizes recent related work on image deblurring. Section 3 introduces the LBP prior and establishes the blind image deblurring model and the corresponding optimization algorithm. Section 4 presents the experimental results. Section 5 analyzes the performance of the proposed algorithm quantitatively and discusses the algorithm. Section 6 concludes the paper.

2. Related Work

Blind image deblurring algorithms can be roughly divided into three categories: edge selection-based algorithms, image prior-based algorithms and deep learning-based algorithms. This section summarizes recent achievements in blind image restoration.

2.1. Edge Selection-Based Algorithms

As one of the key features carrying image information, edges are widely used in image deblurring. Joshi et al. [19] used sub-pixel differences to locate important edges for blur kernel estimation. Cho and Lee [20] used bilateral filtering and shock filtering to extract edge information. Xu and Jia [21] found that edges smaller than the blur kernel can degrade kernel estimation. They therefore proposed a two-stage scheme based on an edge selection criterion. Sun et al. [22] used a patch-based method to extract edge information, but this approach is computationally expensive and time-consuming. When an image lacks sharp edges, edge selection-based algorithms fail. In prior-based algorithms, by contrast, edge information does not disappear. It is implicit in the regularization terms or priors, e.g., the low-rank property of the gradient [23], the ℓ0-norm of the gradient of the latent image [24] and the local maximum gradient prior [25]. Gradient information has also received much attention in hyperspectral image restoration. Yuzuriha et al. [26] incorporated the low-rank nature of the gradient domain into the restoration model so that it can better handle anomalous variations.

2.2. Image Priors-Based Algorithms

By comparing clear and blurry images, researchers have proposed many priors that benefit image restoration. Shan et al. [27] used a probabilistic model to process natural images with noise and blur. After analyzing the statistical characteristics of images, Krishnan et al. [28] proposed the normalized sparsity measure ℓ1/ℓ2. Levin et al. [10] designed a maximum a posteriori (MAP) framework based on the distribution of image pixels. Kotera et al. [29] improved the MAP method using image priors with heavier tails than the Laplacian distribution and solved the problem with the augmented Lagrangian method. Michaeli and Irani [30] exploited the recurrence of image patches across scales to restore images. Ren et al. [23] minimized a weighted nuclear norm that combines the low-rank prior of similar patches in both the blurry image and its gradient map, improving the effectiveness of image deblurring. Zhong et al. [31] proposed a high-order variational model to process blurred images with impulse noise. After Pan et al. [14] achieved excellent results with the dark channel prior, sparse channels attracted much attention in blind image deblurring. Yan et al. [15] combined the dark channel and the bright channel into an extreme channel prior algorithm. Yang [32] and Ge [16] then further addressed the problems faced by extreme channel prior algorithms. Meanwhile, blind image deblurring algorithms based on local prior information have also achieved significant results. Examples include the local maximum gradient (LMG) prior proposed by Chen et al. [25] and the local maximum difference (LMD) prior proposed by Liu et al. [33]. The algorithm we propose also belongs to this category. Recently, Zhou et al. [34] built an image deblurring model of the luminance channel in the YCrCb color space based on the dark channel prior. This offers a new approach to processing color images. The image deblurring algorithm proposed by Chen et al. [35] exploits both saturated and unsaturated pixels. It effectively removes blur from night scene images and is instructive for processing night-time remote sensing images. Hyperspectral image restoration generally uses low-rank priors [26,36,37].

2.3. Deep Learning-Based Deblurring Methods

With the development of deep learning, deep learning-based image deblurring algorithms have also emerged. Early networks, such as those proposed by Sun et al. [38] and Schuler et al. [39], were still designed around the alternating direction method of multipliers used in traditional algorithms. Li et al. [40] combined deep networks with traditional algorithms, using a neural network to learn a prior that distinguishes clear images from blurry ones. However, this method performs poorly on some complex, severely motion-blurred images. Other methods do not estimate the blur kernel and instead obtain clear images directly through training. For example, Nah et al. [41] trained a multi-scale convolutional neural network (CNN), and Cai et al. [42] introduced the extreme channel prior into a CNN. Zhang et al. [43] and Suin et al. [44] used multi-patch networks to improve performance. To reduce computational complexity while obtaining sufficient image information, the feature pyramid has become a focus of multi-scale learning. Lin et al. [45] proposed a feature pyramid network (FPN) that fuses feature maps of different resolutions. New networks are constantly being proposed to handle different imaging conditions with shorter processing times, e.g., GAMSNet [46], ID-Net [47], DCTResNet [48] and LSFNet [49]. However, these methods have many model parameters and require large datasets and long training to achieve good results.

3. Local Binary Pattern Prior Model and Optimization

In this section, we briefly introduce the local prior, i.e., LBP, and construct an optimization model based on the LBP prior for blind image deblurring.

3.1. The Local Binary Pattern

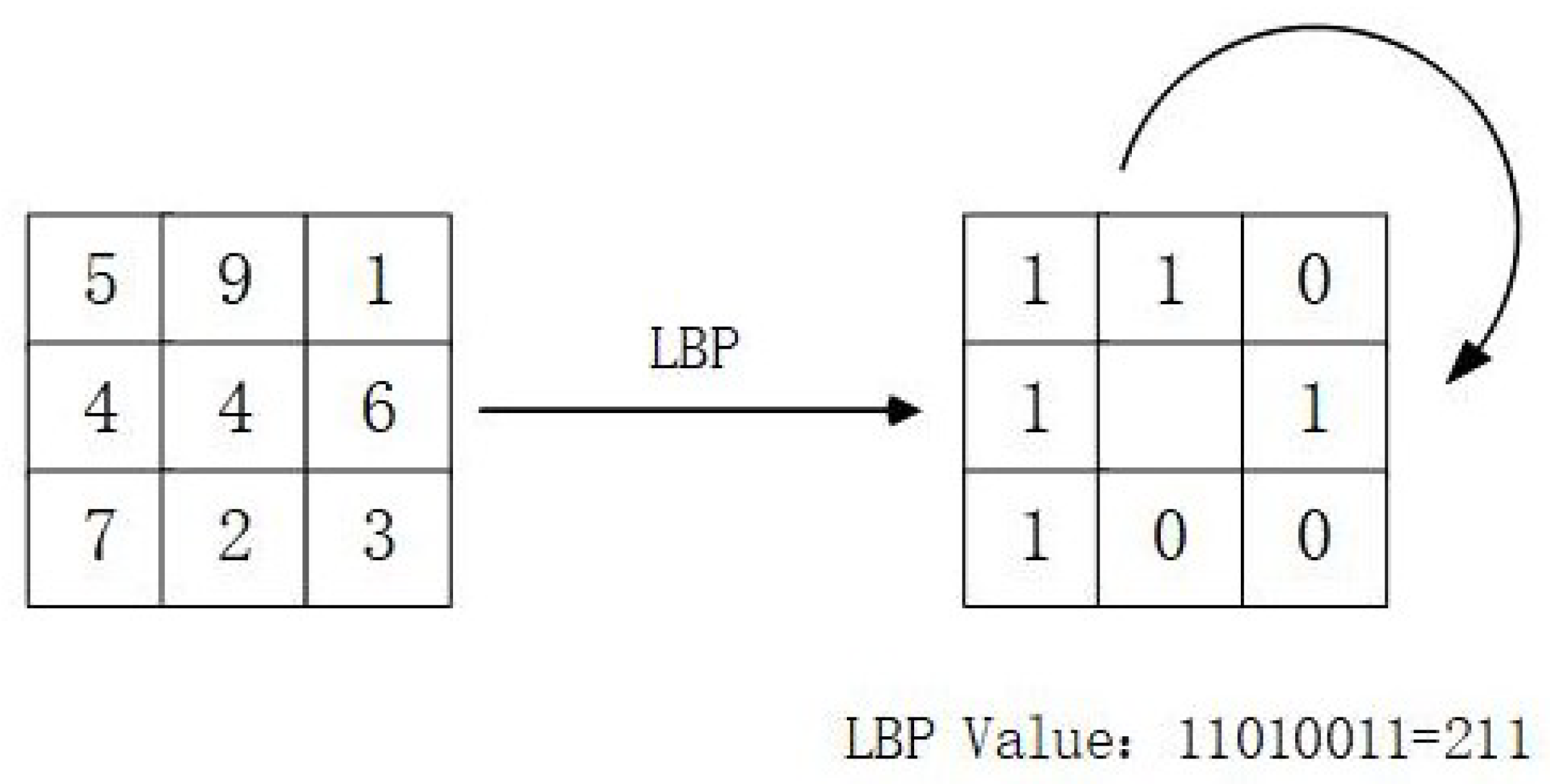

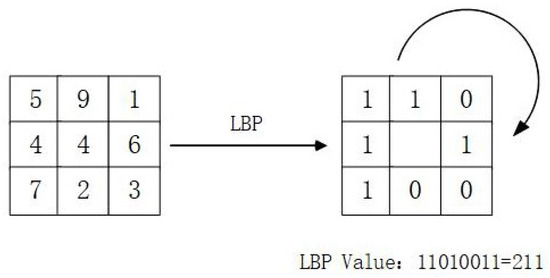

LBP has intensity and rotation invariance properties and can extract the local texture features of an image. Its principle is shown in Figure 1. In a 3 × 3 window, the center pixel is compared with its 8 neighboring pixels. When a neighboring pixel is smaller than the center pixel, its position is marked as 0; otherwise, it is marked as 1. The resulting 8-bit binary number is then encoded to obtain the LBP value of the center pixel.

Figure 1.

Schematic diagram of LBP feature extraction principle.

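Its formula is:

$$ \mathrm{LBP}(x_c) = \sum_{p=0}^{7} s(I_p - I_c)\, 2^{p}, \qquad s(t) = \begin{cases} 1, & t \ge 0 \\ 0, & t < 0 \end{cases} $$

where $x_c$ is the center pixel, $I_c$ is the intensity of the center pixel, $I_p$ is the intensity of the p-th neighboring pixel, and s(t) is the thresholding (unit step) function.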
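The 3 × 3 LBP operator described above, together with the normalized histogram plotted in Figure 2, can be sketched in NumPy as follows. The neighbor ordering (clockwise from the top-left pixel) and the edge-replicating border handling are our assumptions. Any fixed ordering gives a valid code, but the numerical LBP values depend on the ordering chosen.

```python
import numpy as np

# Neighbour offsets (dy, dx), clockwise from the top-left pixel; bit p of the
# LBP code belongs to the p-th entry. This ordering is an assumed convention.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_map(img):
    """Basic 3x3 LBP: bit p = s(I_p - I_c), with s(t) = 1 if t >= 0 else 0."""
    img = np.asarray(img, dtype=float)
    rows, cols = img.shape
    padded = np.pad(img, 1, mode="edge")          # replicate borders
    codes = np.zeros((rows, cols), dtype=np.int64)
    for p, (dy, dx) in enumerate(OFFSETS):
        neighbour = padded[1 + dy : 1 + dy + rows, 1 + dx : 1 + dx + cols]
        codes += (neighbour >= img) * (1 << p)    # add 2^p where s(I_p - I_c) = 1
    return codes.astype(np.uint8)


def lbp_histogram(codes):
    """Normalized distribution of the 256 possible LBP values."""
    hist = np.bincount(codes.ravel(), minlength=256).astype(float)
    return hist / hist.sum()


patch = np.array([[6, 5, 2],
                  [7, 6, 1],
                  [9, 8, 7]])
print(lbp_map(patch)[1, 1])  # bits 1,0,0,0,1,1,1,1 -> 1 + 16 + 32 + 64 + 128 = 241
```

Because only the signs of the differences $I_p - I_c$ are kept, any increasing intensity transform, such as $2I + 3$, leaves the LBP map unchanged.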

3.2. The Local Binary Pattern Prior

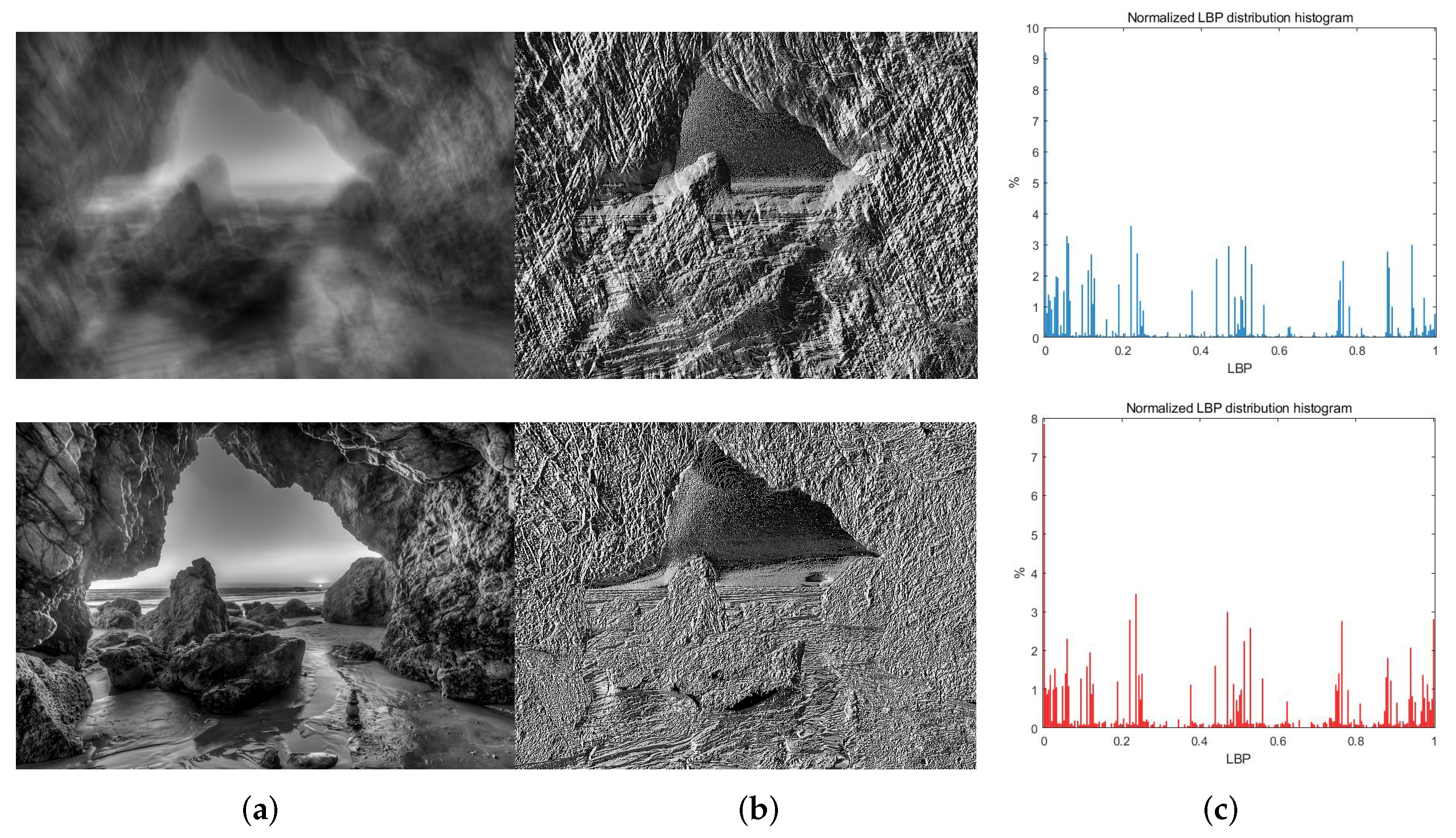

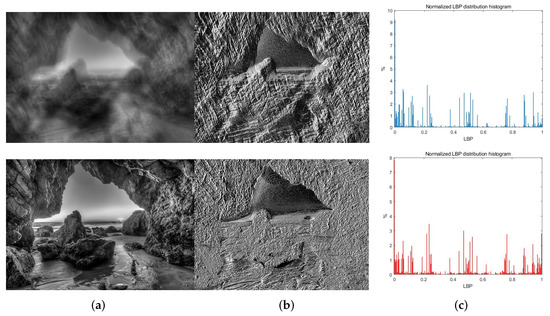

By comparing the LBP histograms of a clear image and its blurred version, we observe that blur does not substantially change the distribution of LBP values. LBP can therefore be used to identify the key pixels during image restoration. Different types of pixels are then processed accordingly to sharpen the image while removing fine textures (Figure 2).

Figure 2.

Top: A blurry image, corresponding LBP map and LBP normalized distribution histogram. Bottom: The clear image, corresponding LBP map and LBP normalized distribution histogram. (a) Image pair [50]; (b) LBPs; (c) LBP normalized distribution histograms.

For the key pixels, we accumulate their intensities with the convex ℓ1-norm and define the LBP prior as:

At the same time, as shown in Formula (7), we constrain the gradient of key pixels with the -norm and use the -norm to constrain the gradients of other pixels,

where and are the upper and lower limits of the threshold, respectively, is the LBP value of the image and ∇ is the gradient operator.
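The key-pixel selection, and the mapping matrix C that Section 3.3 builds from it, can be sketched as follows. This sketch rests on two assumptions of our own. First, a pixel is treated as a key pixel when its LBP value lies between the lower and upper thresholds; the threshold values below are hypothetical. Second, C is stored densely only because the example is tiny. For full-size images, a sparse format such as `scipy.sparse.csr_matrix` would be the natural choice.

```python
import numpy as np


def key_pixel_mask(lbp, t_low, t_high):
    """Key pixels: LBP value within [t_low, t_high] (assumed selection rule)."""
    lbp = np.asarray(lbp)
    return (lbp >= t_low) & (lbp <= t_high)


def mapping_matrix(mask):
    """0/1 matrix C of shape (k, n) that selects the k key pixels from the
    row-major flattened image with n pixels."""
    idx = np.flatnonzero(mask)                  # row-major indices of key pixels
    C = np.zeros((idx.size, mask.size))
    C[np.arange(idx.size), idx] = 1.0
    return C


rng = np.random.default_rng(0)
u = rng.random((8, 8))                           # toy latent image
lbp = rng.integers(0, 256, size=(8, 8))          # stand-in LBP map
mask = key_pixel_mask(lbp, t_low=32, t_high=223)  # hypothetical thresholds
C = mapping_matrix(mask)
key_intensities = C @ u.ravel()                  # the key-pixel values, i.e. u[mask]
prior_value = np.abs(key_intensities).sum()      # l1 accumulation over key pixels
```

Because C only selects pixels, $C C^{\top}$ is the identity, and $C^{\top}$ scatters key-pixel values back into an image that is zero elsewhere. This linear form is what makes the LBP term amenable to standard convex solvers.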

Based on the above analysis, we adopt MAP as the framework and introduce the LBP prior to design an effective optimization algorithm. The objective function is defined as:

where the three coefficients are the weights of the corresponding regularization terms. The terms of the objective function are the fidelity term, the LBP prior term, the image gradient regularization term and a term that keeps the blur kernel H smooth. The projected alternating minimization (PAM) algorithm decomposes the objective function into sub-problems for the clear image U and the blur kernel H:

where the index denotes the coordinates of the blur kernel elements. Note that all elements of H are non-negative and sum to 1. After the blur kernel has been estimated iteratively, a clear image can be restored with existing non-blind restoration methods.

3.3. Estimating the Latent Image

Because of the LBP prior and the regularization term, we use the half-quadratic splitting method to decompose (9) into three sub-problems, which can be expressed as:

where w and z are auxiliary variables and the two associated coefficients are penalty parameters. When both penalty parameters tend to infinity, (12) is equivalent to (9). We first solve for the auxiliary variable w:

where the operator involved is non-linear, so this sub-problem cannot be solved directly as a linear problem. We therefore construct a sparse mapping matrix C from the key pixels to the original image, defined as:

As in [16], C is computed explicitly, and (14) is equivalent to:

This is a classic convex ℓ1-regularized problem, which can be solved by FISTA [18]. The shrinkage operator is defined as:

The solution process is shown in Algorithm 1.

| Algorithm 1: Solving auxiliary variables w. |

| Input: , , , , , . |

| , , and . |

| Maximum iterations M, initialize . |

| While |

| . |

| . |

| . |

| . |

| End while |

| . |
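Algorithm 1 applies FISTA to an ℓ1-regularized problem. The exact data term of (16) is not reproduced here, so the sketch below assumes the generic form $\min_x \tfrac12\|Ax - b\|_2^2 + \lambda\|x\|_1$. Here A, b and λ are placeholders; in the paper's setting, the operator would involve the mapping matrix C. The step size 1/L, where L is the squared spectral norm of A, is the standard choice for FISTA [18]. The default of 500 iterations mirrors the value reported in Section 3.5.

```python
import numpy as np


def soft_threshold(x, tau):
    """Shrinkage operator S_tau(x) = sign(x) * max(|x| - tau, 0)."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


def fista_l1(A, b, lam, n_iter=500):
    """Minimise 0.5 * ||A x - b||_2^2 + lam * ||x||_1 with FISTA."""
    L = np.linalg.norm(A, 2) ** 2                     # Lipschitz constant of the smooth part's gradient
    x = np.zeros(A.shape[1])
    y = x.copy()
    t = 1.0
    for _ in range(n_iter):
        grad = A.T @ (A @ y - b)                       # gradient of the data term at y
        x_new = soft_threshold(y - grad / L, lam / L)  # proximal (shrinkage) step
        t_new = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        y = x_new + ((t - 1.0) / t_new) * (x_new - x)  # momentum extrapolation
        x, t = x_new, t_new
    return x


rng = np.random.default_rng(0)
A = rng.standard_normal((50, 20))
x_true = np.zeros(20)
x_true[[2, 7, 15]] = [1.5, -2.0, 0.7]
x_hat = fista_l1(A, A @ x_true, lam=1e-3)   # recovers the sparse vector closely
```

With A equal to the identity, the first iteration already returns the soft-thresholded vector $\mathcal{S}_\lambda(b)$, which is the exact minimizer in that case.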

The next step is to solve for z:

When , then

When , then

Given w and z, we finally solve for U via:

Although (21) is a least-squares problem, it cannot be solved directly with the fast Fourier transform (FFT), because not all of its terms are convolutions that the Fourier transform diagonalizes. We therefore introduce a new auxiliary variable d:

where the added coefficient is a penalty parameter. The alternating direction method of multipliers is used to decompose (22) into two sub-problems:

Both (23) and (24) have closed-form solutions. The solution of (23) is as follows:

We can use FFT to solve Equation (24):

where $\mathcal{F}(\cdot)$, $\overline{\mathcal{F}(\cdot)}$ and $\mathcal{F}^{-1}(\cdot)$ denote the FFT, the complex conjugate of the FFT and the inverse FFT, respectively.
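Because the exact form of (24) is not reproduced here, the sketch below assumes a representative quadratic sub-problem of the kind that half-quadratic splitting produces: $\min_U \|H * U - G\|_2^2 + \eta\|U - d\|_2^2 + \beta\|\nabla U - z\|_2^2$. It uses circular boundaries and forward differences for $\nabla$. The weights η and β and the helper names `psf2otf` (modeled on MATLAB's function of the same name) and `solve_u` are our own. Under these assumptions, the minimizer has the closed form $U = \mathcal{F}^{-1}\big[(\overline{\mathcal{F}(H)}\mathcal{F}(G) + \eta\mathcal{F}(d) + \beta\sum_i \overline{\mathcal{F}(\partial_i)}\mathcal{F}(z_i)) / (|\mathcal{F}(H)|^2 + \eta + \beta\sum_i|\mathcal{F}(\partial_i)|^2)\big]$.

```python
import numpy as np


def psf2otf(psf, shape):
    """FFT of a kernel zero-padded to `shape`, with its centre moved to the origin."""
    pad = np.zeros(shape)
    pad[: psf.shape[0], : psf.shape[1]] = psf
    pad = np.roll(pad, (-(psf.shape[0] // 2), -(psf.shape[1] // 2)), axis=(0, 1))
    return np.fft.fft2(pad)


def solve_u(g, h, d, zx, zy, eta, beta):
    """Closed-form minimiser of ||h*u - g||^2 + eta*||u - d||^2
    + beta*(||dx u - zx||^2 + ||dy u - zy||^2) under periodic boundaries."""
    fh = psf2otf(h, g.shape)
    fdx = psf2otf(np.array([[1.0, -1.0]]), g.shape)    # forward difference along x
    fdy = psf2otf(np.array([[1.0], [-1.0]]), g.shape)  # forward difference along y
    num = (np.conj(fh) * np.fft.fft2(g) + eta * np.fft.fft2(d)
           + beta * (np.conj(fdx) * np.fft.fft2(zx) + np.conj(fdy) * np.fft.fft2(zy)))
    den = np.abs(fh) ** 2 + eta + beta * (np.abs(fdx) ** 2 + np.abs(fdy) ** 2)
    return np.real(np.fft.ifft2(num / den))


# Sanity check: if d and z are taken from the true image, every term is
# minimised by that image, so the closed form must return it.
rng = np.random.default_rng(0)
u_true = rng.random((32, 32))
h = rng.random((5, 5))
h /= h.sum()
g = np.real(np.fft.ifft2(psf2otf(h, u_true.shape) * np.fft.fft2(u_true)))
zx = np.roll(u_true, -1, axis=1) - u_true   # forward differences of the true image
zy = np.roll(u_true, -1, axis=0) - u_true
u_est = solve_u(g, h, d=u_true, zx=zx, zy=zy, eta=0.5, beta=2.0)
```

The η term keeps the denominator strictly positive, so the division is safe even at frequencies where the blur kernel's spectrum vanishes.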

The process of solving U is shown in Algorithm 2.

| Algorithm 2: Estimating the latent image. |

| Input: Blurry image G and blur kernel H. |

| Initialize , . |

| For 1 to 5 |

| Solve w using Algorithm 1. |

| Initialize . |

| For 1 to 4 |

| Solve d using Equation (23). |

| Initialize . |

| While |

| Solve z using Equation (18). |

| Solve U using Equation (26). |

| . |

| End while |

| . |

| End for |

| . |

| End for |

| Output: latent image U. |

3.4. Estimating the Blur Kernel

Following a variety of restoration algorithms [14,15,16,24,25,38], we replace the image intensities in (10) with image gradients to estimate the blur kernel more accurately. The blur kernel is obtained by solving:

The blur kernel can be obtained by using FFT:

After the blur kernel is obtained, it is made non-negative and normalized:

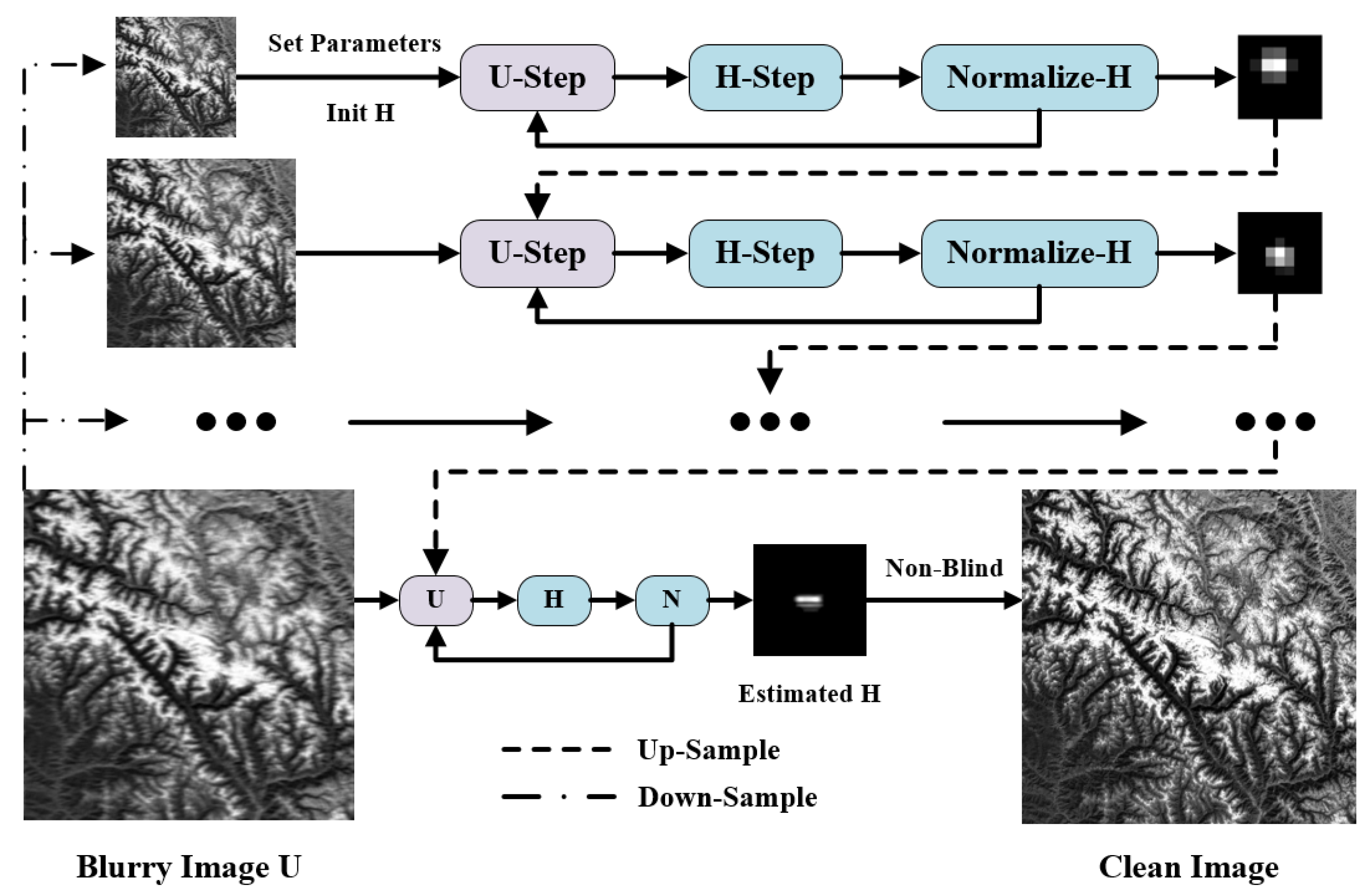

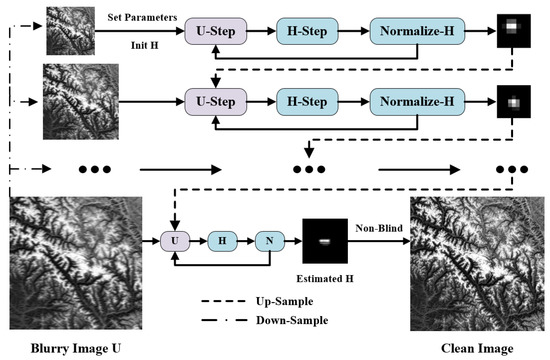

where m is the weight. The procedure for estimating the blur kernel is shown in Algorithm 3, and Figure 3 shows a brief flowchart of the algorithm.
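The gradient-domain kernel update and the subsequent projection can be sketched as below, under assumptions of our own. The kernel sub-problem is taken as $\min_H \sum_i \|\partial_i U * H - \partial_i G\|_2^2 + \gamma\|H\|_2^2$ with circular boundaries. Its FFT solution is therefore $\mathcal{F}(H) = \sum_i \overline{\mathcal{F}(\partial_i U)}\mathcal{F}(\partial_i G) / (\sum_i|\mathcal{F}(\partial_i U)|^2 + \gamma)$. A hypothetical relative threshold (`rel_thresh`) zeroes small entries, which is a common heuristic. It is not necessarily what the paper's weight m does.

```python
import numpy as np


def psf2otf(psf, shape):
    """FFT of a kernel zero-padded to `shape`, with its centre moved to the origin."""
    pad = np.zeros(shape)
    pad[: psf.shape[0], : psf.shape[1]] = psf
    pad = np.roll(pad, (-(psf.shape[0] // 2), -(psf.shape[1] // 2)), axis=(0, 1))
    return np.fft.fft2(pad)


def estimate_kernel(u, g, ksize, gamma=1e-8, rel_thresh=0.05):
    """Gradient-domain kernel update followed by projection onto valid kernels."""
    fu, fg = np.fft.fft2(u), np.fft.fft2(g)
    num = np.zeros(u.shape, dtype=complex)
    den = np.full(u.shape, gamma)
    for diff in ([[1.0, -1.0]], [[1.0], [-1.0]]):      # forward differences in x and y
        fd = psf2otf(np.array(diff), u.shape)
        du, dg = fd * fu, fd * fg                      # spectra of the image gradients
        num += np.conj(du) * dg
        den += np.abs(du) ** 2
    h_full = np.real(np.fft.ifft2(num / den))
    # Undo psf2otf's centring and crop to the kernel support.
    h = np.roll(h_full, (ksize // 2, ksize // 2), axis=(0, 1))[:ksize, :ksize]
    h = np.maximum(h, 0.0)                             # non-negativity
    h[h < rel_thresh * h.max()] = 0.0                  # hypothetical small-entry pruning
    return h / h.sum()                                 # entries sum to 1


rng = np.random.default_rng(0)
u = rng.random((64, 64))
h_true = rng.uniform(0.5, 1.0, size=(5, 5))
h_true /= h_true.sum()
g = np.real(np.fft.ifft2(psf2otf(h_true, u.shape) * np.fft.fft2(u)))
h_est = estimate_kernel(u, g, ksize=5)                 # close to h_true
```

Image gradients have no zero-frequency (DC) component, so this sub-problem alone does not determine the kernel's sum; with γ > 0 the unnormalized estimate sums to zero. This is one reason the normalization step is needed.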

| Algorithm 3: Estimating the blur kernel. |

| Input: Blurry image G. |

| Initialize H with results from the coarser level. |

| While i do |

| Solve U using Algorithm 2. |

| Solve H using Equation (28). |

| End while |

| Output: Blur kernel H and intermediate latent image U. |

Figure 3.

A brief flow-chart of this algorithm.

3.5. Algorithm Implementation

This section describes the implementation details of the algorithm. To obtain a more accurate blur kernel, we construct a coarse-to-fine multi-scale image pyramid with a down-sampling factor of , and perform five iterations at each pyramid level. After estimating the latent clear image U and the blur kernel H at one level, we up-sample H and pass it to the next level. We usually set , , , , and . The number of FISTA iterations is empirically set to 500. These parameters can be adjusted as needed. Finally, a clear image is obtained by applying the estimated blur kernel in an existing non-blind deblurring method.
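The coarse-to-fine schedule can be sketched as follows. The down-sampling factor is not reproduced in the text above, so the default of √2/2 is an assumption; it is a common choice in coarse-to-fine deblurring code. The rule of keeping kernel sizes odd, the stopping size of 3 pixels, and the helper name `pyramid_levels` are likewise hypothetical.

```python
import numpy as np


def pyramid_levels(image_shape, kernel_size, factor=np.sqrt(0.5), min_kernel=3):
    """Return (scale, image_shape, kernel_size) per level, coarse to fine."""
    levels = []
    scale = 1.0
    while kernel_size * scale >= min_kernel:
        k = int(round(kernel_size * scale))
        if k % 2 == 0:
            k += 1                                  # keep the kernel size odd
        shape = tuple(max(1, int(round(n * scale))) for n in image_shape)
        levels.append((scale, shape, k))
        scale *= factor
    return levels[::-1]                             # coarsest level first


levels = pyramid_levels((256, 256), 25)
for scale, shape, k in levels:
    # At each level: run the alternating U/H updates, then up-sample H
    # to the next level's kernel size (not shown).
    print(f"scale={scale:.3f} image={shape} kernel={k}x{k}")
```

Starting at a coarse level, where the blur spans only a few pixels, gives the kernel estimate a good initialization before the finer levels refine it.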

4. Experiment Results

The experiments use both simulated and real remote sensing image data. Our method is tested on the AID dataset (http://www.captain-whu.com/project/AID/, accessed on 26 November 2021). We compare our method with four algorithms that use the heavy-tailed prior (HTP) [29], the dark channel prior (Dark) [14], the ℓ0-regularized intensity and gradient prior (L0) [24] and the non-linear channel prior (NLCP) [16].

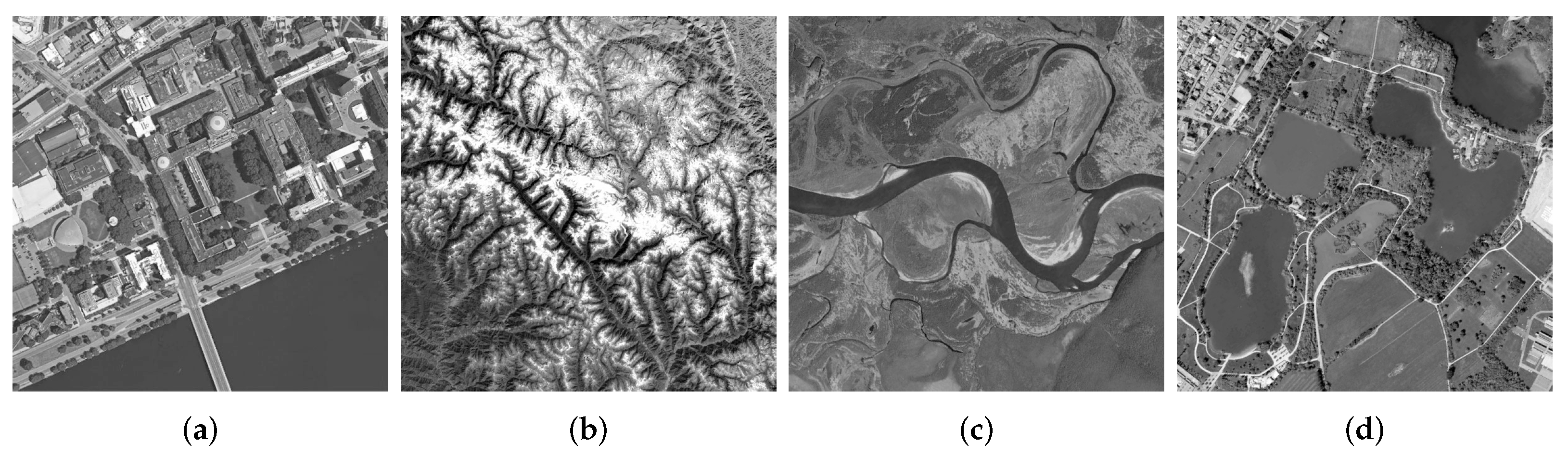

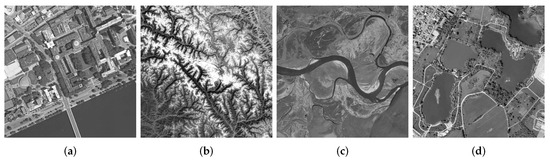

4.1. Simulate Remote Sensing Image Data

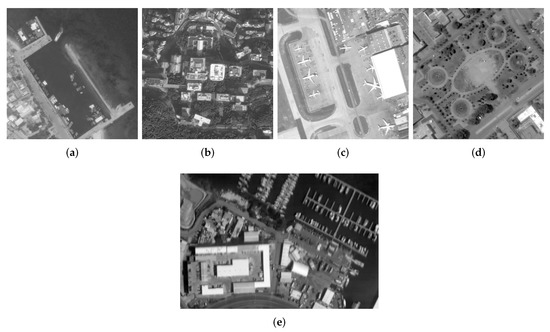

AID is an aerial image dataset composed of sample images collected from Google Earth. It contains 10,000 images of 30 aerial scene types, all labeled by experts in remote sensing image interpretation. For the simulated experiments, we selected four images from the AID dataset to verify the effectiveness of the algorithm, as shown in Figure 4. Considering the types of blur that occur in real remote sensing images, we added motion blur, Gaussian blur and defocus blur to the images. We used Peak Signal-to-Noise Ratio (PSNR), Structural Similarity (SSIM) [51] and Root Mean Square Error (RMSE) as evaluation metrics.
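The three kinds of synthetic blur can be generated as in the sketch below. The parameters follow Sections 4.1.1 to 4.1.3 where they are stated: a 10-pixel motion displacement, a 20 × 20 Gaussian with standard deviation 0.5, and a defocus radius of 2. The motion angle is not reproduced in the text, so the default angle here is a placeholder. The line-sampling motion kernel and the binary defocus disk are simplified constructions of our own; MATLAB's `fspecial('disk')`, for instance, anti-aliases the disk edge.

```python
import numpy as np


def motion_kernel(length=10, angle_deg=0.0, samples=1000):
    """Linear motion blur: accumulate points sampled along a centred segment."""
    size = 2 * int(np.ceil(length / 2)) + 1             # odd support
    c = size // 2
    t = np.linspace(-length / 2, length / 2, samples)
    theta = np.deg2rad(angle_deg)
    cols = np.rint(c + t * np.cos(theta)).astype(int)
    rows = np.rint(c - t * np.sin(theta)).astype(int)   # image rows grow downwards
    k = np.zeros((size, size))
    np.add.at(k, (rows, cols), 1.0)                     # count samples per pixel
    return k / k.sum()


def gaussian_kernel(size=20, sigma=0.5):
    """Isotropic Gaussian sampled on a size x size grid (centred between pixels if size is even)."""
    ax = np.arange(size) - (size - 1) / 2.0
    xx, yy = np.meshgrid(ax, ax)
    k = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def defocus_kernel(radius=2):
    """Uniform (binary) disk of the given radius."""
    ax = np.arange(-radius, radius + 1)
    xx, yy = np.meshgrid(ax, ax)
    k = (xx ** 2 + yy ** 2 <= radius ** 2).astype(float)
    return k / k.sum()


kernels = {
    "motion": motion_kernel(10, angle_deg=0.0),   # placeholder angle
    "gaussian": gaussian_kernel(20, 0.5),
    "defocus": defocus_kernel(2),
}
```

All three kernels are normalized to sum to 1, so blurring preserves the average brightness of the image.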

Figure 4.

Selected remote sensing images (simulated).

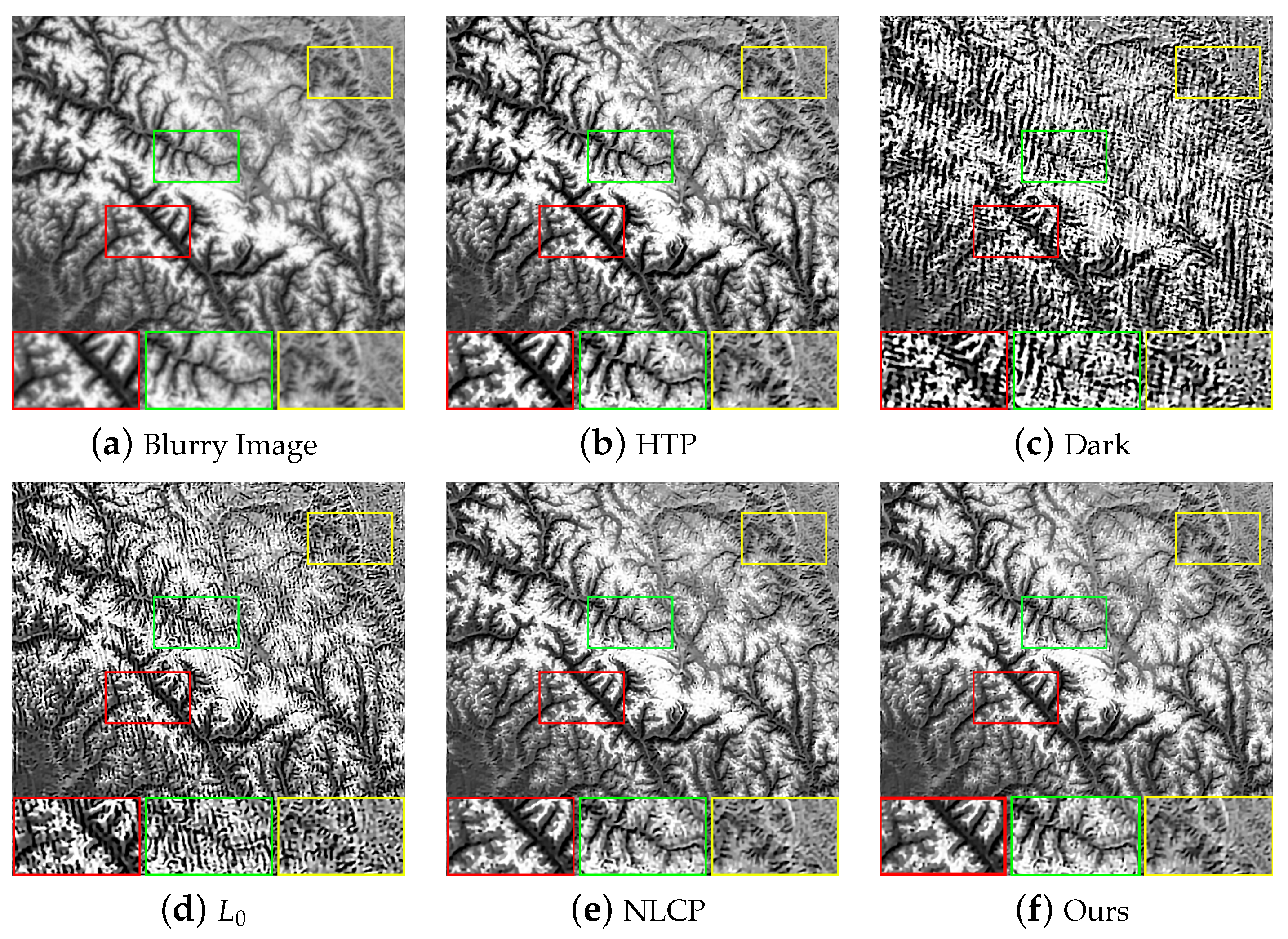

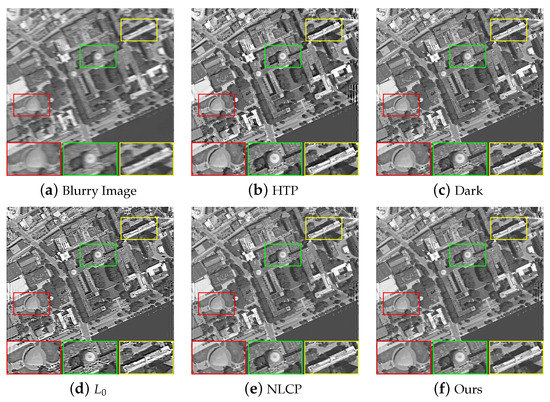

4.1.1. Motion Blur

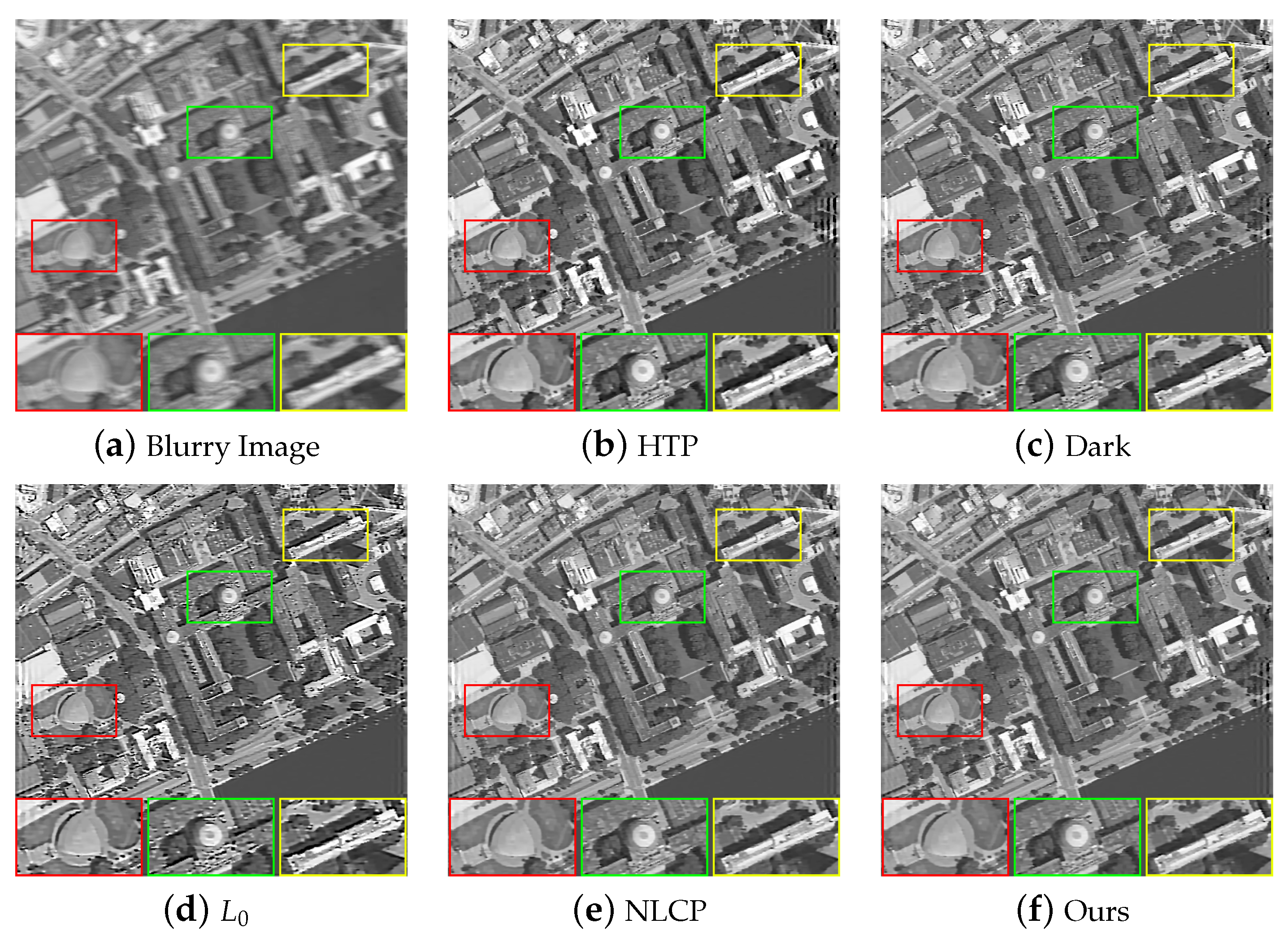

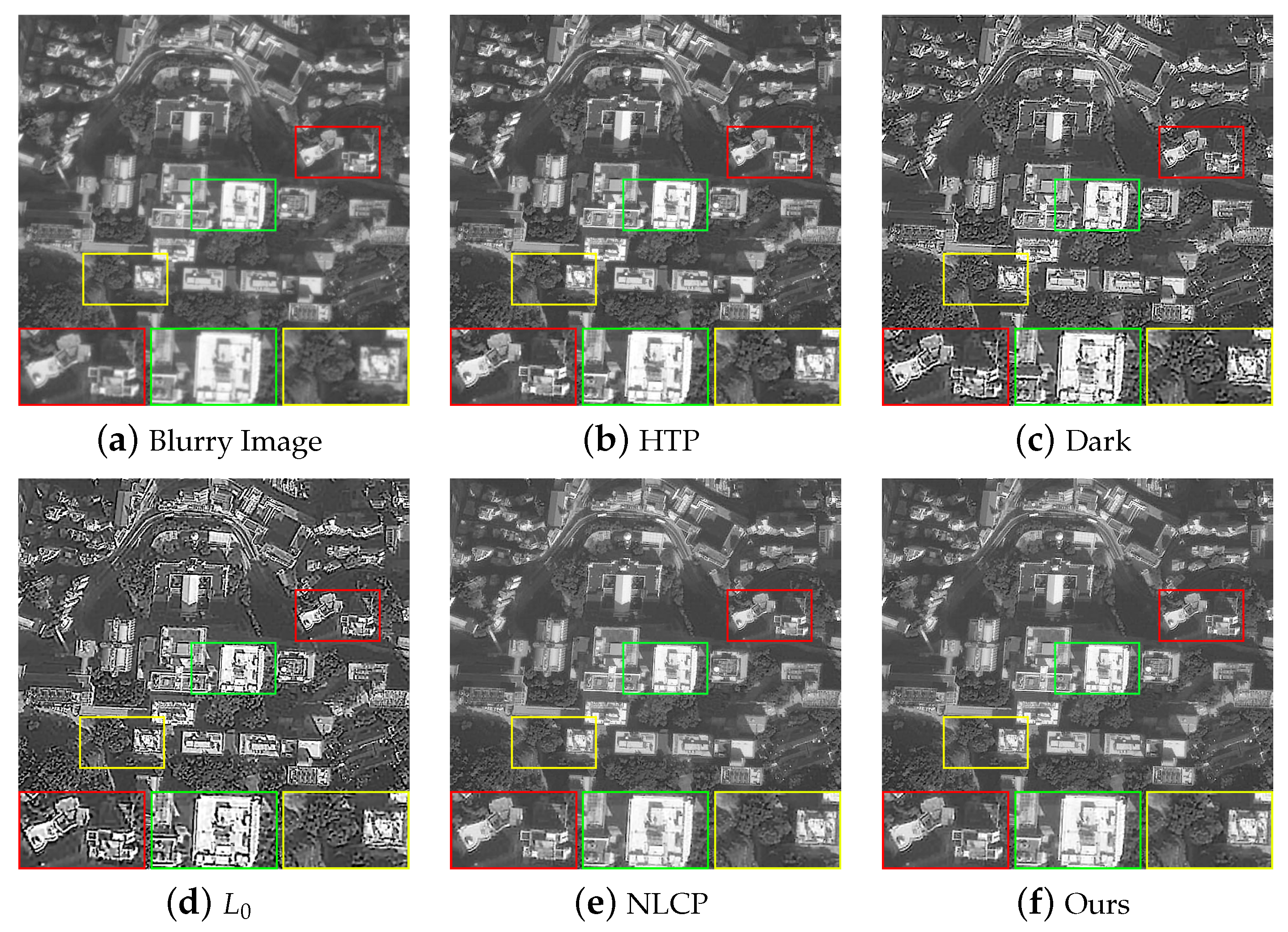

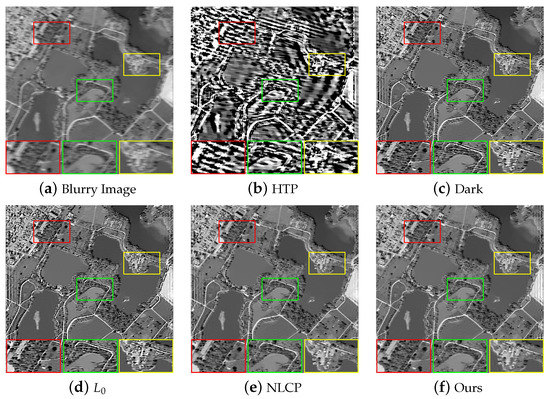

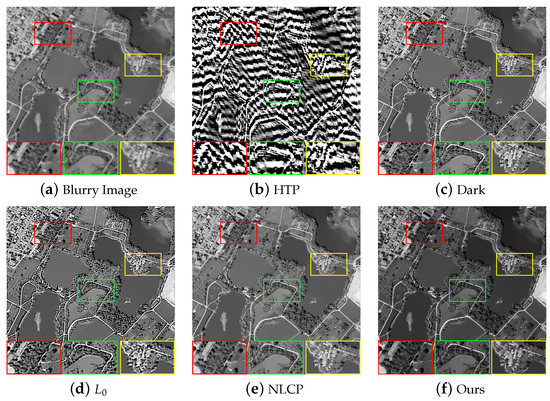

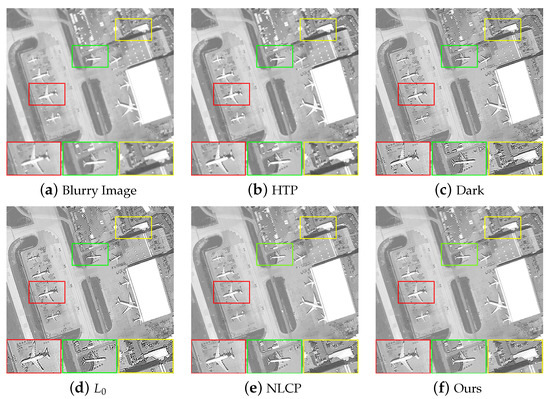

We added motion blur with an angle of and a displacement of 10 pixels to the remote sensing images. The results of each method are shown in Table 1. HTP sharpens the main contour edges of the image very well, but it retains image details poorly, and artifacts remain in its results. Dark and L0 apply the ℓ0-norm to their image priors, which leads to over-sharpening. This problem is severe for remote sensing images with complex texture details. NLCP and our method accumulate their image prior terms with the convex ℓ1-norm. Their results have good visual quality and do not show the over-sharpening seen with Dark and L0, but our method achieves better PSNR, SSIM and RMSE. Moreover, on Figure 4b, which contains abundant details, NLCP sharpens tiny details and degrades the quality of the result. In general, our method performs well on remote sensing images with motion blur. Figure 5 and Figure 6 show representative results.

Table 1.

Quantitative Comparisons on Remote Sensing Image with Motion Blur.

Figure 5.

Visual comparison of remote sensing image (Figure 4a) with motion blur processed by different methods.

Figure 6.

Visual comparison of remote sensing image (Figure 4d) with motion blur processed by different methods.

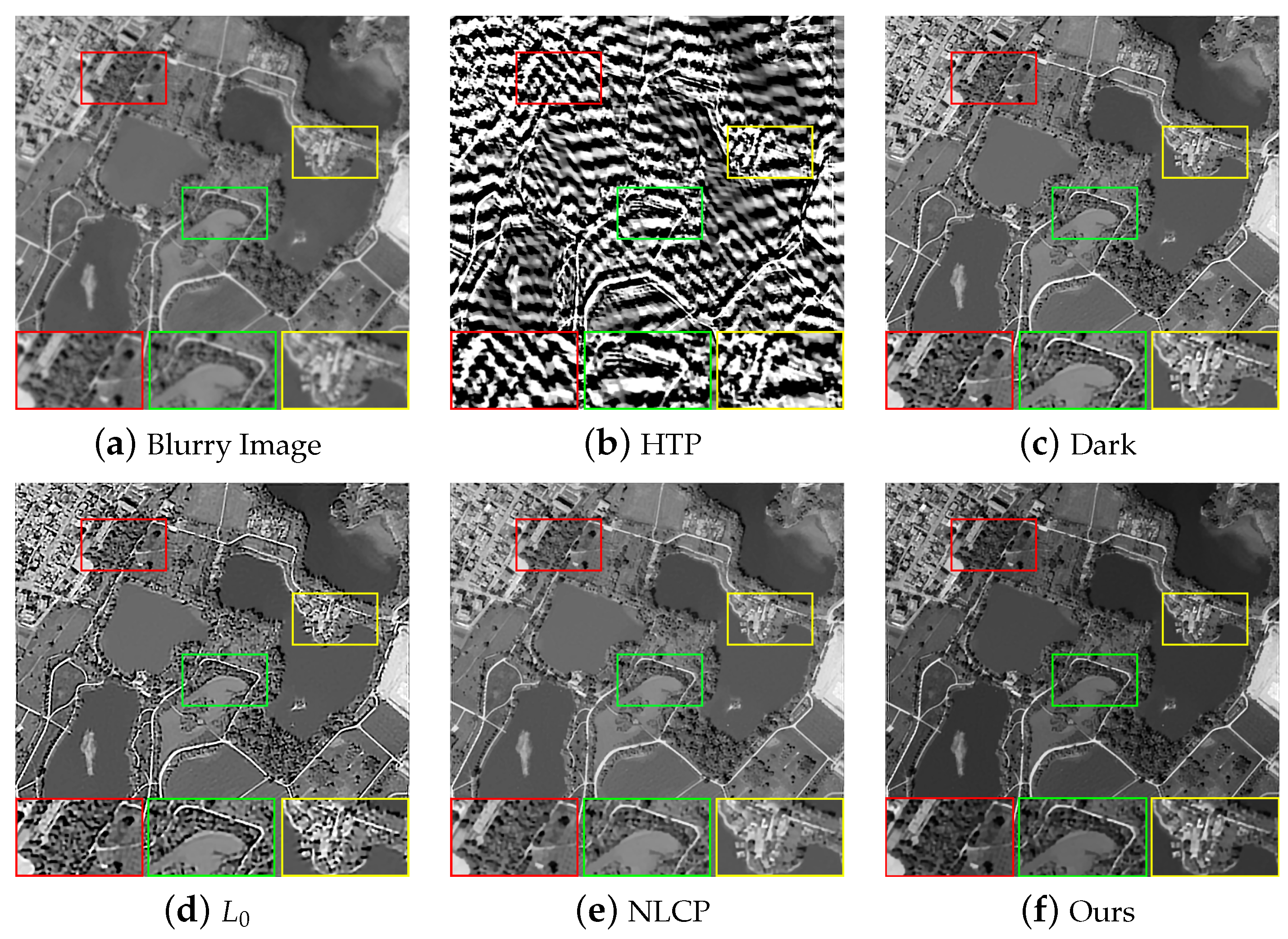

4.1.2. Gaussian Blur

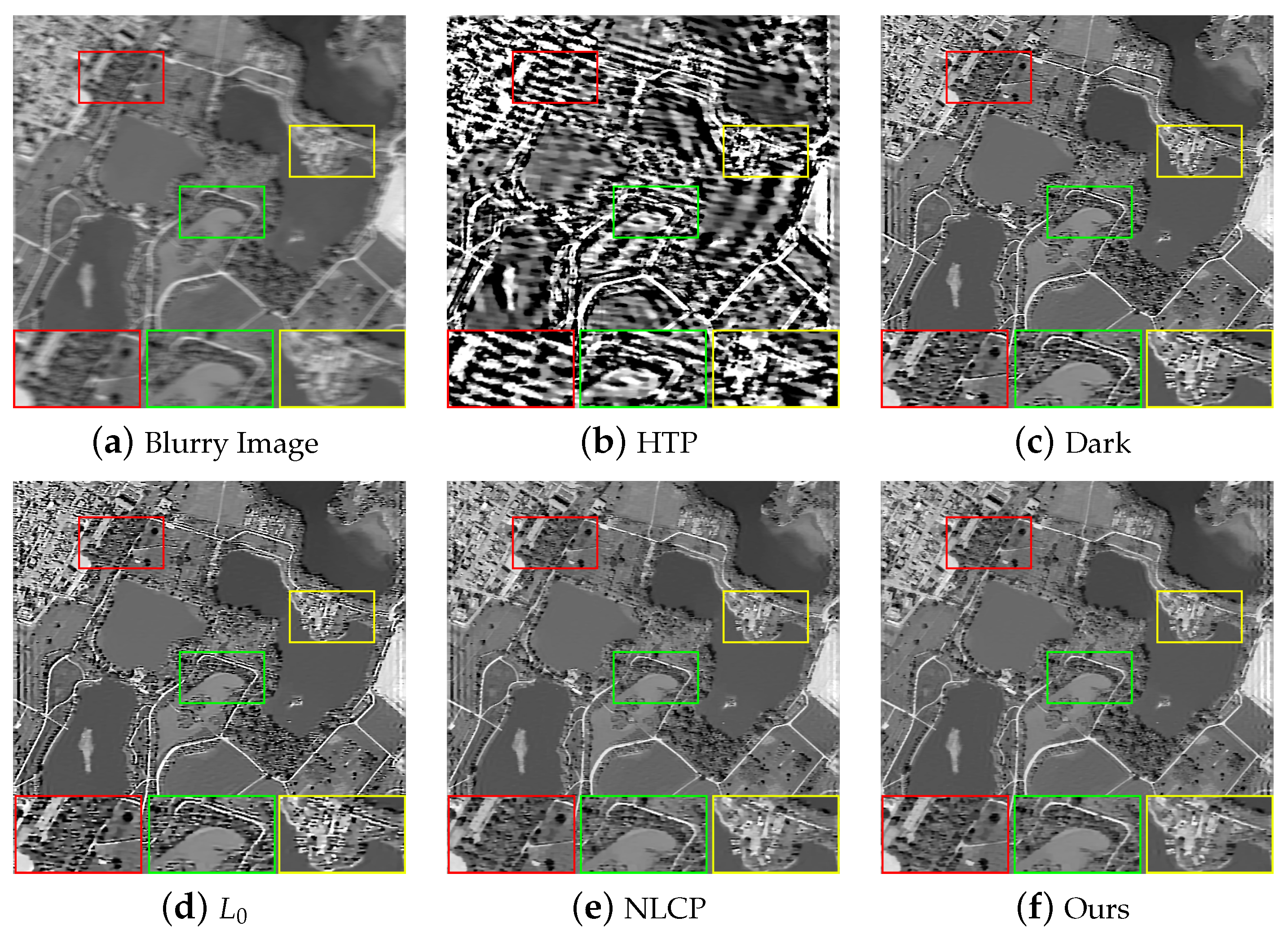

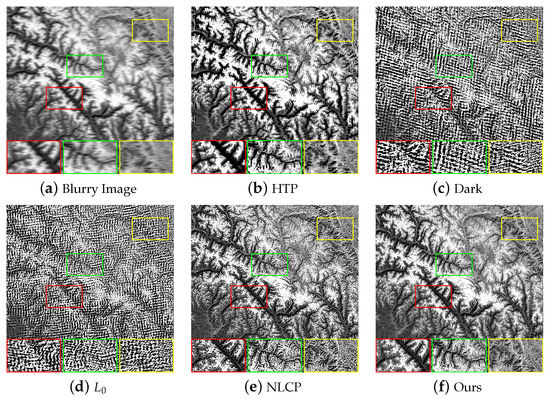

For Gaussian blur, we set the kernel size to 20 × 20 and the standard deviation to 0.5. The results of each method are shown in Table 2. The behavior of each method on Gaussian blur is similar to that on motion blur. The images processed by HTP lack detail. Dark and L0 severely damage images with rich details. NLCP performs well in most cases, and its results are visually similar to ours. However, on Figure 4b, which contains abundant details, it still sharpens tiny details, which considerably degrades both the visual quality and the objective metrics. In general, when removing Gaussian blur from remote sensing images, our method achieves good visual quality and objective metrics. Figure 7 and Figure 8 show representative results.

Table 2.

Quantitative Comparisons on Remote Sensing Image with Gaussian Blur.

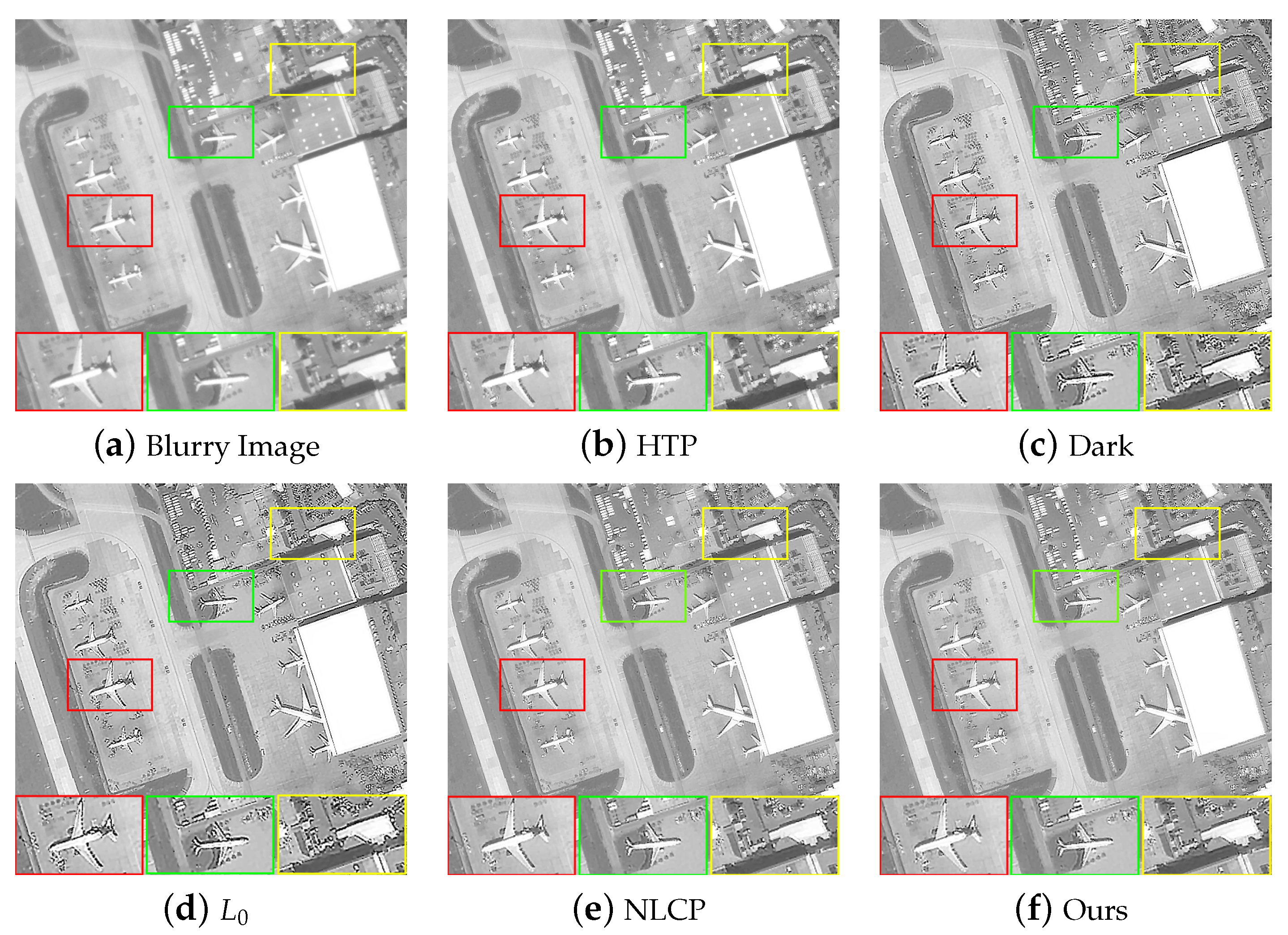

Figure 7.

Visual comparison of remote sensing image (Figure 4b) with Gaussian blur processed by different methods.

Figure 8.

Visual comparison of remote sensing image (Figure 4c) with Gaussian blur processed by different methods.

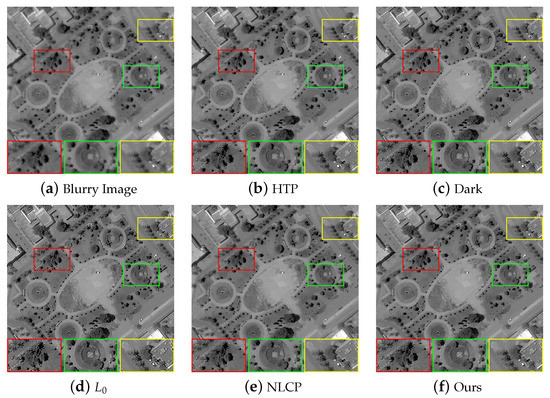

4.1.3. Defocus Blur

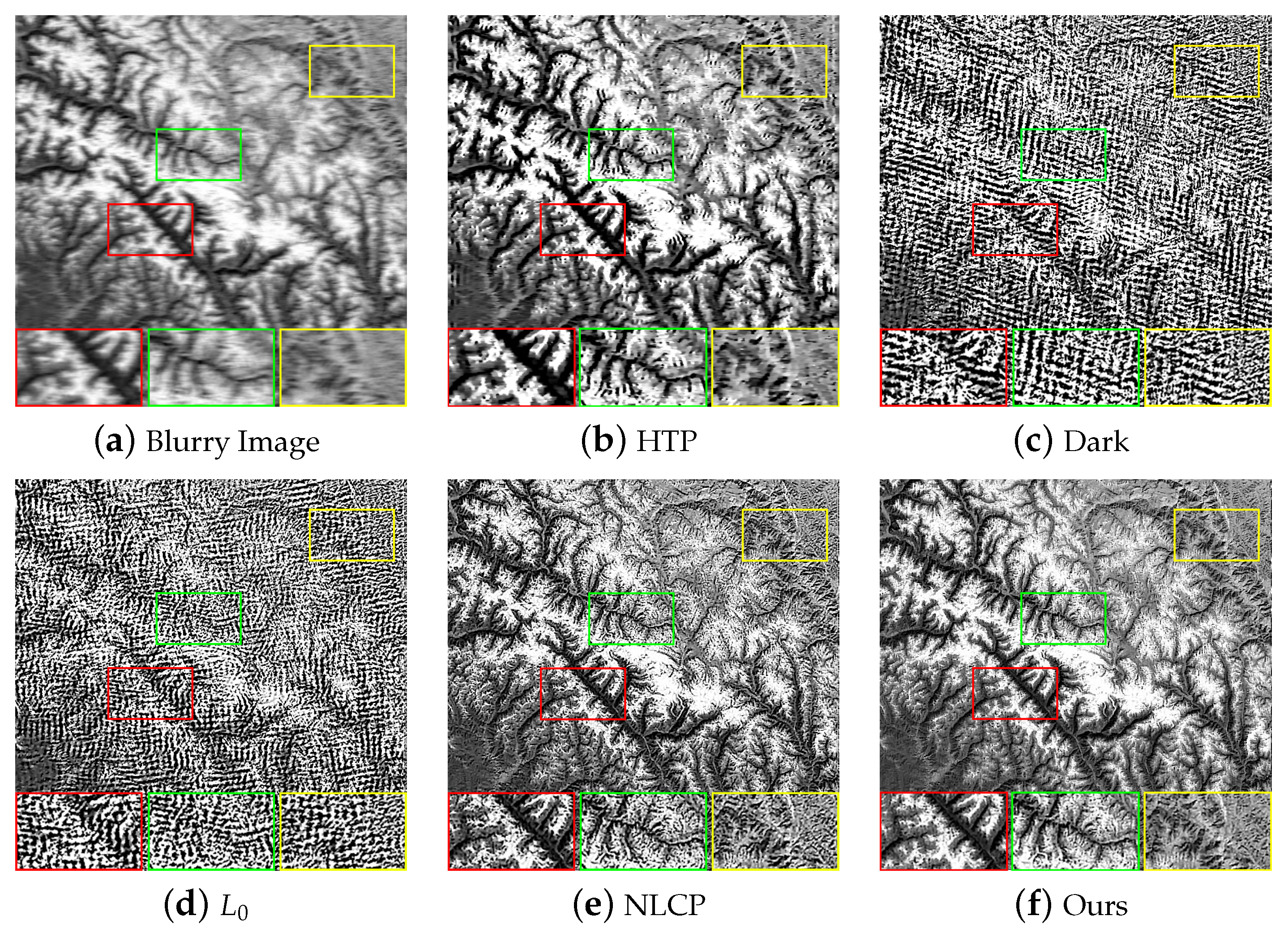

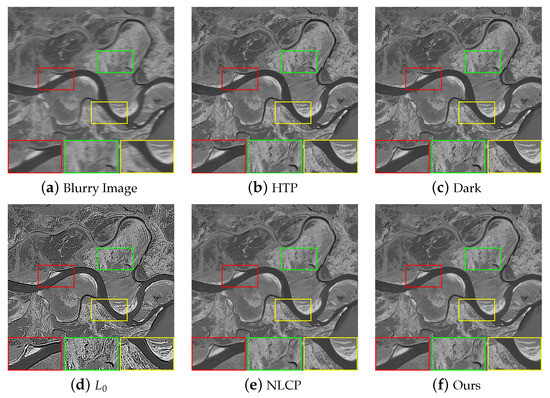

We added defocus blur with a radius of 2 to the remote sensing images. The results of each method are shown in Table 3. HTP cannot retain the detailed information of the image. Except for Figure 4b, the results of Dark show no obvious over-sharpening and achieve excellent evaluation scores, especially on Figure 4c, which contains little detail. NLCP is very stable and achieves good results in most cases. Overall, for remote sensing images with defocus blur, our method and NLCP achieve better visual quality than the other methods, while our method achieves higher objective metrics than NLCP. Figure 9 and Figure 10 show representative results.

Table 3.

Quantitative Comparisons on Remote Sensing Image with Defocus Blur.

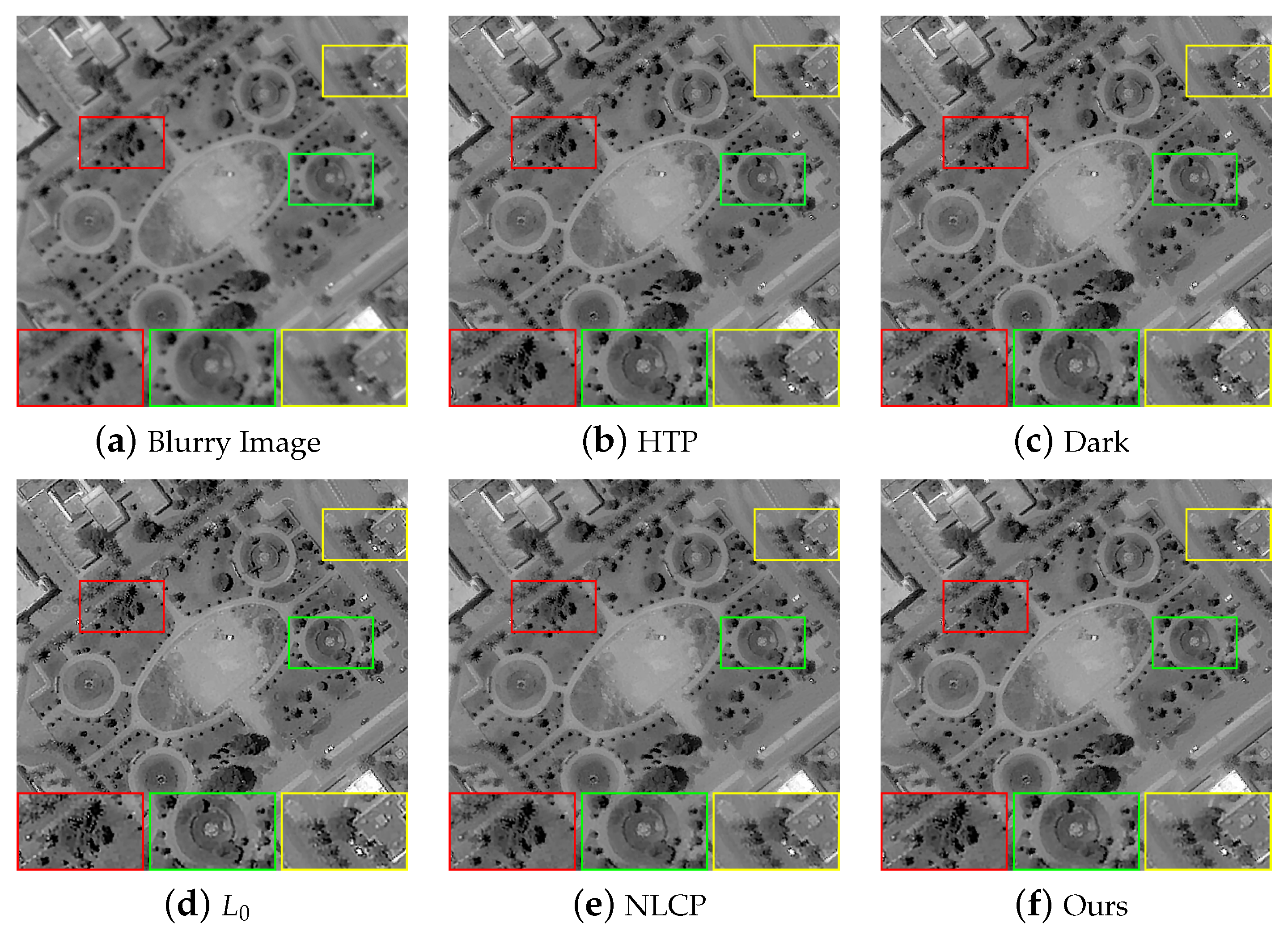

Figure 9.

Visual comparison of remote sensing image (Figure 4b) with defocus blur processed by different methods.

Figure 10.

Visual comparison of remote sensing image (Figure 4d) with defocus blur processed by different methods.

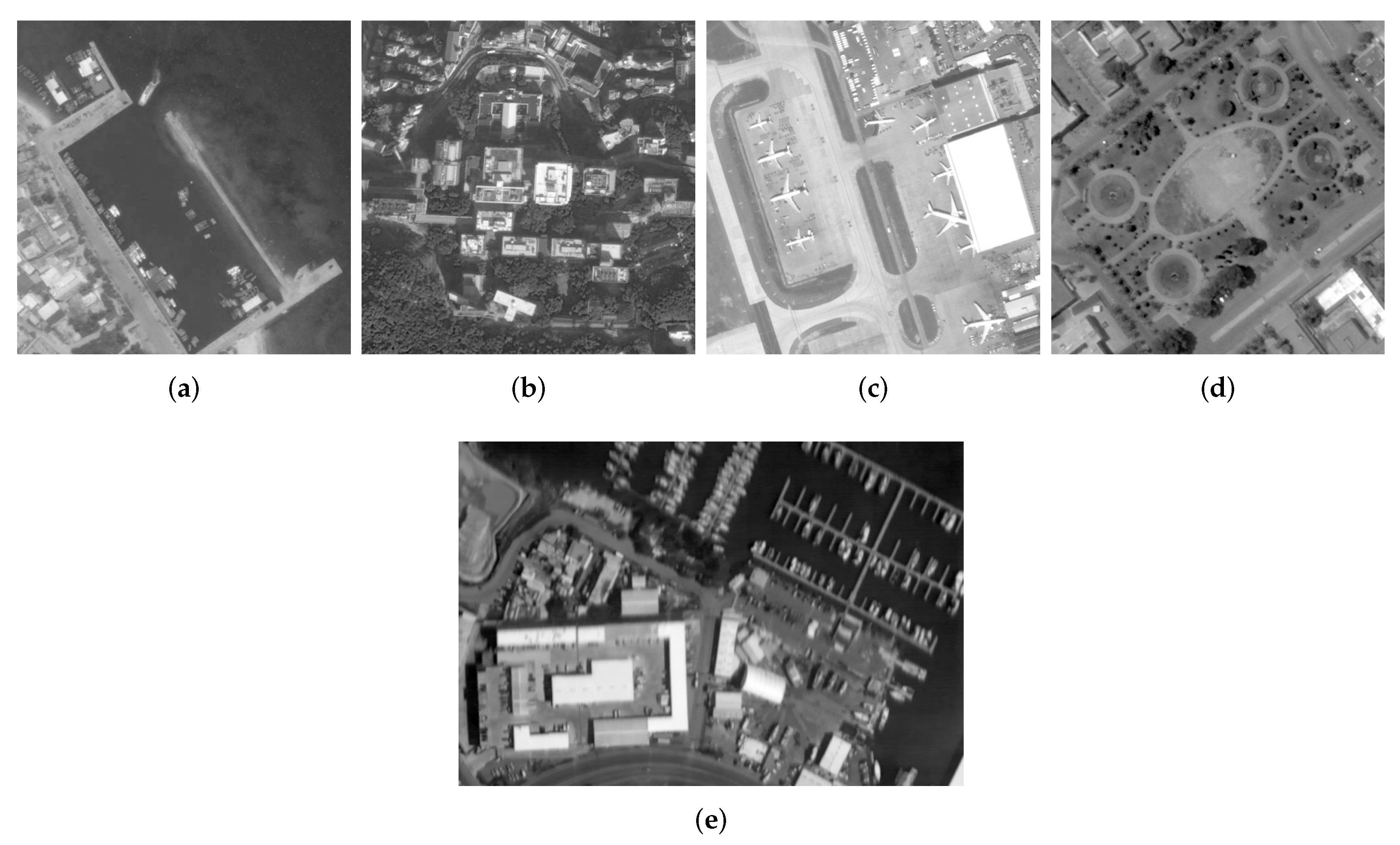

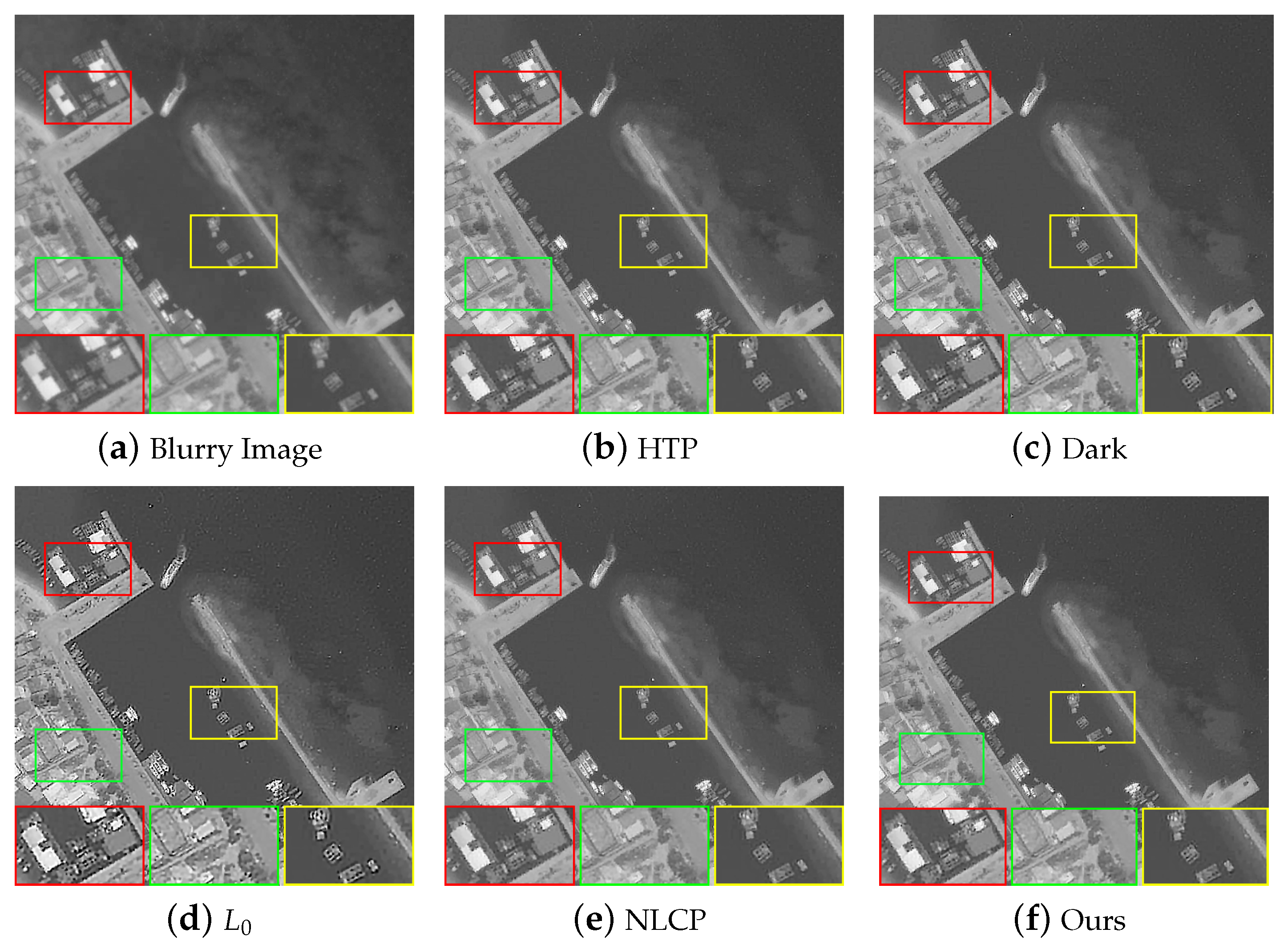

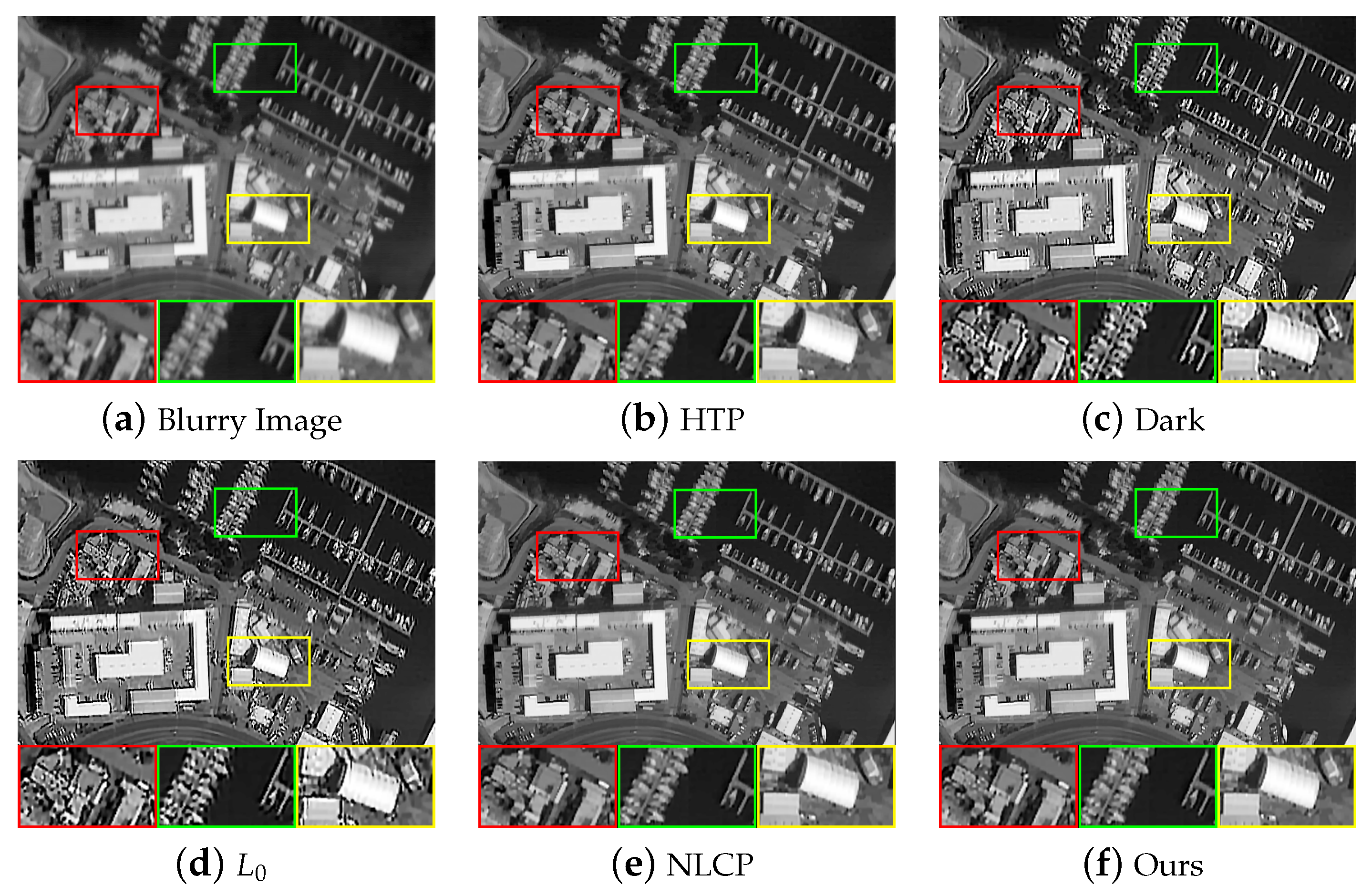

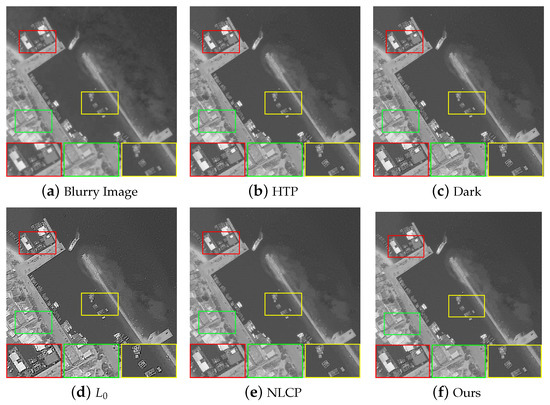

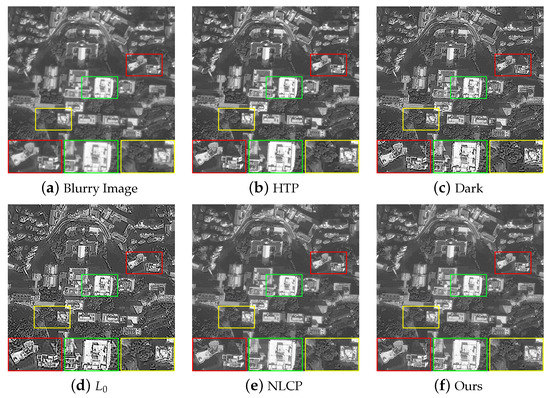

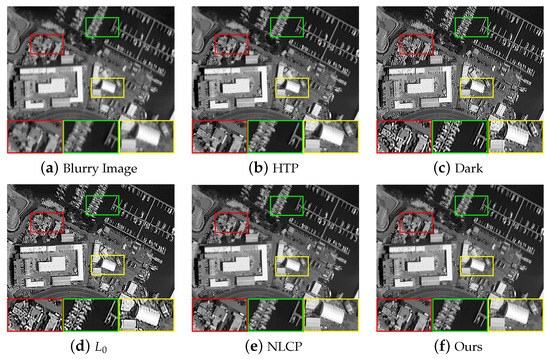

4.2. Real Remote Sensing Image Data

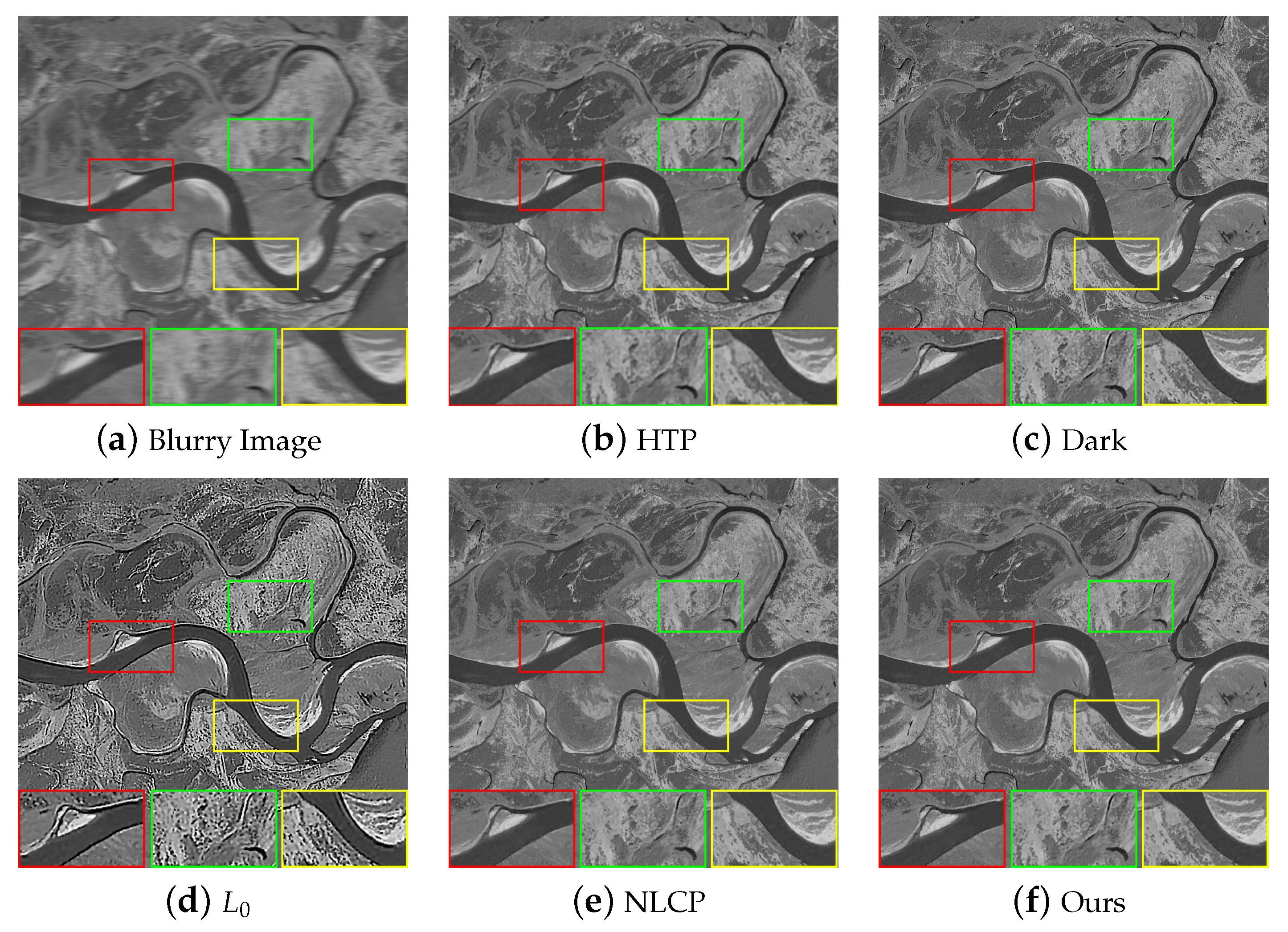

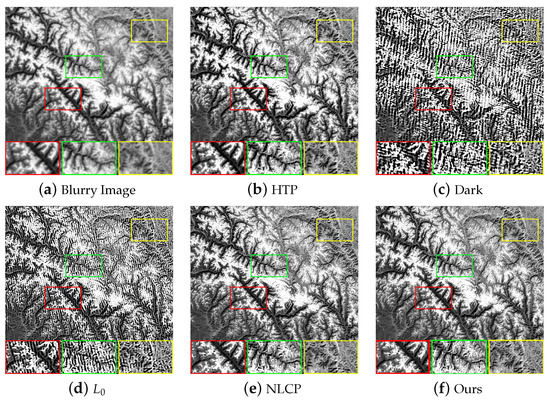

For the experiment on real remote sensing images, we selected four blurry images from the AID dataset (Figure 11a–d) and one target image captured in our experiment (Figure 11e), shown in Figure 11, to test the effectiveness of our algorithm. Because no reference images are available, we use no-reference sharpness metrics: image Entropy (E) [52], Average Gradient (AG) and Point sharpness (P) [53]. Table 4 and Table 5, together with Figure 12, Figure 13, Figure 14, Figure 15 and Figure 16, show that HTP restores the overall outline of the image very well but fails to retain detailed information. The results of Dark and L0 suffer from over-sharpening. On Figure 11a,d, which contain very little texture information, the over-sharpening is not obvious, and the resulting images have good visual quality and objective metrics. However, the problem is particularly serious for images with many details and scenes (Figure 11b,c,e). Our algorithm and NLCP retain more image details, and their results are visually almost identical. However, our algorithm achieves higher objective metrics.
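Two of the no-reference metrics have widely used textbook definitions. Both are sketched below for an 8-bit grayscale image. Entropy is $E = -\sum_i p_i \log_2 p_i$ over the gray-level histogram. Average gradient is $\mathrm{AG} = \frac{1}{(M-1)(N-1)}\sum\sqrt{(\Delta_x^2 + \Delta_y^2)/2}$, computed with forward differences. The exact variants used in [52,53] may differ. Point sharpness is omitted because its definition varies between sources.

```python
import numpy as np


def image_entropy(img):
    """Shannon entropy (bits) of the gray-level histogram; assumes values in 0..255."""
    hist = np.bincount(np.asarray(img, dtype=np.uint8).ravel(), minlength=256)
    p = hist[hist > 0] / hist.sum()
    return float(-np.sum(p * np.log2(p)))


def average_gradient(img):
    """Mean of sqrt((dx^2 + dy^2) / 2) over the (M-1) x (N-1) forward differences."""
    f = np.asarray(img, dtype=float)
    dx = f[:-1, 1:] - f[:-1, :-1]
    dy = f[1:, :-1] - f[:-1, :-1]
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))


two_level = np.array([[0, 255], [0, 255]], dtype=np.uint8)
e = image_entropy(two_level)      # two equally likely gray levels -> 1 bit
ag = average_gradient(two_level)  # one horizontal step of 255 -> 255 / sqrt(2)
```

Higher values of both metrics indicate more texture and sharper transitions. This is also why over-sharpened results can score well on them despite poor visual quality.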

Figure 11.

Selected remote sensing images (real).

Table 4.

Quantitative Comparisons on Real Remote Sensing Image.

Table 5.

Quantitative Comparisons on Experimental Target Image.

Figure 12.

Visual comparison of real remote sensing image (Figure 11a) processed by different methods.

Figure 13.

Visual comparison of real remote sensing image (Figure 11b) processed by different methods.

Figure 14.

Visual comparison of real remote sensing image (Figure 11c) processed by different methods.

Figure 15.

Visual comparison of real remote sensing image (Figure 11d) processed by different methods.

Figure 16.

Visual comparison of real remote sensing image (Figure 11e) processed by different methods.

5. Analysis and Discussion

In this section, we evaluate the effectiveness of the LBP prior, the influence of the hyper-parameters and the convergence of the algorithm, and then discuss the algorithm based on these results. All tests use the Levin dataset [13], which contains four images and eight different blur kernels. To ensure a fair comparison, all blind deblurring algorithms use the same non-blind restoration method to produce the final clear images. Error-Ratio [13], Peak Signal-to-Noise Ratio (PSNR), Structural Similarity (SSIM) [51] and Kernel Similarity [54] are used as quantitative evaluation metrics. All experiments are run on a computer with an Intel Core i5-1035G1 CPU and 8 GB of RAM.
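The full-reference metrics can be computed as follows. PSNR and RMSE use their standard definitions, with an assumed peak value of 255 for 8-bit images. The error ratio follows the definition usually attributed to Levin et al. [13]. It is the error of the image restored with the estimated kernel divided by the error of the image restored with the ground-truth kernel, where both restorations use the same non-blind method. SSIM is omitted because a faithful implementation needs the Gaussian-windowed statistics of [51].

```python
import numpy as np


def rmse(x, ref):
    diff = np.asarray(x, dtype=float) - np.asarray(ref, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def psnr(x, ref, peak=255.0):
    mse = rmse(x, ref) ** 2
    return float("inf") if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))


def error_ratio(restored_est_kernel, restored_true_kernel, ground_truth):
    """||x_est - x||^2 / ||x_true_kernel - x||^2; values close to 1 mean the
    estimated kernel is almost as good as the ground-truth kernel."""
    gt = np.asarray(ground_truth, dtype=float)
    num = np.sum((np.asarray(restored_est_kernel, dtype=float) - gt) ** 2)
    den = np.sum((np.asarray(restored_true_kernel, dtype=float) - gt) ** 2)
    return float(num / den)


ref = np.zeros((4, 4))
off_by_one = ref + 1.0
print(rmse(off_by_one, ref), psnr(off_by_one, ref))
```

The cumulative Error-Ratio curves in Figure 17 then report, for each threshold, the fraction of test images whose error ratio falls below it.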

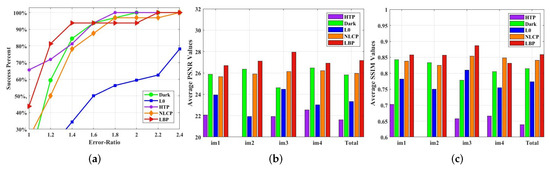

5.1. Effectiveness of the LBP Prior

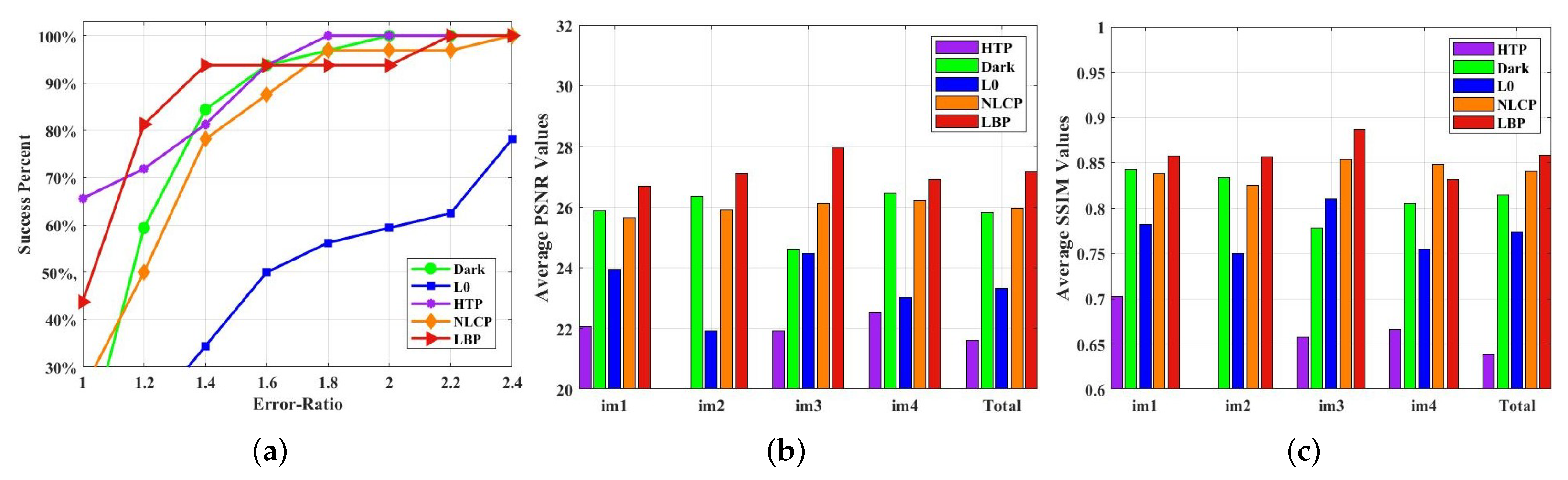

Although the deblurring algorithm based on the LBP prior should, in theory, recover a clear image effectively, this must be verified quantitatively in practice. As shown in Figure 17, the proposed algorithm is compared with HTP [29], Dark [14], L0 [24] and NLCP [16]. In terms of the cumulative error ratio, our algorithm is second only to MAP, and it outperforms the other methods in both average PSNR and average SSIM. Overall, the LBP prior effectively removes blur and improves image quality.

Figure 17.

Quantitative evaluations on the benchmark dataset [13]. (a) Comparisons in terms of cumulative Error-Ratio. (b) Comparisons in terms of average PSNR. (c) Comparisons in terms of average SSIM.

5.2. Effect of Hyper-Parameters

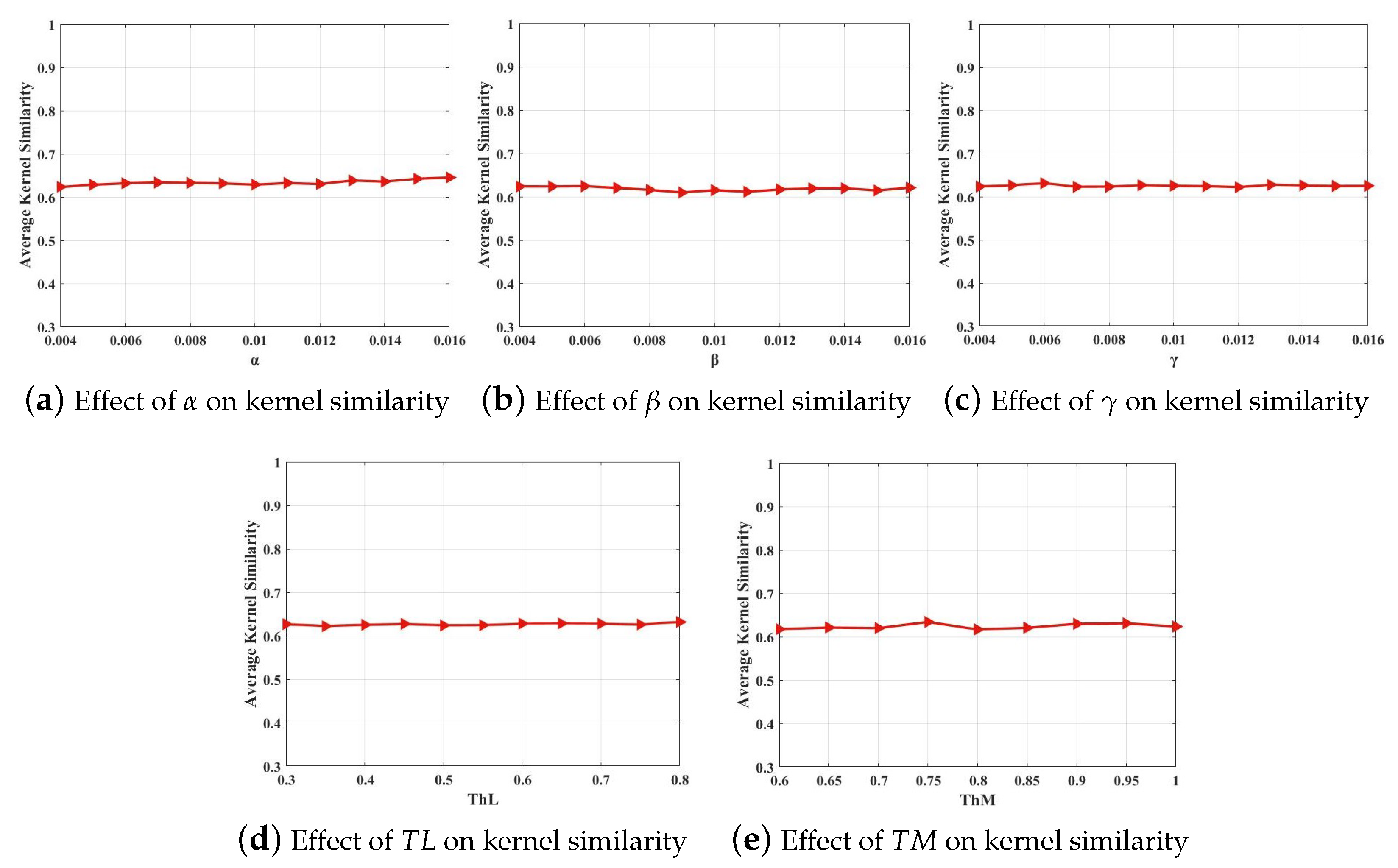

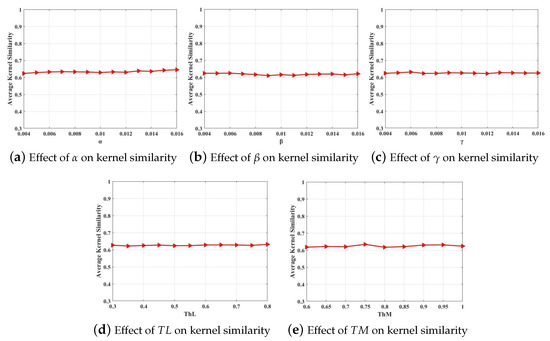

The objective function (8) contains five main hyper-parameters. To explore their influence on the proposed algorithm, we changed one parameter at a time while keeping the others fixed. We then calculated the kernel similarity between the estimated blur kernel and the ground-truth kernel. If the kernel similarity changes little as a hyper-parameter varies, the estimated blur kernel is stable, i.e., the algorithm is insensitive to that hyper-parameter. As shown in Figure 18, our method is insensitive to changes of the hyper-parameters over a wide range.
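Kernel similarity [54] is commonly computed as the maximum normalized cross-correlation between the estimated and ground-truth kernels over all relative shifts. This makes it insensitive to the translation ambiguity inherent in blind deblurring. The NumPy sketch below follows that definition; computing the correlation with zero-padded FFTs is our own implementation choice.

```python
import numpy as np


def kernel_similarity(k_est, k_gt):
    """Max normalized cross-correlation over all shifts (1.0 = identical up to translation)."""
    k_est = np.asarray(k_est, dtype=float)
    k_gt = np.asarray(k_gt, dtype=float)
    # Zero-pad so that the circular correlation computed by the FFT equals the
    # full linear cross-correlation (every relative shift appears exactly once).
    shape = (k_est.shape[0] + k_gt.shape[0] - 1, k_est.shape[1] + k_gt.shape[1] - 1)
    spec = np.conj(np.fft.fft2(k_gt, s=shape)) * np.fft.fft2(k_est, s=shape)
    corr = np.real(np.fft.ifft2(spec))
    return float(corr.max() / (np.linalg.norm(k_gt) * np.linalg.norm(k_est)))


horizontal = np.zeros((3, 3))
horizontal[1, :] = 1.0 / 3.0
vertical = horizontal.T.copy()
s_same = kernel_similarity(horizontal, horizontal)  # identical kernels -> 1.0
s_cross = kernel_similarity(vertical, horizontal)   # lines overlap in one pixel -> 1/3
```

By the Cauchy-Schwarz inequality, the score never exceeds 1, which makes curves such as those in Figures 18 and 19 directly comparable across kernels.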

Figure 18.

Sensitivity analysis of hyper-parameters in the proposed method.

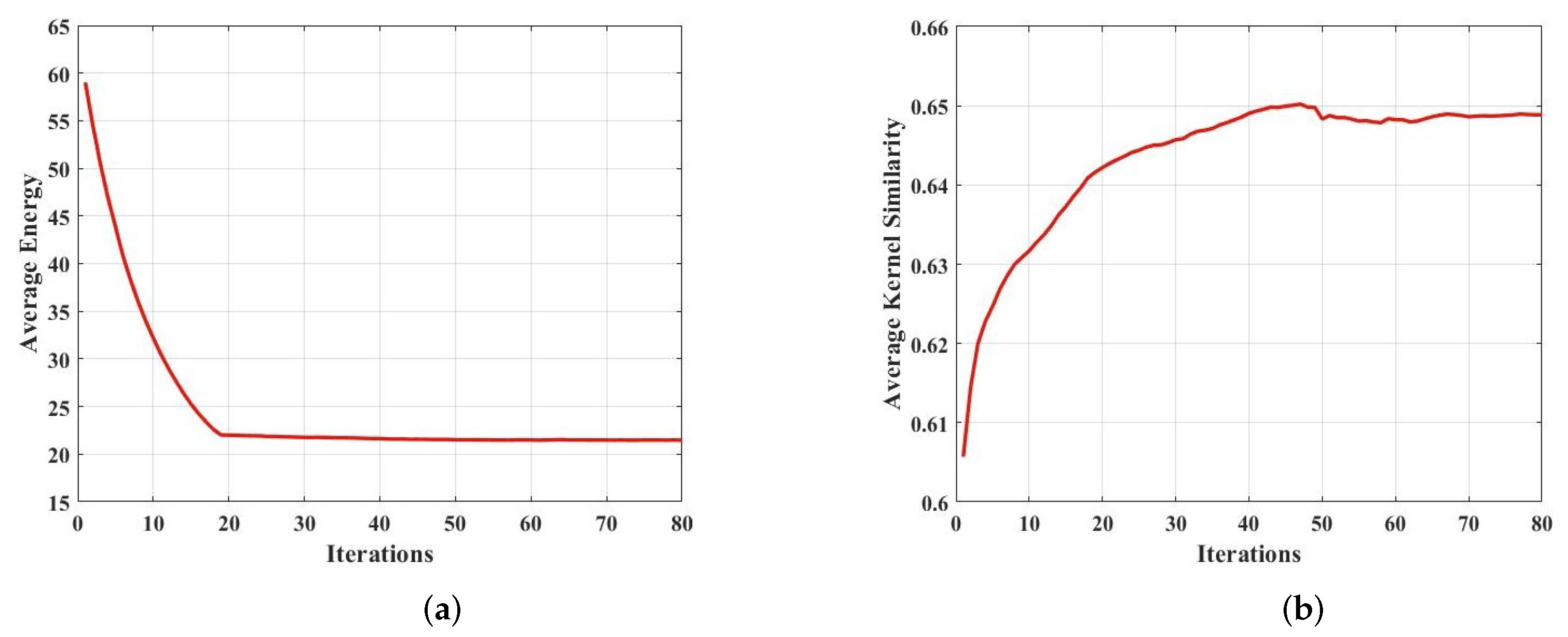

5.3. Convergence Analysis

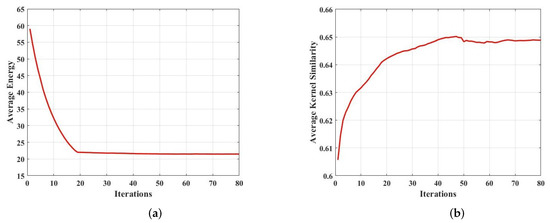

PAM is widely used as an effective method for minimizing objective functions of this kind. For PAM applied to total variation blind deconvolution, reference [6] explains the role of delayed scaling (normalization) in the blur kernel update step. Our algorithm also uses PAM and decomposes the objective function into several sub-problems with the half-quadratic splitting method. Each sub-problem is known to have a convergent solution [18,55], but theoretical results on the convergence of the overall scheme are limited. We therefore verify convergence empirically. At the finest scale on the Levin dataset, we compute the average value of the energy function (8) and the average kernel similarity as the iterations proceed. As shown in Figure 19, the objective converges after approximately 19 iterations and the kernel similarity stabilizes after 40 iterations, which empirically confirms the convergence of the method.

Figure 19.

Convergence analysis of the proposed method. (a) The average value of the objective function (8). (b) Kernel Similarity.

5.4. Run-Time Comparisons

To evaluate the running efficiency of each algorithm, we measured the average running time of the different restoration algorithms. The results are shown in Table 6. Taken together with the conclusions in Section 5.1, the data in Table 6 show that our method achieves highly competitive results in relatively little time.

Table 6.

Running Time Comparison (in seconds).

5.5. Limitations

Although our method performs well on remote sensing images, it has limitations. Because of the LBP prior, the hyper-parameters of our algorithm are not fixed, and suitable parameters must be selected for each image to achieve the best results. In addition, noise in the image affects the results. Significant noise, especially the stripe noise that often appears in remote sensing imaging, can cause our method to misjudge important pixels and amplify the noise. Furthermore, to obtain a clear image, our algorithm solves the objective function with PAM, which includes the half-quadratic splitting method and FISTA, and updates the variables iteratively. This iterative scheme increases the computational cost and makes the whole process more time-consuming. In future work, we will therefore explore the factors that affect the hyper-parameters to further improve the LBP prior. We will also use the LBP prior to remove noise and blur simultaneously, and reduce the computational cost to shorten processing time.

6. Conclusions

Unlike existing methods, which seek a prior that clearly distinguishes clear images from blurry ones, the prior we introduce selects critical pixels for restoration by focusing on the similarities between the two. Because remote sensing images contain small scenes with numerous details, the proposed algorithm uses LBP to filter pixels and processes each type of pixel differently, preventing over-sharpening while restoring the image. Based on the LBP prior, we established an optimization model and solved it with PAM, which involves the construction of the mapping matrix, the fast iterative shrinkage-thresholding algorithm (FISTA), and the half-quadratic splitting method. The experiments show that the proposed algorithm has good convergence and stability, and that our method outperforms the compared existing algorithms in deblurring remote sensing images. Moreover, we believe that the proposed algorithm offers a new idea for the further development of remote sensing image processing.
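The LBP descriptor on which the prior builds is the operator of Ojala et al. [17]. The minimal sketch below computes the basic 8-neighbour, 3 × 3 LBP code of every interior pixel of a grey-scale image. It does not reproduce the paper's mapping matrix or its rule for selecting important pixels; the function name `lbp_3x3` is hypothetical, and the neighbour ordering (clockwise from the top-left) is an assumption, since any fixed ordering yields a valid LBP.

```python
import numpy as np


def lbp_3x3(img):
    """Basic 8-neighbour LBP codes for the interior pixels of a 2-D image."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    centre = img[1:-1, 1:-1]
    # Neighbour offsets (dy, dx), clockwise from the top-left (assumed order).
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(centre.shape, dtype=np.uint8)
    for bit, (dy, dx) in enumerate(offsets):
        # Shifted view holding each interior pixel's neighbour at (dy, dx).
        nb = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit wherever the neighbour is >= the centre value.
        codes |= (nb >= centre).astype(np.uint8) << bit
    return codes
```

Because each code depends only on the sign of local intensity differences, it is unchanged by any monotonic change of grey levels, which is one reason LBP is attractive as a texture descriptor.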

Author Contributions

Conceptualization, Z.Z. and L.Z.; methodology, Z.Z. and L.Z.; funding acquisition, L.Z., Y.P., S.T.; resources, L.Z., Y.P. and S.T.; writing—original draft preparation, Z.Z. and L.Z.; writing—review and editing, Z.Z., L.Z., Y.P., S.T., W.X., T.G. and X.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under Grant 62075219 and the Key Technological Research Projects of Jilin Province, China, under Grant 20190303094SF.

Institutional Review Board Statement

Not Applicable.

Informed Consent Statement

Not Applicable.

Data Availability Statement

The AID data used in this paper are available at the following link: http://www.captain-whu.com/project/AID/, accessed on 26 November 2021.

Acknowledgments

The authors are very grateful to the editors and anonymous reviewers for their constructive suggestions. The authors also thank Ge Xianyu for their valuable help.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| LBP | Local Binary Pattern |
| PAM | Projected Alternating Minimization |
| FISTA | Fast Iterative Shrinkage-Thresholding Algorithm |
| MAP | Maximum A Posteriori |
| VB | Variational Bayes |
| NLC | Non-Linear Channel |
| LMG | Local Maximum Gradient |
| CNN | Convolutional Neural Network |
| FPN | Feature Pyramid Network |
| PSNR | Peak Signal-to-Noise Ratio |
| SSIM | Structural Similarity |
| RMSE | Root Mean Squared Error |
| HTP | Heavy-Tailed Prior |
| NLCP | Non-Linear Channel Prior |
| E | Entropy |
| AG | Average Gradient |
| P | Point Sharpness |

References

- Rugna, J.D.; Konik, H. Automatic blur detection for metadata extraction in content-based retrieval context. In Proceedings of the Internet Imaging V SPIE, San Jose, CA, USA, 18–22 January 2004; Volume 5304, pp. 285–294.

- Liu, R.T.; Li, Z.R.; Jia, J.Y. Image partial blur detection and classification. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Rudin, L.I.; Osher, S.; Fatemi, E. Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 1992, 60, 259–268.

- Chan, T.F.; Wong, C.-K. Total variation blind deconvolution. IEEE Trans. Image Process. 1998, 7, 370–375.

- Liao, H.Y.; Ng, M.K. Blind deconvolution using generalized cross-validation approach to regularization parameter estimation. IEEE Trans. Image Process. 2011, 20, 670–680.

- Perrone, D.; Favaro, P. Total variation blind deconvolution: The Devil Is in the Details. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2909–2916.

- Rameshan, R.M.; Chaudhuri, S.; Velmurugan, R. Joint MAP estimation for blind deconvolution: When does it work? In Proceedings of the Eighth Indian Conference on Computer Vision, Graphics and Image Processing, Mumbai, India, 16–19 December 2012; pp. 1–7.

- Likas, A.C.; Galatsanos, N.P. A variational approach for Bayesian blind image deconvolution. IEEE Trans. Signal Process. 2004, 52, 2222–2233.

- Fergus, R.; Singh, B.; Hertzmann, A.; Roweis, S.T.; Freeman, W.T. Removing camera shake from a single photograph. In Proceedings of the SIGGRAPH '06: Special Interest Group on Computer Graphics and Interactive Techniques Conference, Boston, MA, USA, 30 July–3 August 2006; pp. 787–794.

- Levin, A.; Weiss, Y.; Durand, F.; Freeman, W.T. Efficient marginal likelihood optimization in blind deconvolution. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 2657–2664.

- Wipf, D.; Zhang, H.C. Analysis of Bayesian blind deconvolution. In Proceedings of the Energy Minimization Methods in Computer Vision and Pattern Recognition, Lund, Sweden, 19–21 August 2013; pp. 40–53.

- Perrone, D.; Diethelm, R.; Favaro, P. Blind deconvolution via lower bounded logarithmic image priors. In Proceedings of the 10th International Conference EMMCVPR 2015, Hong Kong, China, 13–16 January 2015; pp. 112–125.

- Levin, A.; Weiss, Y.; Durand, F.; Freeman, W.T. Understanding and evaluating blind deconvolution algorithms. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1964–1971.

- Pan, J.S.; Sun, D.Q.; Pfister, H.; Yang, M.-H. Blind image deblurring using dark channel prior. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1628–1636.

- Yan, Y.Y.; Ren, W.Q.; Guo, Y.F.; Wang, R.; Cao, X.C. Image deblurring via extreme channels prior. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6978–6986.

- Ge, X.Y.; Tan, J.Q.; Zhang, L. Blind Image Deblurring Using a Non-Linear Channel Prior Based on Dark and Bright Channels. IEEE Trans. Image Process. 2021, 30, 6970–6984.

- Ojala, T.; Pietikäinen, M.; Harwood, D. A comparative study of texture measures with classification based on featured distributions. Pattern Recognit. 1996, 29, 51–59.

- Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202.

- Joshi, N.; Szeliski, R.; Kriegman, D.J. PSF estimation using sharp edge prediction. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Cho, S.; Lee, S. Fast motion deblurring. ACM Trans. Graph. 2009, 28, 1–8.

- Xu, L.; Jia, J.Y. Two-phase kernel estimation for robust motion deblurring. In Proceedings of the 11th European Conference on Computer Vision: Part I, Heraklion, Crete, Greece, 5–11 September 2010; pp. 157–170.

- Sun, L.B.; Cho, S.; Wang, J.; Hays, J. Edge-based blur kernel estimation using patch priors. In Proceedings of the IEEE International Conference on Computational Photography (ICCP), Cambridge, MA, USA, 19–21 April 2013; pp. 1–8.

- Ren, W.Q.; Cao, X.C.; Pan, J.S.; Guo, X.J.; Zuo, W.M.; Yang, M.-H. Image deblurring via enhanced low-rank prior. IEEE Trans. Image Process. 2016, 25, 3426–3437.

- Pan, J.S.; Hu, Z.; Su, Z.X.; Yang, M.-H. L0-Regularized Intensity and Gradient Prior for Deblurring Text Images and Beyond. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 342–355.

- Chen, L.; Fang, F.M.; Wang, T.T.; Zhang, G.X. Blind image deblurring with local maximum gradient prior. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1742–1750.

- Yuzuriha, R.; Kurihara, R.; Matsuoka, R.; Okuda, M. TNNG: Total Nuclear Norms of Gradients for Hyperspectral Image Prior. Remote Sens. 2021, 13, 819.

- Shan, Q.; Jia, J.; Agarwala, A. High-quality motion deblurring from a single image. ACM Trans. Graph. 2008, 27, 1–10.

- Krishnan, D.; Tay, T.; Fergus, R. Blind deconvolution using a normalized sparsity measure. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 233–240.

- Kotera, J.; Šroubek, F.; Milanfar, P. Blind Deconvolution Using Alternating Maximum a Posteriori Estimation with Heavy-Tailed Priors. In Proceedings of the 15th International Conference on Computer Analysis of Images and Patterns (CAIP), York, UK, 27–29 August 2013; pp. 59–66.

- Michaeli, T.; Irani, M. Blind deblurring using internal patch recurrence. In Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 783–798.

- Zhong, Q.X.; Wu, C.S.; Shu, Q.L.; Liu, W. Spatially adaptive total generalized variation-regularized image deblurring with impulse noise. J. Electron. Imaging 2018, 27, 1–21.

- Yang, D.Y.; Wu, X.J. Dual-Channel Contrast Prior for Blind Image Deblurring. IEEE Access 2020, 8, 227879–227893.

- Liu, J.; Tan, J.Q.; He, L.; Ge, X.Y.; Hu, D.D. Blind Image Deblurring via Local Maximum Difference Prior. IEEE Access 2020, 8, 219295–219307.

- Zhou, L.Y.; Liu, Z.Y. Blind Deblurring Based on a Single Luminance Channel and L1-Norm. IEEE Access 2021, 9, 126717–126727.

- Chen, L.; Zhang, J.W.; Lin, S.G.; Fang, F.M.; Ren, J.S. Blind Deblurring for Saturated Images. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 6304–6312.

- He, W.; Zhang, H.; Zhang, L.; Shen, H. Total-variation-regularized low-rank matrix factorization for hyperspectral image restoration. IEEE Trans. Geosci. Remote Sens. 2016, 54, 178–188.

- Zhang, H.; He, W.; Zhang, L.; Shen, H.; Yuan, Q. Hyperspectral image restoration using low-rank matrix recovery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4729–4743.

- Sun, J.; Cao, W.F.; Xu, Z.B.; Ponce, J. Learning a convolutional neural network for non-uniform motion blur removal. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 769–777.

- Schuler, C.J.; Hirsch, M.; Harmeling, S.; Schölkopf, B. Learning to deblur. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1439–1451.

- Li, L.; Pan, J.S.; Lai, W.-S.; Gao, C.X.; Sang, N.; Yang, M.-H. Blind image deblurring via deep discriminative priors. Int. J. Comput. Vis. 2019, 127, 1025–1043.

- Nah, S.; Kim, T.H.; Lee, K.M. Deep multi-scale convolutional neural network for dynamic scene deblurring. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 257–265.

- Cai, J.R.; Zuo, W.M.; Zhang, L. Dark and bright channel prior embedded network for dynamic scene deblurring. IEEE Trans. Image Process. 2020, 29, 6885–6897.

- Zhang, H.G.; Dai, Y.C.; Li, H.D.; Koniusz, P. Deep stacked hierarchical multi-patch network for image deblurring. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5971–5979.

- Suin, M.; Purohit, K.; Rajagopalan, A.N. Spatially-attentive patch-hierarchical network for adaptive motion deblurring. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 3603–3612.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.M.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Li, C.; Gao, W.Z.; Liu, F.H.; Chang, E.B.; Xuan, S.X.; Xu, D.Y. GAMSNet: Deblurring via Generative Adversarial and Multi-Scale Networks. In Proceedings of the 2020 Chinese Automation Congress (CAC), Shanghai, China, 6–8 November 2020; pp. 3631–3636.

- Tian, H.; Sun, L.J.; Dong, X.L.; Lu, B.L.; Qin, H.; Zhang, L.P.; Li, W.J. A Modeling Method for Face Image Deblurring. In Proceedings of the 2021 International Conference on High Performance Big Data and Intelligent Systems (HPBD&IS), Macau, China, 5–7 December 2021; pp. 37–41.

- Maharjan, P.; Xu, N.; Xu, X.; Song, Y.Y.; Li, Z. DCTResNet: Transform Domain Image Deblocking for Motion Blur Images. In Proceedings of the 2021 International Conference on Visual Communications and Image Processing (VCIP), Munich, Germany, 5–8 December 2021; pp. 1–5.

- Chang, M.; Feng, H.J.; Xu, Z.H.; Li, Q. Low-Light Image Restoration with Short- and Long-Exposure Raw Pairs. IEEE Trans. Multimed. 2022, 24, 702–714.

- Lai, W.S.; Huang, J.B.; Hu, Z.; Ahuja, N.; Yang, M.H. A Comparative Study for Single Image Blind Deblurring. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1701–1709.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Shannon, C.E. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948, 27, 623–656.

- Wang, H.N.; Zhong, W.; Wang, J.; Xia, D.S. Research of measurement for digital image definition. J. Image Graph. 2004, 9, 828–831.

- Hu, Z.; Yang, M.-H. Good regions to deblur. In Proceedings of the 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 59–72.

- Xu, L.; Lu, C.W.; Xu, Y.; Jia, J.Y. Image smoothing via L0 gradient minimization. ACM Trans. Graph. 2011, 30, 1–12.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).