A Review of Synthetic-Aperture Radar Image Formation Algorithms and Implementations: A Computational Perspective

Abstract

1. Introduction

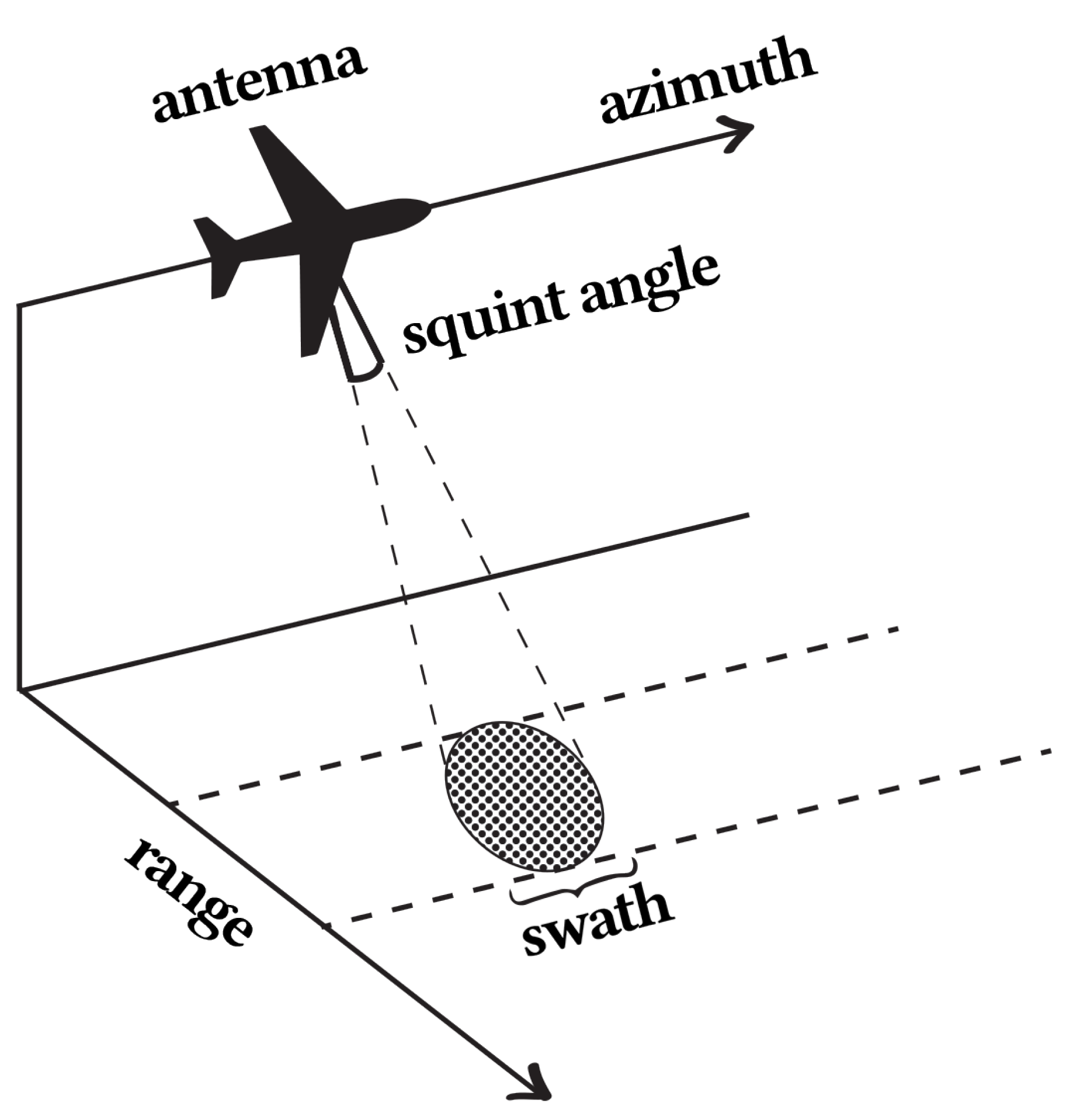

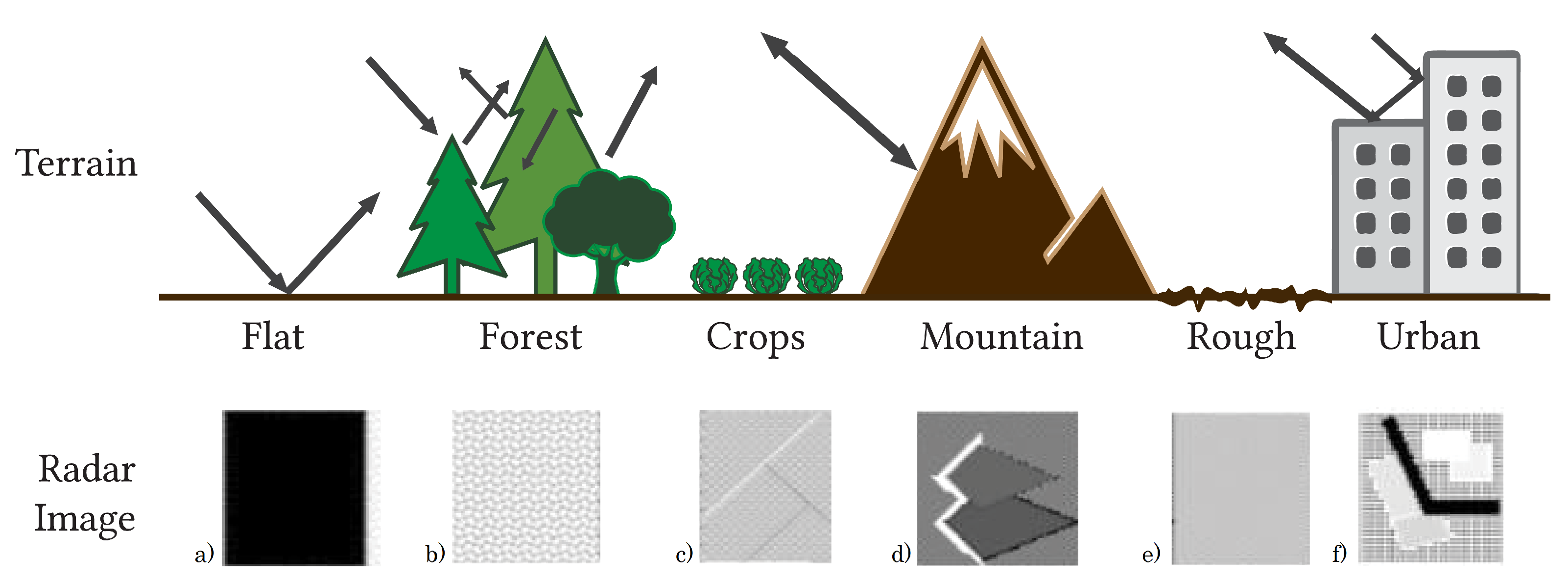

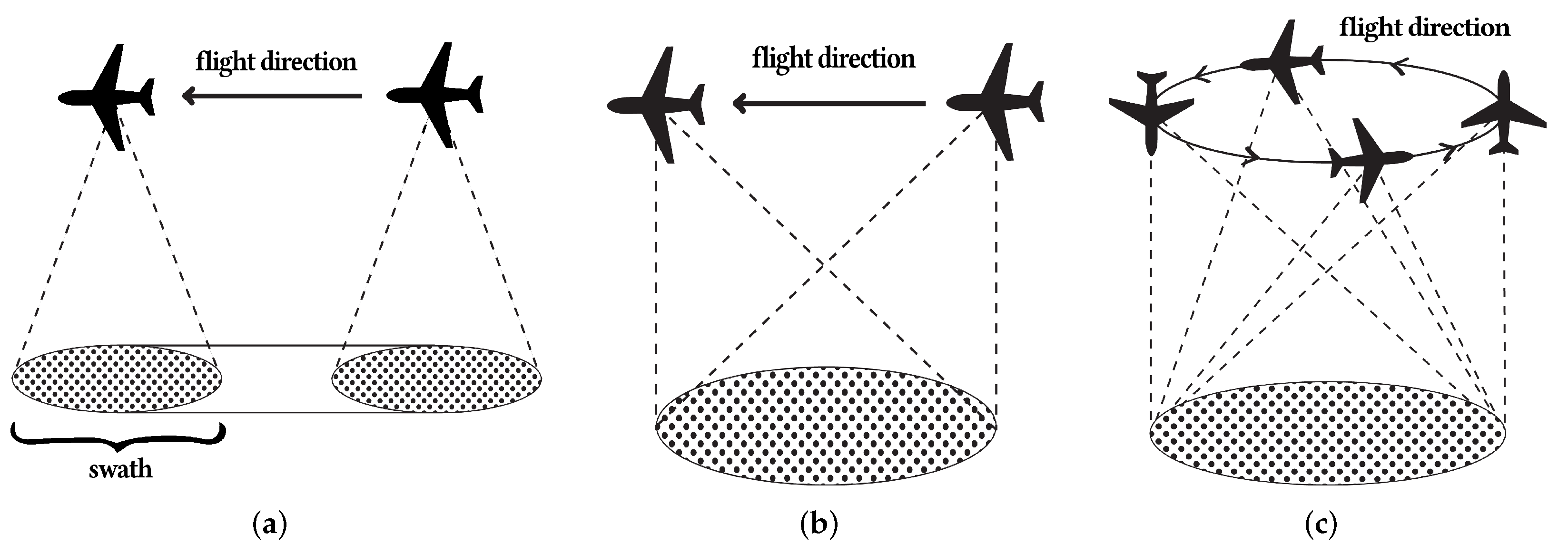

2. Synthetic-Aperture Radar

3. Synthetic-Aperture Radar Image Formation Algorithms

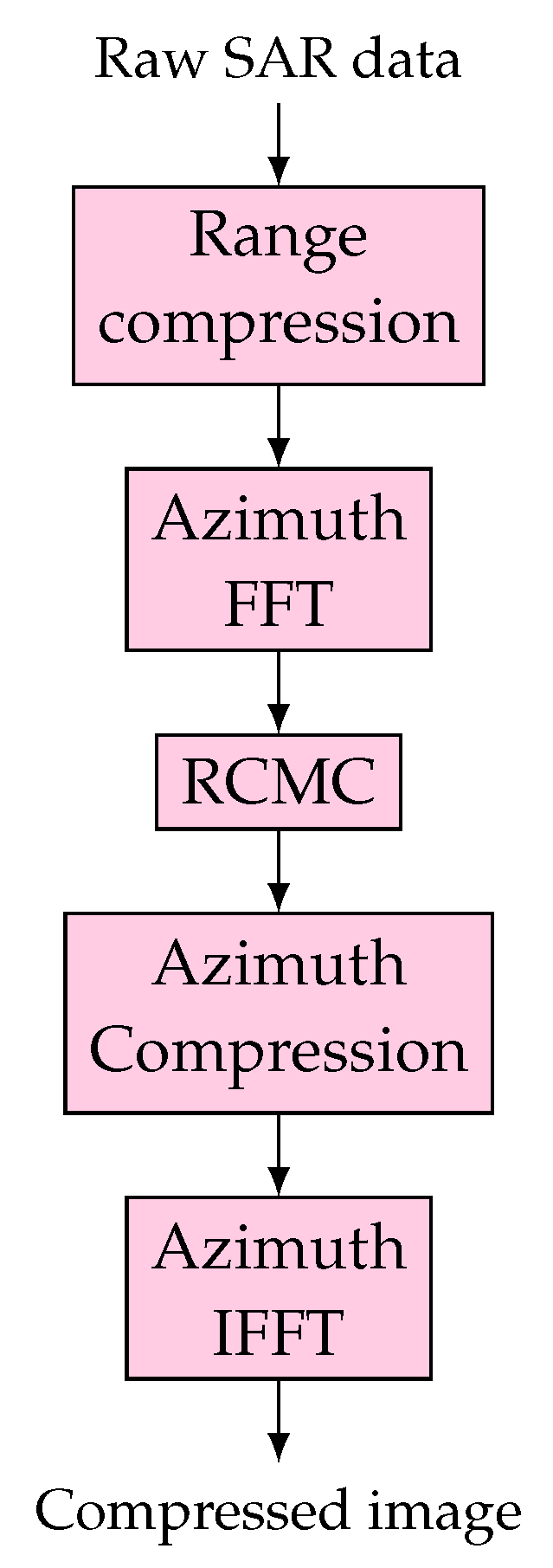

3.1. Range–Doppler Algorithm

1. Range compression is performed along the range direction with a fast convolution: a range FFT, a matched-filter multiplication and, lastly, a range inverse fast Fourier transform (IFFT). Starting from the received demodulated signal given by Equation (4), the output of this step of the Range–Doppler algorithm is the range IFFT of the product between the range FFT of the received signal and the frequency-domain matched filter. In this output, the compressed pulse envelope is the IFFT of the rectangular window function.

2. The data are transformed into the Range–Doppler domain with an azimuth FFT. Given that the first exponential in Equation (5) is constant for each target, and that azimuth frequency and azimuth time are related through the azimuth FM rate of the point target signal, the azimuth FFT yields a signal whose azimuth envelope is the envelope of the Doppler spectrum of the antenna beam pattern.

3. The platform movement causes range variations in the data, a phenomenon called range migration. A correction therefore rearranges the data in memory and straightens the target trajectory, so that azimuth compression can be performed along each parallel azimuth line. This step is called range cell migration correction (RCMC), and the amount of migration to correct is expressed in terms of the wavelength of the carrier frequency.

4. Azimuth compression is performed to compress the energy in the trajectory to a single cell in the azimuth direction. A frequency-domain matched filter is applied to the data after RCMC and, lastly, an IFFT is performed.

5. Lastly, an azimuth IFFT transforms the data into the time domain, resulting in a compressed complex image whose azimuth envelope is the amplitude of the azimuth impulse response.
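The steps above can be sketched numerically for a single simulated point target. The following is a minimal illustration, not the implementation of any reviewed work: all radar parameters are hypothetical, the signal model and the azimuth matched filter follow the notation commonly used in [7], and RCMC (step 3) is skipped because the parameters are chosen so that the migration (about 0.14 m) stays well below one range cell (2.5 m).

```python
import numpy as np

# Hypothetical parameters (assumptions for illustration only).
c = 3e8          # speed of light [m/s]
f0 = 5.3e9       # carrier frequency [Hz]
Kr = 20e12       # range chirp rate [Hz/s]
Tr = 2.5e-6      # pulse duration [s]
Fs = 60e6        # range sampling rate [Hz]
PRF = 100.0      # azimuth sampling rate [Hz]
Vr = 150.0       # effective radar velocity [m/s]
R0 = 20e3        # slant range of closest approach [m]
Ta = 1.0         # time during which the target is illuminated [s]
Nr, Na = 512, 256

lam = c / f0
Ka = 2 * Vr**2 / (lam * R0)              # azimuth FM rate [Hz/s]
t = (np.arange(Nr) - Nr // 2) / Fs       # range time relative to 2*R0/c
eta = (np.arange(Na) - Na // 2) / PRF    # azimuth time, closest approach at 0


def simulate_point_target():
    """Demodulated raw echo of one point target (rectangular envelopes)."""
    R = np.sqrt(R0**2 + (Vr * eta) ** 2)[:, None]   # instantaneous slant range
    dt = t[None, :] - 2 * (R - R0) / c              # delay relative to the echo
    w_r = np.abs(dt) <= Tr / 2                      # range envelope
    w_a = (np.abs(eta) <= Ta / 2)[:, None]          # azimuth envelope
    return w_r * w_a * np.exp(-4j * np.pi * f0 * R / c) * np.exp(1j * np.pi * Kr * dt**2)


def range_doppler(raw):
    # Step 1: range compression as a fast convolution (FFT, multiply, IFFT).
    replica = np.where(np.abs(t) <= Tr / 2, np.exp(1j * np.pi * Kr * t**2), 0)
    H = np.conj(np.fft.fft(np.fft.ifftshift(replica)))
    s_rc = np.fft.ifft(np.fft.fft(raw, axis=1) * H, axis=1)
    # Step 2: azimuth FFT into the Range-Doppler domain.
    S1 = np.fft.fft(s_rc, axis=0)
    # Step 3 (RCMC) is omitted: migration is below one range cell here.
    # Step 4: azimuth matched filter, conjugate of the quadratic Doppler phase.
    f_eta = np.fft.fftfreq(Na, d=1 / PRF)
    H_az = np.exp(-1j * np.pi * f_eta**2 / Ka)
    S3 = S1 * H_az[:, None]
    # Step 5: azimuth IFFT back to the time domain.
    return np.fft.ifft(S3, axis=0)


image = range_doppler(simulate_point_target())
peak = np.unravel_index(np.argmax(np.abs(image)), image.shape)
print("peak (azimuth, range) index:", peak)
```

With these values the target is placed at the centre of both axes, so the compressed peak is expected at the central azimuth and range samples. A scenario with larger migration would need an interpolation-based RCMC between steps 2 and 4.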

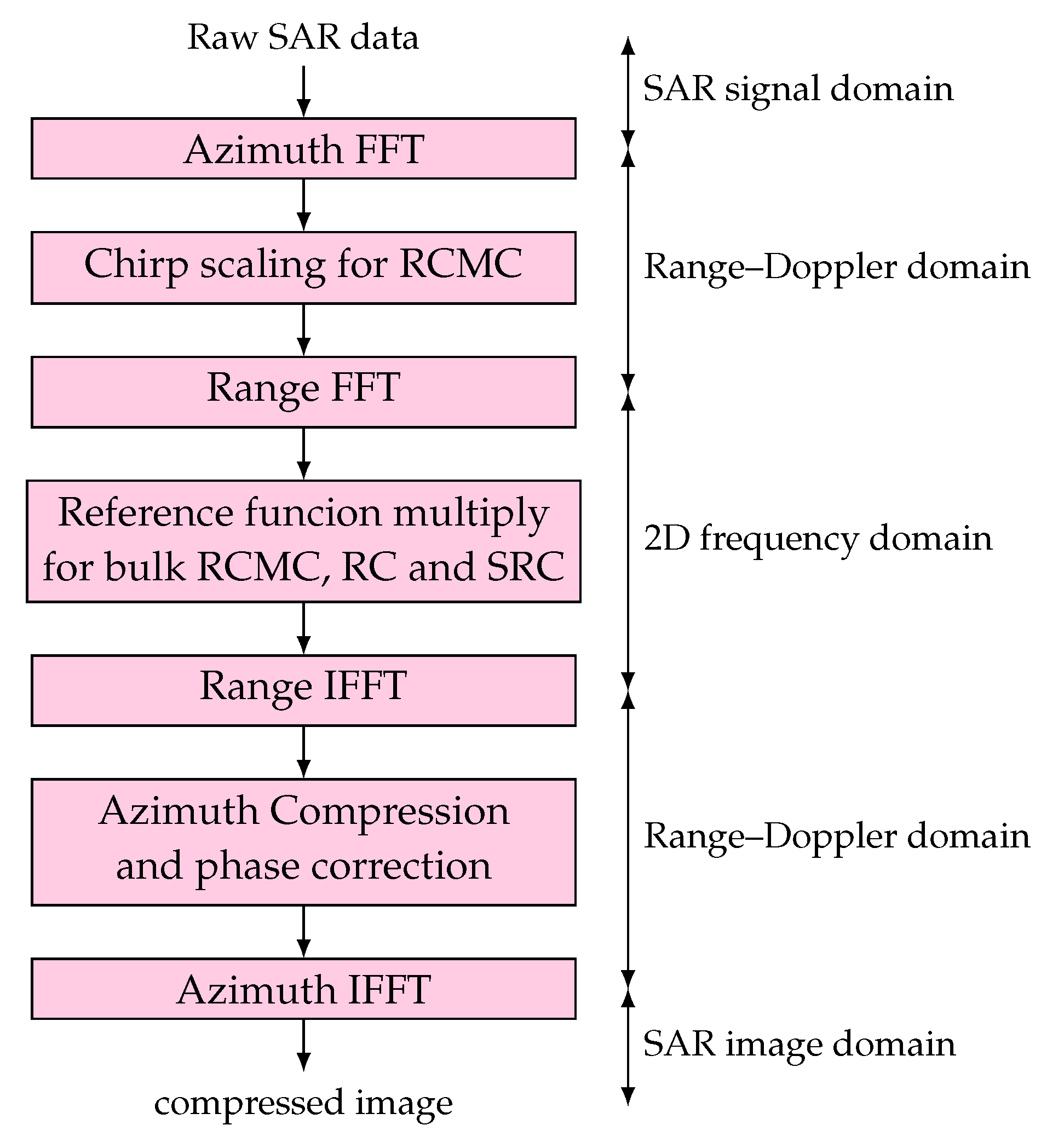

3.2. Chirp Scaling Algorithm

1. The data are transformed into the Range–Doppler domain using an azimuth FFT.

2. Chirp scaling is applied, employing a phase multiply, in order to adjust the range migration of the trajectories. Assuming a linear frequency-modulated (FM) pulse, a range-invariant radar velocity and a range-invariant modified pulse FM rate, the scaling function in the Range–Doppler domain [7] depends on the range FM rate of the point target signal in the Range–Doppler domain, the azimuth frequency, the reference azimuth frequency, the effective radar velocity at the reference range, and the migration factor in the Range–Doppler domain. Multiplying the data by this function results in the scaled signal in the Range–Doppler domain, whose amplitude includes a complex constant A.

3. The data are transformed into the two-dimensional frequency domain with a range FFT.

4. Range compression, secondary range compression (SRC), and bulk RCMC are applied using a phase multiply with a reference function. This step compensates the second and fourth exponentials from Equation (15).

5. The data are converted back to the Range–Doppler domain using a range IFFT.

6. This step consists of an azimuth compression with a range-varying matched filter, followed by a phase correction and an azimuth IFFT. The matched filter is the complex conjugate of the first exponential of Equation (17). For linear FM signals, the phase correction is given by the complex conjugate of the second exponential of Equation (17). After azimuth matched filtering, phase correction and the azimuth IFFT, the compressed signal at the point target is expressed in terms of the IFFT of the window and the target phase.
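As an illustration of the phase multiply in step 2, the sketch below evaluates a chirp scaling function on a grid of range times and azimuth frequencies. The expressions for the migration factor and for the scaling phase are written as they are commonly given in [7] and should be checked against that reference. The function names, the scalar treatment of the range FM rate `k_m`, and all numeric values are assumptions made for illustration.

```python
import numpy as np

C = 299_792_458.0  # speed of light [m/s]


def migration_factor(f_eta, v_r, f0):
    """Migration factor D(f_eta, V_r) in the Range-Doppler domain (form assumed from [7])."""
    return np.sqrt(1.0 - (C * f_eta) ** 2 / (4.0 * v_r**2 * f0**2))


def chirp_scaling_function(tau, f_eta, f_eta_ref, v_r_ref, f0, k_m, r_ref):
    """Phase-only scaling function, one row per azimuth frequency.

    tau       : range time samples [s]
    f_eta     : azimuth frequency samples [Hz]
    f_eta_ref : reference azimuth frequency [Hz]
    v_r_ref   : effective radar velocity at the reference range [m/s]
    k_m       : range FM rate in the Range-Doppler domain [Hz/s] (scalar here for brevity)
    r_ref     : reference range [m]
    """
    d = migration_factor(f_eta, v_r_ref, f0)[:, None]
    d_ref = migration_factor(f_eta_ref, v_r_ref, f0)
    # Range time measured from the reference trajectory at each azimuth frequency.
    tau_prime = tau[None, :] - 2.0 * r_ref / (C * d)
    return np.exp(1j * np.pi * k_m * (d_ref / d - 1.0) * tau_prime**2)


# Hypothetical spaceborne-like values, for illustration only.
f0, v_r_ref, r_ref, k_m = 5.3e9, 7100.0, 850e3, 4.0e11
f_eta = np.linspace(-500.0, 500.0, 5)
tau = 2.0 * r_ref / C + np.linspace(-1e-5, 1e-5, 8)
s_sc = chirp_scaling_function(tau, f_eta, 0.0, v_r_ref, f0, k_m, r_ref)
print(s_sc.shape)
```

At the reference azimuth frequency the two migration factors coincide, so the scaling phase vanishes and that row of the function is identically one. The multiply only perturbs trajectories away from the reference.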

3.3. Omega-K Algorithm

1. The data are transformed into the two-dimensional frequency domain using a 2D FFT, resulting in the baseband uncompressed signal.

2. The reference function multiply (RFM) is applied, with the reference function usually computed for the midswath range. The phase of the signal is derived assuming that the range pulse is an up chirp and that the range equation is hyperbolic. Setting the range and the effective radar velocity to their midrange, or reference, values gives the phase of the RFM filter. The phase remaining after the filter is applied is an approximation, which comes from the assumption that the effective radar velocity is range-invariant. This step is called bulk compression.

3. After the previous step, the data are focused at the reference range, so it is still necessary to focus the targets at other ranges. This can be done using the Stolt interpolation, which consists of a mapping of the range frequency axis. This interpolation performs the steps seen in the algorithms presented above: RCMC, SRC, and azimuth compression. The idea is to modify the range frequency axis by replacing the square root in Equation (22) with a shifted and scaled variable, which maps the original range frequency variable into a new one.

4. The last step of this algorithm is a two-dimensional IFFT, which transforms the data back into the time domain and results in a compressed complex image.
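The Stolt mapping of step 3 can be sketched as a change of variable followed by a one-dimensional resampling of each azimuth-frequency line. The mapping below uses the form commonly given in [7], in which the carrier frequency plus the new range frequency equals the square root term. Linear interpolation is swapped in here for brevity (practical processors typically use a more accurate interpolation kernel), and the function names and numeric values are hypothetical.

```python
import numpy as np

C = 299_792_458.0  # speed of light [m/s]


def stolt_map(f_tau, f_eta, f0, v_r):
    """Map the original range frequency f_tau to the new range frequency."""
    return np.sqrt((f0 + f_tau) ** 2 - (C * f_eta) ** 2 / (4.0 * v_r**2)) - f0


def stolt_inverse(f_tau_new, f_eta, f0, v_r):
    """Original range frequency that maps onto a given new range frequency."""
    return np.sqrt((f0 + f_tau_new) ** 2 + (C * f_eta) ** 2 / (4.0 * v_r**2)) - f0


def stolt_interpolate(data, f_tau, f_eta, f0, v_r):
    """Resample each row of a 2D spectrum onto a uniform new range-frequency grid.

    data[i, :] is the spectrum at azimuth frequency f_eta[i], sampled on the
    increasing range-frequency axis f_tau. The output uses the same axis values
    for the new variable. Samples mapped outside the axis are clamped by np.interp.
    """
    out = np.empty_like(data, dtype=complex)
    for i, fe in enumerate(f_eta):
        src = stolt_inverse(f_tau, fe, f0, v_r)  # where to read the input
        out[i] = np.interp(src, f_tau, data[i].real) + 1j * np.interp(src, f_tau, data[i].imag)
    return out


# Hypothetical values, for illustration only.
f0, v_r = 5.3e9, 7100.0
f_tau = np.linspace(-30e6, 30e6, 64)
shift = f_tau - stolt_map(f_tau, 400.0, f0, v_r)
print("range-frequency shift at 400 Hz azimuth frequency [Hz]:", shift.mean())
```

At zero azimuth frequency the mapping is the identity, so that line of the spectrum is left unchanged. The shift grows with the azimuth frequency, which is how the interpolation applies a range-dependent correction.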

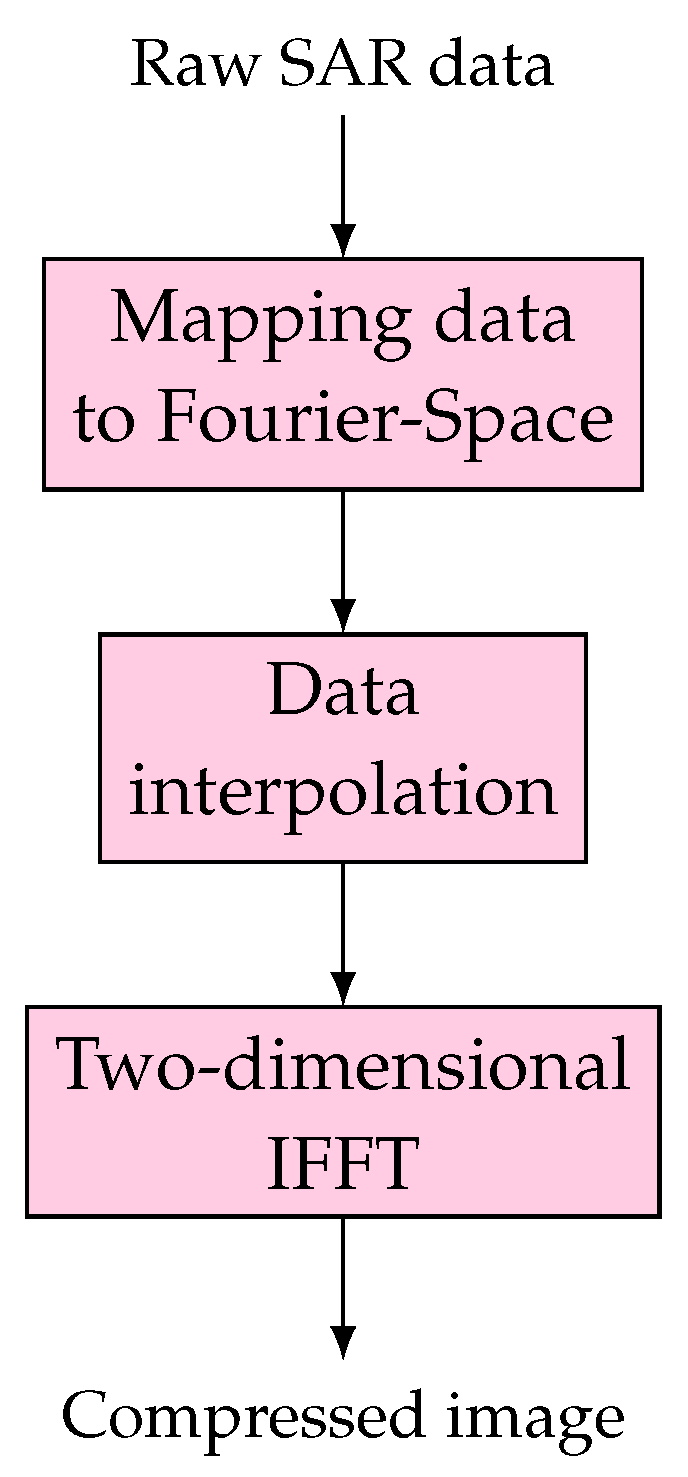

3.4. Polar Format Algorithm

1. Map the phase history, or received data, to the correct coordinates of the spatial Fourier transform.

2. Perform the two-stage interpolation on the K-space data, as described above. This step interpolates the keystone-shaped data onto a rectangular grid.

3. A two-dimensional inverse FFT is performed on the interpolated data, converting them from K-space to Euclidean space and resulting in the final image.
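The three steps can be sketched for an ideal far-field point target. In this minimal example the phase history is sampled on a polar grid, a range interpolation brings every pulse onto common range wavenumbers (producing the keystone), an azimuth interpolation brings every line onto common cross-range wavenumbers (producing the rectangle), and a 2D IFFT forms the image. The far-field signal model, the use of linear interpolation, and every parameter value are assumptions made for illustration.

```python
import numpy as np

c = 3e8
Nf, Np = 128, 128                                   # frequency samples, pulses
freqs = np.linspace(9.7e9, 10.3e9, Nf)              # hypothetical frequency samples [Hz]
thetas = np.deg2rad(np.linspace(-1.5, 1.5, Np))     # hypothetical aspect angles [rad]
k = 4 * np.pi * freqs / c                           # radial wavenumber [rad/m]

# Rectangular grid inscribed in the polar support of the data.
ky_grid = np.linspace(k[0], k[-1] * np.cos(thetas).min(), Nf)
kx_lim = ky_grid[0] * np.tan(thetas[-1])
kx_grid = np.linspace(-kx_lim, kx_lim, Np)


def simulate_point_target(x0, y0):
    """Step 1: far-field phase history placed at its K-space coordinates."""
    kx = k[None, :] * np.sin(thetas)[:, None]
    ky = k[None, :] * np.cos(thetas)[:, None]
    return np.exp(-1j * (kx * x0 + ky * y0))


def interp_complex(x, xp, fp):
    return np.interp(x, xp, fp.real) + 1j * np.interp(x, xp, fp.imag)


def polar_format(phase_history):
    # Step 2a: range interpolation, pulse by pulse, onto common ky values (keystone).
    keystone = np.empty((Np, Nf), dtype=complex)
    for n in range(Np):
        keystone[n] = interp_complex(ky_grid, k * np.cos(thetas[n]), phase_history[n])
    # Step 2b: azimuth interpolation, line by line, onto common kx values (rectangle).
    rect = np.empty((Nf, Np), dtype=complex)
    for m in range(Nf):
        rect[m] = interp_complex(kx_grid, ky_grid[m] * np.tan(thetas), keystone[:, m])
    # Step 3: 2D inverse FFT from K-space to image space.
    return np.fft.ifft2(rect)


dx = 2 * np.pi / (Np * (kx_grid[1] - kx_grid[0]))   # cross-range pixel spacing [m]
dy = 2 * np.pi / (Nf * (ky_grid[1] - ky_grid[0]))   # range pixel spacing [m]
image = polar_format(simulate_point_target(10 * dx, -7 * dy))
peak = np.unravel_index(np.argmax(np.abs(image)), image.shape)
print("peak (range, cross-range) index:", peak)
```

The target is placed 10 pixels in cross-range and -7 pixels in range from the scene centre, so the peak is expected at column 10 and, because the IFFT output wraps around, at row (-7) mod Nf. Applying `np.fft.fftshift` to the result would centre the scene.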

3.5. Matched Filter Algorithm

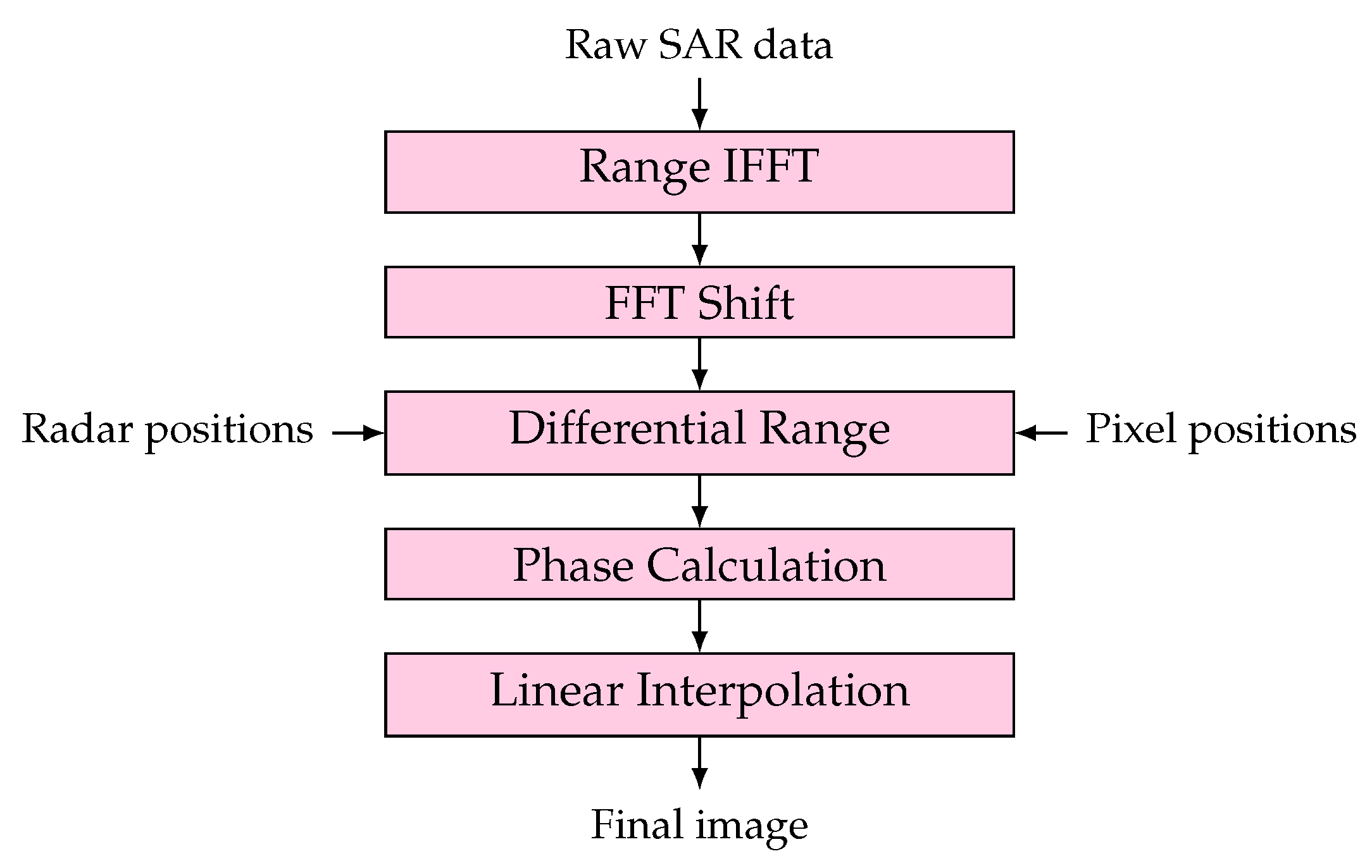

3.6. Backprojection Algorithm

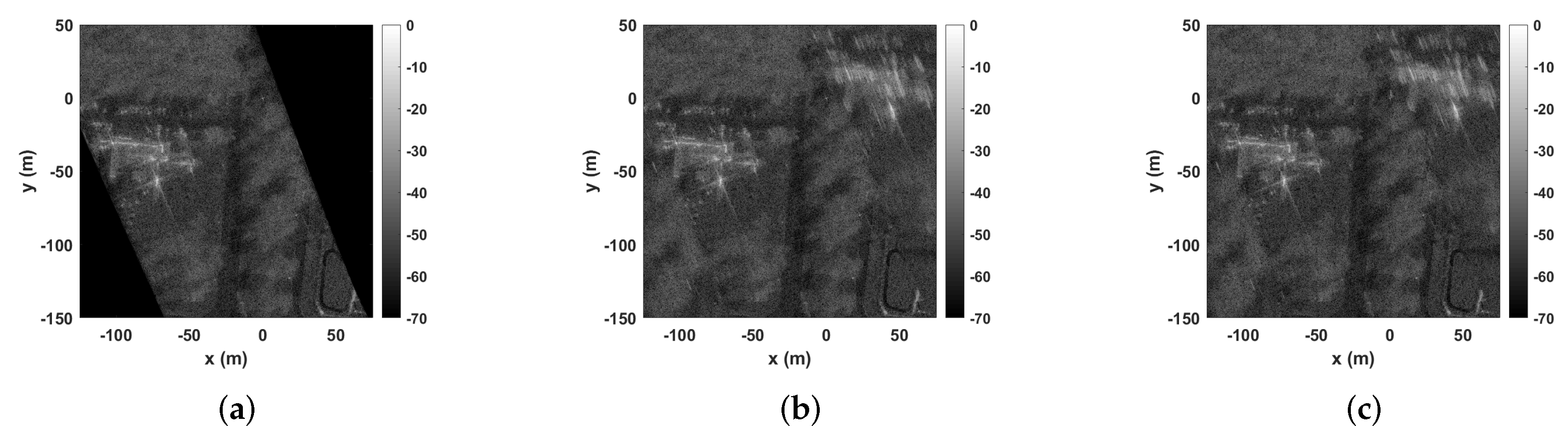

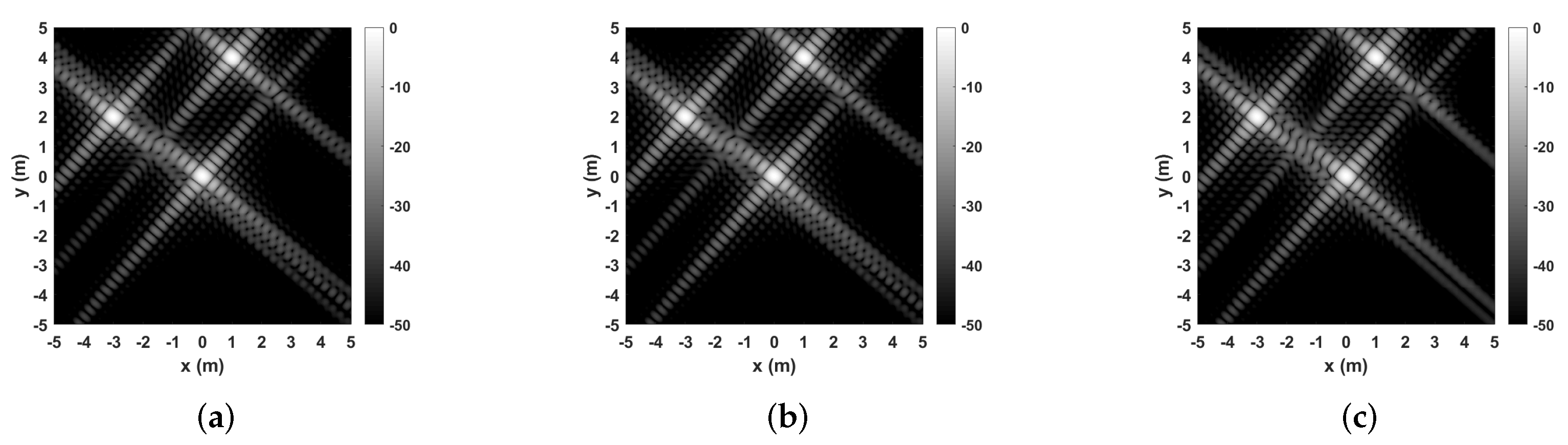

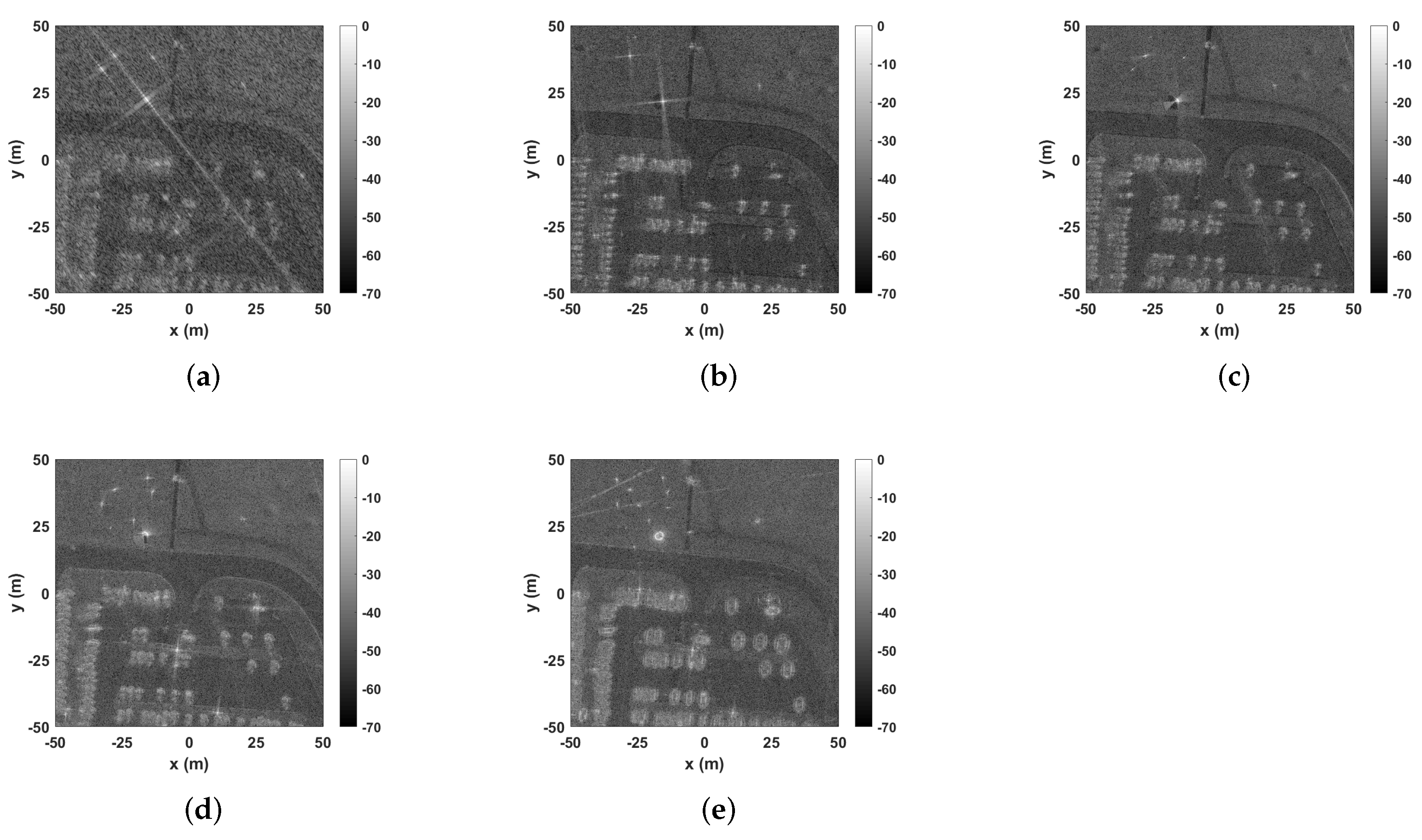

3.7. Comparison Between Algorithms

4. Synthetic-Aperture Radar Imaging Implementations

4.1. Software-Only Implementations

4.2. Comparison Between Software-Only Implementations

4.3. GPU Accelerators for SAR

4.4. Hardware Accelerators

4.5. Comparison Between GPU and Hardware Implementations

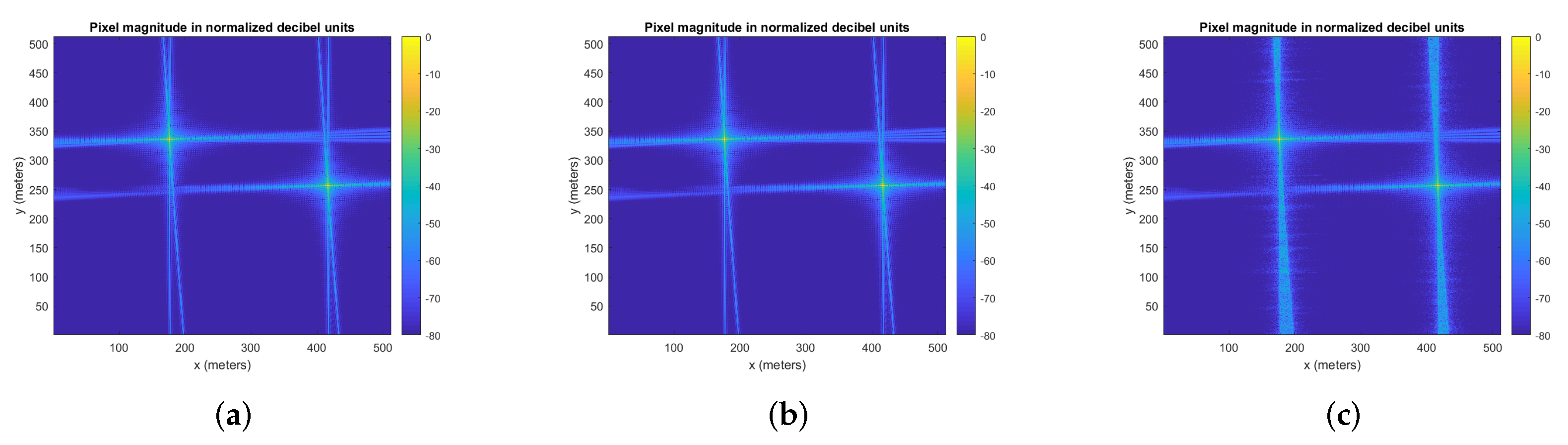

4.6. Precision Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| AFRL | Air Force Research Laboratory |

| ASIC | Application-Specific Integrated Circuit |

| BRAM | Block Random-Access Memory |

| CMOS | Complementary Metal–Oxide–Semiconductor |

| CORDIC | COordinate Rotation DIgital Computer |

| CPU | Central Processing Unit |

| CUDA | Compute Unified Device Architecture |

| DSP | Digital-Signal Processing |

| EDMA3 | Enhanced Direct Memory Access |

| ESA | European Space Agency |

| FFT | Fast Fourier Transform |

| FLOP | Floating-Point Operation |

| FM | Frequency-Modulated |

| FMCW | Frequency-Modulated Continuous-Wave |

| FPGA | Field-Programmable Gate Array |

| GFLOP | Giga-Floating-Point Operation |

| GPU | Graphical Processing Unit |

| HPC | High-Performance Computing |

| IFFT | Inverse Fast Fourier Transform |

| LUT | Look-Up Table |

| MPI | Message Passing Interface |

| MSE | Mean Squared Error |

| NASA | National Aeronautics and Space Administration |

| OCM | On-Chip Memory |

| OS | Operating System |

| PC | Program Counter |

| PL | Programmable Logic |

| PLR | Process-Level Redundancy |

| PS | Processing System |

| PSLR | Peak Side Lobe Ratio |

| PSNR | Peak Signal-to-Noise Ratio |

| RCMC | Range Cell Migration Correction |

| SAR | Synthetic-Aperture Radar |

| SNR | Signal-to-Noise Ratio |

| SoC | System-on-Chip |

| SRC | Secondary Range Compression |

| SSIM | Structural Similarity |

| UAV | Unmanned Aerial Vehicle |

| UEMU | Unified EMUlation Framework |

Appendix A. Mathematical Notation

| Symbol | Meaning | Units |
|---|---|---|
| A | Complex constant | — |
| | Complex constant, | — |
| | Magnitude | — |
| c | Speed of light | m/s |
| | Range to the scene center, also referred to as | m |
| | Distance between the radar and the pixel | m |
| | Migration factor in Range–Doppler domain | — |
| | Diameter of the radar in the azimuth domain | m |
| | Carrier frequency | Hz |
| | Minimum frequency for every pulse | Hz |
| | Frequency sample per pulse | Hz |
| | Azimuth frequency | Hz |
| | Azimuth FM rate of point target signal | Hz |
| | Reference azimuth frequency | Hz |
| | Range frequency | Hz |
| | Range frequency after Stolt mapping | Hz |
| | Ground reflectivity | — |
| | Frequency domain matched filter | — |
| | Second frequency domain matched filter | — |
| | Wavenumber at carrier frequency | m |
| K | Number of frequency samples per pulse | — |
| | Azimuth FM of the point target signal in Range–Doppler domain | Hz/s |
| | Range FM of the point target signal in Range–Doppler domain | Hz/s |
| | Chirp rate | Hz/s |
| L | Half size of the aperture | m |
| m | Range bin | — |
| | FFT length | — |
| | Number of pulses | — |
| | Amplitude of the azimuth impulse response | m |
| | Pulse envelope | — |
| | IFFT of the window | — |
| | Target radial distance from center of aperture | m |
| | Slant range of closest approach | m |
| | Reference range | m |
| | Instantaneous slant range | m |
| | Reflected SAR signal | — |
| | Emitted SAR signal | — |
| | Phase history | — |
| | Pulse duration | s |
| | Effective radar velocity | m/s |
| | Effective radar velocity at reference range | m/s |
| | Azimuth envelope (a sinc-squared function) | — |
| | Range envelope (a rectangular function) | — |
| | Envelope of the Doppler spectrum of antenna beam pattern | — |
| | Envelope of range spectrum of radar data | — |
| | Position of the radar | — |
| | Azimuth resolution | m |
| | Frequency step size | Hz |
| | Range resolution | m |
| | Differential range | m |
| | Azimuth time | s |
| | Beam center crossing time relative to the time of closest approach | s |
| | Aspect angle of the nth target when radar is at (0, 0) | rad |
| | Average depression angle of target area | rad |
| | Target phase | — |
| | Wavelength of carrier frequency, | m |
| | Wavelength at carrier fast-time frequency | m |
| | Maximum polar radius in spatial frequency domain for support of a target at center of the spotlighted area | m |
| | Minimum polar radius in spatial frequency domain for support of a target at center of the spotlighted area | m |
| | Range time | s |
| | Transmission time of each pulse | s |
| | Phase change resulting from the scattering process | — |
| | Polar angle in spatial frequency domain | rad |
| | Radar signal half bandwidth | rad |

References

1. Soumekh, M. Synthetic Aperture Radar Signal Processing with MATLAB Algorithms; John Wiley & Sons: New York, NY, USA, 1999.
2. Duarte, R.P.; Cruz, H.; Neto, H. Reconfigurable accelerator for on-board SAR imaging using the backprojection algorithm. In Applied Reconfigurable Computing. Architectures, Tools, and Applications; Rincón, F., Barba, J., So, H.K.H., Diniz, P., Caba, J., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 392–401.
3. Moreira, A.; Prats-Iraola, P.; Younis, M.; Krieger, G.; Hajnsek, I.; Papathanassiou, K. A Tutorial on Synthetic Aperture Radar. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–43.
4. Meyer, F. Spaceborne synthetic aperture radar: Principles, data access, and basic processing techniques. In Synthetic Aperture Radar (SAR) Handbook: Comprehensive Methodologies for Forest Monitoring and Biomass Estimation; SERVIR Global Science Coordination Office, National Space Science and Technology Center, 320 Sparkman Drive: Huntsville, AL, USA, 2019.
5. Lu, J. Design Technology of Synthetic Aperture Radar. In Design Technology of Synthetic Aperture Radar; John Wiley & Sons: Hoboken, NJ, USA, 2019; pp. 15–73. Available online: https://onlinelibrary.wiley.com/doi/pdf/10.1002/9781119564621.ch (accessed on 14 April 2020).
6. Liu, D.; Boufounos, P.T. High resolution scan mode SAR using compressive sensing. In Proceedings of the 2013 Asia-Pacific Conference on Synthetic Aperture Radar (APSAR), Tsukuba, Japan, 23–27 September 2013; pp. 525–528.
7. Cumming, I.G.; Wong, F. Digital Processing of Synthetic Aperture Radar Data: Algorithms and Implementation; Artech House: Norwood, MA, USA, 2005.
8. Hu, R.; Rao, B.S.M.R.; Alaee-Kerahroodi, M.; Ottersten, B.E. Orthorectified Polar Format Algorithm for Generalized Spotlight SAR Imaging With DEM. IEEE Trans. Geosci. Remote Sens. 2021, 59, 3999–4007.
9. Pu, W. Deep SAR Imaging and Motion Compensation. IEEE Trans. Image Process. 2021, 30, 2232–2247.
10. Pu, W. SAE-Net: A Deep Neural Network for SAR Autofocus. IEEE Trans. Geosci. Remote Sens. 2022, 1.
11. Hughes, W.; Gault, K.; Princz, G. A comparison of the Range–Doppler and Chirp Scaling algorithms with reference to RADARSAT. Int. Geosci. Remote Sens. Symp. 1996, 2, 1221–1223.
12. Jin, M.Y.; Cheng, F.; Chen, M. Chirp scaling algorithms for SAR processing. In Proceedings of the IGARSS’93—IEEE International Geoscience and Remote Sensing Symposium, Tokyo, Japan, 18–21 August 1993; pp. 1169–1172.
13. Raney, R.K.; Runge, H.; Bamler, R.; Cumming, I.G.; Wong, F.H. Precision SAR processing using chirp scaling. IEEE Trans. Geosci. Remote Sens. 1994, 32, 786–799.
14. Sun, J.P.; Wang, J.; Yuan, Y.N.; Mao, S.Y. Extended Chirp Scaling Algorithm for Spotlight SAR. Chin. J. Aeronaut. 2002, 15, 103–108.
15. Bamler, R. A systematic comparison of SAR focussing algorithms. In Proceedings of the IGARSS’91 Remote Sensing: Global Monitoring for Earth Management, Espoo, Finland, 3–6 June 1991; Volume 2, pp. 1005–1009.
16. Bamler, R. A comparison of range-Doppler and wavenumber domain SAR focusing algorithms. IEEE Trans. Geosci. Remote Sens. 1992, 30, 706–713.
17. Stolt, R.H. Migration by Fourier transform. Geophysics 1978, 43, 23–48.
18. Melnikov, A.; Kernec, J.L.; Gray, D. A case implementation of a spotlight range migration algorithm on FPGA platform. In Proceedings of the 2014 International Symposium on Antennas and Propagation Conference Proceedings, Kaohsiung, Taiwan, 2–5 December 2014; pp. 177–178.
19. Deming, R.; Best, M.; Farrell, S. Polar format algorithm for SAR imaging with Matlab. In Proceedings of the Algorithms for Synthetic Aperture Radar Imagery XXI, Baltimore, MD, USA, 5–9 May 2014; Volume 9093, pp. 47–66.
20. Musgrove, C.; Naething, R. A method to evaluate residual phase error for polar formatted synthetic aperture radar systems. In Radar Sensor Technology XVII; International Society for Optics and Photonics: Bellingham, WA, USA, 2013; Volume 8714, pp. 361–370.
21. Rigling, B.D.; Moses, R.L. Taylor expansion of the differential range for monostatic SAR. IEEE Trans. Aerosp. Electron. Syst. 2005, 41, 60–64.
22. Jakowatz, C.V.; Wahl, D.E.; Eichel, P.H.; Ghiglia, D.C.; Thompson, P.A. Spotlight-Mode Synthetic Aperture Radar: A Signal Processing Approach; Springer: Boston, MA, USA, 1996.
23. Gorham, L.A.; Rigling, B.D. Dual format algorithm implementation with gotcha data. In Algorithms for Synthetic Aperture Radar Imagery XIX; SPIE: Baltimore, MD, USA, 2012; Volume 8394, pp. 19–24.
24. Linnehan, R.; Yasuda, M.; Doerry, A. An efficient means to mitigate wavefront curvature effects in polar format processed SAR imagery. In Radar Sensor Technology XVI; International Society for Optics and Photonics: Baltimore, MD, USA, 2012; Volume 8361, pp. 553–561.
25. Ausherman, D.A.; Kozma, A.; Walker, J.L.; Jones, H.M.; Poggio, E.C. Developments in Radar Imaging. IEEE Trans. Aerosp. Electron. Syst. 1984, 20, 363–400.
26. Pritsker, D. Efficient Global Back-Projection on an FPGA. In Proceedings of the 2015 IEEE Radar Conference (RadarCon), Arlington, VA, USA, 10–15 May 2015; pp. 204–209.
27. Pimentel, J.J.; Stillmaker, A.; Bohnenstiehl, B.; Baas, B.M. Area efficient backprojection computation with reduced floating-point word width for SAR image formation. In Proceedings of the 2015 49th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 8–11 November 2015; pp. 732–736.
28. Gorham, L.A.; Moore, L.J. SAR image formation toolbox for MATLAB. In Proceedings of the SPIE Algorithms for Synthetic Aperture Radar Imagery XVII, Orlando, FL, USA, 5–9 April 2010; Volume 7699, pp. 46–58.
29. Casteel, H.C., Jr.; Gorham, L.A.; Minardi, M.J.; Scarborough, S.M.; Naidu, K.D.; Majumder, U.K. A challenge problem for 2D/3D imaging of targets from a volumetric data set in an urban environment. In Algorithms for Synthetic Aperture Radar Imagery XIX; SPIE: Orlando, FL, USA, 2007; Volume 6568, pp. 97–103.
30. Naidu, K.; Lin, L. Data Dome: Full k-space sampling data for high-frequency radar research. In Algorithms for Synthetic Aperture Radar Imagery XIX; SPIE: Orlando, FL, USA, 2004; Volume 5427.
31. Scarborough, S.M.; Casteel, C.H., Jr.; Gorham, L.; Minardi, M.J.; Majumder, U.K.; Judge, M.G.; Zelnio, E.; Bryant, M.; Nichols, H.; Page, D. A challenge problem for SAR-based GMTI in urban environments. In Algorithms for Synthetic Aperture Radar Imagery XIX; SPIE: Orlando, FL, USA, 2009; Volume 7337.
32. Kelly, S.I.; Rilling, G.; Davies, M.; Mulgrew, B. Iterative image formation using fast (Re/Back)-projection for spotlight-mode SAR. In Proceedings of the 2011 IEEE RadarCon (RADAR), Kansas City, MO, USA, 23–27 May 2011; pp. 835–840.
33. Barker, K.; Benson, T.; Campbell, D.; Ediger, D.; Gioiosa, R.; Hoisie, A.; Kerbyson, D.; Manzano, J.; Marquez, A.; Song, L.; et al. PERFECT (Power Efficiency Revolution For Embedded Computing Technologies) Benchmark Suite Manual; Pacific Northwest National Laboratory and Georgia Tech Research Institute. 2013. Available online: http://hpc.pnnl.gov/projects/PERFECT/ (accessed on 14 April 2020).
34. Frey, O.; Werner, C.L.; Wegmuller, U. GPU-based parallelized time-domain back-projection processing for agile SAR platforms. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; pp. 1132–1135.
35. Frey, O.; Werner, C.L.; Wegmuller, U.; Wiesmann, A.; Henke, D.; Magnard, C. A car-borne SAR and InSAR experiment. In Proceedings of the 2013 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Melbourne, VIC, Australia, 21–26 July 2013; pp. 93–96.
36. Stringham, C.; Long, D.G. GPU Processing for UAS-Based LFM-CW Stripmap SAR. Photogramm. Eng. Remote Sens. 2014, 80, 1107–1115.
37. Long, D.G.; Zaugg, E.; Edwards, M.; Maslanik, J. The microASAR experiment on CASIE-09. In Proceedings of the 2010 IEEE International Geoscience and Remote Sensing Symposium, Honolulu, HI, USA, 25–30 July 2010; pp. 3466–3469.
38. Fasih, A.; Hartley, T. GPU-accelerated synthetic aperture radar backprojection in CUDA. In Proceedings of the 2010 IEEE Radar Conference, Arlington, VA, USA, 10–14 May 2010; pp. 1408–1413.
39. Gocho, M.; Oishi, N.; Ozaki, A. Distributed parallel backprojection for real-time stripmap SAR imaging on GPU clusters. In Proceedings of the 2017 IEEE International Conference on Cluster Computing (CLUSTER), Honolulu, HI, USA, 5–8 September 2017; pp. 619–620.
40. Park, J.; Tang, P.T.P.; Smelyanskiy, M.; Kim, D.; Benson, T. Efficient backprojection-based synthetic aperture radar computation with many-core processors. In Proceedings of the 2012 International Conference for High Performance Computing, Networking, Storage and Analysis, Salt Lake City, UT, USA, 10–16 November 2012; pp. 1–11.
41. Hartley, T.D.R.; Fasih, A.R.; Berdanier, C.A.; Özgüner, F.; Catalyurek, U.V. Investigating the use of GPU-accelerated nodes for SAR image formation. In Proceedings of the 2009 IEEE International Conference on Cluster Computing and Workshops, New Orleans, LA, USA, 31 August–4 September 2009; pp. 1–8.
42. Wang, D.; Ali, M. Synthetic Aperture Radar on Low Power Multi-Core Digital Signal Processor. In Proceedings of the 2012 IEEE Conference on High Performance Extreme Computing, Waltham, MA, USA, 10–12 September 2012; Volume 1, pp. 1–6.
43. Schleuniger, P.; Kusk, A.; Dall, J.; Karlsson, S. Synthetic Aperture Radar Data Processing on an FPGA Multi-Core System. In Proceedings of the 26th International Conference on Architecture of Computing Systems—ARCS 2013, Prague, Czech Republic, 19–22 February 2013; pp. 74–85.
44. Schleuniger, P.; McKee, S.A.; Karlsson, S. Design Principles for Synthesizable Processor Cores; Springer: Berlin/Heidelberg, Germany, 2012; pp. 111–122.
45. Cholewa, F.; Pfitzner, M.; Fahnemann, C.; Pirsch, P.; Blume, H. Synthetic Aperture Radar with Backprojection: A Scalable, Platform Independent Architecture for Exhaustive FPGA Resource Utilization. In Proceedings of the 2014 International Radar Conference, Lille, France, 13–17 October 2014; pp. 1–5.
46. Hou, N.; Zhang, D.; Du, G.; Song, Y. An FPGA-Based Multi-Core System for Synthetic Aperture Radar Data Processing. In Proceedings of the 2014 International Conference on Anti-Counterfeiting, Security and Identification (ASID), Macao, China, 12–14 December 2014; pp. 1–4.
47. Linchen, Z.; Jindong, Z.; Daiyin, Z. FPGA Implementation of Polar Format Algorithm for Airborne Spotlight SAR Processing. In Proceedings of the 2013 IEEE International Conference on Dependable, Autonomic and Secure Computing (DASC), Chengdu, China, 21–22 December 2013; pp. 143–147.
48. Cordes, B.; Leeser, M. Parallel Backprojection: A Case Study in High-Performance Reconfigurable Computing. EURASIP J. Embed. Syst. 2009, 2009, 727965.
49. Araujo, G.F.; d’Amore, R.; Fernandes, D. Cost-sensitive FPGA implementation of SAR range-doppler algorithm. IEEE Aerosp. Electron. Syst. Mag. 2018, 33, 54–68.

| Frequency Band | Ka | Ku | X | C | S | L | P |

|---|---|---|---|---|---|---|---|

| Frequency [GHz] | 40–25 | 17.6–12 | 12–7.5 | 7.5–3.75 | 3.75–2 | 2–1 | 0.5–0.25 |

| Wavelength [cm] | 0.75–1.2 | 1.7–2.5 | 2.5–4 | 4–8 | 8–15 | 15–30 | 60–120 |
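The wavelength row of the table follows from the frequency row through the free-space relation between wavelength and frequency (wavelength equals the speed of light divided by the frequency). A small check, with a hypothetical helper name, reproduces the tabulated band edges to within rounding:

```python
C = 299_792_458.0  # speed of light [m/s]


def wavelength_cm(freq_ghz):
    """Free-space wavelength in centimetres for a frequency given in GHz."""
    return C / (freq_ghz * 1e9) * 100.0


# Band edges in GHz, copied from the table (upper edge first).
bands = {
    "Ka": (40, 25), "Ku": (17.6, 12), "X": (12, 7.5), "C": (7.5, 3.75),
    "S": (3.75, 2), "L": (2, 1), "P": (0.5, 0.25),
}
for name, (f_hi, f_lo) in bands.items():
    print(f"{name}: {wavelength_cm(f_hi):.2f} to {wavelength_cm(f_lo):.2f} cm")
```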

| | Range Resolution | Azimuth Resolution |
|---|---|---|
| Stripmap SAR | | |
| Spotlight SAR | | |
| Circular SAR | | |

| Algorithm | Main Features |

|---|---|

| Range–Doppler | Frequency domain for range and azimuth; uses block processing; range cell migration correction between range and azimuth; simple one-dimensional operations; not good for high-squint angles. |

| Chirp Scaling | Offers a good trade-off in terms of simplicity, efficiency, and accuracy; high computing load; limited accuracy for high squint, and wide-aperture uses. |

| Omega-K | Commonly used for processing raw stripmap SAR in frequency domain; good results for high-squint angles. |

| Polar Format | Good for cases where resolution is close to the nominal wavelength of the radar. |

| Backprojection | Time-domain processing; most complex; better image. |

| Algorithm | GFLOPs |

|---|---|

| Range–Doppler | 5.61 |

| Chirp scaling | 4.05 |

| Omega-K | 4.38 |

| Dataset | Angle | Backprojection Time | Matched-Filter Time | Matched-Filter SSIM | Fast-Factorized Backprojection Time | Fast-Factorized Backprojection SSIM |
|---|---|---|---|---|---|---|
| Gotcha | 39° | 2.13 s | 364.30 s | 0.999101 | 1.14 s | 0.861199 |
| Gotcha | 1–10° | 20.49 s | 3755.68 s | 0.999100 | 7.85 s | 0.824825 |
| Gotcha | 1–50° | 104.36 s | 18625.73 s | 0.999117 | 36.60 s | 0.832565 |
| Gotcha | 1–100° | 208.74 s | — | — | 73.10 s | 0.817611 |
| Gotcha | 1–360° | 759.96 s | — | — | 266.99 s | 0.817061 |
| GMTI | | 387.86 s | 24791.79 s | 0.843764 | 71.91 s | 0.946678 * |
| Point Target | | 2.04 s | 107.34 s | 0.995989 | 1.05 s | 0.904601 |

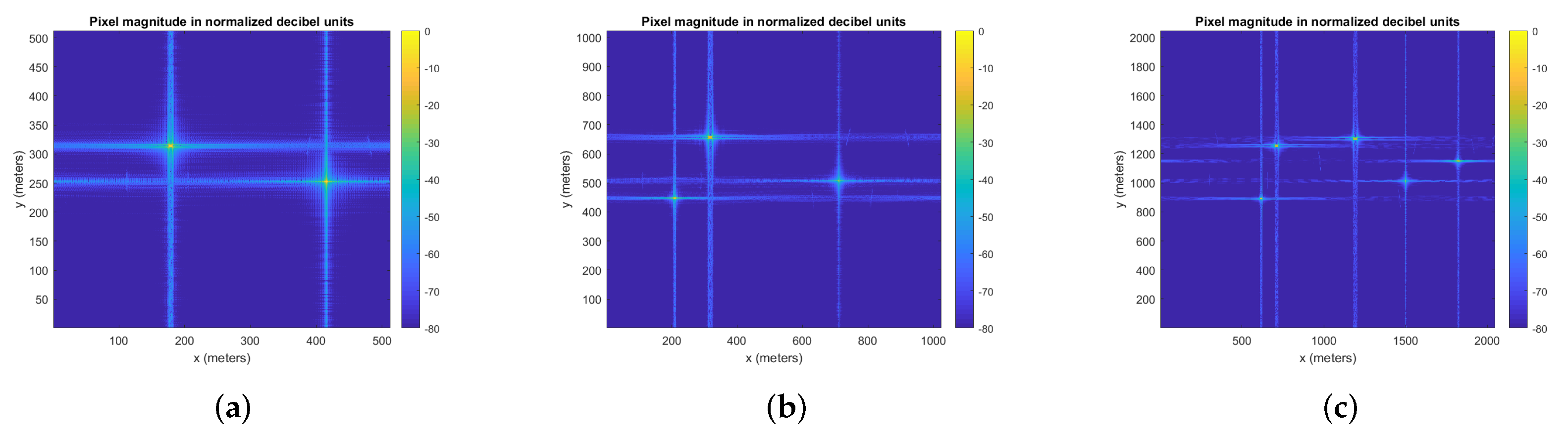

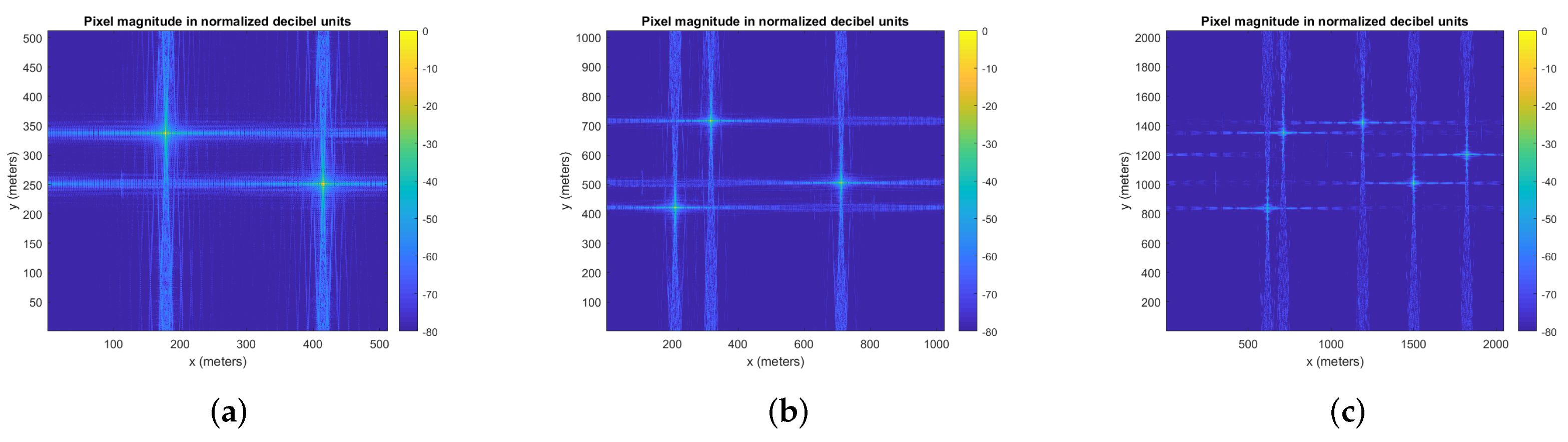

| Image Size | Backprojection Algorithm | Polar Format Algorithm (Range) | Polar Format Algorithm (Azimuth) |

|---|---|---|---|

| Small | 5.844 s | 0.056 s | 0.065 s |

| (512 × 512) | 44.8 k PPS | 4681 k PPS | 4032 k PPS |

| Medium | 57.913 s | 0.205 s | 0.238 s |

| (1024 × 1024) | 18.1 k PPS | 5115 k PPS | 4405 k PPS |

| Large | 565 s | 0.798 s | 1.054 s |

| (2048 × 2048) | 7.4 k PPS | 5256 k PPS | 3979 k PPS |
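The throughput rows of the table are consistent with reading PPS as pixels per second, that is, the number of image pixels divided by the execution time. This reading is an assumption, since the abbreviation is not defined in the Abbreviations list. Under that assumption, the tabulated values can be recomputed as follows (the helper name is hypothetical):

```python
def pixels_per_second(width, height, seconds):
    """Throughput of an image formation run, in pixels per second."""
    return width * height / seconds


# (image side in pixels, execution time in seconds), copied from the table.
backprojection = {"Small": (512, 5.844), "Medium": (1024, 57.913), "Large": (2048, 565.0)}
pfa_range = {"Small": (512, 0.056), "Medium": (1024, 0.205), "Large": (2048, 0.798)}

for label, runs in (("Backprojection", backprojection), ("PFA (range)", pfa_range)):
    for size, (side, seconds) in runs.items():
        kpps = pixels_per_second(side, side, seconds) / 1e3
        print(f"{label:15s} {size:7s} {kpps:10.1f} k PPS")
```

The recomputed figures agree with the table to within rounding. Backprojection throughput falls as the image grows, whereas the Polar Format throughput stays roughly constant across the three sizes.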

| Ref | Alg. | PPS | Execution Time | Device | Image Dimension | Notes |

|---|---|---|---|---|---|---|

| [34] | BP | n/a | 315 s; 79 s; 51 s | NVIDIA GeForce GT 650M (384 cores); NVIDIA GeForce GTX 660 Ti (1344 cores); NVIDIA Tesla K20c (2496 cores) | — | — |

| [36] | BP | n/a | Real-time | NVIDIA GTX 285 (240 cores) | — | — |

| [38] | BP | n/a | 40× to 60× speedup, depending on block size | NVIDIA Quadro FX 5600 (128 cores); NVIDIA Tesla C1060 (240 cores) | 501 × 501 px; 8 K × 384 px | SNR > 30 dB. |

| [41] | BP | 1747 k | 0.15 s (real-time) | 4 nodes, each with NVIDIA Quadro 5600 (128 cores) | 512 × 512 px * | Speedup of 31×. * More image sizes were tested, with different speedups. For more details, check Section 4.3. |

| [40] | BP | n/a | Real-time | Node with dual-socket Intel Xeon E5-2670 (8 cores) processors and two Intel Knights Corner co-processor (60 cores) | 3 K × 3 K px * | * Using 16 nodes, 13 K × 13 K images can be generated in real-time. |

| [39] | BP | 63,337 k | 1.0 s (real-time) | 4 nodes, each with two Intel Xeon E5-2690v3 (12 cores) and 4 NVIDIA Tesla M60 (2048 cores) | 8965 × 7065 px | Speedup of 11.5× compared to 1 node (4 GPUs). |

| [42] | RD | 64,000 k | 0.25 s (real-time) | TMS320C6678 DSP (8 cores) | 4 K × 4 K px | 10 W of power consumption. |

| Ref | Alg. | PPS | Image Dimension | Execution Time | Real-Time | Quality Metrics |

|---|---|---|---|---|---|---|

| [43] | BP | 60 k | px | 1.0 s | ✓ | — |

| [27] | BP | n/a | px | — | — | SSIM > 0.99 |

| [45] | BP | 2184 k; 1456 k; 728 k; 383 k | px, px, px, px | 0.03 s; 0.18 s; 1.44 s; 10.94 s | ✗ | — |

| [26] | BP | 2085 k | px | 120.34 ms | ✓ | — |

| [46] | RD | 697 k | px | 12.03 s | ✗ | PSNR and MSE almost identical |

| [47] | PF | 16777 k | px | 1.0 s | ✓ | Range PSLR: −28 dB; range resolution: 2.65 m; azimuth PSLR: −40 dB; azimuth resolution: 1.03 m |

| [48] | BP | n/a | — | 146 ms to 351 ms | ✓ | Depending on the dataset, error percentage ranges from 0.9% to 5.6%. |

| [49] | RD | 13530 k | px | 0.31 s | ✓ | Range PSLR of −44.11 dB and azimuth PSLR between −46.44 dB and −39.40 dB |

| Ref | Device | Device Frequency | Hardware Resource Occupation | Power Consumption | Notes |

|---|---|---|---|---|---|

| [43] | Xilinx Virtex-7 | 300 MHz | 60% | 10 W | This work was simulated. |

| [27] | ASIC | 1.2 GHz | — | — | — |

| [45] | Xilinx ML605 | 100 MHz | 78% LUTs; 62% BRAMs; 40% DSPs | — | Speedup of 68× with a parallelization factor of 8, compared to execution on a quad-core Intel i5 at 3.2 GHz. |

| [26] | Altera Arria-V SoC | 133 MHz | — * | 26.55 W | * 20 BP cores fitted into the device. |

| [46] | 4 Xilinx Virtex-6-550T | 130 MHz | 67% LUTs | 85 W | * The paper did not specify values of quality metrics. |

| [47] | Xilinx Kintex-7 | 200 MHz | 68% LUTs; 48% registers; 42% BRAMs; 96% DSPs | — | — |

| [48] | Dual 2.2 GHz Intel Xeon PC and Annapolis Micro Systems WildStar II FPGA board with two Virtex-II FPGAs | 133 MHz | — | — | — |

| [49] | Terasic DE2-115 development kit with Cyclone IV E | 50 MHz | 57% LUTs; 31% registers; 21% BRAMs; 56% DSPs | — | — |

| | SNR | SSIM | Time |

|---|---|---|---|

| Original | 139.16 dB | 1.000000 | 270.80 s |

| Single-Precision | 15.02 dB | 0.893861 | 259.75 s |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cruz, H.; Véstias, M.; Monteiro, J.; Neto, H.; Duarte, R.P. A Review of Synthetic-Aperture Radar Image Formation Algorithms and Implementations: A Computational Perspective. Remote Sens. 2022, 14, 1258. https://doi.org/10.3390/rs14051258