Abstract

Crop type classification is critical for crop production estimation and optimal water allocation. Crop type data are difficult to generate when crop reference data are lacking, especially for target years in which no reference data were collected. Is it possible to transfer a trained crop type classification model to retrace the historical spatial distribution of crop types? Taking the Hetao Irrigation District (HID) in China as the study area, this study first designed a 10 m crop type classification framework based on Google Earth Engine (GEE) for current-season crop type mapping and then tested its interannual transferability for accurately retracing historical crop distributions. The framework used Sentinel-1/2 data as the satellite data source, combined percentile and monthly median composite approaches to generate classification metrics, and employed a random forest classifier with 300 trees for crop classification. Based on the proposed framework, this study first produced a 10 m crop type map of the HID for 2020 with an overall accuracy (OA) of 0.89 and then obtained a 10 m crop type map of the HID for 2019 with an OA of 0.92 by transferring the model trained for 2020, without using 2019 crop reference samples for training. The results indicated that the designed framework could effectively identify crop types in the HID and transfers well to historical years with acceptable accuracy. SWIR1, green, and red edge 2 were the three most important reflectance bands for crop classification, and the land surface water index (LSWI), normalized difference water index (NDWI), and enhanced vegetation index (EVI) were the three most important vegetation indices. April to August was the most suitable time window for crop type classification in the HID. Sentinel-1 information contributed positively to the interannual transfer of the trained model, increasing the OA from 90.73% with Sentinel-2 alone to 91.58% with Sentinel-1 and Sentinel-2 together.

1. Introduction

Zero hunger is a critical Sustainable Development Goal (SDG) proposed by the United Nations [1]. However, achieving it faces considerable challenges from the increasing number of people suffering from food insecurity due to extreme weather events (floods, droughts, and others), the COVID-19 pandemic, and local conflicts. In 2020, the Food and Agriculture Organization of the United Nations (UN FAO) estimated that more than 720 million people worldwide suffered from hunger [2]. Accurate and early crop production estimation is vital for assessing the food security situation, and high-resolution crop type maps provide critical information for estimating crop production and assessing food security [3]. Agriculture is the largest consumer of freshwater and is now responsible for more than 70% of global freshwater withdrawals [4]. Overuse of agricultural water has accelerated water depletion in many dryland areas, leading to declining water tables [5] and shrinking lakes [6]. Optimizing the crop planting structure is a plausible way to reduce water consumption in dryland areas [7,8], but it requires high-spatial-resolution crop type data.

Owing to the increasing availability of free optical and microwave satellite data with moderate spatial resolution, the emergence of cloud computing for big Earth data analysis, and significant improvements in machine learning algorithms, supervised crop type classification methods have developed rapidly. In recent years, freely available satellite imagery with global coverage and acceptable spatial resolution, e.g., Sentinel-1 [9], Sentinel-2 [10], and Landsat-8 [11], has provided strong data support for crop type identification at national, continental, and even global scales. Cloud computing for big Earth data analysis has provided the computing capacity needed for large-scale crop type classification, for example, global cropland extent mapping [12], continental-scale crop type mapping in Europe based on Sentinel-1 [13], national crop type mapping in Belgium [14], and regional crop type mapping in Northeast China [15] using Sentinel-1 and Sentinel-2 alone or together. In particular, Google Earth Engine (GEE) [16], with its powerful computational capabilities, rich satellite image archive, and multiple classifiers, has become one of the crucial infrastructures for large-scale crop type classification, for example, the identification of paddy [17], winter wheat [18], and maize [19] and the mapping of multiple crop types [14,15,20]. Machine learning has a strong ability to exploit crop-specific metrics and is widely used for land cover mapping and crop type classification; the random forest classifier [21] in particular has been adopted by many previous studies because it is relatively insensitive to the number of training samples [22]. Many new indices derived from the new satellites have been developed to improve crop discrimination. 
These include the red edge normalized difference vegetation index [23], the ratio of vertical transmit/horizontal receive (VH) to vertical transmit/vertical receive (VV) backscatter [24], and the difference between VV and VH [25]. Several organizations have developed automated crop type classification systems based on free satellite data and cloud computing. For instance, Sen2Agri, an automatic system for national crop type mapping developed by Université Catholique de Louvain [23], has been used to map national-scale crop distributions in countries such as Ukraine [26], Morocco [27], and sub-Saharan countries [23]. More recently, the ESA-initiated WorldCereal project (https://esa-worldcereal.org/en, accessed date: 20 February 2022) has aimed to develop an EO-based global system for mapping cropland and crop types [28].

Clouds, rain, and haze make features derived from optical satellite images discontinuous in time, which significantly increases the difficulty of crop classification. Different feature composites, such as the median composite [15,29,30], the percentile composite [19,31,32], and temporal interpolation [3,33], have been proposed to mitigate this discontinuity. Many previous studies have indicated that the median composite is generally superior to the mean composite because the latter does not correspond to an actual physical observation [33] and is susceptible to extreme values [29]. Percentile composites are less sensitive to the continuity of the time series and are easy to compute; therefore, they are widely used to generate metrics for land cover and crop type mapping [19,31,32]. Simple temporal interpolation fills missing values using the nearest valid observations before and after each gap, places less stringent requirements on the length of the time series, and has recently been widely used to reconstruct feature time series [3,29,33]. Nevertheless, when too many values in a time series are missing, this approach can no longer fill the gaps reliably.

Although cloud computing, machine learning, and satellite data fusion algorithms have made milestone breakthroughs, existing supervised methods still depend on crop reference samples. The number and distribution of crop reference samples significantly affect classification performance, and sparse, insufficient ground reference data remain one of the critical factors restricting crop type mapping [34]. Collecting reference data is labor-intensive and time-consuming [35]; for example, the European Union collects continental-scale in situ data through the land use/cover area frame survey (LUCAS) [36]. Improving the efficiency of crop reference sample collection and promoting data sharing are considered good ways to overcome the shortage of crop reference samples. Several organizations have developed tools to improve data collection efficiency. For example, the CropWatch team in China designed a GPS, video, and GIS (GVG) application for smartphones [37], which has significantly improved the efficiency of collecting land cover and crop type reference data. Online data-sharing platforms can increase the value of archived reference data. For example, the Geo-Wiki platform [38,39] was built to improve global land cover mapping through data sharing, and Laso et al. [40] used it to create a global cropland reference database. Recently, applying models trained on archived reference data to predict crop types in a target year has emerged as a new way to increase the value of such data. For example, You and Dong [29] used a trained random forest classifier to predict crop types at early growth stages in Heilongjiang Province, demonstrating that transferring trained models is a feasible way to produce crop type maps. However, this approach has rarely been used to retrace the spatial distribution of historical crop types, and its applicability needs further study.

The Hetao Irrigation District (HID) is an important grain- and oil-crop-producing area in China [41,42]. The crop type composition of the HID has shifted rapidly as farmers pursue higher profits, leading to significant virtual water transfer [8]. The sustainable development of the HID faces a series of issues, including overuse of agricultural water, soil salinization [43], and water pollution [44]. Agricultural water demand and the sustainable management of the ecosystem urgently need to be balanced in the HID. Optimizing the cropping structure to save water for the ecosystem is considered a way to improve the ecological environment of the HID; however, such optimization requires an accurate crop type map [8]. In recent decades, a series of supervised classification methods based on fitted normalized difference vegetation index (NDVI) time series have been used to identify the spatial distribution of crop types in the HID. For example, Jiang et al. [45] proposed a vegetation index–phenological index (VI–PI) classifier to map crop types in the HID based on the MODIS NDVI time series. Yu et al. [41] fitted NDVI time series with an asymmetric logistic curve and used the resulting NDVI and phenological metrics to separate maize and sunflower in the HID. However, the accuracy of these methods was limited [41]. Integrating multiple classifiers is another effective way to identify crop types in the HID. For example, Wen et al. [46] mapped multiyear sunflower, maize, and wheat distributions in the HID by combining three classifiers (random forest, support vector machine, and a phenology-based NDVI classifier). Because cropland fields in the HID are small, crop type maps with 30 m or coarser spatial resolution cannot meet the requirements of optimized irrigation water scheduling.

This study aimed to design a high-resolution crop type mapping framework and test its feasibility for interannual transfer. The objectives of this study were (1) to map up-to-date crop types in the HID at 10 m resolution with acceptable accuracy and (2) to test the feasibility and reliability of transferring a trained crop type classification model between years.

2. Study Area

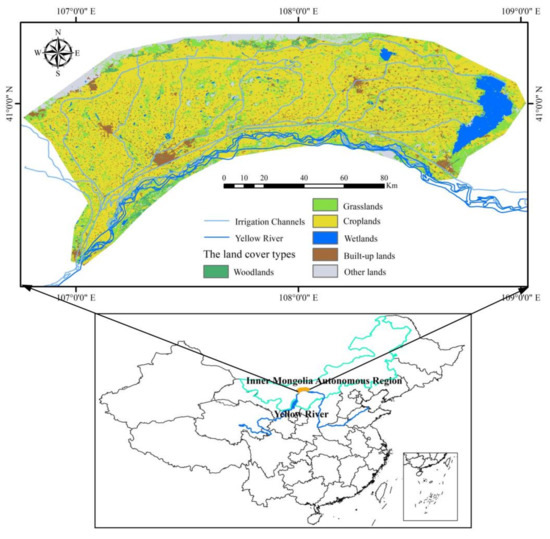

The HID is located in the southern part of Bayannur City in the Inner Mongolia Autonomous Region, China, and is the largest gravity-fed irrigation district in Asia [47]. The HID has an arid continental monsoon climate with hot, dry summers and cold winters [47]; annual precipitation is less than 250 mm [48], whereas potential evaporation is 2100 to 2300 mm per year [8]. The annual average temperature is 6–10 °C, and the average monthly temperature ranges from −9.58 °C in January to 23.72 °C in July [48]. The terrain is very flat, with elevations ranging from 1007 to 1050 m [49]. Although the climate is arid, the Yellow River, which flows through the HID, provides valuable water resources for agricultural development (Figure 1). A previous study found that the crop sown area increased by 3.57 × 10³ ha year⁻¹ [8], which significantly increased water depletion and reduced the runoff reserved for the lower Yellow River.

Figure 1.

Geographical location of the Hetao Irrigation District, China.

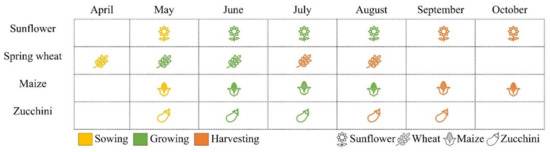

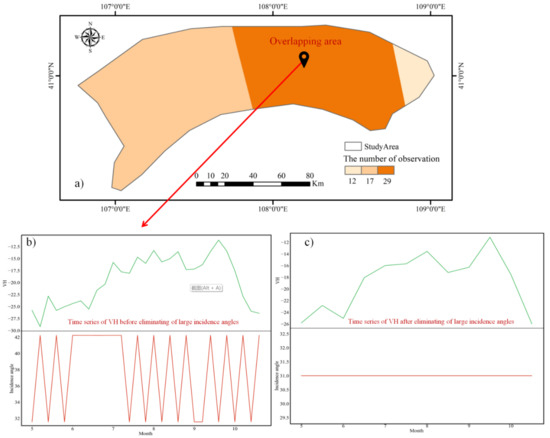

The total arable land area in the region is approximately 7330 km². The main crops are sunflower, maize, and spring wheat [48]. This region is the largest sunflower-producing area in China, accounting for 28% of China's sunflower production in 2021. Figure 2 shows the phenological periods of sunflower, maize, zucchini, and spring wheat in the HID. Sunflower and maize have similar phenological periods: both are sown in May and harvested in September–October. Zucchini is planted in May and harvested in August–September, and spring wheat is sown in April and harvested in July–August. The NDVI curve of spring wheat differs markedly from those of maize, sunflower, and zucchini in the HID, whereas the curves of maize, sunflower, and zucchini are similar, all peaking in mid-July (Figure 3).

Figure 2.

Phenological information on sunflower, spring wheat, maize, and zucchini in the HID.

Figure 3.

NDVI curve characteristics of maize, sunflower, wheat, and zucchini for the HID, 2020.

3. Materials and Methods

3.1. Sentinel-2 Imagery and Processing

Crop type classification in the HID used 319 Sentinel-2 Multispectral Instrument (MSI) Level-1C scenes acquired between 1 April and 1 November 2020. Although Level-1C bands represent TOA reflectance without atmospheric correction, previous studies have shown that atmospheric correction is not mandatory for crop classification when the training data and the image being classified are on the same scale [50]. Sentinel-2 TOA products are widely used to identify winter wheat [18], maize [50], and rice [31]. The number of Sentinel-2 Level-1C observations per pixel in the HID ranged from 21 to 219 between April and October 2020, with an average of 61.18, and 75% of the HID was observed 40 to 60 times during the monitoring period, indicating that the data provide adequate information for metric composites and crop identification.

Pixels contaminated by clouds were removed based on the QA60 band values. The Sentinel-2 Level-1C dataset contains 13 bands of top-of-atmosphere (TOA) reflectance, of which this study selected blue, green, red, red edge 1 (RDED1), red edge 2 (RDED2), red edge 3 (RDED3), near-infrared (NIR), shortwave infrared 1 (SWIR1), and shortwave infrared 2 (SWIR2) for crop type classification. In addition, nine vegetation indices derived from the Sentinel-2 Level-1C data were used for crop type identification: the NDVI [51], the enhanced vegetation index (EVI) [52], the land surface water index (LSWI) [53], the normalized difference water index (NDWI) [54], the green chlorophyll vegetation index (GCVI) [55], and four red-edge-related indices, RDNDVI1, RDNDVI2, RDGCVI1, and RDGCVI2 [50]. Table 1 lists all Sentinel-2 bands and vegetation indices used for crop classification.
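The exact QA60 flags are not stated here. A common choice, and the one assumed in this sketch, is to discard a pixel when bit 10 (opaque clouds) or bit 11 (cirrus) of QA60 is set. The helper shows the bit test for single pixel values and marks cloudy observations as `None` so that later composites can skip them; the function names are illustrative.

```python
# Sketch of QA60-based cloud masking for Sentinel-2 Level-1C pixel values.
# Assumption: bit 10 (opaque clouds) and bit 11 (cirrus) are the flags used.
OPAQUE_CLOUD_BIT = 1 << 10
CIRRUS_BIT = 1 << 11


def is_clear(qa60: int) -> bool:
    """Return True if neither the opaque-cloud nor the cirrus bit is set."""
    return (qa60 & (OPAQUE_CLOUD_BIT | CIRRUS_BIT)) == 0


def mask_series(values, qa_values):
    """Replace cloudy observations with None so that composites ignore them."""
    return [v if is_clear(q) else None for v, q in zip(values, qa_values)]


if __name__ == "__main__":
    print(is_clear(0), is_clear(1024), is_clear(2048))  # True False False
    print(mask_series([0.31, 0.12, 0.35], [0, 1024, 0]))  # [0.31, None, 0.35]
```

In the GEE Python API the same test is usually written per image with `bitwiseAnd` on the QA60 band rather than per pixel value.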

Table 1.

Reflectance bands and vegetation indices of Sentinel-2 and polarization bands of Sentinel-1 used for crop type classification.

The NDVI [51] is commonly used to characterize the photosynthetic capacity of plants. The EVI [52] is less sensitive to atmospheric conditions and soil background, which helps differentiate soil from sparse vegetation. Because it is sensitive to leaf and soil moisture, the LSWI is widely used to identify paddy [17,56,57] and maize [58]. The NDWI effectively delineates open water while suppressing the signal of soil and terrestrial vegetation. The GCVI describes the photosynthetic activity of vegetation [55]; because it uses green reflectance, it is more sensitive to canopy nitrogen than indices based only on red and near-infrared bands, and it performs well in detecting paddy [31]. The RDNDVI is widely used to estimate canopy chlorophyll and nitrogen contents [59] and for crop classification [15].
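Because Table 1 is not reproduced in this text, the formulas below are a hedged sketch using standard definitions rather than the study's exact table. Assumptions: the NDWI is taken in its green/NIR (McFeeters) form, and the red-edge indices pair NIR (B8) with red edge 1 (B5) or red edge 2 (B6). Inputs are reflectances on a 0–1 scale (GEE stores Level-1C reflectance multiplied by 10000, so values would need dividing first).

```python
# Vegetation indices from Sentinel-2 reflectance on a 0-1 scale.
# Assumed forms: NDWI = (green - NIR)/(green + NIR); red-edge indices use B8 with B5/B6.


def nd(a: float, b: float) -> float:
    """Normalized difference (a - b) / (a + b)."""
    return (a - b) / (a + b)


def vegetation_indices(r: dict) -> dict:
    """Compute the nine indices from reflectances keyed by Sentinel-2 band name."""
    blue, green, red = r["B2"], r["B3"], r["B4"]
    re1, re2, nir, swir1 = r["B5"], r["B6"], r["B8"], r["B11"]
    return {
        "NDVI": nd(nir, red),
        "EVI": 2.5 * (nir - red) / (nir + 6 * red - 7.5 * blue + 1),
        "LSWI": nd(nir, swir1),
        "NDWI": nd(green, nir),      # assumed McFeeters form
        "GCVI": nir / green - 1,
        "RDNDVI1": nd(nir, re1),     # assumed: NIR vs red edge 1
        "RDNDVI2": nd(nir, re2),     # assumed: NIR vs red edge 2
        "RDGCVI1": nir / re1 - 1,    # assumed
        "RDGCVI2": nir / re2 - 1,    # assumed
    }


if __name__ == "__main__":
    # Illustrative reflectances for a vegetated pixel (not data from the study).
    pixel = {"B2": 0.05, "B3": 0.08, "B4": 0.06, "B5": 0.12, "B6": 0.25,
             "B7": 0.30, "B8": 0.35, "B11": 0.20, "B12": 0.12}
    for name, value in vegetation_indices(pixel).items():
        print(f"{name}: {value:.3f}")
```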

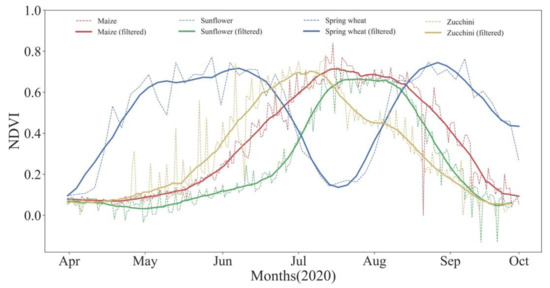

3.2. Sentinel-1 SAR Data and Processing

In total, this study used 206 Sentinel-1 dual-polarization C-band SAR scenes archived in GEE as the Sentinel-1 SAR Ground Range Detected (GRD) dataset, with VV (single co-polarization, vertical transmit/vertical receive) and VH (dual-band cross-polarization, vertical transmit/horizontal receive) bands. All Sentinel-1 SAR GRD data had been preprocessed with thermal noise removal, radiometric calibration, and terrain correction. Zhang et al. [31] indicated that overlapping areas imaged at different incidence angles can introduce noise into time series; therefore, in overlapping regions, the observations acquired at higher incidence angles were removed (Figure 4). To reduce speckle noise, the SAR images were filtered with the refined Lee filter (RLF) method [60].
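The refined Lee filter [60] adapts its window to local edge directions and is too involved to show briefly. As an illustration of the underlying idea only, the sketch below implements the basic (non-refined) Lee filter on a small image stored as nested lists. It works in linear power units because speckle is multiplicative, whereas GEE stores Sentinel-1 GRD backscatter in dB. The equivalent number of looks `enl=4.4` is an assumed value, not one given in this study.

```python
import math


def db_to_linear(db: float) -> float:
    return 10 ** (db / 10)


def linear_to_db(x: float) -> float:
    return 10 * math.log10(x)


def lee_filter(img, size=3, enl=4.4):
    """Basic Lee filter: out = m + W * (z - m), W = max(0, 1 - Cu^2 / Ci^2).

    img holds linear backscatter; Cu^2 = 1/enl is the speckle variation and
    Ci^2 = var/mean^2 is the local variation in a size x size window.
    """
    cu2 = 1.0 / enl
    half = size // 2
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            win = [img[i][j]
                   for i in range(max(0, r - half), min(rows, r + half + 1))
                   for j in range(max(0, c - half), min(cols, c + half + 1))]
            mean = sum(win) / len(win)
            var = sum((v - mean) ** 2 for v in win) / len(win)
            ci2 = var / mean ** 2 if mean > 0 else 0.0
            # Homogeneous area (ci2 <= cu2): W = 0, output is the local mean.
            # Strong edges or targets (ci2 >> cu2): W -> 1, original value kept.
            w = 0.0 if ci2 <= cu2 else 1.0 - cu2 / ci2
            out[r][c] = mean + w * (img[r][c] - mean)
    return out


if __name__ == "__main__":
    img = [[db_to_linear(-15.0)] * 5 for _ in range(5)]
    img[2][2] = db_to_linear(5.0)  # a bright point target
    filtered = lee_filter(img)
    print(round(linear_to_db(filtered[2][2]), 2), round(linear_to_db(filtered[0][0]), 2))
```

In homogeneous areas the local variation stays near the speckle level, so the filter smooths toward the window mean, while a bright isolated target keeps most of its value.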

Figure 4.

(a) Number of Sentinel-1 observations from April to October 2020; (b) VH time series before removing the higher-incidence-angle observations from the overlapping areas of Sentinel-1 images; (c) VH time series after removing these observations.

3.3. Topographic Data and Reference Crop Sample Data Collection

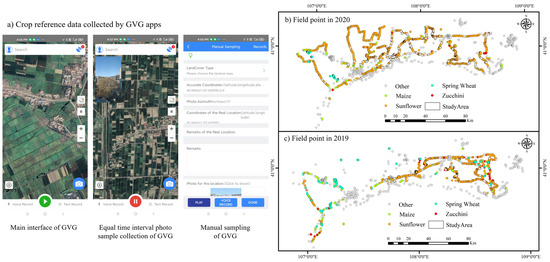

Elevation, slope, and aspect can affect crop planting [31]. Therefore, elevation and the elevation-derived variables slope and aspect were extracted from the Shuttle Radar Topography Mission (SRTM) digital elevation dataset with a 90 m spatial resolution and used as metrics for the crop classifier. In 2019, a total of 3741 samples were collected with the GVG app: 1122 sunflower, 685 maize, 246 spring wheat, 380 zucchini, and 1308 other samples. In 2020, 5225 valid reference samples were collected with the GVG app: 2316 sunflower, 1234 maize, 163 spring wheat, 288 zucchini, and 1224 other samples. The GVG tools and the samples collected in 2019 and 2020 are shown in Figure 5. Each geotagged sample records latitude, longitude, crop type name, and collection time. The GVG app is a ‘GPS-Video-GIS’ integrated reference data collection system developed by the Chinese Academy of Sciences to efficiently collect geotagged photos [61]. The latest GVG app can be downloaded from https://gvgserver.cropwatch.com.cn/download (accessed date: 20 February 2022). The GVG app provides three reference data collection methods: mobile data collection along roads, location-fixed photo collection, and mobile drag-and-drop collection.
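The algorithm used to derive slope and aspect from SRTM is not stated. As an assumption-labelled sketch, the function below uses central differences over the four direct neighbours of an interior cell in a north-up DEM with 90 m cells. It reports slope in degrees and aspect as the downslope direction in degrees clockwise from north.

```python
import math


def slope_aspect(dem, r, c, cell=90.0):
    """Slope and aspect (degrees) at interior cell (r, c) of a north-up DEM.

    dem is a 2D list of elevations in metres; rows increase southward and
    columns eastward. Aspect is the downslope direction, clockwise from north,
    and is None for a perfectly flat cell.
    """
    dz_dx = (dem[r][c + 1] - dem[r][c - 1]) / (2 * cell)   # rise toward east
    dz_dy = (dem[r - 1][c] - dem[r + 1][c]) / (2 * cell)   # rise toward north
    slope = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
    if dz_dx == 0 and dz_dy == 0:
        return slope, None
    # The downslope vector is the negative gradient: (east, north) = (-dz_dx, -dz_dy).
    aspect = math.degrees(math.atan2(-dz_dx, -dz_dy)) % 360
    return slope, aspect


if __name__ == "__main__":
    rising_east = [[10 * c for c in range(3)] for _ in range(3)]
    print(slope_aspect(rising_east, 1, 1))  # faces west: aspect 270
```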

Figure 5.

Distribution of the 2019 and 2020 reference samples collected with the GVG application. (a) GVG app and its data collection process; (b) reference data collected in 2020; (c) reference data collected in 2019.

3.4. Methodology

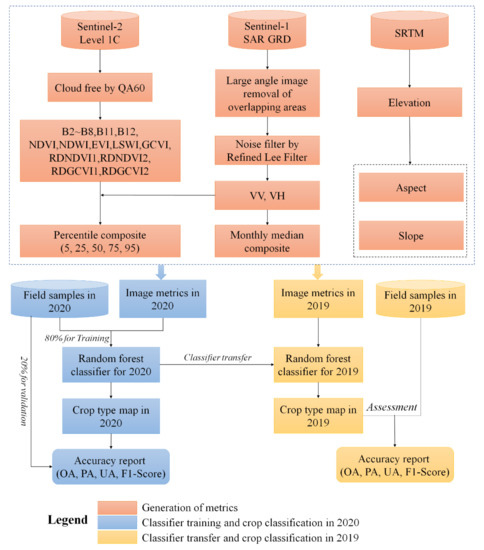

Figure 6 shows the interannual transfer learning framework for crop type classification in the HID. The framework uses all available Sentinel-2 Level-1C and Sentinel-1 SAR GRD images and has three components: metric generation (1st), classifier training and crop classification for 2020 (2nd), and classifier transfer and crop classification for 2019 (3rd). In the first component, clouds were first masked from the Sentinel-2 data, and reflectance and vegetation index metrics were then generated with the percentile composite. Sentinel-1 data were processed by removing higher-incidence-angle images in overlapping regions and applying the refined Lee filter (RLF); VV and VH metrics were then generated with percentile and monthly median composites. The monthly median was used because it is less sensitive to outliers than the maximum, minimum, or mean composite [62]. Elevation, slope, and aspect metrics derived from SRTM data were added to the metric set. In the second component, all metrics generated by the first component were used as input features of the RF classifier to build a crop type classification model for 2020, which was then used to map crop types in 2020. Performance was assessed with OA, PA, UA, and F1-scores; many previous studies have demonstrated the robustness of RF classifiers for crop type classification. In the third component, the classifier trained for 2020 was transferred to 2019 and used to map 2019 crop types from the 2019 metrics generated by the first component. Finally, performance was evaluated with the 2019 crop reference samples.

Figure 6.

Flowchart for crop type classification in 2020 and classifier transfer in 2019.

3.4.1. Metric Composites

Missing values in optical remote sensing time series caused by rainy and cloudy weather increase the difficulty of crop classification [33]. This study used the percentile method to generate classification metrics that reduce the effect of missing data. The percentile composite builds, for each pixel, a histogram of all collected observations of an input feature and takes the values at specified percentiles as metrics [63]. The percentile composite is less sensitive to the length and incompleteness of the dataset [19] and is considered an effective way to overcome the effects of missing data on classifier training. In addition, the phenology of the same crop varies between years, which makes classifier transfer less stable; because the percentile composite is relatively insensitive to phenological variation, this is another reason it was chosen in this study. The percentile composite is currently widely used to classify forest vegetation [64], rice [31], maize [19], crops [15], and land cover [65]. For the Sentinel-2 data, 90 metrics were generated from 9 reflectance bands (B2, B3, B4, B5, B6, B7, B8, B11, and B12) and 9 vegetation indices (NDVI, NDWI, LSWI, GCVI, RDNDVI1, RDNDVI2, RDGCVI1, RDGCVI2, and EVI) with the percentile composite at the 5th, 25th, 50th, 75th, and 95th percentiles. For the Sentinel-1 SAR data, 10 SAR metrics (VHP5, VHP25, VHP50, VHP75, VHP95, VVP5, VVP25, VVP50, VVP75, and VVP95) were obtained with the percentile composite at the same percentiles, and 14 monthly SAR metrics (VVMON4, VVMON5, VVMON6, VVMON7, VVMON8, VVMON9, VVMON10, VHMON4, VHMON5, VHMON6, VHMON7, VHMON8, VHMON9, and VHMON10) were generated with the monthly median composite. Table 2 lists all 114 Sentinel-1/2 metrics. In addition, elevation, slope, and aspect were used as metrics in crop classification modeling.
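The two composites can be sketched for a single pixel as follows. Cloud-masked observations are represented as `None` and skipped. The percentile uses linear interpolation between ranked values, which is an assumption (GEE's `ee.Reducer.percentile` may use a different rule). Metric names such as `NDVIP5` extend the paper's `VHP5` and `VVMON4` naming to Sentinel-2 features and are hypothetical. `metric_names` reproduces the count of 18 × 5 + 2 × 5 + 2 × 7 = 114.

```python
import statistics
from datetime import date

PERCENTILES = (5, 25, 50, 75, 95)
S2_FEATURES = ["B2", "B3", "B4", "B5", "B6", "B7", "B8", "B11", "B12",
               "NDVI", "NDWI", "LSWI", "GCVI", "RDNDVI1", "RDNDVI2",
               "RDGCVI1", "RDGCVI2", "EVI"]
SAR_FEATURES = ["VH", "VV"]
MONTHS = range(4, 11)  # April (4) to October (10)


def percentile(values, p):
    """Linear-interpolation percentile over valid (non-None) observations."""
    vals = sorted(v for v in values if v is not None)
    if not vals:
        return None
    k = (len(vals) - 1) * p / 100
    lo = int(k)
    hi = min(lo + 1, len(vals) - 1)
    return vals[lo] + (vals[hi] - vals[lo]) * (k - lo)


def percentile_metrics(values, name):
    """One metric per percentile, e.g. VHP5 ... VHP95."""
    return {f"{name}P{p}": percentile(values, p) for p in PERCENTILES}


def monthly_medians(dates, values, name):
    """Median of valid observations in each month, e.g. VHMON4 ... VHMON10."""
    out = {}
    for m in MONTHS:
        vals = [v for d, v in zip(dates, values) if d.month == m and v is not None]
        out[f"{name}MON{m}"] = statistics.median(vals) if vals else None
    return out


def metric_names():
    names = [f"{f}P{p}" for f in S2_FEATURES + SAR_FEATURES for p in PERCENTILES]
    names += [f"{f}MON{m}" for f in ("VV", "VH") for m in MONTHS]
    return names


if __name__ == "__main__":
    ndvi = [0.12, None, 0.35, 0.61, None, 0.78, 0.70, 0.41]  # illustrative values
    print(percentile_metrics(ndvi, "NDVI"))
    dates = [date(2020, 4, 5), date(2020, 4, 17), date(2020, 5, 11)]
    print(monthly_medians(dates, [-18.2, -17.6, -15.9], "VH"))
    print(len(metric_names()))  # 114
```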

Table 2.

Metrics of Sentinel-1/2 generated by percentile and monthly median composites.

3.4.2. Training and Validation Dataset Preparation

Class imbalance affects classifier performance and crop type classification accuracy [22]. When samples are drawn at random in a region dominated by a few major classes, rare classes tend to be underrepresented and poorly predicted [63]. To avoid oversampling dominant crops and undersampling rare crops, this study prepared training and validation samples in two steps. First, all crop reference samples were grouped into sunflower, maize, spring wheat, zucchini, and other crops according to their labels; then, each group was split into training and validation samples at a ratio of 4:1. With this strategy, 4179 samples were used for classifier training and 1046 for result validation in 2020. The training set contained 987 maize, 1853 sunflower, 130 spring wheat, 230 zucchini, and 979 other samples, and the validation set contained 247 maize, 463 sunflower, 33 spring wheat, 58 zucchini, and 245 other samples.
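A minimal sketch of the per-class 4:1 split. Rounding each class's training share to the nearest integer reproduces the 2020 counts reported above (for example, 0.8 × 1234 = 987.2, giving 987 maize training samples), but the rounding rule, the shuffling, and the seed are assumptions, since they are not stated.

```python
import random
from collections import defaultdict


def stratified_split(samples, train_frac=0.8, seed=0):
    """Split (sample_id, label) pairs class by class into training and validation sets."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for sample in samples:
        by_class[sample[1]].append(sample)
    train, val = [], []
    for label in sorted(by_class):
        group = by_class[label][:]
        rng.shuffle(group)
        n_train = round(len(group) * train_frac)  # assumed rounding rule
        train.extend(group[:n_train])
        val.extend(group[n_train:])
    return train, val


if __name__ == "__main__":
    counts_2020 = {"sunflower": 2316, "maize": 1234, "spring wheat": 163,
                   "zucchini": 288, "other": 1224}
    samples = [(f"{crop}-{k}", crop) for crop, n in counts_2020.items() for k in range(n)]
    train, val = stratified_split(samples)
    print(len(train), len(val))  # 4179 1046
```

Splitting within each class keeps the class proportions of the training and validation sets equal to those of the full reference set, so rare crops such as spring wheat always appear in both.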

3.4.3. Classifier: Random Forest

The random forest (RF) classifier [21] is an ensemble of tree predictors: each decision tree is grown on a bootstrap sample of the training data (bagging), and only a random subset of the input features is considered at each split, which makes the trees less correlated. RF classifiers can effectively handle many input metrics and provide faster and more reliable classification results than traditional classifiers without a significant increase in computational effort [21,66]. In addition, the RF classifier is less sensitive to the number, quality, and imbalance of samples [22]. Therefore, the RF classifier is widely used in crop identification [29,67] and land cover classification [63,66]. The scikit-learn package in Python was used to determine the optimal parameters of the RF classifier. Because each tree is fitted on a random sample of the observations, usually a bootstrap sample or a subsample of the original data, the out-of-bag (OOB) error [68] can be computed by predicting each observation with only the trees whose samples did not include it and averaging the resulting errors. This allows the RF classifier to be fitted and validated during training, and the OOB error is often used to assess the predictive performance of an RF. In GEE, the RF classifier is named “smileRandomForest”, and its “explain” function reports the weight of each metric for crop type classification.
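In scikit-learn, `RandomForestClassifier(n_estimators=..., max_features=..., oob_score=True)` exposes the OOB accuracy as `oob_score_`, so the OOB error is `1 - oob_score_`. To show what that number measures without external dependencies, the toy sketch below bags depth-one trees (decision stumps) instead of full trees. With a single split, drawing a random feature subset per tree is the same as drawing it per split. It is an illustration of OOB estimation, not the classifier used in the study.

```python
import math
import random
from collections import Counter


def fit_stump(X, y, rows, features):
    """Fit a one-split tree on `rows` (may contain duplicates) using `features`."""
    best = None
    for f in features:
        values = sorted({X[i][f] for i in rows})
        for a, b in zip(values, values[1:]):
            t = (a + b) / 2
            left = Counter(y[i] for i in rows if X[i][f] <= t)
            right = Counter(y[i] for i in rows if X[i][f] > t)
            l_lab, l_n = left.most_common(1)[0]
            r_lab, r_n = right.most_common(1)[0]
            err = len(rows) - l_n - r_n  # training errors of this split
            if best is None or err < best[0]:
                best = (err, f, t, l_lab, r_lab)
    if best is None:  # no usable split: predict the majority class
        label = Counter(y[i] for i in rows).most_common(1)[0][0]
        return lambda x: label
    _, f, t, l_lab, r_lab = best
    return lambda x: l_lab if x[f] <= t else r_lab


def oob_error(X, y, n_trees=100, seed=0):
    """Bag stumps and score each sample only with trees that did not see it."""
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    m = max(1, int(math.sqrt(d)))  # features per split, as max_features="sqrt"
    votes = [Counter() for _ in range(n)]
    for _ in range(n_trees):
        boot = [rng.randrange(n) for _ in range(n)]  # bootstrap sample
        stump = fit_stump(X, y, boot, rng.sample(range(d), m))
        for i in set(range(n)) - set(boot):  # out-of-bag rows for this tree
            votes[i][stump(X[i])] += 1
    scored = [i for i in range(n) if votes[i]]
    wrong = sum(votes[i].most_common(1)[0][0] != y[i] for i in scored)
    return wrong / len(scored)


if __name__ == "__main__":
    X = [[i, 2 * i] for i in range(100)]
    y = [int(i >= 50) for i in range(100)]
    print(oob_error(X, y))
```

Repeating `oob_error` with increasing `n_trees` and plotting the result is the same kind of curve used to choose the number of trees.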

3.4.4. Model Transfer Scenario and Performance Assessment

Transferring the trained crop classification model involves two steps: first, building the crop type classification model for 2020 (CTC2020), and second, using this model to identify crop types in 2019. In building CTC2020, the following steps are used to determine the best combination of metrics for classification.

First, the Sentinel-1/2 remote sensing images in 2020 are grouped into five time periods: April–May, April–June, April–July, April–August, and April–September.

Second, the classification metrics for these five time periods in 2020 are generated using the percentile and monthly median composites introduced in Section 3.4.1.

Third, the random forest parameters are optimized by the method presented in Section 3.4.3, and the classifier is trained with the training samples and their associated metrics.

Fourth, the spatial distribution of crop types in 2020 is generated, and the classification accuracy is evaluated using the validation samples. The time period from the first step with the highest overall accuracy (OA) is considered the best period for crop classification.

After the optimal classifier is obtained, it is transferred to map the spatial distribution of 2019 crop types. The 2019 Sentinel-1/2 metrics for the best period are input into CTC2020 to generate the 2019 crop types in the HID, and the 2019 reference samples are used to evaluate the performance of the classifier transfer.
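The window search and the transfer step can be sketched as below. `evaluate` is a hypothetical hook standing in for one train-and-validate run; what varies between the ten repetitions is not specified here, and the window labels are illustrative. The key constraint for transfer is that the 2019 metrics are computed over the same window and passed to CTC2020 in the same feature order used in training, which `feature_vector` checks.

```python
from datetime import date
from statistics import mean


def cumulative_windows(year, end_months=(5, 6, 7, 8, 9)):
    """Windows starting 1 April and ending (exclusive) on the 1st of the next month."""
    return {
        f"Apr-{date(year, m, 1):%b}": (date(year, 4, 1), date(year, m + 1, 1))
        for m in end_months
    }


def select_best_window(windows, evaluate, n_runs=10):
    """Return the window with the highest mean OA; evaluate(start, end, run) -> OA."""
    scores = {
        label: mean(evaluate(start, end, run) for run in range(n_runs))
        for label, (start, end) in windows.items()
    }
    return max(scores, key=scores.get), scores


def feature_vector(metrics, training_names):
    """Order one year's metrics exactly as in training; fail if any are missing."""
    missing = [n for n in training_names if n not in metrics]
    if missing:
        raise KeyError(f"metrics missing for transfer: {missing}")
    return [metrics[n] for n in training_names]


if __name__ == "__main__":
    windows = cumulative_windows(2020)
    print(list(windows))  # ['Apr-May', 'Apr-Jun', 'Apr-Jul', 'Apr-Aug', 'Apr-Sep']
```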

3.4.5. Accuracy Assessment Indicators

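In this study, the accuracy of the crop classification results and the feasibility of the transferred model were assessed for each crop using a confusion matrix. The user’s accuracy (UA), producer’s accuracy (PA), OA, and F1-score [69], which is the harmonic mean of UA and PA [70], were adopted to assess classification performance. The four indices are calculated as follows:

$$\mathrm{OA} = \frac{\sum_{i=1}^{n} x_{ii}}{\sum_{i=1}^{n} \sum_{j=1}^{n} x_{ij}}$$

$$\mathrm{PA}_i = \frac{x_{ii}}{\sum_{j=1}^{n} x_{ij}}$$

$$\mathrm{UA}_i = \frac{x_{ii}}{\sum_{j=1}^{n} x_{ji}}$$

$$F1_i = \frac{2 \times \mathrm{UA}_i \times \mathrm{PA}_i}{\mathrm{UA}_i + \mathrm{PA}_i}$$

where $x_{ij}$ is the observation in row $i$ and column $j$ of the confusion matrix, $C_i$ is the $i$th classification category, and $n$ is the number of categories. Specifically, $x_{ij}$ ($i \neq j$) denotes the number of samples that belong to category $C_i$ in the reference data but were misclassified into category $C_j$.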
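A minimal sketch of the four indices, assuming (as in the definitions above) that rows of the confusion matrix hold reference classes and columns hold predicted classes. The 2 × 2 matrix in the usage lines is illustrative only.

```python
def accuracy_report(cm):
    """OA plus per-class PA, UA, and F1 from a square confusion matrix.

    cm[i][j] is the number of reference samples of class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    oa = sum(cm[i][i] for i in range(n)) / total
    per_class = []
    for i in range(n):
        row_sum = sum(cm[i])                       # reference total of class i
        col_sum = sum(cm[k][i] for k in range(n))  # predicted total of class i
        pa = cm[i][i] / row_sum if row_sum else 0.0
        ua = cm[i][i] / col_sum if col_sum else 0.0
        f1 = 2 * ua * pa / (ua + pa) if ua + pa else 0.0
        per_class.append({"PA": pa, "UA": ua, "F1": f1})
    return oa, per_class


if __name__ == "__main__":
    oa, classes = accuracy_report([[40, 10], [5, 45]])
    print(oa)  # 0.85
    print(classes[0])
```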

4. Results

4.1. Metric Characteristic Changes

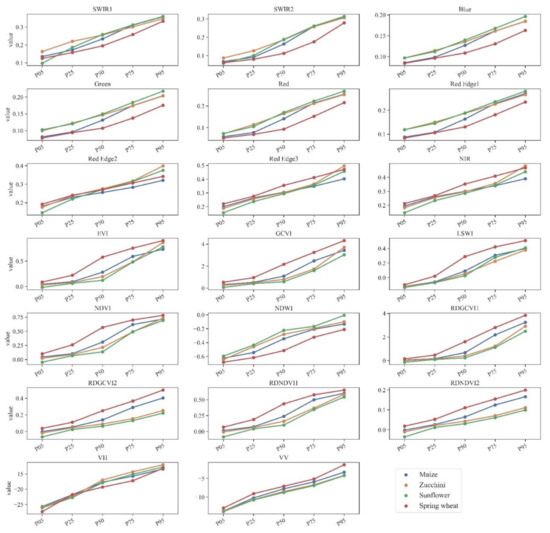

The percentiles of the nine Sentinel-2 reflectance bands, nine vegetation indices, and two SAR bands (VV and VH) were calculated for sunflower, maize, zucchini, and spring wheat in 2020 from all crop reference samples (Figure 7). The percentile curves of spring wheat differ from those of the other crops in SWIR2, blue, green, red, red edge 1, EVI, GCVI, LSWI, RDGCVI2, and RDNDVI1. The blue, green, red, and red edge 1 bands are suitable for separating maize from the other crops. The percentile curves of sunflower and zucchini are very similar, but SWIR1 can separate them.

Figure 7.

Percentiles of 20 features of sunflower, maize, zucchini, and spring wheat in 2020.

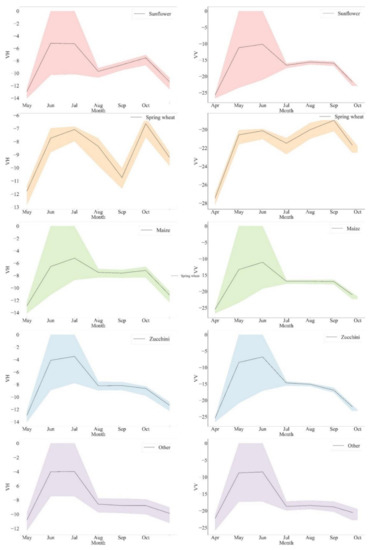

SAR backscatter is sensitive to crop canopy structure and underlying soil conditions [71] and is widely used in crop classification [72]. Figure 8 shows the monthly VV and VH backscatter of sunflower, maize, spring wheat, and zucchini in the HID. These changes are closely related to the crop growth stages. Because of crop rotation, spring wheat shows two backscatter peaks, which the Sentinel-1 VH band captures effectively. April–June is the rapid growth period of sunflower, maize, and zucchini, when crop height, canopy closure, geometry, and structure change significantly and the backscatter signal strengthens considerably. After June, the canopies approach closure, canopy structure changes little, and the backscatter signal decreases significantly.

Figure 8.

Changes in monthly VV and VH values for maize, zucchini, sunflower, and spring wheat in 2020.

4.2. Optimization of Tree Number and Classification Period

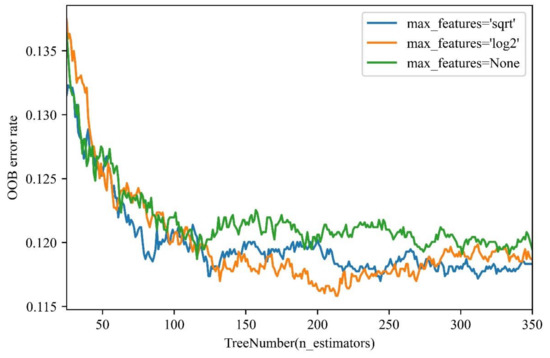

Figure 9 shows how the OOB error varies with the number of trees when the “max_features” parameter is set to “sqrt”, “log2”, and “None”. A smaller OOB error indicates better classification ability of the random forest. As the number of trees increases, the OOB error first decreases rapidly; beyond 125 trees, the decrease slows, and beyond 300 trees, the OOB error stabilizes at its minimum. Based on this behavior, the number of trees in the random forest classifier was set to 300 in this study.

Figure 9.

Trend of the OOB error with the increasing number of random forest trees.

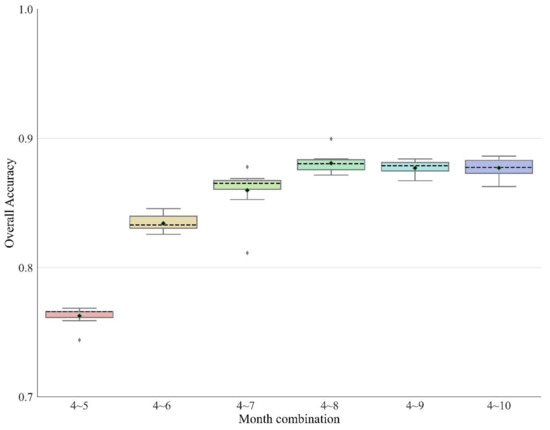

This study analyzed the effect of Sentinel-1/2 data from different periods on crop type classification: April to June, April to July, April to August, April to September, and April to October. Classification for each period was repeated ten times, and the best period was selected using the average overall accuracy. The average overall accuracies of crop classification were 83.4%, 86.0%, 88.1%, and 87.7% for April–June, April–July, April–August, and April–September, respectively (Figure 10). Both April–June and April–July achieved OAs above 80%, indicating that the proposed framework can extract crop types in the HID at early crop growth stages. The April–August time series achieved the highest OA, indicating that this is the best period for classifying crop types in the HID.

Figure 10.

OA changes in crop type classification in the HID over five time periods in 2020. (Each bar represents its range of variation).

4.3. Crop Type Classification in 2020

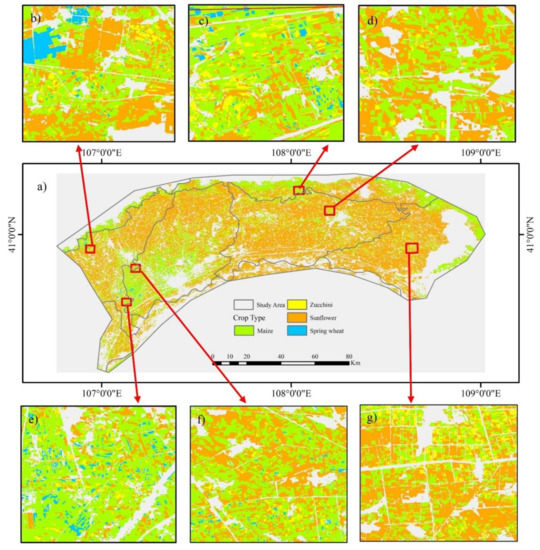

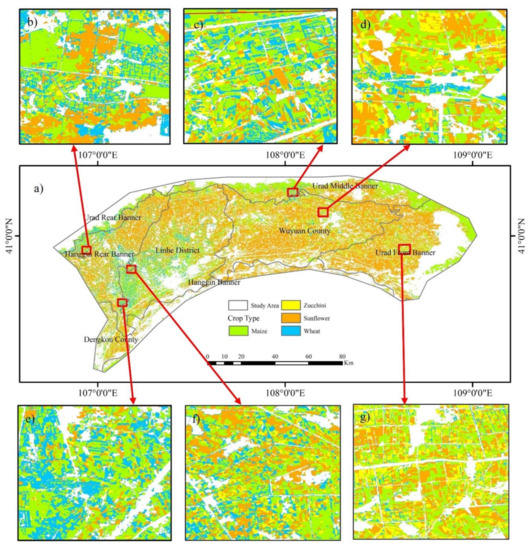

Using the random forest with 300 trees and time series data from April to August 2020, this study generated the 2020 crop type map of the HID (Figure 11), with an OA of 0.89. According to the confusion matrix (Table 3), the F1-scores for maize, zucchini, sunflower, and spring wheat were 0.85, 0.73, 0.91, and 0.99, respectively. Previously reported OAs of crop type classification in the HID range from 70% to 90.52% [41,46,73]; the OA of 0.89 achieved for 2020 in this study is therefore acceptable, and the proposed framework can be used for crop type classification in the HID. Sunflower is mainly distributed in Wuyuan County, Urad Front Banner, and the northern Linhe District, and maize is mainly distributed in the southwestern Linhe District. The proportions of spring wheat, sunflower, zucchini, and maize were 0.5%, 68.8%, 3.0%, and 27.7%, respectively, in 2020. Sunflower accounted for the largest proportion of crops in the HID, reflecting farmers' pursuit of higher economic returns.

Figure 11.

Distribution of crop types in the Hetao Irrigation District in 2020. (a) Crop type distribution in the HID in 2020. (b–g) Crop type distributions in typical areas.

Table 3.

Confusion matrix for crop type classification in 2020.

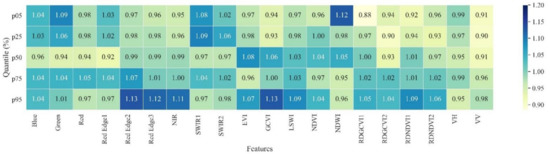

This study also quantified the contribution of each metric to the crop type classification model (Figure 12). The importance value of a metric indicates the increase in out-of-bag (OOB) error when that metric is excluded from the feature set; a higher value means that the metric is more important for classification. To reduce the effect of random error, ten-fold cross-validation was conducted, and the resulting importance values were averaged to obtain the final feature importance. In 2020, the most important metrics were the bands B11 (SWIR1, 5.14%), B3 (green, 5.14%), B6 (red edge 2, 5.14%), and B2 (blue, 5.11%), followed by LSWI (5.10%), NDWI (5.07%), EVI (5.05%), GCVI (5.05%), and SWIR2 (5.05%). Among the percentile metrics, the 95th percentiles of B6 (red edge 2, 1.13%), B7 (red edge 3, 1.12%), B8 (NIR, 1.11%), and GCVI (1.13%) and the 5th percentile of NDWI (1.10%) were the most important.
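
The index and percentile metrics named above can be illustrated with a small NumPy sketch. It uses the commonly published forms of the indices (NDWI after McFeeters, LSWI, EVI, and GCVI) on reflectance scaled to 0–1 and assumes the Sentinel-2 bands B2, B3, B4, B8, and B11; the paper's exact definitions are given in its methods, and the function names and input values here are hypothetical. Masked (e.g., cloudy) observations are represented as NaN and skipped when computing per-pixel percentiles over time.

```python
import numpy as np


def spectral_indices(blue, green, red, nir, swir1):
    """Common published forms of the indices, for reflectance scaled to 0-1.

    Inputs are arrays shaped (dates, pixels); Sentinel-2 bands are assumed to be
    B2 (blue), B3 (green), B4 (red), B8 (NIR), and B11 (SWIR1).
    """
    return {
        "NDWI": (green - nir) / (green + nir),  # McFeeters (1996) form
        "LSWI": (nir - swir1) / (nir + swir1),
        "EVI": 2.5 * (nir - red) / (nir + 6 * red - 7.5 * blue + 1),
        "GCVI": nir / green - 1,
    }


def percentile_metrics(series, percentiles=(5, 95)):
    """Per-pixel percentiles over the time axis; NaN marks masked (e.g., cloudy) dates."""
    return {p: np.nanpercentile(series, p, axis=0) for p in percentiles}


# Hypothetical reflectance for three dates and two pixels; date 3 of pixel 2 is masked.
blue = np.array([[0.05, 0.06], [0.04, 0.05], [0.03, np.nan]])
green = np.array([[0.10, 0.08], [0.08, 0.07], [0.06, np.nan]])
red = np.array([[0.08, 0.07], [0.05, 0.04], [0.03, np.nan]])
nir = np.array([[0.30, 0.25], [0.35, 0.30], [0.40, np.nan]])
swir1 = np.array([[0.20, 0.22], [0.18, 0.20], [0.15, np.nan]])

indices = spectral_indices(blue, green, red, nir, swir1)
ndwi_p = percentile_metrics(indices["NDWI"])
print(ndwi_p[5], ndwi_p[95])
```

Percentile metrics summarize each pixel's time series without requiring a fixed acquisition date, which is one reason they are convenient when the number of cloud-free images differs between years.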

Figure 12.

Weighting of metrics generated by percentiles in 2020.

4.4. Performance Analysis of Model Transfer in 2019

The Sentinel-1/2 data from April to August 2019 were input to the model trained in 2020 (CTC2020) to map the 2019 crop types (Figure 13). The OA of crop classification by trained model transfer reached 0.92, and the F1-scores of maize, zucchini, sunflower, spring wheat, and other crops reached 0.89, 0.85, 0.93, 0.87, and 0.94, respectively (Table 4). This OA is higher than the OAs of 70% to 90.52% reported in previous HID studies [41,46,73], indicating that the transfer approach is suitable for crop type classification in the HID. In 2019, the proportions of spring wheat, sunflower, zucchini, and maize were 5.9%, 48.7%, 6.4%, and 39.0%, respectively.
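
Crop proportions such as those reported for 2019 and 2020 can be derived from a classified map by counting pixels per class. A minimal sketch, assuming the map stores integer class codes 0 to n−1 and that any other code (e.g., −1 for no data or a non-crop class) is excluded; the class codes and the tiny map are hypothetical. On a projected 10 m grid every pixel covers the same area, so pixel shares approximate area shares.

```python
import numpy as np


def class_proportions(class_map, labels):
    """Percentage of classified pixels falling in each class.

    class_map holds integer codes 0..len(labels)-1; any other code (e.g., -1
    for no data) is ignored.
    """
    values = np.asarray(class_map).ravel()
    valid = values[(values >= 0) & (values < len(labels))]
    counts = np.bincount(valid, minlength=len(labels))
    total = counts.sum()
    return {label: 100.0 * counts[i] / total for i, label in enumerate(labels)}


# Hypothetical class codes: 0 spring wheat, 1 sunflower, 2 zucchini, 3 maize.
labels = ["spring wheat", "sunflower", "zucchini", "maize"]
class_map = np.array([[1, 1, 3],
                      [1, -1, 0]])
print(class_proportions(class_map, labels))
```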

Figure 13.

Distribution of crop types in the Hetao Irrigation District in 2019 based on CTC2020. (a) Crop type distribution in the HID in 2019 obtained by classifier transfer. (b–g) Crop type distributions in typical areas.

Table 4.

Confusion matrix for crop type classification in 2019 based on CTC2020.

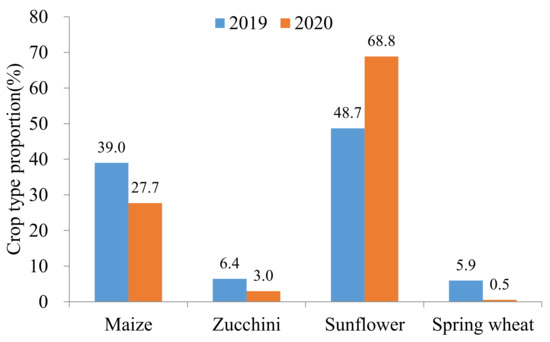

4.5. Analysis of Crop Type Proportions from 2019 to 2020

The crop type proportions in the HID changed from 2019 to 2020 (Figure 14). As the leading crop in the HID, sunflower increased its share from 48.7% in 2019 to 68.8% in 2020. In turn, the shares of the other crops declined as sunflower rapidly expanded: the shares of maize, zucchini, and spring wheat fell from 39.0%, 6.4%, and 5.9% in 2019 to 27.7%, 3.0%, and 0.5% in 2020, respectively. Two factors likely explain the expansion of sunflower in the HID. First, salinization has gradually become a serious problem in the HID because of the shallow water table and strong evapotranspiration [74], and sunflower is highly salt tolerant, which supports the expansion of its cultivation area. Second, as the main raw material of sunflower oil, sunflower can bring more profit to local farmers than other crops [46].
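
The year-to-year changes can be expressed in percentage points using the shares reported in Sections 4.3 and 4.4. This is only a worked check of that arithmetic; the dictionary and function names are illustrative.

```python
# Shares (%) reported in Section 4.4 (2019) and Section 4.3 (2020).
shares_2019 = {"spring wheat": 5.9, "sunflower": 48.7, "zucchini": 6.4, "maize": 39.0}
shares_2020 = {"spring wheat": 0.5, "sunflower": 68.8, "zucchini": 3.0, "maize": 27.7}


def share_change(before, after):
    """Change in share, in percentage points, for each crop."""
    return {crop: round(after[crop] - before[crop], 1) for crop in before}


for crop, delta in share_change(shares_2019, shares_2020).items():
    print(f"{crop}: {delta:+.1f} percentage points")
```

The sunflower gain of about 20.1 percentage points is offset almost entirely by the losses of maize, spring wheat, and zucchini, because the four shares sum to 100% in both years.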

Figure 14.

Proportions of maize, zucchini, sunflower, and spring wheat in 2019 and 2020.

5. Discussion

5.1. Performance Analysis of Trained Model Transfer for Crop Type Classification

Applied to 2019, the model trained in 2020 (CTC2020) achieved an OA of 0.92, which is 0.02 higher than the OA achieved in 2019 by a model trained with the 2019 crop reference data (Table 5). This indicates that the proposed framework can be used both for crop classification and for model transfer. One possible reason is that the 2020 reference samples were numerous enough for the classifier to learn the spectral features sufficiently. Moreover, the results suggest that crop reference samples need not be collected every year in the HID, because acceptable classification results can be obtained by interannual transfer of a trained model. This also offers an alternative way to retrace the historical distribution of crop types or to predict crop types for the following year. To verify the robustness of interannual transfer, this study also trained a model with the 2019 crop reference samples (CTC2019) and used it to predict the 2020 crop type distribution. The OA reached 0.89 (Table 5), equal to the OA obtained in 2020 with the model trained on 2020 data. A similar study in Heilongjiang Province, China, supports these findings: an OA of 0.91 was obtained after a model was transferred from 2017 to 2018 [29]. Together, these results suggest that, in the absence of crop reference samples, interannual transfer of a trained model can retrace historical crop types in the HID and predict crop type distributions for the following year.

Table 5.

Changes in the OA and F1-score of crop type classification before and after model transfer.

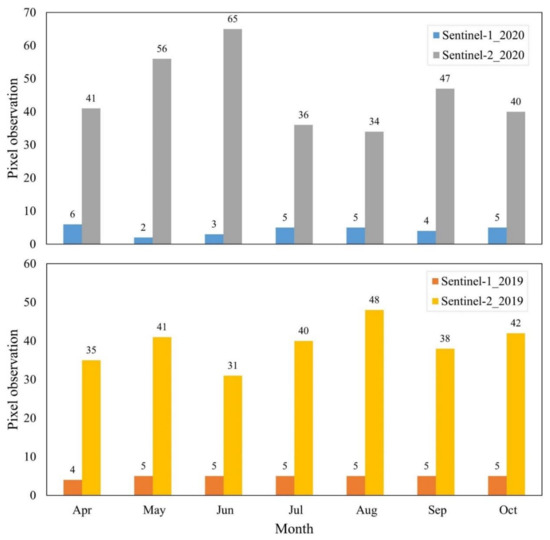

This study further analyzed the differences in the numbers of Sentinel-1/2 observations between 2019 and 2020 (Figure 15). The numbers of available images differed somewhat between the two years across crop growth stages. For Sentinel-1, the average monthly number of observations was very similar in 2019 and 2020. For Sentinel-2, 2019 had fewer observations than 2020 in the early growing stage (April to June) but more in the critical growing stage (July to August). In both years, the average monthly number of Sentinel-2 observations exceeded 30, providing sufficient images for interannual transfer of the classification model and thus helping to maintain its accuracy after transfer.
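
Per-pixel observation counts like those in Figure 15 can be obtained by counting valid observations per month and averaging over pixels. A minimal sketch, assuming a boolean validity mask (True where an observation is usable, e.g., cloud-free) shaped dates × pixels; how validity is defined, the function name, and the sample dates are all hypothetical.

```python
from collections import defaultdict
from datetime import date

import numpy as np


def monthly_mean_observations(dates, valid):
    """Average number of valid observations per pixel for each month.

    dates: acquisition dates (length T); valid: boolean array (T x pixels).
    """
    valid = np.asarray(valid, dtype=bool)
    rows_by_month = defaultdict(list)
    for t, d in enumerate(dates):
        rows_by_month[d.month].append(t)
    return {
        month: float(valid[rows].sum(axis=0).mean())  # count per pixel, then mean
        for month, rows in sorted(rows_by_month.items())
    }


# Hypothetical acquisitions for two pixels.
dates = [date(2019, 4, 3), date(2019, 4, 8), date(2019, 5, 1)]
valid = [[True, False],
         [True, True],
         [False, False]]
print(monthly_mean_observations(dates, valid))
```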

Figure 15.

Number of pixel observations in the HID in 2019 and 2020.

In the transfer experiments, the higher OA obtained with the April–August Sentinel-1/2 combination may be attributed to the arid climate of the HID, which provided sufficient Sentinel-2 images during the critical growth stage in 2020 and thus improved model training. A larger training set in 2020 may be another reason for the OA improvement after model transfer. The numbers of reference samples in 2019 and 2020 were 3741 and 5255, respectively, of which 2993 and 4180, respectively, were used for model training. The 2020 model was therefore trained on 1187 more samples than the 2019 model, which may have improved its ability to learn discriminative features and thus its accuracy after transfer.
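
The training counts (2993 of 3741 samples in 2019 and 4180 of 5255 in 2020) are both about 80% of the reference samples. The text does not state how the split was drawn; the sketch below assumes a per-class (stratified) random split with `train_fraction=0.8` and a fixed seed, all of which are hypothetical choices rather than the authors' procedure.

```python
import random
from collections import defaultdict


def stratified_split(samples, train_fraction=0.8, seed=0):
    """Split (sample_id, label) pairs into training and validation sets per class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for sample in samples:
        by_class[sample[1]].append(sample)
    train, valid = [], []
    for label in sorted(by_class):
        group = by_class[label][:]
        rng.shuffle(group)
        n_train = round(len(group) * train_fraction)
        train.extend(group[:n_train])
        valid.extend(group[n_train:])
    return train, valid


# Hypothetical samples: 10 maize and 5 sunflower points.
samples = [(i, "maize") for i in range(10)] + [(i, "sunflower") for i in range(10, 15)]
train, valid = stratified_split(samples)
print(len(train), len(valid))
```

Splitting within each class keeps rare crops such as spring wheat represented in both the training and validation sets.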

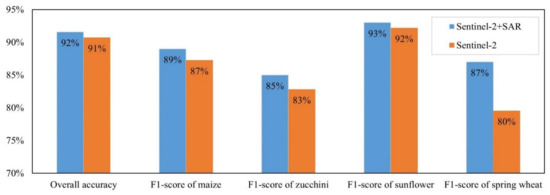

5.2. Contribution of Sentinel-1 Data to Trained Model Transfer

The pretransfer and post-transfer accuracies obtained with Sentinel-1/2 composite metrics as inputs were compared with those obtained with Sentinel-2 composite metrics alone (Figure 16). Combining Sentinel-1/2 images improved the OA of crop type classification from 90.73% (Sentinel-2 alone) to 91.58%. The F1-scores for spring wheat, sunflower, zucchini, and maize increased from 79.55%, 92.19%, 82.81%, and 87.26% with Sentinel-2 alone to 87.00%, 93.00%, 85.00%, and 89.00% with Sentinel-1/2 combined, respectively. Thus, SAR information improved the performance of trained model transfer. Several previous studies have also shown that combining Sentinel-1 and Sentinel-2 achieves higher OAs than using a single sensor in crop type classification [14,24,75]. As an active sensor, SAR can image day and night regardless of clouds and rain, and its backscatter at different polarizations is sensitive to canopy geometry (size, shape, and orientation), water content, and biomass.
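
The gains from adding Sentinel-1 can be expressed in percentage points using the values reported above. This worked check only reproduces that arithmetic; the variable names are illustrative.

```python
# Accuracies (%) reported above for Sentinel-2 alone and Sentinel-1/2 combined.
s2_only = {"OA": 90.73, "spring wheat": 79.55, "sunflower": 92.19,
           "zucchini": 82.81, "maize": 87.26}
s1_s2 = {"OA": 91.58, "spring wheat": 87.00, "sunflower": 93.00,
         "zucchini": 85.00, "maize": 89.00}

# Gain in percentage points from adding Sentinel-1.
gains = {key: round(s1_s2[key] - s2_only[key], 2) for key in s2_only}
largest = max((k for k in gains if k != "OA"), key=gains.get)
print(gains, largest)
```

Spring wheat gains the most (about 7.45 percentage points), whereas sunflower, already well classified with Sentinel-2 alone, gains less than 1 percentage point.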

Figure 16.

Change in F1-score and OA for each crop with and without Sentinel-1 data.

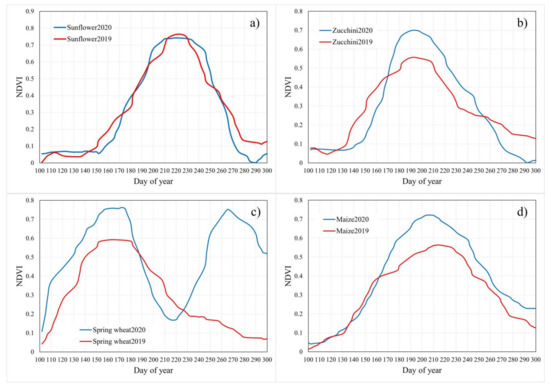

In the model transfer with Sentinel-1/2, sunflower had the highest F1-score, followed by maize, spring wheat, and zucchini. These differences may be caused by differences in phenology and crop growth conditions between 2019 and 2020 (Figure 17). We calculated the M index [76,77] for maize, spring wheat, sunflower, and zucchini based on their NDVI series in 2019 and 2020. The M index is widely used to estimate the separability of two classes [25]: an M value greater than 1 indicates that the two curves are clearly different, whereas a value below 1 indicates that they are similar, with values closer to 0 indicating greater similarity. In this study, the M values for maize, spring wheat, sunflower, and zucchini were 0.15, 0.71, 0.10, and 0.09, respectively. Thus, the 2019 and 2020 curves of sunflower, maize, and zucchini are similar, whereas those of spring wheat differ more. The low M value of sunflower is consistent with its highest F1-score, and the larger M value of spring wheat may explain why its F1-score is lower than those of sunflower and maize. However, zucchini had the lowest F1-score despite the lowest M value, so interannual NDVI differences do not fully explain the differences in accuracy among crops. Although the F1-scores of maize, spring wheat, and zucchini differed slightly, all exceeded 0.8, which indicates that the metric composite method used in this study is appropriate.
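
The M index of Kaufman and Remer [76] is M = |μ1 − μ2| / (σ1 + σ2), where μ and σ are the mean and standard deviation of the two samples being compared. A minimal sketch, assuming the two samples are the NDVI values of one crop's 2019 and 2020 curves; the text does not state whether population or sample standard deviation was used (population is assumed here), and the NDVI values below are hypothetical.

```python
from statistics import mean, pstdev


def m_statistic(a, b):
    """Separability index M = |mean(a) - mean(b)| / (sd(a) + sd(b)).

    Uses the population standard deviation; undefined if both samples are constant.
    """
    return abs(mean(a) - mean(b)) / (pstdev(a) + pstdev(b))


# Hypothetical NDVI series of one crop in two years.
ndvi_2019 = [0.20, 0.50, 0.80, 0.60, 0.30]
ndvi_2020 = [0.25, 0.55, 0.80, 0.60, 0.30]
print(round(m_statistic(ndvi_2019, ndvi_2020), 3))
```

Because M divides the difference in means by the combined spread, two curves with similar shapes but a small offset, as in this example, yield an M value well below 1.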

Figure 17.

(a) NDVI curve of sunflower in 2019 and 2020; (b) NDVI curve of zucchini in 2019 and 2020; (c) NDVI curve of spring wheat in 2019 and 2020; (d) NDVI curve of maize in 2019 and 2020.

5.3. Uncertainty Analysis and Outlook

In this study, Sentinel-2 top-of-atmosphere (TOA) reflectance was used instead of surface reflectance (SR). Although previous work has demonstrated that TOA reflectance is adequate for classification tasks [18,31,78], it may limit the performance of classifier transfer because interannual climate variability can alter the spectral and temporal characteristics of TOA reflectance between years [24]. The results of interannual model transfer suggest that trained models can extract the spatial distribution of crop types for specific years in arid regions, which can reduce the intensity and frequency of reference sample collection. However, the framework was tested only in the arid HID, and its feasibility in humid regions should be tested in the future. Moreover, only two adjacent years were used to test crop type classification by classifier transfer. Stable phenology and similar numbers of remote sensing images in the two years likely contributed to the successful transfer, but how differences in phenology and crop conditions affect model transfer between years further apart remains to be investigated.

6. Conclusions

This study designed a cloud-based approach to identify crop types in China’s Hetao Irrigation District using Sentinel-1/2 images and a random forest classifier, and evaluated the transfer performance of the trained crop classification model. The framework mapped the 2020 crop type distribution of the Hetao Irrigation District with an overall accuracy of 0.89. Transferring the 2020 model to 2019 also performed well, yielding an overall accuracy of 0.92. April to August is the best period for crop identification in the Hetao Irrigation District. Combining Sentinel-1 and Sentinel-2 improved the interannual transfer performance of the crop classification model compared with using Sentinel-2 data alone. In future work, year-to-year variation in phenology and crop conditions should be incorporated into the framework to enhance the interannual transferability of the trained model, and better temporal interpolation methods could be used to reconstruct feature time series and make the model more robust to interannual transfer. Our findings suggest that crop type reference samples need not be collected every year and that crop type maps can be predicted or retraced by interannual transfer of a trained model.

Author Contributions

Y.H. was responsible for the experimental design and manuscript preparation. H.Z. contributed to the conceptual design, editing, funding, and final review of the manuscript. F.T., M.Z., B.W., S.G., Y.L. (Yuanchao Li), Y.L. (Yuming Lu), and H.Y. contributed to reviewing the manuscript. S.L. and Y.L. (Yuming Lu) contributed to the collection of crop reference data. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Key Research and Development Project of China (No. 2019YFE0126900), Strategic Priority Research Program of the Chinese Academy of Sciences (No. XDA19030201), Natural Science Foundation of China (No. 41861144019), Alliance of International Science Organizations (No. ANSO-CR-KP-2020-07), GEF Integrated Water Resources and Water Environment Management Extension (Mainstreaming) Project (No. MWR-C-3-11), Natural Science Foundation of Qinghai Province (No. 2020-ZJ-927), and CAS “Light of West China” Program (No. 1 _5).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All relevant data are shown in the paper.

Acknowledgments

We would like to express our deep gratitude to the anonymous reviewers.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. UN. Transforming Our World: The 2030 Agenda for Sustainable Development. In A New Era in Global Health; Springer Publishing Company: New York, NY, USA, 2017.
2. FAO; IFAD; UNICEF; WFP; WHO. The State of Food Security and Nutrition in the World 2021. Transforming Food Systems for Food Security, Improved Nutrition and Affordable Healthy Diets for All; FAO: Rome, Italy, 2021.
3. Cai, Y.; Guan, K.; Peng, J.; Wang, S.; Seifert, C.; Wardlow, B.; Li, Z. A high-performance and in-season classification system of field-level crop types using time-series Landsat data and a machine learning approach. Remote Sens. Environ. 2018, 210, 35–47.
4. Hoogeveen, J.; Faurès, J.M.; Peiser, L.; Burke, J.; van de Giesen, N. GlobWat—A global water balance model to assess water use in irrigated agriculture. Hydrol. Earth Syst. Sci. 2015, 19, 3829–3844.
5. Zou, M.; Niu, J.; Kang, S.; Li, X.; Lu, H. The contribution of human agricultural activities to increasing evapotranspiration is significantly greater than climate change effect over Heihe agricultural region. Sci. Rep. 2017, 7, 8805.
6. Zeng, H.; Wu, B.; Zhu, W.; Zhang, N. A trade-off method between environment restoration and human water consumption: A case study in Ebinur Lake. J. Clean. Prod. 2019, 217, 732–741.
7. Zhang, L.; Yin, X.A.; Xu, Z.; Zhi, Y.; Yang, Z. Crop Planting Structure Optimization for Water Scarcity Alleviation in China. J. Ind. Ecol. 2016, 20, 435–445.
8. Liu, J.; Wu, P.; Wang, Y.; Zhao, X.; Sun, S.; Cao, X. Impacts of changing cropping pattern on virtual water flows related to crops transfer: A case study for the Hetao irrigation district, China. J. Sci. Food Agric. 2014, 94, 2992–3000.
9. Torres, R.; Snoeij, P.; Geudtner, D.; Bibby, D.; Davidson, M.; Attema, E.; Potin, P.; Rommen, B.; Floury, N.; Brown, M.; et al. GMES Sentinel-1 mission. Remote Sens. Environ. 2012, 120, 9–24.
10. Drusch, M.; Del Bello, U.; Carlier, S.; Colin, O.; Fernandez, V.; Gascon, F.; Hoersch, B.; Isola, C.; Laberinti, P.; Martimort, P.; et al. Sentinel-2: ESA’s Optical High-Resolution Mission for GMES Operational Services. Remote Sens. Environ. 2012, 120, 25–36.
11. Roy, D.P.; Wulder, M.A.; Loveland, T.R.; Woodcock, C.E.; Allen, R.G.; Anderson, M.C.; Helder, D.; Irons, J.R.; Johnson, D.M.; Kennedy, R.; et al. Landsat-8: Science and product vision for terrestrial global change research. Remote Sens. Environ. 2014, 145, 154–172.
12. Thenkabail, P.S.; Teluguntla, P.G.; Xiong, J.; Oliphant, A.; Congalton, R.G.; Ozdogan, M.; Gumma, M.K.; Tilton, J.C.; Giri, C.; Milesi, C.; et al. Global Cropland-Extent Product at 30-m Resolution (GCEP30) Derived from Landsat Satellite Time-Series Data for the Year 2015 Using Multiple Machine-Learning Algorithms on Google Earth Engine Cloud; U.S. Geological Survey: Reston, VA, USA, 2021; p. 63.
13. d’Andrimont, R.; Verhegghen, A.; Lemoine, G.; Kempeneers, P.; Meroni, M.; van der Velde, M. From parcel to continental scale—A first European crop type map based on Sentinel-1 and LUCAS Copernicus in-situ observations. Remote Sens. Environ. 2021, 266, 112708.
14. Van Tricht, K.; Gobin, A.; Gilliams, S.; Piccard, I. Synergistic Use of Radar Sentinel-1 and Optical Sentinel-2 Imagery for Crop Mapping: A Case Study for Belgium. Remote Sens. 2018, 10, 1642.
15. You, N.; Dong, J.; Huang, J.; Du, G.; Zhang, G.; He, Y.; Yang, T.; Di, Y.; Xiao, X. The 10-m crop type maps in Northeast China during 2017–2019. Sci. Data 2021, 8, 41.
16. Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.
17. Dong, J.; Xiao, X.; Menarguez, M.A.; Zhang, G.; Qin, Y.; Thau, D.; Biradar, C.; Moore, B. Mapping paddy rice planting area in northeastern Asia with Landsat 8 images, phenology-based algorithm and Google Earth Engine. Remote Sens. Environ. 2016, 185, 142–154.
18. Yang, G.; Yu, W.; Yao, X.; Zheng, H.; Cao, Q.; Zhu, Y.; Cao, W.; Cheng, T. AGTOC: A novel approach to winter wheat mapping by automatic generation of training samples and one-class classification on Google Earth Engine. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102446.
19. Tian, F.; Wu, B.; Zeng, H.; Zhang, X.; Xu, J. Efficient Identification of Corn Cultivation Area with Multitemporal Synthetic Aperture Radar and Optical Images in the Google Earth Engine Cloud Platform. Remote Sens. 2019, 11, 629.
20. Lambert, M.-J.; Traoré, P.C.S.; Blaes, X.; Baret, P.; Defourny, P. Estimating smallholder crops production at village level from Sentinel-2 time series in Mali’s cotton belt. Remote Sens. Environ. 2018, 216, 647–657.
21. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.
22. Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of machine-learning classification in remote sensing: An applied review. Int. J. Remote Sens. 2018, 39, 2784–2817.
23. Defourny, P.; Bontemps, S.; Bellemans, N.; Cara, C.; Dedieu, G.; Guzzonato, E.; Hagolle, O.; Inglada, J.; Nicola, L.; Rabaute, T.; et al. Near real-time agriculture monitoring at national scale at parcel resolution: Performance assessment of the Sen2-Agri automated system in various cropping systems around the world. Remote Sens. Environ. 2019, 221, 551–568.
24. Inglada, J.; Vincent, A.; Arias, M.; Marais-Sicre, C. Improved Early Crop Type Identification By Joint Use of High Temporal Resolution SAR And Optical Image Time Series. Remote Sens. 2016, 8, 362.
25. Xun, L.; Zhang, J.; Cao, D.; Yang, S.; Yao, F. A novel cotton mapping index combining Sentinel-1 SAR and Sentinel-2 multispectral imagery. ISPRS J. Photogramm. Remote Sens. 2021, 181, 148–166.
26. Kussul, N.; Kolotii, A.; Shelestov, A.; Lavrenyuk, M.; Bellemans, N.; Bontemps, S.; Defourny, P.; Koetz, B.; Symposium, R.S. Sentinel-2 for agriculture national demonstration in ukraine: Results and further steps. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 5842–5845.
27. Moumni, A.; Sebbar, B.E.; Simonneaux, V.; Ezzahar, J.; Lahrouni, A. Evaluation of Sen2agri System over Semi-Arid Conditions: A Case Study of The Haouz Plain in Central Morocco. In Proceedings of the 2020 Mediterranean and Middle-East Geoscience and Remote Sensing Symposium (M2GARSS), Tunis, Tunisia, 9–11 March 2020; pp. 343–346.
28. Cintas, R.J.; Franch, B.; Becker-Reshef, I.; Skakun, S.; Sobrino, J.A.; van Tricht, K.; Degerickx, J.; Gilliams, S. Generating Winter Wheat Global Crop Calendars in the Framework of Worldcereal. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 6583–6586.
29. You, N.; Dong, J. Examining earliest identifiable timing of crops using all available Sentinel 1/2 imagery and Google Earth Engine. ISPRS J. Photogramm. Remote Sens. 2020, 161, 109–123.
30. Tran, K.H.; Zhang, H.K.; McMaine, J.T.; Zhang, X.; Luo, D. 10 m crop type mapping using Sentinel-2 reflectance and 30 m cropland data layer product. Int. J. Appl. Earth Obs. Geoinf. 2022, 107, 102692.
31. Zhang, X.; Wu, B.; Ponce-Campos, G.E.; Zhang, M.; Chang, S.; Tian, F. Mapping up-to-Date Paddy Rice Extent at 10 M Resolution in China through the Integration of Optical and Synthetic Aperture Radar Images. Remote Sens. 2018, 10, 1200.
32. Hansen, M.C.; Egorov, A.; Potapov, P.V.; Stehman, S.V.; Tyukavina, A.; Turubanova, S.A.; Roy, D.P.; Goetz, S.J.; Loveland, T.R.; Ju, J.; et al. Monitoring conterminous United States (CONUS) land cover change with Web-Enabled Landsat Data (WELD). Remote Sens. Environ. 2014, 140, 466–484.
33. Griffiths, P.; Nendel, C.; Hostert, P. Intra-annual reflectance composites from Sentinel-2 and Landsat for national-scale crop and land cover mapping. Remote Sens. Environ. 2019, 220, 135–151.
34. Song, X.-P.; Potapov, P.V.; Krylov, A.; King, L.; Di Bella, C.M.; Hudson, A.; Khan, A.; Adusei, B.; Stehman, S.V.; Hansen, M.C. National-scale soybean mapping and area estimation in the United States using medium resolution satellite imagery and field survey. Remote Sens. Environ. 2017, 190, 383–395.
35. Dell’Acqua, F.; Iannelli, G.C.; Torres, M.A.; Martina, M.L.V. A Novel Strategy for Very-Large-Scale Cash-Crop Mapping in the Context of Weather-Related Risk Assessment, Combining Global Satellite Multispectral Datasets, Environmental Constraints, and In Situ Acquisition of Geospatial Data. Sensors 2018, 18, 591.
36. Gallego, J.; Delincé, J. The European land use and cover area-frame statistical survey. Agric. Surv. Methods 2010, 149–168.
37. Bingfang, W. Cloud services with big data provide a solution for monitoring and tracking sustainable development goals. Geogr. Sustain. 2020, 1, 25–32.
38. Fritz, S.; McCallum, I.; Schill, C.; Perger, C.; See, L.; Schepaschenko, D.; van der Velde, M.; Kraxner, F.; Obersteiner, M. Geo-Wiki: An online platform for improving global land cover. Environ. Model. Softw. 2012, 31, 110–123.
39. Fritz, S.; McCallum, I.; Schill, C.; Perger, C.; Grillmayer, R.; Achard, F.; Kraxner, F.; Obersteiner, M. Geo-Wiki.Org: The Use of Crowdsourcing to Improve Global Land Cover. Remote Sens. 2009, 1, 345–354.
40. Laso Bayas, J.C.; Lesiv, M.; Waldner, F.; Schucknecht, A.; Duerauer, M.; See, L.; Fritz, S.; Fraisl, D.; Moorthy, I.; McCallum, I.; et al. A global reference database of crowdsourced cropland data collected using the Geo-Wiki platform. Sci. Data 2017, 4, 170136.
41. Yu, B.; Shang, S. Multi-Year Mapping of Maize and Sunflower in Hetao Irrigation District of China with High Spatial and Temporal Resolution Vegetation Index Series. Remote Sens. 2017, 9, 855.
42. Yu, B.; Shang, S.; Zhu, W.; Gentine, P.; Cheng, Y. Mapping daily evapotranspiration over a large irrigation district from MODIS data using a novel hybrid dual-source coupling model. Agric. For. Meteorol. 2019, 276–277, 107612.
43. Xu, X.; Huang, G.; Qu, Z.; Pereira, L.S. Assessing the groundwater dynamics and impacts of water saving in the Hetao Irrigation District, Yellow River basin. Agric. Water Manag. 2010, 98, 301–313.
44. Shi, W.; Zhang, H.; Li, J.; Liu, Y.; Shi, R.; Du, H.; Chen, J. Occurrence and spatial variation of antibiotic resistance genes (ARGs) in the Hetao Irrigation District, China. Environ. Pollut. 2019, 251, 792–801.
45. Jiang, L.; Shang, S.; Yang, Y.; Guan, H. Mapping interannual variability of maize cover in a large irrigation district using a vegetation index—Phenological index classifier. Comput. Electron. Agric. 2016, 123, 351–361.
46. Wen, Y.; Shang, S.; Rahman, K.U. Pre-Constrained Machine Learning Method for Multi-Year Mapping of Three Major Crops in a Large Irrigation District. Remote Sens. 2019, 11, 242.
47. Liu, J.; Sun, S.; Wu, P.; Wang, Y.; Zhao, X. Inter-county virtual water flows of the Hetao irrigation district, China: A new perspective for water scarcity. J. Arid. Environ. 2015, 119, 31–40.
48. Zhang, X.; Guo, P.; Zhang, F.; Liu, X.; Yue, Q.; Wang, Y. Optimal irrigation water allocation in Hetao Irrigation District considering decision makers’ preference under uncertainties. Agric. Water Manag. 2021, 246, 106670.
49. Nie, W.-B.; Dong, S.-X.; Li, Y.-B.; Ma, X.-Y. Optimization of the border size on the irrigation district scale—Example of the Hetao irrigation district. Agric. Water Manag. 2021, 248, 106768.
50. Jin, Z.; Azzari, G.; You, C.; Di Tommaso, S.; Aston, S.; Burke, M.; Lobell, D.B. Smallholder maize area and yield mapping at national scales with Google Earth Engine. Remote Sens. Environ. 2019, 228, 115–128.
51. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.
52. Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.
53. Xiao, X.; Boles, S.; Liu, J.; Zhuang, D.; Liu, M. Characterization of forest types in Northeastern China, using multi-temporal SPOT-4 VEGETATION sensor data. Remote Sens. Environ. 2002, 82, 335–348.
54. McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.
55. Gitelson, A.A.; Viña, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, L08403.
56. Liu, W.; Dong, J.; Xiang, K.; Wang, S.; Han, W.; Yuan, W. A sub-pixel method for estimating planting fraction of paddy rice in Northeast China. Remote Sens. Environ. 2018, 205, 305–314.
57. Xiao, X.; Boles, S.; Liu, J.; Zhuang, D.; Frolking, S.; Li, C.; Salas, W.; Moore, B. Mapping paddy rice agriculture in southern China using multi-temporal MODIS images. Remote Sens. Environ. 2005, 95, 480–492.
58. Qiu, B.; Huang, Y.; Chen, C.; Tang, Z.; Zou, F. Mapping spatiotemporal dynamics of maize in China from 2005 to 2017 through designing leaf moisture based indicator from Normalized Multi-band Drought Index. Comput. Electron. Agric. 2018, 153, 82–93.
59. Clevers, J.G.P.W.; Gitelson, A.A. Remote estimation of crop and grass chlorophyll and nitrogen content using red-edge bands on Sentinel-2 and -3. Int. J. Appl. Earth Obs. Geoinf. 2013, 23, 344–351.
60. Lee, J.-S. Refined filtering of image noise using local statistics. Comput. Graph. Image Processing 1981, 15, 380–389.
61. Wu, B.; Tian, Y.; Li, Q. GVG, a crop type proportion sampling instrument. J. Remote Sens. 2004, 8, 570–580. (In Chinese)
62. Johnson, D.M.; Mueller, R. Pre- and within-season crop type classification trained with archival land cover information. Remote Sens. Environ. 2021, 264, 112576.
63. Zeng, H.; Wu, B.; Wang, S.; Musakwa, W.; Tian, F.; Mashimbye, Z.E.; Poona, N.; Syndey, M. A Synthesizing Land-cover Classification Method Based on Google Earth Engine: A Case Study in Nzhelele and Levhuvu Catchments, South Africa. Chin. Geogr. Sci. 2020, 30, 397–409.
64. Potapov, P.V.; Turubanova, S.A.; Hansen, M.C.; Adusei, B.; Broich, M.; Altstatt, A.; Mane, L.; Justice, C.O. Quantifying forest cover loss in Democratic Republic of the Congo, 2000–2010, with Landsat ETM+ data. Remote Sens. Environ. 2012, 122, 106–116.
65. Liu, H.; Gong, P.; Wang, J.; Clinton, N.; Bai, Y.; Liang, S. Annual dynamics of global land cover and its long-term changes from 1982 to 2015. Earth Syst. Sci. Data 2020, 12, 1217–1243.
66. Rodriguez-Galiano, V.F.; Ghimire, B.; Rogan, J.; Chica-Olmo, M.; Rigol-Sanchez, J.P. An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104.
67. Wang, S.; Azzari, G.; Lobell, D.B. Crop type mapping without field-level labels: Random forest transfer and unsupervised clustering techniques. Remote Sens. Environ. 2019, 222, 303–317.
68. Martínez-Muñoz, G.; Suárez, A. Out-of-bag estimation of the optimal sample size in bagging. Pattern Recognit. 2010, 43, 143–152.
69. Stehman, S.V. Selecting and interpreting measures of thematic classification accuracy. Remote Sens. Environ. 1997, 62, 77–89.
70. Ekim, B.; Sertel, E. Deep neural network ensembles for remote sensing land cover and land use classification. Int. J. Digit. Earth 2021, 14, 1868–1881.
71. Lopez-Sanchez, J.M.; Cloude, S.R.; Ballester-Berman, J.D. Rice Phenology Monitoring by Means of SAR Polarimetry at X-Band. IEEE Trans. Geosci. Remote Sens. 2012, 50, 2695–2709.
72. Liu, C.-A.; Chen, Z.-X.; Shao, Y.; Chen, J.-S.; Hasi, T.; Pan, H.-Z. Research advances of SAR remote sensing for agriculture applications: A review. J. Integr. Agric. 2019, 18, 506–525.
73. Su, T.; Zhang, S. Object-based crop classification in Hetao plain using random forest. Earth Sci. Inform. 2021, 14, 119–131.
74. Guo, S.; Ruan, B.; Chen, H.; Guan, X.; Wang, S.; Xu, N.; Li, Y. Characterizing the spatiotemporal evolution of soil salinization in Hetao Irrigation District (China) using a remote sensing approach. Int. J. Remote Sens. 2018, 39, 6805–6825.
75. Forkuor, G.; Conrad, C.; Thiel, M.; Ullmann, T.; Zoungrana, E. Integration of Optical and Synthetic Aperture Radar Imagery for Improving Crop Mapping in Northwestern Benin, West Africa. Remote Sens. 2014, 6, 6472–6499.
76. Kaufman, Y.J.; Remer, L.A. Detection of forests using mid-IR reflectance: An application for aerosol studies. IEEE Trans. Geosci. Remote Sens. 1994, 32, 672–683.
77. Guo, X.; Li, P. Mapping plastic materials in an urban area: Development of the normalized difference plastic index using WorldView-3 superspectral data. ISPRS J. Photogramm. Remote Sens. 2020, 169, 214–226.
78. Teluguntla, P.; Thenkabail, P.S.; Oliphant, A.; Xiong, J.; Gumma, M.K.; Congalton, R.G.; Yadav, K.; Huete, A. A 30-m landsat-derived cropland extent product of Australia and China using random forest machine learning algorithm on Google Earth Engine cloud computing platform. ISPRS J. Photogramm. Remote Sens. 2018, 144, 325–340.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).