1. Introduction

Electromagnetic signal classification (ESC) is a key technology in the field of information processing, which forms the basis for non-cooperative communications [1], electronic counter-measures [2], smart antennas [3], software radio [4], and wireless spectrum management [5]. With the widespread use of various types of radio equipment, the types and numbers of radiation sources in the electromagnetic environment are increasing, and the electromagnetic spectrum is becoming more and more congested. More importantly, electromagnetic signal data are acquired faster than before. Under these circumstances, electromagnetic signal data have applications in many fields, such as electromagnetic spectrum monitoring [6], cognitive radio [7], and cyberspace security [8]. ESC is an important requirement for these applications. ESC includes electromagnetic signal type classification and specific emitter identification (SEI). The main task of the former is to exploit the characteristics of electromagnetic signals to distinguish different signal types [8], while the latter involves measuring the characteristics of the received electromagnetic signals and determining the individual radiation sources that generate the signals, based on available prior information [9].

Methods based on deep learning have recently achieved remarkable success in the field of signal classification. Many related algorithms [10,11] have been proposed and have achieved excellent classification results. For instance, in [12], a graph convolutional modulation recognition framework has been proposed to identify the modulation type of the signal. In [13], a framework based on the capsule network has been proposed to solve the small sample modulation-type detection problem. In [14], a sequence-based network has been proposed. In [15], a convolutional neural network for radio modulation classification has been presented, which is not only suitable for the complex time domain of radio signals but also can achieve excellent results under low signal-to-noise ratio (SNR) conditions. A residual neural network [16] has been used in [17] to perform signal classification over a range of configurations and channel impairments, providing reliable statistics. To solve the task of modulation signal classification, AlexNet and GoogleNet have been used in [18] and achieved great results, proving the effectiveness of deep learning methods in this area. In [10], the authors proposed a complex-valued convolutional neural network, which was used to study the intrinsic properties of the radar interference signal. The authors in [19] have developed a small sample signal modulation recognition framework using an attention relation network. In [20], a convolutional long short-term deep neural network has been used to fully exploit the temporal properties of electromagnetic signals and achieve excellent performance. In [21], a multitasking-based generalized automatic modulation classification framework has been proposed, which was more robust than conventional methods. Moreover, in [22], the authors proved the effectiveness of a deep model in identifying interference sources using frequency band, SNR, and sample selection to optimize the training time. A generative adversarial network has been proposed in [8] to address the problem of insufficiently labeled electromagnetic signal samples.

Although researchers have conducted many explorations on deep learning-based ESC methods and achieved good results, such deep learning algorithms share a pre-condition: the number of signal categories that they can recognize is fixed. When multiple new categories appear, a natural approach to incremental learning is to simply fine-tune a pre-trained model with the training data of the new categories. However, a serious challenge to this approach is catastrophic forgetting [23]; specifically, fine-tuning a model on new data usually results in a significant drop in performance on previous categories. To recognize the new and the old classes simultaneously, the model would instead have to be retrained with the training data of all previously observed categories, which leads to high computational effort and memory requirements.

With the increasing number of signal processing tasks, electromagnetic signal data have shown an explosive growth trend. In particular, we are faced with the constant emergence of new categories of electromagnetic signal data. For example, in the field of coded communication and adaptive modulation, the receiver recognizes the coding and the modulation method used by the transmitter, and then uses the corresponding decoding and demodulation algorithm to decode and demodulate the acquired signal. When the transmitter changes the modulation mode, the signal received by the receiver will be a new class. When managing the spectrum, cognitive radio can monitor whether illegal signals are interfering with the user’s communications by detecting radio signals in the perceived frequency band. If there are multiple categories of illegal signals, they should be identified immediately. Moreover, in realistic applications, new categories are expected to keep appearing over time. If the classification accuracy on the previous categories of the classification model decreases after the learning task of the new class is completed at each stage, there will theoretically be an infinite number of new classes to learn, thus creating an infinite loop.

Class-incremental learning is a suitable learning mode for this scenario, where the data increase gradually. Class-incremental learning algorithms can learn a set of gradually evolving classification tasks, and the categories in each task have no overlap with the categories in other tasks. In recent years, driven by practical applications, class-incremental learning has gradually become an important research topic. Existing deep learning-based algorithms can be mainly divided into the following three categories: regularization-based, rehearsal-based, and bias-correction-based. The core idea of regularization-based approaches is to estimate the importance of each parameter in the network [23]. A regularization element was added in [24,25] in order to penalize parameters that vary widely. To constrain important parameters, the authors of [26] introduced regularization terms in the loss function. Furthermore, the authors of [27] have attempted to use the idea of data regularization to prevent activation drift. MAS [28] uses the gradient of the loss function to measure the importance of neurons. The work in [29] preserved old knowledge by freezing the weights of the last layer and penalizing differences between activations before the classification layer. Rehearsal-based methods can mitigate the catastrophic forgetting phenomenon by storing a small number of old samples [30,31], or using a generation network to generate fake samples [32,33]. In [30], the distillation loss and an exemplar set of old categories were used together for the first time to train the model. In [34], a semantic drift compensation algorithm was used to estimate the center of each category and perform the prediction by the NCM classifier [30]. In [35], a supervised contrast loss was used to bring samples from the same category closer together in the embedding space, while pushing samples of different classes further apart. Bias-correction-based methods are dedicated to addressing the problem of imbalance between new and old tasks [31,36,37]. To alleviate such an imbalance, cosine normalization, less-forget constraint, and inter-class separation have been proposed in [36]. To address the problem that the norm of the classifier weight vector of new categories is larger than that of old categories, the weight aligning [37] method has been proposed, which cooperates better with the distillation loss. Another effective algorithm has been proposed in [31], which corrects the biases of different tasks by adding an additional linear layer.

In real ESC applications, an incremental learning algorithm may be able to use part of the previous samples. An incremental learning method based on class exemplar selection should retain the previous classification capabilities in the form of exemplars of the old categories. The samples selected by the rehearsal-based methods described above can only maintain the classification performance of the model for samples in the center of the class. In order to also maintain the classification ability of the model for samples far from the class center, more effective samples should be selected. Many exemplar selection-based strategies have recently been proposed [38]. They can be broadly divided into two categories: model output-based and training data distribution-based. Model output-based methods use some output indicators of the classification model as the exemplar selection criteria. In [39], samples were selected based on the lowest confidence level, but the sample set selected in this way was redundant. In [40], the output probability of the model was used as the selection criterion. An entropy-based method was proposed in [41]. Studies have also considered the prediction of errors [42] and interface-distance-based sampling [23]. Training data distribution-based methods use the characteristics of the data itself as the selection criterion. In [30], the average feature vector was extracted, and selection was then performed according to the distance between the feature vector of each sample and the center of the class feature. In [43], clustering information was used to select the effective samples. Exemplars can also be selected at random from the whole data set; such random sampling methods are generally used for comparison with other methods [23,44]. However, the samples selected by the abovementioned methods represent their own class well but do not capture the discrimination between classes sufficiently for the models to achieve good classification results on old classes.

It is not an easy task to develop a good classification model which is suitable for new classes. The model must be able to classify new classes while maintaining its ability to recognize old categories. Nevertheless, existing deep learning approaches are not suitable for the above situations. In summary, the challenges facing ESC can be expressed as follows:

- (1) How to maintain the classification performance of the model when new categories of electromagnetic signal data emerge. As the number of different electromagnetic devices increases and the amount of collected signal data continues to grow, various new types of signals may be mixed with the existing data. Furthermore, data are updated rapidly, making it an unrealistic task to save all signal data for further training when memory is limited. The emergence of multiple categories and the updating of large data require the deep model to rapidly update its classification capabilities; however, existing state-of-the-art deep learning approaches are not able to learn new categories efficiently; and

- (2) How to maintain the model’s ability to classify old classes after learning the new class. A model trained on old categories may suffer from catastrophic forgetting [23] after learning new categories. If all of the data from the previously observed classes are used to train the new model, significant computational overhead and time costs will be incurred. Mainstream methods based on exemplar selection are usually based on prototypes [30]. These methods use the mean value of the extracted features as the selection criterion. However, samples selected in this way represent their own class well but do not capture the discrimination between classes sufficiently for the model to achieve good classification results on the old classes.

To overcome the aforementioned problems, we present a multi-class incremental learning framework, CES-MOLPC, for ESC, which not only can learn new classes rapidly but also ensures that its classification accuracy on old classes is maintained at a high value. Specifically, we develop a multi-objective linear programming (MOLP) incremental classifier based on the average feature vectors extracted by the deep extractor. Inspired by [45], the proposed MOLP classifier is obtained by solving the MOLP problem, where the whole process only requires forward propagation of the old model without using a back-propagation algorithm to train the model. In addition, inspired by [46,47,48], we develop an adaptive class exemplar selection approach based on normalized mutual information, in order to maintain the accuracy of the new model on previous categories. We also design a weighted loss function based on cross-entropy and distillation loss, which is used to fine-tune the incremental model, thus making the model more robust. The presented method requires only a small amount of computational and storage resources. In summary, the main innovative contributions of this article are as follows:

- (1) The presented MOLP classifier learns multiple new categories simultaneously by adding multiple weight vectors, which can be obtained by solving the MOLP problem based on the average feature vector of each class;

- (2) The presented adaptive class exemplar selection approach, based on normalized mutual information, preserves the classification ability of the model for previous categories by selecting key samples; and

- (3) A weighted loss function based on cross-entropy and distillation loss is presented to fine-tune the new model using both the new category data and the old exemplar set.

The remainder of this article is organized as follows: in Section 2, the proposed ESIC framework is presented. Section 3 shows the numerical examples to verify the classification performance of our method. In Section 4, we discuss the proposed method and experimental results. Finally, in Section 5, we summarize the main results of this paper.

2. Methodology

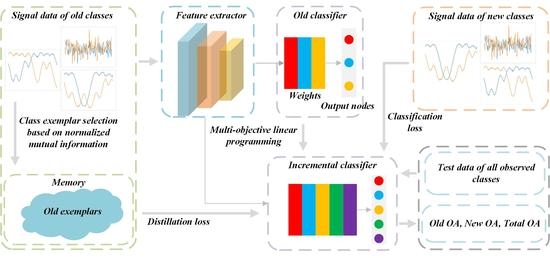

According to the electromagnetic signal increment classification (ESIC) framework, shown in Figure 1, the proposed framework is mainly composed of the following three modules:

- (1) Adaptive class exemplar selection;

- (2) MOLP incremental classifier; and

- (3) Incremental learning.

The proposed algorithm is described in detail in the following. Assume that there are N categories of signal data and that a model trained on these data can accurately classify the N old categories. The model consists of a feature extractor and an N-class classifier with a weight matrix; in our model, the bias of the classifier is set to 0. In the ESIC task, the signal data arrive continuously as a stream. With limited memory, only a small part of the training data can be saved. Therefore, an exemplar selection approach based on normalized mutual information is presented for the selection of key samples. When T unknown categories are presented to the model, the model must correctly identify the N old categories while also identifying the T new categories well. The output layer of the model must therefore add T output nodes; that is, the weight matrix of the classifier must add T weight columns. Thus, the MOLP classifier is proposed to learn new classes by adding T weight columns, which are obtained by solving the MOLP problem. Finally, in the incremental learning stage, the exemplars of the old classes and the data of the new classes are fused to fine-tune the model, with both the cross-entropy loss and the distillation loss adopted for fine-tuning. Our goal is to obtain the incremental model.

2.1. Adaptive Exemplar Selection

The idea behind the proposed exemplar selection approach is to calculate the normalized mutual information between the one-hot vector corresponding to the true label of a sample and the probability vector predicted by the model for that sample. The one-hot vector and the class vector predicted by the model can be regarded as two clusters, where the resemblance between the two clusters is measured by the normalized mutual information. We assume that the harder a sample is to identify as belonging to the correct category, the closer it is to the decision boundary. Specifically, provided that a sample is correctly classified, the smaller the normalized mutual information between its ground-truth label and its predicted probability, the more difficult it is for the sample to be recognized as the correct category. We can therefore select a small number of such boundary samples to describe the sample distribution of the entire category.

As shown in Algorithm 1, given the data of the N old categories, the exemplar set is selected from these data. An initial model is trained to recognize the N classes using all of the old data. When T new classes appear, they are integrated with the exemplars of the old categories to train a new model. The normalized mutual information between the one-hot ground-truth label and the predicted label is shown in (1). It is defined in terms of the mutual information between the two, as shown in (2); the entropy of the ground-truth label, as shown in (3); and the entropy of the predicted label, as shown in (4).

| Algorithm 1 Adaptive class exemplar selection |

Input: all training data, grouped by category; the size of memory; the pre-trained model; the number of training samples for each old class.

Output: class exemplar set.

1. For each training sample in class i:

- i. Input the sample into the model to obtain its predicted probability;
- ii. Calculate the normalized mutual information between the one-hot ground-truth label and the predicted probability, according to Equation (1);

2. For each class i:

- i. Obtain the classification accuracy of the category through forward propagation;
- ii. Obtain the feature vector f of every sample and the average feature vector of the category through forward propagation;
- iii. Calculate the weight factor of the category according to Equation (5);
- iv. Calculate the number of samples that need to be saved for the category;
- v. Find the samples with the smallest normalized mutual information;

3. Obtain the exemplar set.
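The selection step at the end of Algorithm 1 can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `select_exemplars`, the array names, and the `per_class` budget dictionary are all hypothetical, and the per-sample normalized mutual information values are assumed to have been computed beforehand according to Equation (1).

```python
import numpy as np

def select_exemplars(nmi, labels, preds, per_class):
    """Return indices of the samples kept as exemplars.

    nmi       : (n,) precomputed normalized mutual information per sample (assumed given)
    labels    : (n,) integer ground-truth labels
    preds     : (n,) integer labels predicted by the old model
    per_class : dict mapping class id -> number of exemplars to keep (hypothetical input)
    """
    keep = []
    for c, k in per_class.items():
        # Only correctly classified samples of class c are candidates.
        idx = np.where((labels == c) & (preds == c))[0]
        # Smallest NMI first: these are assumed to lie closest to the decision boundary.
        order = idx[np.argsort(nmi[idx], kind="stable")]
        keep.extend(order[:k].tolist())
    return keep
```

Restricting the candidates to correctly classified samples follows the premise stated in the text; how ties and classes with too few candidates are handled is left open here.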

Previous algorithms based on sample replay select the same number of samples in each category. In this work, an adaptive exemplar selection method, which is weighted based on the number of samples, is proposed to maintain the classification ability on old categories. As the classification difficulty for each category is different, it is not necessary for each category to preserve the same number of samples. For categories that are difficult to distinguish, more samples should be retained, while categories that are easy to distinguish need only retain a small number of samples. When selecting the exemplar set, the recognition accuracy of the old model on the old training data is used as feedback: the higher the recognition rate for a certain old category, the fewer samples should be saved for it. In addition, the degree of dispersion of the category distribution is also used as a measure. We use the mean value of the distance between the feature vector of each sample in a category and the average feature vector to measure the distribution of samples in the category. The more concentrated the sample distribution of the category, the higher the similarity within the category, such that fewer samples are required to describe the category. The weight factor, shown in (5), is computed from the classification accuracy on the category, the number of training samples in the category, the feature vector of each sample, and the average feature vector of the category.

As the memory size is fixed, the number of samples that need to be saved for each category is obtained by considering the number of samples in each category and then normalizing the weights of all old categories to between 0 and 1, as shown in (6), which gives the number of samples that need to be saved for each type of signal data in the old data.
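The allocation described above can be illustrated with a short sketch. Equations (5) and (6) are not reproduced here, so the weight formula below is only an assumption that matches the qualitative description (lower accuracy and larger spread give a larger weight); `class_weights`, `allocate`, and `eps` are hypothetical names, and the largest-remainder rounding is a choice made for this example.

```python
import numpy as np

def class_weights(acc, feats_by_class, eps=1e-6):
    """Hypothetical weight: (1 - accuracy) times mean distance to the class mean feature."""
    w = []
    for a, F in zip(acc, feats_by_class):
        spread = np.linalg.norm(F - F.mean(axis=0), axis=1).mean()
        w.append((1.0 - a + eps) * spread)
    return np.array(w)

def allocate(weights, memory_size):
    """Split a fixed memory budget across classes in proportion to normalized weights."""
    share = weights / weights.sum() * memory_size   # weights normalized to [0, 1]
    counts = np.floor(share).astype(int)
    leftover = int(memory_size - counts.sum())
    # Hand the remaining slots to the classes with the largest fractional parts.
    top = np.argsort(-(share - counts), kind="stable")[:leftover]
    counts[top] += 1
    return counts
```

With a budget of 200 and weights in the ratio 1:3, this yields 50 and 150 exemplars, so harder or more dispersed classes keep more samples while the total stays within the memory size.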

2.2. MOLP Incremental Classifier

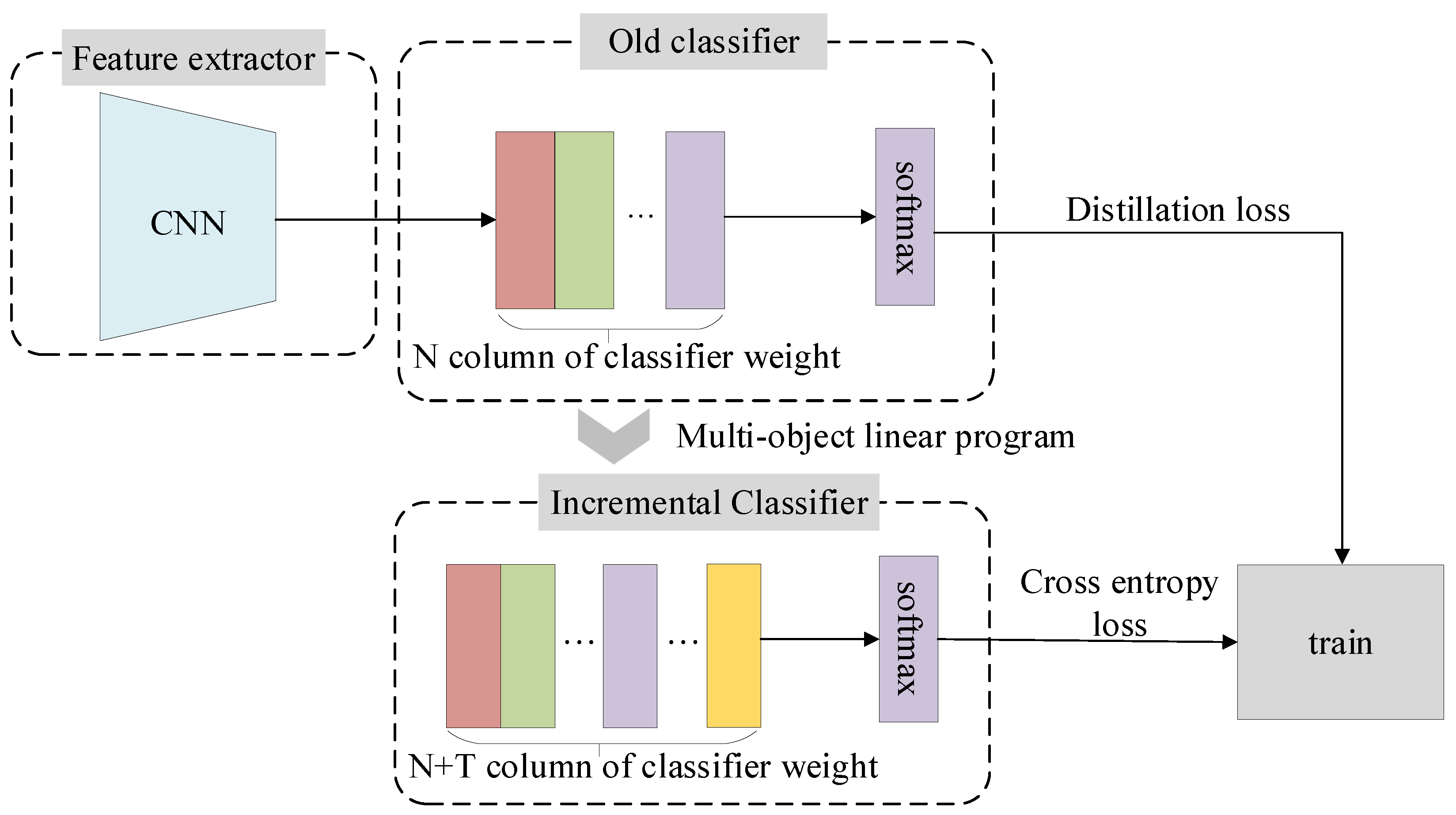

As shown in Figure 2, when the model needs to learn T new categories, the classifier must add T output nodes; that is, the weight matrix needs to add T columns. According to the classification principle of the linear classifier, when the new category data are input to the CNN model, the feature vector is first extracted through the feature extractor, following which the feature is used as input to the linear classification layer to obtain a vector. Each component in the vector denotes the possibility that the sample belongs to a certain category. Thus, the component value corresponding to the new class should be maximized.
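The column-wise extension of the linear classifier can be sketched in a few lines of NumPy. The names `W_old`, `W_new_cols`, `extend_weights`, and `predict_proba` are illustrative assumptions; the bias is omitted because the text sets it to 0, and the new columns are taken as given here (in the paper they come from the MOLP problem).

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())          # shift for numerical stability
    return e / e.sum()

def extend_weights(W_old, W_new_cols):
    """Append T new columns to an (L, N) weight matrix, giving (L, N + T)."""
    return np.hstack([W_old, W_new_cols])

def predict_proba(f, W):
    """Class probabilities for a feature vector f of length L (bias fixed to 0)."""
    return softmax(f @ W)
```

For example, with L = 3, N = 2, and T = 1, a feature vector aligned with the new column receives its highest probability on the newly added output node.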

Given the model trained on the old categories and a sample x of one of these categories, together with the feature vector f of x extracted by the feature extractor, the existing classifier computes the score as the dot product of f with the weight matrix. Then, the softmax activation function is used to obtain the probability of x belonging to each category, as shown in (7), where the score vector is N-dimensional, as shown in (8); each column of the weight matrix corresponds to one category; and L refers to the dimension of the feature vector. When T new categories arrive, the new classifier corresponds to the extended weight matrix, as shown in (9).

The exemplar set of the old categories and the data of the new categories are used as input to the model. The class mean feature vector of each class is obtained by averaging the feature vectors extracted by the feature extractor, as shown in (10), which yields the mean feature vectors of the old categories and the mean feature vectors of the new categories. When the mean feature vector of an old category is input to the classification layer, a score is predicted by multiplying this class feature vector by the new weight matrix, as shown in (11). The component of this score corresponding to the old category itself should be the largest among all components, which leads to a constraint of the MOLP problem.

If the mean feature vector of a new category is input into the classifier, a score is predicted by multiplying this class feature vector by the new weight matrix, as shown in (12). Again, the component corresponding to the new category itself should be the largest among all components, which leads to a further constraint of the MOLP problem. We take this component as the maximization goal of the MOLP.

In summary, the MOLP problem is expressed in terms of the mean feature vector of each new category, the mean feature vector of each old category, the columns of the new weight matrix associated with the old and the new categories, and the largest value of the old weight matrix. By solving this MOLP problem, we can obtain the new classifier.
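To make the constraints above concrete, the following sketch solves a simplified, scalarized version of the problem with `scipy.optimize.linprog`. It is not the authors' exact MOLP formulation: the several objectives are summed into one, the old columns are kept fixed, the `margin` parameter is a hypothetical addition that avoids ties, and bounding the new weights by the largest absolute value of the old weight matrix is an interpretation of the text. The function name `extend_classifier` is likewise illustrative.

```python
import numpy as np
from scipy.optimize import linprog

def extend_classifier(W_old, old_means, new_means, margin=0.1):
    """W_old: (L, N); old_means: (N, L); new_means: (T, L). Returns an (L, N + T) matrix."""
    L, N = W_old.shape
    T = new_means.shape[0]
    bound = float(np.abs(W_old).max())   # box bound taken from the old weights (assumption)
    n_var = L * T                        # unknowns: the T new columns, stacked one after another
    A, b = [], []

    def row(t, vec):
        r = np.zeros(n_var)
        r[t * L:(t + 1) * L] = vec
        return r

    for t in range(T):
        # Each old class mean must still score highest on its own (fixed) column.
        for i in range(N):
            A.append(row(t, old_means[i]))
            b.append(old_means[i] @ W_old[:, i] - margin)
        # The new class mean t must beat every old column ...
        for j in range(N):
            A.append(row(t, -new_means[t]))
            b.append(-(new_means[t] @ W_old[:, j]) - margin)
        # ... and every other new column.
        for s in range(T):
            if s != t:
                A.append(row(t, -new_means[t]) + row(s, new_means[t]))
                b.append(-margin)

    # Scalarized objective: maximize the summed scores of the new means on their own columns.
    cost = -new_means.reshape(-1)
    res = linprog(cost, A_ub=np.array(A), b_ub=np.array(b),
                  bounds=[(-bound, bound)] * n_var, method="highs")
    if not res.success:
        raise ValueError("LP infeasible for these class means and margin")
    return np.hstack([W_old, res.x.reshape(T, L).T])
```

Only forward passes are needed to obtain the class means, which matches the claim that no back-propagation is required at this stage. The problem can be infeasible for poorly separated class means, in which case the sketch raises an error rather than returning weights.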

2.3. Incremental Learning

After completing the exemplar selection of old classes and obtaining the incremental classifier, the last step is incremental learning. The specific algorithm flow is detailed in Algorithm 2. The old exemplar set and the data of the new categories are integrated to fine-tune the model. In the incremental learning phase, both the cross-entropy loss and the distillation loss are used when fine-tuning the new incremental model. The cross-entropy loss is designed to guide the model to better distinguish signal categories, while the distillation loss helps the new model retain as much of the knowledge of the old model as possible. Our loss function is a weighted combination of these two terms, as shown in (14), where the weighting coefficient is determined by N, the number of old classes, and T, the number of new classes. The standard cross-entropy loss and the distillation loss are shown in (15) and (16), respectively. Here, the training set collects the old exemplar set and all the data of the new classes; the indicator function selects the ground-truth category; the cross-entropy term uses the probability, obtained by the new model, that the input signal belongs to each category; the distillation term uses the soft label of the input signal generated by the old model on all previously observed categories; y is the ground-truth label; and a rescaling function with a scaling factor (fixed in our experiments) increases the weights of small values.
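A weighted loss of this kind can be sketched in NumPy as follows. Several details are assumptions made for illustration because the equations are not reproduced here: the weighting `lam = N / (N + T)`, the rescaling `p ** (1 / tau)` followed by renormalization, the default `tau = 2.0`, and all function and argument names.

```python
import numpy as np

def rescale(p, tau=2.0):
    """Raise probabilities to 1/tau and renormalize; for tau > 1 small values gain weight."""
    q = p ** (1.0 / tau)
    return q / q.sum(axis=1, keepdims=True)

def total_loss(p_new, p_old, y, n_old, n_new, tau=2.0, eps=1e-12):
    """p_new: (B, N+T) new-model probabilities; p_old: (B, N) old-model probabilities;
    y: (B,) integer ground-truth labels."""
    lam = n_old / (n_old + n_new)                      # assumed weighting coefficient
    ce = -np.log(p_new[np.arange(len(y)), y] + eps).mean()
    q_old = rescale(p_old, tau)                        # soft labels from the old model
    q_new = rescale(p_new[:, :n_old], tau)             # new model restricted to old classes
    kd = -(q_old * np.log(q_new + eps)).sum(axis=1).mean()
    return (1.0 - lam) * ce + lam * kd
```

Under this assumed weighting, the distillation term gains influence as the number of old classes grows relative to the number of new ones, which is one common way to counter forgetting.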

| Algorithm 2 Incremental learning |

Input: the exemplar set of old categories; the new training data, grouped by category; the old model; the incremental model.

Output: the new model.

1. Form the combined training set from the exemplar set of old categories and the new training data;

2. For each training sample with its true label:

- i. Obtain the corresponding probabilities through forward propagation of the old model;
- ii. Obtain the corresponding probabilities through forward propagation of the incremental model;

3. Calculate the total loss according to Equations (14)–(16);

4. Run the fine-tuning and update the parameters of the incremental model.

4. Discussion

In terms of computational complexity, the proposed algorithms mainly included multi-objective linear programming, adaptive class example selection, and incremental learning. The interior-point method was used to solve linear programming problems, such that the time complexity was . During the process of adaptive class exemplar selection, the normalized mutual information of each sample and the Euclidean distance between each sample and its class feature center had to be computed, and so its computational complexity was . During the process of incremental learning, the deep model was fine-tuned. Therefore, we introduce the concept of floating point operations (FLOPs) to illustrate the computational complexity. The FLOPs of the model corresponding to the RML2016.04c data set were 1.28 M, while the FLOPs of the model corresponding to the ACARS data set were 2.83 M.

To further illustrate the computational complexity of the proposed method, we performed a comparative experiment considering the runtime of the algorithm, setting

on both the RML2016.04c data set and the ACARS data set.

Table 7 and Table 8 show the running times of the proposed method and other comparable methods for learning new classes. The running time of the proposed CES-MOLPC was much shorter than those of the other methods. CES-MOLPC took only 1.64 min to complete the learning of a new class on the RML2016.04c data set; learning on the ACARS data set took longer, due to the high dimensionality of the data. Overall, the other methods took more than three times as long as the proposed method.

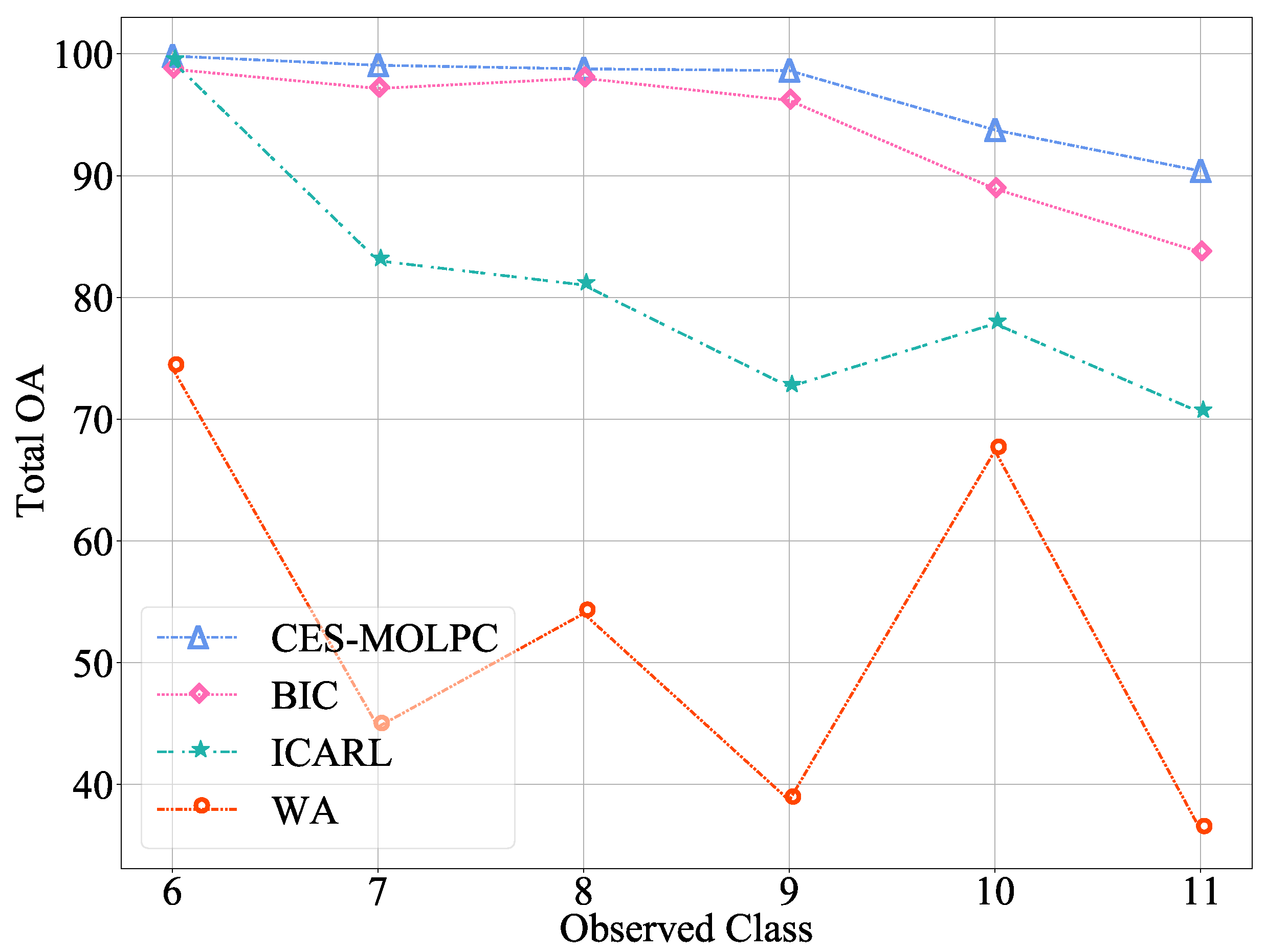

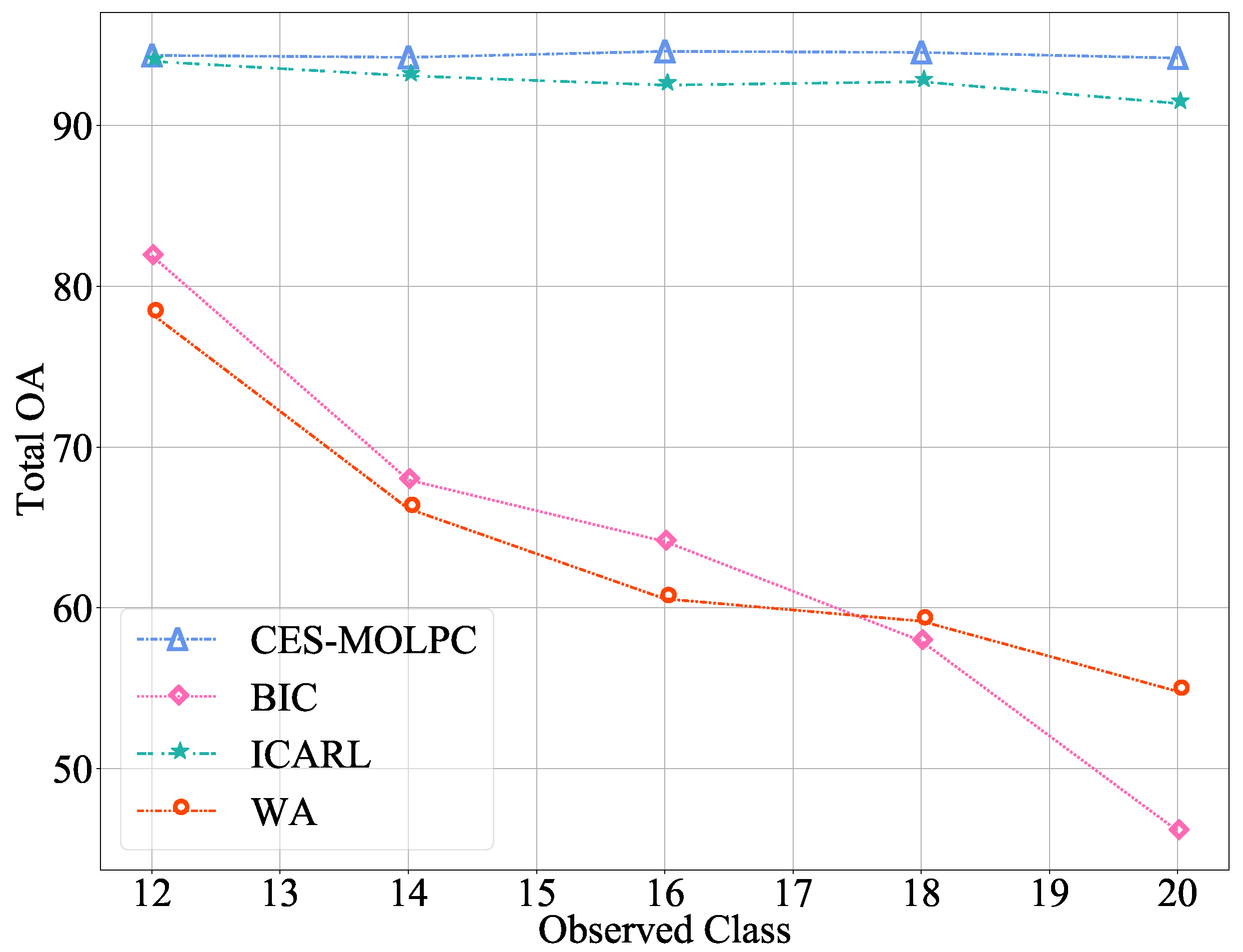

From the experimental results shown in Table 3 and Table 4, the proposed method showed good generalization performance on the public data set RML2016.04c, as well as on the signal data set ACARS recorded in a real environment. The Total OA of the proposed method surpassed that of the other comparison methods; however, the New OA and Old OA values differed. In fact, as the distillation loss was used to fine-tune the model, it was difficult for New OA and Old OA to reach a relatively balanced condition simultaneously. We additionally performed a more extreme experiment, in which the ratio of cross-entropy loss to distillation loss was adjusted. When the cross-entropy loss was much larger than the distillation loss, the resulting New OA was higher and the Old OA was lower. Conversely, when the distillation loss was much greater than the cross-entropy loss, the New OA was lower and the Old OA was higher. In the WA method, it was assumed that the L2-norms of the weight vectors of the classifiers corresponding to the new and old classes were different, such that the weight alignment method was used to balance the new and old classes, for which distillation loss was also used. However, in the experimental results, the Old OA of the WA method was almost 0 in all cases, so this method is not suitable for the problem considered in this paper. Therefore, the advantages of the proposed method can be seen from the experimental results. In incremental learning, both the Old OA and the New OA must be considered. Taken together, the proposed method outperformed the comparison methods. The common feature of the BIC, ICARL, and WA methods was that they all used the Prototype exemplar selection method. From Table 5 and Table 6, it can be seen that the adaptive sample selection method based on normalized mutual information proposed in this paper had better performance than the other exemplar selection methods. This indicates that the proposed exemplar selection method can select more valuable samples from the old categories. The results shown in Figure 3 and Figure 4 demonstrate that the proposed method outperformed the other methods, regardless of how many new classes were learned.

In all of the experiments conducted in this paper, the total exemplar size of the old classes was set to a fixed value of 200, which is relatively small compared to the total number of training samples. This demonstrates the robustness of the proposed algorithm under small sample conditions. However, in a real-world ESC application, when the number of classes that the model has learned exceeds 200, the number of samples stored for each previous class is less than 1. At this point, the memory size must be increased, in order to make the algorithm suitable for the learning of new classes. Obviously, the larger the memory size, the more training samples are retained from each old class, and the higher the classification accuracy of the model after incremental learning. When the memory size exceeds the total number of training samples of all old classes, the trained model reaches the upper bound of performance. Assuming that only one sample is reserved for each category as the exemplar of the category, it may be difficult for this sample to represent the feature distribution of the whole category. In this case, if there are too many training samples in the new class, there will be an extreme imbalance between the old and new classes. This will result in the New OA being significantly higher than the Old OA; however, the distillation loss may offset the effects of the imbalance between old and new classes. A key problem researchers face in incremental learning tasks is catastrophic forgetting, so Old OA deserves particular attention. In practical applications, however, the signals that we are interested in may belong to a new class or an old class. Therefore, New OA and Old OA should ultimately be considered equally important. If the model could recognize the new classes and retain the data features of the old classes by learning only from the new classes, without storing samples from the old classes, it would not be constrained by memory resources. Another feasible idea is to retain the key features of the previous categories, then replay them when learning new classes. This can save memory, compared to storing the training samples directly.
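The memory argument above is simple arithmetic, illustrated here with a hypothetical helper `exemplars_per_class` that assumes, for simplicity, an even split of the budget across classes (the adaptive scheme in Section 2.1 allocates unevenly, but the average is the same).

```python
def exemplars_per_class(memory_size, num_old_classes):
    """Average number of stored exemplars per old class for a fixed memory budget."""
    return memory_size / num_old_classes

# With the budget of 200 used in the experiments, the average drops below one
# exemplar per class as soon as more than 200 classes have been learned.
for n in (10, 100, 200, 250):
    print(n, exemplars_per_class(200, n))
```

Once the average falls below one, some classes necessarily keep no exemplar at all, which is why the memory size has to grow with the number of learned classes.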