VO-LVV—A Novel Urban Regional Living Vegetation Volume Quantitative Estimation Model Based on the Voxel Measurement Method and an Octree Data Structure

Abstract

1. Introduction

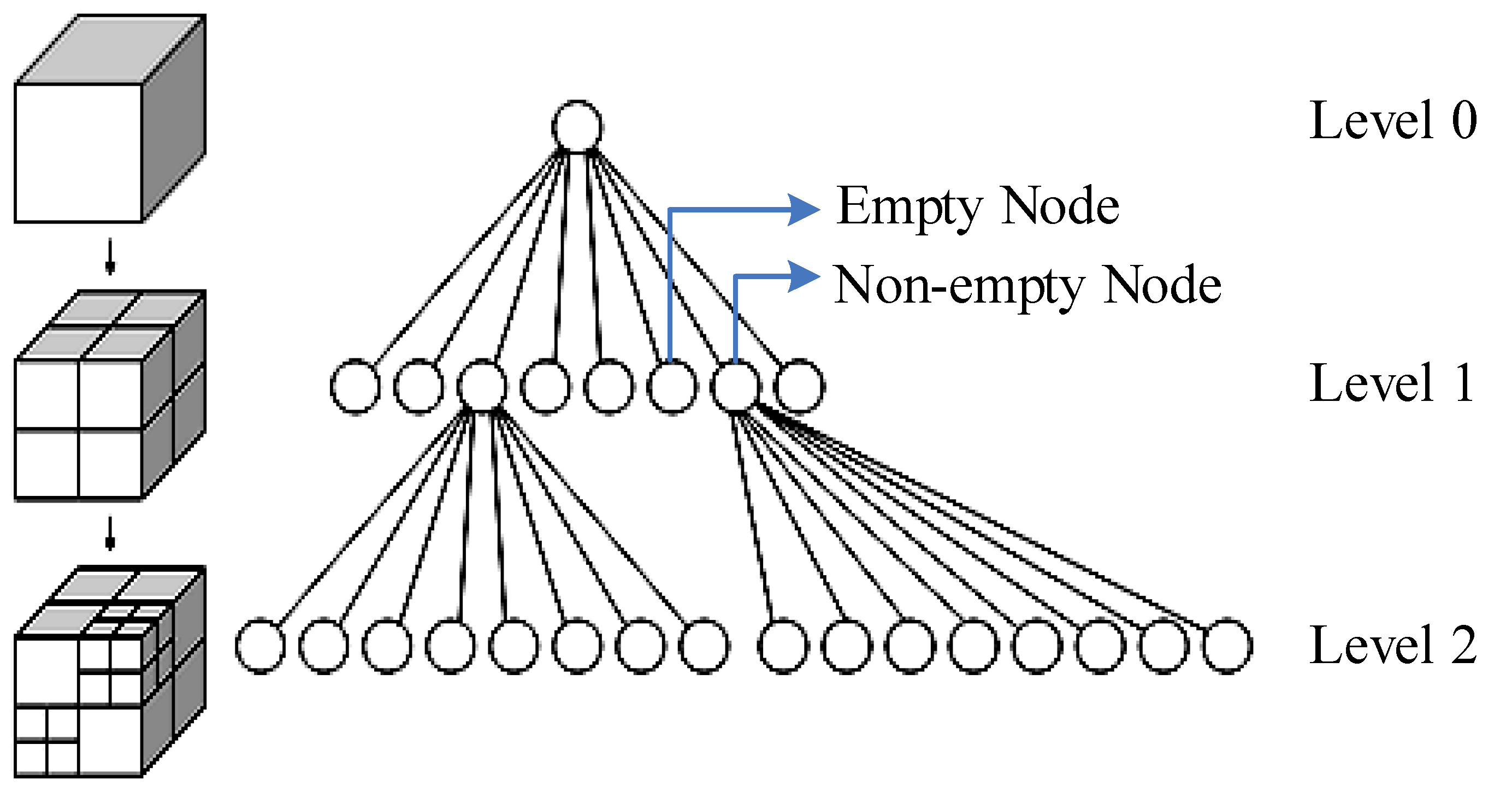

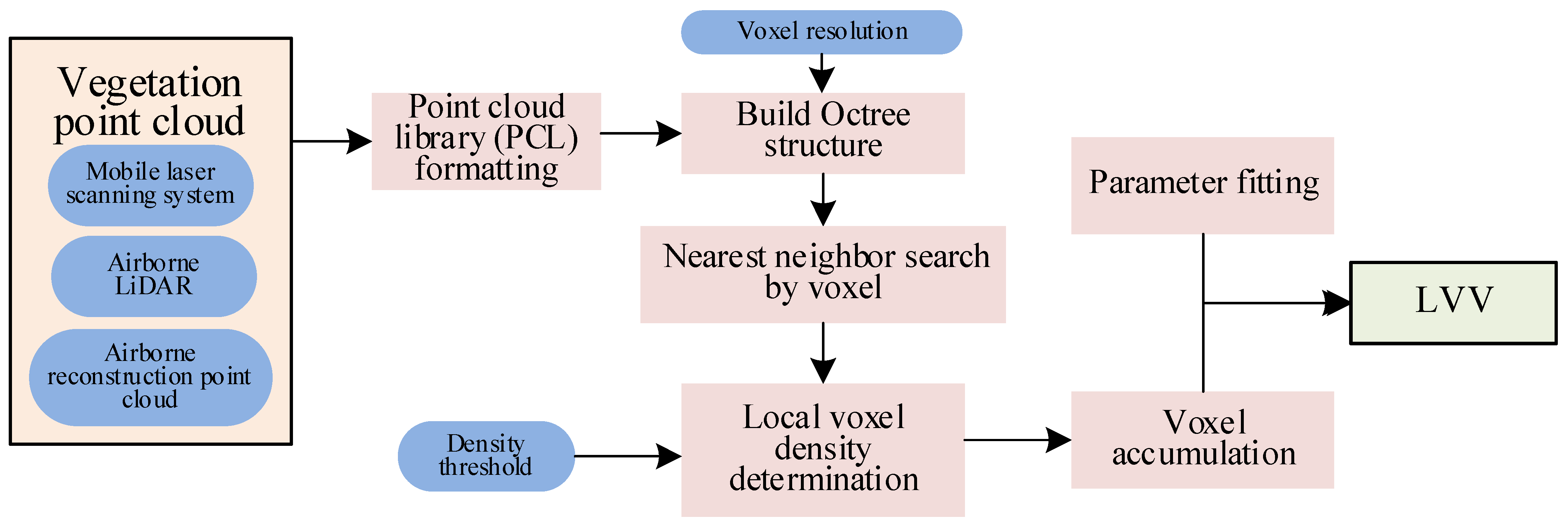

2. Principle and Design of a Novel LVV Estimation Model Based on a Voxel Method and an Octree

- (1) The 3D bounding box of the vegetation point cloud data is constructed; it serves as the partition space for the voxel model and the octree.

- (2) The voxel model is constructed according to the set voxel resolution; on this basis, an octree index is established for the vegetation point cloud.

- (3) The voxel density at each node is calculated and compared with the set threshold.

- (4) When the density is lower than the threshold, the leaf nodes containing the points are searched and the comparison is repeated on smaller-scale voxels until the smallest voxel scale is reached.

- (5) When the density is higher than the threshold, the voxel is considered to be completely covered by the vegetation point cloud. The voxel volume is then calculated and accumulated into the result according to the voxel level.

- (6) Parameter fitting is performed on the basis of the observed original data, depending on the data collection method and vegetation type, and the corrected LVV value is finally obtained.
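Steps (1) to (5) can be read as a top-down traversal of an octree. The following Python sketch is one possible interpretation under stated assumptions, not the authors' implementation: the names `bounding_cube`, `covered_volume`, and `estimate_lvv` are hypothetical, the bounding box is simplified to a cube whose edge is a power-of-two multiple of the smallest voxel, and the parameter fitting of step (6) is omitted because its form is not given here.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def bounding_cube(points: Sequence[Point], min_voxel: float) -> Tuple[Point, int]:
    """Step (1): origin and octree depth of a cube enclosing all points."""
    mins = tuple(min(p[i] for p in points) for i in range(3))
    maxs = tuple(max(p[i] for p in points) for i in range(3))
    extent = max(maxs[i] - mins[i] for i in range(3))
    # Edge length is min_voxel * 2**depth, so halving ends at the smallest voxel.
    depth = max(0, math.ceil(math.log2(max(extent, min_voxel) / min_voxel)))
    return mins, depth


def covered_volume(points: List[Point], origin: Point, depth: int,
                   min_voxel: float, threshold: float) -> float:
    """Steps (3) to (5): accumulate the volume of sufficiently dense voxels."""
    if not points:
        return 0.0
    edge = min_voxel * 2 ** depth
    volume = edge ** 3
    if len(points) / volume >= threshold:
        return volume  # dense enough: voxel treated as fully covered
    if depth == 0:
        return 0.0  # smallest scale reached and still too sparse
    half = edge / 2
    children: Dict[Tuple[int, ...], List[Point]] = {}
    for p in points:
        # Octant index per axis, clamped so boundary points stay inside.
        key = tuple(max(0, min(1, int((p[i] - origin[i]) // half))) for i in range(3))
        children.setdefault(key, []).append(p)
    total = 0.0
    for key, child_points in children.items():
        child_origin = tuple(origin[i] + key[i] * half for i in range(3))
        total += covered_volume(child_points, child_origin, depth - 1, min_voxel, threshold)
    return total


def estimate_lvv(points: Sequence[Point], min_voxel: float, threshold: float) -> float:
    """Uncorrected volume estimate (no parameter fitting applied)."""
    origin, depth = bounding_cube(points, min_voxel)
    return covered_volume(list(points), origin, depth, min_voxel, threshold)
```

In this sketch a coarse voxel is counted whole as soon as its density reaches the threshold, so dense regions are resolved in few steps while sparse regions are refined down to the smallest scale.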

3. Implementation of the VO-LVV Estimation Model

- (1) Obtain the XYZ space coordinate information for all point clouds.

- (2) Construct an octree organizational structure for the point cloud collection.

- (3) Use the voxel nearest neighbor method to establish a fast search method for point clouds in voxel space.

- (4) Establish a method for measuring the volume of voxels at different levels.
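Items (3) and (4) can be illustrated with a hash-based voxel index. This is a minimal sketch under assumptions, not the implementation used in the study: the function names are hypothetical, the neighbor search inspects only the 27 voxels around the query (so it finds the true nearest point only when that point lies within one voxel edge of the query), and the voxel volume at a level is taken as the cube of the root edge halved once per level.

```python
import math
from collections import defaultdict
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Key = Tuple[int, int, int]


def voxel_key(p: Point, resolution: float) -> Key:
    """Integer index of the voxel that contains point p."""
    return tuple(math.floor(c / resolution) for c in p)


def build_voxel_index(points: Sequence[Point], resolution: float) -> Dict[Key, List[Point]]:
    """Group points by voxel so that a lookup touches only nearby points."""
    index: Dict[Key, List[Point]] = defaultdict(list)
    for p in points:
        index[voxel_key(p, resolution)].append(p)
    return index


def nearest_in_neighbourhood(index: Dict[Key, List[Point]], query: Point,
                             resolution: float) -> Optional[Point]:
    """Closest stored point among the 27 voxels around the query, or None."""
    kx, ky, kz = voxel_key(query, resolution)
    best, best_d2 = None, math.inf
    for dx in (-1, 0, 1):
        for dy in (-1, 0, 1):
            for dz in (-1, 0, 1):
                # .get avoids creating empty entries in the defaultdict
                for p in index.get((kx + dx, ky + dy, kz + dz), ()):
                    d2 = sum((a - b) ** 2 for a, b in zip(p, query))
                    if d2 < best_d2:
                        best, best_d2 = p, d2
    return best


def voxel_volume(root_edge: float, level: int) -> float:
    """Volume of one voxel at a given octree level (level 0 is the root)."""
    return (root_edge / 2 ** level) ** 3
```

For example, with a root edge of 0.8 m, `voxel_volume(0.8, 2)` gives the volume of a 0.2 m voxel, i.e., 0.008 m³.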

4. Experimental Verification of the VO-LVV Estimation Model

- (1)

- Through the testing of vegetation point cloud samples, as compared with the wrapped surface approach, our LVV measurement method used in this study enabled faster calculation, and the acceleration ratio generally reached approximately 44:1–102:1, thus indicating good calculation efficiency.

- (2)

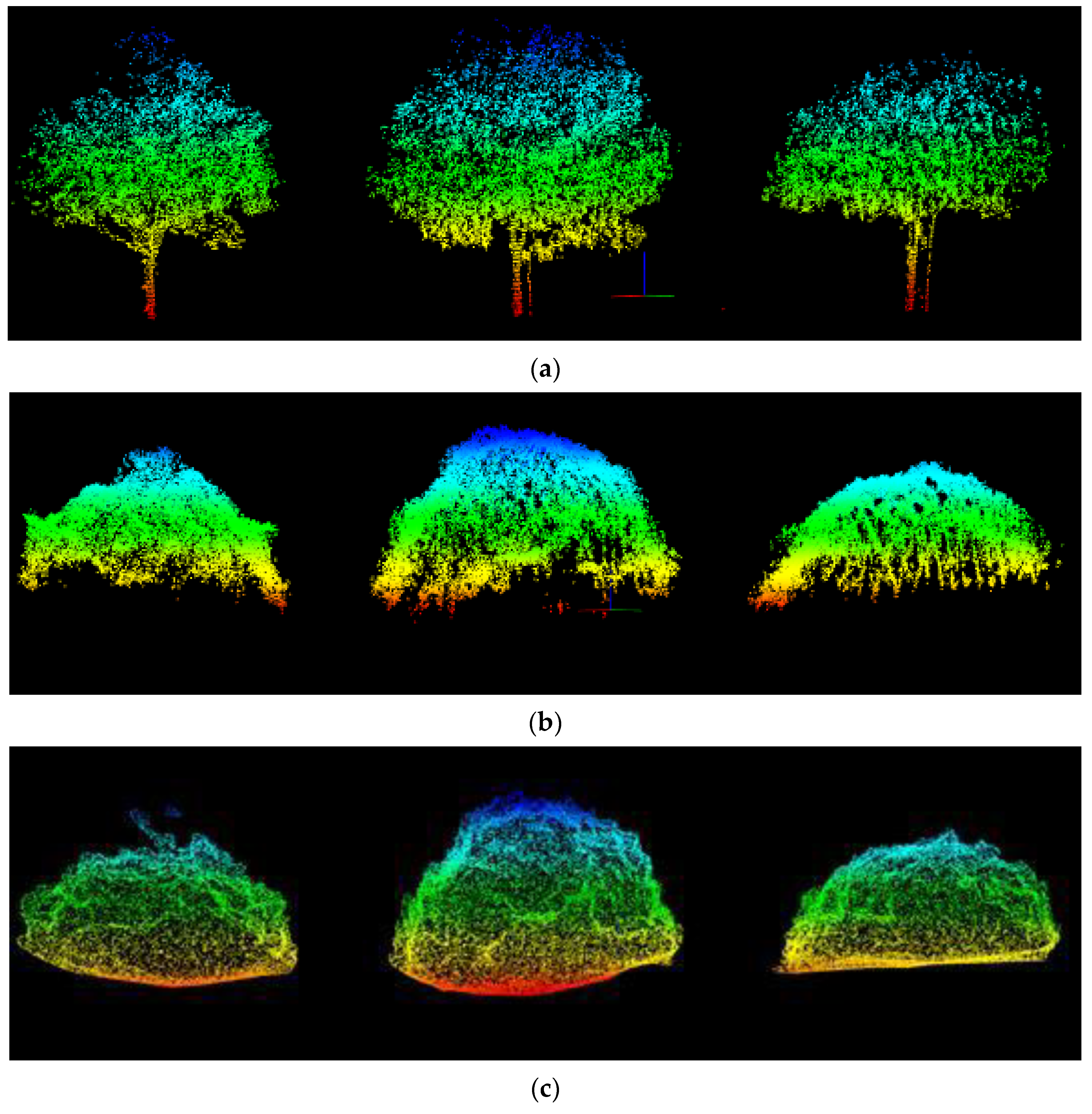

- For point cloud data from three different sources, when the same calculation method is used, e.g., the wrapped surface approach or the VO-LVV measurement method, the corresponding LVV results can be calculated. However, comparison of the proximity of the results to the true value of LVV indicated that the colocation results from the point cloud data based on 3D reconstruction with the UAV airborne oblique photogrammetry technique were better than those obtained through the other methods (MLS or UAV airborne LiDAR). Therefore, the point cloud data with higher point density appear to improve the LVV calculation accuracy. Meanwhile, the mean value (16.0617) of the first method for the three data sources is closer to the true value (25.7401) than that of the second method (14.0726).

- (3)

- For the most suitable point cloud data source for LVV estimation, i.e., the point cloud data from 3D reconstruction, the absolute value of the measurement error rate (difference between measured value and true value/true value) was 19.9%, thus indicating better measurement accuracy than the 26.8% absolute value of the traditional wrapped surface approach.
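The ratios and error rates quoted in this list can be recomputed from the single-tree results table. The short Python sketch below does so; the values are copied from that table, while the dictionary layout and function names are illustrative choices rather than part of the original study.

```python
TRUE_LVV = 25.7401  # m^3, theoretical value from the single-tree results table

# (calculated LVV in m^3, calculation time in s) for each data source
WRAPPED = {
    "MLS": (16.1555, 0.308),
    "Airborne LiDAR": (13.1832, 1.673),
    "3D reconstruction": (18.8464, 7.660),
}
VO_LVV = {
    "MLS": (11.3601, 0.007),
    "Airborne LiDAR": (10.2427, 0.024),
    "3D reconstruction": (20.6150, 0.075),
}


def error_rate(measured: float, true_value: float) -> float:
    """Absolute error rate: |measured - true| / true."""
    return abs(measured - true_value) / true_value


def acceleration_ratios(slow: dict, fast: dict) -> dict:
    """Ratio of calculation times (slow / fast) per data source."""
    return {source: slow[source][1] / fast[source][1] for source in slow}


def mean_lvv(results: dict) -> float:
    """Mean calculated LVV over all data sources."""
    return sum(lvv for lvv, _ in results.values()) / len(results)


if __name__ == "__main__":
    for source, ratio in acceleration_ratios(WRAPPED, VO_LVV).items():
        print(f"{source}: acceleration ratio {ratio:.1f}:1")
    for name, results in (("wrapped surface", WRAPPED), ("VO-LVV", VO_LVV)):
        rate = error_rate(results["3D reconstruction"][0], TRUE_LVV)
        print(f"{name}: mean LVV {mean_lvv(results):.4f}, error rate {rate:.1%}")
```

The recomputed acceleration ratios are about 44, 70, and 102 for the three sources, and the error rates for the 3D reconstruction point cloud are 19.9% (VO-LVV) and 26.8% (wrapped surface approach), in agreement with the text.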

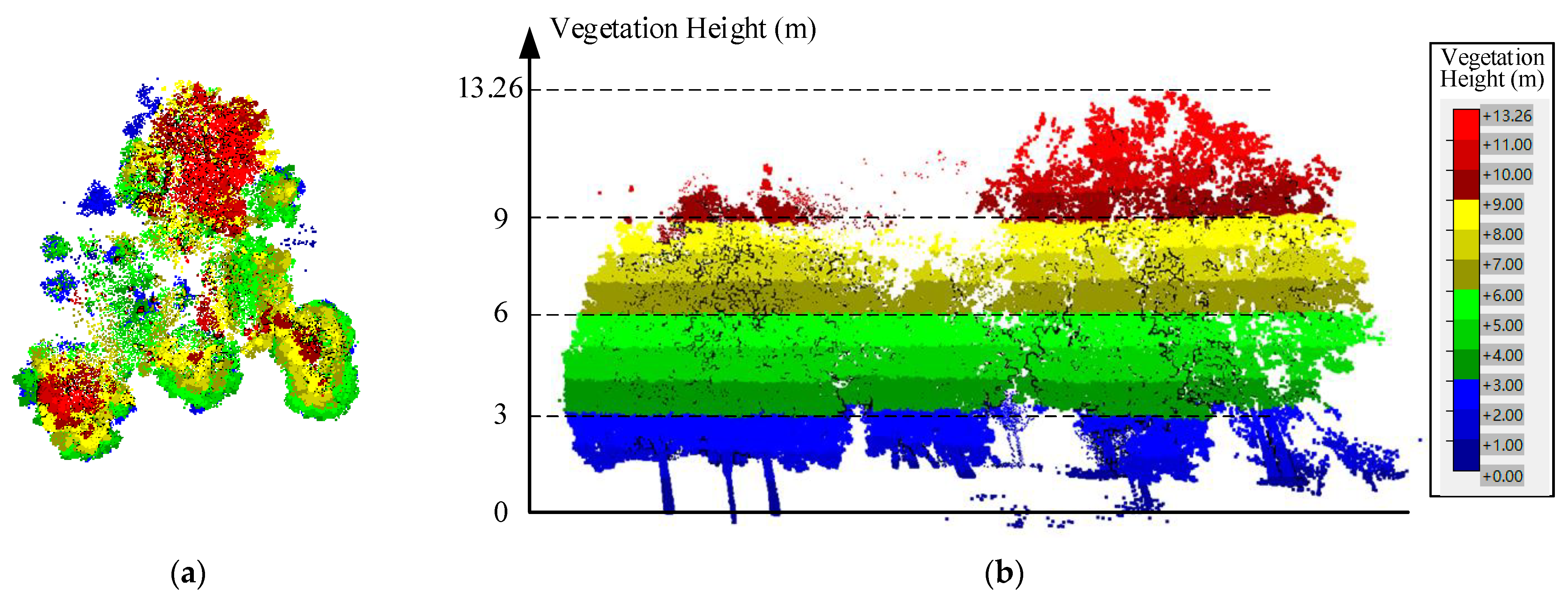

- (1) The VO-LVV estimation method can maintain a linear relationship with the single-plant case in calculations involving multiple plants. Therefore, this method is suitable for calculation scenarios involving both single trees and multiple plants. In contrast, the wrapped surface approach and the formula measurement method can perform calculations only for single trees, and their calculation efficiencies are quite low.

- (2) Similar to the second item in the above analysis from Table 3.

- (3) The VO-LVV estimation method still maintained a good acceleration ratio relative to the wrapped surface approach, reaching approximately 98:1–230:1.

- (4) For the most suitable point cloud data source for LVV estimation, i.e., the point cloud data from 3D reconstruction, the absolute error rate of the VO-LVV method was 14.5%, whereas the absolute error rate of the wrapped surface approach was 15.2%.
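As a check on these figures, the acceleration ratios and error rates can be recomputed from the multi-plant results table (calculation times in seconds, LVV in m³), using the same error-rate definition as in the single-tree analysis:

$$
\begin{aligned}
\text{acceleration ratio (MLS)} &= \frac{2.291}{0.016} \approx 143, \\
\text{acceleration ratio (airborne LiDAR)} &= \frac{6.199}{0.063} \approx 98, \\
\text{acceleration ratio (3D reconstruction)} &= \frac{26.942}{0.117} \approx 230, \\
e_{\text{VO-LVV}} &= \frac{|68.3091 - 79.8619|}{79.8619} \approx 14.5\%, \\
e_{\text{wrapped}} &= \frac{|67.7621 - 79.8619|}{79.8619} \approx 15.2\%.
\end{aligned}
$$

The smallest and largest ratios give the quoted range of approximately 98:1–230:1.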

5. Discussion and Conclusions

- Compared with the traditional wrapped surface approach, the VO-LVV estimation method enabled a substantial efficiency improvement and higher calculation accuracy. For the most suitable point cloud data source for LVV estimation (i.e., the point cloud data from 3D reconstruction), the maximum calculation acceleration ratio reached 230:1, and the best reduction in the absolute error rate relative to the wrapped surface approach was 6.9% (from 26.8% to 19.9% in the single-tree test).

- Furthermore, the new method can be simultaneously applied to scenarios of single plants and multiple plants, and can be used for the calculation of LVV in areas with various vegetation types in cities.

- However, this method also has some limitations in the following aspects:

- In this method, the voxel resolution and density threshold strongly influence the LVV calculation results. Because a theoretical analysis of voxel scale division is lacking in this algorithm, it cannot process data with low point density.

- This method uses parameter fitting to compensate for the lack of information in the original point cloud data, and it does not analyze the spatial distribution of the point cloud data; therefore, errors may be present in the estimation results.

- The amount of verification data will be increased to verify the adaptability of the algorithm to different canopy structures.

- For low point density in the original point cloud data, the commonly used point cloud up-sampling algorithm [33] could be introduced.

- For the lack of information due to the point cloud acquisition method, point cloud completion technology based on deep learning [34] could be used to complete the canopy data and improve the calculation accuracy.

- Among different types of vegetation in cities, the lushness of branches and leaves greatly varies. Other measurement methods for specific tree species could be introduced and combined with the results of this study to yield an adaptive LVV high-precision calculation scheme. Through in-depth improvement of the method reported herein, a rapid estimation model of urban regional LVV could be established, which would enable urban greening measurements to be quickly obtained and analyzed.


References

1. Kim, J.; Mok, K. Study on the current status and direction of environmental governance around urban forest in Korea: With a focus on the recognition of local government officials. J. Korean Society For. Sci. 2010, 99, 580–589.

2. Grimmond, S.; Loridan, T.; Best, M. The importance of vegetation on urban surface-atmosphere exchanges: Evidence from measurements and modelling. In Proceedings of the International Workshop on Urban Weather and Climate: Observation and Modeling, Beijing, China, 12 July 2011.

3. Gao, Y.G.; Xu, H.Q. Estimation of multi-scale urban vegetation coverage based on multi-source remote sensing images. J. Infrared Millim. Waves (Chin. Ed.) 2017, 36, 225–235. (In Chinese)

4. Mishev, D. Vegetation index of a mixed class of natural formations. Acta Astronaut. 1992, 26, 665–667.

5. Hutmacher, A.M.; Zaimes, G.N.; Martin, J.; Green, D.M. Vegetation structure along urban ephemeral streams in southeastern Arizona. Urban Ecosyst. 2014, 17, 349–368.

6. Zhou, H.X.; Tao, G.X.; Yan, X.Y.; Sun, J.; Wu, Y. A review of research on the urban thermal environment effects of green quantity. Chin. J. Appl. Ecol. (Chin. Ed.) 2020, 31, 2804–2816.

7. Pataki, D.E. City trees: Urban greening needs better data. Nature 2013, 502, 624.

8. Zhao, W. Research on urban green space system evaluation index system. In Proceedings of the International Conference on Air Pollution and Environmental Engineering (APEE), Hong Kong, China, 26–28 October 2018; Volume 208.

9. Bai, X.Q.; Wang, W.; Lin, Z.Y.; Zhang, Y.J.; Wang, K. Three-dimensional measuring for green space based on high spatial resolution remote sensing images. Remote Sens. Land Resour. (Chin. Ed.) 2019, 31, 53–59.

10. Li, F.X.; Li, M.; Feng, X.G. High-precision method for estimating the three-dimensional green quantity of an urban forest. J. Indian Soc. Remote Sens. 2021, 49, 1407–1417.

11. Zheng, S.J.; Meng, C.; Xue, J.H.; Wu, Y.B.; Liang, J.; Xin, L.; Zhang, L. UAV-based spatial pattern of three-dimensional green volume and its influencing factors in Lingang New City in Shanghai, China. Front. Earth Sci. (Chin. Ed.) 2021, 15, 543–552.

12. Zhou, J.H.; Sun, T.Z. Study on remote sensing model of three-dimensional green biomass and the estimation of environmental benefits of greenery. Remote Sens. Environ. Chin. (Chin. Ed.) 1995, 10, 162–174.

13. Feng, D.L.; Liu, Y.H.; Wang, F.; Yuan, Y. Review on ecological benefits evaluation of urban green space based on three-dimensional green quantity. Chin. Agr. Sci. Bull. (Chin. Ed.) 2017, 33, 129–133.

14. Huang, Y.; Yu, B.L.; Zhou, J.H.; Hu, C.L.; Tan, W.Q.; Hu, Z.M.; Wu, J.P. Toward automatic estimation of urban green volume using airborne LiDAR data and high-resolution remote sensing images. Front. Earth Sci. (Chin. Ed.) 2013, 7, 43–54.

15. Liu, C.F.; Jiang, Y.Z.; Zhang, Q.L.; Liu, J.; Wu, B.; Li, C.M.; Zhang, S.X.; Wen, J.B.; Liu, S.F.; Li, Y.B.; et al. Tridimensional green biomass measures of Shenyang urban forests. J. Beijing For. Univ. (Chin. Ed.) 2006, 28, 32–37.

16. Zhou, J.H. Theory and Practice on Database of three-dimensional vegetation quantity. Acta Geogr. Sin. (Chin. Ed.) 2001, 56, 14–23.

17. Zhou, Y.F.; Zhou, J.H. Fast method to detect and calculate LVV. Acta Ecol. Sin. (Chin. Ed.) 2006, 26, 4204–4211.

18. Nowak, D.J.; Crane, D.E.; Dwyer, J.F. Compensatory value of urban trees in the United States. J. Arboric. 2002, 28, 194–199.

19. Nowak, D.J.; Greenfield, E.J.; Hoehn, R.E.; Lapoint, E. Carbon storage and sequestration by trees in urban and community areas of the United States. Environ. Pollut. 2013, 178, 229–236.

20. Sander, H.; Polasky, S.; Haight, R.G. The value of urban tree cover: A hedonic property price model in Ramsey and Dakota Counties, Minnesota, USA. Ecol. Econ. 2010, 69, 1646–1656.

21. Sun, G.; Ranson, K.J.; Kimes, D.S.; Blair, J.B.; Kovacs, K. Forest vertical structure from GLAS: An evaluation using LVIS and SRTM data. Remote Sens. Environ. 2008, 112, 107–117.

22. Li, W.; Niu, Z.; Wang, C.; Gao, S.; Feng, Q.; Chen, H.Y. Forest above-ground biomass estimation at plot and tree levels using airborne LiDAR data. J. Remote Sens. (Chin. Ed.) 2015, 4, 669–679.

23. Dai, C. The Predicting Models of Crown Volume and Crown Surface Area about Main Species of Tree of Beijing; North China University of Science and Technology: Qinhuangdao, China, 2015.

24. Cháidez, J.D.J.N. Allometric equations and expansion factors for tropical dry forest trees of Eastern Sinaloa, Mexico. Trop. Subtrop. Agroecosyst. 2009, 10, 45–52.

25. Chen, X.X.; Li, J.C.; Xu, Y.L. Hierarchical measurement of urban living vegetation volume based on LiDAR point cloud data. Eng. Surv. Mapp. (Chin. Ed.) 2018, 27, 43–48.

26. Hosoi, F.; Nakai, Y.; Omasa, K. 3D voxel-based solid modeling of a broad-leaved tree for accurate volume estimation using portable scanning LiDAR. ISPRS J. Photogramm. 2013, 82, 41–48.

27. Wu, B.; Yu, B.L.; Yue, W.H.; Shu, S.; Tan, W.Q.; Hu, C.L.; Huang, Y.; Wu, J.P.; Liu, H.X. A voxel-based method for automated identification and morphological parameters estimation of individual street trees from mobile laser scanning data. Remote Sens. 2013, 5, 584–611.

28. Che, D.F.; Zhang, C.L.; Du, H.Y. 3D model organization method of digital city based on BSP tree and grid division. Mine Surv. (Chin. Ed.) 2019, 47, 81–84.

29. Saona-Vazquez, C.; Navazo, I.; Brunet, P. The visibility octree: A data structure for 3D navigation. Comput. Graph. 1999, 23, 635–643.

30. Li, F.X.; Shi, H.; Sa, L.W.; Feng, X.G.; Li, M. 3D green volume measurement of single tree using 3D laser point cloud data and differential method. J. Xi’an Univ. of Arch. Tech. (Chin. Ed.) 2017, 49, 530–535.

31. Losasso, F.; Gibou, F.; Fedkiw, R. Simulating water and smoke with an octree data structure. ACM T. Graph. 2004, 23, 457–462.

32. Kato, A.; Moskal, L.M.; Schiess, P.; Swanson, M.E.; Calhoun, D.; Stuetzle, W. Capturing tree crown formation through implicit surface reconstruction using airborne LiDAR data. Remote Sens. Environ. 2009, 113, 1148–1162.

33. Yu, L.Q.; Li, X.Z.; Fu, C.W.; Cohen-Or, D.; Heng, P.A. PU-Net: Point Cloud Upsampling Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2790–2799.

34. Cheng, M.; Li, G.Y.; Chen, Y.P.; Chen, J.; Wang, C.; Li, J. Dense Point Cloud Completion Based on Generative Adversarial Network. IEEE T. Geosci. Remote 2022, 60, 1–10.

| Type | iScan-S-Z (MLS) | Zenmuse L1 (Airborne LiDAR) |
|---|---|---|
| Limit measurement distance | 119 m | 450 m |
| Laser emission frequency | 1.01 × 10⁷ p/s | 2.4 × 10⁶ p/s (single echo), 4.8 × 10⁶ p/s (multiple echo) |
| Ranging accuracy | 0.9 mm (at 50 m) | 3 cm (at 100 m) |
| Angular resolution | 0.0088° | 0.01° |
| System accuracy | 5 cm (at 100 m) | 10 cm (at 50 m) |

| Point Cloud Source | Data Format | Point Cloud Quantity | Point Cloud Density, Resolution | Data Details |
|---|---|---|---|---|
| MLS | .las | 23,762 | 8000 points/m³, 5 cm | Missing part of the crown dorsal information |
| UAV airborne LiDAR | .las | 50,398 | 8000 points/m³, 5 cm | Missing the trunk and crown bottom |
| UAV airborne oblique photogrammetry | .las | 587,005 | 1,000,000 points/m³, 1 cm | Missing the trunk and crown bottom |

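| Calculation Method | Measurement Data | Calculated LVV (m³) | Calculation Time (s) |
|---|---|---|---|
| Wrapped surface approach | MLS | 16.1555 | 0.308 |
| Wrapped surface approach | Airborne LiDAR | 13.1832 | 1.673 |
| Wrapped surface approach | 3D reconstruction point cloud | 18.8464 | 7.660 |
| VO-LVV method (voxel resolution: 0.2 m, density threshold: 1000/m³) | MLS | 11.3601 | 0.007 |
| VO-LVV method (voxel resolution: 0.2 m, density threshold: 1000/m³) | Airborne LiDAR | 10.2427 | 0.024 |
| VO-LVV method (voxel resolution: 0.2 m, density threshold: 1000/m³) | 3D reconstruction point cloud | 20.6150 | 0.075 |
| True value (based on diameter-height correlation equation) | Crown width | 4.355 m | / |
| True value (based on diameter-height correlation equation) | Crown height | 2.592 m | / |
| True value (based on diameter-height correlation equation) | LVV in theory | 25.7401 m³ | / |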
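The theoretical value listed above is numerically consistent with the volume of an ellipsoidal crown of width $x$ and height $y$. This is an observation drawn from the tabulated numbers, not a formula stated in this section, so the crown shape should be read as an assumption:

$$
V = \frac{\pi x^{2} y}{6} = \frac{\pi \times 4.355^{2} \times 2.592}{6} \approx 25.74\ \text{m}^{3}
$$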

| Calculation Method | Measurement Data | Calculated LVV (m³) | Calculation Time (s) |
|---|---|---|---|
| Wrapped surface approach | MLS | 58.1347 | 2.291 |
| Wrapped surface approach | Airborne LiDAR | 39.9395 | 6.199 |
| Wrapped surface approach | 3D reconstruction point cloud | 67.7621 | 26.942 |
| VO-LVV method (voxel resolution: 0.2 m, density threshold: 1000/m³) | MLS | 39.2171 | 0.016 |
| VO-LVV method (voxel resolution: 0.2 m, density threshold: 1000/m³) | Airborne LiDAR | 39.1926 | 0.063 |
| VO-LVV method (voxel resolution: 0.2 m, density threshold: 1000/m³) | 3D reconstruction point cloud | 68.3091 | 0.117 |
| True value (based on diameter-height correlation equation) | LVV in theory | 79.8619 | / |

| Point Cloud Source | Data Format | Point Cloud Quantity | Point Cloud Density, Resolution | Data Details |
|---|---|---|---|---|
| MLS | .las | 301,462 | 8000 points/m³, 5 cm | Missing part of the crown dorsal information |
| Airborne LiDAR | .las | 723,622 | 8000 points/m³, 5 cm | Missing the trunk and crown bottom |
| UAV airborne oblique photogrammetry | .las | 7,137,059 | 1,000,000 points/m³, 1 cm | Missing the trunk and crown bottom |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, F.; Peng, S.; Chen, S.; Cao, H.; Ma, N. VO-LVV—A Novel Urban Regional Living Vegetation Volume Quantitative Estimation Model Based on the Voxel Measurement Method and an Octree Data Structure. Remote Sens. 2022, 14, 855. https://doi.org/10.3390/rs14040855
