Multiview Image Matching of Optical Satellite and UAV Based on a Joint Description Neural Network

Abstract

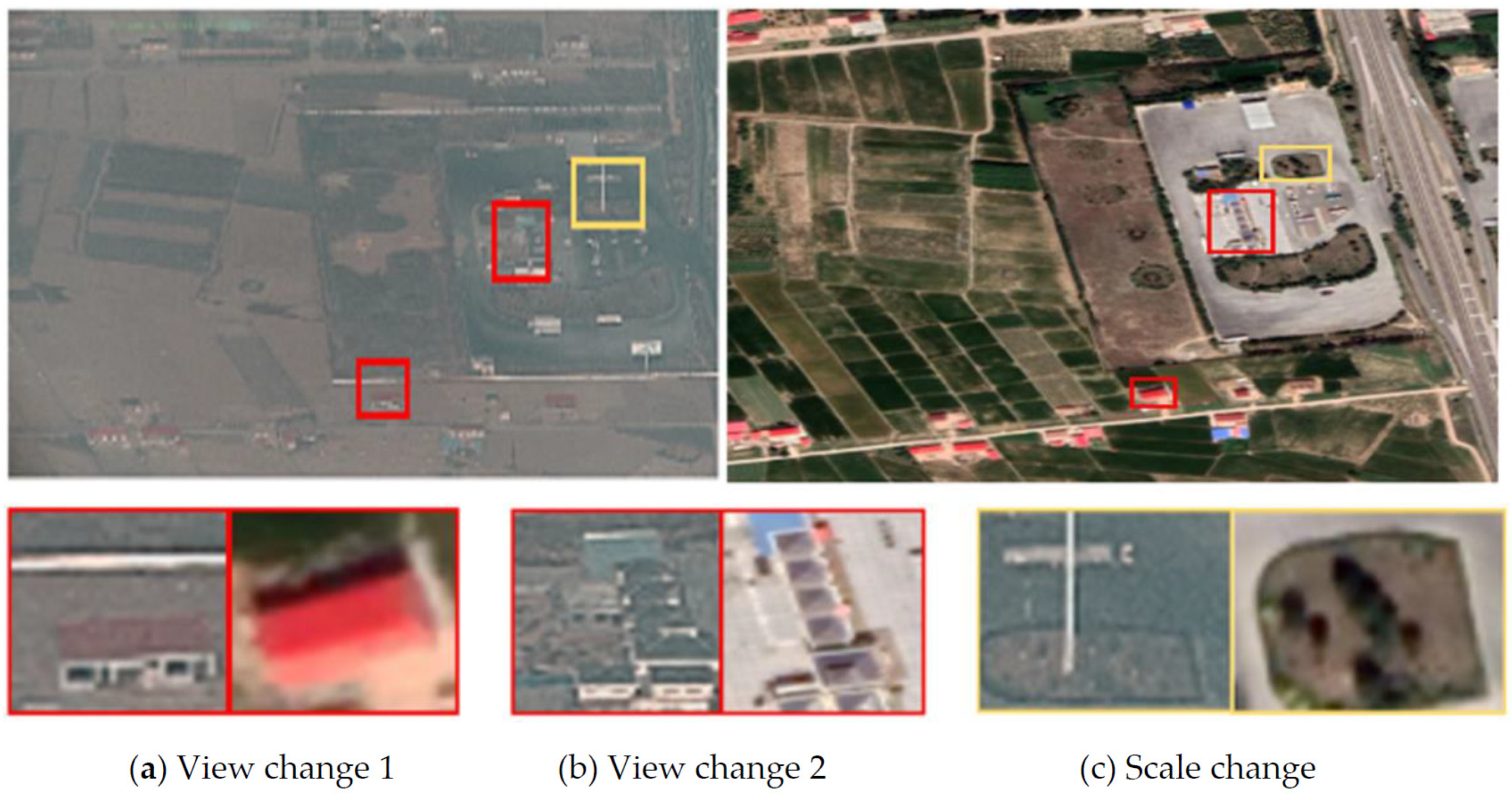

1. Introduction

- (1) A soft description method is designed for network training and auxiliary description.

- (2) A high-dimensional hard description method is designed to ensure the matching accuracy of the model.

- (3) A joint descriptor supplements the hard descriptor so that different features become more distinguishable from one another.
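The contributions above do not say how the hard and soft descriptors are fused or compared. The sketch below is purely illustrative and is not the paper's method. It assumes that both descriptors are dense float vectors per keypoint, that the joint distance is the hard-descriptor distance plus a weighted soft-descriptor distance (the weight `alpha` and all function names are hypothetical), and that a match is kept only when the two keypoints are mutual nearest neighbours.

```python
import numpy as np


def l2_normalize(x: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Scale each row (one descriptor per keypoint) to unit L2 norm."""
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)


def pairwise_unit_distance(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Euclidean distances between all rows of a and b after normalization."""
    a, b = l2_normalize(a), l2_normalize(b)
    # For unit vectors, ||u - v||^2 = 2 - 2 * (u . v); clip tiny negatives.
    return np.sqrt(np.clip(2.0 - 2.0 * a @ b.T, 0.0, None))


def joint_distance(hard_a, soft_a, hard_b, soft_b, alpha: float = 0.5):
    """Assumed (hypothetical) fusion rule: d_joint = d_hard + alpha * d_soft.

    The soft term supplements the hard term, so pairs that look alike in the
    hard descriptor can still be separated by the soft descriptor.
    """
    return (pairwise_unit_distance(hard_a, hard_b)
            + alpha * pairwise_unit_distance(soft_a, soft_b))


def mutual_nearest_neighbors(dist: np.ndarray) -> np.ndarray:
    """Return an (K, 2) array of (i, j) pairs that are each other's nearest neighbour."""
    nn_ab = dist.argmin(axis=1)  # best column (image B) for each row (image A)
    nn_ba = dist.argmin(axis=0)  # best row (image A) for each column (image B)
    idx_a = np.arange(dist.shape[0])
    keep = nn_ba[nn_ab] == idx_a
    return np.stack([idx_a[keep], nn_ab[keep]], axis=1)
```

Setting `alpha = 0` reduces the sketch to plain hard-descriptor matching, which makes it easy to measure how much the soft term changes the resulting match set.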

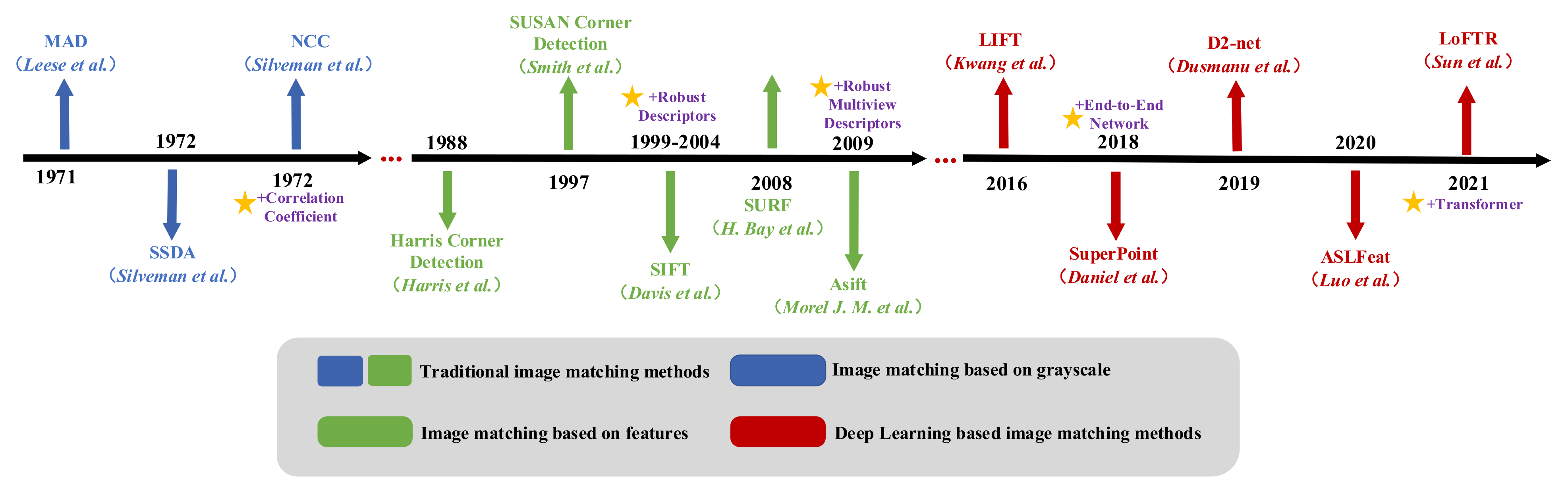

2. Related Works

2.1. Matching Method Based on Grayscale

2.2. Matching Method Based on Features

2.3. Multiview Space-Sky Image Matching Method

2.4. Matching Method Based on Deep Learning

3. Proposed Method

3.1. Feature Detection and Hard Descriptor

3.2. Soft Descriptor

3.3. Joint Descriptors

3.4. Multiscale Models

3.5. Training Loss

3.6. Feature Matching Method

3.7. Model Training Methods and Environment

4. Experiment and Results

4.1. Data

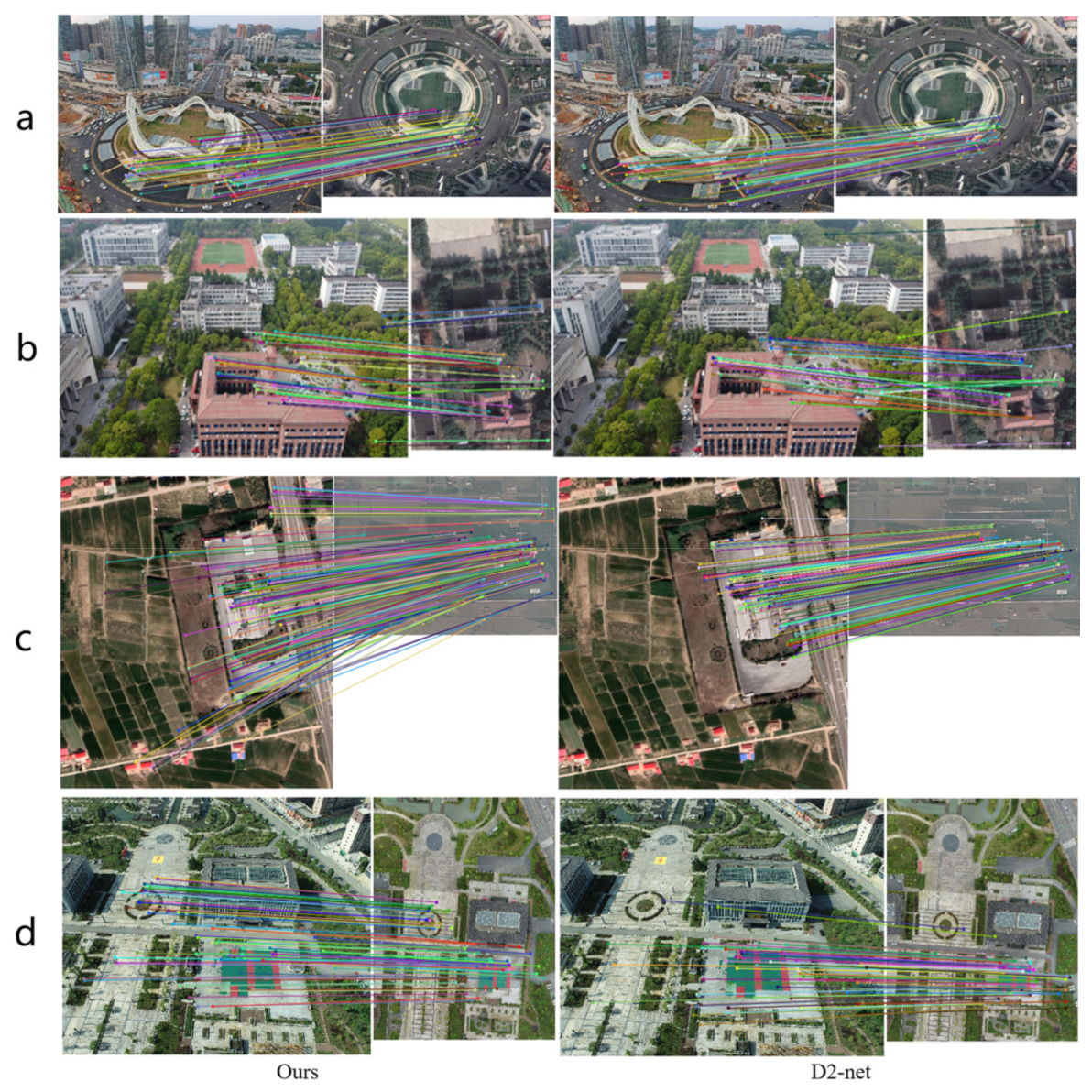

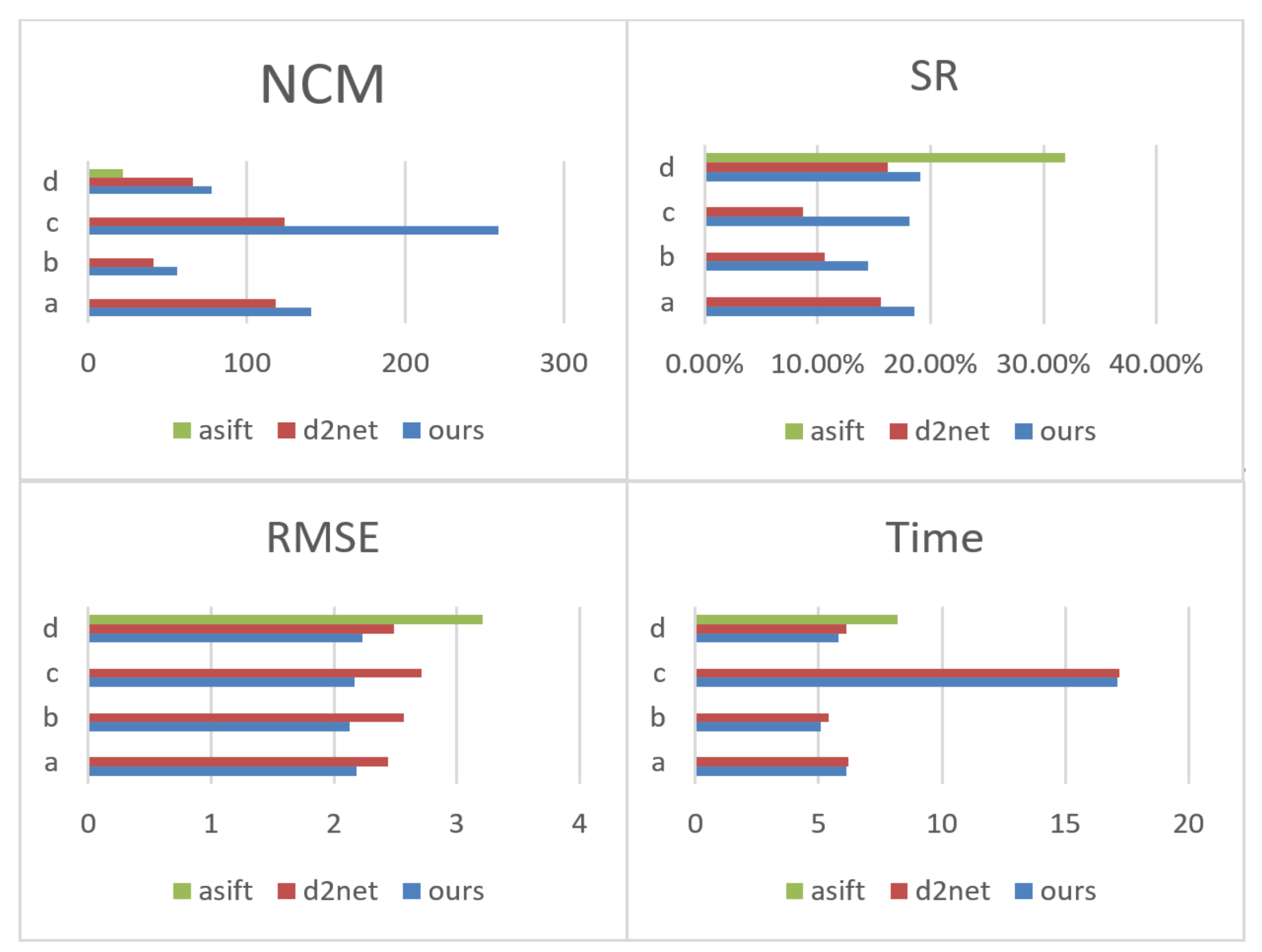

4.2. Comparison of Image Matching Methods

4.3. Angle Adaptability Experiment

4.4. Application in Image Geometric Correction

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Li, Y.; Chen, W.; Zhang, Y.; Tao, C.; Xiao, R.; Tan, Y. Accurate cloud detection in high-resolution remote sensing imagery by weakly supervised deep learning. Remote Sens. Environ. 2020, 250, 112045.

2. Dou, P.; Chen, Y. Dynamic monitoring of land-use/land-cover change and urban expansion in Shenzhen using Landsat imagery from 1988 to 2015. Int. J. Remote Sens. 2017, 38, 5388–5407.

3. Shi, W.; Zhang, M.; Zhang, R.; Chen, S.; Zhan, Z. Change detection based on artificial intelligence: State-of-the-art and challenges. Remote Sens. 2020, 12, 1688.

4. Guo, Y.; Du, L.; Wei, D.; Li, C. Robust SAR Automatic Target Recognition via Adversarial Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 716–729.

5. Guerra, E.; Munguía, R.; Grau, A. UAV visual and laser sensors fusion for detection and positioning in industrial applications. Sensors 2018, 18, 2071.

6. Ma, J.; Jiang, X.; Fan, A.; Jiang, J.; Yan, J. Image matching from handcrafted to deep features: A survey. Int. J. Comput. Vis. 2021, 129, 23–79.

7. Ye, Y.; Shan, J.; Hao, S.; Bruzzone, L.; Qin, Y. A local phase based invariant feature for remote sensing image matching. ISPRS J. Photogramm. Remote Sens. 2018, 142, 205–221.

8. Manzo, M. Attributed relational SIFT-based regions graph: Concepts and applications. Mach. Learn. Knowl. Extr. 2020, 2, 13.

9. Zhao, X.; Li, H.; Wang, P.; Jing, L. An Image Registration Method Using Deep Residual Network Features for Multisource High-Resolution Remote Sensing Images. Remote Sens. 2021, 13, 3425.

10. Zeng, L.; Du, Y.; Lin, H.; Wang, J.; Yin, J.; Yang, J. A Novel Region-Based Image Registration Method for Multisource Remote Sensing Images via CNN. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1821–1831.

11. Wang, S.; Quan, D.; Liang, X.; Ning, M.; Guo, Y.; Jiao, L. A deep learning framework for remote sensing image registration. ISPRS J. Photogramm. Remote Sens. 2018, 145, 148–164.

12. Leese, J.A.; Novak, C.S.; Clark, B.B. An automated technique for obtaining cloud motion from geosynchronous satellite data using cross correlation. J. Appl. Meteorol. Climatol. 1971, 10, 118–132.

13. Barnea, D.I.; Silverman, H.F. A class of algorithms for fast digital image registration. IEEE Trans. Comput. 1972, C-21, 179–186.

14. Zitova, B.; Flusser, J. Image registration methods: A survey. Image Vis. Comput. 2003, 21, 977–1000.

15. Harris, C.G.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; Volume 15, pp. 147–151.

16. Smith, S.M.; Brady, J.M. SUSAN—A new approach to low level image processing. Int. J. Comput. Vis. 1997, 23, 45–78.

17. Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Corfu, Greece, 20–25 September 1999; IEEE: Piscataway, NJ, USA, 1999; Volume 2, pp. 1150–1157.

18. Bosch, A.; Zisserman, A.; Munoz, X. Scene classification using a hybrid generative/discriminative approach. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 712–727.

19. Ke, Y.; Sukthankar, R. PCA-SIFT: A more distinctive representation for local image descriptors. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 2, p. 2.

20. Morel, J.M.; Yu, G. ASIFT: A new framework for fully affine invariant image comparison. SIAM J. Imaging Sci. 2009, 2, 438–469.

21. Etezadifar, P.; Farsi, H. A New Sample Consensus Based on Sparse Coding for Improved Matching of SIFT Features on Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2020, 58, 5254–5263.

22. Jiang, S.; Jiang, W. Reliable image matching via photometric and geometric constraints structured by Delaunay triangulation. ISPRS J. Photogramm. Remote Sens. 2019, 153, 1–20.

23. Li, J.; Hu, Q.; Ai, M. LAM: Locality affine-invariant feature matching. ISPRS J. Photogramm. Remote Sens. 2019, 154, 28–40.

24. Yu, Q.; Ni, D.; Jiang, Y.; Yan, Y.; An, J.; Sun, T. Universal SAR and optical image registration via a novel SIFT framework based on nonlinear diffusion and a polar spatial-frequency descriptor. ISPRS J. Photogramm. Remote Sens. 2021, 171, 1–17.

25. Gao, X.; Shen, S.; Zhou, Y.; Cui, H.; Zhu, L.; Hu, Z. Ancient Chinese Architecture 3D Preservation by Merging Ground and Aerial Point Clouds. ISPRS J. Photogramm. Remote Sens. 2018, 143, 72–84.

26. Hu, H.; Zhu, Q.; Du, Z.; Zhang, Y.; Ding, Y. Reliable spatial relationship constrained feature point matching of oblique aerial images. Photogramm. Eng. Remote Sens. 2015, 81, 49–58.

27. Jiang, S.; Jiang, W. On-Board GNSS/IMU Assisted Feature Extraction and Matching for Oblique UAV Images. Remote Sens. 2017, 9, 813.

28. Yi, K.M.; Trulls, E.; Lepetit, V.; Fua, P. LIFT: Learned invariant feature transform. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 467–483.

29. Balntas, V.; Johns, E.; Tang, L.; Mikolajczyk, K. PN-Net: Conjoined triple deep network for learning local image descriptors. arXiv 2016, arXiv:1601.05030.

30. DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-supervised interest point detection and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 224–236.

31. Bhowmik, A.; Gumhold, S.; Rother, C.; Brachmann, E. Reinforced Feature Points: Optimizing Feature Detection and Description for a High-Level Task. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020.

32. Ono, Y.; Trulls, E.; Fua, P.; Yi, K.M. LF-Net: Learning local features from images. arXiv 2018, arXiv:1805.09662.

33. Sun, J.; Shen, Z.; Wang, Y.; Bao, H.; Zhou, X. LoFTR: Detector-free local feature matching with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 8922–8931.

34. Hughes, L.H.; Marcos, D.; Lobry, S.; Tuia, D.; Schmitt, M. A deep learning framework for matching of SAR and optical imagery. ISPRS J. Photogramm. Remote Sens. 2020, 169, 166–179.

35. Dusmanu, M.; Rocco, I.; Pajdla, T.; Pollefeys, M.; Sivic, J.; Torii, A.; Sattler, T. D2-Net: A trainable CNN for joint description and detection of local features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8092–8101.

36. Megalingam, R.K.; Sriteja, G.; Kashyap, A.; Apuroop, K.G.S.; Gedala, V.V.; Badhyopadhyay, S. Performance Evaluation of SIFT & FLANN and HAAR Cascade Image Processing Algorithms for Object Identification in Robotic Applications. Int. J. Pure Appl. Math. 2018, 118, 2605–2612.

37. Li, H.; Qin, J.; Xiang, X.; Pan, L.; Ma, W.; Xiong, N.N. An efficient image matching algorithm based on adaptive threshold and RANSAC. IEEE Access 2018, 6, 66963–66971.

38. Yang, T.Y.; Hsu, J.H.; Lin, Y.Y.; Chuang, Y.Y. DeepCD: Learning deep complementary descriptors for patch representations. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3314–3332.

| Method | Advantages | Disadvantages |
|---|---|---|
| NCC, MAD, SSDA (grayscale-based) | High matching accuracy. | Low efficiency; poor adaptability to changes in scale, illumination, noise, etc. |
| SIFT, ASIFT, HSV-SIFT (feature-based) | High adaptability to changes in scale, illumination, and rotation. | Low adaptability to radiometric distortion. |
| Refs. [26,27] (multiview space-sky matching) | High adaptability to large dip angles. | Dependent on prior knowledge. |
| LIFT, SuperPoint, D2-Net (deep-learning-based) | Strong feature extraction capability; can adapt to different factors through training. | Hardware-dependent; complex models; tedious training process. |
| Ours | Strong feature extraction capability; high adaptability to scale, large dip angles, radiometric distortion, etc. | Hardware-dependent; currently cannot meet real-time processing requirements. |
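The grayscale-based row can be illustrated with zero-mean normalized cross-correlation (NCC). The sketch below uses NumPy and an exhaustive sliding window; the function names are illustrative and it is not taken from any of the cited methods. It shows both sides of the trade-off listed in the table: the score is unchanged by a linear brightness and contrast change (positive gain plus offset), but the search cost grows with the image size times the template size, and nothing in the score compensates for scale or rotation differences.

```python
import numpy as np


def ncc(patch: np.ndarray, template: np.ndarray) -> float:
    """Zero-mean normalized cross-correlation of two equal-sized patches, in [-1, 1]."""
    p = patch.astype(np.float64) - patch.mean()
    t = template.astype(np.float64) - template.mean()
    denom = np.sqrt((p * p).sum() * (t * t).sum())
    # A flat (constant) patch has zero variance; treat it as uncorrelated.
    return float((p * t).sum() / denom) if denom > 0 else 0.0


def match_template_ncc(image: np.ndarray, template: np.ndarray):
    """Slide the template over every position; return the best (row, col) and its score."""
    th, tw = template.shape
    best_score, best_pos = -np.inf, (0, 0)
    for r in range(image.shape[0] - th + 1):
        for c in range(image.shape[1] - tw + 1):
            s = ncc(image[r:r + th, c:c + tw], template)
            if s > best_score:
                best_score, best_pos = s, (r, c)
    return best_pos, best_score
```

For example, a template cut from an image and remapped to `2 * patch + 10` is still found at its original position with a score of about 1, whereas resizing or rotating the template would break the pixel-by-pixel correspondence the score relies on.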

| Test Data | UAV Image | Satellite Image | Study Area Description |
|---|---|---|---|
| Group a | Sensor: UAV; Resolution: 0.24 m; Date: n/a; Size: 1080 × 811 | Sensor: Satellite; Resolution: 0.24 m; Date: n/a; Size: 1080 × 811 | The study area is in Wuhan City, Hubei Province, China. The UAV image was taken over a square by a small low-altitude UAV; the satellite image was downloaded from Google Satellite Images. The two images differ significantly in viewing angle, which makes matching more difficult. |
| Group b | Sensor: UAV; Resolution: 1 m; Date: n/a; Size: 1000 × 562 | Sensor: Satellite; Resolution: 0.5 m; Date: n/a; Size: 402 × 544 | The study area is Hubei University of Technology, Wuhan, China. The UAV image was taken on campus by a small low-altitude UAV; the satellite image was downloaded from Google Satellite Images. The large difference in viewing angle between the two images makes matching more difficult. |
| Group c | Sensor: UAV; Resolution: 0.5 m; Date: n/a; Size: 1920 × 1080 | Sensor: Satellite; Resolution: 0.24 m; Date: n/a; Size: 2344 × 2124 | The study area is in Tongxin County, Gansu Province, China. The UAV image was taken over a gas station by a large high-altitude UAV; the satellite image was downloaded from Google Satellite Images. Besides a significant difference in viewing angle, the images come from different sensors, and the resulting radiometric differences make matching more difficult. |
| Group d | Sensor: UAV; Resolution: 0.3 m; Date: n/a; Size: 800 × 600 | Sensor: Satellite; Resolution: 0.3 m; Date: n/a; Size: 590 × 706 | The study area is in Anshun City, Guizhou Province, China. The UAV image was taken in a park by a large high-resolution UAV; the satellite image was downloaded from Google Satellite Images. Both images contain distinct and abundant linear features, but their shooting angles differ considerably, which makes matching more difficult. |

| Image | Method | NCM | SR | RMSE | MT |
|---|---|---|---|---|---|
| Group a | Ours | 141 | 18.6% | 2.18 | 6.1 s |
| Group a | D2-Net | 118 | 15.6% | 2.44 | 6.2 s |
| Group a | ASIFT | 0 | - | - | - |
| Group b | Ours | 56 | 14.5% | 2.13 | 5.1 s |
| Group b | D2-Net | 41 | 10.6% | 2.57 | 5.4 s |
| Group b | ASIFT | 0 | - | - | - |
| Group c | Ours | 259 | 18.1% | 2.17 | 17.1 s |
| Group c | D2-Net | 124 | 8.7% | 2.71 | 17.2 s |
| Group c | ASIFT | 0 | - | - | - |
| Group d | Ours | 78 | 19.1% | 2.23 | 5.8 s |
| Group d | D2-Net | 66 | 16.2% | 2.49 | 6.1 s |
| Group d | ASIFT | 22 | 31.9% | 3.21 | 8.2 s |
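The column abbreviations are not defined in this excerpt. Assuming NCM is the number of correct matches, SR is the ratio of correct matches to all putative matches, RMSE is the residual of the correct matches against a reference transformation, and MT is the matching time, the three accuracy columns could be computed roughly as below. The reference homography `H_ref`, the correctness `threshold`, and the choice to compute RMSE over correct matches only are all assumptions, not details confirmed by the paper; the threshold is left as a required argument for that reason.

```python
import numpy as np


def project(H: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Apply a 3x3 homography to an (N, 2) array of (x, y) points."""
    pts = np.asarray(pts, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]


def match_metrics(pts_uav, pts_sat, H_ref, threshold):
    """Hypothetical NCM / SR / RMSE computation for matched point pairs.

    A pair counts as correct when the UAV point, mapped by the assumed
    reference homography, lands within `threshold` pixels of its satellite
    partner. RMSE is computed over the correct pairs only (one convention).
    """
    pts_sat = np.asarray(pts_sat, dtype=np.float64)
    residuals = np.linalg.norm(project(H_ref, pts_uav) - pts_sat, axis=1)
    correct = residuals < threshold
    ncm = int(correct.sum())
    sr = ncm / len(residuals) if len(residuals) else 0.0
    # With no correct matches there is nothing to average (shown as "-" in the table).
    rmse = float(np.sqrt(np.mean(residuals[correct] ** 2))) if ncm else float("nan")
    return ncm, sr, rmse
```

Returning NaN when no match is correct mirrors the table, where ASIFT rows with an NCM of 0 have no SR, RMSE, or MT value.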

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, C.; Liu, C.; Li, H.; Ye, Z.; Sui, H.; Yang, W. Multiview Image Matching of Optical Satellite and UAV Based on a Joint Description Neural Network. Remote Sens. 2022, 14, 838. https://doi.org/10.3390/rs14040838
