Damaged Building Extraction Using Modified Mask R-CNN Model Using Post-Event Aerial Images of the 2016 Kumamoto Earthquake

Abstract

1. Introduction

2. Datasets and Methods

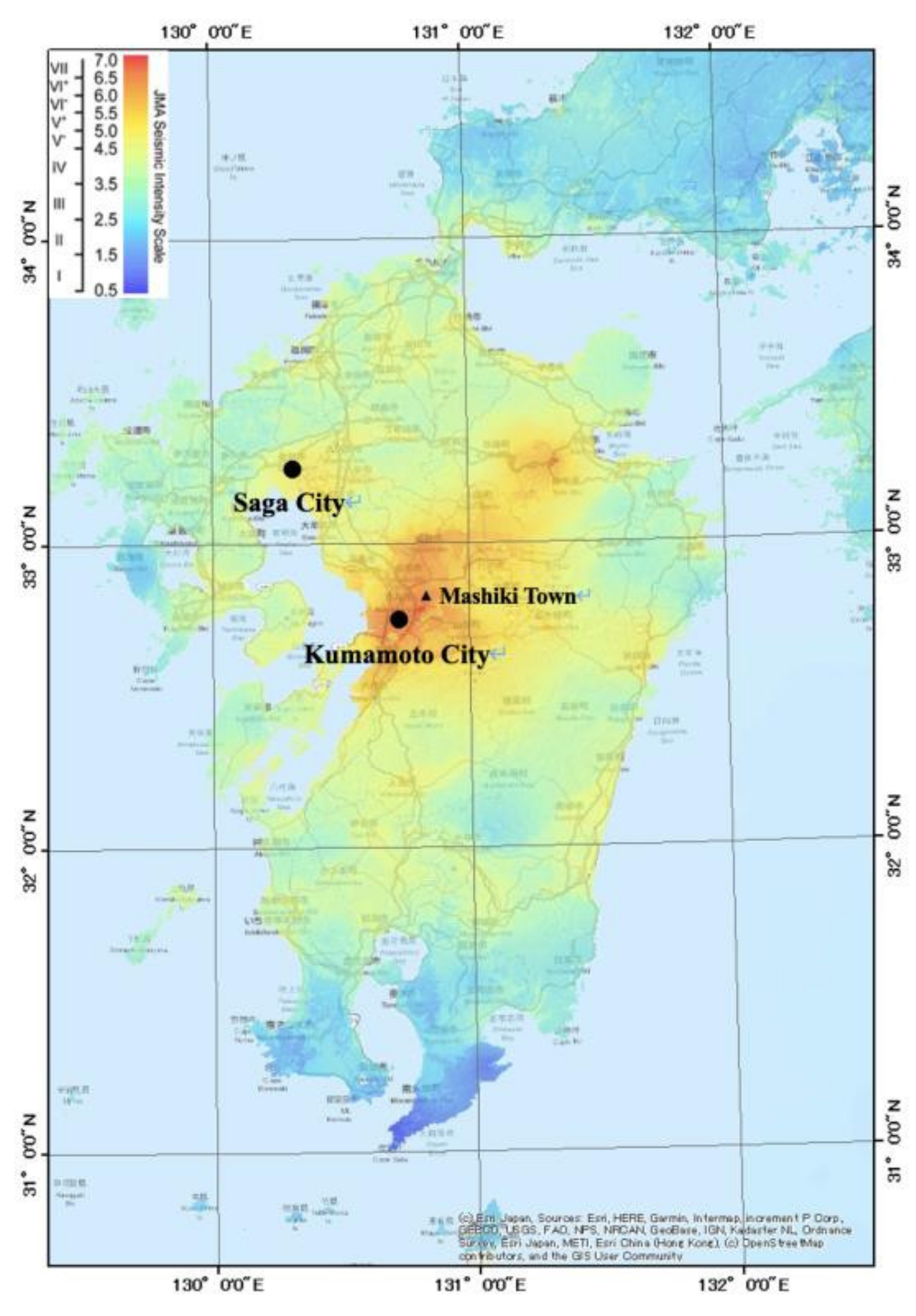

2.1. Outline of the 2016 Kumamoto Earthquake

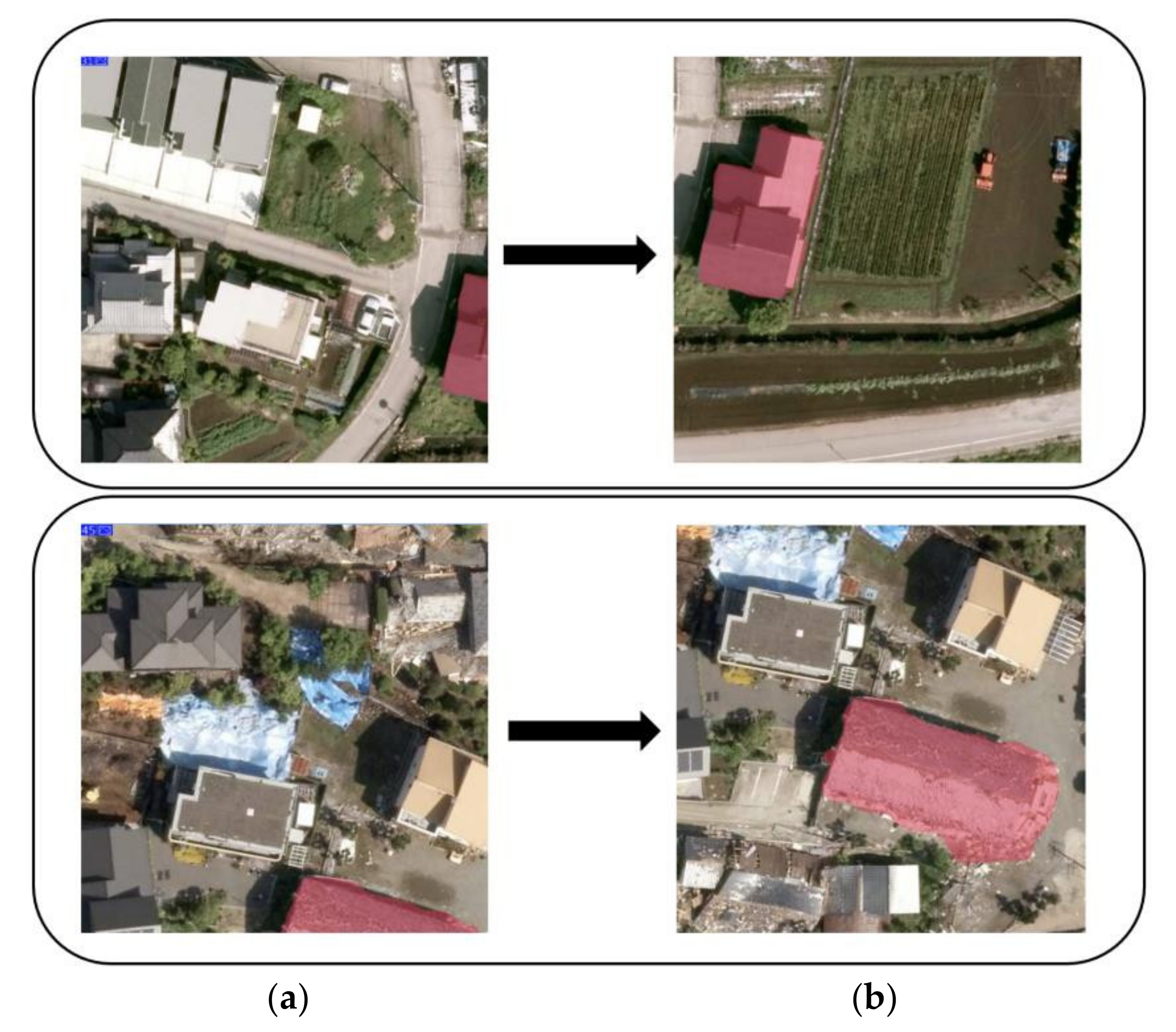

2.2. Datasets

2.3. Proposed Mask R-CNN Model

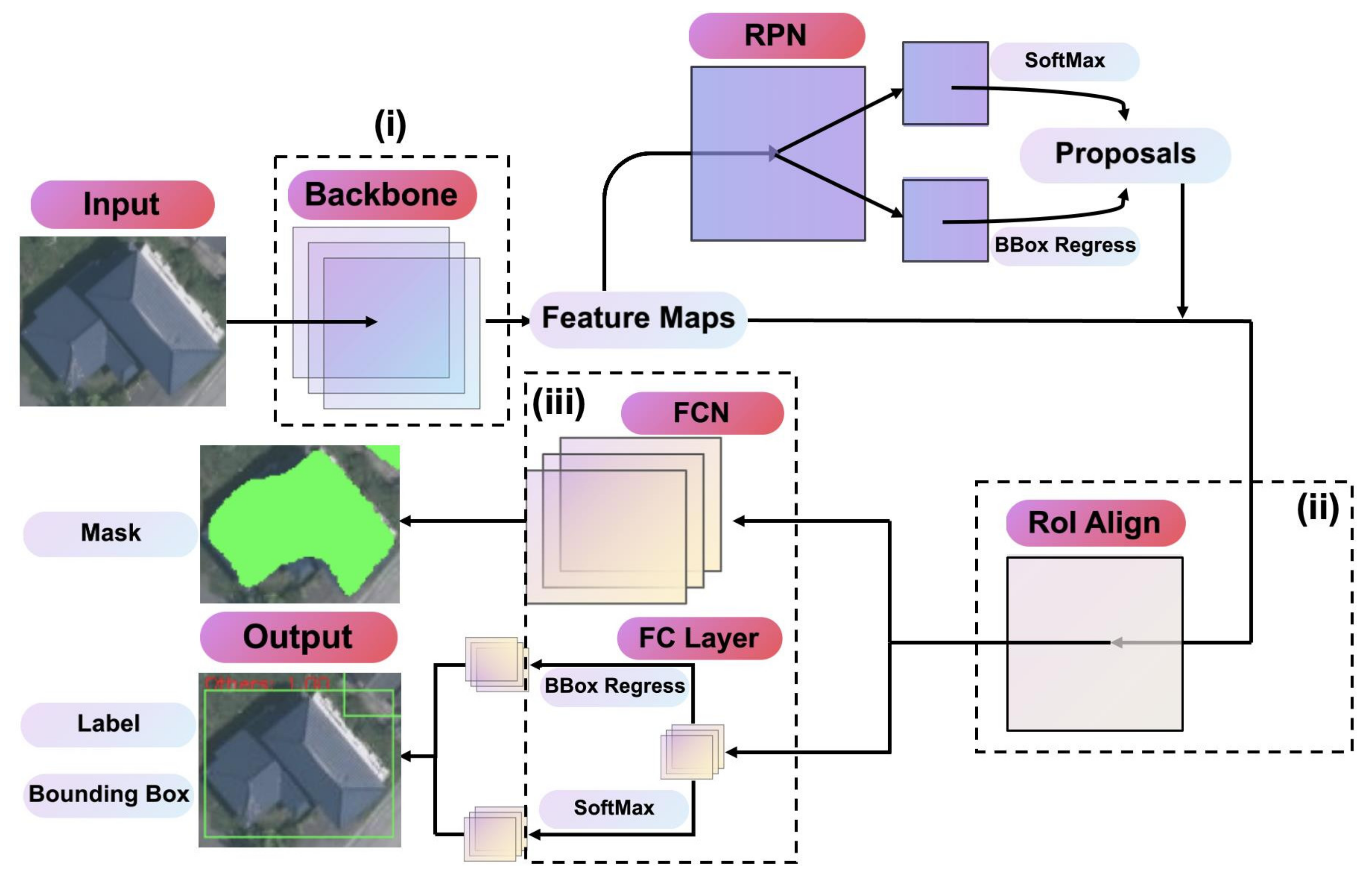

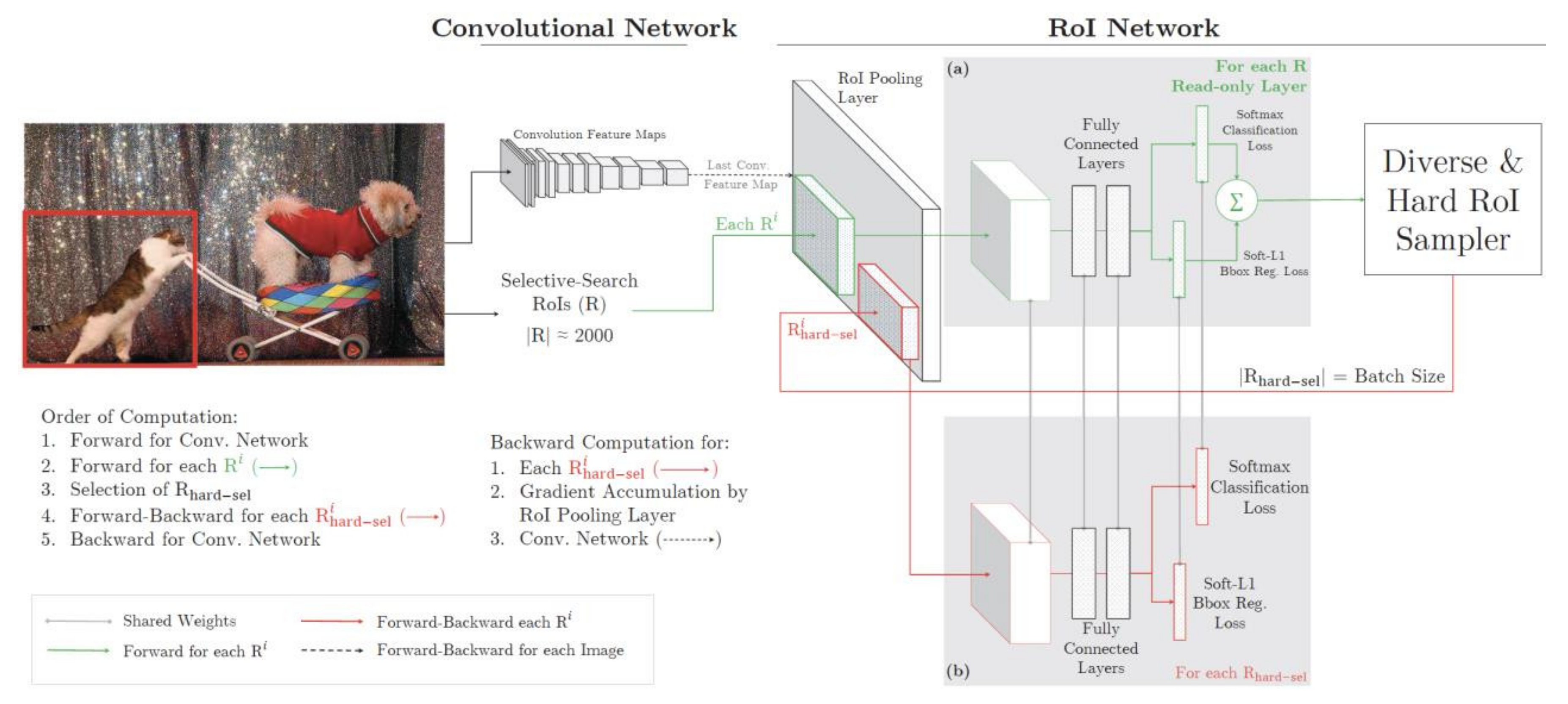

2.3.1. Mask R-CNN Architecture

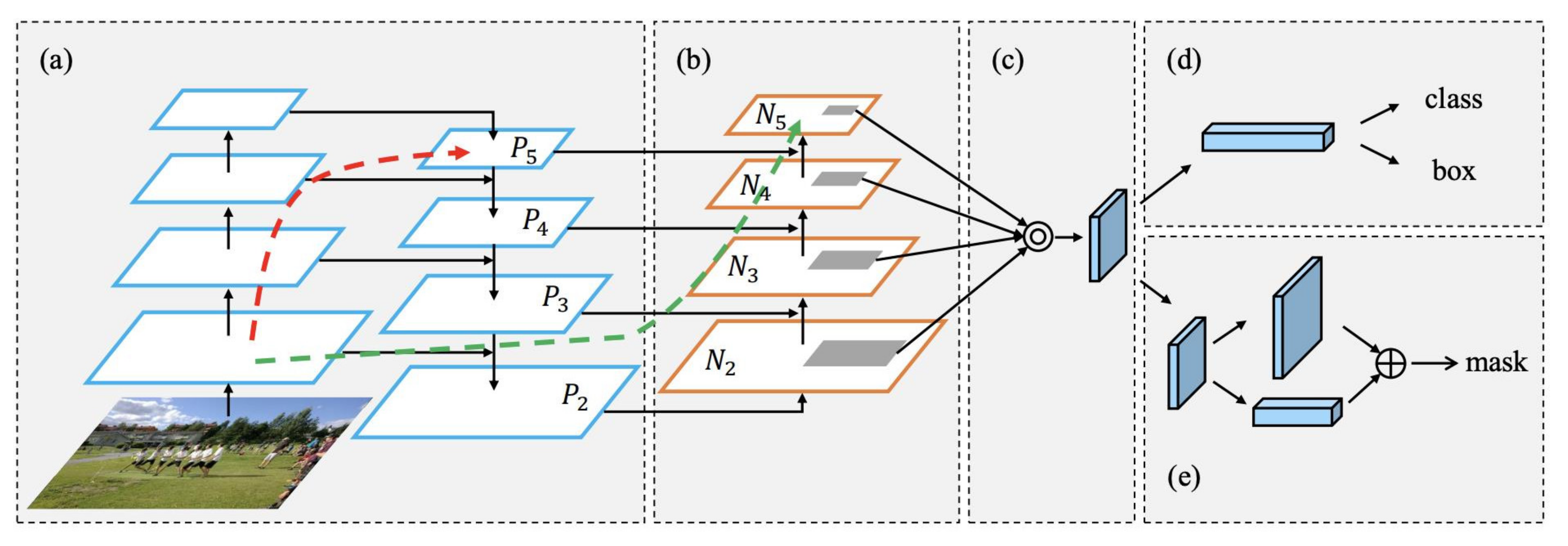

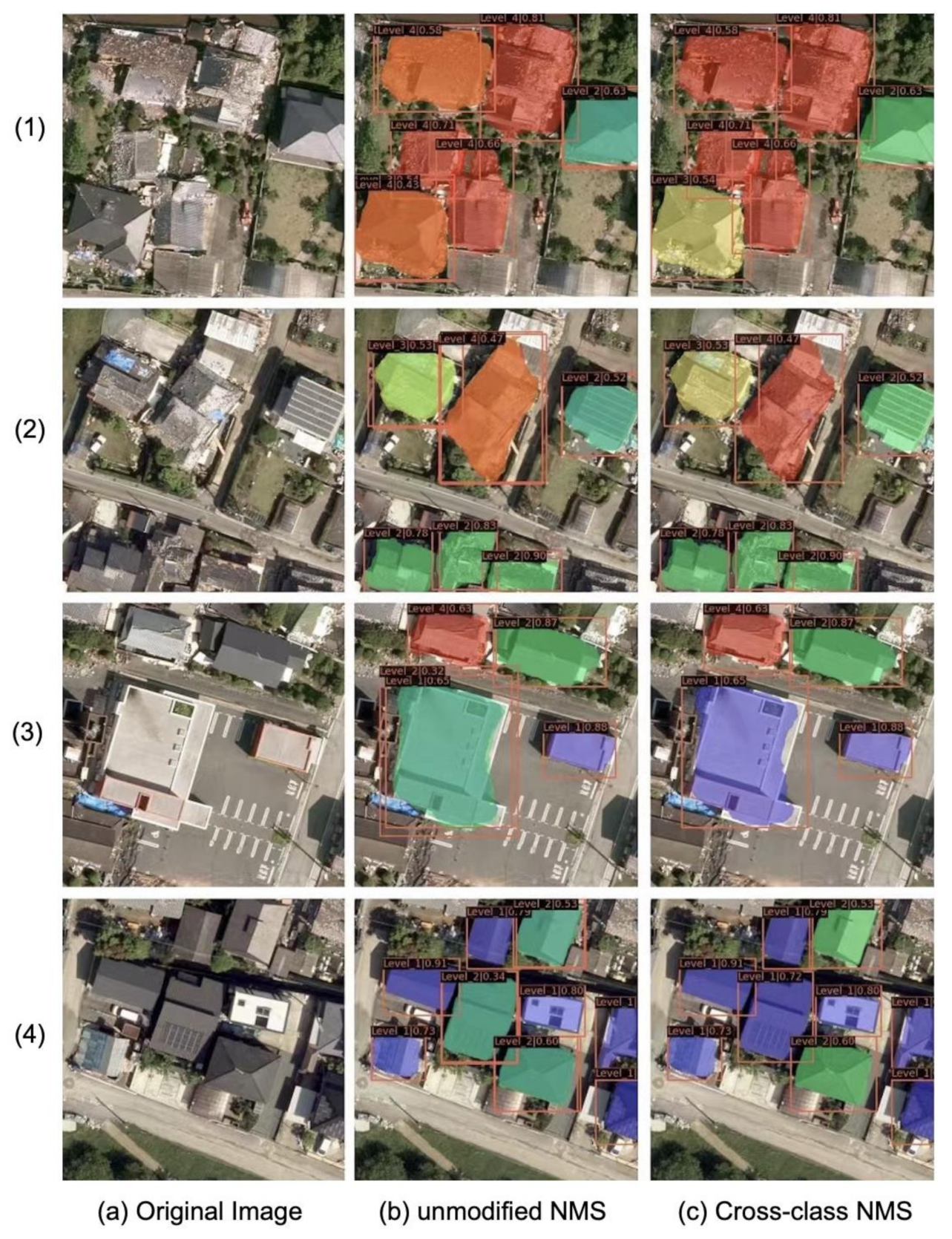

2.3.2. Model Modification

2.4. Details of Training and Evaluation Methods

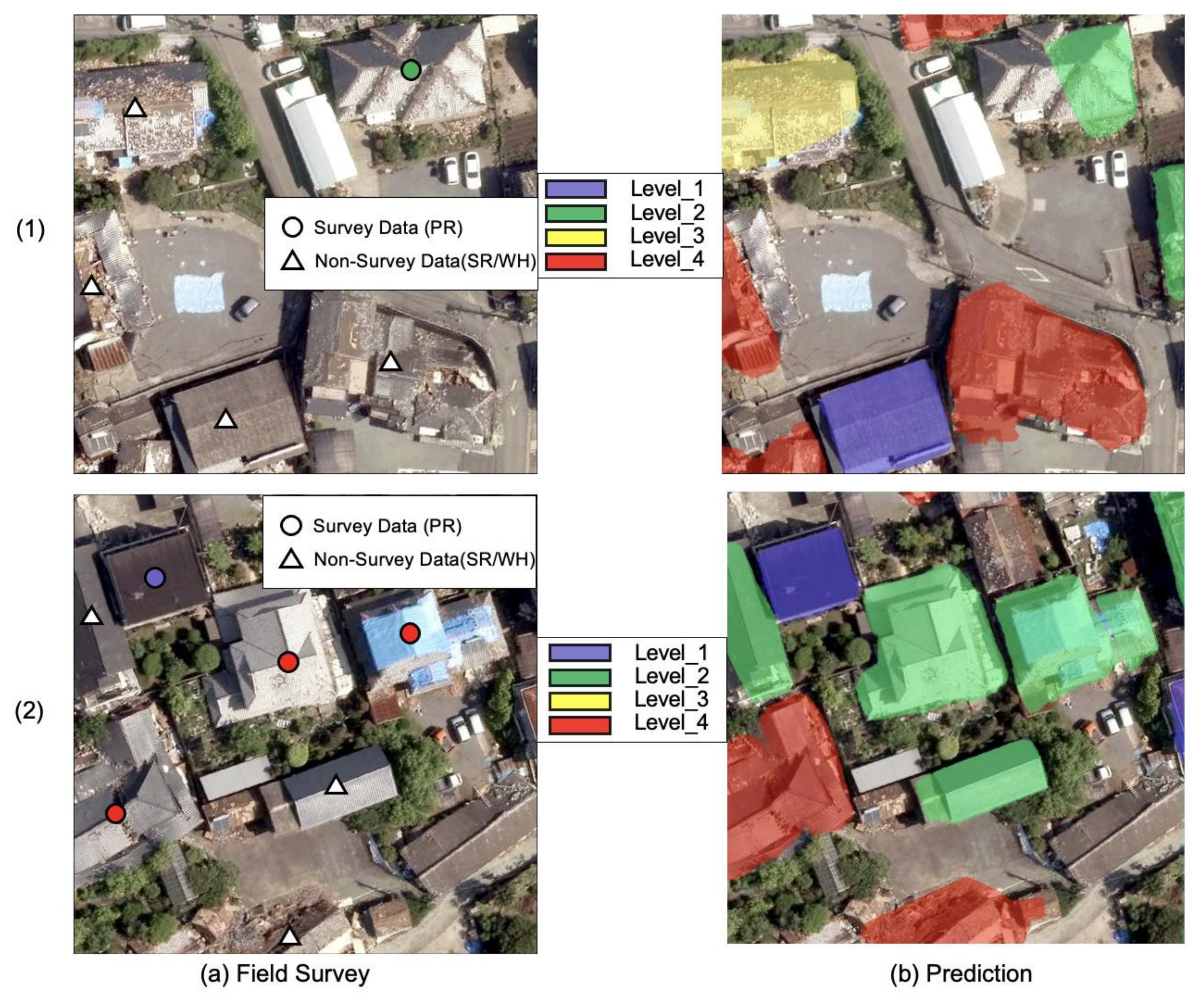

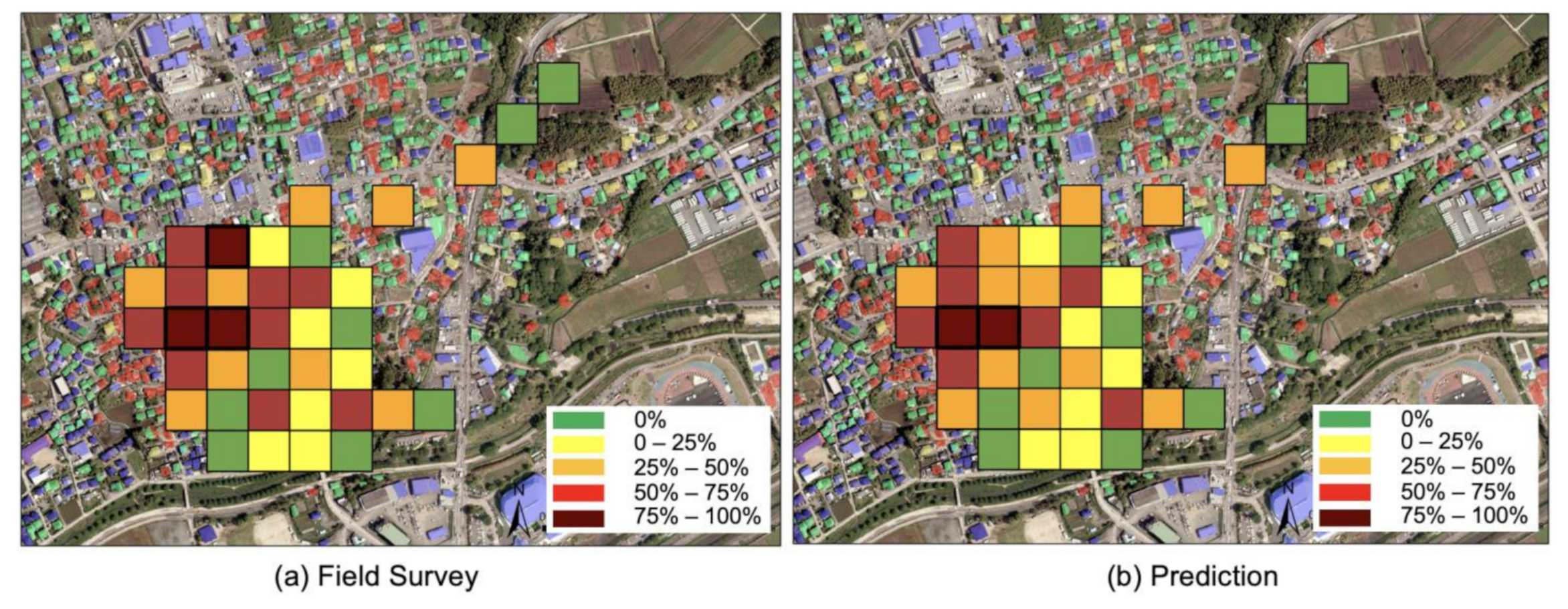

3. Results

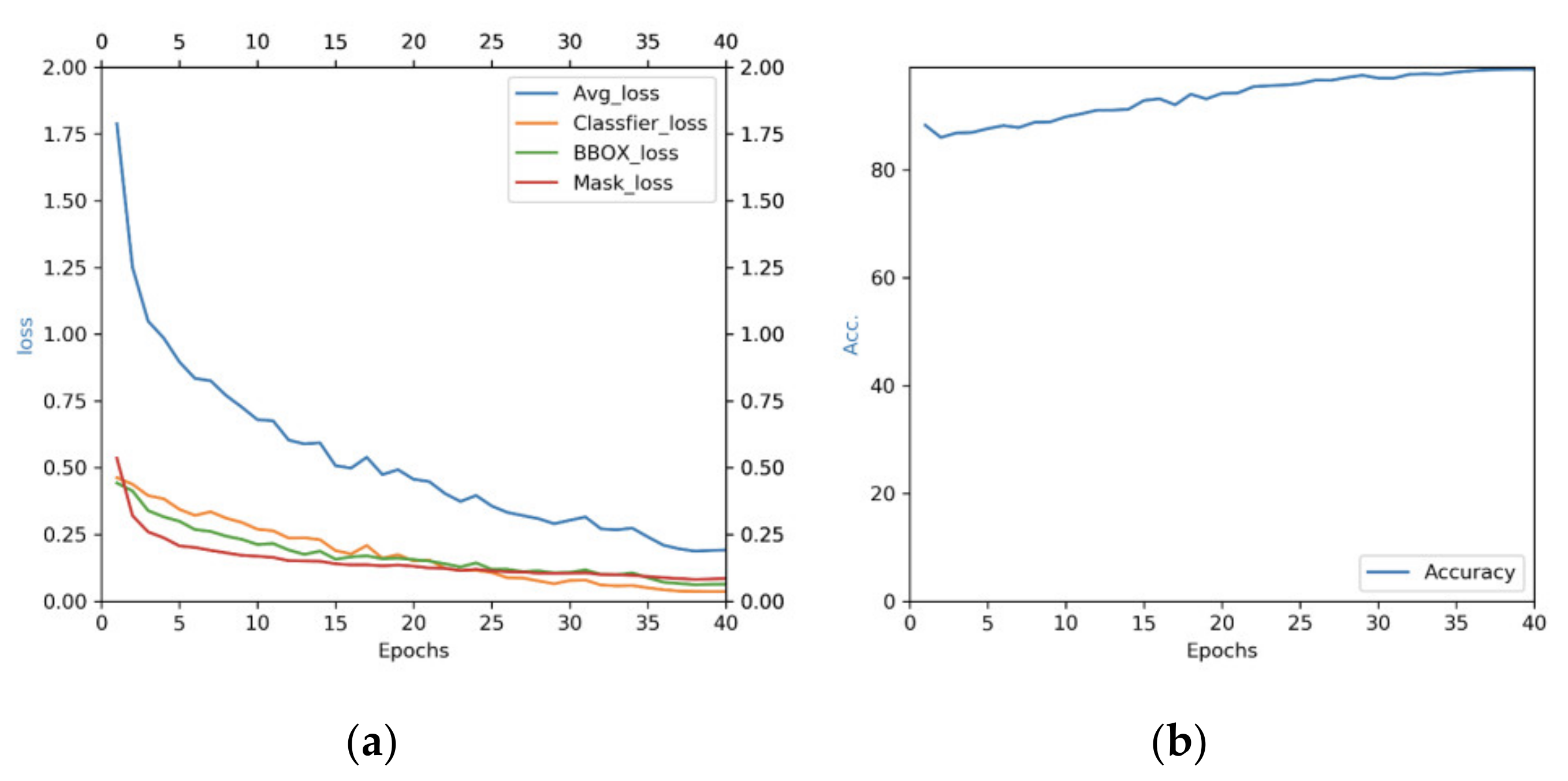

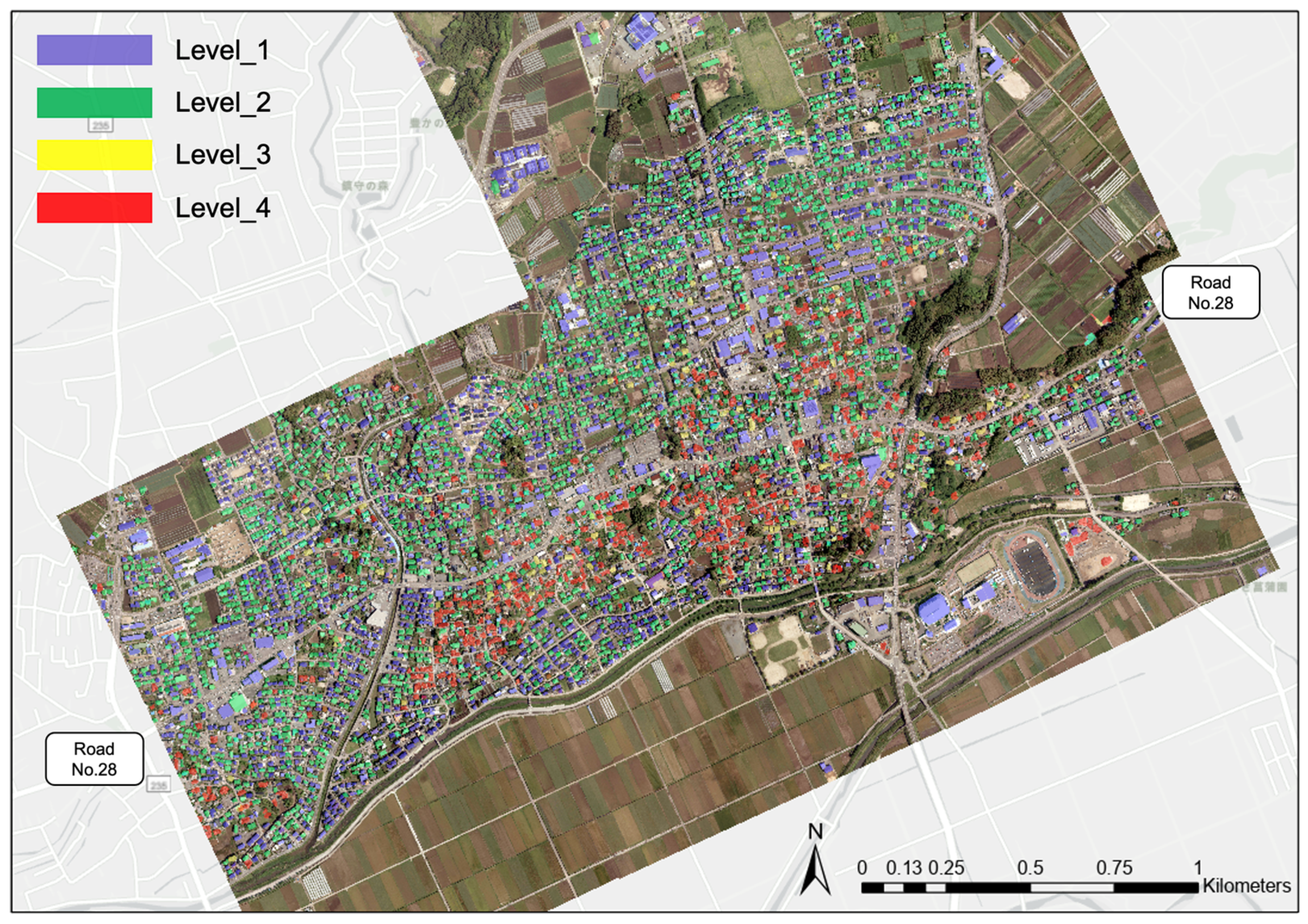

3.1. Experiment Results

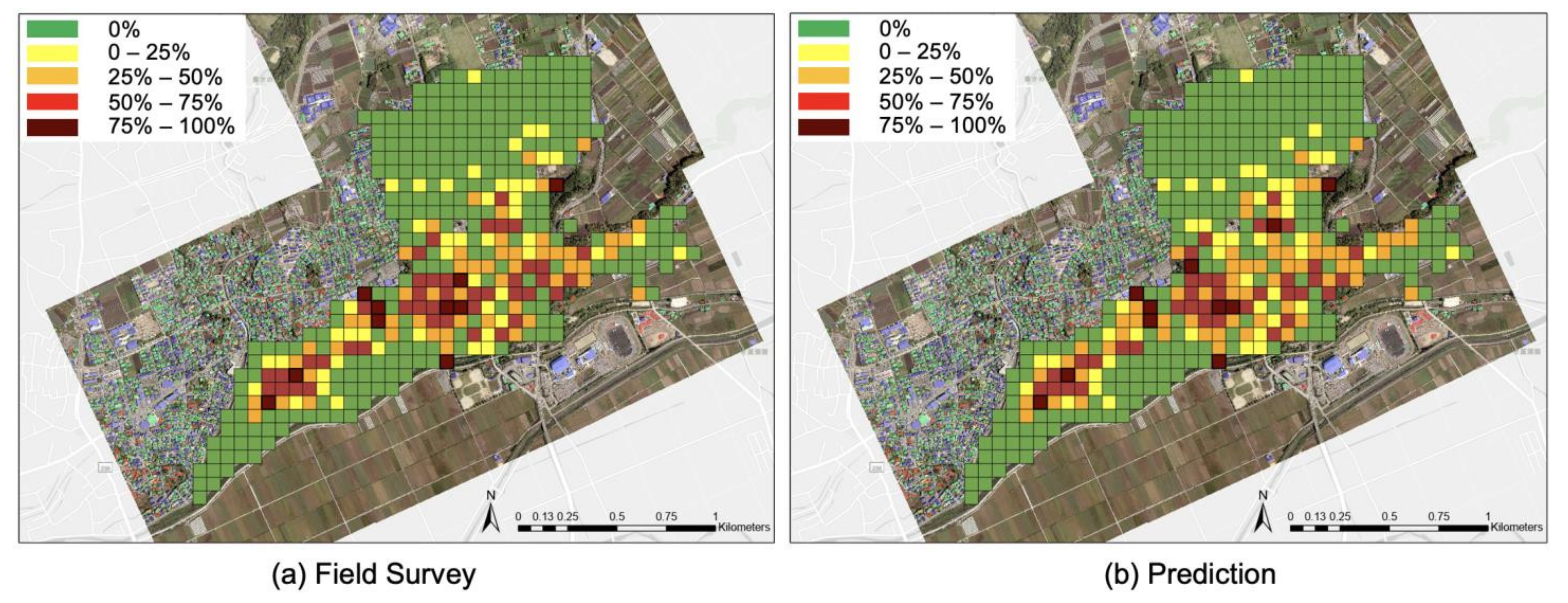

3.2. Verification

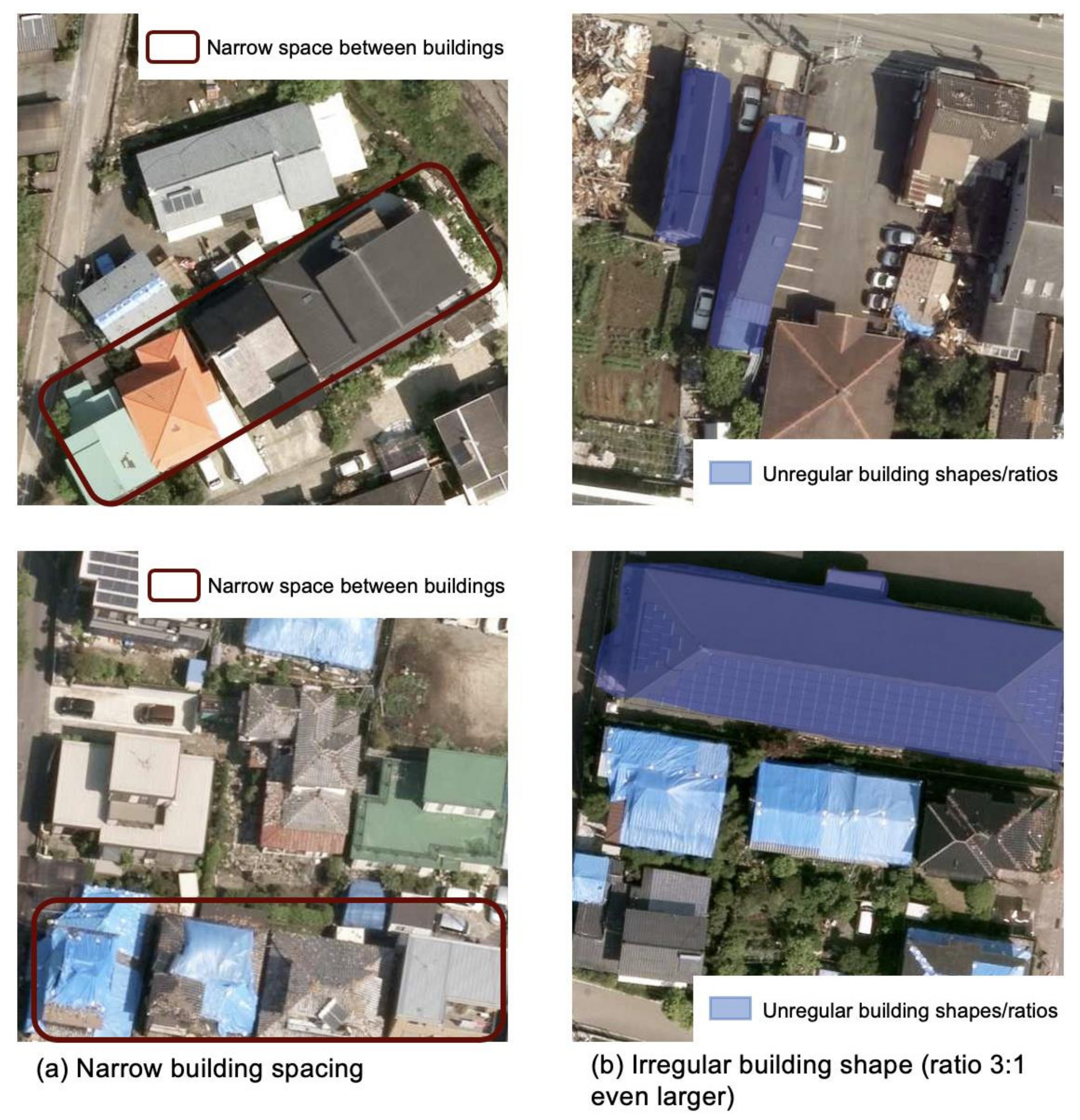

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- The Human Cost of Disasters: An Overview of the Last 20 Years (2000–2019). Available online: https://www.undrr.org/publication/human-cost-disasters-overview-last-20-years-2000-2019 (accessed on 27 October 2021).

- Plank, S. Rapid damage assessment by means of multi-temporal SAR—A comprehensive review and outlook to Sentinel-1. Remote Sens. 2014, 6, 4870–4906.

- Haq, M.; Akhtar, M.; Muhammad, S.; Paras, S.; Rahmatullah, J. Techniques of remote sensing and GIS for flood monitoring and damage assessment: A case study of Sindh Province, Pakistan. Egypt. J. Remote Sens. Space Sci. 2012, 15, 135–141.

- Wang, W.; Qu, J.J.; Hao, X.; Liu, Y.; Stanturf, A.J. Post-hurricane forest damage assessment using satellite remote sensing. Agric. For. Meteorol. 2009, 150, 122–132.

- Jiménez, I.J.S.; Bustamante, O.W.; Capurata, E.O.R.; Pablo, J.M.M. Rapid urban flood damage assessment using high resolution remote sensing data and an object-based approach. Geomat. Nat. Hazards Risk 2020, 11, 906–927.

- Matsuoka, M.; Yamazaki, F. Interferometric Characterization of Areas Damaged by the 1995 Kobe Earthquake Using Satellite SAR Images. In Proceedings of the 12th World Conference on Earthquake Engineering, Auckland, New Zealand, 30 January–4 February 2000.

- Vu, T.T.; Matsuoka, M.; Yamazaki, F. Detection and animation of damage using very high-resolution satellite data following the 2003 Bam, Iran earthquake. Earthq. Spectra 2005, 21, S319–S327.

- Matsuoka, M.; Yamazaki, F. Use of interferometric satellite SAR for earthquake damage detection. In Proceedings of the 6th International Conference on Seismic Zonation, Palm Springs, CA, USA, 12–15 November 2000.

- Hussain, E.; Ural, S.; Kim, K.; Fu, F.; Shan, J. Building extraction and rubble mapping for city Port-au-Prince post-2010 earthquake with GeoEye-1 imagery and lidar data. Photogramm. Eng. Remote Sens. 2011, 77, 1011–1023.

- Choi, C.; Kim, J.; Kim, J.; Kim, D.; Bae, Y.; Kim, H.S. Development of heavy rain damage prediction model using machine learning based on big data. Adv. Meteorol. 2018, 2018, 5024930.

- Kim, H.I.; Han, K.Y. Linking hydraulic modeling with a machine learning approach for extreme flood prediction and response. Atmosphere 2020, 11, 987.

- Harirchian, E.; Kumari, V.; Jadhav, K.; Rasulzade, S.; Lahmer, T.; Raj Das, R. A synthesized study based on machine learning approaches for rapid classifying earthquake damage grades to RC buildings. Appl. Sci. 2021, 11, 7540.

- Castorrini, A.; Venturini, P.; Gerboni, F.; Corsini, A.; Rispoli, F. Machine learning aided prediction of rain erosion damage on wind turbine blade sections. In Proceedings of the ASME Turbo Expo 2021: Turbomachinery Technical Conference and Exposition, Rotterdam, The Netherlands, 7–11 June 2021.

- Dornaika, F.; Moujahid, A.; Merabet, E.Y.; Ruichek, Y. Building detection from orthophotos using a machine learning approach: An empirical study on image segmentation and descriptors. Expert Syst. Appl. 2016, 58, 130–142.

- Naito, S.; Tomozawa, H.; Mori, Y.; Nagata, T.; Monma, N.; Nakamura, H.; Fujiwara, H.; Shoji, G. Building-damage detection method based on machine learning utilizing aerial photographs of the Kumamoto Earthquake. Earthq. Spectra 2020, 36, 1166–1187.

- Dong, Z.; Lin, B. Learning a robust CNN-based rotation insensitive model for ship detection in VHR remote sensing images. Int. J. Remote Sens. 2020, 41, 3614–3626.

- Ishii, Y.; Matsuoka, M.; Maki, N.; Horie, K.; Tanaka, S. Recognition of damaged building using deep learning based on aerial and local photos taken after the 1995 Kobe Earthquake. J. Struct. Constr. Eng. 2018, 83, 1391–1400.

- Sun, X.; Liu, L.; Li, C.; Yin, J.; Zhao, J.; Si, W. Classification for remote sensing data with improved CNN-SVM method. IEEE Access 2019, 7, 164507–164516.

- Yang, J.; Guo, J.; Yue, H.; Liu, Z.; Hu, H.; Li, K. CDnet: CNN-based cloud detection for remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6195–6211.

- Zhang, C.; Wei, S.; Ji, S.; Lu, M. Detecting large-scale urban land cover changes from very high resolution remote sensing images using CNN-based classification. ISPRS Int. J. Geo.-Inf. 2019, 8, 189.

- Chen, C.; Gong, W.; Chen, Y.; Li, W. Learning a two-stage CNN model for multi-sized building detection in remote sensing images. Remote Sens. Lett. 2019, 10, 103–110.

- Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet classification with deep convolutional neural networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Volume 1, pp. 1097–1105.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Volume 4, pp. 630–645.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Jiang, H.; Learned-Miller, E. Face detection with the Faster R-CNN. In Proceedings of the 12th IEEE International Conference on Automatic Face & Gesture Recognition, Washington, DC, USA, 30 May–3 June 2017; pp. 650–657.

- Yahalomi, E.; Chernofsky, M.; Werman, M. Detection of distal radius fractures trained by a small set of X-Ray images and Faster R-CNN. Adv. Intell. Syst. Comput. 2019, 997, 971–981.

- Shetty, A.R.; Krishna Mohan, B. Building extraction in high spatial resolution images using deep learning techniques. Lect. Notes Comput. Sci. 2018, 10962, 327–338.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Ullo, L.S.; Mohan, A.; Sebastianelli, A.; Ahamed, E.A.; Kumar, B.; Dwivedi, R.; Sinha, R.G. A new Mask R-CNN-based method for improved landslide detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 3799–3810.

- Stiller, D.; Stark, T.; Wurm, M.; Dech, S.; Taubenböck, H. Large-scale building extraction in very high-resolution aerial imagery using Mask R-CNN. In Proceedings of the 2019 Joint Urban Remote Sensing Event (JURSE), Vannes, France, 22–24 May 2019; pp. 1–4.

- Kyushu District Administrative Evaluation Bureau, Ministry of Internal Affairs and Communications: Survey on the Issuance of Disaster Victim Certificates during Large-Scale Disasters—Focusing on the 2016 Kumamoto Earthquake. Available online: https://www.soumu.go.jp/main_content/000528758.pdf (accessed on 28 October 2021). (In Japanese).

- Geological Survey of Japan (GSJ), National Institute of Advanced Industrial Science and Technology (AIST), 2016. Quick Estimation System for Earthquake Map Triggered by Observed Records (QuiQuake). Available online: https://gbank.gsj.jp/QuiQuake/QuakeMap/ (accessed on 27 October 2021).

- Cabinet Office of Japan. Summary of Damage Situation in the Kumamoto Earthquake Sequence. 2021. Available online: http://www.bousai.go.jp/updates/h280414jishin/index.html (accessed on 27 October 2021).

- Record of the Mashiki Town Earthquake of 2016: Summary Version. Available online: https://www.town.mashiki.lg.jp/kiji0033823/3_3823_5428_up_pi3lhhyu.pdf (accessed on 27 October 2021).

- Microsoft Photogrammetry. UltraCam-X Technical Specifications. Available online: https://www.sfsaviation.ch/files/177/SFS%20UCX.pdf (accessed on 28 October 2021).

- Wada, K. Labelme: Image Polygonal Annotation with Python. Available online: https://github.com/wkentaro/labelme (accessed on 28 October 2021).

- Ministry of Land, Infrastructure, Transport and Tourism (MLIT). Report of the Committee to Analyze the Causes of Building Damage in the Kumamoto Earthquake. 2016. Available online: https://www.mlit.go.jp/common/001147923.pdf (accessed on 28 October 2021).

- Okada, S.; Takai, N. Classifications of structural types and damage patterns of buildings for earthquake field investigation. J. Struct. Constr. Eng. 1999, 64, 65–72.

- Takai, N.; Okada, S. Classifications of damage patterns of reinforced concrete buildings for earthquake field investigation. J. Struct. Constr. Eng. 2001, 66, 67–74. (In Japanese)

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

- Shrivastava, A.; Gupta, A.; Girshick, R. Training region-based object detectors with online hard example mining. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 761–769.

- Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J.; et al. MMDetection: Open mmlab detection toolbox and benchmark. arXiv 2019, arXiv:1906.07155.

- Jadon, S. A survey of loss functions for semantic segmentation. arXiv 2020, arXiv:2006.14822v4.

- Saito, T.; Rehmsmeier, M. The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS ONE 2015, 10, e0118432.

- Powers, D. What the F-measure doesn’t measure: Features, flaws, fallacies and fixes. arXiv 2015, arXiv:1503.06410.

- Jaccard, P. Étude comparative de la distribution florale dans une portion des Alpes et des Jura. Bull. Soc. Vaud. Sci. Nat. 1901, 37, 547–579.

- mAP (mean Average Precision) for Object Detection. Available online: https://jonathan-hui.medium.com/map-mean-average-precision-for-object-detection-45c121a31173 (accessed on 1 November 2021).

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Statistics Bureau of Japan, Ministry of Internal Affairs and Communications. Standard Grid Square and Grid Square Code Used for the Statistics. Available online: https://www.stat.go.jp/english/data/mesh/02.html (accessed on 1 February 2022).

- Hosang, J.; Benenson, R.; Schiele, B. Learning non-maximum suppression. arXiv 2017, arXiv:1705.02950v2.

- Liu, W.; Yamazaki, F. Extraction of collapsed buildings in the 2016 Kumamoto earthquake using multi-temporal PALSAR-2 data. J. Disaster Res. 2017, 12, 241–250.

| Damage Level | Damage Grade (MLIT) | Damage Grade [43,44] | Features in Photographs |
|---|---|---|---|
| Level_1 | No damage | D0 | No damage |
| Level_2 | Slight damage | D1–D3 | Spalling of surface cover and cracks in columns, beams, and structural walls |
| Level_3 | Severe damage | D4 | Loss of interior space due to destruction of columns and beams |
| Level_4 | Collapsed | D5–D6 | Total or partial collapse of the building |
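The four levels regroup the finer damage grades of Okada and Takai [43,44]. The lookup below transcribes that correspondence so that field-survey grades can be converted into training labels. It is a minimal sketch that assumes grades are stored as strings such as "D3". `GRADE_TO_LEVEL` and `to_level` are hypothetical names and are not part of the authors' pipeline.

```python
# Hypothetical lookup transcribing the table above:
# Okada-Takai grades D0-D6 -> the four damage levels used for labelling.
GRADE_TO_LEVEL = {
    "D0": "Level_1",                                     # no damage
    "D1": "Level_2", "D2": "Level_2", "D3": "Level_2",   # slight damage
    "D4": "Level_3",                                     # severe damage
    "D5": "Level_4", "D6": "Level_4",                    # collapsed
}


def to_level(grade: str) -> str:
    """Map a field-survey grade such as 'D3' to a training label such as 'Level_2'."""
    key = grade.strip().upper()
    if key not in GRADE_TO_LEVEL:
        raise ValueError(f"unknown damage grade: {grade!r}")
    return GRADE_TO_LEVEL[key]


if __name__ == "__main__":
    print([to_level(g) for g in ("D0", "D2", "D4", "D6")])
    # ['Level_1', 'Level_2', 'Level_3', 'Level_4']
```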

| Parameter | Value |
|---|---|
| Learning Rate | 0.0025 |
| Learning Rate Decay | 0.0001 |
| Step | 15,300 and 17,850 |
| Total iterations | 40,000 |
| Momentum parameter | 0.9 |
| Batch size | 3 |
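The learning-rate entries describe a step schedule. The sketch below shows how such a schedule evolves, under several assumptions that the table does not state:

- The Step values are iteration milestones.
- At each milestone the rate is multiplied by a hypothetical factor `gamma = 0.1`, a common default for step policies (for example in MMDetection).
- Warm-up is ignored.

`step_lr` is an illustrative name.

```python
def step_lr(iteration: int,
            base_lr: float = 0.0025,
            milestones: tuple = (15_300, 17_850),
            gamma: float = 0.1) -> float:
    """Step decay: scale base_lr by gamma once for every milestone already reached.

    ASSUMPTIONS (not given in the table): milestones are iteration counts,
    gamma = 0.1, and no warm-up phase is applied.
    """
    passed = sum(1 for m in milestones if iteration >= m)
    return base_lr * gamma ** passed


if __name__ == "__main__":
    for it in (0, 15_299, 15_300, 17_850, 39_999):
        print(it, f"{step_lr(it):.6f}")
```

Under these assumptions the rate drops from 0.0025 to 0.00025 at iteration 15,300 and to 0.000025 at iteration 17,850. It then stays at that value until iteration 40,000.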

| Truth / Prediction | Level_1 | Level_2 | Level_3 | Level_4 |
|---|---|---|---|---|
| Level_1 | | FP | | |
| Level_2 | FN | TP | FN | FN |
| Level_3 | | FP | | |
| Level_4 | | FP | | |
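The table fixes the bookkeeping for one class, Level_2. Its diagonal cell is TP, the rest of its row is FN, and the rest of its column is FP. The same rule applies to every level.

The sketch below assumes a confusion matrix stored as nested lists, with rows for the true level and columns for the prediction. The helper names and the toy matrix are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[int]]  # rows = true level, columns = predicted level


def class_counts(cm: Matrix, k: int) -> Tuple[int, int, int]:
    """(TP, FP, FN) for class k, following the TP/FP/FN layout of the table above."""
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                  # rest of row k: true k, predicted as another level
    fp = sum(row[k] for row in cm) - tp   # rest of column k: other levels predicted as k
    return tp, fp, fn


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Standard precision, recall and F1, returning 0.0 when a denominator is empty."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    # Toy 4-level matrix, invented purely for illustration.
    toy = [[5, 1, 0, 0],
           [2, 8, 1, 1],
           [0, 1, 4, 0],
           [0, 0, 0, 3]]
    tp, fp, fn = class_counts(toy, 1)   # Level_2 is index 1
    print(tp, fp, fn)                   # 8 2 4
    print(precision_recall_f1(tp, fp, fn))
```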

| Model | Epochs | Anchor Stride | Anchor Ratios | Anchor Size | Bounding Box mAP | Segmentation mAP |
|---|---|---|---|---|---|---|
| 1 | 300 | [4, 8, 16, 32, 64] | [0.5, 1, 2] | [32, 64, 128, 256, 512] | 0.292 | 0.289 |
| 2 | 300 | [4, 8, 16, 32, 64] | [0.5, 1, 2, 4] | [32, 64, 128, 256, 512] | 0.311 | 0.305 |
| 3 | 300 | [4, 8, 16, 32, 64] | [0.25, 0.5, 1, 2, 4] | [32, 64, 128, 256, 512] | 0.320 | 0.318 |
| 4 | 300 | [6, 9, 18, 32, 64] | [0.25, 0.5, 1, 2, 4] | [48, 72, 144, 256, 512] | 0.328 | 0.326 |
| 5 | 300 | [6, 9, 16, 32, 64] | [0.25, 0.5, 1, 2, 4] | [48, 72, 128, 256, 512] | 0.332 | 0.333 |
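Each model row changes how anchors are laid out over the feature-pyramid levels. In every row the anchor size equals eight times the anchor stride (for example, 6 × 8 = 48 and 9 × 8 = 72 in Model 5). The sketch below shows how one size/stride pair and a ratio list turn into anchor boxes. It makes the following assumptions, which are illustrative and not the authors' configuration:

- The ratio is height / width, and the anchor area is held at size². To our understanding, this matches how MMDetection's anchor generator derives widths and heights.
- One anchor centre is placed in the middle of each feature cell.

The function names are also illustrative.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in image pixels


def base_anchors(size: float, ratios: Sequence[float]) -> List[Tuple[float, float]]:
    """(width, height) per aspect ratio, keeping the anchor area equal to size**2.

    Assumed convention: ratio = height / width.
    """
    return [(size / math.sqrt(r), size * math.sqrt(r)) for r in ratios]


def level_anchors(feat_h: int, feat_w: int, stride: float, size: float,
                  ratios: Sequence[float]) -> List[Box]:
    """Tile the base anchors over a feat_h x feat_w feature map, one centre per cell."""
    shapes = base_anchors(size, ratios)
    boxes: List[Box] = []
    for i in range(feat_h):
        for j in range(feat_w):
            cx, cy = (j + 0.5) * stride, (i + 0.5) * stride  # assumed cell-centre offset
            for w, h in shapes:
                boxes.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return boxes


# Model 5 settings, copied from the table above.
STRIDES = [6, 9, 16, 32, 64]
SIZES = [48, 72, 128, 256, 512]
RATIOS = [0.25, 0.5, 1, 2, 4]

if __name__ == "__main__":
    # In every row of the table, anchor size = 8 x anchor stride.
    print(all(size == 8 * stride for size, stride in zip(SIZES, STRIDES)))  # True
    for stride, size in zip(STRIDES, SIZES):
        print(stride, [(round(w), round(h)) for w, h in base_anchors(size, RATIOS)])
```

With five ratios, every feature cell carries five anchors. Widening the ratio list from three values (Model 1) to five (Model 3) therefore raises the anchor count per level by two-thirds.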

| Model | Epochs | PANet | OHEM | Bounding Box mAP | Segmentation mAP |
|---|---|---|---|---|---|
| 5 | 300 | No | No | 0.332 | 0.333 |
| 6 | 80 | No | No | 0.345 | 0.342 |
| 7 | 80 | No | Yes | 0.350 | 0.356 |
| 8 | 80 | Yes | No | 0.352 | 0.368 |
| 9 | 80 | Yes | Yes | 0.361 | 0.370 |
| 10 | 40 | Yes | Yes | 0.365 | 0.373 |
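Models 7, 9 and 10 enable online hard example mining (OHEM). OHEM ranks region proposals by their current loss and back-propagates only the hardest ones. The sketch below covers that selection step, assuming per-RoI losses are already available as a list. The original OHEM also removes overlapping RoIs with non-maximum suppression before ranking; that step is left out here. `ohem_select` is a hypothetical name.

```python
import heapq
from typing import List, Sequence


def ohem_select(roi_losses: Sequence[float], num_keep: int) -> List[int]:
    """Indices of the num_keep RoIs with the largest loss, hardest first.

    Only these hard RoIs would contribute to the backward pass. The NMS step
    that the original OHEM applies before ranking is omitted in this sketch.
    """
    num_keep = max(0, min(num_keep, len(roi_losses)))
    return heapq.nlargest(num_keep, range(len(roi_losses)), key=lambda i: roi_losses[i])


if __name__ == "__main__":
    losses = [0.10, 2.30, 0.45, 1.70, 0.05, 0.90]  # invented per-RoI losses
    print(ohem_select(losses, 3))  # [1, 3, 5]
```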

| True Label / Prediction for the Test Area | Level_1 | Level_2 | Level_3 | Level_4 |
|---|---|---|---|---|
| Level_1 | 34 | 11 | 0 | 0 |
| Level_2 | 7 | 71 | 1 | 2 |
| Level_3 | 0 | 9 | 28 | 3 |
| Level_4 | 0 | 8 | 2 | 75 |
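Summary scores can be recomputed from the test-area counts. The sketch below assumes each cell is a building count and applies the row/column bookkeeping of the TP/FP/FN table. From this it derives overall accuracy and per-level precision, recall and F1. These values are our own arithmetic on the published matrix, not figures quoted from the paper, whose headline metrics may be defined differently. The names are hypothetical.

```python
from typing import Dict, List, Tuple

LEVELS = ["Level_1", "Level_2", "Level_3", "Level_4"]
# Test-area confusion matrix copied from the table above (rows = true label, columns = prediction).
TEST_CM: List[List[int]] = [
    [34, 11, 0, 0],
    [7, 71, 1, 2],
    [0, 9, 28, 3],
    [0, 8, 2, 75],
]


def overall_accuracy(cm: List[List[int]]) -> float:
    """Correctly classified entries (the diagonal) divided by all entries."""
    return sum(cm[k][k] for k in range(len(cm))) / sum(map(sum, cm))


def per_class_scores(cm: List[List[int]]) -> Dict[str, Tuple[float, float, float]]:
    """Precision, recall and F1 per level: column total -> precision, row total -> recall."""
    scores = {}
    for k, name in enumerate(LEVELS):
        tp = cm[k][k]
        predicted = sum(row[k] for row in cm)   # column total (TP + FP)
        actual = sum(cm[k])                     # row total (TP + FN)
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[name] = (precision, recall, f1)
    return scores


if __name__ == "__main__":
    print(f"overall accuracy = {overall_accuracy(TEST_CM):.3f}")  # 0.829 (= 208 / 251)
    for name, (p, r, f1) in per_class_scores(TEST_CM).items():
        print(f"{name}: precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
```

For example, 208 of the 251 entries lie on the diagonal, so the overall accuracy is about 0.83.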

| Field Survey / Prediction | 0% | 0–25% | 25–50% | 50–75% | 75–100% |
|---|---|---|---|---|---|
| 0% | 259 | 2 | 1 | 0 | 0 |
| 0–25% | 2 | 42 | 3 | 0 | 0 |
| 25–50% | 0 | 1 | 52 | 0 | 0 |
| 50–75% | 0 | 0 | 4 | 37 | 1 |
| 75–100% | 0 | 0 | 1 | 0 | 9 |

| Field Survey / Prediction | 0% | 0–25% | 25–50% | 50–75% | 75–100% |
|---|---|---|---|---|---|
| 0% | 9 | 0 | 0 | 0 | 0 |
| 0–25% | 0 | 7 | 0 | 0 | 0 |
| 25–50% | 0 | 0 | 9 | 0 | 0 |
| 50–75% | 0 | 0 | 2 | 7 | 0 |
| 75–100% | 0 | 0 | 1 | 0 | 2 |
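The two percentage-bin tables compare field-survey values with predicted values. Because the bins are ordered, two measures are possible: exact agreement (the diagonal) and agreement within one adjacent bin. The sketch below computes both. It assumes each entry counts spatial units; this section does not say which units are meant, and grid squares are one possibility given the grid-square reference. The "within one bin" tolerance is our illustrative addition, not a metric from the paper.

```python
from typing import List

# Rows = field-survey bin, columns = predicted bin (0%, 0-25%, 25-50%, 50-75%, 75-100%),
# copied from the two tables above.
FIRST_TABLE: List[List[int]] = [
    [259, 2, 1, 0, 0],
    [2, 42, 3, 0, 0],
    [0, 1, 52, 0, 0],
    [0, 0, 4, 37, 1],
    [0, 0, 1, 0, 9],
]
SECOND_TABLE: List[List[int]] = [
    [9, 0, 0, 0, 0],
    [0, 7, 0, 0, 0],
    [0, 0, 9, 0, 0],
    [0, 0, 2, 7, 0],
    [0, 0, 1, 0, 2],
]


def agreement(cm: List[List[int]], tolerance: int = 0) -> float:
    """Share of units whose predicted bin is at most `tolerance` bins from the surveyed bin."""
    total = sum(map(sum, cm))
    hits = sum(v for i, row in enumerate(cm) for j, v in enumerate(row)
               if abs(i - j) <= tolerance)
    return hits / total


if __name__ == "__main__":
    for name, cm in (("first table", FIRST_TABLE), ("second table", SECOND_TABLE)):
        print(name, f"exact={agreement(cm):.3f}", f"within one bin={agreement(cm, 1):.3f}")
```

On the counts above, exact agreement is 399/414 (about 0.96) for the first table and 34/37 (about 0.92) for the second. Within-one-bin agreement is 412/414 and 36/37.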

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhan, Y.; Liu, W.; Maruyama, Y. Damaged Building Extraction Using Modified Mask R-CNN Model Using Post-Event Aerial Images of the 2016 Kumamoto Earthquake. Remote Sens. 2022, 14, 1002. https://doi.org/10.3390/rs14041002

Zhan Y, Liu W, Maruyama Y. Damaged Building Extraction Using Modified Mask R-CNN Model Using Post-Event Aerial Images of the 2016 Kumamoto Earthquake. Remote Sensing. 2022; 14(4):1002. https://doi.org/10.3390/rs14041002

Chicago/Turabian Style: Zhan, Yihao, Wen Liu, and Yoshihisa Maruyama. 2022. "Damaged Building Extraction Using Modified Mask R-CNN Model Using Post-Event Aerial Images of the 2016 Kumamoto Earthquake" Remote Sensing 14, no. 4: 1002. https://doi.org/10.3390/rs14041002

APA Style: Zhan, Y., Liu, W., & Maruyama, Y. (2022). Damaged Building Extraction Using Modified Mask R-CNN Model Using Post-Event Aerial Images of the 2016 Kumamoto Earthquake. Remote Sensing, 14(4), 1002. https://doi.org/10.3390/rs14041002