Automated Archaeological Feature Detection Using Deep Learning on Optical UAV Imagery: Preliminary Results

Abstract

1. Introduction

2. Background

2.1. Deep Learning in UAV Remote Sensing

2.2. Remote Sensing, Deep Learning, and UAV Imagery in Archaeology

3. Software and Data

3.1. Mask R-CNN Approach

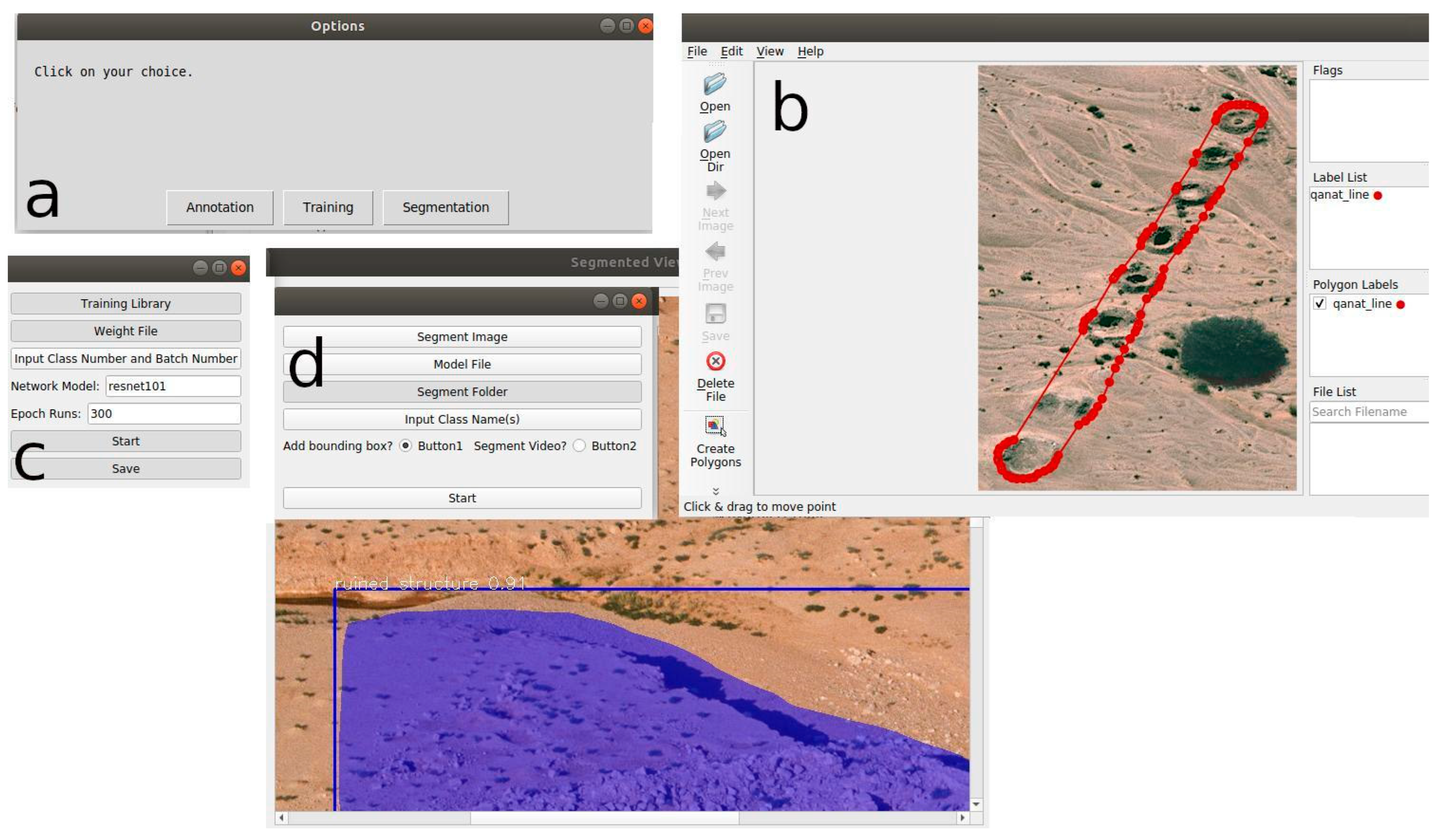

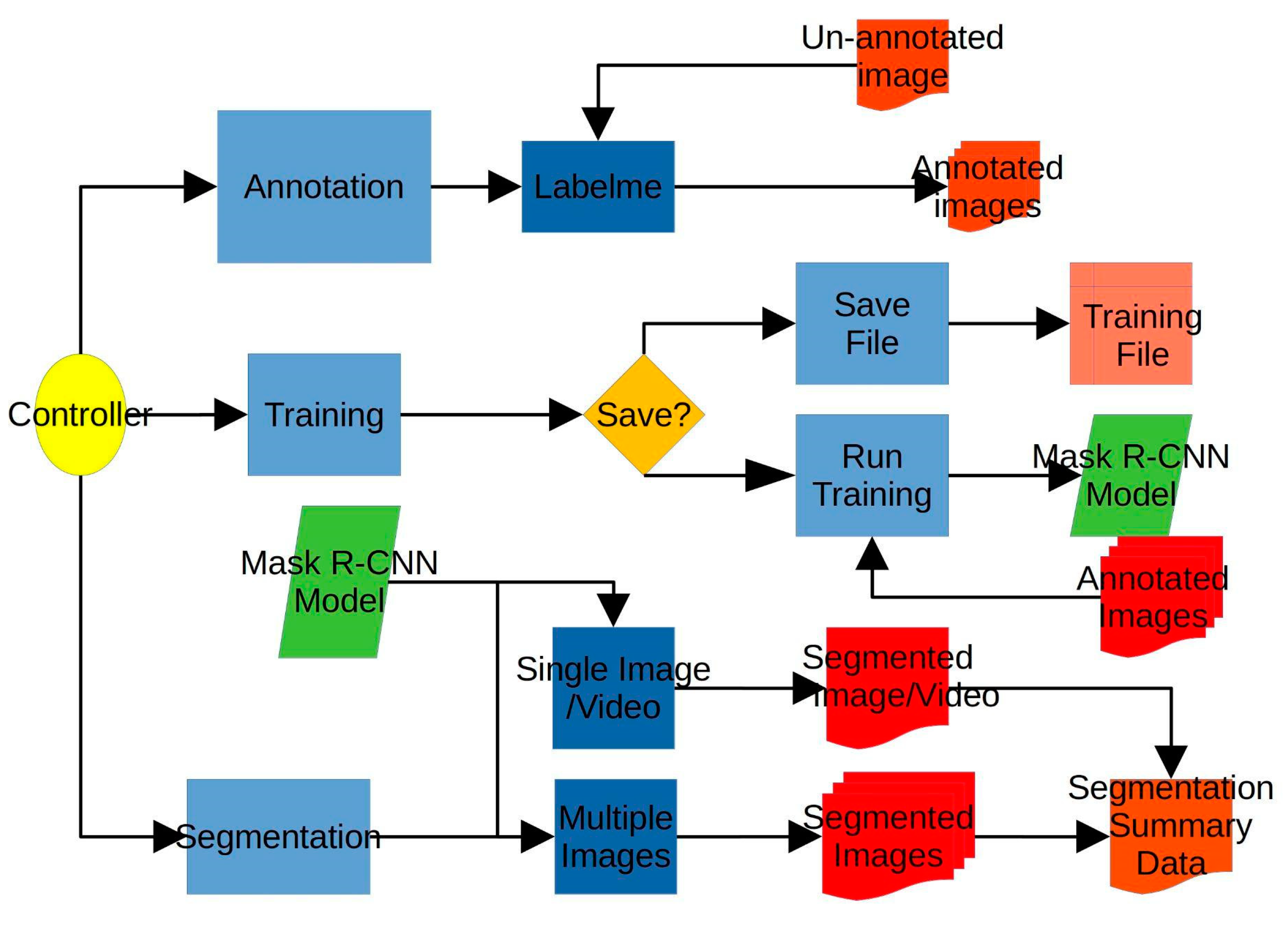

3.2. Applied Software

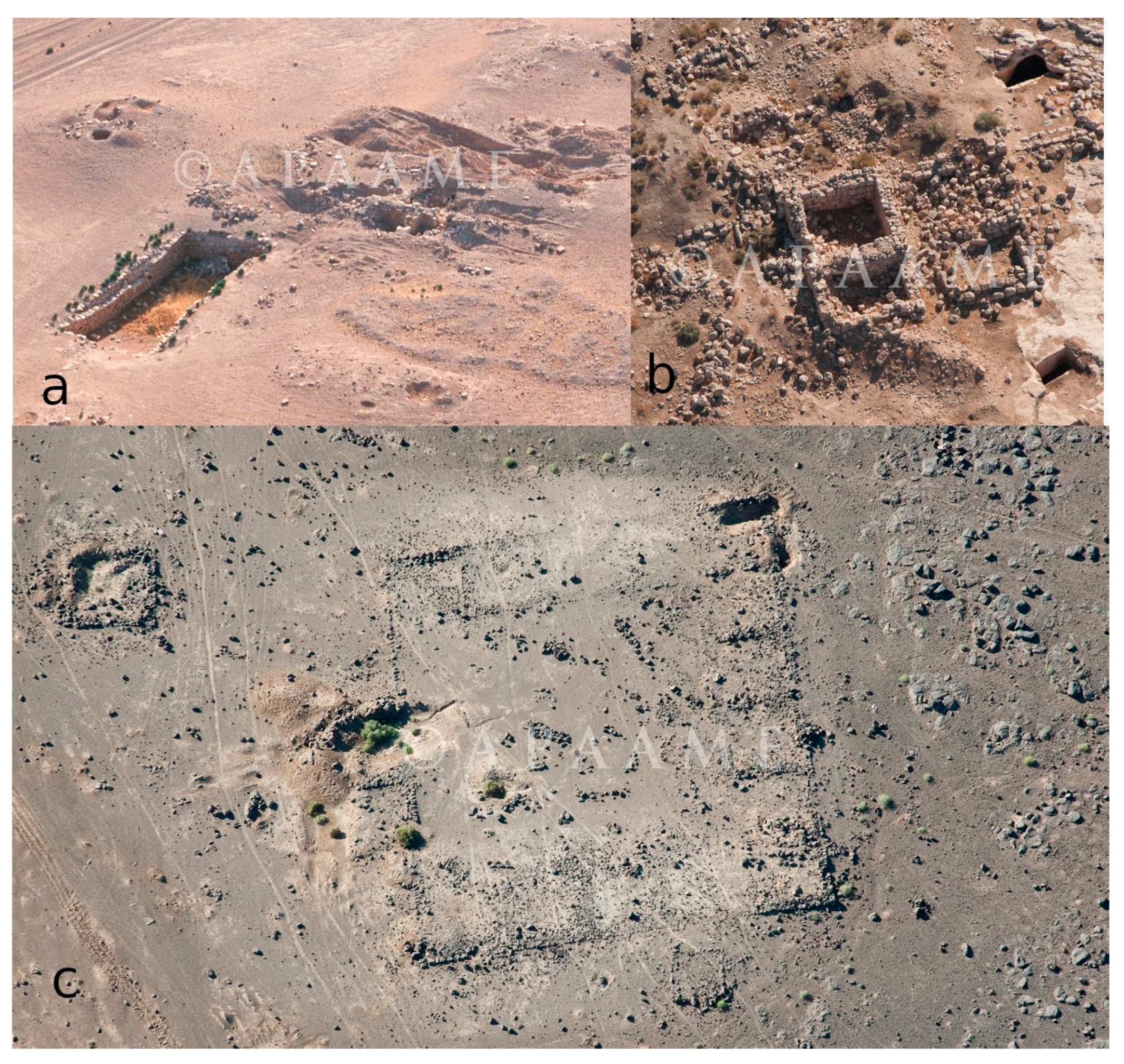

3.3. Training and Testing Data

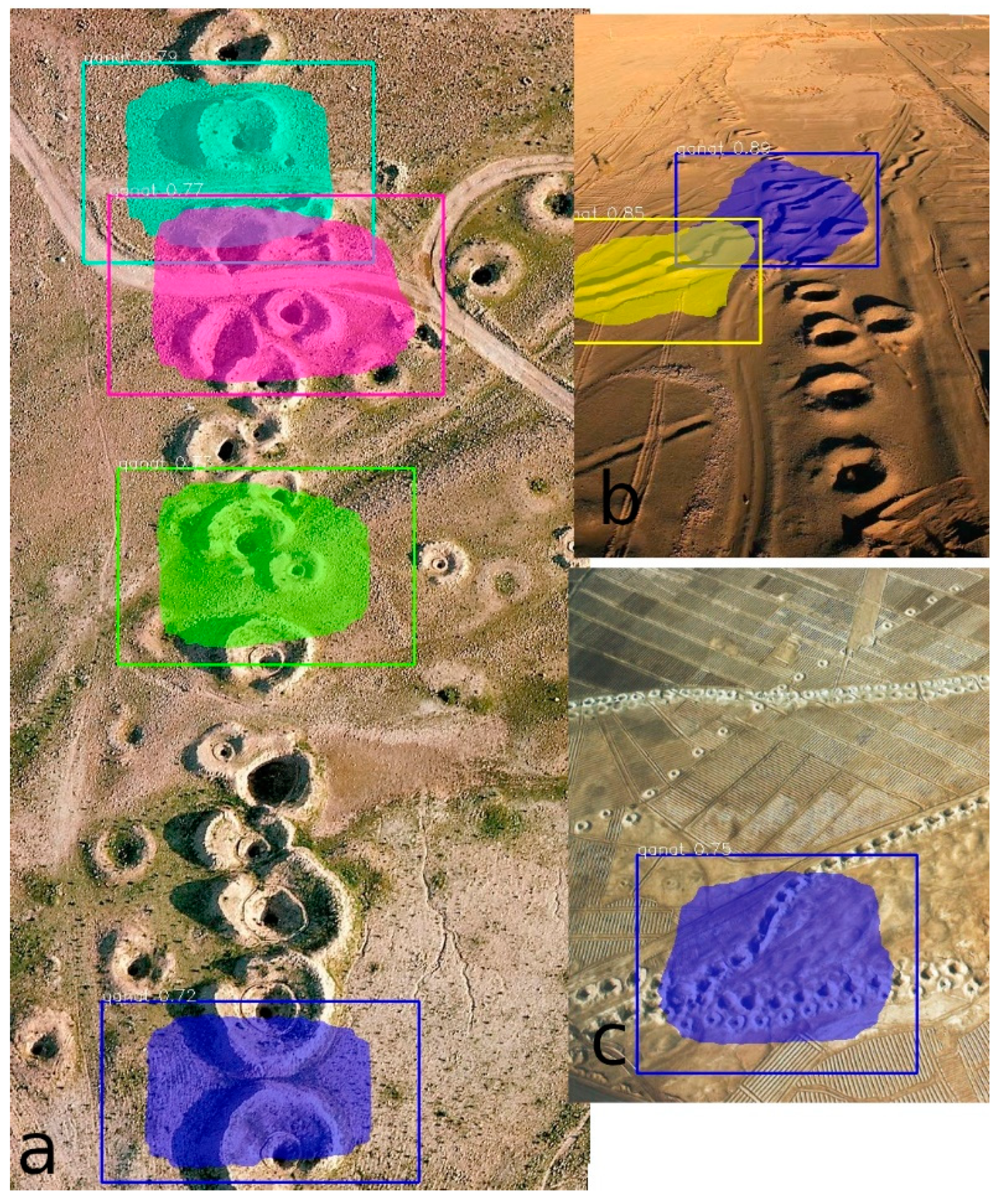

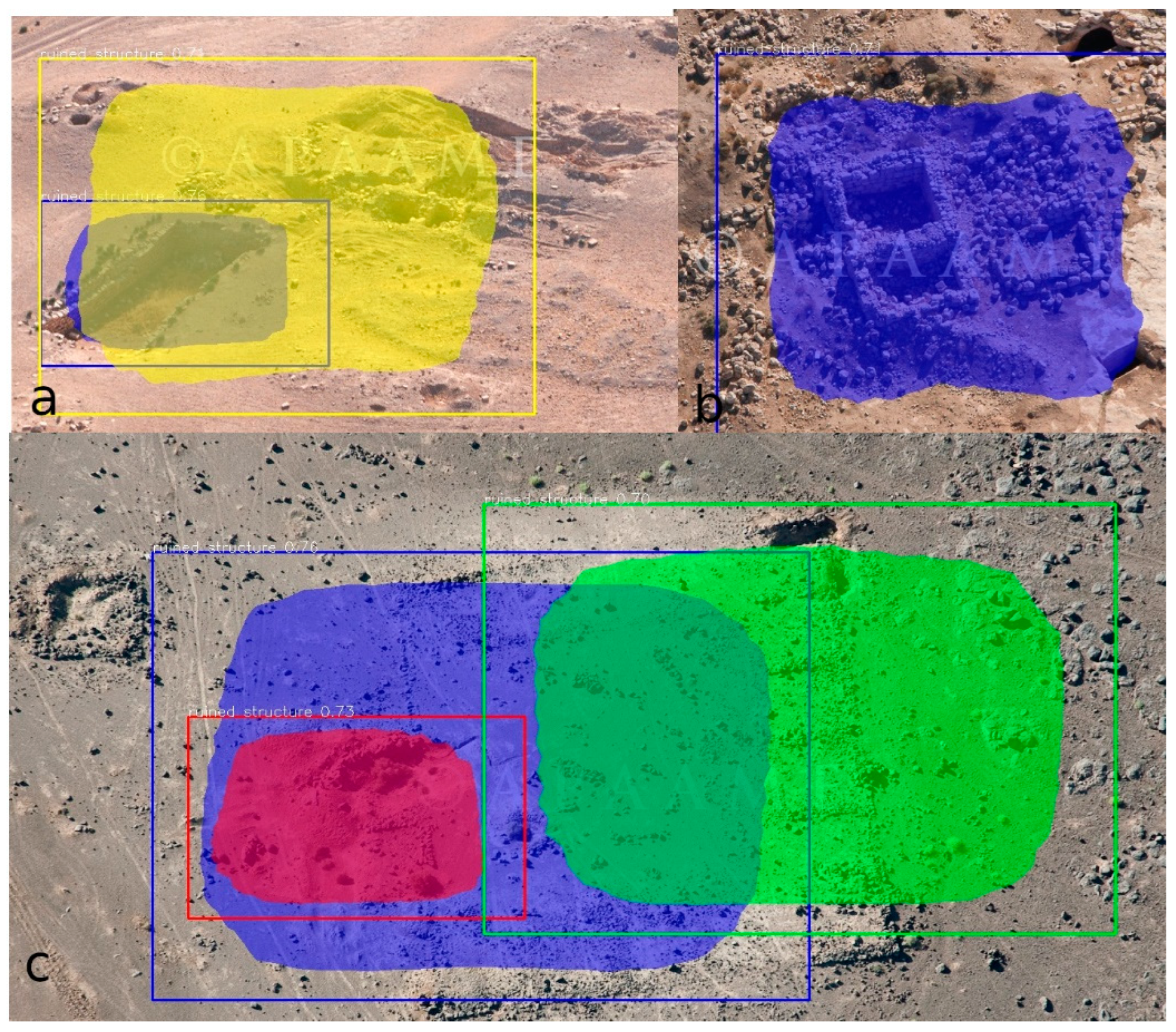

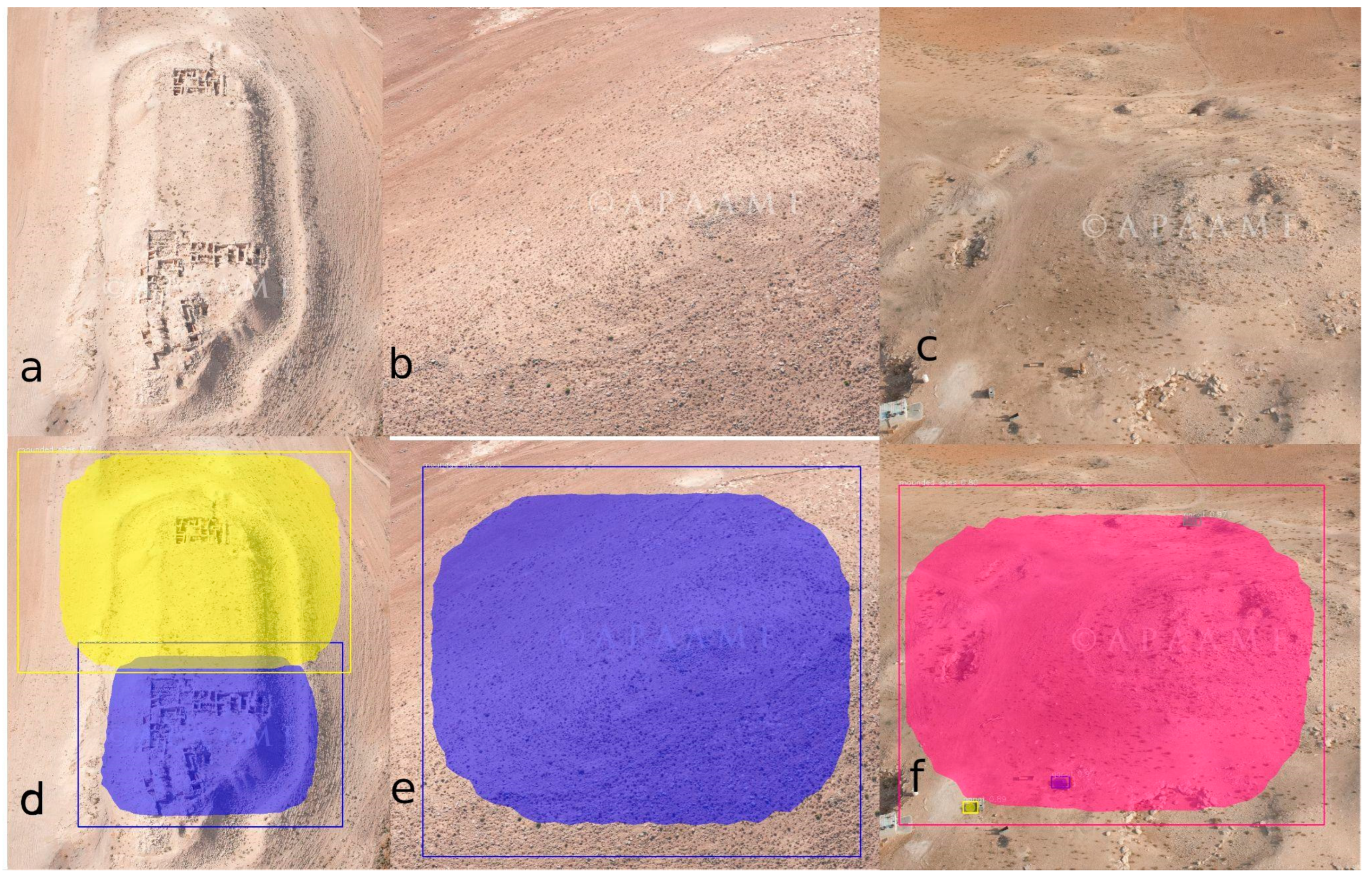

4. Some Initial Results from Sample Data

5. Discussion and Conclusions

5.1. Discussion

5.2. Conclusion and Future Direction

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Altaweel, M.; Khelifi, A.; Li, Z.; Squitieri, A.; Basmaji, T.; Ghazal, M. Automated Archaeological Feature Detection Using Deep Learning on Optical UAV Imagery: Preliminary Results. Remote Sens. 2022, 14, 553. https://doi.org/10.3390/rs14030553
