All-Weather and Superpixel Water Extraction Methods Based on Multisource Remote Sensing Data Fusion

Abstract

1. Introduction

2. Materials and Methods

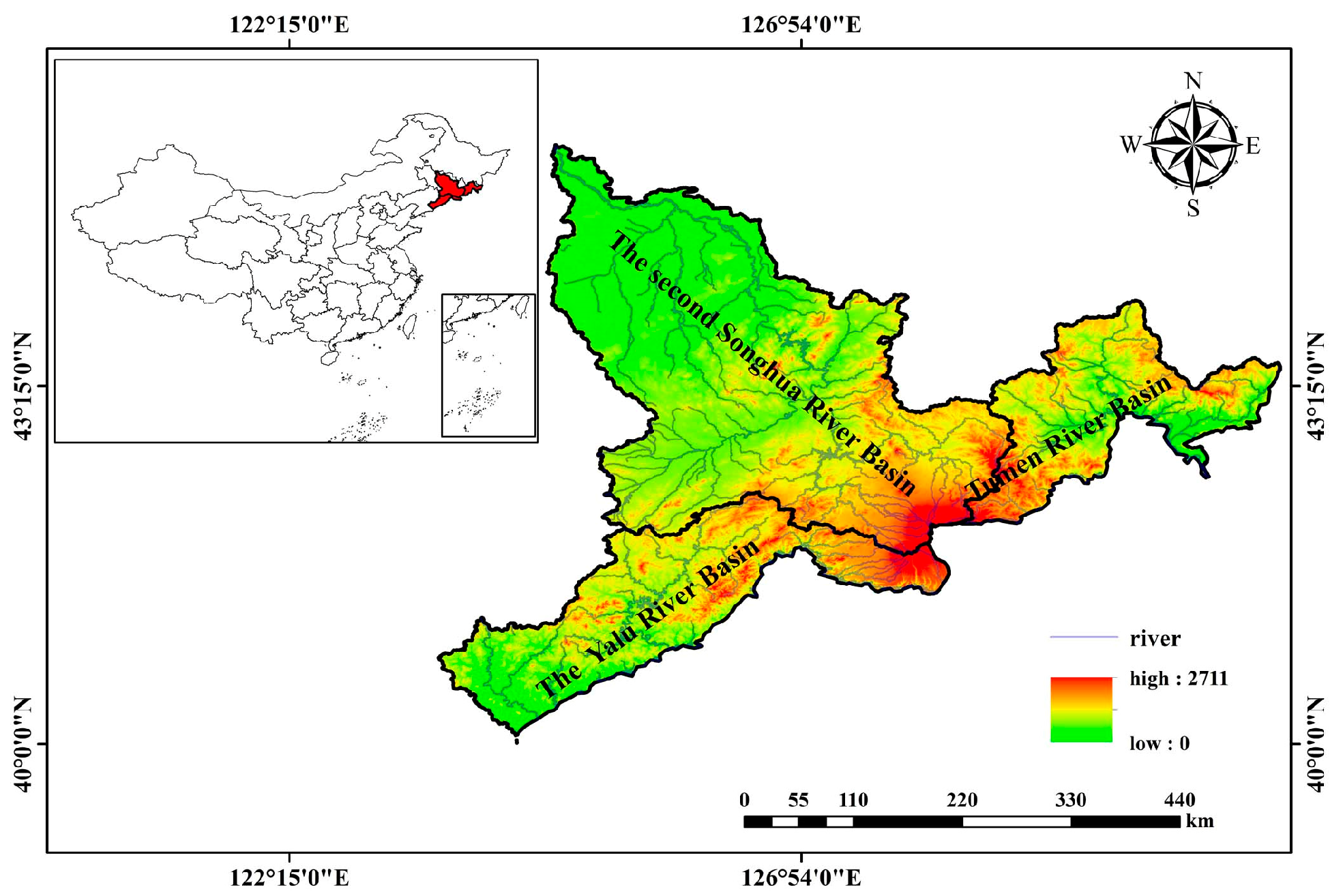

2.1. Study Area

2.2. Data Sources

2.2.1. Remote Sensing Images

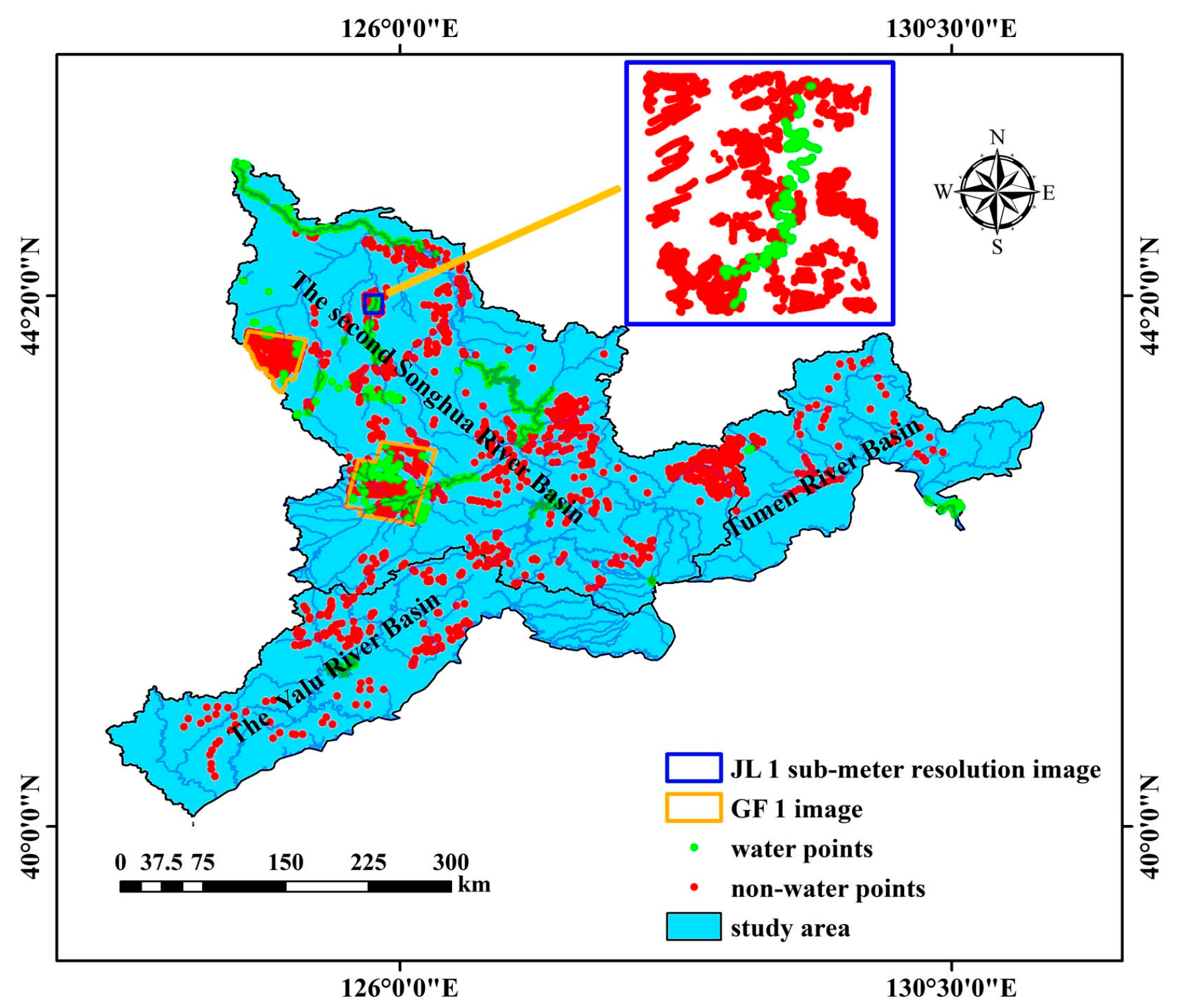

2.2.2. Sample Data

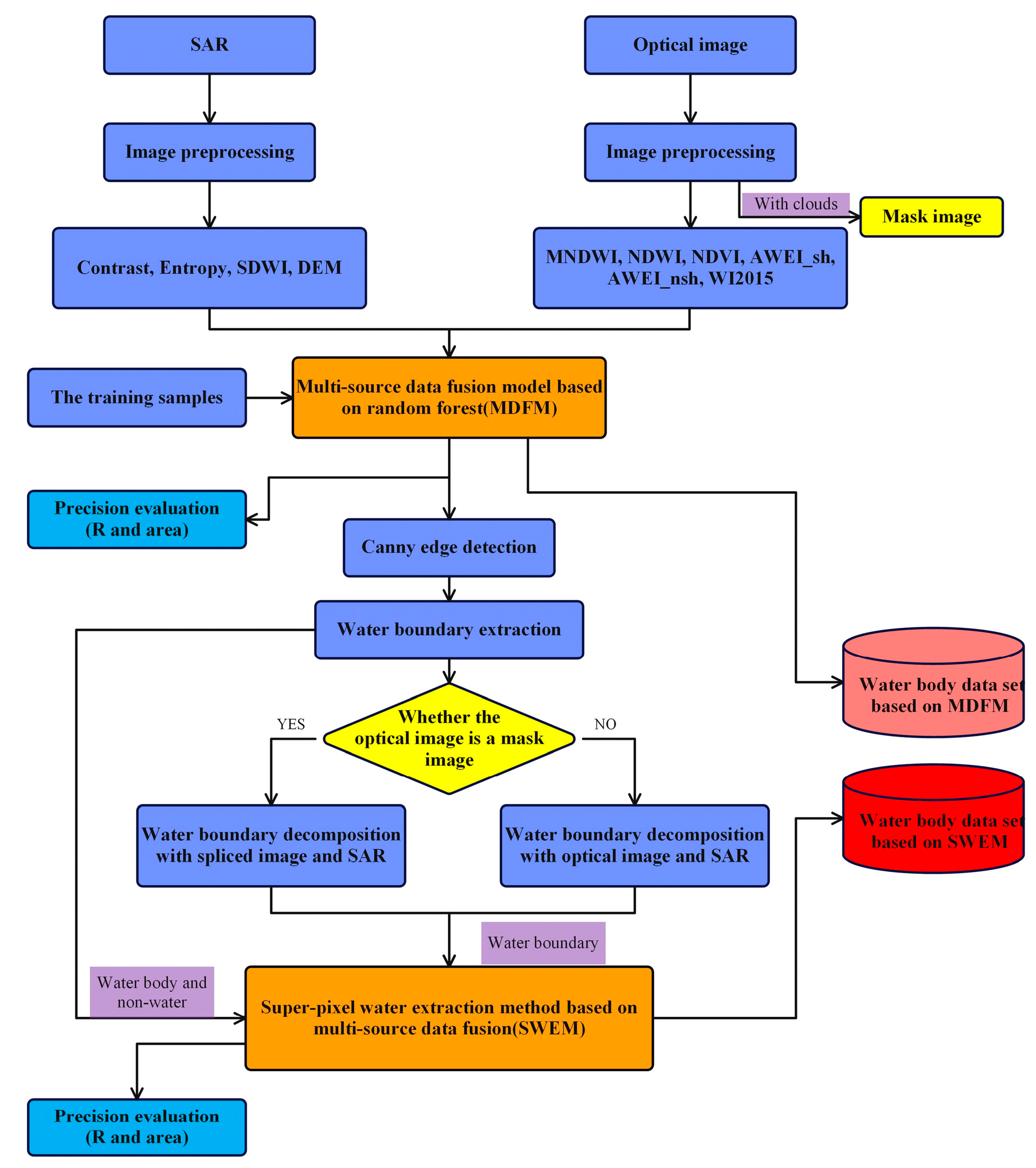

2.3. Methodology

2.3.1. Image Preprocessing

- i. Using the measured data, 39 cloud, 32 cloud-shadow, 23 water-body and 35 other regions of interest (ROIs) were selected as training labels;

- ii. Bands B1–B12 of the optical image and the VV and VH polarizations of the SAR image were selected as input features. The SAR image is not affected by cloud shadows, and the backscatter of shadowed areas differs markedly from that of water, so VV and VH can be used to remove cloud shadows;

- iii. A random forest (RF) model was trained with the labels from step (i) and the input features from step (ii) to extract clouds and cloud shadows;

- iv. Buffers were generated around the clouds and cloud shadows extracted in step (iii). The buffered cloud and cloud-shadow areas were then deleted to complete cloud removal.
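Step (iv) amounts to growing the cloud and cloud-shadow masks by a margin and masking every pixel inside the grown area. The following is a minimal pure-Python sketch under several assumptions:

- The boolean masks come from the RF classifier of step (iii). The RF classification itself is not reproduced here.
- The buffer is a square neighbourhood of `radius` pixels. The buffer distance used in the study is not given here.
- All function names are hypothetical.

```python
from typing import List, Optional

Mask = List[List[bool]]
Image = List[List[Optional[float]]]


def buffer_mask(mask: Mask, radius: int) -> Mask:
    """Grow True pixels by `radius` pixels using a square (Chebyshev) neighbourhood."""
    if not mask:
        return []
    rows, cols = len(mask), len(mask[0])
    out = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            # Mark every pixel within `radius` rows/columns of a cloud or shadow pixel.
            for dr in range(-radius, radius + 1):
                for dc in range(-radius, radius + 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        out[rr][cc] = True
    return out


def remove_clouds(image: Image, cloud: Mask, shadow: Mask, radius: int,
                  nodata: Optional[float] = None) -> Image:
    """Replace pixels inside the buffered cloud/shadow area with `nodata`."""
    combined = [[c or s for c, s in zip(crow, srow)] for crow, srow in zip(cloud, shadow)]
    buffered = buffer_mask(combined, radius)
    return [[nodata if m else v for v, m in zip(row, mrow)]
            for row, mrow in zip(image, buffered)]


# Usage: a single cloud pixel at the centre of a 5 x 5 band, buffered by 1 pixel.
band = [[float(r * 5 + c) for c in range(5)] for r in range(5)]
cloud = [[False] * 5 for _ in range(5)]
cloud[2][2] = True
shadow = [[False] * 5 for _ in range(5)]
cleaned = remove_clouds(band, cloud, shadow, radius=1)
```

With `radius=1`, the single cloud pixel removes a 3 × 3 block. In practice the radius would be chosen to cover the bright or dark fringes that the classifier tends to miss around cloud edges.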

2.3.2. MDFM Based on RF

- i. Water indices of optical images

- ii. Water index of the SAR image

- iii. Construction of the MDFM based on the RF

2.3.3. Water Boundary Extraction

2.3.4. Super-Pixel Decomposition of Water Boundary

2.4. Accuracy Evaluation

- i. A fishnet with cells matching the pixel size of the Sentinel-1 and Sentinel-2 images was created over the study area, and sample labels were created in each grid cell;

- ii. The actual ground area represented by each grid cell was calculated;

- iii. The pixel value of each grid cell was counted using the sample labels from step (i);

- iv. The water area was obtained by summing the grid-cell pixel values from step (iii) and multiplying the sum by the grid-cell area from step (ii).
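Steps (ii) to (iv) reduce to a sum and a multiplication. The minimal sketch below rests on these assumptions:

- Each fishnet cell is a square of side `pixel_size_m`. The example uses 10 m, a common Sentinel-2 resolution, not a value stated here.
- Each per-cell value is a water fraction in [0, 1], where 1 is fully water and 0 is non-water.
- Both assumptions and the function names are hypothetical.

```python
from typing import Sequence


def cell_area_m2(pixel_size_m: float) -> float:
    """Step (ii): ground area of one square fishnet cell, in square metres."""
    return pixel_size_m * pixel_size_m


def water_area_m2(cell_values: Sequence[Sequence[float]], pixel_size_m: float) -> float:
    """Steps (iii)-(iv): sum the per-cell water values and multiply by the cell area."""
    total = 0.0
    for row in cell_values:
        for v in row:
            # Assumed convention: 0 = no water, 1 = fully water, fractions for mixed cells.
            if not 0.0 <= v <= 1.0:
                raise ValueError(f"cell value {v} outside [0, 1]")
            total += v
    return total * cell_area_m2(pixel_size_m)


# Usage: a 2 x 2 patch with one full-water cell and two partially covered cells.
area = water_area_m2([[1.0, 0.5], [0.25, 0.0]], pixel_size_m=10.0)  # 175.0 m^2
```

Allowing fractional values means boundary pixels contribute only part of their area. This is where a superpixel or sub-pixel decomposition would change the total relative to a hard 0/1 classification.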

3. Results

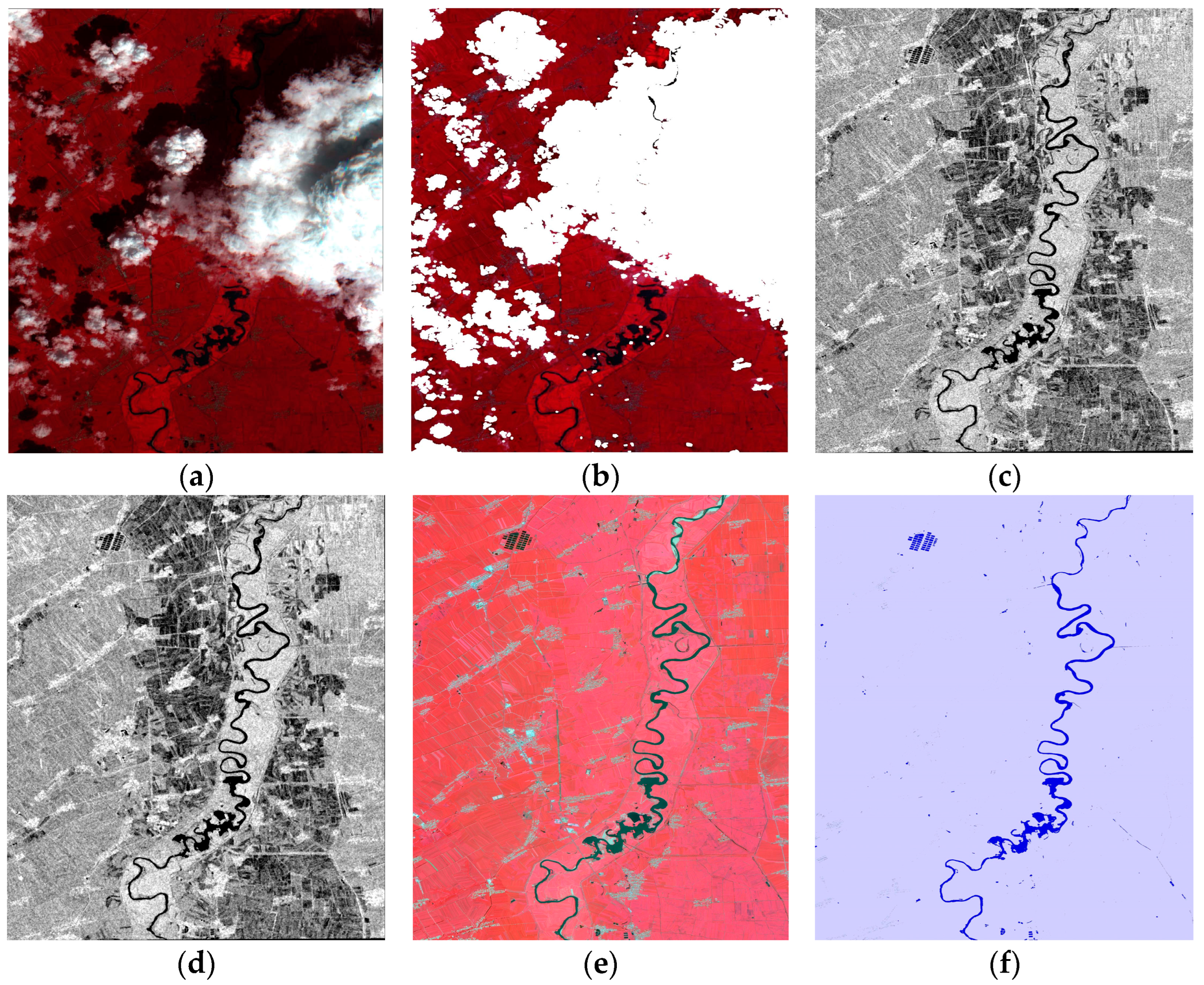

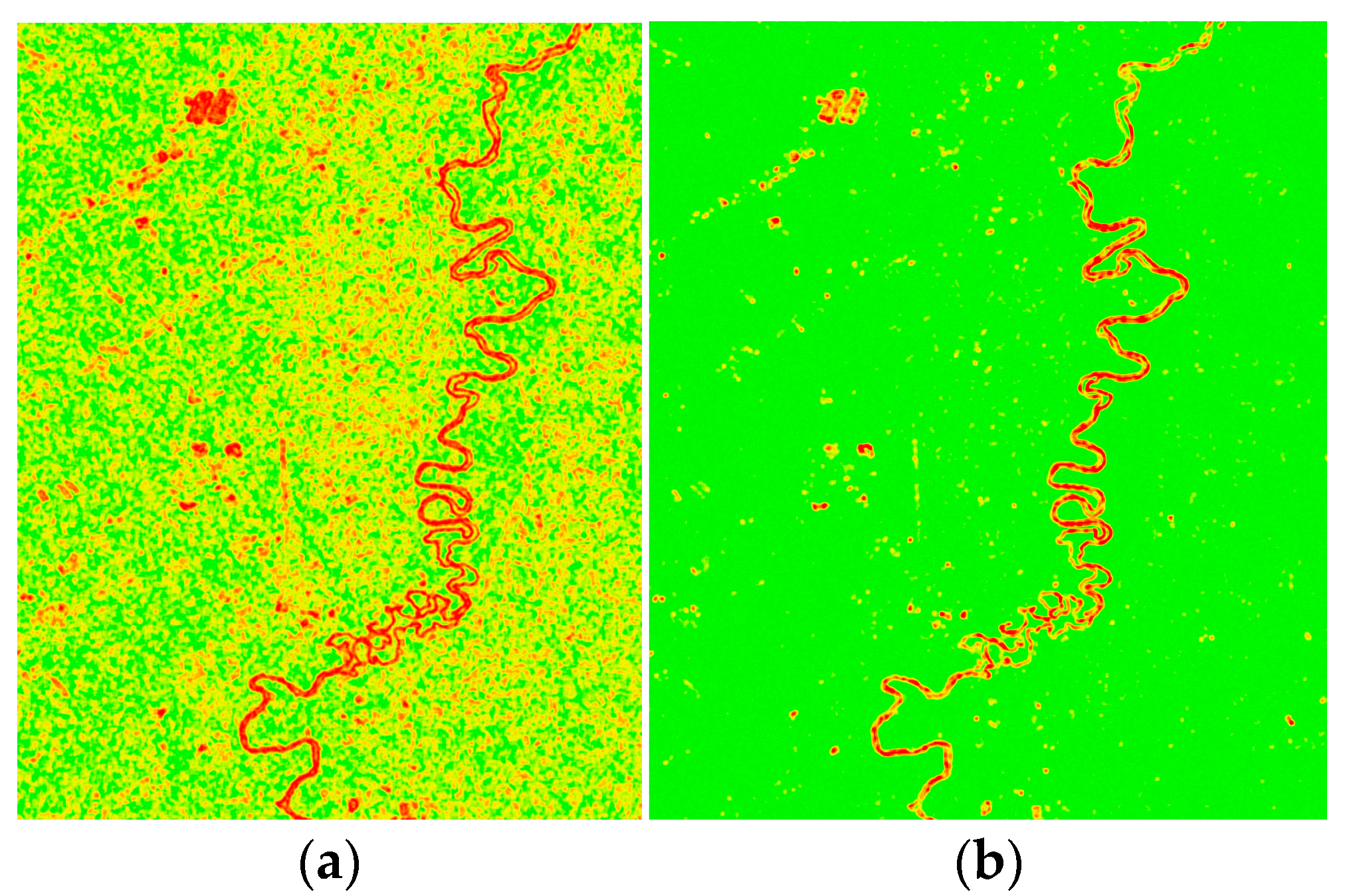

3.1. Image Preprocessing Results

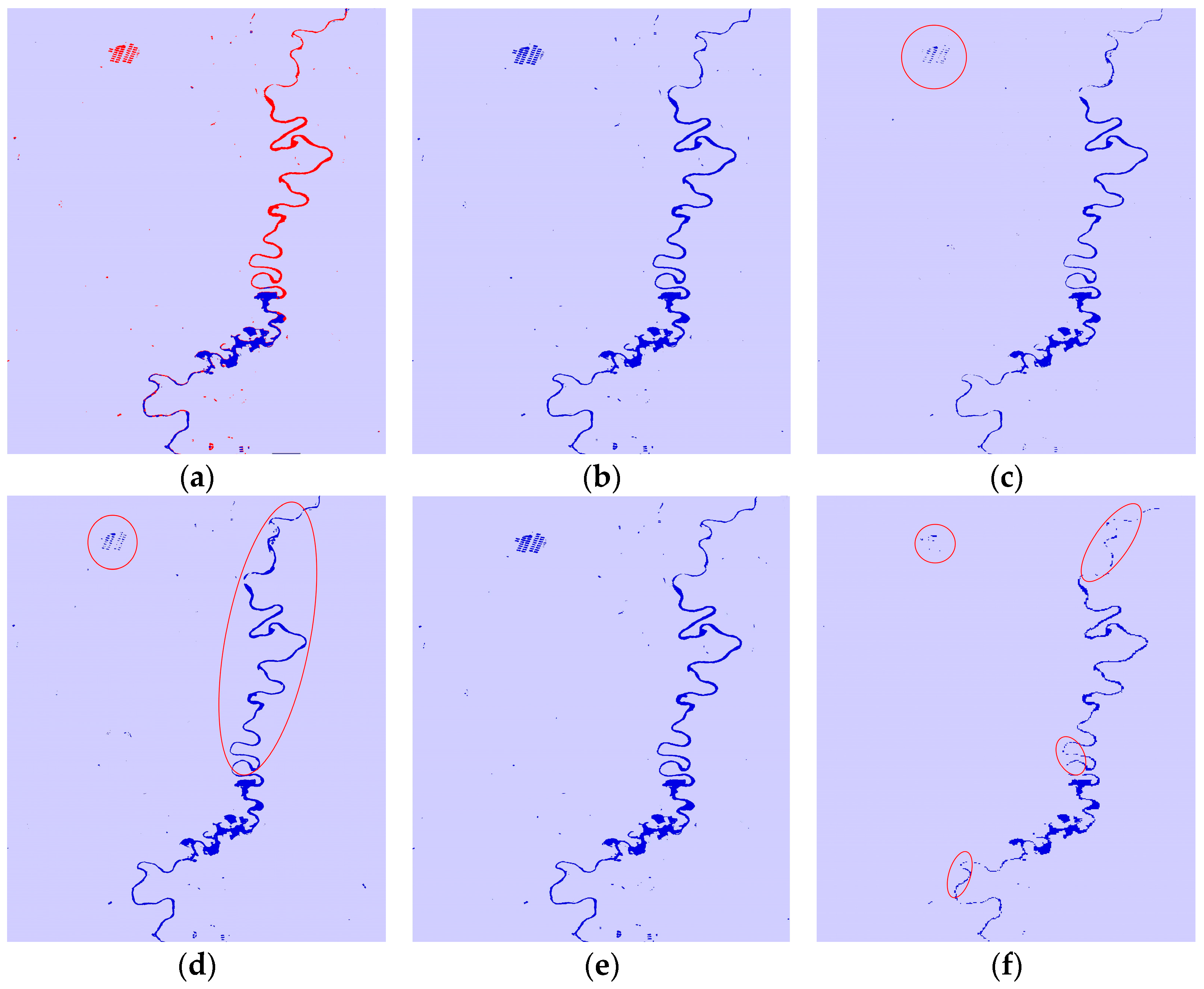

3.2. Results of Water Extraction of the Multisource Remote Sensing Data Fusion

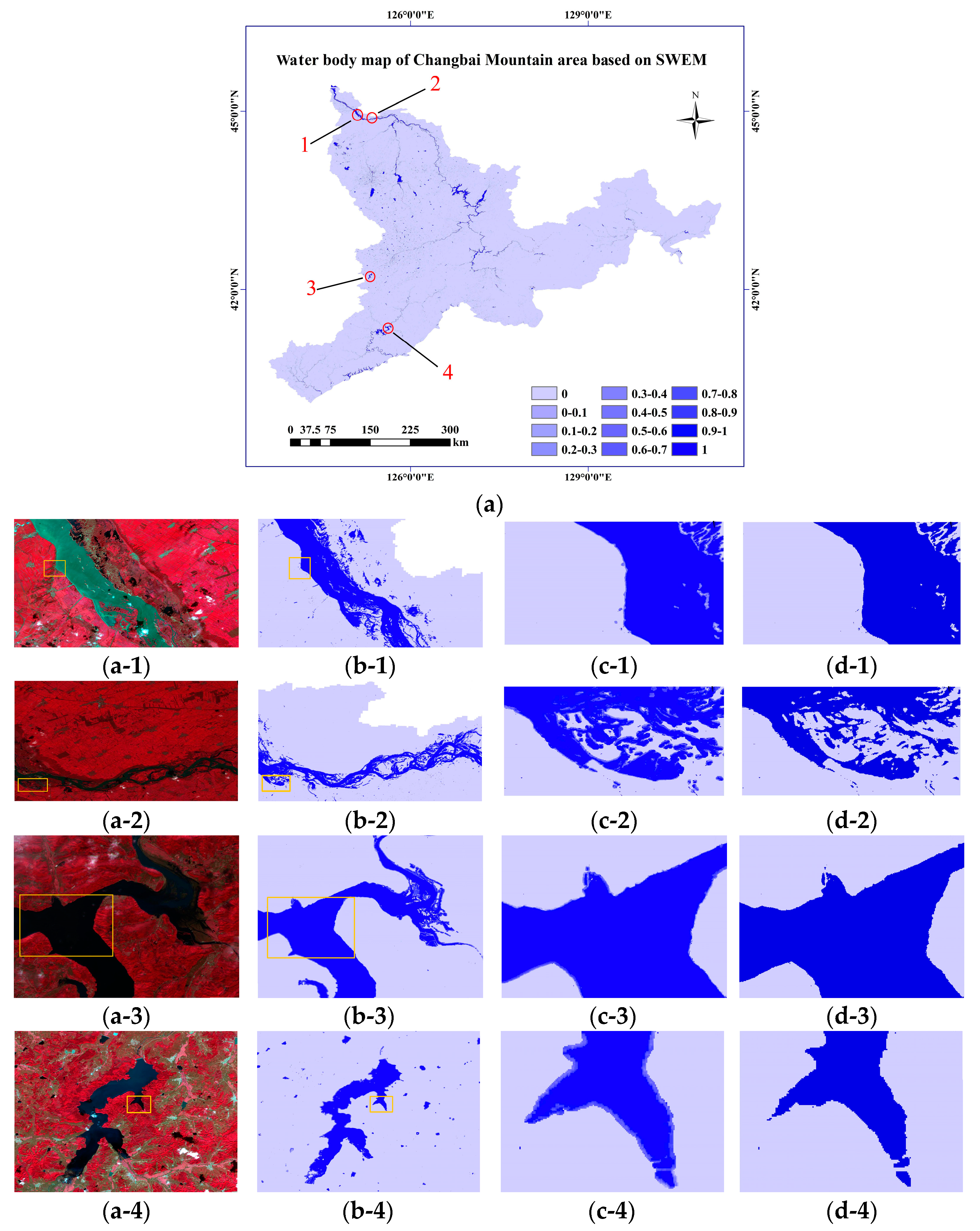

3.3. Water Extraction in the Changbai Mountain Area Based on MDFM

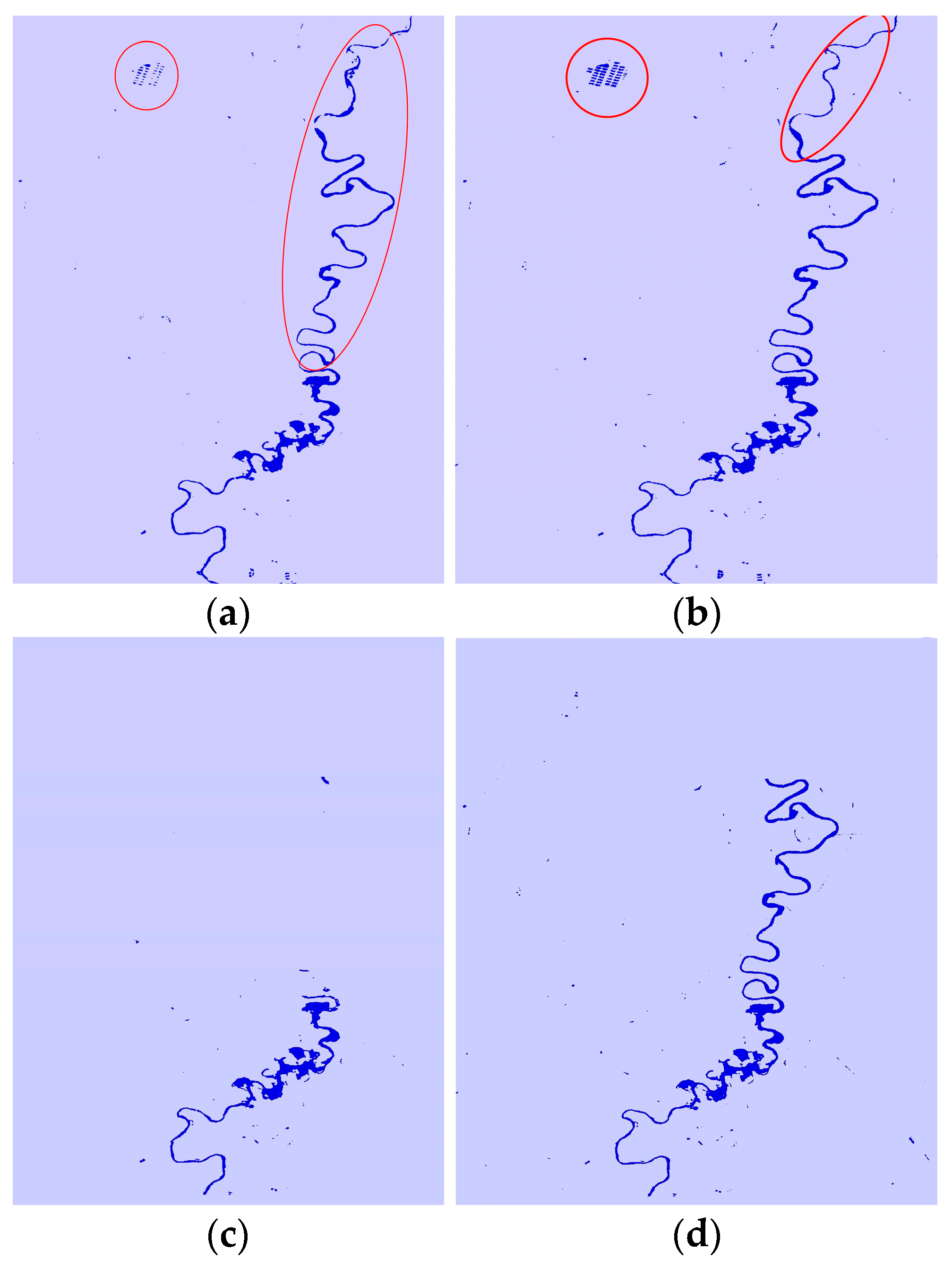

3.4. Water Extraction Results of MDFM and SWEM

3.5. Water Extraction Results of MDFM and SWEM in Changbai Mountain Area

3.6. Temporal Resolution of Water Extraction in the Changbai Mountain Area

4. Discussion

5. Conclusions

- (1) Under cloud-free and cloudy conditions, the MDFM achieves correlation coefficients of r = 0.90 and 0.83 and area accuracies of Parea = 0.87 and 0.79, respectively. These results match or exceed those obtained from optical images alone (r = 0.90 and 0.56; Parea = 0.86 and 0.38 under cloud-free and cloudy conditions, respectively) and from SAR images alone (r = 0.78; Parea = 0.63).

- (2) Under cloud-free conditions, combining the MDFM with the SWEM increases r and Parea relative to the MDFM alone by 2.22% and 9.20%, respectively. Relative to the JRC-GSWE, the increases are 41.54% and 85.09%. Under cloudy conditions, the combination yields r and Parea values 3.61% and 18.99% higher than those of the MDFM, and 32.31% and 84.31% higher than those of the JRC-GSWE. These results indicate that the MDFM and SWEM can further improve the accuracy of water extraction.

- (3) Water datasets for the Changbai Mountain area can be generated every 6–13 days, i.e., at high temporal resolution.
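The percentages in conclusion (2) behave like relative increases, (new − baseline) / baseline × 100, computed from the rounded r and Parea values in the results tables. The check below reproduces several of them, for example 2.22% and 9.20%. The helper name is hypothetical.

```python
def relative_increase_pct(new: float, baseline: float) -> float:
    """Relative increase of `new` over `baseline`, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (new - baseline) / baseline * 100.0


# Without clouds: MDFM and SWEM (optical image decomposition) vs. MDFM alone.
print(f"{relative_increase_pct(0.92, 0.90):.2f}%")  # r     -> 2.22%
print(f"{relative_increase_pct(0.95, 0.87):.2f}%")  # Parea -> 9.20%

# With clouds: MDFM and SWEM (spliced image decomposition) vs. MDFM alone.
print(f"{relative_increase_pct(0.86, 0.83):.2f}%")  # r     -> 3.61%
print(f"{relative_increase_pct(0.94, 0.79):.2f}%")  # Parea -> 18.99%
```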

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Biggs, J.; Von Fumetti, S.; Kelly-Quinn, M. The importance of small waterbodies for biodiversity and ecosystem services: Implications for policy makers. Hydrobiologia 2017, 793, 3–39.

- Druce, D.; Tong, X.; Lei, X.; Guo, T.; Kittel, C.M.; Grogan, K.; Tottrup, C. An optical and SAR based fusion approach for mapping surface water dynamics over Mainland China. Remote Sens. 2021, 13, 1663.

- Feyisa, G.L.; Meilby, H.; Fensholt, R.; Proud, S.R. Automated Water Extraction Index: A new technique for surface water mapping using Landsat imagery. Remote Sens. Environ. 2014, 140, 23–35.

- Morss, R.E.; Wilhelmi, O.V.; Downton, M.W.; Gruntfest, E. Flood risk, uncertainty, and scientific information for decision making: Lessons from an interdisciplinary project. Bull. Am. Meteorol. Soc. 2005, 86, 1593–1602.

- Luo, X.; Tong, X.; Hu, Z. An applicable and automatic method for earth surface water mapping based on multispectral images. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102472.

- McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.

- Qiu, J.; Cao, B.; Park, E.; Yang, X.; Zhang, W.; Tarolli, P. Flood monitoring in rural areas of the Pearl River Basin (China) using Sentinel-1 SAR. Remote Sens. 2021, 13, 1384.

- Pohl, C.; Van Genderen, J.L. Review article multisensor image fusion in remote sensing: Concepts, methods and applications. Int. J. Remote Sens. 1998, 19, 823–854.

- Chang, N.-B.; Bai, K.; Imen, S.; Chen, C.-F.; Gao, W. Multisensor satellite image fusion and networking for all-weather environmental monitoring. IEEE Syst. J. 2016, 12, 1341–1357.

- Jiang, X.; Li, G.; Liu, Y.; Zhang, X.-P.; He, Y. Homogeneous transformation based on deep-level features in heterogeneous remote sensing images. In Proceedings of the IGARSS 2019 - 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 106–109.

- Zhang, L.; Shen, H. Progress and future of remote sensing data fusion. J. Remote Sens. 2016, 20, 1050–1061.

- Zhang, T.; Ren, H.; Qin, Q.; Zhang, C.; Sun, Y. Surface water extraction from Landsat 8 OLI imagery using the LBV transformation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4417–4429.

- Wang, Q.; Blackburn, G.A.; Onojeghuo, A.O.; Dash, J.; Zhou, L.; Zhang, Y.; Atkinson, P.M. Fusion of Landsat 8 OLI and Sentinel-2 MSI data. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3885–3899.

- Tu, T.-M.; Huang, P.S.; Hung, C.-L.; Chang, C.-P. A fast intensity-hue-saturation fusion technique with spectral adjustment for IKONOS imagery. IEEE Geosci. Remote Sens. Lett. 2004, 1, 309–312.

- Yang, Y.; Han, C.; Kang, X.; Han, D. An overview on pixel-level image fusion in remote sensing. In Proceedings of the 2007 IEEE International Conference on Automation and Logistics, Jinan, China, 18–21 August 2007; pp. 2339–2344.

- Cakir, H.I.; Khorram, S. Pixel level fusion of panchromatic and multispectral images based on correspondence analysis. Photogramm. Eng. Remote Sens. 2008, 74, 183–192.

- Kulkarni, S.C.; Rege, P.P. Pixel level fusion techniques for SAR and optical images: A review. Inf. Fusion 2020, 59, 13–29.

- Benz, U.C.; Hofmann, P.; Willhauck, G.; Lingenfelder, I.; Heynen, M. Multi-resolution, object-oriented fuzzy analysis of remote sensing data for GIS-ready information. ISPRS J. Photogramm. Remote Sens. 2004, 58, 239–258.

- Sveinsson, J.R.; Ulfarsson, M.O.; Benediktsson, J.A. Cluster-based feature extraction and data fusion in the wavelet domain. In IGARSS 2001. Scanning the Present and Resolving the Future, Proceedings of the IEEE 2001 International Geoscience and Remote Sensing Symposium (Cat. No. 01CH37217), Sydney, NSW, Australia, 9–13 July 2001; IEEE: Piscataway, NJ, USA, 2001; Volume 2.

- Lehner, B.; Grill, G. Global river hydrography and network routing: Baseline data and new approaches to study the world’s large river systems. Hydrol. Process. 2013, 27, 2171–2186.

- Bonnefon, R.; Dhérété, P.; Desachy, J. Geographic information system updating using remote sensing images. Pattern Recogn. Lett. 2002, 23, 1073–1083.

- Yu, L.; Zhang, R.; Tian, S.; Yang, L.; Lv, Y. Deep multi-feature learning for water body extraction from Landsat imagery. Autom. Control Comput. Sci. 2018, 52, 517–527.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Li, M.; Wu, P.; Wang, B.; Park, H.; Yang, H.; Wu, Y. A deep learning method of water body extraction from high resolution remote sensing images with multisensors. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 3120–3132.

- Li, J.; Ma, R.; Cao, Z.; Xue, K.; Xiong, J.; Hu, M.; Feng, X. Satellite Detection of Surface Water Extent: A Review of Methodology. Water 2022, 14, 1148.

- Saghafi, M.; Ahmadi, A.; Bigdeli, B. Sentinel-1 and Sentinel-2 data fusion system for surface water extraction. J. Appl. Remote Sens. 2021, 15, 014521.

- Challa, S.; Koks, D. Bayesian and Dempster-Shafer fusion. Sadhana 2004, 29, 145–174.

- Fauvel, M.; Chanussot, J.; Benediktsson, J.A. Decision fusion for the classification of urban remote sensing images. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2828–2838.

- Benediktsson, J.A.; Kanellopoulos, I. Classification of multisource and hyperspectral data based on decision fusion. IEEE Trans. Geosci. Remote Sens. 1999, 37, 1367–1377.

- Pickens, A.H.; Hansen, M.C.; Hancher, M.; Stehman, S.V.; Tyukavina, A.; Potapov, P.; Marroquin, B.; Sherani, Z. Mapping and sampling to characterize global inland water dynamics from 1999 to 2018 with full Landsat time-series. Remote Sens. Environ. 2020, 243, 111792.

- Downing, J.A.; Cole, J.J.; Duarte, C.; Middelburg, J.J.; Melack, J.M.; Prairie, Y.T.; Kortelainen, P.; Striegl, R.G.; McDowell, W.H.; Tranvik, L.J. Global abundance and size distribution of streams and rivers. Inland Waters 2012, 2, 229–236.

- Kristensen, P.; Globevnik, L. European small water bodies. Biol. Environ. Proc. R. Ir. Acad. 2014, 114, 281–287.

- Carroll, M.L.; Townshend, J.R.; DiMiceli, C.M.; Noojipady, P.; Sohlberg, R.A. A new global raster water mask at 250 m resolution. Int. J. Digit. Earth 2009, 2, 291–308.

- Yamazaki, D.; Sato, T.; Kanae, S.; Hirabayashi, Y.; Bates, P.D. Regional flood dynamics in a bifurcating mega delta simulated in a global river model. Geophys. Res. Lett. 2014, 41, 3127–3135.

- Lehner, B.; Verdin, K.; Jarvis, A. New global hydrography derived from spaceborne elevation data. Eos Trans. Am. Geophys. Union 2008, 89, 93–94.

- Aires, F.; Prigent, C.; Fluet-Chouinard, E.; Yamazaki, D.; Papa, F.; Lehner, B. Comparison of visible and multi-satellite global inundation datasets at high-spatial resolution. Remote Sens. Environ. 2018, 216, 427–441.

- Mullissa, A.; Vollrath, A.; Odongo-Braun, C.; Slagter, B.; Balling, J.; Gou, Y.; Gorelick, N.; Reiche, J. Sentinel-1 SAR backscatter analysis ready data preparation in Google Earth Engine. Remote Sens. 2021, 13, 1954.

- Vollrath, A.; Mullissa, A.; Reiche, J. Angular-based radiometric slope correction for Sentinel-1 on Google Earth Engine. Remote Sens. 2020, 12, 1867.

- Lee, J.-S. Refined filtering of image noise using local statistics. Comput. Graph. Image Process. 1981, 15, 380–389.

- Pratt, W.K. Correlation techniques of image registration. IEEE Trans. Aerosp. Electron. Syst. 1974, 3, 353–358.

- Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random forests for land cover classification. Pattern Recognit. Lett. 2006, 27, 294–300.

- Xu, H. Modification of normalised difference water index (NDWI) to enhance open water features in remotely sensed imagery. Int. J. Remote Sens. 2006, 27, 3025–3033.

- Crippen, R.E. Calculating the vegetation index faster. Remote Sens. Environ. 1990, 34, 71–73.

- Fisher, A.; Flood, N.; Danaher, T. Comparing Landsat water index methods for automated water classification in eastern Australia. Remote Sens. Environ. 2016, 175, 167–182.

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 6, 679–698.

- Heinz, D.; Chang, C.-I.; Althouse, M.L. Fully constrained least-squares based linear unmixing [hyperspectral image classification]. In Proceedings of the IEEE 1999 International Geoscience and Remote Sensing Symposium. IGARSS’99 (Cat. No. 99CH36293), Hamburg, Germany, 28 June–2 July 1999; IEEE: Piscataway, NJ, USA, 1999; 2, pp. 1401–1403.

- Wang, L.; Liu, D.; Wang, Q. Geometric method of fully constrained least squares linear spectral mixture analysis. IEEE Trans. Geosci. Remote Sens. 2012, 51, 3558–3566.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the Dimensionality of Data with Neural Networks. Science 2006, 313, 504–507.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

| Band | Band Name | Wavelength Range (nm) | Resolution (m) |
|---|---|---|---|
| B1 | Blue | 450–510 | 0.75 |
| B2 | Green | 510–580 | 0.75 |
| B3 | Red | 630–690 | 0.75 |
| B4 | NIR | 770–895 | 0.75 |

| Name | Abbreviation | Equation | Reference |
|---|---|---|---|
| Normalized difference water index | NDWI | | [6] |
| Modified normalized difference water index | MNDWI | | [42] |
| Normalized difference vegetation index | NDVI | | [43] |
| Automated water extraction index (1) | AWEI_sh | | [3] |
| Automated water extraction index (2) | AWEI_nsh | | [2] |
| Water index 2015 | WI 2015 | | [44] |
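The equation column of the table above is not preserved. For reference, the listed indices can be written in their commonly published forms from the cited sources. The sketch below uses those standard formulas on surface reflectance (0–1 scale) and rests on these assumptions:

- The Sentinel-2 band mapping is Blue = B2, Green = B3, Red = B4, NIR = B8, SWIR1 = B11, SWIR2 = B12.
- The coefficients are the published defaults. The study may have adapted the formulas.
- The class and function names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class Reflectance:
    """Surface reflectance (0-1) of one pixel.

    Assumed Sentinel-2 mapping: blue=B2, green=B3, red=B4, nir=B8, swir1=B11, swir2=B12.
    """
    blue: float
    green: float
    red: float
    nir: float
    swir1: float
    swir2: float


def normalized_difference(a: float, b: float) -> float:
    """(a - b) / (a + b); NaN when the denominator is zero."""
    total = a + b
    return (a - b) / total if total != 0 else math.nan


def water_indices(p: Reflectance) -> Dict[str, float]:
    """Standard published forms of the indices listed in the table."""
    return {
        "NDWI": normalized_difference(p.green, p.nir),     # McFeeters (1996)
        "MNDWI": normalized_difference(p.green, p.swir1),  # Xu (2006)
        "NDVI": normalized_difference(p.nir, p.red),       # vegetation index
        # Feyisa et al. (2014), shadow variant
        "AWEI_sh": p.blue + 2.5 * p.green - 1.5 * (p.nir + p.swir1) - 0.25 * p.swir2,
        # Feyisa et al. (2014), no-shadow variant
        "AWEI_nsh": 4 * (p.green - p.swir1) - (0.25 * p.nir + 2.75 * p.swir2),
        # Fisher et al. (2016)
        "WI2015": 1.7204 + 171 * p.green + 3 * p.red - 70 * p.nir - 45 * p.swir1 - 71 * p.swir2,
    }


# Usage: a hypothetical water-like pixel (high green, low NIR/SWIR reflectance).
values = water_indices(Reflectance(blue=0.08, green=0.10, red=0.06,
                                   nir=0.03, swir1=0.02, swir2=0.01))
```

For open water, the water indices are typically positive and NDVI is negative, which is why they are useful together as RF input features.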

| Category | Method | r | Parea |
|---|---|---|---|
| Without clouds | Optical image only | 0.90 | 0.86 |
| | MDFM | 0.90 | 0.87 |
| With clouds | Optical image only | 0.56 | 0.38 |
| | MDFM | 0.83 | 0.79 |
| | SAR image only | 0.78 | 0.63 |
| | JRC-GSWE | 0.65 | 0.51 |
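The r column in the table above is a correlation coefficient between extracted and reference water values. This excerpt does not define it, so the sketch below makes these assumptions:

- r is the usual Pearson product-moment coefficient.
- It is computed over paired per-grid-cell values, for example model output against sample labels.
- Parea is not reproduced because its definition is not given here.
- The function name is hypothetical.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("r is undefined when either sample is constant")
    return cov / math.sqrt(var_x * var_y)


# Usage with hypothetical per-cell water fractions (extracted vs. reference).
extracted = [0.0, 0.2, 0.9, 1.0, 0.5]
reference = [0.0, 0.3, 1.0, 1.0, 0.4]
r = pearson_r(extracted, reference)
```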

| Category | Method | r | Increase in r | Parea | Increase in Parea |
|---|---|---|---|---|---|
| Without clouds | MDFM | 0.90 | / | 0.87 | / |
| | (MDFM and SWEM)_MDFM (Optical image decomposition) | 0.92 | 2.22% | 0.95 | 9.20% |
| | (MDFM and SWEM)_JRC-GSWE (Optical image decomposition) | 0.92 | 41.54% | 0.95 | 85.09% |
| | (MDFM and SWEM)_MDFM (SAR image decomposition) | 0.91 | 1.11% | 0.88 | 1.15% |
| | (MDFM and SWEM)_JRC-GSWE (SAR image decomposition) | 0.91 | 40.00% | 0.88 | 72.55% |
| With clouds | MDFM | 0.83 | / | 0.79 | / |
| | (MDFM and SWEM)_MDFM (Spliced image decomposition) | 0.86 | 3.61% | 0.94 | 18.99% |
| | (MDFM and SWEM)_JRC-GSWE (Spliced image decomposition) | 0.86 | 32.31% | 0.94 | 84.31% |
| | (MDFM and SWEM)_MDFM (SAR image decomposition) | 0.86 | 3.61% | 0.88 | 11.39% |
| | (MDFM and SWEM)_JRC-GSWE (SAR image decomposition) | 0.86 | 32.31% | 0.88 | 72.55% |
| | JRC-GSWE | 0.65 | / | 0.51 | / |

| No. | Image Type | Time Interval | Revisit Time (days) | Minimum Image Synthesis Time (days) | Number of Images | Date of Indispensable Image |
|---|---|---|---|---|---|---|
| 1 | Optical image | 2020.09.03–2020.09.08 | 5 | 5 | 64 | / |
| | SAR image | 2020.09.05–2020.09.11 | 6 | 6 | 12 | 2020.09.05 |
| 2 | Optical image | 2020.09.08–2020.09.13 | 5 | 5 | 63 | / |
| | SAR image | 2020.09.11–2020.09.24 | 6 | 13 | 22 | 2020.09.11 |
| 3 | Optical image | 2020.09.13–2020.09.18 | 5 | 5 | 64 | / |
| | SAR image | 2020.09.24–2020.10.06 | 6 | 12 | 20 | 2020.10.05 |
| 4 | Optical image | 2020.09.18–2020.09.23 | 5 | 5 | 64 | / |
| | SAR image | 2020.10.06–2020.10.18 | 6 | 12 | 20 | 2020.10.17 |
| 5 | Optical image | 2020.09.23–2020.09.28 | 5 | 5 | 70 | / |
| | SAR image | 2020.10.18–2020.10.30 | 6 | 12 | 20 | 2020.10.29 |
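In the table above, the minimum image synthesis time of each row equals the length of its time interval. The 6–13 day range quoted in the conclusions follows from the SAR rows. The check below recomputes those lengths from the dates in the table. The function names are hypothetical.

```python
from datetime import datetime
from typing import List, Tuple

DATE_FMT = "%Y.%m.%d"  # dates are written as YYYY.MM.DD in the table


def interval_days(interval: str) -> int:
    """Length in days of an interval written as 'YYYY.MM.DD–YYYY.MM.DD'."""
    # Accept either an en dash or a plain hyphen as the separator.
    start_s, end_s = interval.replace("–", "-").split("-")
    start = datetime.strptime(start_s.strip(), DATE_FMT).date()
    end = datetime.strptime(end_s.strip(), DATE_FMT).date()
    return (end - start).days


# SAR time intervals copied from rows 1-5 of the table.
SAR_INTERVALS: List[str] = [
    "2020.09.05–2020.09.11",
    "2020.09.11–2020.09.24",
    "2020.09.24–2020.10.06",
    "2020.10.06–2020.10.18",
    "2020.10.18–2020.10.30",
]


def synthesis_range(intervals: List[str]) -> Tuple[int, int]:
    """Shortest and longest interval length, in days."""
    days = [interval_days(s) for s in intervals]
    return min(days), max(days)


print(synthesis_range(SAR_INTERVALS))  # (6, 13)
```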

| Category | Method | r | Parea |
|---|---|---|---|
| Without clouds | Optical image only | 0.90 | 0.86 |
| | MDFM | 0.90 | 0.87 |
| | WatNet | 0.83 | 0.76 |
| With clouds | Optical image only | 0.56 | 0.38 |
| | MDFM | 0.83 | 0.79 |
| | WatNet | 0.64 | 0.44 |
| | SAR image only | 0.78 | 0.63 |
| | JRC-GSWE | 0.65 | 0.51 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, X.; Gao, F.; Li, Y.; Wang, B.; Li, X. All-Weather and Superpixel Water Extraction Methods Based on Multisource Remote Sensing Data Fusion. Remote Sens. 2022, 14, 6177. https://doi.org/10.3390/rs14236177
