Author Contributions

Conceptualization, F.T. and S.G.; Methodology, F.T., S.N. and S.G.; Software, F.T.; Formal analysis, F.T. and S.N.; Resources, G.B.; Data curation, S.N.; Writing—original draft, F.T.; Writing—review & editing, F.T., S.N., G.B. and S.G.; Supervision, G.B. and S.G.; Project administration, G.B. and S.G.; Funding acquisition, G.B. and S.G. All authors have read and agreed to the published version of the manuscript.

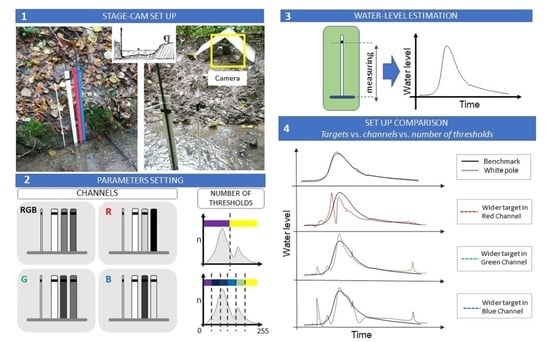

Figure 1.

Experimental setup, including a white pole, three colored bars, and a wildlife camera.

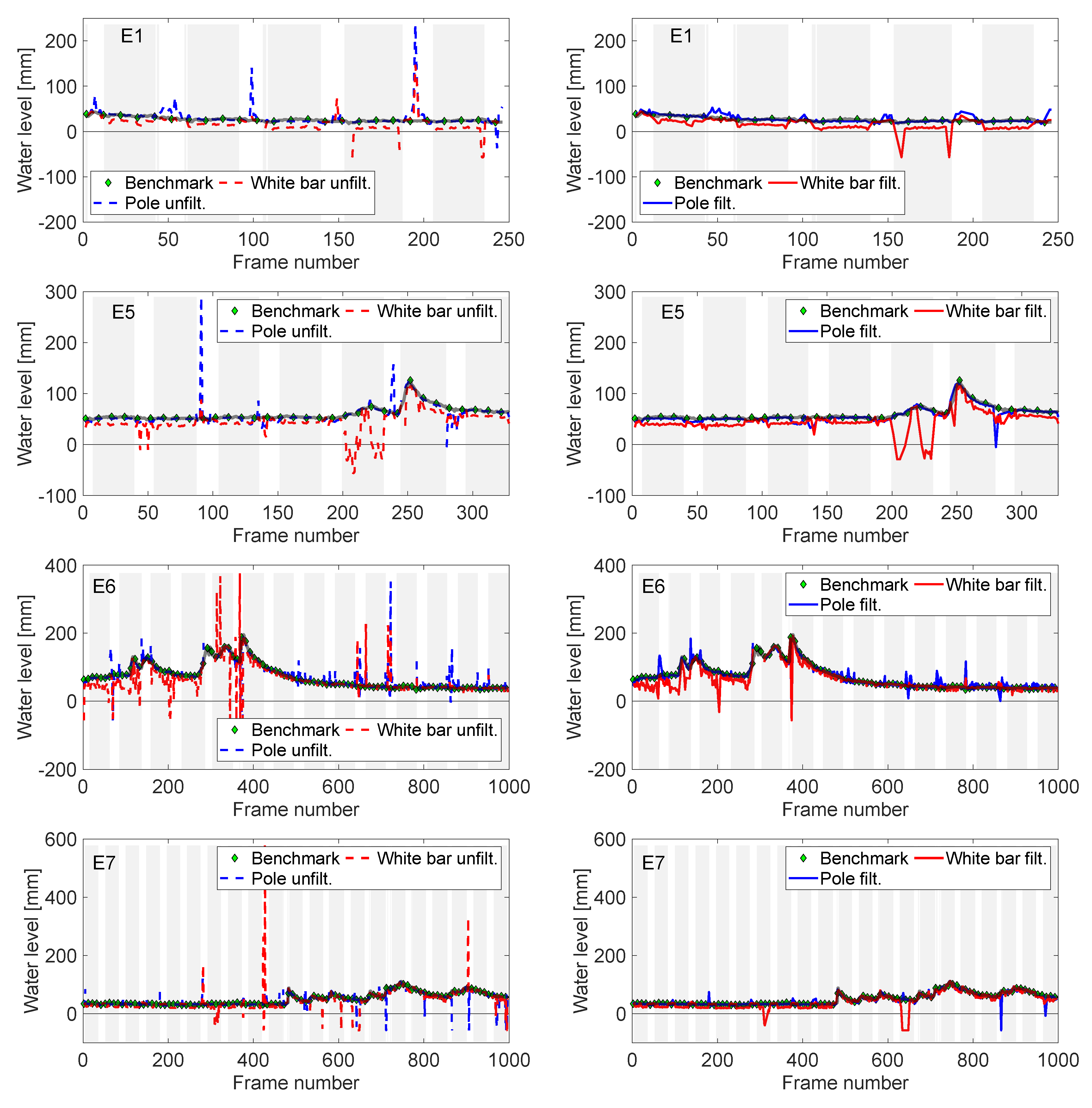

Figure 2.

Unfiltered (left) and filtered (right) water levels computed from the pole and the white bar for experimental tests E1 and E5–E7. Benchmark water levels are in green markers and transparent black; pole unfiltered and filtered in dashed and solid blue, respectively; and white bar unfiltered and filtered in dashed and solid red, respectively. Light gray vertical bars indicate NIR frames.

Figure 3.

Examples of good-quality (left) and poor-quality (right) images taken during experiments E5–E7 (from top to bottom). Right-side pictures led to large deviations from the benchmark.

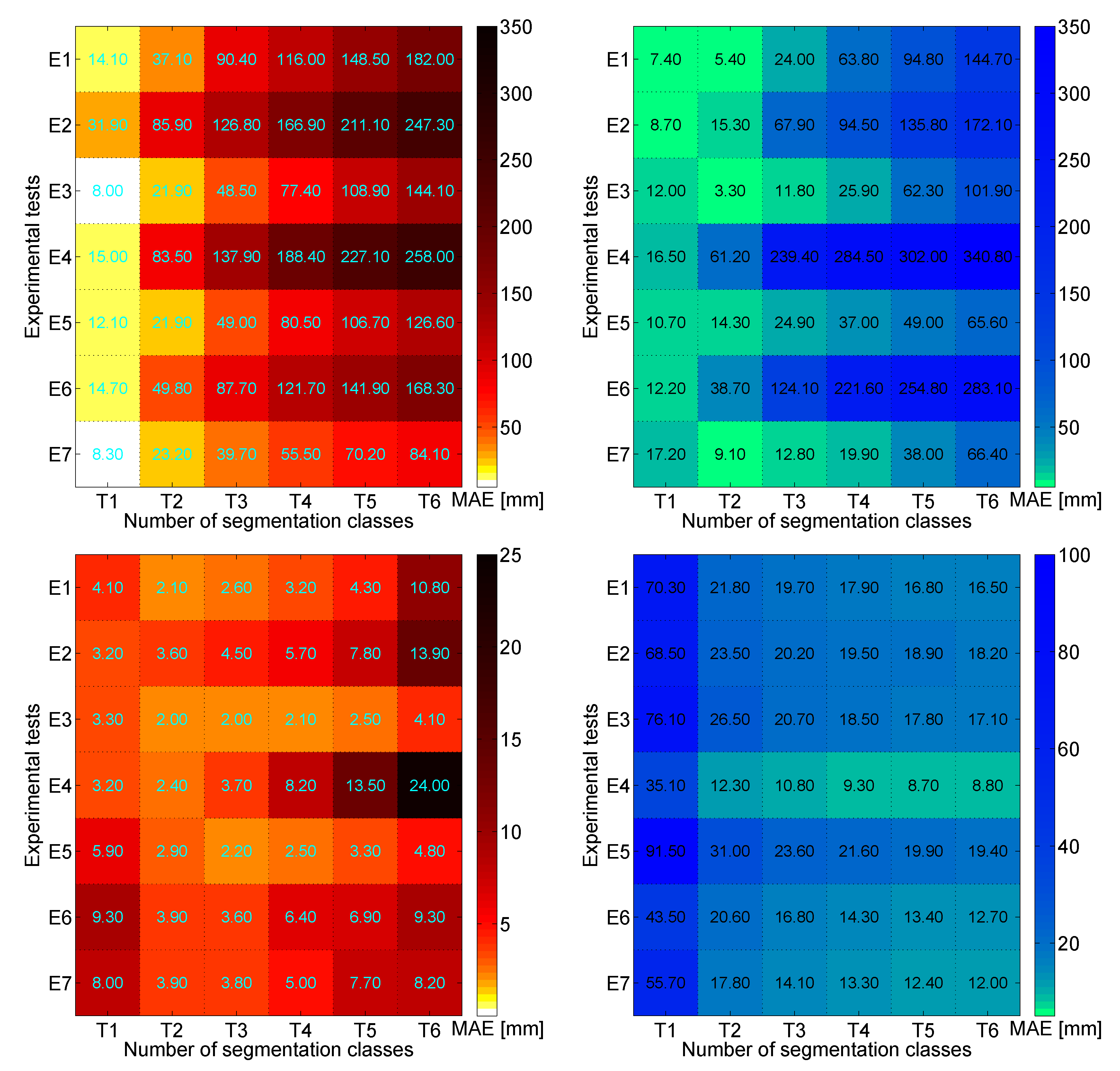

Figure 4.

Mean absolute error (MAE) between unfiltered and a priori known pole/white bar lengths for all experimental tests (E1 to E7). Left-side heatmaps show values estimated from images of the pole in RGB (top) and NIR (bottom). Right-side heatmaps show values estimated from images of the white bar in RGB (top) and NIR (bottom). Values are reported for all segmentation classes (T1 to T6).
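
The MAE reported in these heatmaps can be illustrated with a minimal sketch, assuming the standard definition (the average of the absolute differences between each image-based estimate and the known length). The function name and the sample values below are hypothetical and are not taken from the experiments.

```python
from typing import Sequence


def mean_absolute_error(estimated: Sequence[float], reference: Sequence[float]) -> float:
    """Average of |estimate - reference| over paired samples."""
    if len(estimated) != len(reference):
        raise ValueError("sequences must have the same length")
    if not estimated:
        raise ValueError("at least one sample is required")
    return sum(abs(e - r) for e, r in zip(estimated, reference)) / len(estimated)


# Hypothetical data: three length estimates compared with one known length.
known_length = 100.0
estimates = [98.0, 104.0, 100.0]
mae = mean_absolute_error(estimates, [known_length] * len(estimates))
print(mae)  # 2.0
```

In this reading, each heatmap cell would hold one such value for a given test and segmentation class.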

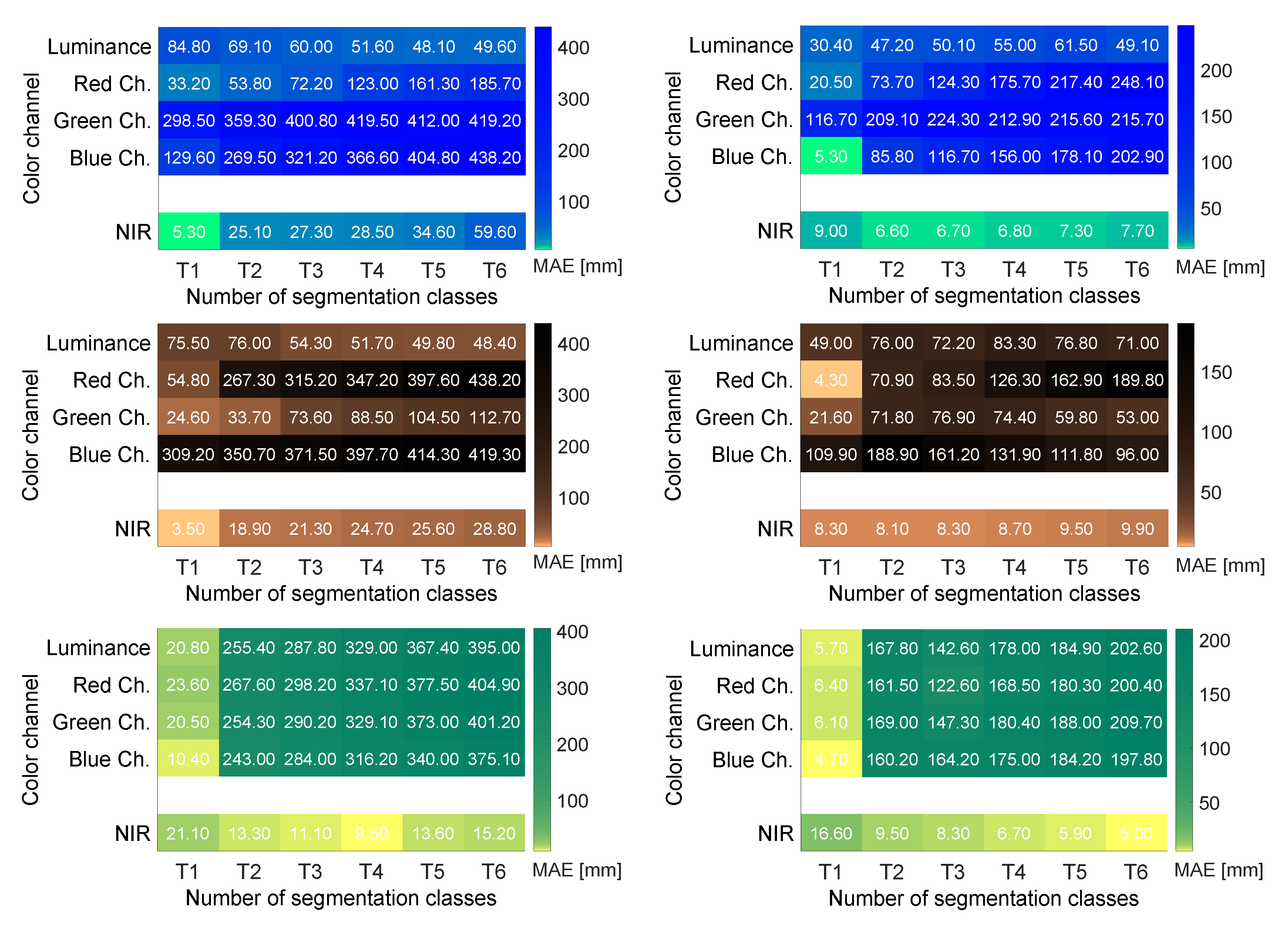

Figure 5.

Mean absolute error (MAE) between unfiltered and a priori known bar lengths for experimental tests E6 (left) and E7 (right), treated with the narrow image crop. Heatmaps show MAE values estimated from images of the blue (top), red (middle), and white (bottom) bars. In each heatmap, rows indicate MAEs obtained for different color channels: the top four rows report values for RGB images processed through four gray-scale conversion modalities (luminance, red, green, and blue channels), and the bottom row reports values for NIR images. Values are reported for all segmentation classes (T1 to T6).
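
The four gray-scale conversion modalities named in this caption can be sketched for a single pixel as follows. This is an illustration only: the luminance weights are the common ITU-R BT.601 values, which is an assumption because the caption does not state which weights were used, and the function and constant names are hypothetical.

```python
from typing import Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each in 0..255

# Assumed luminance weights (ITU-R BT.601); the source does not specify them.
LUMA_WEIGHTS = (0.299, 0.587, 0.114)


def to_gray(pixel: Pixel, modality: str) -> float:
    """Convert one RGB pixel to a gray level using the chosen modality."""
    r, g, b = pixel
    if modality == "luminance":
        wr, wg, wb = LUMA_WEIGHTS
        return wr * r + wg * g + wb * b
    if modality == "red":
        return float(r)
    if modality == "green":
        return float(g)
    if modality == "blue":
        return float(b)
    raise ValueError(f"unknown modality: {modality}")


# Hypothetical reddish pixel: it is bright in the red channel and dark in the others.
sample = (200, 50, 50)
for name in ("luminance", "red", "green", "blue"):
    print(name, to_gray(sample, name))
```

The example shows why the choice of channel matters for colored targets: the same pixel yields very different gray levels depending on the modality.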

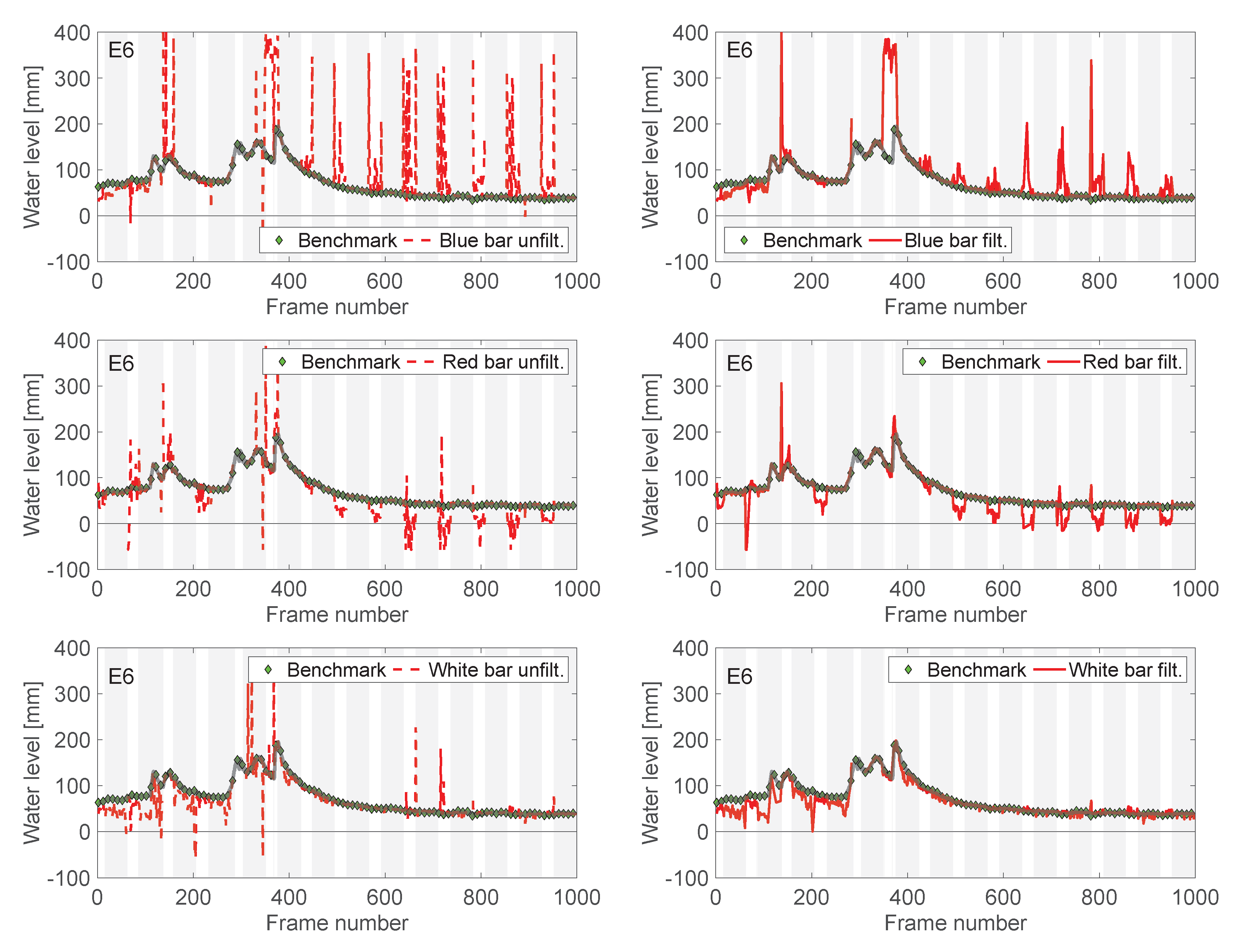

Figure 6.

Raw (left) and filtered (right) water levels computed from the bars (top: blue, middle: red, bottom: white) for experimental test E6. Benchmark water levels are in green markers and transparent black; bar raw and filtered in dashed and solid red, respectively. Light gray vertical bars indicate NIR frames. Images are treated with the narrow image crop.

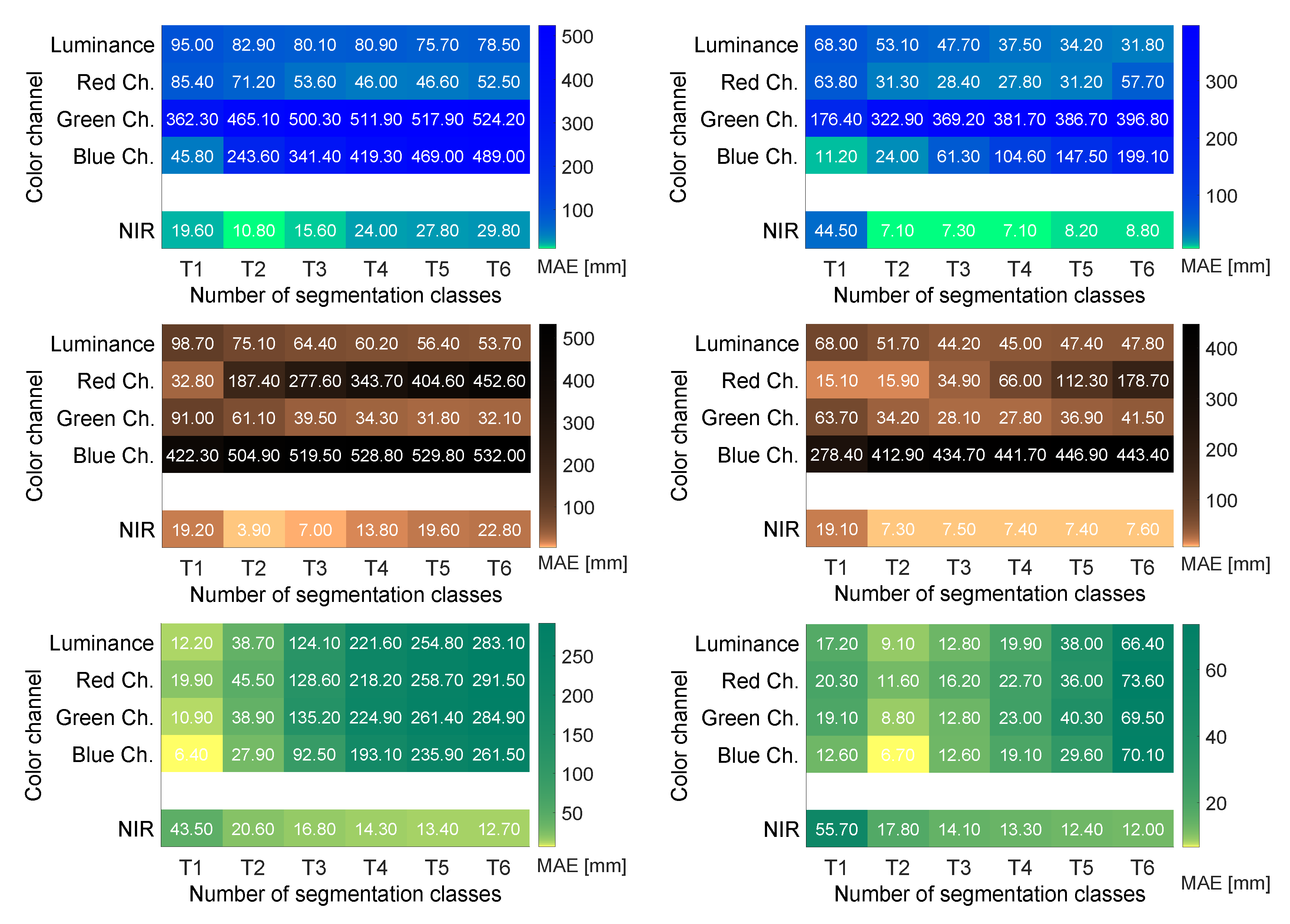

Figure 7.

Mean absolute error (MAE) between unfiltered and a priori known bar lengths for experimental tests E6 (left) and E7 (right), treated with the large image crop. Heatmaps show MAE values estimated from images of the blue (top), red (middle), and white (bottom) bars. In each heatmap, rows indicate MAEs obtained for different color channels: the top four rows report values for RGB images processed through four gray-scale conversion modalities (luminance, red, green, and blue channels), and the bottom row reports values for NIR images. Values are reported for all segmentation classes (T1 to T6).

Figure 8.

Raw (left) and filtered (right) water levels computed from the bars (top: blue, middle: red, bottom: white) for experimental test E6. Benchmark water levels are in green markers and transparent black; bar raw and filtered in dashed and solid red, respectively. Light gray vertical bars indicate NIR frames. Images are treated with the large image crop.

Table 1.

Initial and final dates, times, and number of frames for the experimental tests.

| Test | Initial Date and Time | Final Date and Time | Number of Frames |
|---|---|---|---|
| E1 | 2020/10/15 10:40 | 2020/10/20 14:45 | 249 |
| E2 | 2020/10/20 15:20 | 2020/10/29 10:20 | 423 |
| E3 | 2020/10/29 11:20 | 2020/11/06 13:20 | 389 |
| E4 | 2020/11/17 11:30 | 2020/11/27 11:30 | 481 |
| E5 | 2020/11/27 12:16 | 2020/12/04 09:15 | 331 |
| E6A | 2020/12/04 11:00 | 2020/12/08 09:00 | 283 |
| E6B | 2020/12/08 18:00 | 2020/12/18 07:00 | 690 |
| E7 | 2020/12/18 14:00 | 2021/01/08 09:30 | 1000 |

Table 2.

Light conditions during each experimental test. Abbreviations “morn.” and “aft.” stand for morning and afternoon, respectively. The index G quantifies the average intensity of gray-scale images captured during the day.

| Test | Light Conditions | G |
|---|---|---|
| E1 | Diffused sunlight in morn. and aft.; weakly scattered in the day | 83 |
| E2 | Diffused sunlight in morn. and aft.; weakly scattered in the day | 86 |
| E3 | Diffused sunlight in morn. and aft.; weakly scattered in the day | 90 |
| E4 | Diffused sunlight in early morn. and aft.; scattered in the day | 93 |
| E5 | Diffused sunlight in early morn. and aft.; scattered in the day | 95 |
| E6 | Diffused sunlight in morn. and aft.; weakly scattered in the day | 104 |
| E7 | Diffused sunlight in early morn. and aft.; scattered in the day | 101 |
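
One possible reading of the index G in Table 2 is sketched below, assuming it is the mean of the per-image mean gray level over the daytime frames of a test, on a 0 to 255 scale. That interpretation, the function names, and the tiny 2 × 2 sample frames are assumptions for illustration and are not taken from the experiments.

```python
from typing import Sequence

GrayImage = Sequence[Sequence[float]]  # rows of gray levels


def mean_intensity(image: GrayImage) -> float:
    """Mean gray level over all pixels of one image."""
    pixels = [value for row in image for value in row]
    if not pixels:
        raise ValueError("image has no pixels")
    return sum(pixels) / len(pixels)


def index_g(daytime_images: Sequence[GrayImage]) -> float:
    """Assumed definition of G: average of the per-image mean intensities."""
    if not daytime_images:
        raise ValueError("at least one image is required")
    return sum(mean_intensity(img) for img in daytime_images) / len(daytime_images)


# Hypothetical 2x2 daytime frames (gray levels in 0..255).
frames = [
    [[80, 90], [100, 110]],  # mean 95
    [[70, 70], [90, 90]],    # mean 80
]
g = index_g(frames)
print(g)  # 87.5
```

Under this reading, a larger G corresponds to brighter daytime images on average.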

Table 3.

Rainfall characteristics (average intensity, I, and duration) for experimental tests conducted during precipitation events.

| Test | Rainfall Event Initial Date and Time | I [mm/h] | Duration [min] |
|---|---|---|---|
| E2 | 2020/10/24 07:30 | 4.8 | 80 |
| | 2020/10/26 21:50 | 4.6 | 50 |
| E4 | 2020/11/20 05:40 | 3.2 | 30 |
| | 2020/11/20 07:10 | 3.2 | 260 |
| E5 | 2020/12/01 16:20 | 3.0 | 20 |
| | 2020/12/01 19:00 | 1.6 | 120 |
| | 2020/12/01 23:10 | 2.1 | 70 |
| | 2020/12/02 10:30 | 3.3 | 450 |
| E6 | 2020/12/05 04:40 | 1.7 | 120 |
| | 2020/12/05 13:10 | 1.9 | 50 |
| | 2020/12/05 22:20 | 5.0 | 240 |
| | 2020/12/06 06:30 | 3.4 | 330 |
| | 2020/12/06 13:10 | 5.5 | 50 |
| | 2020/12/07 03:40 | 2.1 | 40 |
| | 2020/12/07 07:40 | 2.0 | 30 |
| | 2020/12/08 01:00 | 3.6 | 630 |
| | 2020/12/08 17:40 | 2.4 | 250 |
| | 2020/12/08 23:20 | 2.7 | 80 |
| | 2020/12/09 03:40 | 1.4 | 60 |
| | 2020/12/09 12:30 | 7.2 | 60 |
| | 2020/12/09 21:40 | 2.0 | 30 |
| | 2020/12/10 07:30 | 2.8 | 30 |
| E7 | 2020/12/28 07:20 | 2.4 | 70 |
| | 2020/12/28 11:40 | 4.7 | 160 |
| | 2020/12/28 15:40 | 2.3 | 110 |
| | 2020/12/29 14:30 | 4.2 | 80 |
| | 2020/12/29 17:40 | 2.6 | 60 |
| | 2020/12/30 04:00 | 1.5 | 40 |
| | 2020/12/30 10:50 | 2.8 | 30 |
| | 2020/12/30 13:40 | 4.7 | 90 |
| | 2021/01/01 09:00 | 2.7 | 220 |
| | 2021/01/01 15:20 | 2.4 | 30 |
| | 2021/01/02 03:30 | 2.2 | 50 |
| | 2021/01/02 05:50 | 3.5 | 140 |
| | 2021/01/02 10:20 | 2.6 | 210 |
| | 2021/01/02 20:10 | 3.8 | 60 |
| | 2021/01/03 02:40 | 3.6 | 50 |
| | 2021/01/03 14:20 | 1.2 | 100 |
| | 2021/01/04 16:40 | 1.8 | 40 |
| | 2021/01/05 05:50 | 2.1 | 120 |
| | 2021/01/05 11:30 | 3.6 | 80 |
| | 2021/01/05 23:10 | 1.9 | 180 |
| | 2021/01/07 00:20 | 3.6 | 20 |

Table 4.

Optimal number of segmentation classes for RGB and NIR images.

| Test | Pole (RGB) | Pole (NIR) | White Bar (RGB) | White Bar (NIR) |
|---|---|---|---|---|
| E1 | 1 | 2 | 2 | 6 |
| E2 | 1 | 1 | 1 | 6 |
| E3 | 1 | 3 | 2 | 6 |
| E4 | 1 | 2 | 1 | 5 |
| E5 | 1 | 3 | 1 | 6 |
| E6 | 1 | 3 | 1 | 6 |
| E7 | 1 | 3 | 2 | 6 |

Table 5.

MAE between filtered and benchmark lengths estimated from the entire sequence of RGB and NIR images for the pole and white bar.

| Test | Pole | White Bar |
|---|---|---|
| E1 | 4.4 | 11.4 |
| E2 | 9.3 | 13.7 |
| E3 | 2.6 | 11.2 |
| E4 | 3.0 | 6.7 |
| E5 | 3.8 | 15.8 |
| E6 | 5.8 | 9.8 |
| E7 | 3.7 | 8.9 |

Table 6.

Optimal combinations of gray-scale conversion modality (red, green, and blue channel, ch.) and number of segmentation classes for RGB and NIR images, for each bar (blue, red, and white) and experimental test (E6 and E7). Images are treated with the narrow crop.

| Bar | E6, RGB (ch. − classes) | E6, NIR (classes) | E7, RGB (ch. − classes) | E7, NIR (classes) |
|---|---|---|---|---|
| Blue | Red Ch. − 1 | 1 | Blue Ch. − 1 | 2 |
| Red | Green Ch. − 1 | 1 | Red Ch. − 1 | 2 |
| White | Blue Ch. − 1 | 4 | Blue Ch. − 1 | 6 |

Table 7.

MAE between benchmark and filtered lengths estimated from the entire sequence of RGB and NIR images of the colored and white bars treated with the narrow crop.

| Bar | E6 | E7 |
|---|---|---|
| Blue | 11.8 | 4.5 |
| Red | 7.0 | 5.6 |
| White | 7.0 | 3.9 |

Table 8.

Optimal combinations of gray-scale conversion modality (red, green, and blue channel, ch.) and number of segmentation classes for RGB and NIR images, for each bar (blue, red, and white) and experimental test (E6 and E7). Images are treated with the large crop.

| Bar | E6, RGB (ch. − classes) | E6, NIR (classes) | E7, RGB (ch. − classes) | E7, NIR (classes) |
|---|---|---|---|---|
| Blue | Blue Ch. − 1 | 2 | Blue Ch. − 1 | 4 |
| Red | Green Ch. − 5 | 2 | Red Ch. − 1 | 2 |
| White | Blue Ch. − 1 | 6 | Blue Ch. − 2 | 6 |

Table 9.

MAE between benchmark and filtered lengths estimated from the entire sequence of RGB and NIR images of the colored and white bars treated with the large crop.

| Bar | E6 MAE [mm] | E7 MAE [mm] |
|---|---|---|
| Blue | 17.9 | 8.6 |
| Red | 11.2 | 9.9 |
| White | 8.6 | 8.9 |