Displacement Measurement Based on UAV Images Using SURF-Enhanced Camera Calibration Algorithm

Abstract

1. Introduction

- An auxiliary reference image was fabricated, and SURF feature-point tracking combined with the MSAC (M-estimator sample consensus) algorithm was used to correct the UAV images, giving the method high computational accuracy and stability;

- The computational accuracy and stability of the algorithm under different UAV wobble modes were compared and analyzed, and guidance on operating the UAV during actual measurements is given.
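The image-correction step named in the first contribution can be illustrated with a minimal sketch. It assumes that feature matches between the reference image and a UAV frame are already available (SURF detection and matching are not reproduced here; the correspondences below are synthetic, with deliberate mismatches) and that the camera motion is modelled as a planar homography. The helper names (`project`, `homography_dlt`, `msac_homography`) and the 2-pixel threshold are illustrative assumptions, not the authors' code. MSAC differs from plain RANSAC only in its cost: instead of counting inliers, it sums the squared residual of each inlier and charges every outlier a constant penalty, so hypotheses that fit the inliers more tightly are preferred.

```python
import numpy as np

def project(H, pts):
    """Apply a 3x3 homography to Nx2 points (with perspective divide)."""
    ph = np.c_[pts, np.ones(len(pts))] @ H.T
    return ph[:, :2] / ph[:, 2:3]

def _normalize(pts):
    """Hartley normalisation: centroid to the origin, mean distance to sqrt(2)."""
    c = pts.mean(axis=0)
    s = np.sqrt(2) / np.sqrt(((pts - c) ** 2).sum(axis=1)).mean()
    T = np.array([[s, 0.0, -s * c[0]], [0.0, s, -s * c[1]], [0.0, 0.0, 1.0]])
    return project(T, pts), T

def homography_dlt(src, dst):
    """Direct linear transform: H such that dst ~ H @ src, from >= 4 correspondences."""
    ns, Ts = _normalize(src)
    nd, Td = _normalize(dst)
    rows = []
    for (x, y), (u, v) in zip(ns, nd):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # Null vector of A = right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = np.linalg.inv(Td) @ vt[-1].reshape(3, 3) @ Ts  # undo the normalisation
    return H / H[2, 2]

def msac_homography(src, dst, thresh=2.0, iters=500, seed=0):
    """MSAC: score each 4-point hypothesis by sum(min(e^2, thresh^2)); keep the cheapest."""
    rng = np.random.default_rng(seed)
    t2 = thresh ** 2
    best_cost, best_H = np.inf, None
    for _ in range(iters):
        idx = rng.choice(len(src), size=4, replace=False)
        H = homography_dlt(src[idx], dst[idx])
        err2 = ((project(H, src) - dst) ** 2).sum(axis=1)
        cost = np.minimum(err2, t2).sum()  # inliers pay e^2, outliers a constant t^2
        if cost < best_cost:               # NaN costs (degenerate samples) never win
            best_cost, best_H = cost, H
    inliers = ((project(best_H, src) - dst) ** 2).sum(axis=1) < t2
    return homography_dlt(src[inliers], dst[inliers]), inliers  # refit on all inliers

# Demo with synthetic correspondences standing in for SURF matches on stationary points.
rng = np.random.default_rng(1)
src = rng.uniform(0, 1000, size=(60, 2))
H_true = np.array([[0.999, -0.02, 5.0], [0.02, 0.999, -3.0], [1e-6, 2e-6, 1.0]])
dst = project(H_true, src)
dst[:10] += rng.uniform(20, 50, size=(10, 2))  # 10 gross mismatches
H_est, inliers = msac_homography(src, dst)
print("inliers:", int(inliers.sum()))
```

The final refit on all inliers is a common design choice: the 4-point hypothesis only selects the consensus set, and a least-squares DLT over that whole set gives a better-conditioned estimate when real matches are noisy.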

2. Materials and Methods

2.1. Displacement Measurement Using DIC with Fixed Camera

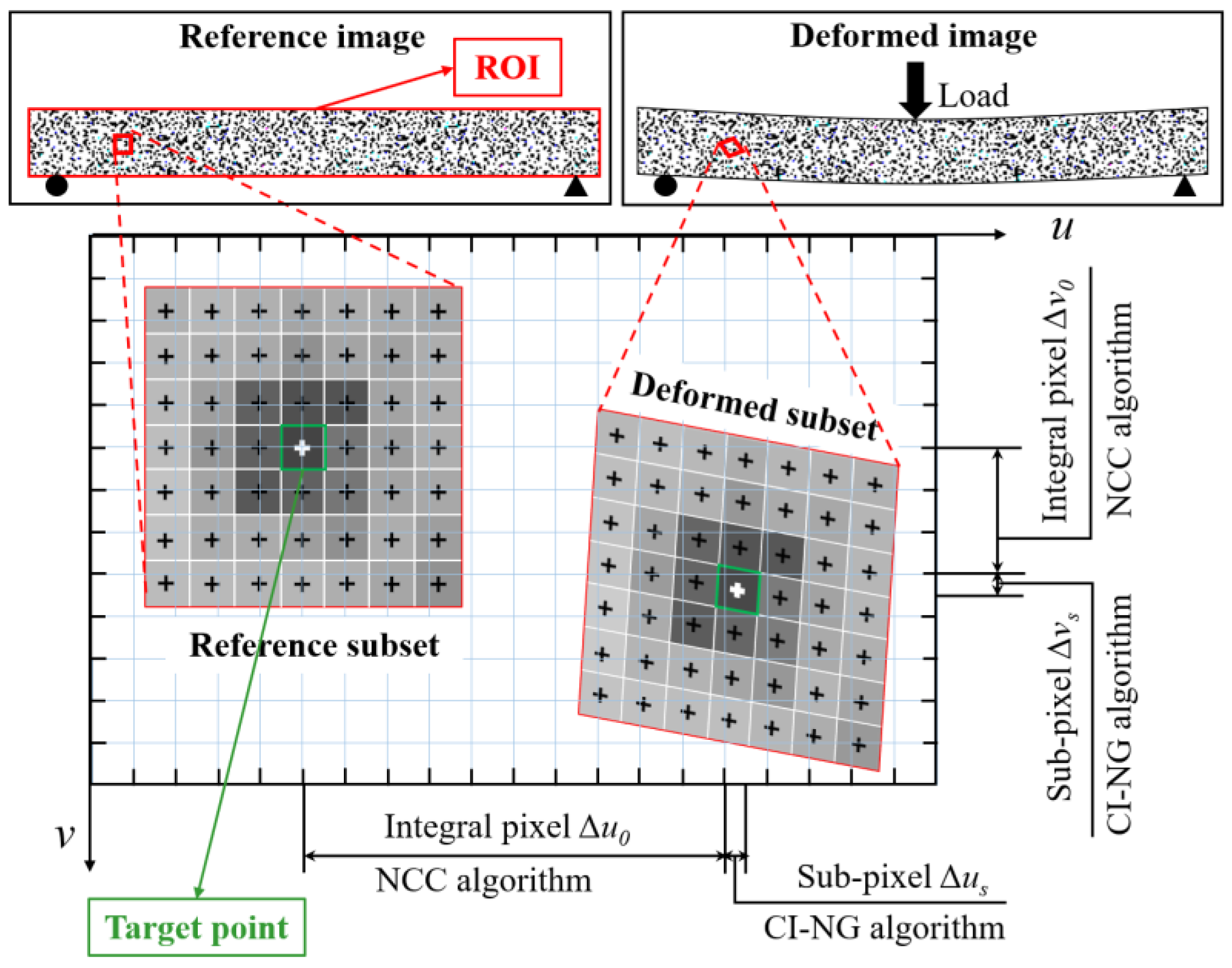

2.1.1. Integral Pixel and Sub-Pixel Matching

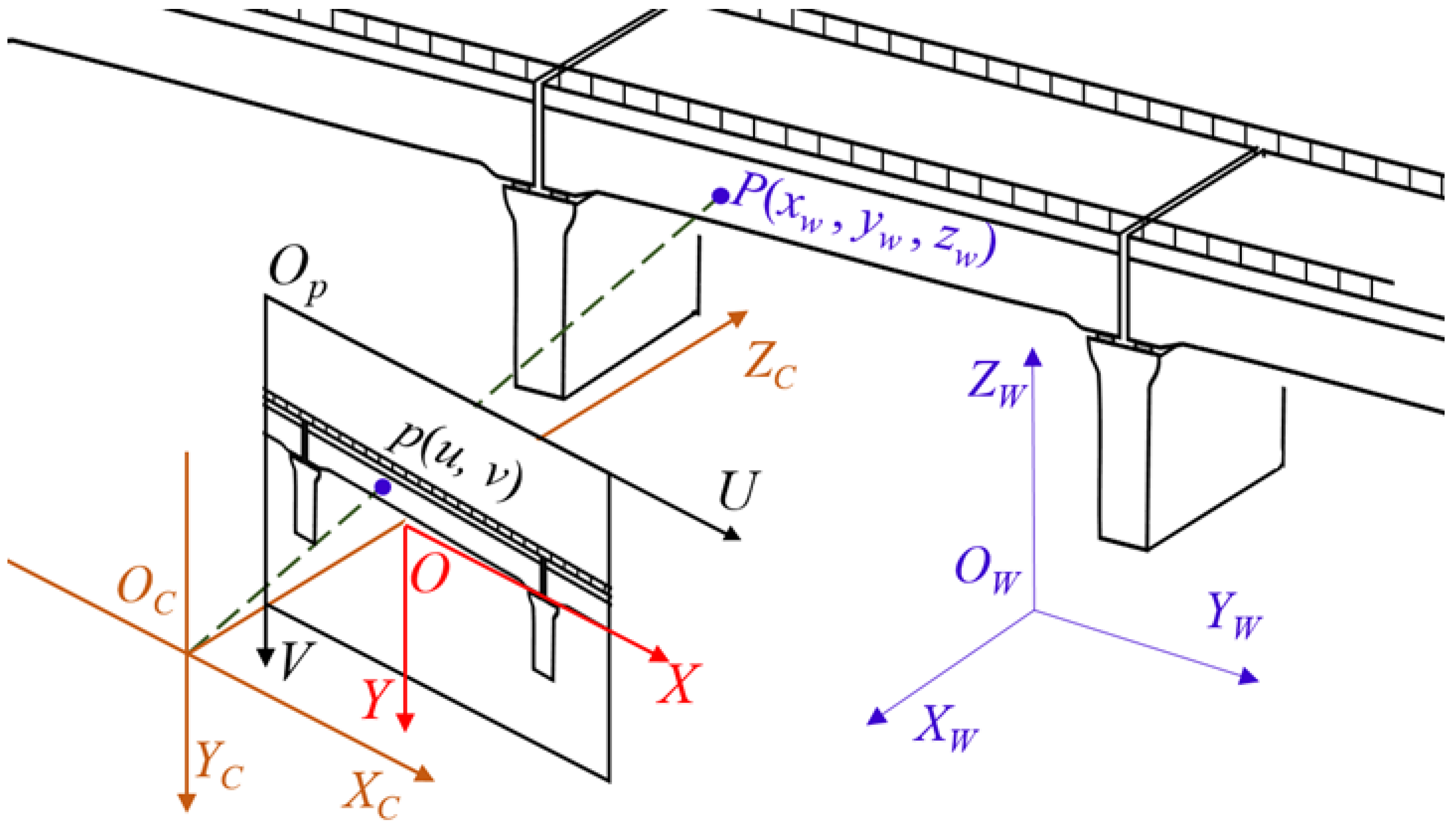
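As a hedged illustration of the two-stage matching named in this subsection heading, the sketch below performs an integer-pixel search with zero-mean normalised cross-correlation (ZNCC) and then refines the peak with a one-dimensional parabolic fit along each axis. The parabolic fit is a deliberately simple stand-in; iterative schemes such as the inverse-compositional Gauss-Newton (IC-GN) algorithm are widely used in DIC for sub-pixel accuracy, and this sketch is not claimed to be the authors' procedure. The subset size, search range, function names and the synthetic speckle image are all assumptions.

```python
import numpy as np

def zncc(a, b):
    """Zero-mean normalised cross-correlation of two equal-size patches (1 = perfect match)."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def parabola_peak(fm, f0, fp):
    """Offset of the vertex of the parabola through (-1, fm), (0, f0), (1, fp)."""
    d = fm - 2.0 * f0 + fp
    return 0.0 if d == 0 else 0.5 * (fm - fp) / d

def match_subset(ref, cur, center, half=10, search=6):
    """Return (u, v) = (column, row) displacement of the subset centred at `center`."""
    r, c = center
    tpl = ref[r - half:r + half + 1, c - half:c + half + 1]
    n = 2 * search + 1
    scores = np.empty((n, n))
    # 1) Integer-pixel search: evaluate ZNCC at every candidate shift.
    for i, dr in enumerate(range(-search, search + 1)):
        for j, dc in enumerate(range(-search, search + 1)):
            win = cur[r + dr - half:r + dr + half + 1, c + dc - half:c + dc + half + 1]
            scores[i, j] = zncc(tpl, win)
    i, j = np.unravel_index(np.argmax(scores), scores.shape)
    # 2) Sub-pixel refinement: 1-D parabola through the peak and its two neighbours.
    di = parabola_peak(scores[i - 1, j], scores[i, j], scores[i + 1, j]) if 0 < i < n - 1 else 0.0
    dj = parabola_peak(scores[i, j - 1], scores[i, j], scores[i, j + 1]) if 0 < j < n - 1 else 0.0
    return (j - search + dj, i - search + di)

# Demo: synthetic Gaussian speckle pattern translated by a known sub-pixel amount.
rng = np.random.default_rng(0)
yy, xx = np.mgrid[0:80, 0:80]
x0, y0 = rng.uniform(0, 80, 300), rng.uniform(0, 80, 300)

def speckle(u, v, sigma=2.0):
    """Sum of Gaussian speckles, all shifted by (u, v) pixels."""
    img = np.zeros((80, 80))
    for a, b in zip(x0, y0):
        img += np.exp(-((xx - a - u) ** 2 + (yy - b - v) ** 2) / (2 * sigma ** 2))
    return img

u, v = match_subset(speckle(0.0, 0.0), speckle(1.3, -2.6), center=(40, 40))
print(f"u = {u:.2f} px, v = {v:.2f} px (true: 1.30, -2.60)")
```

Separating the stages keeps the exhaustive search at integer resolution; only the three-point neighbourhood of the correlation peak is used to recover the fractional part of the displacement.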

2.1.2. Camera Calibration Algorithm for the Fixed Camera

2.2. Displacement Measurement Using DIC and SURF for Nonstationary Cameras

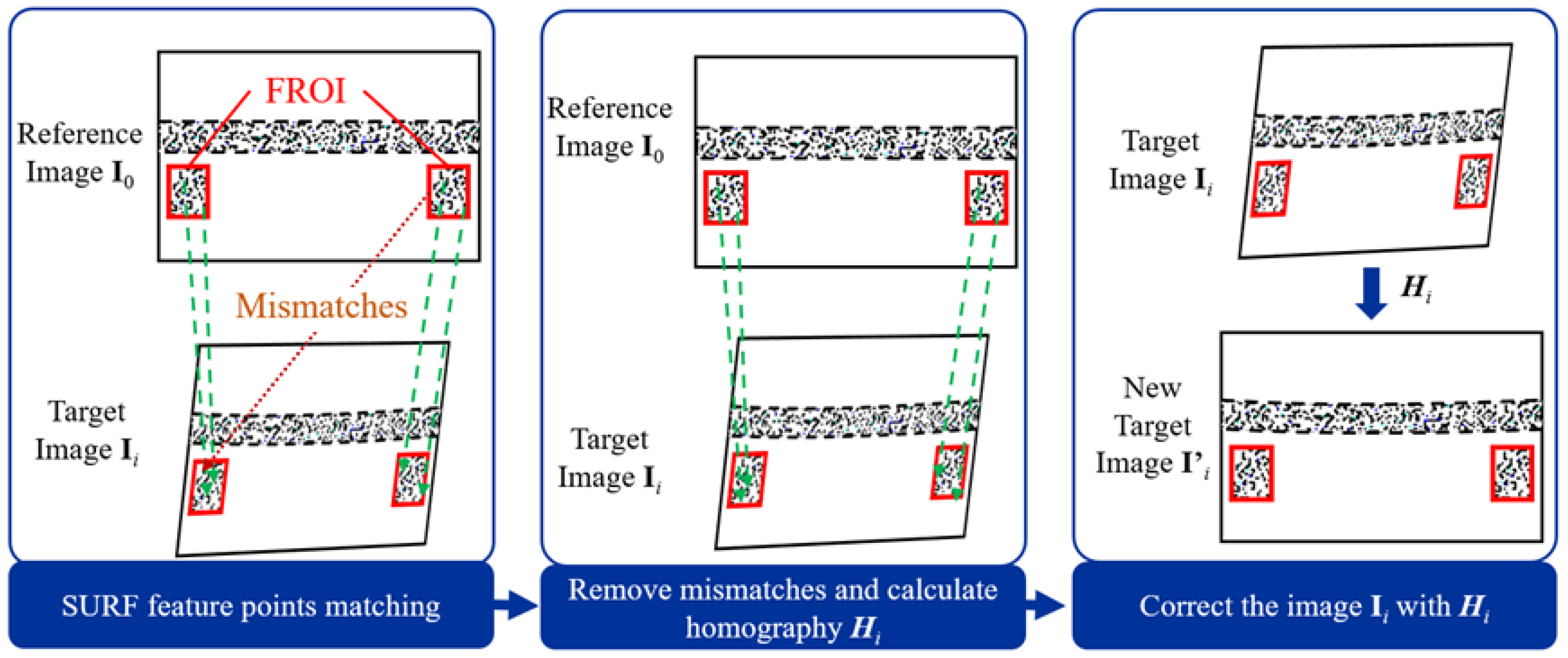

2.2.1. Principle of Image Correction
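A minimal sketch of the correction principle, assuming the monitored target and the stationary reference points lie on (or close to) one plane, so that the camera motion between the reference image and a UAV frame can be described by a single 3×3 homography. The matrix `H_cam` and the pixel values below are made up for illustration; in practice the homography would be estimated from stationary features rather than known exactly. The sketch shows that the raw pixel difference mixes camera motion with structural motion, while warping the current coordinates back into the reference frame isolates the structural displacement.

```python
import numpy as np

def apply_h(H, pts):
    """Map Nx2 pixel coordinates through a 3x3 homography, including the perspective divide."""
    ph = np.c_[pts, np.ones(len(pts))] @ H.T
    return ph[:, :2] / ph[:, 2:3]

# Hypothetical camera motion (reference frame -> current UAV frame): a 1-degree roll about
# the image origin, a translation of (12, -7) px and a mild perspective term.
theta = np.deg2rad(1.0)
c, s = np.cos(theta), np.sin(theta)
H_cam = np.array([[c, -s, 12.0],
                  [s, c, -7.0],
                  [3e-6, -2e-6, 1.0]])

target_ref = np.array([[400.0, 300.0]])  # target position in the reference image (px)
true_disp = np.array([[0.0, 1.5]])       # structural displacement in the reference frame (px)

# What the moving camera records: the displaced target seen through the camera motion.
target_cur = apply_h(H_cam, target_ref + true_disp)

raw_disp = target_cur - target_ref                     # polluted by camera motion
corrected = apply_h(np.linalg.inv(H_cam), target_cur)  # warp back into the reference frame
disp = corrected - target_ref                          # structural displacement only

print("raw:", raw_disp.round(3), "corrected:", disp.round(3))
```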

2.2.2. Feature Points Searching by SURF Algorithm

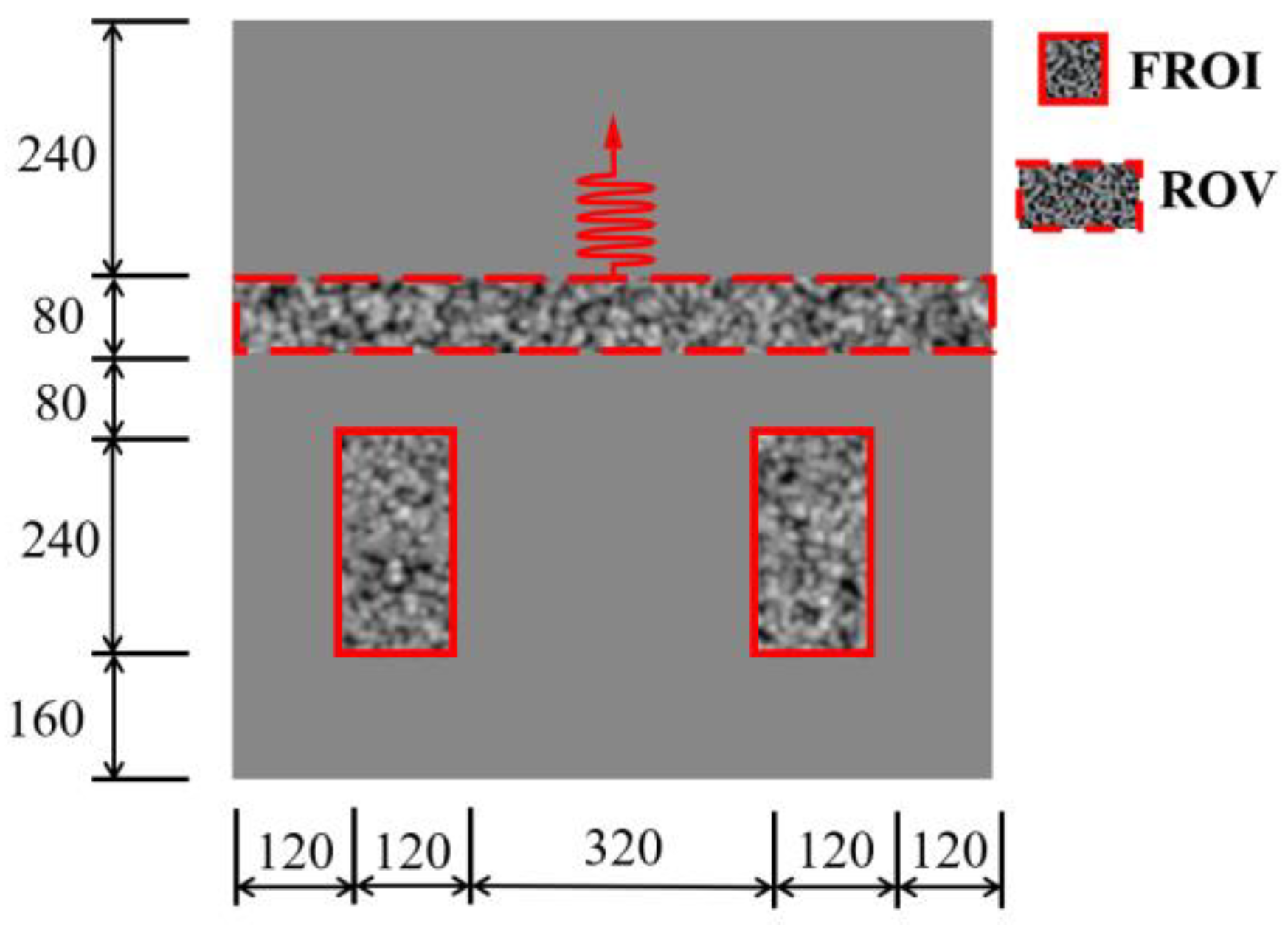
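To make the detector side of SURF concrete, the following sketch computes the box-filter approximation of the Hessian determinant at the smallest 9×9 filter size described by Bay et al. (2008), using an integral image so that each box sum costs four lookups. It covers only the blob response at a single scale; scale-space construction, non-maximum suppression, orientation assignment and the 64-dimensional descriptor are omitted, and the function names are hypothetical. The weight 0.9 on the mixed term D_xy is the relative weight given in the SURF paper.

```python
import numpy as np

def integral_image(img):
    """Summed-area table with a leading zero row/column: ii[r, c] = img[:r, :c].sum()."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1))
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii

def box(ii, r0, c0, r1, c1):
    """Sum of img[r0:r1, c0:c1] in O(1) using the integral image."""
    return ii[r1, c1] - ii[r0, c1] - ii[r1, c0] + ii[r0, c0]

def hessian_response_9x9(img):
    """Determinant of the box-filter Hessian approximation with 9x9 filters (lobe size 3)."""
    ii = integral_image(img.astype(float))
    h, w = img.shape
    resp = np.zeros((h, w))
    for r in range(4, h - 4):
        for c in range(4, w - 4):
            # Dyy: 9 rows x 5 cols, weights +1 / -2 / +1 in three 3-row bands
            dyy = box(ii, r - 4, c - 2, r + 5, c + 3) - 3 * box(ii, r - 1, c - 2, r + 2, c + 3)
            # Dxx: the same filter transposed
            dxx = box(ii, r - 2, c - 4, r + 3, c + 5) - 3 * box(ii, r - 2, c - 1, r + 3, c + 2)
            # Dxy: four 3x3 lobes separated by a one-pixel gap, +1 / -1 on the diagonals
            dxy = (box(ii, r - 3, c - 3, r, c) + box(ii, r + 1, c + 1, r + 4, c + 4)
                   - box(ii, r - 3, c + 1, r, c + 4) - box(ii, r + 1, c - 3, r + 4, c))
            # Responses normalised by the filter area (9 x 9 = 81)
            resp[r, c] = (dxx * dyy - (0.9 * dxy) ** 2) / 81.0 ** 2
    return resp

# Demo: one bright Gaussian blob centred at pixel (15, 15).
yy, xx = np.mgrid[0:31, 0:31]
blob = np.exp(-((xx - 15) ** 2 + (yy - 15) ** 2) / (2 * 1.5 ** 2))
resp = hessian_response_9x9(blob)
peak = np.unravel_index(np.argmax(resp), resp.shape)
print("peak at", tuple(int(k) for k in peak))
```

For a single Gaussian blob the response peaks at the blob centre, which is where a SURF keypoint would be detected at this scale; the integral image is what makes the filter cost independent of its size when larger scales are added.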

2.2.3. Auxiliary Reference Image Fabrication

2.2.4. Procedure of the Proposed Method

3. Results

3.1. Numerical Simulation

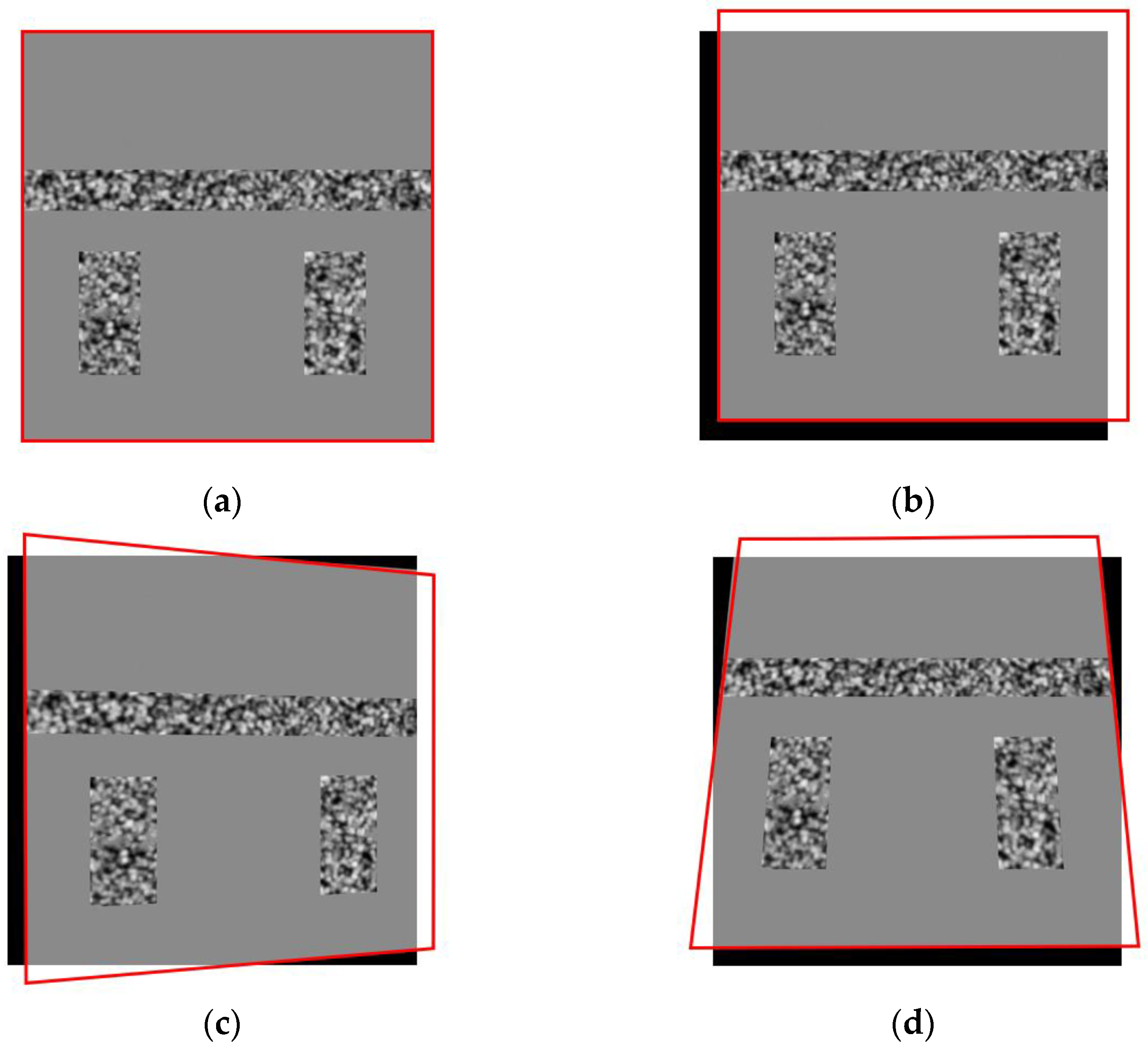

3.1.1. Images and Camera Motions Simulation

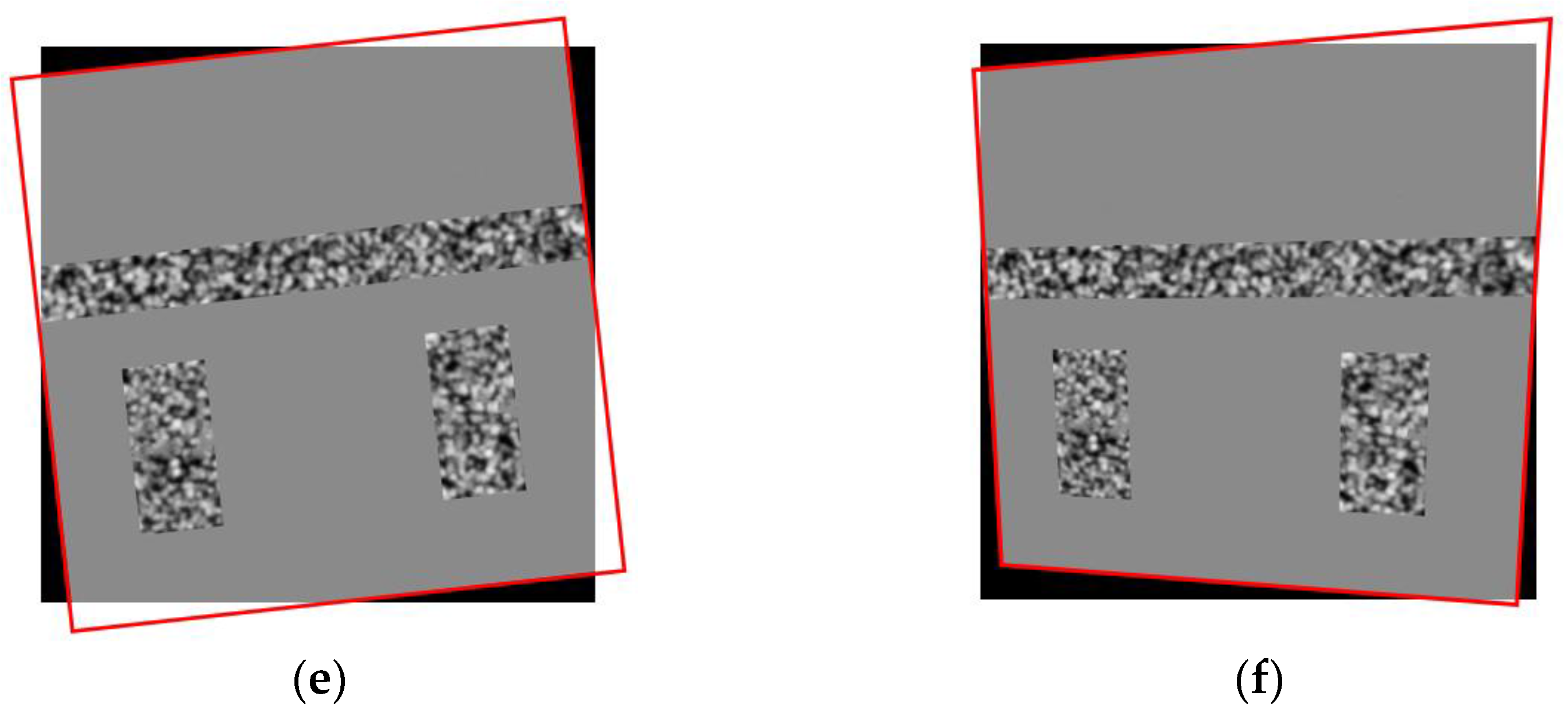

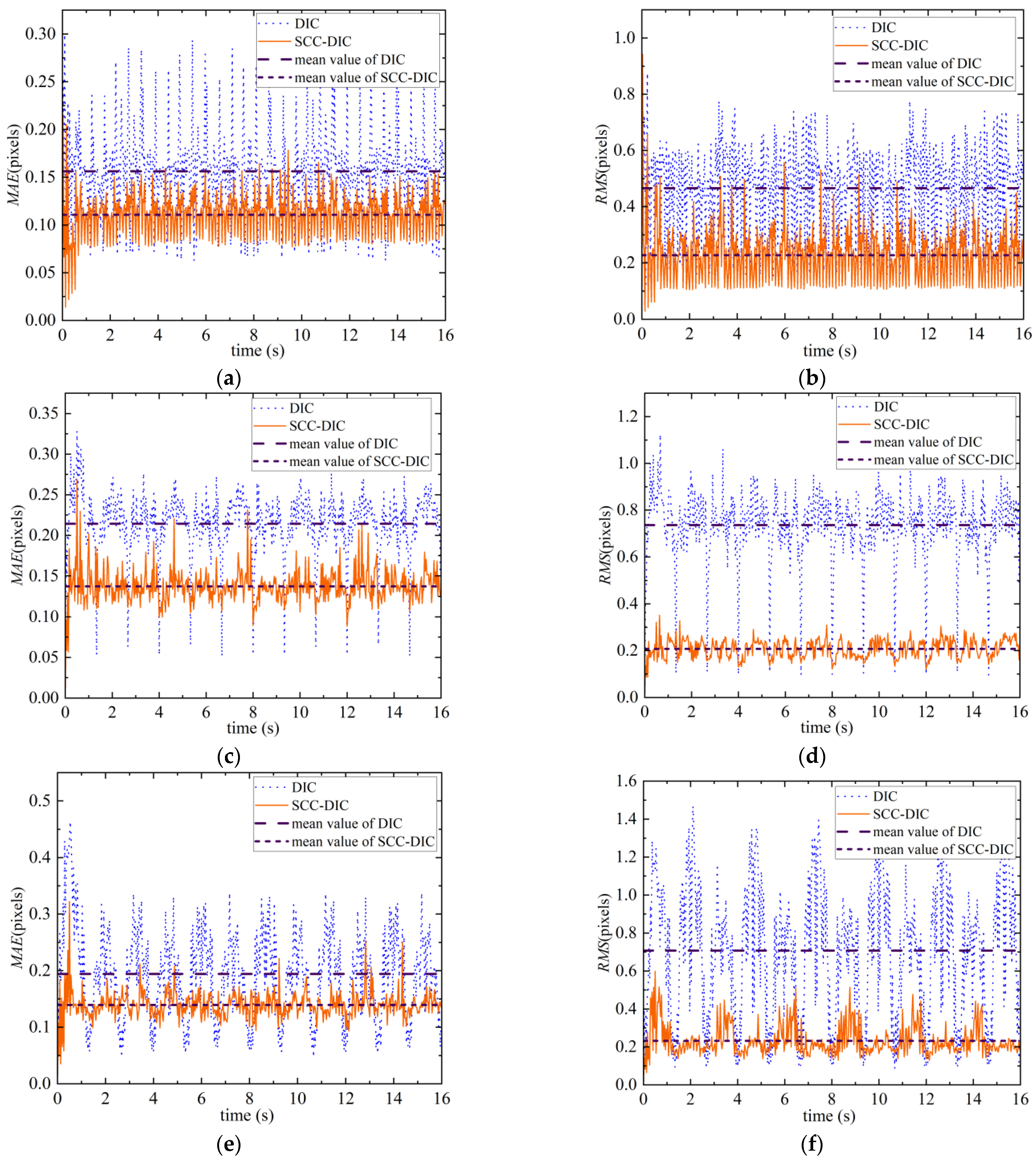

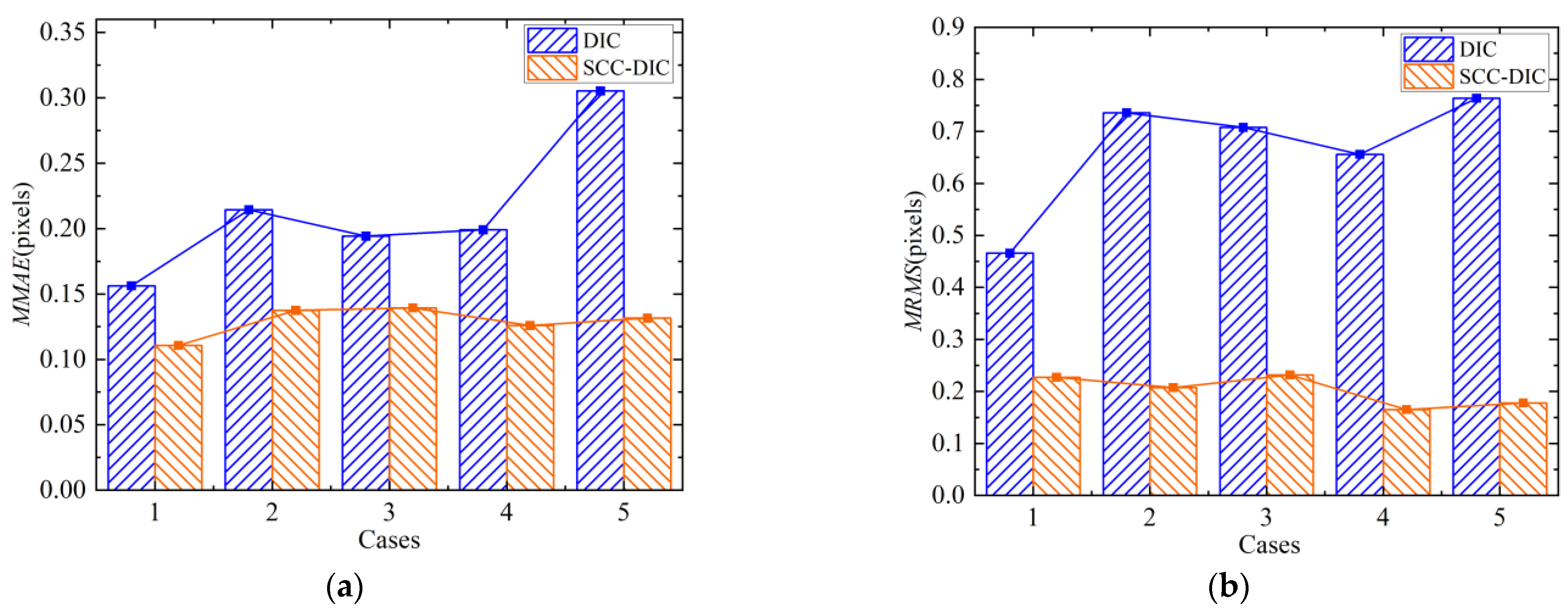

3.1.2. Results of Numerical Simulation

3.2. Experiment Verification

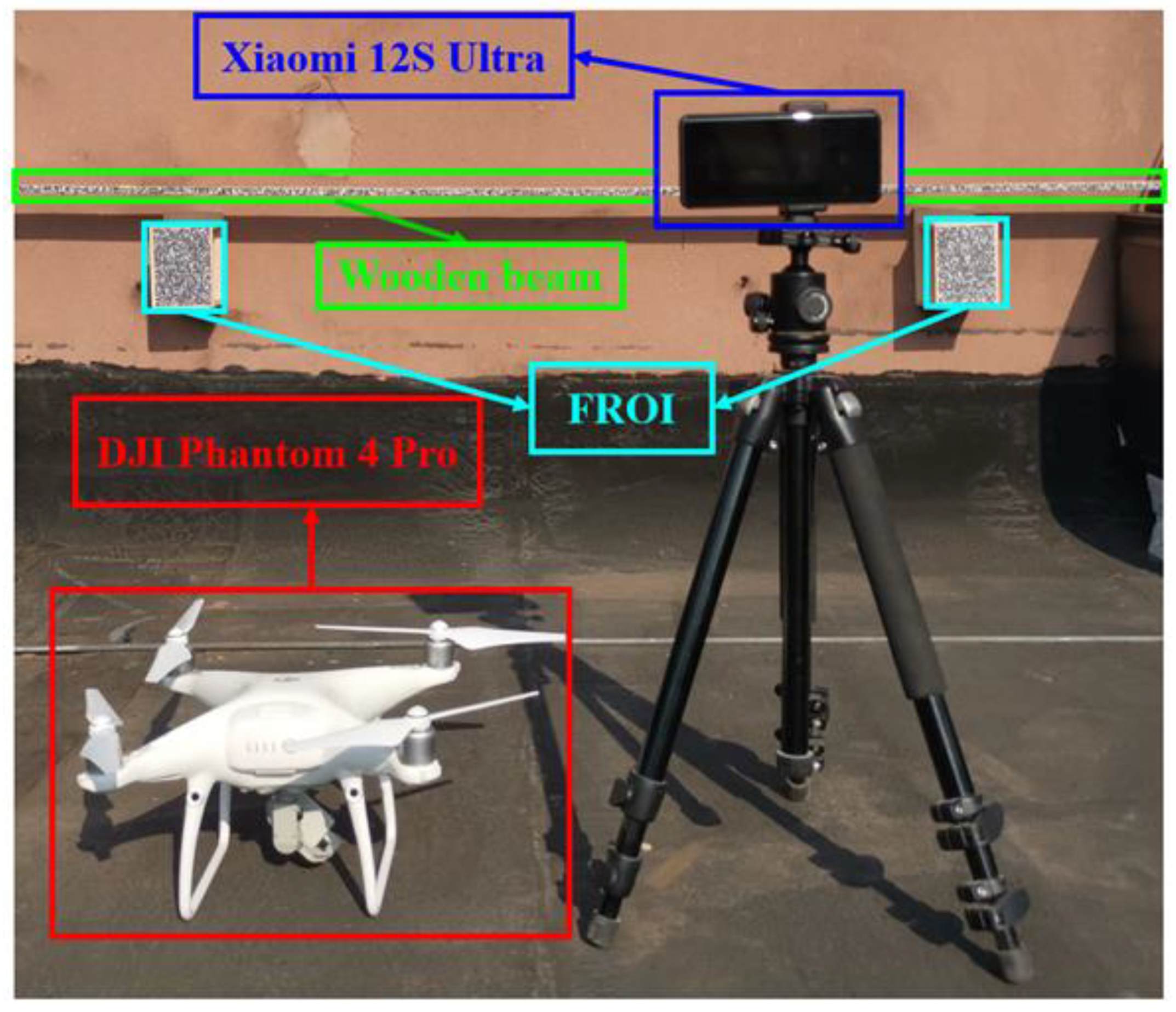

3.2.1. Experiment Setting

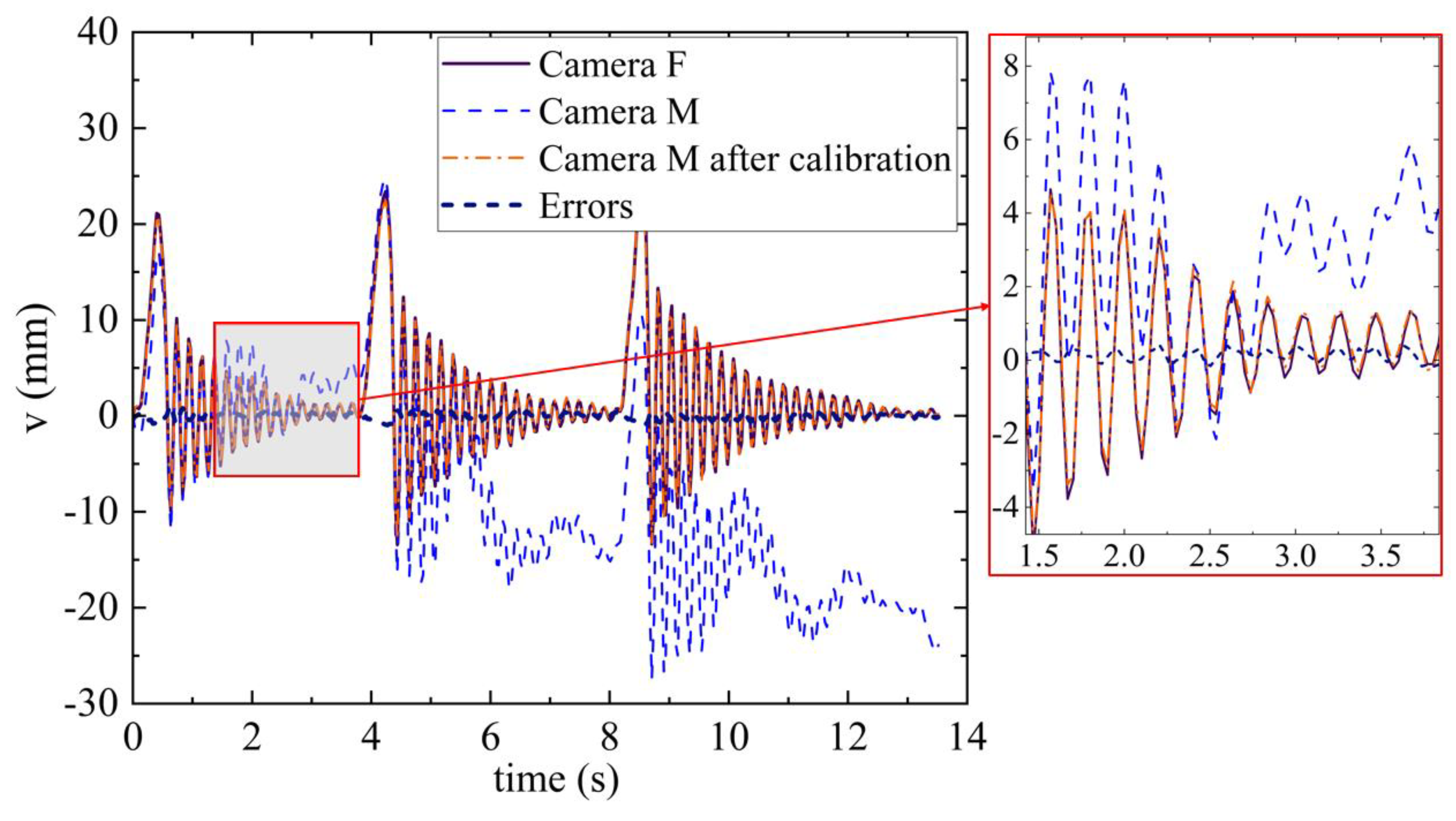

3.2.2. Experiment Results

4. Discussion

4.1. Discussion for Numerical Simulation

4.2. Discussion for Experiment

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, G.; He, C.; Zou, C.; Wang, A. Displacement Measurement Based on UAV Images Using SURF-Enhanced Camera Calibration Algorithm. Remote Sens. 2022, 14, 6008. https://doi.org/10.3390/rs14236008
