1. Introduction

Radar extended target tracking technology is widely used in military and civilian fields, e.g., maritime target tracking. Traditional target tracking methods perform well based on the point target hypothesis, which is appropriate, as each target produces only one measurement per frame. Tracking filters are used to smooth the measured data during the scans, e.g., Kalman filter (KF) provides an optimal estimation for linear systems based on Gaussian and Markov assumptions [

1]. Subsequently, for non-linear systems, several KF variants are proposed under the Gaussian assumption, including the Extended KF (EKF) [

2] and the Unscented KF (UKF) [

3]. Among them, the EKF ignores high order terms of the system’s state by linear approximation. The UKF reduces the estimation error by an unscented transform, which can effectively cope with highly non-linear environments. These methods use the accumulated detector data as input with the aim of iteratively calculating the posterior probability density function (PDF) of the target’s kinematic state. These techniques can be described within unified Bayes filter (BF) formalism [

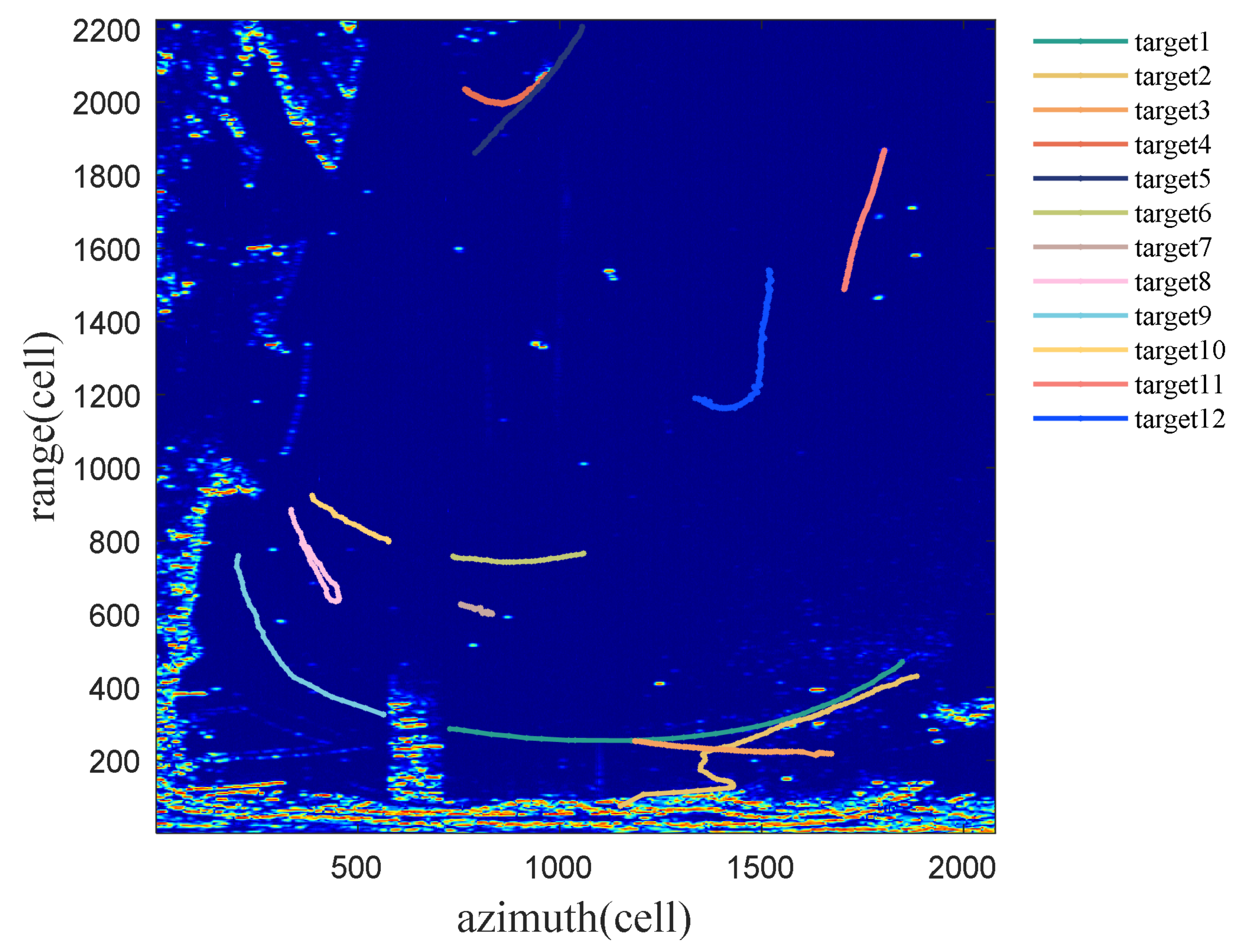

4]. However, traditional trackers are not suitable for an extended target, which may produce multiple measurements with noise, since the measurements of ship targets captured by the high-resolution radar working at scanning mode usually produce two-dimensional expansion in range and azimuth. The higher the radar’s range resolution, the more likely the extended target will generate measurements occupying multiple range resolution cells. In addition, if the radar working in scanning mode, the target may occupy multiple azimuth cells. Additionally, the resolution cell occupied by the target’s scattering point often changes due to the radar resolution, the reflection strength of the target or the change of the target’s attitude, which leads to the shape of some targets’ scattering point varying.

Recently, many methods have been proposed for extended target tracking [5,6,7,8,9]. For example, Koch [5,6] utilized the random matrix (RM) model to describe the extension of the target appearance, and derived the analytical solutions under the Bayesian framework. Granstrom [8] proposed the extended target-Gaussian inverse Wishart-probability hypothesis density (ET-GIW-PHD) filter based on the RM model under the PHD framework. In addition, Granstrom [9] estimated the target’s motion state and shape by recursively calculating the Gamma Gaussian inverse Wishart (GGIW) distribution of each target. Marcus et al. [10,11] proposed Random Hypersurface Models to model extended targets. Random Hypersurface Models describe the target’s profile in terms of a parametric set of geometric shape functions; the Gaussian process model is one typical example [12]. Since each target generates multiple measurements, it is necessary to divide the measurements among the targets. The algorithms mentioned above ensure reliability by traversing all divided measurements. However, measurement division performs poorly in challenging situations such as overtaking, head-on meeting, and interference clutter. In maritime scenes especially, strong sea clutter can interfere with the division of measurements. Clutter assigned to the target cell causes association errors in the above methods, which results in severe degradation of the tracking performance. Although the extended target tracking methods mentioned above can estimate the shape of the target, the tracker only uses the motion information of the targets. In radar target tracking, the tracker usually only utilizes the position observation provided by the detector because the radar only captures one or more scatter points of the target. Intuitively, taking more of the available information into account should improve the stability and accuracy of the tracker.

In addition to motion information, radar extended targets can also provide appearance information. Inspired by human visual instincts, some scholars have introduced the amplitude information of the target into tracking methods, which then use not only the motion information but also the appearance information of targets. Shui et al. [13] proposed a new approach that exploits three features of the received time series for the detection of small floating targets in sea clutter. The difference in the statistical characteristics of amplitude between targets and clutter is used to better discriminate between them [14,15,16,17]. However, these feature-aided tracking methods need to extract features manually, and it is difficult to find a stable feature suitable for various complex maritime scenes.

Deep learning methods, which have achieved remarkable results in computer vision, are also used by many scholars to extract the features of radar targets. Chen et al. [18] proposed to classify marine targets and sea clutter with convolutional neural networks, which suggested that the above statistical learning methods are suitable for marine target detection and tracking, and that the appearance of an extended target can provide useful features for detection. To make full use of the information contained in radar signals and improve the detection performance, Su et al. [19] utilized a graph convolutional network to detect marine radar targets. The above deep learning methods have achieved very appealing performance on real datasets. However, these methods require a large amount of data for offline training.

The kernel correlation filter (KCF) is an online learning method widely used in visual tracking [20], which can track the target in real time if given the training samples in the first frame. KCF [20] trains the filter based on the appearance information of the target in the current frame and the previous frame, and then performs a correlation calculation with a new frame to track the appearance of the target. In order to handle scale changes of the target, Danelljan et al. [21] proposed to learn discriminative correlation filters from a scale pyramid model, and Li et al. [22] gave a multiple-scale searching strategy. Zhou et al. [23] introduced the correlation filtering method into radar target tracking for the first time, and proposed the Multiple Kernelized Correlation Filter (MKCF) from the perspective of multi-kernel fusion, which performed satisfactorily in some challenging situations. This indicated that the correlation filter (CF) method is promising in radar target tracking [23]. Introducing the CF method into the traditional radar target tracking framework will help to improve the radar target tracking performance. However, most of these methods [23,24] treat the CF as a detector rather than a tracker, which is not the best way to combine the CF and the traditional radar target tracking framework.

To sum up, many existing methods for radar marine extended target tracking have been proven to be effective, but some of them suffer from poor robustness and incomplete use of observation information. Therefore, this paper attempts to make full, adaptive use of the limited observation information of radar targets. A new algorithm, the joint correlation filter and Bayes filter (JCBF), is proposed to track a single maritime radar extended target utilizing both the motion and appearance information. The proposed algorithm consists of three main parts: (1) the Bayes filter (BF) is used to estimate the target’s motion state; (2) the CF calculates the likelihood PDF of the target’s position using the real-time appearance features from the perspective of signal estimation; and (3) the final estimate of the target’s state is obtained by fusing the results of both filters under the maximum a posteriori estimation criterion. In addition, a specific scaling pool for radar signals is proposed to accommodate the extension of targets in distance and azimuth. Finally, experiments are performed on scanning data collected by an X-band marine radar to verify the proposed algorithm.

The main contributions of this paper are as follows. Firstly, a novel tracker specific to radar targets is designed by joining the BF and CF from an a posteriori probability perspective. Secondly, a strategy that adapts to the target’s shape is employed to track targets with varying shape. Finally, experiments on real data sets demonstrate that the proposed method improves the stability of the tracker in environments with a low signal-to-clutter/noise ratio.

Table 1 gives a list of notations used throughout the paper.

2. Proposed JCBF

This section begins with a brief introduction to the basic principles of BF [4] and KCF [20]. Next, the theoretical derivation and a specific implementation of the integrated BF and CF approach are given in Section 2.3 and Section 2.4, respectively, building on the viewpoint in [23] that the KCF can be analyzed from a probabilistic perspective. Following that, two strategies for improving the robustness of the tracker are given in Section 2.5: a feedback structure passes the fusion outcomes back to the BF and KCF to improve their stability, and a scale adaptive strategy improves the robustness of the tracker against shape-changing targets. Finally, the proposed method is summarized in Section 2.6.

2.1. Bayesian Framework

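Assume that the state equation and the observation equation of the system (linear or nonlinear) are as follows:

$$x_k = f(x_{k-1}) + u_{k-1} \tag{1}$$

$$z_k = h(x_k) + n_k \tag{2}$$

where $k$ denotes the time step, $x_k$ is the system state vector at time $k$, $f(\cdot)$ denotes the state transition map, $u_{k-1}$ is the process noise, $z_k$ is the measurement vector at time $k$, $h(\cdot)$ denotes the measurement map, and $n_k$ is the measurement noise. Note that $f(\cdot)$ and $h(\cdot)$ can denote both linear and nonlinear maps.

The Bayes filter uses the measurement set $Z_{1:k}$ ($Z_{1:k} = \{z_1, \ldots, z_k\}$) to calculate the PDF of the state at time $k$, i.e., $p(x_k \mid Z_{1:k})$. The steps of the Bayes filter are divided into prediction and update, as follows:

Assuming that $p(x_{k-1} \mid Z_{1:k-1})$ has been obtained at time $k-1$, the PDFs of the one-step predictions of the state and the measurement are (3) and (4), respectively:

$$p(x_k \mid Z_{1:k-1}) = \int p(x_k \mid x_{k-1})\, p(x_{k-1} \mid Z_{1:k-1})\, \mathrm{d}x_{k-1} \tag{3}$$

$$p(z_k \mid Z_{1:k-1}) = \int p(z_k \mid x_k)\, p(x_k \mid Z_{1:k-1})\, \mathrm{d}x_k \tag{4}$$

where $p(x_k \mid x_{k-1})$ and $p(z_k \mid x_k)$ are given by (1) and (2), respectively.

The posterior PDF is calculated using the new measurement $z_k$ obtained at time $k$ [4]:

$$p(x_k \mid Z_{1:k}) = \frac{p(z_k \mid x_k)\, p(x_k \mid Z_{1:k-1})}{p(z_k \mid Z_{1:k-1})} \tag{5}$$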
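The prediction and update steps described in this subsection can be illustrated on a discretised state space. The following is a minimal sketch, not the implementation used in this paper: the five-cell grid, the transition matrix, and the likelihood values are hypothetical toy numbers, and the function names `bayes_predict` and `bayes_update` are introduced here for illustration only.

```python
import numpy as np

def bayes_predict(prior, transition):
    """Prediction step on a discrete grid.

    prior[j]         : probability of state j given all earlier measurements
    transition[i, j] : probability of moving from state j to state i
    """
    return transition @ prior

def bayes_update(predicted, likelihood):
    """Update step: weight the prediction by the measurement likelihood and renormalise."""
    unnormalised = likelihood * predicted
    evidence = unnormalised.sum()  # predicted probability of the new measurement
    return unnormalised / evidence

# Toy example (hypothetical numbers): 5 cells, target tends to move one cell right.
n = 5
transition = np.zeros((n, n))
for j in range(n):
    transition[min(j + 1, n - 1), j] += 0.8  # move right (absorbed at the last cell)
    transition[j, j] += 0.2                  # stay in place
prior = np.array([1.0, 0.0, 0.0, 0.0, 0.0])
likelihood = np.array([0.1, 0.7, 0.1, 0.05, 0.05])  # sensor favours cell 1

predicted = bayes_predict(prior, transition)
posterior = bayes_update(predicted, likelihood)
```

The EKF used later in the paper replaces the grid by a Gaussian approximation, so only a mean and a covariance need to be propagated.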

2.2. Kernel Correlation Filter

KCF [20] solves for the classifier by means of ridge regression. In the initial linear regression model, the optimization objective function is (6). Given the training feature-label pairs, the loss function is as in (7):

Assuming that denotes n training samples and denotes their labels, it can be derived that w has a closed-form solution .

To improve the classifier’s performance, the data in the original space are mapped to a high-dimensional space, with the weights w expressed as linear combinations of the input samples:

When putting the w in the optimization process, the detailed form of function is not necessary, only the inner product of is needed, i.e., .

By introducing the kernel function and substituting it and the weights (8) into (7), the optimal solution can be converted to (9):

where

,

K is a circulant matrix with

dimensions, and

.

Since K is a circulant matrix, (9) can be converted into

, where

denotes the first row of the circulant matrix K [20].

After training the model using the sample g in the last frame, the response of the new input sample g′ in the new frame is computed by:

Let v be the position variable of the target at a certain time step. The position v of the target is the position of the maximum value in the response, which is given by:

The model is updated by (

12), where

and

denote the training sample and the weights at time

t,

and

denote the new sample and the new weights, and

denotes the forgetting rate [23].
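The training, detection, and model-update steps of this subsection can be sketched with NumPy as follows. This follows the standard single-channel Fourier-domain KCF formulation of [20] with a Gaussian kernel; it is an illustrative sketch rather than the code used in this paper. The function names, the kernel bandwidth `sigma`, the regulariser `lam`, the forgetting rate `rate`, and the synthetic 32 × 32 blob are all assumptions made for the example.

```python
import numpy as np

def gaussian_correlation(a, b, sigma):
    """Gaussian kernel between patch `a` and every cyclic shift of patch `b`."""
    # Circular cross-correlation of a and b, computed with the FFT.
    cross = np.fft.ifft2(np.conj(np.fft.fft2(a)) * np.fft.fft2(b)).real
    dist2 = np.maximum((a ** 2).sum() + (b ** 2).sum() - 2.0 * cross, 0.0)
    return np.exp(-dist2 / (sigma ** 2 * a.size))

def gaussian_labels(shape, width=2.0):
    """Needle-shaped Gaussian labels with the peak at index (0, 0), wrapped cyclically."""
    rows = np.fft.fftfreq(shape[0]) * shape[0]  # offsets 0, 1, ..., -2, -1
    cols = np.fft.fftfreq(shape[1]) * shape[1]
    rr, cc = np.meshgrid(rows, cols, indexing="ij")
    return np.exp(-0.5 * (rr ** 2 + cc ** 2) / width ** 2)

def train(sample, labels, sigma=0.5, lam=1e-4):
    """Kernel ridge regression solved in the Fourier domain; returns the dual weights."""
    k = gaussian_correlation(sample, sample, sigma)
    return np.fft.fft2(labels) / (np.fft.fft2(k) + lam)

def detect(alpha_hat, template, new_sample, sigma=0.5):
    """Response map; the index of its peak is the cyclic shift of the target."""
    k = gaussian_correlation(template, new_sample, sigma)
    return np.fft.ifft2(alpha_hat * np.fft.fft2(k)).real

def update(old, new, rate=0.02):
    """Linear interpolation of a model component with a forgetting rate (assumed form)."""
    return (1.0 - rate) * old + rate * new

# Toy check (hypothetical data): a synthetic blob shifted by (3, -2) cells.
rr, cc = np.meshgrid(np.arange(32), np.arange(32), indexing="ij")
patch = np.exp(-0.5 * ((rr - 16) ** 2 + (cc - 16) ** 2) / 3.0 ** 2)
alpha_hat = train(patch, gaussian_labels(patch.shape))
shifted = np.roll(patch, shift=(3, -2), axis=(0, 1))
response = detect(alpha_hat, patch, shifted)
peak = tuple(int(i) for i in np.unravel_index(np.argmax(response), response.shape))
```

Because the labels peak at index (0, 0), the peak of `response` directly gives the displacement of the target modulo the patch size; a negative shift appears wrapped around at the far edge of the map.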

2.3. Joint Correlation and Bayes Filtering

In order to make full use of the target’s appearance and motion information to estimate the target’s position , the posterior PDF of at time k is calculated utilizing both the obtained position measurement and the appearance measurement , where () denotes the appearance measurements set.

The further discussion is much simplified by additionally assuming that the position of the target and the appearance feature of the target are independent of each other. Such an assumption seems to be justified in many practical cases, and it gives:

Therefore, the posterior PDF of the state

is given by:

Zhou et al. [23] were the first to analyze the KCF from the perspective of maximum likelihood. They propose that the response (10) can be viewed as a more advanced likelihood function whose strength indicates the probability of the target being at each position

. Hence, given a position

, the probability of

being the center of the target measured by KCF is the individual likelihood

, where the

denotes the appearance measurements obtained by KCF. Treating the response of KCF as an appearance measurement captured by one sensor, KCF can be considered as a filter that utilizes the target’s real-time appearance measurements

to calculate the likelihood PDF of the target’s position

.

In KCF, the response matrix is equal to the individual likelihood distribution of

[

23], thus

in (

15) can be calculated by KCF:

As discussed in

Section 2.1, BF calculates the posterior PDF

of

using the obtained measurement

and

.

The state estimation

of the target’s position is a fraction of

, thus

can be decomposed into two components as

, where

. As a result,

is given by (

17):

Finally,

can be calculated by (

15)–(

17). As a result, the maximum a posteriori estimation (MAP) of

is given by (

18):

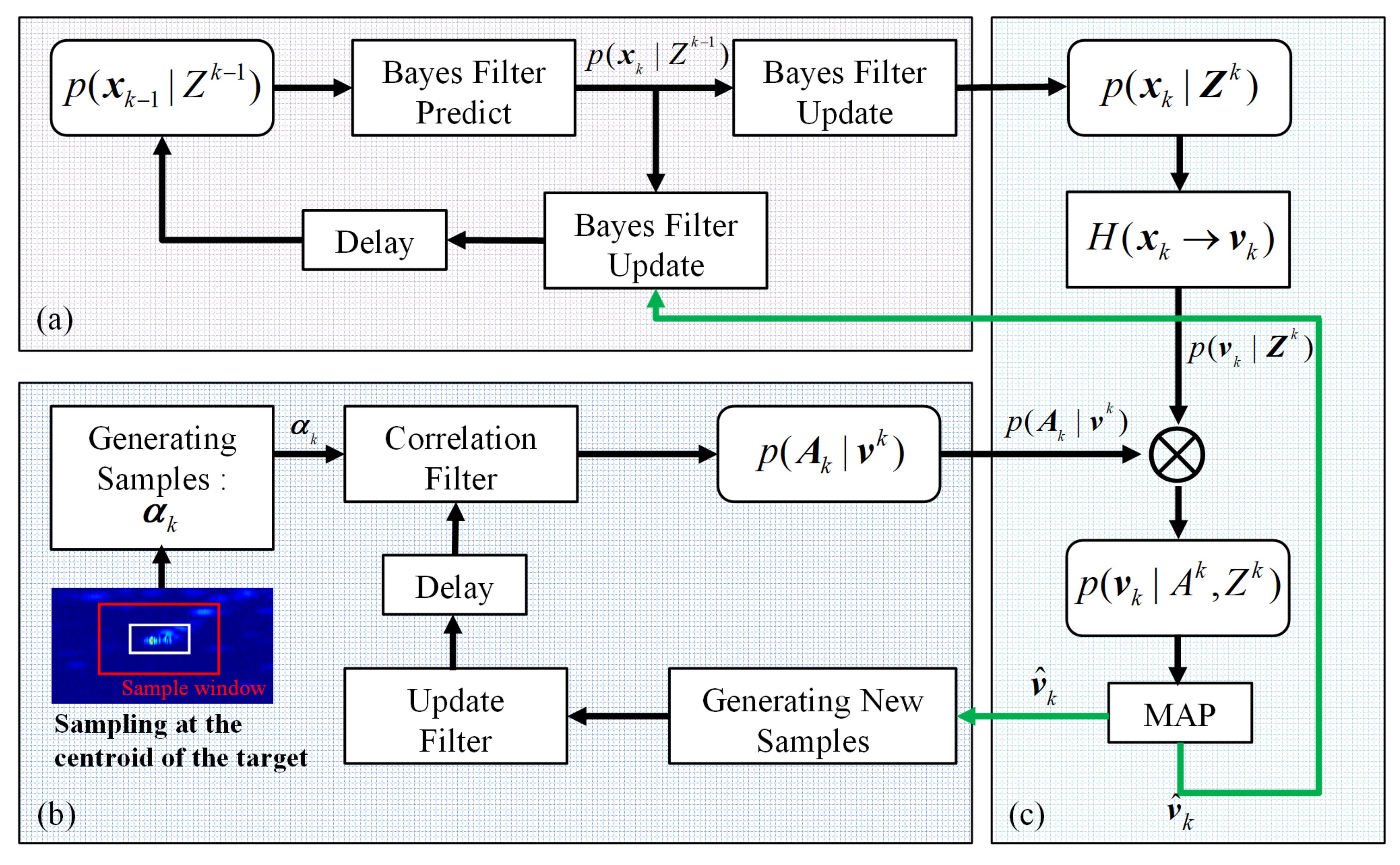

Both the appearance information and the motion information are utilized in (18) to estimate the target’s position by joining the Bayes filter and the correlation filter. The overall flow of JCBF is shown in Figure 1, in which a fusion module (Figure 1c) combines the BF module (Figure 1a) and the CF module (Figure 1b).

2.4. Specific Implementation of JCBF

This section gives a specific implementation of JCBF. Since radar measurements are generally obtained from the distance and bearing of the target in the polar coordinate system, this paper constructs the equations of state and observation in the polar coordinate system and implements the proposed tracking algorithm.

2.4.1. Bayes Filter Module

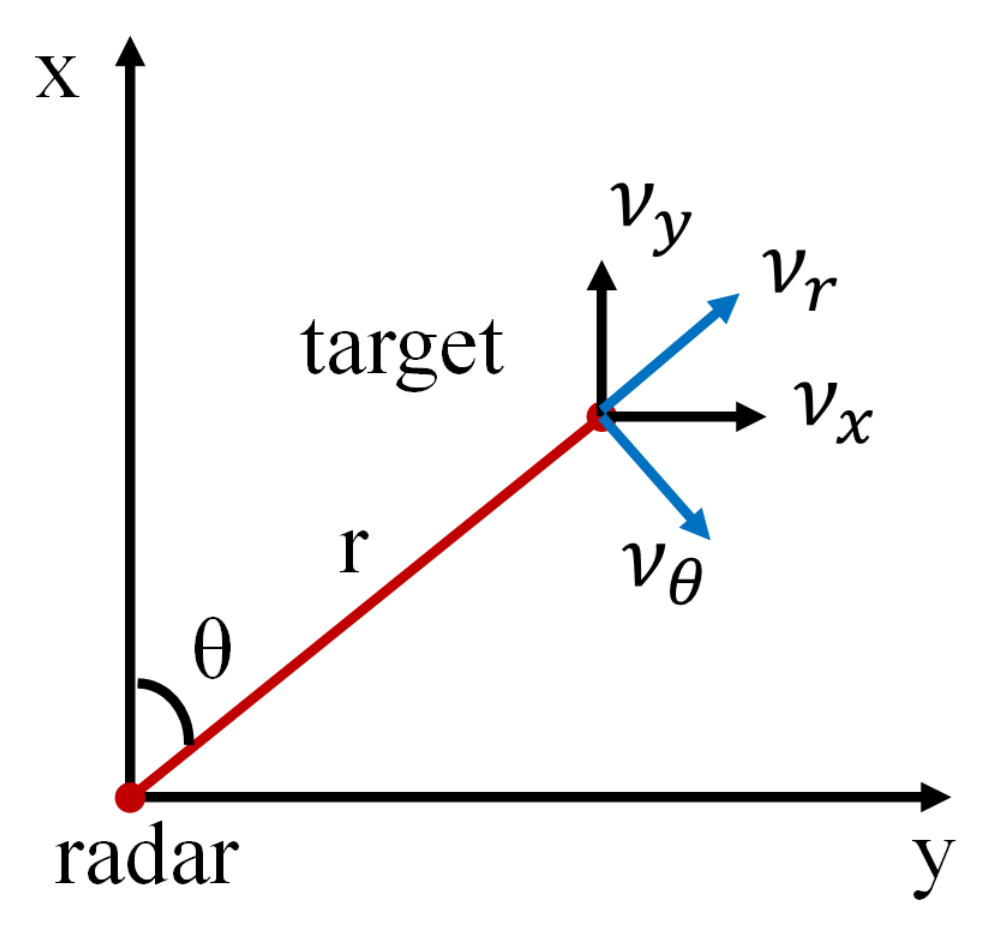

In the polar coordinate, the motion state of the target can be represented by , where r denotes the radial range between the target and the radar, θ denotes the azimuth, denotes the radial velocity and denotes the tangential velocity. The measurement can be represented by .

Due to the weak manoeuvrability of most maritime targets, and for the sake of simplicity, this paper assumes that the target’s motion conforms to a Nearly Constant Velocity (NCV) model in Cartesian coordinates. Assuming that the radar’s scanning period is T, the above motion model and the observation equation in the polar coordinate system can be represented as follows [25]:

where

Since the motion Equation (19) is a nonlinear model, this paper utilizes the EKF [2] to estimate the state. The detailed calculation process of the EKF and the derivation of (21) are given in Appendix A and Appendix B, respectively.
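To make the nonlinearity concrete, the sketch below propagates a polar state through one scan under a constant-velocity assumption in Cartesian coordinates (noise omitted). It is an illustration only and is not claimed to match the paper’s Equations (19)–(21): the function name `ncv_predict_polar`, the state ordering (range, azimuth, radial velocity, tangential velocity), and the azimuth convention (radians, measured counter-clockwise from the x axis) are assumptions.

```python
import math

def ncv_predict_polar(r, theta, v_r, v_t, T):
    """Propagate (range, azimuth, radial velocity, tangential velocity) by T seconds,
    assuming constant velocity in Cartesian coordinates and no process noise."""
    # Polar -> Cartesian position and velocity.
    x, y = r * math.cos(theta), r * math.sin(theta)
    vx = v_r * math.cos(theta) - v_t * math.sin(theta)
    vy = v_r * math.sin(theta) + v_t * math.cos(theta)
    # Constant-velocity step over one scan period.
    x, y = x + vx * T, y + vy * T
    # Cartesian -> polar (undefined if the target sits exactly on the radar, r_new == 0).
    r_new = math.hypot(x, y)
    theta_new = math.atan2(y, x)
    v_r_new = (x * vx + y * vy) / r_new  # velocity component along the line of sight
    v_t_new = (x * vy - y * vx) / r_new  # velocity component across the line of sight
    return r_new, theta_new, v_r_new, v_t_new

# A purely radial target stays on its bearing; a purely tangential one drifts in azimuth.
radial = ncv_predict_polar(1000.0, 0.0, 10.0, 0.0, 2.0)
crossing = ncv_predict_polar(1000.0, 0.0, 0.0, 10.0, 100.0)
```

Although the Cartesian motion is linear, the mapping between successive polar states is not, which is why a linearised filter such as the EKF is needed.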

Given the measurements

, EKF iteratively calculates the first moment and second moment of

, and approximates the posterior PDF of

to a Gaussian distribution:

where

denotes the mean of

and

denotes the covariance matrix. The expressions for

and

are displayed in

Appendix A.

As discussed in

Section 2.3, the key is to solve for the posterior PDF of

as (

15). In the motion model (

19),

denotes the range and azimuth where the target is located, i.e.,

. Consequently,

can be decomposed into two components as

, where

. Plugging (

23) into (

17), the posterior PDF of the target’s position

can be derived as:

where

,

and

.

2.4.2. Correlation Filter Module

The training labels of KCF are two-dimensional (2D) needle-shaped Gaussians [20,23]. If the test sample is similar to the training samples, the response of KCF given by () is a needle-shaped Gaussian similar to the training labels. Thus, the position can be assumed to obey a 2D Gaussian distribution; this assumption simplifies the further discussion and is reasonable in most cases. As discussed in Section 2.3, given the

, the likelihood PDF of position variable

is computed by:

where

and

. For KCF,

denotes the location of the maximum value

in the response map, and

is inversely proportional to the

, i.e.,

.

2.4.3. Fusion Module

This module calculates the maximum posterior estimate of the target location by fusing the results of the above two modules.

is given by:

where

According to (18), the maximum a posteriori estimate of

can be derived as (

30) and the covariance matrix of estimation errors is

.
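When both the BF posterior of the position and the CF likelihood are Gaussian, as assumed in Sections 2.4.1 and 2.4.2, their product is again Gaussian, and the MAP estimate is its mean. The sketch below shows this standard product-of-Gaussians fusion; it is offered as an illustration of the idea and is not claimed to reproduce the exact expressions of this section. The function name `fuse_gaussians` and the toy numbers are assumptions.

```python
import numpy as np

def fuse_gaussians(mean_bf, cov_bf, mean_cf, cov_cf):
    """Fuse two Gaussian position estimates by multiplying their densities.

    Returns the mean (which is also the MAP estimate) and covariance of the product.
    """
    info_bf = np.linalg.inv(cov_bf)  # information (inverse covariance) of the BF estimate
    info_cf = np.linalg.inv(cov_cf)  # information of the CF estimate
    cov = np.linalg.inv(info_bf + info_cf)
    mean = cov @ (info_bf @ mean_bf + info_cf @ mean_cf)
    return mean, cov

# Equal confidence: the fused position is the midpoint and the uncertainty halves.
mean_eq, cov_eq = fuse_gaussians(np.array([0.0, 0.0]), np.eye(2),
                                 np.array([2.0, 2.0]), np.eye(2))

# A sharp CF response (small covariance) dominates a vague BF prediction.
mean_cf_dom, _ = fuse_gaussians(np.array([0.0, 0.0]), 100.0 * np.eye(2),
                                np.array([2.0, 2.0]), 0.01 * np.eye(2))
```

The fused covariance is never larger than either input covariance, which is the sense in which combining the motion and appearance information sharpens the estimate.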

2.5. Strategies to Improve the Stability of Tracker

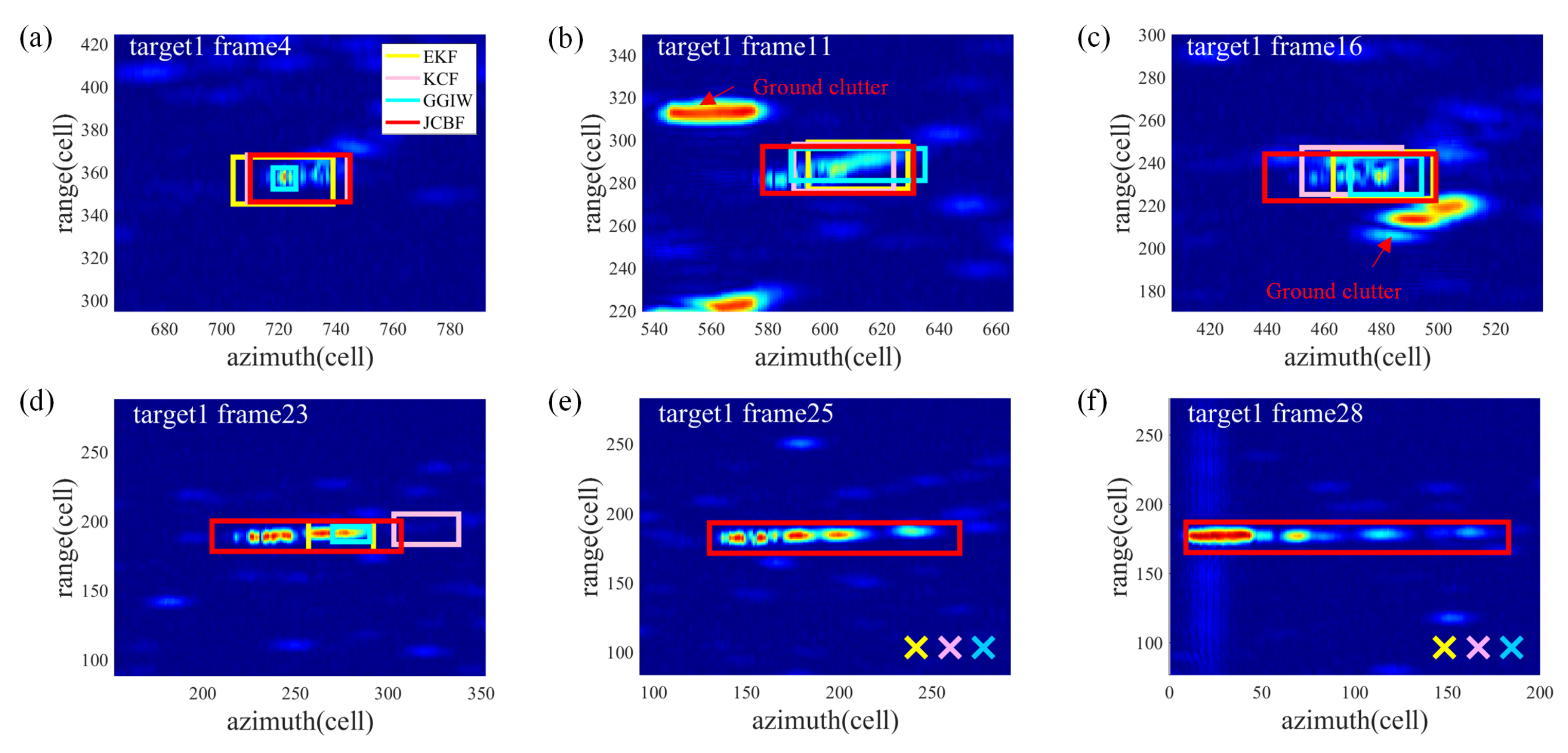

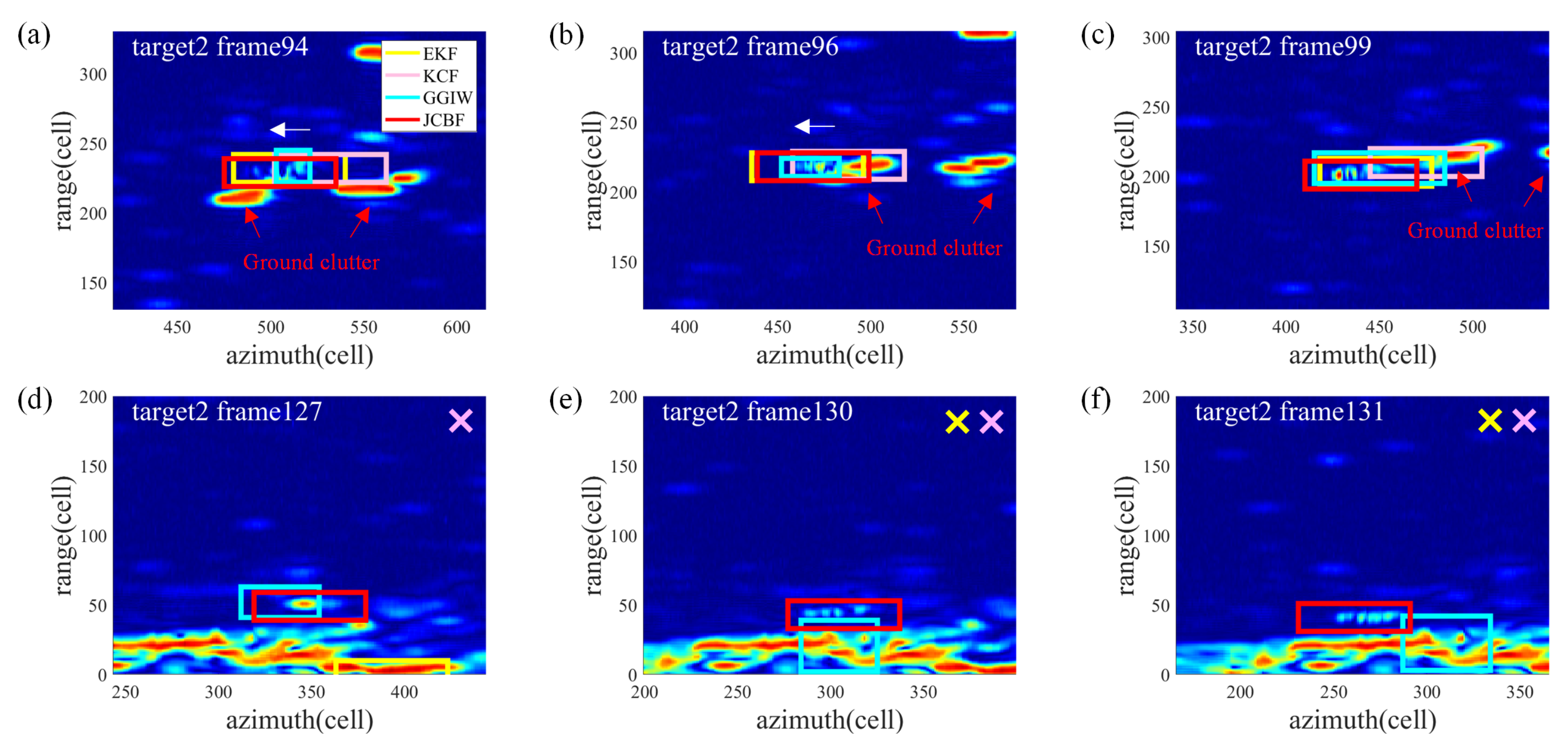

2.5.1. A Feedback Structure in the JCBF

A feedback module is introduced into the proposed method to improve the stability. The fused results given by JCBF are treated as corrected measurements to re-update the BF and CF. The green lines in Figure 1 illustrate this process.

After capturing the target at time

k, the correlation filter usually updates the model with new sample, as shown in (

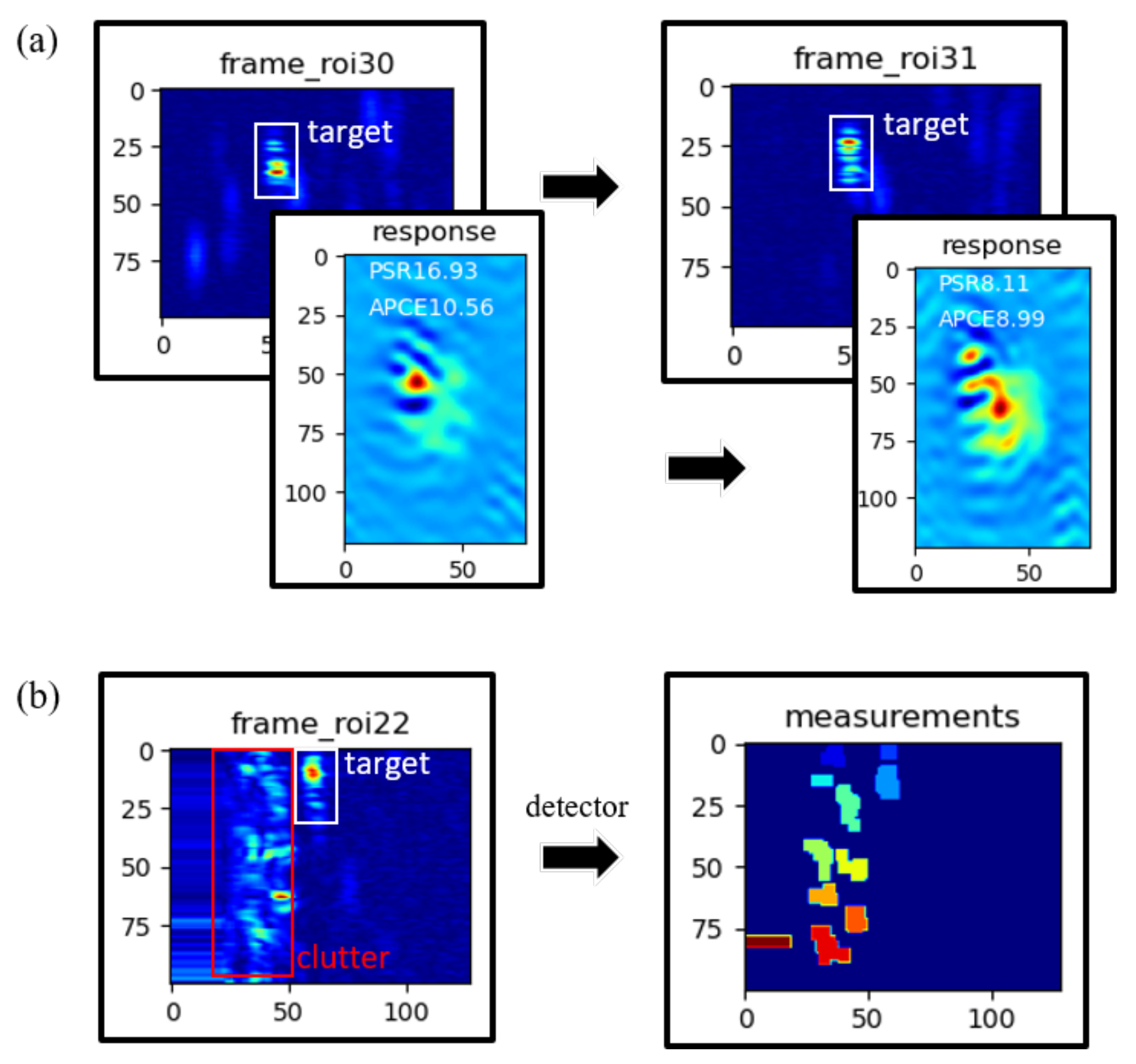

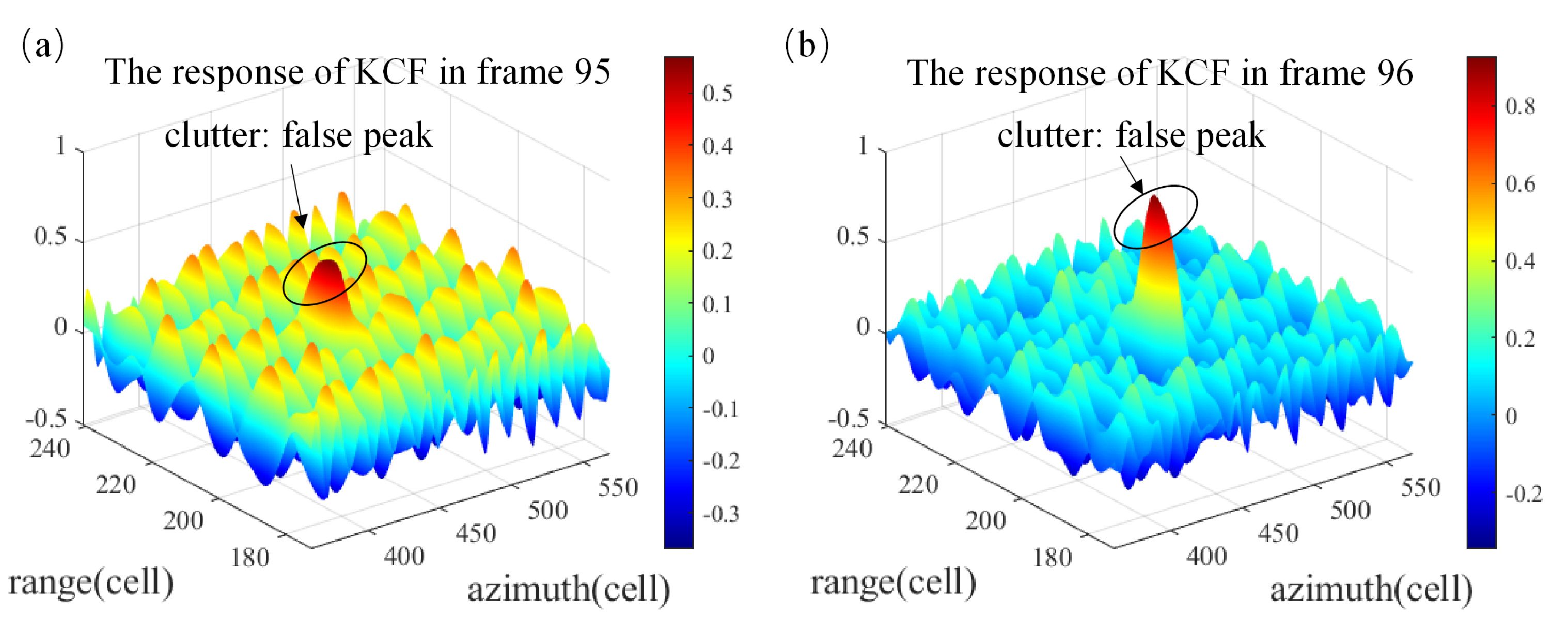

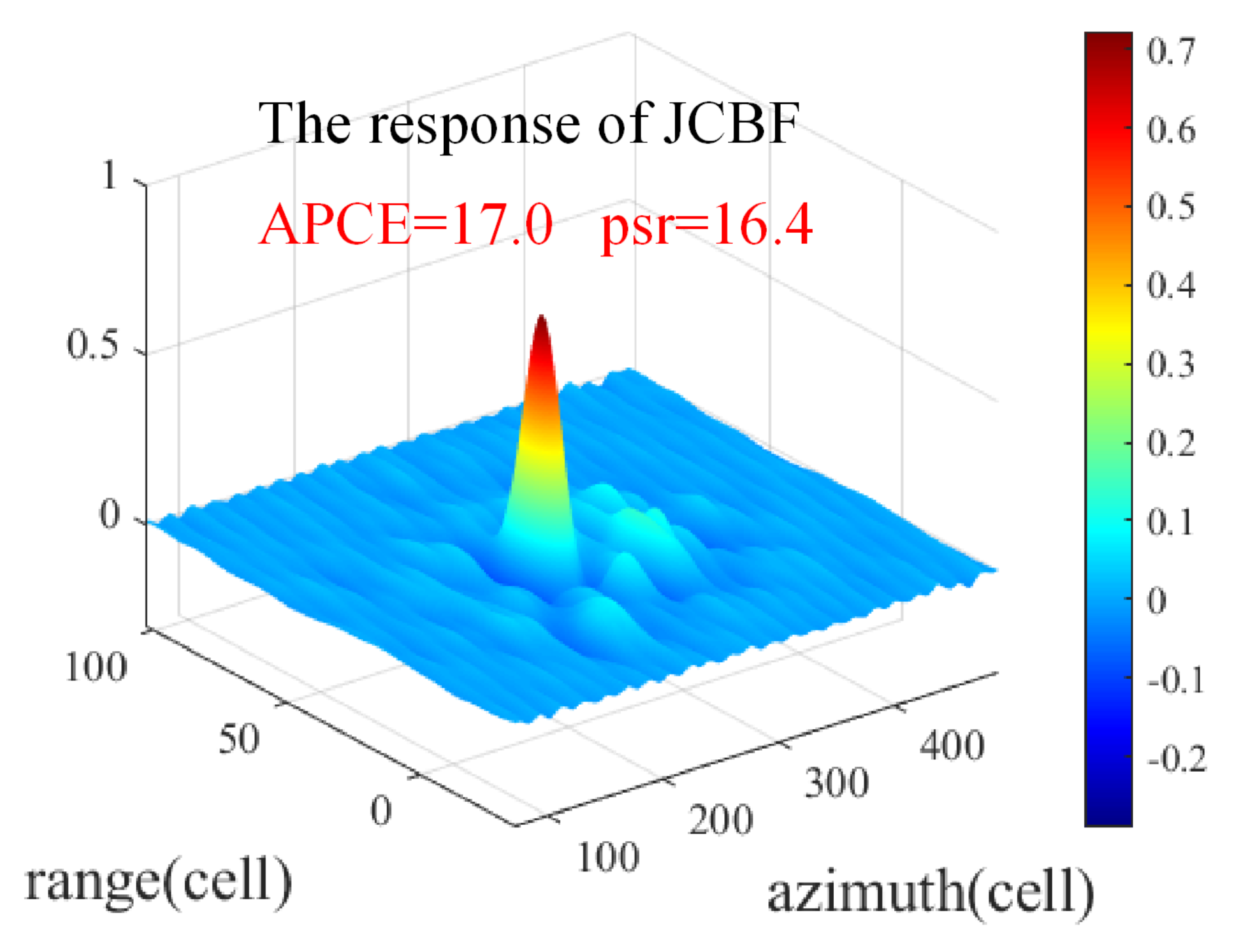

12). However, when the target is obscured by clutter or changes significantly in appearance, as shown in

Figure 2a, the response of the filter deteriorates significantly, which leads to inaccurate estimation of the target state. The peak to side-lobe ratio (PSR) [

26] and average peak-to-correlation energy (APCE) [

27] are utilized to evaluate the fluctuated degree of response

y and the confidence level of the detected targets, which are given by (

31) and (

32), respectively,

where

and

are the mean and standard deviation of the side-lobe, respectively, and ∑ denotes summation.
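The two confidence measures can be sketched as follows, using the commonly cited definitions from [26] and [27]. This is a hedged illustration rather than the paper’s exact formulas: the size of the window excluded around the peak (`exclude`), the small constant added to the denominator, and the synthetic response maps are assumptions.

```python
import numpy as np

def psr(response, exclude=5):
    """Peak-to-sidelobe ratio: (peak - sidelobe mean) / sidelobe standard deviation.

    The sidelobe is everything outside a (2 * exclude + 1)-wide window around the peak.
    """
    r0, c0 = np.unravel_index(np.argmax(response), response.shape)
    mask = np.ones(response.shape, dtype=bool)
    mask[max(r0 - exclude, 0):r0 + exclude + 1,
         max(c0 - exclude, 0):c0 + exclude + 1] = False
    sidelobe = response[mask]
    return (response[r0, c0] - sidelobe.mean()) / (sidelobe.std() + 1e-12)

def apce(response):
    """Average peak-to-correlation energy of a response map."""
    y_max, y_min = response.max(), response.min()
    return (y_max - y_min) ** 2 / np.mean((response - y_min) ** 2)

# Synthetic responses (hypothetical): the same peak with weak and with strong clutter.
rng = np.random.default_rng(0)
rr, cc = np.meshgrid(np.arange(64), np.arange(64), indexing="ij")
peak_map = np.exp(-0.5 * ((rr - 32) ** 2 + (cc - 32) ** 2) / 2.0 ** 2)
noise = rng.standard_normal((64, 64))
clean = peak_map + 0.01 * noise
cluttered = peak_map + 0.3 * noise
```

Both measures drop sharply for the cluttered map, which is the behaviour the confidence threshold in this section relies on.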

In this strategy, the reselected sample extracted at the estimated position given by the JCBF, rather than at the original position given by the CF, is utilized to update the CF.

Similarly, the Bayes filter updates the model with new measurements, as in (5). When the target passes through interference clutter or meets other targets, the measurements could be disturbed, as shown in Figure 2b. To solve this problem, the fused estimate is treated as a measurement and is fed back to the BF module, together with the covariance matrix of the estimation errors, to update the BF.

In addition, a confidence threshold is set to determine whether to update the filters or not. If the PSR is less than the threshold, neither filter is updated and the estimate for this frame is replaced by the prediction of the Kalman filter.

2.5.2. A Scale Adaptive Strategy

The traditional Bayes methods [5,6,7,8,9] obtain an extended estimate of the target by dividing multiple measurements of the target; however, contaminated measurements may degrade the performance of these methods. Without relying on measurements, existing scale-adaptive CF methods [21,22] proposed for optical images calculate the response of a sample at multiple scales by defining a scaling pool

. For the current frame, the template size is fixed as

and

z with sizes in

is sampled to find the proper target. The scale of the sample with the largest response is taken as the target’s size. These methods do not distort the appearance of the target because they scale both the length and the width by the same ratio, which is appropriate since optical images have the same resolution in both dimensions. However, the resolutions of a radar in its two dimensions differ: the azimuthal resolution is determined by the beamwidth of the antenna, and the distance resolution is determined by the bandwidth of the radar’s transmitted signal. Therefore, scaling the sample with the above ratios would distort radar targets. In addition, clutter may be introduced into the sample z when the size expands. In summary, the methods mentioned above, despite their superior performance on optical images, are not suitable for radar targets.

Unlike targets in optical images, the extension of a radar target in azimuth becomes more evident as the target approaches the radar, whereas its extension in distance does not, due to the limited azimuthal resolution of the radar and the variation in the radar’s view. Consequently, a scaling pool (33) for radar targets is proposed which scales the length and width of the template at different ratios, where the width is scaled with a greater gradient than the length. The gradient of the scaling pool is defined as

, and

l denotes the length of scaling pool.

The further operations are the same as in [22], except for the proposed scaling pool. The scaling size is given by (34):

where

denotes the sample

z with scaling size

.

In the end, the response of KCF with scaling size t will be used in JCBF.
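The idea of an anisotropic scaling pool can be sketched as follows. This is a hypothetical construction for illustration and is not the paper’s Equation (33): the function names, the step sizes `range_step` and `azimuth_step`, the assumption that the template width corresponds to azimuth and the length to range, and the symmetric layout around a scale of 1 are all assumptions.

```python
def radar_scaling_pool(length=5, range_step=0.02, azimuth_step=0.05):
    """Return `length` (range_scale, azimuth_scale) pairs centred on (1.0, 1.0).

    The azimuth scale changes with a larger step than the range scale, so the
    template is stretched more strongly in azimuth. `length` must be odd.
    """
    if length % 2 == 0:
        raise ValueError("length must be odd so that the pool contains scale 1.0")
    half = length // 2
    return [(1.0 + i * range_step, 1.0 + i * azimuth_step)
            for i in range(-half, half + 1)]

def scaled_size(template_size, scale):
    """Apply a (range_scale, azimuth_scale) pair to a (range_cells, azimuth_cells) size."""
    return (max(1, round(template_size[0] * scale[0])),
            max(1, round(template_size[1] * scale[1])))

def select_scale(pool, peak_response):
    """Pick the pool entry whose sample gives the largest peak response.

    `peak_response` is any callable mapping a scale pair to a number, e.g. the
    maximum of the KCF response computed on the sample resized to that scale.
    """
    return max(pool, key=peak_response)

pool = radar_scaling_pool()
```

In a tracker, `peak_response` would resample the radar patch to each candidate size, resize it back to the fixed template size, and evaluate the KCF response.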

2.6. Framework of the Proposed JCBF

The proposed method is summarized in Algorithm 1. Given the position and size of the target in the initial frame, the sample g is first taken to train the KCF using (). In each subsequent frame, the response of the target is given by (10) and the likelihood PDF of the target’s position is calculated by (25). It is worth noting that the scale adaptive strategy is only used to track targets with varying shape. Meanwhile, a segmentation algorithm [23] (’detector’ in Algorithm 1) is used to obtain the measurements z′ of the target. Next, the EKF module is used to calculate the posterior PDF of the target’s position by (24). Then, the results of the two filters are fused to obtain the state estimate of the target, as in (30). In the end, PSR [23,27] is utilized to determine whether to update the filters or not.

| Algorithm 1: Joint correlation filter and Bayes filter. |

Input Output |

| 1: for k = 1 to N |

| 2: if k = initial frame then |

| 3: Sampling g at position in size of |

| 4: Generating Gaussian needle-shaped label y |

| 5: train: |

| 6: |

| 7: end if |

| 8: if k ≠ initial frame then |

| 9: if step KCF then |

| 10: for in S do |

| 11: Sampling g′ (denotes at position ) in size of |

| 12: test: |

| 13: end for |

| 14: |

| 15: The response of KCF is |

| 16: Calculating by (25) |

| 17: end if |

| 18: if step EKF then |

| 19: |

| 20: Calculating by (24) |

| 21: end if |

| 22: if step Fusion then |

| 23: Calculating the estimation by (30) |

| 24: Calculating the covariance matrix of estimation errors by (27) |

| 25: if psr > th then |

| 26: Updating KCF by (12) |

| 27: Updating EKF using and |

| 28: end if |

| 29: end if |

| 30: end if |

| 31: end for |

2.7. Parameters Setup

Table 2 lists the values of the key parameters of JCBF, which are set depending on the dataset used for the experiments. A detailed description of the dataset used in the experiments is presented in Section 3.1.

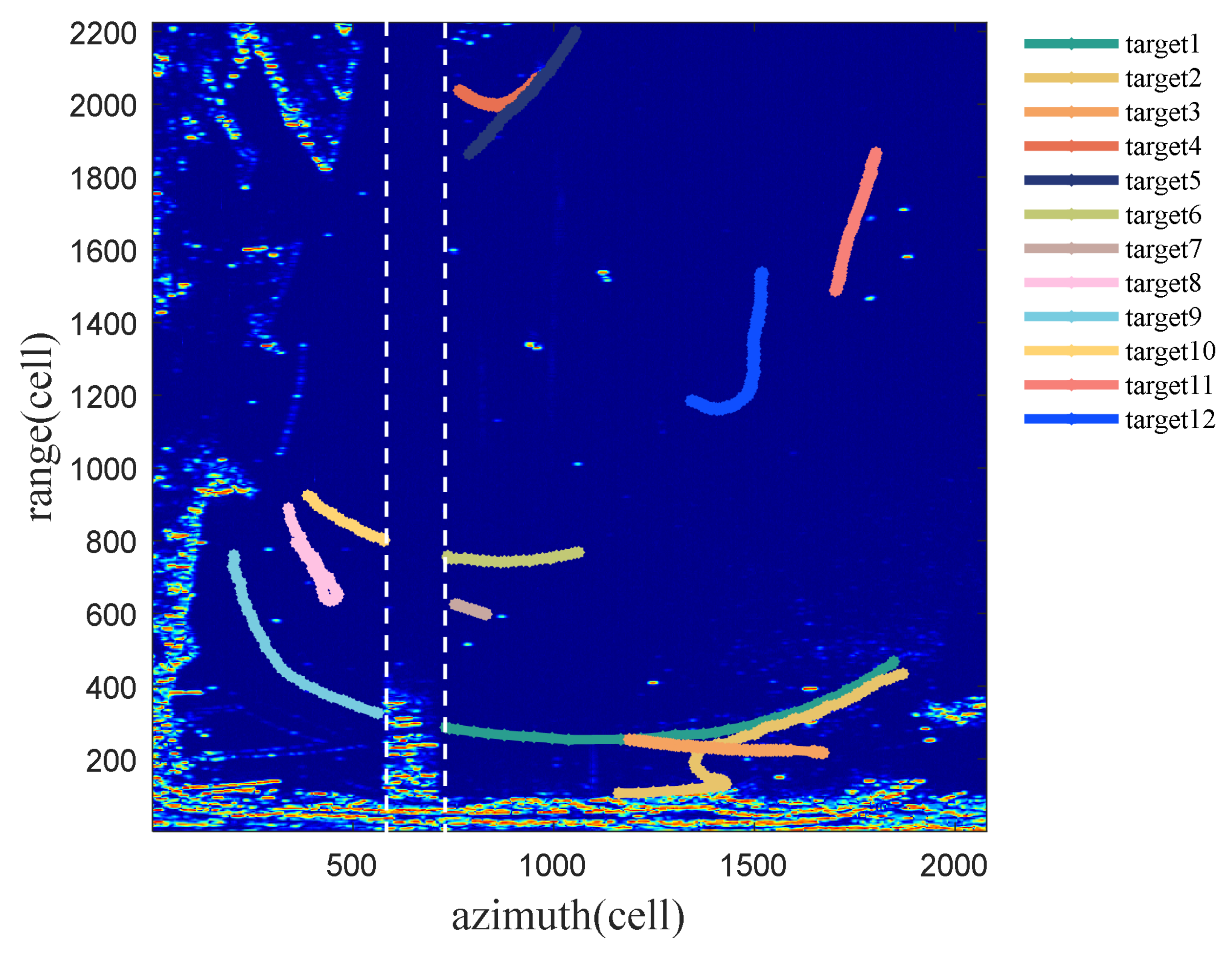

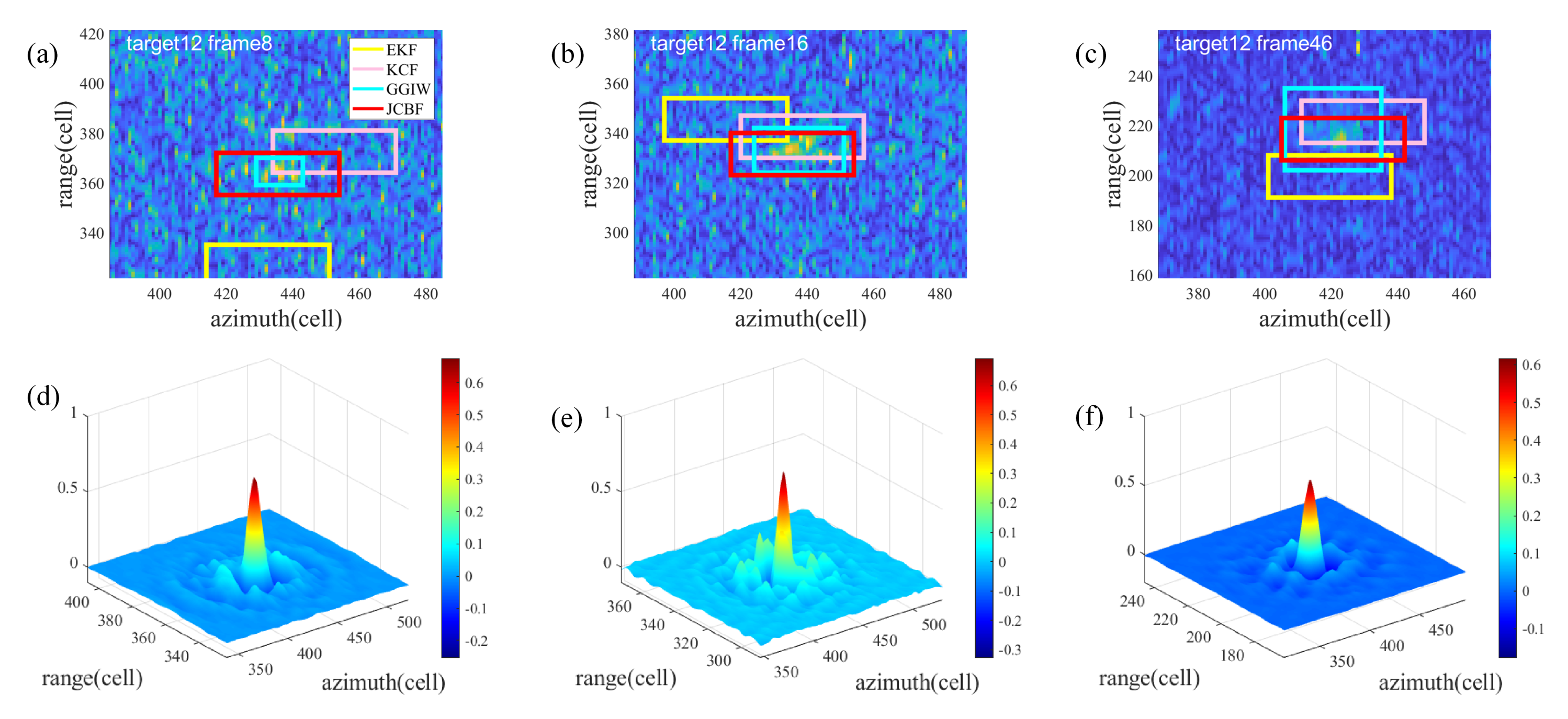

2.8. Evaluation Methodology

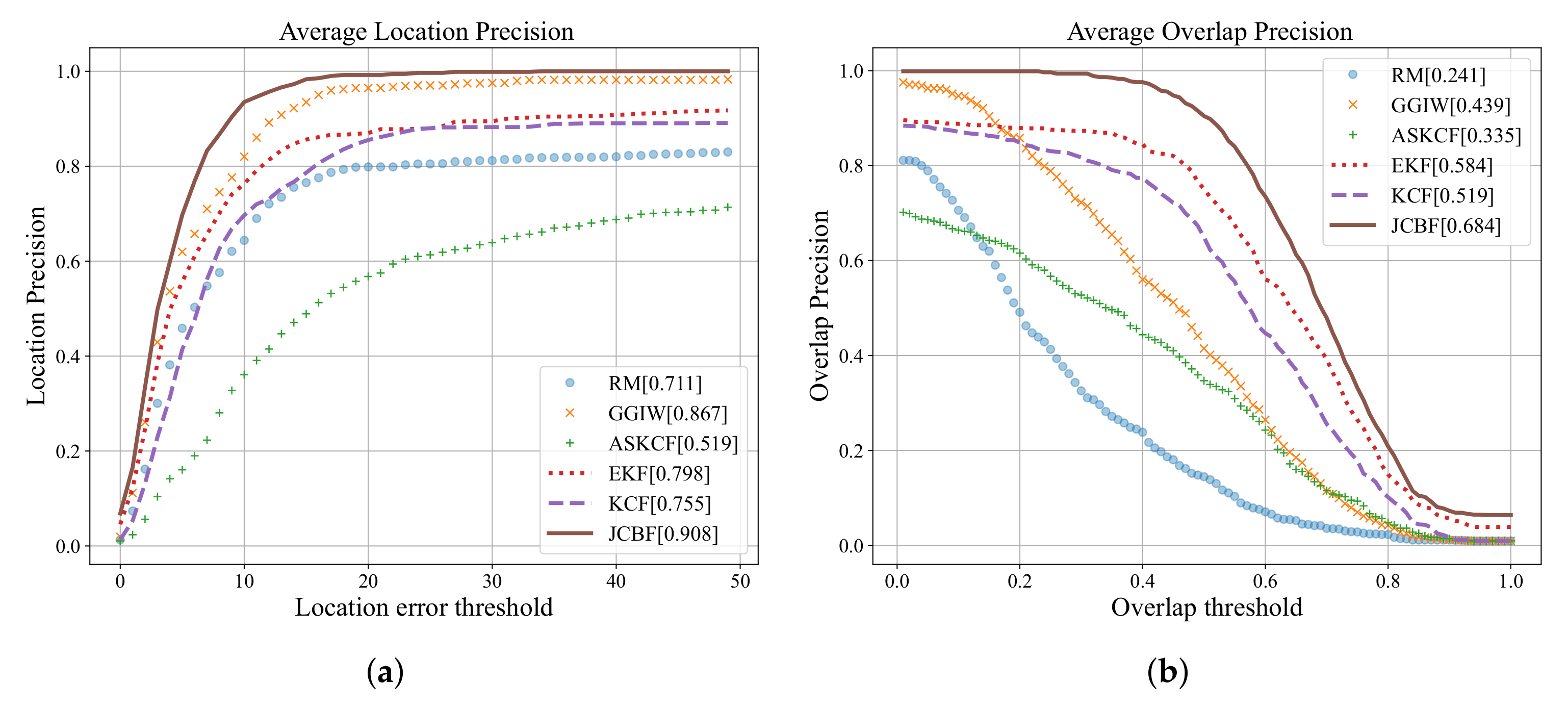

The experiments in this paper are performed using the data matrices of radar echoes. The proposed JCBF and several selected trackers are used to track each target separately. The results of each tracker are the center location and the bounding box of the targets. The targets in the radar monitoring scenario are non-cooperative, so the real motion information and the real labels of the targets cannot be obtained. Therefore, the experimental results are compared with manually generated labels to evaluate the trackers’ performance.

The average center location error (CLE) and the bounding box overlap ratio are calculated to evaluate the difference between the results and the ground truth. As performance criteria, the precision curves widely applied in visual tracking [20,23,28] are also utilized to show the percentage of correctly tracked frames. A target is considered to be tracked correctly if the predicted target center is within a distance threshold of the ground truth.

The location precision and overlap precision are given by (35) and (36), respectively:

where

and

denote ground truth set and tracking results set, respectively.

calculates the distance between the center of ground truth and the center of tracking results, and

calculates the intersection-over-union (IOU) between the ground truth and the tracking results.

and

denote the location error threshold and overlap threshold, respectively.
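The evaluation quantities described above can be computed as in the following sketch. It is an illustration under stated assumptions, not the paper’s exact Equations (35) and (36): boxes are assumed to be axis-aligned and given as (x, y, width, height) in resolution cells, the comparisons with the thresholds are assumed to be inclusive, and all function names are introduced here.

```python
import math

def center_error(gt_center, est_center):
    """Euclidean distance between ground-truth and estimated centers."""
    return math.dist(gt_center, est_center)

def iou(box_a, box_b):
    """Intersection-over-union of two boxes given as (x, y, w, h)."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    iw = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0

def location_precision(gt_centers, est_centers, threshold):
    """Fraction of frames whose center error is within the distance threshold."""
    hits = sum(center_error(g, e) <= threshold
               for g, e in zip(gt_centers, est_centers))
    return hits / len(gt_centers)

def overlap_precision(gt_boxes, est_boxes, threshold):
    """Fraction of frames whose IoU reaches the overlap threshold."""
    hits = sum(iou(g, e) >= threshold for g, e in zip(gt_boxes, est_boxes))
    return hits / len(gt_boxes)
```

Sweeping `threshold` over a range of values and plotting the resulting fractions yields the precision curves used in the comparison.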

Three traditional Bayes tracking methods (EKF [2], RM-based trackers [5], and GGIW-based trackers [9]) and two correlation filter-based methods (KCF [20] and the Scale Adaptive with Multiple Features tracker (ASKCF) [21]) are chosen for comparison with JCBF. The same measurements are provided to the three Bayes methods and the proposed JCBF. In addition, the parameters of the two correlation filters are the same as those of the proposed JCBF.

It is worth noting that a KF is used for smoothing the measurements in both the RM-based and GGIW-based trackers; the KF parameters of the two trackers are the same as those of JCBF. To show the performance improvement of the JCBF, the EKF supplemented by nearest neighbor data association is also tested on the same dataset. Furthermore, the IOU for the EKF is calculated based on the target size in the first frame, as the EKF, being based on the point target hypothesis, cannot estimate the size of targets.