A Multibranch Crossover Feature Attention Network for Hyperspectral Image Classification

Abstract

1. Introduction

- (1)

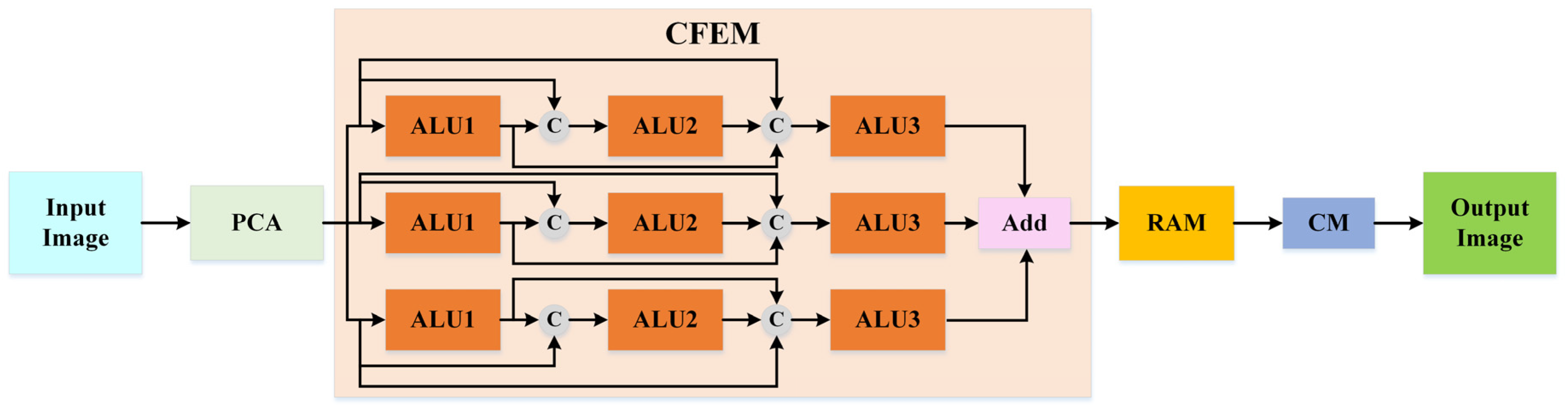

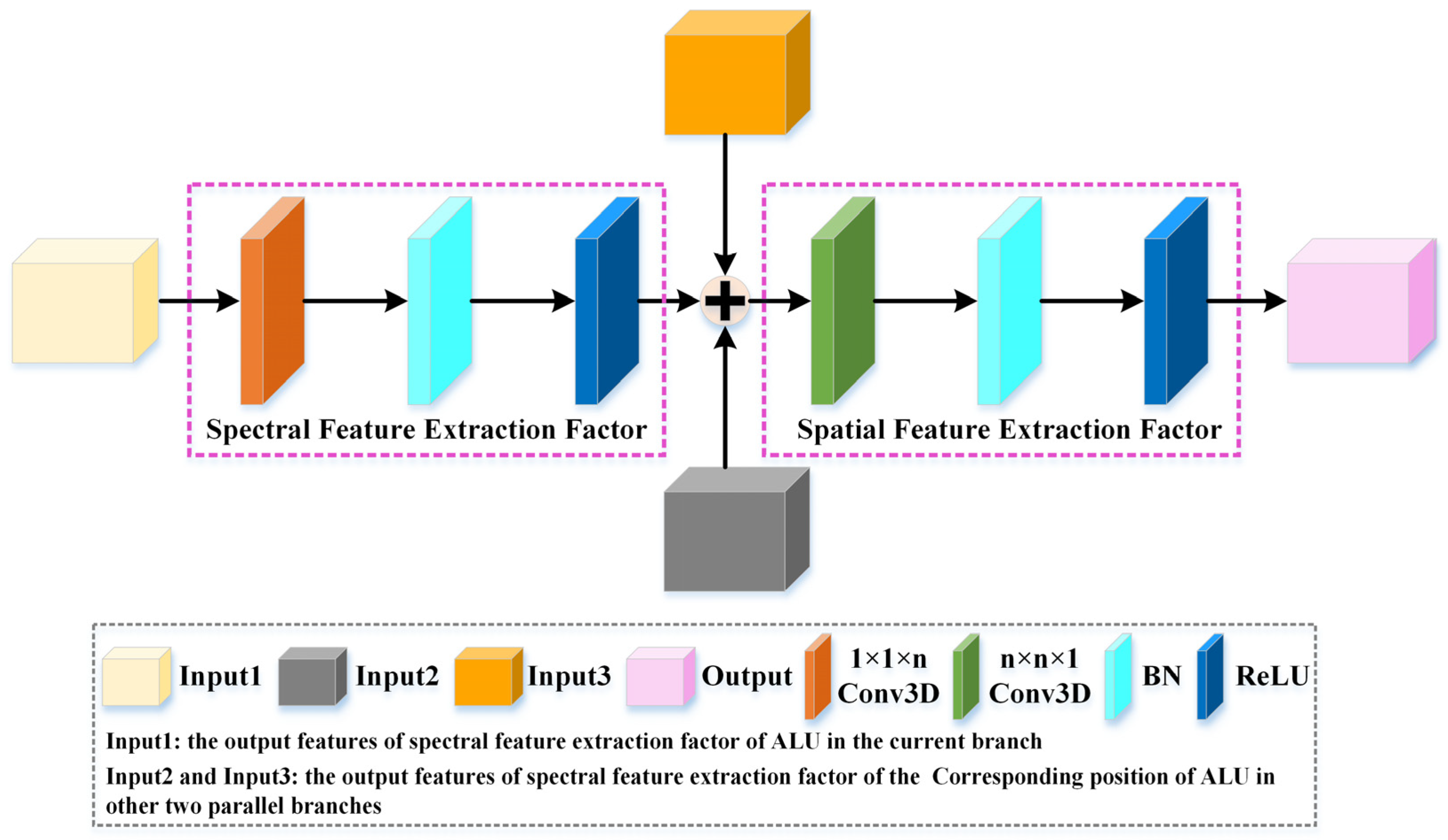

- In order to decrease training parameters and accelerate model convergence, we designed an additive link unit (ALU) to replace the conventional 3D convolutional layer. For one, ALU utilizes the spectral feature extraction factor and spatial feature extraction factor to capture joint spectral–spatial features; for another, it also introduces the cross transmission to take full advantage of spectral–spatial feature flows between different branches;

- (2)

- In order to tackle the fixed-scale convolutional kernels that are difficult to sufficiently extract spectral–spatial features, a crossover feature extraction module (CFEM) was constructed, which can obtain spectral–spatial features at different convolutional scales and branches. CFEM not only utilizes three parallel branches with multiple available, receptive fields to increase the diversity of spectral–spatial features but also applies the dense connection to each branch to incorporate shallow and deep features and, thus, realize robust complementary features for classification;

- (3)

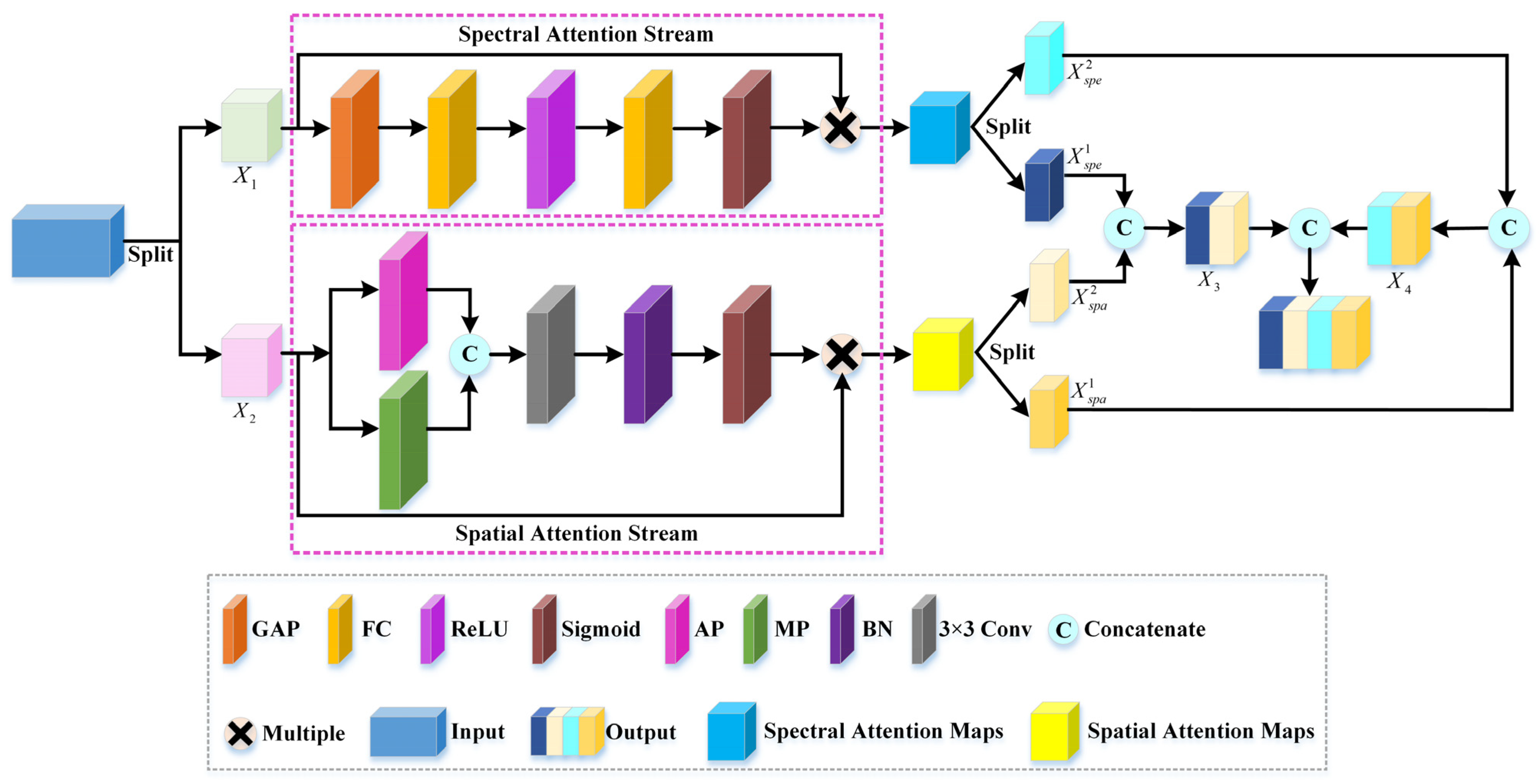

- In order to dispel the interference of redundant information and noise, we devised a rearranged attention module (RAM) to adaptively concentrate on recalibrating spatial-wise and spectral-wise feature response while exploiting the shifted cascade operation to realign the obtained attention-enhanced features, which are beneficial to boost the classification performance.
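The parameter saving claimed for the ALU can be illustrated with a rough count. The sketch below is only an assumption about what "spectral factor" and "spatial factor" might mean: it compares one full k×k×k 3D convolution with a spectral-only kernel plus a spatial-only kernel. The channel width, the kernel size, and the axis order (spectral, height, width) are hypothetical and are not taken from the paper.

```python
def conv3d_params(c_in, c_out, kernel, bias=True):
    """Trainable parameters of one 3D convolution with kernel (d, h, w)."""
    d, h, w = kernel
    return c_in * c_out * d * h * w + (c_out if bias else 0)


# Hypothetical settings, chosen only for illustration.
c_in = c_out = 16
k = 3

# Conventional 3D convolution: one k x k x k kernel per channel pair.
full = conv3d_params(c_in, c_out, (k, k, k))

# Assumed factorization: a spectral-only kernel plus a spatial-only kernel.
spectral = conv3d_params(c_in, c_out, (k, 1, 1))
spatial = conv3d_params(c_in, c_out, (1, k, k))
factorized = spectral + spatial

print(f"full 3D conv: {full} parameters")
print(f"spectral + spatial factors: {factorized} parameters")
```

Under these assumptions the factorized pair needs fewer than half the parameters of the full kernel; the actual saving in MCFANet depends on layer shapes that this outline does not give.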

2. Methodology

2.1. Additive Link Unit

2.2. Crossover Feature Extraction Module

2.3. Rearranged Attention Module

2.4. Framework of the Proposed MCFANet

3. Experimental Results and Discussion

3.1. Datasets Description

3.2. Experimental Setup

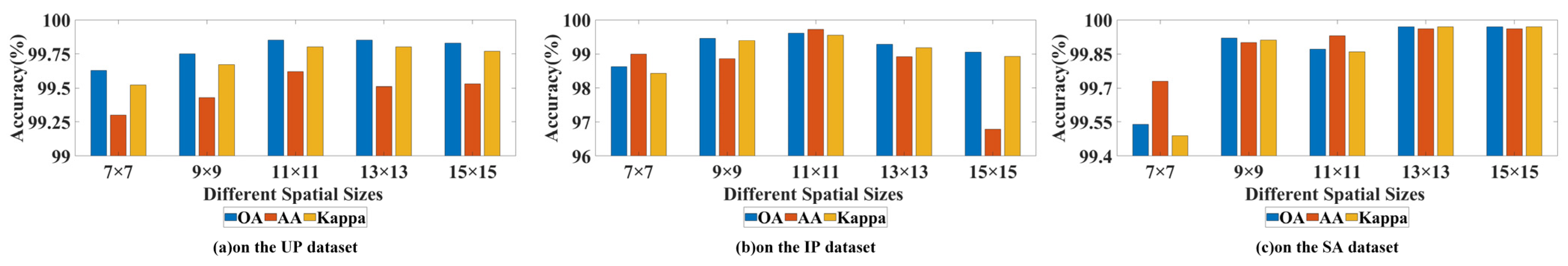

3.3. Parameter Analysis

3.3.1. Effect of the Spatial Sizes

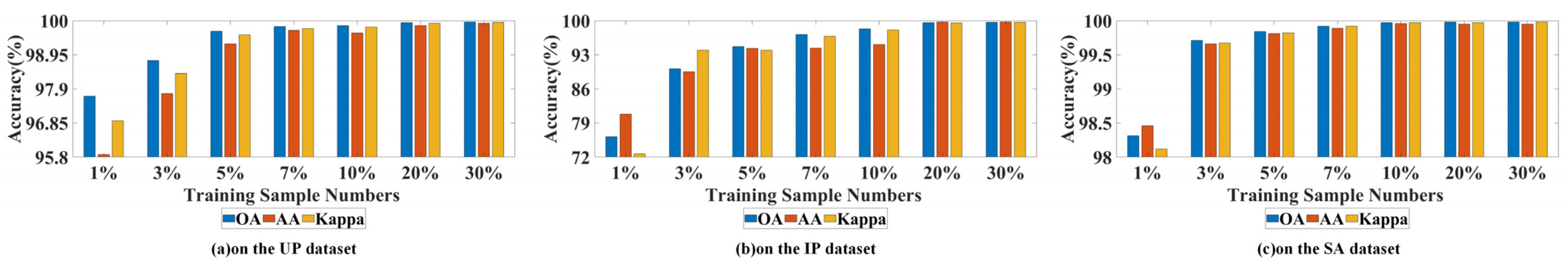

3.3.2. Effect of the Training Sample Ratios

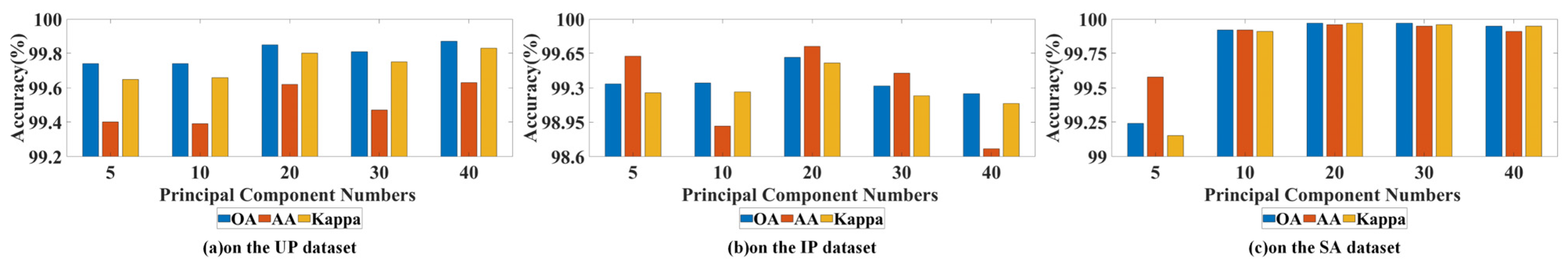

3.3.3. Effect of the Principal Component Numbers

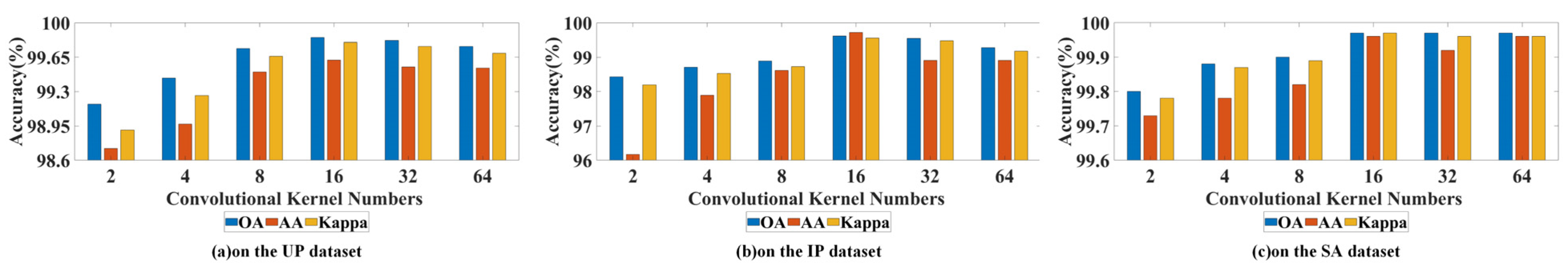

3.3.4. Effect of the Number of Convolutional Kernels in Additive Link Units

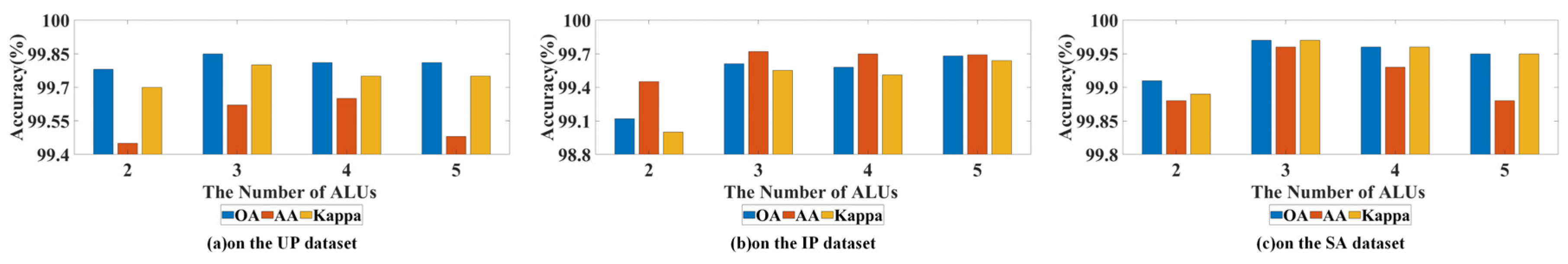

3.3.5. Effect of the Number of Additive Link Units

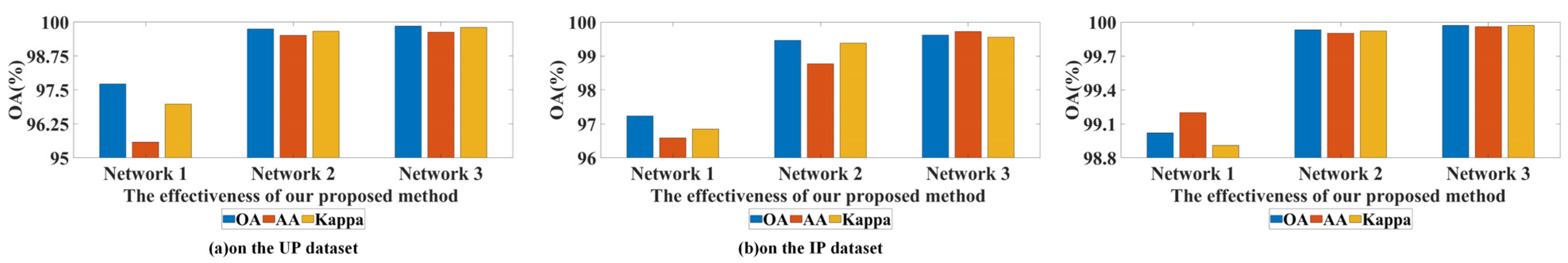

3.4. Ablation Study

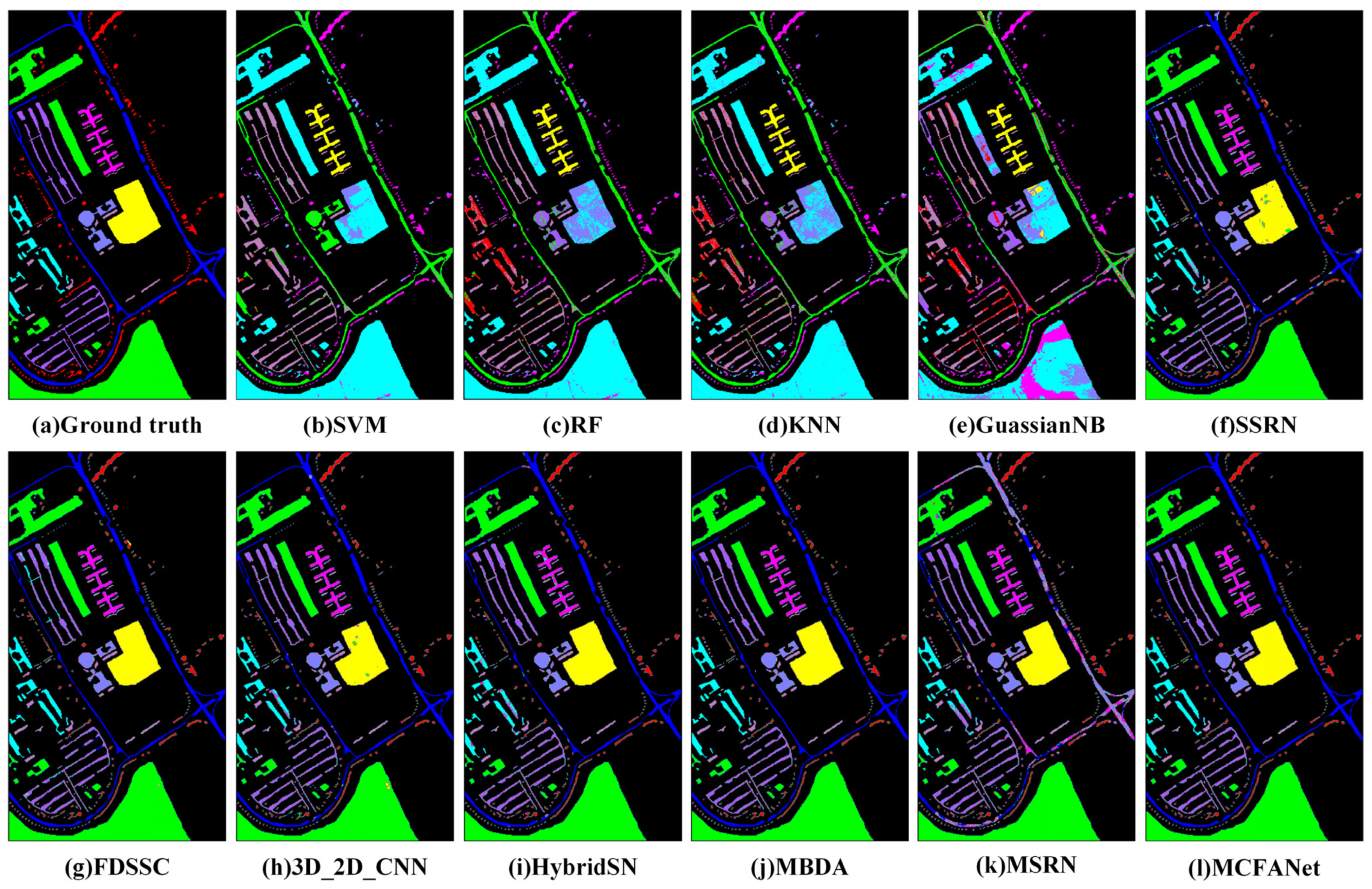

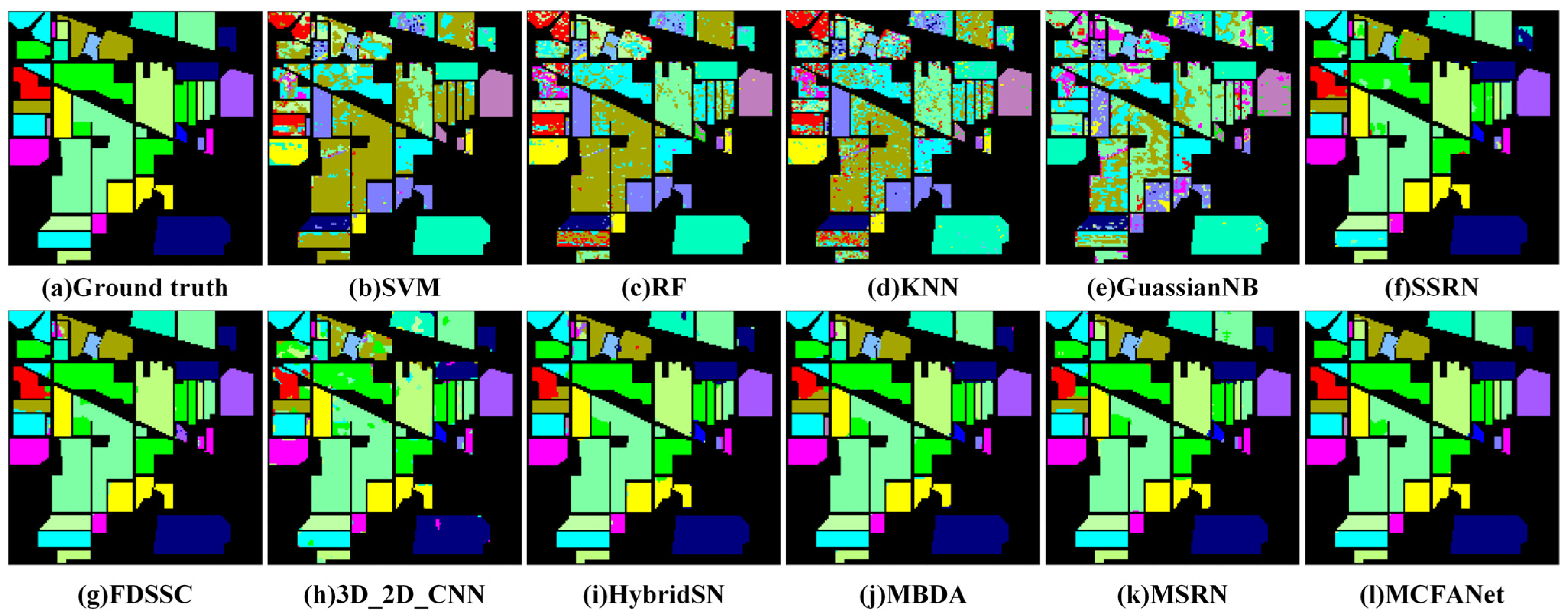

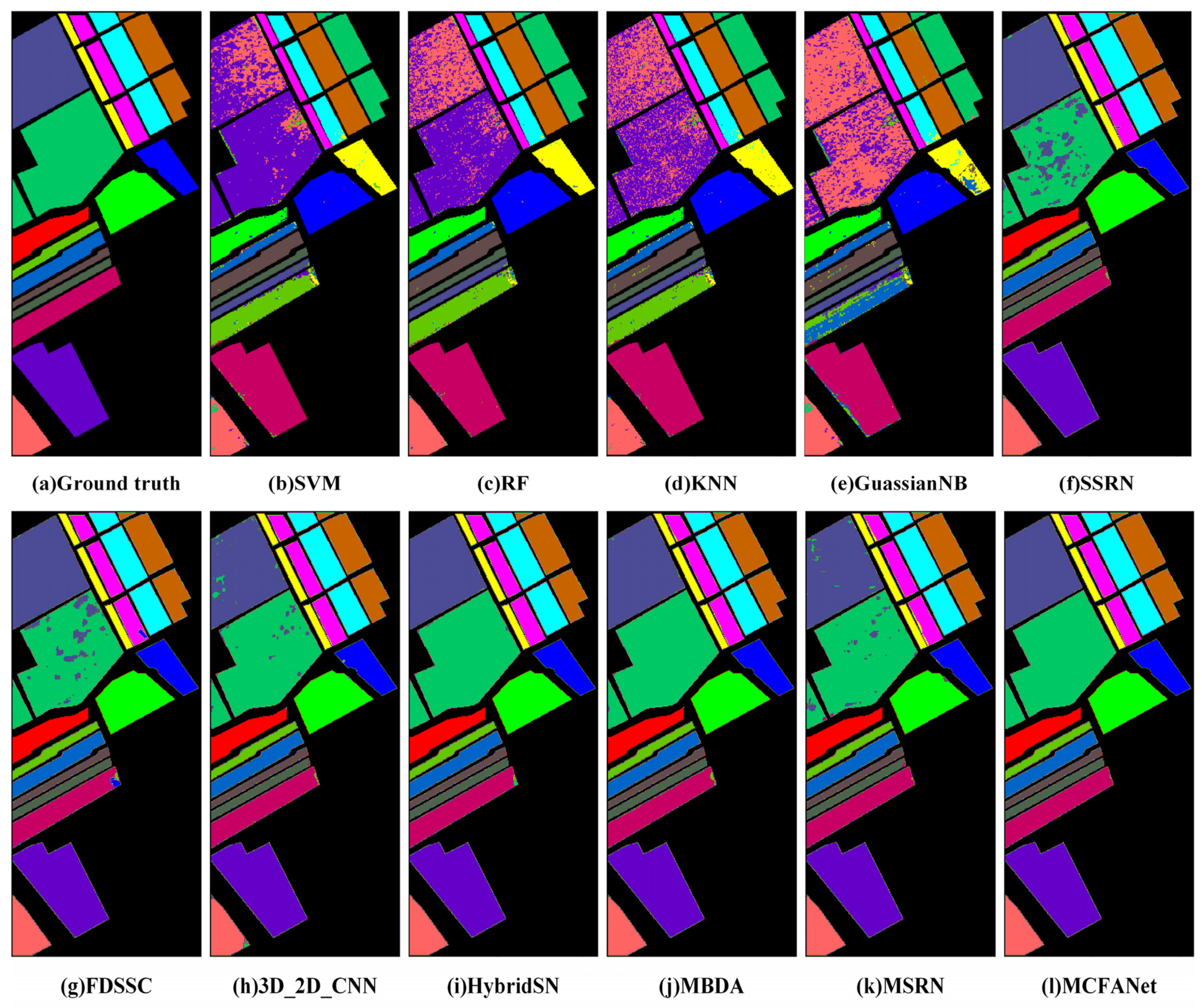

3.5. Comparison Methods Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| No. | Class | Train | Test |
|---|---|---|---|
| 1 | Asphalt | 664 | 5967 |
| 2 | Meadows | 1865 | 16,784 |
| 3 | Gravel | 210 | 1889 |
| 4 | Trees | 307 | 2757 |
| 5 | Metal sheets | 135 | 1210 |
| 6 | Bare Soil | 503 | 4526 |
| 7 | Bitumen | 133 | 1197 |
| 8 | Bricks | 369 | 3313 |
| 9 | Shadows | 95 | 852 |
| Total | | 4281 | 38,495 |
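The train counts in the table above are consistent with taking the ceiling of 10% of each class's labeled pixels. The source does not state this rule, so the sketch below is an inference checked against the table rows, not the authors' documented sampling procedure; the function name and the row list are introduced here for illustration.

```python
def train_count(n_labeled, train_percent):
    """Ceiling of train_percent % of n_labeled, using integer arithmetic."""
    return -(-n_labeled * train_percent // 100)


# (class, train, test) rows copied from the table above.
pavia_rows = [
    ("Asphalt", 664, 5967),
    ("Meadows", 1865, 16784),
    ("Gravel", 210, 1889),
    ("Trees", 307, 2757),
    ("Metal sheets", 135, 1210),
    ("Bare Soil", 503, 4526),
    ("Bitumen", 133, 1197),
    ("Bricks", 369, 3313),
    ("Shadows", 95, 852),
]

for name, train, test in pavia_rows:
    predicted = train_count(train + test, 10)  # assumed 10% training ratio
    print(f"{name}: table train = {train}, ceil(10%) = {predicted}")
```

Integer arithmetic is used instead of `math.ceil(0.1 * n)` so that floating-point rounding cannot push an exact multiple up by one.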

| No. | Class | Train | Test |
|---|---|---|---|
| 1 | Alfalfa | 10 | 36 |
| 2 | Corn-notill | 286 | 1142 |
| 3 | Corn-mintill | 166 | 664 |
| 4 | Corn | 48 | 189 |
| 5 | Grass-pasture | 97 | 386 |
| 6 | Grass-trees | 146 | 584 |
| 7 | Grass-pasture-mowed | 6 | 22 |
| 8 | Hay-windrowed | 96 | 382 |
| 9 | Oats | 4 | 16 |
| 10 | Soybean-notill | 195 | 777 |
| 11 | Soybean-mintill | 491 | 1964 |
| 12 | Soybean-clean | 119 | 474 |
| 13 | Wheat | 41 | 164 |
| 14 | Woods | 253 | 1012 |
| 15 | Buildings-Grass-Tree | 78 | 308 |
| 16 | Stone-Steel-Towers | 19 | 74 |
| Total | | 2055 | 8194 |

| No. | Class | Train | Test |
|---|---|---|---|
| 1 | Broccoli-green-weeds-1 | 201 | 2825 |
| 2 | Broccoli-green-weeds-2 | 373 | 3353 |
| 3 | Fallow | 198 | 1178 |
| 4 | Fallow-rough-plow | 140 | 154 |
| 5 | Fallow-smooth | 268 | 2410 |
| 6 | Stubble-trees | 396 | 3563 |
| 7 | Celery | 358 | 3221 |
| 8 | Grapes-untrained | 1128 | 10,143 |
| 9 | Soil-vineyard-develop | 621 | 5582 |
| 10 | Corn-senesced-green-weeds | 328 | 2950 |
| 11 | Lettuce-romaine-4 week | 107 | 961 |
| 12 | Lettuce-romaine-5 week | 193 | 1734 |
| 13 | Lettuce-romaine-6 week | 92 | 824 |
| 14 | Lettuce-romaine-7 week | 107 | 963 |
| 15 | Vineyard-untrained | 727 | 6541 |
| 16 | Vineyard-vertical-trellis | 181 | 1626 |
| Total | | 5418 | 48,711 |

| No. | SVM | RF | KNN | GaussianNB | SSRN | FDSSC | 2D_3D_CNN | HybridSN | MBDA | MSRN | MCFANet |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 76.52 | 91.60 | 89.71 | 96.50 | 99.67 | 98.38 | 97.98 | 98.78 | 99.62 | 98.99 | 99.88 |
| 2 | 80.59 | 89.08 | 86.46 | 79.14 | 98.89 | 99.77 | 99.29 | 99.97 | 99.83 | 99.94 | 99.94 |
| 3 | 81.03 | 80.01 | 68.51 | 30.41 | 96.30 | 85.04 | 97.39 | 93.30 | 100.00 | 99.46 | 99.95 |
| 4 | 95.85 | 93.32 | 96.33 | 44.02 | 99.72 | 100.00 | 99.47 | 96.55 | 98.99 | 93.16 | 99.53 |
| 5 | 99.59 | 99.33 | 99.66 | 79.47 | 99.92 | 100.00 | 99.77 | 95.92 | 99.76 | 40.58 | 99.51 |
| 6 | 94.25 | 88.88 | 82.82 | 39.15 | 99.72 | 99.36 | 98.85 | 99.96 | 100.00 | 100.00 | 99.91 |
| 7 | 0.00 | 84.70 | 76.32 | 38.91 | 85.39 | 100.00 | 96.81 | 97.15 | 100.00 | 33.78 | 99.92 |
| 8 | 64.73 | 79.05 | 77.29 | 70.03 | 96.07 | 99.97 | 93.83 | 91.51 | 99.26 | 91.38 | 99.67 |
| 9 | 99.88 | 100.00 | 100.00 | 100.00 | 96.15 | 100.00 | 99.33 | 99.25 | 95.15 | 74.98 | 99.29 |
| OA | 80.21 | 88.84 | 86.01 | 65.87 | 98.23 | 98.71 | 98.42 | 98.23 | 99.61 | 87.22 | 99.85 |
| AA | 66.47 | 85.35 | 82.34 | 72.57 | 97.89 | 98.29 | 97.43 | 95.80 | 99.15 | 88.86 | 99.62 |
| Kappa × 100 | 72.39 | 84.93 | 81.05 | 56.61 | 97.70 | 98.29 | 97.90 | 97.65 | 99.48 | 83.38 | 99.80 |
| FLOPs (×10^6) | - | - | - | - | 1.39 | 0.51 | 0.51 | 10.24 | 0.48 | 0.32 | 1.13 |
| Test Time (s) | - | - | - | - | 9.33 | 11.89 | 0.87 | 10.32 | 10.86 | 10.88 | 17.82 |

| No. | SVM | RF | KNN | GaussianNB | SSRN | FDSSC | 2D_3D_CNN | HybridSN | MBDA | MSRN | MCFANet |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.00 | 83.33 | 11.76 | 42.50 | 100.00 | 0.00 | 97.62 | 90.91 | 97.62 | 86.36 | 94.74 |
| 2 | 61.21 | 72.33 | 48.73 | 40.95 | 94.98 | 95.38 | 88.17 | 97.04 | 97.09 | 91.27 | 99.56 |
| 3 | 78.31 | 79.57 | 56.67 | 23.28 | 97.16 | 96.10 | 87.61 | 99.46 | 98.42 | 96.98 | 99.85 |
| 4 | 81.82 | 73.33 | 52.59 | 9.04 | 89.77 | 100.00 | 87.80 | 98.20 | 100.00 | 100.00 | 100.00 |
| 5 | 93.58 | 89.01 | 83.33 | 2.75 | 99.06 | 91.65 | 94.06 | 94.53 | 97.52 | 95.81 | 100.00 |
| 6 | 79.09 | 79.80 | 77.58 | 67.59 | 97.76 | 99.69 | 93.92 | 99.53 | 99.84 | 99.22 | 99.83 |
| 7 | 0.00 | 100.00 | 88.33 | 100.00 | 100.00 | 0.00 | 95.45 | 85.00 | 100.00 | 78.57 | 100.00 |
| 8 | 84.86 | 92.10 | 88.32 | 83.13 | 100.00 | 91.10 | 97.50 | 96.41 | 100.00 | 100.00 | 100.00 |
| 9 | 0.00 | 0.00 | 50.00 | 11.11 | 66.67 | 0.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| 10 | 72.83 | 99.37 | 65.23 | 23.34 | 97.63 | 99.76 | 90.85 | 99.40 | 100.00 | 98.28 | 99.36 |
| 11 | 56.77 | 75.59 | 70.69 | 63.73 | 94.48 | 99.41 | 90.73 | 99.05 | 99.45 | 99.95 | 99.49 |
| 12 | 45.48 | 59.96 | 64.46 | 15.79 | 96.64 | 93.84 | 90.93 | 89.43 | 90.81 | 94.14 | 99.57 |
| 13 | 87.43 | 91.95 | 80.30 | 87.92 | 98.92 | 98.92 | 80.53 | 98.40 | 100.00 | 100.00 | 98.80 |
| 14 | 85.90 | 90.69 | 91.85 | 75.14 | 97.70 | 99.65 | 98.75 | 96.67 | 98.44 | 98.87 | 100.00 |
| 15 | 81.82 | 76.40 | 59.86 | 62.71 | 89.38 | 99.14 | 92.43 | 98.79 | 99.71 | 99.71 | 100.00 |
| 16 | 98.46 | 98.46 | 98.48 | 100.00 | 100.00 | 97.53 | 94.12 | 94.05 | 80.61 | 85.42 | 94.87 |
| OA | 68.89 | 79.00 | 69.45 | 47.84 | 96.04 | 97.45 | 91.65 | 97.45 | 98.21 | 97.27 | 99.61 |
| AA | 53.09 | 67.73 | 61.98 | 50.53 | 93.42 | 79.61 | 85.30 | 92.52 | 96.58 | 92.80 | 99.72 |
| Kappa × 100 | 63.51 | 75.80 | 65.01 | 41.22 | 95.48 | 97.09 | 90.44 | 97.09 | 97.96 | 96.88 | 99.55 |
| FLOPs (×10^6) | - | - | - | - | 0.17 | 3.81 | 0.52 | 10.24 | 0.48 | 0.32 | 0.80 |
| Test Time (s) | - | - | - | - | 2.23 | 5.65 | 0.31 | 2.43 | 7.40 | 0.88 | 6.52 |

| No. | SVM | RF | KNN | GaussianNB | SSRN | FDSSC | 2D_3D_CNN | HybridSN | MBDA | MSRN | MCFANet |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 100.00 | 99.89 | 99.83 | 99.87 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| 2 | 99.08 | 99.67 | 99.26 | 99.53 | 99.69 | 100.00 | 99.92 | 100.00 | 100.00 | 100.00 | 100.00 |
| 3 | 92.14 | 95.05 | 91.06 | 89.46 | 99.57 | 92.60 | 99.95 | 100.00 | 100.00 | 100.00 | 100.00 |
| 4 | 97.94 | 98.57 | 97.50 | 97.09 | 99.24 | 98.81 | 97.99 | 99.77 | 96.15 | 90.31 | 99.92 |
| 5 | 97.87 | 98.96 | 98.26 | 97.28 | 99.21 | 99.96 | 99.41 | 99.84 | 100.00 | 100.00 | 99.92 |
| 6 | 99.92 | 99.92 | 100.00 | 99.97 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| 7 | 98.02 | 99.57 | 99.16 | 97.78 | 100.00 | 100.00 | 99.85 | 100.00 | 99.97 | 100.00 | 100.00 |
| 8 | 70.12 | 77.56 | 72.88 | 74.36 | 99.47 | 99.92 | 97.36 | 99.67 | 99.73 | 98.31 | 100.00 |
| 9 | 98.78 | 98.87 | 99.09 | 98.90 | 100.00 | 99.58 | 99.81 | 100.00 | 100.00 | 100.00 | 100.00 |
| 10 | 87.86 | 93.91 | 89.89 | 59.84 | 99.13 | 99.86 | 98.97 | 100.00 | 100.00 | 97.67 | 99.90 |
| 11 | 92.96 | 93.68 | 91.81 | 31.16 | 98.02 | 96.75 | 97.00 | 98.16 | 95.56 | 99.31 | 99.38 |
| 12 | 94.95 | 96.88 | 95.14 | 91.76 | 99.56 | 99.89 | 99.40 | 100.00 | 99.89 | 99.89 | 100.00 |
| 13 | 92.50 | 96.83 | 94.45 | 94.62 | 100.00 | 100.00 | 98.31 | 100.00 | 99.89 | 100.00 | 99.88 |
| 14 | 97.45 | 97.59 | 96.63 | 67.90 | 99.60 | 100.00 | 100.00 | 99.61 | 95.58 | 96.69 | 100.00 |
| 15 | 81.99 | 78.56 | 60.28 | 45.88 | 84.27 | 89.34 | 97.39 | 99.71 | 99.83 | 96.56 | 100.00 |
| 16 | 98.97 | 98.81 | 99.00 | 85.79 | 99.82 | 100.00 | 100.00 | 100.00 | 100.00 | 99.94 | 100.00 |
| OA | 88.54 | 91.22 | 87.29 | 76.77 | 97.20 | 97.93 | 98.81 | 99.83 | 99.63 | 98.68 | 99.97 |
| AA | 92.60 | 95.16 | 93.13 | 86.26 | 98.75 | 98.99 | 99.11 | 99.87 | 99.63 | 99.04 | 99.96 |
| Kappa × 100 | 87.18 | 90.21 | 85.85 | 74.46 | 96.89 | 97.70 | 98.67 | 99.82 | 99.58 | 98.53 | 99.97 |
| FLOPs (×10^6) | - | - | - | - | 4.15 | 3.93 | 0.52 | 10.24 | 0.48 | 0.32 | 1.13 |
| Test Time (s) | - | - | - | - | 16.57 | 25.81 | 4.70 | 15.58 | 12.69 | 14.59 | 30.91 |
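The OA, AA, and Kappa rows in the comparison tables above are summary metrics that are usually derived from a confusion matrix. The sketch below uses the standard textbook definitions (overall accuracy, mean per-class recall, and Cohen's kappa); whether the paper's evaluation code follows exactly these definitions is an assumption, and the toy matrix is invented for illustration, not taken from the experiments.

```python
def classification_metrics(confusion):
    """Return (OA, AA, kappa) for a square confusion matrix.

    confusion[i][j] is the number of samples of true class i predicted as
    class j. Every class is assumed to have at least one true sample.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n_classes))

    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(n_classes))
                for j in range(n_classes)]

    # Overall accuracy: fraction of all samples classified correctly.
    oa = correct / total
    # Average accuracy: mean of the per-class recalls.
    aa = sum(confusion[i][i] / row_sums[i] for i in range(n_classes)) / n_classes
    # Cohen's kappa: agreement corrected for chance agreement.
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - expected) / (1 - expected)
    return oa, aa, kappa


# Toy two-class confusion matrix (illustrative values only).
toy = [[8, 2],
       [1, 9]]
oa, aa, kappa = classification_metrics(toy)
print(f"OA = {oa:.2%}, AA = {aa:.2%}, Kappa x 100 = {kappa * 100:.2f}")
```

AA weights every class equally, so a method that fails on a small class can show a much lower AA than OA.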

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, D.; Wang, Y.; Liu, P.; Li, Q.; Yang, H.; Chen, D.; Liu, Z.; Han, G. A Multibranch Crossover Feature Attention Network for Hyperspectral Image Classification. Remote Sens. 2022, 14, 5778. https://doi.org/10.3390/rs14225778
