Abstract

Due to the functional limitations of a single UAV, UAV clusters have become an important part of smart cities, and the relative positioning between UAVs is the core difficulty in UAV cluster applications. Existing UAVs can be equipped with satellite navigation, radio navigation, and other positioning equipment, but in complex environments, such as urban canyons, various navigation sources cannot achieve full positioning information due to occlusion, interference, and other factors, and existing positioning fusion methods cannot meet the requirements of these environments. Therefore, demand exists for the real-time positioning of UAV clusters. Aiming to solve the above problems, this paper proposes multisource fusion UAV cluster cooperative positioning using information geometry (UCP-IG), which converts various types of navigation source information into information geometric probability models and reduces the impact of accidental errors, and proposes the Kullback–Leibler divergence minimization (KLM) fusion method to achieve rapid fusion on geometric manifolds and creatively solve the problem of difficult fusion caused by different positioning information formats and parameters. The method proposed in this paper is compared with the main synergistic methods, such as LS and neural networks, in an ideal scenario, a mutation error scenario, and a random motion scenario. The simulation results show that by using UAV cluster movement, the method proposed in this paper can effectively suppress mutation errors and achieve fast positioning.

1. Introduction

The UAV has the characteristics of not being affected by terrain, fast speed, small size, etc. Therefore, it has shown great development prospects in the fields of logistics transportation, security monitoring, information collection, and traffic guidance in smart cities [1]. However, due to load limitations and the occlusion effect of urban canyons, a single UAV provides low coverage, which greatly limits its application in smart cities. While a lightweight UAV cluster has attracted more attention because of its wide coverage and high transportation efficiency, the main direction of UAV development is towards applications in cities [2]. However, unlike a single UAV, the positioning between UAVs in a cluster is the core difficulty in the application of UAV clusters. Compared with traditional node positioning, UAV cluster positioning has the characteristics of a three-dimensional position, large dynamics, and easy interference. The main positioning information of a UAV cluster comes from satellite navigation and inertial navigation, supplemented by wireless base station navigation, laser navigation, visual navigation, and other technologies. Due to inherent problems, various types of navigation sources cannot realize the positioning service of a UAV cluster in the whole area and environment. For example, navigation satellites are easily blocked by urban canyons, resulting in the loss of UAV navigation signals [3]; wireless base stations have limited navigation transmission distance and cannot provide positioning services outside the area [4]; LiDAR is greatly affected by weather and atmosphere; the beam is narrow, and performance drops significantly in cloudy, rainy, and foggy environments [5]; and visual navigation requires a huge amount of image processing in complex and dynamic environments, exhibits poor real-time performance, and has a large impression of the light-receiving environment [6]. Therefore, using the relative position of a UAV cluster to realize the rapid fusion of distributed multisource navigation system information is the main problem of UAV cluster positioning.

The existing distributed node positioning method mainly realizes cooperative information fusion positioning through ranging, direction finding, and information interaction. This fusion method mainly includes two categories. The first category is the fusion of positioning data represented by neural networks [7,8,9], and the other is the fusion of localization results represented by the Kalman filter [10,11,12]. Both types of algorithms have problems of high computational complexity and poor real-time performance, and thus they are not suitable for the cooperative positioning of UAV clusters. Therefore, using the various types of navigation sources independently distributed in the UAV cluster to achieve fast and high-stability fusion is the main problem of the coordinated positioning of the UAV cluster.

As the current mainstream positioning information fusion algorithm, the Kalman filter and its extended series of filtering algorithms [13] can not only estimate the time domain information of stationary random processes but can also estimate nonstationary random processes. However, the positioning information is output by different types of navigation sources. The format and frequency of the parameters are completely different, resulting in high computational complexity and a long fusion time, which cannot meet the needs of UAV clusters for performing the rapid fusion of different types of navigation sources. With the rapid development of artificial intelligence, intelligent information fusion by neural networks has also developed rapidly, and these can realize the rapid fusion of various types of navigation information, but they also have the problems of requiring a large amount of data and exhibiting poor real-time performance. To solve the problem of difficulty in fusion positioning caused by factors such as the time-space asynchrony of distributed multitype navigation source information in UAV clusters, the loss of navigation source information, and the rapid movement of UAV clusters, this paper proposes a multisource information geometry-based approach. The main innovation points of the fusion UAV cluster positioning method are as follows:

- The information from various navigation sources carried by the UAV cluster is creatively transformed into an information probability model, the time and frequency parameters of various types of navigation information of the UAV cluster are unified, and a simulation scenario is established to verify the model.

- A multisource fusion UAV cluster localization method based on information geometry is proposed. The method utilizes the correlation between the information probability of the UAV navigation sources and the positioning accuracy, calculates the accuracy probability function of the navigation source information, establishes the probability geometric manifold of the navigation source information, and fuses multiple probability density functions to obtain the positioning result.

- Simulation tests of the proposed UCP-IG model in ideal scenarios, sudden loss of navigation information scenarios, and random motion scenarios are carried out. The test results show that the UCP-IG method proposed in this paper can effectively improve the stability of UAV clusters. In the case of a loss of human–machine navigation information, errors can also be effectively suppressed.

The rest of the paper is organized as follows. In the following section, we present the cooperative-positioning-related work. Section III introduces the system model. Then, the multisource fusion UAV cluster localization method based on information geometry is introduced in Section IV. Section V contains a detailed analysis of the results, and Section VI presents the concluding remarks.

2. Related Work

With the application of UAVs in unmanned transportation applications, traffic management, and other basic core projects of smart cities, the relative positioning between UAV clusters has become the core basis for UAV cluster applications [14]. With the improvement of the accuracy of the GNSS system and the enhancement of the navigation signal by low-orbit communication satellite systems, satellite navigation has become the main method of the cluster positioning of UAVs. However, due to the serious occlusion of urban environments, the signals of some UAVs will always be lost during the flight of a UAV cluster. The development of the coordinated navigation and positioning of UAV clusters has become a hot topic for UAV cluster applications.

UAV cooperative positioning mainly evolved from wireless sensor network cooperative positioning. Early UAV cooperative positioning methods mainly achieved cooperative positioning through the LS method [15], MSE method [16], etc. This kind of method has the advantage of fast positioning speed, but it is easily disturbed by environmental errors.

Due to the jittery characteristics of UAVs, reducing the error has become the main problem of UAV positioning. Techniques such as Bayesian estimation [17] and non-Bayesian estimation [18] are used for UAV cooperative positioning, but the disadvantage of Bayesian estimation methods is the large communication and computational overhead required. The research work discussed in [19] proposed a cooperative positioning fusion technique based on a particle filter (PF), but it is difficult to solve the problem of particle degradation and depletion [20]. To this end, [21] and [22] proposed a positioning estimation method based on the extended Kalman filter (EKF) and unscented Kalman filter (UKF), respectively. Non-Bayesian estimation cooperative positioning methods mainly include the least squares (LS) [23] estimation method and the maximum likelihood (ML) [24] method. The research work discussed in [25] uses weight compensation combined with the LS method to achieve UAV cluster positioning, which can reduce the impact of environmental errors. The study proposes a new hybrid cooperative positioning scheme based on distance and angle measurement; that is, a modification of the TOA-AOA-based and AOA-RSS-based linear least squares (LLS) hybrid scheme. Based on the ranging information between the man–machine and base station, an optimized version of LLS estimation was proposed, which further improves the positioning performance but limits the scope of use. Tomic S et al. [26] proposed a new method based on received signal strength (RSS) and convex optimization. By deriving nonconvex estimates, the search problem of the global optimal solution was solved, but it could not meet the high mobility characteristics of UAVs, and the stability of positioning accuracy was poor.

In recent years, neural networks have also been used in the field of cooperative positioning due to their advantages of arbitrary nonlinear mapping of input and output. The research work discussed in [27] used BP neural-network-assisted EKF and UKF to achieve cooperative positioning, and the results showed that using a BP neural network to optimize nonlinear filtering could improve filter estimation performance. The research work discussed in [28] proposes a layered sensor fusion method with an artificial neural network (ANN) to solve the self-localization problem of mobile robots. This method uses octagonal sonar, digital compass, and wireless network signal strength measurement to determine the position of autonomous mobile robots. Multi-layer perceptron (MLP) is used in combination with supervised learning and back-propagation techniques to train the network by layered fusion steps and determine the robot’s position on the map. However, this type of method needs to train various parameters and the calculation amount and time are too large, so it cannot meet the real-time requirements of UAV cluster cooperative positioning.

Therefore, how to realize the cooperative positioning of UAV clusters in an unknown, complex, and dynamic environment and how to maximize the real-time performance of the algorithm and reduce the amount of calculation require further research. For this reason, an increasing number of scholars have introduced graph theory knowledge into cooperative positioning. For instance, Ihler A proposed the nonparametric belief propagation algorithm [29]. The basic idea of this algorithm is a method based on a graph model, which models the localization problem as an inference problem, allowing it to perform a distributed estimation process, but it has problems of high computational complexity and poor real-time performance. Starting from estimation theory and factor graphs [30,31,32], Wymeersch H et al. proposed a distributed cooperative positioning algorithm, SPAWN (sum-product algorithm over a wireless network), which transformed the node localization problem in cooperative networks into variables. The approximate edge posterior distribution of variable nodes is obtained by updating and transmitting confidence information on the factor graph. However, the algorithm requires a large amount of computation and large communication bandwidth and has poor positioning performance in dynamic scenarios. On the basis of graph theory, information geometry is widely used as an effective tool for solving nonlinear and random problems. Compared with the mathematical theory of the Euclidean framework, information geometry theory can more effectively solve some nonlinear and random problems in the information field. Information geometry methods have made considerable progress in statistical signal processing, parameter estimation, and filtering. Reference [33] refers to the concept of information geometry to radar signal processing and proposes a corresponding radar signal detection method, but how to use the information geometry distance to realize multi-UAV cooperative positioning is currently unaddressed. This paper takes advantage of the unified parameters of the information geometric model, combined with the K–L fusion method, which can effectively improve the positioning stability of the UAV cluster and the ability to suppress mutation errors.

3. System Model

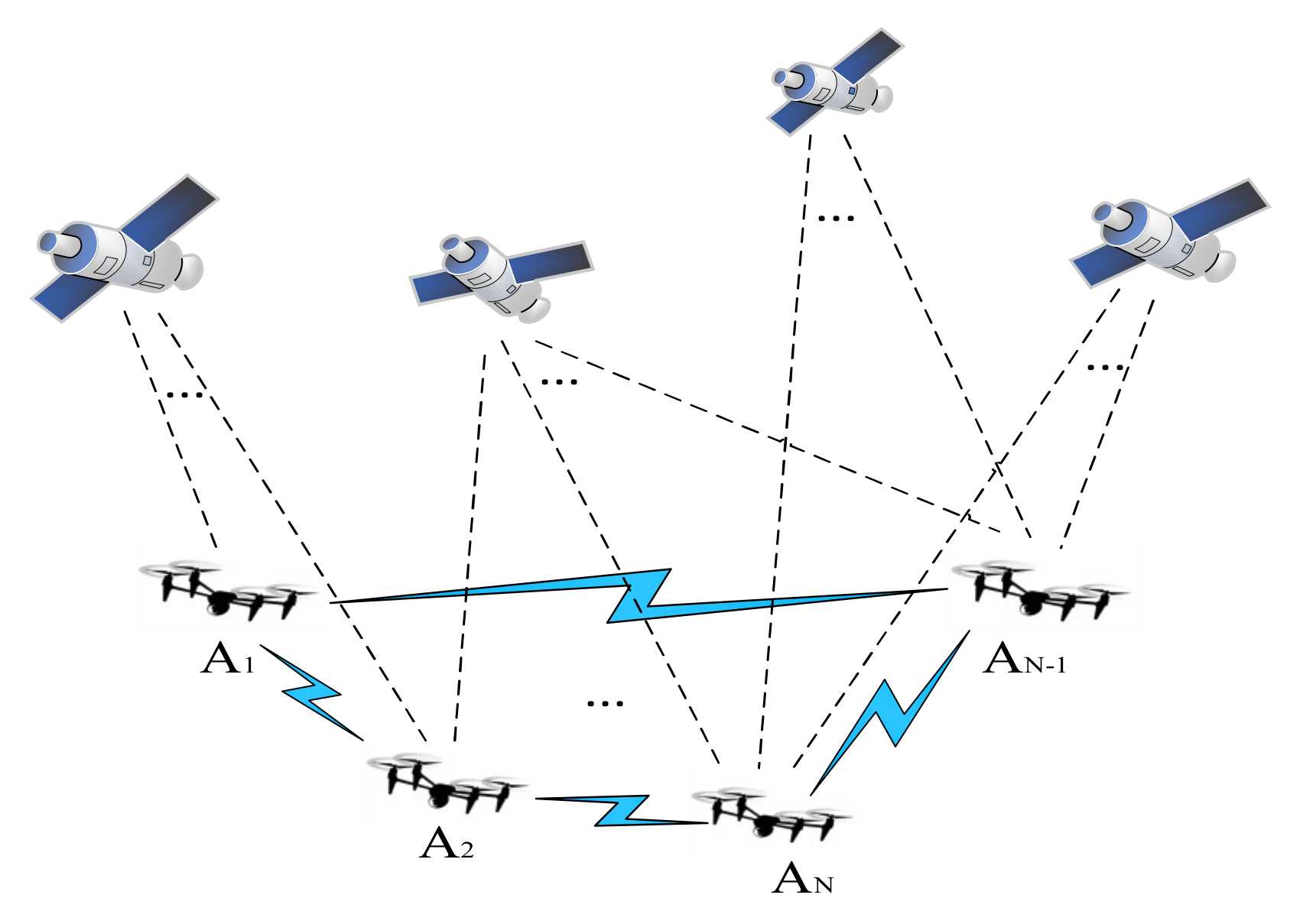

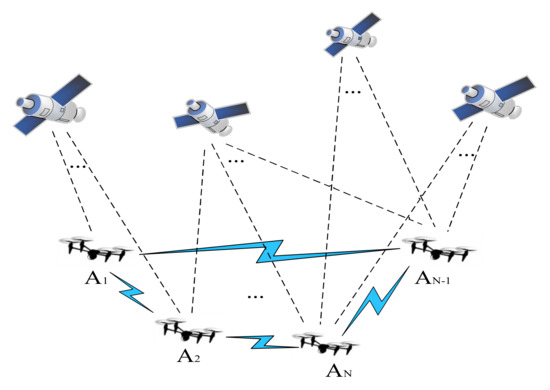

Due to the limited transportation weight and quantity of a single UAV, distributed UAV clusters can greatly improve transportation efficiency and reduce costs, but the mutual distance and control between clusters require high-precision, real-time positioning information support. The cooperative positioning network of the UAV cluster studied in this paper is shown in Figure 1.

Figure 1.

Cooperative positioning network of a UAV cluster.

In Figure 1, A1, A2, AN-1, and AN all represent UAVs, while AN represents the n-th UAV. UAV positioning can be realized by a base station or cluster UAV. In the figure, the blue link represents the ranging communication link between UAVs. Meanwhile, the UAV is equipped with a satellite receiver and inertial measurement unit (IMU), which can receive satellite navigation signals and information such as speed and acceleration.

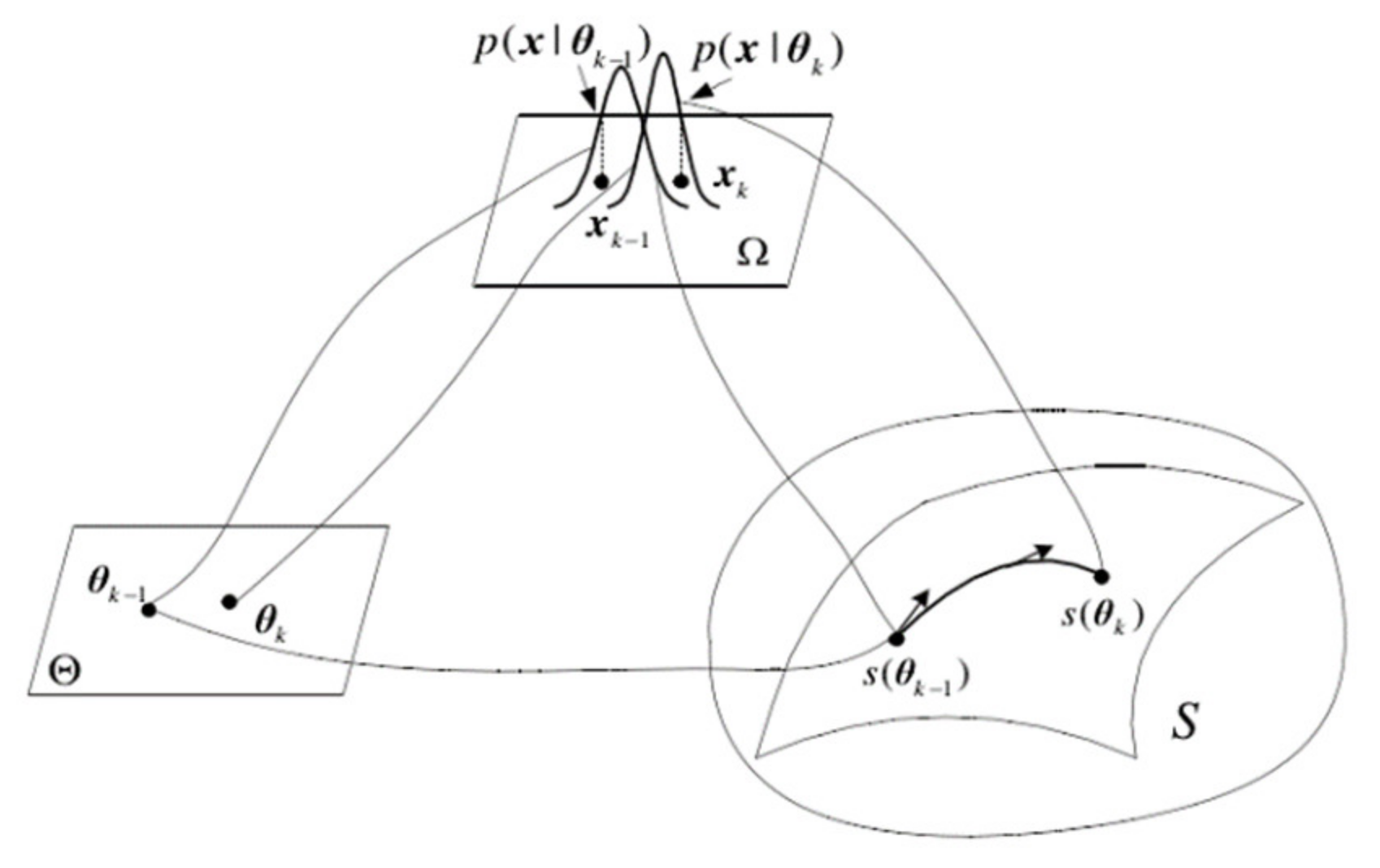

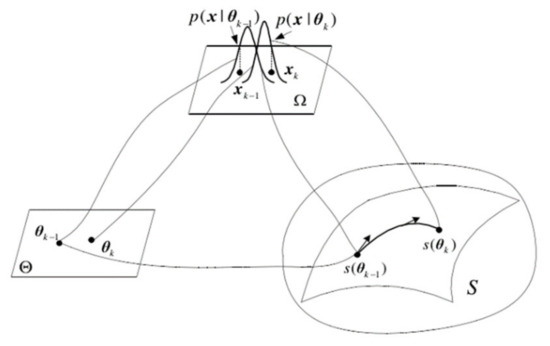

The essence of information geometry is to study the intrinsic geometric properties of probability distribution manifolds. The basic problems of probability theory and information theory are geometrized by using the differential geometry method. Different types of probability distribution function families have corresponding statistical manifolds with certain structures. A statistical manifold can be understood as a surface in the parameter space of the probability density function. Every point on it corresponds to a specific probability distribution, and the coordinates of points are related to the parameters of the probability distribution. The form of each probability distribution function determines the relationship between its neighboring probability distribution function and the spatial structure it constitutes. The relationship between the probability distribution and statistical manifold is shown in Figure 2. In Figure 2, is the sample space of navigation source information, its probability density function is , is the parameter vector of , is the vector space of parameter , and is the statistical manifold with the parameter as the coordinate.

Figure 2.

Schematic diagram of the statistical manifold.

In the multisource fusion scenario, combined with information geometry theory, the probability distribution functions of multiple UAV navigation information sources can be mapped to the Riemannian space as a family of functions to form a statistical manifold, and each point on the statistical manifold represents a probability distribution function. The multisource fusion algorithm based on information geometry theory can process heterogeneous data with better real-time performance, fault tolerance, and positioning accuracy.

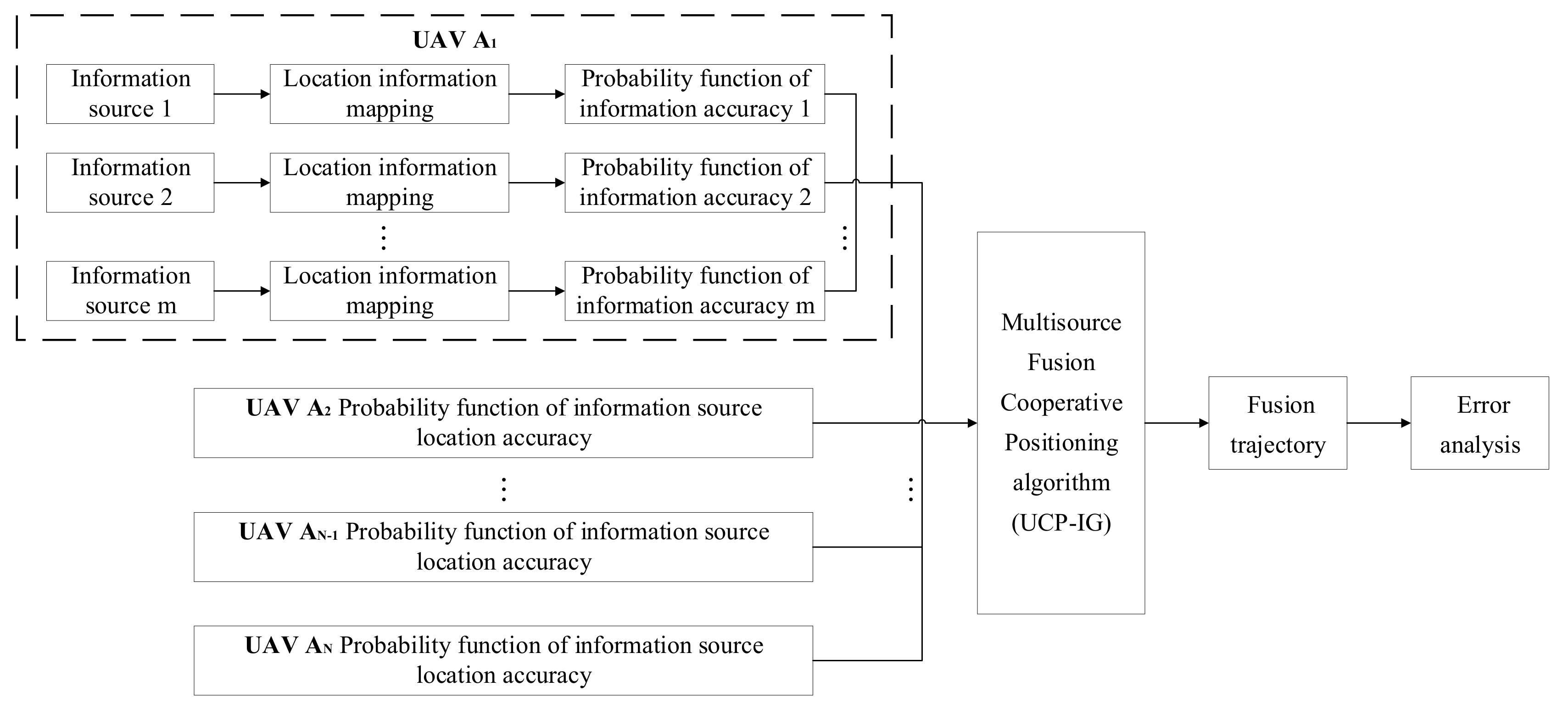

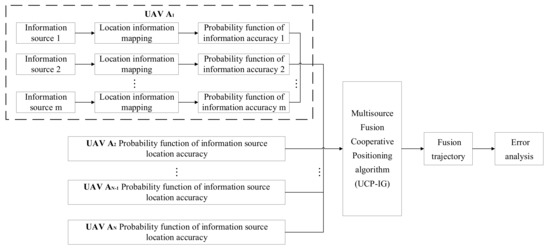

The information fusion of a target UAV includes two parts: the target UAV’s own information fusion and the cooperative information fusion from its neighboring UAVs. Each UAV merges the location information of its own multiple navigation sources, and then the target UAV iterates the information fusion through the location information provided by the neighbor UAVs and combines the ranging information to reduce the error. Figure 3 shows the multisource fusion process based on information geometry. Assume that UAV A1 is the target UAV that needs to obtain positioning information. First, each information source of UAV A1 is processed, a statistical manifold is established, the navigation information is mapped to the manifold, and the probability density function of each information source is obtained. Then, combined with the probability density function of location information provided by each neighboring UAV, the multisource fusion algorithm based on information geometry replaces the geodesic distance of the correlation matrix of the two probability density functions on the manifold by K–L divergence, obtains the lower bound of the fused objective function, and the real-time positioning result of target UAV A1 is finally obtained.

Figure 3.

Flow chart of UAV cluster multisource fusion based on information geometry.

4. Multisource Fusion Cooperative Positioning Algorithm

Distributed navigation sources on UAV clusters contain navigation sources, whose observation data . At the -th navigation source, the probability density of is , and the correlation between navigation sources is unknown. The ultimate goal is to fuse all of these probability densities into a single probability density, denoted as . For the convenience of the subsequent derivation process, is denoted as , and is denoted as .

Assume that all in , belong to the same probability distribution family, . Generally, an optimization criterion is needed to select the best point on . In the framework of information geometry, the distribution family is regarded as a Riemannian manifold, which is equipped with a Fisher information matrix [34]. Under this condition, the information-related geodesic distance can be obtained, and the information fusion theory criterion can be further obtained:

It is observed that the objective function in (1) is the sum of squared geodesic distances between a probability density and all locally known probability densities. However, as the information geometric analysis of statistical manifold is far from being fully studied, there is not much work to be done on (1). Even on many common statistical manifolds, a display expression for geodesic distance cannot be obtained. Therefore, attention needs to be focused on the family of Gaussian distributions. Although there is no definite form of geodesic distance between any two multivariate Gaussian distributions, the Gaussian family of distributions is one of the most studied manifold structures among various probability distribution families.

This paper assumes that the observed data probability density follows a Gaussian distribution, namely, , where and are their respective mean and covariance matrices. Similarly, the probability density function after the fusion is defined as . Under an assumption of a Gaussian distribution, the normal distribution with mean and variance is expressed as ; then, the information fusion criterion (2) becomes:

Due to the lack of an exact expression of the objective function (2), directly solving the optimization problem is too complicated for the real-time requirements of UAV cluster positioning. From the perspective of information geometry, (2) has been transformed into a geodesic distance problem on a statistical manifold. However, the length of the geodesic is not easy to calculate, so divergence can be introduced as a distance function to measure the difference between two points on a manifold, where Kullback–Leibler divergence (KLD) is often used to measure the difference between two points on a statistical manifold [35], providing a lower limit for the minimum of the objective function in (2). The minimum value was solved by Kullback–Leibler minimization (KLM) to achieve UAV cluster fusion location optimization. KLD is defined as:

where represents the expectation according to the distribution . KLD has information monotony; that is, the divergence value will increase with the increase in the information difference between the two matrices, and vice versa. KLD can therefore be thought of as a distance on a manifold. However, it should be pointed out that the length of geodesic represents the shortest distance between two points on a manifold, and has good properties of distance measure, that is, symmetry, non-negativity, and triangle inequality. KLD does not satisfy symmetry and triangle inequality. Therefore, average KLD can be considered to satisfy the symmetry of distance measurement:

Consider that follows a complex Gaussian vector distribution, , whose mean is 0 and correlation matrix is , and the expression is:

where represents the determinant of the matrix. Consider the probability distribution family as parameterized by the correlation matrix , where is the -dimensional Hermitian positive definite matrix space. According to the theory of information geometry, can form a manifold with as its natural coordinate under certain topological and differential structures. Because coordinate of manifold is a correlation matrix, it can also be called a matrix manifold.

The zero-mean Gaussian vector distribution belongs to the exponential distribution family, which has a dual structure; that is, the manifold has two coordinate systems that are mutually dual and can be transformed into each other by the Legendre transformation of the potential function. The exponential distribution family has the following form [36]:

where is a polynomial about , is a sufficient statistic of natural parameter , is called the potential function of the distribution, and the potential function of the zero-mean complex Gaussian distribution, , is [37]:

Suppose that the dual coordinate system of the natural coordinate is , and the potential function of the manifold in the dual coordinate is ; then, the natural coordinate and the dual coordinate have the following Legendre transformation relationship [37]:

where represents the gradient operator. Let and be any two points on the manifold , and their dual coordinates are and , respectively. Then, the Bregman divergence from and is defined as [37]:

Substituting (8) into (9), the Bregman divergence can be expressed only by natural coordinates as:

Then, according to (7), substituting the potential function of the zero-mean complex Gaussian distribution, the Kullback–Leibler divergence (KLD) from to on the manifold is [38]:

where represents the trace of the matrix.

For Gaussian distributions , if they are merged into a probability density, the problem is reduced to finding , so that the objective function obtains the minimum value:

Here, the distance is the geodesic distance between the probability densities. However, there is no direct, explicit expression for this distance, so the optimization result of (12) cannot be obtained directly. K–L divergence is used instead in this paper, and so (12) is approximated by dividing it into two steps to solve the minimization problem of the objective function.

The first step: covariance matrices and of the Gaussian distribution are fused, so the problem becomes one of obtaining an R that satisfies the minimum value of the objective function (13):

The second step: After determining the minimum covariance matrix of the objective function, the fusion result of the mean value can be obtained through (14):

where is the weighting factor. Calculating the optimal weight factor requires repeated iterations, and the amount of calculation is very large. To avoid such a large amount of calculation, this algorithm uses the noniterative fast covariance intersection (FCI). The following discusses the value of the fusion weighting factor when and .

When , the noniterative fusion weights and must satisfy . The linear constraint can be expressed as:

Thus, we obtain (16):

As long as is established, the unique solution of (16) can be obtained:

When , the noniterative fusion weight satisfies , and the linear constraint can be generalized to:

Let ; then, (19) can be obtained:

The analytical expression of the fusion weights can be derived from (19), as is shown in (20):

To obtain the solution to from (13), the gradient descent method is used to iteratively solve the solution of the objective function, where the initial iterative value of is:

By the matrix derivative formula and the matrix derivative chain rule:

Taking the derivative of (13), we can obtain:

Therefore:

is the iteration step size.

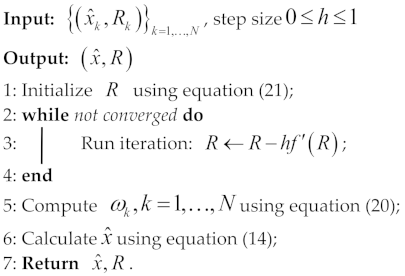

The entire process of the UCP-IG method based on matrix K–L divergence square mean minimization is shown in Algorithm 1.

| Algorithm 1: UCP-IG Fusion Algorithm |

|

5. Simulation Results and Analysis

In this section, we take four mobile UAVs as examples and apply the multisource fusion cooperative positioning algorithm to the cooperative positioning of UAV clusters in different experimental environments. The experiment consists of three scenes. The first scene is a comprehensive scene in which each UAV flies freely. In the second scenario, the UAV cluster flies randomly in the experimental site and sends back experimental data in real time through the GUI interface. The third scene is the occlusion scene. In the moving process of the UAV cluster, some UAV navigation source signals will be lost in flight due to occlusion by building groups.

5.1. Experimental Environment

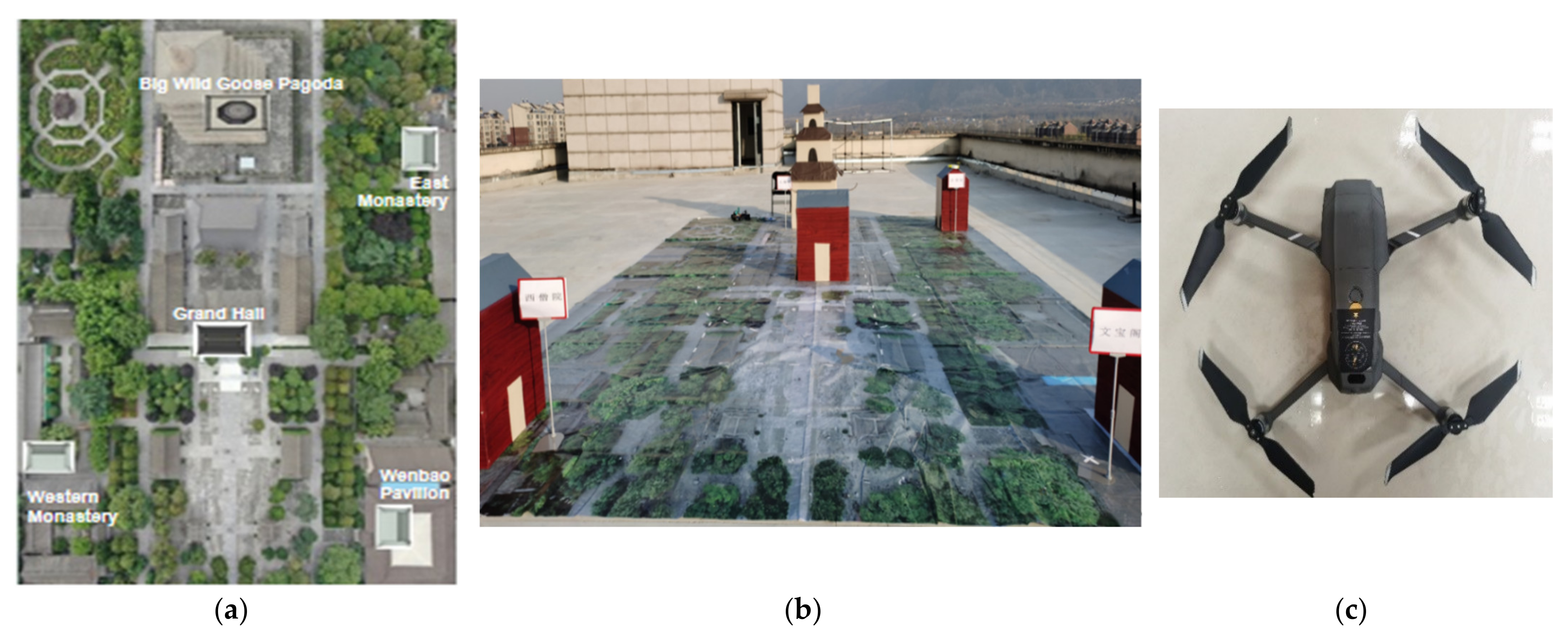

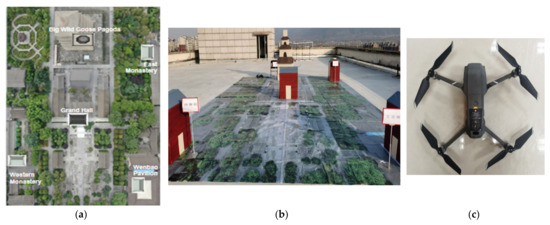

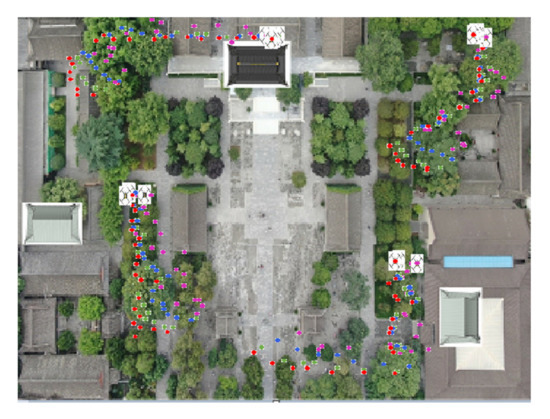

The experimental site selects some buildings of Xi’an’s classic landmarks, the Big Wild Goose Pagoda and the Da Ci’en Temple, and builds them under the guidance of actual aerial photos, as shown in Figure 4a. In this paper, the aerial photos of the scenic spot are scaled down and painted, and the painting specifications are long and wide. The landmark buildings in the figure are simulated by using cardboard boxes and placed in corresponding positions, respectively. The established test positioning site is shown in Figure 4b.

Figure 4.

(a) Aerial photo of the local pagoda. (b) Experimental environment. (c) UAV used in experiments.

5.2. Comprehensive Scenario

The experimental conditions are as follows: there are four UAVs throughout the measured experimental environment, which are equipped with satellite navigation receivers, IMUs, and communication equipment. The measured noise is considered Gaussian noise, and all UAVs can distance and communicate with other UAVs. A commercial IMU inertial measurement module, model LPMS-IG1, was used in the simulation. The zero stability is 4 degrees/h, the resolution is 0.01 degrees, and the sampling rate is 100 Hz. Table 1 lists the remaining simulation parameters.

Table 1.

Parameter settings.

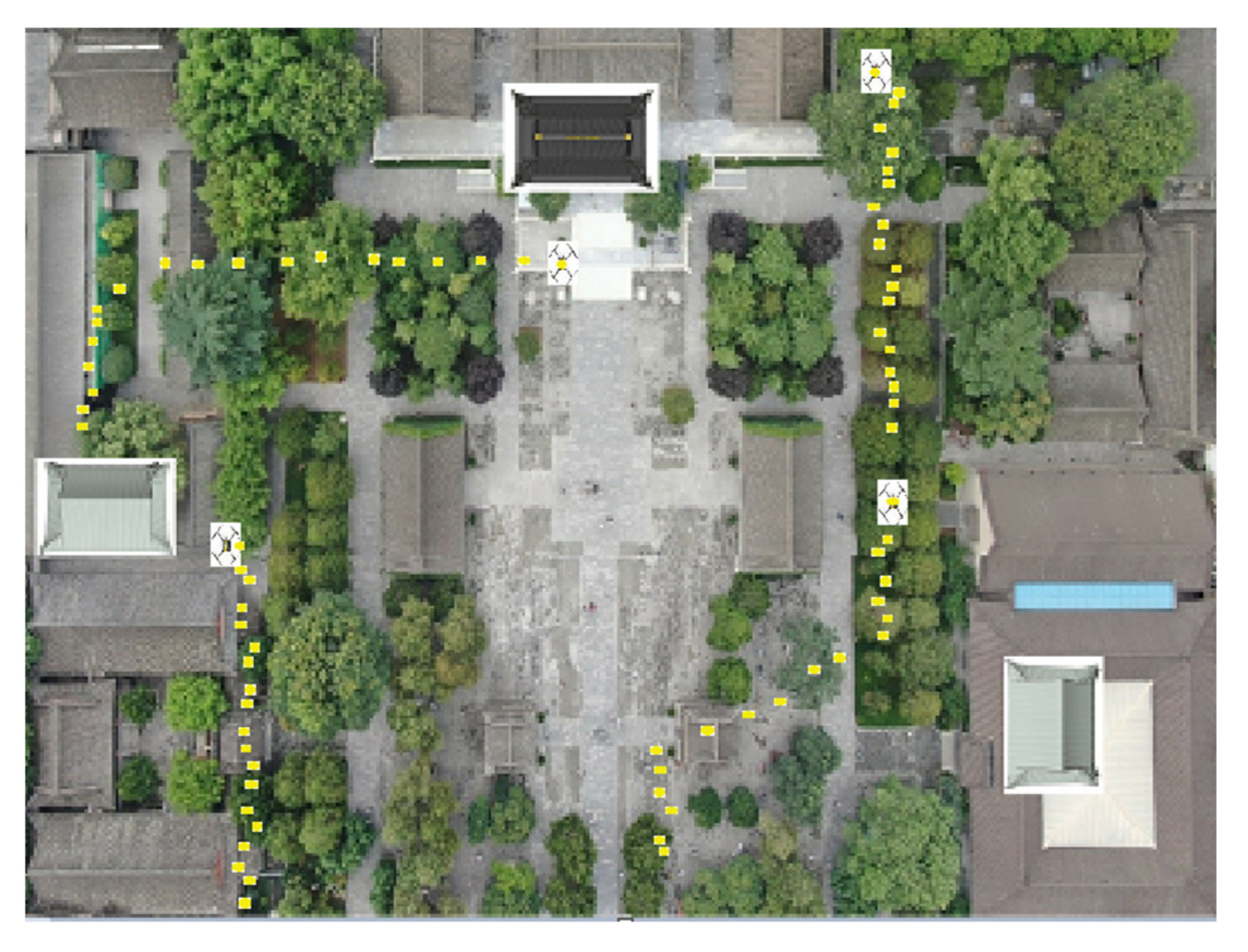

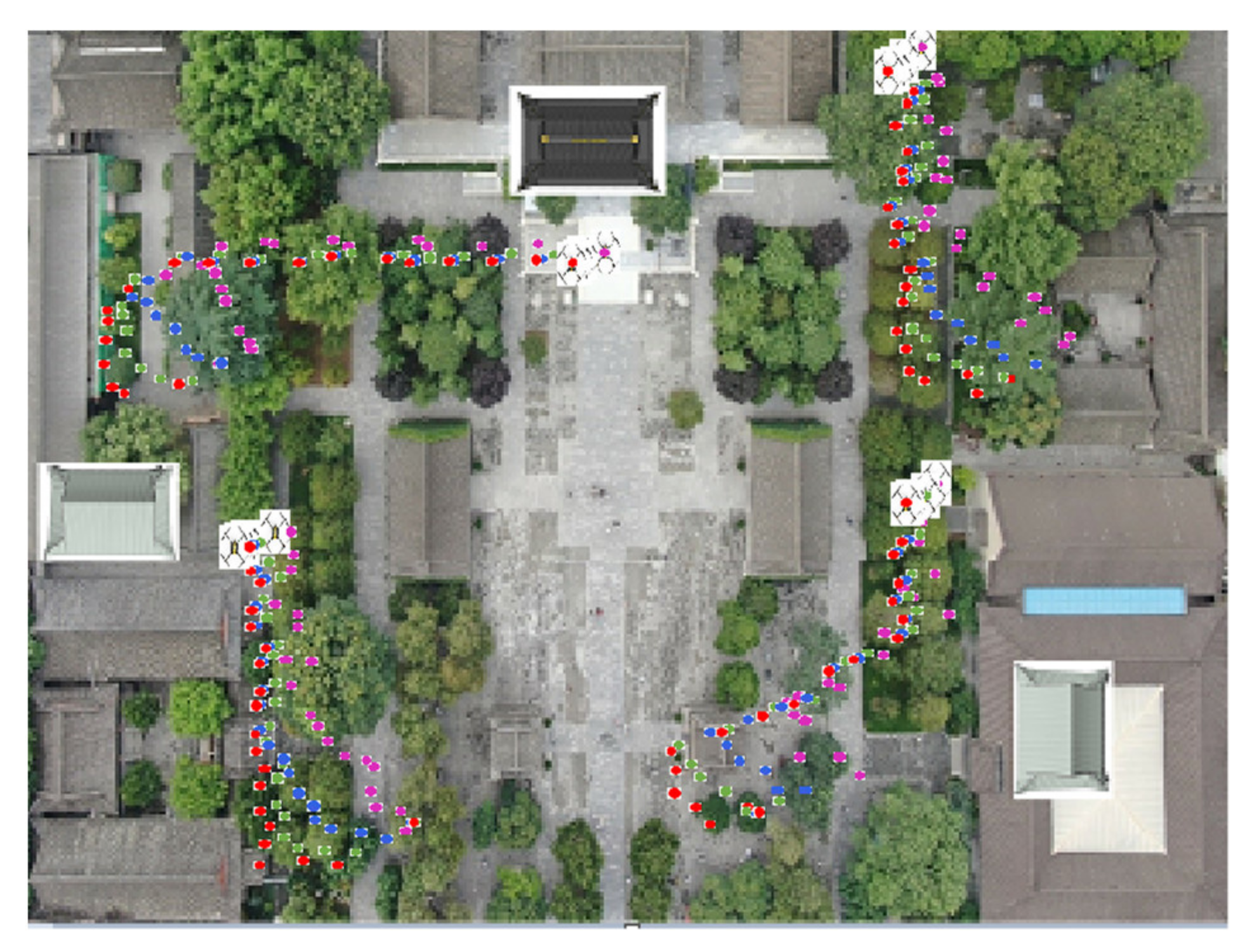

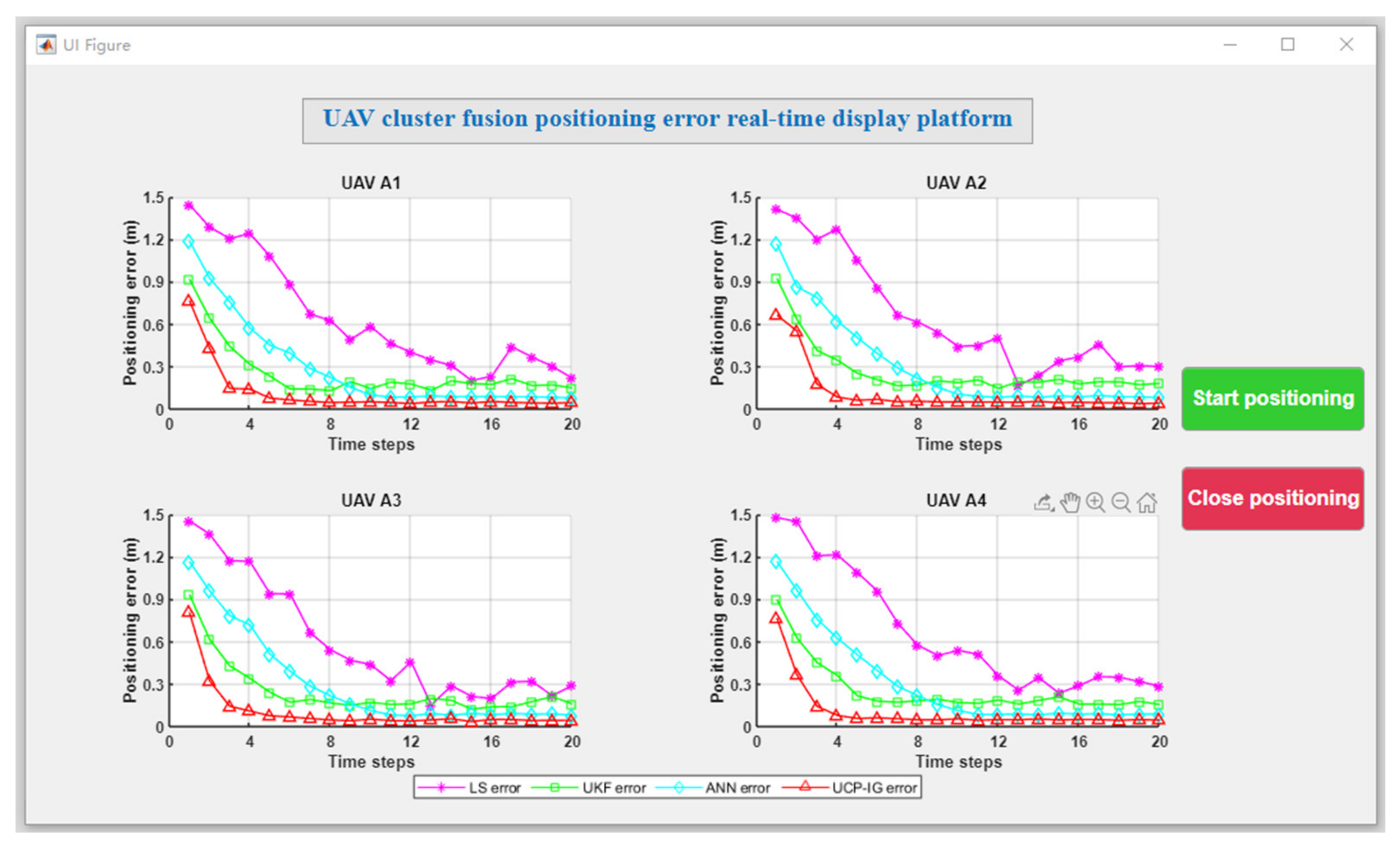

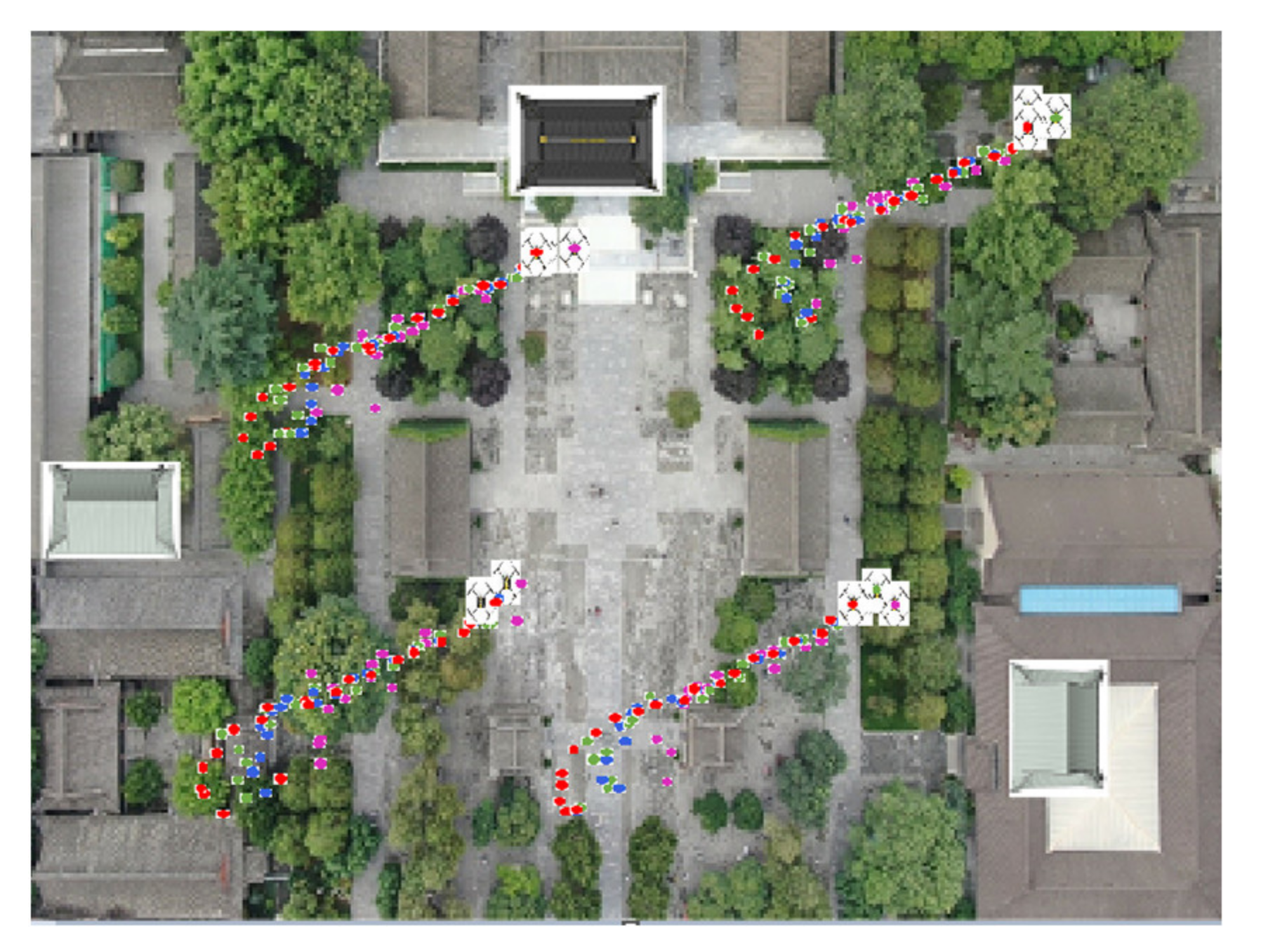

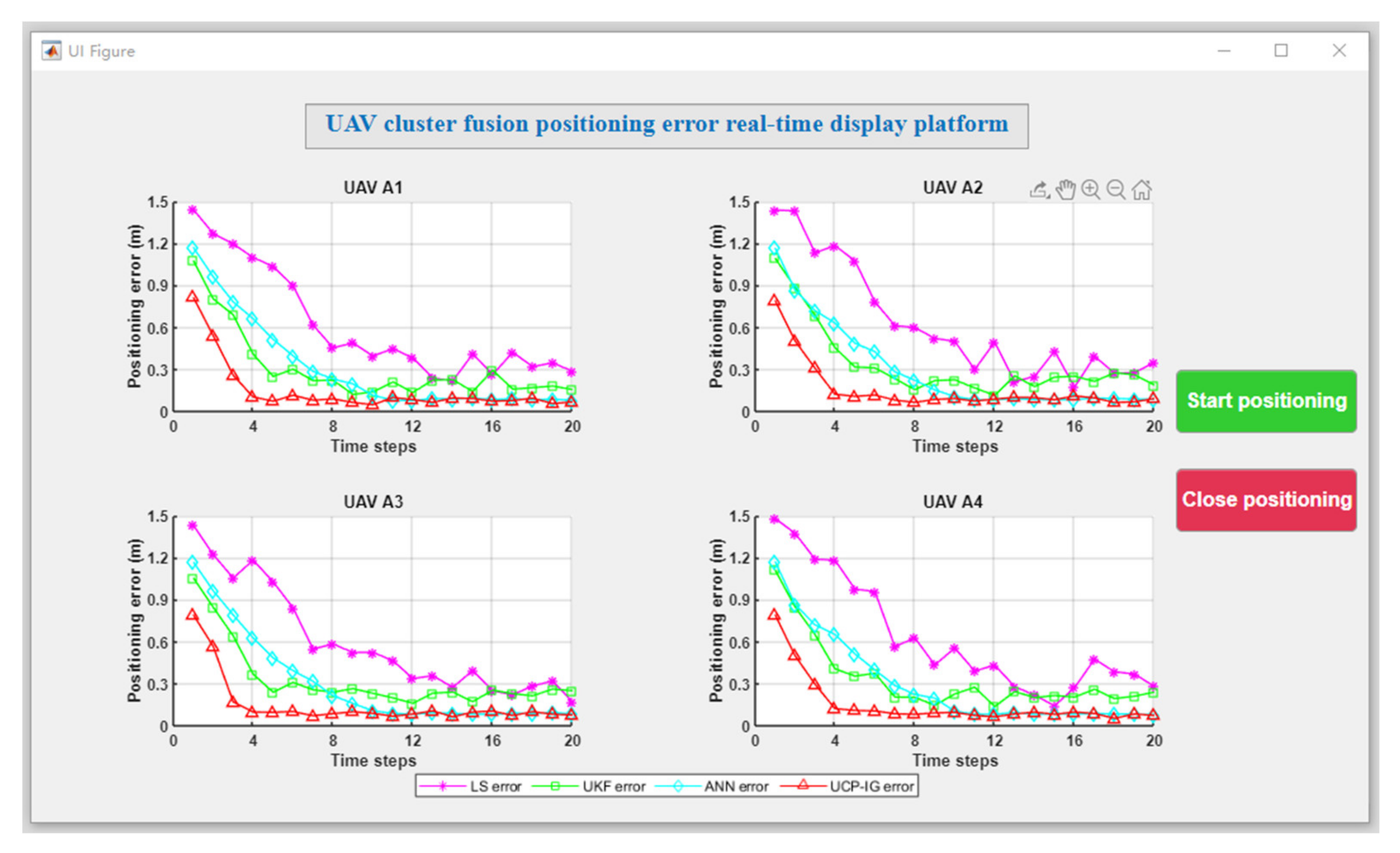

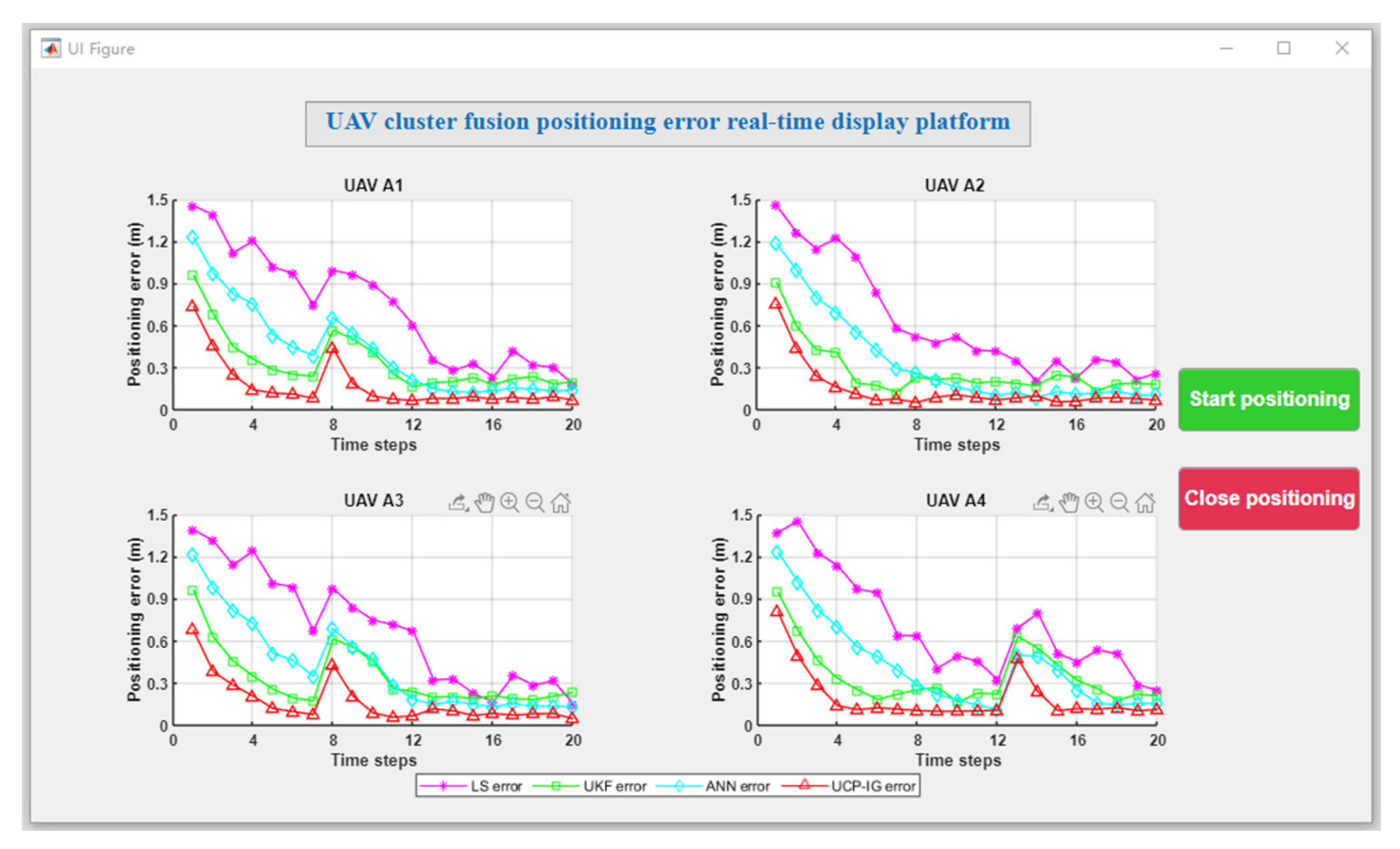

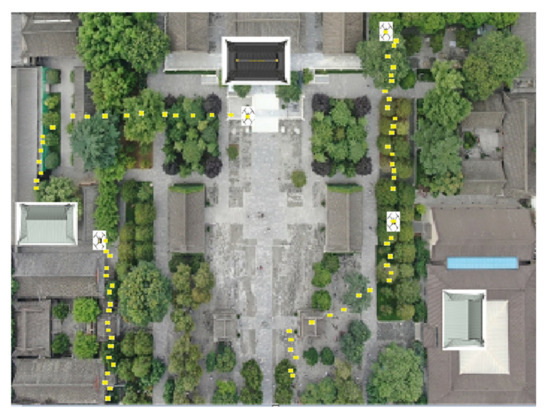

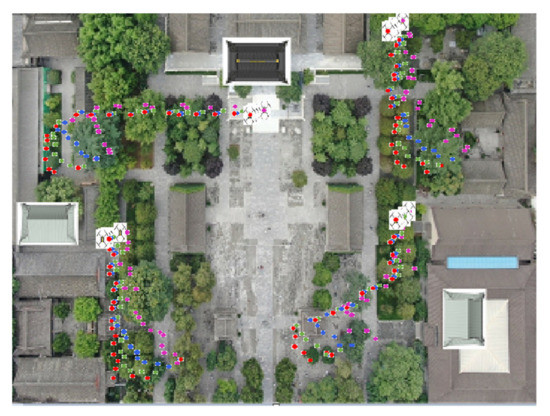

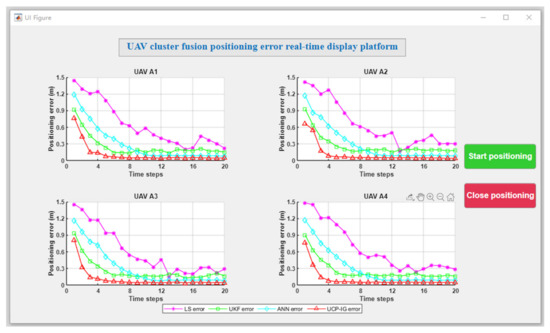

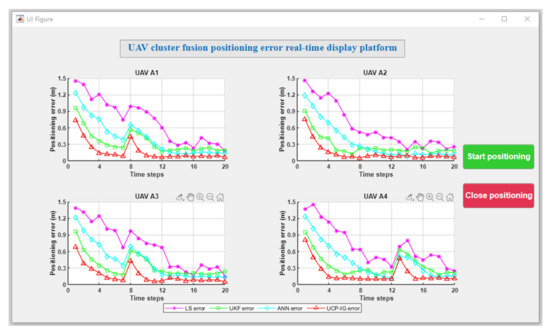

Based on the above simulation conditions, the UCP-IG method is compared with the LS method proposed in [25], the UKF method proposed in [22], and the neural network method proposed in [28] in comprehensive scenarios. The initial positions of each UAV in the UAV group were: UAV A1 (0.97 m, 0.02 m, 0.22 m), UAV A2 (4.29 m, 4.76 m, 0.26 m), UAV A3 (0.22 m, 4.77 m, 0.27 m), and UAV A4 (3.14 m, 0.49 m, 0.29 m). Each UAV makes random motions from the initial position, and the specific motion trajectories of the four UAVs are shown in Figure 5. After 10,000 Monte Carlo simulations, the UAV motion positioning results of four algorithms are shown in Figure 6, where the purple motion trajectory represents the LS method positioning result. The green motion trajectory represents the UKF method positioning result, and the blue motion trajectory represents the ANN method. The red motion track represents the positioning result of the UCP-IG method. The corresponding positioning error fluctuations of the four algorithms are shown in the GUI error display platform in Figure 7.

Figure 5.

UAV trajectory (comprehensive scenario).

Figure 6.

LS, UKF, ANN, UCP-IG method positioning trajectory (comprehensive scenario).

Figure 7.

GUI positioning error display platform (comprehensive scenario).

In Figure 6, various UAV swarm positioning methods can achieve fusion and ensure that the UAV swarm positioning is in a stable state. In order to further compare the positioning error, the fusion positioning error of each UAV at each moment is compared with the actual position, and the results are shown in Figure 7. It can be seen from Figure 7 that the error of the LS method after the convergence is between 0.21 and 0.43 m, and the error jitter is obvious; the error fluctuation of the UKF method after convergence is between 0.13 and 0.18 m, and the error jitter is higher than that of the ANN method. The error fluctuation of the ANN method is about 0.07 m, and the error jitter is small. It shows that the multi-layer neurons of the ANN method can effectively suppress the error jitter of the UAV cluster. The UCP-IG method proposed in this paper achieves the shortest convergence time, and the error jitter range is about 0.05 m, which is better than the single navigation source and other UAV positioning methods. It shows that the UCP-IG method can effectively reduce the jitter error of each navigation source by converting all kinds of navigation source information into information geometric probability for fusion. At the same time, the positioning accuracy of the UAV cluster is improved. Table 2 lists the real height data and UCP-IG positioning height data of each UAV in this scenario. It can be seen that the data are very close, and the height error fluctuates at the centimeter level.

Table 2.

Part of the experimental data (comprehensive scenario).

In order to quantitatively analyze the positioning errors of UAV A1, UAV A2, UAV A3, and UAV A4, the average errors are statistically analyzed, and the results are shown in Table 3. It can be seen from the results in Table 3 that the average error of the LS method, UKF method, and ANN method is greater than that of the UCP-IG method, indicating that the UCP-IG method can effectively improve the stability of UAV clusters.

Table 3.

Average positioning errors (comprehensive scenario).

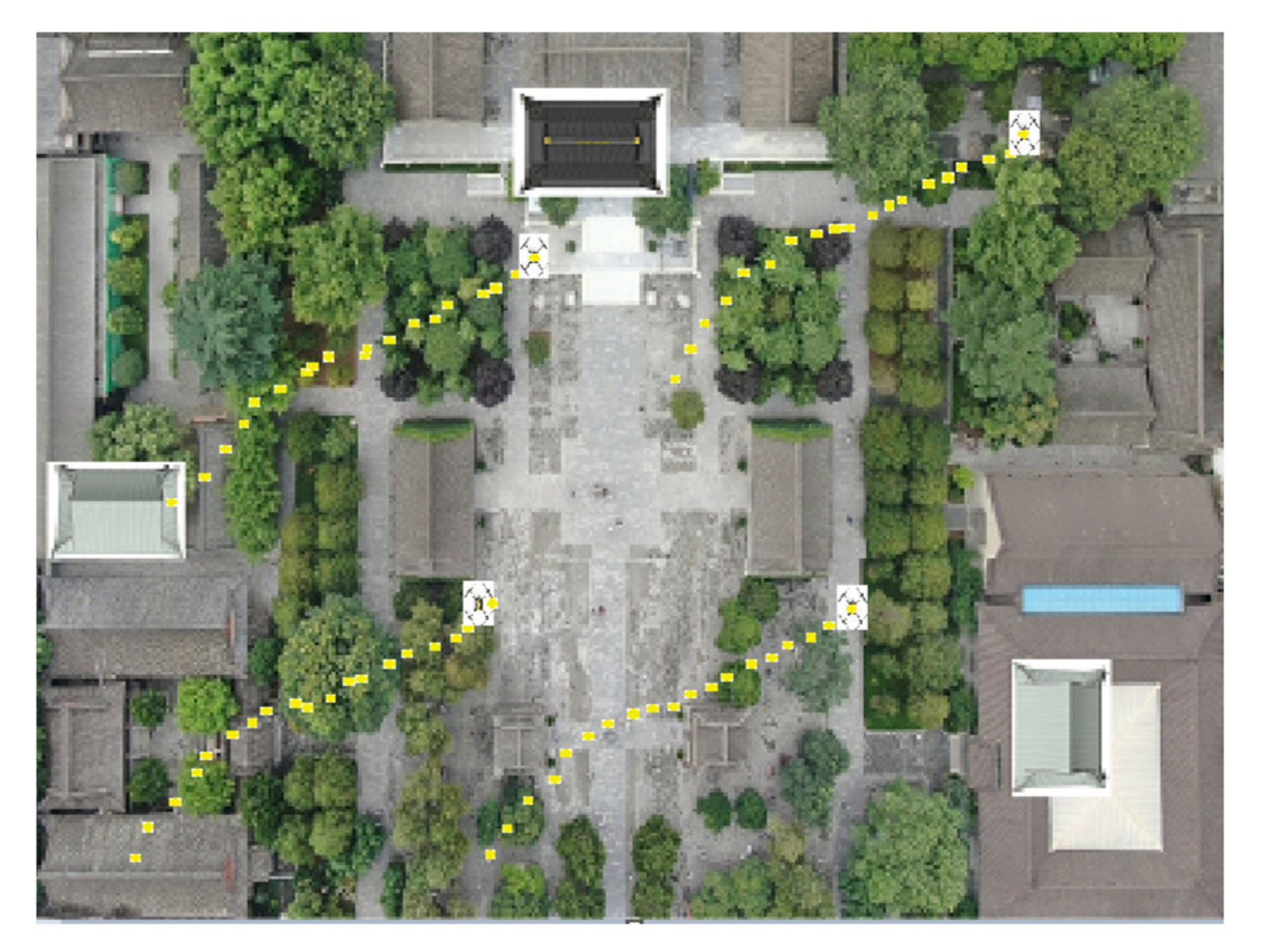

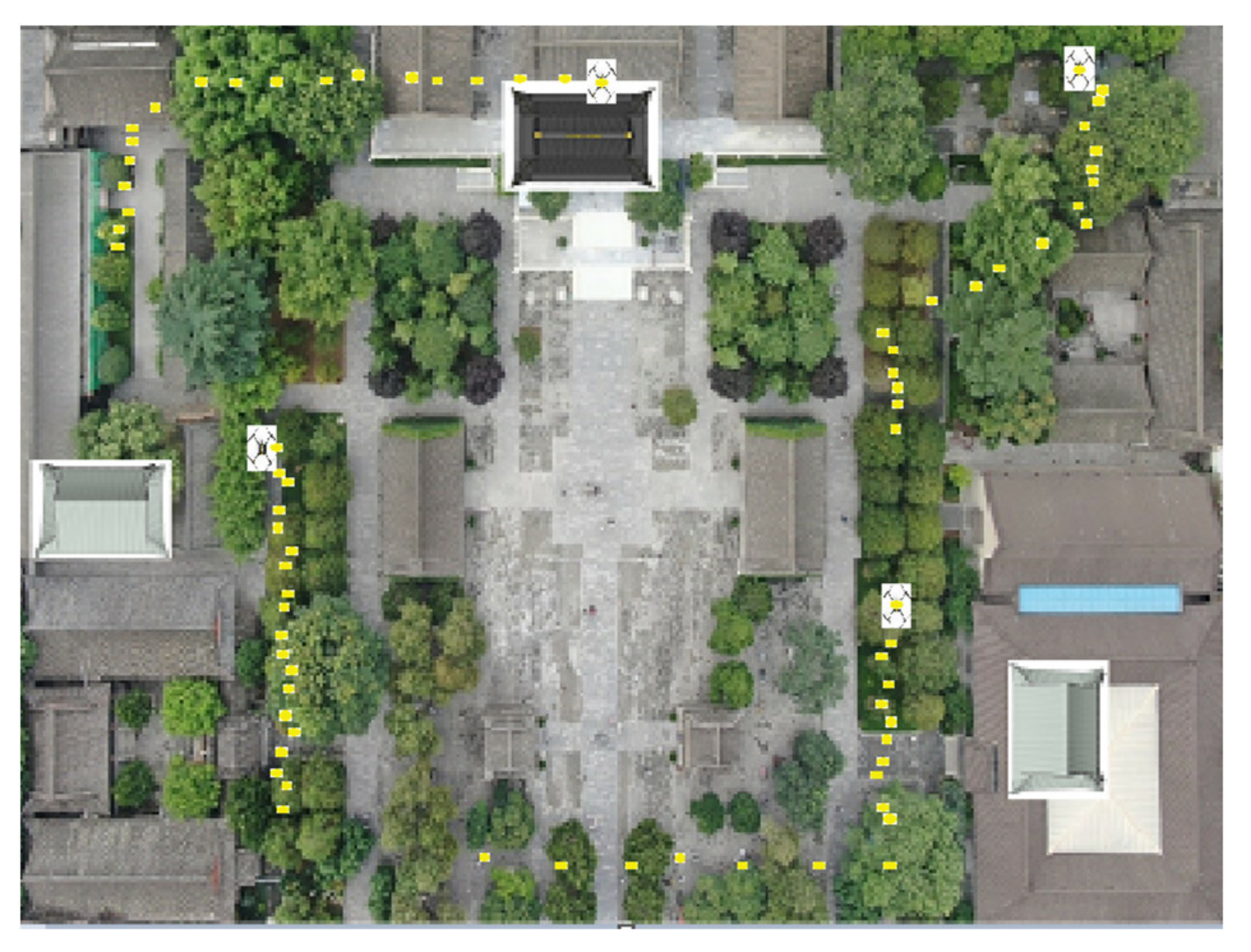

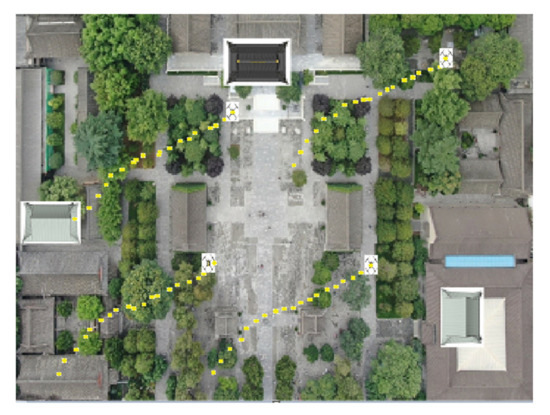

5.3. Experimental Random Scenario

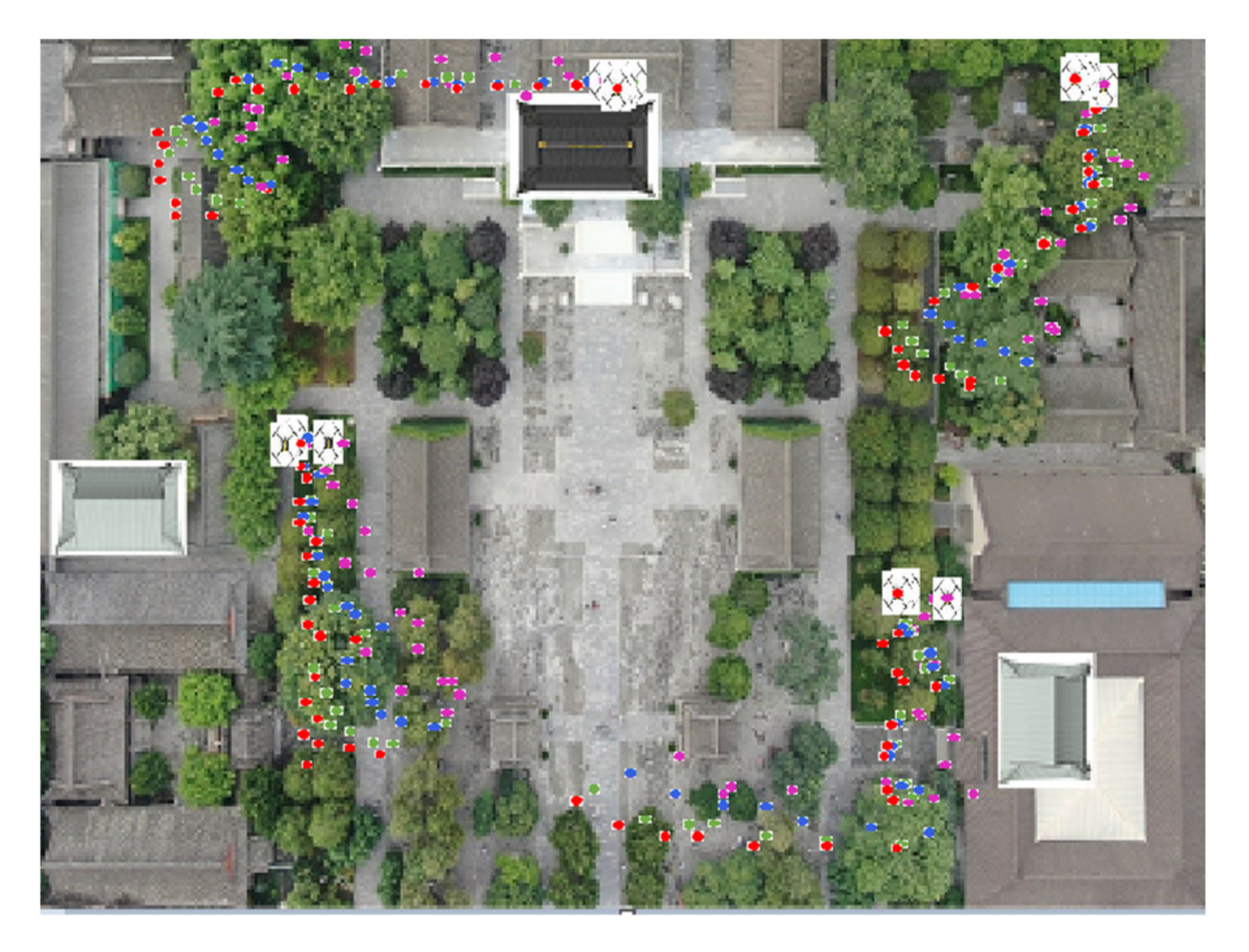

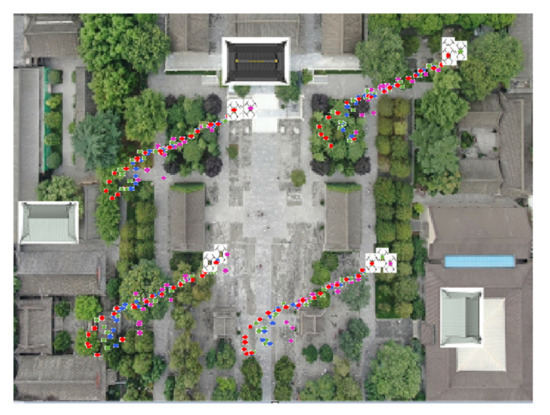

In order to make the experimental results universal, the verification was carried out in the actual experimental scene. The initial positions of each UAV of the UAV group were: UAV A1 (0.36 m, 0.46 m, 0.30 m), UAV A2 (3.16 m, 5.28 m, 0.35 m), UAV A3 (0.62 m, 4.04 m, 0.34 m), and UAV A4 (2.22 m, 0.49 m, 0.32 m). Each UAV makes random motions from the initial position, and the specific motion trajectories of the four UAVs are shown in Figure 8. The LS, UKF, ANN, and UCP-IG methods are compared respectively, and the UCP-IG motion localization results of the four algorithms are shown in Figure 9, where the purple motion trajectory represents the LS method’s localization result, the green motion trajectory represents the UKF method’s localization result, and the blue motion trajectory represents the UKF method’s localization result. The color motion trajectories represent the localization results of the ANN method, and the red motion trajectories represent the localization results of the UCP-IG method. The corresponding positioning error fluctuations of the four algorithms are shown in the GUI error display platform in Figure 10.

Figure 8.

UAV trajectory (experimental random scenario).

Figure 9.

LS, UKF, ANN, UCP-IG method positioning trajectory (experimental random scenario).

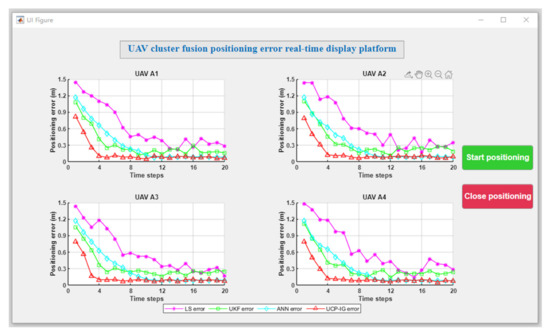

Figure 10.

GUI positioning error display platform (experimental random scenario).

From Figure 9 and Figure 10, it can be seen that the LS method, UKF method, ANN method, and UCP-IG method can all achieve convergence, where the LS method has a slight increase in the average value of the localization error fluctuation in random scenes, which is due to random motion In the LS method, when the direction and position are changed, the position of the previous moment has an impact on the next moment; the UKF method, ANN method and UCP-IG method have the average value of the fluctuation of the positioning error in the random experimental scene and the basic value in the simulation scene. Similarly, the positioning error of the UCP-IG method fluctuates around 0.08 m.

For the convergence time of gradient descent, the proposed cooperative localization method essentially realizes the interaction of localization information with the surrounding nodes. The information of the surrounding nodes is used to locate the whole UAV cluster. The gradient descent method brings some computational delay, but the number of cluster nodes involved in the location of a single UAV is limited. Therefore, the impact on the overall computational complexity delay is small, which can also be seen in Figure 10.

Table 4 shows the real height data and UCP-IG positioning height data of each UAV in the random motion experiment scenario, and the height error fluctuation is also at the centimeter level. In order to further compare the performance of each algorithm, the average error of each algorithm is compared, as shown in Table 5.

Table 4.

Part of the experimental data (experimental random scenario).

Table 5.

Average positioning errors (experimental random scenario).

As can be seen from Table 5, the average error of the LS method fluctuates between 0.60 and 0.65 m in the measured scene. The average error of the UKF method fluctuates around 0.33 m. The ANN method is better and fluctuates about 0.29 m. The UCP-IG method is the best and fluctuates around 0.14 m. The UCP-IG method proposed in this paper can transform all types of navigation sources into information probability models, which is beneficial to the suppression of navigation information errors. The corresponding KL fusion method can realize the fast fusion of the distributed navigation source information of UAV clusters.

5.4. Experimental Occlusion Scenario

In the process of UAV movement, satellite navigation sources may lose signal or offset error due to the occlusion of buildings and environmental interference. In this section, the initial positions of the UAVs were set as follows: UAV A1 (1.17 m, 0.98 m, 0.22 m), UAV A2 (4.29 m, 4.75 m, 0.26 m), UAV A3 (0.42 m, 6.56 m, 0.27 m), and UAV A4 (2.24 m, 0.50 m, 0.30 m). The movement trajectory of the four UAVs is shown in Figure 11. In this occlusion scenario, satellite signal loss or navigation signal deviation occurs due to occlusion. Among them, UAV A1 and UAV A3 lost satellite signal in unit time 6–10, and UAV A4 had navigation signal deviation in unit time 11–16. The motion positioning results of the UAVs with four algorithms are shown in Figure 12, in which the purple trajectory represents the positioning results of the LS method, the green trajectory represents the positioning results of the UKF method, the blue trajectory represents the positioning results of the ANN method, and the red trajectory represents the positioning results of the UCP-IG method. The corresponding positioning error fluctuation of the four algorithms is shown in Figure 13 on the GUI error display platform.

Figure 11.

UAV trajectory (experimental occlusion scenario).

Figure 12.

LS, UKF, ANN, UCP-IG method positioning trajectory (experimental occlusion scenario).

Figure 13.

GUI positioning error display platform (experimental occlusion scenario).

It can be seen from Figure 12 and Figure 13 that the satellite signal loss of UAV A1 and UAV A3 occurs at the eighth time unit, and the LS method can recover the UAV positioning error by about 0.45 m after six time units. It is because LS suppresses the error caused by the lost signal by means of attenuation. The UKF method can recover the UAV positioning error to about 0.19 m after four time units. The ANN method can restore the UAV positioning error to the normal range of 0.08 m after five time units, indicating that although the ANN method can well integrate multi-type navigation source information, when the navigation source information is lost, it cannot quickly eliminate the impact of the loss. The UCP-IG method proposed in this paper can restore the UCP-IG positioning error to about 0.07 m within two time units, which is superior to other UCP positioning methods and single navigation source accuracy. When the navigation signal of UAV A4 deviates at the 12th time unit, the time positioning error of LS is restored to about 0.48 m after six time units, and the positioning error of UKF is restored to about 0.19 m after four time units. The time positioning error of five time units is restored to about 0.08 m, and the time positioning error of the UCP-IG method is restored to about 0.07 m after two time units. There are various types of navigation sources on the man–machine, and the fusion speed is the fastest, which can effectively suppress the influence of the loss of navigation sources and interference errors. In addition, Table 6 lists the real height data and UCP-IG positioning height data of each UAV in the random motion experiment scenario. Even if there is signal occlusion, the height error fluctuation after fusion remains at the centimeter level.

Table 6.

Part of the experimental data (experimental random scenario).

6. Conclusions

Aiming to address the problems of UAV cluster jittering, the small number of navigation sources, and the high real-time demand, this paper proposes a multisource fusion UAV cluster cooperative positioning algorithm. The method creatively uses the correlation between UAV navigation source information probability and positioning accuracy and converts different types of navigation source information into probability functions to realize fast fusion. To analyze the results achieved by the UCP-IG method proposed in this paper, a comparison was performed with the current mainstream UAV cluster positioning technologies; namely, the proposed method was compared with the LS and ANN methods in ideal, occlusion, and random scenarios. The results show that the UCP-IG method can make full use of the distributed navigation source information of different types carried by the UAV cluster to realize fusion and effectively improve the positioning accuracy and stability of UAV clusters.

Author Contributions

Conceptualization, C.T. and Y.W.; methodology, C.T.; software, Y.W.; validation, C.T., L.Z. and Y.Z.; formal analysis, L.Z.; investigation, C.T.; resources, L.Z.; data curation, Y.W.; writing—original draft preparation, Y.W.; writing—review and editing, L.Z.; visualization, L.Z.; supervision, H.S.; project administration, H.S.; funding acquisition, C.T. and L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Natural Science Foundation of China under Grant 62171735, 62271397, 62101458 and 61803310, in part by the Natural Science Basic Research Program of Shaanxi under Grant 2021GY-075, and in part by China Postdoctoral Science Foundation under Grant 2020M673485.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Estrada, M.A.R.; Ndoma, A. The uses of unmanned aerial vehicles—UAV’s-(or drones) in social logistic: Natural disasters response and humanitarian relief aid. Procedia Comput. Sci. 2019, 149, 375–383. [Google Scholar] [CrossRef]

- Song, B.D.; Park, K.; Kim, J. Persistent UAV delivery logistics: MILP formulation and efficient heuristic. Comput. Ind. Eng. 2018, 120, 418–428. [Google Scholar] [CrossRef]

- Mo, Y.; Zhang, Z.; Meng, W.; Ma, L.; Wang, Y. A Spatial Division Clustering Method and Low Dimensional Feature Extraction Technique Based Indoor Positioning System. Sensors 2014, 14, 1850–1876. [Google Scholar] [CrossRef]

- Sivakumar, A. UAV swarm coordination and control for establishing wireless. In Proceedings of the 9th International Conference on Autonomous Agents and Multiagent Systems (AAMAS), Toronto, ON, Canada, 10–14 May 2010; pp. 1157–1164. [Google Scholar]

- Gualda, D.; Ureña, J.; García, J.C.; Pérez, M.C.; Díaz, E. Study of cooperative position estimations of mobile robots. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017; pp. 1–7. [Google Scholar] [CrossRef]

- Lin, Q.; Wang, X.; Wang, Y. Cooperative Formation and Obstacle Avoidance Algorithm for Multi-UAV System in 3D Environment. In Proceedings of the 2018 37th Chinese Control Conference (CCC), Wuhan, China, 25–27 July 2018; pp. 6943–6948. [Google Scholar] [CrossRef]

- Zhou, Y.; Wan, J.; Li, Z.; Song, Z. GPS/INS integrated navigation with BP neural network and Kalman filter. In Proceedings of the 2017 IEEE International Conference on Robotics and Biomimetics (ROBIO), Macau, China, 5–8 December 2017; pp. 2515–2520. [Google Scholar] [CrossRef]

- Pulford, G.W. Taxonomy of Multiple Target Tracking Methods. IEE Proc.-Radar Sonar Navig. 2005, 152, 291–304. [Google Scholar] [CrossRef]

- Rajchl, M.; Baxter, J.S.; McLeod, A.J.; Yuan, J.; Qiu, W.; Peters, T.M.; Khan, A.R. Hierarchical max-flow segmentation framework for multi-atlas segmentation with Kohonen self-organizing map based Gaussian mixture modeling. Med. Image Anal. 2016, 27, 45–56. [Google Scholar] [CrossRef] [PubMed]

- Kiani, M.; Pourtakdoust, S.H. Concurrent orbit and attitude estimation using minimum sigma point unscented Kalman filter. Proc. Inst. Mech. Eng. Part G J. Aerosp. Eng. 2014, 228, 801–819. [Google Scholar] [CrossRef]

- Jwo, D.J.; Wang, S.H. Adaptive Fuzzy Strong Tracking Extended Kalman Filtering for GPS Navigation. IEEE Sens. J. 2007, 7, 778–789. [Google Scholar] [CrossRef]

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problem. J. Basic Eng (ASME) 1960, 82, 35–45. [Google Scholar] [CrossRef]

- Arasaratnam, I.; Haykin, S. Cubature Kalman Filters. IEEE Trans. Autom. Control 2009, 54, 1254–1269. [Google Scholar] [CrossRef]

- Patwari, N.; Ash, J.N.; Kyperountas, S.; Hero, A.O.; Moses, R.L.; Correal, N.S. Locating the nodes: Cooperative localization in wireless sensor networks. IEEE Signal Process. Mag. 2005, 22, 54–69. [Google Scholar] [CrossRef]

- Nguyen, T.V.; Jeong, Y.; Shin, H.; Win, M.Z. Least Square Cooperative Localization. IEEE Trans. Veh. Technol. 2015, 64, 1318–1330. [Google Scholar] [CrossRef]

- Panzieri, S.; Pascucci, F.; Sciavicco, L.; Setola, R. Distributed Cooperative Localization. J. Inf. Technol. Res. 2015, 6, 49–67. [Google Scholar] [CrossRef]

- Nguyen, T.L.T.; Septier, F.; Rajaona, H.; Peters, G.W.; Nevat, I.; Delignon, Y. A Bayesian Perspective on Multiple Source Localization in Wireless Sensor Networks. IEEE Trans. Signal Process. 2016, 64, 1684–1699. [Google Scholar] [CrossRef]

- Garello, R.; Samson, J.; Spirito, M.A.; Wymeersch, H. Peer-to-Peer Cooperative Positioning Part II: Hybrid Devices with GNSS & Terrestrial Ranging Capability. Inside GNSS 2015, 7, 56–64. [Google Scholar]

- Sun, C.; Zhang, Y.; Wang, G.; Gao, W. A New Variational Bayesian Adaptive Extended Kalman Filter for Cooperative Navigation. Sensors 2018, 18, 2538. [Google Scholar] [CrossRef] [PubMed]

- Kwok, C.; Fox, D.; Meila, M. Real-time particle filters. Proc. IEEE 2004, 92, 469–484. [Google Scholar] [CrossRef]

- Kia, S.S.; Rounds, S.; Martinez, S. Cooperative Localization for Mobile Agents: A Recursive Decentralized Algorithm Based on Kalman-Filter Decoupling. IEEE Control Syst. Mag. 2016, 36, 86–101. [Google Scholar] [CrossRef]

- Hai, N.; Zhao, Q.; Wan, Q. UKF algorithm based on optimized weighting data fusion. In Proceedings of the IET International Radar Conference 2015, Hangzhou, China, 14–16 October 2015; pp. 1–4. [Google Scholar] [CrossRef]

- Abd El Aziz, M. Source localization using TDOA and FDOA measurements based on modified cuckoo search algorithm. Wirel. Netw. 2017, 23, 487–495. [Google Scholar] [CrossRef]

- Nguyen, H.D.; Wood, I.A. A Block Successive Lower-Bound Maximization Algorithm for the Maximum Pseudo-Likelihood Estimation of Fully Visible Boltzmann Machines. Neural Comput. 2016, 28, 485–492. [Google Scholar] [CrossRef]

- Khan, M.W.; Salman, N.; Kemp, A.H. Optimised hybrid localisation with cooperation in wireless sensor networks. IET Signal Process. 2017, 11, 341–348. [Google Scholar] [CrossRef]

- Tomic, S.; Beko, M.; Dinis, R. RSS-Based Localization in Wireless Sensor Networks Using Convex Relaxation: Noncooperative and Cooperative Schemes. IEEE Trans. Veh. Technol. 2015, 64, 2037–2050. [Google Scholar] [CrossRef]

- Li, S.; Wang, S.L. Research of nonlinear filtering algorithm based on BP neural network. Electron. Meas. Technol. 2018, 41, 34–39. [Google Scholar]

- Magrin, C.E.; Todt, E. Multi-Sensor Fusion Method Based on Artificial Neural Network for Mobile Robot Self-Localization. In Proceedings of the 2019 Latin American Robotics Symposium (LARS), 2019 Brazilian Symposium on Robotics (SBR) and 2019 Workshop on Robotics in Education (WRE), Rio Grande, Brazil, 23–25 October 2019; pp. 138–143. [Google Scholar] [CrossRef]

- Ihler, A.T.; Fisher, J.W.; Moses, R.L.; Willsky, A.S. Nonparametric belief propagation for self-localization of sensor networks. IEEE J. Sel. Areas Commun. 2005, 23, 809–819. [Google Scholar] [CrossRef]

- Kschischang, F.R.; Frey, B.J.; Loeliger, H. Factor graphs and the sum-product algorithm. IEEE Trans. Inf. Theory 2001, 47, 498–519. [Google Scholar] [CrossRef]

- Loeliger, H.A. An introduction to factor graphs. IEEE Signal Process. Mag. 2004, 21, 28–41. [Google Scholar] [CrossRef]

- Caceres, M.A.; Penna, F.; Wymeersch, H.; Garello, R. Hybrid Cooperative Positioning based on Distributed Belief Propagation. IEEE J. Sel. Areas Commun. 2011, 29, 1948–1958. [Google Scholar] [CrossRef]

- Cheng, Y.; Wang, X.; Caelli, T.; Li, X.; Moran, B. On Information Resolution of Radar Systems. IEEE Trans. Aerosp. Electron. Syst. 2012, 48, 3084–3102. [Google Scholar] [CrossRef]

- Opitz, F. Information geometry and its applications. In Proceedings of the 2012 9th European Radar Conference, Amsterdam, The Netherlands, 31 October–2 November 2012; pp. 46–49. [Google Scholar]

- Wu, Y.; Wang, J.; Zhang, P.; Cao, Y. Parallel clutter suppression algorithm for passive radar in CUDA. Xi’an Dianzi Keji Daxue Xuebao/J. Xidian Univ. 2015, 42, 1001–2400. [Google Scholar]

- Amari, S.I. Information geometry on hierarchy of probability distributions. IEEE Trans. Inf. Theory 2001, 47, 1701–1711. [Google Scholar] [CrossRef]

- Chebbi, Z.; Moakher, M. Means of Hermitian positive-definite matrices based on the log-determinant α-divergence function. Linear Algebra Its Appl. 2012, 436, 1872–1889. [Google Scholar] [CrossRef]

- Amari, S.I. Differential-Geometrical Methods in Statistics; Springer: Berlin/Heidelberg, Germany, 1985. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).