Abstract

Forest ecosystem monitoring and assessment usually require accurate information on the spatial distribution of forest tree species. Remote sensing has been confirmed as the most important method for acquiring tree species information, and space-borne hyperspectral imagery, with its high spectral resolution, offers better possibilities for tree species classification. However, the spatial resolution of current in-orbit hyperspectral imagers is too low to meet the accuracy requirements of tree species classification. In this study, we first explored and evaluated the effectiveness of the Gram-Schmidt (GS) and Harmonic Analysis Fusion (HAF) methods for fusing GaoFen-5 (GF-5) and Sentinel-2A images. Then, the Integrated Forest Z-Score (IFZ) was used to extract forest information from the fused image. Next, spectral and textural features of the fused image and topographic features extracted from a DEM were selected according to random forest importance rankings (Mean Decrease Gini (MDG) and Mean Decrease Accuracy (MDA)) and input into the random forest classifier to classify tree species. The results showed that, based on evaluation indicators of the fused images such as information entropy, average gradient, and standard deviation, the GS fusion image had a higher degree of spatial integration and spectral fidelity. The random forest importance ranking showed that WBI, Aspect, NDNI, ARI2, and FRI were the most important features for tree species classification. Both the classification accuracy and the kappa coefficient of the fused images improved significantly compared with those of the original GF-5 images. The overall classification accuracy ranged from 61.17% to 86.93% across the feature combination schemes, and the feature set selected by MDA achieved the highest accuracy (OA = 86.93%, Kappa = 0.85).
This study demonstrated the feasibility of fusing GF-5 and Sentinel-2A images for tree species classification, providing a useful reference for the application of in-orbit hyperspectral images.

1. Introduction

Forests are the mainstay of the global terrestrial ecosystem, providing functions such as water conservation, soil and water conservation, and maintenance of ecosystem balance [1,2]. Tree species composition is an essential component of forest certification programs, and information on the spatial distribution of tree species provides basic information for studies such as biodiversity assessment, invasive alien species monitoring, and wildlife habitat mapping [3].

Traditional forest survey methods involve random sampling and field surveys at each sample site, which are time-consuming and labor-intensive. Remote sensing can obtain forest information from rough terrain or hard-to-reach areas and speed up the work [4]. The application of remote sensing images to tree species classification originated from the visual interpretation of color aerial photographs [5] and then developed to multispectral image classification [6,7], multi-temporal remote sensing images [8,9], hyperspectral image analysis [10,11], and the fusion of multi-sensor images [12,13]. Medium-resolution images have been applied to classify tree species at the regional scale; however, their species classification accuracy was inadequate for forest inventory at the stand scale. Hyperspectral images with continuous narrow wavelength bands, especially in the visible and near-infrared regions, can detect subtle differences among tree species at the canopy and leaf levels [14]. In the past decade, airborne platforms, especially UAVs, have been widely used; they are commonly equipped with multispectral or hyperspectral sensors to meet the requirements for flexible spectral and spatial resolution in tree species classification [15,16]. LiDAR can detect the 3D structure of trees and obtain rich spectral and structural features, providing a favorable platform and technical basis for fine tree species classification and forest parameter estimation at small and medium scales [17,18,19].

However, it is challenging to extend these approaches to plant diversity monitoring and forest resource surveys over large areas, so satellite-based spectral remote sensing data have become a better choice.

Several studies have used satellite-based hyperspectral imagery for tree species classification. Papeş et al. (2010) verified that Hyperion imaging spectroscopy has the potential for regional mapping of large-crowned tropical trees [20]. George et al. (2014) demonstrated the potential utility of the narrow spectral bands of Hyperion data for discriminating tree species in hilly terrain [21]. Lu et al. (2017) generated fine spatial-spectral-resolution images by blending Environment-1A series satellite (HJ-1A) multispectral images and used the spatial and spectral information simultaneously to distinguish forest types [22]. Xi et al. (2019) used OHS-1 hyperspectral images combined with RF models to classify tree species and achieved an overall classification accuracy of 80.61% [23]. Vangi et al. (2021) compared the capabilities of the new PRISMA sensor and the well-known Sentinel-2A/2B Multispectral Instrument (MSI) in identifying forest types and found that the PRISMA hyperspectral sensor was able to distinguish forest types [24]. Wan et al. (2020) used Landsat 8 OLI, simulated Hyperion, and GF-5 image data sets with RF and SVM to classify mangrove species, and GF-5 achieved the highest accuracy [25]. Compared with earlier hyperspectral imagers, GF-5 has advantages in band number and bandwidth, with 330 bands and a swath width of 60 km [26]. However, its spatial resolution is only 30 m, so misclassification of tree species remains obvious and the classification accuracy is insufficient for forest inventory. To compensate for the low spatial resolution of hyperspectral images, many researchers have explored fusing high spatial resolution images with high spectral resolution images.

In recent years, many hyperspectral image fusion methods have emerged; they can be classified into four categories: Component Substitution (CS), Multiresolution Analysis (MRA), Variational Optimization (VO)-based methods, and Deep Learning (DL)-based methods [27]. Gram-Schmidt (GS) belongs to the CS category and is widely used in the fusion of multi-source remote sensing data, including the fusion of multispectral and high-resolution images from Gaofen-2 (GF-2), ZiYuan-3 (ZY-3), WorldView-2, QuickBird, and IKONOS [28,29,30]. Image quality evaluation showed that its spectral fidelity was better than that of the traditional IHS, Brovey, and PCA fusion methods [31].

Harmonic analysis was first applied to power systems; it was later applied for the first time to NDVI time series analysis of vegetation from remote sensing images such as AVHRR and MODIS [32,33]. Jakubauskas et al. (2003) applied this algorithm to crop species identification in a subsequent harmonic study [34]. The harmonic-analysis-based image fusion algorithm overcomes the low fidelity and limited generality of fused data, is compatible with panchromatic, single-band, or multispectral images, achieves good fusion results, and compensates for the low spatial resolution of hyperspectral data [35].

Sentinel-2A offers three red-edge bands that are advantageous for vegetation classification, a spatial resolution of up to 10 m, and data that can be obtained free of charge. Fusing it with GF-5 images yields hyperspectral images with high spatial resolution. We aimed to exploit the respective advantages of the Sentinel-2A and GF-5 sensors to improve the accuracy of tree species classification and to explore the classification performance and application value of the fused imagery.

Because of their excellent performance, machine learning algorithms are increasingly used for tree species classification. Commonly used algorithms include Support Vector Machine (SVM) and Random Forest (RF) classifiers [36]. RF can evaluate feature importance through its internal ranking before classification and extract the most important feature set for classification; it can thus filter features and rank their importance based on accuracy [37].

In this paper, Sentinel-2A and GF-5 images were fused using two methods, Gram-Schmidt and harmonic analysis. Image quality evaluation was performed to select the better fused image, and band selection was applied to it. Spectral features, vegetation indices, texture features, and topographic features were then extracted as feature variables, and RF, chosen as the classification method for this study, was used to map the spatial distribution of tree species. The study had three objectives: (1) to compare the effects of the Gram-Schmidt and harmonic analysis fusion methods; (2) to quantify the change in tree species classification accuracy before and after GF-5 fusion; and (3) to assess the extent to which the fused images and their features improve tree species classification.

2. Materials and Methods

2.1. Study Area

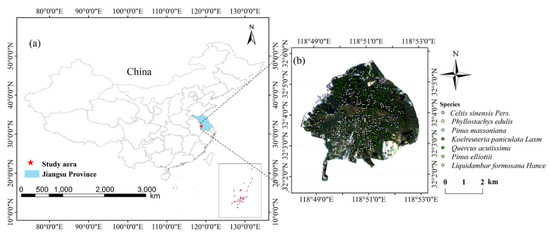

The study area (Figure 1), covering 31 km², is located in the Purple Mountain Scenic Forest Region, Nanjing, Jiangsu Province (118°48′~118°52′ E, 32°02′~32°06′ N, WGS84), with a maximum elevation of 448.9 m, an annual precipitation of 1200 mm, and an average annual temperature of 15.4 °C. The forests in the study area are mainly planted forests and naturally restored secondary forests. The community types are secondary deciduous forests and mixed coniferous forests, including Pinus massoniana (PM), Pinus elliottii (PE), and Quercus acutissima (QA); broad-leaved forests, including Koelreuteria paniculata Laxm. (KPL), Celtis sinensis Pers. (CSP), and Liquidambar formosana Hance (LFH); and some Phyllostachys edulis (BA) [38].

Figure 1.

The geographical location of the study area. (a) Purple Mountain, Jiangsu Province, China. (b) GF-5 image (the Purple Mountain).

2.2. Data Collection and Preprocessing

To accurately identify the different tree species in the study area, four types of data were used in this study: Sentinel-2A imagery, GF-5 imagery, Digital Elevation Model (DEM) data, and field data.

Sentinel-2A Level-2A (L2A) images were downloaded from the Copernicus Open Access Hub (https://scihub.copernicus.eu/ (accessed on 19 September 2019)), with a spatial resolution of 10 m for four bands (b2, b3, b4, b8) and 20 m for six bands (b5, b6, b7, b8A, b11, b12). Level-2A data mainly contain atmospherically corrected bottom-of-atmosphere reflectance, and the 20 m bands were resampled to 10 m using SNAP.

The GF-5 Advanced Hyperspectral Imager (AHSI) image was obtained from the Land Satellite Remote Sensing Application Center of the Ministry of Natural Resources, China. AHSI is a 330-channel imaging spectrometer with an approximately 30 m spatial resolution covering the 0.4–2.5 µm spectral range. Its spectral resolution is about 5 nm in the visible and near-infrared (VNIR, 0.4–1.0 µm) and about 10 nm in the short-wave infrared (SWIR, 1.0–2.5 µm).

The GF-5 images were processed with the preprocessing module of the PIE-Hyp 6.3 software, developed by Piesat Information Technology Co., Ltd., Beijing, China. Preprocessing mainly included writing wavelength information and storing the visible-NIR and short-wave infrared data together. The short-wave infrared (SWIR) range contains two strong water vapor absorption regions at 1350–1426 nm (b43–b50) and 1798–1948 nm (b96–b112); these bands, together with the first four SWIR bands, whose wavelengths partially overlap the VNIR range, were discarded. This was followed by bad band removal and stripe repair, radiometric calibration, atmospheric correction (6S model), and geometric correction (accuracy of 0.15 pixels). The final image contained 282 bands.

Since topography significantly influences the distribution of forest species in montane ecosystems, considering topographic metrics in classification can effectively improve classification accuracy [39]. The Digital Elevation Model (DEM) data were obtained from the Geospatial Data Cloud (http://www.gscloud.cn/ (accessed on 29 June 2009)) with a spatial resolution of 30 m, and the DEM data were resampled to 10 m to be consistent with the resolution of the satellite images.

The reference data were derived from forest resource planning and design survey data collected from May to December 2018. Each sample plot recorded information such as tree species, diameter at breast height (DBH), canopy cover, and average height. There were 777 sub-compartments with 2535 measured samples, each covering 100 m². The number of samples for each tree species is shown in Table 1. Google Earth images were used to verify the samples. Two-thirds of the samples were used for training, and the remaining one-third for validation.

Table 1.

The number of sample points and area.

2.3. Methods

2.3.1. Image Fusion

At 30 m, the spatial resolution of GF-5 is too coarse to achieve high accuracy in tree species classification. Therefore, GF-5 had to be fused with a higher spatial resolution image to improve its spatial resolution while maintaining spectral fidelity; Gram-Schmidt (GS) and Harmonic Analysis Fusion (HAF) have both been shown to be effective in previous remote sensing image fusion studies.

The GS method is an image fusion method based on the Gram-Schmidt transformation and places no limit on the number of bands. It transforms the multispectral image into an orthogonal space, replaces the first component with the high-resolution image, and obtains the fused image by inverse transformation. The fused image not only has improved spatial resolution but also maintains the spectral characteristics of the original image [40]. Harmonic analysis decomposes the image into harmonic energy spectrum feature components (harmonic remainder, amplitude, and phase) by analyzing the characteristics along the spectral dimension. For a single pixel, harmonic analysis expresses the spectral curve as the sum of the harmonic remainder and a series of sine (cosine) waves defined by their amplitudes and phases [41]. HAF was implemented with the spectral-spatial fusion module of the PIE-Hyp 6.3 software (PIESAT, Sydney, Australia). In this paper, both fusion methods were tested, and fusion quality was evaluated using the Average Gradient (AG), Standard Deviation (SD), Mean Squared Error (MSE), Mean Value (MV), and Information Entropy (IE) [42,43].
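The two fusion ideas described above can be sketched in NumPy. This is a simplified illustration, not the ENVI or PIE-Hyp implementation used in the study. It assumes that the hyperspectral cube has already been resampled to the fine (10 m) grid and that the simulated low-resolution band is simply the mean of all hyperspectral bands; the function names `gs_fuse`, `harmonic_decompose`, and `harmonic_reconstruct` are hypothetical. The harmonic part only shows the per-pixel decomposition into remainder, amplitude, and phase, not a full HAF pipeline.

```python
import numpy as np


def gs_fuse(hs_up: np.ndarray, pan: np.ndarray) -> np.ndarray:
    """Simplified Gram-Schmidt component substitution.

    hs_up: hyperspectral cube resampled to the fine grid, shape (bands, rows, cols).
    pan:   high-resolution band on the same grid, shape (rows, cols).
    """
    bands = hs_up.reshape(hs_up.shape[0], -1).astype(float)
    p = pan.ravel().astype(float)
    # Assumption: the simulated low-resolution band is the mean of all bands.
    p_sim = bands.mean(axis=0)
    gs1 = p_sim - p_sim.mean()  # first Gram-Schmidt component
    # Match the high-resolution band to GS1 (zero mean, same standard deviation).
    p_matched = (p - p.mean()) * gs1.std() / p.std()
    # Replacing GS1 by p_matched and inverting the transform is equivalent to
    # adding (p_matched - gs1) to each band with gain cov(B_k, GS1) / var(GS1).
    centred = bands - bands.mean(axis=1, keepdims=True)
    gains = centred @ gs1 / (gs1 @ gs1)
    fused = bands + gains[:, None] * (p_matched - gs1)[None, :]
    return fused.reshape(hs_up.shape)


def harmonic_decompose(spectrum: np.ndarray, n_harmonics: int):
    """Remainder, amplitudes and phases such that
    spectrum[x] ~ remainder + sum_h C_h * sin(2*pi*h*x/N + phi_h)."""
    n = spectrum.size
    x = np.arange(n)
    amps, phases = [], []
    for h in range(1, n_harmonics + 1):
        theta = 2 * np.pi * h * x / n
        a = 2.0 / n * np.sum(spectrum * np.cos(theta))
        b = 2.0 / n * np.sum(spectrum * np.sin(theta))
        amps.append(np.hypot(a, b))
        phases.append(np.arctan2(a, b))
    return spectrum.mean(), np.array(amps), np.array(phases)


def harmonic_reconstruct(remainder, amps, phases, n):
    """Rebuild a spectral curve of length n from its harmonic components."""
    x = np.arange(n)
    out = np.full(n, remainder, dtype=float)
    for h, (c, phi) in enumerate(zip(amps, phases), start=1):
        out += c * np.sin(2 * np.pi * h * x / n + phi)
    return out
```

If the high-resolution band equals the simulated band, `gs_fuse` returns the input unchanged, which is a quick sanity check of the injection form. For an odd number of bands N, keeping (N - 1)/2 harmonics reconstructs the spectrum exactly; keeping fewer gives a smoothed curve.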

2.3.2. Forest Extraction

The vegetation change tracker (VCT) is a highly automated vegetation change detection algorithm proposed by Huang et al. (2010) to reconstruct recent forest disturbance history from Landsat time series. Within VCT, the integrated forest z-score (IFZ) measures the likelihood that a pixel is forest, and changes in the IFZ across the images of the time series are used to determine whether a forest disturbance occurred in the year of each image. Huang used Band3, Band5, and Band7 of Landsat 8 as the characteristic bands for calculating the IFZ. The smaller the IFZ, the greater the likelihood that a pixel is forest; assuming that forest pixel values are normally distributed, more than 99% of forest pixels have an IFZ below 3 [44]. The IFZ is calculated as follows.

$$
FZ_i = \frac{b_{pi} - \bar{b}_i}{SD_i}, \qquad IFZ = \sqrt{\frac{1}{NB}\sum_{i=1}^{NB} FZ_i^{2}}
$$

where $FZ_i$ indicates the degree of deviation between the value of a pixel in band $i$ and the forest pixels, $b_{pi}$ is the value of pixel $p$ in band $i$, $\bar{b}_i$ is the mean value of all forest sample pixels in band $i$, $SD_i$ is the standard deviation of all forest sample pixels in band $i$, and $NB$ is the number of feature bands selected from the remote sensing image.

Because GF-5 images have many bands, band selection was needed to determine the best band combination for calculating the IFZ. Because it considers both the information content of individual bands and the correlation between bands, the optimum index factor (OIF) is a convenient and reliable method for screening the optimal band combination within a local band range. The OIF unifies the standard deviations and correlation coefficients of the bands, providing a further basis for judging image quality: the lower the correlation between bands and the larger their standard deviations, the more informative the band combination.

The OIF formula is as follows.

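$$
OIF = \frac{\sum_{i=1}^{m} S_i}{\sum_{i=1}^{m-1}\sum_{j=i+1}^{m} \lvert R_{ij} \rvert}
$$

where $S_i$ denotes the standard deviation of the radiometric brightness values in band $i$, $R_{ij}$ denotes the correlation coefficient between bands $i$ and $j$, and $m$ denotes the total number of bands combined.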

Therefore, we first selected the GF-5 bands (47 in total) falling within the wavelength ranges of Landsat 8 OLI Band3, Band5, and Band7, combined them, and calculated the OIF value of each combination. The larger the OIF value, the more information the combination contains. As shown in Table 2, among the top 10 band combinations by OIF, the combination of b42 (565 nm), b114 (874 nm), and b280 (2091 nm) had the maximum value. Therefore, these three bands were used to calculate the IFZ.
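The OIF screening and IFZ calculation described in this section can be sketched as follows. This is an illustrative NumPy version, not the software used in the study; it assumes that each candidate combination takes one band from each of the three wavelength groups (one group per reference Landsat band), that sample standard deviations (ddof = 1) are used, and that the function names `oif`, `rank_oif`, and `ifz` are hypothetical.

```python
from itertools import combinations, product

import numpy as np


def oif(bands: np.ndarray) -> float:
    """Optimum index factor of an (m, n_pixels) array holding m bands."""
    sd_sum = bands.std(axis=1, ddof=1).sum()
    corr = np.corrcoef(bands)
    r_sum = sum(abs(corr[i, j]) for i, j in combinations(range(len(bands)), 2))
    return float(sd_sum / r_sum)


def rank_oif(cube: np.ndarray, groups, top: int = 10):
    """Score every combination taking one band from each candidate group.

    cube: (bands, rows, cols); groups: lists of band indices, e.g. the GF-5
    bands falling inside each reference Landsat band range (an assumption).
    """
    flat = cube.reshape(cube.shape[0], -1).astype(float)
    scored = [(oif(flat[list(combo)]), combo) for combo in product(*groups)]
    return sorted(scored, reverse=True)[:top]


def ifz(image: np.ndarray, forest_mask: np.ndarray) -> np.ndarray:
    """Integrated forest z-score for an (NB, rows, cols) image.

    forest_mask: boolean (rows, cols) array marking forest training pixels.
    """
    forest = image[:, forest_mask]  # (NB, n_forest_pixels)
    mean = forest.mean(axis=1)[:, None, None]
    sd = forest.std(axis=1, ddof=1)[:, None, None]
    fz = (image - mean) / sd  # FZ_i for every pixel and band
    return np.sqrt(np.mean(fz**2, axis=0))
```

A forest map then follows from a threshold, for example `ifz(selected_bands, forest_mask) < 3`, where `selected_bands` is a hypothetical array holding the three chosen bands; the threshold itself was evaluated separately in Section 3.2.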

Table 2.

Results of OIF for different band combinations of GF-5 images.

2.3.3. Band Selection of Fusion Image

The fused image contains many bands, which can lead to the curse of dimensionality. Band selection for hyperspectral dimensionality reduction is a feasible way to determine the optimal band subset for hyperspectral image (HSI) processing. Wang et al. (2018) proposed the TRC-OC-FDPC band selection algorithm, which is based on an optimal clustering (OC) framework that obtains the optimal clustering of bands under a specified form of objective function. The final selection is made with FDPC, a simple and effective criterion that ranks data points and automatically finds cluster centers by defining them as local maxima of data point density [45]. The 20 bands finally selected were 15, 21, 28, 41, 55, 60, 67, 84, 89, 104, 110, 121, 142, 143, 158, 167, 184, 218, 227, and 282.
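The density-peak ranking step can be illustrated with a short sketch. This covers only a simplified FDPC-style ranking (local density times distance to the nearest denser band), not the full TRC-OC-FDPC framework of Wang et al. (2018); the Gaussian density kernel, the quantile rule for the cutoff distance, and the function name `fdpc_rank` are assumptions made for illustration.

```python
import numpy as np


def fdpc_rank(cube: np.ndarray, dc_quantile: float = 0.02) -> np.ndarray:
    """Rank bands by the density-peak score gamma = rho * delta.

    cube: (bands, rows, cols); every band is treated as one data point.
    Returns band indices ordered from most to least representative.
    """
    x = cube.reshape(cube.shape[0], -1).astype(float)
    sq = (x**2).sum(axis=1)
    # Pairwise Euclidean distances between bands without a (B, B, N) temporary.
    d = np.sqrt(np.maximum(sq[:, None] + sq[None, :] - 2.0 * x @ x.T, 0.0))
    np.fill_diagonal(d, 0.0)
    n = len(d)
    dc = np.quantile(d[~np.eye(n, dtype=bool)], dc_quantile)  # cutoff distance
    rho = np.exp(-((d / dc) ** 2)).sum(axis=1) - 1.0  # Gaussian density, self term removed
    delta = np.empty(n)
    for i in range(n):
        denser = rho > rho[i]
        # Distance to the nearest denser band; the densest band gets its largest distance.
        delta[i] = d[i, denser].min() if denser.any() else d[i].max()
    return np.argsort(rho * delta)[::-1]
```

Taking the first k indices of the returned order gives k bands that are both typical of a dense group and far from denser groups, which is the intuition behind using density peaks as cluster centers.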

2.3.4. Feature Extraction

Eight texture features based on the gray-level co-occurrence matrix (GLCM), namely mean, variance, homogeneity, contrast, dissimilarity, entropy, second moment, and correlation, were calculated [46]. Slope and Aspect were extracted from the DEM. Following Shang et al. (2020), twenty-six vegetation indices sensitive to biochemical information were extracted to increase the separability of vegetation types [47] and included in the vegetation index feature set.
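To make the vegetation index features concrete, the sketch below computes several of the narrow-band indices that later rank highly, picking the band whose center wavelength is closest to each required wavelength. The formulas are commonly published definitions (WBI = R900/R970, PRI, ARI2, MRENDVI, NDNI); the exact definitions from Shang et al. (2020) used in this study may differ, FRI is omitted because its definition is not given here, and the helper names are hypothetical.

```python
import numpy as np


def nearest_band(cube: np.ndarray, wavelengths, nm: float) -> np.ndarray:
    """Band whose centre wavelength (nm) is closest to `nm`."""
    idx = int(np.argmin(np.abs(np.asarray(wavelengths, dtype=float) - nm)))
    return cube[idx].astype(float)


def vegetation_indices(cube: np.ndarray, wavelengths) -> dict:
    """A few narrow-band indices, using commonly published definitions."""

    def r(nm):
        return nearest_band(cube, wavelengths, nm)

    log_1510, log_1680 = np.log10(1 / r(1510)), np.log10(1 / r(1680))
    return {
        "WBI": r(900) / r(970),
        "PRI": (r(531) - r(570)) / (r(531) + r(570)),
        "ARI2": r(800) * (1 / r(550) - 1 / r(700)),
        "MRENDVI": (r(750) - r(705)) / (r(750) + r(705) - 2 * r(445)),
        "NDNI": (log_1510 - log_1680) / (log_1510 + log_1680),
    }
```

With real GF-5 data, `wavelengths` would hold the center wavelengths of the retained bands, so each index uses the nearest available band rather than the exact nominal wavelength.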

2.3.5. Random Forest Classifier

The random forest model is insensitive to the number of input variables, can handle high-dimensional data without feature selection, generalizes well, resists overfitting, and trains quickly [48]. It can handle thousands of input variables without deleting any of them. The Mean Decrease Gini (MDG) and Mean Decrease Accuracy (MDA) measures evaluate variable importance and can be used to estimate which variables are important for classification [49,50].

Therefore, we chose a random forest classifier to classify tree species in the Purple Mountain. We designed ten classification schemes, analyzed the effects of spectral features (SF), vegetation indices (VI), topographic features (TGF), and texture features (TF) on the separability of different tree species, and selected the optimal separability scheme for tree species classification:

- Scheme 1: spectral features (all bands) of the preprocessed GF-5 image;
- Scheme 2: spectral features (all bands) of the fused image;
- Scheme 3: spectral features (SF) of the fused image after band selection;
- Scheme 4: SF and vegetation indices (SF + VI);
- Scheme 5: SF and texture features (SF + TF);
- Scheme 6: SF, VI, and topographic features (SF + TGF + VI);
- Scheme 7: SF, TGF, TF, and VI (SF + TGF + TF + VI);
- Scheme 8: features selected by MDA from SF, TGF, TF, and VI (SF + TGF + TF + VI);
- Scheme 9: features selected by MDG from SF, TGF, TF, and VI (SF + TGF + TF + VI);
- Scheme 10: spectral features (10 bands) of the preprocessed Sentinel-2A image.

The RF classifier used in this study was the Random Forest plugin in ENVI 5.3. After several tests, the number of trees (ntree) was set to 500, and mtry was set to the square root of the number of variables in the data set.
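The classifier setup and the two importance measures can be approximated with scikit-learn, as sketched below. This is not the ENVI 5.3 plugin used in the study. The sketch assumes a stratified two-thirds/one-third split as in Section 2.2; `max_features="sqrt"` plays the role of mtry; `feature_importances_` is scikit-learn's impurity-based (Gini) importance, corresponding to MDG; and `permutation_importance` on the held-out third is used as a stand-in for MDA, which Breiman's original formulation computes on out-of-bag samples instead. The function name `rf_with_importance` is hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split


def rf_with_importance(x, y, seed: int = 0, n_trees: int = 500):
    """Fit RF on a stratified 2/3 split and return MDG- and MDA-style scores."""
    x_tr, x_va, y_tr, y_va = train_test_split(
        x, y, test_size=1 / 3, stratify=y, random_state=seed
    )
    rf = RandomForestClassifier(
        n_estimators=n_trees, max_features="sqrt", random_state=seed, n_jobs=-1
    )
    rf.fit(x_tr, y_tr)
    # Impurity-based importance: mean decrease in Gini, normalised to sum to 1.
    mdg = rf.feature_importances_
    # Permutation importance on the held-out third approximates MDA.
    mda = permutation_importance(
        rf, x_va, y_va, n_repeats=10, random_state=seed
    ).importances_mean
    return rf, rf.score(x_va, y_va), mdg, mda
```

A reduced feature set in the spirit of Schemes 8 and 9 could then be formed with, for example, `np.argsort(mda)[::-1][:32]`, keeping the 32 highest-ranked feature columns.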

To evaluate the accuracy of tree species classification on the fused images, we calculated the overall accuracy (OA), Kappa coefficient, producer's accuracy (PA), and user's accuracy (UA) from the confusion matrix of the validation samples [51].
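The four accuracy measures follow directly from the confusion matrix. The sketch below assumes rows hold reference classes and columns hold predicted classes (some remote sensing texts use the transposed layout, which swaps PA and UA); the function name `accuracy_report` is hypothetical.

```python
import numpy as np


def accuracy_report(cm):
    """OA, Kappa, PA and UA from a confusion matrix.

    cm[i, j]: number of validation samples of reference class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    diag = np.diag(cm)
    oa = diag.sum() / n
    # Expected chance agreement from the row and column totals.
    pe = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n**2
    kappa = (oa - pe) / (1 - pe)
    pa = diag / cm.sum(axis=1)  # producer's accuracy (per reference class)
    ua = diag / cm.sum(axis=0)  # user's accuracy (per predicted class)
    return oa, kappa, pa, ua
```

For the two-class matrix `[[45, 5], [10, 40]]`, this gives OA = 0.85, chance agreement 0.5, and therefore Kappa = 0.7.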

3. Results

3.1. Images Fusion and Evaluation

The results of the different fusion methods are shown in Figure 2. As Table 3 shows, the GS fusion image had a higher standard deviation, mean value, and information entropy than the HAF image, whereas the HAF image had a higher average gradient and mean squared error than the GS image. Considering these indicators together with visual inspection of the fused images, the GS fusion image was selected as the data source for tree species classification.
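The quality indicators compared above can be computed per band as sketched here. The definitions are common textbook forms and are assumptions rather than the exact formulas of references [42,43]: AG uses forward differences, IE uses a 256-bin histogram, and MSE is taken against a reference band on the same grid (for example, the resampled original GF-5 band). Values for a multiband image would typically be averaged over bands; the function names are hypothetical.

```python
import numpy as np


def average_gradient(img: np.ndarray) -> float:
    """Mean of sqrt((dx^2 + dy^2) / 2) over the (rows-1, cols-1) interior."""
    dx = np.diff(img, axis=1)[:-1, :]
    dy = np.diff(img, axis=0)[:, :-1]
    return float(np.mean(np.sqrt((dx**2 + dy**2) / 2.0)))


def information_entropy(img: np.ndarray, bins: int = 256) -> float:
    """Shannon entropy (bits) of the grey-level histogram."""
    hist, _ = np.histogram(img, bins=bins)
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())


def quality_metrics(fused: np.ndarray, reference: np.ndarray) -> dict:
    """AG, SD, MV, IE of a fused band and its MSE against a reference band."""
    return {
        "AG": average_gradient(fused),
        "SD": float(fused.std()),
        "MV": float(fused.mean()),
        "IE": information_entropy(fused),
        "MSE": float(np.mean((fused - reference) ** 2)),
    }
```

Higher AG, SD, and IE are usually read as more spatial detail and information, whereas a higher MSE indicates a larger departure from the reference.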

Figure 2.

Images of different fusion methods ((a): original image; (b): Gram-Schmidt fusion image; (c): Harmonic analysis fusion image).

Table 3.

Evaluation of the integration degree of the fused spatial information.

3.2. Forest Extraction Results

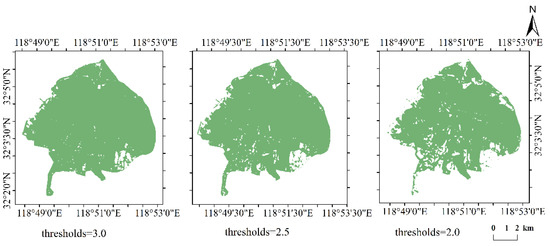

For Landsat images, an IFZ threshold of 3 can distinguish forest from non-forest areas, but for GF-5 images this threshold did not separate them well. Therefore, the optimal IFZ threshold had to be determined. We tested three thresholds (IFZ = 3, 2.5, and 2), selected 600 forest and non-forest samples, and calculated the accuracy of distinguishing forest from non-forest under each threshold. The results are shown in Table 4. When IFZ = 3, the overall accuracy of forest extraction was the highest. The extraction results for the three thresholds are shown in Figure 3.

Table 4.

Accuracy evaluation results for different thresholds.

Figure 3.

Forest extraction results of different thresholds for IFZ index of GF-5 image.

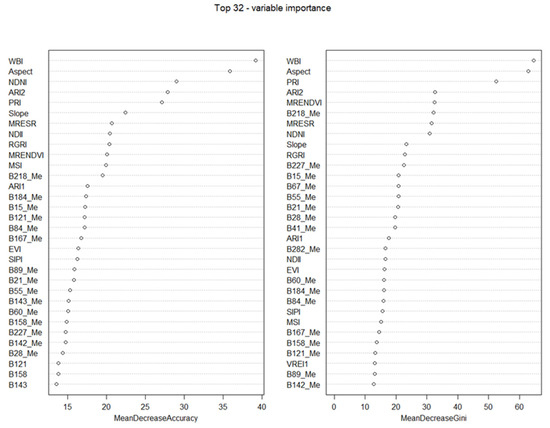

3.3. Feature Importance of Forest Type Identification

Figure 4 shows the feature importance ranking obtained by RF for the features described in Section 2.3.4. After several comparative tests, mtry = 32 and ntree = 500 were determined to be optimal. For both measures, a higher value indicates a more important feature for tree species classification. The top five features according to MDA were WBI, Aspect, NDNI, ARI2, and FRI; according to MDG, they were WBI, Aspect, PRI, ARI2, and MRENDVI. Aspect appears in the top five of both measures, indicating that topographic features contributed greatly to tree species identification, and the remaining top features were vegetation indices related to vegetation cell structure and pigment content. Among the variables selected by both measures, the texture features were the band mean values, indicating that the mean was helpful for tree species identification.

Figure 4.

The importance ranking results of random forest characteristic variables.

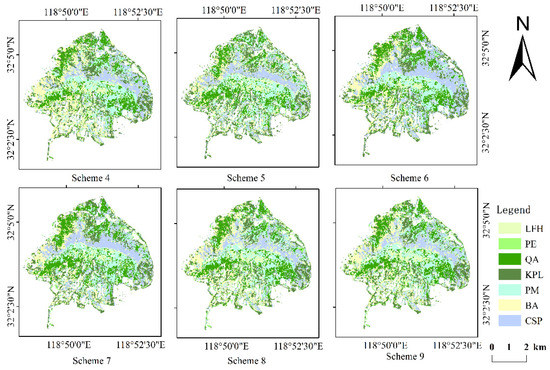

3.4. Classification Results and Evaluation of Different Schemes

Images for the different schemes were classified by RF. As Table 5 shows, Scheme 8 (MDA) had the highest overall accuracy and Kappa coefficient (overall accuracy 86.93%, Kappa 0.85), Scheme 9 (MDG) ranked second (overall accuracy 86.63%, Kappa 0.84), and Scheme 1 had the worst results (overall accuracy 61.67%, Kappa 0.53). Schemes 1 and 2 represent the GF-5 image and the fused image, respectively. Scheme 3 is the result of hyperspectral classification after band selection; its classification accuracy decreased by 0.51% compared with Scheme 2. Scheme 7, which used all feature variables, achieved higher accuracy than Schemes 4, 5, and 6, and Schemes 8 and 9 outperformed all other schemes. This indicates that RF importance ranking can select the feature variables that are important for tree species classification, and that using only 32 (instead of hundreds of) feature variables yields higher classification accuracy.

Table 5.

Classification results of different schemes.

Because Scheme 8 gave the best classification results, its confusion matrix and classification map are presented for detailed analysis. Table 6 shows that Liquidambar formosana Hance (LFH) had the highest user's accuracy, followed by Pinus elliottii (PE), whereas Phyllostachys edulis (BA) and Celtis sinensis Pers. (CSP) had the highest producer's accuracy, mainly because BA occurs mostly as pure stands. LFH and CSP also occurred more often as pure stands than in mixed forests.

Table 6.

Confusion matrix of Scheme 8.

3.5. Results of Forest Type Mapping

The classification with the highest overall accuracy was selected as the thematic map (Figure 5). On the ridges of Purple Mountain, Quercus acutissima (QA) was the most abundant dominant forest type, whereas Koelreuteria paniculata Laxm. (KPL) accounted for a relatively small proportion and was concentrated in the east. According to the reference data, Pinus massoniana (PM) was the most widely distributed species. In addition, Quercus acutissima (QA) stands were mainly distributed on the south-facing sides of ridges and slopes, which is consistent with its preferred habitat. Pinus elliottii (PE) occurred only in small numbers, mainly in the central and southern parts of the study area, and Phyllostachys edulis (BA) also accounted for a relatively small proportion, mainly in the west, in relatively isolated patches. The three species of the coniferous and broad-leaved mixed forests, Pinus massoniana (PM), Liquidambar formosana Hance (LFH), and Quercus acutissima (QA), were mainly located in low-altitude and flat areas near residential communities, and Celtis sinensis Pers. (CSP) was concentrated in the central and eastern areas.

Figure 5.

Classification results for different schemes.

According to Table 6, Celtis sinensis Pers. (CSP) and Phyllostachys edulis (BA) had the same, highest PA, followed by Pinus massoniana (PM) (94.67%), and the PA of all tree species exceeded 70%. In terms of UA, Liquidambar formosana Hance (LFH) was the highest, followed by Pinus elliottii (PE), and the UA of the other tree species exceeded 85%. The crown colors and textures of coniferous and broad-leaved forests differ greatly, and coniferous forests were more easily recognized because of their distinctive needles.

4. Discussion

Spectral features are the basis of tree species classification. Sentinel-2A images have higher spatial resolution but lower spectral resolution than GF-5 images, whose spectral resolution is about 5 nm for VNIR and 10 nm for SWIR [47]. High spectral resolution has shown great potential for classifying complex tree species [25,26]. When fusing hyperspectral and multispectral images, the ratio of their spatial resolutions also affects the results. In this study, the ratio of spatial resolution between the hyperspectral and multispectral images is three. When the ratio of spatial resolution is two, major changes cannot be represented, and image fusion methods can improve spatial fidelity [52].

In this paper, Gram-Schmidt (GS) and Harmonic Analysis Fusion (HAF) were used to fuse GF-5 and Sentinel-2A images. Ren et al. (2020) showed that Adaptive Gram-Schmidt (GSA) and Smoothing Filter-based Intensity Modulation (SFIM) can be used to fuse GF-5 and Sentinel-2A images [53]. Another study showed that fusing ZY-3 and Sentinel-2A images increased the number of spectral bands from 4 to 10 and improved classification accuracy by 14.2% at 2 m spatial resolution, indicating that image fusion played an important role in improving tree species classification accuracy [54]. In this study, the classification accuracy of the fused image was 17.98% higher than that of the GF-5 image (Scheme 2 versus Scheme 1).

Zhang et al. (2018) studied the fusion of Gaofen-2 (GF-2) imagery, fusing the visible and near-infrared bands separately, and found that the GS method was better suited to fusing near-infrared band images and to monitoring vegetation and water indicators [55]. Dalla Mura et al. (2015) combined panchromatic images with multispectral or hyperspectral data and used Gram-Schmidt pansharpening as an example to evaluate the performance of global and local gain estimation strategies [56]. For the fusion of Synthetic Aperture Radar (SAR) and optical images, Yan et al. (2020) used the Non-Subsampled Contourlet Transform (NSCT) to improve the GS method and obtain high-resolution images containing both spectral information and SAR detail [57]. These studies demonstrate the strengths of the GS method for image fusion.

Zhang et al. (2020) constructed an improved feature set, HGFM, by combining multi-scale Guided Filter (GF)-optimized Harmonic Analysis (HA) with morphological operations for HSI classification and evaluated different feature sets with random forests and other classifiers; the experimental results confirmed the effectiveness of the feature set in terms of classification accuracy and generalization ability [58]. The hyperspectral anomaly detection method based on harmonic analysis and low-rank decomposition proposed by Xiang et al. (2019) was validated on public hyperspectral datasets (University of Pavia ROSIS, Indian Pines AVIRIS, and Salinas AVIRIS) and showed excellent visual results, ROC curves, and AUC values [59]. In this paper, only the standard HAF method was used, and its fusion results were less reliable than those of the GS method (Table 3); improving the HAF method remains a task for future hyperspectral fusion research.

In addition, most of the top-ranked features are vegetation indices, such as NDVI and WBI. The Water Band Index (WBI) is very sensitive to changes in canopy water status and has important applications in canopy stress analysis, productivity prediction, and modeling [60]. NDVI is widely used in tree species classification. These vegetation indices accounted for the majority of the top-ranked features and improved tree species classification, because tree species differ in canopy pigmentation and moisture content, and these differences are important for distinguishing them. This is similar to the results of Li et al. (2020), whose findings indicate that forests in cloud-shadowed areas can be classified using narrow-band vegetation indices (NDVI705, mSR705, mNDVI705, VOG1, VOG2, REP) and texture information, with better results than using reflectance images alone [61]. Zagajewski et al. (2015) also demonstrated the value of NDVI and NDWI for tree species classification [62].

Aspect and Slope were ranked highly (Figure 4), suggesting that topographic features can improve the classification accuracy of tree species (Scheme 6, Scheme 7). Ma et al. (2021) added topographic features as variables to the spectral bands and found that elevation, slope, and aspect all influenced the current spatial distribution of the four tree species in their study area [36]. Liu et al. (2018) found that slope derived from DEM data contributed the most to the classification of forest types [63], which is consistent with the findings of this paper (Figure 4).
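Slope and aspect are both derived from the elevation gradient of the DEM. The minimal sketch below uses central differences via `numpy.gradient`. It assumes a north-up DEM (row index increasing southward) with square cells, and it reports aspect as the downslope direction in degrees clockwise from north, with flat cells marked as -1. GIS tools usually apply a 3×3 Horn kernel instead, so values may differ slightly from those used in this study. The function name is hypothetical.

```python
import numpy as np


def slope_aspect(dem, cell_size):
    """Slope (degrees) and aspect (degrees clockwise from north) of a north-up DEM.

    Aspect is the compass direction the slope faces (downslope); flat cells get -1.
    """
    z = np.asarray(dem, dtype=float)
    dz_drow, dz_dcol = np.gradient(z, cell_size, cell_size)
    dz_dx = dz_dcol          # elevation change toward the east
    dz_dy = -dz_drow         # rows increase southward, so flip sign for north
    slope = np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))
    # Downslope direction is the negative gradient; atan2(east, north) is a bearing.
    aspect = np.degrees(np.arctan2(-dz_dx, -dz_dy)) % 360.0
    aspect = np.where(slope > 0, aspect, -1.0)
    return slope, aspect
```

For example, a surface that rises by one cell size per cell toward the east has a 45° slope and faces west (aspect 270°).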

Texture was highly similar among trees of the same species, whereas natural forests show more variation in canopy size, height, and density. Texture features can effectively reduce “salt-and-pepper noise” and improve both patch integrity and classification accuracy [64]. This is consistent with the findings of Ye et al. (2021), who fused WorldView-2 and Sentinel-2A images to classify eight land cover classes using spectral and texture features. They also assessed the relative importance of these features and found that GLCM-Mean ranked second [2].
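GLCM-Mean is the mean gray level weighted by the normalized gray-level co-occurrence matrix [46]. The minimal sketch below builds a symmetric GLCM for a single offset (by default, the horizontal neighbor at distance 1) and computes its mean. The number of quantization levels and the offset are assumptions, and the function names are hypothetical. In practice the statistic is computed within a moving window around each pixel, not over a whole patch as shown here.

```python
import numpy as np


def quantize(band, levels):
    """Linearly rescale a band to integer gray levels 0..levels-1."""
    band = np.asarray(band, dtype=float)
    lo, hi = band.min(), band.max()
    if hi == lo:
        return np.zeros(band.shape, dtype=int)
    q = np.floor((band - lo) / (hi - lo) * levels).astype(int)
    return np.clip(q, 0, levels - 1)


def glcm(img, levels, dr=0, dc=1, symmetric=True):
    """Normalized co-occurrence matrix for the pixel offset (dr, dc)."""
    img = np.asarray(img, dtype=int)
    rows, cols = img.shape
    p = np.zeros((levels, levels), dtype=float)
    # Pair every reference pixel with its neighbour at (dr, dc), staying in bounds.
    r0, r1 = max(0, -dr), rows - max(0, dr)
    c0, c1 = max(0, -dc), cols - max(0, dc)
    ref = img[r0:r1, c0:c1]
    nbr = img[r0 + dr:r1 + dr, c0 + dc:c1 + dc]
    np.add.at(p, (ref.ravel(), nbr.ravel()), 1.0)
    if symmetric:
        p = p + p.T          # count each pair in both directions
    return p / p.sum()


def glcm_mean(p):
    """GLCM mean: sum over i, j of i * P(i, j)."""
    i = np.arange(p.shape[0])
    return float((i[:, None] * p).sum())
```

A typical call chains the helpers, e.g. `glcm_mean(glcm(quantize(window, 32), 32))` for one window of one band, where the 32 levels are again an illustrative choice.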

In terms of producer’s accuracy (PA), all species exceeded 70%, and Celtis sinensis Pers. (CSP) and Phyllostachys edulis (BA) both reached 98%. The highest accuracies occurred in homogeneous forest areas with high forest and tree canopy cover [65]; these species were spatially concentrated and had fewer omitted pixels. Pinus elliottii (PE) had an accuracy below 80%; these two trees were more dispersed in space. Pinus elliottii (PE) covered a smaller area and contained many mixed pixels, so more of its pixels were omitted and misclassified as other species.
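PA measures omission error (the share of a species' reference pixels that were mapped correctly), whereas user’s accuracy (UA) measures commission error; overall accuracy (OA) and Kappa summarize the whole confusion matrix. The following minimal sketch computes all four. It assumes rows are reference classes and columns are predicted classes, and the 2×2 matrix in the test is made up for illustration rather than taken from this study. The function name is hypothetical.

```python
import numpy as np


def accuracy_report(cm):
    """PA, UA, OA and Kappa from a confusion matrix.

    cm[i, j] = number of samples whose reference class is i and predicted class is j.
    """
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    diag = np.diag(cm)
    ref_totals = cm.sum(axis=1)      # reference (row) totals
    map_totals = cm.sum(axis=0)      # predicted (column) totals
    pa = diag / ref_totals           # producer's accuracy, 1 - omission error
    ua = diag / map_totals           # user's accuracy, 1 - commission error
    oa = diag.sum() / n              # observed agreement
    pe = (ref_totals * map_totals).sum() / n**2   # chance agreement
    kappa = (oa - pe) / (1.0 - pe)
    return pa, ua, oa, kappa
```

Because PA divides by reference totals, a species with many mixed pixels that leak into other classes, as described for Pinus elliottii, shows up as a low PA even if its UA is high.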

This study showed that fused GF-5 and Sentinel-2A images have the potential to identify tree species, which can provide new options for forest resource monitoring and management, and the classification of seven tree species showed that the method can achieve high classification accuracy. However, only a single-date Sentinel-2A image was used for fusion. Time-series images have been shown to play an important role in tree species classification because they reflect the spectral changes during tree growth and development more directly, so fusing multi-temporal Sentinel-2A images with GF-5 could capture these spectral change patterns in finer detail [66,67,68]. In addition, only one machine learning method (RF) was used in this paper; other machine learning methods, such as Support Vector Machine (SVM), Artificial Neural Network (ANN), K-Nearest Neighbor (KNN), and BP neural network, could also be applied. Deep learning methods (AlexNet, VGG-16, ResNet-50, LSTM, etc.) have also been used recently in tree species classification [1,69,70]. Subsequent studies could compare the classification accuracy of these methods, which may further improve the mapping of forest tree species.

5. Conclusions

In this study, GF-5 and Sentinel-2A images were first fused using the GS and HAF methods. Second, forest was extracted using the IFZ. Third, features such as vegetation indices, texture features, and topographic features were extracted. Finally, tree species were classified, leading to the following conclusions.

- (1) GS fusion images were superior to harmonic analysis fusion images according to the comprehensive quality evaluation indexes.

- (2) When the IFZ was used to extract forest and vegetation indices, texture features, and topographic features were combined for classification, the overall accuracy and Kappa coefficient were higher than those obtained from a single remote sensing data source.

- (3) The fused images had high spectral and spatial resolution, and the overall accuracy and Kappa coefficients of tree species classification were better than those of the original GF-5 image. The RF importance ranking showed that WBI, Aspect, NDNI, ARI2, FRI, MRENDVI, and the texture mean were more important; these features deserve attention in future tree species classification with hyperspectral data.
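The IFZ mentioned in conclusion (2) follows Huang et al. [44]. Each band value is converted to a z-score FZ_i relative to the mean and standard deviation of known forest pixels, and the z-scores are combined as IFZ = sqrt((1/NB) Σ FZ_i²) over the NB bands, so that forest pixels have low IFZ values. The minimal sketch below assumes a (bands, rows, cols) array and a boolean mask of forest training pixels, and it uses the sample standard deviation. The function name and the threshold in the usage note are hypothetical.

```python
import numpy as np


def ifz(image, forest_mask):
    """Integrated Forest Z-score for every pixel.

    image:       array of shape (bands, rows, cols)
    forest_mask: boolean array of shape (rows, cols) marking forest training pixels
    """
    img = np.asarray(image, dtype=float)
    nb = img.shape[0]
    pixels = img.reshape(nb, -1)
    samples = pixels[:, np.asarray(forest_mask, dtype=bool).ravel()]
    mean = samples.mean(axis=1, keepdims=True)        # per-band forest mean
    sd = samples.std(axis=1, ddof=1, keepdims=True)   # per-band forest std
    fz = (pixels - mean) / sd                         # forest z-score FZ_i
    return np.sqrt((fz ** 2).mean(axis=0)).reshape(img.shape[1:])
```

A forest mask is then obtained as `ifz(img, mask) < threshold`. The threshold is a study-specific choice that is not given here.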

There are some potential areas for improvement in this study:

- (1) In this paper, the fused GF-5 and Sentinel-2A image has a spatial resolution of 10 m. Given the growth condition and canopy size of each tree species in the landscape forest area, and the mixed pixels at the boundaries between different tree species, higher-resolution images could be considered for fusion, and newer image fusion methods could also be tried.

- (2) For tree species classification, this study used the widely adopted RF algorithm; more recent deep learning and transfer learning methods could be used to further improve the classification results.
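Any new fusion method could be screened with the same kind of quality indexes used here to compare GS and HAF, such as information entropy, average gradient, and standard deviation. Higher entropy indicates more information content, higher average gradient indicates sharper spatial detail, and standard deviation reflects contrast. The minimal per-band sketch below uses one common definition of each index. Definitions vary between papers (for example, the histogram bin count), so its values are not directly comparable with those reported in this study. The function names are hypothetical.

```python
import numpy as np


def entropy(band, bins=256):
    """Shannon entropy (bits) of the band's gray-level histogram."""
    hist, _ = np.histogram(band, bins=bins)
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())


def average_gradient(band):
    """Mean of sqrt((dF/drow^2 + dF/dcol^2) / 2) over the (M-1) x (N-1) grid."""
    f = np.asarray(band, dtype=float)
    d_row = f[1:, :-1] - f[:-1, :-1]
    d_col = f[:-1, 1:] - f[:-1, :-1]
    return float(np.sqrt((d_row ** 2 + d_col ** 2) / 2.0).mean())


def std_dev(band):
    """Population standard deviation of the band values."""
    return float(np.asarray(band, dtype=float).std())
```

For a multi-band fused image, each index is usually computed band by band and then averaged, so that fusion methods are compared on the same bands.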

Author Contributions

Conceptualization, W.C. and Y.S.; methodology, W.C. and Y.S.; software, W.C. and Y.S.; validation, W.C. and Y.S.; formal analysis, W.C. and Y.S.; investigation, W.C. and Y.S.; data curation, W.C. and Y.S.; writing—original draft preparation, W.C.; writing—review and editing, W.C.; visualization, W.C.; supervision, J.P.; project administration, J.P.; funding acquisition, J.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (No. 31470579); the Priority Academic Programme Development of Jiangsu Higher Education Institutions (PAPD); the Fund for Natural Science in Colleges and Universities of Jiangsu Province (11KJB220001); the Science and Technology Innovation Foundation of Nanjing Forestry University (163010049); and the Jiangsu Forestry Science and Technology Innovation and Promotion Project (LYKJ [2021]14).

Acknowledgments

We would like to acknowledge the European Space Agency (ESA) for freely providing the Sentinel-2A imagery required for this research, as well as the reviewers’ constructive comments and suggestions for the improvement of our study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, C.; Xia, K.; Feng, H.; Yang, Y.; Du, X. Tree Species Classification Using Deep Learning and RGB Optical Images Obtained by an Unmanned Aerial Vehicle. J. For. Res. 2021, 32, 1879–1888. [Google Scholar] [CrossRef]

- Ye, N.; Morgenroth, J.; Xu, C.; Chen, N. Indigenous Forest Classification in New Zealand—A Comparison of Classifiers and Sensors. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102395. [Google Scholar] [CrossRef]

- Fassnacht, F.E.; Latifi, H.; Stereńczak, K.; Modzelewska, A.; Lefsky, M.; Waser, L.T.; Straub, C.; Ghosh, A. Review of Studies on Tree Species Classification from Remotely Sensed Data. Remote Sens. Environ. 2016, 186, 64–87. [Google Scholar] [CrossRef]

- Li, D.; Ke, Y.; Gong, H.; Li, X. Object-Based Urban Tree Species Classification Using Bi-Temporal Worldview-2 and Worldview-3 Images. Remote Sens. 2015, 7, 16917–16937. [Google Scholar] [CrossRef]

- Heller, R.C.; Doverspike, G.E.; Aldrich, R.C. Identification of Tree Species on Large-Scale Panchromatic and Color Aerial Photographs; U.S. Department of Agriculture, Forest Service: Washington, DC, USA, 1964.

- Vieira, I.C.G.; de Almeida, A.S.; Davidson, E.A.; Stone, T.A.; Reis De Carvalho, C.J.; Guerrero, J.B. Classifying Successional Forests Using Landsat Spectral Properties and Ecological Characteristics in Eastern Amazônia. Remote Sens. Environ. 2003, 87, 470–481. [Google Scholar] [CrossRef]

- Walsh, S.J. Coniferous Tree Species Mapping Using LANDSAT Data. Remote Sens. Environ. 1980, 9, 11–26. [Google Scholar] [CrossRef]

- Brown De Colstoun, E.C.; Story, M.H.; Thompson, C.; Commisso, K.; Smith, T.G.; Irons, J.R. National Park Vegetation Mapping Using Multitemporal Landsat 7 Data and a Decision Tree Classifier. Remote Sens. Environ. 2003, 85, 316–327. [Google Scholar] [CrossRef]

- Wolter, P.T.; Mladenoff, D.J.; Host, G.E.; Crow, T.R. Improved Forest Classification in the Northern Lake States Using Multi-Temporal Landsat Imagery. Photogramm. Eng. Remote Sens. 1995, 61, 1129–1143. [Google Scholar]

- Clark, M.L.; Roberts, D.A.; Clark, D.B. Hyperspectral Discrimination of Tropical Rain Forest Tree Species at Leaf to Crown Scales. Remote Sens. Environ. 2005, 96, 375–398. [Google Scholar] [CrossRef]

- Goodenough, D.G.; Dyk, A.; Niemann, K.O.; Pearlman, J.S.; Chen, H.; Han, T.; Murdoch, M.; West, C. Processing Hyperion and ALI for Forest Classification. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1321–1331. [Google Scholar] [CrossRef]

- Dalponte, M.; Bruzzone, L.; Gianelle, D. Tree Species Classification in the Southern Alps Based on the Fusion of Very High Geometrical Resolution Multispectral/Hyperspectral Images and LiDAR Data. Remote Sens. Environ. 2012, 123, 258–270. [Google Scholar] [CrossRef]

- Aval, J.; Fabre, S.; Zenou, E.; Sheeren, D.; Fauvel, M.; Briottet, X. Object-Based Fusion for Urban Tree Species Classification from Hyperspectral, Panchromatic and NDSM Data. Int. J. Remote Sens. 2019, 40, 5339–5365. [Google Scholar] [CrossRef]

- Clark, M.L.; Roberts, D.A. Species-Level Differences in Hyperspectral Metrics among Tropical Rainforest Trees as Determined by a Tree-Based Classifier. Remote Sens. 2012, 4, 1820–1855. [Google Scholar] [CrossRef]

- Goodbody, T.R.H.; Coops, N.C.; Marshall, P.L.; Tompalski, P.; Crawford, P. Unmanned Aerial Systems for Precision Forest Inventory Purposes: A Review and Case Study. For. Chron. 2017, 93, 71–81. [Google Scholar] [CrossRef]

- Torresan, C.; Berton, A.; Carotenuto, F.; di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry Applications of UAVs in Europe: A Review. Int. J. Remote Sens. 2017, 38, 2427–2447. [Google Scholar] [CrossRef]

- Hartling, S.; Sagan, V.; Sidike, P.; Maimaitijiang, M.; Carron, J. Urban Tree Species Classification Using a Worldview-2/3 and LiDAR Data Fusion Approach and Deep Learning. Sensors 2019, 19, 1284. [Google Scholar] [CrossRef]

- Rochdi, N.; Yang, X.; Staenz, K.; Patterson, S.; Purdy, B. Mapping Tree Species in a Boreal Forest Area Using RapidEye and Lidar Data. Earth Resour. Environ. Remote Sens./GIS Appl. V 2014, 9245, 92450Z. [Google Scholar] [CrossRef]

- Zhao, D.; Pang, Y.; Liu, L.; Li, Z. Individual Tree Classification Using Airborne Lidar and Hyperspectral Data in a Natural Mixed Forest of Northeast China. Forests 2020, 11, 303. [Google Scholar] [CrossRef]

- Papeş, M.; Tupayachi, R.; Martínez, P.; Peterson, A.T.; Powell, G.V.N. Using Hyperspectral Satellite Imagery for Regional Inventories: A Test with Tropical Emergent Trees in the Amazon Basin. J. Veg. Sci. 2010, 21, 342–354. [Google Scholar] [CrossRef]

- George, R.; Padalia, H.; Kushwaha, S.P.S. Forest Tree Species Discrimination in Western Himalaya Using EO-1 Hyperion. Int. J. Appl. Earth Obs. Geoinf. 2014, 28, 140–149. [Google Scholar] [CrossRef]

- Lu, M.; Chen, B.; Liao, X.; Yue, T.; Yue, H.; Ren, S.; Li, X.; Nie, Z.; Xu, B. Forest Types Classification Based on Multi-Source Data Fusion. Remote Sens. 2017, 9, 1153. [Google Scholar] [CrossRef]

- Xi, Y.; Ren, C.; Wang, Z.; Wei, S.; Bai, J.; Zhang, B.; Xiang, H.; Chen, L. Mapping Tree Species Composition Using OHS-1 Hyperspectral Data and Deep Learning Algorithms in Changbai Mountains, Northeast China. Forests 2019, 10, 818. [Google Scholar] [CrossRef]

- Vangi, E.; D’Amico, G.; Francini, S.; Giannetti, F.; Lasserre, B.; Marchetti, M.; Chirici, G. The New Hyperspectral Satellite PRISMA: Imagery for Forest Types Discrimination. Sensors 2021, 21, 1182. [Google Scholar] [CrossRef] [PubMed]

- Wan, L.; Lin, Y.; Zhang, H.; Wang, F.; Liu, M.; Lin, H. GF-5 Hyperspectral Data for Species Mapping of Mangrove in Mai Po, Hong Kong. Remote Sens. 2020, 12, 656. [Google Scholar] [CrossRef]

- Gong, Z.; Gu, L.; Ren, R.; Yang, S. Forest Classification Based on GF-5 Hyperspectral Remote Sensing Data in Northeast China. SPIE 2020, 11501, 49. [Google Scholar] [CrossRef]

- Meng, X.; Shen, H.; Li, H.; Zhang, L.; Fu, R. Review of the Pansharpening Methods for Remote Sensing Images Based on the Idea of Meta-Analysis: Practical Discussion and Challenges. Inf. Fusion 2019, 46, 102–113. [Google Scholar] [CrossRef]

- Qiu, C.; Wei, J.; Dong, Q. Research of Image Fusion Method about ZY-3 Panchromatic Image and Multispectral Image. In Proceedings of the 5th International Workshop on Earth Observation and Remote Sensing Applications, EORSA 2018, Xi’an, China, 18–20 June 2018; pp. 1–5. [Google Scholar] [CrossRef]

- Li, C.; Liu, L.; Wang, J.; Zhao, C.; Wang, R. Comparison of Two Methods of the Fusion of Remote Sensing Images with Fidelity of Spectral Information. Int. Geosci. Remote Sens. Symp. 2004, 4, 2561–2564. [Google Scholar] [CrossRef]

- Zheng, W.; Li, X.; Sun, Y. Study on the Quality and Adaptability of Fusion Methods Based on Worldview-2 Remote Sensing Image. In Multispectral, Hyperspectral, and Ultraspectral Remote Sensing Technology, Techniques and Applications V; SPIE: Bellingham, WA, USA, 2014; Volume 9263, pp. 323–331. [Google Scholar] [CrossRef]

- Chen, X.; Wu, J.; Zhang, Y. Comparison of Fusion Algorithms for ALOS Panchromatic and Multispectral Images. In Proceedings of the 2008 International Workshop on Education Technology and Training and 2008 International Workshop on Geoscience and Remote Sensing, ETT and GRS 2008, Shanghai, China, 21–22 December 2008; Volume 2, pp. 167–170. [Google Scholar] [CrossRef]

- Sakamoto, T.; Yokozawa, M.; Toritani, H.; Shibayama, M.; Ishitsuka, N.; Ohno, H. A Crop Phenology Detection Method Using Time-Series MODIS Data. Remote Sens. Environ. 2005, 96, 366–374. [Google Scholar] [CrossRef]

- Bradley, B.A.; Jacob, R.W.; Hermance, J.F.; Mustard, J.F. A Curve Fitting Procedure to Derive Inter-Annual Phenologies from Time Series of Noisy Satellite NDVI Data. Remote Sens. Environ. 2007, 106, 137–145. [Google Scholar] [CrossRef]

- Jakubauskas, M.E.; Legates, D.R.; Kastens, J.H. Crop Identification Using Harmonic Analysis of Time-Series AVHRR NDVI Data. Comput. Electron. Agric. 2003, 37, 127–139. [Google Scholar] [CrossRef]

- Yang, K.M.; Zhang, T.; Wang, L.B.; Qian, X.L.; Wang, L.W.; Liu, S.W. Harmonic Analysis Fusion of Hyperspectral Image and Its Spectral Information Fidelity Evaluation. Spectrosc. Spectr. Anal. 2013, 33, 2496–2501. [Google Scholar] [CrossRef]

- Ma, M.; Liu, J.; Liu, M.; Zeng, J.; Li, Y. Tree Species Classification Based on Sentinel-2 Imagery and Random Forest Classifier in the Eastern Regions of the Qilian Mountains. Forests 2021, 12, 1736. [Google Scholar] [CrossRef]

- Archer, K.J.; Kimes, R.V. Empirical Characterization of Random Forest Variable Importance Measures. Comput. Stat. Data Anal. 2008, 52, 2249–2260. [Google Scholar] [CrossRef]

- Deng, S.; Katoh, M.; Guan, Q.; Yin, N.; Li, M. Interpretation of Forest Resources at the Individual Tree Level at Purple Mountain, Nanjing City, China, Using WorldView-2 Imagery by Combining GPS, RS and GIS Technologies. Remote Sens. 2013, 6, 87–110. [Google Scholar] [CrossRef]

- Liu, M.; Liu, J.; Atzberger, C.; Jiang, Y.; Ma, M.; Wang, X. Zanthoxylum Bungeanum Maxim Mapping with Multi-Temporal Sentinel-2 Images: The Importance of Different Features and Consistency of Results. ISPRS J. Photogramm. Remote Sens. 2021, 174, 68–86. [Google Scholar] [CrossRef]

- Sarp, G. Spectral and Spatial Quality Analysis of Pan-Sharpening Algorithms: A Case Study in Istanbul. Eur. J. Remote Sens. 2014, 47, 19–28. [Google Scholar] [CrossRef]

- Xue, Z.; Du, P.; Su, H. Harmonic Analysis for Hyperspectral Image Classification Integrated with PSO Optimized SVM. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2131–2146. [Google Scholar] [CrossRef]

- Shi, W.; Zhu, C.Q.; Tian, Y.; Nichol, J. Wavelet-Based Image Fusion and Quality Assessment. Int. J. Appl. Earth Obs. Geoinf. 2005, 6, 241–251. [Google Scholar] [CrossRef]

- Chu, J.; Fan, J.; Chen, Y.; Zhang, F. A Comparative Analysis on GF-2 Remote Sensing Image Fusion Effects. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 3770–3773. [Google Scholar] [CrossRef]

- Huang, C.; Goward, S.N.; Masek, J.G.; Thomas, N.; Zhu, Z.; Vogelmann, J.E. An Automated Approach for Reconstructing Recent Forest Disturbance History Using Dense Landsat Time Series Stacks. Remote Sens. Environ. 2010, 114, 183–198. [Google Scholar] [CrossRef]

- Wang, Q.; Zhang, F.; Li, X. Optimal Clustering Framework for Hyperspectral Band Selection. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5910–5922. [Google Scholar] [CrossRef]

- Haralick, R.M. Statistical and Structural Approaches to Texture. Proc. IEEE 1979, 67, 786–804. [Google Scholar] [CrossRef]

- Shang, K.; Xie, Y.; Wei, H. Study on Sophisticated Vegetation Classification for AHSI/GF-5 Remote Sensing Data. In Proceedings of the MIPPR 2019: Remote Sensing Image Processing, Geographic Information Systems, and Other Applications, Wuhan, China, 2–3 November 2019. [Google Scholar] [CrossRef]

- Ba, A.; Laslier, M.; Dufour, S.; Hubert-Moy, L. Riparian Trees Genera Identification Based on Leaf-on/Leaf-off Airborne Laser Scanner Data and Machine Learning Classifiers in Northern France. Int. J. Remote Sens. 2020, 41, 1645–1667. [Google Scholar] [CrossRef]

- Belgiu, M.; Drăguţ, L. Random Forest in Remote Sensing: A Review of Applications and Future Directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31. [Google Scholar] [CrossRef]

- Du, P.; Samat, A.; Waske, B.; Liu, S.; Li, Z. Random Forest and Rotation Forest for Fully Polarized SAR Image Classification Using Polarimetric and Spatial Features. ISPRS J. Photogramm. Remote Sens. 2015, 105, 38–53. [Google Scholar] [CrossRef]

- Story, M.; Congalton, R.G. Remote Sensing Brief Accuracy Assessment: A User’s Perspective. Photogramm. Eng. Remote Sens. 1986, 52, 397–399. [Google Scholar]

- Marcello, J.; Ibarrola-Ulzurrun, E.; Gonzalo-Martín, C.; Chanussot, J.; Vivone, G. Assessment of Hyperspectral Sharpening Methods for the Monitoring of Natural Areas Using Multiplatform Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8208–8222. [Google Scholar] [CrossRef]

- Ren, K.; Sun, W.; Meng, X.; Yang, G.; Du, Q. Fusing China GF-5 Hyperspectral Data with GF-1, GF-2 and Sentinel-2a Multispectral Data: Which Methods Should Be Used? Remote Sens. 2020, 12, 882. [Google Scholar] [CrossRef]

- Xie, Z.; Chen, Y.; Lu, D.; Li, G.; Chen, E. Classification of Land Cover, Forest, and Tree Species Classes with Ziyuan-3 Multispectral and Stereo Data. Remote Sens. 2019, 11, 164. [Google Scholar] [CrossRef]

- Zhang, D.; Xie, F.; Zhang, L. Preprocessing and Fusion Analysis of GF-2 Satellite Remote-Sensed Spatial Data. In Proceedings of the 2018 International Conference on Information Systems and Computer Aided Education (ICISCAE), Changchun, China, 6–8 July 2018; pp. 24–29. [Google Scholar]

- Mura, M.D.; Vivone, G.; Restaino, R.; Addesso, P.; Chanussot, J. Global and Local Gram-Schmidt Methods for Hyperspectral Pansharpening. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 37–40. [Google Scholar] [CrossRef]

- Yan, B.; Kong, Y. A Fusion Method of SAR Image and Optical Image Based on NSCT and Gram-Schmidt Transform. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Waikoloa, HI, USA, 26 September–2 October 2020; pp. 2332–2335. [Google Scholar]

- Zhang, W.; Du, P.; Lin, C.; Fu, P.; Wang, X.; Bai, X.; Zheng, H.; Xia, J.; Samat, A. An Improved Feature Set for Hyperspectral Image Classification: Harmonic Analysis Optimized by Multiscale Guided Filter. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 3903–3916. [Google Scholar] [CrossRef]

- Xiang, P.; Song, J.; Li, H.; Gu, L.; Zhou, H. Hyperspectral Anomaly Detection with Harmonic Analysis and Low-Rank Decomposition. Remote Sens. 2019, 11, 3028. [Google Scholar] [CrossRef]

- Penuelas, J.; Filella, I.; Biel, C.; Serrano, L.; Save, R. The Reflectance at the 950–970 nm Region as an Indicator of Plant Water Status. Int. J. Remote Sens. 1993, 14, 1887–1905. [Google Scholar] [CrossRef]

- Li, J.; Pang, Y.; Li, Z.; Jia, W. Tree Species Classification of Airborne Hyperspectral Image in Cloud Shadow Area. Springer Proc. Phys. 2020, 232, 389–398. [Google Scholar] [CrossRef]

- Peña, M.A.; Brenning, A. Assessing Fruit-Tree Crop Classification from Landsat-8 Time Series for the Maipo Valley, Chile. Remote Sens. Environ. 2015, 171, 234–244. [Google Scholar] [CrossRef]

- Liu, Y.; Gong, W.; Hu, X.; Gong, J. Forest Type Identification with Random Forest Using Sentinel-1A, Sentinel-2A, Multi-Temporal Landsat-8 and DEM Data. Remote Sens. 2018, 10, 946. [Google Scholar] [CrossRef]

- Dian, Y.; Li, Z.; Pang, Y. Spectral and Texture Features Combined for Forest Tree Species Classification with Airborne Hyperspectral Imagery. J. Indian Soc. Remote Sens. 2015, 43, 101–107. [Google Scholar] [CrossRef]

- Grabska, E.; Frantz, D.; Ostapowicz, K. Evaluation of Machine Learning Algorithms for Forest Stand Species Mapping Using Sentinel-2 Imagery and Environmental Data in the Polish Carpathians. Remote Sens. Environ. 2020, 251, 112103. [Google Scholar] [CrossRef]

- Persson, M.; Lindberg, E.; Reese, H. Tree Species Classification with Multi-Temporal Sentinel-2 Data. Remote Sens. 2018, 10, 1794. [Google Scholar] [CrossRef]

- Wessel, M.; Brandmeier, M.; Tiede, D. Evaluation of Different Machine Learning Algorithms for Scalable Classification of Tree Types and Tree Species Based on Sentinel-2 Data. Remote Sens. 2018, 10, 1419. [Google Scholar] [CrossRef]

- Grabska, E.; Hostert, P.; Pflugmacher, D.; Ostapowicz, K. Forest Stand Species Mapping Using the Sentinel-2 Time Series. Remote Sens. 2019, 11, 1197. [Google Scholar] [CrossRef]

- Zagajewski, B.; Kluczek, M.; Raczko, E.; Njegovec, A.; Dabija, A.; Kycko, M. Comparison of Random Forest, Support Vector Machines, and Neural Networks for Post-Disaster Forest Species Mapping of the Krkonoše/Karkonosze Transboundary Biosphere Reserve. Remote Sens. 2021, 13, 2581. [Google Scholar] [CrossRef]

- Xi, Y.; Ren, C.; Tian, Q.; Ren, Y.; Dong, X.; Zhang, Z. Exploitation of Time Series Sentinel-2 Data and Different Machine Learning Algorithms for Detailed Tree Species Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7589–7603. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).