Multibranch Unsupervised Domain Adaptation Network for Cross Multidomain Orchard Area Segmentation

Abstract

1. Introduction

- (1) This paper proposes a novel multi-target unsupervised domain adaptation (MTUDA) network, called MBUDA, for cross multidomain orchard area segmentation; the designed multibranch structure and ancillary classifiers enable the segmentation model to learn better feature representations of the target domains by learning and controlling the private features;

- (2) To further enhance adaptation, an adaptation-enhanced learning strategy is designed to refine the training process; it directly reduces the target–target gaps by aligning the features of target-domain images predicted with different levels of confidence;

- (3) Various experiments are designed to demonstrate the validity of the proposed methods, and the results indicate that both MBUDA and the adaptation-enhanced learning strategy outperform current approaches.
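Contribution (2) aligns target-domain images predicted with different confidence, and Section 4.1 notes that the strategy relies on pseudo labels. This excerpt does not say how confidence is measured, so the Python sketch below assumes the entropy-ranking scheme of unsupervised intra-domain adaptation (Pan et al., CVPR 2020): each target image is scored by its mean normalised prediction entropy, the images are ranked, and a hypothetical `ratio` splits them into high- and low-confidence subsets; a hypothetical `threshold` then turns confident pixels into pseudo labels. It is an illustration under these assumptions, not the authors' implementation, and it covers only the ranking and pseudo-labelling steps, not the feature alignment itself.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical representation: one prediction = list of per-pixel softmax
# probability vectors (at least two classes, e.g. orchard vs. other).
Prediction = Sequence[Sequence[float]]


def mean_entropy(pred: Prediction) -> float:
    """Mean per-pixel Shannon entropy, normalised to [0, 1] (0 = fully confident)."""
    num_classes = len(pred[0])
    total = 0.0
    for probs in pred:
        h = -sum(p * math.log(p) for p in probs if p > 0.0)
        total += h / math.log(num_classes)  # divide by the maximum possible entropy
    return total / len(pred)


def split_by_confidence(
    preds: Dict[str, Prediction], ratio: float = 0.5
) -> Tuple[List[str], List[str]]:
    """Assumed IntraDA-style ranking: sort images by mean entropy and put the
    lowest-entropy fraction `ratio` (hypothetical) into the high-confidence subset."""
    ranked = sorted(preds, key=lambda name: mean_entropy(preds[name]))
    cut = int(len(ranked) * ratio)  # floor of the requested fraction
    return ranked[:cut], ranked[cut:]


def pseudo_label(pred: Prediction, threshold: float = 0.9) -> List[int]:
    """Per-pixel argmax label; pixels whose top probability is below
    `threshold` (hypothetical value) are marked -1 and would be ignored."""
    labels: List[int] = []
    for probs in pred:
        best = max(range(len(probs)), key=lambda c: probs[c])
        labels.append(best if probs[best] >= threshold else -1)
    return labels


if __name__ == "__main__":
    # Toy two-class predictions for three target images, two pixels each.
    toy = {
        "img_a": [[0.95, 0.05], [0.90, 0.10]],
        "img_b": [[0.60, 0.40], [0.50, 0.50]],
        "img_c": [[0.99, 0.01], [0.97, 0.03]],
    }
    high, low = split_by_confidence(toy, ratio=0.5)
    print(high, low)  # ['img_c'] ['img_a', 'img_b']
    print(pseudo_label(toy["img_a"], threshold=0.92))  # [0, -1]
```

In practice the probability vectors would come from the segmentation network's softmax output; the split and the threshold are the two knobs such a strategy would expose.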

2. Materials and Methods

2.1. Related Work

2.1.1. Unsupervised Domain Adaptation

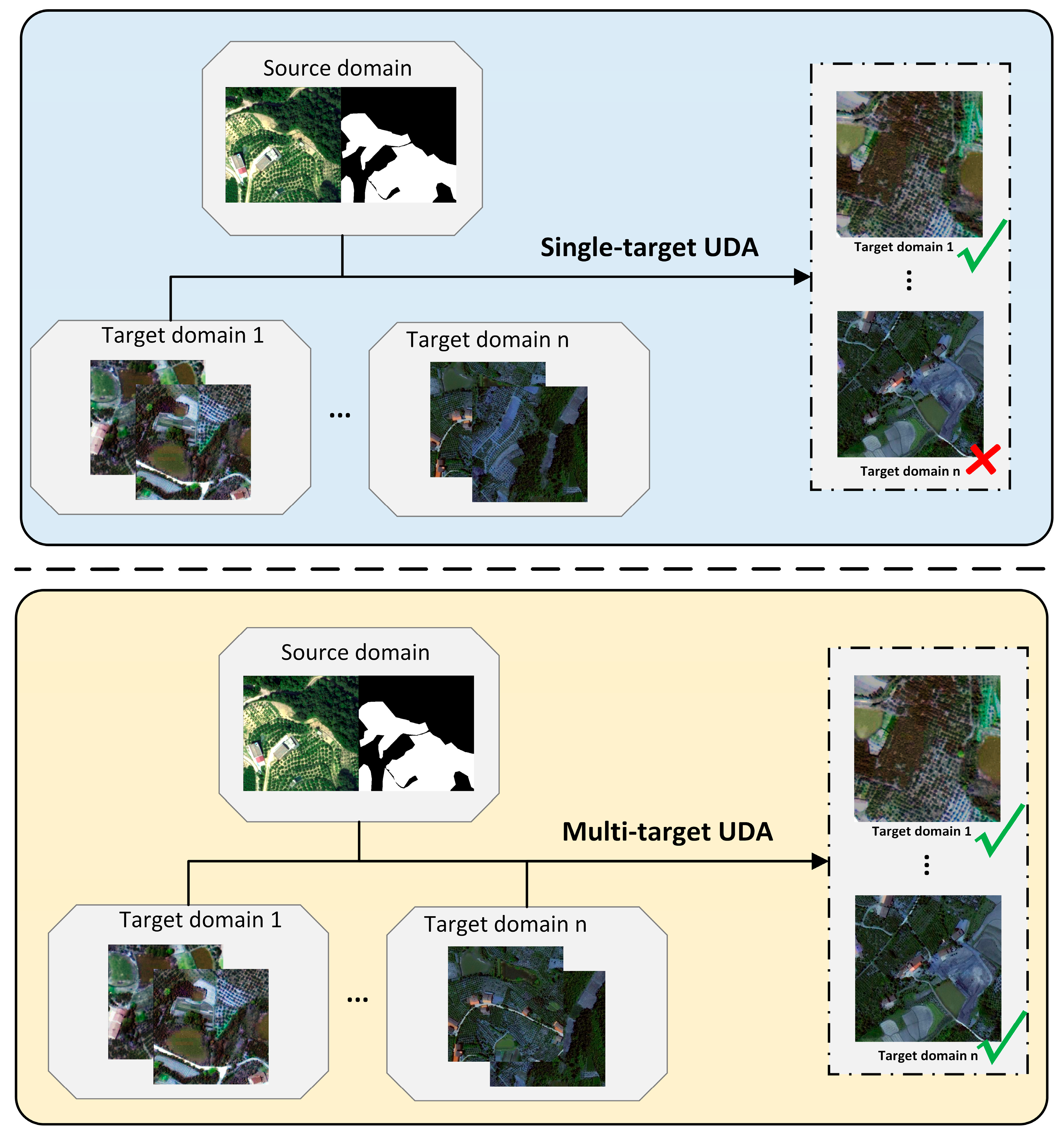

2.1.2. Multi-Target Unsupervised Domain Adaptation

2.2. Datasets

2.3. Methods

2.3.1. Preliminaries

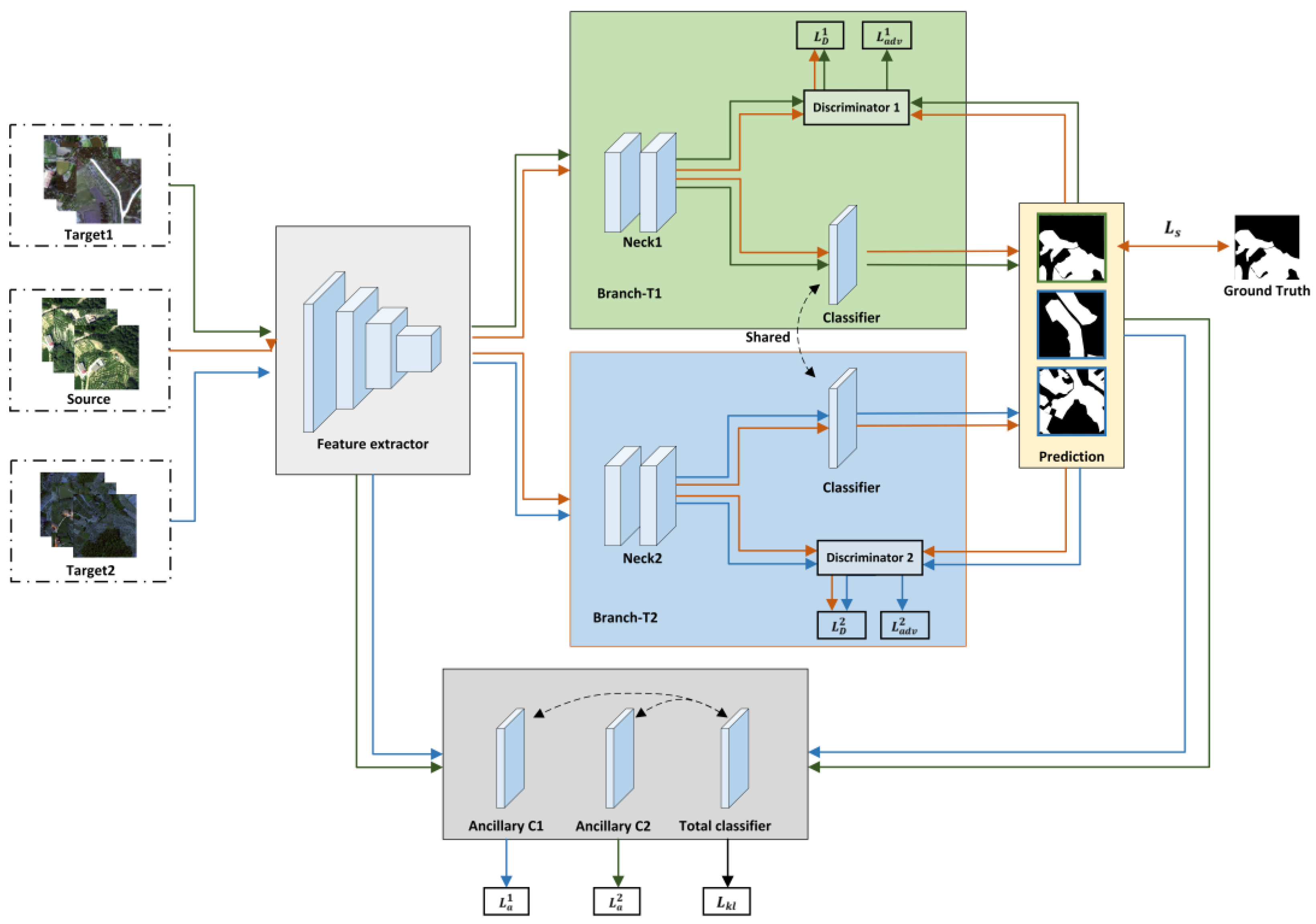

2.3.2. MBUDA Network

2.3.3. Adaptation Enhanced Learning Strategy

3. Results

3.1. Implementation Details

3.2. Evaluation Metrics

3.3. Experimental Results

3.3.1. Two Target Domains

3.3.2. Three Target Domains

3.4. Additional Impact of Pseudo Labels

4. Discussion

4.1. Comparison of Different Models

- Traditional domain adaptation models reduce the domain gap in order to adapt from the source domain to one specific target domain. When data from multiple target domains exist, as described in Section 2.3.1, there are two ways to directly extend single-target UDA models to multiple target domains, but neither gives satisfactory results. The “Single-T Baselines” approach, which trains a separate model for each target domain, is costly and difficult to scale, whereas “MT Baselines” ignores the distribution shifts across different target domains; as shown in Table 3, both are outperformed by MBUDA. The proposed MBUDA handles each source–target domain pair separately in its own branch, which enables the source domain to be aligned with multiple target domains simultaneously; MBUDA thus simplifies the training process while maintaining the performance of the segmentation model;

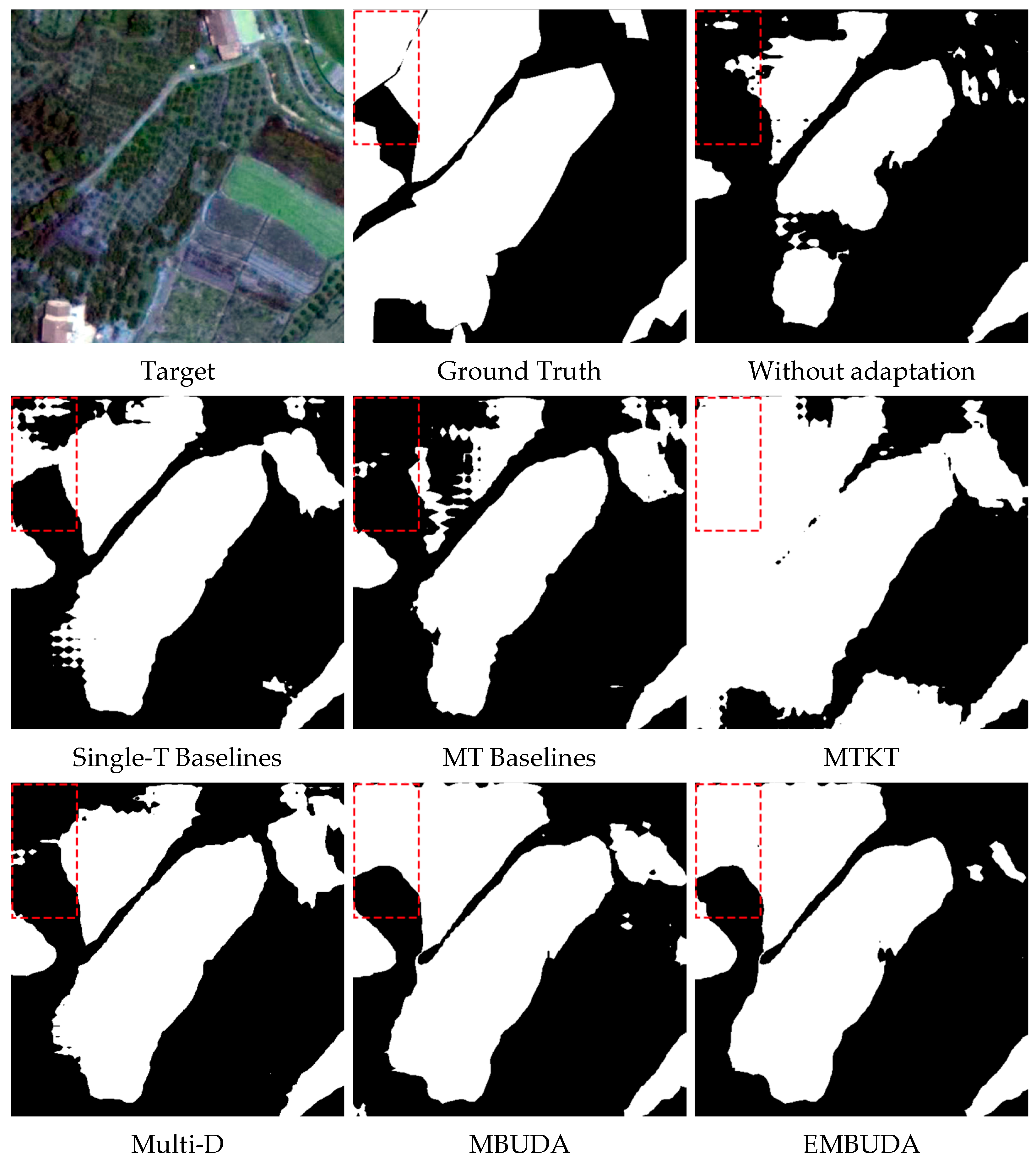

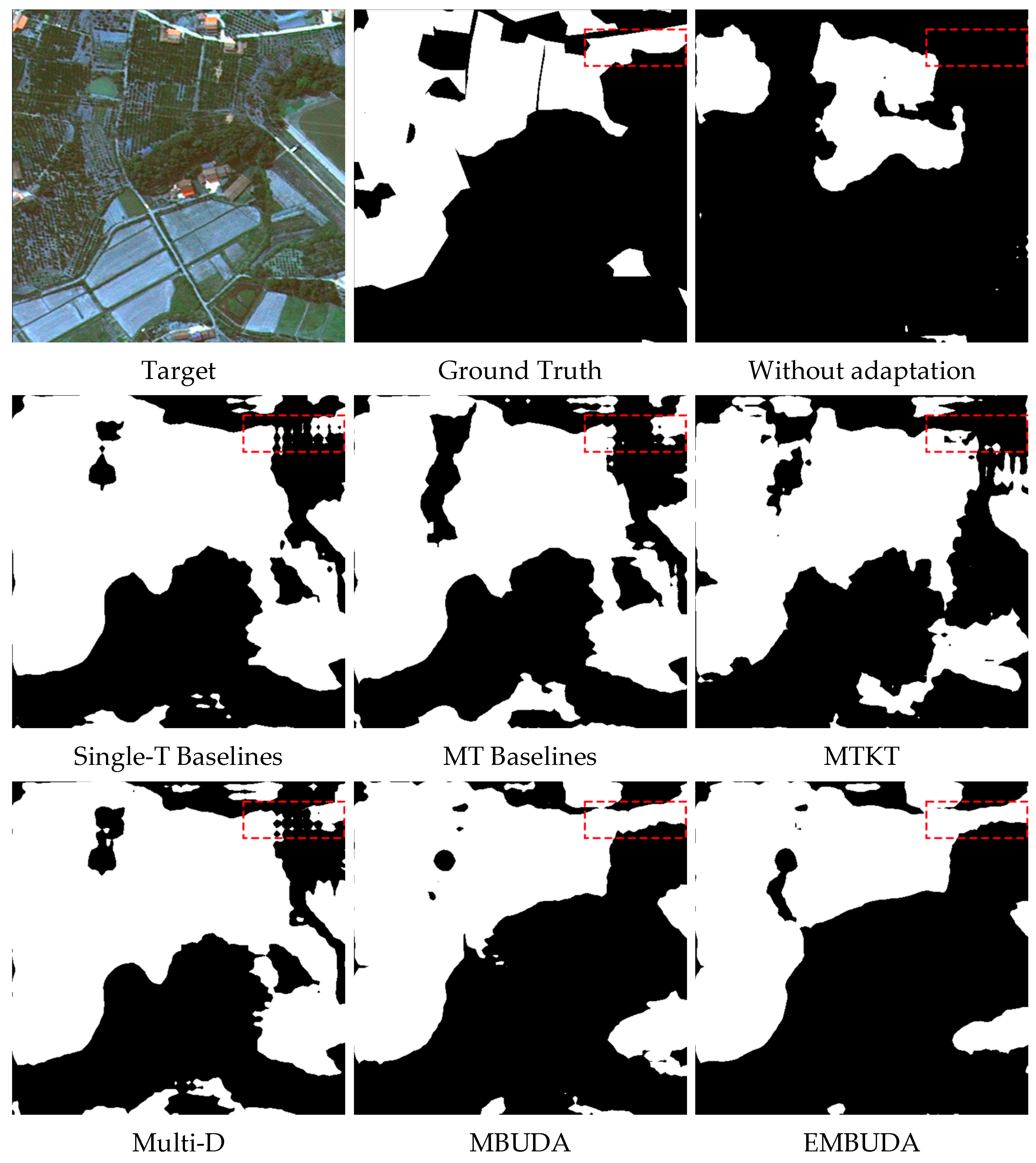

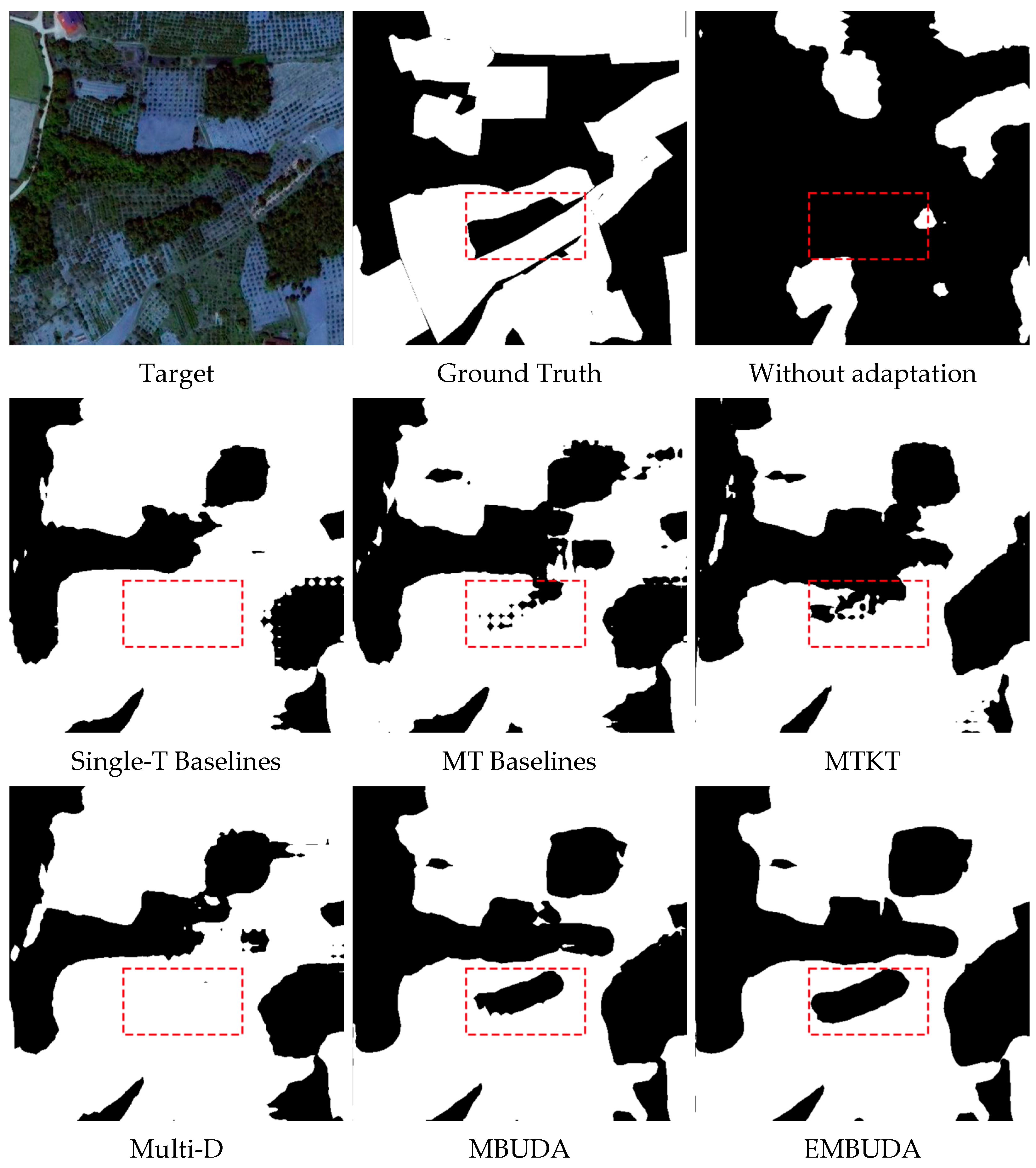

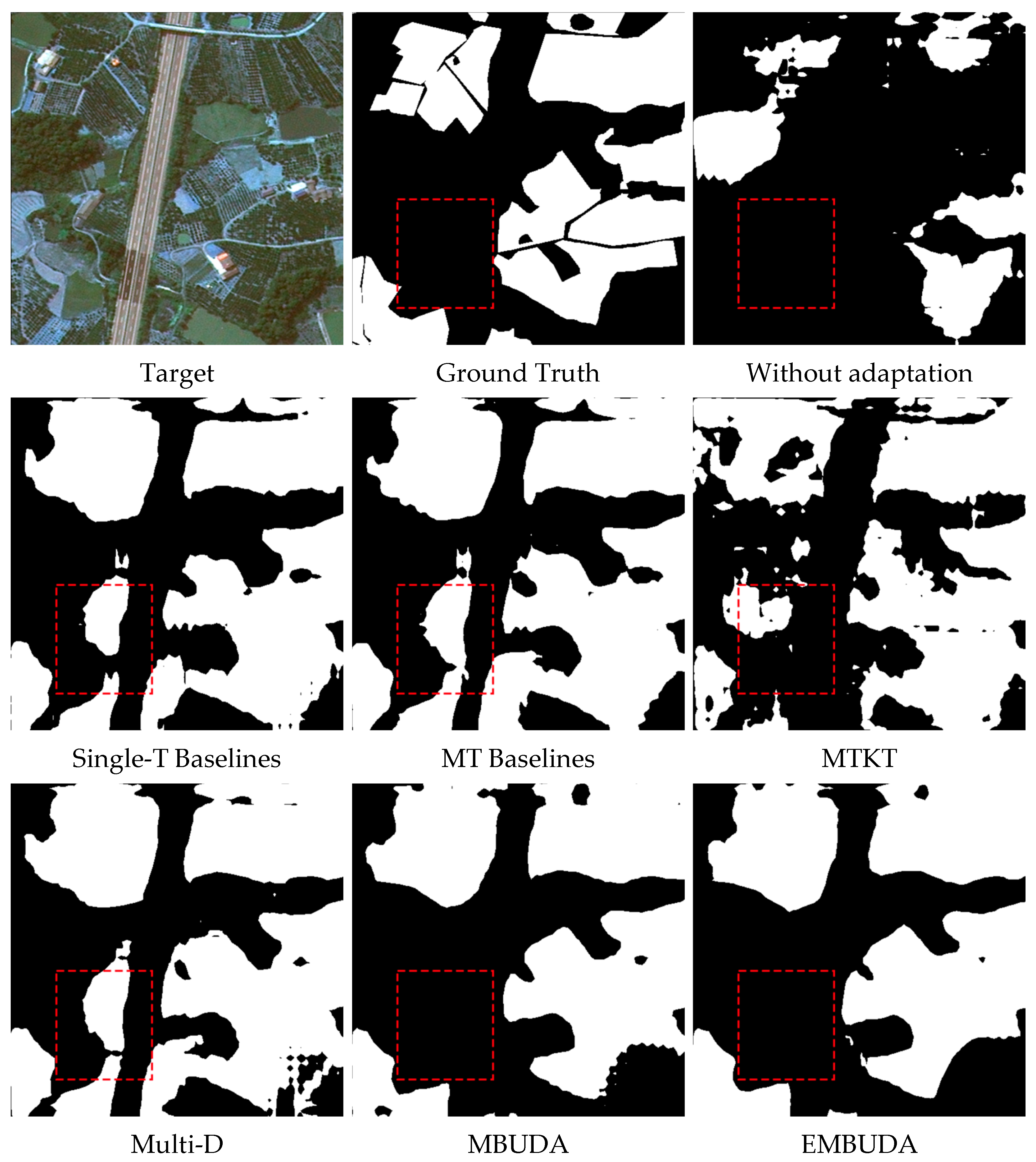

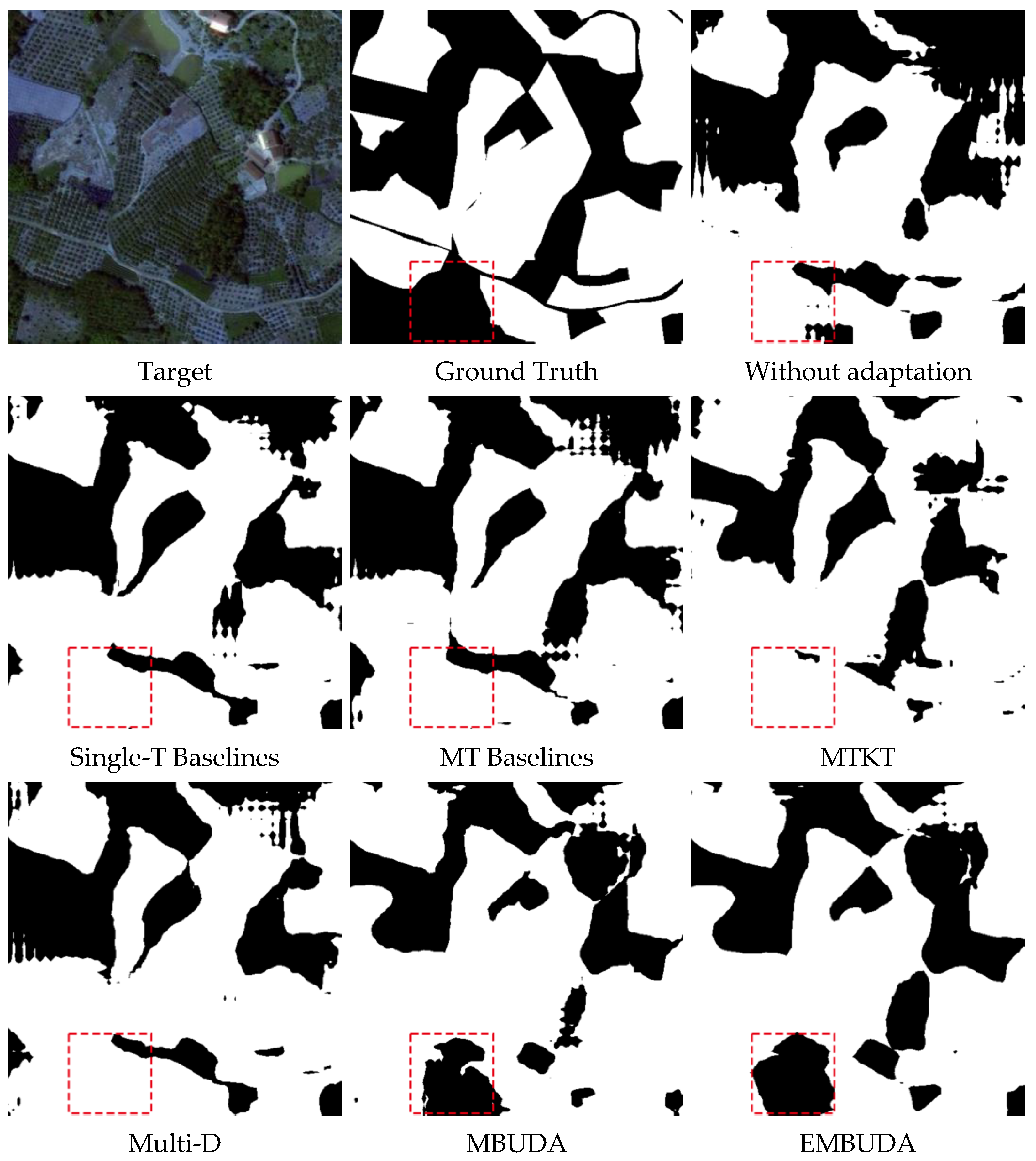

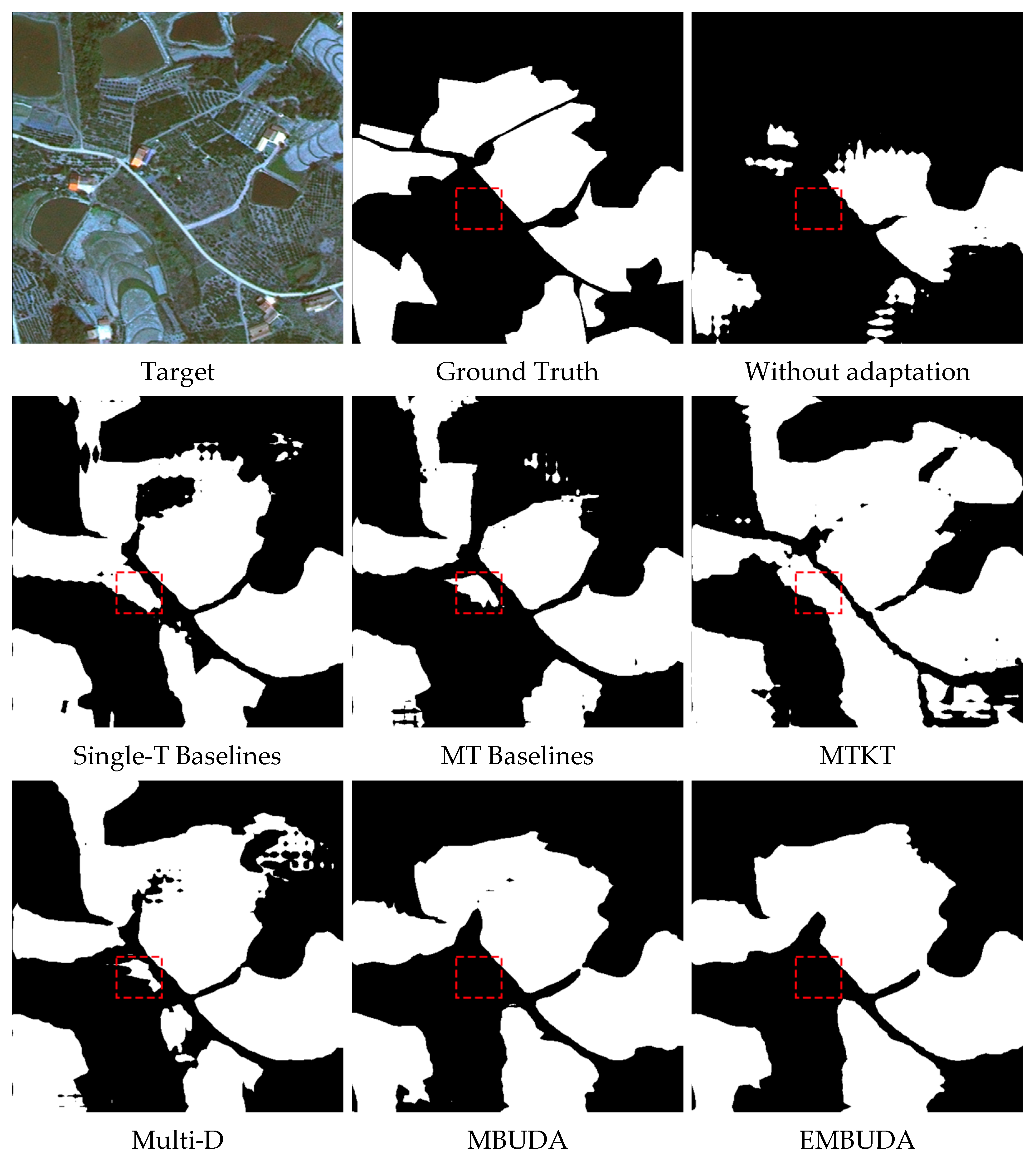

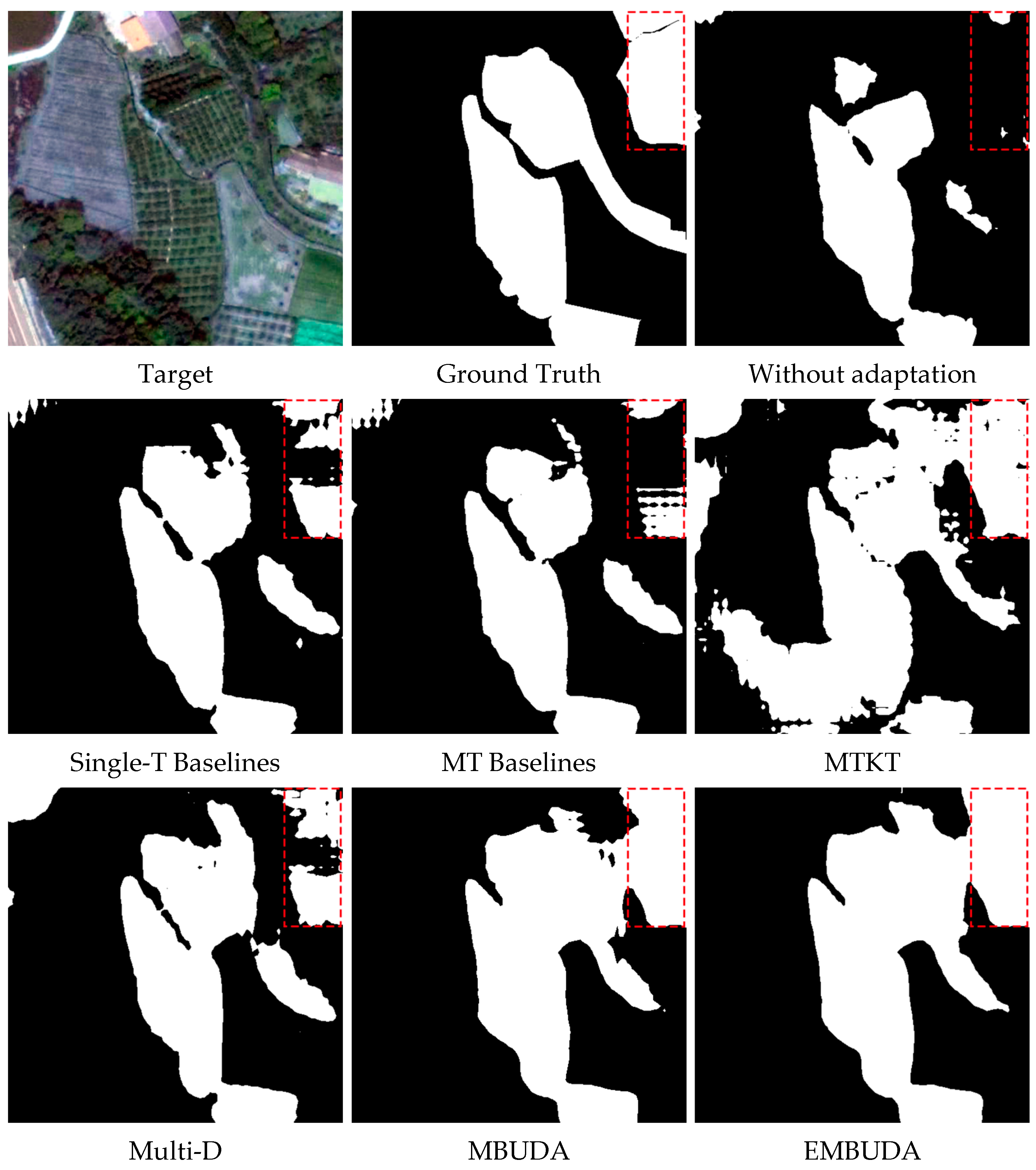

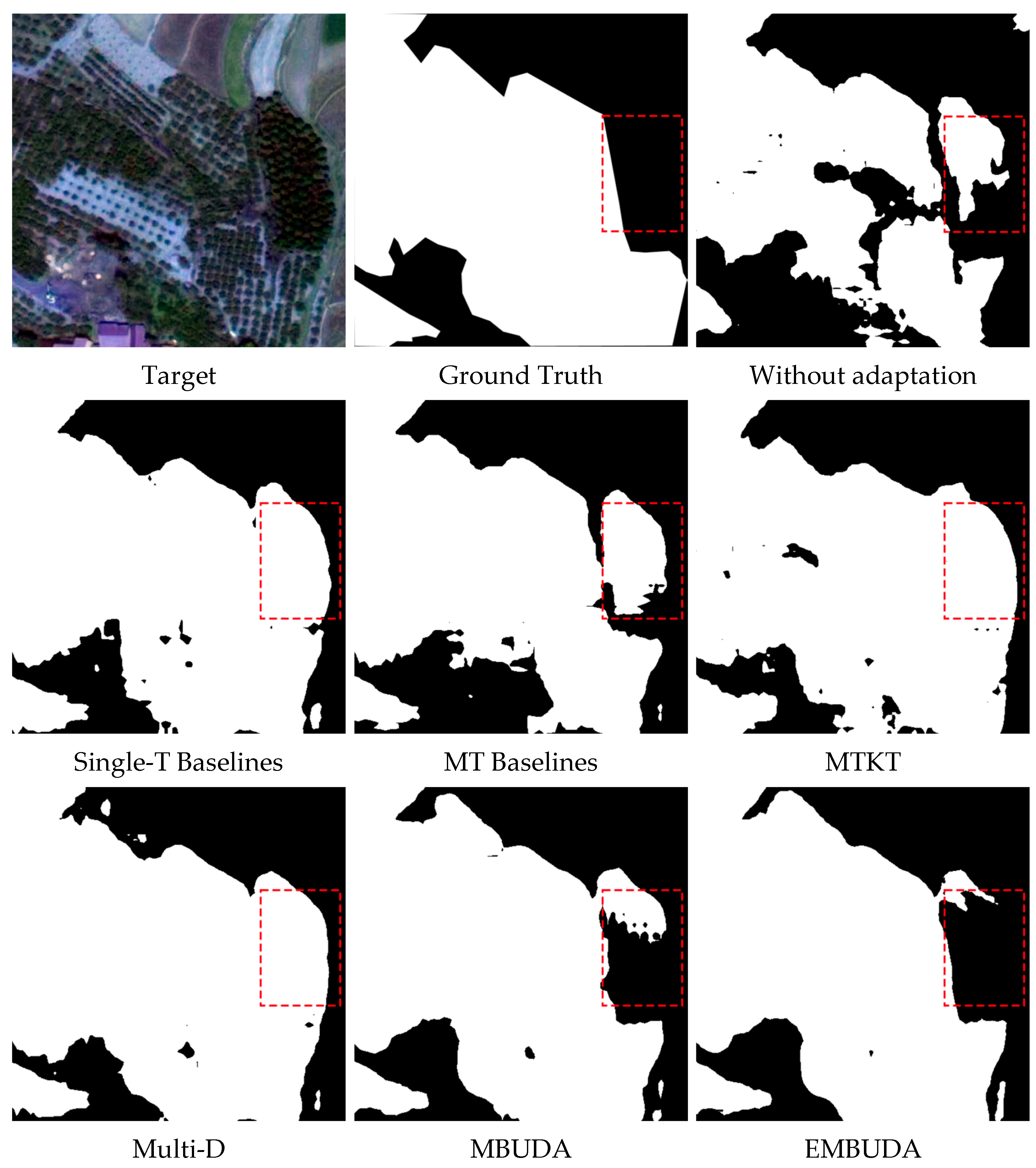

- In the MTUDA task, segmentation models need to learn a complete representation of each target domain in order to predict images from different domains accurately. The proposed MBUDA separates the feature-learning and alignment processes, which prevents private features from interfering with the alignment and ensures that the model learns both domain-invariant and private features. As shown in Table 3, MBUDA achieves average IoU gains of 4.62%, 6.65%, and 8.00% over “Multi-D” on the three groups of tasks, respectively, and gains of 8.92%, 8.51%, and 9.17% over “MT Baselines”. We attribute this improvement to the better feature representation learned by the model. As shown in Figure 6, the proposed methods better discriminate between orchard areas and other classes;

- Most unsupervised domain adaptation models ignore the large distribution gaps within the target data themselves when aligning source–target features. In this paper, we design an adaptation-enhanced learning strategy that uses pseudo labels to directly reduce the target–target domain gaps. As shown in Table 3, EMBUDA achieves average IoU gains of 1.27%, 1.06%, and 1.16% over MBUDA on the three groups of tasks, respectively. This improvement demonstrates the importance of further reducing the target–target domain gaps.
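To make the contrast between the two direct extensions and MBUDA concrete, the Python sketch below lists which source–target pairs each strategy trains on, using the domain names from this study. It is a hypothetical illustration of the data organisation only: the function names are invented, and no network layers, ancillary classifiers, losses, or discriminators are modelled.

```python
from typing import Dict, List, Tuple

Pair = Tuple[str, str]  # (source domain, target domain)


def single_target_baselines(source: str, targets: List[str]) -> Dict[str, List[Pair]]:
    """One independent single-target UDA model per target domain."""
    return {f"model_{t}": [(source, t)] for t in targets}


def merged_target_baseline(source: str, targets: List[str]) -> Dict[str, List[Pair]]:
    """One model; all target domains are pooled into a single target set,
    so shifts between the individual targets are not modelled."""
    return {"model": [(source, "+".join(targets))]}


def multibranch(source: str, targets: List[str]) -> Dict[str, List[Pair]]:
    """One model whose i-th branch (hypothetical indexing) handles the i-th
    source-target pair, so every pair is aligned separately."""
    return {"model": [(source, t) for t in targets]}


if __name__ == "__main__":
    targets = ["CY", "XT1", "XT2"]
    strategies = [
        ("Single-T", single_target_baselines),
        ("MT", merged_target_baseline),
        ("Multibranch", multibranch),
    ]
    for name, build in strategies:
        print(name, build("ZG", targets))
```

The Single-T layout grows by one model per target domain, the pooled layout keeps one model but collapses the targets into a single pair, and the multibranch layout keeps one model while still giving each source–target pair its own branch.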

4.2. Impact of Training Data

4.3. Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Outputs of Orchard Area Segmentation

| Index | ZG | CY | XT1 | XT2 |
|---|---|---|---|---|
| Source | UAV | Google Earth | Google Earth | DMC3 |
| Longitude and latitude | 110.5788E, 30.9798N | 111.3116E, 30.4194N | 111.5250E, 30.5663N | 111.5120E, 30.5543N |
| Spectral bands | RGB | RGB | RGB | RGB, NIR |
| Resolution | 0.2 m | 0.3 m | 0.6 m | 0.8 m |
| Image size (pixels) | 13,000 × 10,078 | 12,000 × 12,000 | 10,000 × 12,000 | 10,000 × 10,000 |

| Dataset | Total | Train Set | Validation Set | Test Set |
|---|---|---|---|---|
| Dataset ZG | 4598 | 2798 | 1200 | 600 |
| Dataset CY | 5000 | 3000 | 1200 | 800 |
| Dataset XT1 | 4162 | 2600 | 1062 | 500 |
| Dataset XT2 | 3500 | 2085 | 915 | 500 |

| Method (IoU, %) | ZG → CY + XT1: CY | ZG → CY + XT1: XT1 | ZG → CY + XT1: Average | ZG → CY + XT2: CY | ZG → CY + XT2: XT2 | ZG → CY + XT2: Average | ZG → XT1 + XT2: XT1 | ZG → XT1 + XT2: XT2 | ZG → XT1 + XT2: Average |
|---|---|---|---|---|---|---|---|---|---|
| Without adaptation | 42.17 | 23.90 | 33.04 | 42.17 | 15.36 | 28.77 | 23.90 | 15.36 | 19.63 |
| Single-T Baselines | 60.66 | 68.36 | 64.51 | 60.66 | 63.66 | 62.16 | 68.36 | 63.66 | 66.01 |
| MT Baselines | 58.52 | 65.88 | 62.20 | 57.20 | 62.69 | 59.95 | 66.32 | 62.02 | 64.17 |
| MTKT | 58.05 | 70.75 | 64.40 | 58.14 | 58.07 | 58.11 | 67.77 | 58.78 | 63.28 |
| Multi-D | 62.99 | 70.01 | 66.50 | 59.18 | 64.43 | 61.81 | 68.06 | 62.61 | 65.34 |
| MBUDA | 66.59 | 75.65 | 71.12 | 66.91 | 70.01 | 68.46 | 74.76 | 71.91 | 73.34 |
| EMBUDA | 68.36 | 76.42 | 72.39 | 67.18 | 71.85 | 69.52 | 76.38 | 72.61 | 74.50 |
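The IoU gains quoted in the Discussion are plain differences between the “Average” columns of this table. The short Python snippet below recomputes them so they can be checked at a glance; the values are copied from the table, and the helper name `gains` is illustrative only.

```python
from typing import Dict, List

# Average IoU (%) per task group, copied from the "Average" columns of the
# table above (source domain ZG, two target domains per task).
TASKS = ["ZG → CY + XT1", "ZG → CY + XT2", "ZG → XT1 + XT2"]
AVERAGE_IOU: Dict[str, List[float]] = {
    "MT Baselines": [62.20, 59.95, 64.17],
    "Multi-D": [66.50, 61.81, 65.34],
    "MBUDA": [71.12, 68.46, 73.34],
    "EMBUDA": [72.39, 69.52, 74.50],
}


def gains(method: str, baseline: str) -> List[float]:
    """Per-task difference in average IoU (percentage points), rounded to 2 d.p."""
    return [round(m - b, 2) for m, b in zip(AVERAGE_IOU[method], AVERAGE_IOU[baseline])]


if __name__ == "__main__":
    for method, baseline in [("MBUDA", "Multi-D"), ("MBUDA", "MT Baselines"), ("EMBUDA", "MBUDA")]:
        print(f"{method} vs {baseline}:", dict(zip(TASKS, gains(method, baseline))))
```

The differences come out as 4.62, 6.65, and 8.00 points between MBUDA and Multi-D, 8.92, 8.51, and 9.17 points between MBUDA and MT Baselines, and 1.27, 1.06, and 1.16 points between EMBUDA and MBUDA.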

| Method (IoU, %) | CY → ZG + XT1: ZG | CY → ZG + XT1: XT1 | CY → ZG + XT1: Average | CY → ZG + XT2: ZG | CY → ZG + XT2: XT2 | CY → ZG + XT2: Average | CY → XT1 + XT2: XT1 | CY → XT1 + XT2: XT2 | CY → XT1 + XT2: Average |
|---|---|---|---|---|---|---|---|---|---|
| Without adaptation | 64.12 | 58.77 | 61.45 | 64.12 | 29.31 | 46.72 | 58.77 | 29.31 | 44.04 |
| Single-T Baselines | 66.30 | 67.02 | 66.66 | 66.30 | 68.61 | 67.46 | 67.02 | 68.61 | 67.82 |
| MT Baselines | 64.47 | 64.23 | 64.35 | 66.68 | 62.01 | 64.35 | 67.78 | 63.54 | 65.66 |
| MTKT | 65.75 | 68.16 | 66.96 | 64.99 | 59.06 | 62.03 | 71.05 | 59.86 | 65.46 |
| Multi-D | 64.25 | 66.82 | 65.54 | 64.74 | 67.06 | 65.90 | 68.10 | 68.42 | 68.26 |
| MBUDA | 75.78 | 72.52 | 74.15 | 74.39 | 71.98 | 73.19 | 73.21 | 70.29 | 71.75 |
| EMBUDA | 76.36 | 73.12 | 74.74 | 74.84 | 73.27 | 74.06 | 74.71 | 70.91 | 72.81 |

| Method (IoU, %) | ZG → CY + XT1 + XT2: CY | ZG → CY + XT1 + XT2: XT1 | ZG → CY + XT1 + XT2: XT2 | ZG → CY + XT1 + XT2: Average | CY → ZG + XT1 + XT2: ZG | CY → ZG + XT1 + XT2: XT1 | CY → ZG + XT1 + XT2: XT2 | CY → ZG + XT1 + XT2: Average |
|---|---|---|---|---|---|---|---|---|
| Without adaptation | 42.17 | 23.90 | 15.36 | 27.14 | 64.12 | 58.77 | 29.31 | 50.73 |
| Single-T Baselines | 60.66 | 68.36 | 63.66 | 64.23 | 66.30 | 67.02 | 68.61 | 67.31 |
| MT Baselines | 56.02 | 66.52 | 61.68 | 61.41 | 64.40 | 66.19 | 62.55 | 64.38 |
| MTKT | 58.33 | 68.89 | 59.20 | 62.14 | 64.18 | 69.61 | 61.74 | 65.18 |
| Multi-D | 62.79 | 69.89 | 62.48 | 65.05 | 64.88 | 68.36 | 66.53 | 66.59 |
| MBUDA | 64.97 | 74.83 | 72.69 | 70.83 | 77.30 | 73.51 | 71.91 | 74.24 |
| EMBUDA | 66.21 | 75.62 | 73.92 | 71.92 | 79.69 | 75.36 | 72.14 | 75.73 |

| Method (IoU, %) | ZG → CY + XT1: CY | ZG → CY + XT1: XT1 | ZG → CY + XT1: Average | ZG → CY + XT1 + XT2: CY | ZG → CY + XT1 + XT2: XT1 | ZG → CY + XT1 + XT2: XT2 | ZG → CY + XT1 + XT2: Average |
|---|---|---|---|---|---|---|---|
| MBUDA | 66.59 | 75.65 | 71.12 | 64.97 | 74.83 | 72.69 | 70.83 |
| MBUDA+PL1 | 67.20 | 76.33 | 71.77 | 66.01 | 75.77 | 73.29 | 71.69 |
| MBUDA+PL2 | 67.43 | 76.48 | 71.96 | 66.12 | 75.29 | 73.57 | 71.66 |
| MBUDA+PL3 | 68.36 | 76.42 | 72.39 | 66.21 | 75.62 | 73.92 | 71.92 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, M.; Ren, D.; Sun, H.; Yang, S.X. Multibranch Unsupervised Domain Adaptation Network for Cross Multidomain Orchard Area Segmentation. Remote Sens. 2022, 14, 4915. https://doi.org/10.3390/rs14194915
