RaftNet: A New Deep Neural Network for Coastal Raft Aquaculture Extraction from Landsat 8 OLI Data

Abstract

1. Introduction

2. Study Area and Data

2.1. Study Area

2.2. Data and Preprocessing

3. Methods

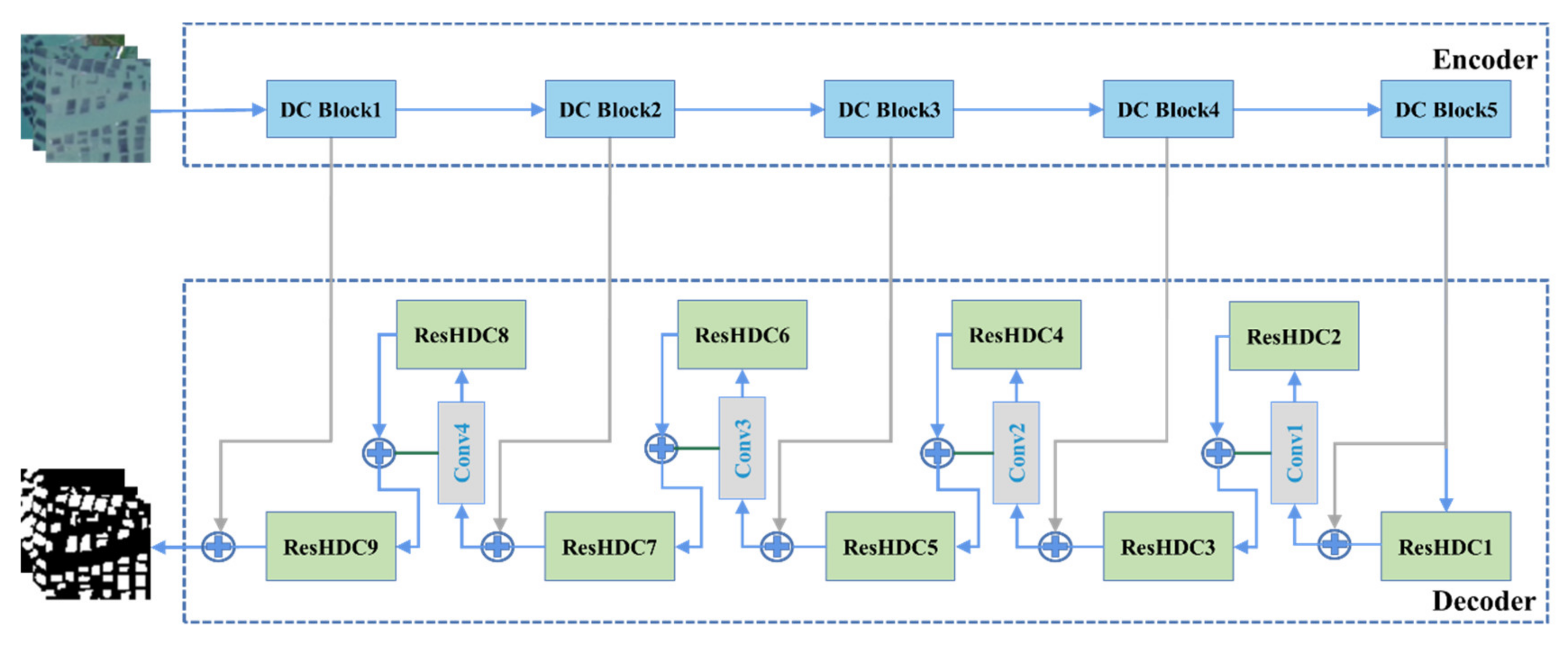

3.1. Structure of RaftNet

3.1.1. Dual-Channel Block for Downsampling Structures

3.1.2. The ResHDC Block

3.2. Contrastive Methods

3.3. Experimental Setup

3.3.1. Construction of the Training Dataset

3.3.2. Implementation Details

3.4. Accuracy Evaluation Indicators

4. Results and Discussion

4.1. Comparison with Various Deep Learning Methods

4.1.1. Typical Area Extraction Comparison

4.1.2. Extraction Accuracy Evaluation

4.2. Ablation Study

4.3. Spatiotemporal Change in Raft Aquaculture

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Band Name | Bandwidth (nm) | Resolution (m) |
|---|---|---|
| Band 1 Coastal Aerosol | 430–450 | 30 |
| Band 2 Blue | 450–510 | 30 |
| Band 3 Green | 530–590 | 30 |
| Band 4 Red | 640–670 | 30 |
| Band 5 Near-Infrared | 850–880 | 30 |
| Band 6 SWIR 1 | 1570–1650 | 30 |
| Band 7 SWIR 2 | 2110–2290 | 30 |
| Band 8 Panchromatic | 500–680 | 15 |
| Band 9 Cirrus | 1360–1380 | 30 |
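
The table above shows a 15 m panchromatic band alongside 30 m multispectral bands, which is the setting in which pan-sharpening is applied. As a rough illustration only, the sketch below assumes that "simpleMean" denotes the common per-pixel average of an upsampled multispectral band and the panchromatic band; the function names, the nearest-neighbour upsampling, and the toy reflectance values are assumptions for illustration and are not taken from the article.

```python
def upsample_nearest(band, factor=2):
    """Repeat every pixel `factor` times along rows and columns (30 m -> 15 m grid)."""
    out = []
    for row in band:
        wide = [value for value in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def simple_mean_sharpen(ms_band, pan, factor=2):
    """Average an upsampled multispectral band with the panchromatic band, pixel by pixel."""
    up = upsample_nearest(ms_band, factor)
    if len(up) != len(pan) or any(len(u) != len(p) for u, p in zip(up, pan)):
        raise ValueError("pan grid must be `factor` times the multispectral grid")
    return [[0.5 * (m + p) for m, p in zip(m_row, p_row)]
            for m_row, p_row in zip(up, pan)]


# Toy values (hypothetical reflectances): a 1 x 2 block of 30 m pixels
# and the matching 2 x 4 block of 15 m panchromatic pixels.
ms = [[0.10, 0.20]]
pan = [[0.12, 0.08, 0.22, 0.18],
       [0.10, 0.10, 0.20, 0.20]]
sharp = simple_mean_sharpen(ms, pan)
```

The output has the panchromatic grid size, so each 30 m pixel contributes to four 15 m pixels whose detail comes from the panchromatic band.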

| Methods | RMSE (B2) | RMSE (B3) | RMSE (B5) | Mean RMSE |
|---|---|---|---|---|
| GS | 0.004534 | 0.003535 | 0.002654 | 0.003574 |
| Brovey | 0.008843 | 0.010237 | 0.012003 | 0.010361 |
| IHS | 0.017246 | 0.021416 | 0.025506 | 0.021389 |
| simpleMean | 0.006009 | 0.004580 | 0.012761 | 0.007783 |
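
The Mean RMSE column above is consistent with the arithmetic mean of the three per-band values. The sketch below shows how a per-band RMSE could be computed and then averaged across bands; which reference image the RMSE is measured against is not stated here, so the `rmse` helper takes any two equal-length pixel sequences, and all function and variable names are hypothetical.

```python
import math


def rmse(reference, estimate):
    """Root-mean-square error between two equal-length pixel sequences."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((r - e) ** 2 for r, e in zip(reference, estimate))
    return math.sqrt(squared / len(reference))


def mean_rmse(per_band):
    """Arithmetic mean of the per-band RMSE values."""
    return sum(per_band.values()) / len(per_band)


# Per-band RMSE values copied from the table above.
table_rmse = {
    "GS":         {"B2": 0.004534, "B3": 0.003535, "B5": 0.002654},
    "Brovey":     {"B2": 0.008843, "B3": 0.010237, "B5": 0.012003},
    "IHS":        {"B2": 0.017246, "B3": 0.021416, "B5": 0.025506},
    "simpleMean": {"B2": 0.006009, "B3": 0.004580, "B5": 0.012761},
}

means = {method: round(mean_rmse(bands), 6) for method, bands in table_rmse.items()}
best = min(means, key=means.get)  # method with the lowest mean RMSE
```

With the tabulated values, `means` reproduces the Mean RMSE column and `best` is the GS method.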

| Network Parameters | Optimal Parameters |
|---|---|
| Loss function | binary_crossentropy |
| Optimizer | Adam |
| Activation | sigmoid |
| Initial learning rate | 0.0001 |
| Epoch | 50 |
| Batch Size | 5 |
| Dropout | 0.3 |
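
To make the loss and activation settings above concrete, the following sketch stores the tabulated hyperparameters in a small configuration object and evaluates a sigmoid output with binary cross-entropy on toy values. It is a dependency-free illustration, not the training code used for RaftNet: the `TrainingConfig` class, the reading of "Activation" as the output-layer activation, the clipping constant `eps`, and the toy logits and labels are all assumptions.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameters copied from the table above (field names are hypothetical)."""
    loss: str = "binary_crossentropy"
    optimizer: str = "Adam"
    output_activation: str = "sigmoid"  # assumed to apply to the output layer
    initial_learning_rate: float = 1e-4
    epochs: int = 50
    batch_size: int = 5
    dropout: float = 0.3


def sigmoid(x):
    """Map a logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


cfg = TrainingConfig()
logits = [2.0, -1.0, 0.0]  # toy per-pixel network outputs
labels = [1, 0, 1]         # 1 = raft pixel, 0 = background (assumed encoding)
probs = [sigmoid(z) for z in logits]
loss = binary_crossentropy(labels, probs)
```

A pixel predicted at probability 0.5 contributes ln 2 to the loss regardless of its label, which is a convenient reference point when reading training curves.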

| Group | Methods | Precision | Recall | F1-Score | OA | IoU |
|---|---|---|---|---|---|---|
| First Group | FCN | 81.5% | 79.4% | 80.5% | 94.1% | 67.3% |
| First Group | SegNet | 50.8% | 52.6% | 51.7% | 85.0% | 34.9% |
| Second Group | UNet | 83.1% | 85.7% | 84.4% | 95.2% | 73.0% |
| Second Group | ResUNet | 70.8% | 91.4% | 79.8% | 92.3% | 66.4% |
| Second Group | UNet++ | 60.5% | 90.6% | 72.6% | 89.5% | 72.4% |
| | RaftNet | 84.5% | 88.1% | 86.3% | 95.7% | 75.9% |
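
The five indicators reported above can all be derived from pixel-level confusion counts. The sketch below uses the standard binary-segmentation definitions, with raft pixels assumed to be the positive class; the exact formulas used in the article are not reproduced in this text, and the function name and the toy counts are hypothetical.

```python
def segmentation_metrics(tp, fp, fn, tn):
    """Standard binary-segmentation indicators from confusion counts."""
    if tp + fp == 0 or tp + fn == 0:
        raise ValueError("need at least one predicted and one true positive pixel")
    precision = tp / (tp + fp)            # share of predicted raft pixels that are correct
    recall = tp / (tp + fn)               # share of true raft pixels that are found
    f1 = 2 * precision * recall / (precision + recall)
    oa = (tp + tn) / (tp + fp + fn + tn)  # overall accuracy
    iou = tp / (tp + fp + fn)             # intersection over union of the raft class
    return {"precision": precision, "recall": recall, "f1": f1, "oa": oa, "iou": iou}


# Toy counts for a 1000-pixel tile (hypothetical).
m = segmentation_metrics(tp=80, fp=20, fn=10, tn=890)
```

Under these definitions, F1 and IoU of the same class are linked by IoU = F1 / (2 − F1), which gives a quick consistency check when both are reported.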

| Methods | Precision | Recall | F1-Score | OA | IoU |
|---|---|---|---|---|---|
| UNet | 83.1% | 85.7% | 84.4% | 95.2% | 73.0% |
| DCUNet | 87.4% | 82.1% | 84.7% | 95.4% | 73.3% |
| RaftNet | 84.5% | 88.1% | 86.3% | 95.7% | 75.9% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Su, H.; Wei, S.; Qiu, J.; Wu, W. RaftNet: A New Deep Neural Network for Coastal Raft Aquaculture Extraction from Landsat 8 OLI Data. Remote Sens. 2022, 14, 4587. https://doi.org/10.3390/rs14184587