Abstract

Assembly, Integration, and Verification/Testing (AIV or AIT) is a standardized guideline for projects to ensure consistency throughout spacecraft development phases. The goal of establishing such a guideline is to assist in planning and executing a successful mission. While AIV campaigns can help reduce risk, they can also take years to complete and be prohibitively costly for smaller new space programs, such as university CubeSat teams. This manuscript outlines a strategic approach to the traditional space industry AIV campaign through demonstration with a 6U CubeSat mission. The HYPerspectral Smallsat for Ocean observation (HYPSO-1) mission was developed by the Norwegian University of Science and Technology’s (NTNU) SmallSatellite Laboratory in conjunction with NanoAvionics (the platform provider). The approach retains critical milestones of traditional AIV, outlines tailored testing procedures for the custom-built hyperspectral imager, and provides suggestions for faster development. A critical discussion of de-risking and design-driving decisions, such as imager configuration and machining custom parts, highlights the consequences that helped, or alternatively hindered, development timelines. This AIV approach has proven key for HYPSO-1’s success, defining further development within the lab (e.g., already with the second-generation, HYPSO-2), and can be scaled to other small spacecraft programs throughout the new space industry.

1. Introduction

A rising demand for remote sensing data [1,2], along with the increased accessibility of small satellite platforms and launch opportunities [3], has opened the door to a growing industry of governmental, commercial, and university-developed small satellite missions. These missions tend to focus on quick development times and highly ambitious scientific objectives. Immature technologies are tested rapidly on lower-cost bus platforms. To do this, spacecraft (s/c) platforms can be designed within the Cube Satellite (CubeSat) form factor and use payloads and subsystems based on Commercial Off-The-Shelf (COTS) components [3]. This minimizes the effort needed for equipment and allows more time for payload development. Using standardized components can reduce the overall cost and schedule, but it also introduces integration and verification challenges when combining subsystems from different vendors [4,5]. The Norwegian University of Science and Technology (NTNU) has developed a 6U CubeSat with a novel, high-performance hyperspectral imager using multiple COTS optical elements and a configurable on-board processor for ocean color remote sensing. The goal of the mission is to provide open-access and on-demand hyperspectral data for scientists and interested parties [6].

CubeSats are standard-design, miniature satellite platforms developed in the 2000s that enable easy launch piggybacking and accelerated development times, among other advantages [7]. This standard design helped the development of microspace and the new space industry, significantly reduced the cost for both developers and users, and effectively lowered the barrier to entry to space. As with any new technological advancements, there were significant challenges at the beginning. Less than 50% of the first 400 nano satellites (≤10 kg) successfully completed their mission [8,9]. It is often difficult to pin-point the exact reasons for a failure after launch, especially when no contact can be established with the satellite [5,10]. However, at least in early missions, one of the most common sources of failure was functional integration error—these being most prevalent in first-time university projects [11].

The National Aeronautics and Space Administration (NASA) and the European Space Agency (ESA) provide standards for their well-established s/c programs, which guide the system life-cycle management process. Systems engineering is concerned with the big picture of a project, starting with the stakeholder needs and ensuring that these are traced throughout the design phases and verified and validated at each level of design. Verification focuses on ensuring that the requirements are met (i.e., that the system is built according to specifications, or built right), while validation is concerned with ensuring that the right system is built according to the stakeholder needs [12,13].

The process of verification is included in what is called the Assembly, Integration, and Testing (AIT) or Assembly, Integration, and Verification (AIV) campaign. AIT is an integral part of the system’s life-cycle, as discussed in the Systems Engineering Handbook [13]. Engineers approach these campaigns using, for example, the vee-model [14] of system development, where one starts at a high level to define the general goals and requirements of the mission [15]. The mission is then broken down into systems, subsystems, etc., down to the smallest elements. The detailed components are finally integrated together. The AIT campaign is completed with verification and validation of the full system through operational use. Other system development approaches include the spiral model or the waterfall model (e.g., in Boehm [16], exemplified with software systems). If deadlines are kept on schedule, integration goes smoothly, and testing checks out, this process can support early identification of errors while there is still time left for fixing them. Errors caught late in the development process are often significant and can even delay launch.

When the AIT development process is scaled to the CubeSat industry, the reality shifts. CubeSat projects are often intended to be high risk and low investment when compared with other s/c [8,11]. When a launch is purchased, the date is fixed since most CubeSats do not have a dedicated launch (they instead rely on piggybacking). Costs are lower since the s/c are significantly smaller and can share the launch costs with others piggybacking, among other reasons [3]. This gives smaller-budget projects the opportunity to test out ideas, but it also requires good control over the entire development schedule. As seen in recent years, interest in CubeSats is growing [17], especially university involvement in the field. AIT campaigns for larger s/c can be lengthy [15], but this is often not viable when scientific data must be delivered quickly, within university contexts, or for Small- and Medium-sized Enterprises (SMEs).

At NTNU, the AIT campaign was tailored to suit both the intended schedule and the university budget, while closely following s/c industry standards so as to limit the possibility of functional integration errors. Our proposed campaign follows the lean development [9] of the HYPerspectral Smallsat for Ocean observation (HYPSO-1) CubeSat, which is a hyperspectral mission designed to observe ocean color and algal blooms, primarily along the Norwegian coast (HYPSO-1 mission, https://www.hypso.space/, accessed on 5 July 2022). Given that CubeSat failures are common among first-time university projects, efforts were focused solely on payload development, rather than the CubeSat as a whole. This approach will be adapted as experience and capability grow within the team. For HYPSO-1, the CubeSat bus was purchased from NanoAvionics Corp. (NanoAvionics) (NanoAvionics Corp., https://nanoavionics.com/, accessed on 5 July 2022) and includes the main 6U structure, solar panels, batteries, Attitude Determination and Control System (ADCS) hardware, a flight computer, and data handling, as well as radios for communication. All subsystems have space heritage from previous Low Earth Orbit (LEO) missions. NTNU is responsible for the payload development, and the presented AIT procedure is based on this.

This paper is organized into detailed descriptions of the assembly, integration, and testing/verification activities of the HYPSO-1 hyperspectral imaging payload. We outline the most critical aspects of larger s/c practices and demonstrate how they can be scaled for smaller project campaigns, which often lack access to knowledge in the field (personnel with experience), adequate assembly/testing facilities, and even necessary software. Selected test results are presented to demonstrate the validation methods. Finally, critical decisions that set apart the presented AIT approach are discussed with implications for CubeSat projects, both within academia and industry.

1.1. HYPSO-1 Mission Overview

Mission statement:

The HYPSO-1 CubeSat mission shall support and provide coastal ocean color mapping and monitoring through hyperspectral imaging, intelligent on-board processed data, and on-demand communications in a concert of robotic agents.

Specific details about the mission and payload, including the analysis of system budgets, were presented in previous works by the NTNU’s SmallSat Lab and can be found as outlined in Table 1. A brief overview is presented below, while this work focuses on the assembly, integration, and testing procedures.

Table 1.

Summary of selected supplementary works about the HYPSO-1 mission and payload, for reference.

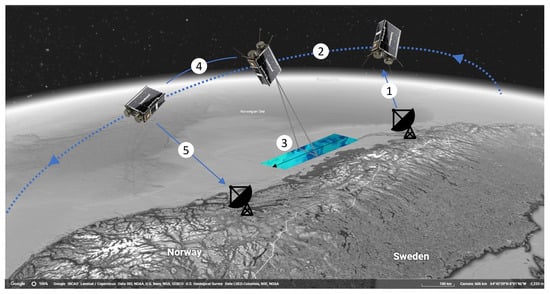

Concept of operations: In order to provide the ocean color data products, the CubeSat payload contains a hyperspectral imager [19], a red-green-blue camera, and an on-board processing unit. The operational concept (Figure 1) is to reside in LEO for a lifetime of 5 years, harvesting energy via solar panels and conserving energy while in eclipse. Once over a target, the CubeSat scans along a coastal region with a 70 km swath width. Images will be captured with overlapping pixels by completing a slew maneuver, or rotation. Magnetorquers and reaction wheels adjust the imager pointing based on Inertial Measurement Unit (IMU), Global Navigation Satellite System (GNSS), and star tracker measurement processing on-board. More information can be found in Grøtte et al. [6]. After imaging, the collected data can then be processed on-board [23], compressed, and downlinked to a ground station. NTNU has its own ground station for transmitting and receiving data, but also has agreements with companies operating stations in Norway (Svalbard), Lithuania, and Spain to maximize downlinking capability. The local station can operate on 400 MHz Ultra-High Frequency (UHF), as well as the S-band; the commercial stations operate on the S-band. Mission control in Trondheim (NTNU) will then command in situ agents to verify or obtain more detail on the imaged targets.

Figure 1.

Simplified operational concept showing (1) uplink of commands, (2) slew maneuver, (3) pushbroom hyperspectral imaging, (4) on-board processing, and (5) downlinking of data (inspired by M. Grøtte; map from Google Earth).

Requirements: The goal of the AIT campaign is to reduce the risk of failure during launch and in-orbit operations, in addition to verifying and validating the requirements and objectives of the mission. Mission objectives follow from the mission statement (above) and depend on various levels of requirements such as environmental, system, and performance requirements, to name a few. Concerning the payload, Table 2 shows a selection of key performance requirements for HYPSO-1. These will be used throughout this paper to demonstrate how validation can be accomplished through testing, keeping in mind that this is only a very small subset of the full list of requirements. A successful AIT campaign cannot ensure mission success, but it will increase the chance of accomplishing that goal.

Table 2.

Selected payload performance requirements from the HYPSO-1 mission, used to demonstrate validation in the AIT campaign. The Validation column points to the section in this paper that explains how each of these requirements was validated. Note: these are high-level requirements and are not quantified at the level at which they are presented. FWHM refers to the Full-Width at Half-Maximum of the signal intensity peaks measured for calibration. Requirements derived from: previous missions [24,25], the IOCCG [26], and ESA’s tailored ECSS [27].

1.2. Model Philosophy

The model philosophy is a strategy to help plan out how many components will be required and to define the role that each one of those components will play throughout the AIT campaign and final mission. This is a trade-off between cost and risk. A chosen model philosophy strongly influences the direction of the campaign. The overarching model philosophies can be divided into two categories: (1) those with a Protoflight Model (pFM) approach [28,29] and (2) those with a Qualification Model (QM)/Engineering Qualification Model (EQM) and Flight Model (FM) approach [30,31,32]. The pFM approach reduces the cost of parts and the use of facilities, but increases the risk of delay if the pFM fails. Moreover, the pFM will be subject to more testing with harsher loads than a strictly FM approach, which can increase the probability of failure during launch. Essentially, space hardware must be reliably functional, but also must not be over-tested to the point that it already provides less than optimal performance at launch.

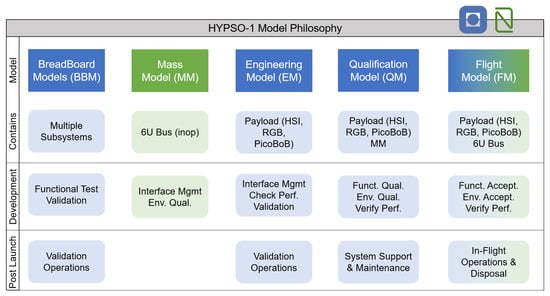

For HYPSO-1, the second philosophy was chosen, creating a dedicated FM. This is the model that was launched into space. It went through minimal acceptance testing [27] and was additionally tested for full functionality. The more rigorous qualification testing, which proves that the satellite will survive mission extremes, was completed on another model that will be referred to as the QM. These two models ensured that duplicate components were available at all times and reduced the risk of compromising the flight hardware during testing. There is also a third model, the Engineering Model (EM), which was integral for speeding up the schedule by allowing for preliminary testing and quick results. A third full model comes at an extra cost, though, both in terms of extra components and time. The HYPSO-1 model philosophy, including the function of each model, is shown in Figure 2.

Figure 2.

The HYPSO-1 model philosophy with both NTNU (blue) and NanoAvionics (green) deliverables. The payload contains the Hyperspectral Imager (HSI), Red-Green-Blue camera (RGB), and electronics stack/computer/Break-out Board (PicoBoB). The Mass Model (MM) is a non-operational, hence “inop”, version of the 6U bus with the correct distribution of component masses. Abbreviations are as follows: Mgmt = Management, Perf. = Performance, Funct. = Functional, Qual. = Qualification, Env. = Environmental, Accept. = Acceptance.

The Hyperspectral Imager (HSI) went through 7 iterations from its initial inception, the Red-Green-Blue (RGB) imager through 2, the payload controlling electronics board 3, and the structural/mechanical mounting platform for the payloads 6. The BreadBoard Models (BBMs) and EM versions of subsystems, such as the Flat Satellite (FlatSat), were mostly incorporated into the EM by the end of the campaign to save time and reduce costs. It was determined that testing on flight hardware, as with a Protoflight Model approach, would create too high a risk of damage during testing.

1.3. CubeSat Verification and Validation

Failures during launch and during in-orbit operations are equally detrimental to a mission. Early efforts in the AIT campaign should be aimed at uncovering the failures at the system functional level [8,33]. These functional failures can be “design, manufacturing, workmanship or of operational origin” [33] (p. 1). Technology Readiness Level (TRL), Integration Readiness Level (IRL), and System Readiness Level (SRL) [34] are especially useful in classifying critical components and functionality. The level identifiers can support projects by helping to plan where to focus efforts for verification of elements, components, and subsystems. For example, the TRL of a COTS component may be 9 (proven) in its nominal operational environment, but the TRL is reduced to 5/6 for a space project before verification activities have commenced.

The IRL and SRL can be challenging for CubeSat teams because teams often mix components from different vendors, develop the interfaces in-house, and lack the subsystems to perform full system-level functional testing. In Honoré-Livermore et al. [35], the NTNU HYPSO-1 team demonstrated how the maturity levels could be used to evaluate the subsystems and to inform the project team how to focus efforts. The results indicated that using a FlatSat [36] with non-space-ready components or leftover development models (breadboards) can greatly reduce the risks of system-level failures. A FlatSat allows for verification of the integrated system and interfaces, is easy to maintain and reconfigure, and can be used for validation as well. Choosing which subsystems and components to include in the FlatSat should be directed by the level of risk associated (i.e., how mature they are) and their criticality to the mission (for example, the payload is typically highly critical and has low maturity). Other verification activities on the component level could be to test elements in launch or operational environments, in addition to the EM or pFM testing.
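The selection logic described above, where low maturity and high criticality both argue for including a subsystem in the FlatSat, can be sketched in a few lines. This is only an illustration and not the assessment method of [35]: the scoring rule, the 1 to 5 criticality scale, the `flatsat_priority` helper, and all numerical values are hypothetical.

```python
from dataclasses import dataclass

MAX_TRL = 9  # top of the standard 1-9 Technology Readiness Level scale


@dataclass(frozen=True)
class Subsystem:
    name: str
    trl: int          # maturity, 1 (lowest) to 9 (flight proven)
    criticality: int  # hypothetical scale: 1 (low) to 5 (mission critical)


def flatsat_priority(s: Subsystem) -> int:
    """Hypothetical score: the maturity gap to TRL 9, weighted by criticality."""
    return (MAX_TRL - s.trl) * s.criticality


def rank_for_flatsat(subsystems):
    """Order subsystems from highest to lowest FlatSat priority."""
    return sorted(subsystems, key=flatsat_priority, reverse=True)


# Illustrative values only; they are not taken from the HYPSO-1 assessment.
candidates = [
    Subsystem("UHF radio", trl=9, criticality=3),
    Subsystem("payload", trl=5, criticality=5),
    Subsystem("payload controller", trl=7, criticality=4),
]

for s in rank_for_flatsat(candidates):
    print(f"{s.name}: priority {flatsat_priority(s)}")
```

With these invented inputs, the payload ranks first because it combines the largest maturity gap with the highest criticality, which mirrors the qualitative argument in the text. A real assessment would also weigh the IRL and SRL of the interfaces.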

In addition to relying on internal resources for development, programs such as ESA’s Fly Your Satellite! provide tools especially designed for assisting university CubeSat projects. They can serve as mentors, supply facility access, and review materials, providing valuable feedback [37,38]. While HYPSO-1 was not part of this program, there are advantages to joining targeted projects such as these and contributing to shared knowledge in the field. That is one of the aims in presenting the HYPSO-1 AIT campaign here.

2. Materials, Methods, and Integration

The HYPSO-1 bus and payload were assembled separately by the NanoAvionics and NTNU teams, respectively. This led to considerable attention on the communication and interfaces between the separate components. Therefore, team organization and documentation became critical factors early on in the project. The focus of this section is on the NTNU’s development since the NanoAvionics bus already has flight heritage.

2.1. Organization

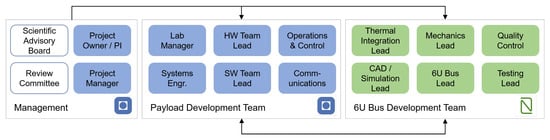

The HYPSO-1 project team is organized in a way that enables sub-teams to develop components and subsystems independently before satellite integration. The task division led to more lateral responsibility, common in Scandinavian project management [39], rather than waiting on hierarchical approval. The project owner/principal investigator (professor) was responsible for securing funding and connections that would form the basis of the scientific advisory board. The project manager was responsible for facilitating communication across the team, planning reviews, and overseeing the day-to-day activities of the development teams. This role was filled by a Ph.D. student with additional hours allocated specifically for the tasks. These two higher management roles for the mission were filled by NTNU members.

Internally, at NTNU, the team consisted of Bachelor’s/Master’s students (per semester), 8 Ph.D. students, 2 postdocs, and professors and engineers. The size of the team remained approximately constant, but each semester, the student team base changed and new students had to be trained. Members were divided into payload subsystem development teams. The teams are summarized in Figure 3. Each team was tasked with the assembly of their own components, software, or infrastructure. The lab manager played a key role in ensuring that the lab was functional at all times, ordering and acquisition were efficient, safety measures were adhered to, and equipment repairs were made without significant delays. The NTNU team could, however, have benefited from closer contact and mentorship from experts in the field, either external or internal. Most students involved in the project had no prior experience in space sciences and/or s/c development.

Figure 3.

The overall organization of AIT responsibility for HYPSO-1. The NTNU team is shown in blue, NanoAvionics in green, and external participants in white.

Externally, the NanoAvionics team independently developed their standard bus and modified it to fit the payload, while observing mission objectives. The 6U bus was entirely assembled at NanoAvionics facilities. The responsibility for integration and quality control also fell primarily on the NanoAvionics team. This was not initially planned, but instead, developed from the limited in-person contact enforced by the strict Coronavirus Pandemic (COVID-19) regulations at the time.

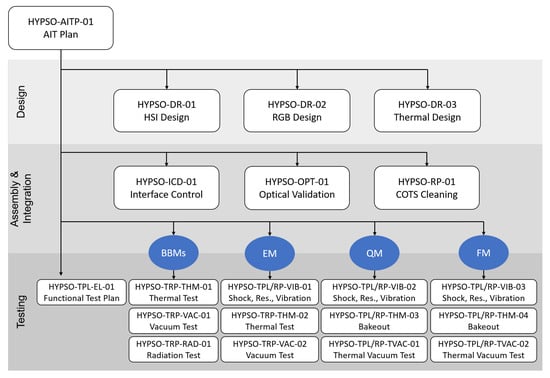

Testing was completed iteratively over many models and in various environmental conditions. Preliminary testing for both the payload and bus was performed by the respective teams. After final assembly and integration, NanoAvionics was responsible for the FM test campaign. All documentation of the AIT activities is organized as shown in Figure 4.

Figure 4.

A summary of AIT reports written over the course of the HYPSO-1 AIT campaign.

Project documentation is a critical resource for the team, especially since HYPSO-1 is the first satellite for the SmallSat Lab at NTNU. Each document followed the European Cooperation for Space Standardization (ECSS) guidelines, insofar as they were applicable, and will serve as a template for subsequent CubeSats at the SmallSat Lab. It was also important that the outlines were kept consistent with larger space projects to facilitate student transition from studies to industry. These reports were written internally on a shared cloud storage drive, which made it possible to continuously and concurrently update them throughout the project. Furthermore, the final versions of each document were archived in a document configuration control system. Each semester, an informal meeting was held to review documentation and to keep an ongoing record of version control. The Review Committee, comprising internal and external experts in the field, was responsible for ensuring quality, raising concerns and discrepancies in the work, and reporting on these at twice-annual meetings.

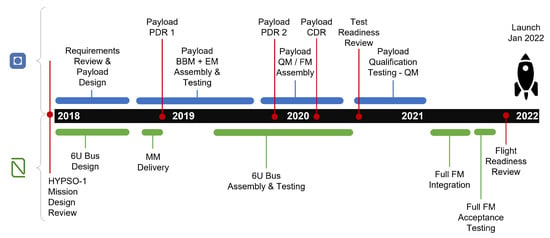

2.2. Schedule

HYPSO-1 commenced with the mission design review at the end of 2017. It was launched in January 2022. Development periods range widely, especially for first-time CubeSat developers [40]. A summary of the four-year schedule of AIT activities is shown in Figure 5. As illustrated, the development from both NTNU and NanoAvionics ran concurrently. Although milestones are noted mostly with NTNU, the reviews were attended by both parties. The Review Committee also attended both the Preliminary Design Reviews and the Critical Design Review. The launch was originally scheduled for late 2020, but due to the COVID-19 pandemic and resulting campus lockdowns, the qualification testing and launch were postponed until 2021/2022.

Figure 5.

The overall schedule of AIT activities for HYPSO-1. NTNU development is shown in blue and NanoAvionics in green. The red dots represent milestones for the project.

Reviews were a mix of digital and in-person attendance. This enabled more accessibility for interested parties from outside of Trondheim, especially during COVID-19. Feedback and Review Item Discrepancies (RIDs) were accessible by both development teams and integrated into the AIT activities moving forward.

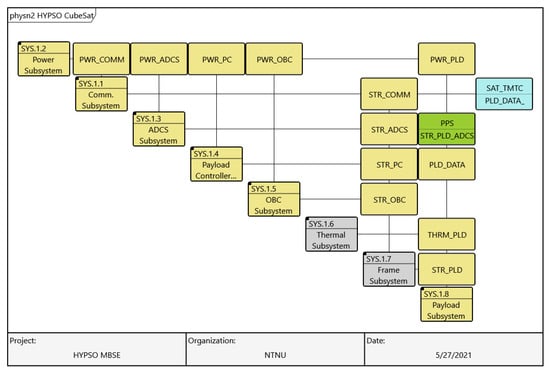

2.3. System Architecture and Interface Control

Being a small university team with limited funding and experience, the team decided early on to select the CubeSat form factor and a rideshare/piggyback launch to reduce risk. The sizes available at that time were 1U, 2U, 3U, 6U, and 12U. Funding for and availability of the optical design and components were also limited, which meant relying on COTS components. The components that could be purchased for the optics determined the minimum size of the imager. With this optical design and the orbital constraints, the imager could not be made smaller than 3U. To complete the mission, the remainder of the satellite functions, such as radios (for data transmission), batteries, attitude determination and control (pointing accuracy), etc., were needed. A 6U platform from NanoAvionics was selected during an open tender process. In its entirety, the HYPSO-1 satellite can be broken down into eight main subsystems: communication, power, ADCS, Payload Controller (PC), On-Board Computer (OBC, also called a Flight Controller (FC)), thermal, 6U frame and structure, and payload. These subsystems depend on one another through their interfaces, as illustrated in Figure 6.

Figure 6.

N2-matrix of the main interfaces for the NTNU payload subsystem (SYS.1.8) from a logical architecture point of view. The green link between SYS.1.3 ADCS and SYS.1.8 payload is critical to ensure pointing knowledge accuracy. The yellow links are on-board the CubeSat, while the blue box is to the external subsystem (ground). All subsystems except SYS.1.6 and SYS.1.7 (in gray) are active components and are connected via the CAN-bus. STR means Structural/mechanical support; PWR means Power interface; PLD means Payload; DT means Data; TMTC means Telemetry/Telecommand; THRM means Thermal interface; COMM means Communication. Diagram made using GENESYS.

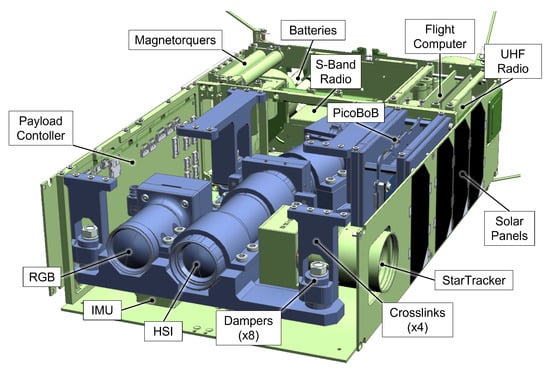

The NTNU payload interfaces with NanoAvionics components are as follows: the 6U frame (mechanical, thermal), the thermal subsystem (thermal), the payload controller (data), the ADCS (data, mechanical), and power (electrical). The payload itself has many internal interfaces as well. Figure 7 shows the division of work within the satellite based on color-coding and illustrates the interface points mentioned above.
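For readers who prefer a machine-readable view, the payload-to-bus interfaces listed above can be captured in a small lookup structure. The following is a minimal sketch: the interface list is transcribed from the text, while the dictionary layout and the helper names `partners_by_type` and `interface_count` are illustrative assumptions and not part of the project's tooling.

```python
# Payload-to-bus interfaces as listed in the text. The data layout and the
# helper functions are hypothetical and only meant for illustration.
PAYLOAD_INTERFACES = {
    "6U frame": {"mechanical", "thermal"},
    "thermal subsystem": {"thermal"},
    "payload controller": {"data"},
    "ADCS": {"data", "mechanical"},
    "power": {"electrical"},
}


def partners_by_type(interfaces, kind):
    """Return the bus subsystems that share an interface of the given kind."""
    return sorted(name for name, kinds in interfaces.items() if kind in kinds)


def interface_count(interfaces):
    """Count the (subsystem, interface type) pairs that need to be controlled."""
    return sum(len(kinds) for kinds in interfaces.values())


print(partners_by_type(PAYLOAD_INTERFACES, "data"))
print(interface_count(PAYLOAD_INTERFACES))
```

A structure of this kind could serve as a checklist when writing interface control documents, since every pair it contains corresponds to one interface that both teams must agree on.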

Figure 7.

The satellite architecture showing NanoAvionics components (with flight heritage) in green and the NTNU payload components in blue. The 6U frame, top and front panels, and cable harness are not shown. IMU = Inertial Measurement Unit, RGB = Red-Green-Blue camera, HSI = Hyperspectral Imager, PicoBoB = picoZed + Break-Out Boards, UHF = Ultra-High Frequency. (Payload model work by E. Prentice, M. Hjertenæs, H. Galtung, T. Kaasa, and T. Tran; bus model work by NanoAvionics; COTS dampers from SMAC [41]; objectives and grating from Edmund Optics [42]; detectors from iDS [43]; slit and adapter from ThorLabs [44]—downloaded online.)

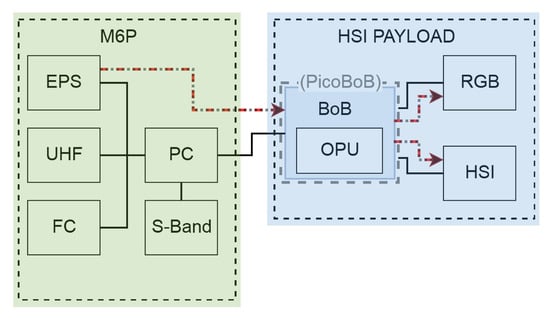

The electrical subsystem is integrated as shown in Figure 8. The colored boxes again represent the split development responsibilities between NanoAvionics and NTNU. The HSI payload receives power from the electronic power supply on the M6P 6U platform bus, and data are passed between both. The onboard processing unit, referred to as picoBoB, is the combination of the break-out board and the PicoZed System-On-Module (SOM). The SOM used was the AES-Z7PZ-7Z030-SOM-I-G, and the mounted processing System-on-Chip (SoC) is Xilinx’s SBG485. More details can be found in the references listed in Table 1.

Figure 8.

Electrical subsystem demonstrating the interfaces between electronics boards on the satellite: red/grey dotted arrows indicate power; black lines are data links. NanoAvionics is responsible for the M6P platform, NTNU for the HSI payload. Abbreviation key: M6P = satellite bus platform, EPS = Electronic Power Supply, UHF = Ultra-High Frequency radio, FC = Flight Computer, PC = Payload Controller, S-band = radio, BoB = Break-out Board, OPU = On-board Processing Unit, RGB = Red-Green-Blue imager, and HSI = Hyperspectral Imager.

From here on, the focus of this paper is on the payload exclusively, since the 6U bus is an available industrial platform that can be purchased through NanoAvionics. It has been fully tested and has flight heritage. Integration with the bus will be covered in the context of adding the payload to the established bus.

2.4. Payload Assembly Sequence and Methods

The payload was assembled in-house at NTNU. This was both an advantage and disadvantage, but the only option amidst the COVID-19 pandemic. The advantage was that logistics and travel were greatly simplified, which reduced wasted time; the disadvantage was that all tools, resources, and facilities needed to be accessible on campus at all times throughout the assembly procedure. This led to the basic assembly sequence shown in Figure 9.

Figure 9.

Simplified assembly sequence for the HYPSO-1 payload at NTNU.

The steps are explained in the sections that follow. It is important to note that some steps were not followed exactly sequentially due to the nature of the assembly. For example, one side of the thermal strap needed to be secured on the electronics stack, PicoZed + Break-out-Board (picoBoB), during the assembly, and the other side was done after (as shown in frame F in the diagram) to allow for the thermal paste to dry in between. An estimate of the time used for each step is also included for reference—this estimate is based on the FM procedures and does not include the time needed to develop the methods. Some activities occurred concurrently. Together, the QM and FM payload model assemblies took roughly 10 months (from September 2019 until June 2020) to complete. Development activities took nearly a year.

A. Acquisition: Orders, acquisition, and machining were the largest unknowns when it came to scheduling and caused the greatest unanticipated delays. Duplicates of every COTS component were ordered from the start to ensure backup if anything broke, was lost, went out of stock, or had significant shipping delays (common during the pandemic, especially across international borders). This proved helpful, but was not always sufficient.

All of the custom parts were machined from aluminum 6082, in-house. In-house fabrication made communication more straightforward, but also caused many issues with priority amongst other projects within the department and the limited availability of machinists during vacation periods. Only trained machinists were allowed access to the machines, not students directly, causing unexpected delays:

| Activity | Estimated time |
|---|---|
| Order parts | 2 weeks–3 months |
| Machine parts | 2–6 months |

B. Modify parts: Many of the ordered COTS components included parts with high outgassing materials, such as stickers, plastics, and rubber seals. These materials had to be removed, modified, or replaced. Stickers were removed, and the glue residue was cleaned off with ethanol. Critical components, such as rubber gaskets, were replaced with space-proof plastics such as Polytetrafluoroethylene (PTFE).

Some COTS components also contained moving parts, particularly the adjustable aperture in the HSI objectives. The fragile, interwoven leaves were replaced with a fixed, circular baffle ring since the aperture diameter is fixed for imaging purposes in space. The slit tube assembly was designed with a fixed baffling plate so that the slit was automatically constrained and to mitigate the risk of using movable COTS-retaining rings.

All machined components in the optical train were anodized with a black, lusterless, MIL-A-8625 Type II coating (Alcoa Alumilite System Finishes, USA). This coating helped to minimize stray light and reflection inside the optics. Anodized components included the slit tube, aperture, detector housing, and grating assembly. Coatings can also help prevent corrosion or cold welding and should be added to the remaining machined parts.

The printed circuit boards were conformally coated with primer and MAPSIL® 231-B (MAP Space Coatings, France). This clear coating can improve the structural integrity of the boards themselves and avoid short circuiting throughout the mission:

| Activity | Estimated time |
|---|---|
| Modify objectives | 1–2 weeks |
| Modify detectors | 4 days |
| Modify slit | 2 weeks |
| Anodizing | 3 weeks |
| Conformal coating | 6 weeks |

C. Clean parts: All components were thoroughly cleaned and bagged in new, metalized, ElectroStatic Discharge (ESD)-safe bags prior to assembly. In this way, contamination was limited to mitigate potential outgassing onto critical surfaces, such as the lenses and electronics boards. All machined components, the disassembled COTS objectives, and anodized parts were cleaned in an ultrasonic bath with a series of soapy distilled water and/or absolute ethanol. The glass lenses were removed from the objectives before cleaning and were polished with a cleanroom (low particle count) optical cloth and a solution of acetone and/or ethanol. The imager sensors, slit, and grating were wrapped in cleanroom cloths and bagged immediately without attempts at cleaning due to their sensitive, microscopic features:

| Clean objectives: 2 weeks; |

| Clean lenses: 3 days; |

| Clean boards: 1 day; |

| Clean machined parts: 1–2 weeks. |

D. Assemble and focus objectives: Once cleaned and modified, the HSI objectives were re-assembled. COTS objectives have slightly different dimensions due to production tolerances, so focus needed to be set individually before assembling the full imager. Since observation will be from LEO, all objectives needed infinite focus. The focus was set by using a collimator (backwards, to simulate infinite distance) and a 3D-printed reticle connected in series as the target. After setting, the focus was then verified by pointing toward a target at a hyperfocal distance of greater than 50 m. This was performed by focusing on man-made structures (buildings with straight lines) outside the window of the lab. When both methods agreed, the focus was fixed using set screws coated in space-approved epoxy, 3M™ Scotch-Weld™ DP2216 Gray (3M Company, Saint Paul, MN, USA):

| Re-assemble objectives: 1 week; |

| Focus setting: 1–3 days. |

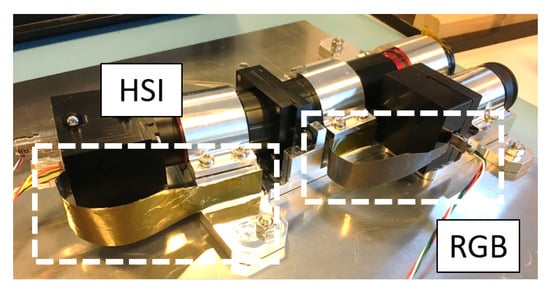

E. Assemble HSI, RGB, and picoBoB: The payload consists of two separate physical components: the payload platform (a base for the HSI and the RGB) and the picoBoB (the payload electronics stack) connected via the payload harness. Both components were mounted rigidly to the 6U frame (see Section 2.5 on integration) and connected electrically via cabling. Thus, they can be assembled independently.

Optical components of the HSI were assembled first. The grating was press fit into the cassette with PTFE gaskets and secured using set screws. All screws in the assembly were tightened using defined torques based on the screw dimensions. Additionally, a dot of space-approved epoxy was added to the head of each screw for extra security. The grating/cassette assembly was mounted directly to the payload platform with screws, similarly. The slit was mounted inside the slit tube, and the HSI detector was housed in its anodized shell. From here, the threaded COTS objectives connect the sub-assemblies in series.

The less complex RGB imager assembly consisted of securing the detector to the housing with internal screws and twisting the objective into the C-mount at the front of the housing. Again, torqued and epoxied screws fasten the sub-assemblies together. Both imagers were set in the payload platform groove and were secured with gasketed clamping brackets. The gaskets used in this case were simply a layer of Kapton® tape (DuPont de Nemours, Inc., Wilmington, DE, USA). Screws secure these brackets and must be tightened to the correct torque value.

The picoBoB assembly contains an electronics board, a break-out board, two shield plates, and two ring frames for attachment to the 6U frame. All have corresponding holes built-in and connect as a typical electronics stack with spacers in between. Before assembling the stack, cables and connectors were secured and checked, and thermal straps were added to the shield plates. It is not possible to connect these once the stack is complete due to tight clearances, and it is also best to let the thermal paste that attaches the straps cure on a flat surface. More details on the thermal straps follow in the next section:

| Assemble HSI: 3 days; |

| Assemble RGB: 1 day; |

| Assemble picoBoB: 2 days. |

F. Add thermal straps: A Passive Thermal Control (PTC) system was implemented to dissipate excessive localized heat in active payload components. This was particularly chosen considering the limited power budget on-board. Our passive thermal solutions were basic, but effective, and included: anodizing, thermal straps, and thermal decoupling components. As mentioned in Step B, components along the optical train were anodized black. This changes the thermo-optical properties of the surface and improves the radiative heat transfer because of its high emittance. Thermal straps are thin, thermally conductive sheets that transport heat from a source to a sink. The three components on the payload that experience the highest temperatures are the picoBoB electronics and the two imager sensors. Thermal straps were attached to these components with thermal paste and linked to the large surface area aluminum heat “sinks”, namely the picoBoB shield plates and payload platform, respectively. Figure 10 shows the assembly of the payload platform, with thermal straps installed, on the two imagers. These straps are fragile and can be easily damaged during handling, storage, or shipping:

Figure 10.

Thermal straps assist with heat dissipation from active electronics boards in the HSI and RGB (heat source) to the payload platform (heat sink). Additionally, black features in the image are anodized.

| Add thermal straps: 2 days. |

G. Add dampers: The final additions to the payload include the crosslinks and dampers. Through these structures, the payload platform is able to integrate with the rest of the 6U bus. Dampers were purchased commercially from SMAC [41] and were selected based on their thermal isolating properties and high-frequency damping capability highlighted in the simulation. Here, 6 mm bolts pass through the payload platform, which is sandwiched between dampers and the crosslinks. In this way, the payload platform is thermally and mechanically decoupled from the rest of the satellite. Using the prescribed torque on these bolts is important to ensure correct damper functionality:

| Add crosslinks + dampers: 3 h. |

2.5. Payload Integration into the Satellite

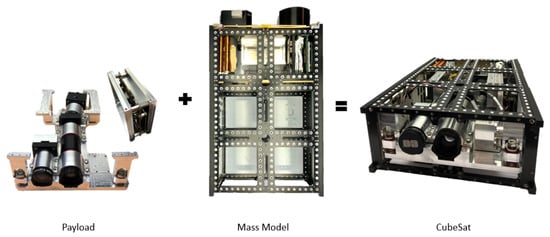

The mechanical payload interface to the CubeSat frame consists of four crosslinks. The electronics stack, picoBoB, was mounted to the s/c frame via two ring frames. The interface and integration of the payload into the 6U bus is shown in Figure 11.

Figure 11.

Integrating the payload (NTNU) with the 6U bus (NanoAvionics); cabling not shown.

These interfaces all require screws directly through the 6U frame and were secured with designated torques and a dot of epoxy. The 6U frame must be disassembled and the top part of the frame removed in order to slide the payload into place. Cables were secured in a harness with nylon ties or Dyneema® (Koninklijke DSM N.V., Heerlen, The Netherlands) fishing line. Additional small loops were made before each cable termination and taped with Kapton® tape (DuPont, Wilmington, DE, USA) for stress relief at the joints.

2.6. Ground Support Equipment and Facilities

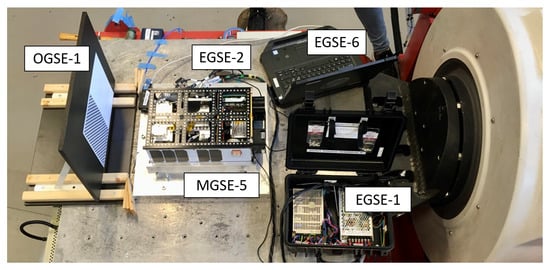

The Ground Support Equipment (GSE) used for HYPSO-1 falls into three categories: Mechanical Ground Support Equipment (MGSE), Optical Ground Support Equipment (OGSE), and Electrical Ground Support Equipment (EGSE). Each played a critical role in the payload development. Table 3 summarizes the GSE used for HYPSO-1.

Table 3.

Summary of GSE for AIT activities at NTNU. Selected GSE used for development and testing per model: EM = Engineering Model, QM = Qualification Model, and FM = Flight Model.

Mechanical GSE: includes all items used for storage, transportation, and test mounting. The payload was double-bagged in two ESD-safe, metalized bags and packed into Peli™ Protector cases (Pelican Products, Torrance, CA, USA) when stored or transported. All attached cables were secured with Kapton® tape (DuPont, Wilmington, DE, USA), and foam was used to fill voids in the packaging and secure the components.

Since the payload was assembled entirely in-house, transportation occurred only when visiting testing sites and shipping the payload FM to NanoAvionics for final integration. Most transportation was done by car to the testing sites. Obvious care was taken in these cases. The final shipment went via air freight. In this case, shock labels were attached to the outside of the packaging to ensure careful handling, and special export licenses had to be obtained.

Mounting plates were designed for each environmental test and each different chamber/table. Since NTNU focused only on the payload, standard dispenser test pods that fit the entire satellite were not used. The standard dispenser pod is a duplicate of the actual dispenser pods from the launch vehicle and helps simulate loading as it will be experienced at launch. Looking back, this would have been a safer approach, but no pods were available for use at the time. Instead, custom plates and brackets were designed to interface with the hole patterns of the chambers/tables and payload components. Each was designed to imitate the actual mounting structures in the satellite and launch vehicle.

Optical GSE: was used for optical calibration and verification activities. All calibration was performed in a dedicated optics laboratory on campus. This lab supplied an integrating sphere (coated in barium sulfate), calibration lamps (including a certified tungsten halogen lamp and spectral calibration lamps with known spectral emission), and mounting structures for the imagers. HSI calibration [20] was completed using this method, while RGB calibration was performed with spatial targets, such as a checkerboard pattern, in the same lab.

Since testing took place at different facilities, a portable and robust setup was used to check optical performance before and after each test. A large, non-reflective (matte black) box that could be placed over the payload/satellite was used to simulate a dark room. A fluorescent light bulb was installed in the top as the light source because it has a known spectral signature. For the radiation optical tests, a stable tungsten halogen lamp was used. In addition, a solid target mount was built with mounting holes in its base. Targets were switched out depending on the goals of the test and the instruments involved. Since RGB images are spatial, checkerboard and standard pattern targets were used. For the HSI, matte white paper provided a near-uniform reflectance of the chosen light source. This setup enabled the HYPSO-1 team to easily transport and set up a repeatable optical environment despite the varied testing environments.

Electrical GSE: was used to power and communicate with the payload before it was completely integrated into the satellite. An external power supply unit that could be plugged into a wall outlet was built into a Peli™ case to simply and safely provide power to the payload at various test sites. It included different and well-labeled connectors and fuses to protect the payload against surges. The testing umbilical could be paired with this and a laptop to provide connection and control of the payload.

The chosen PicoLock (Molex LLC, Lisle, IL, USA) connectors have a maximum recommended number of mate/de-mate cycles of 30. Connector savers were made to reduce the number of cycles during testing. A connector saver is a length of cable where one end is attached to the protected connector and the other end can be “sacrificed” and mated/de-mated more often. The connector saver can be replaced when necessary, greatly reducing the mating cycles on payload connectors. Several connectors were mounted on one common Printed Circuit Board (PCB), for example to mimic the interface of the Electronic Power Supply (EPS). The connector savers were connected to the payload cable harness on the payload/satellite interface.
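The bookkeeping behind a connector saver can be illustrated with a minimal sketch. It assumes that the sacrificial end of the saver carries the same 30-cycle rating as the payload connector and that replacing a worn saver costs the payload connector one additional mating; the class, function, and connector names are hypothetical and are not part of the HYPSO-1 tooling.

```python
from dataclasses import dataclass

MAX_MATE_CYCLES = 30  # recommended mate/de-mate limit quoted in the text


@dataclass
class Connector:
    """Counts mating cycles for one connector (hypothetical helper)."""
    name: str
    cycles: int = 0
    limit: int = MAX_MATE_CYCLES

    def mate(self) -> None:
        if self.cycles >= self.limit:
            raise RuntimeError(f"{self.name}: cycle limit of {self.limit} reached")
        self.cycles += 1

    @property
    def remaining(self) -> int:
        return self.limit - self.cycles


def run_sessions(payload: Connector, sessions: int) -> int:
    """Cycle only the saver's free end; return the number of savers consumed.

    Assumption: the saver's sacrificial end has the same rating as the
    payload connector, and each new saver is mated to the payload once.
    """
    saver = Connector("saver")
    payload.mate()  # the first saver is mated to the payload once
    savers_used = 1
    for _ in range(sessions):
        if saver.remaining == 0:
            saver = Connector("saver")  # swap in a fresh saver
            payload.mate()              # costs the payload one more cycle
            savers_used += 1
        saver.mate()
    return savers_used


payload = Connector("payload-J1")
savers_used = run_sessions(payload, sessions=100)
print(f"savers used: {savers_used}, payload cycles: {payload.cycles}")
```

Under these assumptions, 100 test sessions consume four savers while the payload connector sees only four matings, well below its recommended limit.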

To avoid damage from static electrical discharge at and around the sensitive equipment, ESD equipment was required whenever handling s/c components. The SmallSat lab is an ESD-safe environment, including: mats, coats, flooring, shoes, grounded work surfaces, gloves, etc. Precautions for ESD handling were also taken when removing the payload from the SmallSat lab, for example during environmental tests.

For the environmental tests (shock/vibration and thermal vacuum), sensors were needed to evaluate the componentwise results of testing. This included accelerometers and temperature sensors, placed at various locations on the payload assembly.

Figure 12 summarizes the validation setup used at an external testing site. The most common GSE components used throughout the testing campaign are highlighted here.

Figure 12.

Portable validation setup showcasing GSE. The “dark room” box and light source that cover the setup are not shown, but are used in this setup. OGSE-1 optical target (half white for the HSI, half checkerboard for the RGB), MGSE-5 vibration table mounting plate (clamps not shown), EGSE-6 laptop with test software, EGSE-2 testing umbilical, and EGSE-1 external power supply unit.

Facilities: Several facilities were required in addition to the equipment needed for building and testing the satellite. Most work was completed in the SmallSatellite Lab at NTNU, which included an ESD-safe working area, computers, storage space, soldering equipment, 3D printers, workshop tools, multimeters, oscilloscopes/spectrum analyzers, and spare parts.

Machined components were made at the Mechanical Workshop in-house. All optical components were configured in a flow bench to limit particle contamination. A dedicated optics laboratory on campus was used for calibration and characterization of the optical parameters. Electronics were tested in the ESD-safe section of the SmallSatellite lab. The FM was integrated in an ISO class 7-certified cleanroom at NanoAvionics. Preliminary vacuum and thermal testing was carried out at various chambers on campus, while qualification and acceptance testing required machines that were able to meet the specified levels. These testing facilities are detailed in the section that follows.

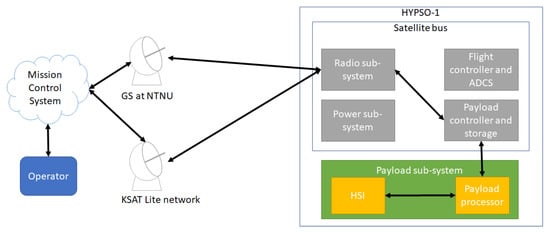

For end-to-end functional testing, a flexible Hardware-In-the-Loop (HIL) setup was created [22]. It is capable of connecting to the payload and FlatSat, either locally, through the NanoAvionics operations architecture, or through a UHF radio link between the local FlatSat and the local ground station (NTNU ground station, https://www.ntnu.edu/web/smallsat/infrastructure, accessed on 5 July 2022). The ground station communicates with the satellite through both UHF (backup, 400 MHz-band, 1.2 kbps) and S-band (primary, 2200 MHz-band, up to 1.4 Mbps) radios. Figure 13 shows a model of the communications infrastructure, including ground stations.

Figure 13.

Communications architecture including ground stations for the HYPSO-1 mission.
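To give a feel for the difference between the two radio links, the sketch below estimates the ideal transfer time of a file at the quoted UHF and S-band rates. It ignores protocol overhead, retransmissions, and pass duration, and the 1 MB file size is a hypothetical example rather than a HYPSO-1 data product.

```python
UHF_BPS = 1.2e3     # UHF backup link rate quoted in the text
SBAND_BPS = 1.4e6   # S-band primary link rate (upper bound) quoted in the text


def transfer_time_s(size_bytes: int, rate_bps: float) -> float:
    """Ideal transfer time in seconds, with no protocol overhead."""
    return size_bytes * 8 / rate_bps


size_bytes = 1_000_000  # hypothetical 1 MB file
uhf_s = transfer_time_s(size_bytes, UHF_BPS)
sband_s = transfer_time_s(size_bytes, SBAND_BPS)
print(f"UHF: {uhf_s / 60:.0f} min, S-band: {sband_s:.1f} s")
```

Even in this idealized form, the same file takes on the order of hours over UHF and seconds over S-band, which is consistent with UHF serving as the backup link.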

The main ground station, including antennas, radios, and control equipment, is located on the roof of the SmallSatellite Lab building at NTNU and can be remotely accessed. For on-site end-to-end tests, only the UHF chain was used. When operational, the satellite is commanded and supervised from a mission control center, also physically located at NTNU. While in orbit, the satellite will also be accessible from other ground stations through the KSATlite network (KSATlite ground station, https://www.ksat.no/no/ground-network-services/ksatlite/, accessed on 5 July 2022). The specific station used depends on the mission needs and the acquired license for operations, and may change during the mission lifetime. Details on the communications and operational architectures for different mission types can be found in [45].

3. Results: Validation through Verification

Several methods were used to validate the HYPSO-1 CubeSat. Validation was assumed if systems passed verification. Validation through verification occurred primarily through testing given the timing constraints and the lean approach to development. Strategies for review, inspection, and analysis were also relied upon throughout the early stages of the campaign. The following sections illustrate the steps toward verification according to the sequence of activities. This schedule reflects payload development based on NTNU’s work. The NanoAvionics portion of the 6U bus and the remainder of the satellite were flight-proven hardware and ready for integration and testing from the start of the project.

The payload design was verified at qualification and acceptance levels. The qualification levels were first run on the BBMs, the EM, and the QM; then, less severe acceptance level tests were run on the final FM to verify survival and function without over-stressing the systems. This was based on the ECSS [27] approach for larger s/c projects.

3.1. Review of Design

Verification activities began in the design phase of payload development. The optical design parameters (such as the platform angle and focal length) were calculated using the data sheets of the COTS components, such as the grating, lens objectives, and detectors. The theoretical design itself, assisted by these calculations, suggests that the HSI is able to image in the Visible–Near-Infrared (VIS-NIR) spectral range within the required resolution (requirements R.01, R.02, Table 2). Additionally, data sheets provided information to confirm that the chosen materials could survive the expected temperatures and vacuum and that all electrical interfaces were correctly paired.

3.2. Inspection

The HYPSO-1 payload was based on COTS and in-house built components, mostly without space heritage. Close inspection of each component was considered critical in development and maturity. Virtual COTS components were first imported into Computer-Aided Drafting (CAD) and checked for accurate mass and dimensioning against the physical components. If these values were missing, they were estimated and assigned based on the measurements. Custom components were then designed in CAD according to continuous consultation and prototyping with the in-house machinists. A final check was made after the design was complete, masses were added, and the virtual assembly was fully constrained. This model then formed the basis for preliminary measurements and, later, simulation.

Additional inspections were made on the physical payload QM and FM to measure the spacing and orientation of the components, specifically the HSI relative to the RGB, star tracker, and IMU (requirement R.03, Table 2). These values help to determine the pointing geometry for georeferencing images.

3.3. Analysis

It is recommended to complete simulations for resonance, shock, vibrations, and thermal analysis prior to starting a testing campaign. Instead, the HYPSO-1 approach focused more on the “test early and often” motto—meaning that numerous component-level tests were completed as quickly as possible on physical hardware and repeated concurrently with design iterations and prototyping. Three-dimensional printing proved extremely useful in this case. With more resources available as time progressed in the project, the simulations were completed for the start of the second-generation CubeSat, HYPSO-2, instead. Launch loading and vibrations were simulated using the Nastran module in Siemens NX 11 (Siemens Aktiengesellschaft, Munich, Germany), and a thermal analysis was performed with the ESATAN-TMS (ITP Engines UK Ltd., Whetstone, England) software.

3.4. Preliminary Testing

Preliminary testing could begin with the CAD model complete. The first checks were made to ensure that the payload total mass, center of gravity, and moments of inertia fell within the designated envelope outlined by NanoAvionics. From these measurements, components went through iterative shifting and reconfiguration until the requirements were met. This information, being a key interface point with NanoAvionics, was worked on by both development teams together.
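The mass and center-of-gravity check described above can be sketched as follows. The component masses, positions, and envelope limits are hypothetical placeholders; the actual values come from the CAD model and the NanoAvionics interface requirements, which are not reproduced here.

```python
def mass_properties(parts):
    """Return total mass (kg) and center of gravity (mm) of point masses.

    parts: list of (mass_kg, (x_mm, y_mm, z_mm)) tuples.
    """
    total = sum(mass for mass, _ in parts)
    cog = tuple(
        sum(mass * pos[axis] for mass, pos in parts) / total
        for axis in range(3)
    )
    return total, cog


def within(value, low, high):
    """True if value lies inside the closed interval [low, high]."""
    return low <= value <= high


# Hypothetical components: (mass in kg, position in mm)
parts = [
    (1.0, (0.0, 0.0, 0.0)),
    (1.0, (10.0, 0.0, 0.0)),
]
total_mass, cog = mass_properties(parts)

# Hypothetical envelope: total mass below 2.5 kg, CoG x within +/- 20 mm
mass_ok = within(total_mass, 0.0, 2.5)
cog_ok = within(cog[0], -20.0, 20.0)
print(total_mass, cog, mass_ok, cog_ok)
```

Rerunning such a check after each reconfiguration gives a quick indication of whether a design iteration moved the payload toward or away from the envelope.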

Furthermore, BBMs were built to start verifying the function. At this stage, small checks such as cabling connections, power indicator lights, and electronics board heartbeats were verified. The following subsections outline specific tests run on some of the early hardware components.

3.4.1. Break-Out Board Interfaces

As the Break-Out Board (BOB) was the only electronic component that was designed in-house, it underwent testing before other subsystems (e.g., the HSI instrument or Onboard Processing Unit (OPU)) were connected, to avoid damaging these. A total of 11 boards from two different batches were manufactured and assembled by an external company. Nine boards were found functional, while two boards from the first batch had production faults, which made them unusable. The second batch consisted of four boards with lead solder, intended for the QM and FM, which were otherwise identical to the first batch.

During initial testing of the BOB, it was found that the Ethernet (ETH) interface between the OPU and the HSI provided only 100 Mbps as opposed to the 1000 Mbps required to support the target camera frame rate. Had the fault not been identified, this issue would have changed the entire operational procedure of the mission. The root cause was an error/ambiguity in the interface definition (specifically the pin-out of one component): the high-level component model of an integrated part, provided by a supplier and used in the PCB design tool, differed from the low-level documentation. A deeper analysis of the ETH electrical Interface Control Document (ICD) definition from the sub-supplier revealed the error. The final fix was simply swapping two leads in the ETH cable.
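The impact of the reduced link rate on the achievable frame rate can be illustrated with a rough upper bound. The frame dimensions and bit depth below are hypothetical and do not describe the HYPSO-1 detector; protocol overhead is ignored, so real frame rates would be lower.

```python
def max_frame_rate(link_bps: float, width_px: int, height_px: int, bits_per_px: int) -> float:
    """Upper bound on frames per second for uncompressed frames over a link."""
    bits_per_frame = width_px * height_px * bits_per_px
    return link_bps / bits_per_frame


# Hypothetical frame: 1000 x 1000 pixels at 16 bits per pixel
fps_fast_eth = max_frame_rate(100e6, 1000, 1000, 16)   # 100 Mbps, as found
fps_gigabit = max_frame_rate(1000e6, 1000, 1000, 16)   # 1000 Mbps, as required
print(f"100 Mbps: {fps_fast_eth} fps, 1000 Mbps: {fps_gigabit} fps")
```

Whatever the actual frame size, the bound scales linearly with the link rate, so the faulty interface would have cut the achievable frame rate by a factor of ten.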

3.4.2. Payload Electrical Harness

The payload’s electrical cable harness underwent basic electrical testing for continuity and isolation with a multimeter (requirement R.04, Table 2). The mechanical strength of the crimped pins and pin retention in the contact housings were also checked with simple pull testing by hand using reasonable force, but slightly higher than what would be expected during normal handling.

3.4.3. Imager Calibration

Early imager prototypes were also functionally verified at this stage. A calibration lab setup, as detailed in Henriksen et al. [46], was used to confirm the spectral and radiometric performance of the HSI. The results were verified against theoretical calculations, and adjustments were made to the design accordingly. Final calibration results can be found in Henriksen et al. [20].

3.5. Verification Test Software

Test software was developed to verify that the payload software functioned as specified under different environmental constraints. Scripts automating command sequencing and checks ensured that imaging requirements could be verified, payload functionality was complete after integration, and testing procedures were repeatable (requirements R.05, R.06, Table 2). A nominal operations code was used to first test the BBMs of the imagers and on-board computer. Iterative testing was then run on a HIL setup, a modular engineering model made to resemble the subsystem setup of the final CubeSat, i.e., a FlatSat [47].

The nominal operations test consisted of various functions including pinging connections to ensure the correct setup, performing file transfers with checksums and timing, capturing images with both the HSI and RGB imagers, and saving and compressing the captured data. In addition, the internal temperature was logged at identified critical points during operation. The captured data, logs, and other telemetry were stored with a unique identifier at the end of each test for in-depth analysis. These scripts were then developed and evolved throughout the program to meet the specific goals per test. This helped expand the functionality of the test suite with each iteration.
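One step of such a nominal operations test, a file transfer checked with a checksum and timed, could look like the sketch below. It uses a local file copy as a stand-in for the actual payload link, and the function and file names are hypothetical; the real HYPSO-1 test scripts are not reproduced here.

```python
import hashlib
import shutil
import tempfile
import time
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Compute the SHA-256 digest of a file in fixed-size chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def timed_transfer(src: Path, dst: Path) -> dict:
    """Copy src to dst (stand-in for a payload transfer) and verify it."""
    start = time.perf_counter()
    shutil.copyfile(src, dst)
    elapsed_s = time.perf_counter() - start
    return {
        "ok": sha256_of(src) == sha256_of(dst),  # checksum comparison
        "bytes": dst.stat().st_size,
        "elapsed_s": elapsed_s,
    }


with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "frame.bin"
    source.write_bytes(bytes(range(256)) * 64)  # 16 KiB of dummy data
    result = timed_transfer(source, Path(tmp) / "frame_copy.bin")

print(result["ok"], result["bytes"])
```

Storing the returned dictionary together with a unique test identifier would mirror the logging approach described above.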

The test software, which interfaced with the payload software, was developed originally to aid in hardware development and testing, but it also helped verify the payload as a whole during testing in a consistent and repeatable manner. This formed the basis for a fully automated HIL test suite of the payload software. Several HIL installations were built to resemble the actual satellite as closely as possible. They were set up to be interfaced remotely by any team member, with the correct credentials, for testing (and development). The HILs facilitated verification and validation activities and improved integration efforts, which is important for mission success [48]. The HIL testing provided useful information regarding functionality, performance, debugging, and future software development and provided a platform for automated testing [49].

Furthermore, any code contributions to the payload software were initially manually reviewed by a team member that was not involved in the development of that code contribution, using the HIL setup and GitHub. As a result, these contributions required a description of the proposed changes or additions, such that the other (software) team members could review them. Changes were only added when another team member approved the contribution, signing off that the changes performed as intended and had no detected adverse effects on the system as a whole. When the contribution was approved and included in the subsequent release, then the functionality was included in the test suite used to test the hardware.

3.6. Environmental Testing

Environmental testing helps to verify that the satellite will be able to fully function in harsh environments, such as at launch and throughout the duration of the mission in space. A summary of the HYPSO-1 environmental testing campaign (the payload development branch) is outlined in Table 4. It illustrates the reality of iterative development and the importance of using testing results for design changes.

Table 4.

Summary of environmental testing: from the preliminary BBMs to the fully integrated FM. * indicates tests that were completed at external facilities. Test parameters are briefly summarized, but note that, due to machine/facility limitations, parameters varied in preliminary testing. Mechanical testing parameters also changed throughout the campaign since it became necessary to switch launch providers. Not all noted “issues” required mitigation; some were instead labeled “characterized”. By characterized, we mean that the results were recorded, and henceforth expected, but nothing further was done to mitigate the issues. Abbreviations: TVAC = Thermal Vacuum, op = operational, non-op = non-operational, g = gravitational acceleration, 9.8 m/s². Component definitions in Section 2.

The payload QM passed qualification levels, and the satellite FM passed acceptance testing by the end of 2021. The following sections explain the payload environmental testing in more detail. All of the results summarized in the following sections were based on reports written by the HYPSO-1 team, as outlined in Figure 4.

3.6.1. Launch

Launch is considered an extreme test of CubeSat survival. In this segment, the s/c must travel from ambient Earth conditions to LEO on a launch vehicle, a 500 km altitude gain in a matter of roughly 10 min. This can result in shock, vibrations, and rapid pressure and temperature changes. Several tests need to be conducted to simulate these extreme conditions and to verify that the CubeSat will survive (requirement R.07, Table 2).

Shock: The largest shock experienced by the CubeSat will be during separation from the launch vehicle. This shock can be up to 1000 g in the Shock Response Spectrum (SRS), as estimated by the launch provider. The requirement for shock testing of HYPSO-1 was waived under the assumption that the vibration testing is more rigorous. However, a simplified shock test was simulated using a vibration table at the testing facility. For this test, the payload QM was mounted in the 6U bus MM, similarly to how the FM will be. It underwent 300 g SRS for a duration of 2 ms with a Q-factor of 10 (5% damping, an approximation for bolted connections) on each axis, which was the maximum the machine could handle. Three-axis accelerometers were attached with beeswax and Kapton® tape to several of the key payload components to measure individual response.

No damage was noted; imager performance was nearly identical before/after testing as concluded with the optical validation procedure (see Figure 12), and test software output files highlighted no issues with the electronics’ functionality.

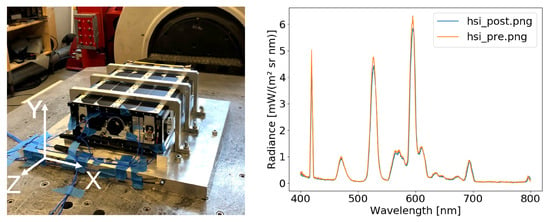

Resonance and vibrations: Launch is the largest contributor to random vibrations in the CubeSat. Maximum expected levels are between 20 and 2000 Hz with an amplitude of 5.48 g (RMS) for a duration of 120 s per axis. To test these levels, the payload QM was mounted in the MM and secured to the vibration table, as shown in Figure 14. This is the same setup as the simplified shock test. Again, accelerometers were placed on key components. Sine sweeps were run both before and after random vibrations per axis. These pre/post sweeps can be compared to highlight any changes in the resonance profile related to the more rigorous random vibrations run in between. The sine sweep profile was 5–200 Hz with an amplitude of 0.4 g, sweeping two octaves per minute. The spectral response was measured before and after testing, as seen in Figure 14 (right).

Figure 14.

(Left) MM including payload QM mounted to the vibration table. This setup was used to simulate shock as well. (Right) Center line spectral response recorded before (pre) and after (post) shock and vibration testing in the “Y” axis (the axis with the greatest response to vibrations; “X” and “Z” axis responses are not shown, but have less variation). Plotted results are cropped from 400 nm, the low-end sensitivity cutoff of the sensor, to 800 nm, since the quantum efficiency of the sensor drops off at wavelengths greater than 800 nm. Note: spectral images were taken while the satellite was mounted to the table; slight variations in the ambient temperature, pressure, and humidity in the room could have minor influences on the plotted results.
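The sweep and random vibration parameters quoted above translate into a few simple derived quantities. The sketch below computes the number of octaves and the duration of one 5–200 Hz sweep at two octaves per minute, and the flat power spectral density that would give 5.48 g (RMS) over 20–2000 Hz. The flat profile is an assumption made for illustration only; the actual test profile is shaped and is not given here.

```python
import math


def sweep_octaves(f_start_hz: float, f_end_hz: float) -> float:
    """Number of octaves covered by a logarithmic sine sweep."""
    return math.log2(f_end_hz / f_start_hz)


def sweep_duration_min(f_start_hz: float, f_end_hz: float, rate_oct_per_min: float) -> float:
    """Duration of one sweep at a constant octave rate."""
    return sweep_octaves(f_start_hz, f_end_hz) / rate_oct_per_min


def equivalent_flat_psd(grms: float, f_low_hz: float, f_high_hz: float) -> float:
    """Flat PSD (g^2/Hz) with the same RMS level: grms^2 = PSD * bandwidth."""
    return grms ** 2 / (f_high_hz - f_low_hz)


n_octaves = sweep_octaves(5, 200)
duration_min = sweep_duration_min(5, 200, 2)
flat_psd = equivalent_flat_psd(5.48, 20, 2000)
print(f"{n_octaves:.2f} octaves, {duration_min:.2f} min per sweep")
print(f"equivalent flat PSD: {flat_psd:.4f} g^2/Hz")
```

Each sweep therefore takes a little under three minutes per axis, which is short compared with setup time and makes the pre/post comparison inexpensive to run.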

The sweeps showed first eigenfrequencies around 75, 130, and 80 Hz in the X, Y, and Z axes, respectively. These exceed the minimum requirement of 50 Hz set for the launch vehicle. Between the pre- and post-test sweeps, all resonant peaks shifted by 7% or less; a shift of less than 10% was required. No peaks dropped by 50% or more. In random vibrations, component resonance values did not exceed the levels in NASA’s General Environmental Verification Standard (GEVS) [50] on the X and Z axes and just barely exceeded them in the Y axis.
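The pass/fail logic applied to the pre/post sweeps can be expressed compactly. In the sketch below, the thresholds (frequency shift below 10%, amplitude drop below 50%, first eigenfrequency above 50 Hz) and the three first eigenfrequencies are taken from the text, while the peak amplitudes and post-test frequencies are hypothetical values chosen only to exercise the check.

```python
MAX_SHIFT = 0.10       # allowed relative frequency shift of a resonant peak
MAX_DROP = 0.50        # allowed relative amplitude drop of a resonant peak
MIN_FIRST_MODE_HZ = 50.0


def compare_sweeps(pre, post):
    """Compare matched peaks given as (frequency_hz, amplitude_g) pairs."""
    results = []
    for (f_pre, a_pre), (f_post, a_post) in zip(pre, post):
        shift = abs(f_post - f_pre) / f_pre
        drop = (a_pre - a_post) / a_pre
        results.append({
            "shift": shift,
            "drop": drop,
            "ok": shift < MAX_SHIFT and drop < MAX_DROP,
        })
    return results


# First eigenfrequencies per axis (X, Y, Z) as reported in the text
first_modes_hz = (75.0, 130.0, 80.0)
meets_min_frequency = all(f > MIN_FIRST_MODE_HZ for f in first_modes_hz)

# Hypothetical peaks: the third pair is deliberately out of tolerance
pre_peaks = [(75.0, 2.0), (130.0, 3.0), (80.0, 2.0)]
post_peaks = [(72.0, 1.8), (121.0, 2.9), (70.0, 0.9)]
results = compare_sweeps(pre_peaks, post_peaks)
print(meets_min_frequency, [r["ok"] for r in results])
```

A failed comparison would flag a change in the structure between sweeps, for example a loosened fastener, and would prompt an inspection before continuing.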

Launch vehicle requirements are more strict, and the components did slightly exceed these values. However, the profile requirement is for the CubeSat as a whole, which was tested with acceptance levels. Here, only one sensor was attached to the exterior of the 6U frame, and the satellite was mounted in a duplicate of the 6U dispenser on a shaker table. First resonance frequencies were over 300 Hz for each axis, and all varied less than 2% after quasi-static and random vibration testing. High-frequency vibrations were minimal, likely due to the installed dampers between the 6U frame and payload interface. Upon testing the EM in similar conditions, two screws fell out during testing. This problem was fixed by double-checking screw torques and applying epoxy to the screw heads, which proved effective with the QM.

Venting: Ambient pressure drops at launch until vacuum is reached in LEO. The CalPoly 6U CubeSat design specification, requirement #3.1.9, states that “the cubesat shall be designed to accommodate ascent venting per ventable volume/area <2000 inches” [51], or 50,800 mm. Although the components were not sealed, both the HSI objectives and slit tube may be considered closed cavities, and there is a risk of breaking lenses or the slit due to abrupt pressure changes.

To reduce the risk, worst-case calculations indicated that venting holes of about 1 mm in diameter or larger per part should be sufficient to fulfill the requirement. The objective in fact consists of several smaller cavities, so this is an overestimate. It was concluded that two mm-diameter venting holes drilled halfway through the parts (into the cavities) would be sufficient based on available drill bit sizes. The hole locations were chosen to be on each side (along the Z axis) of the aperture, or slit, respectively. Holes were drilled when objectives and the slit tube were disassembled and before they were cleaned. When assembled, the holes faced downward into an open channel below the imager to avoid stray light in the optical train.
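The venting requirement can be turned into a minimum hole diameter with a short calculation. The sketch below assumes that the "area" in the requirement is the total vent area of a cavity and that the vent holes are circular. The cavity volume is a hypothetical placeholder, since the actual volumes are not given here.

```python
import math

# Requirement: ventable volume / vent area < 2000 inches (= 50,800 mm).
MAX_VOLUME_TO_AREA_MM = 2000 * 25.4


def min_vent_area_mm2(cavity_volume_mm3):
    """Smallest total vent area that keeps volume/area at the limit."""
    return cavity_volume_mm3 / MAX_VOLUME_TO_AREA_MM


def min_hole_diameter_mm(cavity_volume_mm3, n_holes=1):
    """Diameter of each of n equal circular holes providing that area."""
    area_per_hole = min_vent_area_mm2(cavity_volume_mm3) / n_holes
    return 2.0 * math.sqrt(area_per_hole / math.pi)


# HYPOTHETICAL worst-case cavity volume, for illustration only.
HYPOTHETICAL_CAVITY_VOLUME_MM3 = 40_000.0

d_single = min_hole_diameter_mm(HYPOTHETICAL_CAVITY_VOLUME_MM3)
d_double = min_hole_diameter_mm(HYPOTHETICAL_CAVITY_VOLUME_MM3, n_holes=2)
print(f"one hole: {d_single:.2f} mm, two holes: {d_double:.2f} mm each")
```

Because the requirement is a strict inequality, the computed diameter is a lower bound, and any drilled hole should be somewhat larger.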

3.6.2. Lifetime

Beyond launch, there are also several considerations for survival throughout the duration of the mission, and HYPSO-1 is expected to operate for 5 years in LEO. Each of the following tests and procedures gives confidence that the satellite will survive and indicates the performance that can be expected (requirements R.08, R.09, Table 2).

Radiation: Radiation in space, even in LEO, can damage sensitive electronics and optics. Most electronics on HYPSO-1 are enclosed within the CubeSat and therefore have some shielding. However, the front lenses of the two imagers are fully exposed since they need a clear view of the targeted Earth (ocean) surface. One of the first tests run was to determine whether the COTS objectives could survive the expected radiation doses over the course of the 5-year mission. The goal was to characterize the lens objective for expected darkening and to investigate changes in the sensor response, both with and without a light source. Only the HSI objective was tested. The RGB imager will be used for spatial referencing, so changes in its lens transmission are not relevant. A previous version of the HSI detector was tested at the same time. Gamma radiation from a cobalt-60 source was used since it is assumed to be the hardest to shield and also generally the easiest to test with. The samples were irradiated to cumulative doses of 5, 10, 20, 30, 40, 60, 80, 100, and 140 Gy. The project mission analysis report estimated a radiation dose of less than 30 Gy for the full mission, using STK (Analytical Graphics, Inc., Exton, PA, USA) and SPENVIS (Royal Belgian Institute for Space Aeronomy, BIRA-IASB, at ESA, Brussels, Belgium) for analysis. It was decided to test up to 140 Gy to observe the effect in more extreme conditions. The objective and detector were characterized between the radiation doses to benchmark the gradual degradation expected. These characterizations were made radiometrically with a stable light source and also without light, to investigate the noise and background level in the images.
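The dose plan above can be restated as the increment delivered in each exposure and the margin of the final dose over the mission estimate. The sketch below uses only the cumulative doses and the 30 Gy upper estimate quoted in the text; the variable and function names are illustrative.

```python
# Cumulative doses at which the samples were characterized (Gy), from the text.
CUMULATIVE_DOSES_GY = [5, 10, 20, 30, 40, 60, 80, 100, 140]
# Upper bound of the mission dose estimate (Gy), from the text.
MISSION_DOSE_ESTIMATE_GY = 30


def incremental_doses(cumulative):
    """Dose added in each exposure step, given the cumulative totals."""
    steps = []
    previous = 0
    for total in cumulative:
        steps.append(total - previous)
        previous = total
    return steps


steps = incremental_doses(CUMULATIVE_DOSES_GY)
margin = CUMULATIVE_DOSES_GY[-1] / MISSION_DOSE_ESTIMATE_GY

print("dose per step (Gy):", steps)
print(f"final dose is at least {margin:.1f}x the mission estimate")
```

Since the mission estimate is an upper bound, the factor printed here is a lower bound on the actual test margin.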

The tests showed that the objective lost about transmissivity after exposure to 100 Gy and darkened to a more yellow absorbent. When added as the front lens to a new hyperspectral imager, the spectral response showed a general loss in intensity counts for every wavelength, but the highest absolute and percentage differences at around 500 nm. The average value in the dark images appeared to decrease with about after being exposed to 100 Gy. The noise, calculated as the standard deviation in a set of dark images, increased by about in the irradiated sensor.
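The dark-image metrics mentioned above can be sketched as follows. This is one possible interpretation, not necessarily the exact processing used: the dark level is taken as the mean count over all frames, and the noise as the per-pixel standard deviation across the frame set, averaged over pixels. The frame values are hypothetical placeholders, and each frame is represented as a flat list of pixel counts.

```python
from statistics import mean, pstdev


def dark_level(frames):
    """Average count over all pixels and all dark frames."""
    return mean(mean(frame) for frame in frames)


def dark_noise(frames):
    """Per-pixel standard deviation across frames, averaged over pixels."""
    per_pixel_series = zip(*frames)  # regroup values pixel by pixel
    return mean(pstdev(series) for series in per_pixel_series)


def percent_change(before, after):
    """Signed relative change from 'before' to 'after', in percent."""
    return (after - before) / before * 100.0


# HYPOTHETICAL dark frames (4 pixels each), for illustration only.
reference_frames = [[10, 12, 11, 9], [12, 10, 9, 11], [11, 11, 10, 10]]
irradiated_frames = [[9, 13, 10, 8], [12, 8, 9, 11], [10, 11, 7, 12]]

level_change = percent_change(dark_level(reference_frames),
                              dark_level(irradiated_frames))
noise_change = percent_change(dark_noise(reference_frames),
                              dark_noise(irradiated_frames))
print(f"dark level change: {level_change:.1f}%")
print(f"noise change: {noise_change:.1f}%")
```

Pooling all pixel values into a single standard deviation would be an alternative definition; it mixes fixed-pattern offsets with temporal noise, which is why the per-pixel form is used in this sketch.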

Bakeout: The vacuum of space will cause materials to outgas, producing particles that could condense on lenses or even short-circuit electronics boards. It is important to select materials with very low outgassing properties. Many of the COTS components were not made for space and contained high-outgassing materials such as greases, plastics, and rubber. These materials were all removed before assembling the payload, as noted in Section 2.4. One way to ensure that all outgassing occurs before launch, thus providing an opportunity to make final adjustments and clean, is to bake out the components. This procedure combines extreme pressure and temperature profiles designed for complete outgassing.

Preliminary vacuum testing highlighted major outgassing issues with our COTS HSI objectives. A brand new objective was brought to a pressure of only Pa over 90 min. When extracted from the chamber, the glass lenses were coated in grease. At this point, we realized the importance of disassembling all COTS components to fully understand the internal constituents and the importance of cleaning components before testing in a vacuum. The payload QM underwent a 72 h bakeout at a temperature of 50 °C and was held at a pressure below Pa. No outgassing issues were noted after the QM bakeout.

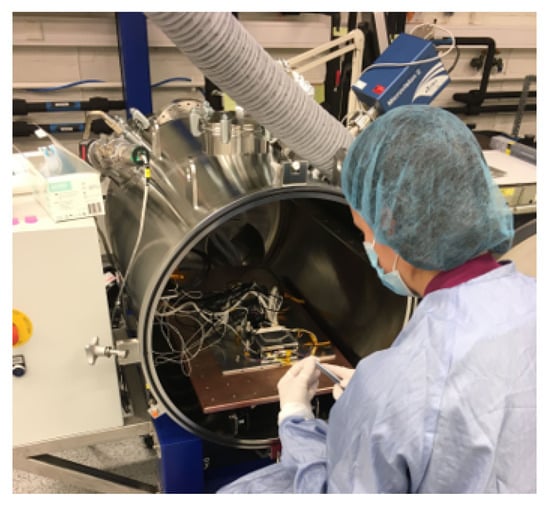

Thermal Vacuum (TVAC): After bakeout, a full thermal vacuum test was run. This test simulates realistic temperature profiles in orbit at vacuum pressure. Temperature is cycled through the extremes while the CubeSat operation is tested concurrently. The goal is to verify that the payload will work in the various environmental conditions it will experience throughout its lifetime. The temperature and vacuum profiles followed for the payload QM were based on tailored ECSS standards [27] and requirements from the launch provider. Four cycles were run, the first at non-operational temperatures (e.g., simulating launch conditions). The non-operational temperature range was to 60 °C, and the operational one was to 50 °C. This cycling occurred at a constant pressure of Pa, which was the lowest pressure the chamber could reach. Temperature sensors were attached to critical components and recorded throughout the test. The sensors were attached using a combination of screw/nut fasteners and aluminum tape. The setup is shown in Figure 15. Cables were routed through a feed-through on the side of the chamber, and a window was installed at the top to allow for illumination of the chamber during imaging.

Figure 15.

Payload QM and temperature sensors mounted in the TVAC chamber.

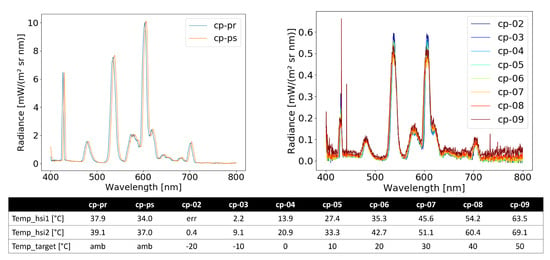

The test software (see Section 3.5) was run within operational temperature ranges in 10-degree increments for the first cycle and at the extremes for subsequent cycles. These independent test runs are known as Characterization Points (CPs). Imaging capability was verified at each CP as well.

At first, it was difficult to stabilize the temperature within the chamber, but stabilization improved after changing the sensor control of the chamber. The payload was able to function and complete software testing without errors at each of the CPs during testing. The measured software runtime duration was within a tenth of a second for each CP, and there were no issues performing an intentional reboot of the payload at each temperature. The temperatures of some components, notably active ones such as the HSI detector, exceeded factory recommendations, but no operational issues occurred throughout testing. Imager performance response in spectral intensity varied with temperature within about at peak wavelengths. This temperature-dependent variability is explored further in Prentice et al. [52]. The results are summarized in Figure 16. Because of this result, the imager will be calibrated per temperature, in addition to spectral and radiometric calibration, for HYPSO-2.

Figure 16.

(Left) Spectral response of the QM HSI pre (pr) and post (ps) TVAC testing: white paper illuminated by an external fluorescent light source. (Right) Spectral response of the QM HSI at varying temperatures inside the TVAC chamber: metallic chamber interior illuminated by an external fluorescent light source. Note that the y-axes are not equal—the light intensity is stronger in the pre/post testing setup than in the chamber. Cropping was performed according to the quantum efficiency of the sensor, as stated in Figure 14. (Bottom) The table summarizes temperatures recorded during testing—Temp_hsi1 is from inside the imaging sensor housing before powering the camera on; Temp_hsi2 is the same sensor, but recorded after running a test script/imaging, which simulates a typical overpass activity; Temp_target shows the environmental target temperature of the chamber/setup. CP = Characterization Point, amb = ambient room temperature (assume °C), err = error (the imager cannot report negative values).

Electromagnetic Compatibility (EMC): Since the CubeSat is powered down during launch, there are no EMC requirements applicable to the payload. However, the interfaces and subsystems were evaluated to determine whether any systems would affect or be affected by each other, for example through high-speed buses or high-power lines that could cause interference or noise on other signals.

3.7. Mission Scenario and Operations Testing

In addition to regular testing during software development [22], the final set of tests on the payload was to run through realistic mission scenarios to verify the selected operational modes. This testing began with the FlatSat BBM and continued through to the payload QM, occurring concurrently with environmental testing. Here, flight software was run using orbital parameters to estimate energy harvesting time (solar), ground station communication pass windows (NTNU will be using stations at NTNU in Trondheim and Svalbard), and typical data package transfer duration from the satellite (requirement R.10, Table 2). The operators had to plan the mission execution and perform a set of tasks and procedures during each simulated pass. These tests included more people and more of the mission-realistic infrastructure and proved helpful in identifying performance issues stemming from changing data signal paths, as well as issues with the operator interface. Additionally, the ground station at NTNU was tested through each step of the development with actual satellite overpasses.

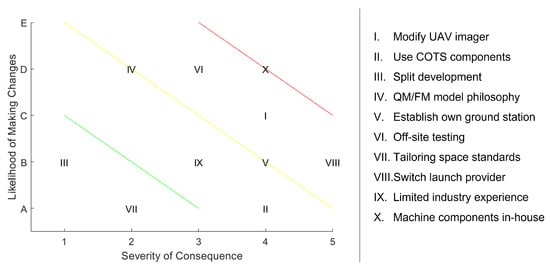

4. Discussion and Implications

Several key decisions were made that influenced the design and development of HYPSO-1. Each decision was a calculated risk that inherently affected the project and, specifically, the AIT procedure. All risks combined make up the unique approach of this project’s strategy. The following decisions (risks) had the largest influence on the project outcome.

I. Modify a UAV Imager for Space

The optical design of the hyperspectral imager was established before the start of the project, so only modifications and adaptations for use in space were required. Since the original design was intended for handheld and Unmanned Aerial Vehicle (UAV) use [18,53], the greatest change was in observational target distance, from about 50 m above the ground to about 500 km in orbit. Observing from space meant that a greater throughput (light that reaches the sensor) was needed, so larger optics were required [19]. The original imager designer was part of the project, so these modifications were relatively simple and fully supported internally. The CubeSat needed to be sized according to the imager, which dictated a minimum size of 6U. A transmission grating hyperspectral imager is a chain of optics and is therefore difficult to miniaturize further in the length dimension; however, other types of hyperspectral designs can be more compact, such as the Three-Mirror Anastigmat telescope (TMA) design of HyperScout [54]. One remaining advantage of using the modified imager is that the team already had experience working with and testing it through UAV campaigns. A basic understanding of the technology therefore existed internally before work on the satellite began, and the team could already work with the known advantages and limitations of the specific design.

II. Use COTS Components

Several COTS components were selected for the final design, including the precision-cut slit and grating, the lens objectives, the camera detectors, the wiring connectors, and the electronics boards. These components require specialized equipment and skill to manufacture. The benefits of making small, “space-proofing” modifications greatly outweighed the consequences of creating each component from scratch with internal resources. Most likely, this was also the less expensive option considering that labor costs in Norway are relatively high. However, there are also drawbacks to using COTS components. Acquisition and shipping were difficult to control and often delayed, and sometimes mistakes were made. Occasionally, parts arrived with unexpected import fees and taxes. Materials lists, structural composition, and thermo-optical properties were almost always proprietary to the supplier, so components needed to be evaluated, sometimes fully disassembled, and all were tested for outgassing. Supplier product support was sometimes helpful, but more often very limited.

III. Split Development

Early in the project, it was decided to split the satellite development into payload and bus responsibilities. This enabled NTNU to focus on delivering scientific results rather than spending time developing satellite bus capabilities from scratch. This was especially important considering that HYPSO-1 is NTNU’s first research satellite. It was an attempt to limit the risk of basic infrastructure failure since the purchased bus was already space-proven. This also enabled the team to divide the work amongst the available students per semester; designing the entire satellite would have required more people and/or time. Additionally, the division proved very useful for external review since both teams were able to volley questions back and forth and challenge accepted internal standards. During integration of the payload at NanoAvionics, it was discovered that the threading of some holes in the payload platform was missing, that some threading was too short, and that the NanoAvionics CAD model had changed after the payload had been shipped. All of these issues affected integration time and could have been anticipated through a more thorough pre-shipping inspection and better communication between partners.

IV. QM/FM Model Philosophy

Many CubeSat teams opt for a Protoflight Model approach in order to speed up assembly, integration, and testing time frames. However, this induces higher risk since the Protoflight Model will inherently be handled much more and tested more rigorously than a dedicated Flight Model. In turn, this can affect the budget and scheduling as well. Due primarily to the COVID-19 outbreak, the team had to postpone the HYPSO-1 launch. With the extended timeline, it was decided to limit risk through the model philosophy by creating a dedicated QM and FM for the project.

V. Establish Own Ground Station