Abstract

Rice is one of the most important food crops for human beings. The timely and accurate understanding of the distribution of rice can provide an important scientific basis for food security, agricultural policy formulation, and regional development planning. As an active remote sensing system, polarimetric synthetic aperture radar (PolSAR) has the advantage of working both day and night and in all weather conditions and hence plays an important role in rice growing area identification. This paper focuses on the topic of rice planting area identification using multi-temporal PolSAR images and a deep learning method. A rice planting area identification attention U-Net (RIAU-Net) model is proposed, which is trained by multi-temporal Sentinel-1 dual-polarimetric images acquired in different periods of rice growth. In addition, considering the diversity of the rice growth period in different years caused by the different climatic conditions and other factors, a transfer mechanism is investigated to apply the well-trained model to monitor the rice planting areas in different years. The experimental results show that the proposed method can significantly improve the classification accuracy, with 11–14% F1-score improvement compared with the traditional methods and a pleasing generalization ability in different years. Moreover, the classified rice planting regions are continuous. For reproducibility, the source codes of the well-trained RIAU-Net model are provided.

1. Introduction

Rice is one of the most important food crops for human beings. To ensure food security, long-term inter-annual monitoring of rice is necessary, and is important for understanding the distribution of rice, which is the basis for making decisions on agricultural management [1,2]. The development of remote sensing technology and the ongoing research into crop classification using satellite remote sensing images allows us to obtain frequent Earth observation data for a long period, providing the possibility to monitor rice distribution at a large scale [3,4]. Compared with optical satellite sensors, polarimetric synthetic aperture radar (PolSAR) systems have the advantage of working both day and night and in all weather conditions and hence play an important role in crop cultivation area monitoring [5].

Early studies of rice growing area identification with SAR images mainly focused on using the SAR amplitude or intensity information [6,7,8,9,10,11,12]. In [6], the scattering properties of different crop types from Sentinel-1 VV-VH data of southern France were analyzed, and the distribution information for rice and other crop types was obtained. Wu et al. [8] used fully polarimetric SAR images to analyze the correlation between the backscattering coefficients of different polarimetric channels and the rice growth period and found that the backscattering coefficients of the cross-polarimetric channels are highly correlated with rice growth. In [11], both double- and single-crop rice in Hanoi, Vietnam, were mapped using a time series of Sentinel-1 SAR amplitude imagery. The above studies have confirmed the feasibility of using PolSAR imagery to monitor rice. However, these studies did not fully utilize the phase information of the PolSAR data, which is useful for characterizing the scattering mechanisms of rice and for improving the accuracy of rice monitoring.

To fully exploit the scattering mechanisms of rice, a number of rice classification algorithms using polarimetric information from PolSAR data have been presented in recent decades [13,14,15,16,17,18,19,20]. For example, Xie et al. [13] investigated the capability of fully polarimetric SAR data for rice mapping and explored the relationships between the fully polarimetric information and rice biophysical parameters. A modified entropy-alpha decomposition method was then proposed to analyze the temporal scattering behavior of rice in the different growth periods. Using a thresholding technique and a support vector machine (SVM), Hoang et al. [14] investigated the potential of RADARSAT-2 images in identifying rice fields over a large and fragmented land-use area. A random forest (RF) algorithm-based crop phenology monitoring method was proposed by Wang et al. [15] using polarimetric decomposition and multi-temporal RADARSAT-2 data. Emile et al. [18] used Sentinel-1 data with the classical machine learning techniques of multiple linear regression (MLR), support vector regression (SVR), and RF to estimate rice height and dry biomass. To build a feasible phenology classification scheme for paddy rice, a method combining SVM, k-nearest neighbor, and decision tree classifiers was proposed in [20].

In recent years, with the rapid development of geospatial data and computer vision technology, deep learning has been widely applied in the field of remote sensing image classification [21]. Deep learning models can learn and reflect the essential features of the input data and enhance the recognition ability for feature information, which can help to improve the classification performance for rice [22,23,24,25,26,27]. Considering that the backscattering signals of SAR image data are sensitive to the crop phenological period, Pang et al. [22] presented a method based on multi-temporal Sentinel-1A datasets and a fully convolutional neural network classification model to dynamically monitor crop growth and related phenological traits. In [24], the authors used multi-temporal Sentinel-1 SAR intensity data and a two-dimensional convolutional neural network architecture to classify crops and found that deep learning techniques have the potential to recognize complex phenological traits from the available SAR data. An adaptive U-Net method was proposed by Wei et al. [27] to identify rice from Sentinel-1 images using the spatial distribution traits and the amplitude information of the backscattering signals of the rice.

The above research has shown that deep learning has notable advantages in rice planting area identification, but the current studies still have some drawbacks. On the one hand, most of the existing deep-learning-based rice monitoring methods mainly use the amplitude or intensity information in SAR data and ignore the other polarimetric information, such as the phase and polarimetric scattering mechanisms, which can better reflect the scattering characteristics of rice. Clearly, the above problem can limit the interpretation reliability. On the other hand, the general framework of the previous studies is to train the rice identification network using sample data from specific months and then directly apply the well-trained network to identify the rice planting areas in other years by the images of the corresponding months. However, due to different climatic conditions, the scattering characteristics of rice can vary in the same growth period of different years, which results in an unsatisfactory generalization capability and interpretation results, especially when the changes are significant.

In this paper, to address the above drawbacks, we propose a rice growing area identification method based on the combination of multi-temporal Sentinel-1 PolSAR data and an attention U-Net model. The proposed rice planting area identification attention U-Net (RIAU-Net) model exploits the rich scattering traits of rice during the different growth periods by using Sentinel-1 dual-polarimetric images acquired in specific months. In addition, considering that the scattering characteristics of rice can vary in the same growth period of different years due to different climatic conditions, a transfer mechanism is introduced to guarantee the good generalization capability of the model. The mechanism is based on a similar image pair matching strategy, which is used to find similar image pairs between the dataset used for the training of the network and that used for the rice cultivation area identification.

2. PolSAR Datasets and Study Area

2.1. Dual-Polarimetric SAR Data and Polarimetric Decomposition

The rich polarimetric information contained in PolSAR images has great potential for the characterization of surface land-cover types. For fully polarimetric SAR imagery, the backscattering signal of each pixel contains both amplitude and phase information, which can be represented by a 2 × 2 complex scattering matrix S [28,29]:

$$ S = \begin{bmatrix} S_{HH} & S_{HV} \\ S_{VH} & S_{VV} \end{bmatrix} \tag{1} $$

with:

$$ S_{pq} = |S_{pq}| e^{j\varphi_{pq}}, \quad p, q \in \{H, V\} \tag{2} $$

where the $S_{VH}$ and $S_{HV}$ terms are the cross-polarization channels and the $S_{HH}$ and $S_{VV}$ terms are the co-polarization channels. $|S_{pq}|$ denotes the amplitude, $\varphi_{pq}$ represents the phase, and $j$ is the imaginary unit. Under a monostatic case, the backscattering matrix satisfies the reciprocity theorem, namely, $S_{VH} = S_{HV}$.

The Sentinel-1 datasets used in this study were dual-polarimetric images with VV and VH polarization, for which the scattering matrix is defined as:

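The corresponding target scattering vector, k, can be expressed as:

$$ k = [S_{VV}, S_{VH}]^{T} \tag{4} $$

The Sentinel-1 dual-polarimetric SAR data can also be represented by the following polarimetric covariance matrix:

$$ C = \langle k k^{\dagger} \rangle = \begin{bmatrix} \langle |S_{VV}|^{2} \rangle & \langle S_{VV} S_{VH}^{*} \rangle \\ \langle S_{VH} S_{VV}^{*} \rangle & \langle |S_{VH}|^{2} \rangle \end{bmatrix} \tag{5} $$

where $\dagger$ represents the conjugate transpose, $*$ is the conjugate operator, $T$ is the transpose, and $\langle \cdot \rangle$ denotes spatial (multi-look) averaging.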
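As an illustration of how the elements of the covariance matrix can be estimated from the two complex channels, the following minimal NumPy sketch averages the per-pixel products over a small window. It assumes the scattering vector ordering k = [S_VV, S_VH]^T and a 3 × 3 boxcar window; the function names `boxcar` and `covariance_c2` are hypothetical, and the sketch is not the processing chain used in this study.

```python
import numpy as np

def boxcar(img, win=3):
    """Average img over a win x win neighbourhood (edges use reflection padding)."""
    pad = win // 2
    padded = np.pad(img, pad, mode="reflect")
    out = np.zeros(img.shape, dtype=padded.dtype)
    for dy in range(win):
        for dx in range(win):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (win * win)

def covariance_c2(s_vv, s_vh, win=3):
    """Return (C11, C22, C12) of the dual-pol covariance matrix (assumed k = [S_VV, S_VH]^T)."""
    c11 = boxcar(np.abs(s_vv) ** 2, win)      # <|S_VV|^2>, real-valued
    c22 = boxcar(np.abs(s_vh) ** 2, win)      # <|S_VH|^2>, real-valued
    c12 = boxcar(s_vv * np.conj(s_vh), win)   # <S_VV S_VH*>, complex-valued
    return c11, c22, c12
```

The diagonal terms carry the intensity of each channel, whereas the off-diagonal term retains the relative phase between VV and VH, which is the information that intensity-only methods discard.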

The polarimetric target decomposition technique has been used in the applications of PolSAR image since the 1990s and plays an important role in target detection, classification, and geophysical parameter retrieval [30,31]. As some supplements to the original PolSAR data, the polarimetric decomposition parameters can directly reflect the physical scattering mechanisms of the targets and are helpful for the identification of rice planting areas. In order to make full use of the polarimetric information of Sentinel-1 images in rice identification, we obtained the polarimetric decomposition parameters (the polarimetric entropy (H), polarimetric anisotropy (A), and mean scattering angle (α)) from the Sentinel-1 dual-polarimetric data by adopting H/A/Alpha decomposition [32].

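The covariance matrix, C, is a positive semidefinite matrix [33]. Therefore, it can be decomposed into the weighted sum of two mutually orthogonal covariance matrices, which are denoted as:

$$ C = \sum_{i=1}^{z} \lambda_i u_i u_i^{\dagger} \tag{6} $$

where the real number, $\lambda_i$, represents the i-th eigenvalue of the polarimetric covariance matrix, C; $u_i$ represents the corresponding eigenvector, with $u_i^{\dagger}$ being its conjugate transpose; and z is the rank of the matrix (z = 2 for the dual-polarimetric case).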

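The decomposition parameter, H, describes the disorder of the various different scattering types (i.e., the randomness of the scattering) with the following formula:

$$ H = -\sum_{i=1}^{z} p_i \log_{z} p_i \tag{7} $$

with:

$$ p_i = \frac{\lambda_i}{\sum_{j=1}^{z} \lambda_j} \tag{8} $$

where $\lambda_i$ is the i-th eigenvalue of C and z is the rank of the matrix. A high H value indicates that the scattering randomness of the target is high.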

Although H is an effective scalar feature to describe the randomness of scattering problems, it cannot completely describe the ratio relation of eigenvalues. Hence, parameter A is a good supplement, which is defined as:

$$ A = \frac{\lambda_1 - \lambda_2}{\lambda_1 + \lambda_2}, \quad \lambda_1 \geq \lambda_2 \tag{9} $$

A higher A value means that the back-scattering signal of the dominant scattering mechanism is much higher.

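α is the key parameter used to identify the main scattering mechanism with the following formula:

$$ \alpha = \sum_{i=1}^{z} p_i \alpha_i \tag{10} $$

where $p_i$ is the normalized i-th eigenvalue and $\alpha_i$ is the scattering angle derived from the i-th eigenvector.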

Parameter α is directly related to the average physical scattering mechanism, and thus the observed quantity can be related to the physical properties of the medium. A lower α value indicates that the main scattering mechanism is single scattering, and a medium or higher α value denotes that the main scattering mechanism is double scattering [34]. In practical application, the H/A/Alpha parameters are obtained by the PolSARpro software, which can process PolSAR data and undertake the task of PolSAR image classification using traditional methods.
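The decomposition described above can be sketched for a single pixel as follows. This is a minimal NumPy illustration and not the PolSARpro implementation used in this study; the function name `h_a_alpha_dual` is hypothetical, and taking α_i as the arccosine of the magnitude of the first eigenvector component is an assumed (commonly used) convention.

```python
import numpy as np

def h_a_alpha_dual(c11, c22, c12):
    """Entropy, anisotropy and mean alpha (degrees) for one 2 x 2 covariance matrix."""
    c = np.array([[c11, c12], [np.conj(c12), c22]], dtype=complex)
    eigvals, eigvecs = np.linalg.eigh(c)        # eigenvalues in ascending order
    lam = np.clip(eigvals[::-1], 0.0, None)     # reorder so that lambda_1 >= lambda_2 >= 0
    vecs = eigvecs[:, ::-1]                     # eigenvector columns in the same order
    total = lam.sum()
    if total <= 0:
        return 0.0, 0.0, 0.0
    p = lam / total                             # normalized eigenvalues
    nz = p > 0                                  # skip zero terms (0 * log 0 := 0)
    entropy = float(-np.sum(p[nz] * np.log2(p[nz])))
    anisotropy = float((lam[0] - lam[1]) / total)
    # Assumed convention: alpha_i = arccos(|first component of eigenvector i|).
    alpha_i = np.degrees(np.arccos(np.clip(np.abs(vecs[0, :]), 0.0, 1.0)))
    alpha = float(np.sum(p * alpha_i))
    return entropy, anisotropy, alpha
```

For two equal eigenvalues the sketch returns H = 1 and A = 0 (fully random scattering), while a single non-zero eigenvalue gives H = 0 and A = 1 (one dominant mechanism).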

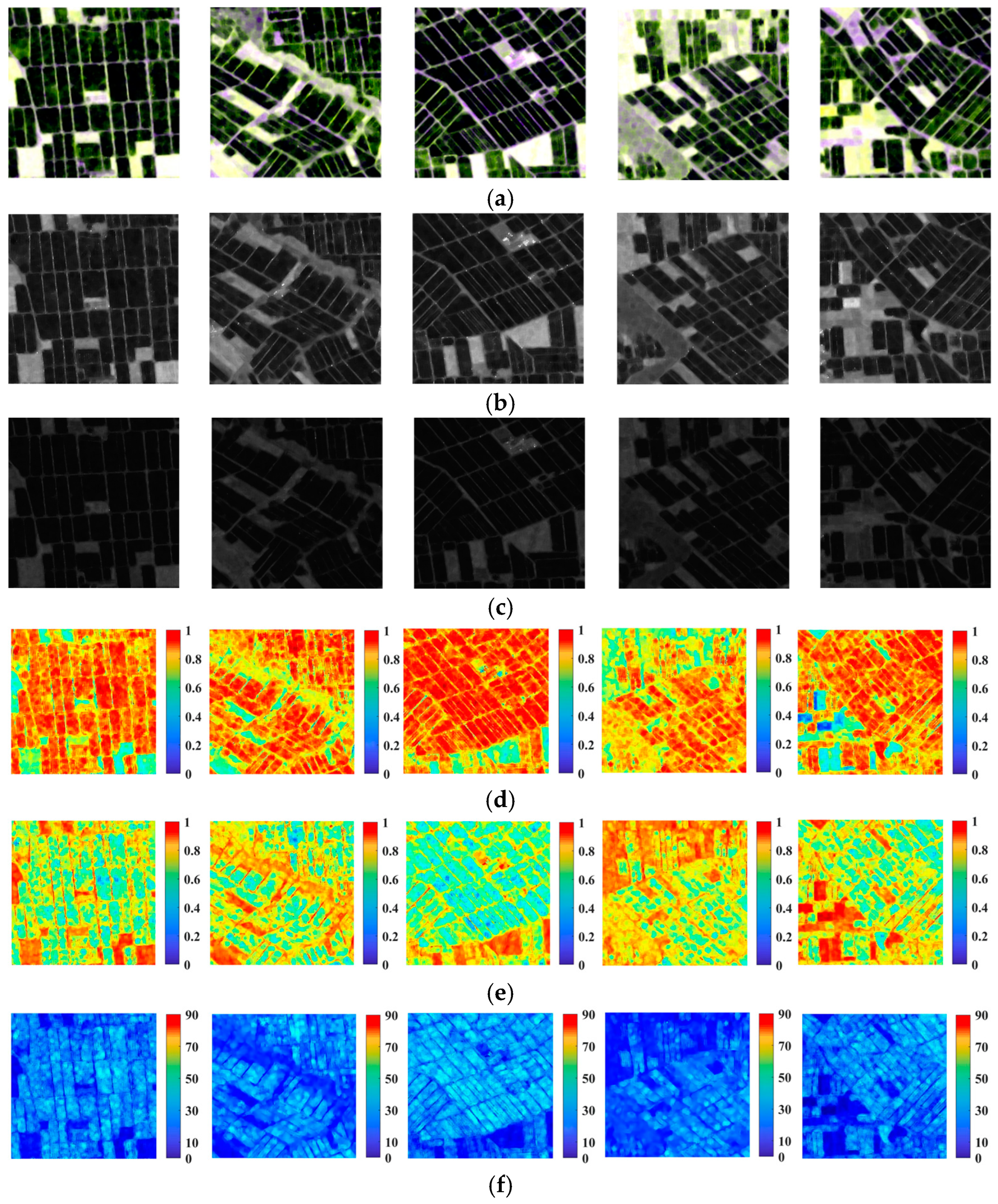

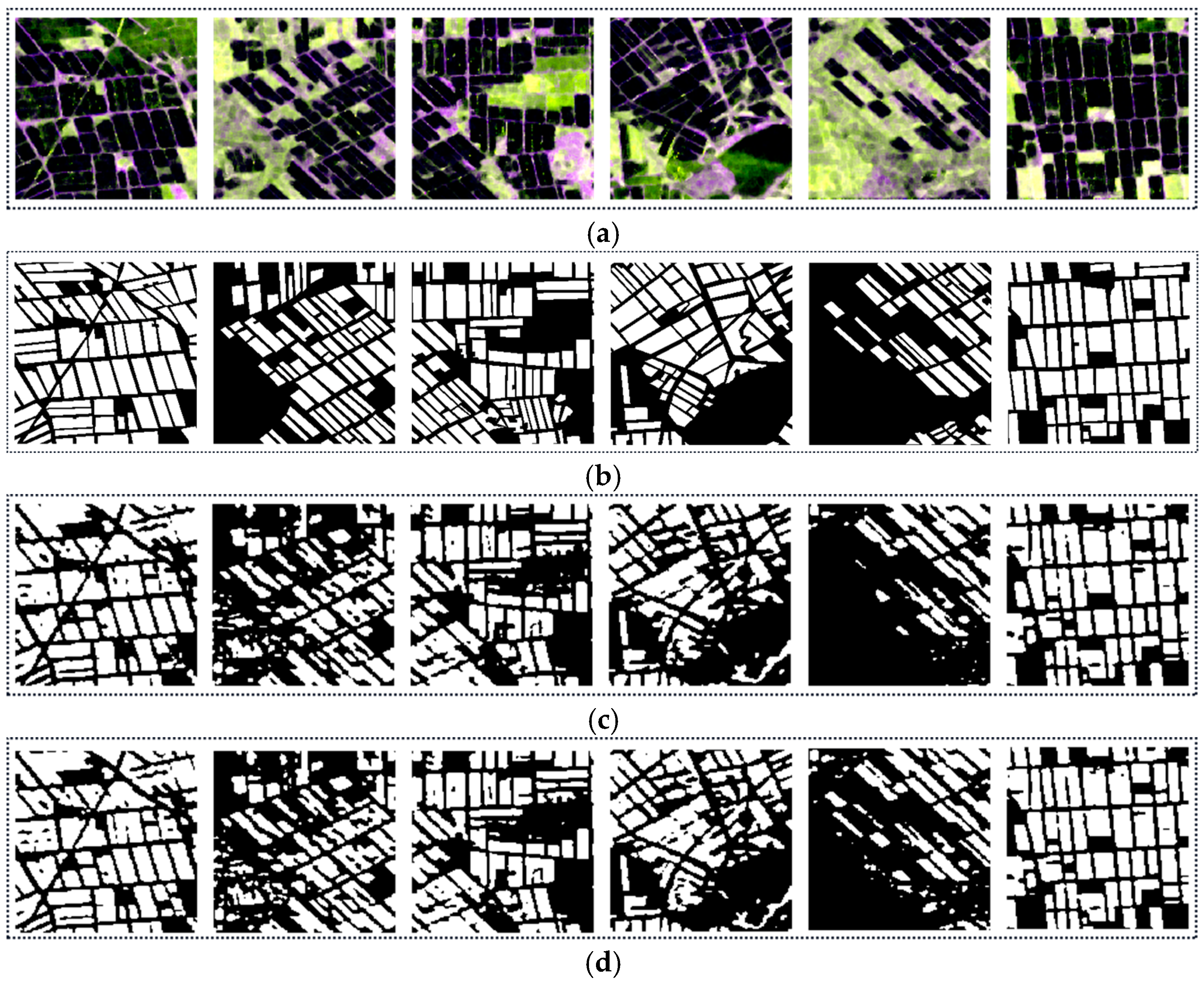

To visualize the scattering traits of rice, we display some Sentinel-1 images in Figure 1a, where the main land-cover types are rice planting field and bare land. The corresponding intensity images and H/A/Alpha decomposition images are also displayed. As can be seen, the rice planting fields are generally distinguishable from the surrounding background objects (bare land or water) in the decomposition images. However, in the intensity images, the boundaries between the rice planting areas and the backgrounds are blurred, especially in the VH intensity image. In the entropy images, the planting fields generally have higher values than the background objects, which is because the signals of the background objects are mainly from single-bounce scattering, while the scattering traits of the planting fields are much more complex. In the anisotropy images, the background objects have higher values, which is because the second-largest eigenvalue in the polarimetric matrix (see Equations (6) and (9)) is much lower than the largest one. In the alpha images, the planting fields have higher values due to the fact that more double-bounce signals are returned by the plant canopy.

Figure 1. Sentinel-1 SAR sub-images of the Sanjiang Plain. (a) Pauli RGB composite images. (b) VV images. (c) VH images. (d–f) The corresponding polarimetric entropy images, anisotropy images, and scattering angle images, respectively.

2.2. Study Area and Data Source

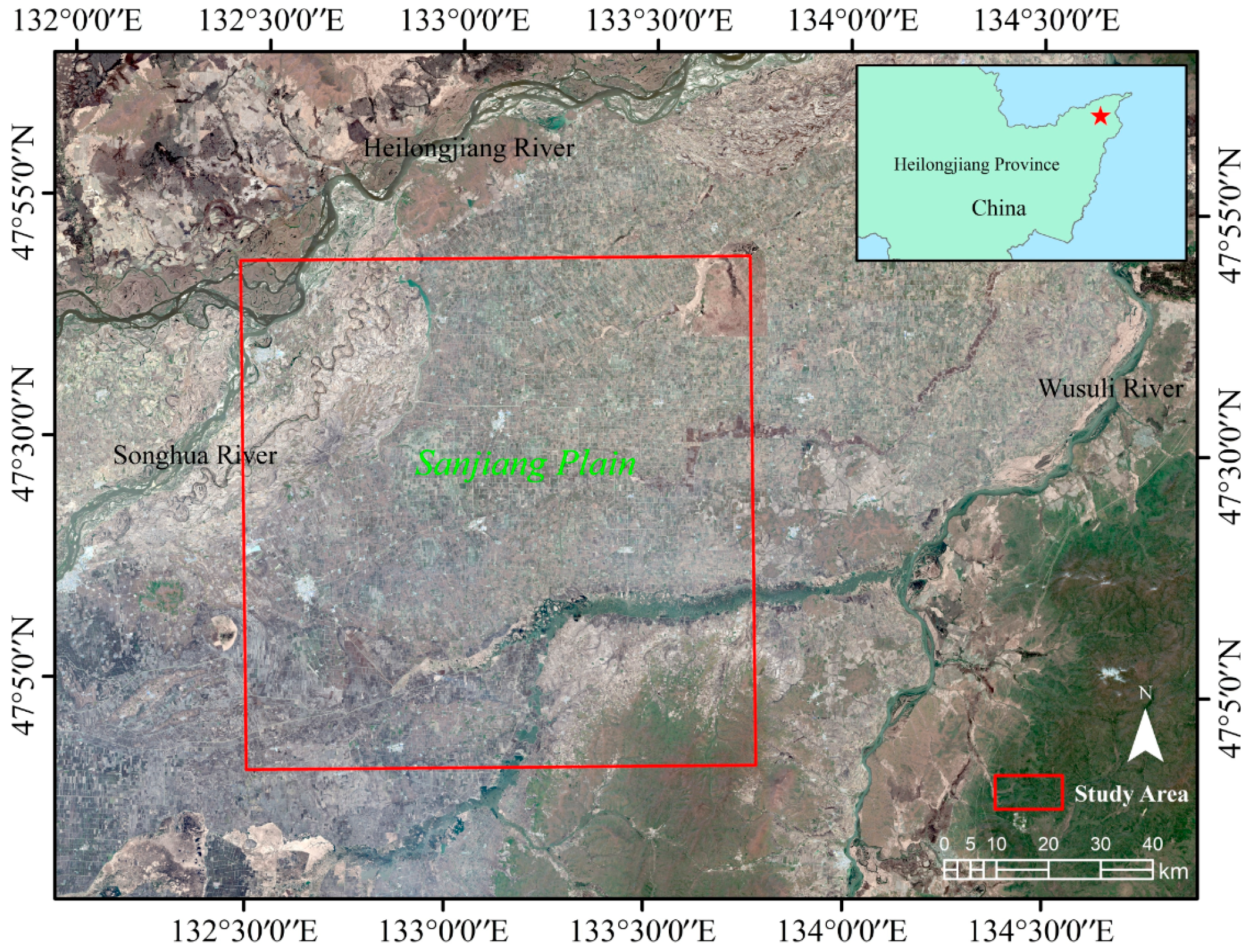

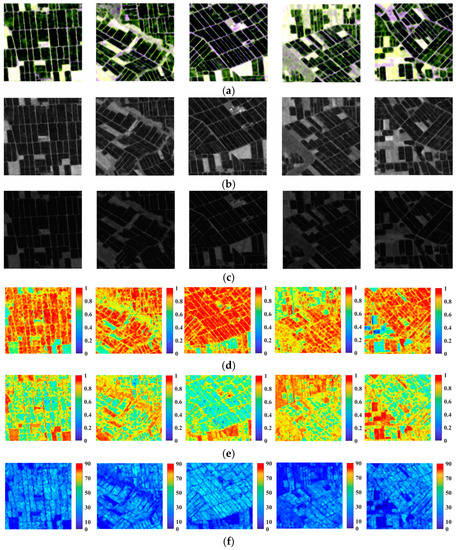

The northeastern part of the Sanjiang Plain of China was taken as the study area (Figure 2), which was formed by the alluvial deposits of the Heilongjiang River, Wusuli River, and Songhua River. The Sanjiang Plain has flat and fertile land with abundant water resources. The Sanjiang Plain has a temperate humid and semi-humid continental monsoon climate, with the average temperature of the hottest month being above 22 °C, making it suitable for rice cultivation. The Sanjiang Plain has become an important commodity grain production base in China. Due to its special geographical location and climatic conditions, the Sanjiang Plain is able to meet the requirements for a one-crop-a-year agricultural production system.

Figure 2. Map of the study area.

Early maturing single-crop paddy rice is grown in the Sanjiang Plain and is usually sown in early–mid April and transplanted in early May each year. The rice matures and begins to be harvested between September and October, with a growth cycle of about 200–240 days. A high degree of mechanized agricultural production has been adopted in the flat terrain and vast area of the Sanjiang Plain.

In this study, to identify the rice planting areas in the Sanjiang Plain, Sentinel-1 images from the different rice growing seasons were used. The Sentinel-1 radar mission is made up of Earth observation satellites for environmental and safety monitoring, which were launched by the European Space Agency (ESA). The mission consists of two polar-orbiting satellites, Sentinel-1 A and B, which carry C-band synthetic aperture radar sensors. Sentinel-1 works in four imaging modes, namely, stripmap (SM), interferometric wide (IW) swath, extra wide (EW) swath, and wave (WV). The Sentinel-1 mission is thus able to provide dual-polarimetric images with a high spatial resolution and short visit cycle that are suitable for observing rice paddy fields in large regions. The Sentinel-1 single look complex (SLC) data used in this study were downloaded from the ESA data distribution website (https://scihub.esa.int, accessed on 10 May 2022). The basic product information is listed in Table 1.

Table 1. Basic information of the Sentinel-1 data source.

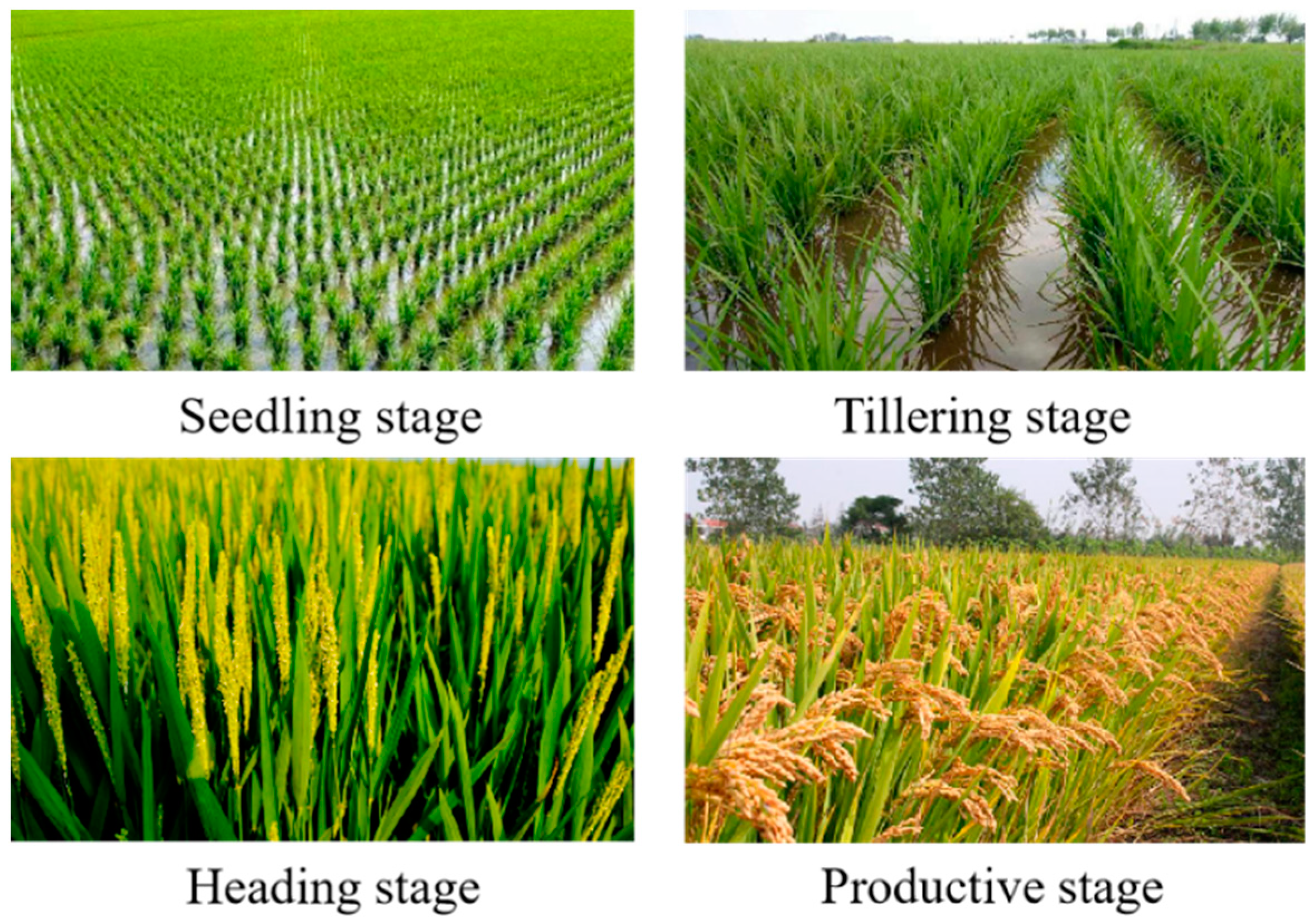

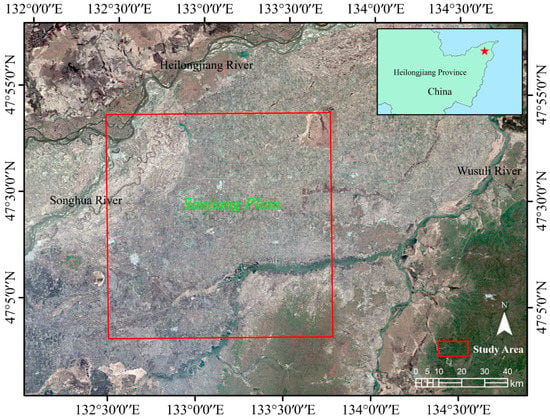

For SAR data acquired in a certain season, the scattering traits of rice are typically similar to those of some other plants. That is to say, it is difficult to effectively distinguish rice from objects with similar scattering characteristics using single-temporal imagery. Therefore, using multi-temporal imagery becomes an effective means to improve the interpretation accuracy. In this study, to train the RIAU-Net model, five Sentinel-1 images of rice during the seedling stage, tillering stage, heading stage, and productive stage in the Sanjiang Plain in 2020 were selected, as shown in Figure 3 and Table 2.

Figure 3. Rice status during the different growth periods.

Table 2. The Sentinel-1 data used for training the proposed model.

3. The Rice Planting Area Identification Attention U-Net Model

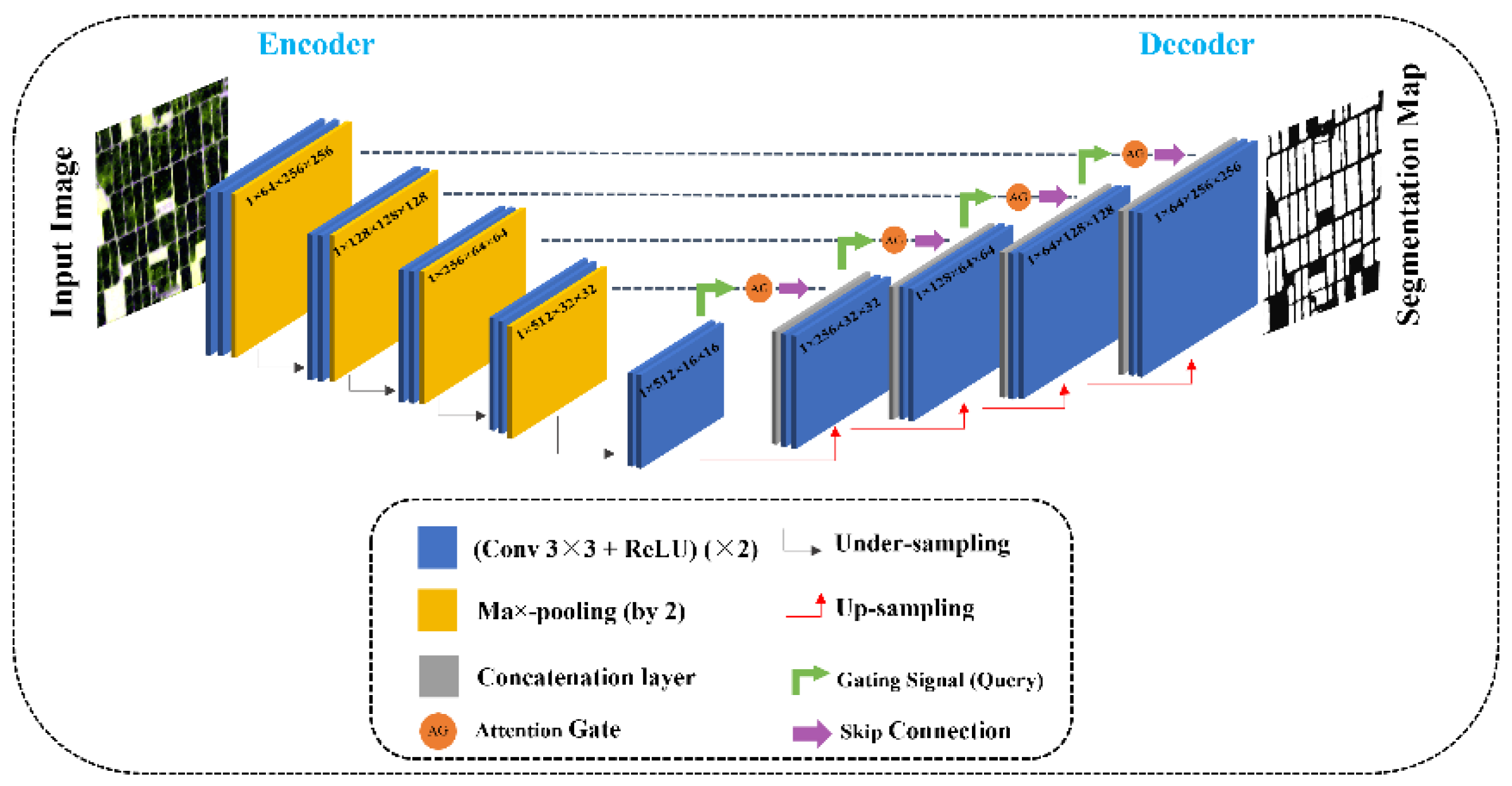

3.1. Basic Architecture of the RIAU-Net Model

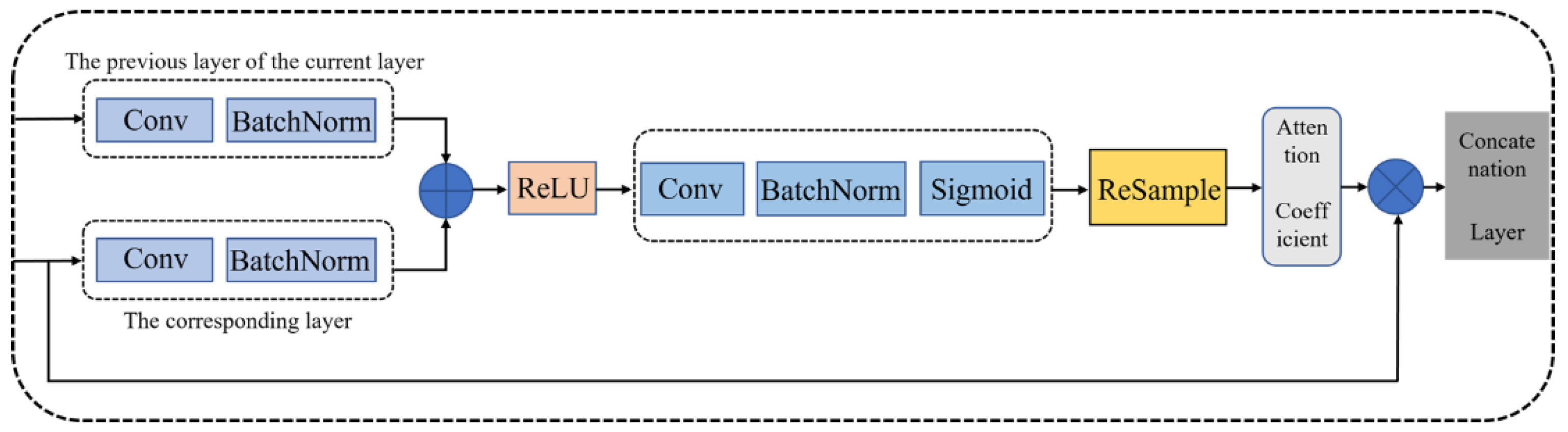

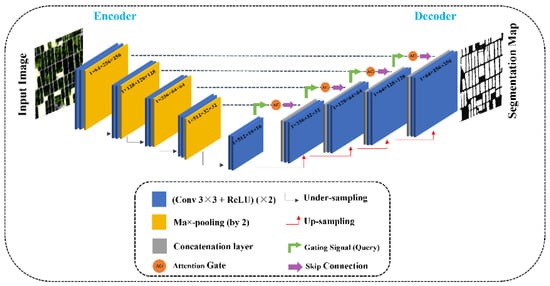

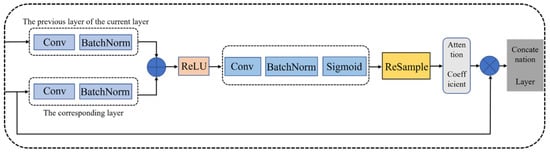

The proposed RIAU-Net model adds attention gates (AGs) [35] to the U-Net network architecture [36], which ensures that the model focuses more on feature extraction. To better distinguish rice from objects with similar scattering characteristics in a certain period of each year, we used multi-temporal polarimetric data of rice as the input data of the model. The structure of the RIAU-Net model [37] is shown in Figure 4, which includes two main parts: (1) a contraction path and (2) a symmetric expanding path. The contraction path is the encoder part, in which two 3 × 3 convolutional layers are repeatedly applied to each layer, with each followed by a rectified linear unit (ReLU) and a 2 × 2 max pooling operation with stride of 2 for down-sampling. At each down-sampling step, the number of feature channels is doubled, and the feature information (such as the location and semantics of the image) is extracted. The symmetric expanding path is the decoder part and performs the up-sampling operation, where each module contains a concatenation layer and two 3 × 3 convolutional layers to reduce the number of feature channels by half, with each convolutional layer followed by the same ReLU. In the meantime, the symmetric skip connection structure connects the contraction path, the corresponding layer of the symmetric expanding path, and the AGs. In the up-sampling stage, the previous layer of the current layer and the corresponding layer are connected to the AGs. The concatenation layer is obtained after processing the feature information of the two layers inside the AGs modules (Figure 5). The purpose of adding the AGs to the U-Net is to focus the model’s attention on the feature targets in the image, thus improving the network’s ability to extract features in the receptive field [38]. 
AGs can help the RIAU-Net model to effectively focus on the salient regions and suppress the features of irrelevant regions so that the model can obtain better contextual semantic information and make predictions to improve the sensitivity and accuracy of the model.
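To make the role of the AGs more concrete, the following NumPy sketch shows one additive attention gate acting on a skip-connection feature map. It is a simplified illustration under stated assumptions and not the RIAU-Net implementation: the gating signal is assumed to have already been resampled to the spatial size of the skip feature (the resampling steps are omitted), the weights are arbitrary arrays supplied by the caller, and the names `conv1x1` and `attention_gate` are hypothetical.

```python
import numpy as np

def conv1x1(x, w, b):
    """1 x 1 convolution: x is (C_in, H, W), w is (C_out, C_in), b is (C_out,)."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]

def attention_gate(skip, gate, w_x, w_g, b_int, w_psi, b_psi):
    """Re-weight skip features with attention coefficients in (0, 1)."""
    theta = conv1x1(skip, w_x, np.zeros(w_x.shape[0]))   # project skip features
    phi = conv1x1(gate, w_g, b_int)                      # project gating signal
    f = np.maximum(theta + phi, 0.0)                     # additive attention + ReLU
    psi = 1.0 / (1.0 + np.exp(-conv1x1(f, w_psi, b_psi)))  # sigmoid, shape (1, H, W)
    return skip * psi, psi                               # broadcast over channels
```

Because every coefficient lies between 0 and 1, the gate can only attenuate skip features; regions that the gating signal marks as irrelevant are suppressed before the concatenation layer.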

Figure 4. The architecture of the RIAU-Net model.

Figure 5. The internal AG modules in the RIAU-Net model.

3.2. Transfer Mechanism

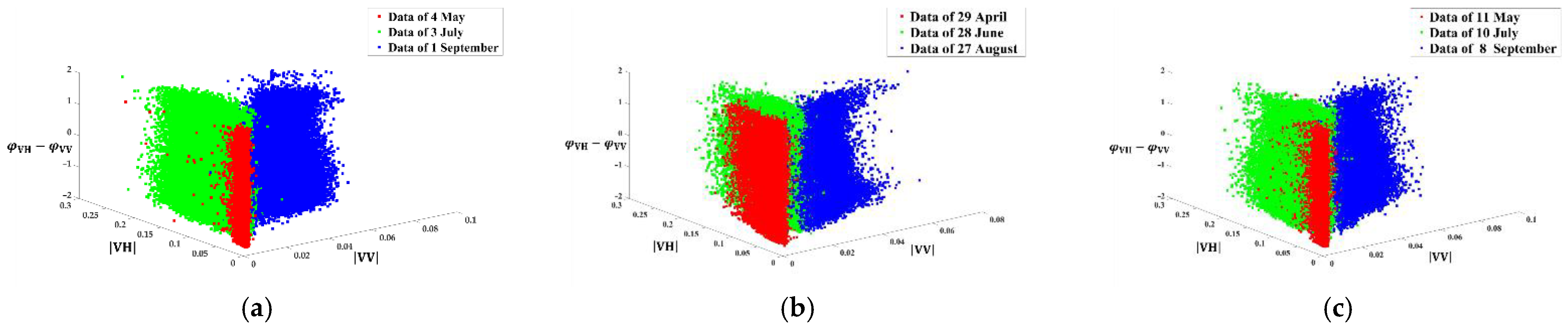

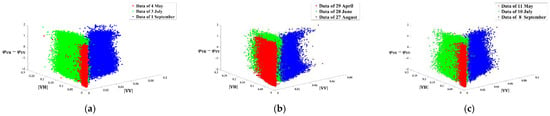

In this study, the proposed RIAU-Net model was trained using images from the year 2020. A simple and fast way to identify the areas of rice in other years is to directly apply the well-trained network based on the temporally close principle, i.e., the input images between the training process in 2020 and the interpretation process in other years are temporally close to each other. However, the scattering traits of rice can vary in the same growth period of different years as a result of certain factors such as different climatic conditions. To illustrate the differences in the scattering traits of rice in the same growth period of different years, scatter plots of the scattering traits of rice in different seasons of different years are shown in Figure 6. Taking the imaging time of the three images from 2020 as the reference (Figure 6a), the closest images in the temporal domain (2021) were selected. As can be seen, the distribution traits of the green dots in Figure 6a,b are generally similar, which means that the scattering traits of the rice in July of 2020 and 2021 are similar. However, notable differences can be observed for the red and blue dots, which means that the scattering traits of the rice in May and September of the two years are quite different. In such a case, the temporally close principle could result in an unsatisfactory interpretation of the results of the model, especially when the changes are significant.

Figure 6. Scatter plots of the scattering traits of rice in different seasons of different years, where the three axes represent the amplitudes of the VH and VV polarization and the phase difference between VH and VV, respectively. (a) Scatter plots of the data from 2020. (b) Scatter plots of the data from 2021, which are temporally close to the data from 2020. (c) Scatter plots of the data from 2021 matched by the similar image pair matching strategy.

To tackle the above issue, an alternative approach is to retrain the network for identifying rice planting areas using the images of other years. However, this is impractical because a large effort needs to be made to select training samples, and the retraining of the network is time-consuming. In this study, a transfer mechanism was adopted to apply the model trained by the images from 2020 to monitor the rice distribution areas in different years.

The main idea of the proposed transfer mechanism is that the input data should be adaptively adjusted during the interpretation process to make the model fit better in the new year. A similar image pair matching strategy was adopted to find similar image pairs between the dataset used for the training of the network and that used for the rice monitoring.

In this research, we deployed the Wishart likelihood-ratio test statistic to calculate the similarity between two pixels in the PolSAR images. The independent 2 × 2 Hermitian positive definite matrices (covariance matrices) X and Y are complex Wishart distributed matrices, and the Wishart likelihood-ratio test statistic can be deduced as follows [39,40]:

$$ Q = \frac{2^{4L} |X|^{L} |Y|^{L}}{|X + Y|^{2L}} \tag{11} $$

where L is the number of looks and $|\cdot|$ denotes the determinant. Taking the logarithm of (11) and discarding the constant terms, this can be calculated as:

$$ d(X, Y) = \ln|X| + \ln|Y| - 2\ln|X + Y| \tag{12} $$

To measure the scattering similarity between the rice planting areas of two images, the similarity between each pixel pair in the same location is calculated, and the mean value of the pixel similarity is taken as the scattering similarity between image pairs. The scatter plots of three images matched by the proposed method are shown in Figure 6c. Clearly, compared with Figure 6b, the distribution state of the dots in Figure 6c is much more in line with Figure 6a, which demonstrates the validity of the proposed similar image pair matching strategy.
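The matching procedure described above can be sketched as follows. This is a minimal NumPy illustration under several assumptions: each image is stored as a tuple (C11, C22, C12) of covariance elements, the per-pixel similarity is taken as ln|X| + ln|Y| − 2 ln|X + Y| (the log statistic without its constant terms, so that larger values mean more similar pixels), and the candidate with the highest mean similarity over the rice pixels is selected. The function names and the dictionary keys are hypothetical.

```python
import numpy as np

def det2(c11, c22, c12):
    """Determinant of 2 x 2 Hermitian matrices stored element-wise."""
    return c11 * c22 - np.abs(c12) ** 2

def log_wishart_similarity(x, y, eps=1e-12):
    """Per-pixel ln|X| + ln|Y| - 2 ln|X + Y| for images given as (c11, c22, c12)."""
    s = tuple(a + b for a, b in zip(x, y))      # element-wise X + Y
    return (np.log(det2(*x) + eps) + np.log(det2(*y) + eps)
            - 2.0 * np.log(det2(*s) + eps))

def best_match(reference, candidates, rice_mask):
    """Return the key of the candidate most similar to the reference over rice pixels."""
    scores = {key: float(np.mean(log_wishart_similarity(reference, img)[rice_mask]))
              for key, img in candidates.items()}
    return max(scores, key=scores.get), scores
```

In this form the similarity reaches its maximum of −4 ln 2 when the two covariance matrices are identical, so each 2020 training image would be paired with the candidate image of the new year whose mean score is closest to that value.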

4. Experimental Part

4.1. Experiment Details

In this study, to train the network, 400 samples with a patch size of 256 × 256 were selected from each image, where the ratio of the non-rice planting area to the rice planting area was about 3:1. To prevent over-fitting during training, data augmentation, i.e., deriving additional training samples from the existing data without acquiring new data, was applied: the RIAU-Net model randomly flipped all data vertically and horizontally during the training process. Expanding the limited dataset in this way improves the network training effect and enhances the generalization ability of the model. The number of samples was thereby increased to 1200. We randomly divided the samples into three separate parts, namely, the training, validation, and test parts, with a ratio of 5:2:3. It should be pointed out that, although only five Sentinel-1 images were used, the image size was large (more than 10,000 × 10,000 pixels), and the samples were further augmented as described above. Therefore, sufficient samples were available for the training of the model.
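The augmentation and the 5:2:3 split can be sketched as below. This is an illustrative example only: it assumes that each original patch contributes itself plus one vertically and one horizontally flipped copy (so that 400 patches become 1200), whereas the flipping in this study was applied randomly during training; the function names and the fixed seed are hypothetical. In practice, the label patches must be flipped in exactly the same way as the input patches.

```python
import random
import numpy as np

def augment_with_flips(patches):
    """Return each patch followed by its vertical and horizontal flips (3x the input)."""
    out = []
    for p in patches:                       # p has shape (channels, height, width)
        out.extend([p, np.flip(p, axis=-2), np.flip(p, axis=-1)])
    return out

def split_5_2_3(samples, seed=0):
    """Shuffle and split samples into training, validation and test parts (5:2:3)."""
    idx = list(range(len(samples)))
    random.Random(seed).shuffle(idx)
    n_train = len(idx) * 5 // 10
    n_val = len(idx) * 2 // 10
    train = [samples[i] for i in idx[:n_train]]
    val = [samples[i] for i in idx[n_train:n_train + n_val]]
    test = [samples[i] for i in idx[n_train + n_val:]]
    return train, val, test
```

With 1200 samples, this split yields 600 training, 240 validation, and 360 test samples.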

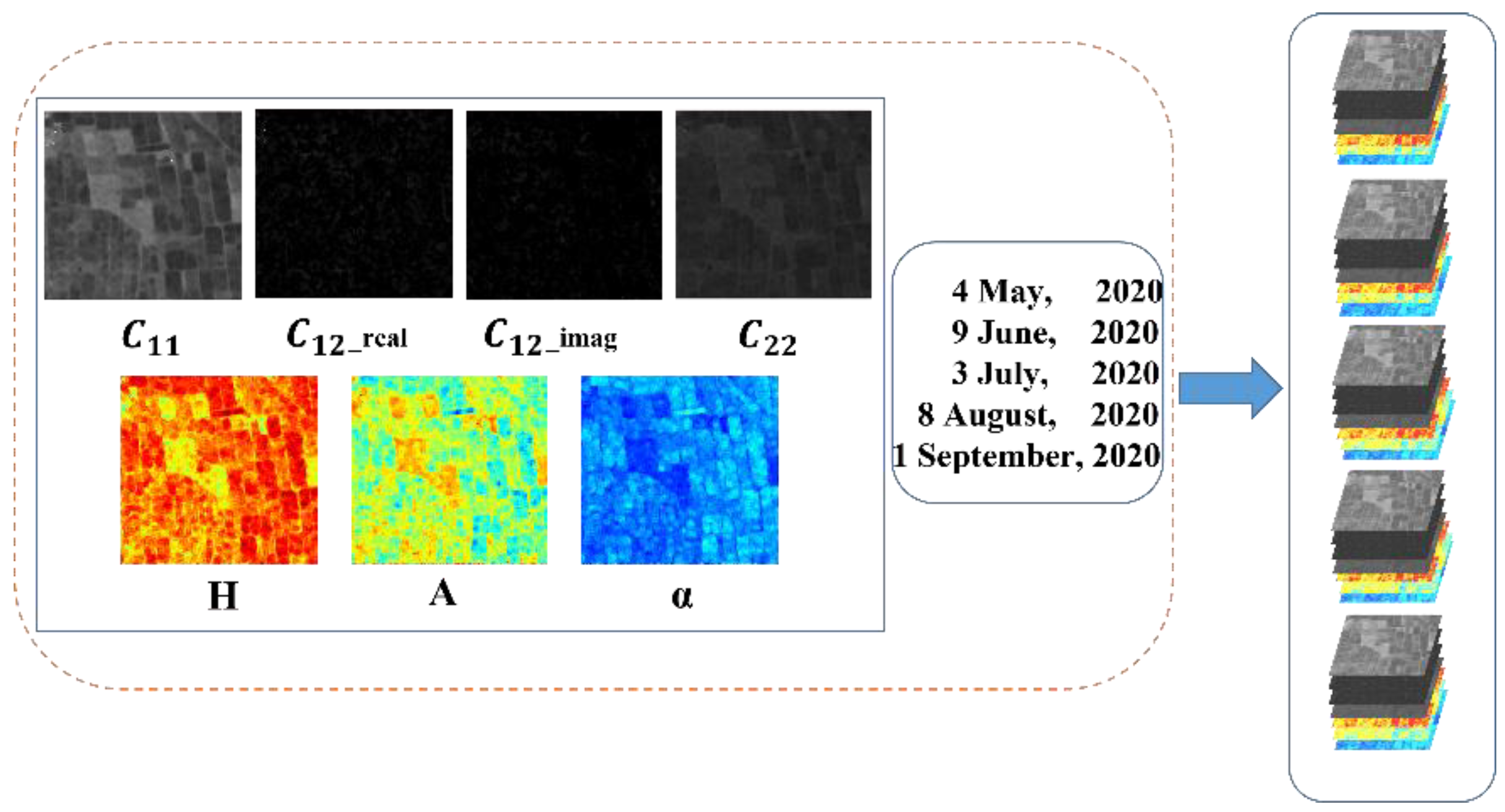

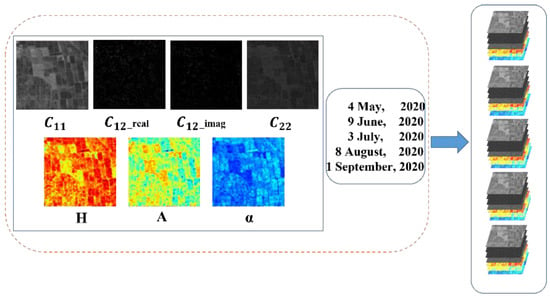

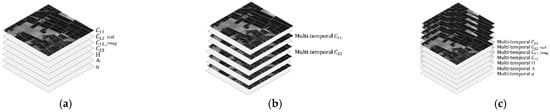

In the data preprocessing steps, geocoding and speckle reduction approaches were undertaken. The elements of the polarimetric covariance matrix and the H/A/Alpha polarimetric decomposition parameters after preprocessing were input into the network. That is to say, 35 data channels constructed from five images were input, as shown in Figure 7. The hardware and software configurations for the experiments are listed in Table 3.
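The construction of the 35-channel input can be sketched as follows. The sketch assumes seven feature maps per acquisition date (two real diagonal covariance elements, the real and imaginary parts of C12, and the three decomposition parameters), which is consistent with 35 channels from five images; the channel names other than C12_real and C12_imag, their ordering, and the function name `stack_dates` are assumptions made for illustration.

```python
import numpy as np

# Assumed per-date channel order; only C12_real and C12_imag are named in the text.
CHANNELS = ("C11", "C22", "C12_real", "C12_imag", "H", "A", "alpha")

def stack_dates(per_date_features):
    """Stack 7 feature maps per date into one (7 * n_dates, height, width) array."""
    layers = []
    for feats in per_date_features:         # one dict of 2-D arrays per acquisition date
        layers.extend(feats[name] for name in CHANNELS)
    return np.stack(layers, axis=0).astype(np.float32)
```

Splitting the complex element C12 into real and imaginary parts keeps the phase information while allowing a real-valued network to be used.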

Figure 7. The data input of the RIAU-Net model, where C12_real and C12_imag denote the real and imaginary part of C12.

Table 3. The hardware and software configurations for the experiments.

In order to achieve the optimal configuration of the model hyperparameters during training, we performed a large number of experiments to adjust the settings of the different hyperparameters. We found that the optimal results were obtained when the learning rate was set to 0.001 and the batch size was set to 10 with the Adam optimizer. The validation set was made up of two parts, namely, the images from 2020 and the images from 2021 (for reproducibility, the well-trained RIAU-Net model is available at https://github.com/Hzunyi/Rice-Identification.git, accessed on 5 August 2022).

4.2. Evaluation Metrics

In order to objectively assess the classification performance of the different rice identification methods, several evaluation indices are introduced in this paper: the overall accuracy (OA), precision, recall, and F1-score [41].

The OA represents the ratio of the number of samples correctly predicted by the model to the total number of samples, for which the formula is as follows:

$$ OA = \frac{TP + TN}{TP + TN + FP + FN} \tag{13} $$

where TP, TN, FP, and FN denote the number of true positive, true negative, false positive, and false negative samples, respectively.

Precision represents the proportion of the samples predicted as positive by the model that are truly positive, for which the formula is as follows:

$$ Precision = \frac{TP}{TP + FP} \tag{14} $$

Recall represents the proportion of the truly positive samples that are correctly classified:

$$ Recall = \frac{TP}{TP + FN} \tag{15} $$

The F1-score is the harmonic average of the model precision and recall, which is obtained with the following formula:

$$ F1 = \frac{2 \times Precision \times Recall}{Precision + Recall} \tag{16} $$
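For reference, the four indices can be computed from the confusion-matrix counts as in the following minimal Python sketch. The function name `classification_metrics` is hypothetical, and returning 0.0 when a denominator is zero is an assumption made to keep the example well defined.

```python
def classification_metrics(tp, tn, fp, fn):
    """Return (OA, precision, recall, F1-score) from confusion-matrix counts."""
    total = tp + tn + fp + fn
    oa = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return oa, precision, recall, f1
```

For example, with TP = 80, TN = 90, FP = 20, and FN = 10, the OA is 170/200 = 0.85, the precision is 80/100 = 0.80, the recall is 80/90 ≈ 0.889, and the F1-score is 160/190 ≈ 0.842.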

4.3. Experimental Results

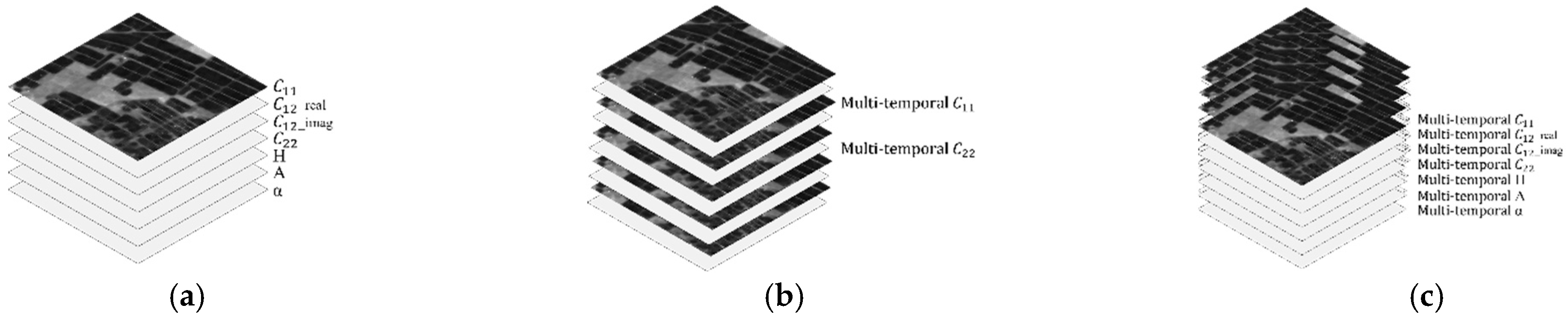

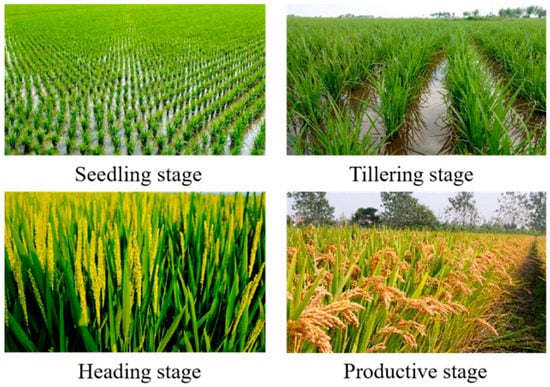

4.3.1. Comparison between Different Datasets

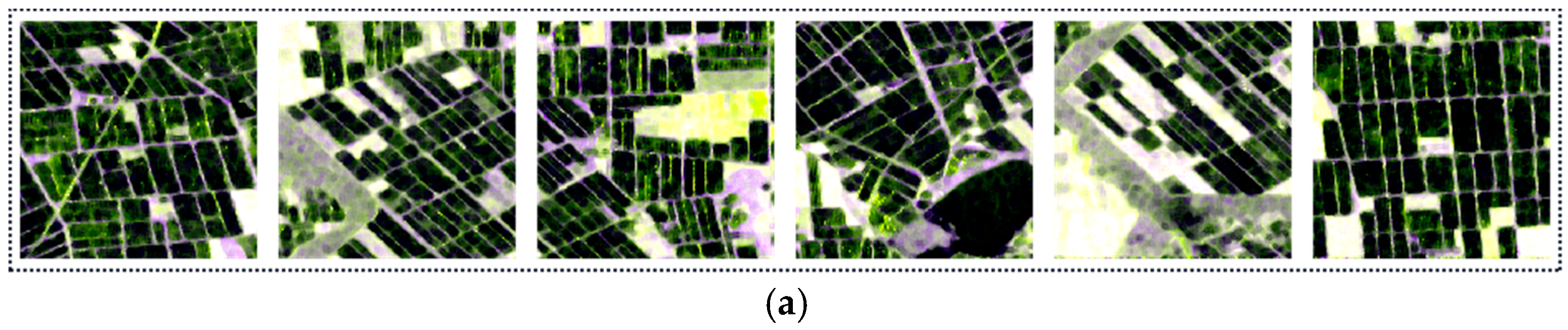

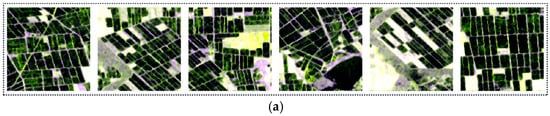

In this study, the multi-temporal Sentinel-1 dual-polarimetric data from the Sanjiang Plain were used to construct a multi-temporal dataset, which was then applied to the RIAU-Net model. The dataset included intensity information, phase information, and polarimetric decomposition parameters from the different growth periods of rice. To demonstrate the superiority of using multi-temporal and polarimetric information, we constructed three different datasets (Figure 8) for 2020, namely, a single-temporal polarimetric dataset, a multi-temporal intensity dataset, and a multi-temporal polarimetric dataset, and then used them to train the model. The corresponding results are shown in Figure 9, and the quantitative assessment results are listed in Table 4. By comparing the indices of the first and third rows in Table 4, we can see that employing a multi-temporal dataset can significantly improve the performance of the proposed model; by comparing the indices of the second and third rows in Table 4, we can see that employing more polarimetric information can also improve the performance of the proposed model to some degree. In summary, employing multi-temporal and rich polarimetric information can effectively improve the classification accuracy of the model. As can be seen in Figure 9c, the misclassification problem for the model trained by the single-temporal dataset is severe, which is because the scattering traits of rice are typically similar to those of some other plants in certain months.

Figure 8. The PolSAR dataset form. (a) The single-temporal polarimetric dataset. (b) The multi-temporal intensity dataset. (c) The multi-temporal polarimetric dataset.

Figure 9. The classification results of RIAU-Net for the different 2020 datasets. (a) The Sentinel-1 SAR images. (b) The label images. (c) The single-temporal polarimetric dataset. (d) The multi-temporal intensity dataset. (e) The multi-temporal polarimetric dataset.

Table 4. Results for the RIAU-Net model trained by the different datasets.

4.3.2. Comparison between RIAU-Net and Other Methods

In order to fully verify the classification performance of the proposed model, the RIAU-Net model was compared with traditional supervised classification methods (Wishart and SVM) and classical semantic segmentation algorithms (DeepLab v3+ and U-Net). The Wishart classifier is a supervised maximum-likelihood classifier based on the complex Wishart distribution and is commonly used for fine classification in PolSAR image processing applications. SVM is a classification algorithm that can be trained with small samples and supports high-dimensional feature spaces; it is stable and usually yields good classification results in PolSAR image processing applications. DeepLab v3+ and U-Net are widely used deep learning semantic segmentation algorithms with strong feature extraction capabilities. Both were developed from the fully convolutional network (FCN) and are FCN-based variants designed to achieve better classification performance and finer segmentation results.

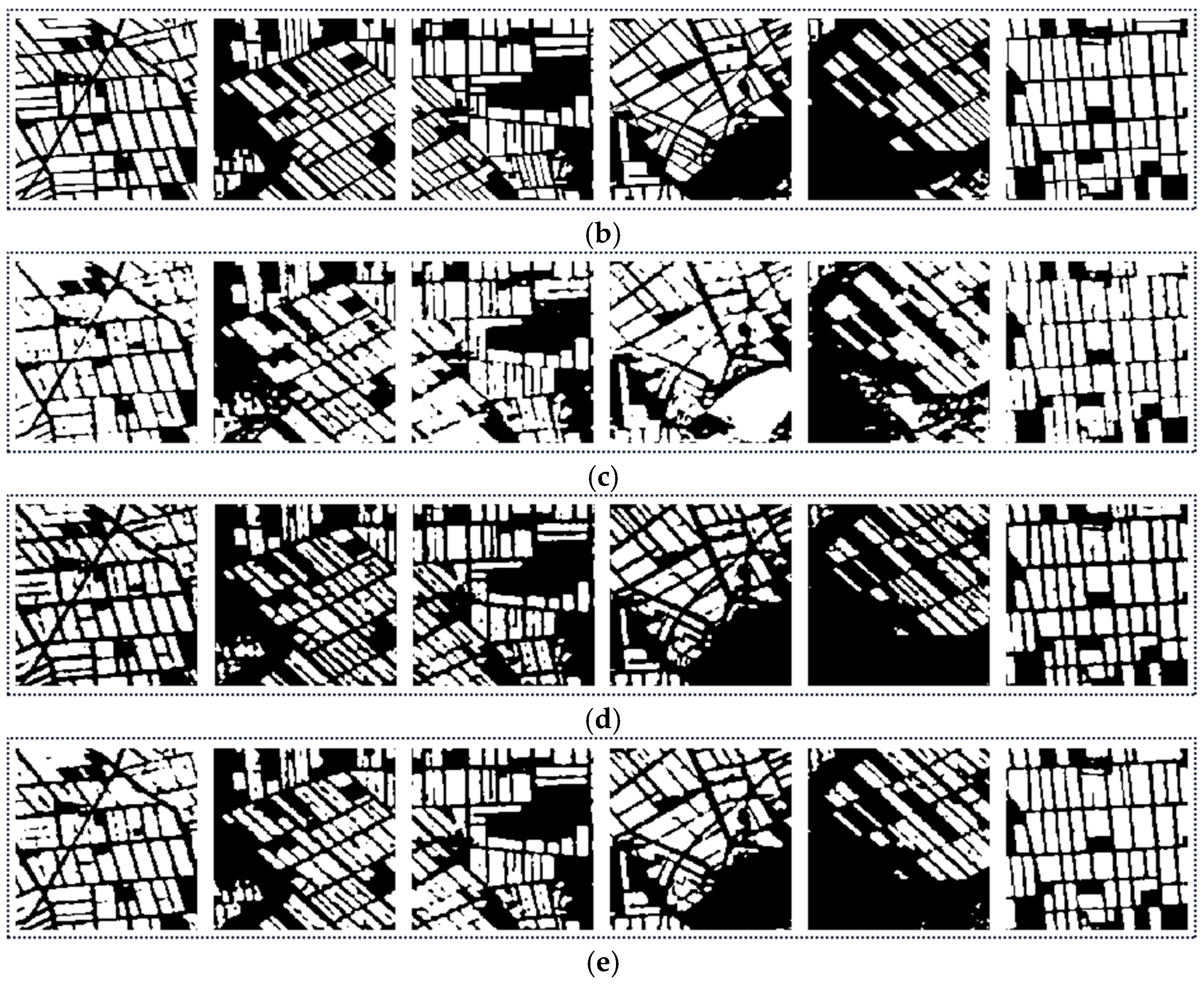

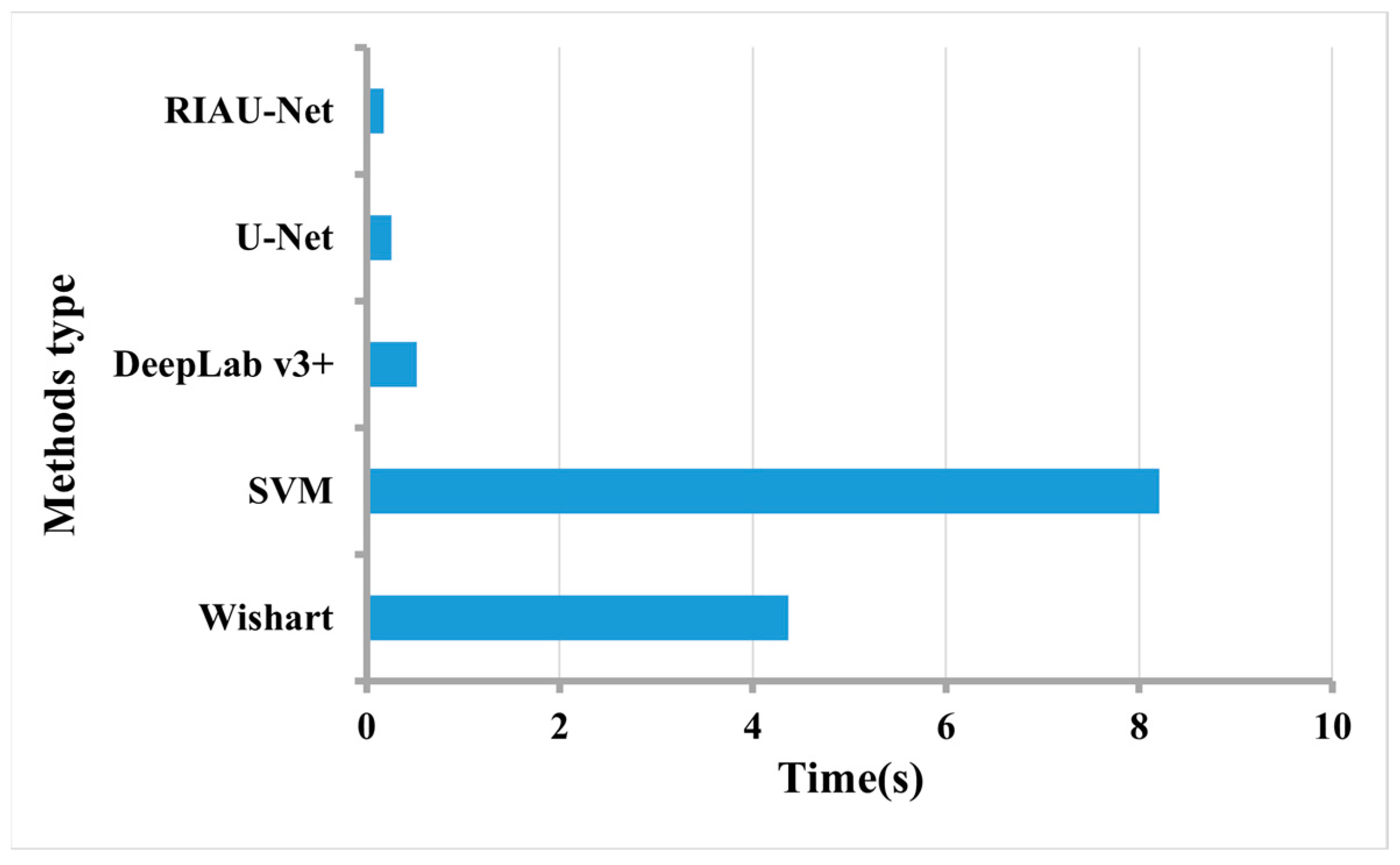

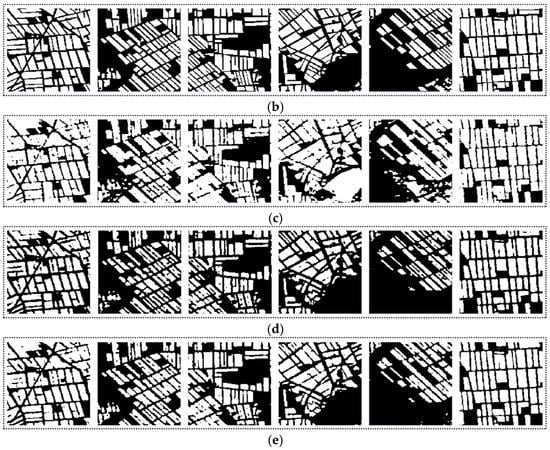

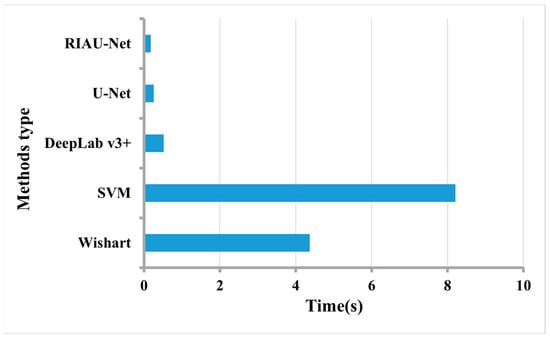

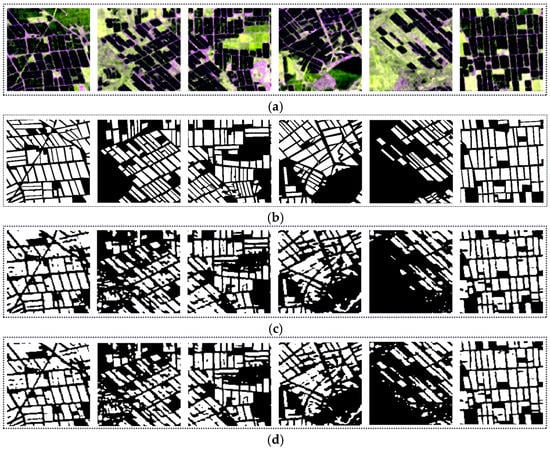

The semantic segmentation algorithms were trained using the multi-temporal dataset. The classification maps are shown in Figure 10, and the quantitative evaluation results of the different methods are listed in Table 5. The average inference time per image of each method is also displayed in Figure 11.

Figure 10. Classification results of the different methods for 2020. (a) The original SAR images. (b) The label images. (c) Wishart. (d) SVM. (e) DeepLab v3+. (f) U-Net. (g) RIAU-Net.

Table 5. Classification results of the different methods.

Figure 11. Inference time per image of the different methods.

It can be seen that the RIAU-Net model showed the best overall performance and showed significant advantages over the other models in terms of both the detection results and run time. The Wishart and SVM classifiers obtained the lowest accuracies, with F1-scores below 80%. As for the semantic segmentation algorithms, DeepLab v3+ obtained the lowest accuracy. The RIAU-Net model was improved and optimized on the basis of the U-Net model, and the OA was better than that of the original U-Net network.

As can be observed in Figure 10, the speckle noise of SAR images inevitably affects the traditional supervised classification methods, despite a filtering approach being employed to suppress the noise. The classification results of the Wishart and SVM classifiers contained many spots with blurred boundaries, which seriously affected the accuracy of the classification. This indicates that the traditional methods only consider the low-level characteristics of the target and ignore the contextual information. In the meantime, there are more misclassification phenomena in the results of the traditional methods, especially for those objects that have similar scattering characteristics to rice.

The semantic segmentation algorithms take into account the contextual information of the imagery as well as the polarimetric information, which effectively reduces the influence of the speckle noise in the SAR images and improves the classification quality. Visually, the targets in the classification map of the RIAU-Net model were more continuous, and the boundaries between the rice planting areas and the other areas were clearer and in line with the real situation.

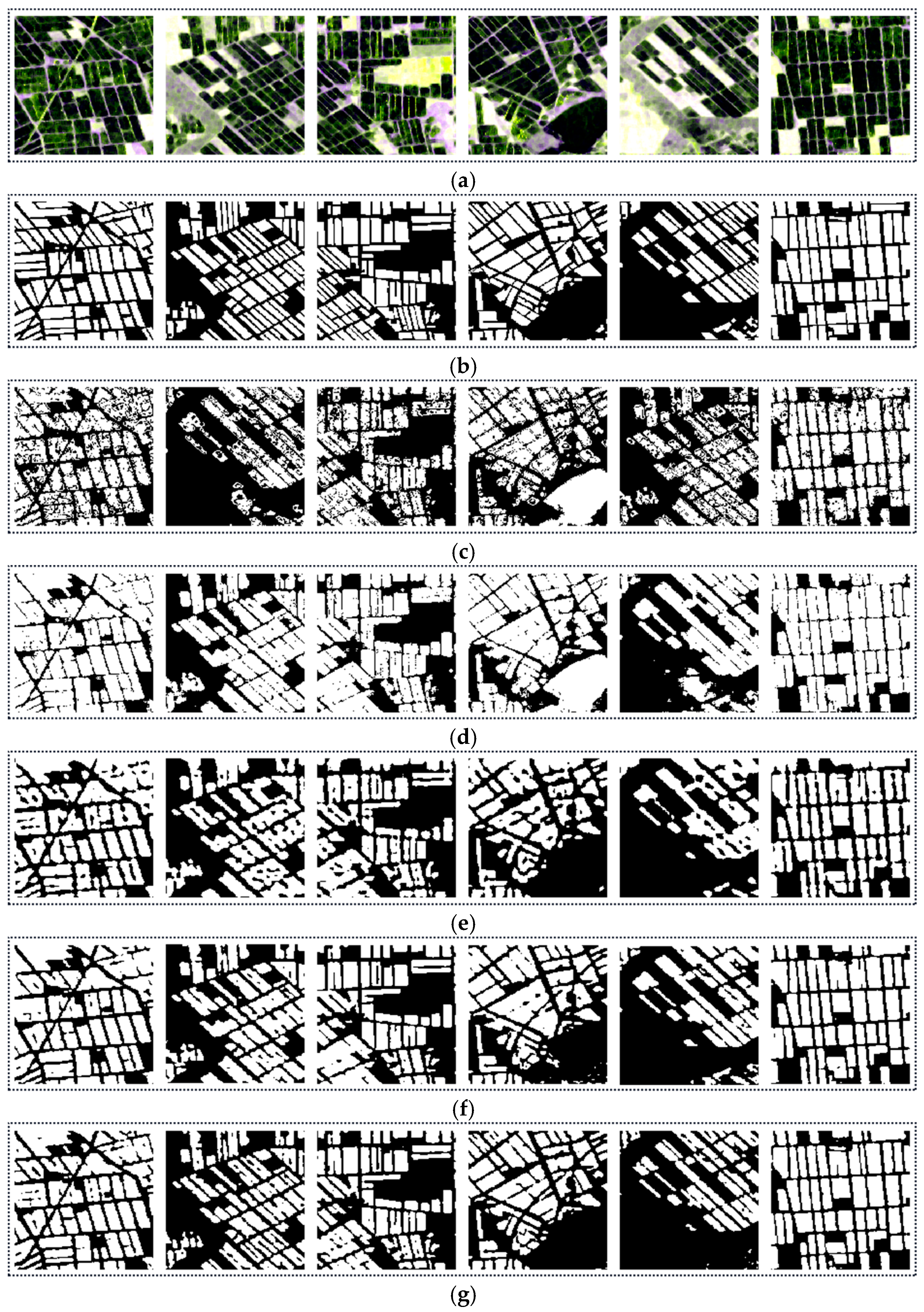

4.3.3. Validation of the Transfer Mechanism

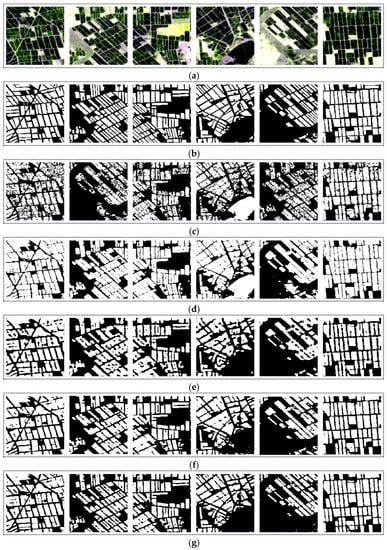

To improve the generalization ability of the RIAU-Net model without making a large effort to select training samples and retrain the network, a transfer mechanism was developed, and a similar image pair matching strategy was adopted to find similar image pairs between the dataset used for training and that used for rice monitoring. To validate the effectiveness of the proposed transfer mechanism, the performance of the RIAU-Net model with and without the transfer mechanism for rice classification in 2021 was inspected.

The similar image pairs between 2020 and 2021 obtained by the matching strategy are listed in Table 6. An interesting observation is that, compared with the images selected by temporal proximity, the images selected by the proposed matching strategy were acquired at later dates. According to the report of the Heilongjiang Meteorological Bureau of China, the seeding time of rice in the Sanjiang Plain in 2021 was later than that in 2020 because of the cold spell that occurred in the middle of April. The finding in Table 6 is consistent with this report, which supports the reliability of the proposed similar image pair matching strategy.
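The difference between pairing by temporal proximity and pairing by similarity can be illustrated with a small sketch. It is not the matching strategy defined in this paper: the similarity measure (Euclidean distance between scene-mean VV and VH backscatter), the function names, the dates, and the numbers are all hypothetical, chosen only to show how a similarity-based match can select a later 2021 acquisition than the temporally closest one.

```python
import math
from datetime import date


def match_by_date(ref_date, candidates):
    """Pick the candidate acquisition whose day of year is closest to ref_date."""
    ref_doy = ref_date.timetuple().tm_yday
    return min(candidates, key=lambda d: abs(d.timetuple().tm_yday - ref_doy))


def match_by_similarity(ref_features, candidates):
    """Pick the candidate whose feature vector is closest to ref_features.

    Euclidean distance is an assumed stand-in for the paper's similarity measure.
    """
    return min(candidates, key=lambda d: math.dist(ref_features, candidates[d]))


# Toy values (made up): scene-mean [VV, VH] backscatter in dB.
ref_date = date(2020, 5, 10)
ref_features = [-15.0, -22.0]
candidates_2021 = {
    date(2021, 5, 7): [-12.0, -19.0],
    date(2021, 5, 19): [-14.8, -21.7],
    date(2021, 5, 31): [-17.5, -24.0],
}

by_date = match_by_date(ref_date, candidates_2021)
by_similarity = match_by_similarity(ref_features, candidates_2021)
print(by_date, by_similarity)  # 2021-05-07 2021-05-19
```

In this toy case the similarity-based match falls one acquisition later than the date-based match, which is the kind of shift a delayed seeding time would be expected to produce.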

Table 6.

The similar image pairs between 2020 and 2021.

The classification results are shown in Figure 12, and the quantitative assessment results are listed in Table 7. The increase in the two most important indices (OA and F1-score) indicates that the proposed transfer mechanism can indeed improve the generalization ability of the RIAU-Net model.
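For reference, OA and F1-score follow the standard definitions for a binary rice/non-rice map. The sketch below computes them from flattened prediction and label sequences; the function name and the toy values are illustrative assumptions, not the evaluation code or results of this paper.

```python
def binary_scores(pred, label):
    """Return OA, precision, recall and F1 for 0/1 sequences (rice = 1)."""
    if len(pred) != len(label) or len(label) == 0:
        raise ValueError("pred and label must be non-empty and of equal length")
    pairs = list(zip(pred, label))
    tp = sum(p == 1 and t == 1 for p, t in pairs)  # rice predicted as rice
    fp = sum(p == 1 and t == 0 for p, t in pairs)  # non-rice predicted as rice
    fn = sum(p == 0 and t == 1 for p, t in pairs)  # rice predicted as non-rice
    tn = sum(p == 0 and t == 0 for p, t in pairs)  # non-rice predicted as non-rice
    oa = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"OA": oa, "precision": precision, "recall": recall, "F1": f1}


# Toy example with eight pixels (made-up values).
label = [1, 1, 1, 0, 0, 0, 0, 1]
pred = [1, 1, 0, 0, 0, 1, 0, 1]
scores = binary_scores(pred, label)
```

Here three rice pixels and three non-rice pixels are correct, with one false alarm and one miss, so OA, precision, recall, and F1 all equal 0.75.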

Figure 12.

The classification results for 2021. (a) The original SAR images. (b) The label images. (c) RIAU-Net without the transfer mechanism. (d) RIAU-Net with the transfer mechanism.

Table 7.

The results of the RIAU-Net model with and without the transfer mechanism.

5. Discussion

Neural network models can underfit or overfit during training, so the choice of hyperparameters largely determines whether a model reaches its best performance. In this paper, we conducted a series of experiments with different combinations of the optimizer and the learning rate.

The optimizer plays a pivotal role in machine learning and deep learning. For the same model, the choice of optimizer can change the performance greatly, and an unsuitable optimizer can even prevent the model from being trained. We compared two optimizers: Adam and RMSprop.

The learning rate controls the magnitude of each update to the network weights in the optimization algorithm. It can be constant, gradually decreasing, momentum-based, or adaptive, and different optimization algorithms adjust it in different ways. For the gradient descent method to perform well, the learning rate needs to be set within a suitable range. Commonly used values are 0.01, 0.001, and 0.0001.

We conducted experiments with different combinations of the optimizer and the learning rate. The quantitative assessment results are listed in Table 8. The best results were obtained with the Adam optimizer and an initial learning rate of 0.001.
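This comparison amounts to a small grid search over two optimizers and three learning rates. A minimal sketch follows, assuming a hypothetical `train_and_score(optimizer, lr)` callable that trains the model with one configuration and returns a validation score such as the F1-score. The `toy_score` stand-in returns made-up numbers so that the loop can run; it does not reproduce Table 8.

```python
from itertools import product

OPTIMIZERS = ("Adam", "RMSprop")
LEARNING_RATES = (0.01, 0.001, 0.0001)


def grid_search(train_and_score, optimizers=OPTIMIZERS,
                learning_rates=LEARNING_RATES):
    """Evaluate every (optimizer, learning rate) pair and return the best one."""
    results = {}
    for optimizer, lr in product(optimizers, learning_rates):
        # train_and_score is assumed to train from scratch for each setting.
        results[(optimizer, lr)] = train_and_score(optimizer, lr)
    best = max(results, key=results.get)
    return best, results


def toy_score(optimizer, lr):
    """Placeholder scoring function with made-up values (not real results)."""
    penalty = 0.0 if optimizer == "Adam" else 1.0
    return -abs(lr - 0.001) - penalty


best, results = grid_search(toy_score)
print(best)  # ('Adam', 0.001)
```

In practice `toy_score` would be replaced by a function that builds the network, trains it with the given optimizer and initial learning rate, and evaluates it on held-out samples.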

Table 8.

The results for different combinations of hyperparameters.

A transfer mechanism was studied for applying the proposed model to different years. However, it should be noted that the proposed method was developed on the basis that the rice growing areas in the Sanjiang Plain have simultaneous planting and harvest times due to the high degree of mechanization. This means that, in complex rice cultivation environments, such as the rice planting areas in southern China, where double- and triple-crop rice is planted and harvested in different periods in most areas, the performance of the proposed method may be limited. Our future study will focus on the identification of rice planting areas in complex rice cultivation environments.

6. Conclusions

Rice is one of the most important food crops for human beings. The timely and accurate understanding of the distribution of rice can provide an important scientific basis for food security, agricultural policy formulation, and regional development planning. The distribution of rice is typically continuous overall but fragmented in some areas. The environment around rice planting areas can be complex, and there are often land-cover types with scattering characteristics similar to those of rice. Rice also shows strong phenological changes across the different growth periods and across different years. Therefore, the accurate identification of rice cultivation areas is a challenging task.

In this paper, taking the Sanjiang Plain as the study area, a rice planting area identification attention U-Net model was proposed (the RIAU-Net model). The model was trained using multi-temporal Sentinel-1 dual-polarimetric images acquired in different periods of rice growth. The rich polarimetric information of PolSAR data, i.e., the intensity information, phase information, and polarimetric decomposition parameters, was introduced into the training of the proposed model. In addition, considering the diversity of rice growth periods in different years caused by different climatic conditions and other factors, a transfer mechanism was introduced to apply the well-trained model to identify the rice planting areas in different years.

The experimental results showed that, by introducing the multi-temporal polarimetric information, the proposed RIAU-Net model can effectively identify rice planting areas. Compared with the classical supervised classification methods and the other deep convolutional neural network semantic segmentation algorithms, the RIAU-Net model performed best in terms of both the classification results and the inference time. The Wishart and SVM methods obtained F1-scores of only 76.82% and 79.8%, respectively, and DeepLab v3+ and U-Net obtained F1-scores of 83.66% and 88.94%, respectively. Compared with the classical supervised classification methods, the F1-score of RIAU-Net improved by 11–14%, and it was also higher than those of the other semantic segmentation algorithms. Moreover, the RIAU-Net model showed a good temporal generalization ability. Our future study will focus on the identification of rice planting areas in complex rice cultivation environments.

Author Contributions

Conceptualization, X.M. and Z.H.; methodology, X.M.; software, X.M. and Z.H.; validation, S.Z.; formal analysis, W.F.; investigation, X.M. and Y.W.; resources, Y.W.; data curation, S.Z.; writing—original draft preparation, X.M.; writing—review and editing, Z.H. and S.Z.; visualization, X.M.; supervision, X.M.; project administration, Y.W.; funding acquisition, X.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Open Research Foundation of CMA/Henan Key Laboratory of Agrometeorological Support and Applied Technique (AMF202204) and by the Hefei Municipal Natural Science Foundation (No. 2021041).

Data Availability Statement

The dataset is publicly available at: https://github.com/Hzunyi/Rice-Identification.git, accessed on 5 August 2022.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Inoue, Y.; Sakaiya, E.; Wang, C. Capability of C-band backscattering coefficients from high-resolution satellite SAR sensors to assess biophysical variables in paddy rice. Remote Sens. Environ. 2014, 140, 257–266.

- Xu, T.; Wang, F.; Yi, Q.; Xie, L.; Yao, X. A bibliometric and visualized analysis of research progress and trends in rice remote sensing over the past 42 years (1980–2021). Remote Sens. 2022, 14, 3607.

- Phan, A.; Ha, D.N.; Man, C.D.; Nguyen, T.T.; Bui, H.Q.; Nguyen, T.T.N. Rapid assessment of flood inundation and damaged rice area in Red River Delta from Sentinel 1A imagery. Remote Sens. 2019, 11, 2034.

- Hütt, C.; Koppe, W.; Miao, Y.; Bareth, G. Best accuracy Land Use/Land Cover (LULC) classification to derive crop types using multitemporal, multisensor, and multi-polarization SAR satellite images. Remote Sens. 2016, 8, 684.

- Ma, X.; Wang, C.; Yin, Z. SAR image despeckling by noisy reference-based deep learning method. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8807–8818.

- Bazzi, H.; Baghdadi, N.; El Hajj, M.; Zribi, M.; Minh, D.H.T.; Ndikumana, E.; Courault, D.; Belhouchette, H. Mapping paddy rice using Sentinel-1 SAR time series in Camargue, France. Remote Sens. 2019, 11, 887.

- Karila, K.; Nevalainen, O.; Krooks, A.; Karjalainen, M.; Kaasalainen, S. Monitoring changes in rice cultivated area from SAR and optical satellite images in Ben Tre and Tra Vinh Provinces in Mekong Delta, Vietnam. Remote Sens. 2014, 6, 4090–4108.

- Wu, F.; Wang, C.; Zhang, H. Rice crop monitoring in South China with RADARSAT-2 quad-polarimetric SAR data. IEEE Geosci. Remote Sens. Lett. 2011, 8, 196–200.

- Phan, H.; Le Toan, T.; Bouvet, A. Understanding dense time series of Sentinel-1 backscatter from rice fields: Case study in a province of the Mekong Delta, Vietnam. Remote Sens. 2021, 13, 921.

- Sun, C.; Zhang, H.; Ge, J.; Wang, C.; Li, L.; Xu, L. Rice mapping in a subtropical hilly region based on Sentinel-1 time series feature analysis and the dual branch BiLSTM model. Remote Sens. 2022, 14, 3213.

- Lasko, K.; Vadrevu, K.; Tran, V. Mapping double and single crop paddy rice with Sentinel-1A at varying spatial scales and polarizations in Hanoi, Vietnam. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 498–512.

- Kobayashi, S.; Ide, H. Rice crop monitoring using Sentinel-1 SAR data: A case study in Saku, Japan. Remote Sens. 2022, 14, 3254.

- Xie, L.; Zhang, H.; Wu, F.; Wang, C.; Zhang, B. Capability of rice mapping using hybrid polarimetric SAR data. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2015, 8, 3812–3822.

- Hoang, H.; Bernier, M.; Duchesne, S.; Tran, Y. Rice mapping using RADARSAT-2 dual- and quad-Pol data in a complex land-use watershed: Cau River Basin (Vietnam). IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2016, 9, 3082–3096.

- Wang, H.; Magagi, R.; Goita, K. Crop phenology retrieval via polarimetric SAR decomposition and random forest algorithm. Remote Sens. Environ. 2019, 231, 111234.

- Chang, L.; Chen, Y.; Wang, J.; Chang, Y. Rice-field mapping with Sentinel-1A SAR time-series data. Remote Sens. 2021, 13, 103.

- Li, H.; Fu, D.; Huang, C.; Su, F.; Liu, Q.; Liu, G.; Wu, S. An approach to high-resolution rice paddy mapping using time-series Sentinel-1 SAR data in the Mun River Basin, Thailand. Remote Sens. 2020, 12, 3959.

- Ndikumana, E.; Ho Tong Minh, D.; Dang Nguyen, H.T.; Baghdadi, N.; Courault, D.; Hossard, L.; El Moussawi, I. Estimation of rice height and biomass using multitemporal SAR Sentinel-1 for Camargue, Southern France. Remote Sens. 2018, 10, 1394.

- Lopez-Sanchez, J.; Vicente-Guijalba, F.; Ballester-Berman, J. Polarimetric response of rice fields at C-band: Analysis and phenology retrieval. IEEE Trans. Geosci. Remote Sens. 2014, 52, 2977–2993.

- Küçük, Ç.; Taşkın, G.; Erten, E. Paddy-rice phenology classification based on machine-learning methods using multitemporal co-polar X-band SAR images. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2016, 9, 2509–2519.

- Zhong, L.; Hu, L.; Zhou, H. Deep learning based multi-temporal crop classification. Remote Sens. Environ. 2019, 221, 430–443.

- Pang, J.; Zhang, R.; Yu, B. Pixel-level rice planting information monitoring in Fujin City based on time-series SAR imagery. Int. J. Appl. Earth Obs. Geoinform. 2021, 104, 102551.

- Xu, J.; Yang, J.; Xiong, X. Towards interpreting multi-temporal deep learning models in crop mapping. Remote Sens. Environ. 2021, 264, 112599.

- Paul, S.; Kumari, M.; Murthy, C. Generating pre-harvest crop maps by applying convolutional neural network on multi-temporal Sentinel-1 data. Int. J. Remote Sens. 2022, 1–24.

- Xu, L.; Zhang, H.; Wang, C.; Wei, S.; Zhang, B.; Wu, F.; Tang, Y. Paddy rice mapping in Thailand using time-series Sentinel-1 data and deep learning model. Remote Sens. 2021, 13, 3994.

- Lin, Z.; Zhong, R.; Xiong, X.; Guo, C.; Xu, J.; Zhu, Y.; Xu, J.; Ying, Y.; Ting, K.C.; Huang, J.; et al. Large-scale rice mapping using multi-task spatiotemporal deep learning and Sentinel-1 SAR time series. Remote Sens. 2022, 14, 699.

- Wei, P.; Chai, D.; Lin, T. Large-scale rice mapping under different years based on time-series Sentinel-1 images using deep semantic segmentation model. ISPRS J. Photogramm. Remote Sens. 2021, 174, 198–214.

- Ma, T.; Xu, J.; Wu, P. Oil spill detection based on deep convolutional neural networks using polarimetric scattering information from Sentinel-1 SAR images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–13.

- Sun, W.; Li, P.; Du, B.; Yang, J.; Tian, L.; Li, M.; Zhao, L. Scatter matrix based domain adaptation for bi-temporal polarimetric SAR images. Remote Sens. 2020, 12, 658.

- Salehi, M.; Maghsoudi, Y.; Mohammadzadeh, A. Assessment of the potential of H/A/Alpha decomposition for polarimetric interferometric SAR data. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2440–2451.

- Ferro-Famil, L.; Pottier, E.; Lee, J. Unsupervised classification of multifrequency and fully polarimetric SAR images based on the H/A/Alpha-Wishart classifier. IEEE Trans. Geosci. Remote Sens. 2001, 39, 2332–2342.

- Cloude, S.; Pottier, E. An entropy based classification scheme for land applications of polarimetric SAR. IEEE Trans. Geosci. Remote Sens. 1997, 35, 68–78.

- Lopez-Martinez, C.; Pottier, E. Statistical assessment of eigenvector-based target decomposition theorems in radar polarimetry. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2004), Anchorage, AK, USA, 20–24 September 2004; pp. 192–195.

- Lee, J.; Ainsworth, T.; Kelly, J. Evaluation and bias removal of multilook effect on entropy/alpha/anisotropy in polarimetric SAR decomposition. IEEE Trans. Geosci. Remote Sens. 2008, 46, 3039–3052.

- Oktay, O.; Schlemper, J.; Folgoc, L. Attention U-Net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999v3.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. arXiv 2015, arXiv:1505.04597v1.

- Ren, Y.; Li, X.; Yang, X. Development of a dual-attention U-Net model for sea ice and open water classification on SAR images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Nava, L.; Bhuyan, K.; Meena, S.R.; Monserrat, O.; Catani, F. Rapid mapping of landslides on SAR data by Attention U-Net. Remote Sens. 2022, 14, 1449.

- Conradsen, K.; Nielsen, A.; Schou, J. A test statistic in the complex Wishart distribution and its application to change detection in polarimetric SAR data. IEEE Trans. Geosci. Remote Sens. 2002, 41, 4–19.

- Ma, X.; Wu, P.; Shen, H. A nonlinear guided filter for polarimetric SAR image despeckling. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1918–1927.

- Fawcett, T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).