1. Introduction

The hyperspectral (HS) remote sensing technique, which emerged in the 1980s, is a comprehensive scientific technique that combines information processing, computer technology and other technologies. Hyperspectral images (HSIs) consist of dozens to hundreds of spectral bands covering the same area of the Earth’s surface. HSIs are characterized by high dimensionality, highly redundant band information and high spectral resolution. Compared with traditional remote sensing techniques, hyperspectral remote sensing can qualitatively and quantitatively detect substances owing to its very rich spectral information. The advantage of HSIs lies in their high spectral resolution. However, acquiring many bands requires increasing the physical size of the photoreceptor, and spatial resolution is sacrificed as a result. Yet high spatial resolution images are required in many applications of HS remote sensing, such as marine research [1,2], food detection [3], military applications [4] and other fields [5,6,7,8,9]. Therefore, research on super-resolution (SR) reconstruction technology for HSIs has important scientific significance and engineering application value.

Recent studies of HSI SR can be divided into four types: Bayesian framework [10,11,12,13], matrix factorization [14,15,16,17], tensor factorization [18,19,20,21], and deep learning [22,23,24].

The Bayesian framework is a common approach that establishes the posterior distribution of the high spatial resolution hyperspectral image (HR-HSI) from prior information and observation models. In [10], a multi-sparse Bayesian learning method was proposed based on the sparsity prior and the temporal correlation between successive frames. Wei et al. [11] adopted a Bayesian model based on sparse coding and dictionary learning to solve the fusion problem. A maximum a posteriori (MAP) estimator that uses a coregistered HSI from an auxiliary sensor was introduced in [12]. In [13], a Bayesian sparse representation and spectral decomposition fusion method was adopted to improve image resolution. Bayesian-based methods are very sensitive to the independence of the input data, which limits the practical application of the Bayesian framework.
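Bayesian and fusion-based methods both rely on an observation model that links the unknown HR-HSI to the observed images. A minimal NumPy sketch of one common form is given below. It assumes the LR-HSI is a spatially blurred and downsampled HR-HSI, and that the HR-MSI is the HR-HSI mixed along the band mode by a spectral response matrix. The box blur, the random spectral response `R`, the image sizes and the helper names `spatial_degrade`/`spectral_degrade` are all hypothetical choices for illustration. They are not the degradation operators used in the cited works.

```python
import numpy as np

def spatial_degrade(X, s):
    """Average each non-overlapping s x s block per band, i.e. a box blur
    followed by downsampling by s. X has shape (H, W, L), H and W divisible by s."""
    H, W, L = X.shape
    return X.reshape(H // s, s, W // s, s, L).mean(axis=(1, 3))

def spectral_degrade(X, R):
    """Apply a spectral response matrix R of shape (l, L) along the band mode,
    giving an l-band image of shape (H, W, l)."""
    return np.einsum("hwL,lL->hwl", X, R)

rng = np.random.default_rng(0)
X = rng.random((32, 32, 64))           # hypothetical HR-HSI: 32 x 32 pixels, 64 bands
R = rng.random((4, 64))                # hypothetical spectral response for 4 MS bands
R /= R.sum(axis=1, keepdims=True)      # each MS band is a weighted average of HS bands

Y = spatial_degrade(X, s=4)            # simulated LR-HSI
Z = spectral_degrade(X, R)             # simulated HR-MSI
print(Y.shape, Z.shape)
```

In a MAP formulation, these two operators define the likelihood terms. The prior then regularizes the estimate of `X` given `Y` and `Z`.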

Matrix factorization-based methods usually decompose the HSI into two matrices representing the spectral dictionary and the corresponding coefficients, which are estimated from observed HS-multispectral (HS-MS) image pairs. In [14], a spectral matrix decomposition and dictionary learning method was proposed to learn spectral dictionaries with high spatial-spectral information from the low spatial resolution HSI (LR-HSI) and HR multispectral image (HR-MSI) matrices. In [15], a spatial-spectral sparse representation method was adopted using spectral decomposition priors, sparse priors and nonlocal self-similarity. In [16], a non-negative structured sparse representation (NSSR) method based on the sparsity of the HR-HSI was proposed for the joint estimation of the HS dictionary and sparse codes. In [17], a method based on sparse representation and local spatial low-rankness was proposed to solve HSI SR by estimating the spectral dictionary and regression coefficients. Hyperspectral data are three-dimensional images, with one more (spectral) dimension than ordinary two-dimensional images. Because matrix-based methods unfold this 3D cube into a 2D matrix, they destroy its inherent data structure.
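The unfolding step criticized above can be illustrated with a short NumPy sketch. An HSI cube is reshaped into a band-by-pixel matrix and factorized as a spectral dictionary times a coefficient matrix. A truncated SVD is used here as a simple stand-in for the dictionary learning and sparse coding of the cited methods. The synthetic data, the rank `k` and the sizes are assumptions. Permuting the pixel columns leaves the singular values unchanged, which shows that the factorization makes no use of spatial adjacency.

```python
import numpy as np

rng = np.random.default_rng(1)
H, W, L, k = 20, 20, 50, 5

# Hypothetical HSI generated from k endmember-like spectra.
D_true = rng.random((L, k))
A_true = rng.random((k, H * W))
X = (D_true @ A_true).T.reshape(H, W, L)        # cube of shape (H, W, L)

# Unfold the cube: each column holds one pixel's spectrum.
X_mat = X.reshape(H * W, L).T                   # shape (L, H*W)

# Truncated SVD as a stand-in for dictionary learning: X_mat ~= D @ A.
U, s, Vt = np.linalg.svd(X_mat, full_matrices=False)
D = U[:, :k] * s[:k]                            # "spectral dictionary", shape (L, k)
A = Vt[:k]                                      # coefficients, shape (k, H*W)
rel_err = np.linalg.norm(X_mat - D @ A) / np.linalg.norm(X_mat)
print(f"rank-{k} relative error: {rel_err:.2e}")

# Shuffling the pixels (columns) only permutes A; singular values are unchanged.
perm = rng.permutation(H * W)
s_perm = np.linalg.svd(X_mat[:, perm], compute_uv=False)
print(np.allclose(s, s_perm))
```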

As multidimensional arrays, tensors provide a natural representation of HSI data. In the past few years, tensor representation has been widely applied to high-dimensional data denoising [25,26,27,28], completion [29,30,31] and SR [18,19,20,21]. A coupled non-negative tensor decomposition (NTD) method was introduced in [18] to extend non-negative matrix factorization to tensors. In this method, Tucker decomposition of the LR-HSI and HR-MSI is performed under NTD constraints. A joint tensor factorization (JTF)-based method for estimating the HR-HSI was proposed by Ren et al. [19]. These methods treat the HSI data as a third-order tensor and assume that the tensor is globally low-rank. Nonlocal self-similarity is another important property of images. It describes the recurrence of similar structures in nonlocal regions of an image, effectively preserves edge information, and offers certain advantages in image restoration. Dian et al. [20] adopted a fusion method based on low tensor-train rank (LTTR) decomposition. Using the nonlocal similarity learned from the HR-MSI, this method forms multiple groups of 4D similar cubes from the HR-HSI, and the SR problem is efficiently solved using LTTR priors on these 4D cubes. In [21], a fusion method based on nonlocal Tucker decomposition, which exploits the nonlocal similarity of HS data, was proposed. However, the clustered blocks obtained via nonlocal self-similarity depend heavily on the accuracy of block matching. Moreover, the nonlocal clustering space does not increase data redundancy, which makes it difficult to effectively exploit the low-rank characteristics of the data.
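Several of the tensor methods above build on the Tucker model, X ≈ G ×1 U1 ×2 U2 ×3 U3. A minimal NumPy sketch of a truncated higher-order SVD (HOSVD), one standard way to compute such a decomposition, is shown below. It is not the coupled NTD, JTF or nonlocal Tucker solver of [18,19,21]. The synthetic cube with multilinear rank (4, 4, 3) is hypothetical.

```python
import numpy as np

def unfold(X, mode):
    """Mode-n unfolding: rows indexed by `mode`, columns by the remaining modes.
    The column ordering is a convention and does not change the left singular vectors."""
    return np.moveaxis(X, mode, 0).reshape(X.shape[mode], -1)

def mode_product(X, M, mode):
    """n-mode product X x_n M, where M has shape (J, X.shape[mode])."""
    Y = np.tensordot(M, X, axes=(1, mode))      # the new axis of size J comes first
    return np.moveaxis(Y, 0, mode)

def hosvd(X, ranks):
    """Truncated HOSVD: leading left singular vectors of each unfolding, plus a core."""
    factors = [np.linalg.svd(unfold(X, n), full_matrices=False)[0][:, :r]
               for n, r in enumerate(ranks)]
    core = X
    for n, U in enumerate(factors):
        core = mode_product(core, U.T, n)       # project mode n onto its basis
    return core, factors

def reconstruct(core, factors):
    X = core
    for n, U in enumerate(factors):
        X = mode_product(X, U, n)
    return X

rng = np.random.default_rng(2)
# Hypothetical 16 x 16 x 30 cube with multilinear rank (4, 4, 3).
G = rng.standard_normal((4, 4, 3))
Us = [rng.standard_normal((d, r)) for d, r in [(16, 4), (16, 4), (30, 3)]]
X = reconstruct(G, Us)

core, factors = hosvd(X, ranks=(4, 4, 3))
err = np.linalg.norm(X - reconstruct(core, factors)) / np.linalg.norm(X)
print(core.shape, f"relative error {err:.1e}")
```

Because the cube is exactly low multilinear rank, the truncated HOSVD reproduces it up to rounding error. Real HSIs are only approximately low-rank, which is why the cited methods add priors and constraints.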

With its superior performance in detection, recognition, classification and other tasks [32,33,34,35,36,37,38,39], deep learning has gradually been applied to low-level vision tasks in recent years [22,23,24]. Bing et al. [22] proposed an improved generative adversarial network (GAN) with an improved squeeze-and-excitation (SE) block. The SE block, which increases the weights of important features and reduces the weights of weak features, is incorporated into a simplified enhanced deep residual network for single image SR (SISR) to recover HSIs. To improve the computational speed of deep networks, Kim et al. [23] designed an SISR network that modifies the fire modules of SqueezeNet and arranges them asymmetrically, which effectively reduces the number of network parameters. In [24], a video SR network based on learning temporal dynamics was introduced. In this network, filters with different temporal scales are used to fully exploit the temporal relationship among consecutive LR frames, and a spatial alignment network is employed to reduce motion complexity. The performance of deep learning networks depends closely on the size of the training data. However, the size of acquired HSI data is limited by the physical size of the photosensor, and HSIs contain only dozens to hundreds of spectral bands, which is insufficient for training.
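The feature reweighting performed by an SE block can be sketched in a few lines of NumPy. The sketch follows the standard SE design: global average pooling per channel, a bottleneck fully connected layer with ReLU, a second fully connected layer with a sigmoid, and channel-wise rescaling. It does not reproduce the modified block of [22]. The random, untrained weights, the reduction ratio `r` and the feature-map size are assumptions.

```python
import numpy as np

def se_block(x, W1, b1, W2, b2):
    """Squeeze-and-excitation on a feature map x of shape (C, H, W).

    W1 has shape (C // r, C) and W2 has shape (C, C // r) for a reduction ratio r.
    Returns the rescaled feature map and the per-channel weights.
    """
    z = x.mean(axis=(1, 2))                     # squeeze: global average pool -> (C,)
    h = np.maximum(W1 @ z + b1, 0.0)            # excitation: bottleneck FC + ReLU
    s = 1.0 / (1.0 + np.exp(-(W2 @ h + b2)))    # FC + sigmoid -> weights in (0, 1)
    return x * s[:, None, None], s              # emphasize or suppress each channel

rng = np.random.default_rng(3)
C, r = 16, 4
x = rng.random((C, 8, 8))                       # hypothetical feature map
W1, b1 = rng.standard_normal((C // r, C)), np.zeros(C // r)   # untrained weights
W2, b2 = rng.standard_normal((C, C // r)), np.zeros(C)

y, weights = se_block(x, W1, b1, W2, b2)
print(y.shape, weights.round(2))
```

In a trained network, `W1` and `W2` are learned, so channels carrying informative features receive weights close to 1 and weak channels are attenuated.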

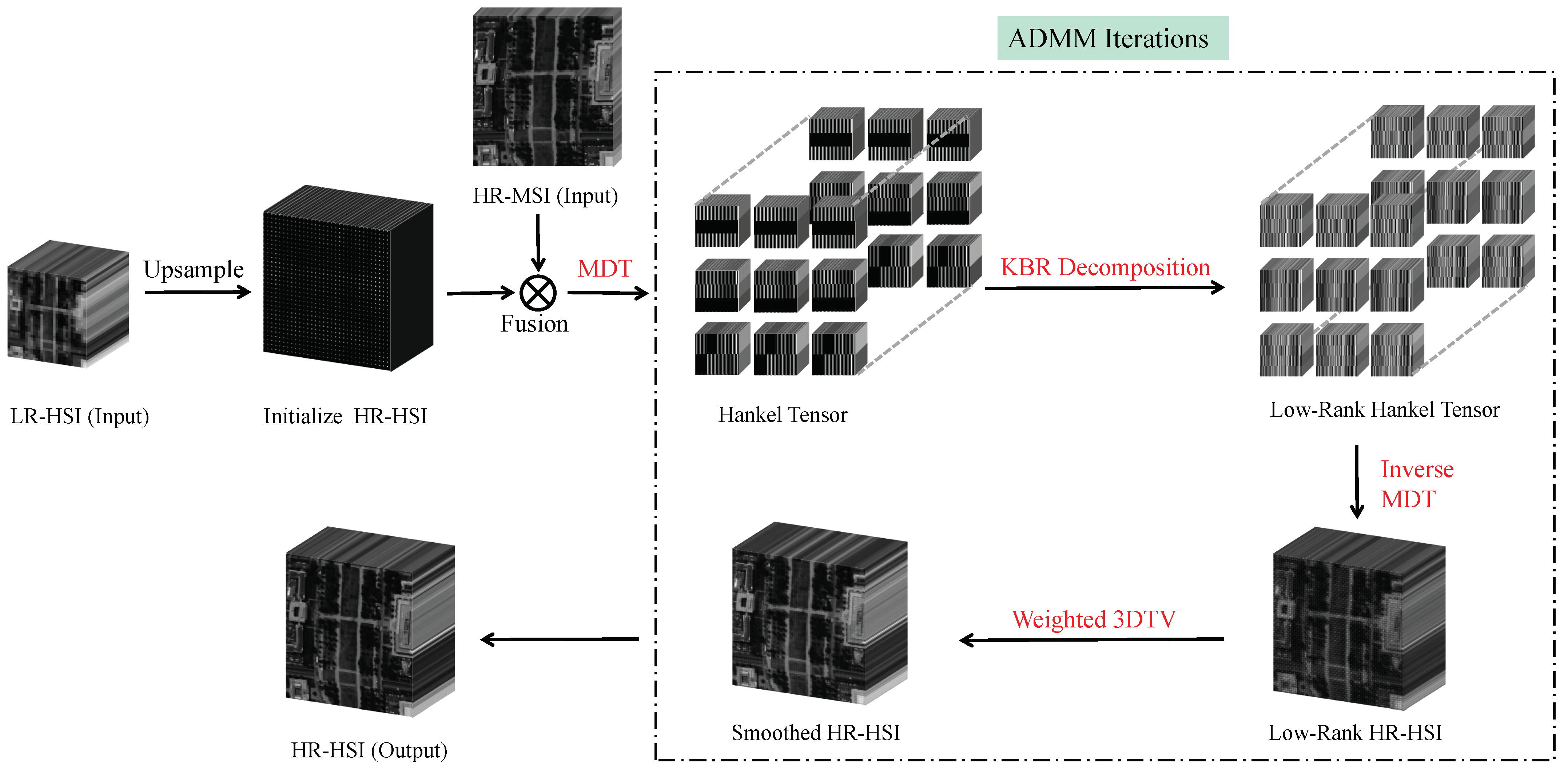

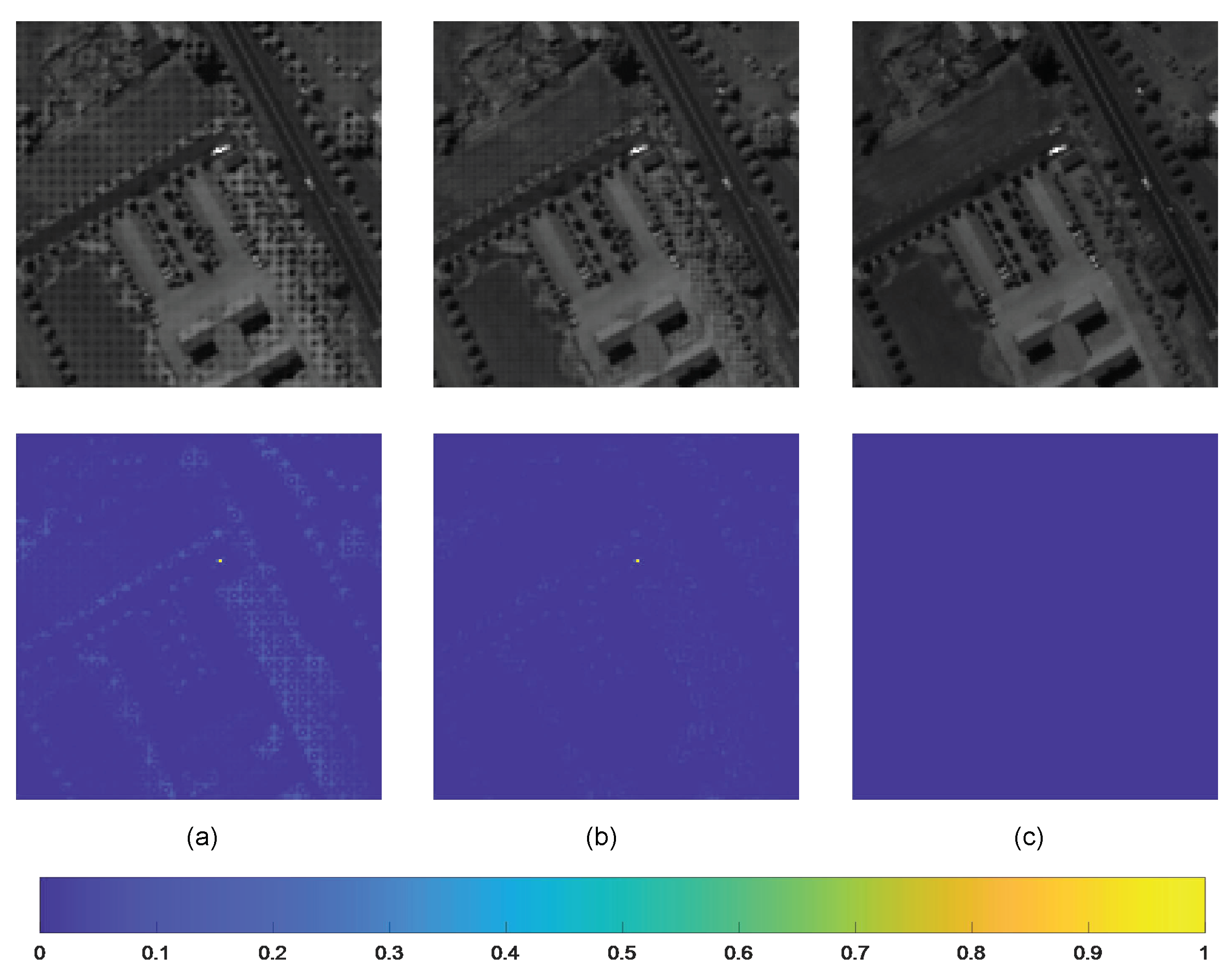

Considering the limited size of HSI data and to better preserve the data structure, we adopt a tensor-based method. To increase data redundancy and fully exploit the low-rank characteristics of the data, we propose performing high-order low-rank tensor SR (HTSR) of HSIs in the Hankel space. The Hankel space is an embedded space obtained by applying the delay-embedding transform to a tensor in a multi-way manner. The resulting high-order tensor consists of duplicated entries and exhibits low-rankness. Unlike traditional high-order tensor decompositions, we use a tensor decomposition based on a folded-concave penalized Kronecker-basis-representation-based tensor sparsity measure (KBR) [40] to represent the low-rank properties of the high-order tensor in the Hankel space reasonably and effectively. In the spatial-spectral domain, HS data exhibit local smoothness between adjacent pixels, so we adopt weighted 3D total variation (W3DTV) to constrain the spatial-spectral local continuity of HSIs. To further improve HSI SR recovery, HSI-MSI fusion is used in our method. Unlike HSIs, multispectral images (MSIs) contain 3–20 discontinuous bands with a higher signal-to-noise ratio (SNR) and spatial resolution. The HR-MSI can therefore compensate for the limited spatial resolution of the LR-HSI, so the fusion method can reconstruct the HR-HSI more accurately.
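The delay-embedding idea behind the Hankel space can be illustrated with a minimal NumPy sketch. It assumes the embedding H[i, j] = x[i + j] along every mode, and it uses `numpy.lib.stride_tricks.sliding_window_view` (NumPy 1.20 or later). The window sizes `taus`, the axis ordering and the choice to embed all three modes are assumptions, not the settings used by HTSR. The 1-D case shows why the embedding exposes low-rankness: since cos(a(i + j)) = cos(ai)cos(aj) − sin(ai)sin(aj), the Hankel matrix of a sampled cosine has rank 2.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def hankelize_1d(x, tau):
    """Delay embedding of a vector: H[i, j] = x[i + j], shape (tau, len(x) - tau + 1)."""
    return sliding_window_view(x, tau).T

def mdt(X, taus):
    """Multi-way delay embedding of an N-way array into a 2N-way Hankel tensor.

    Output axes are ordered (tau_1, I_1 - tau_1 + 1, ..., tau_N, I_N - tau_N + 1),
    with entry [i1, j1, i2, j2, ...] = X[i1 + j1, i2 + j2, ...].
    """
    N = X.ndim
    W = sliding_window_view(X, taus)            # axes: (j_1..j_N, i_1..i_N)
    order = [ax for n in range(N) for ax in (N + n, n)]
    return W.transpose(order)

# 1-D illustration: a sampled cosine yields a rank-2 Hankel matrix.
t = np.arange(64)
H1 = hankelize_1d(np.cos(0.3 * t), tau=16)
print(H1.shape, np.linalg.matrix_rank(H1))      # (16, 49) 2

# Multi-way embedding of a small hypothetical HSI cube (H, W, L) = (12, 12, 8).
rng = np.random.default_rng(4)
X = rng.random((12, 12, 8))
HX = mdt(X, taus=(4, 4, 3))
print(HX.shape)                                 # (4, 9, 4, 9, 3, 6)
```

The sixth-order result holds many duplicated copies of each voxel (23,328 entries versus 1,152 in `X`). This duplication is the added redundancy that a low-rank model in the Hankel space can exploit. `sliding_window_view` returns a read-only view, so the duplicates are not materialized until the array is copied.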

The contributions are highlighted as follows:

To exploit the low-rank information hidden in HSI data, we propose modeling the SR problem in the Hankel space. The effectiveness of SR in the designed Hankel space is demonstrated by comparison with the global and nonlocal image spaces.

A folded-concave penalized KBR tensor decomposition is proposed to describe the low-rank characteristics in the spatial–spectral domain of the high-order tensor in the Hankel space.

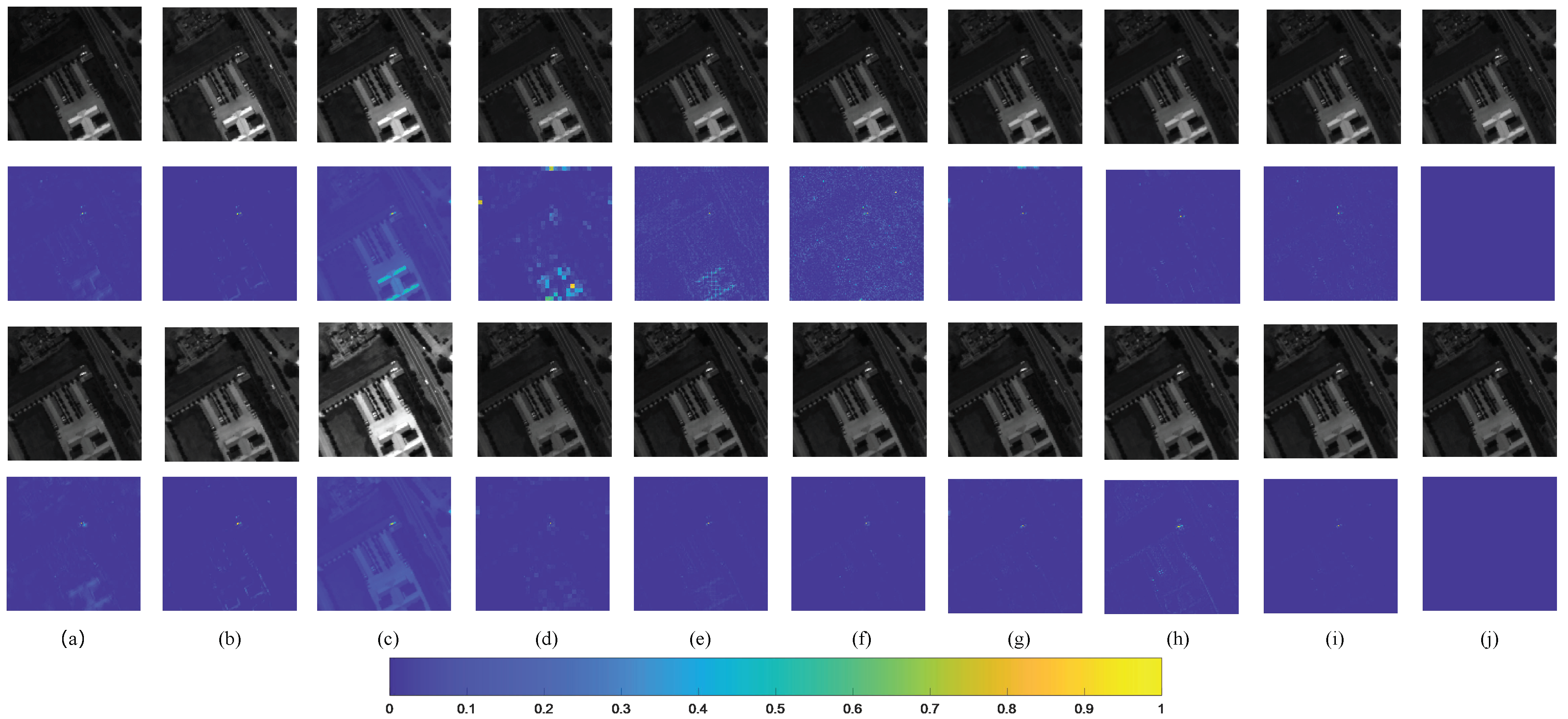

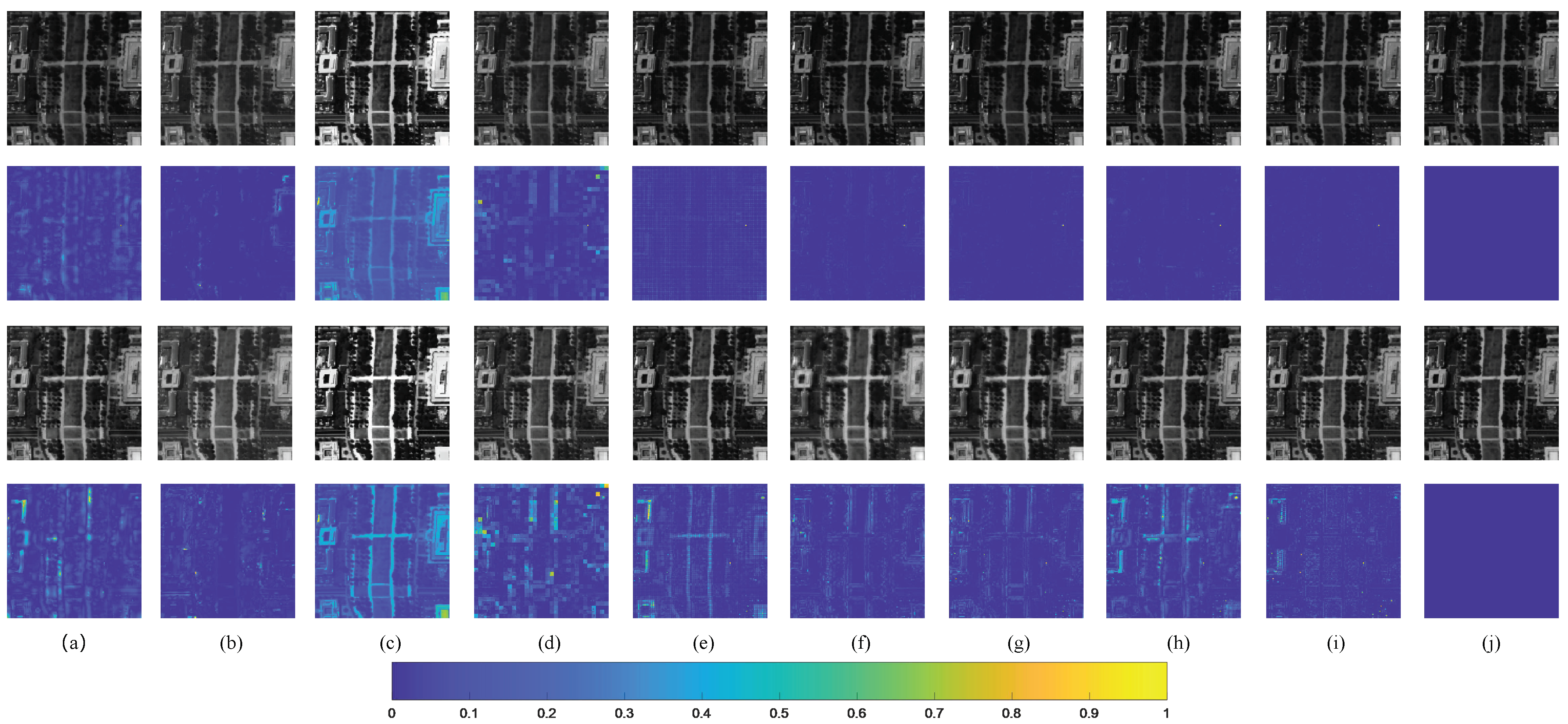

Extensive experiments demonstrate that our HTSR method performs favorably compared with current state-of-the-art methods.
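The KBR sparsity measure referred to in the contributions is commonly written as t‖G‖₀ + (1 − t)∏ₙ rank(X₍ₙ₎). Here G is the Tucker core and X₍ₙ₎ is the mode-n unfolding. Assuming that form, the NumPy sketch below evaluates its exact (ℓ0/rank) version. It uses a full HOSVD core and treats values below a relative tolerance as zero. HTSR instead applies a folded-concave penalized relaxation of these terms to the high-order Hankel tensor, and this sketch does not reproduce that relaxation. The weight `t = 0.5`, the tolerance `tol` and the test tensor are illustrative assumptions.

```python
import numpy as np

def unfold(X, mode):
    """Mode-n unfolding (rows indexed by `mode`)."""
    return np.moveaxis(X, mode, 0).reshape(X.shape[mode], -1)

def kbr_measure(X, t=0.5, tol=1e-8):
    """Exact KBR sparsity t * ||G||_0 + (1 - t) * prod_n rank(X_(n)),
    where G is the core of a full HOSVD of X. Core entries and singular
    values below tol times the largest one are counted as zero."""
    core, ranks = X, []
    for n in range(X.ndim):
        U, s, _ = np.linalg.svd(unfold(X, n), full_matrices=False)
        ranks.append(int(np.sum(s > tol * s[0])))
        # core <- core x_n U^T (U comes from the unfolding of the original X).
        core = np.moveaxis(np.tensordot(U.T, core, axes=(1, n)), 0, n)
    nnz = int(np.count_nonzero(np.abs(core) > tol * np.abs(core).max()))
    return t * nnz + (1 - t) * np.prod(ranks), nnz, ranks

rng = np.random.default_rng(5)
G = rng.standard_normal((2, 3, 2))
U1, U2, U3 = (rng.standard_normal((6, r)) for r in (2, 3, 2))
X = np.einsum("abc,ia,jb,kc->ijk", G, U1, U2, U3)   # multilinear rank (2, 3, 2)

value, nnz, ranks = kbr_measure(X)
print(ranks, nnz, value)
```

The first term rewards a sparse core (Tucker-like structure). The second term rewards low ranks in every mode jointly, as in a CP-like low-rank model. Both terms are non-convex and discontinuous, which is why practical methods replace them with smooth concave surrogates.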

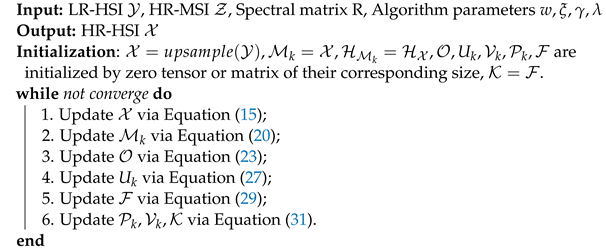

The rest of this paper is organized as follows. In Section 2, we introduce preliminary knowledge on tensors. In Section 3, we present the proposed HTSR fusion method. In Section 4, we describe in detail the algorithm for solving the optimization problem of the HTSR method. The experimental results on three commonly used hyperspectral datasets are described in Section 5. Finally, conclusions are presented in Section 6.