Abstract

The increasing need for inexpensive, safe, highly efficient, and time-saving damage detection technology, combined with emerging technologies, has made damage detection by unmanned aircraft systems (UAS) an active research area. Numerous sensors have been developed for damage detection in the past, but they have only recently been integrated with UAS. UAS damage detection involves data collection, path planning, multi-sensor fusion, system integration, damage quantification, and data processing, which together feed a model that predicts the remaining service life. This review provides an overview of the crucial scientific advances that marked the development of UAS inspection: underlying UAS platforms, peripherals, sensing equipment, data processing approaches, and service life prediction models. Example equipment includes visual cameras, multispectral sensors, hyperspectral sensors, thermal infrared sensors, and light detection and ranging (LiDAR). This review also highlights the remaining scientific challenges and development trends, including the critical need for self-navigated control, autonomous damage detection, and deterioration model building. Finally, we conclude with a brief discussion of the pros and cons of this emerging technology, along with the prospects of UAS research for damage detection.

1. Introduction

Reliability analysis of both products and systems is an important task, closely linked to safety and service life [1]. Service safety, traffic safety, and a long service life are of crucial importance in bridge engineering, so optimizing traffic loads has a positive effect on the service life of a bridge. Existing bridge structures and their components are subject to various loads caused by daily traffic, and fatigue damage accumulates under variable-amplitude normal stress cycles [2]. In addition, corrosion is an influential factor in the deterioration of reinforced concrete (RC) structures [3,4,5]. As a result, structural defects such as cracks and corrosion pits must be recognized in a timely manner to ensure safety.

Over the past decade, the pursuit of novel methodologies and advanced technologies has expanded far beyond rough damage detection. The key enabling concept is multiscale, accurate measurement of damaged structures, which includes both efficient detection methodologies and integrated service life prediction based on fatigue and corrosion. Traditionally, visual inspection has been the most common way to assess operating condition and to detect defects on structural surfaces. However, visual inspections can be labor-intensive, subjective, and time-consuming, even for well-trained inspectors.

To address this issue, structural health monitoring (SHM) systems were developed, initially for long-span bridges [6]. SHM can be applied to examine damaged areas and to provide information on detected damage for maintenance and repair [7]. Using sensing techniques, SHM systems monitor the structural condition of a bridge in real time and analyze its structural characteristics from the output data [8]. An SHM system used to evaluate structural health typically consists of hundreds or thousands of sensors and peripheral processors [9,10,11]. SHM systems have been installed in many types of bridges worldwide for almost 40 years with the aim of acquiring bridge damage data [12,13,14].

However, these damage data are extensive, and it is difficult to extract effective information from them when evaluating the condition of a bridge. With the development of light-weight, small, high-performance sensors, unmanned aerial vehicles (UAVs) equipped with sensors are making enormous advances in solving problems associated with conventional procedures. A UAV equipped with special sensors, such as visual cameras and LiDAR, can be more reliable and efficient, as well as simple to operate. One study employed a UAV to acquire data for SHM, analyzed the new method in full, and compared it with conventional inspection methods [15]. Mader et al. [16] developed and constructed a fleet of UAVs with different sensors for three-dimensional (3D) object data acquisition, highlighting the potential of UAVs in building inspections.

Service life prediction of bridges is a key subject in civil engineering. Bridge damage stems from two major contributors. First, the service life of bridges is affected by the daily growth of traffic [17]. Second, corrosion is an essential factor in the deterioration of RC structures on a bridge, leading to corrosion pits [3,4,5]. The coupled effect of fatigue and corrosion is very complex and involves chloride ingress, concrete crack expansion, corrosion pit growth, environmental variation, and local stress concentration [18].

Two approaches are commonly employed for service life prediction and fatigue damage evaluation of bridge structures. The first is the traditional S-N curve, which relates the constant-amplitude stress range (S) to the number of cycles to failure (N) and is obtained from fatigue experiments. Miner's rule, a linear damage accumulation hypothesis, extends the S-N relationship to variable-amplitude loading [19]. Miner's rule has been used to assess fatigue reliability from monitoring data [20,21,22] or finite element analysis (FEA) calculations [23]. The peak over threshold (POT) approach predicts extreme-amplitude load effects from the extreme values of monitoring data [17]. The second approach, fracture mechanics, describes crack initiation and growth under a stress concentration field [24]. In bridge fatigue calculation and service life prediction, the two approaches can be applied sequentially: the S-N curve method is first employed to determine fatigue life, and a fracture mechanics approach is then used to refine the crack-based remaining service life assessment and maintenance strategies.
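To illustrate the fracture mechanics stage, the sketch below integrates Paris' law, da/dN = C(ΔK)^m with ΔK = YΔσ√(πa), one widely used crack-growth relation. The choice of Paris' law, the geometry factor Y, and all numeric constants are assumptions for illustration only; they are not taken from the cited studies. A closed-form integral (valid for m ≠ 2) is included as a check on the numerical result.

```python
import math

def paris_cycles(a0, ac, delta_sigma, C, m, Y=1.0, steps=10_000):
    """Cycles to grow a crack from a0 to ac under Paris' law (hypothetical constants).

    da/dN = C * dK**m, with dK = Y * delta_sigma * sqrt(pi * a).
    Assumed units: crack length in m, stress range in MPa, dK in MPa*sqrt(m).
    Integrated with the midpoint rule over crack length: N = sum(da / (da/dN)).
    """
    if not 0 < a0 < ac:
        raise ValueError("require 0 < a0 < ac")
    da = (ac - a0) / steps
    cycles = 0.0
    for i in range(steps):
        a = a0 + (i + 0.5) * da                        # midpoint of the i-th increment
        dK = Y * delta_sigma * math.sqrt(math.pi * a)  # stress intensity factor range
        cycles += da / (C * dK ** m)                   # cycles spent in this increment
    return cycles

def paris_cycles_closed_form(a0, ac, delta_sigma, C, m, Y=1.0):
    """Exact integral of Paris' law for m != 2, used to check the numerical result."""
    k = C * (Y * delta_sigma * math.sqrt(math.pi)) ** m
    e = 1.0 - m / 2.0
    return (ac ** e - a0 ** e) / (k * e)
```

For example, `paris_cycles(0.001, 0.02, 50.0, 1e-11, 3.0)` estimates the cycles needed for a 1 mm crack to reach 20 mm; doubling the stress range reduces the life by a factor of 2^m. The numerical form is kept because real geometry factors Y usually vary with crack length, which breaks the closed form.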

For image-based crack detection [25,26,27,28,29], edge detector-based approaches (e.g., Roberts, fast Haar, Fourier, Sobel, Butterworth, Canny, Prewitt, Laplacian of Gaussian, and Gaussian) [30,31,32,33,34,35,36] and deep learning-based approaches [37,38,39,40] are the two most widely implemented. The performance of edge detectors in crack detection depends on the thresholding method and the image gradient formulation. The performances of these two approaches in detecting cracks have been evaluated and compared [27,41]. Another approach uses 3D LiDAR [27,42,43,44,45] as a remote sensing technique for damage detection through 3D reconstruction. In addition, multispectral and hyperspectral sensors have been applied; they cover a wider range of wavelengths than visual or thermal cameras [46,47]. Multiple sensors and data fusion increase functionality and accuracy by combining several types of information [46,48,49].
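The dependence of edge detectors on the gradient formulation and the threshold can be seen in a small sketch. It applies the standard Roberts, Prewitt, and Sobel kernels to a grayscale image stored as a list of rows (pure Python, no image library assumed) and thresholds the gradient magnitude. The synthetic step image and the threshold value are illustrative assumptions.

```python
import math

# Standard gradient kernels as (x-kernel, y-kernel) pairs.
ROBERTS = ([[1, 0], [0, -1]], [[0, 1], [-1, 0]])
PREWITT = ([[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]], [[-1, -1, -1], [0, 0, 0], [1, 1, 1]])
SOBEL = ([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], [[-1, -2, -1], [0, 0, 0], [1, 2, 1]])

def gradient_magnitude(img, kernels):
    """Gradient magnitude over the 'valid' region (no padding), by cross-correlation."""
    kx, ky = kernels
    k = len(kx)
    h, w = len(img), len(img[0])
    out = []
    for r in range(h - k + 1):
        row = []
        for c in range(w - k + 1):
            gx = sum(kx[i][j] * img[r + i][c + j] for i in range(k) for j in range(k))
            gy = sum(ky[i][j] * img[r + i][c + j] for i in range(k) for j in range(k))
            row.append(math.hypot(gx, gy))
        out.append(row)
    return out

def edge_map(img, kernels, threshold):
    """Binary edge map: True where the gradient magnitude reaches the threshold."""
    return [[g >= threshold for g in row] for row in gradient_magnitude(img, kernels)]
```

On a vertical step from 0 to 100, Sobel yields a magnitude of 400 and Roberts about 141, so a single threshold of 200 marks the edge with Sobel but misses it with Roberts. This is why thresholds are usually tuned per operator.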

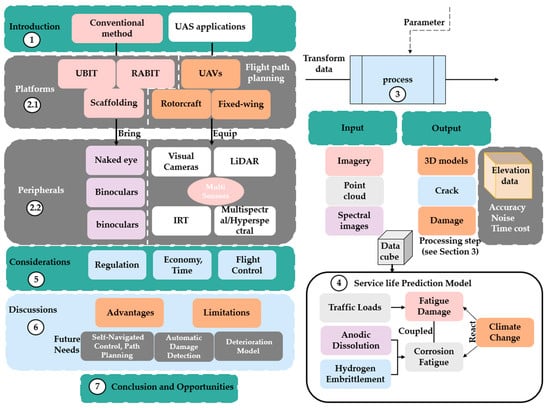

Although the potential of UAV multi-sensor data fusion has been demonstrated, it remains algorithmically unclear how multi-sensor data can be mapped to damage detection, and how such systems should be designed, integrated, and transitioned into practice to provide high-resolution, adaptive inspection. This review provides a roadmap covering work on damage detection, practical considerations, future research areas, and opportunities in crack growth prediction. The rest of this paper is organized as follows: Section 2 details the underlying platforms and peripherals used to gather damage information; Section 3 introduces data processing methods commonly used to handle data and extract valid information; Section 4 reviews several bridge service life prediction models, including crack extension, fatigue failure, and material corrosion; Section 5 provides some considerations for utilizing this emerging technology; Section 6 discusses the advantages, limitations, and future needs of UAS bridge damage detection; and Section 7 presents the conclusions and opportunities. The methodology and structure of the articles covered in the main elements of this review are shown in Figure 1.

Figure 1.

Flow chart of the methodology and structure of the article depicting the main elements of the study.

2. Platforms and Peripherals

UAS damage detection needs physical components, including the underlying platforms (UAV) and a few common peripherals (visual cameras, LiDAR, thermal infrared sensors, sound navigation and ranging, magnetic sensors, multi-sensors, and data fusion).

2.1. Platforms

The term “platform” refers to the underlying UAV body, which enables UAS inspection by carrying external sensors. As UAVs began to incorporate more peripheral technologies and grew in complexity, a new term, UAS, was developed to describe the whole system. A UAS is defined as the combination of a UAV (either a rotorcraft or a fixed-wing aircraft), the ground control system, and the payload (what it is carrying). For bridge inspection, both rotorcraft and fixed-wing aircraft are available platforms.

UAS inspection has clear advantages over traditional inspection methods: higher safety, more complete information, greater efficiency, lower cost, less labor, and more types of information. In areas that are hard for people to reach, UAS allow easier access, and computer-based path preplanning can provide improved site visibility and optimized views [33,50]. The cost advantage is especially significant when building a UAS equipped with both LiDAR [51] and image sensors [52]. In addition, various single sensors or combinations of multiple sensors can be used for different inspection purposes.

Although many other platforms are available, such as kites and balloons, UAVs vary much more widely in their technical specifications. The requirements for lifting capability and platform safety are less stringent with UAVs, and the cost and technical expertise needed to operate such systems also vary greatly. Compared with conventional platforms, another advantage of UAVs is that they can be used in situations that require delicate control through specially designed dynamic systems, a level of control that is often necessary for detection in narrow spaces during bridge inspection missions. Technical obstacles are also easier to overcome: there is a vast body of useful internet resources (e.g., diydrones.com, dronezon.com, rcgroups.com, accessed on 21 August 2022) as well as practical guidebooks [53] with up-to-date information on UAS technology, models, operational use, navigation tutorials, applications, and economic potential. The peer-reviewed academic literature, including UAS special issues (e.g., Remote Sensing [54], GIScience and Remote Sensing [55], and International Journal of Remote Sensing [56]) and proceedings of dedicated UAV conferences, may also help.

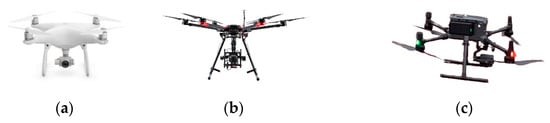

Traditional visual inspectors usually perform the task by walking on the deck and using binoculars or their naked eye to judge the damaged area. In regions that are difficult to access, scaffolding or large mechanical equipment such as under-bridge inspection trucks (UBIT) may be used [57]. In 2014, the robotics-assisted bridge inspection tool (RABIT) became the first robotic vehicle used for surface and subsurface detection on a bridge deck [58,59]. In the past, fixed-wing UAVs were needed to carry heavy sensors such as 3D LiDAR [60]. However, driven by the development of autonomous driving, 3D LiDAR units have become smaller and lighter, enabling LiDAR and other sensors to be carried on rotorcraft. Rotorcraft are the most commonly used platforms owing to their low cost, flexibility, and ability to hover. For bridge inspection, the most common UAV configurations are quadcopters (four propellers) [61,62,63,64] and hexacopters (six propellers) [61,62,65]. A fleet of UAVs with different types of sensors has also been developed and constructed [16]. The types, inspection methods, advantages, and shortcomings of different bridge detection platforms are shown in Figure 2 and Table 1.

Figure 2.

Different types of UAVs used in bridge detection. (a) DJI Phantom 4. (b) DJI M600 Pro. (c) DJI M300 equipped with Zenmuse H20.

Table 1.

Inspection method, advantages, and shortcomings of bridge detection platform.

The above-mentioned platforms are usually controlled by computer programs in combination with a global navigation satellite system (GNSS), such as the Global Positioning System (GPS), to acquire data, regardless of the type of sensor mounted. Many professional-grade UAS are now equipped with real-time kinematic (RTK) or post-processed kinematic (PPK) GNSS, which, together with attitude information from the inertial measurement unit (IMU), provides the high accuracy needed for flight control. The UAV may be flown manually by experienced pilots, semi-automatically by less experienced ones, or in fully autonomous mode, from hand-launching to automatic landing. In fully autonomous mode, flight path planning can be accomplished with flight-planning software, and the aircraft follows a predefined flightline along a set of waypoints. Both manual and automated flights are used in bridge inspection surveys, with the exposure interval, flying height, ground sample distance (GSD), flight-path orientation and spacing, and photogrammetric overlap computed manually or automatically [66,67].
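The flight parameters listed above follow from standard photogrammetric relations: GSD = sensor width × distance / (focal length × image width in pixels), the image footprint is GSD times the pixel count, and overlap fixes the spacing between exposures and between flight lines. The sketch below assumes square pixels, images taken perpendicular to the inspected surface, and the image height aligned with the flight direction; the `Camera` class and all numbers are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Camera:
    """Hypothetical camera description; values are supplied by the user."""
    sensor_width_mm: float
    sensor_height_mm: float
    focal_length_mm: float
    image_width_px: int
    image_height_px: int

def flight_parameters(cam, distance_m, forward_overlap, side_overlap, speed_m_s):
    """Photogrammetric flight parameters for images taken perpendicular to the surface.

    distance_m is the camera-to-surface distance (flying height for a nadir survey).
    Assumes square pixels and the image height aligned with the flight direction.
    """
    if not (0 <= forward_overlap < 1 and 0 <= side_overlap < 1):
        raise ValueError("overlaps must lie in [0, 1)")
    # Ground sample distance: size of one pixel on the surface, in metres.
    gsd = cam.sensor_width_mm * distance_m / (cam.focal_length_mm * cam.image_width_px)
    footprint_across = gsd * cam.image_width_px   # coverage across the flight line (m)
    footprint_along = gsd * cam.image_height_px   # coverage along the flight line (m)
    photo_base = footprint_along * (1 - forward_overlap)  # distance between exposures (m)
    line_spacing = footprint_across * (1 - side_overlap)  # distance between flight lines (m)
    return {
        "gsd_m": gsd,
        "photo_base_m": photo_base,
        "line_spacing_m": line_spacing,
        "exposure_interval_s": photo_base / speed_m_s,
    }
```

With illustrative values `Camera(13.2, 8.8, 8.8, 5472, 3648)`, a 30 m distance, 80% forward overlap, 70% side overlap, and 5 m/s, the sketch gives a GSD of about 8.2 mm per pixel, a 6 m photo base, 13.5 m line spacing, and an exposure every 1.2 s. Halving the distance halves the GSD, which is one reason close-range flights are preferred for crack detection.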

UAV flight path programming has recently been introduced as an effective way to capture the potential locations of surface defects; it efficiently achieves perpendicular and overlapping views when sampling viewpoints, with the help of algorithms such as a spanning tree algorithm [68], a wavefront algorithm [69], and a neural network [70]. Other methods target different purposes, such as the traveling salesman problem (TSP) formulation [71,72] and the A* algorithm [73] for minimizing the length of a path passing through pre-defined viewpoints; the art gallery problem (AGP) [74] for minimizing the number of viewpoints; and coverage path planning [50,75] for 3D mapping. The core objectives of flight path programming are to improve safety, accuracy, coverage, and efficiency and to minimize flight cost.
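A TSP-style ordering of inspection viewpoints can be sketched with two classic heuristics: a nearest-neighbour tour followed by 2-opt improvement, which reverses path segments whenever that shortens the route. This is a generic heuristic chosen for illustration, not necessarily the solver used in [71,72]; viewpoints are assumed to be (x, y, z) tuples in metres, and obstacle avoidance and battery limits are ignored.

```python
import math

def path_length(points, order):
    """Total Euclidean length of an open path visiting points in the given order."""
    return sum(math.dist(points[order[i]], points[order[i + 1]]) for i in range(len(order) - 1))

def nearest_neighbour(points, start=0):
    """Greedy tour: repeatedly fly to the closest unvisited viewpoint."""
    unvisited = set(range(len(points))) - {start}
    order = [start]
    while unvisited:
        last = points[order[-1]]
        nxt = min(unvisited, key=lambda j: math.dist(last, points[j]))
        order.append(nxt)
        unvisited.remove(nxt)
    return order

def two_opt(points, order):
    """Improve an open path by segment reversal; the first and last viewpoints stay fixed."""
    best = order[:]
    improved = True
    while improved:
        improved = False
        for i in range(1, len(best) - 2):
            for j in range(i + 1, len(best) - 1):
                # Reversing best[i..j] replaces edges (i-1, i) and (j, j+1).
                a, b = points[best[i - 1]], points[best[i]]
                c, d = points[best[j]], points[best[j + 1]]
                delta = (math.dist(a, c) + math.dist(b, d)) - (math.dist(a, b) + math.dist(c, d))
                if delta < -1e-12:
                    best[i:j + 1] = reversed(best[i:j + 1])
                    improved = True
    return best
```

Typical use is `two_opt(viewpoints, nearest_neighbour(viewpoints))`, after which `path_length` gives the flight distance to compare against battery endurance. Exact TSP solvers scale poorly, so heuristics like these are a common first choice for a few hundred viewpoints.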

2.2. Peripherals

2.2.1. Visual Cameras

Visual cameras are commonly used in UAS inspections, with inspectors on the ground operating the UAV and its onboard imaging sensors and cameras [76]. Many researchers have introduced automated visual inspection and quantification systems for bridge damage detection based on computer vision techniques [77,78,79,80,81,82]. Professional remote sensing UAVs, such as the DJI Phantom and Mavic series [57,66] (DJI Innovation, Shenzhen, China), can be used directly to capture damage information from a bridge surface. Digital cameras, such as Nikon models, have been selected as visual sensors to acquire high-resolution images [62]. The three general image-based inspection methods are direct inspection of raw images, image enhancement technology, and image processing algorithms. Compared with image enhancement technology and image processing algorithms, manual direct inspection of raw images is prone to inaccuracy and is time-consuming [41].

2.2.2. LiDAR Sensor

The terrestrial 3D LiDAR scanner is an optical sensing instrument specifically used for distance measurement, and it has been widely used in remote sensing. LiDAR can collect millions of position data points covering a surface, and these points can be used to reconstruct 3D models and map objects in the area of interest [43,83]. The feasibility and advantages of merged UAV–LiDAR systems have been demonstrated [84,85].
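For a pulsed time-of-flight scanner (an assumption; phase-shift scanners measure range differently), range follows from the round-trip travel time of the laser pulse, r = c·Δt/2, and each return becomes a 3D point once the beam's azimuth and elevation angles are known. The minimal sketch below uses the vacuum speed of light and ignores atmospheric refraction, mirror offsets, and platform motion, which a real UAV–LiDAR system corrects using GNSS/IMU data.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum

def tof_range(round_trip_s):
    """Range to the target from the round-trip time of a laser pulse."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0

def to_cartesian(r, azimuth_deg, elevation_deg):
    """Convert a range and beam angles (scanner frame) to x, y, z coordinates."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (r * math.cos(el) * math.cos(az),
            r * math.cos(el) * math.sin(az),
            r * math.sin(el))
```

A return after about 66.7 ns corresponds to a target roughly 10 m away, so centimetre-level ranging requires timing resolution in the tens of picoseconds.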

2.2.3. Thermal Infrared Imagery

Infrared thermography (IRT) imaging technology has been widely used in non-destructive evaluation (NDE), including examinations of bond defects in composite materials [86], complicated geometries [87], moisture content in roofs, performance analysis of wet insulation [88], post-disaster inspections [89,90,91], and cracks, nuggets, expulsion, and porosity in concrete decks [92,93,94]. Both active [95,96] and passive [85,97] thermography can identify internal defects of bridges, such as delamination in bridge piers and decks. Sakagami [98] developed a thermal non-destructive testing (NDT) technique that detects bridge fatigue cracks from temperature changes. Based on temperature distribution data, Omar [99] and Wells [100] completed concrete bridge inspections using a UAS mounted with an infrared thermography camera. Escobar-Wolf et al. [101] used IRT to detect undersurface delamination and deck holes, generating thermal and visible images of a 968 m² area; compared with direct-contact hammer sounding, the method achieved an overall accuracy of approximately 95% for 14 m² of delamination. The three types of sensors mainly mounted on UAS (visual cameras, LiDAR, and thermal infrared sensors) are shown in Figure 3 and Table 2.

Figure 3.

Different types of peripherals used for bridge detection. (a) DJI Zenmuse H20. (b) DJI Zenmuse L1. (c) HDL-32E, VLP-16 LiDAR. (d) DJI Zenmuse XT.
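A common way to flag delamination in passive thermography is to mark pixels whose temperature departs from that of sound concrete by more than a contrast threshold ΔT. During daytime heating, shallow delaminations appear warmer than sound concrete; during night-time cooling they appear cooler. The sketch below assumes a radiometric thermal image stored as a 2D list of temperatures, uses the median as the sound-concrete reference, and treats the 0.5 °C threshold as hypothetical; it is not the procedure of [99,100,101].

```python
from statistics import median

def delamination_mask(temps_c, delta_t=0.5, heating=True):
    """Flag pixels whose temperature departs from the sound-concrete reference.

    temps_c: 2D list of surface temperatures (deg C) from a radiometric thermal image.
    The reference is the median temperature, assuming most of the deck is sound.
    heating=True (daytime): delaminations appear warmer than sound concrete;
    heating=False (night-time cooling): they appear cooler.
    delta_t is a hypothetical contrast threshold in deg C.
    """
    ref = median(t for row in temps_c for t in row)
    sign = 1.0 if heating else -1.0
    return [[sign * (t - ref) >= delta_t for t in row] for row in temps_c]

def delaminated_area_m2(mask, gsd_m):
    """Area of flagged pixels, given the ground sample distance in metres per pixel."""
    return sum(flag for row in mask for flag in row) * gsd_m ** 2
```

Multiplying the flagged pixel count by the squared GSD converts the mask into an area that can be compared with hammer-sounding results, as in the accuracy comparison reported above.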

2.2.4. Multispectral and Hyperspectral Sensors

Recent research has also focused on acquiring and generating full spectral information [102,103,104]. Compared with visual and thermal cameras, multispectral and hyperspectral sensors cover a wider wavelength range and can therefore capture bridge inspection images in many bands. Visual cameras record intensity in only three bands (red, green, and blue; RGB), whereas thermal cameras typically record a single broad infrared band. Kim proposed a damage detection algorithm and tested it by decomposing the efflorescence areas of two concrete specimens based on their spectral features [105].
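Kim's algorithm [105] is not reproduced here. As a generic illustration of classifying pixels by spectral features, the sketch uses the spectral angle mapper (SAM), a standard technique swapped in for illustration: each pixel spectrum is assigned to the reference spectrum with the smallest angle between the two vectors. The reference spectra, labels, and angle threshold are hypothetical.

```python
import math

def spectral_angle(x, ref):
    """Spectral angle (radians) between a pixel spectrum and a reference spectrum."""
    dot = sum(a * b for a, b in zip(x, ref))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in ref))
    if norm == 0:
        raise ValueError("spectra must be non-zero")
    return math.acos(max(-1.0, min(1.0, dot / norm)))  # clamp against rounding errors

def classify_pixel(x, references, max_angle=0.1):
    """Label of the closest reference spectrum, or None if no angle is below max_angle."""
    label = min(references, key=lambda k: spectral_angle(x, references[k]))
    return label if spectral_angle(x, references[label]) <= max_angle else None
```

Because the angle ignores vector length, a pixel that is uniformly brighter or darker (for example, in shadow under a deck) still matches its material's reference, which is an advantage over comparing raw intensities.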

2.2.5. Multi Sensors

With the emergence of new sensor technologies, how to effectively integrate several sensing technologies capable of collecting and processing detailed information has become a frontier problem. The concept of airborne LiDAR and hyperspectral integrated systems was first introduced by Hakala et al. [106], who acquired 3D model and image information of an observed area using an integrated multispectral-LiDAR imaging system. A UAV-based LiDAR and hyperspectral integrated system was designed to combine the two imaging technologies effectively and to collect 3D model and spectral information simultaneously and accurately [46]. Mader et al. [16] organized a fleet of UAVs equipped with different sensors (LiDAR, camera, and multispectral scanner) and analyzed the feasibility of the resulting data to investigate the potential of UAV-based multi-sensor systems.
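A basic step in fusing LiDAR with image or spectral data is co-registration: projecting each 3D point into the image so it can inherit a pixel value. The sketch uses the pinhole camera model, assuming the extrinsic rotation `R`, translation `t`, and intrinsics `fx`, `fy`, `cx`, `cy` are already known from calibration; lens distortion and occlusion are ignored, and this is not the specific method of [46] or [106].

```python
def project_point(p_world, R, t, fx, fy, cx, cy):
    """Pinhole projection of a 3D point into pixel coordinates (u, v); None if behind camera."""
    # Transform to camera coordinates: X_c = R X_w + t
    xc = [sum(R[i][j] * p_world[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        return None
    return fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy

def colourise_points(points, image, R, t, fx, fy, cx, cy):
    """Attach the pixel value under each projected LiDAR point (nearest pixel, no occlusion test)."""
    h, w = len(image), len(image[0])
    fused = []
    for p in points:
        uv = project_point(p, R, t, fx, fy, cx, cy)
        if uv is None:
            continue  # point lies behind the camera
        col, row = round(uv[0]), round(uv[1])
        if 0 <= row < h and 0 <= col < w:
            fused.append((p, image[row][col]))
    return fused
```

For a hyperspectral cube, `image[row][col]` would hold a spectrum rather than a single value, so each point ends up with both geometry and spectral information.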

2.2.6. Other Sensors

Alongside the common sensors above, other sensors, such as magnetic sensors, have also been applied to UAS inspection for special demands or assignments. Magnetic sensors with sufficient power and accuracy can generate defect maps of ferrous materials in a bridge. Climbing robots equipped with magnetic wheels have been developed to measure the vertical component of an induced magnetic field [107,108]. The limitations of magnetic sensors are their testing capability and their restriction to ferrous materials, that is, steel bridges. For underwater bridge structure inspection, sound navigation and ranging (SONAR) is a common testing method [109,110]. Vertical take-off and landing platforms can be used for close inspection while rejecting environmental disturbances [111].

Table 2.

Work mode, main advantages, and shortcomings of peripherals used for bridge detection.


| Peripherals | Ref. | Focus | Work Mode | Main Advantages | Main Shortcomings |
|---|---|---|---|---|---|
| Visual cameras | [65,76,77,78,79,80,81,82] | Visible bridge damage | Image capture | Easy access to data | Requires appropriate shooting angles and advanced path planning |
| LiDAR | [43,84,85] | Bridge damage structure | Transmitting and receiving laser light | Scanning efficiency, complete point cloud data | Expensive, large data volume, strongly affected by vibration |
| Thermal infrared imagery | [86,87,88,89,90,91,92,93,94,95,96,97,98,99,100] | Internal defects | Active and passive thermal imaging | Internal defect identification | Difficult image mapping and threshold extraction |
| Multispectral and hyperspectral | [102,103,105] | Spectral information | Push-broom scanning | Wider range of wavelengths | High dimensionality and difficult noise reduction |
| Multi-sensors | [15,46,108] | Various data types | Cooperating multiple sensors | Various types of sensors | Data fusion difficult to achieve |

LiDAR: light detection and ranging.

3. Data Processing

UAS can collect various types of data using external sensors, and the next step is usually to extract useful information through proper data processing methods. This section summarizes the data processing approaches applied after the data have been collected by the platforms (multi-type UAVs) and sensors (visual cameras, LiDAR, and so on).

3.1. Three-Dimensional Reconstruction

Researchers are able to construct 3D models of bridges and identify deck delamination from UAS data. 3D reconstruction approaches can be divided into three types according to their data sources: image based [44,79,112,113,114], LiDAR based [115,116,117], and merged image and LiDAR [118,119]. Photogrammetric point clouds can be acquired from multi-view images [120,121]. Oude Elberink [122] proposed an automated 3D reconstruction method for highway interchanges that integrates point cloud data with topographic maps. Recently, Hu et al. [123] used deep learning to reconstruct 3D models of cable-stayed bridges; their approach identifies bridges in panoramic images and then decomposes them into parts using deep neural networks. The method achieves higher spatial accuracy than the manual approach and is robust to noise and partial scans.

After an accurate model is established, damage can be detected with clustering algorithms such as k-means or density-based spatial clustering of applications with noise (DBSCAN). However, missing data are the most common source of error in 3D-reconstructed point clouds, caused by line-of-sight occlusions [124], nonuniform data densities [125], inaccurate geometric positioning [126], surface deviations [127], and outlier noise [128]. To solve these problems, 3D reconstruction optimization algorithms and noise removal techniques should be developed to improve performance beyond that of commercial software for complex objects. Another issue in image-based 3D reconstruction is the long time required for both data collection and model building. Chen et al. [44] required approximately five hours in total for model building, and images beyond the minimum number increased this time further. Previous testing indicated that 48 images were enough to reconstruct a detailed bridge model [129]; however, even for a small inspected object of 140 × 53 × 23 cm (H × W × D), one hour was needed to create the model [130]. Free or commercially available 3D software (Automatic Reconstruction Conduit, Agisoft PhotoScan, and Pix4D [131,132]) can be used to construct 3D models. For damage detection using reconstructed models, accuracy depends on model precision and is negatively correlated with UAV flight height. In a recent damage evaluation study, the 3D volume calculation showed a 3.97% error for the UAV data set collected at 10 m and a 25% error for the data set collected at 20 m [44].
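DBSCAN groups points that have at least `min_pts` neighbours within a radius `eps` into clusters and labels isolated points as noise, which makes it useful both for isolating damaged regions in a point cloud and for removing outliers. The minimal pure-Python sketch below runs in O(n²) time and is meant only to show the algorithm; for real point clouds, an indexed implementation such as scikit-learn's `DBSCAN(eps=..., min_samples=...)` would be used. The `eps` and `min_pts` values in the usage are hypothetical.

```python
import math

def dbscan(points, eps, min_pts):
    """Cluster label for each point (0, 1, ...); -1 marks noise.

    A point is a core point if at least min_pts points (itself included)
    lie within distance eps. Clusters grow outward from core points.
    """
    n = len(points)
    labels = [None] * n

    def neighbours(i):
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        nb = neighbours(i)
        if len(nb) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in nb if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # former noise becomes a border point (not expanded)
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nb_j = neighbours(j)
            if len(nb_j) >= min_pts:  # only core points extend the cluster
                queue.extend(nb_j)
    return labels
```

Unlike k-means, DBSCAN needs no preset number of clusters and tolerates irregular shapes such as spalls, but `eps` must be chosen relative to the point spacing, which varies with flight height.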

3.2. Image-Processing Techniques

Image processing algorithms differ according to their goals and imaging patterns. For field image generation, corrections for distortion, interference, and vignetting are essential, based on geo-referenced raw-format images [133]. Image processing algorithms can be used to identify cracks in concrete bridge images, and many researchers have proposed crack assessment frameworks to realize crack quantification and localization. These frameworks typically combine several image processing techniques, and the common process can be summarized as follows. First, images containing a region of interest (ROI) are preprocessed. Next, the ROI image is enhanced and noise is removed. Finally, cracks are identified by an edge filter (Fujita [134], Sobel [34,135], Laplacian of Gaussian [32], Butterworth, Canny [136], and Gaussian [137,138,139]) and are subsequently labeled or saved.
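The three-step process above can be sketched as ROI cropping, median-filter denoising, segmentation into a binary crack map, and connected-component labelling. For brevity, the edge-filter stage is replaced here by a simple intensity threshold, assuming cracks are darker than the surrounding concrete; in the cited frameworks an edge filter such as Sobel or Canny would produce the binary map instead. All function names and parameters are hypothetical.

```python
from statistics import median

def crop(img, top, left, h, w):
    """Region of interest from a 2D list image."""
    return [row[left:left + w] for row in img[top:top + h]]

def median3(img):
    """3x3 median filter (border pixels left unchanged) to suppress salt-and-pepper noise."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            out[r][c] = median(img[r + dr][c + dc] for dr in (-1, 0, 1) for dc in (-1, 0, 1))
    return out

def label_components(mask):
    """8-connected components of a binary mask, each as a list of (row, col) pixels."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    comps = []
    for r in range(h):
        for c in range(w):
            if mask[r][c] and not seen[r][c]:
                seen[r][c] = True
                stack, comp = [(r, c)], []
                while stack:
                    y, x = stack.pop()
                    comp.append((y, x))
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            ny, nx = y + dy, x + dx
                            if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                                seen[ny][nx] = True
                                stack.append((ny, nx))
                comps.append(comp)
    return comps

def detect_cracks(img, roi, threshold, min_area=3):
    """ROI -> denoise -> dark-pixel segmentation -> labelled crack regions (>= min_area pixels)."""
    region = median3(crop(img, *roi))
    mask = [[v < threshold for v in row] for row in region]
    return [c for c in label_components(mask) if len(c) >= min_area]
```

Each returned component's pixel count gives a crack area, and its extent gives a rough length once multiplied by the GSD. Note that a 3×3 median filter can erase cracks narrower than about two pixels, so the kernel size must be matched to the image resolution.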

Xu [140] developed a crack detection method for bridge images using the Boltzmann machine algorithm. Ahmed et al. [34] detected major cracks using the Otsu approach after applying Sobel filtering to eliminate residual noise. An edge filter in the image processing algorithm classified deck cracks as edges in two-dimensional (2D) images, and position information was then extracted to calculate crack length and area. Clustering algorithms such as c-means [141,142,143], k-means [144,145], and DBSCAN [146] are also common in concrete crack detection. The large number of available methods and algorithms makes choosing among them difficult; as a result, many researchers have compared different image processing techniques on the same samples. Abdel performed a comparative study of four edge detection methods, namely fast Fourier transform (FFT), Canny, Sobel, and fast Haar transform (FHT), using 25 images of defective concrete [27]. Cha [40] compared Sobel, Canny, and a convolutional neural network on four images. In addition to images, LiDAR point cloud data can be projected onto a 2D plane for convenience [31]. The image processing algorithms mentioned above correctly detected 53–79% of cracked pixels, but they left residual noise in the final binary images.
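Otsu's method, mentioned above, chooses the threshold that maximizes the between-class variance of the gray-level histogram, so no threshold has to be set by hand. The sketch below assumes 8-bit grayscale intensities (integers 0 to 255) given as a flat list; it applies Otsu directly to intensities rather than after Sobel filtering as in [34].

```python
def otsu_threshold(pixels):
    """Otsu threshold t for 8-bit values: class 0 is p <= t, class 1 is p > t."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w0, sum0 = 0, 0.0
    best_t, best_var = 0, -1.0
    for t in range(256):
        w0 += hist[t]                # pixels at or below t
        sum0 += t * hist[t]
        if w0 == 0:
            continue
        w1 = total - w0              # pixels above t
        if w1 == 0:
            break
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        var_between = w0 * w1 * (m0 - m1) ** 2  # proportional to between-class variance
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t
```

For crack images, pixels at or below the returned threshold form the dark (crack) class. Otsu works best when the histogram is clearly bimodal; thin cracks occupying very few pixels can bias it toward the background, which is one reason for the residual noise reported above.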

3.3. Deep Learning in Neural Networks

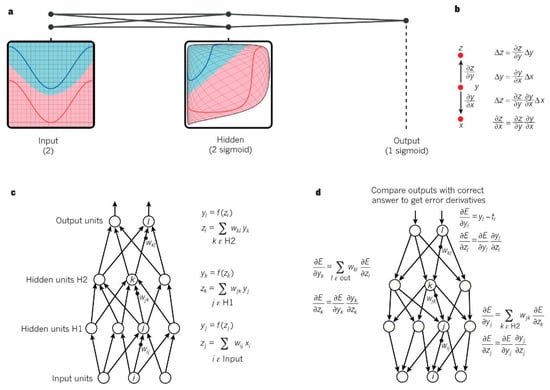

Deep learning methods have recently risen in popularity for interpreting damage datasets collected by UAS. A deep learning architecture is composed of multiple modules that compute non-linear input-output mappings and are subject to learning. The backpropagation procedure, which computes the gradient of an objective function with respect to the weights of a multilayer stack of modules, is nothing more than a practical application of the chain rule for derivatives (Figure 4) [147].

Figure 4.

Multilayer neural networks and backpropagation showing (a) an illustrative multilayer neural network with two input units, two hidden units, and one output unit; (b) the chain rule of derivatives, in which two small effects are composed: a small change Δx in x is first transformed into a small change Δy in y by multiplication by ∂y/∂x, and Δy in turn creates a change Δz in z by multiplication by ∂z/∂y; (c) the equations used for computing the forward pass in a neural network with two hidden layers and one output layer; and (d) the equations used for computing the backward pass.
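The chain rule in Figure 4 can be made concrete with the network of Figure 4(a): two inputs, two sigmoid hidden units, and one linear output unit, trained on a squared-error loss. The sketch below computes the forward pass and then the backward pass by hand; the sigmoid activation, linear output, and loss are assumptions chosen for simplicity, and the figure's panel (c) uses two hidden layers rather than one.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """Forward pass: 2 inputs -> 2 sigmoid hidden units -> 1 linear output."""
    W1, b1, w2, b2 = params
    z1 = [sum(W1[j][i] * x[i] for i in range(2)) + b1[j] for j in range(2)]
    h = [sigmoid(z) for z in z1]
    y = sum(w2[j] * h[j] for j in range(2)) + b2
    return h, y

def loss_and_grads(params, x, target):
    """Loss 0.5*(y - target)^2 and its gradients via the chain rule (backward pass)."""
    W1, b1, w2, b2 = params
    h, y = forward(params, x)
    loss = 0.5 * (y - target) ** 2
    dy = y - target                                           # dL/dy
    dw2 = [dy * h[j] for j in range(2)]                       # dL/dw2_j = dL/dy * h_j
    db2 = dy
    dz1 = [dy * w2[j] * h[j] * (1 - h[j]) for j in range(2)]  # through sigmoid'(z) = h(1-h)
    dW1 = [[dz1[j] * x[i] for i in range(2)] for j in range(2)]
    db1 = dz1[:]
    return loss, (dW1, db1, dw2, db2)
```

A gradient descent step subtracts a learning rate times each gradient from the matching parameter. Checking the analytic gradients against finite differences, as in a standard gradient check, is a quick way to confirm that the backward pass is implemented correctly.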

Deep convolutional neural networks (DCNNs) and convolutional neural networks (CNNs) have been increasingly used for image processing in bridge damage detection, and different architectures are increasingly applied to the automatic identification of structural defects in images [39,148,149]. Unlike most traditional methods, damage detection by CNNs does not require hand-crafted features. Traditional methods can only identify certain defect types, and their results are susceptible to surrounding disturbances [150]. An early example is the research by Munawar, which used a dataset of 600 images for pixel-wise segmentation and crack detection in buildings in Sydney [151]. Chen et al. [152] proposed an NB-CNN model to detect cracks in video frames, reaching a hit rate of 98.3% by aggregating information across frames. Hoskere et al. [153] recognized six different types of damage using a damage localization and classification technique built on two classic CNN architectures, ResNet and VGG19. Cha et al. [40] designed a deep CNN architecture and reported accuracies of 97.95% on 8000 images and 98.2% on 32,000 images after training and validation. Significant improvements in test speed were discussed in a separate study [42].
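The building blocks of a CNN stage are a convolution (in practice, cross-correlation), a non-linear activation such as ReLU, and pooling. The sketch below shows one such stage in plain Python. In a trained CNN, the kernel weights are learned by backpropagation instead of being fixed by hand; the 3×3 kernel used in the usage example, which responds to thin dark vertical lines, is a hypothetical stand-in for a learned filter.

```python
def conv2d(img, kernel, bias=0.0):
    """'Valid' 2D cross-correlation of an image with a kernel, as in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(img), len(img[0])
    return [[sum(kernel[i][j] * img[r + i][c + j] for i in range(kh) for j in range(kw)) + bias
             for c in range(w - kw + 1)]
            for r in range(h - kh + 1)]

def relu(fm):
    """Element-wise ReLU activation on a feature map."""
    return [[max(0.0, v) for v in row] for row in fm]

def maxpool2(fm):
    """2x2 max pooling with stride 2; an odd last row/column is dropped."""
    return [[max(fm[r][c], fm[r][c + 1], fm[r + 1][c], fm[r + 1][c + 1])
             for c in range(0, len(fm[0]) - 1, 2)]
            for r in range(0, len(fm) - 1, 2)]
```

Applying `relu(conv2d(img, [[1, -2, 1]] * 3))` to an image with a one-pixel dark vertical line produces a strong response only along the line. A CNN stacks many such learned filters, which is how it avoids the hand-crafted features required by traditional methods.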

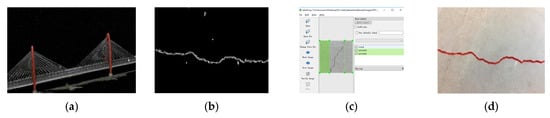

Gong et al. [154] identified collapsed buildings using three machine learning classifiers: the k-nearest neighbor (KNN) method, random forest, and support vector machine (SVM). Sun et al. reviewed applications of artificial intelligence (AI) techniques in bridge damage detection and discussed the challenges, limits, and future trends [6]. A separate report compared the performance of a DCNN and six common edge detectors (Roberts, Sobel, Prewitt, Laplacian of Gaussian, Gaussian, and Butterworth) for crack detection in bridge concrete images [82]. In terms of identification precision, the neural network detected concrete cracks wider than 0.08 mm in training mode and 0.04 mm in transfer learning mode. The required time, main advantages, and shortcomings of different data processing methods are shown in Figure 5 and Table 3. The above studies show higher accuracy than image processing techniques, usually exceeding 90% after several days of training.

Figure 5.

Results from different data processing methods, showing (a) cable-stayed bridges, (b) Canny algorithm, (c) labelling crack region for deep learning, and (d) threshold extraction and painting.

Table 3.

Required time, main advantages, and shortcomings in different data processing methods.

4. Service Life Prediction Model

After damage data processing, the next step is to assess the bridge condition and predict its service life from the existing damage. This assessment determines whether a bridge with poor reliability must be repaired, or even demolished, for public safety. The service life of bridges is affected by variable traffic loads, climate change, and chloride ingress, which are coupled and time-varying. An accurate service life prediction model, derived from a deterioration model, is valuable for calculating bridge life and providing corresponding maintenance strategies. This section reviews the literature on bridge deterioration modeling and derives service life prediction models, drawing on research on the structural assessment of concrete bridge decks. The content is based on three models commonly used for bridge service life prediction.

4.1. Traffic Load-Fatigue Damage Model

Traffic load growth has become a popular topic and has been increasingly studied in recent years. It is critical to bridge deterioration through both fatigue crack growth and fatigue damage accumulation [32]. This type of damage is inevitable and is a common concern for the public, management agencies, and bridge owners. When assessing fatigue problems caused by traffic loads, a crucial step is determining a traffic load model. Traffic loading for same-direction, two-lane, and multi-lane traffic, as well as the relationships between adjacent vehicles in both lanes, have been modeled [155,156].

In traffic load modeling, characteristic load effects have generally been obtained from Monte Carlo simulations [157,158,159,160] in which the traffic in each lane is simulated independently [160]. The early development of bridge weigh-in-motion (BWIM) [161,162,163,164,165] provides supporting data for fatigue damage assessment and service life prediction by measuring and recording long-term actual responses. Guo provided a fatigue reliability method for load models based on traffic counts [166]. Because of the complex nature of traffic loads, simplified simulations using a multi-lane traffic model [164,167,168] and a car stress model [17] have been applied. A long-term traffic load spectrum contains complete load information, but it is difficult to measure directly because of the limitations of testing technology; direct measurement is also time-consuming and expensive.
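A minimal Monte Carlo sketch of single-lane traffic load effects is shown below. It assumes a simply supported span on which each vehicle acts as one point load, so the maximum midspan moment during a crossing is PL/4, and it draws gross vehicle weights from a hypothetical two-component normal mixture of cars and trucks. Real studies calibrate these distributions, axle configurations, and multiple-presence effects from BWIM data.

```python
import random

def simulate_midspan_moments(n_vehicles, span_m, truck_share=0.2, seed=None):
    """Maximum midspan moment (kN*m) produced by each simulated vehicle crossing.

    Hypothetical weight model: trucks ~ N(300, 80) kN, cars ~ N(15, 3) kN,
    truncated at 5 kN. Each vehicle is a single point load on a simply
    supported span, so its maximum midspan moment is P * L / 4.
    """
    rng = random.Random(seed)
    moments = []
    for _ in range(n_vehicles):
        if rng.random() < truck_share:
            weight = rng.gauss(300.0, 80.0)
        else:
            weight = rng.gauss(15.0, 3.0)
        weight = max(weight, 5.0)              # discard non-physical weights
        moments.append(weight * span_m / 4.0)
    return moments

def block_maxima(moments, block_size):
    """Maxima of consecutive blocks (e.g., one block per simulated day of traffic)."""
    return [max(moments[i:i + block_size])
            for i in range(0, len(moments) - block_size + 1, block_size)]
```

The simulated moments can feed a fatigue assessment after conversion to stress ranges, while the block maxima are the raw material for the extreme-value extrapolation discussed next.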

Therefore, the long-term load spectrum must be extrapolated from a short-term one. Given the variety of load types and characteristics, selecting a proper extrapolation method is of great significance; a review of extrapolation methods has been published [169]. Extreme load effects can be predicted using the peaks-over-threshold (POT) approach of extreme value theory [17,156,170].
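A minimal sketch of POT extrapolation, assuming a generalized Pareto distribution (GPD) for the exceedances over a chosen threshold and, for simplicity, fitting it by the method of moments (maximum likelihood is the more common choice in practice). The function names, the synthetic exponential data standing in for measured load effects, and the threshold value are hypothetical. The return level x solves ζ[1 + ξ(x − u)/σ]^(−1/ξ) = 1/T, where u is the threshold, ζ the probability of exceeding it, and T the return period counted in observations.

```python
import math
import random
import statistics

def fit_gpd_moments(exceedances: list[float]) -> tuple[float, float]:
    """Method-of-moments estimate (shape xi, scale sigma) of a generalized Pareto distribution.

    Uses mean = sigma / (1 - xi) and variance = sigma**2 / ((1 - xi)**2 * (1 - 2*xi)),
    so it is only meaningful when xi < 0.5.
    """
    mean = statistics.fmean(exceedances)
    ratio = mean * mean / statistics.variance(exceedances)  # equals 1 - 2*xi
    xi = 0.5 * (1.0 - ratio)
    sigma = 0.5 * mean * (ratio + 1.0)  # equals mean * (1 - xi)
    return xi, sigma

def pot_return_level(data: list[float], threshold: float, return_period: float) -> float:
    """Level exceeded on average once per `return_period` observations (POT/GPD model)."""
    exceedances = [x - threshold for x in data if x > threshold]
    if len(exceedances) < 2:
        raise ValueError("need at least two exceedances above the threshold")
    xi, sigma = fit_gpd_moments(exceedances)
    zeta = len(exceedances) / len(data)  # empirical probability of exceeding the threshold
    if abs(xi) < 1e-9:  # exponential limit of the GPD as xi -> 0
        return threshold + sigma * math.log(return_period * zeta)
    return threshold + sigma / xi * ((return_period * zeta) ** xi - 1.0)

if __name__ == "__main__":
    rng = random.Random(42)
    # Synthetic stand-in for measured daily maximum load effects (hypothetical data).
    daily_maxima = [rng.expovariate(1.0) for _ in range(20000)]
    print(pot_return_level(daily_maxima, threshold=1.0, return_period=100))
```

Threshold selection is the main judgment call in POT analysis; in practice it is usually checked with mean residual life plots or parameter stability plots.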

Following traffic load modeling, fatigue life prediction must be considered. The stress–life method relates the stress range to the number of cycles at which visible cracking occurs [171,172]. The Basquin function is widely used in fatigue analysis [173,174] and can be expressed mathematically as [175]:

$$N\,\Delta\sigma^{m} = A \qquad (1)$$

or, equivalently,

$$\log N = \log A - m\,\log \Delta\sigma \qquad (2)$$

where N is the number of cycles to failure at stress range Δσ, and m and A are positive empirical material constants. In Equation (2), −m is the slope of the S–N curve in log–log coordinates and log A is its intercept on the log N axis.
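The Basquin relation, written here as N·Δσ^m = A (equivalently log N = log A − m log Δσ), can be evaluated, and its constants recovered from test data by a least-squares fit in log–log space, with a short Python sketch. The constants m = 3 and A = 2 × 10^12 MPa^3 used in the example are hypothetical illustration values (they place 2 × 10^6 cycles at 100 MPa), and the function names are assumptions.

```python
import math

def cycles_to_failure(delta_sigma: float, m: float, A: float) -> float:
    """Cycles to failure from the Basquin-type S-N curve N * delta_sigma**m = A."""
    if delta_sigma <= 0:
        raise ValueError("stress range must be positive")
    return A / delta_sigma ** m

def fit_basquin(stress_ranges: list[float], cycles: list[float]) -> tuple[float, float]:
    """Least-squares fit of log10 N = log10 A - m * log10(delta_sigma); returns (m, A)."""
    xs = [math.log10(s) for s in stress_ranges]
    ys = [math.log10(n) for n in cycles]
    x_bar = sum(xs) / len(xs)
    y_bar = sum(ys) / len(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx               # equals -m on the log-log S-N line
    log_a = y_bar - slope * x_bar   # intercept on the log N axis
    return -slope, 10 ** log_a

if __name__ == "__main__":
    # Hypothetical constants: m = 3 and A = 2e12 (MPa^3), i.e. 2e6 cycles at 100 MPa.
    for ds in (50.0, 100.0, 200.0):
        print(f"{ds:5.0f} MPa -> {cycles_to_failure(ds, 3.0, 2e12):.3g} cycles")
```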

The rainflow cycle counting method [176,177] can be used to obtain the statistics of stress cycles. In engineering practice, Miner's linear damage accumulation hypothesis is the most common way to predict fatigue life. According to this rule, fatigue failure occurs when the fatigue resistance is fully consumed, that is, when the accumulated damage D reaches unity. The fatigue failure of a component can be expressed as [19,178,179]:

$$D = \sum_{i} \frac{n_i}{N_i} = 1 \qquad (3)$$

where n_i is the number of applied cycles at stress range Δσ_i and N_i is the corresponding number of cycles to failure at Δσ_i.
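Rainflow counting and Miner's rule combine naturally: the counted cycles supply the n_i, and an S–N curve supplies the N_i. The Python sketch below implements the three-point rainflow procedure described in ASTM E1049-85 together with a linear damage sum, assuming a Basquin-type curve N_i = A/Δσ_i^m. The stress history and the constants m and A are hypothetical, and the function names are illustrative.

```python
def turning_points(history: list[float]) -> list[float]:
    """Reduce a stress history to its alternating peaks and valleys."""
    points: list[float] = []
    for x in history:
        if points and x == points[-1]:
            continue  # drop repeated values
        if len(points) >= 2 and (points[-1] - points[-2]) * (x - points[-1]) > 0:
            points[-1] = x  # still rising (or falling): extend the current excursion
        else:
            points.append(x)
    return points

def rainflow(history: list[float]) -> list[tuple[float, float]]:
    """Three-point rainflow counting after ASTM E1049-85.

    Returns (stress_range, count) pairs, where count is 1.0 for a full cycle
    and 0.5 for a half cycle.
    """
    counted: list[tuple[float, float]] = []
    stack: list[float] = []
    for point in turning_points(history):
        stack.append(point)
        while len(stack) >= 3:
            x_range = abs(stack[-1] - stack[-2])  # most recent range X
            y_range = abs(stack[-2] - stack[-3])  # previous range Y
            if x_range < y_range:
                break
            if len(stack) == 3:
                # Y contains the starting point: half cycle, drop the starting point.
                counted.append((y_range, 0.5))
                stack.pop(0)
            else:
                # Full cycle: discard the peak and valley that form Y, keep the last point.
                counted.append((y_range, 1.0))
                del stack[-3:-1]
    # Ranges left on the stack are counted as half cycles.
    counted.extend((abs(b - a), 0.5) for a, b in zip(stack, stack[1:]))
    return counted

def miner_damage(cycles: list[tuple[float, float]], m: float, A: float) -> float:
    """Palmgren-Miner damage D = sum(n_i / N_i), with N_i = A / delta_sigma_i**m."""
    return sum(n * ds ** m / A for ds, n in cycles if ds > 0)

if __name__ == "__main__":
    # Hypothetical stress history (MPa) for one repeated loading block.
    history = [0, 60, -10, 80, 20, 100, -20, 40, 0]
    cycles = rainflow(history)
    damage = miner_damage(cycles, m=3.0, A=2e12)  # hypothetical S-N constants
    print(cycles)
    print(f"damage per block: {damage:.3e}; blocks to failure (D = 1): {1 / damage:.3e}")
```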

4.2. Anodic Dissolution and Hydrogen Embrittlement Corrosion Fatigue Coupled Model

In addition to traffic load-induced fatigue, corrosion fatigue of RC bridges has been regarded as an essential failure mode [180]. The interaction between fatigue and corrosion can be seen as a coupled effect in which fatigue and corrosion jointly degrade the performance of bridge RC structures. In the corrosion-fatigue coupled model, hydrogen embrittlement [181] and anodic dissolution [182] are the two main mechanisms that need to be taken into consideration. These mechanisms lead to reduced steel strength, pitting corrosion, and weakening of the bond between concrete and steel bars [183]. Severe corrosion in cracked regions of bridge concrete can accelerate the corrosion rate and reduce the load-carrying capacity [184,185].

The life of a corrosion pit is predicted from experimental crack growth data or by linear-elastic fracture mechanics, with the pit treated as a tunneling crack [186,187]. Early bridge fatigue failures are mainly found on corroded surfaces and take the form of shallow pits; their size and density are estimated from statistical or empirical corrosion pit growth rates or from an equivalent crack size [188,189,190]. Ma [20] developed a coupled corrosion-fatigue model that considered severe cracking of the concrete cover, traffic frequency, and seasonal variation in environmental aggressiveness. Detailed crack and corrosion pit growth models were provided, and the entire procedure comprised three stages: (1) corrosion initiation, (2) competition between fatigue crack growth and corrosion pit growth, and (3) determination of structural failure.
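The competition stage in such models can be sketched by comparing growth rates, in the spirit of the widely used pit-to-crack transition criterion attributed to Kondo: a pit becomes a fatigue crack once the Paris-law crack growth rate exceeds the pit growth rate and the stress intensity range exceeds its threshold. This is not the model of Ma [20]; the power-law pit growth a(t) = C_p·t^β, the Paris-law constants, the geometry factor Y, the loading frequency, and all numerical values are assumptions for illustration, and the function name is hypothetical. Because both conditions become true monotonically in time under these assumptions, the transition time has a closed form.

```python
import math

def pit_to_crack_transition(C_p: float, beta: float, C: float, n: float,
                            delta_sigma: float, Y: float, cycles_per_year: float,
                            dK_th: float = 0.0) -> tuple[float, float]:
    """Return (time in years, depth in m) at which a growing pit turns into a crack.

    Assumptions (all hypothetical): pit depth a(t) = C_p * t**beta with 0 < beta < 1;
    crack growth da/dN = C * dK**n with dK = Y * delta_sigma * sqrt(pi * a) in MPa*sqrt(m).
    """
    # Crack growth per year at depth a(t) reduces to K0 * t**(beta * n / 2).
    K0 = cycles_per_year * C * (Y * delta_sigma) ** n * (math.pi * C_p) ** (n / 2)
    # Pit growth rate is beta * C_p * t**(beta - 1); equate the two rates and solve for t.
    exponent = beta * n / 2 - beta + 1
    t_rate = (beta * C_p / K0) ** (1 / exponent)
    # Depth at which dK reaches the threshold, converted to time via a(t).
    a_th = (dK_th / (Y * delta_sigma)) ** 2 / math.pi
    t_th = (a_th / C_p) ** (1 / beta)
    # Both conditions must hold; each becomes true at a single time and stays true.
    t = max(t_rate, t_th)
    return t, C_p * t ** beta

if __name__ == "__main__":
    # Hypothetical values: 0.1 mm pit depth after one year, cube-root pit growth,
    # Paris constants C = 1e-11 and n = 3, 50 MPa stress range, 1e6 cycles per year.
    for threshold in (0.0, 1.0):
        t, a = pit_to_crack_transition(1e-4, 1 / 3, 1e-11, 3.0, 50.0, 0.7, 1e6, threshold)
        print(f"dK_th = {threshold}: transition after {t:.1f} years at depth {a * 1000:.3f} mm")
```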

4.3. Climate Change and Bridge Life Prediction Model

Climate change is another important factor that must not be ignored when calculating the service life of a bridge. One relevant risk is an increased rate of deterioration and degradation of the concrete materials in a bridge [191]. For instance, a recent study [192] quantitatively assessed the risk of corrosion and damage to concrete bridge structures in the cities of Darwin and Sydney, Australia, under future climatic conditions. Wind speeds have been simulated under climate change using global climate models driven by emission scenarios [193]. In addition, 31 climate change risks were grouped into seven main categories, and their interconnectedness was discussed and reviewed [194].

5. Considerations

This review aims to present a concise but thorough account of the key scientific advances in the recent development of UAS applications for bridge damage detection. In addition to the technical knowledge above, several considerations should be taken into account when using this emerging technology.

5.1. Regulation

As both UAVs and UAS are emerging technologies, applicable laws and regulatory bodies are still lacking in many areas. Except for Japan, China, Rwanda, and Nigeria, countries classify UAVs or UAS by weight. The regulations follow a basic risk-based concept: flight conditions are strictly limited according to the associated risk, which is indicated by weight. The relevant risks include UAV malfunction and unintended damage to people or property [195]. In essence, regulations and laws seek to minimize risks and perceived harms, mainly through operational limitations. Most nations define horizontal distances from people and property, or so-called no-fly zones, which need to be taken into account. UAS are prohibited from flying in the vicinity of people and crowds. Many countries specify minimum lateral distances from people, usually ranging from 30 m to 150 m (e.g., Japan, South Africa, Canada, Italy, and the UK). Hence, UAS bridge inspection may not be permitted in such situations. Another general limitation is the maximum flying height, which in many countries ranges from 90 m to 152 m in order to separate manned aircraft from UAVs. Although routine inspections are low-altitude operations aimed at high-resolution imaging and are unlikely to reach 90 m, height limits can strongly hamper the creation of panoramas and the inspection of cable structures at the tops of suspension bridges, which then require long focal lengths. The choice of camera focal length is therefore slightly affected by the maximum height permitted in each nation: shorter focal lengths can be selected where higher altitudes are allowed. In addition, inspecting bridges that carry large traffic volumes may require lane closures, which is also why the majority of the literature targets abandoned bridges.

For a more detailed treatment, the reader is referred to the UAV regulation literature [196,197,198,199].

5.2. Economy and Time

Traditional damage detection was undertaken by visual inspection, with UBITs employed in regions that are difficult for inspectors to access [200]. UAS inspection ($20,000) was shown to be 66% cheaper than traditional inspection involving four UBITs and a 25 m man lift ($59,000). A 2017 cost analysis of a large-scale (2400 m long) bridge also showed that UAS inspection was cheaper than traditional inspection methods [103]. A further example (ITD Bridge Key 21105) reported a cost of $391 per hour for UBIT inspection and $200 per hour for UAS inspection, so the hourly cost of UAS inspection was roughly half that of UBIT inspection. According to this research, UAS inspection can reduce both the time and the budget required for bridge inspections while providing high-quality inspection information comparable to that of traditional inspection. However, these figures depend strongly on the equipment used: specialized sensors and cameras can cost far more than the UAS platform itself. For instance, a popular consumer UAV, the DJI Mavic 3 Pro, equipped with a high-resolution RGB camera, costs approximately $2000, whereas adding a Zenmuse X7 with a camera lens built specifically for damage detection could cost nearly $5000 [201,202,203]. As the technology develops and commercialization grows, the cost of such complete systems is expected to decrease.
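The percentages quoted above follow directly from the reported costs:

```latex
\frac{\$59{,}000 - \$20{,}000}{\$59{,}000} \approx 0.661 \;(\approx 66\%\ \text{saving}),
\qquad
\frac{\$200/\text{h}}{\$391/\text{h}} \approx 0.51
```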

5.3. Flight Control

General-purpose navigation is commonly performed by autopilot computers and external sensors using GPS, micro-electro-mechanical systems (MEMS), inertial navigation sensors (INS), altitude sensors (AS), IMUs, accelerometers, and gyroscopes [204]. Owing to the constraints of bridge environments, self-controlled UAV navigation remains a major challenge in bridge inspection [205]. GPS signals are weak or blocked beneath a bridge, which can lead to catastrophic failure of the UAS and its external sensors and also poses a safety risk to the pilot, the public, and the inspector [206]. Weather conditions are another important factor in UAS flight control.

Before a flight path is planned, the distance between the UAV and the inspected surface and the minimum number of images required should be determined. The distance can be determined from the width of the narrowest crack to be detected and the required size of the imaged scene. The minimum required number of images can be computed from the central angle between two adjacent UAS positions [33]. Paine (2003) recommended 30 ± 15% sidelap and 60 ± 5% endlap [207] to optimize aerial photography and image interpretation.
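The planning quantities mentioned above can be estimated with a short sketch based on the pinhole camera relation GSD = d·p/f (ground sampling distance from standoff distance d, pixel pitch p, and focal length f). The requirement that the narrowest crack span two pixels, the simple orbit and strip image-count formulas, and all camera parameters in the example are assumptions for illustration, not the procedure of [33]; the function names are hypothetical.

```python
import math

def max_standoff_m(crack_width_mm: float, focal_length_mm: float,
                   pixel_pitch_um: float, pixels_per_crack: float = 2.0) -> float:
    """Largest camera-to-surface distance (m) at which the narrowest crack still spans
    `pixels_per_crack` pixels, using the pinhole relation GSD = distance * pitch / focal."""
    target_gsd_mm = crack_width_mm / pixels_per_crack
    pixel_pitch_mm = pixel_pitch_um / 1000.0
    return target_gsd_mm * focal_length_mm / pixel_pitch_mm / 1000.0

def footprint_m(distance_m: float, focal_length_mm: float, sensor_size_mm: float) -> float:
    """Length of the imaged scene on the surface along one sensor dimension (m)."""
    return distance_m * sensor_size_mm / focal_length_mm

def images_for_orbit(central_angle_deg: float) -> int:
    """Images needed to circle a pier when adjacent positions are central_angle_deg apart."""
    return math.ceil(360.0 / central_angle_deg)

def images_along_strip(length_m: float, footprint_len_m: float, overlap: float) -> int:
    """Images needed to cover a straight strip with the given fractional overlap (0 to 1)."""
    step = footprint_len_m * (1.0 - overlap)  # advance between consecutive exposures
    return math.ceil(max(length_m - footprint_len_m, 0.0) / step) + 1

if __name__ == "__main__":
    # Hypothetical camera: 8.8 mm lens, 2.4 um pixels, 13.2 mm x 8.8 mm sensor.
    d = max_standoff_m(crack_width_mm=0.2, focal_length_mm=8.8, pixel_pitch_um=2.4)
    along = footprint_m(d, 8.8, 13.2)
    across = footprint_m(d, 8.8, 8.8)
    print(f"standoff <= {d:.2f} m, footprint {along:.2f} m x {across:.2f} m")
    print("images per 30 m strip (60% endlap):", images_along_strip(30.0, along, 0.6))
    print("images per pier orbit (15 deg spacing):", images_for_orbit(15.0))
```

With the overlaps recommended by Paine, the `overlap` argument would be about 0.6 along a flight line (endlap) and about 0.3 between adjacent lines (sidelap).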

6. Discussion

This section discusses the advantages, limitations, and future needs of UAS applications in bridge damage detection. The advantages and limitations provide a reference for users considering this emerging technology, and the future needs include self-navigated control, path planning, automatic damage detection, and precise deterioration models.

6.1. Advantages and Limitations

Although UAS damage detection offers powerful benefits and potential, its application also has limitations. A prominent advantage of UAS damage detection technology is that accurate 3D models and maps for damage detection can be created from a single sensor or multiple sensors. However, photogrammetric and point cloud data processing is computationally intensive and time consuming when high-resolution sensors are used. Another limitation is UAV flight time (often approximately 30 min per flight), so spare batteries are necessary for large-scale missions, and carrying additional sensors consumes more battery power. Nevertheless, UAS inspection is faster than traditional methods, although high-resolution equipment requires a large upfront cost. Careful evaluation of the economic cost is therefore essential when applying UAS damage detection technology.

6.2. Future Needs

6.2.1. Self-Navigated Control and Path Planning

Autonomous control commonly relies on appropriate airborne sensors combined with autonomous control algorithms. However, the quality of flight path planning still depends directly on the professional skill of the pilot, which limits UAS autonomy. Self-navigated UAS are essential for achieving autonomous path planning and efficient bridge inspection [208]. Breakthroughs in accurate sensors and integrated navigation algorithms, flight path planning, inspector safety, and data collection reliability are the crux of self-navigated UAS damage detection.

6.2.2. Automatic Damage Detection

Recently, automatic damage detection technologies and algorithms for images, point cloud data, and 3D models have been developed to provide tools for crack detection and quantification, but more robust sensors and data processing tools are urgently needed to complement the existing inspection framework. Feature detectors and descriptors such as SIFT and SURF are used to generate 3D reconstructed bridge models in which surface defects can be detected. However, identifying spalls, cracks, and surface degradation from 3D reconstructions still requires highly skilled technicians and is time consuming when high-resolution sensors are used. Deep learning is a very active field of academic research and has been applied to bridge damage detection. Deep neural networks are an emerging methodology that captures features sensitive to variations in structural condition and have outperformed traditional algorithms in classification problems. Deep learning may therefore allow damaged areas in bridge structures to be located quickly, although its application to different types of bridges still requires investigation. In the future, computer science and bridge damage detection technology are likely to become ever more closely connected.

6.2.3. Deterioration Model

Deterioration models are useful tools for service life prediction and may consider traffic loads, climate change, anodic dissolution, and hydrogen embrittlement. However, few studies incorporate these factors into fully integrated models. Field data measured by sensors can provide accurate deterioration information for fatigue condition assessment. Once AI-based models mature beyond their current nascent stage, they may overcome some limitations of conventional models. For example, artificial neural network (ANN) models based on multilayer perceptrons (MLPs) can generate missing condition state data to fill the gaps created by irregular inspections.

7. Conclusions

This paper has outlined the body of work on UAS damage detection technology for service life prediction, with the aim of providing researchers with an overview of the current status. The literature review covers current developments, recent advances, and future capabilities. Owing to an influx of UAS technologies, improved sensors, and efficient algorithms, the field of damage detection is developing rapidly. All infrastructure, including but not limited to bridges, is subject to unpredictable risks, especially to human safety. Sensors carried by UAS exploit these advances in damage detection, allowing inspectors to gather complete damage information and to perform reliability analyses based on the studies above. To achieve applicable systems, fundamental insights and technological breakthroughs are needed in the following areas:

- (i) robust autonomy: how to produce verifiable and scrutiny-proof algorithms that lead to the desired outcomes in real-time damage detection;

- (ii) UAS flight strategy: enabling highly efficient extraction of damage information and flight path manipulation, depending on breakthroughs in UAS technology;

- (iii) reliable models: enabling output feedback and evaluation of structures and their stability based on field measurement data;

- (iv) system integration: insights into hardware and software co-design, and the combination of bottom-up and top-down strategies, leveraged for enhanced functionality.

Many further studies are still required. Although this review focuses mainly on bridges, many of the damage detection technologies can also be applied to other infrastructure. Future research should also clarify the role of human operators in UAS damage detection: they may define flight strategies, provide online corrections, or maintain and support the UAS. Systematic scientific studies of UAS damage detection are expected to yield new understanding critical to both UAS platforms and sensor technology, while simultaneously addressing system integration, data processing, and service life modeling.

Author Contributions

Conceptualization, H.L.; formal analysis, H.L.; writing—original draft, H.L.; supervision, Y.C. and H.Z.; visualization, J.L. and Z.Z.; resources, J.L.; funding acquisition, Y.C.; validation, Y.C. and H.Z.; project administration, H.Z.; writing—review and editing, H.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Jilin Province Key R&D Plan Project under Grant: 20200401113GX.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Li, G.; Zhong, Y.; Chen, C.; Jin, T.; Liu, Y. Reliability allocation method based on linguistic neutrosophic numbers weight Muirhead mean operator. Expert Syst. Appl. 2022, 193, 116504.

- Lahti, K.E.; Hänninen, H.; Niemi, E. Nominal stress range fatigue of stainless steel fillet welds—The effect of weld size. J. Constr. Steel Res. 2000, 54, 161–172.

- Enright, M.P.; Frangopol, D.M. Service-Life Prediction of Deteriorating Concrete Bridges. J. Struct. Eng. 1998, 124, 309–317.

- Faber, M.H.; Kroon, I.B.; Kragh, E.; Bayly, D.; Decosemaeker, P. Risk Assessment of Decommissioning Options Using Bayesian Networks. J. Offshore Mech. Arct. Eng. 2002, 124, 231–238.

- Ma, Y.; Lu, B.; Guo, Z.; Wang, L.; Chen, H.; Zhang, J. Limit Equilibrium Method-based Shear Strength Prediction for Corroded Reinforced Concrete Beam with Inclined Bars. Materials 2019, 12, 1014.

- Diez, A.; Khoa, N.L.D.; Makki Alamdari, M.; Wang, Y.; Chen, F.; Runcie, P. A clustering approach for structural health monitoring on bridges. J. Civ. Struct. Health Monit. 2016, 6, 429–445.

- Yun, C.-B.; Min, J. Smart sensing, monitoring, and damage detection for civil infrastructures. KSCE J. Civ. Eng. 2010, 15, 1–14.

- Housner, G.W.; Bergman, L.A.; Caughey, T.K.; Chassiakos, A.G.; Claus, R.O.; Masri, S.F.; Skelton, R.E.; Soong, T.T.; Spencer, B.F.; Yao, J.T.P. Structural Control: Past, Present, and Future. J. Eng. Mech. 1997, 123, 897–971.

- Hall, S.R. The effective management and use of structural health data. In Proceedings of the 2nd International Workshop on Structural Health Monitoring, Lancaster, PA, USA, 8–10 September 1999.

- Kessler, S.S.; Spearing, S.M.; Soutis, C. Damage detection in composite materials using Lamb wave methods. Smart Mater. Struct. 2002, 11, 269–278.

- Raghavan, A.; Cesnik, C.E.S. Review of Guided-wave Structural Health Monitoring. Shock Vib. Dig. 2007, 39, 91–114.

- Brownjohn, J.M.W.; De Stefano, A.; Xu, Y.-L.; Wenzel, H.; Aktan, A.E. Vibration-based monitoring of civil infrastructure: Challenges and successes. J. Civ. Struct. Health Monit. 2011, 1, 79–95.

- Ko, J.M.; Ni, Y.-Q. Technology developments in structural health monitoring of large-scale bridges. Eng. Struct. 2005, 27, 1715–1725.

- Nagarajaiah, S.; Erazo, K. Structural monitoring and identification of civil infrastructure in the United States. Struct. Monit. Maint. 2016, 3, 51–69.

- Jongerius, A. The Use of Unmanned Aerial Vehicles to Inspect Bridges for Rijkswaterstaat. Bachelor’s Thesis, Faculty of Engineering Technology, University of Twente, Enschede, The Netherlands, 2018.

- Mader, D.; Blaskow, R.; Westfeld, P.; Weller, C. Potential of uav-based laser scanner and multispectral camera data in building inspection. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B1, 1135–1142.

- Nesterova, M.; Schmidt, F.; Soize, C. Fatigue analysis of a bridge deck using the peaks-over-threshold approach with application to the Millau viaduct. SN Appl. Sci. 2020, 2, 1–12.

- Ma, Y.; Guo, Z.; Wang, L.; Zhang, J. Probabilistic Life Prediction for Reinforced Concrete Structures Subjected to Seasonal Corrosion-Fatigue Damage. J. Struct. Eng. 2020, 146, 04020117.

- Miner, M.A. Cumulative Damage in Fatigue. J. Appl. Mech. 1945, 12, A159–A164.

- Chryssanthopoulos, M.; Righiniotis, T. Fatigue reliability of welded steel structures. J. Constr. Steel Res. 2006, 62, 1199–1209.

- Xu, J.-H.; Zhou, G.-D.; Zhu, T.-Y. Fatigue Reliability Assessment for Orthotropic Steel Bridge Decks Considering Load Sequence Effects. Front. Mater. 2021, 8, 678855.

- Kwon, K.; Frangopol, D.M. Bridge fatigue reliability assessment using probability density functions of equivalent stress range based on field monitoring data. Int. J. Fatigue 2010, 32, 1221–1232.

- Ma, H.; Wang, J.; Li, G.; Qiu, J. Fatigue redesign of failed sub frame using stress measuring, FEA and British Standard. Eng. Fail. Anal. 2019, 97, 103–114.

- Adel, M.; Yokoyama, H.; Tatsuta, H.; Nomura, T.; Ando, Y.; Nakamura, T.; Masuya, H.; Nagai, K. Early damage detection of fatigue failure for RC deck slabs under wheel load moving test using image analysis with artificial intelligence. Eng. Struct. 2021, 246, 113050.

- Metni, N.; Hamel, T. A UAV for bridge inspection: Visual servoing control law with orientation limits. Autom. Constr. 2007, 17, 3–10.

- Dorafshan, S.; Maguire, M.; Qi, X. Automatic Surface Crack Detection in Concrete Structures Using OTSU Thresholding and Morphological Operations; UTC Report 01-2016; Department of Civil and Environmental Engineering, Utah State University: Logan, UT, USA, 2016.

- Abdel-Qader, I.; Abudayyeh, O.; Kelly, M.E. Analysis of Edge-Detection Techniques for Crack Identification in Bridges. J. Comput. Civ. Eng. 2003, 17, 255–263.

- Liu, X.; Ai, Y.; Scherer, S. Robust image-based crack detection in concrete structure using multi-scale enhancement and visual features. IEEE Int. Conf. Image Process. 2017, 17, 2304–2308.

- Yamaguchi, T.; Hashimoto, S. Fast crack detection method for large-size concrete surface images using percolation-based image processing. Mach. Vis. Appl. 2009, 21, 797–809.

- Oh, J.-K.; Jang, G.; Oh, S.; Lee, J.H.; Yi, B.-J.; Moon, Y.S.; Lee, J.S.; Choi, Y. Bridge inspection robot system with machine vision. Autom. Constr. 2009, 18, 929–941.

- Cabaleiro, M.; Lindenbergh, R.; Gard, W.; Arias, P.; van de Kuilen, J.-W. Algorithm for automatic detection and analysis of cracks in timber beams from LiDAR data. Constr. Build. Mater. 2017, 130, 41–53.

- Lim, R.S.; La, H.M.; Sheng, W. A Robotic Crack Inspection and Mapping System for Bridge Deck Maintenance. IEEE Trans. Autom. Sci. Eng. 2014, 11, 367–378.

- Kim, J.W.; Kim, S.B.; Park, J.C.; Nam, J.W. Development of crack detection system with unmanned aerial vehicles and digital image processing. In Proceedings of the Advances in Structural Engineering and Mechanics (ASEM15), Incheon, Korea, 25–29 August 2015.

- Talab, A.M.A.; Huang, Z.; Xi, F.; HaiMing, L. Detection crack in image using Otsu method and multiple filtering in image processing techniques. Optik 2016, 127, 1030–1033.

- Dorafshan, S. Comparing Automated Image-Based Crack Detection Techniques in Spatial and Frequency Domains. In Proceedings of the 26th ASNT Research Symposium, Jacksonville, FL, USA, 13–16 March 2017.

- Dorafshan, S.; Maguire, M. Autonomous Detection of Concrete Cracks on Bridge Decks and Fatigue Cracks on Steel Members. In Proceedings of the ASNT Digital Imaging 20, Mashantucket, CT, USA, 26–28 June 2017.

- Choudhary, G.K.; Dey, S. Crack detection in concrete surfaces using image processing, fuzzy logic, and neural networks. In Proceedings of the 2012 IEEE Fifth International Conference on Advanced Computational Intelligence (ICACI), Nanjing, China, 18–20 October 2012; pp. 404–411.

- Cha, Y.-J.; Choi, W.; Büyüköztürk, O. Deep Learning-Based Crack Damage Detection Using Convolutional Neural Networks. Comput. Civ. Infrastruct. Eng. 2017, 32, 361–378.

- Dorafshan, S.; Thomas, R.; Coopmans, C.; Maguire, M. Deep Learning Neural Networks for sUAS-Assisted Structural Inspections: Feasibility and Application. In Proceedings of the 2018 International Conference on Unmanned Aircraft Systems (ICUAS), Dallas, TX, USA, 12–15 June 2018; pp. 874–882.

- Cha, Y.-J.; Choi, W.; Suh, G.; Mahmoudkhani, S.; Büyüköztürk, O. Autonomous Structural Visual Inspection Using Region-Based Deep Learning for Detecting Multiple Damage Types. Comput. Civ. Infrastruct. Eng. 2017, 33, 731–747.

- Dorafshan, S.; Thomas, R.J.; Maguire, M. Comparison of deep convolutional neural networks and edge detectors for image-based crack detection in concrete. Constr. Build. Mater. 2018, 186, 1031–1045.

- Liu, W.; Chen, S.; Hauser, E. Lidar-Based Bridge Structure Defect Detection. Exp. Tech. 2010, 35, 27–34.

- Liu, W.; Chen, S.-E. Reliability analysis of bridge evaluations based on 3D Light Detection and Ranging data. Struct. Control Health Monit. 2013, 20, 1397–1409.

- Chen, S.; Laefer, D.F.; Mangina, E.; Zolanvari, S.M.I.; Byrne, J. UAV Bridge Inspection through Evaluated 3D Reconstructions. J. Bridge Eng. 2019, 24, 1343.

- Wang, L.; Chu, C.-H. 3D building reconstruction from LiDAR data. In Proceedings of the 2009 IEEE International Conference on Systems, Man and Cybernetics, San Antonio, TX, USA, 11–14 October 2009; pp. 3054–3059.

- Gu, Y.; Jin, X.; Xiang, R.; Wang, Q.; Wang, C.; Yang, S. UAV-based integrated multispectral-LiDAR imaging system and data processing. Sci. China Technol. Sci. 2020, 63, 1293–1301.

- Jensen, R.R.; Hardin, A.J.; Hardin, P.J.; Jensen, J.R. A New Method to Correct Pushbroom Hyperspectral Data Using Linear Features and Ground Control Points. GIScience Remote Sens. 2011, 48, 416–431.

- Xiong, N.; Svensson, P. Multi-sensor management for information fusion: Issues and approaches. Inf. Fusion 2002, 3, 163–186.

- Haghighat, M.B.A.; Aghagolzadeh, A.; Seyedarabi, H. A non-reference image fusion metric based on mutual information of image features. Comput. Electr. Eng. 2011, 37, 744–756.

- Bircher, A.; Alexis, K.; Burri, M.; Oettershagen, P.; Omari, S.; Mantel, T.; Siegwart, R. Structural inspection path planning via iterative viewpoint resampling with application to aerial robotics. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; Volume 2015, pp. 6423–6430.

- Chan, B.; Guan, H.; Jo, J.; Blumenstein, M. Towards UAV-based bridge inspection systems: A review and an application perspective. Struct. Monit. Maint. 2015, 2, 283–300.

- Byrne, J.; Laefer, D.; O’Keeffe, E. Maximizing feature detection in aerial unmanned aerial vehicle datasets. J. Appl. Remote Sens. 2017, 11, 025015.

- Cheng, E. Aerial Photography and Videography Using Drones; Peachpit Press: San Francisco, CA, USA, 2016.

- Emery, W.J.; Schmalzel, J. Editorial for “Remote Sensing from Unmanned Aerial Vehicles”. Remote Sens. 2018, 10, 1877.

- Hardin, P.J.; Jensen, R.R. Introduction—Small-Scale Unmanned Aerial Systems for Environmental Remote Sensing. GIScience Remote Sens. 2011, 48, 1–3.

- Milas, A.S.; Sousa, J.J.; Warner, T.A.; Teodoro, A.C.; Peres, E.; Gonçalves, J.A.; Delgado-García, J.; Bento, R.; Phinn, S.; Woodget, A. Unmanned Aerial Systems (UAS) for environmental applications special issue preface. Int. J. Remote Sens. 2018, 39, 4845–4851.

- Dorafshan, S.; Maguire, M.; Hoffer, N.; Coopmans, C. Fatigue Crack Detection Using Unmanned Aerial Systems in Under-Bridge Inspection; Idaho Transportation Department: Boise, ID, USA, 2018.

- Gucunski, N.; Boone, S.D.; Zobel, R.; Ghasemi, H.; Parvardeh, H.; Kee, S.-H. Nondestructive evaluation inspection of the Arlington Memorial Bridge using a robotic assisted bridge inspection tool (RABIT). In Proceedings of the Nondestructive Characterization for Composite Materials, Aerospace Engineering, Civil Infrastructure, and Homeland Security 2014, San Diego, CA, USA, 9–13 March 2014; Wu, H.F., Yu, T.-Y., Gyekenyesi, A.L., Shull, P.J., Eds.; SPIE: Bellingham, WA, USA, 2014; Volume 9063, pp. 148–160.

- Gucunski, N.; Kee, S.-H.; La, H.; Basily, B.; Maher, A.; Ghasemi, H. Implementation of a Fully Autonomous Platform for Assessment of Concrete Bridge Decks RABIT. In Proceedings of the Structures Congress 2015, Portland, OR, USA, 23–25 April 2015. [Google Scholar] [CrossRef] [Green Version]

- Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR System with Application to Forest Inventory. Remote Sens. 2012, 4, 1519–1543. [Google Scholar] [CrossRef]

- Dorafshan, S.; Thomas, R.J.; Maguire, M. Fatigue Crack Detection Using Unmanned Aerial Systems in Fracture Critical Inspection of Steel Bridges. J. Bridg. Eng. 2018, 23, 1291. [Google Scholar] [CrossRef]

- Lei, B.; Ren, Y.; Wang, N.; Huo, L.; Song, G. Design of a new low-cost unmanned aerial vehicle and vision-based concrete crack inspection method. Struct. Health Monit. 2020, 19, 1871–1883. [Google Scholar] [CrossRef]

- Saleem, M.R.; Park, J.-W.; Lee, J.-H.; Jung, H.-J.; Sarwar, M.Z. Instant bridge visual inspection using an unmanned aerial vehicle by image capturing and geo-tagging system and deep convolutional neural network. Struct. Health Monit. 2020, 20, 1760–1777. [Google Scholar] [CrossRef]

- Chen, G.; Liang, Q.; Zhong, W.; Gao, X.; Cui, F. Homography-based measurement of bridge vibration using UAV and DIC method. Measurement 2020, 170, 108683. [Google Scholar] [CrossRef]

- Yan, Y.; Mao, Z.; Wu, J.; Padir, T.; Hajjar, J.F. Towards automated detection and quantification of concrete cracks using integrated images and lidar data from unmanned aerial vehicles. Struct. Control Health Monit. 2021, 28, e2757. [Google Scholar] [CrossRef]

- Liu, Y.; Nie, X.; Fan, J.; Liu, X. Image-based crack assessment of bridge piers using unmanned aerial vehicles and three-dimensional scene reconstruction. Comput. Civ. Infrastruct. Eng. 2019, 35, 511–529. [Google Scholar] [CrossRef]

- Aber, J.S.; Marzolff, I.; Ries, J.B.; Aber, S.E.W. Chapter 8—Unmanned Aerial Systems. In Small-Format Aerial Photography and UAS Imagery, 2nd ed.; Aber, J.S., Marzolff, I., Ries, J.B., Aber, S.E.W., Eds.; Academic Press: Cambridge, MA, USA, 2019; pp. 119–139. [Google Scholar] [CrossRef]

- Gabriely, Y.; Rimon, E. Spiral-STC: An on-line coverage algorithm of grid environments by a mobile robot. In Proceedings of the 2002 IEEE International Conference on Robotics and Automation (Cat. No.02CH37292), Washington, DC, USA, 11–15 May 2002; Volume 1, pp. 954–960. [Google Scholar]

- Zelinsky, A.; Jarvis, R.; Byrne, J. Planning Paths of Complete Coverage of an Unstructured Environment by a Mobile Robot. In Proceedings of the International Conference on Advanced Robotics, Ljubljana, Slovenia, 6–10 December 2021; p. 13. [Google Scholar]

- Luo, C.; Yang, S.; Stacey, D.A.; Jofriet, J. A solution to vicinity problem of obstacles in complete coverage path planning. In Proceedings of the 2002 IEEE International Conference on Robotics and Automation (Cat. No.02CH37292), Washington, DC, USA, 11–15 May 2002; Volume 1, pp. 612–617. [Google Scholar]

- Chvátal, V.; Cook, W.; Dantzig, G.B.; Fulkerson, D.R.; Johnson, S.M. Solution of a Large-Scale Traveling-Salesman Problem. J. Oper. Res. Soc. Am. 2009, 2, 7–28. [Google Scholar] [CrossRef]

- Phung, M.D.; Quach, C.H.; Dinh, T.H.; Ha, Q. Enhanced discrete particle swarm optimization path planning for UAV vision-based surface inspection. Autom. Constr. 2017, 81, 25–33. [Google Scholar] [CrossRef] [Green Version]

- Bolourian, N.; Hammad, A. LiDAR-equipped UAV path planning considering potential locations of defects for bridge inspection. Autom. Constr. 2020, 117, 103250.

- Hover, F.S.; Eustice, R.; Kim, A.; Englot, B.; Johannsson, H.; Kaess, M.; Leonard, J.J. Advanced perception, navigation and planning for autonomous in-water ship hull inspection. Int. J. Robot. Res. 2012, 31, 1445–1464.

- Michel, D.; McIsaac, K. New path planning scheme for complete coverage of mapped areas by single and multiple robots. In Proceedings of the 2012 IEEE International Conference on Mechatronics and Automation, Chengdu, China, 5–8 August 2012; pp. 1233–1240.

- Lee, S.; Kalos, N.; Shin, D.H. Non-destructive testing methods in the U.S. for bridge inspection and maintenance. KSCE J. Civ. Eng. 2014, 18, 1322–1331.

- Kim, I.-H.; Jeon, H.; Baek, S.-C.; Hong, W.-H.; Jung, H.-J. Application of Crack Identification Techniques for an Aging Concrete Bridge Inspection Using an Unmanned Aerial Vehicle. Sensors 2018, 18, 1881.

- Prasanna, P.; Dana, K.J.; Gucunski, N.; Basily, B.B.; La, H.M.; Lim, R.S.; Parvardeh, H. Automated Crack Detection on Concrete Bridges. IEEE Trans. Autom. Sci. Eng. 2014, 13, 591–599.

- Ortiz, A.; Bonnin-Pascual, F.; Garcia-Fidalgo, E.; Company-Corcoles, J.P. Vision-Based Corrosion Detection Assisted by a Micro-Aerial Vehicle in a Vessel Inspection Application. Sensors 2016, 16, 2118.

- Zhu, Z.; German, S.; Brilakis, I. Visual retrieval of concrete crack properties for automated post-earthquake structural safety evaluation. Autom. Constr. 2011, 20, 874–883.

- Catbas, F.N.; Brown, D.L.; Aktan, A.E. Parameter Estimation for Multiple-Input Multiple-Output Modal Analysis of Large Structures. J. Eng. Mech. 2004, 130, 921–930.

- Dan, D.; Dan, Q. Automatic recognition of surface cracks in bridges based on 2D-APES and mobile machine vision. Measurement 2020, 168, 108429.

- Rahman, M.A.; Zayed, T.; Bagchi, A. Deterioration Mapping of RC Bridge Elements Based on Automated Analysis of GPR Images. Remote Sens. 2022, 14, 1131.

- Lin, Y.; Hyyppa, J.; Jaakkola, A. Mini-UAV-Borne LIDAR for Fine-Scale Mapping. IEEE Geosci. Remote Sens. Lett. 2010, 8, 426–430.

- Whitehead, K.; Hugenholtz, C.H.; Myshak, S.; Brown, O.; LeClair, A.; Tamminga, A.; Barchyn, T.E.; Moorman, B.; Eaton, B. Remote sensing of the environment with small unmanned aircraft systems (UASs), part 2: Scientific and commercial applications. J. Unmanned Veh. Syst. 2014, 2, 86–102.

- Tashan, J.; Al-Mahaidi, R. Investigation of the parameters that influence the accuracy of bond defect detection in CFRP bonded specimens using IR thermography. Compos. Struct. 2012, 94, 519–531.

- Omar, M.; Hassan, M.; Saito, K.; Alloo, R. IR self-referencing thermography for detection of in-depth defects. Infrared Phys. Technol. 2005, 46, 283–289.

- Edis, E.; Flores-Colen, I.; de Brito, J. Passive thermographic detection of moisture problems in façades with adhered ceramic cladding. Constr. Build. Mater. 2013, 51, 187–197.

- Vetrivel, A.; Gerke, M.; Kerle, N.; Vosselman, G. Identification of Structurally Damaged Areas in Airborne Oblique Images Using a Visual-Bag-of-Words Approach. Remote Sens. 2016, 8, 231.

- Giordan, D.; Manconi, A.; Remondino, F.; Nex, F. Use of unmanned aerial vehicles in monitoring application and management of natural hazards. Geomatics Nat. Hazards Risk 2016, 8, 1315619.

- Qi, J.; Song, D.; Shang, H.; Wang, N.; Hua, C.; Wu, C.; Qi, X.; Han, J. Search and Rescue Rotary-Wing UAV and Its Application to the Lushan Ms 7.0 Earthquake. J. Field Robot. 2015, 33, 290–321.

- Clark, M.; McCann, D.; Forde, M. Application of infrared thermography to the non-destructive testing of concrete and masonry bridges. NDT E Int. 2003, 36, 265–275.

- Runnemalm, A.; Broberg, P.; Henrikson, P. Ultraviolet excitation for thermography inspection of surface cracks in welded joints. Nondestruct. Test. Evaluation 2014, 29, 332–344.

- Aggelis, D.; Kordatos, E.; Soulioti, D.; Matikas, T. Combined use of thermography and ultrasound for the characterization of subsurface cracks in concrete. Constr. Build. Mater. 2010, 24, 1888–1897.

- Lesniak, J.R.; Bazile, D.J. Forced-diffusion thermography technique and projector design. In Proceedings of the Thermosense XVIII: An International Conference on Thermal Sensing and Imaging Diagnostic Applications, Orlando, FL, USA, 8–12 April 1996; SPIE: Bellingham, WA, USA, 1996; Volume 2766, pp. 210–218.

- Washer, G.A. Developments for the non-destructive evaluation of highway bridges in the USA. NDT E Int. 1998, 31, 245–249.

- Hiasa, S.; Birgul, R.; Matsumoto, M.; Catbas, F.N. Experimental and numerical studies for suitable infrared thermography implementation on concrete bridge decks. Measurement 2018, 121, 144–159.

- Sakagami, T. Remote nondestructive evaluation technique using infrared thermography for fatigue cracks in steel bridges. Fatigue Fract. Eng. Mater. Struct. 2015, 38, 755–779.

- Omar, T.; Nehdi, M.L. Remote sensing of concrete bridge decks using unmanned aerial vehicle infrared thermography. Autom. Constr. 2017, 83, 360–371.

- Wells, J.L.B. Unmanned Aircraft System Bridge Inspection Demonstration Project Phase II; Minnesota Department of Transportation: St. Paul, MN, USA, 2017.

- Escobar-Wolf, R.; Oommen, T.; Brooks, C.N.; Dobson, R.J.; Ahlborn, T.M. Unmanned Aerial Vehicle (UAV)-Based Assessment of Concrete Bridge Deck Delamination Using Thermal and Visible Camera Sensors: A Preliminary Analysis. Res. Nondestruct. Evaluation 2017, 29, 183–198.

- Wallace, A.M.; McCarthy, A.; Nichol, C.J.; Ren, X.; Morak, S.; Martinez-Ramirez, D.; Woodhouse, I.H.; Buller, G.S. Design and Evaluation of Multispectral LiDAR for the Recovery of Arboreal Parameters. IEEE Trans. Geosci. Remote Sens. 2013, 52, 4942–4954.

- Niu, Z.; Xu, Z.; Sun, G.; Huang, W.; Wang, L.; Feng, M.; Li, W.; He, W.; Gao, S. Design of a New Multispectral Waveform LiDAR Instrument to Monitor Vegetation. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1506–1510.

- Feroz, S.; Abu Dabous, S. UAV-Based Remote Sensing Applications for Bridge Condition Assessment. Remote Sens. 2021, 13, 1809.

- Kim, B.; Kim, D.; Cho, S. A Study on Concrete Efflorescence Assessment using Hyperspectral Camera. J. Korean Soc. Saf. 2017, 32, 98–103.

- Hakala, T.; Suomalainen, J.; Kaasalainen, S.; Chen, Y. Full waveform hyperspectral LiDAR for terrestrial laser scanning. Opt. Express 2012, 20, 7119–7127.

- Wang, R.; Kawamura, Y. An Automated Sensing System for Steel Bridge Inspection Using GMR Sensor Array and Magnetic Wheels of Climbing Robot. J. Sensors 2015, 2016, 8121678.

- Li, E.; Kang, Y.; Tang, J.; Wu, J. A New Micro Magnetic Bridge Probe in Magnetic Flux Leakage for Detecting Micro-cracks. J. Nondestruct. Evaluation 2018, 37, 46.

- Jung, J.-Y.; Yoon, H.-J.; Cho, H.-W. Research of Remote Inspection Method for River Bridge using Sonar and visual system. J. Korea Acad. Ind. Coop. Soc. 2017, 18, 330–335.

- Shin, C.; Jang, I.-S.; Kim, K.; Choi, H.-T.; Lee, S.-H. Performance Analysis of Sonar System Applicable to Underwater Construction Sites with High Turbidity. J. Korea Acad. Ind. Coop. Soc. 2013, 14, 4507–4513.

- Sa, I.; Hrabar, S.; Corke, P. Inspection of pole-like structures using a vision-controlled VTOL UAV and shared autonomy. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 4819–4826.

- Pan, Y.; Dong, Y.; Wang, D.; Chen, A.; Ye, Z. Three-Dimensional Reconstruction of Structural Surface Model of Heritage Bridges Using UAV-Based Photogrammetric Point Clouds. Remote Sens. 2019, 11, 1204.

- Lattanzi, D.; Miller, G.R. 3D Scene Reconstruction for Robotic Bridge Inspection. J. Infrastruct. Syst. 2015, 21, 229.

- Kouimtzoglou, T.; Stathopoulou, E.; Agrafiotis, P.; Georgopoulos, A. Image-based 3D reconstruction data as an analysis and documentation tool for architects: The case of Plaka Bridge in Greece. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2017, XLII-2/W3, 391–397.

- Goebbels, S. 3D Reconstruction of Bridges from Airborne Laser Scanning Data and Cadastral Footprints. J. Geovisualization Spat. Anal. 2021, 5, 1–15.

- Sampath, A.; Shan, J. Segmentation and Reconstruction of Polyhedral Building Roofs From Aerial Lidar Point Clouds. IEEE Trans. Geosci. Remote Sens. 2009, 48, 1554–1567.

- Conde-Carnero, B.; Riveiro, B.; Arias, P.; Caamaño, J.C. Exploitation of Geometric Data provided by Laser Scanning to Create FEM Structural Models of Bridges. J. Perform. Constr. Facil. 2016, 30, 807.

- Cheng, L.; Tong, L.; Chen, Y.; Zhang, W.; Shan, J.; Liu, Y.; Li, M. Integration of LiDAR data and optical multi-view images for 3D reconstruction of building roofs. Opt. Lasers Eng. 2013, 51, 493–502.

- Schonberger, J.L.; Frahm, J.-M. Structure-from-Motion Revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

- Furukawa, Y.; Hernández, C. Multi-View Stereo: A Tutorial. Found. Trends® Comput. Graph. Vis. 2013, 9, 1–148.

- Ullman, S. The interpretation of structure from motion. Proc. R. Soc. B 1979, 203, 405–426.

- Elberink, S.O.; Vosselman, G. 3D information extraction from laser point clouds covering complex road junctions. Photogramm. Rec. 2009, 24, 23–36.

- Hu, F.; Zhao, J.; Huang, Y.; Li, H. Structure-aware 3D reconstruction for cable-stayed bridges: A learning-based method. Comput. Civ. Infrastruct. Eng. 2020, 36, 89–108.

- Tagliasacchi, A.; Zhang, H.; Cohen-Or, D. Curve skeleton extraction from incomplete point cloud. ACM Trans. Graph. 2009, 28, 71–79.

- Berger, M.; Tagliasacchi, A.; Seversky, L.; Alliez, P.; Levine, J.; Sharf, A.; Silva, C. State of the Art in Surface Reconstruction from Point Clouds; Eurographics 2014: State of the Art Reports; Wiley: New York, NY, USA, 2014.

- Sargent, I.; Harding, J.; Freeman, M. Data Quality in 3D: Gauging Quality Measures from Users’ Requirements. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 36, 1–8. Available online: https://www.researchgate.net/publication/228628243 (accessed on 13 July 2022).

- Koutsoudis, A.; Vidmar, B.; Ioannakis, G.; Arnaoutoglou, F.; Pavlidis, G.; Chamzas, C. Multi-image 3D reconstruction data evaluation. J. Cult. Herit. 2014, 15, 73–79.

- Cheng, S.-W.; Lau, M.-K. Denoising a Point Cloud for Surface Reconstruction. arXiv 2017, arXiv:1704.04038.

- Torok, M.M.; Fard, M.G.; Kochersberger, K.B. Post-Disaster Robotic Building Assessment: Automated 3D Crack Detection from Image-Based Reconstructions. In Proceedings of the 2012 ASCE International Conference on Computing in Civil Engineering, Clearwater Beach, FL, USA, 17–20 June 2012; pp. 397–404.

- Torok, M.M.; Golparvar-Fard, M.; Kochersberger, K.B. Image-Based Automated 3D Crack Detection for Post-disaster Building Assessment. J. Comput. Civ. Eng. 2014, 28, 334.

- Metashape. Agisoft. Available online: https://www.agisoft.com/ (accessed on 21 August 2022).

- Byrne, J.; Laefer, D. Variables effecting photomosaic reconstruction and ortho-rectification from aerial survey datasets. arXiv 2016, arXiv:1611.03318.

- Tewes, A.; Schellberg, J. Towards Remote Estimation of Radiation Use Efficiency in Maize Using UAV-Based Low-Cost Camera Imagery. Agronomy 2018, 8, 16.

- Fujita, Y.; Mitani, Y.; Hamamoto, Y. A Method for Crack Detection on a Concrete Structure. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 3, pp. 901–904.

- Moghaddam, M.E.; Jamzad, M. Linear Motion Blur Parameter Estimation in Noisy Images Using Fuzzy Sets and Power Spectrum. EURASIP J. Adv. Signal Process. 2006, 2007, 068985.

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.

- Parker, J. Algorithms for Image Processing and Computer Vision; Wiley: Indianapolis, IN, USA, 1997.

- Berthelot, M.; Nony, N.; Gugi, L.; Bishop, A.; De Luca, L. The Avignon Bridge: A 3D reconstruction project integrating archaeological, historical and geomorphological issues. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-5/W4, 223–227.

- He, K.; Sun, J.; Tang, X. Guided Image Filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.