1. Introduction

Synthetic aperture radar (SAR) is a microwave imaging sensor that exploits the scattering characteristics of electromagnetic waves. It can observe day and night in all weather conditions and has some ability to penetrate cloud and the ground surface, which gives it unique advantages in marine monitoring, mapping, and military applications. With the continuous exploitation of marine resources, the monitoring of marine vessels has attracted growing attention. SAR ship detection algorithms are therefore of great significance for territorial sea security, maritime law enforcement, and marine ecological protection.

Ship target detection in SAR images differs from that in optical images. Contour information is one of the important features for target detection. However, because SAR forms an image by the vector synthesis of scattered electromagnetic echoes, the coherently superimposed echoes inevitably undergo random fading in amplitude and phase, which appears as speckle noise with interlaced bright and dark spots. This imaging mechanism blurs the outline of the ship, and the problem is more serious against complex backgrounds, such as ships close to the coast or berthed in port. In addition, a SAR ship detection algorithm should take into account the limited computing resources of the hardware in practical micro-SAR platforms such as small unmanned aerial vehicles, since the complexity of the algorithm determines its practicability.

The development of SAR ship detection algorithms can be divided into two stages: traditional detection algorithms, represented by constant false alarm rate (CFAR) detection, and deep learning detection algorithms, represented by convolutional neural networks.

The most widely used traditional SAR ship detection method is the CFAR detection algorithm; the methods in [1,2,3] are all CFAR-based SAR ship detection algorithms. The CFAR algorithm adjusts the detection threshold according to the statistical characteristics of the local background clutter and decides whether a target is present by comparison with that threshold. Because the algorithm relies on modeling the clutter statistics, it is sensitive to complex coastlines, sea clutter, and coherent speckle noise, and it suffers from low detection accuracy and poor generalization. At present, SAR ship detection based on deep learning is gradually replacing traditional methods and becoming the mainstream research direction.
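The adaptive thresholding idea behind CFAR can be illustrated with a minimal sketch. It assumes the simplest cell-averaging variant and exponentially distributed clutter intensity, which is not necessarily the clutter model used in [1,2,3]; the function name `ca_cfar`, the window sizes, and the false alarm probability are hypothetical choices made for illustration only.

```python
def ca_cfar(image, guard=1, train=2, pfa=1e-3):
    """Cell-averaging CFAR on a 2D intensity image given as a list of rows.

    Assumption: clutter intensity is exponentially distributed, so the
    threshold multiplier is alpha = N * (pfa ** (-1 / N) - 1) for N training cells.
    Border pixels without a full window are left undetected.
    """
    rows, cols = len(image), len(image[0])
    outer = guard + train
    # Training cells form a ring: the full window minus the guard window.
    n_train = (2 * outer + 1) ** 2 - (2 * guard + 1) ** 2
    alpha = n_train * (pfa ** (-1.0 / n_train) - 1.0)
    detections = [[False] * cols for _ in range(rows)]
    for r in range(outer, rows - outer):
        for c in range(outer, cols - outer):
            total = 0.0
            for dr in range(-outer, outer + 1):
                for dc in range(-outer, outer + 1):
                    # Skip the cell under test and its guard cells.
                    if abs(dr) > guard or abs(dc) > guard:
                        total += image[r + dr][c + dc]
            local_clutter = total / n_train
            # The threshold adapts to the local clutter level.
            detections[r][c] = image[r][c] > alpha * local_clutter
    return detections


# Usage: a flat sea background with one bright scatterer in the middle.
scene = [[1.0] * 11 for _ in range(11)]
scene[5][5] = 100.0
mask = ca_cfar(scene)
```

Because the threshold is scaled by the local clutter estimate, the sketch also makes the weakness described above visible: a bright coastline or strong speckle inside the training ring raises the threshold and can hide nearby ships.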

Since AlexNet's striking success in the ImageNet image classification challenge in 2012, deep learning has undergone a rapid revival in the field of computer vision. The high accuracy and reliability of deep learning methods, together with their simple end-to-end processing, have driven the rapid development of target detection algorithms based on convolutional neural networks, which are gradually replacing traditional target detection algorithms. Current mainstream deep learning target detection methods fall into two categories: two-stage and one-stage algorithms. Typical two-stage algorithms include R-CNN [4], Fast R-CNN [5], and Faster R-CNN [6]. These algorithms generate candidate target regions in the first stage and then classify and localize the candidate regions in the second stage. Typical one-stage algorithms include SSD [7], RetinaNet [8], and the YOLO series. Among them, the YOLO series has remained particularly active owing to its strong performance and has gone through several versions. One-stage algorithms do not generate candidate regions; they predict the category and position coordinates of the target directly in a single step, so detection is faster.

When deep learning target detection algorithms first emerged, they could not be applied to SAR ship detection because SAR images were difficult to obtain and no public datasets existed. Li et al. [9] published the first SAR ship detection dataset (SSDD) in 2017, which promoted the rapid development of this field. In 2021, Zhang et al. [10] corrected the labeling errors in the initial version of SSDD and standardized its usage. SSDD has since become one of the most widely used datasets for SAR ship detection. In recent years, Cui et al. [11] proposed a densely connected pyramid with an enhanced attention mechanism, which makes full use of context features and improves the detection of small ships; however, this method uses the very large ResNet101 network as its backbone and requires as many as 50,000 training iterations. Jiang et al. [12] designed a lightweight SAR ship detection network based on YOLOv4-Tiny and used a non-subsampled Laplacian transform to construct multi-channel SAR images, which enhances ship contour features; however, this method adapts poorly to SAR images with unbalanced channel features. Yu et al. [13] proposed a convolutional network with a bidirectional convolutional structure, which greatly improves the effectiveness of SAR image feature extraction through the exchange of information between the upper and lower channels; however, convolving in two directions increases the number of model parameters and reduces inference speed. Zhang et al. [14] proposed a quad feature pyramid network composed of a Deformable Convolutional FPN, a Content-Aware Feature Reassembly FPN, a Path Aggregation Space Attention FPN, and a Balance Scale Global Attention FPN, which improves feature extraction and fusion; however, this method uses the large ResNet50 network as its backbone and stacks four feature pyramids with different functions in series, resulting in high network complexity and slow detection. Ma et al. [15] designed an anchor-free framework with skip connections and aggregated nodes, based on key point estimation and an attention mechanism, to fuse multi-resolution features, which improves the detection of multi-scale ship targets. Zhu et al. [16] proposed an anchor-free ship detection algorithm based on fully convolutional one-stage object detection and adaptive training sample selection, which eliminates the influence of anchors. However, neither of these two methods [15,16] reports the number of network parameters or the model size. Deep learning SAR ship detection algorithms therefore still have room for improvement in terms of both lightweight design and high average precision.

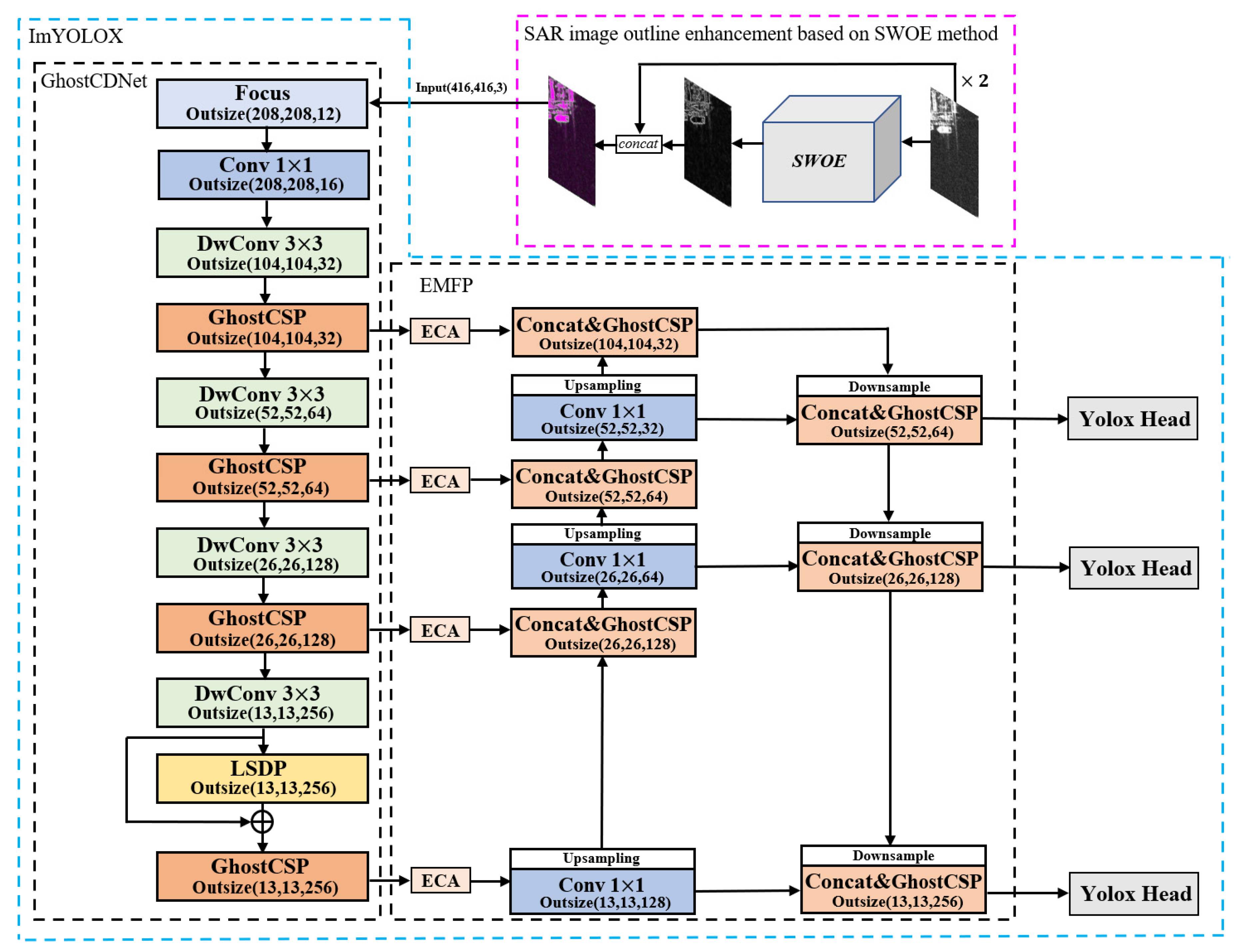

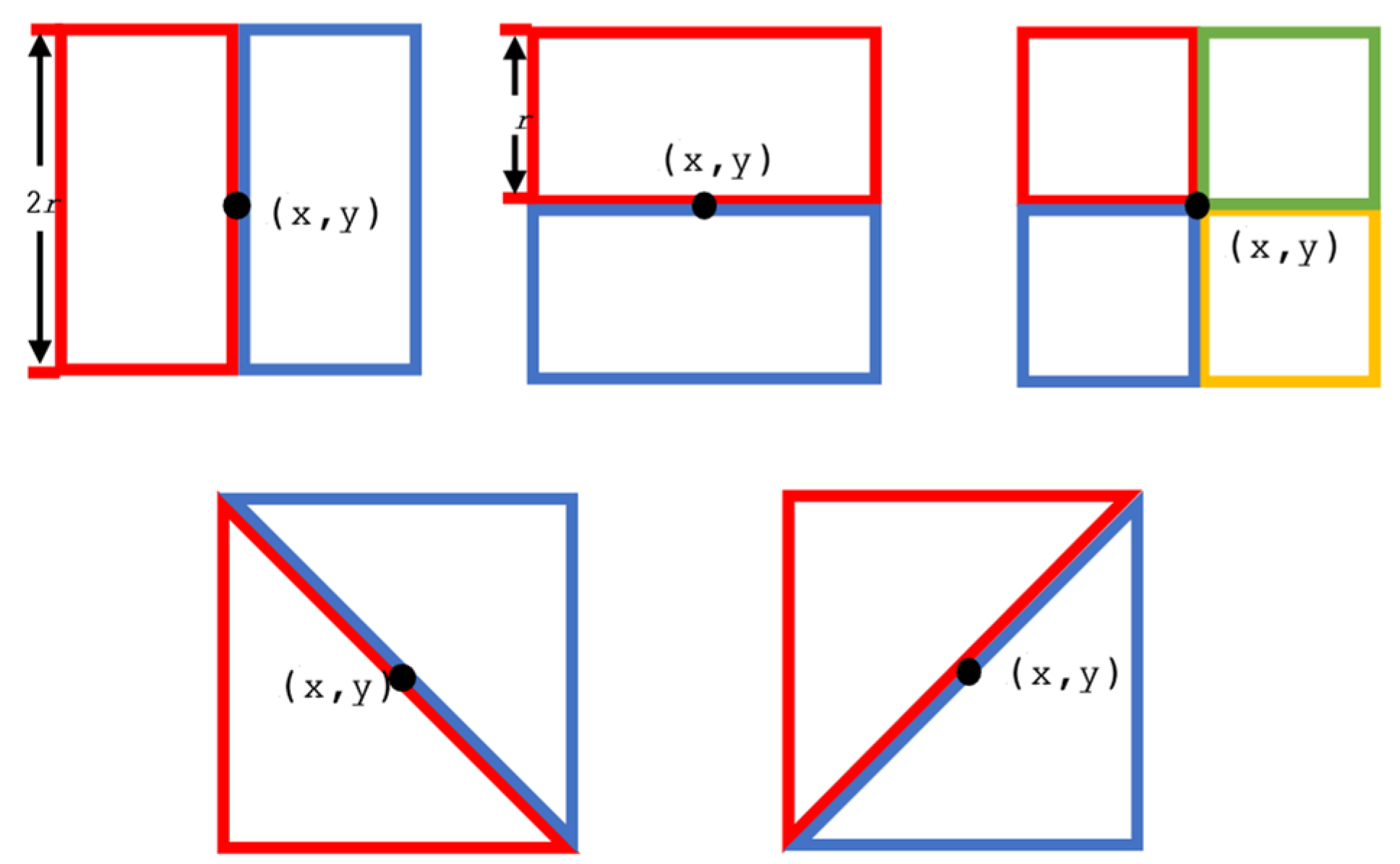

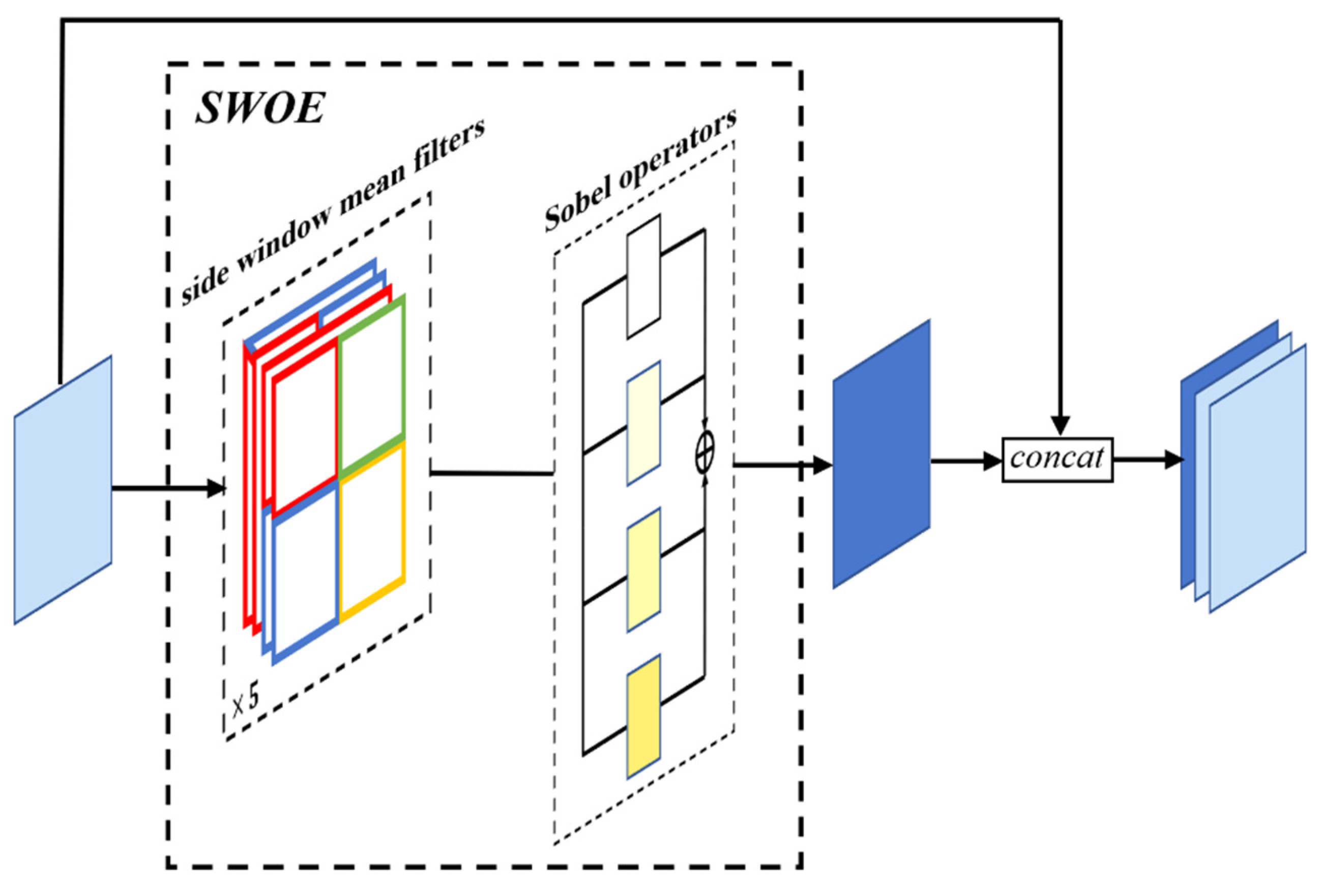

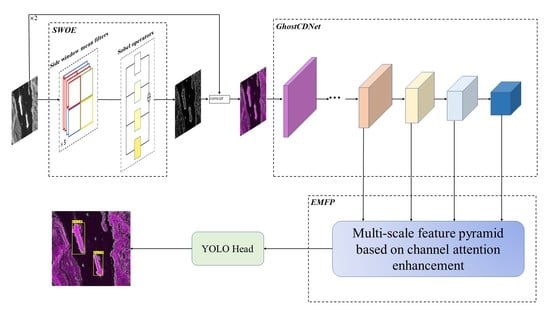

To address these problems, this paper proposes a SAR ship feature enhancement method based on high-frequency sub-band channel fusion that makes full use of contour information. The method enhances the important contour features of the ship while reducing the influence of speckle noise, and it provides more effective feature information for the detection network. In addition, this paper selects YOLOX, an advanced member of the YOLO series, as the base network; it is the first anchor-free target detection algorithm with superior performance in the YOLO series. Targeted improvements are made to YOLOX according to the characteristics of SAR ship detection. Experiments on the SSDD dataset on a mobile computing platform show that the proposed method achieves a higher average precision with fewer model parameters.

The main contributions of our work are as follows:

- (1) A new SAR ship outline enhancement preprocessing method is proposed. It combines the original single-channel SAR image with the outline-extracted image, which enhances the ship outline features while weakening the influence of speckle noise and improves the network's ability to extract key features;

- (2)

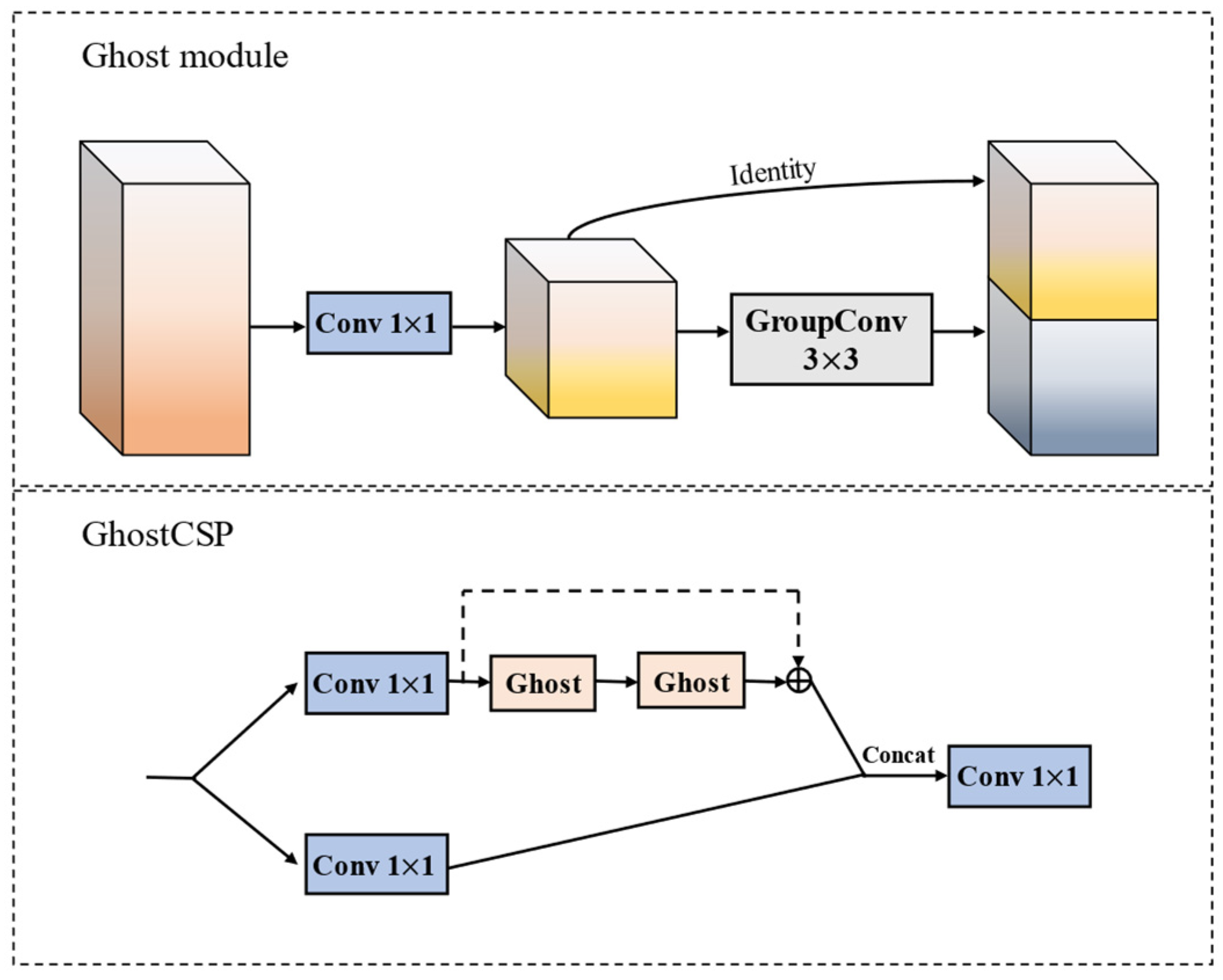

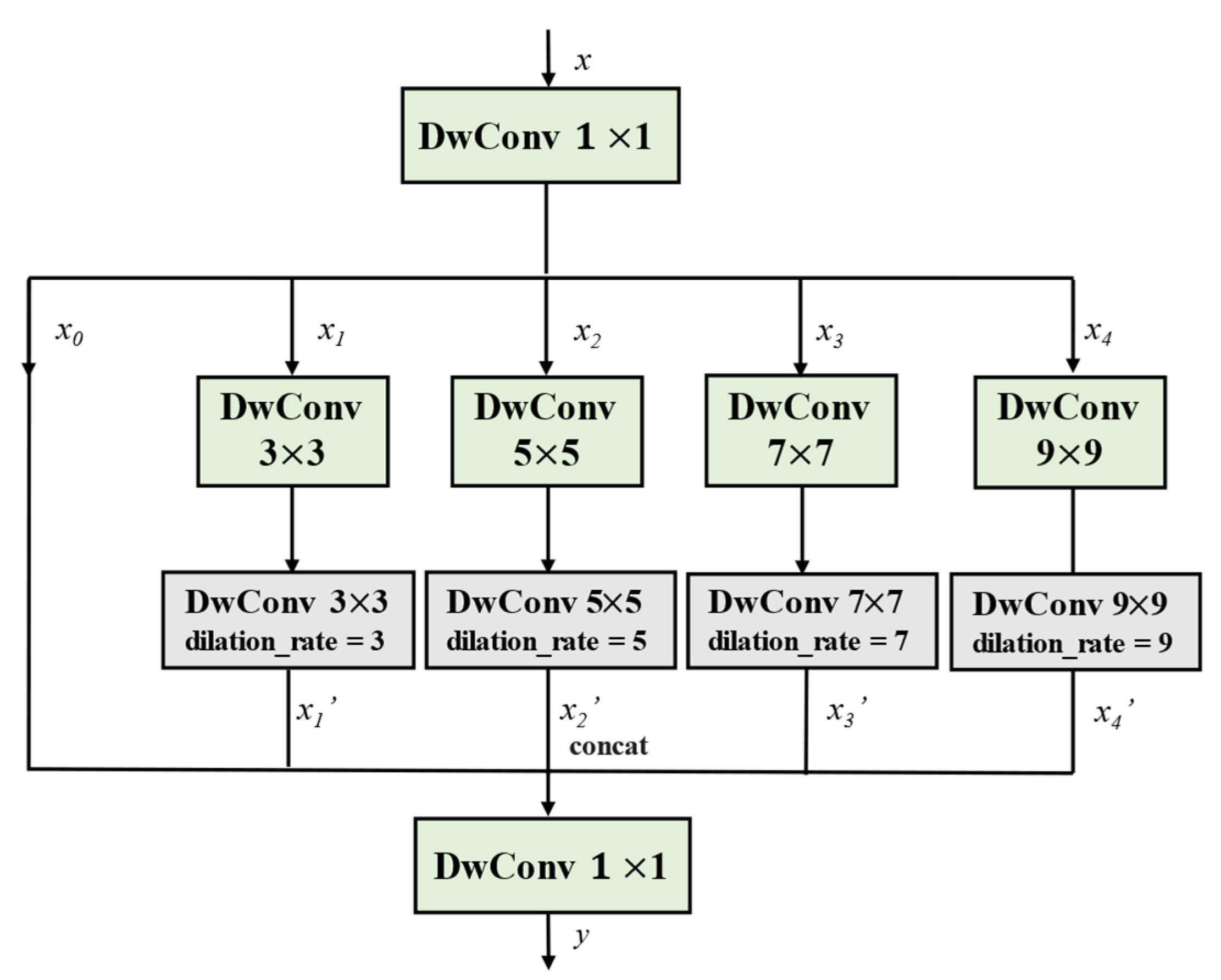

According to the actual situation that the hardware equipment of micro-SAR platform such as small unmanned aerial vehicle has limited computing resources and the disadvantage that the spatial pooling pyramid structure damages the contour features, the paper takes YOLOX as the basic network, uses Ghost module to carry out lightweight design of Cross Stage Partial (CSP) structure and proposes a lightweight spatial dilation convolution pyramid (LSDP) structure improved by spatial pooled pyramid, and finally designs an ultra lightweight high-performance backbone for SAR ship detection, which is named Ghost Cross Stage Partial Darknet (GhostCDNet);

- (3)

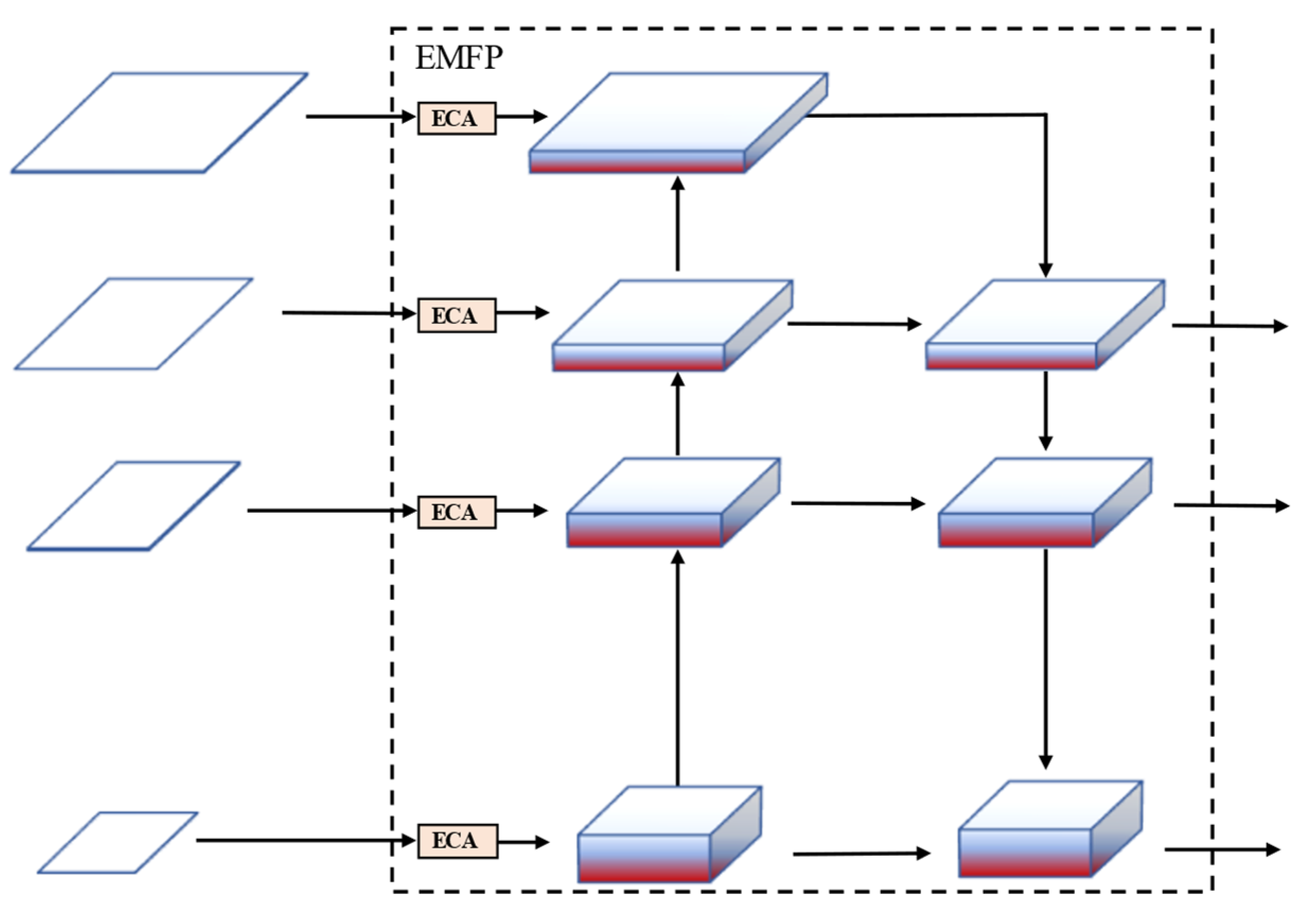

A multi-scale feature pyramid structure based on channel attention enhancement (EMFP) is proposed to highlight channel features that make important contributions to object detection. Contextual multi-scale feature fusion using four-layer feature maps enhances the detection capability of multi-scale ship targets.

The remainder of this paper is organized as follows: the second section introduces the proposed method; the third section describes the experiments and analysis of the results; and the fourth section summarizes this paper.

3. Experiments

In this section, the performance of the proposed SAR ship detection method is verified experimentally. First, the software environment and hardware of the experiments are described, and then the SAR image dataset is introduced. Finally, the effectiveness of each module is evaluated through ablation experiments, and the proposed method is compared with other recent detection algorithms.

3.1. Experimental Environment

All experiments were carried out on a portable laptop, which serves as the mobile computing platform. The software environment and hardware configuration are detailed in Table 1.

3.2. Dataset and Experimental Settings

We use the publicly available SAR ship detection dataset SSDD for experimental validation. SSDD contains a variety of ships against different nearshore and far-sea backgrounds, imaged under varying weather conditions. The data were collected by TerraSAR-X, RadarSat-2, and Sentinel-1 over Yantai, China, and Visakhapatnam, India. The imaging resolution ranges from 1 m to 15 m, and the dataset comprises 1160 images containing 2456 ships. Because SAR image data are costly to acquire, the dataset contains relatively few sample images. If it were divided randomly, the distribution of data samples would be highly uncertain, which would break the consistency of scene distribution between the training set and the test set and hinder performance comparison between different detection methods. The publishers of SSDD therefore stipulate a strict division standard [10]: images whose file numbers end in 1 or 9 must be used as the test set. Following this rule, we divide the dataset into a training set, a validation set, and a test set in the ratio 7:1:2, as shown in Table 2. Both the original SSDD dataset and the new dataset SSDD-EE, obtained by applying the SAR image edge enhancement preprocessing proposed in Section 2.2, are divided in this way, and the training and validation splits are guaranteed to be identical for the two datasets.
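The division rule can be sketched as follows. The test set follows the stated rule (file numbers ending in 1 or 9); how the remaining images are assigned to the training and validation sets is not specified in the text, so the seeded shuffle, the function name `split_ssdd`, and the six-digit file stems are assumptions made only for illustration.

```python
import random


def split_ssdd(image_ids, val_fraction=1 / 8, seed=0):
    """Split SSDD image ids into train, validation, and test lists.

    Test set: ids whose last digit is 1 or 9 (the rule stated for SSDD).
    Train/validation: a seeded shuffle of the rest (an assumption; the
    exact assignment used in the paper is not given).
    """
    test = [i for i in image_ids if i[-1] in "19"]
    rest = [i for i in image_ids if i[-1] not in "19"]
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(rest)
    n_val = round(len(rest) * val_fraction)
    val = sorted(rest[:n_val])
    train = sorted(rest[n_val:])
    return train, val, test


# Usage with hypothetical file stems 000001 ... 001160.
ids = [f"{n:06d}" for n in range(1, 1161)]
train_ids, val_ids, test_ids = split_ssdd(ids)
print(len(train_ids), len(val_ids), len(test_ids))
```

With 1160 images, the rule places 232 images in the test set, and holding out one eighth of the remaining 928 images for validation gives the 7:1:2 ratio. Reusing the same id lists for SSDD and SSDD-EE is one simple way to keep the two divisions identical.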

The networks are trained and tested in the experimental environment described in Section 3.1. Since the number of images in SSDD is limited, we initialize the network parameters with a model pre-trained on the VOC2007 dataset. Training is divided into two phases: frozen training and unfrozen training. In the first 50 epochs, the pre-trained model is loaded and the backbone is frozen, so that only the part of the network after the backbone is trained; the batch size is 32, the initial learning rate is 0.001, and the learning rate is adjusted dynamically by cosine annealing. In the remaining 450 epochs, the network is unfrozen; the batch size is 16, the initial learning rate is 0.0001, and cosine annealing is again used to adjust the learning rate.
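The two-phase schedule can be written down as a small sketch. The epoch counts, batch sizes, and initial learning rates come from the text; the minimum learning rate of zero, the per-epoch update, and the restart of the cosine curve at the start of the unfrozen phase are assumptions, as are the helper names.

```python
import math

FREEZE_EPOCHS = 50     # backbone frozen (from the text)
UNFREEZE_EPOCHS = 450  # network unfrozen (from the text)


def phase(epoch):
    """Return the training settings that apply to a zero-based epoch index."""
    if epoch < FREEZE_EPOCHS:
        return {"freeze_backbone": True, "batch_size": 32, "base_lr": 1e-3,
                "start": 0, "length": FREEZE_EPOCHS}
    return {"freeze_backbone": False, "batch_size": 16, "base_lr": 1e-4,
            "start": FREEZE_EPOCHS, "length": UNFREEZE_EPOCHS}


def cosine_annealing(base_lr, step, length, min_lr=0.0):
    """Cosine decay from base_lr towards min_lr over `length` steps."""
    cosine = math.cos(math.pi * step / length)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + cosine)


def learning_rate(epoch):
    """Learning rate for an epoch, restarting the cosine curve in each phase."""
    p = phase(epoch)
    return cosine_annealing(p["base_lr"], epoch - p["start"], p["length"])


for epoch in (0, 25, 49, 50, 275, 499):
    print(epoch, phase(epoch)["batch_size"], f"{learning_rate(epoch):.6f}")
```

In a deep learning framework, the frozen phase would typically correspond to disabling gradient updates for the backbone parameters while the neck and detection head continue to train.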

3.3. Evaluation Indicators

The performance of target detection algorithms is commonly evaluated using Precision, Recall, and Average Precision (AP). The meaning and calculation of these indicators are briefly introduced below.

These indicators are computed from four quantities: True Positives (TP), True Negatives (TN), False Positives (FP), and False Negatives (FN). TP is the number of correctly detected ships, TN is the number of correctly detected backgrounds, FP is the number of false ship detections, and FN is the number of missed ships. A ship is considered correctly detected when the Intersection over Union (IoU) between the predicted box and the ground-truth box exceeds a threshold, which is set to 0.5. Precision, Recall, and AP are calculated as follows:

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$AP = \int_{0}^{1} P(R)\, dR$$

where $P(R)$ denotes Precision as a function of Recall.

Precision is the ratio of the number of correctly detected ships to the total number of detections reported by the detector, and 1 − Precision is called the false detection rate. Recall is the ratio of the number of correctly detected ships to the number of all real ships, and 1 − Recall is called the missed detection rate. Precision and Recall each reflect only one aspect of detection performance, so AP is used to balance the two; it is the area under the Precision-Recall curve over the Recall interval [0, 1]. AP evaluates the performance of a target detection network comprehensively: the larger its value, the better the detection performance.
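These indicators can be sketched in a few lines. The box format (x1, y1, x2, y2), the function names, and the use of a monotone precision envelope when integrating the curve are assumptions; the text does not state which interpolation was used to compute AP, and the matching of detections to ground-truth boxes is taken as already done.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP), Recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(is_tp, num_gt):
    """Area under the Precision-Recall curve.

    is_tp: one boolean per detection, sorted by descending confidence,
           True when the detection matches a ground-truth ship (IoU > 0.5).
    num_gt: total number of ground-truth ships.
    """
    tp = fp = 0
    recalls, precisions = [0.0], [1.0]
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Monotone envelope: precision at a recall level is the best precision
    # achieved at that recall or any higher recall (an assumed convention).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the rectangles between consecutive recall values.
    return sum((recalls[i] - recalls[i - 1]) * precisions[i]
               for i in range(1, len(recalls)))


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
print(precision_recall(tp=8, fp=2, fn=2))
print(average_precision([True, False, True], num_gt=2))
```

The last call illustrates why AP balances the two indicators: the false detection ranked second lowers Precision at low Recall, but the envelope credits the detector for recovering the second ship afterwards.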

3.4. Ablation Experimental Results and Analysis

In this section, we design four ablation experiments on the SSDD dataset to verify the effect of the SWOE-based SAR image outline enhancement preprocessing method, GhostCDNet, and EMFP.

The first experiment uses the benchmark network YOLOX-Tiny as the baseline for the subsequent experiments. The second experiment replaces the backbone of YOLOX-Tiny with our GhostCDNet and reduces the number of convolutional channels in the neck and the detection head; it verifies the detection performance of the proposed lightweight backbone. The third experiment builds on the second by replacing the original neck with EMFP; it verifies the effectiveness of the proposed feature pyramid. The fourth experiment adds the SWOE-based SAR image outline enhancement method as a preprocessing step to the third experiment; it verifies the effect of the proposed outline enhancement on detection performance. The four experiments are carried out step by step to verify the effectiveness of the proposed method. The training set, validation set, test set, and hyperparameters are kept the same for all experiments.

The target detection performance evaluation indicators of these four comparative experiments are shown in

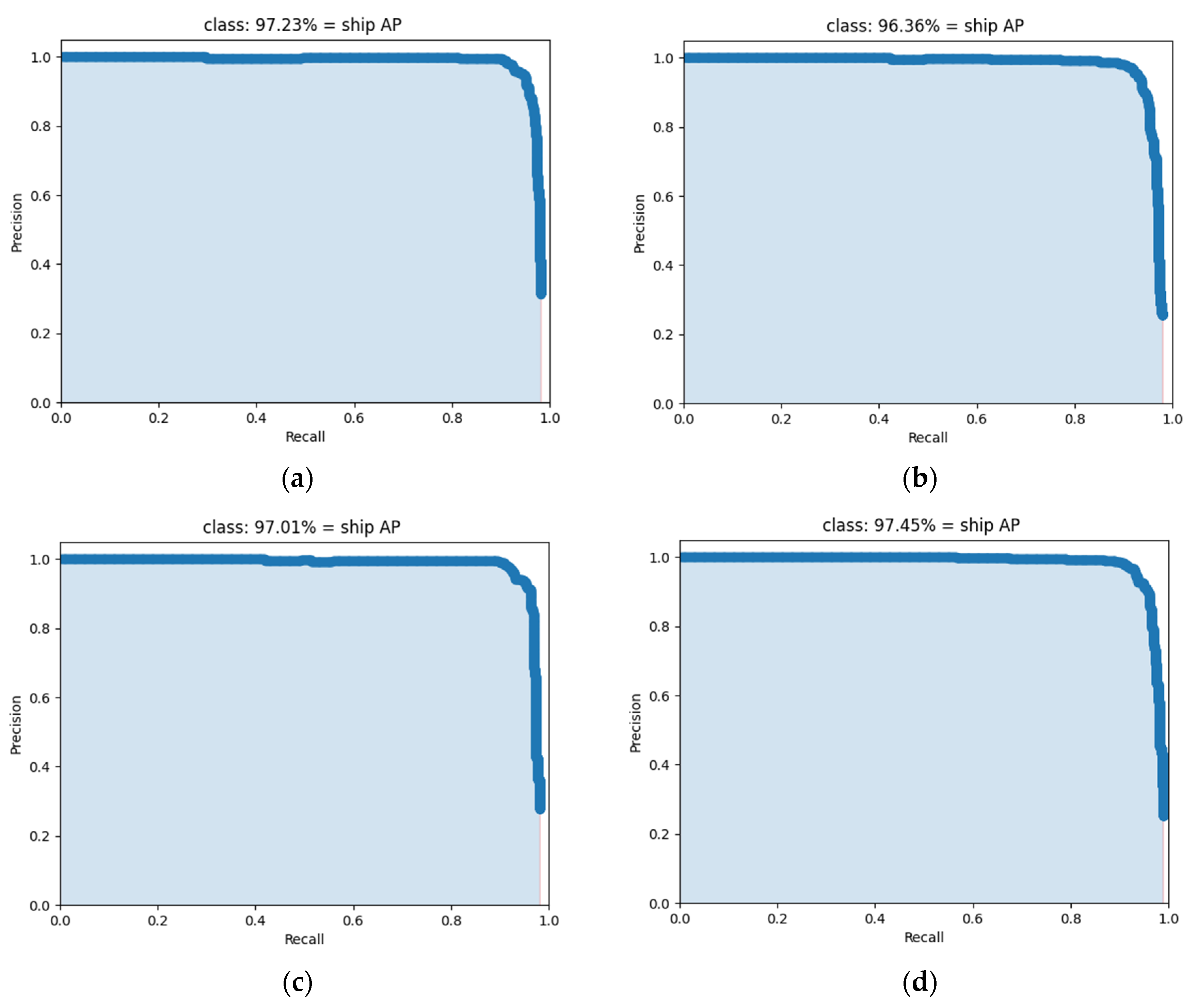

Table 3. It can be seen that after the lightweight design and improvement of YOLOX-Tiny based on the characteristics of SAR images in experiment 2, the total params of the network decreased from 5,032,866 to 851,474, which achieved the requirements of lightweight and maintained good detection performance. Compared with YOLOX-Tiny, the AP decreased by only 0.87%. Experiment 3 uses EMFP to add a small amount of params to the model, which improves the model′s ability to detect multi-scale targets. The AP increases by 0.65 percentage points, and the detection performance is close to the large-scale network YOLOX-Tiny. In experiment 4, the SAR outline enhancement preprocessing is performed by the SWOE method, which further improves the AP. Finally, compared with YOLOX-Tiny, total params decreased by about 82.74%, model size decreased by 77.58% and AP increased by 0.22%.
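The parameter reduction quoted above can be checked with simple arithmetic. The parameter counts for YOLOX-Tiny and experiment 2 are taken from this section, and the final count of 868,594 is the one reported in the Conclusions; the helper name `relative_reduction` is hypothetical.

```python
def relative_reduction(before, after):
    """Fraction by which a quantity shrinks when going from `before` to `after`."""
    return (before - after) / before


YOLOX_TINY_PARAMS = 5_032_866    # baseline, experiment 1
EXPERIMENT_2_PARAMS = 851_474    # GhostCDNet backbone with compressed neck and head
FINAL_PARAMS = 868_594           # final model, as reported in the Conclusions

for name, params in [("experiment 2", EXPERIMENT_2_PARAMS),
                     ("final model", FINAL_PARAMS)]:
    reduction = relative_reduction(YOLOX_TINY_PARAMS, params)
    print(f"{name}: {params:,} parameters, {reduction:.2%} fewer than YOLOX-Tiny")

# Parameters added on top of experiment 2 by the later stages.
print(FINAL_PARAMS - EXPERIMENT_2_PARAMS)
```

The final model's relative reduction evaluates to about 82.74%, which agrees with the figure stated in the text.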

Figure 9 shows the Precision-Recall curves of the four experiments. The abscissa is Recall, the ordinate is Precision, and the area of the shaded region is the AP value.

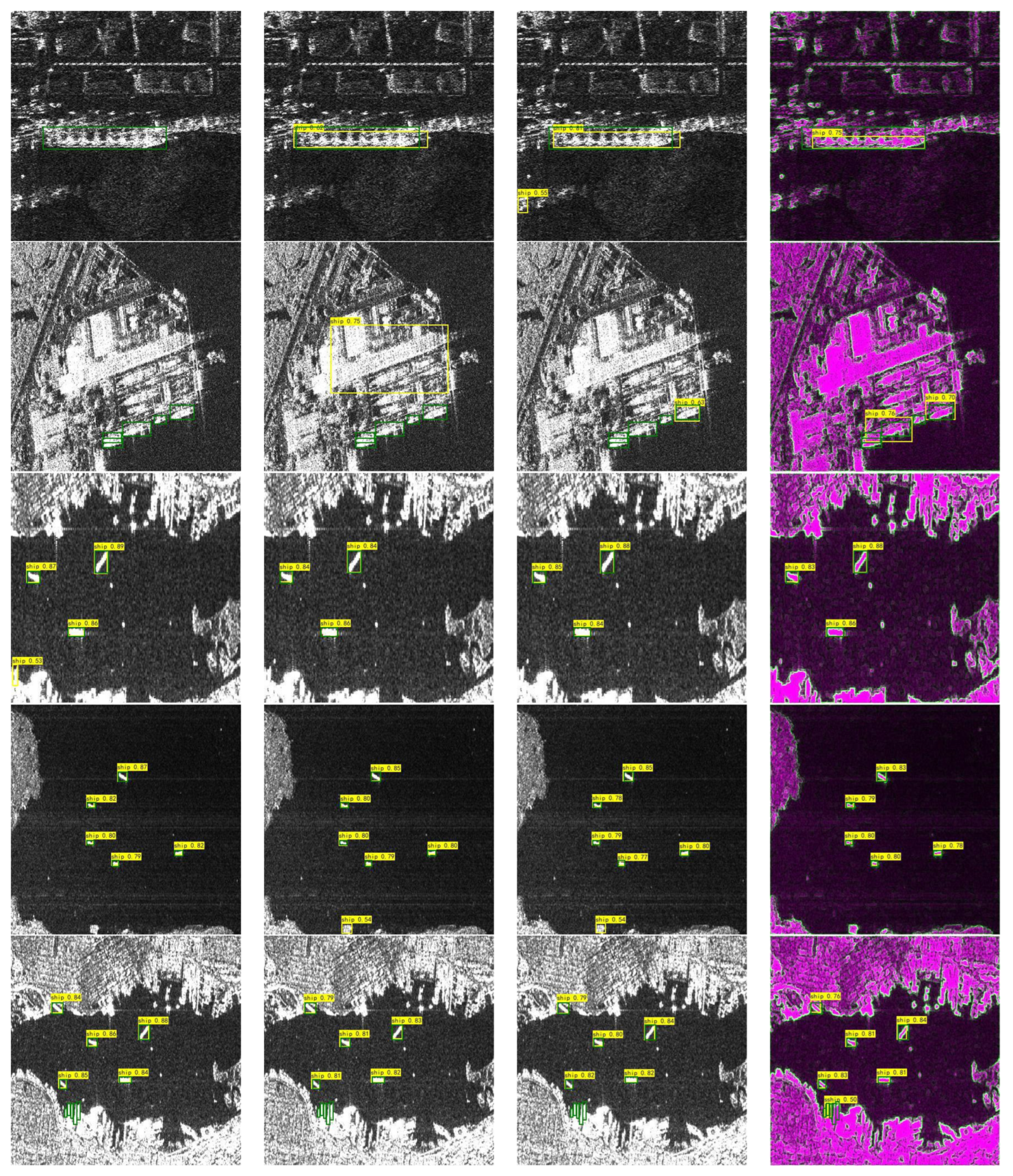

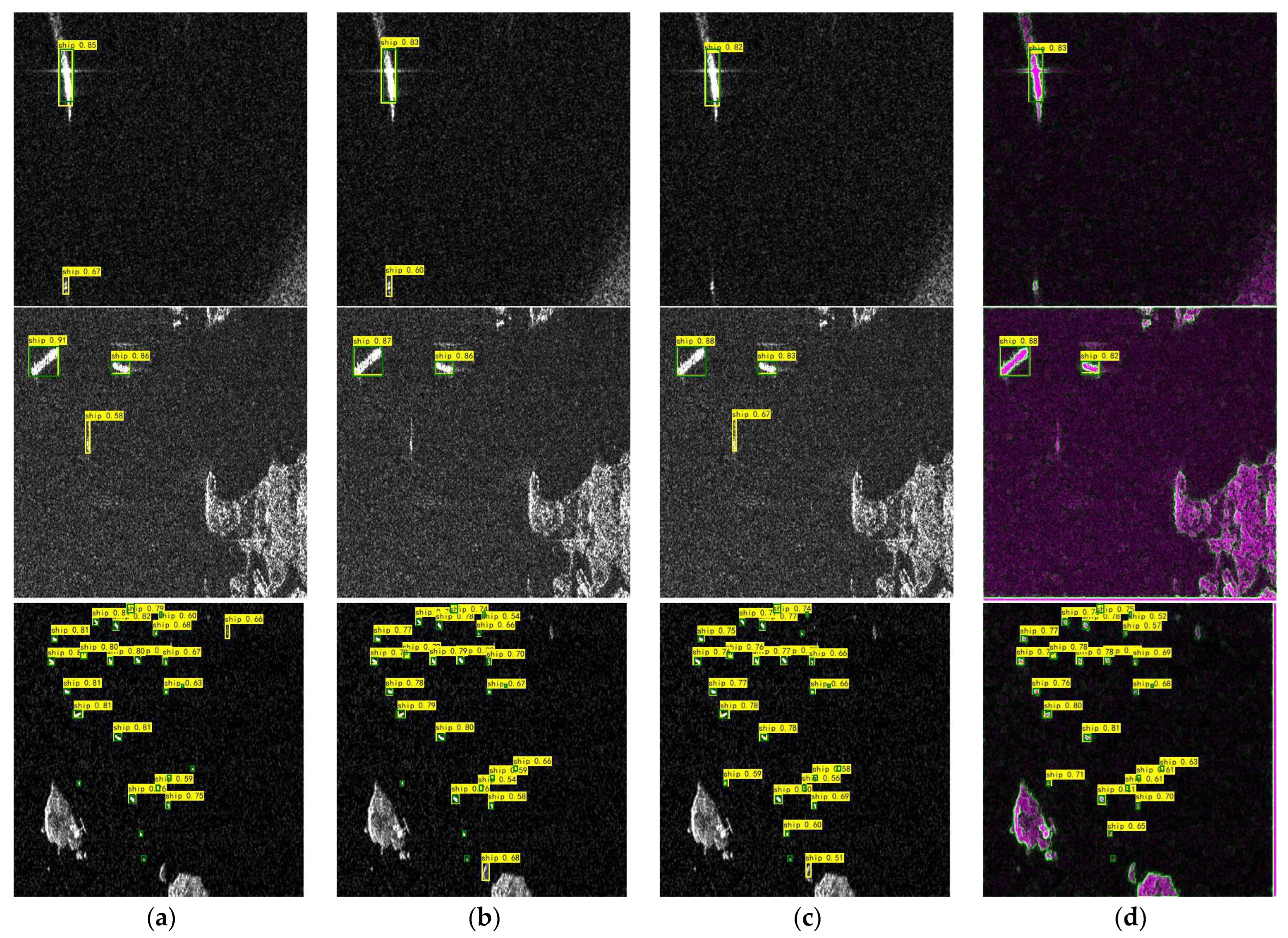

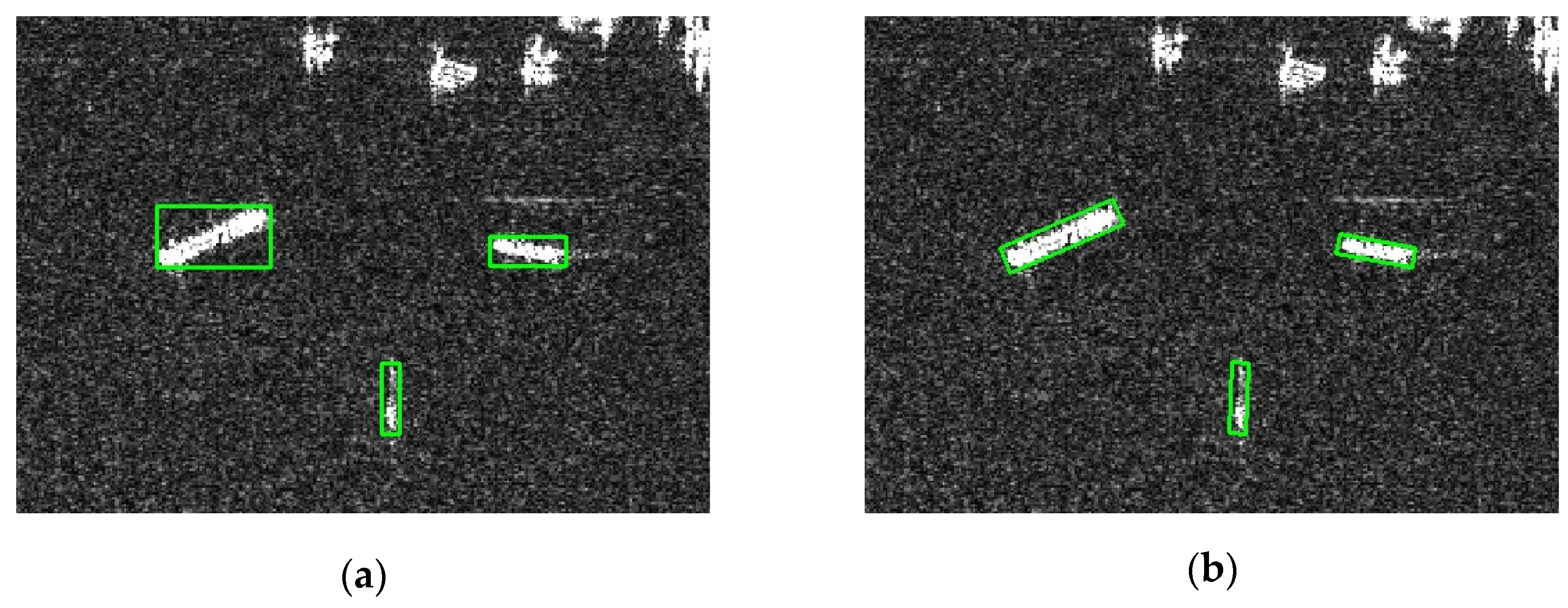

Some visualization results of the ablation experiments are shown in Figure 10, in which Figure 10a–d show the results of ablation experiments No. 1 to No. 4, respectively, for different backgrounds: offshore, far sea, and berthed in port. The green rectangles are the ground-truth ship labels, and the yellow rectangles are the detected ship targets. The first two rows of Figure 10 show detection against the complex background of ships berthed in port. The third to seventh rows show detection of ships sailing offshore, and the seventh row in particular shows densely distributed small ships in an offshore area. The last row shows detection against a far-sea background. The visualization results show that although YOLOX-Tiny, a larger network, has strong detection performance, it still produces obvious missed and false detections in SAR ship detection, which is characterized by small samples, multiple scales, and blurred outlines. Our ultra-lightweight detection algorithm not only greatly reduces the number of parameters but also reduces missed and false detections in some scenarios, and it performs well on dense small targets. Some missed detections remain because of blurred ship outlines, as do some false detections caused by ship-like reefs with blurred contours and other severe noise. These cases improve significantly after preprocessing with the proposed SAR outline enhancement method, as shown in the first and second rows of Figure 10. With the multi-scale feature pyramid based on channel attention enhancement, the detection of densely distributed small ships improves significantly, as shown in the seventh row of Figure 10. Both the quantitative analysis of the performance indicators and the qualitative analysis of the visualization results confirm the advantages of the proposed SAR ship detection method.

3.5. Comparison with the Latest SAR Ship Detection Methods Using SSDD Datasets

To further demonstrate the advantages of the proposed method, we compare it with recent SAR ship detection methods that were validated experimentally on the SSDD dataset, as shown in Table 4, where pre-SSDD indicates that the SAR images in the original SSDD dataset were preprocessed before being fed into the target detection network. All of these detection methods are based on horizontal rectangular boxes.

It should be noted that the recent SAR ship detection algorithms compared in Table 4 could not be reproduced with the same experimental equipment, environment, and parameters because their code is not open source, so we directly quote the performance indicators reported in the corresponding papers. The proposed method achieves a higher AP with fewer parameters than these recent SAR ship detection methods.

5. Conclusions

In this paper, we propose ImYOLOX, an ultra-lightweight anchor-free target detection algorithm with high average precision for SAR ship detection. The method is based on the advanced one-stage target detection algorithm YOLOX. To address the problems faced by SAR ship detection, such as complex backgrounds, large scale differences, densely distributed small targets, and the limited computing resources of micro-SAR platforms such as small unmanned aerial vehicles, a light yet strong backbone network, GhostCDNet, and a multi-scale feature pyramid based on channel attention enhancement are designed to improve YOLOX. In addition, to address the unclear ship outlines caused by speckle noise in SAR images, a SAR ship feature enhancement method based on high-frequency sub-band channel fusion is proposed, which reduces the influence of speckle noise and enhances the ship outline features. Experimental results on the SSDD dataset show that the proposed method achieves an average precision of 97.45% with 868,594 parameters and a model size of 4.35 MB. Both quantitative and qualitative results indicate that the proposed method has superior detection performance, and the comparison shows that it outperforms other SAR ship detection methods.

Horizontal box target detection has some limitations. In future work, we will build on this method to study rotated box detection of SAR ships with angle information, so that the orientation of a ship target is obtained at the same time as it is detected.