1. Introduction

Synthetic aperture radar (SAR) is a high-resolution imaging technique that uses electromagnetic waves in the microwave spectrum to acquire the electromagnetic scattering characteristics of the detection area [1,2,3]. Unlike optical sensors, SAR is an active imaging technique, and its long-wavelength radiation is largely unaffected by most weather conditions. Therefore, SAR imaging offers long imaging distances and all-day, all-weather operation [4]. However, SAR images are poor for object recognition and carry little band information. This has motivated researchers to fuse SAR images with other remote sensing images to obtain better visual performance and additional valuable information.

SAR image fusion for enhancing visual performance has been widely studied by researchers in recent years. There are various ways to achieve the fusion [

5,

6,

7,

8,

9,

10,

11,

12,

13,

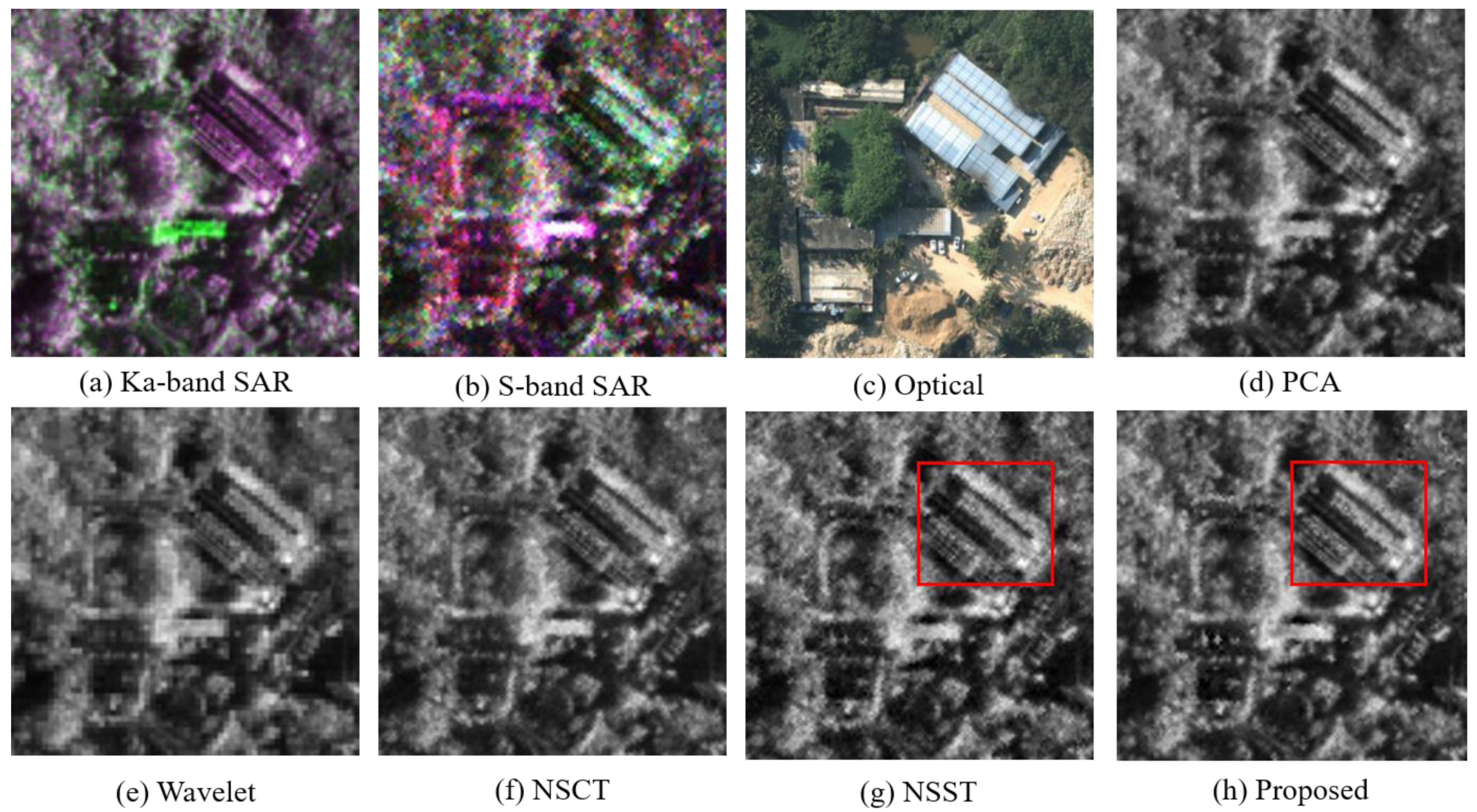

14]. Among them, the multi-scale decomposition methods, such as wavelet transform [

5,

6,

7], non-subsampled contourlet transform (NSCT) [

8], and non-subsampled shearlet transform (NSST) [

9] have been frequently used in the fusion of SAR images because of their good fusion performance and fast implementation. There are various fields where the fused SAR image can be applied, such as searching ice cracks [

5], improving the quality of urban remote sensing images [

8,

9], or revealing cloud-obscured areas in optical images [

10]. After the fusion, the object recognition of the fused SAR image is significantly improved, thus, many researchers utilize the fused SAR image for target detection, which includes obtaining the distribution of buildings in cities [

11], monitoring the damage of urban buildings after earthquakes [

12], monitoring glaciers in the ocean [

13], and the detection of ships in ports [

14].

Fusion between SAR images themselves has also received attention from researchers seeking to obtain more band and polarization information [15,16,17,18,19,20,21,22]. Because different bands differ in penetration and electromagnetic characteristics, the fusion of multi-band SAR images can be used to detect special targets. Wu et al. fused X-, C-, and L-band SAR images to achieve bridge detection [17]. Guida et al. fused X- and S-band SAR images to identify oil and gas [18]. Chanika et al. applied X- and C-band SAR image fusion to enhance the classification accuracy of maize fields [19]. Moreover, the fusion of multi-polarization SAR images can be used to distinguish regions with different surface structures. Ruan et al. fused horizontal–horizontal (HH), horizontal–vertical (HV), and vertical–vertical (VV) polarized SAR images to improve the classification accuracy of different areas [20]. Song et al. fused HH and HV polarized SAR images to reduce the false alarm rate of moving target detection [21]. Zhu et al. enhanced the performance of vessel detection by fusing HH and VV polarized SAR images [22].

However, although a fused multi-band SAR image can be used to detect objects whose scattering characteristics vary with band, it is difficult to determine what kind of objects they are. In addition, although a fused multi-polarization SAR image can be used to distinguish regions with different surface structures, it is difficult to find masked objects based on the information of a single band. Therefore, if the information of multi-band and multi-polarization SAR images can be fused at the same time, a masked object can be detected and its kind determined simultaneously, which would greatly facilitate the application of SAR images.

Unfortunately, there are few effective fusion methods for multi-band and multi-polarization SAR images. Traditional fusion methods compress the input images into a single grayscale image. This compression results in the loss of critical band and polarization information. Furthermore, the grayscale image is hard to interpret: objects that differ from their surroundings only in grayscale intensity, a one-dimensional difference, are difficult to detect and discriminate.

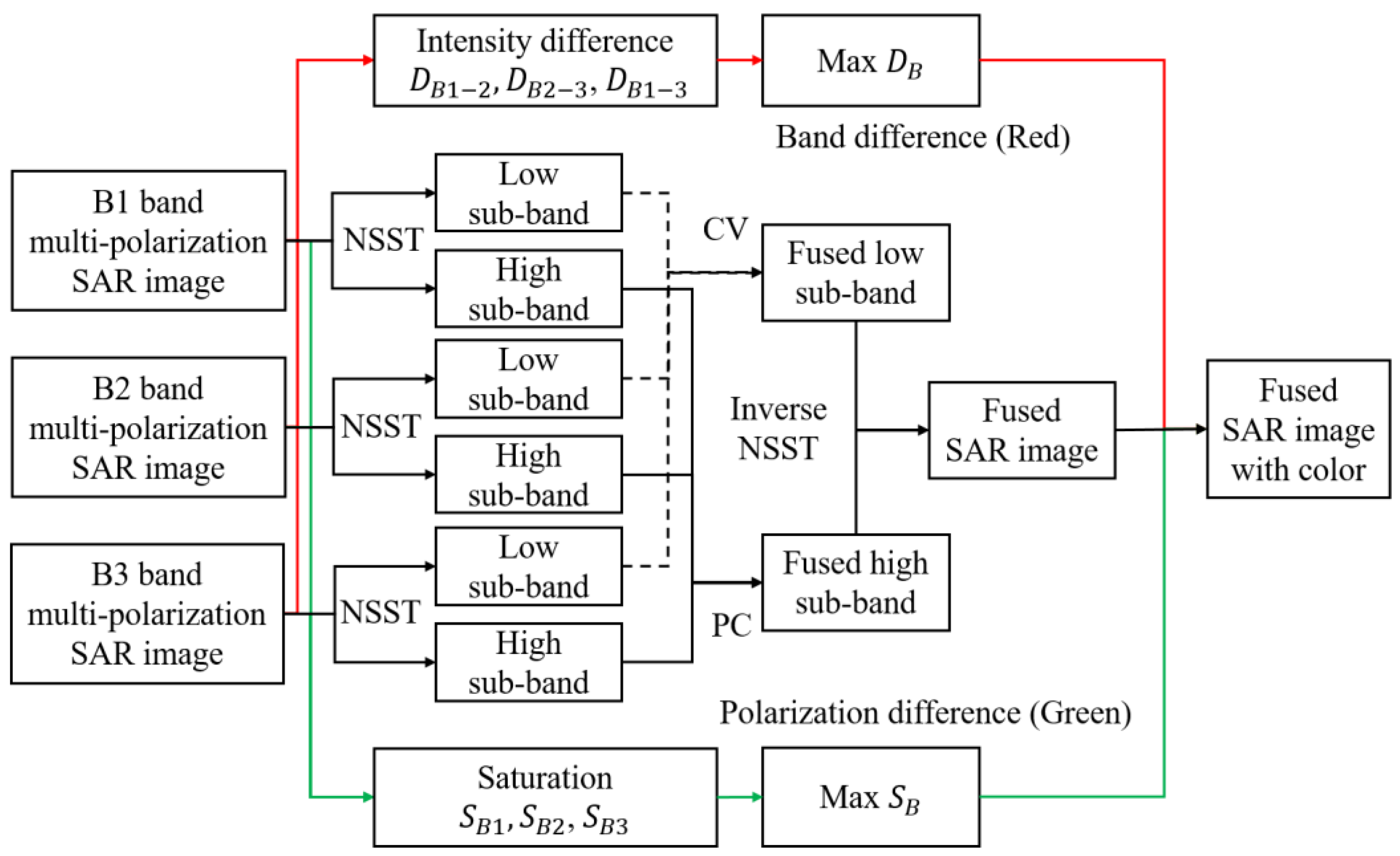

In this paper, we propose a colorization fusion method for multi-band and polarization SAR images. First, we use the non-subsampled shearlet transform (NSST) to decompose and fuse the multi-band and polarization SAR images, where the low- and high-frequency sub-band images obtained from NSST are fused using rules based on the coefficient of variation (CV) and phase congruency (PC), respectively. Then, a band difference map and a polarization difference map are obtained from the multi-band and polarization SAR images by calculating intensity differences and color saturation, respectively. Finally, the fused image is colorized according to the difference maps. The proposed method not only preserves the detail information of the different-band SAR images but also enriches the fused image with band and polarization difference information, which yields high visual performance.

The rest of this paper is divided into three parts. Section 2 introduces the detailed fusion rules of multi-band and polarization SAR images. Section 3 presents the results of the proposed fusion method as well as the comparison with other fusion methods. We conclude in Section 4.

2. Methodology

In this section, we first introduce the concepts of NSST. Then, we present the NSST-based image fusion rules, in which the low and high-frequency sub-band images are respectively fused according to the CV and PC. Subsequently, the coloring rules for the fused SAR image are proposed. At the end of this section, we introduce the dataset and evaluation indexes that will be used in the experiments.

2.1. Concepts of NSST

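Non-subsampled shearlet transform (NSST) is a non-orthogonal transform derived from the wavelet transform [23]. For a continuous wavelet, a two-dimensional affine system with composite dilations is defined as:

$$\mathcal{A}_{AB}(\psi)=\left\{\psi_{j,l,k}(x)=\left|\det A\right|^{j/2}\,\psi\!\left(B^{l}A^{j}x-k\right):\ j,l\in\mathbb{Z},\ k\in\mathbb{Z}^{2}\right\}$$

where $\psi\in L^{2}(\mathbb{R}^{2})$, and $A$ and $B$ are $2\times2$ invertible matrices with $\left|\det B\right|=1$. $j$, $l$, and $k$ denote the scale, direction, and shift parameters, respectively. $A$ is the dilation matrix, and its power $A^{j}$ is related to the scale decomposition. $B$ is the shear matrix, and its power $B^{l}$ is related to the direction decomposition. When $A=\begin{pmatrix}4&0\\0&2\end{pmatrix}$ and $B=\begin{pmatrix}1&1\\0&1\end{pmatrix}$, the elements $\psi_{j,l,k}$ are called shearlet filters (SF), and this system is called the shearlet transform.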

Compared with the shearlet transform, NSST uses the non-subsampled pyramid (NSP) to achieve the scale decomposition [23]. The NSST process is as follows. First, NSP scale decomposition is performed to obtain a low-frequency sub-band image and j-1 high-frequency sub-band images. Then, the high-frequency sub-band images are processed with SF to obtain sub-band images in different directions.

Figure 1 shows a three-level NSST image decomposition. As a multi-scale decomposition method, NSST achieves multi-scale and multi-directional image decomposition at high speed. Because no downsampling is performed, every sub-band image has the same size as the input image.
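The scale-decomposition step can be illustrated with a simplified non-subsampled (à trous style) pyramid. The sketch below is an assumption-laden stand-in, not the NSST used in this paper: it smooths with a 3×3 box filter whose taps are spread 2^k pixels apart at level k, it omits the shearlet directional filtering entirely, and the helper names `box_blur_dilated`, `nsp_decompose`, and `nsp_reconstruct` are hypothetical. It does show two properties the text relies on: no layer is downsampled, and summing the layers recovers the input.

```python
def box_blur_dilated(img, dilation):
    """3x3 box filter whose taps are `dilation` pixels apart (a trous style).

    Coordinates outside the image are clamped to the border (edge replication).
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-dilation, 0, dilation):
                for dx in (-dilation, 0, dilation):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += img[yy][xx]
            out[y][x] = acc / 9.0
    return out


def nsp_decompose(img, levels):
    """Return (low, highs): one low-frequency layer and `levels` high-frequency layers.

    Nothing is downsampled, so every layer has the same size as `img`.
    """
    highs = []
    current = [row[:] for row in img]
    for k in range(levels):
        smooth = box_blur_dilated(current, 2 ** k)  # wider support at coarser levels
        highs.append([[c - s for c, s in zip(c_row, s_row)]
                      for c_row, s_row in zip(current, smooth)])
        current = smooth
    return current, highs


def nsp_reconstruct(low, highs):
    """Invert the pyramid: the input is the low layer plus every high layer."""
    out = [row[:] for row in low]
    for band in highs:
        out = [[o + b for o, b in zip(o_row, b_row)] for o_row, b_row in zip(out, band)]
    return out


image = [[float((x * y) % 7) for x in range(8)] for y in range(8)]
low, highs = nsp_decompose(image, levels=3)
```

Reconstruction is exact (up to rounding) because each high-frequency layer is defined as the difference between consecutive smoothing levels, so the sum telescopes back to the input.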

2.2. Fusion Rules

After decomposing the multi-band and multi-polarization SAR images by NSST, we obtain their low- and high-frequency sub-band images. We then fuse the low- and high-frequency sub-band images separately, each according to its own fusion rule, as follows.

2.2.1. Low-Frequency Fusion Rule

The low-frequency sub-band image contains the approximate information as well as the majority of the energy of the original SAR image. We use the coefficient of variation (CV) [24] as the weight of the low-frequency sub-band images. The CV is calculated as follows:

$$CV(x,y)=\frac{1}{\bar{p}}\sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(p_{i}-\bar{p}\right)^{2}}$$

where $n$ represents the number of pixels in a window, $p_{i}$ is a neighbor pixel of the center pixel $(x,y)$, and $\bar{p}$ represents the mean of the pixels in the window. The calculation window of the CV is  in this paper.

The CV describes the degree of local variation around a pixel. A pixel with a larger CV provides more useful information and should therefore receive a larger weight than the corresponding pixel in the other low-frequency sub-band images. After calculating the CV of each pixel in each low-frequency sub-band image and using these values as weights, the fused low-frequency sub-band image is acquired as follows:

where m represents the number of original images, and L is the low-frequency sub-band image decomposed by NSST.
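A minimal sketch of the low-frequency rule follows. It assumes that the fused coefficient is the CV-weighted average of the m low-frequency coefficients, normalized by the sum of the weights, and it uses a 3×3 window (`radius=1`), which is a hypothetical choice rather than the window used in the paper. Sub-band images are plain nested lists so the example needs only the standard library; `local_cv` and `fuse_low_frequency` are illustrative names.

```python
import math


def local_cv(img, radius=1):
    """Coefficient of variation (std / mean) in a (2*radius+1)^2 window per pixel.

    Near the border only the part of the window inside the image is used.
    """
    h, w = len(img), len(img[0])
    cv = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - radius), min(h, y + radius + 1))
                    for xx in range(max(0, x - radius), min(w, x + radius + 1))]
            n = len(vals)
            mean = sum(vals) / n
            std = math.sqrt(sum((v - mean) ** 2 for v in vals) / n)
            # abs() keeps the weight non-negative; a zero mean gets zero weight
            cv[y][x] = std / abs(mean) if mean != 0 else 0.0
    return cv


def fuse_low_frequency(lows, radius=1, eps=1e-12):
    """Pixel-wise CV-weighted average of m low-frequency sub-band images."""
    weights = [local_cv(low, radius) for low in lows]
    h, w = len(lows[0]), len(lows[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            w_sum = sum(cv[y][x] for cv in weights)
            if w_sum < eps:
                # every window is flat: fall back to a plain average
                fused[y][x] = sum(low[y][x] for low in lows) / len(lows)
            else:
                fused[y][x] = sum(cv[y][x] * low[y][x]
                                  for cv, low in zip(weights, lows)) / w_sum
    return fused
```

For example, fusing a perfectly flat image with a textured one returns the textured image unchanged, because the flat image has zero CV and therefore zero weight everywhere.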

2.2.2. High-Frequency Fusion Rule

The high-frequency sub-band images contain detailed texture and contour features as well as interfering noise. To preserve the texture and contour features as much as possible while minimizing the effect of noise, we use a PC-weighted fusion method to fuse the high-frequency sub-band images.

Phase congruency (PC) is an image contour extraction method. Because PC analyzes the phase information of an image in the frequency domain, it is invariant to illumination changes [25] and has therefore been widely used in SAR image registration [26,27,28].

For the phase analysis, we first extract multi-frequency and multi-orientation phase information from the original SAR image. In practice, this is achieved by convolving the image with band-pass filters at multiple frequencies and orientations:

$$A_{\omega,\theta}(x,y)\,e^{\,i\phi_{\omega,\theta}(x,y)}=I(x,y)\otimes f_{\omega,\theta}(x,y)$$

where $I(x,y)$ represents the original SAR image; $A_{\omega,\theta}$ and $\phi_{\omega,\theta}$ represent the amplitude and phase at frequency $\omega$ and orientation $\theta$, respectively; and $f_{\omega,\theta}$ represents the band-pass filter in the spatial domain. The operator $\otimes$ denotes the convolution of two matrices. The oriented log-Gabor filter is suitable as the band-pass filter $f$. In the frequency domain, the oriented log-Gabor filter is defined as

$$G(\omega,\theta)=\exp\!\left(-\frac{\left(\log\left(\omega/\omega_{0}\right)\right)^{2}}{2\left(\log\sigma\right)^{2}}\right)D(\theta)$$

where $D(\theta)$ is the direction filter, $\omega_{0}$ is the central frequency of the log-Gabor filter, and $\sigma$ is a parameter that controls the bandwidth. The Fourier transform converts the oriented log-Gabor filter between the frequency domain and the spatial domain.
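The oriented log-Gabor transfer function can be evaluated point by point as below. The Gaussian form of the direction filter D(θ), the default values `sigma_ratio=0.55` and `sigma_theta=π/8`, and all function names are illustrative assumptions rather than the paper's settings; ω is treated as a normalized radial frequency.

```python
import math


def log_gabor_radial(omega, omega0, sigma_ratio):
    """Radial log-Gabor gain at radial frequency `omega`.

    `sigma_ratio` (0 < sigma_ratio < 1) controls the bandwidth: values farther
    below 1 give a wider band. The filter has no DC component, so omega <= 0 gives 0.
    """
    if omega <= 0:
        return 0.0
    return math.exp(-(math.log(omega / omega0) ** 2) / (2 * math.log(sigma_ratio) ** 2))


def angular_spread(theta, theta0, sigma_theta):
    """Gaussian direction filter D(theta) centred on orientation theta0 (assumed form)."""
    # wrap the angular distance into [-pi, pi] so that angles near +/-pi compare correctly
    d = math.atan2(math.sin(theta - theta0), math.cos(theta - theta0))
    return math.exp(-(d ** 2) / (2 * sigma_theta ** 2))


def oriented_log_gabor(omega, theta, omega0, theta0,
                       sigma_ratio=0.55, sigma_theta=math.pi / 8):
    """Oriented log-Gabor filter: radial part times directional part."""
    return (log_gabor_radial(omega, omega0, sigma_ratio)
            * angular_spread(theta, theta0, sigma_theta))
```

Because the radial part is Gaussian on a logarithmic frequency axis, half and double the centre frequency receive the same gain, which is the property that makes log-Gabor filters convenient for covering several octaves with a small filter bank.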

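Considering the effect of image noise, the phase deviation $\Delta\Phi_{\omega}(x,y,\theta)$ and the PC in orientation $\theta$ are defined as:

$$\Delta\Phi_{\omega}(x,y,\theta)=\cos\!\left(\phi_{\omega,\theta}(x,y)-\bar{\phi}_{\theta}(x,y)\right)-\left|\sin\!\left(\phi_{\omega,\theta}(x,y)-\bar{\phi}_{\theta}(x,y)\right)\right|$$

$$PC(x,y,\theta)=\frac{\sum_{\omega}W_{\theta}(x,y)\left\lfloor A_{\omega,\theta}(x,y)\,\Delta\Phi_{\omega}(x,y,\theta)-T\right\rfloor}{\sum_{\omega}A_{\omega,\theta}(x,y)+\varepsilon}$$

where $\bar{\phi}_{\theta}(x,y)$ is the mean phase angle, $W_{\theta}(x,y)$ is the weighting function related to the band-pass filter, $T$ is the estimated noise threshold, and $\varepsilon$ is a small constant that avoids division by zero. The operator $\lfloor\cdot\rfloor$ denotes that the enclosed quantity is equal to itself when its value is positive, and zero otherwise. If the $PC(x,y,\theta)$ value of a pixel is close to 1, the pixel has good phase congruency in orientation $\theta$ and is likely an edge pixel of the SAR image.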
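For a single pixel and orientation, the phase deviation and PC definitions can be sketched as follows, given the amplitudes and phases of the filter responses at each frequency. The weighting W and noise threshold T are passed in as constants here (in practice T is estimated from the image noise), and the function name is hypothetical.

```python
import math


def phase_congruency_at_orientation(amplitudes, phases, weight=1.0, noise_t=0.0, eps=1e-4):
    """PC of one pixel at one orientation from its multi-frequency filter responses.

    amplitudes[s], phases[s]: amplitude and phase of the response at frequency s.
    weight: the weighting W; noise_t: the noise threshold T; eps avoids division by zero.
    """
    # mean phase angle: direction of the summed complex response vector
    sum_re = sum(a * math.cos(p) for a, p in zip(amplitudes, phases))
    sum_im = sum(a * math.sin(p) for a, p in zip(amplitudes, phases))
    mean_phase = math.atan2(sum_im, sum_re)
    numerator = 0.0
    for a, p in zip(amplitudes, phases):
        # phase deviation: 1 when p equals the mean phase, smaller (or negative) otherwise
        dphi = math.cos(p - mean_phase) - abs(math.sin(p - mean_phase))
        # floor operator: keep only the positive part after subtracting the threshold
        numerator += weight * max(a * dphi - noise_t, 0.0)
    return numerator / (sum(amplitudes) + eps)
```

When all responses share the same phase, the result is close to 1; spreading the phases or raising the noise threshold lowers it toward 0.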

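Furthermore, by taking the orientation into account, we can distinguish edges from corners. The maximum moment PCM and the minimum moment PCm can be obtained as follows [29,30]:

$$\mathrm{PCM}=\frac{1}{2}\left(c+a+\sqrt{b^{2}+(a-c)^{2}}\right),\qquad \mathrm{PCm}=\frac{1}{2}\left(c+a-\sqrt{b^{2}+(a-c)^{2}}\right)$$

in which the three intermediate quantities are calculated by:

$$a=\sum_{\theta}\left(PC(\theta)\cos\theta\right)^{2},\qquad b=2\sum_{\theta}\left(PC(\theta)\cos\theta\right)\left(PC(\theta)\sin\theta\right),\qquad c=\sum_{\theta}\left(PC(\theta)\sin\theta\right)^{2}$$

where $PC(\theta)$ denotes the PC at orientation $\theta$.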
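The moment computation at one pixel is sketched below; `pc_moments` is a hypothetical helper that takes the PC value at each filter orientation. A single dominant orientation yields a large PCM and a near-zero PCm (an edge), while equal PC in all orientations makes both moments large (a corner).

```python
import math


def pc_moments(pc_by_orientation):
    """Maximum and minimum moments of phase congruency at one pixel.

    pc_by_orientation: dict {theta in radians: PC value at that orientation}.
    Returns (PCM, PCm); large PCM marks an edge, large PCm marks a corner.
    """
    items = pc_by_orientation.items()
    a = sum((pc * math.cos(t)) ** 2 for t, pc in items)
    b = 2 * sum((pc * math.cos(t)) * (pc * math.sin(t)) for t, pc in items)
    c = sum((pc * math.sin(t)) ** 2 for t, pc in items)
    root = math.sqrt(b ** 2 + (a - c) ** 2)
    return 0.5 * (c + a + root), 0.5 * (c + a - root)
```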

PCM and PCm represent the edge and corner maps of the original SAR image, respectively. In this paper, we select PCM as the output of the PC method. To make the contours more visible, PCM is optimized as follows:

Finally, PCM is used as the weight of each high-frequency sub-band image, and the fused high-frequency sub-band images are obtained as follows:

where m denotes the number of original images, H is a high-frequency sub-band image decomposed by NSST, and j and l represent the scale and direction in the NSST, respectively.
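A sketch of the high-frequency rule, assuming (as for the low-frequency rule) a normalized weighted average in which each source image's PCM map weights all of its sub-bands at every scale j and direction l. The PCM optimization step is not modelled, and the data layout (a dictionary keyed by (j, l)) and function name are hypothetical choices.

```python
def fuse_high_frequency(subbands, pcm_maps, eps=1e-12):
    """PCM-weighted fusion of NSST high-frequency sub-bands.

    subbands: list over the m source images; each item is a dict {(j, l): 2-D list}
              holding the sub-band at scale j and direction l.
    pcm_maps: list over the m source images of 2-D PCM weight maps (same size).
    Returns a dict {(j, l): fused 2-D list}.
    """
    h, w = len(pcm_maps[0]), len(pcm_maps[0][0])
    fused = {}
    for key in subbands[0]:
        band = [[0.0] * w for _ in range(h)]
        for y in range(h):
            for x in range(w):
                w_sum = sum(p[y][x] for p in pcm_maps)
                if w_sum < eps:
                    # no edge evidence in any source: average the coefficients
                    band[y][x] = sum(s[key][y][x] for s in subbands) / len(subbands)
                else:
                    band[y][x] = sum(p[y][x] * s[key][y][x]
                                     for p, s in zip(pcm_maps, subbands)) / w_sum
        fused[key] = band
    return fused
```

Sharing one weight map across all (j, l) keeps the weights consistent between scales, so a pixel judged to lie on a strong contour in one source dominates every directional sub-band at that location.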

So far, we have obtained the fused low-frequency sub-band image and the fused high-frequency sub-band images. The inverse NSST is then applied to these sub-band images to produce the fused multi-band and polarization SAR image. Subsequently, we extract the band and polarization difference information from the original multi-band and polarization SAR images to colorize the fused SAR image.

2.3. Coloring Rules

2.3.1. Band Difference Extraction

Since the penetration of the detecting microwaves varies with frequency, a masked object shows different intensities in the multi-band SAR images. We can locate such objects by extracting the band difference map between the images. The band difference is extracted as follows:

in which the

and

represent the different band SAR images at

P1 and

P2 polarization, respectively. If there are more than two bands, the band difference between each pair of bands should be calculated. Then the per-pixel maximum of these band differences is retained to obtain the final band difference map.

However, much of the noise in the SAR images is retained in the band differences, which reduces the quality of the band difference map. To reduce the impact of noise, we calculate the mean value of the band difference map. Values below this mean are set to zero, and the map is then convolved with a mean filter.
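The band-difference extraction can be sketched as follows. Because the difference equations are not reproduced above, the sketch assumes the per-pixel absolute intensity difference between two bands at the same polarization, takes the maximum over all band pairs and polarizations, and uses a 3×3 mean filter; these choices and the function names are assumptions for illustration.

```python
from itertools import combinations


def mean_filter(img, radius=1):
    """Box (mean) filter; near the border only in-image pixels are averaged."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - radius), min(h, y + radius + 1))
                    for xx in range(max(0, x - radius), min(w, x + radius + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out


def band_difference_map(images, radius=1):
    """images: {band: {polarization: 2-D list}}, co-registered and equally sized."""
    bands = list(images)
    pols = list(images[bands[0]])
    first = images[bands[0]][pols[0]]
    h, w = len(first), len(first[0])
    diff = [[0.0] * w for _ in range(h)]
    # per-pixel maximum of |I_b1 - I_b2| over every band pair and polarization
    for b1, b2 in combinations(bands, 2):
        for p in pols:
            i1, i2 = images[b1][p], images[b2][p]
            for y in range(h):
                for x in range(w):
                    diff[y][x] = max(diff[y][x], abs(i1[y][x] - i2[y][x]))
    # noise suppression: zero out values below the map mean, then smooth
    mean = sum(map(sum, diff)) / (h * w)
    diff = [[v if v >= mean else 0.0 for v in row] for row in diff]
    return mean_filter(diff, radius)
```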

2.3.2. Polarization Difference Extraction

Regions with different surface structures present different polarization information. Therefore, we can use color saturation [31] to extract a polarization difference map in which different regions are easy to distinguish.

The color saturation of each pixel in the polarization difference map is calculated as:

in which

denotes a certain band SAR image at polarization

P. If a pixel has high saturation, its intensity varies sharply among the multi-polarization SAR images, indicating that the pixel belongs to a special region. We then calculate the mean saturation over the polarization difference map. To reduce the effect of noise, values below this mean are set to zero, and the map is then convolved with a mean filter.

Finally, the per-pixel maximum of the saturation maps over all bands is retained to obtain the final polarization difference map.
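A similar sketch for the polarization difference map, assuming the HSV-style saturation S = (max − min) / max of the (HH, HV, VV) triple at each pixel and a 3×3 mean filter. The saturation formula used in the paper may differ (for example, the HSI definition), so `saturation`, `polarization_difference_map`, and the window size are hypothetical.

```python
def saturation(hh, hv, vv):
    """HSV-style saturation of one pixel whose RGB channels are (HH, HV, VV)."""
    top = max(hh, hv, vv)
    return 0.0 if top == 0 else (top - min(hh, hv, vv)) / top


def mean_filter(img, radius=1):
    """Box (mean) filter; near the border only in-image pixels are averaged."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - radius), min(h, y + radius + 1))
                    for xx in range(max(0, x - radius), min(w, x + radius + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out


def polarization_difference_map(images, radius=1):
    """images: {band: {"HH": 2-D list, "HV": 2-D list, "VV": 2-D list}}."""
    result = None
    for pols in images.values():
        hh, hv, vv = pols["HH"], pols["HV"], pols["VV"]
        h, w = len(hh), len(hh[0])
        sat = [[saturation(hh[y][x], hv[y][x], vv[y][x]) for x in range(w)]
               for y in range(h)]
        # noise suppression: zero out values below the mean, then smooth
        mean = sum(map(sum, sat)) / (h * w)
        sat = mean_filter([[v if v >= mean else 0.0 for v in row] for row in sat], radius)
        # keep the per-pixel maximum across bands
        result = sat if result is None else [[max(a, b) for a, b in zip(r1, r2)]
                                             for r1, r2 in zip(result, sat)]
    return result
```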

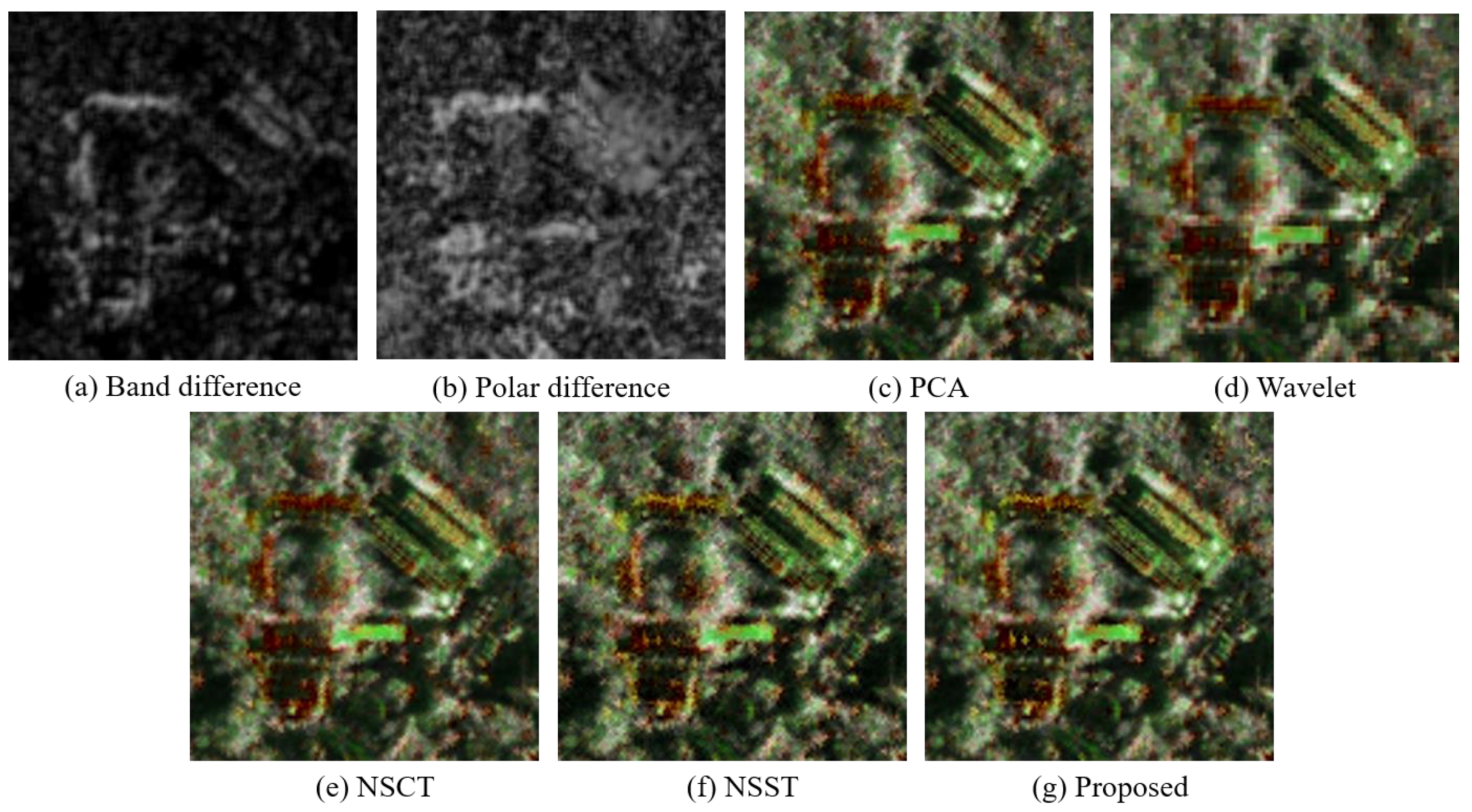

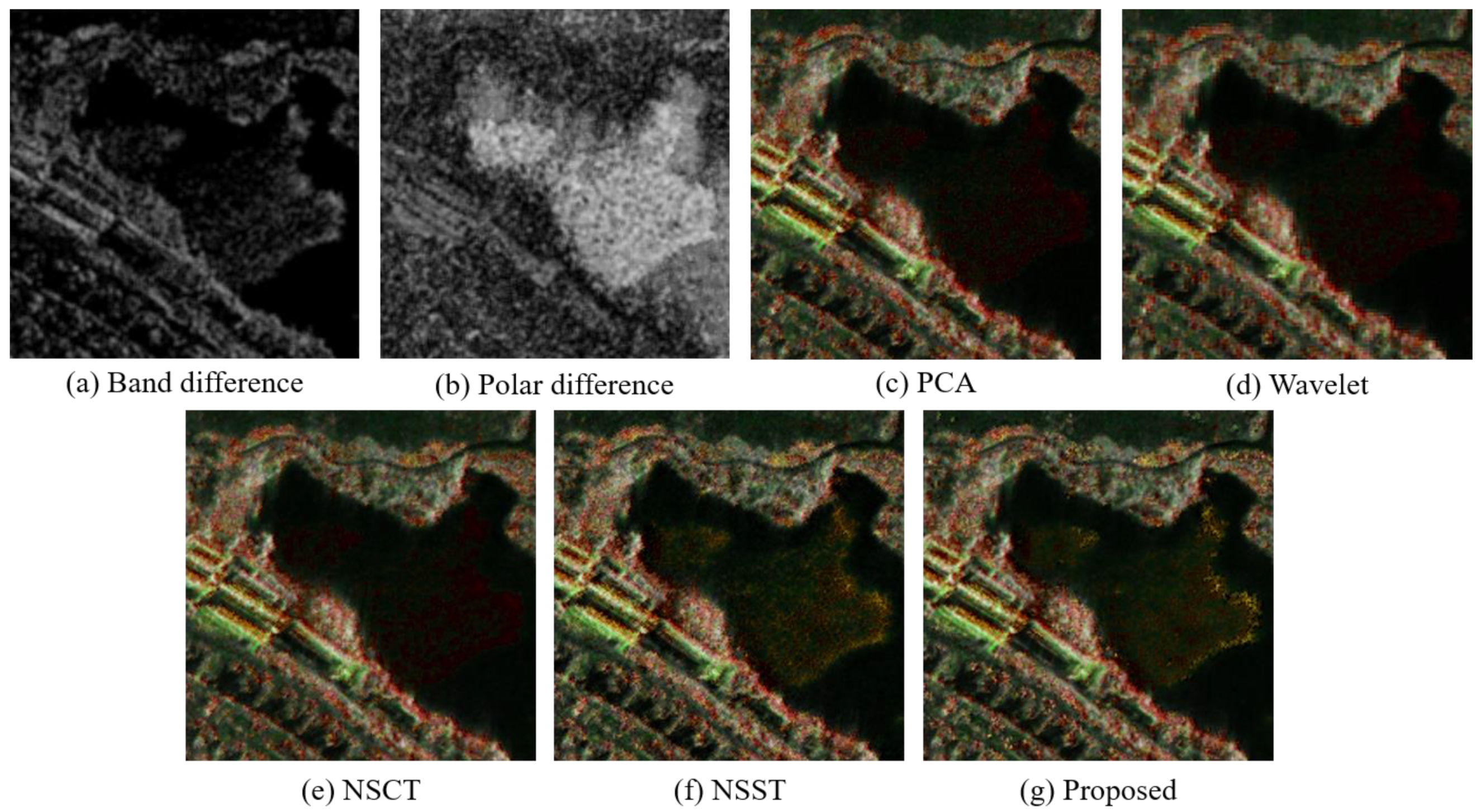

2.3.3. Coloring Rules of Difference Information

So far, we have obtained the fused SAR image, the band difference map, and the polarization difference map from the multi-band and polarization SAR images. Next, the band and polarization difference maps are expressed on the fused SAR image in different colors.

Because blue has low perceived brightness and is poorly distinguished by the human eye, we use red and green to express the band and polarization difference maps, respectively. Regions with both band and polarization differences therefore appear yellow.

Since the polarization difference map is expressed as saturation, it is first transformed into an intensity difference as follows:

in which F is the fused SAR image. The intensity of each color channel of the fused SAR image is assigned according to the following rules:

After that, we obtain the colorized fused SAR image that contains band and polarization differences information.
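The coloring equations themselves are not reproduced above, so the sketch below uses a purely hypothetical additive rule to show the intended effect: the band difference raises the red channel, the polarization difference (converted to intensity by scaling the fused pixel with its saturation, also an assumption) raises the green channel, and blue keeps the fused intensity. Pixels with both differences therefore turn yellowish.

```python
def colorize(fused, band_diff, pol_sat, max_value=255.0):
    """Hypothetical red/green overlay of difference maps on a grayscale fused image.

    fused, band_diff, pol_sat: equally sized 2-D lists; pol_sat holds saturations in [0, 1].
    Returns a 2-D list of (R, G, B) tuples clipped to max_value.
    """
    out = []
    for f_row, b_row, s_row in zip(fused, band_diff, pol_sat):
        row = []
        for f, db, s in zip(f_row, b_row, s_row):
            dp = s * f  # hypothetical saturation-to-intensity conversion
            r = min(f + db, max_value)  # band difference -> red
            g = min(f + dp, max_value)  # polarization difference -> green
            row.append((r, g, min(f, max_value)))
        out.append(row)
    return out
```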

The specific flow chart of multi-band and polarization SAR image fusion in this paper is shown in Figure 2.

2.4. Datasets

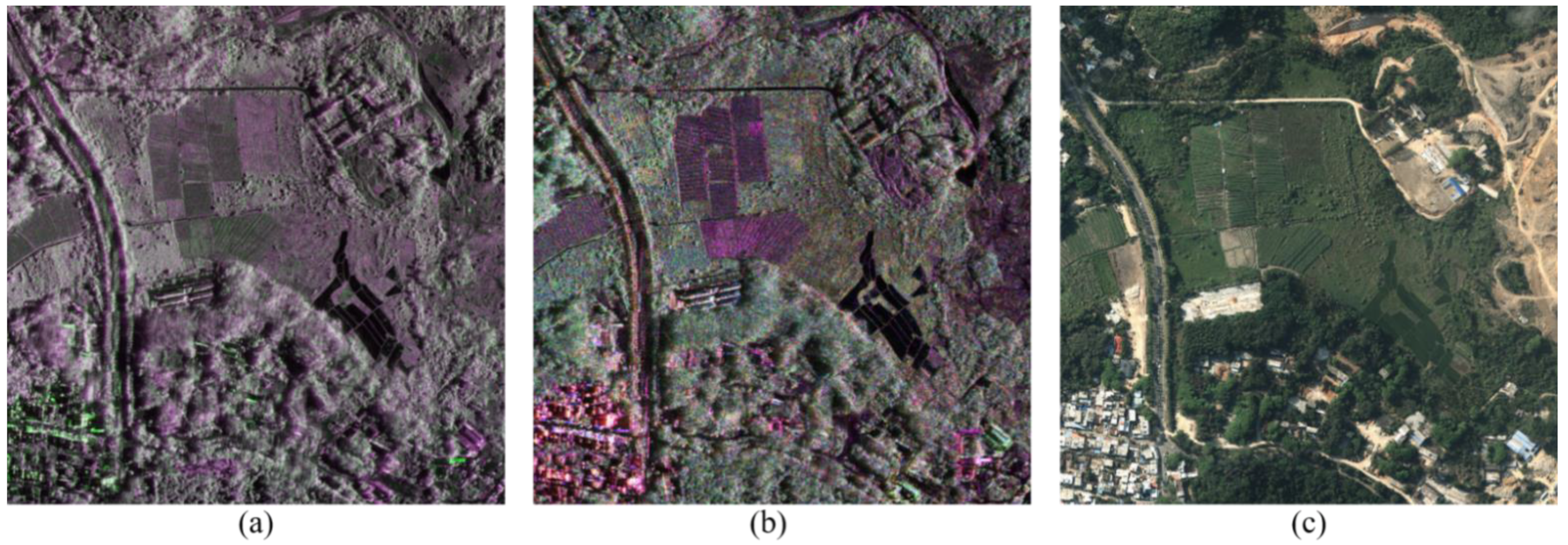

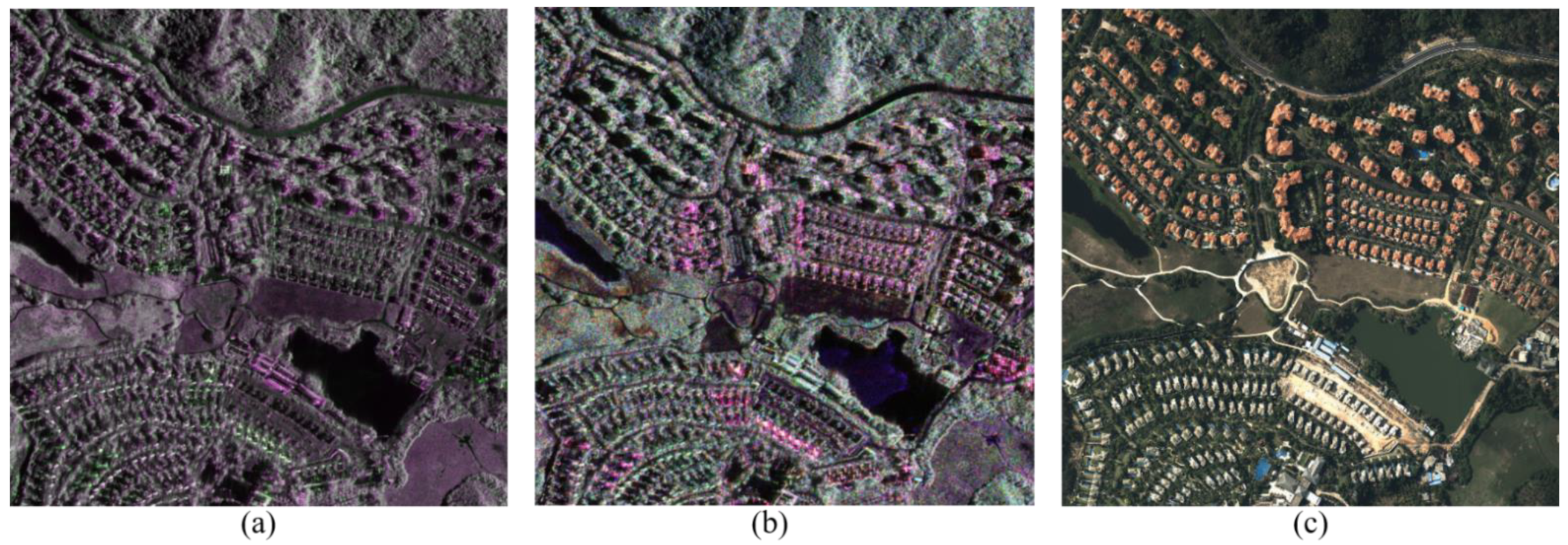

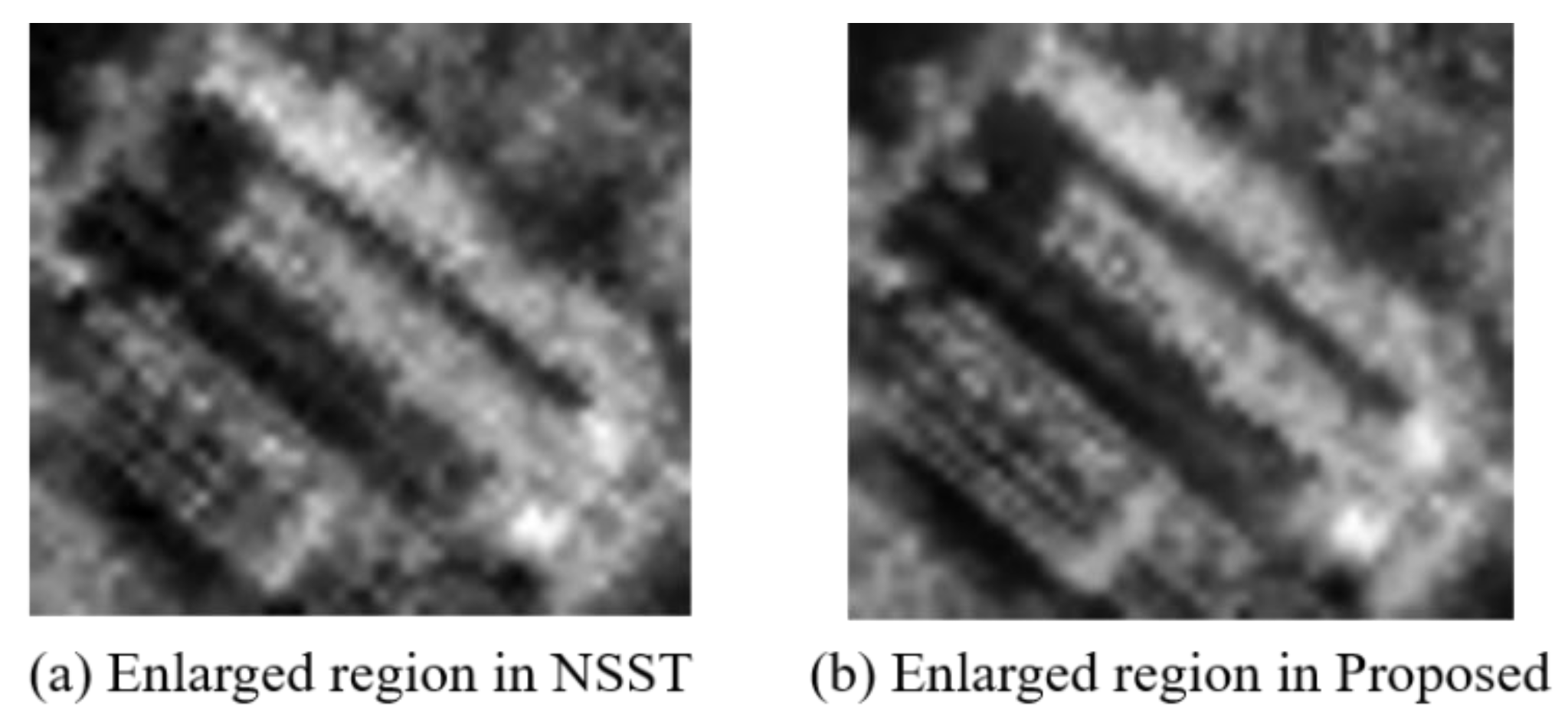

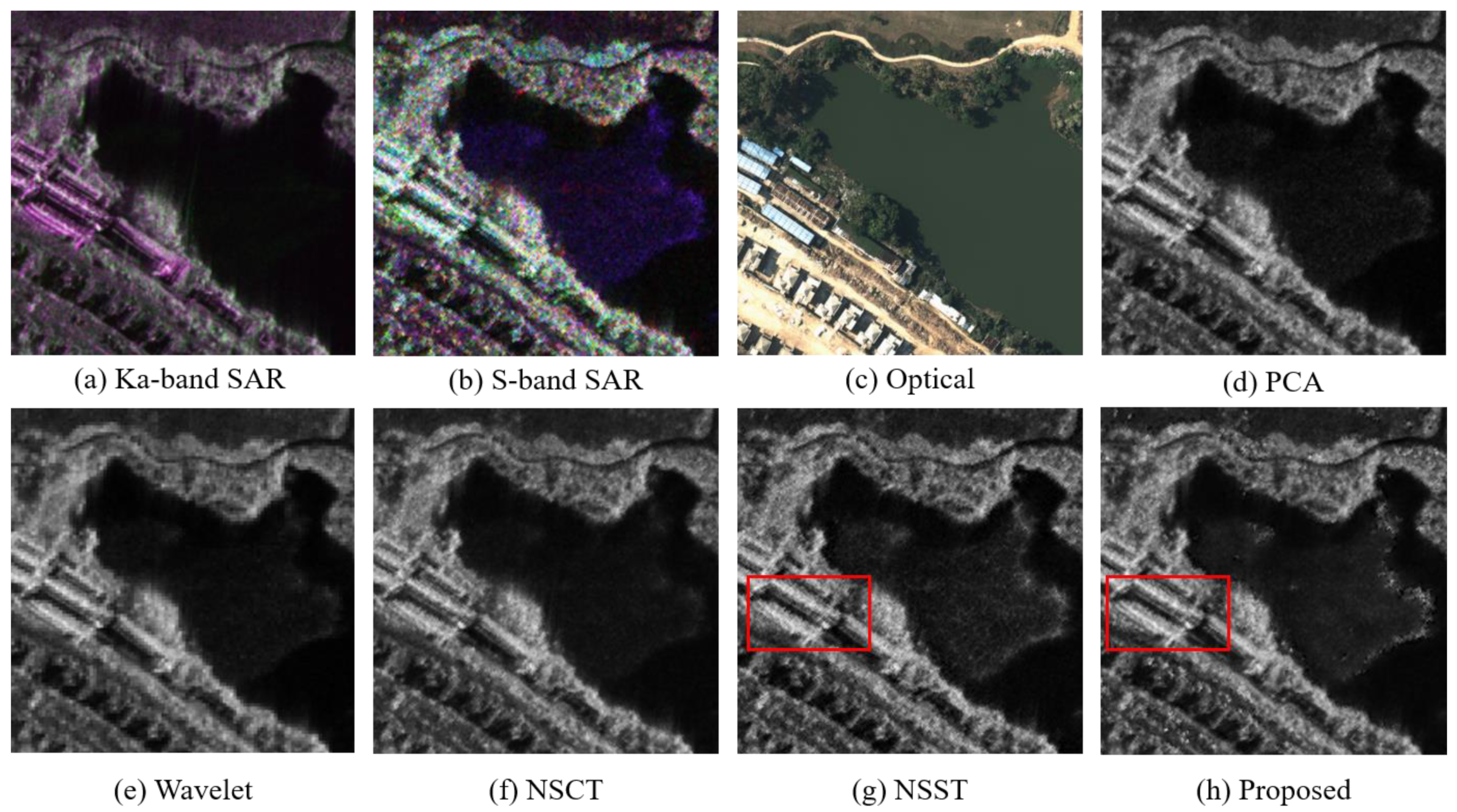

Two pairs of airborne SAR images were selected for the fusion quality analysis. Each image pair contains SAR images in two bands (Ka and S-band) and three polarizations (HH, HV, and VV). The polarization information is displayed in different colors: HH in red, HV in green, and VV in blue. The multi-band SAR images were resized and registered. Each SAR image had a size of  and a resolution of 1 m. We also provide optical images of the same scenes as references. All images were acquired during an airplane flight on 14 March 2021.

The two pairs of Ka- and S-band multi-polarization SAR images are shown in Figure 3a,b and Figure 4a,b, respectively. Since the frequency of Ka-band microwaves is higher than that of S-band microwaves, the Ka-band SAR image has a higher resolution [1,2,3]. After resizing, the Ka-band multi-polarization SAR images appear sharper than the S-band ones. Moreover, because the electromagnetic scattering characteristics of objects vary with the frequency of the detecting microwaves, the detailed textures of objects in the Ka- and S-band multi-polarization SAR images differ significantly. Furthermore, the polarization information of the Ka- and S-band SAR images is also inconsistent. Since different kinds of objects present different polarization information, regions such as farmland or buildings can easily be distinguished in the images by their colors.

2.5. Evaluation Indexes

Several statistical indexes are used to evaluate the fused images: average gradient (AG), information entropy (IE), standard deviation (STD), correlation coefficient (CC), and structural similarity index measure (SSIM) [4,9]. They are defined as follows.

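AG represents the detail-describing ability of the fused image. It is obtained by calculating the mean of the image gradients:

$$AG=\frac{1}{(M-1)(N-1)}\sum_{x=1}^{M-1}\sum_{y=1}^{N-1}\sqrt{\frac{G_{v}^{2}(x,y)+G_{h}^{2}(x,y)}{2}}$$

where $M\times N$ is the image size, and $G_{v}$ and $G_{h}$ are the gradients of pixels in the vertical and horizontal directions, respectively. $G_{v}$ and $G_{h}$ can be acquired by convolving the image with vertical and horizontal gradient kernels, respectively.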
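A sketch of AG, assuming forward-difference gradients (the specific kernels used in the paper are not reproduced) and averaging over the (M−1)×(N−1) pixels that have both a lower and a right neighbor.

```python
import math


def average_gradient(img):
    """AG with forward-difference gradients over the (M-1) x (N-1) grid."""
    h, w = len(img), len(img[0])
    total = 0.0
    for y in range(h - 1):
        for x in range(w - 1):
            g_v = img[y + 1][x] - img[y][x]  # vertical difference
            g_h = img[y][x + 1] - img[y][x]  # horizontal difference
            total += math.sqrt((g_v ** 2 + g_h ** 2) / 2.0)
    return total / ((h - 1) * (w - 1))
```

A flat image has AG = 0, while a horizontal ramp rising by one gray level per column has AG = √0.5, since every pixel has a unit horizontal and zero vertical difference.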

IE is the most intuitive standard for reflecting the amount of image information. Information entropy is calculated as:

$$IE=-\sum_{i=0}^{L}p_{i}\log_{2}p_{i}$$

where $L$ is the dynamic range of the analyzed image, and $p_{i}$ is the probability of occurrence of the $i$-th gray level. In an 8-bit image, $L$ is 255.
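Information entropy can be computed from the gray-level histogram as follows. Gray levels that never occur contribute nothing (the usual 0·log 0 = 0 convention), which is why the sketch sums only over observed levels.

```python
import math
from collections import Counter


def information_entropy(img):
    """Shannon entropy (bits) of the gray-level histogram of an integer image."""
    counts = Counter(v for row in img for v in row)
    n = sum(counts.values())
    # only observed levels are summed: levels with p_i = 0 contribute 0 * log 0 = 0
    return -sum((c / n) * math.log2(c / n) for c in counts.values())
```

An 8-bit image in which all 256 gray levels are equally frequent reaches the maximum value of 8 bits.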

STD is a measure of contrast in the fused image. High contrast in the fused image indicates information richness. The standard deviation is calculated as follows:

$$STD=\sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(F_{i}-\mu\right)^{2}}$$

where $F_{i}$ is the $i$-th pixel of the fused image $F$, $\mu$ is the mean value of $F$, and $N$ is the number of pixels in the fused image.
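The STD definition above, with its 1/N normalization, translates directly into a short helper (the function name is illustrative):

```python
import math


def standard_deviation(img):
    """Standard deviation of all pixels of the fused image F, normalized by N."""
    vals = [v for row in img for v in row]
    n = len(vals)
    mu = sum(vals) / n
    return math.sqrt(sum((v - mu) ** 2 for v in vals) / n)
```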

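CC is a measure of the correlation between the reference image and the fused image. It is calculated as follows:

$$CC=\frac{\sum_{x,y}\left(F(x,y)-\bar{F}\right)\left(R(x,y)-\bar{R}\right)}{\sqrt{\sum_{x,y}\left(F(x,y)-\bar{F}\right)^{2}\sum_{x,y}\left(R(x,y)-\bar{R}\right)^{2}}}$$

where $F$ and $R$ are the fused and reference images, respectively, and $\bar{F}$ and $\bar{R}$ are the mean values of the fused and reference images, respectively.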
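CC between two equally sized images can be sketched as follows, following the formula above. The sketch raises an error when either image is constant, because the coefficient is then undefined.

```python
import math


def correlation_coefficient(fused, ref):
    """Pearson correlation between the fused image F and the reference image R."""
    f = [v for row in fused for v in row]
    r = [v for row in ref for v in row]
    mf, mr = sum(f) / len(f), sum(r) / len(r)
    num = sum((a - mf) * (b - mr) for a, b in zip(f, r))
    den = math.sqrt(sum((a - mf) ** 2 for a in f) * sum((b - mr) ** 2 for b in r))
    if den == 0:
        raise ValueError("CC is undefined when an image is constant")
    return num / den
```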

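SSIM measures the structural similarity between the reference image and the fused image. It is calculated using the following equation:

$$SSIM(f,r)=\frac{\left(2\mu_{f}\mu_{r}+C_{1}\right)\left(2\sigma_{fr}+C_{2}\right)}{\left(\mu_{f}^{2}+\mu_{r}^{2}+C_{1}\right)\left(\sigma_{f}^{2}+\sigma_{r}^{2}+C_{2}\right)}$$

where $f$ and $r$ represent the fused and reference images, respectively; $\mu_{f}$ and $\mu_{r}$ are their mean values; $\sigma_{f}^{2}$ and $\sigma_{r}^{2}$ are their variances; $\sigma_{fr}$ is the covariance between them; and $C_{1}$ and $C_{2}$ are small constants that stabilize the division when the denominator is close to zero.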
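The SSIM formula above can be evaluated once over whole images as sketched below. Many SSIM implementations instead compute the index in local sliding windows and average it, so this global version is a simplification. The constants C1 = (0.01·255)² and C2 = (0.03·255)² are common defaults, assumed here rather than taken from the paper.

```python
def ssim_global(f, r, dynamic_range=255.0):
    """SSIM of the fused image f against the reference r, using one global window."""
    fv = [v for row in f for v in row]
    rv = [v for row in r for v in row]
    n = len(fv)
    mu_f, mu_r = sum(fv) / n, sum(rv) / n
    var_f = sum((a - mu_f) ** 2 for a in fv) / n
    var_r = sum((b - mu_r) ** 2 for b in rv) / n
    cov = sum((a - mu_f) * (b - mu_r) for a, b in zip(fv, rv)) / n
    c1 = (0.01 * dynamic_range) ** 2  # assumed default stabilizing constants
    c2 = (0.03 * dynamic_range) ** 2
    return (((2 * mu_f * mu_r + c1) * (2 * cov + c2))
            / ((mu_f ** 2 + mu_r ** 2 + c1) * (var_f + var_r + c2)))
```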

It should be noted that if the input image is in color, the image needs to be converted to a grayscale image first.