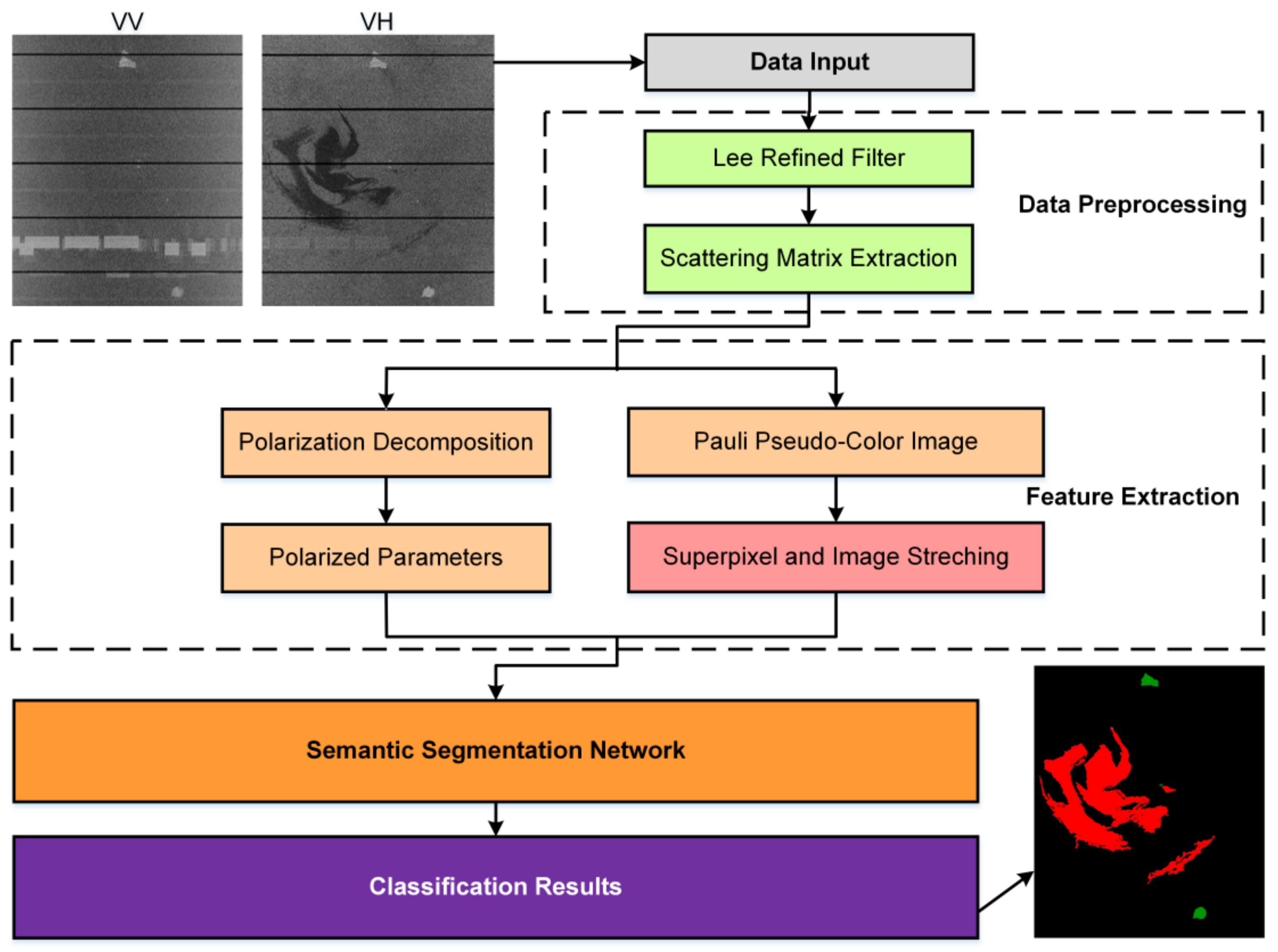

Figure 1.

Overall flowchart of the proposed oil spill detection algorithm.

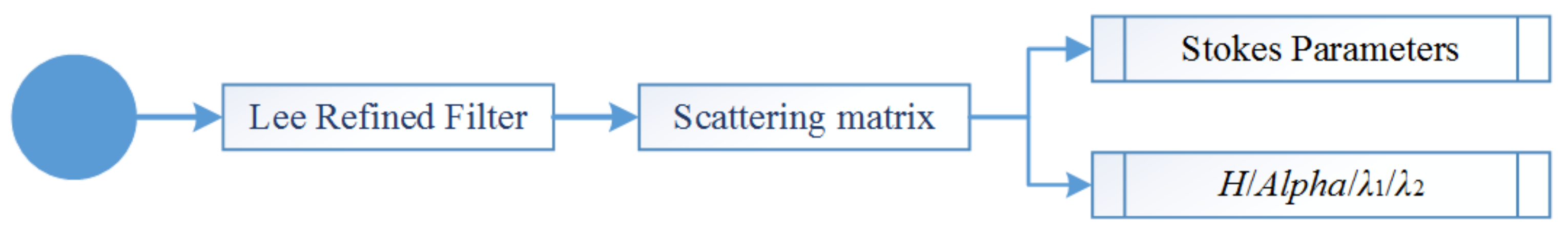

Figure 2.

Polarization decomposition parameters: H/Alpha decomposition and Stokes parameters.
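
Sentinel-1 IW products are dual-polarized, so the H/Alpha and Stokes parameters in Figure 2 can be derived from a 2 × 2 covariance matrix. The Python sketch below illustrates this under stated assumptions and is not the paper's implementation. It assumes a scattering vector k = [S_VV, S_VH]ᵀ averaged over a local window, a base-2 logarithm for the entropy H, Alpha reported in degrees, and one common sign convention for g3 (references differ). The helper names `c2_matrix`, `h_alpha`, and `stokes` are hypothetical. Stokes-contrast is omitted because its definition is not given here.

```python
import math


def c2_matrix(samples):
    """Window-averaged 2x2 covariance matrix <k k^H> for k = [S_VV, S_VH]^T.

    `samples` is a list of (s_vv, s_vh) complex values (assumed calibrated SLC data).
    Returns (c11, c12, c22); c11 and c22 are real, and c21 = conj(c12).
    """
    n = len(samples)
    c11 = sum(abs(vv) ** 2 for vv, _ in samples) / n
    c22 = sum(abs(vh) ** 2 for _, vh in samples) / n
    c12 = sum(vv * vh.conjugate() for vv, vh in samples) / n
    return c11, c12, c22


def h_alpha(c11, c12, c22):
    """Eigenvalues, entropy H (base-2 log) and mean Alpha angle (degrees)."""
    trace = c11 + c22
    root = math.sqrt(((c11 - c22) / 2) ** 2 + abs(c12) ** 2)
    lam1, lam2 = trace / 2 + root, trace / 2 - root
    probs = [lam1 / trace, lam2 / trace]
    entropy = -sum(p * math.log2(p) for p in probs if p > 0)
    if abs(c12) < 1e-15:
        # Diagonal matrix: the eigenvectors are the unit axes.
        vecs = [(1, 0), (0, 1)] if c11 >= c22 else [(0, 1), (1, 0)]
    else:
        # (c12, lam - c11) solves (C - lam*I) v = 0 for the 2x2 Hermitian C.
        vecs = [(c12, lam - c11) for lam in (lam1, lam2)]
    alpha = 0.0
    for (v1, v2), p in zip(vecs, probs):
        norm = math.sqrt(abs(v1) ** 2 + abs(v2) ** 2)
        alpha += p * math.degrees(math.acos(min(1.0, abs(v1) / norm)))
    return lam1, lam2, entropy, alpha


def stokes(c11, c12, c22):
    """Stokes vector (g0, g1, g2, g3); the sign of g3 is convention-dependent."""
    return c11 + c22, c11 - c22, 2 * c12.real, -2 * c12.imag


if __name__ == "__main__":
    # Equal, uncorrelated VV and VH power: maximal entropy and Alpha of 45 degrees.
    _, _, H, alpha = h_alpha(*c2_matrix([(1 + 0j, 0j), (0j, 1 + 0j)]))
    print(round(H, 3), round(alpha, 3))  # 1.0 45.0
```

With these conventions g0 equals λ1 + λ2, the trace of the matrix; the clean-sea averages in Table 5 follow this relation (0.0104 + 0.0014 = 0.0118).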

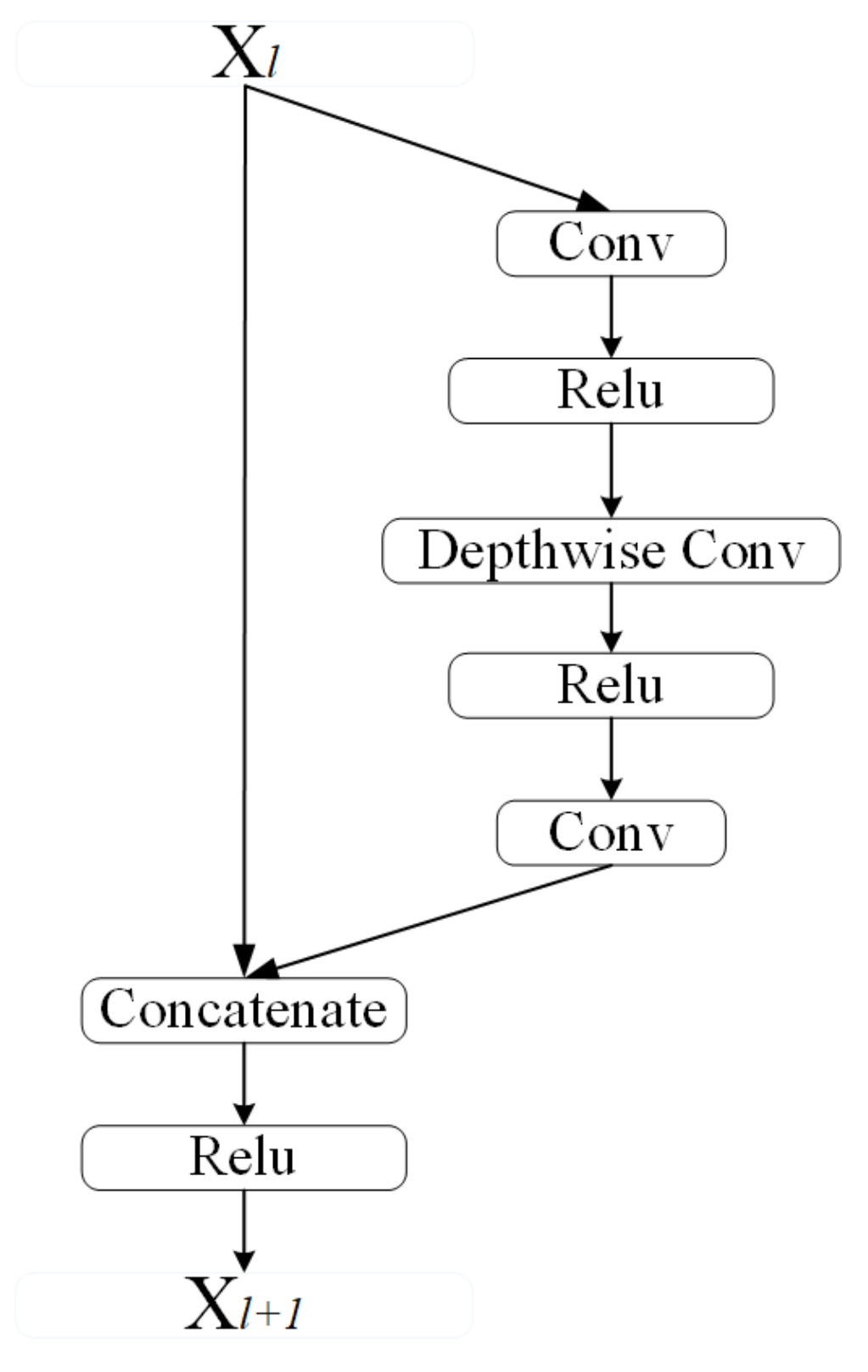

Figure 3.

Detailed structure of Resblock.

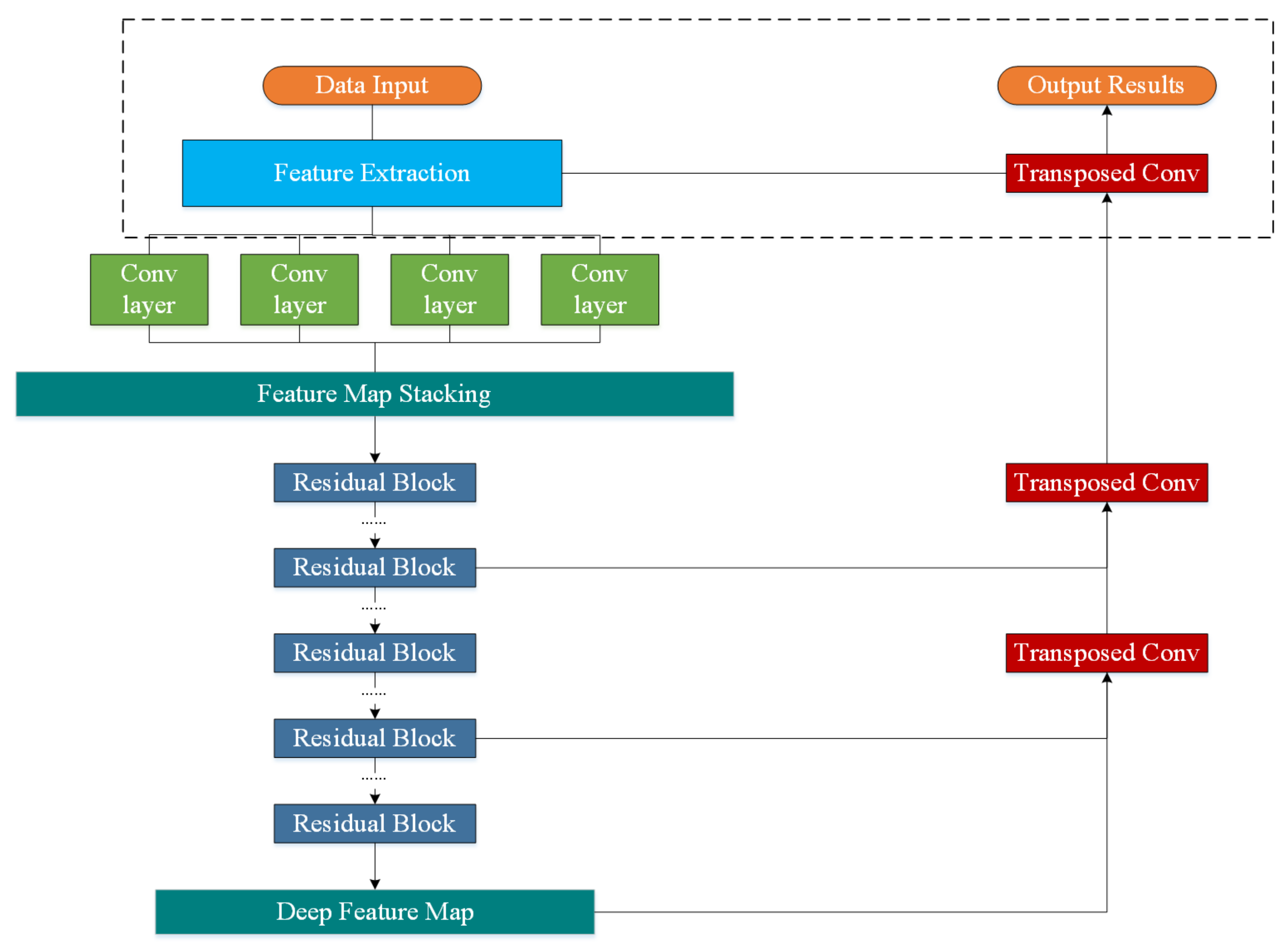

Figure 4.

Structure diagram of neural network model.

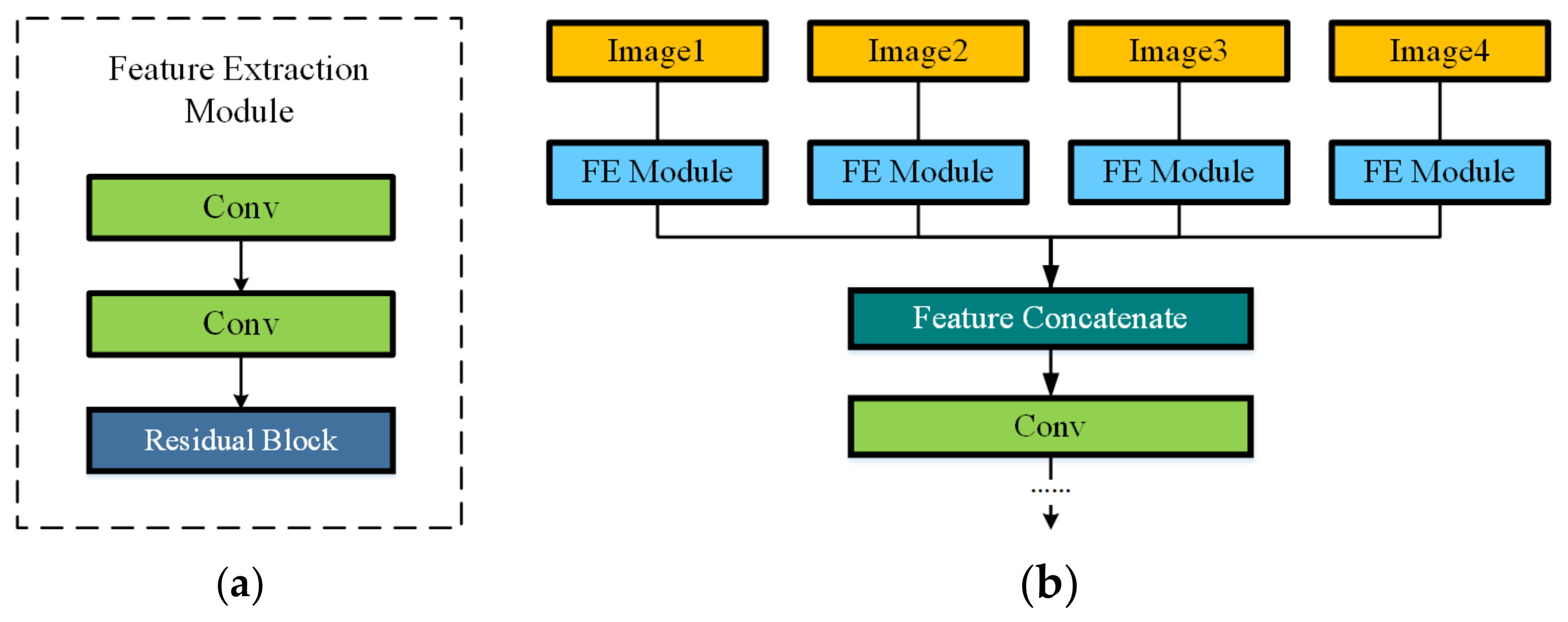

Figure 5.

Structure of feature extraction module and data input/output segments: (a) feature extraction module; (b) data input segment; (c) data output segment.

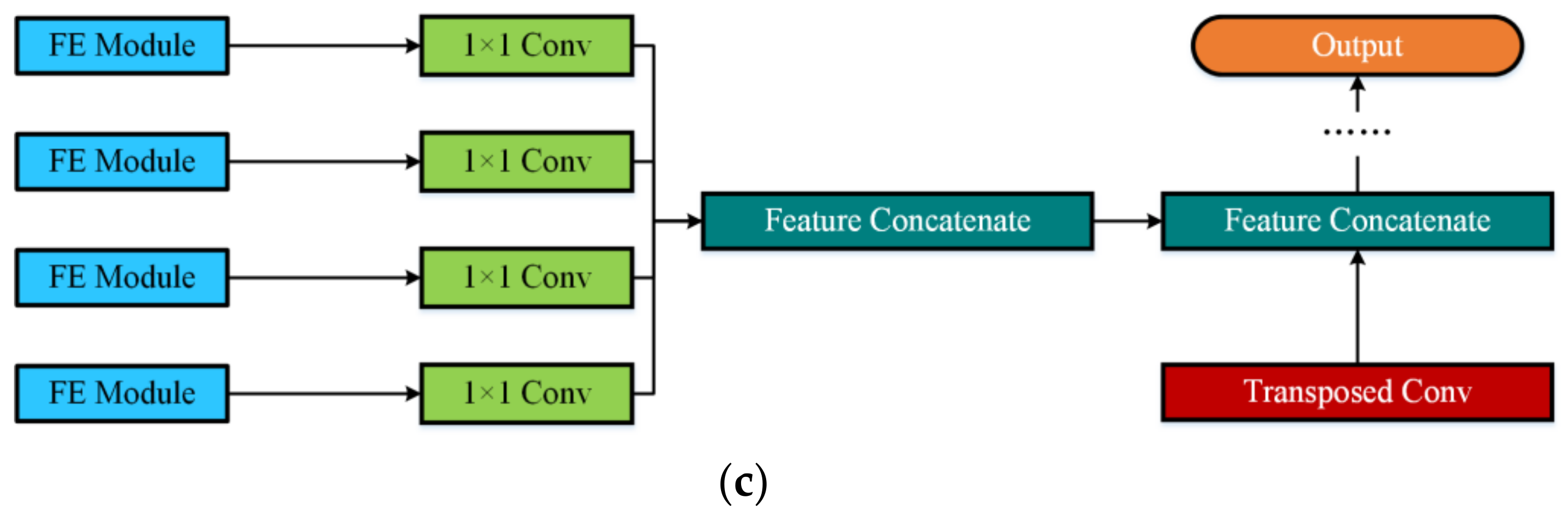

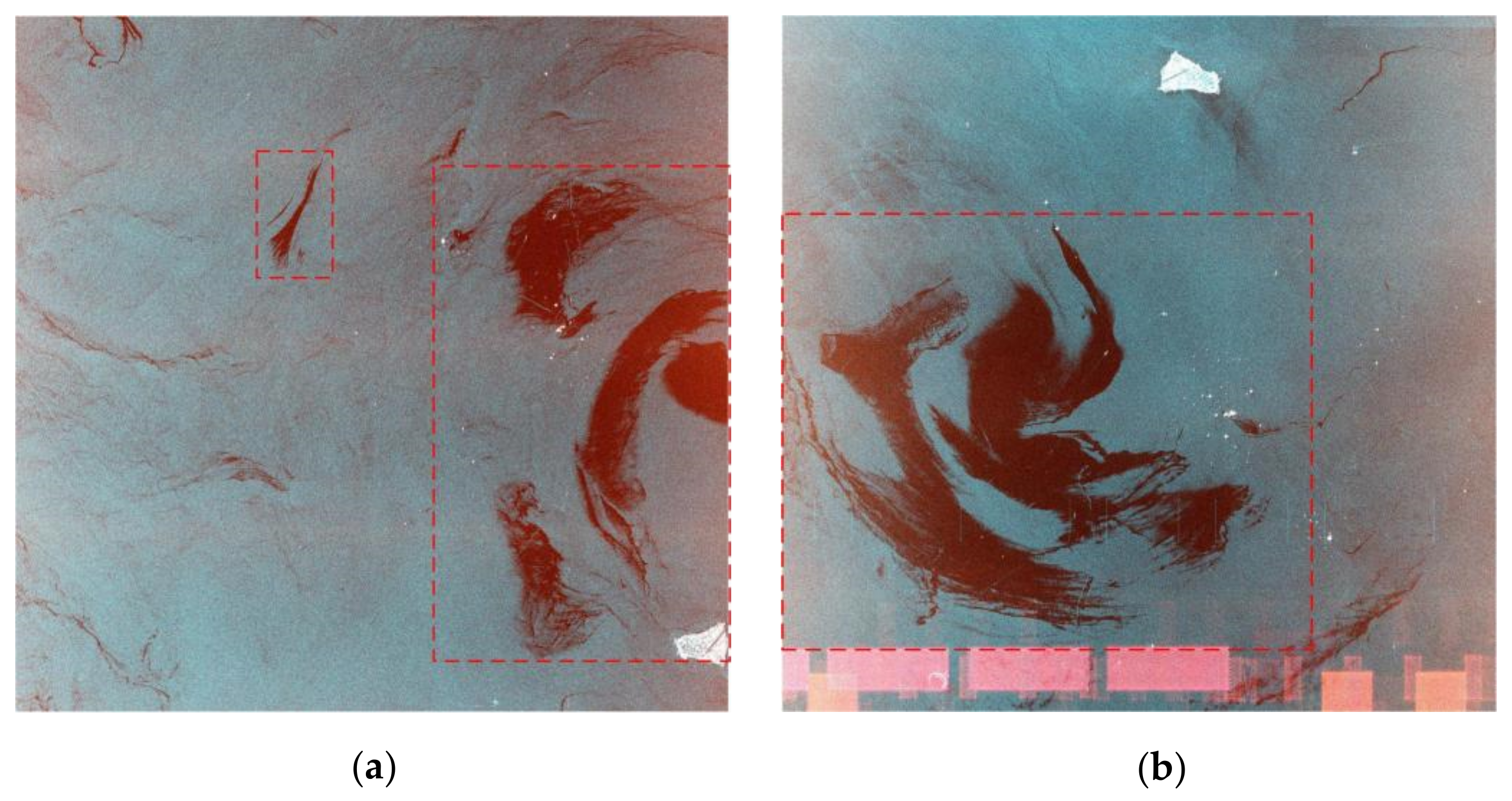

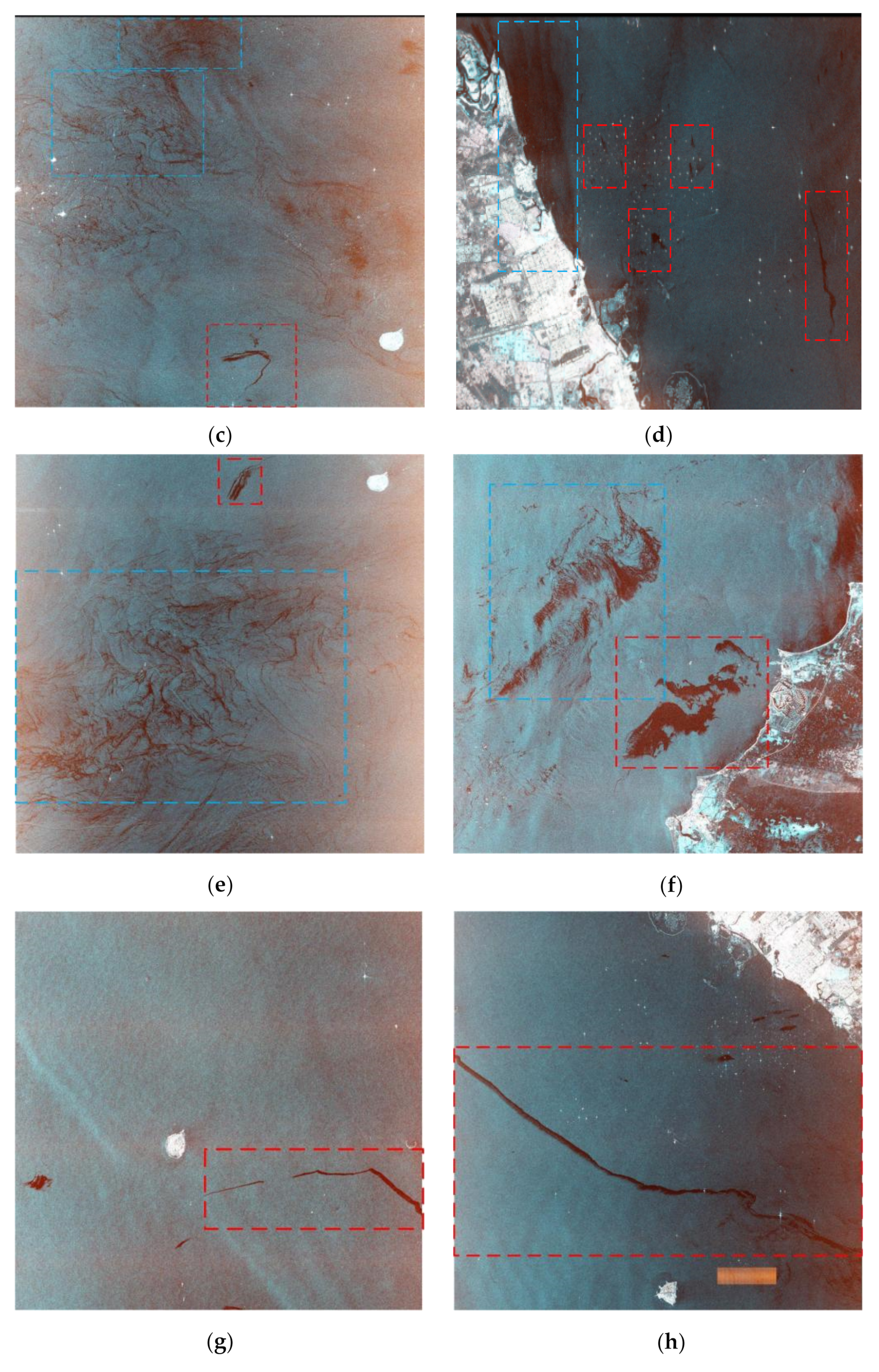

Figure 6.

Pseudo-color images. The dark spots within red dotted boxes represent oil spill areas, while blue dotted boxes mark look-alike areas: (a) Image 1, (b) Image 2, (c) Image 3, (d) Image 4, (e) Image 5, (f) Image 6, (g) Image 7, and (h) Image 8.

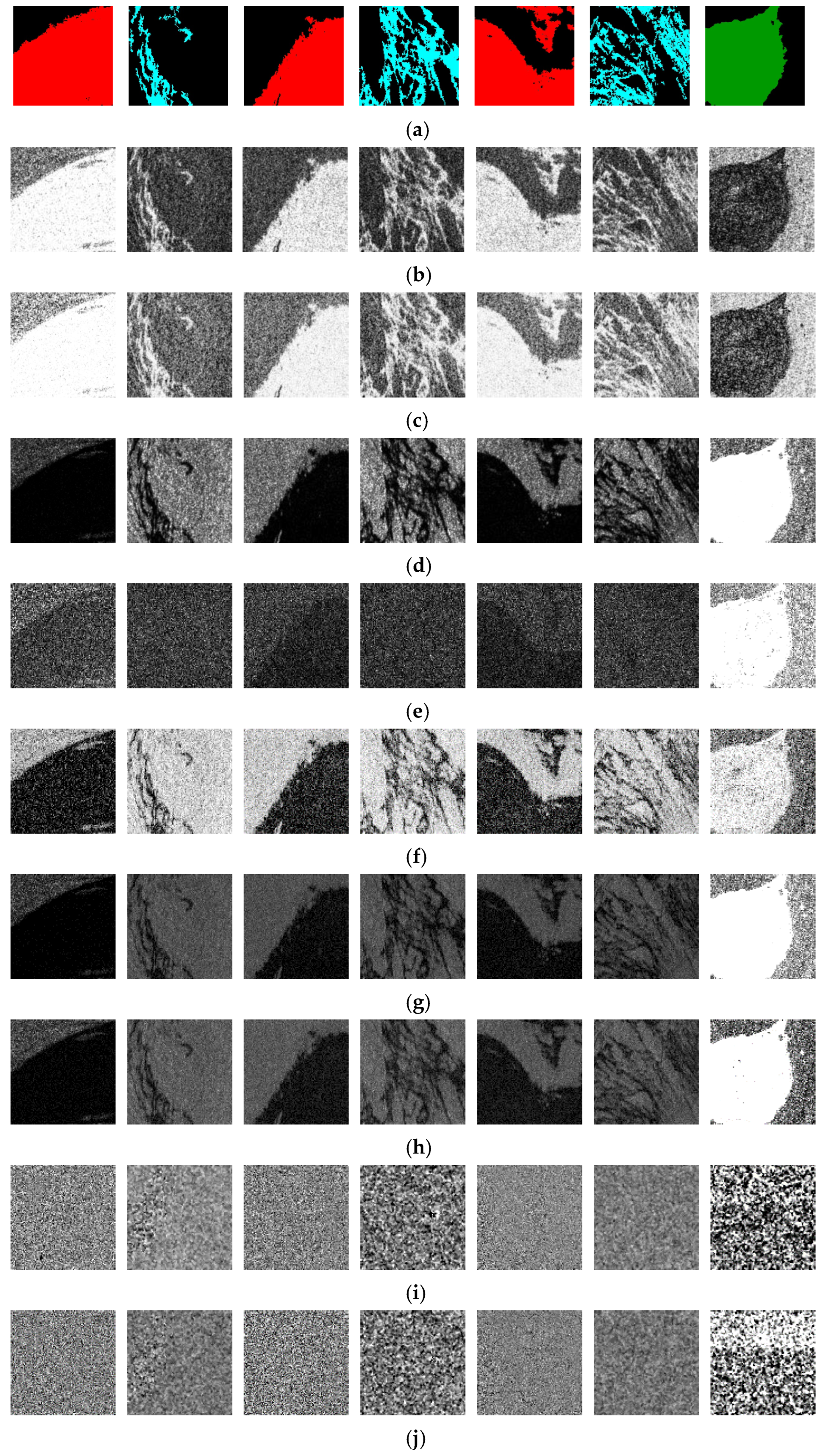

Figure 7.

The ground truth and polarized parameter extraction results of the samples used in the experiment. In the ground truth, red represents oil spill, blue represents look-alike, and green represents land areas: (a) ground truth, (b) Alpha, (c) H, (d) λ1, (e) λ2, (f) Stokes-contrast, (g) Stokes-g0, (h) Stokes-g1, (i) Stokes-g2, and (j) Stokes-g3.

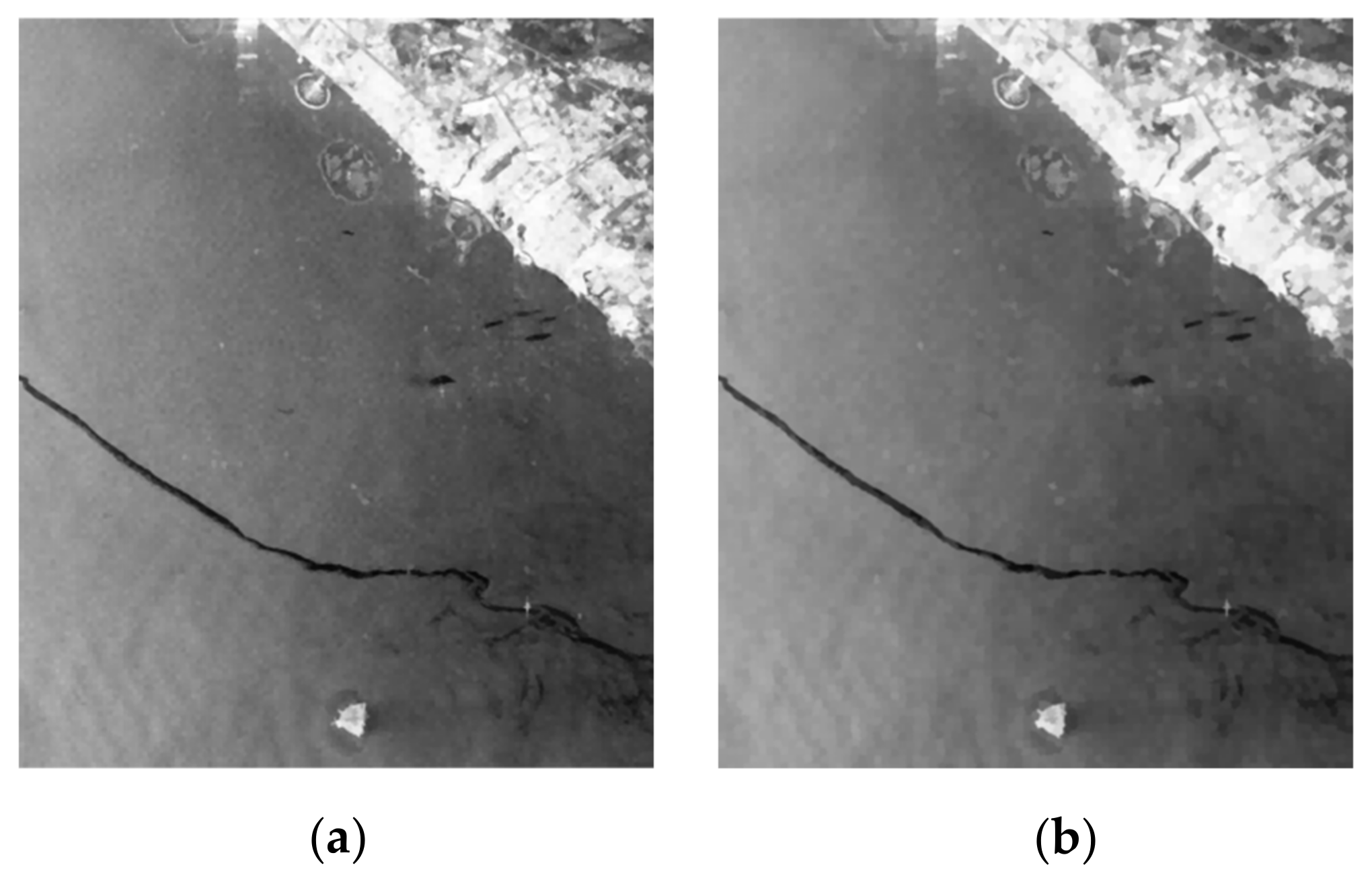

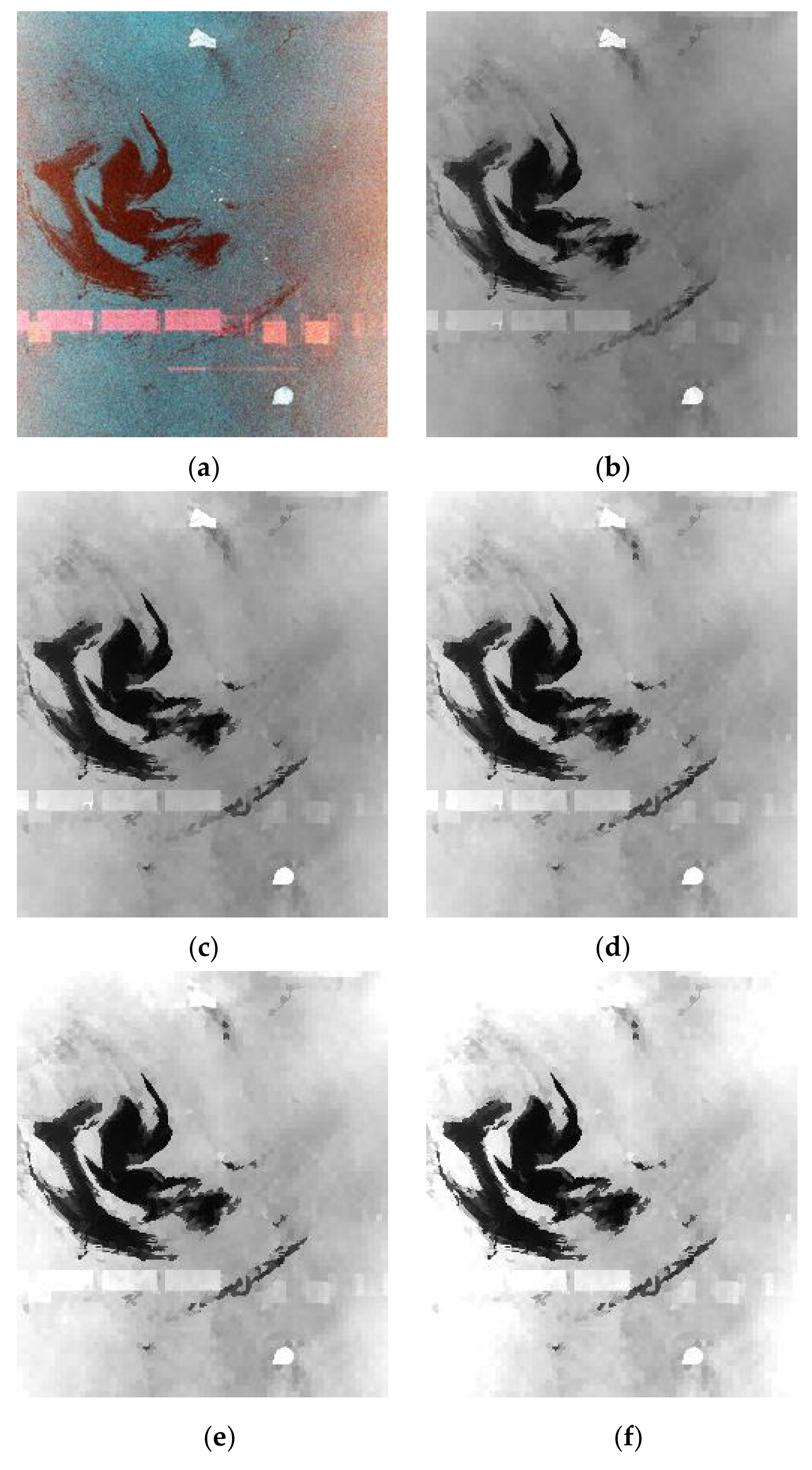

Figure 8.

SLIC superpixel results of Image 8 using different superpixel number settings: (a) 50 × 50, (b) 75 × 75, (c) 100 × 100, (d) 125 × 125, (e) 150 × 150, and (f) 200 × 200.

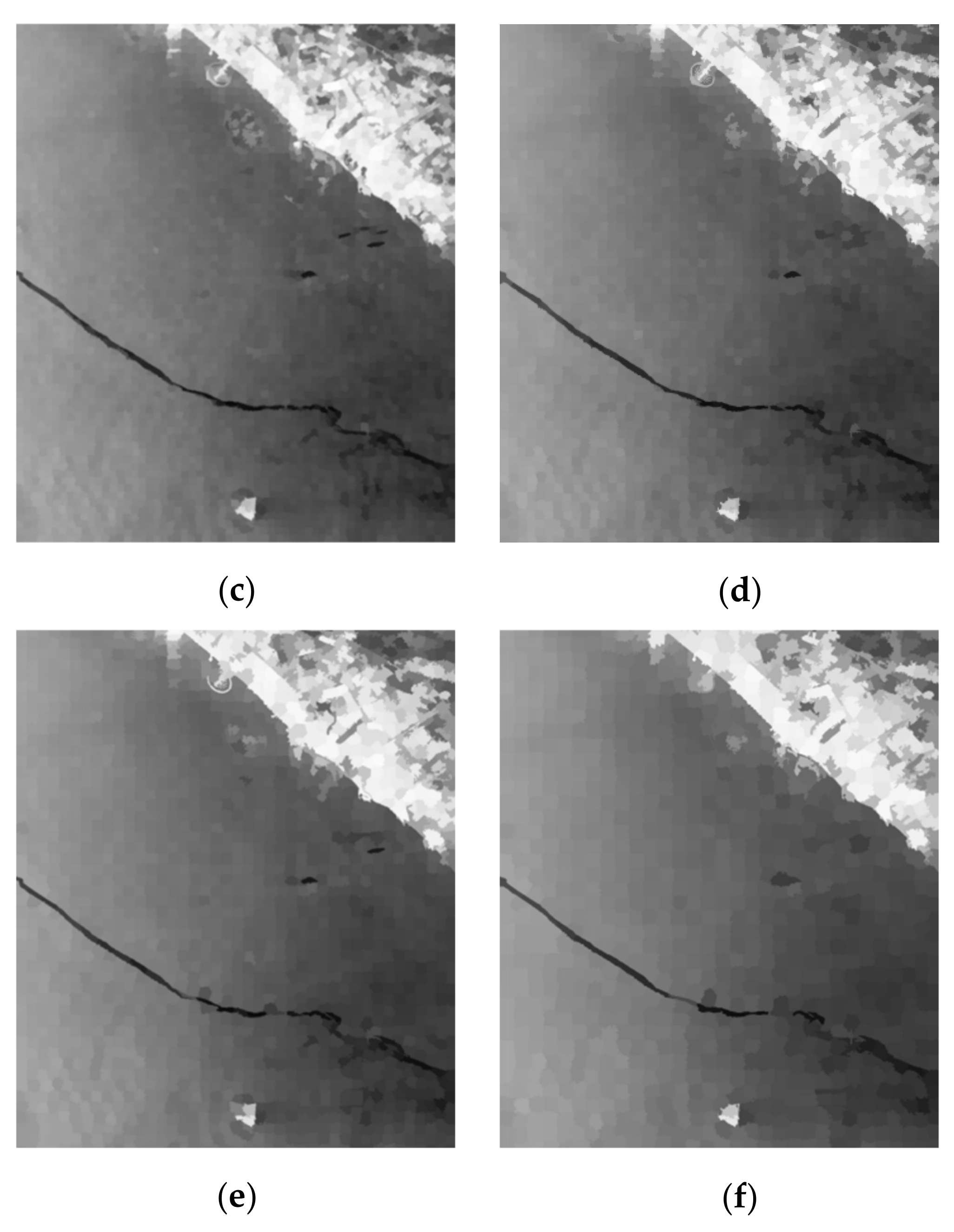

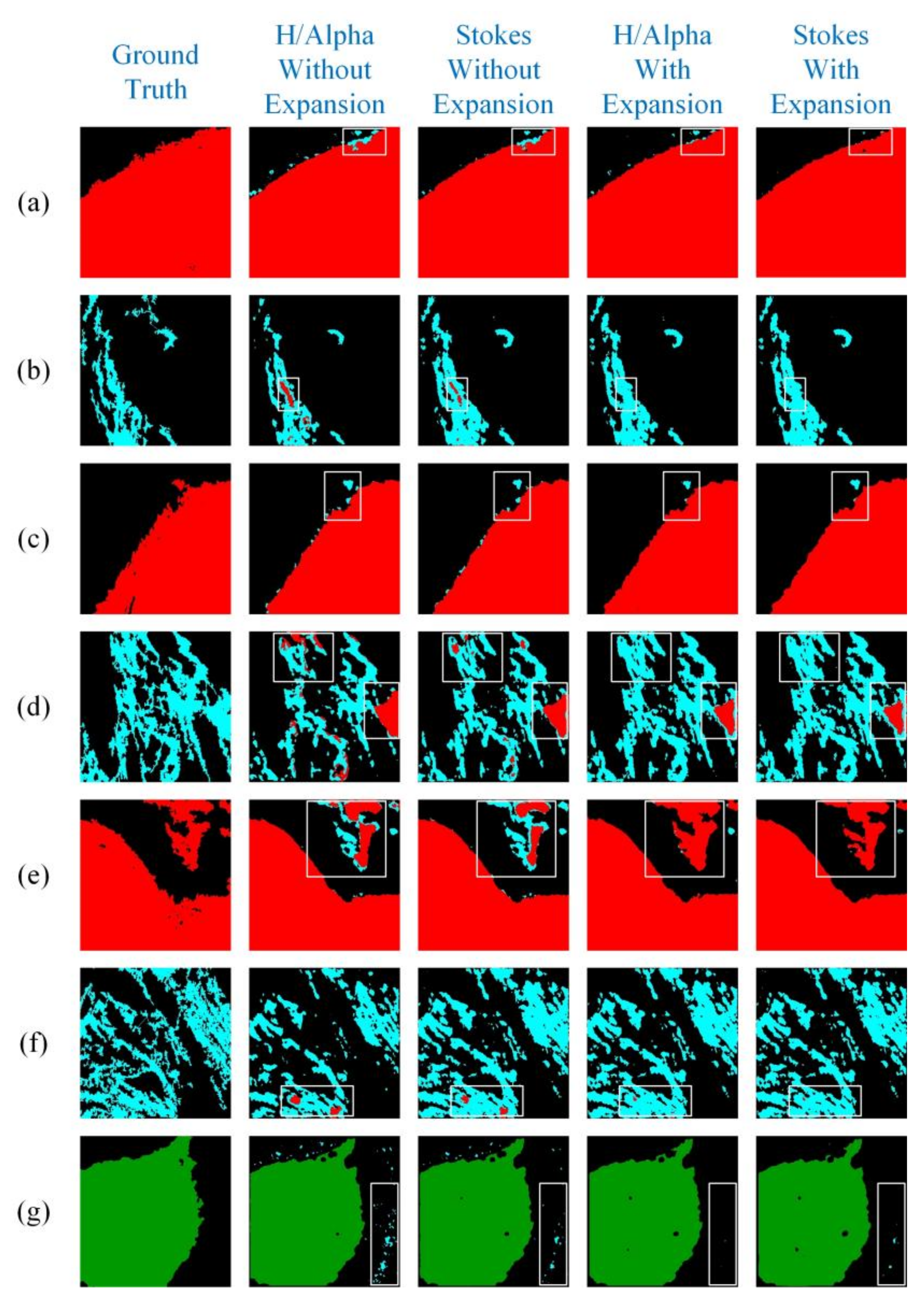

Figure 9.

Classification results of some typical samples using polarized parameters and SLIC superpixels. Red represents oil spill, blue represents look-alike, and green represents land areas: (a) oil spill, (b) look-alike, (c) oil spill, (d) look-alike, (e) oil spill, (f) look-alike, and (g) land.
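
Figure 9 combines a pixel-wise classification with SLIC superpixels. One common way to do this, assumed here rather than taken from the paper, is to give every pixel in a superpixel the majority class of that superpixel. The sketch takes an existing label map and a superpixel index map (for example, the output of scikit-image's `skimage.segmentation.slic`) as plain nested lists; the function name and the integer class codes are hypothetical.

```python
from collections import Counter, defaultdict


def superpixel_majority_vote(labels, segments):
    """Relabel each pixel with the most frequent class inside its superpixel.

    labels:   2-D list of per-pixel class codes (e.g. 0 = clean sea, 1 = oil spill).
    segments: 2-D list of superpixel indices with the same shape as `labels`.
    """
    votes = defaultdict(Counter)
    for label_row, segment_row in zip(labels, segments):
        for label, segment in zip(label_row, segment_row):
            votes[segment][label] += 1
    # Ties go to the class counted first (most_common keeps first-seen order).
    winner = {seg: counts.most_common(1)[0][0] for seg, counts in votes.items()}
    return [[winner[seg] for seg in segment_row] for segment_row in segments]


if __name__ == "__main__":
    labels = [[0, 0, 1], [0, 1, 1]]
    segments = [[0, 0, 1], [0, 0, 1]]
    print(superpixel_majority_vote(labels, segments))  # [[0, 0, 1], [0, 0, 1]]
```

The superpixel count controls how many pixels share one vote, and therefore how strongly isolated misclassified pixels are smoothed away.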

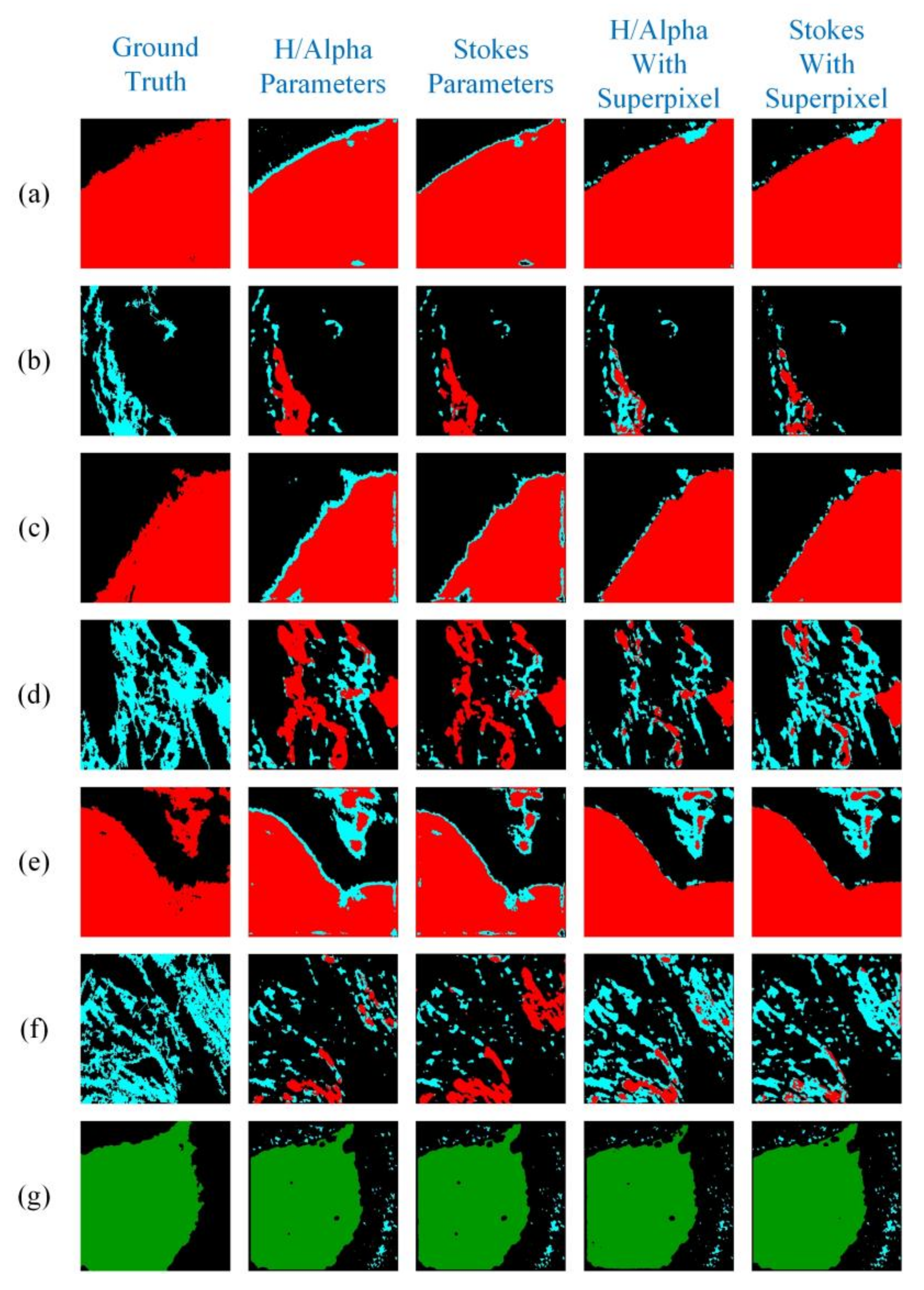

Figure 10.

Image stretching results with different expansion coefficients: (a) original pseudo-color image, (b) SLIC superpixel, (c) SLIC superpixel with 1.2 expansion coefficient, (d) SLIC superpixel with 1.4 expansion coefficient, (e) SLIC superpixel with 1.6 expansion coefficient, and (f) SLIC superpixel with 1.8 expansion coefficient.

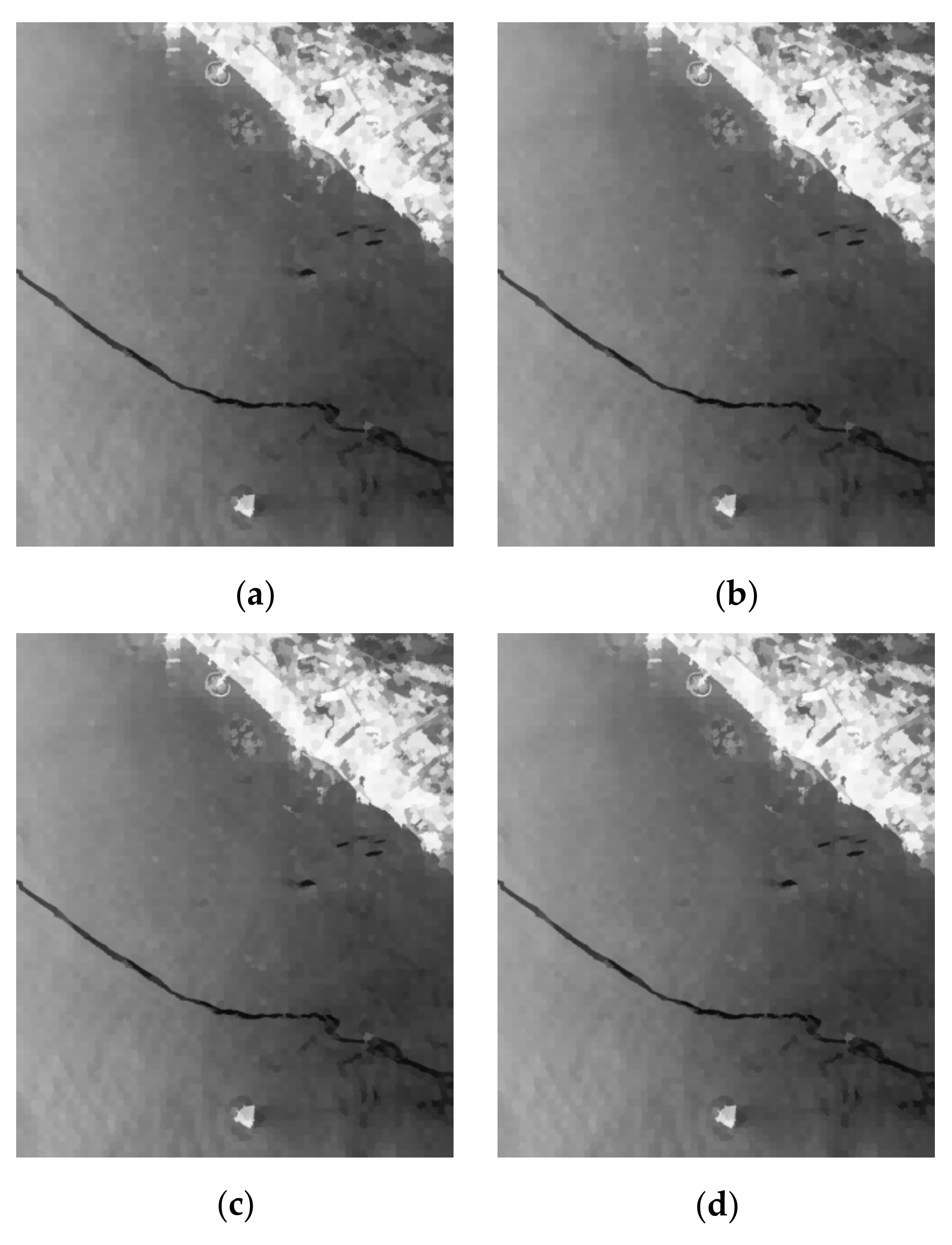

Figure 11.

Image stretching results using different inhibition coefficients: (a) 1.05, (b) 1.1, (c) 1.15, and (d) 1.2.

Figure 12.

Image stretching results using different expansion coefficients: (a) 1.2, (b) 1.4, (c) 1.6, and (d) 1.8.

Figure 13.

Classification results of several samples using polarized parameters and image stretching. Red represents oil spill, blue represents look-alike, and green represents land areas: (a) oil spill, (b) look-alike, (c) oil spill, (d) look-alike, (e) oil spill, (f) look-alike, and (g) land.

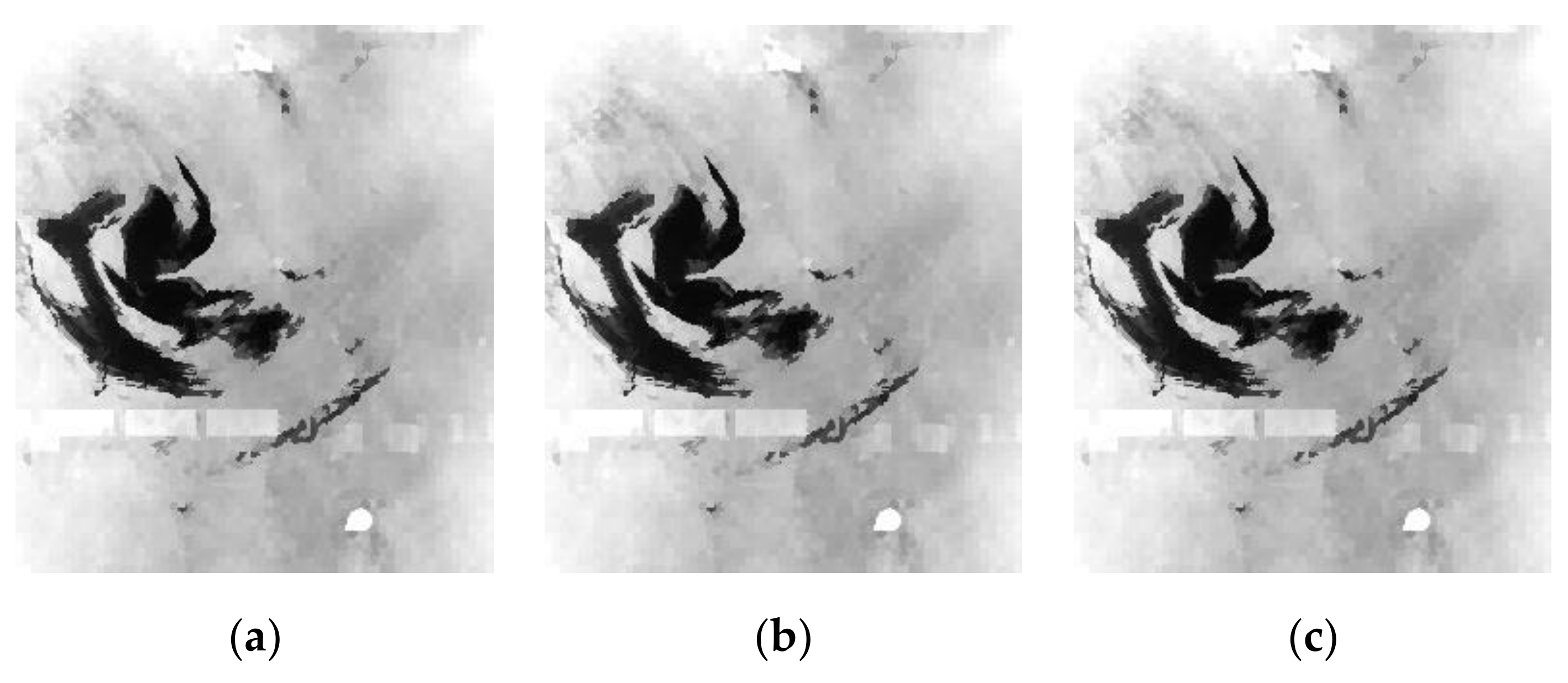

Figure 14.

Image stretching results with different thresholds: (a) 0.67, (b) 0.73, and (c) 0.69.

Table 1.

Detailed parameters of neural network model.

| Layer | Kernel | Size | Strides |
|---|---|---|---|
| Conv1(FE) | Conv | 4 × 4 | 1 |
| Conv2(FE) | Conv | 2 × 2 | 1 |
| Conv3(FE) | Resblock | 3 × 3 | 1 |
| Conv1 | Conv | 3 × 3 | 1 |
| Conv2 | Conv | 3 × 3 | 1 |
| Conv3_b0 | Conv | 3 × 3 | 1 |
| Conv3_b1 | Dilated Conv | 3 × 3 (2) * | 1 |
| Conv3_b2 | Dilated Conv | 3 × 3 (4) | 1 |
| Conv3_b3 | Dilated Conv | 3 × 3 (8) | 1 |
| Conv4 | Dilated Conv | 3 × 3 (2) | 1 |
| Conv5 | Resblock | 3 × 3 | 1 |
| Conv6 | Depthwise | 4 × 4 | 2 |
| Conv7 | Dilated Conv | 3 × 3 (2) | 1 |
| Conv8 | Resblock | 3 × 3 | 1 |
| Conv9 | Depthwise | 4 × 4 | 2 |
| Conv10 | Dilated Conv | 3 × 3 (2) | 1 |
| Conv11 | Resblock | 3 × 3 | 1 |
| Conv12 | Depthwise | 4 × 4 | 2 |
| Conv13 | Dilated Conv | 3 × 3 (2) | 1 |
| Conv14 | Resblock | 3 × 3 | 1 |
| Conv15 | Depthwise | 4 × 4 | 2 |
| Conv16 | Dilated Conv | 3 × 3 (2) | 1 |
| Conv17 | Resblock | 3 × 3 | 1 |
| Conv18 | Depthwise | 4 × 4 | 2 |
| Deconv1 | Transposed Conv | 4 × 4 | 2 |
| Skip1 | Conv | 1 × 1 | 1 |
| Deconv2 | Transposed Conv | 4 × 4 | 4 |
| Skip2 | Conv | 1 × 1 | 1 |
| Deconv3 | Transposed Conv | 4 × 4 | 4 |
| Skip3 | Conv | 1 × 1 | 1 |
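
Table 1 lists kernel sizes and strides but not paddings or the input size. The sketch below assumes a 256 × 256 input, padding 1 for the 4 × 4 stride-2 depthwise layers and for Deconv1, and padding 0 for the two stride-4 transposed convolutions; it also assumes the number in parentheses is the dilation rate. Under those assumptions it traces the spatial size through Conv6, Conv9, Conv12, Conv15, Conv18 and Deconv1 to Deconv3, and computes the span of each dilated 3 × 3 kernel. All function names are hypothetical.

```python
def effective_kernel(k, dilation):
    """Span of a dilated kernel: k + (k - 1) * (dilation - 1)."""
    return k + (k - 1) * (dilation - 1)


def conv_out(n, k, stride, pad, dilation=1):
    """Output length of a convolution along one spatial axis."""
    return (n + 2 * pad - effective_kernel(k, dilation)) // stride + 1


def deconv_out(n, k, stride, pad):
    """Output length of a transposed convolution (no output padding)."""
    return (n - 1) * stride - 2 * pad + k


def trace_sizes(n=256):
    """Spatial sizes under assumed paddings; Table 1 gives only kernels and strides."""
    sizes = [n]
    for _ in range(5):  # Conv6, Conv9, Conv12, Conv15, Conv18 (4 x 4, stride 2)
        sizes.append(conv_out(sizes[-1], k=4, stride=2, pad=1))
    up = deconv_out(sizes[-1], k=4, stride=2, pad=1)  # Deconv1
    up = deconv_out(up, k=4, stride=4, pad=0)         # Deconv2
    up = deconv_out(up, k=4, stride=4, pad=0)         # Deconv3
    return sizes, up


if __name__ == "__main__":
    print([effective_kernel(3, d) for d in (1, 2, 4, 8)])  # [3, 5, 9, 17]
    print(trace_sizes(256))  # ([256, 128, 64, 32, 16, 8], 256)
    # A 3 x 3 dilated conv with stride 1 and padding equal to the dilation keeps the size.
    print(conv_out(64, 3, 1, pad=2, dilation=2))  # 64
```

Under these assumptions the three transposed convolutions (×2, ×4, ×4) exactly undo the five stride-2 reductions, since 2⁵ = 32 = 2 × 4 × 4.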

Table 2.

Sentinel-1 data used in experiments.

| Image Number | Date | ID | Sub-swath | Dark-Spot Areas |
|---|---|---|---|---|
| 1 | 8 March 2017 | 105080 | IW1 | Oil spill |
| 2 | 11 March 2017 | 105355 | IW3 | Oil spill |
| 3 | 18 April 2017 | 109672 | IW3 | Oil spill, look-alike |
| 4 | 10 May 2017 | 112112 | IW2 | Oil spill, look-alike |
| 5 | 5 June 2017 | 115163 | IW3 | Oil spill, look-alike |
| 6 | 10 August 2017 | 122615 | IW1 | Oil spill, look-alike |
| 7 | 10 October 2017 | 129633 | IW1 | Oil spill |
| 8 | 10 October 2017 | 129633 | IW2 | Oil spill |

Table 3.

Number of pixel samples of different classes.

| | CS | OS | LA | LAND | SH |
|---|---|---|---|---|---|
| Training | 3.028 × 10⁷ | 1.095 × 10⁷ | 8.110 × 10⁶ | 4.313 × 10⁶ | 5.601 × 10⁴ |
| Test | 7.773 × 10⁶ | 2.732 × 10⁶ | 2.304 × 10⁶ | 1.305 × 10⁶ | 1.688 × 10⁴ |
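
The pixel counts in Table 3 describe a strongly imbalanced class distribution. The short calculation below only re-uses the numbers in the table and converts them into class shares; the dictionary and function names are illustrative.

```python
# Pixel counts copied from Table 3.
train_pixels = {"CS": 3.028e7, "OS": 1.095e7, "LA": 8.110e6, "LAND": 4.313e6, "SH": 5.601e4}
test_pixels = {"CS": 7.773e6, "OS": 2.732e6, "LA": 2.304e6, "LAND": 1.305e6, "SH": 1.688e4}


def fractions(counts):
    """Share of each class in a {class: pixel_count} dictionary."""
    total = sum(counts.values())
    return {name: count / total for name, count in counts.items()}


if __name__ == "__main__":
    for name, share in fractions(train_pixels).items():
        print(f"{name}: {share:.2%}")
    held_out = sum(test_pixels.values())
    print(f"test share of all pixels: {held_out / (held_out + sum(train_pixels.values())):.1%}")
```

Clean sea accounts for about 56% of the training pixels and ships for about 0.1%, and the test set holds about 21% of all labeled pixels.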

Table 4.

Number of samples of different classes.

| | CS | OS | LA | LAND | SH |
|---|---|---|---|---|---|
| Training | 160 | 132 | 117 | 60 | 75 |
| Test | 40 | 34 | 30 | 15 | 19 |
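
The sample counts in Table 4 correspond to a roughly 80/20 training/test split for every class. The check below recomputes the held-out share per class from the table; the names are illustrative.

```python
classes = ["CS", "OS", "LA", "LAND", "SH"]
train_counts = [160, 132, 117, 60, 75]  # Table 4, training row
test_counts = [40, 34, 30, 15, 19]      # Table 4, test row


def held_out_share(n_train, n_test):
    """Fraction of a class's samples that were held out for testing."""
    return n_test / (n_train + n_test)


if __name__ == "__main__":
    for name, a, b in zip(classes, train_counts, test_counts):
        print(f"{name}: {held_out_share(a, b):.1%}")
    print(f"overall: {held_out_share(sum(train_counts), sum(test_counts)):.1%}")
```

Every class lands between 20.0% and 20.5%, and the overall share is 138 of 682 samples, about 20.2%.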

Table 5.

Average and variance of different polarized parameters.

| Parameter | Statistic | CS | OS | LA | LAND | SH |
|---|---|---|---|---|---|---|
| H | Average | 0.5254 | 0.9719 | 0.9173 | 0.6380 | 0.6180 |
| | Variance | 0.0038 | 0.0006 | 0.0028 | 0.0341 | 0.0349 |
| Alpha | Average | 12.6749 | 40.3197 | 33.5511 | 20.0331 | 19.1461 |
| | Variance | 5.0410 | 14.5093 | 24.9643 | 51.0270 | 66.5614 |
| λ1 | Average | 0.0104 | 0.0016 | 0.0042 | 0.2543 | 1.0049 |
| | Variance | 2.5366 × 10⁻⁶ | 7.6022 × 10⁻⁸ | 9.6723 × 10⁻⁷ | 4.1617 | 3.3318 |
| λ2 | Average | 0.0014 | 0.0010 | 0.0021 | 0.0241 | 0.1156 |
| | Variance | 3.7614 × 10⁻⁸ | 1.6030 × 10⁻⁸ | 6.9204 × 10⁻⁸ | 0.0010 | 0.0247 |
| Stokes-g0 | Average | 0.0118 | 0.0026 | 0.0063 | 0.2783 | 1.3210 |
| | Variance | 2.5764 × 10⁻⁶ | 1.1994 × 10⁻⁷ | 1.0547 × 10⁻⁶ | 4.1810 | 3.8715 |
| Stokes-g1 | Average | 0.0090 | 0.0004 | 0.0019 | 0.2191 | 0.8730 |
| | Variance | 2.5728 × 10⁻⁶ | 9.3223 × 10⁻⁸ | 1.0947 × 10⁻⁶ | 4.0485 | 2.0367 |
| Stokes-g2 | Average | 8.0368 × 10⁻⁵ | −9.4482 × 10⁻⁶ | −2.7806 × 10⁻⁵ | −0.0175 | −0.0458 |
| | Variance | 3.8482 × 10⁻⁷ | 4.3933 × 10⁻⁸ | 2.2210 × 10⁻⁷ | 0.0966 | 0.0161 |
| Stokes-g3 | Average | 3.3683 × 10⁻⁵ | 2.5317 × 10⁻⁵ | 2.9162 × 10⁻⁵ | 0.0022 | 0.0889 |
| | Variance | 3.8098 × 10⁻⁷ | 4.3567 × 10⁻⁸ | 2.2311 × 10⁻⁷ | 0.0041 | 0.0066 |
| Contrast | Average | 0.7568 | 0.1306 | 0.2951 | 0.6223 | 0.6413 |
| | Variance | 0.0019 | 0.0095 | 0.0134 | 0.0221 | 0.0137 |
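
One informal way to read Table 5 is a Fisher-style separability ratio, (μ₁ − μ₂)² / (σ₁² + σ₂²), computed from the listed averages and variances; larger values mean two classes overlap less for that parameter. This ratio is not used in the paper and is added only as an illustration; the names `TABLE5` and `fisher_ratio` are hypothetical.

```python
# (average, variance) pairs copied from Table 5 for three parameters.
TABLE5 = {
    "H": {"CS": (0.5254, 0.0038), "OS": (0.9719, 0.0006), "LA": (0.9173, 0.0028)},
    "Alpha": {"CS": (12.6749, 5.0410), "OS": (40.3197, 14.5093), "LA": (33.5511, 24.9643)},
    "Contrast": {"CS": (0.7568, 0.0019), "OS": (0.1306, 0.0095), "LA": (0.2951, 0.0134)},
}


def fisher_ratio(a, b):
    """(mean_a - mean_b)^2 / (var_a + var_b) for two (mean, variance) pairs."""
    (mean_a, var_a), (mean_b, var_b) = a, b
    return (mean_a - mean_b) ** 2 / (var_a + var_b)


if __name__ == "__main__":
    for name, cls in TABLE5.items():
        cs_os = fisher_ratio(cls["CS"], cls["OS"])
        os_la = fisher_ratio(cls["OS"], cls["LA"])
        print(f"{name}: CS vs OS = {cs_os:.1f}, OS vs LA = {os_la:.2f}")
```

For all three parameters the clean-sea/oil ratio lies between about 34 and 45, while the oil/look-alike ratio stays near 1, suggesting these parameters separate oil from clean sea far more easily than from look-alikes.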

Table 6.

MIoU of classification results based on polarimetric parameters with different SLIC superpixel numbers.

| Superpixel Number | H/Alpha/λ1/λ2 | Stokes |
|---|---|---|
| Without superpixels | 70.6% | 72.0% |
| 50 | 75.9% | 76.7% |
| 75 | 77.0% | 77.6% |
| 100 | 77.3% | 77.7% |
| 125 | 77.2% | 78.1% |
| 150 | 75.5% | 76.0% |
| 200 | 75.2% | 75.4% |

Table 7.

IoU values of oil spill detection with and without superpixels.

| Class | H/Alpha/λ1/λ2, Without Superpixels | H/Alpha/λ1/λ2, With 125 × 125 Superpixels | Stokes, Without Superpixels | Stokes, With 125 × 125 Superpixels |
|---|---|---|---|---|
| Clean sea | 80.6% | 87.0% | 81.5% | 88.3% |
| Oil spill | 72.7% | 78.5% | 74.3% | 78.5% |
| Look-alike | 65.3% | 71.2% | 68.1% | 73.8% |
| Land | 75.9% | 81.3% | 77.7% | 82.4% |
| Ship | 58.5% | 66.9% | 58.3% | 67.7% |
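
Assuming the standard definitions, IoU = TP / (TP + FP + FN) for each class and MIoU is the unweighted mean over classes. The sketch below computes both from a confusion matrix; the function names are illustrative. The same mean links Tables 6 and 7: the Stokes columns of Table 7 average 72.0% without superpixels and 78.1% with 125 × 125 superpixels, matching Table 6.

```python
def iou_per_class(confusion):
    """Per-class IoU from confusion[i][j] = pixels of true class i predicted as j."""
    n = len(confusion)
    ious = []
    for c in range(n):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp                       # missed pixels of class c
        fp = sum(confusion[r][c] for r in range(n)) - tp  # pixels wrongly assigned to c
        denom = tp + fp + fn
        ious.append(tp / denom if denom else float("nan"))
    return ious


def mean_iou(ious):
    """Unweighted mean over classes (MIoU)."""
    return sum(ious) / len(ious)


if __name__ == "__main__":
    print(iou_per_class([[8, 2], [1, 9]]))  # about [0.727, 0.75]
    # Stokes columns of Table 7 (clean sea, oil spill, look-alike, land, ship).
    print(round(mean_iou([81.5, 74.3, 68.1, 77.7, 58.3]), 1))  # 72.0
    print(round(mean_iou([88.3, 78.5, 73.8, 82.4, 67.7]), 1))  # 78.1
```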

Table 8.

MIoU values for different inhibition coefficients.

| Inhibition Coefficient | None | 1.05 | 1.1 | 1.15 | 1.2 |
|---|---|---|---|---|---|
| H/Alpha/λ1/λ2 | 77.3% | 77.8% | 78.2% | 78.8% | 78.7% |
| Stokes | 77.7% | 78.6% | 78.9% | 79.5% | 80.0% |

Table 9.

Per-class IoU values of polarized parameters in experiments.

| | Clean Sea | Oil Spill | Look-Alike | Land | Ship |
|---|---|---|---|---|---|
| H/Alpha/λ1/λ2 | 88.6% | 79.8% | 73.4% | 83.0% | 68.9% |
| Stokes | 89.1% | 80.9% | 76.5% | 84.4% | 69.1% |

Table 10.

MIoU values for different expansion coefficients.

| Expansion Coefficient | None | 1.2 | 1.4 | 1.6 | 1.8 |
|---|---|---|---|---|---|
| H/Alpha/λ1/λ2 | 78.7% | 80.0% | 82.1% | 84.5% | 83.0% |
| Stokes | 80.0% | 81.7% | 83.9% | 85.4% | 83.6% |

Table 11.

IoU values of polarized parameters with 125 × 125 superpixels and different expansion coefficients.

| | Expansion | Clean Sea | Oil Spill | Look-Alike | Land | Ship |
|---|---|---|---|---|---|---|
| H/Alpha/λ1/λ2 | 1.2 | 88.5% | 84.7% | 77.9% | 84.4% | 69.3% |
| | 1.4 | 89.7% | 88.1% | 82.8% | 86.8% | 69.5% |
| | 1.6 | 90.2% | 89.3% | 84.6% | 88.7% | 69.7% |
| | 1.8 | 88.7% | 89.1% | 84.4% | 87.2% | 69.1% |
| Stokes | 1.2 | 88.8% | 85.3% | 79.9% | 86.6% | 69.3% |
| | 1.4 | 89.4% | 88.8% | 84.3% | 88.5% | 69.4% |
| | 1.6 | 90.6% | 90.1% | 86.8% | 89.0% | 69.6% |
| | 1.8 | 88.1% | 89.2% | 85.2% | 87.0% | 69.0% |

Table 12.

Evaluation metrics of polarized parameters with 125 × 125 superpixels and different expansion coefficients.

| | Expansion | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| H/Alpha/λ1/λ2 | 1.2 | 0.894 | 0.815 | 0.894 | 0.852 |
| | 1.4 | 0.921 | 0.835 | 0.907 | 0.870 |
| | 1.6 | 0.925 | 0.861 | 0.923 | 0.891 |
| | 1.8 | 0.920 | 0.836 | 0.905 | 0.869 |
| Stokes | 1.2 | 0.899 | 0.839 | 0.905 | 0.869 |
| | 1.4 | 0.926 | 0.847 | 0.919 | 0.881 |
| | 1.6 | 0.933 | 0.869 | 0.926 | 0.897 |
| | 1.8 | 0.928 | 0.844 | 0.914 | 0.878 |
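
The F1-scores in Table 12 agree, to within about 0.002, with the harmonic mean of the listed precision and recall, F1 = 2PR / (P + R). The check below recomputes them. Small differences are expected because the precision and recall values are themselves rounded, and, if the scores were averaged over classes, because the mean of per-class F1 need not equal the F1 of the mean precision and recall. The names `TABLE12` and `f1_score` are illustrative.

```python
# (precision, recall, reported F1) from Table 12, keyed by (parameters, expansion coefficient).
TABLE12 = {
    ("H/Alpha/λ1/λ2", 1.2): (0.815, 0.894, 0.852),
    ("H/Alpha/λ1/λ2", 1.4): (0.835, 0.907, 0.870),
    ("H/Alpha/λ1/λ2", 1.6): (0.861, 0.923, 0.891),
    ("H/Alpha/λ1/λ2", 1.8): (0.836, 0.905, 0.869),
    ("Stokes", 1.2): (0.839, 0.905, 0.869),
    ("Stokes", 1.4): (0.847, 0.919, 0.881),
    ("Stokes", 1.6): (0.869, 0.926, 0.897),
    ("Stokes", 1.8): (0.844, 0.914, 0.878),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    for key, (p, r, reported) in TABLE12.items():
        print(key, f"recomputed {f1_score(p, r):.3f}", f"reported {reported:.3f}")
```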

Table 13.

IoU and MIoU values of validation experiments with and without image stretching.

| | Image Stretching | Clean Sea | Oil Spill | Look-Alike | Land | Ship | MIoU |
|---|---|---|---|---|---|---|---|
| Image 2 | Without | 86.2% | 83.1% | - | 84.3% | 64.4% | 79.5% |
| | With | 88.7% | 87.5% | - | 88.4% | 67.6% | 83.1% |
| Image 4 | Without | 79.8% | 76.6% | 74.4% | 77.7% | 63.9% | 74.5% |
| | With | 84.3% | 80.1% | 79.9% | 80.3% | 67.0% | 78.3% |
| Image 6 | Without | 84.1% | 70.5% | 65.7% | - | 63.7% | 71.0% |
| | With | 89.1% | 77.2% | 73.3% | - | 67.2% | 76.7% |
| Average | Without | 83.4% | 76.7% | 70.0% | 81.0% | 64.0% | 75.0% |
| | With | 87.4% | 81.6% | 76.6% | 84.3% | 67.3% | 79.4% |
| Standard Deviation | Without | 2.66% | 5.19% | 4.35% | 3.30% | 0.29% | 3.49% |
| | With | 2.24% | 4.25% | 3.30% | 4.05% | 0.25% | 2.72% |
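
The final rows of Table 13 appear to use the population standard deviation over the three validation images. The sketch below, based on Python's `statistics` module, reproduces several "Without" entries: Clean Sea (average 83.4%, deviation 2.66%), Ship (64.0%, 0.29%), and MIoU (75.0%, 3.49%). Treat this as a reading of the table, not as a statement of the paper's procedure; the dictionary name is illustrative.

```python
from statistics import mean, pstdev

# IoU / MIoU values (%) for Images 2, 4 and 6 without image stretching (Table 13).
WITHOUT = {
    "Clean Sea": [86.2, 79.8, 84.1],
    "Ship": [64.4, 63.9, 63.7],
    "MIoU": [79.5, 74.5, 71.0],
}

if __name__ == "__main__":
    for name, values in WITHOUT.items():
        # pstdev divides by n (population); statistics.stdev would divide by n - 1.
        print(f"{name}: average {mean(values):.1f}%, deviation {pstdev(values):.2f}%")
```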