Abstract

Variations in acquisition time, lighting conditions, and viewing angle create significant differences among airborne remote sensing images from Unmanned Aerial Vehicles (UAVs). As a result, real-time scene matching navigation based on fixed reference maps is error-prone and lacks robustness. This paper presents a novel shadow-based matching method for the localization of low-altitude UAVs. A reference shadow map is generated from an accurate (0.5 m spatial resolution) Digital Surface Model (DSM) using the known date and time; a robust shadow detection algorithm is employed to detect shadows in aerial images; the shadows can then be used as a stable feature for scene matching navigation. By combining this method with the conventional intensity-based matching method, a fusion scene navigation scheme that is more robust to illumination variations is proposed. Experiments were performed with Google satellite maps, DSM data, and real aerial images of the Zurich region. The radial localization error of the Shadow-based Matching (SbM) is less than 7.3 m at flight heights below 1200 m. The fusion navigation approach also achieves an optimal combination of shadow-based matching and intensity-based matching. This study demonstrates a solution to the inconsistencies caused by changes in light, viewing angle, and acquisition time for accurate and effective scene matching navigation.

1. Introduction

UAVs are commonly used for surveying and mapping applications. Traditional integrated navigation systems that combine an Inertial Navigation System (INS) with a Global Navigation Satellite System (GNSS) are widely used to achieve autonomous localization and navigation [1]. However, the INS accumulates positioning error over time and the GNSS is susceptible to radio interference and jamming [2]. Utilizing the onboard camera to assist the aerial/ground vehicle in positioning and navigation is necessary and beneficial in areas where the GNSS is unavailable or unreliable. This technique is named vision-based navigation [3,4,5,6], and it may involve relative localization or absolute localization [7,8]. Relative localization estimates the UAV’s relative motion by tracking the correspondences between sequential images over time. The most commonly used relative localization method is Visual Odometry (VO) [9]. However, VO relies on continuous images acquired under consistent lighting conditions. Similar to the INS, VO accumulates error; it requires loop closure detection and subsequent bundle adjustment optimization to reach acceptable accuracy [10,11]. Absolute localization is achieved by registering real-time visual images to a prior geo-referenced map [12,13]. Although it requires extra geo-referenced data, it can reveal the absolute locations of UAVs without cumulative errors and is thus a promising alternative navigation system for UAVs. This paper focuses on absolute localization, which is also called Scene Matching Navigation (SMN) [6].

Current satellite images cover almost the entire planet and are accessible via geographical information engines such as Google Earth and Bing Maps. Very high-resolution satellite images reach a ground sampling distance as fine as 0.3 m [14]; geo-referenced image data with such a high ground resolution are accurate enough for a UAV’s scene matching navigation application. However, the images collected by the UAV might differ significantly from satellite images in terms of illumination conditions and viewing angles [15]. In such cases, the conventional Intensity-based Matching (IbM) method [16] and the feature-based matching method may fail.

Shadows are one of the main factors that cause image matching algorithms to fail, especially during low-altitude flight over urban areas [17]. Few researchers have conducted in-depth studies on shadows or used them for SMN. This paper employs shadows as the matching feature for SMN and makes the following contributions:

(1) Proposes a novel Shadow-based Matching (SbM) method for SMN which is insensitive to illumination changes. (2) Proposes an illumination-invariant shadow detection approach that combines morphological and mathematical methods, which is used for real-time shadow detection of aerial images [18].

As an extension of our preliminary work [18], this paper provides additional experiments on a new dataset and makes the following improvements: (1) Extends the image dataset for shadow detection with different illumination conditions to further validate the robustness of the shadow detection algorithm. (2) Proposes a robust selection strategy between SbM and IbM for SMN. (3) Proposes a multi-frame consistency constraint for SMN. (4) Conducts flight simulation experiments using real flight data to compare and verify the performance of the four navigation models.

This paper is organized as follows. Related work is described in Section 2. In Section 3, we verify the difficulty of image matching by the IbM method and the feature-based method with illumination variation. Section 4 describes in detail the proposed SbM method, illumination-invariant shadow detection approach, and the robust selection strategy of SMN. Experiments and discussions are described in Section 5 and Section 6. A brief conclusion of our work and suggestions for future research are given in Section 7.

2. Related Work

The matching methods supporting SMN are intensity-based, feature-based, and structure-based. Intensity-based algorithms such as the Normalized Cross-Correlation (NCC) [12] and Phase Correlation (PC) [19] are based on global spatial distributions of image gray values; they are robust to overall illumination variations and suitable for high-altitude aerial image matching. However, when there are more non-uniform change factors, especially in low-altitude aerial images in urban areas (e.g., shadows, occlusion), the performance of the intensity-based approach may be significantly degraded [20].

In contrast to intensity-based approaches, feature-based approaches are more often adopted in urban areas where salient features can be easily detected. Features can be distinctive points and lines [21,22]. The SIFT [23], SURF [24], and ORB [25] feature points are widely applied for image registration due to their robustness to scaling, rotation, and translation. However, matching images that differ significantly in illumination or camera pose (position and orientation) with these point feature detectors and descriptors remains a challenging task [26,27], especially for images from different sensing devices (e.g., aerial versus satellite images). Deep learning-based feature points that are highly invariant to significant viewpoint and illumination changes are also applied in image matching [28,29]. However, it takes a long time to obtain a large sample of training images.

With the development of object recognition and classifiers, special structures in images can be extracted or identified. Structure-based approaches use such structural information to construct the nodes of a topological graph and use graph matching methods to accomplish image matching [30,31]. Dawadee et al. [32] proposed a matching method based on the geometric relationship between significant lines and landmarks that is invariant to illumination, image scaling, and rotation. Jin et al. [5] used a color constancy algorithm for landmark detection and then used the structural information of the center locations of landmarks for scene matching and positioning. Michaelsen et al. [30] proposed a knowledge-based structural pattern recognition method for SMN. Though effective, structural features depend on the availability of landmarks and unique structures in the scenes.

Many organizations and companies, such as the U.S. Geological Survey (USGS) and Google, provide high-quality topographic data including Digital Elevation Models (DEMs) and DSMs, to meet the growing needs for three-dimensional map representation and autonomous vehicles [33]. In recent years, several authors have used these terrain data for image matching of rendered images to overcome variations in illumination or camera pose. The principle of these methods is to establish a correspondence between a rendered image and a visual image. Talluri and Aggarwal [34] used a horizon line contour matching method to localize a robot in mountainous regions. Woo et al. [35] used mountain peaks and the horizon as features of render/visual image for UAV localization. Baboud et al. [36] proposed a method to estimate the position of a camera relative to a geometric terrain model based on detected mountain edges. Wang et al. [37] rendered a reference image from the DEM data and developed a matching approach for mountainous regions based on the “Minutiae” feature. Taneja et al. [38] proposed a method to register spherical panoramic images with 3D street models in urban areas. Ramalingam et al. [39] estimated the global position by matching the skyline detected from the omnidirectional image with the skyline segments extracted from a coarse 3D city model. These methods are still limited to ground-based robots and high-flying aircraft.

The study in [18] uses DSM data to generate shadows and employs shadows as an image feature for SMN to overcome the influence of illumination variations on image matching, but is limited to areas with buildings and is not effective during cloudy days and in flat areas.

3. Problem Description

When UAVs fly at low altitudes, the images they take at different times may significantly differ in appearance. This lack of consistency, especially in urban areas with tall objects such as houses, buildings, and trees, can significantly complicate the matching process. In addition to variable lighting conditions, problems also arise with building projection transformations caused by different camera poses. The shadows of these tall objects may cause the scene matching algorithm to fail. Traditional scene matching algorithms are not effective in regard to these issues.

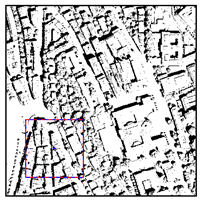

As shown in Figure 1a–c, geo-referenced images of the area with buildings captured at different times present different illumination conditions, which markedly affect the brightness of the scene and the size and orientation of the shadows of tall objects. The presence and variation of local shadows make the descriptors of the features no longer stable even after normalization. There are too few identified inliers in the feature-based matching results. In images of the flat area taken at different times, as shown in Figure 1g–i, we can see that there are almost no shadows there. Some identified inliers can be found in the matched image pairs (Figure 1j–l), but these inliers are also very sparse due to the lack of texture and gradient information in the flat region.

Figure 1.

Images captured at different times with visual matching results. (a–c) Images of the area with buildings at different times; (d–f) visual matching results by SURF + FLANN [40] of image pairs (a,b), (b,c), and (a,c); (g–i) images of the flat area at different times; (j–l) visual matching results by SURF + FLANN of image pairs (g,h), (h,i), and (g,i).

In addition, we calculate the correlation coefficient [16] of the two groups of image pairs. This coefficient is the core similarity metric of the IbM method: a low coefficient indicates low image similarity, and vice versa. As seen in Table 1, the correlation coefficients of the image pairs of the building area are all less than 0.26, while the correlation coefficients of the image pairs in the flat area are all higher than 0.47.

Table 1.

Feature-based matching and correlation coefficient of image pairs in Figure 1.

In other words, the presence of shadows degrades the robustness of both feature-based matching and the IbM. In the flat area, however, the interference produced by lighting variations is very small, which gives the IbM method an advantage there.
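The correlation coefficient discussed above can be illustrated with a minimal sketch. It assumes the standard zero-mean normalized (Pearson) form computed over two equally sized grayscale images; the exact variant used in [16] is not reproduced in this section, and the function name is hypothetical.

```python
import numpy as np

def correlation_coefficient(img_a, img_b):
    """Pearson correlation between two equally sized grayscale images (assumed form)."""
    a = np.asarray(img_a, dtype=float).ravel()
    b = np.asarray(img_b, dtype=float).ravel()
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt(np.sum(a * a) * np.sum(b * b))
    # A constant image has zero variance; report zero similarity in that case.
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0
```

Because the means are removed and the result is normalized, a global brightness or contrast change leaves the coefficient unchanged, whereas local changes such as moving shadows lower it.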

4. Methodology

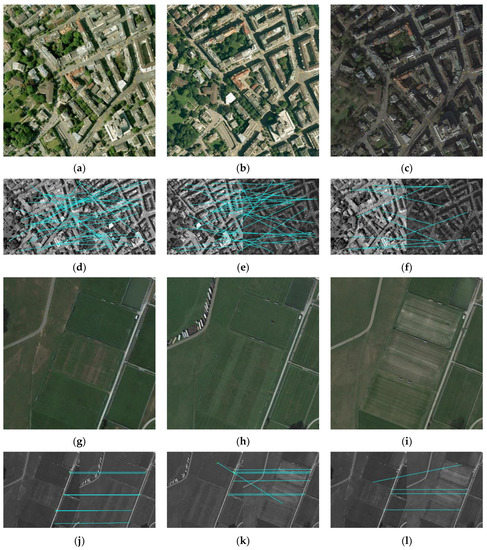

The scene matching localization system captures images with a downward-looking camera and localizes them in a geo-referenced map. Based on the problem described in Section 3, the scene matching method proposed in this paper uses shadows as matching features and then applies a more robust selection strategy between SbM and IbM. The principal mechanism of shadow matching is described in Section 4.1. The workflow of the proposed SbM method is depicted within the left black dashed border of Figure 2. The workflow of the IbM method is shown within the right black dashed border of Figure 2. Using onboard DSM data and the current date and time, a reference shadow map is created online (described in Section 4.2). We use the proposed thresholding-based shadow detection algorithm to generate the real-time shadow image from the UAV’s real-time shot (described in Section 4.3). Then, the two binary images obtained in this way are matched to obtain the localization result. The matching result is subsequently evaluated with the multi-frame consistency constraint (described in Section 4.4). Integrated navigation strategies are described in Section 4.5.

Figure 2.

The flowchart of the shadow-based matching approach and the intensity-based matching approach for SMN.

4.1. Consistency of Shadow in Orthophoto

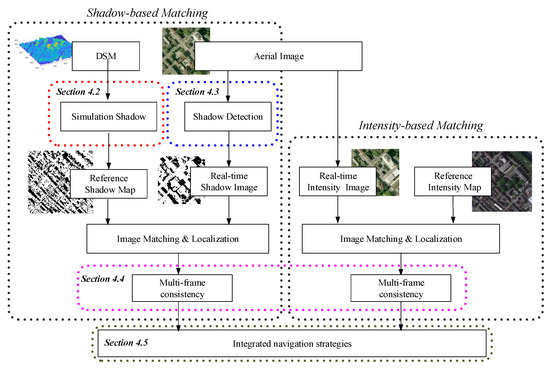

An Orthophoto (OP) is based on an infinite camera model. All pixels in the OP have a uniform spatial resolution. An aerial image, conversely, is based on a finite projection camera model; the pixels of objects at different depths have different spatial resolutions. When the depth of field is at the same level, pixels in aerial images also have a uniform spatial resolution. In urban areas, the shadows of vertical objects are mostly cast on the ground area adjacent to them; therefore, we consider the spatial resolution of shadows to be in good coherence with the ground.

As shown in Figure 3, the shadows in an aerial image and OP image will change as the Sun’s position changes. The pixel positions of roofs and facades in an aerial image also vary as the camera pose (position and attitude) changes. Displacement and occlusion of buildings vary according to the principle of the projection camera model in an aerial image [41]. However, the shadow in an OP is closely consistent with the shadow in the rectified aerial image, regardless of variations in camera pose and the Sun’s position. As described in Section 3, one of the major problems leading to image matching failure is illumination. Shadows affect the robustness of traditional image matching methods. If accurate acquisition time and local DSM data are available, the shadow in a simulated OP will indeed be consistent with the shadow in a rectified aerial image and may be used as a stable feature for matching.

Figure 3.

Building and shadow in aerial image and OP. “Equivalent plane” means that the scale of the aerial image is the same as the scale of the OP.

4.2. Generation of the Reference Shadow Map

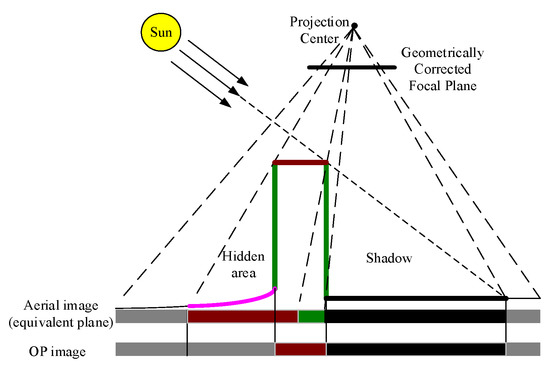

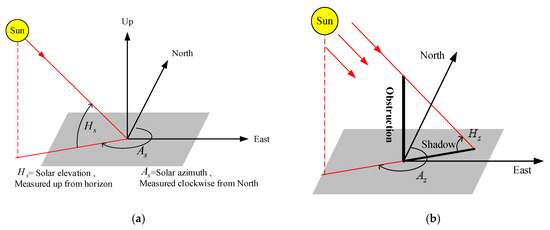

The shadow of an object is related to the solar elevation angle (Figure 4a) and the solar azimuth angle (Figure 4b). Assuming that sunlight is a parallel light source and that atmospheric influence has been calibrated, the shadows of an object in an OP can be simulated by the parallel ray-casting method.

Figure 4.

Shadows of an object in OPs: (a) Solar elevation angle and solar azimuth angle; (b) shadow of an obstruction.

When the geo-referenced DSM data of an observed scene and accurate local time information are known, the local solar elevation angle $\alpha$ and solar azimuth angle $A$ can be calculated as follows:

$$\sin\alpha = \sin\varphi\,\sin\delta + \cos\varphi\,\cos\delta\,\cos h \qquad (1)$$

$$\cos A = \frac{\sin\delta - \sin\alpha\,\sin\varphi}{\cos\alpha\,\cos\varphi} \qquad (2)$$

where $\delta$ is the current declination of the Sun (which can be calculated from the current time), $\varphi$ is the local latitude, and $h$ is the hour angle in the local solar time, which is related to the local longitude and coordinated universal time [42]. In this form, $A$ is measured from north, and the value given by Equation (2) is replaced by $360^\circ - A$ in the afternoon ($h > 0$). After calculating the position of the Sun ($\alpha$, $A$), a simplified ray-tracing [43,44] method (orthographic projection with a parallel light source) is used to generate the current OP shadow image of the DSM data. We refer to this OP shadow image with geolocation information as the “reference shadow map”. Table 2 shows two groups of OP shadow images generated with different simulated ($\alpha$, $A$) based on the DSM data given in Figure 5.
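As an illustration of the Sun-position step, the sketch below computes the solar elevation and azimuth from the latitude, the solar declination, and the hour angle using the standard spherical-astronomy relations. The azimuth convention (clockwise from north) and the function name are assumptions and may differ from the convention used in the paper; the declination and hour angle are taken as inputs rather than derived from the date and time.

```python
import math

def solar_angles(latitude_deg, declination_deg, hour_angle_deg):
    """Return (elevation, azimuth) in degrees; azimuth is clockwise from north (assumed)."""
    phi = math.radians(latitude_deg)
    delta = math.radians(declination_deg)
    h = math.radians(hour_angle_deg)  # negative before solar noon, positive after

    sin_elev = (math.sin(phi) * math.sin(delta)
                + math.cos(phi) * math.cos(delta) * math.cos(h))
    elevation = math.asin(max(-1.0, min(1.0, sin_elev)))

    # East and north components of the unit vector pointing toward the Sun.
    east = -math.cos(delta) * math.sin(h)
    north = (math.sin(delta) * math.cos(phi)
             - math.cos(delta) * math.cos(h) * math.sin(phi))
    azimuth = math.degrees(math.atan2(east, north)) % 360.0
    return math.degrees(elevation), azimuth
```

For example, at a latitude of 47.4° with zero declination and zero hour angle (solar noon at an equinox), the Sun is due south at an elevation of 90° − 47.4° = 42.6°.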

Table 2.

OP shadow images produced by DSM with different solar elevation angles and solar azimuth angles.

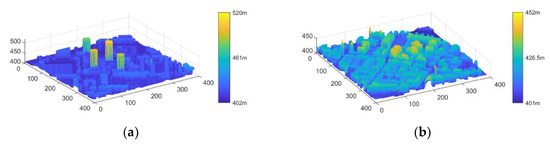

Figure 5.

Urban DSM data. (a) DSM data with skyscrapers; (b) DSM data with ordinary buildings.

The differences in shadows caused by the various positions of the Sun are significant, including the direction, length, and coverage area of the shadows, as shown in Table 2. These variations also contribute to the feature instability and poor correlation of the images. However, when the shadows can be simulated, they can be used as a significant and stable binary feature for matching.
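The parallel ray-casting idea of this section can be sketched as follows. This is a simplified illustration rather than the implementation used in the paper: it assumes a north-up raster DSM (row 0 is the northern edge, columns increase eastward), square cells, nearest-cell sampling along the ray, and a solar azimuth measured clockwise from north. The function name is hypothetical.

```python
import math
import numpy as np

def reference_shadow_map(dsm, cell_size_m, elevation_deg, azimuth_deg):
    """Binary shadow map of a DSM under a parallel light source (simplified sketch)."""
    dsm = np.asarray(dsm, dtype=float)
    rows, cols = dsm.shape
    az = math.radians(azimuth_deg)
    # One-cell step toward the Sun in grid coordinates (north = decreasing row).
    d_row, d_col = -math.cos(az), math.sin(az)
    # Height gained by a sun ray over one cell of horizontal travel.
    rise_per_step = cell_size_m * math.tan(math.radians(elevation_deg))

    rr, cc = np.mgrid[0:rows, 0:cols]
    shadow = np.zeros(dsm.shape, dtype=bool)
    for k in range(1, int(math.hypot(rows, cols)) + 1):
        r = np.rint(rr + k * d_row).astype(int)
        c = np.rint(cc + k * d_col).astype(int)
        inside = (r >= 0) & (r < rows) & (c >= 0) & (c < cols)
        if not inside.any():
            break
        # A cell is shadowed if the surface along its sun ray rises above the ray.
        ray_height = dsm[inside] + k * rise_per_step
        blocked = dsm[r[inside], c[inside]] > ray_height
        shadow[inside] |= blocked
    return shadow
```

With the Sun due south at 45° elevation, a 10 m tall block on flat ground casts a shadow roughly 10 m long toward the north, which is the behavior the sketch reproduces.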

4.3. Shadow Detection from Aerial Photos

The previous section describes how shadows are simulated; the shadows in real aerial images must in turn be detected, which makes shadow detection a critical step. Shadow detection methods applied to aerial photos can be split into three categories: property-based histogram thresholding [45,46,47,48,49,50], model-based [51], and machine learning-based [52]. Property-based histogram thresholding is the simplest among the available methods and also the most popular for shadow detection. These thresholding algorithms provide thresholds automatically without prior knowledge of the distribution. Adeline et al. [53] compared several state-of-the-art shadow detection algorithms and found that the property-based histogram thresholding method is superior. The “first valley” method established by Nagao et al. [48] is the most accurate existing property-based histogram thresholding method. Table 3 lists several popular thresholding methods for shadow detection and briefly describes their shortcomings.

Table 3.

Thresholding methods for shadow detection.

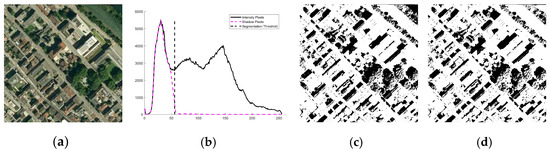

Under strong illumination, objects such as shadows, vegetation, and man-made structures (buildings and roads) have distinguishable distributions in the RGB channels. In this regard, the “first valley” method [48] uses a single channel or a combined channel to accurately determine the shadow area. Nagao et al.’s method determines the threshold by finding the position of the first valley closest to the value of 0 in the histogram of the combined channel and classifies the pixels whose value is smaller than the threshold as shadow (Figure 6c). Unfortunately, it is not a parameter-adaptive method, as the valley width parameter is set to a fixed value. Since the actual valley width is illumination-variant, Nagao et al.’s fixed-width valley method often fails. In most cases, at least one channel of an RGB image has a valley that can be detected as the threshold. In our approach, the three independent channels are therefore used to perform the detection with a dynamic search method so that the threshold adapts to various lighting environments.

Figure 6.

Morphology-based thresholding method. (a) RGB image; (b) histograms of shadow pixels and intensity pixels, where dashed line represents the histogram of true shadow pixels and vertical line represents threshold [48]; (c) shadow detection result by [48]; (d) ground truth.

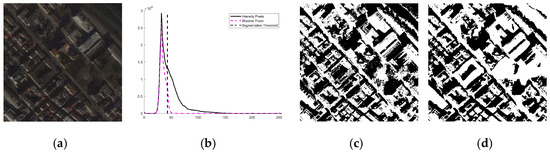

In low-light conditions, the histogram distribution of different object classes fuses into a unimodal distribution which is difficult to divide by the morphology-based thresholding method. Although Chen et al. [50] proposed a unimodal histogram solution by using the position of the first peak as the segmentation threshold, it is likely to result in under-detection. In cases of unimodal distribution, we used a mathematical approach based on the twice hierarchical Otsu method (Xu et al. [54]) (Figure 7).

Figure 7.

Mathematical-based thresholding method. (a) RGB image; (b) histograms of shadow pixels and intensity pixels, where dashed line represents the histogram of true shadow pixels and vertical line represents [54] threshold; (c) shadow detection result by [54]; (d) ground truth.

Therefore, we propose a shadow detection algorithm that combines mathematics-based and morphology-based methods. The algorithm consists of three steps:

- Find the first valley in each of the red, green, and blue channels.

  - (a) Let $w$ be the valley width parameter, with its initial value set to 9.

  - (b) Search the histogram of the channel image for the minimum $v$ that qualifies as a valley under the width parameter $w$. If $v$ exists, the histogram distribution is not unimodal and the value of $v$ is found. Otherwise, go to sub-step (c).

  - (c) Update $w$ and, while $w$ remains within its admissible range, repeat sub-step (b); otherwise set $v$ to 256 to indicate that the search for $v$ has failed for every $w$ and that the histogram of the channel is unimodal.

  After the “first valley” is detected for each channel, the thresholds $T_R$, $T_G$, and $T_B$ of the red, green, and blue channels are obtained.

- If at least one channel is not unimodal, the final shadow image $S$ is obtained as the intersection of the three-channel shadow images $S_R$, $S_G$, and $S_B$, i.e., $S = S_R \cap S_G \cap S_B$.

- If all three channels are unimodal, the range-constrained Otsu method [54] is applied to the combined channel $I$:

  - (a) Evaluate the threshold $T_1$ by the Otsu method [45] over the whole image $I$.

  - (b) Evaluate the threshold $T_2$ by the Otsu method over the pixels of $I$ whose values lie within the range bounded by $T_1$.

  - (c) The final shadow image $S$ is then produced by thresholding $I$ with $T_2$.

The algorithm dynamically searches for valleys to cope with the different valley patterns that appear in the histogram under various illumination conditions, and it falls back on a mathematical approach to handle the unimodal histogram case under low illumination. The proposed shadow detection algorithm is simple and effective for a wide range of lighting conditions and will be used for shadow detection in real-time aerial images.
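The three steps above can be sketched as follows. Several details are assumptions made only for illustration, because they are not recoverable from this section: the valley criterion (a bin that is the minimum of its window, lower than the left window edge, and followed by a higher bin), the width update (decrease by 2 down to 3), the combined channel (mean of R, G, and B), and the constrained Otsu range (pixels not brighter than the first Otsu threshold). All function names are hypothetical.

```python
import numpy as np

def first_valley(hist, w_init=9, w_min=3):
    """First valley of a 256-bin histogram, or 256 if none is found (assumed criterion)."""
    hist = np.asarray(hist, dtype=float)
    w = w_init
    while w >= w_min:
        half = w // 2
        for v in range(half, len(hist) - half):
            window = hist[v - half:v + half + 1]
            is_window_min = hist[v] == window.min()
            falls_from_left = hist[v - half] > hist[v]
            rises_later = hist[v + 1:].max() > hist[v]
            if is_window_min and falls_from_left and rises_later:
                return v
        w -= 2  # assumed update rule: retry with a narrower valley
    return 256  # the histogram is treated as unimodal

def otsu_threshold(values):
    """Otsu threshold t maximizing the between-class variance of {<= t} and {> t}."""
    hist = np.bincount(np.asarray(values).ravel(), minlength=256).astype(float)
    p = hist / hist.sum()
    omega = np.cumsum(p)
    mu = np.cumsum(p * np.arange(256))
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu[-1] * omega - mu) ** 2 / (omega * (1.0 - omega))
    return int(np.argmax(np.nan_to_num(sigma_b)))

def detect_shadow(rgb):
    """Boolean shadow mask of an 8-bit RGB image (H x W x 3)."""
    rgb = np.asarray(rgb, dtype=np.uint8)
    thresholds = [first_valley(np.bincount(rgb[..., ch].ravel(), minlength=256))
                  for ch in range(3)]
    if any(t < 256 for t in thresholds):
        # A unimodal channel has threshold 256 and therefore does not constrain the mask.
        mask = np.ones(rgb.shape[:2], dtype=bool)
        for ch, t in enumerate(thresholds):
            mask &= rgb[..., ch] < t
        return mask
    # All channels unimodal: two-stage Otsu on the assumed combined channel.
    gray = np.rint(rgb.mean(axis=2)).astype(np.uint8)
    t1 = otsu_threshold(gray)
    t2 = otsu_threshold(gray[gray <= t1])
    return gray <= t2
```

Returning 256 for a unimodal channel keeps the intersection step simple, since every 8-bit pixel value is below 256 and such a channel leaves the mask unchanged.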

4.4. Scene Matching with Constraints Based on Multi-Frame Consistency

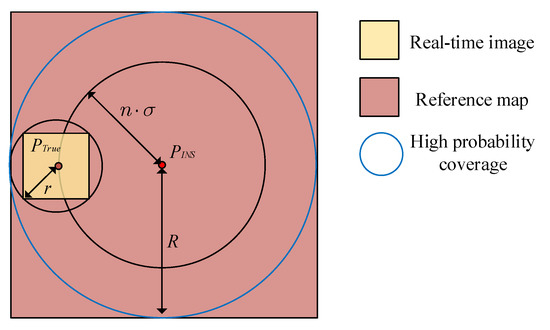

Like Maxar Technologies’ recently validated navigation system using airborne DSM data [55], our system also uses airborne DSM data to generate shadow images online for scene matching navigation. To ensure the efficiency and accuracy of image matching, the range of the reference map must be restricted. Assume that the standard deviation of the INS drift is $\sigma$ and that the point indicated by the INS is taken as the center of the reference map. A circle of radius $3\sigma$ around this center (the black circle in Figure 8) then encloses the real-time image center, i.e., the true position of the UAV, with a probability greater than 99.7%. If the Field of View (FOV) of the camera, measured along the image diagonal, and its Height Above Ground Level (HAGL) are known, the actual ground length $r$ corresponding to the half diagonal of the image can be derived as:

$$r = \mathrm{HAGL}\cdot\tan\left(\frac{\mathrm{FOV}}{2}\right) \qquad (5)$$

Figure 8.

Search area initialization.

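Then, the entire real-time image is contained with high probability within the geographic coordinates of the circle (blue circle in Figure 8) whose radius $R$ is the sum of the $3\sigma$ INS drift radius and the half-diagonal ground length $r$ of the image:

$$R = 3\sigma + r \qquad (6)$$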

The circumscribed square of this circle (centered at the point indicated by the INS, with the radius given above) is taken as the range of the reference shadow map (orange area in Figure 8). After obtaining the DSM data for that range from the onboard database, the reference shadow map is constructed using the approach outlined in Section 4.2. Finally, the real-time shadow image is matched with the reference shadow map using the Normalized Cross-Correlation algorithm [16].
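The search-area initialization and the final matching step can be sketched as follows. The radius computation assumes that the FOV is measured along the image diagonal, and the matcher is a plain brute-force zero-mean normalized cross-correlation written for clarity rather than speed; both function names are hypothetical, and the reference map is assumed to have been resampled to the resolution of the real-time image beforehand.

```python
import math
import numpy as np

def search_radius(sigma_m, hagl_m, diagonal_fov_deg):
    """Radius enclosing the whole real-time image: 3-sigma drift plus half diagonal."""
    half_diagonal = hagl_m * math.tan(math.radians(diagonal_fov_deg) / 2.0)
    return 3.0 * sigma_m + half_diagonal

def ncc_match(reference, template):
    """Return ((row, col), score) of the best NCC match of template inside reference."""
    ref = np.asarray(reference, dtype=float)
    tpl = np.asarray(template, dtype=float)
    th, tw = tpl.shape
    t0 = tpl - tpl.mean()
    t_norm = np.sqrt(np.sum(t0 * t0))
    best_score, best_pos = -1.0, (0, 0)
    for r in range(ref.shape[0] - th + 1):
        for c in range(ref.shape[1] - tw + 1):
            win = ref[r:r + th, c:c + tw]
            w0 = win - win.mean()
            denom = t_norm * np.sqrt(np.sum(w0 * w0))
            # Windows without any contrast carry no information; score them as 0.
            score = np.sum(t0 * w0) / denom if denom > 0 else 0.0
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, float(best_score)
```

The returned position is the top-left corner of the best window; adding half the template size and converting pixels to map coordinates gives the estimated image center.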

Note that the spatial resolution of the reference map must be adapted to that of the aerial image by resampling the reference map, so the spatial resolution of the aerial image must be estimated first. The Spatial Resolution ($SR_x$, $SR_y$) along the two axes of the aerial image coordinates can be derived from the HAGL and the camera parameters as in Equation (7) ($SR_x$ is usually equal to $SR_y$):

$$SR_x = \frac{2\,\mathrm{HAGL}\cdot\tan(\mathrm{FOV}_x/2)}{W}, \qquad SR_y = \frac{2\,\mathrm{HAGL}\cdot\tan(\mathrm{FOV}_y/2)}{H} \qquad (7)$$

where $\mathrm{FOV}_x$, $\mathrm{FOV}_y$ are the camera’s fields of view along the two axes of the image plane, and ($W$, $H$) represents the size of the real-time image [56]. The HAGL of the UAV is calculated from the local height of the DSM combined with the measurement of the barometric altimeter.
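A small numerical sketch of the resolution estimate follows. It assumes a nadir-looking pinhole camera over locally flat ground, so that the ground footprint along each axis is twice the HAGL times the tangent of half the field of view; the function names and the scale-factor helper are hypothetical.

```python
import math

def spatial_resolution(hagl_m, fov_x_deg, fov_y_deg, width_px, height_px):
    """Ground size of one pixel (metres) along the two image axes (assumed model)."""
    sr_x = 2.0 * hagl_m * math.tan(math.radians(fov_x_deg) / 2.0) / width_px
    sr_y = 2.0 * hagl_m * math.tan(math.radians(fov_y_deg) / 2.0) / height_px
    return sr_x, sr_y

def reference_scale_factor(reference_res_m, aerial_res_m):
    """Zoom factor that resamples the reference map to the aerial resolution."""
    return reference_res_m / aerial_res_m
```

For instance, a 0.5 m reference map matched against 0.25 m aerial imagery would be upsampled by a factor of 2.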

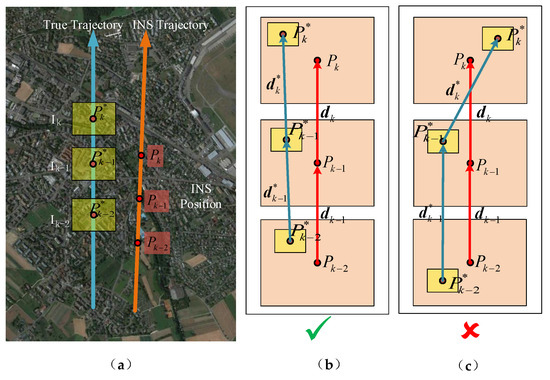

Matching the aerial image within the reference map is the core of SMN, but the result of a single match is not always reliable: any matching algorithm returns a matching position, and this position may not be the correct one. It is therefore extremely important to judge whether the matching result is correct. In this paper, an a posteriori method based on multi-frame consistency constraints is proposed to assess the correctness of the matching results.

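The relative drift error of the INS is small over a short period. If the image matching results are correct, the relative positions between the matching results should therefore be consistent with the relative positions indicated by the INS. On this basis, we formulate a decision criterion based on multi-frame consistency constraints (the number of consecutive frames used for the judgment in this paper is set to 3). Assume that the three consecutive frames of the real-time image are $I_1$, $I_2$, and $I_3$, as illustrated in Figure 9a. The coordinates obtained after image matching are $M_1$, $M_2$, and $M_3$, which form the vectors $\mathbf{m}_1 = \overrightarrow{M_1M_2}$ and $\mathbf{m}_2 = \overrightarrow{M_2M_3}$; the positions indicated by the INS for the three frames are $N_1$, $N_2$, and $N_3$, which form the vectors $\mathbf{n}_1 = \overrightarrow{N_1N_2}$ and $\mathbf{n}_2 = \overrightarrow{N_2N_3}$. The four constraint terms in Equation (8) can then be used to represent the multi-frame restriction:

$$\theta_1 = \angle(\mathbf{m}_1, \mathbf{n}_1), \quad \theta_2 = \angle(\mathbf{m}_2, \mathbf{n}_2), \quad d_1 = \big|\,\|\mathbf{m}_1\| - \|\mathbf{n}_1\|\,\big|, \quad d_2 = \big|\,\|\mathbf{m}_2\| - \|\mathbf{n}_2\|\,\big| \qquad (8)$$

where $\theta_1$ is the angle between vectors $\mathbf{m}_1$ and $\mathbf{n}_1$, $\theta_2$ is the angle between vectors $\mathbf{m}_2$ and $\mathbf{n}_2$, $d_1$ is the difference in length between vectors $\mathbf{m}_1$ and $\mathbf{n}_1$, and $d_2$ is the difference in length between vectors $\mathbf{m}_2$ and $\mathbf{n}_2$. $\theta_1$ and $\theta_2$ should be smaller than the threshold $T_\theta$; $d_1$ and $d_2$ should be smaller than the threshold $T_d$. We consider the matching positions obtained by image matching credible if the above conditions hold. The larger the thresholds $T_\theta$ and $T_d$ are set, the greater the tolerance for matching error. Figure 9 shows a schematic of the constraints based on multi-frame consistency.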

Figure 9.

Constraints based on multi-frame consistency. (a) Schematic diagram; (b) example of a correct match; (c) example of an incorrect match. (In (b,c), the blue and red arrows denote the two sets of displacement vectors being compared, i.e., those formed by the matching results and those formed by the INS positions.)
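The consistency test can be sketched as follows. The function compares, for each pair of consecutive frames, the displacement vector obtained from image matching with the one indicated by the INS, and accepts the match only if both the angle and the length difference stay below their thresholds. The threshold values are not given in this section, so they are left as parameters; the function names are hypothetical.

```python
import math

def _displacement(p, q):
    return (q[0] - p[0], q[1] - p[1])

def _angle_deg(u, v):
    cross = u[0] * v[1] - u[1] * v[0]
    dot = u[0] * v[0] + u[1] * v[1]
    return math.degrees(math.atan2(abs(cross), dot))

def multi_frame_consistent(match_pts, ins_pts, max_angle_deg, max_length_diff_m):
    """True if matching and INS displacements agree over consecutive frames."""
    for i in range(len(match_pts) - 1):
        m = _displacement(match_pts[i], match_pts[i + 1])
        n = _displacement(ins_pts[i], ins_pts[i + 1])
        if _angle_deg(m, n) >= max_angle_deg:
            return False
        if abs(math.hypot(*m) - math.hypot(*n)) >= max_length_diff_m:
            return False
    return True
```

A constant offset between the matched positions and the INS positions, which is exactly what accumulated INS drift looks like over a short interval, does not violate the constraint because only displacements are compared.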

Furthermore, consecutive real-time aerial images should not overlap, which eliminates the correlation between them. If the UAV’s height above the ground is $h_g$ and the diagonal field of view of the on-board camera is DFOV, the ground length $L$ of the diagonal of the real-time image is:

$$L = 2\,h_g\cdot\tan\left(\frac{\mathrm{DFOV}}{2}\right) \qquad (9)$$

This system is mainly intended to correct the positioning error of the UAV in the cruise phase, and we assume that the vehicle neither performs large flight maneuvers nor changes speed. Therefore, the horizontal speed $v$ provided by the INS is used as a reference to calculate the time interval of image sampling so that the captured images do not overlap. The camera sampling interval $\Delta t$ for which two consecutive frames do not overlap must satisfy:

$$\Delta t \geq \frac{L}{v} \qquad (10)$$
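The non-overlap condition can be illustrated numerically. The sketch assumes that DFOV denotes the diagonal field of view, that the ground footprint diagonal is twice the height above ground times the tangent of half the DFOV, and that frames are separated by at least one full diagonal of travel at the INS horizontal speed; the function names are hypothetical.

```python
import math

def footprint_diagonal(height_above_ground_m, dfov_deg):
    """Ground length of the image diagonal for a nadir-looking camera (assumed model)."""
    return 2.0 * height_above_ground_m * math.tan(math.radians(dfov_deg) / 2.0)

def min_sampling_interval(height_above_ground_m, dfov_deg, horizontal_speed_mps):
    """Shortest interval (seconds) after which consecutive frames no longer overlap."""
    if horizontal_speed_mps <= 0:
        raise ValueError("horizontal speed must be positive")
    return footprint_diagonal(height_above_ground_m, dfov_deg) / horizontal_speed_mps
```

For example, at 500 m above ground with a 90° diagonal field of view, the footprint diagonal is about 1000 m, so a UAV flying at 50 m/s would sample roughly every 20 s.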

4.5. Integrated Navigation Strategies

The SbM method is not suitable for every scenario; in flat areas, for example, the IbM method is more suitable. Therefore, we combine the two methods to obtain better localization performance. The matching results of the IbM method are also evaluated using the same multi-frame consistency constraint. Finally, the available results of the two matching methods are used to correct the drift error of the INS by the following fusion strategy:

- If only one of the SbM and IbM results is available, the available one is used.

- If both the SbM and IbM results are available, the final matching result is the arithmetic mean of the two.

- If neither the SbM nor the IbM result is available, the position update is based on the INS only.
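The fusion strategy above can be written as a small selection function. In this sketch, a result that failed the multi-frame consistency check is represented by `None`, and positions are two-dimensional tuples; both choices, and the function name, are assumptions made for illustration.

```python
def fuse_matches(sbm_position, ibm_position):
    """Combine SbM and IbM positions; None means 'fall back on the INS only'."""
    if sbm_position is not None and ibm_position is not None:
        # Both results passed the consistency check: use their arithmetic mean.
        return ((sbm_position[0] + ibm_position[0]) / 2.0,
                (sbm_position[1] + ibm_position[1]) / 2.0)
    if sbm_position is not None:
        return sbm_position
    if ibm_position is not None:
        return ibm_position
    return None
```

When the function returns `None`, no correction is applied and the navigation solution continues to propagate from the inertial measurements alone.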

5. Experimental Results

We further investigated the proposed SbM applied to SMN via a series of experiments. Below, Section 5.1 presents the experimental data supporting this work. Section 5.2 verifies the shadow detection algorithm suggested in this paper. We perform experiments and analyses of SbM and IbM in Section 5.3.

5.1. Data Acquisition and Evaluation Metrics

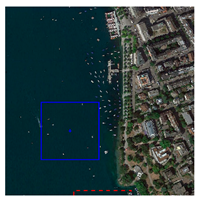

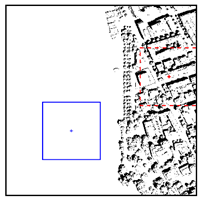

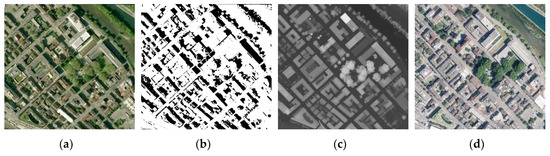

The experiment is based on four different sources of data (Figure 10):

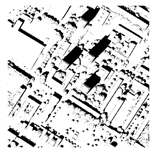

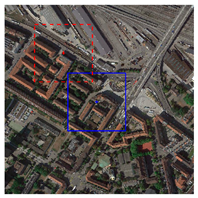

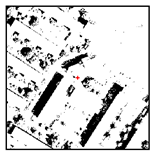

Figure 10.

Four kinds of experimental data. (a) Google Earth image; (b) ground truth of shadow; (c) DSM; (d) aerial image.

- Satellite images from Google Earth that have a spatial resolution of 0.5 m. Photographs from Google Earth are used to test shadow detection algorithms and in navigation experiments as geo-referenced images.

- Ground truth shadow images for assessing the shadow detection algorithm. All images used in the evaluation of the shadow detection algorithm were manually annotated to generate the corresponding ground truth shadow images.

- Rasterized DSM data at 0.5 m spatial resolution from the GIS information project of the cantonal government of Zurich (http://maps.zh.ch/, accessed on 18 March 2021).

- Downward-looking aerial image with a resolution of 0.25 m taken in the summer of 2018 over Zurich. In the navigation experiment, this aerial photograph was used as a real-time image.

(The SMN scheme provided in this paper is to solve the navigation problem of UAVs in GPS-denied environments in urban areas and is not specific to a certain city. The Zurich area is used as the simulation experimental scenario because Zurich provides available DSM data and aerial imagery).

We used four indexes (Table 4) to assess the effectiveness of the shadow detection method. If the shadow detection is positive and the actual shadow value is also positive, it is marked as a True Positive (TP); if the shadow is detected but the reference shadow image is negative, it is a False Positive (FP). A True Negative (TN) means both the shadow detection outcome and the reference shadow value are negative; a False Negative (FN) means the shadow detection outcome is negative while the reference shadow value is positive. (Indicators in Table 4 are commonly used to evaluate the performance of shadow detection algorithms. For example, a high producer’s accuracy coupled with a low user’s accuracy indicates the over-detection of shadows, whereas a low producer’s accuracy coupled with a high user’s accuracy implies the under-detection of shadows [53]. The overall accuracy and F-score are used here as comprehensive evaluation metrics for shadow detection algorithms).

Table 4.

Accuracy and evaluation metrics for shadow detection.
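The four indexes can be computed from the confusion counts as sketched below. The formulas are the definitions commonly used in the shadow detection literature (producer’s accuracy as TP/(TP+FN), user’s accuracy as TP/(TP+FP), overall accuracy as (TP+TN)/N, and the F-score as the harmonic mean of the producer’s and user’s accuracies); they are assumed to correspond to Table 4, whose contents are not reproduced here. The function name is hypothetical.

```python
import numpy as np

def shadow_metrics(detected, ground_truth):
    """Producer's, user's, overall accuracy and F-score of a binary shadow mask."""
    det = np.asarray(detected, dtype=bool)
    ref = np.asarray(ground_truth, dtype=bool)
    tp = int(np.sum(det & ref))
    fp = int(np.sum(det & ~ref))
    fn = int(np.sum(~det & ref))
    tn = int(np.sum(~det & ~ref))
    producer = tp / (tp + fn) if tp + fn else 0.0
    user = tp / (tp + fp) if tp + fp else 0.0
    overall = (tp + tn) / det.size
    f_score = (2.0 * producer * user / (producer + user)
               if producer + user else 0.0)
    return {"producer": producer, "user": user,
            "overall": overall, "f_score": f_score}
```

A mask that marks every pixel as shadow reaches a producer’s accuracy of 1 but a low user’s accuracy, which is the over-detection pattern described in the text.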

5.2. Shadow Detection and Evaluation

Shadow detection for real-time aerial images is a prerequisite for shadow matching, and a robust shadow detection method should be applicable under various lighting situations and ground scenarios. To test the proposed shadow detection algorithm, we chose five locations in Zurich (P1 to P5) with scenes that included high buildings, low buildings, vegetation, and water. For each of these five locations, we obtained Google Earth images from six different historical acquisition dates (T1 to T6). These satellite images, along with their acquisition date, solar azimuth angle, and elevation angle, are presented in Figure 11 and Table 5.

Figure 11.

Five groups of RGB images from Google Earth.

Table 5.

Solar azimuth angle and solar elevation angle for P1 to P5.

Table 6 lists the shadow detection accuracy of the methods established by Chen et al. [50], Xu et al. [54], Tsai [46], and Otsu [45], together with that of the proposed method. Among them, Chen et al.’s [50], Xu et al.’s [54], and Otsu’s [45] methods were tested with a combined channel; Tsai’s method [46] was deployed in HSI space. We generated four sets of experimental results applied to all images for comparison accordingly. All ground truth shadow images were prepared manually to calculate the evaluation metrics of the algorithms. The scores of our method were consistently better than those of the other methods in the average indices of overall accuracy and F-score.

Table 6.

Shadow detection results in the five image groups. (The highest values in the “Overall Accuracy” and “F-Score” columns are bolded, and the lowest value in the “Time Cost” column is bolded.)

The Otsu method [45] selects the threshold that maximizes the between-class variance. It is mainly used for binary classification problems. However, an actual scene often contains three categories (shadow, building, plant), so Otsu’s method [45] as applied to the combined channel tends toward over-detection, and in this study, it produced the worst results. Tsai’s method [46] performed slightly better. Tsai’s method is based on the Otsu method in the transform domain, which enhances its performance and effectively distinguishes water bodies from shadows but does not solve the multi-classification problem. For the five groups of image data (except for images in T1), a significant “first valley” exists in the histograms of the combined channel or the independent channels.
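The between-class variance criterion of the Otsu method [45] can be sketched in a few lines of Python. This is a minimal illustration of the classical two-class algorithm on a flat list of 8-bit values, not the proposed method or any of the compared implementations; the function name `otsu_threshold` and the toy data are assumptions made for the example.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes the between-class variance.

    Pixels with value <= t form class 0 (e.g., shadow candidates),
    the remaining pixels form class 1.
    """
    hist = [0] * levels
    for value in pixels:
        hist[value] += 1

    total = len(pixels)
    total_sum = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0      # number of pixels in class 0
    sum0 = 0    # sum of gray levels in class 0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, variance undefined
        mu0 = sum0 / w0
        mu1 = (total_sum - sum0) / w1
        var_between = (w0 / total) * (w1 / total) * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


# Toy bimodal data: dark "shadow" pixels and bright "non-shadow" pixels.
pixels = [50] * 100 + [200] * 100
t = otsu_threshold(pixels)
shadow_mask = [value <= t for value in pixels]
print(t, sum(shadow_mask))
```

Because the criterion only ever splits the histogram into two classes, a scene with three dominant categories has no single threshold that separates shadows cleanly, which is consistent with the over-detection noted above.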

The threshold obtained by using the morphology-based first valley detection method is closer to the ideal threshold than that obtained by the mathematically based methods [45,46,54]. However, the width of the first valley becomes smaller as the light decreases. Detecting the first valley in the histogram is likely to fail when fixed parameters are used (Chen et al. [50]). Our method, however, adjusts its parameters dynamically and performs well regardless of changes in illumination. In the case of a unimodal distribution (images in T1), the morphology-based valley detection method fails. Chen et al.’s method [50] uses the gray value at the peak as a threshold, resulting in under-detection. Both our method and Xu et al.’s method [54] adopt the limited range Otsu method; the detection results are optimal under the unimodal distribution.

We calculated the average time consumption of the compared algorithms on the shadow detection dataset (the sizes of the images in Figure 11 are all 801 × 801). The shadow detection algorithms were tested on a laptop with an Intel i5-3230M CPU, 8 GB of RAM, and the MATLAB 2020 platform. The time consumption of the compared algorithms is shown in the last column of Table 6. Tsai [46] and Chen et al. [50] are less efficient because domain transformation operations from RGB to HSI are performed in these two algorithms, and Tsai [46] carries out the histogram equalization operation twice. Otsu [45] is the most efficient, while Xu et al. [54] performs the Otsu calculation twice, so the computational efficiency of Xu et al. [54] is lower than that of Otsu [45]. The cost of our algorithm is dominated by the iterative search for “the first valley” of the histogram; its efficiency ranks in the middle of the five compared algorithms, but it can meet the demand of real-time detection.

5.3. Simulation and Experiment

To demonstrate the application and performance of our proposed method, we designed a flight route in Zurich for simulated flight, as shown in Figure 12. The aerial images were taken on 24 July 2018, with the solar azimuth angle and solar elevation angle at 134.11° and 61.26°, respectively. The drone flew for 681 s and covered a total distance of 11.3 km at a horizontal speed of 60 km/h. The drone was outfitted with a gimbal camera that allowed it to take downward-looking photographs. The camera had a field of view of 77 degrees and captured images at a resolution of 720 × 720 pixels.

Figure 12.

Flight area and trajectory, and locations of aerial images acquisition. (The yellow line represents the flight trajectory; the blue paddles represent the location of aerial image acquisition; the red paddle is the start and end of the flight trajectory).

Aerial images with a pixel resolution of 0.25 m were taken at an altitude of 200 m above the ground with a 10 s interval, and a total of 68 real-time images were taken during the flight. Three scene matching navigation modes were used on the flight trajectory, namely, the Shadow-based Matching (SbM) method, the Intensity-based Matching (IbM) method (matching aerial photographs with six independent reference intensity maps), and the Fusion Matching (FM) method, which combines the two. For the constraints based on multi-frame consistency, the condition parameters α and β in Equation (8) are set to 9° and 40 pixels. The measurement error of height over ground is assumed to originate from the barometric altimeter, and this error is set to a random error within ±2% of the flight altitude (based on the empirical confidence bounds of a barometric altimeter [57]). We calculated the Root Mean Square Error (RMSE) between the true position and the output position of the four navigation modes (IMU, IbM, SbM, and FM), as shown in Table 7.

Table 7.

RMSE comparison of different navigation modes. (The smallest value in each column is bolded.)
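For readers who want to reproduce this kind of comparison, the sketch below shows one plausible way to compute the radial error of each position fix and the resulting RMSE from east/north coordinates in meters. Treating the RMSE as the root mean square of the radial errors is an assumption made here for illustration, and the function names and sample coordinates are hypothetical.

```python
import math


def radial_errors(estimated, truth):
    """Horizontal distance (m) between each estimated and true position.

    Both arguments are sequences of (east, north) tuples in meters.
    """
    return [
        math.hypot(e_east - t_east, e_north - t_north)
        for (e_east, e_north), (t_east, t_north) in zip(estimated, truth)
    ]


def rmse(estimated, truth):
    """Root mean square of the radial errors (assumed definition)."""
    errors = radial_errors(estimated, truth)
    return math.sqrt(sum(err * err for err in errors) / len(errors))


# Hypothetical positions for two frames.
truth = [(0.0, 0.0), (100.0, 50.0)]
estimated = [(3.0, 4.0), (100.0, 50.0)]
print(radial_errors(estimated, truth))  # per-frame radial error
print(rmse(estimated, truth))           # aggregate RMSE
```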

Among all the results of the IbM, group T3 has the worst result (42.09 m) and is the worst of all the scene matching results. Although the reference image T3 was taken in a similar season to that of the real-time image (same seasonal factors), the difference between the solar azimuth of T3 (251.44°) and that of the real-time image (134.11°) is the largest among the six groups of reference images. This indicates that the performance of the IbM is seriously affected when the difference between the solar azimuth of the real-time image and the reference map is large. The IbM results on different reference maps are inferior to the SbM result, which shows that the SbM is more robust for scene matching under illumination variations. The FM mode further reduces the RMSE compared to SbM (reflected in five groups out of six reference maps).

For each of the three scene matching modes (IbM, SbM, FM), we calculated the number of available image frames evaluated by the multi-frame constraint. In addition, as shown in the last two rows of Table 8, the number of images adopting fusion strategy (1) in FM mode was calculated. “IbM (P/N)” in Table 8 denotes a Positive (P) or Negative (N) multi-frame constraint result for IbM, and “SbM (P/N)” is defined in the same way for SbM. The average of the above numbers was also calculated as a percentage of the 66 real-time images (excluding the first two frames, which cannot be evaluated by the multi-frame constraint), as shown in the last column of Table 8. The ratio of available frames of SbM (78.8%) to total frames is higher than that of IbM (42.4%), indicating that SbM is more applicable to the scene. The ratio of IbM (N) and SbM (P) to total frames means that 40.9% of the images can be successfully matched with SbM only. However, SbM is not a complete substitute for IbM: the ratio of IbM (P) and SbM (N) to total frames indicates that 4.5% of the images are available for IbM only. FM has the highest ratio (83.3%) of available frames to total frames in the comparison, indicating that it successfully combines the advantages of both scene matching methods.

Table 8.

The number of available image frames.
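The reported percentages can be cross-checked with simple set arithmetic. The sketch below assumes that a frame is available to FM whenever it is available to at least one of SbM and IbM; this assumption and the function name `fusion_breakdown` are illustrative, while the input values are the averages quoted in the text.

```python
def fusion_breakdown(sbm, ibm, sbm_only, ibm_only):
    """Split availability percentages into disjoint parts.

    sbm, ibm           : share of frames available to each method
    sbm_only, ibm_only : share of frames available to exactly one method
    Assumes FM is available when either method is available.
    """
    both_via_sbm = sbm - sbm_only   # frames matched by both, derived from SbM
    both_via_ibm = ibm - ibm_only   # same quantity, derived from IbM
    fm_expected = sbm_only + ibm_only + both_via_sbm
    return {
        "both_via_sbm": both_via_sbm,
        "both_via_ibm": both_via_ibm,
        "fm_expected": fm_expected,
    }


# Average percentages reported in the text.
result = fusion_breakdown(sbm=78.8, ibm=42.4, sbm_only=40.9, ibm_only=4.5)
print({name: round(value, 1) for name, value in result.items()})
```

Both derivations give about 37.9% of frames matched by both methods, and the three disjoint parts add up to the 83.3% reported for FM.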

We also statistically calculated the average radial error of the available image frames for IbM/SbM in each group of experiments, as well as the total average radial error, as shown in Table 9. The radial error is the distance between the matching position provided by IbM/SbM and the true position. The statistics of the average radial error also indicate that the matching performance of SbM is significantly superior to that of IbM.

Table 9.

The average radial error of available matching results (m).

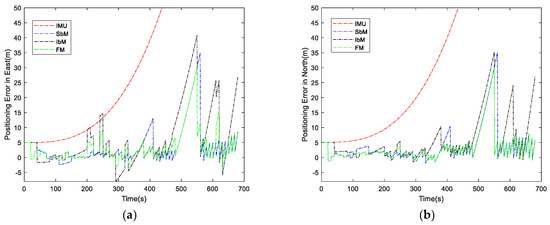

Taking T5 as the reference map, for example, the navigation performance of the three matching modes and IMU is shown in Figure 13. The x-axis represents time and the y-axis represents the error in meters.

Figure 13.

Performance of the four navigation modes. (a) Positioning error in east direction; (b) positioning error in north direction.

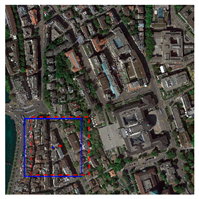

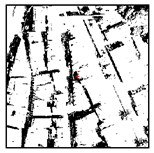

We selected the moments at 121 s, 151 s, 171 s, 521 s, and 611 s to show the typical matching results of SbM and IbM in Table 10. The blue bounding box in the reference map is the ground truth position of the UAV, and the red dashed bounding box is the position obtained by matching. At 121 s, the IbM method successfully matches, but the SbM method fails because there is insufficient shadow in both the reference shadow map and the real-time shadow image of the region. The proportion of shadows detected in the real-time shadow image is only 5.04%, and the proportion of shadows in the reference shadow map is only 6.47%. The moments at 151 s and 171 s are examples of successful SbM and failed IbM, and the availability of SbM results is higher than that of IbM over the whole trajectory. At 521 s, the UAV flew over Lake Zurich, and the uninformative lake image led to the failure of the shadow detection algorithm; both the SbM mode and the IbM mode failed. In the time range of 482 s to 550 s of the flight trajectory, which was mostly over the lake, neither of the two scene matching modes could obtain a valid matching result, so the IbM, SbM, and FM modes continuously maintain the offset of the IMU, as seen in Figure 13. At 611 s, both SbM and IbM obtain valid matching results, but the localization error of IbM is slightly larger than that of SbM.

Table 10.

Typical matching results of SbM and IbM. (The red “+” is the center of the real-time image and the blue “+” is the actual position of the center of the real-time image in the reference map.)

To verify the scene matching performance of the low-flying UAV at different altitudes, we scaled the 0.25 m spatial resolution images based on the relationship between altitude and image pixel resolution, and simulated real-time aerial images for HAGLs from 200 m to 1200 m. The flight trajectory, flight speed, and camera parameters are the same as in the above experimental settings. However, as the flight altitude increases, the range of scenes contained in each image increases accordingly; to keep adjacent images from overlapping, the shooting interval of the real-time images was increased gradually, and the number of images taken was reduced from 68 at 200 m to 12 at 1200 m. A random error within ±2% of the flight altitude was added to the HAGL measurements. Monte Carlo simulations were used to model the matching performance at different HAGLs from 200 m to 1200 m (the positioning results used for the statistical analysis satisfy the constraints of the multi-frame condition).
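The altitude-dependent simulation can be sketched as follows. The code assumes a nadir-looking camera with a fixed field of view, so that the pixel resolution grows linearly with HAGL from the 0.25 m baseline at 200 m, and it draws the altimeter error from a uniform distribution within ±2% of the altitude. The linear model, the uniform distribution, and all function names are assumptions made for illustration rather than the exact procedure used in the experiments.

```python
import random

BASE_GSD_M = 0.25     # pixel resolution of the source images (m/pixel)
BASE_HAGL_M = 200.0   # altitude at which the source images were taken (m)
IMAGE_SIZE_PX = 720   # side length of the real-time image (pixels)


def gsd_at(hagl_m):
    """Pixel resolution (m/pixel) at a given HAGL, assuming linear scaling."""
    return BASE_GSD_M * hagl_m / BASE_HAGL_M


def source_crop_px(hagl_m):
    """Side length (in 0.25 m source pixels) of the area seen at this HAGL.

    Downsampling this crop to IMAGE_SIZE_PX simulates the higher-altitude view.
    """
    return round(IMAGE_SIZE_PX * hagl_m / BASE_HAGL_M)


def noisy_hagl(hagl_m, rng, max_rel_error=0.02):
    """HAGL measurement with a random error within +/-2% (assumed uniform)."""
    return hagl_m * (1.0 + rng.uniform(-max_rel_error, max_rel_error))


rng = random.Random(0)  # fixed seed so a Monte Carlo run is repeatable
for hagl in (200, 400, 800, 1200):
    print(hagl, gsd_at(hagl), source_crop_px(hagl), noisy_hagl(hagl, rng))
```

Under these assumptions, an image simulated for 1200 m has a pixel resolution of 1.5 m and is obtained by downsampling a 4320 × 4320 pixel crop of the 0.25 m data by a factor of six.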

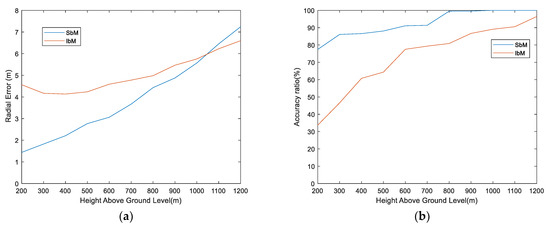

Figure 14a presents the statistical radial errors (in meters) of SbM and IbM at different HAGLs. The radial error of SbM ranges from 1.44 m to 7.24 m, and that of IbM ranges from 4.13 m to 6.60 m. The radial error of SbM increases nearly linearly with increasing HAGL (200 m to 1200 m). The radial error of IbM tends to decrease in the HAGL range of 200 m to 400 m and increases nearly linearly at HAGLs over 400 m. For HAGLs below 1000 m, the radial error of SbM is lower than that of IbM, while for HAGLs over 1000 m, it is higher than that of IbM. The radial error of IbM does not grow linearly in the HAGL range from 200 m to 400 m; we attribute this to the large proportion of images with local inconsistency between the real-time image and the reference map caused by variations in illumination and perspective, which brings a larger error. As the HAGL increases, more scene information (including more flat areas) is captured in the real-time image, making the correlation between the real-time image and the reference map gradually increase, while the impact of local detail inconsistency decreases.

Figure 14.

Scene matching performance with IbM and SbM at different HAGLs. (a) Radial error; (b) accuracy ratio.

Figure 14b shows the accuracy ratio of available images to total images at different HAGLs. The ratio of SbM ranged from 77.4% to 100%, while the ratio of IbM ranged from 33.7% to 96.4%. The accuracy ratios of SbM are higher than those of IbM at all tested HAGLs. In addition to illumination and perspective factors, at lower HAGLs, the range of scenes contained in the image is smaller and the probability of containing single-mode scenes is greater (e.g., all flat land or all buildings), giving both SbM and IbM a higher probability of mismatch (lower accuracy ratio). As HAGL increases, the range of scenes contained in the image increases, the probability of a single-mode scene occurring decreases, and the accuracy ratio of both increases. The above experiments at different HAGLs illustrate that shadows can be used as an independent, less disturbed feature for SMN.

6. Discussion

In summary, the proposed shadow detection method, which combines the advantages of morphology-based and mathematical methods, shows the highest average score on the tested data among the various methods we used for this experiment. It also shows greater robustness in dealing with various lighting conditions compared to the other methods. It can also meet the demand for real-time detection. However, the proposed shadow detection method will have over-detection problems in river areas and images with a small proportion of shadows. The under-detection phenomenon will occur in the parts of roads and vehicles covered by shadows. These problems are the inherent defects of the threshold segmentation algorithm.

This paper explores the effect of shadows as interference factors on image matching, and, on the other hand, verifies that shadows can be used as a binary feature for image matching. Based on the work in [18], this paper combines the advantages of IbM to further improve the performance of SMN based on SbM. The research in this paper also proposes new directions and ideas for the matching problem of 2D images and DSMs.

7. Conclusions

Scene matching navigation methods based on a fixed reference map do not perform adequately when there are changes in shadows, light, and viewing angles in the images. This study was conducted to determine the influence of shadows on image matching and to test the feasibility of using shadows as a feature for scene matching navigation operations. A shadow-based matching method was developed which has higher matching accuracy and robustness against illumination variations than the intensity-based matching method under different lighting. A robust shadow detection method based on a combination of mathematical and morphological methods is proposed in this paper. The proposed method allows for effective thresholding for detecting shadows in aerial images under different illumination conditions and with various distributions. It has higher robustness and detection accuracy than state-of-the-art thresholding methods for shadow detection.

The SbM method is not suitable for flat areas with scarce shadows, while the IbM method has advantages in flat areas. Therefore, we propose a fusion navigation model based on both methods. Experimental results demonstrated that the proposed method is feasible and robust even when viewpoint positions and illumination between the matching pair markedly differ. This approach can be applied in UAV navigation systems under GPS-denied circumstances.

We did find that the SbM is not applicable in all weather conditions. In the case of rainy or cloudy weather, shadow information might be too difficult to obtain for our method to function properly. Vegetation shadows also change with the season, which affects the accuracy of the shadow matching technique. In the future, we will build an additional model of vegetation shadows changing with the seasons and investigate image matching issues under cloudy conditions.

Author Contributions

Conceptualization, H.W.; methodology, H.W. and N.L.; software, H.W. and N.L.; validation, H.W., N.L. and Z.L.; formal analysis, Y.C., N.L. and H.W.; investigation, H.W. and N.L.; resources, H.W., Y.C. and Y.Z.; data curation, H.W. and N.L.; writing—original draft preparation, H.W.; writing—review and editing, Y.C., Y.Z., J.C.-W.C. and N.L.; visualization, H.W.; supervision, Y.C.; project administration, Y.C. and Y.Z.; funding acquisition, Y.C. and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant numbers 61771391, 61371152, and 61603364.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Grewal, M.S.; Weill, L.R.; Andrews, A.P. Global Positioning Systems, Inertial Navigation, and Integration; John Wiley & Sons: New York, NY, USA, 2007; ISBN 0470099712.

2. Tang, Y.; Jiang, J.; Liu, J.; Yan, P.; Tao, Y.; Liu, J. A GRU and AKF-Based Hybrid Algorithm for Improving INS/GNSS Navigation Accuracy during GNSS Outage. Remote Sens. 2022, 14, 752.

3. Piasco, N.; Sidibé, D.; Demonceaux, C.; Gouet-Brunet, V. A survey on Visual-Based Localization: On the benefit of heterogeneous data. Pattern Recognit. 2018, 74, 90–109.

4. Kim, Y.; Park, J.; Bang, H. Terrain-Referenced Navigation using an Interferometric Radar Altimeter. Navig. J. Inst. Navig. 2018, 65, 157–167.

5. Jin, Z.; Wang, X.; Moran, B.; Pan, Q.; Zhao, C. Multi-Region Scene Matching Based Localisation for Autonomous Vision Navigation of UAVs. J. Navig. 2016, 69, 1215–1233.

6. Choi, S.H.; Park, C.G. Robust aerial scene-matching algorithm based on relative velocity model. Robot. Auton. Syst. 2020, 124, 103372.

7. Qu, X.; Soheilian, B.; Paparoditis, N. Landmark based localization in urban environment. ISPRS J. Photogramm. Remote Sens. 2018, 140, 90–103.

8. Wan, X.; Liu, J.; Yan, H.; Morgan, G.L.K. Illumination-invariant image matching for autonomous UAV localisation based on optical sensing. ISPRS J. Photogramm. Remote Sens. 2016, 119, 198–213.

9. Nistér, D.; Naroditsky, O.; Bergen, J. Visual Odometry. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 1, p. I.

10. Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

11. Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22.

12. Brown, L.G. A survey of image registration techniques. ACM Comput. Surv. 1992, 24, 325–376.

13. Shukla, P.K.; Goel, S.; Singh, P.; Lohani, B. Automatic geolocation of targets tracked by aerial imaging platforms using satellite imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2014, XL-1, 381–388.

14. Jacobsen, K. Very High Resolution Satellite Images—Competition to Aerial Images. 2009. Available online: https://www.ipi.uni-hannover.de/fileadmin/ipi/publications/VHR_Satellites_Jacobsen.pdf (accessed on 8 March 2022).

15. Zhuo, X.; Koch, T.; Kurz, F.; Fraundorfer, F.; Reinartz, P. Automatic UAV Image Geo-Registration by Matching UAV Images to Georeferenced Image Data. Remote Sens. 2017, 9, 376.

16. Yoo, J.-C.; Han, T.H. Fast Normalized Cross-Correlation. Circuits Syst. Signal Process. 2009, 28, 819–843.

17. Mo, N.; Zhu, R.; Yan, L.; Zhao, Z. Deshadowing of Urban Airborne Imagery Based on Object-Oriented Automatic Shadow Detection and Regional Matching Compensation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 585–605.

18. Wang, H.; Cheng, Y.; Liu, N.; Kang, Z. A Method of Scene Matching Navigation in Urban Area Based on Shadow Matching. In Proceedings of the 2018 IEEE CSAA Guidance, Navigation and Control Conference (CGNCC), Xiamen, China, 10–12 August 2018; pp. 1–6.

19. Wan, X.; Liu, J.G.; Yan, H. The Illumination Robustness of Phase Correlation for Image Alignment. IEEE Trans. Geosci. Remote Sens. 2015, 53, 5746–5759.

20. Movia, A.; Beinat, A.; Crosilla, F. Shadow detection and removal in RGB VHR images for land use unsupervised classification. ISPRS J. Photogramm. Remote Sens. 2016, 119, 485–495.

21. Song, L.; Cheng, Y.M.; Liu, N.; Song, C.H.; Xu, M. A Scene Matching Method Based on Weighted Hausdorff Distance Combined with Structure Information. In Proceedings of the 32nd Chinese Control Conference, Xi’an, China, 26–28 July 2013; pp. 5241–5244.

22. Sim, D.-G.; Park, R.-H. Two-dimensional object alignment based on the robust oriented Hausdorff similarity measure. IEEE Trans. Image Process. 2001, 10, 475–483.

23. Cesetti, A.; Frontoni, E.; Mancini, A.; Zingaretti, P.; Longhi, S. A Vision-Based Guidance System for UAV Navigation and Safe Landing using Natural Landmarks. J. Intell. Robot. Syst. 2010, 57, 233–257.

24. Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

25. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

26. Ma, J.; Jiang, X.; Fan, A.; Jiang, J.; Yan, J. Image matching from handcrafted to deep features: A survey. Int. J. Comput. Vis. 2021, 129, 23–79.

27. Lowry, S.; Sunderhauf, N.; Newman, P.; Leonard, J.J.; Cox, D.; Corke, P.; Milford, M.J. Visual Place Recognition: A Survey. IEEE Trans. Robot. 2016, 32, 1–19.

28. Krajník, T.; Cristóforis, P.; Kusumam, K.; Neubert, P.; Duckett, T. Image features for visual teach-and-repeat navigation in changing environments. Robot. Auton. Syst. 2017, 88, 127–141.

29. Azzalini, D.; Bonali, L.; Amigoni, F. A Minimally Supervised Approach Based on Variational Autoencoders for Anomaly Detection in Autonomous Robots. IEEE Robot. Autom. Lett. 2021, 6, 2985–2992.

30. Michaelsen, E.; Meidow, J. Stochastic reasoning for structural pattern recognition: An example from image-based UAV navigation. Pattern Recognit. 2014, 47, 2732–2744.

31. Yang, X.; Wang, J.; Qin, X.; Wang, J.; Ye, X.; Qin, Q. Fast Urban Aerial Image Matching Based on Rectangular Building Extraction. IEEE Geosci. Remote Sens. Mag. 2015, 3, 21–27.

32. Dawadee, A.; Chahl, J.; Nandagopal, D.; Nedic, Z. Illumination, Scale and Rotation Invariant Algorithm for Vision-Based Uav Navigation. Int. J. Pattern Recognit. Artif. Intell. 2013, 27, 1359003.

33. McCabe, J.S.; DeMars, K.J. Vision-based, terrain-aided navigation with decentralized fusion and finite set statistics. Navig. J. Inst. Navig. 2019, 66, 537–557.

34. Talluri, R.; Aggarwal, J. Position estimation for an autonomous mobile robot in an outdoor environment. IEEE Trans. Robot. Autom. 1992, 8, 573–584.

35. Woo, J.; Son, K.; Li, T.; Kim, G.; Kweon, I.S. Vision-Based UAV Navigation in Mountain Area. In Proceedings of the IAPR Conference on Machine Vision Applications, Tokyo, Japan, 16–18 May 2007; pp. 236–239.

36. Baboud, L.; Cadik, M.; Eisemann, E.; Seidel, H.-P. Automatic photo-to-terrain alignment for the annotation of mountain pictures. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 41–48.

37. Wang, T.; Celik, K.; Somani, A.K. Characterization of mountain drainage patterns for GPS-denied UAS navigation augmentation. Mach. Vis. Appl. 2016, 27, 87–101.

38. Taneja, A.; Ballan, L.; Pollefeys, M. Registration of Spherical Panoramic Images with Cadastral 3D Models. In Proceedings of the 2012 Second International Conference on 3D Imaging, Modeling, Processing, Visualization & Transmission, Zurich, Switzerland, 13–15 October 2012; pp. 479–486.

39. Ramalingam, S.; Bouaziz, S.; Sturm, P.; Brand, M. SKYLINE2GPS: Localization in urban canyons using omni-skylines. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 3816–3823.

40. Muja, M.; Lowe, D.G. Fast Approximate Nearest Neighbors with Automatic Algorithm Configuration. In Proceedings of the Fourth International Conference on Computer Vision Theory and Applications, Lisboa, Portugal, 5–8 February 2009; pp. 331–340.

41. Ahmar, F.; Jansa, J.; Ries, C. The Generation of True Orthophotos Using a 3D Building Model in Conjunction With a Conventional Dtm. Int. Arch. Photogramm. Remote Sens. 1998, 32, 16–22.

42. Zhang, T.; Stackhouse, P.W.; Macpherson, B.; Mikovitz, J.C. A Solar Azimuth Formula That Renders Circumstantial Treatment Unnecessary without Compromising Mathematical Rigor: Mathematical Setup, Application and Extension of a Formula Based on the Subsolar Point and Atan2 Function. Renew. Energy 2021, 172, 1333–1340.

43. Woo, A. Efficient shadow computations in ray tracing. IEEE Comput. Graph. Appl. 1993, 13, 78–83.

44. McCool, M.D. Shadow volume reconstruction from depth maps. ACM Trans. Graph. 2000, 19, 1–26.

45. Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

46. Tsai, V.J.D. A comparative study on shadow compensation of color aerial images in invariant color models. IEEE Trans. Geosci. Remote Sens. 2006, 44, 1661–1671.

47. Chung, K.-L.; Lin, Y.-R.; Huang, Y.-H. Efficient Shadow Detection of Color Aerial Images Based on Successive Thresholding Scheme. IEEE Trans. Geosci. Remote Sens. 2009, 47, 671–682.

48. Nagao, M.; Matsuyama, T.; Ikeda, Y. Region extraction and shape analysis in aerial photographs. Comput. Graph. Image Process. 1979, 10, 195–223.

49. Richter, R.; Müller, A. De-shadowing of satellite/airborne imagery. Int. J. Remote Sens. 2005, 26, 3137–3148.

50. Chen, Y.; Wen, D.; Jing, L.; Shi, P. Shadow Information Recovery in Urban Areas from Very High Resolution Satellite Imagery. Int. J. Remote Sens. 2007, 28, 3249–3254.

51. Rau, J.-Y.; Chen, N.-Y.; Chen, L.-C. True Orthophoto Generation of Built-Up Areas Using Multi-View Images. Photogramm. Eng. Remote Sens. 2002, 68, 581–588.

52. Tappen, M.F.; Freeman, W.T.; Adelson, E.H. Recovering intrinsic images from a single image. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1459–1472.

53. Adeline, K.R.M.; Chen, M.; Briottet, X.; Pang, S.K.; Paparoditis, N. Shadow detection in very high spatial resolution aerial images: A comparative study. ISPRS J. Photogramm. Remote Sens. 2013, 80, 21–38.

54. Xu, X.; Xu, S.; Jin, L.; Song, E. Characteristic analysis of Otsu threshold and its applications. Pattern Recognit. Lett. 2011, 32, 956–961.

55. Maxar Technologies. Maxar 3D Data Integrated Into Swedish Gripen Fighter Jet for GPS-Denied Navigation. Available online: https://blog.maxar.com/earth-intelligence/2021/maxar-3d-data-integrated-into-swedish-gripen-fighter-jet-for-gps-denied-navigation (accessed on 12 July 2022).

56. Said, A.F. Robust and Accurate Objects Measurement in Real-World Based on Camera System. In Proceedings of the 2017 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), Washington, DC, USA, 10–12 October 2017; pp. 1–5.

57. Albéri, M.; Baldoncini, M.; Bottardi, C.; Chiarelli, E.; Fiorentini, G.; Raptis, K.G.C.; Realini, E.; Reguzzoni, M.; Rossi, L.; Sampietro, D.; et al. Accuracy of Flight Altitude Measured with Low-Cost GNSS, Radar and Barometer Sensors: Implications for Airborne Radiometric Surveys. Sensors 2017, 17, 1889.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).