1. Introduction

The midcourse flight of a ballistic missile is often accompanied by the release of decoys, which makes radar target recognition difficult. Traditional recognition methods based on target characteristics have difficulty distinguishing warheads from decoys, so target micro-motion information is needed to improve recognition accuracy [1]. V. C. Chen divides target micro-motion into rotation, coning, swinging and other forms [2,3]. Most existing micro-motion feature extraction and characterisation methods consider micro-motion alone. However, a ballistic target undergoes both translation and micro-motion during flight, and translation distorts the micro-Doppler structure, causing it to tilt, fold or shift. Therefore, translation must be compensated before the micro-Doppler characteristics of ballistic targets are investigated.

Researchers have proposed a variety of methods for translation compensation of midcourse ballistic targets. They fall mainly into methods based on Doppler spectrum information [4,5], methods based on signal processing [6,7,8,9], methods based on time-frequency analysis [10,11,12,13,14] and other methods [15,16,17,18,19,20].

The translation compensation methods based on Doppler spectrum information mainly use the echo spectrum, through correlation-based transformations and related information, to estimate the speed and acceleration of the target and then compensate for its translation. Methods of this type include the spectral rearrangement method and the template method. The spectral rearrangement method detects the spectral peak of each pulse and then centers the peaks, together with their corresponding spectral regions, to achieve translation compensation [4]. The template method compensates the echo signal using known velocity and acceleration values from a template database and then calculates an evaluation index for each compensated spectrum; the template entry that yields the optimal index value gives the speed and acceleration of the target [5].
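The spectral rearrangement idea can be illustrated with a short NumPy sketch. It assumes the spectra are already arranged as a 2-D array (Doppler bins by pulses) and uses a circular shift to center each peak; `spectral_rearrangement` is a hypothetical helper, not the implementation of [4].

```python
import numpy as np


def spectral_rearrangement(spectra):
    """Shift each column so that its dominant Doppler peak sits at the center bin.

    spectra: 2-D array, rows = Doppler bins, columns = pulses (or short-time windows).
    Returns the rearranged spectra and the per-column shift in bins.
    """
    n_bins = spectra.shape[0]
    center = n_bins // 2
    peaks = np.argmax(np.abs(spectra), axis=0)  # dominant Doppler bin of every column
    shifts = center - peaks                     # bins needed to move each peak to the center
    out = np.empty_like(spectra)
    for k, shift in enumerate(shifts):
        # a circular shift moves the peak together with its surrounding spectral region
        out[:, k] = np.roll(spectra[:, k], shift)
    return out, shifts


# Toy input: one line whose peak drifts by 3 bins per pulse (constant residual acceleration).
n_bins, n_pulses = 64, 10
true_peaks = 10 + 3 * np.arange(n_pulses)
spec = np.zeros((n_bins, n_pulses))
spec[true_peaks, np.arange(n_pulses)] = 1.0
aligned, shifts = spectral_rearrangement(spec)
```

The returned shifts trace the residual Doppler history, so a line fitted to them (slope -3 bins per pulse in this toy case) is what a velocity and acceleration estimate would be built on.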

The translation compensation methods based on signal processing use digital signal processing techniques to simplify the echo form or reduce the number of echo parameters, and then estimate the target translation parameters for compensation. These methods strongly suppress the micro-motion component of the target. At present, they mainly include conjugate multiplication and the state-space method. Conjugate multiplication has two variants, delayed conjugate multiplication and symmetric conjugate multiplication: multiplying the signal by a delayed (or symmetrically delayed) conjugate copy of itself reduces the order of the translational phase. The translation parameters are then estimated step by step by combining the Radon transform, the fractional Fourier transform, spectral peak search and other methods [6,7,8]. The state-space method derives the relationship between the radial velocity of the target and the cross-correlation function of the normalized sampling sequences of two adjacent pulse echoes, which yields an estimation formula for the radial velocity. The target speed can therefore be estimated indirectly from directly measurable quantities [9].
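The order-reduction step of delayed conjugate multiplication can be checked numerically. The sketch below assumes a noise-free, single-component echo with quadratic phase, i.e. a constant Doppler rate k (which, under the exp(-j4πR/λ) convention, equals -2a/λ for a radial acceleration a). Multiplying by a delayed conjugate copy turns the chirp into a tone at k·τ, which a zero-padded FFT peak search locates. The helper name and parameter values are illustrative.

```python
import numpy as np


def chirp_rate_by_delayed_conjugate(s, fs, lag, n_fft=1 << 16):
    """Estimate the chirp rate k (Hz/s) of s from s[n] * conj(s[n - lag]).

    For s(t) = exp(j2π(f0 t + k t^2 / 2)) the product is a single tone at k * tau,
    with tau = lag / fs, so a spectral peak search recovers k. Requires |k * tau| < fs / 2.
    """
    prod = s[lag:] * np.conj(s[:-lag])          # quadratic phase -> linear phase (a tone)
    spec = np.abs(np.fft.fft(prod, n_fft))      # zero-padded for a finer peak search
    freqs = np.fft.fftfreq(n_fft, d=1.0 / fs)
    tau = lag / fs
    return freqs[np.argmax(spec)] / tau


fs = 1000.0                                   # sampling rate (Hz), illustrative
t = np.arange(2000) / fs                      # 2 s of data
f0, k_true = 50.0, 40.0                       # Doppler offset (Hz) and chirp rate (Hz/s)
s = np.exp(1j * 2 * np.pi * (f0 * t + 0.5 * k_true * t**2))
k_est = chirp_rate_by_delayed_conjugate(s, fs, lag=500)
```

Once k is known, the chirp can be removed and f0 found by a second peak search, which is the step-by-step estimation described above. The lag trades estimation precision against the unambiguous range |k·τ| < fs/2.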

The translation compensation methods based on time-frequency analysis operate on the time-frequency representation of the echo and can be combined with image processing methods to estimate the target translation parameters and compensate for the translation. At present, they include the (extended) Hough/Radon transform method, the corner detection method and the entropy method. The (extended) Hough/Radon transform method first transforms the time-domain echo into the time-frequency domain and then uses the (extended) Hough/Radon transform to map features that are implicit in the time-frequency domain to distinct peaks in the parameter domain; detecting these peaks yields estimates of the target translation parameters [10,11]. This method reduces the parameter dimensionality, but its computational cost grows sharply with the number of parameters. The corner detection method detects corner points in the time-frequency diagram to obtain the intersection points that reflect the motion trajectory of the target, and then estimates the translation parameters by curve fitting [12,13]. This method depends on the time-frequency transform and requires very high time-frequency resolution. The entropy method compensates the signal with different candidate parameters and uses the information entropy of each resulting time-frequency map to judge the compensation effect, achieving high-precision translation compensation of the target [14]. However, it places very high demands on the quality of the time-frequency map; more importantly, noise significantly degrades the compensation, and the computation time increases rapidly with the size of the time-frequency map. Beyond these shortcomings, most translation compensation methods based on time-frequency analysis fail completely when the spectrum is aliased.
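The grid search behind the entropy method can be sketched as follows. The sketch assumes a noise-free, translation-only echo, a Hann-window STFT computed with NumPy, the Shannon entropy of the normalized time-frequency energy, and a grid of candidate Doppler rates in Hz/s; the function names and parameters are hypothetical, not those of [14].

```python
import numpy as np


def stft_mag(x, win_len=256, hop=32):
    """Magnitude STFT with a Hann window; only frames lying fully inside x are used."""
    w = np.hanning(win_len)
    starts = range(0, len(x) - win_len + 1, hop)
    frames = np.stack([x[i:i + win_len] * w for i in starts], axis=1)
    return np.abs(np.fft.fft(frames, axis=0))


def tf_entropy(tf):
    """Shannon entropy (nats) of the normalized time-frequency energy distribution."""
    p = tf**2 / np.sum(tf**2)
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))


def entropy_search(s, t, candidate_rates):
    """Compensate s with every candidate Doppler rate (Hz/s) and return the minimum-entropy one."""
    ent = [tf_entropy(stft_mag(s * np.exp(-1j * np.pi * r * t**2))) for r in candidate_rates]
    return candidate_rates[int(np.argmin(ent))], np.array(ent)


fs = 512.0
t = np.arange(1024) / fs
s = np.exp(1j * 2 * np.pi * (40.0 * t + 0.5 * 40.0 * t**2))   # translational chirp, 40 Hz/s
best_rate, entropies = entropy_search(s, t, np.arange(0.0, 81.0, 10.0))
```

Each candidate costs one full STFT, so the run time grows with both the grid size and the size of the time-frequency map, which is the scaling problem noted above.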

To address these shortcomings of time-frequency-based translation compensation, this paper proposes a translation compensation method based on template matching. First, a binary image is obtained through Gaussian filtering and binarization. Next, a matching template is designed according to the characteristics of the micro-Doppler curve and convolved with the binary image to obtain all the contour points of the overall micro-Doppler curve. The upper and lower contour points are then preliminarily determined from the maximum and minimum values, and structural similarity, a measure from the image processing field, is used to verify the contour points and eliminate all the false ones. Finally, polynomial fitting is used to fit the upper and lower trend lines, whose average gives estimates of the translational parameters of the target and thereby the translation compensation.

In summary, the proposed algorithm provides a method for constructing matching templates that detect the contour points needed for motion compensation. Combined with structural similarity from image processing, it precisely detects the outer contour points that reflect the translational trend in the time-frequency diagram. The algorithm thus offers a new approach to the translational compensation of ballistic midcourse targets. In addition, adaptive aliasing detection and adaptive selection of the translational fitting order make the algorithm suitable for practical application.

This paper is organized as follows. Section 2 describes the motion model and echo model of the target in the middle of the trajectory. Section 3 presents the theory underpinning the proposed algorithm and its key steps. Section 4 discusses the simulations. Section 5 provides the conclusions.

2. Target Motion Model in the Middle of the Trajectory

Assuming that the target in the middle of the ballistic trajectory is a cone, its precession model is shown in Figure 1. The origin is the center of gravity of the target and the precession axis is the z axis. The y axis lies in the plane formed by the precession axis and the target symmetry axis (i.e., the spin axis), and the x axis completes a right-handed rectangular coordinate system. The geometry is described by the distance from the cone tip A to the center of gravity, the distance from the cone base to the center of gravity, and the base radius r. The angle between the target precession axis and the spin axis is the precession angle, and the motion is described by the precession angular velocity and the spin angular velocity. The radar line of sight (LOS) is described by its angle with the spin axis and by its azimuth and pitch angles.

According to [21], for electromagnetic waves incident at any angle, the cone target has only three scattering points: one at the cone tip (point A in Figure 1) and two on the edge of the base (points B and C in Figure 1). Each base-edge scattering point is generally an intersection of the base edge with the plane formed by the incident ray and the symmetry axis. The micro-Doppler of these three scattering points can therefore be derived, neglecting the occlusion effect.

In these expressions, the remaining parameters are the signal wavelength and the initial azimuth.

The real-time radial distance of each scattering point i caused by micro-motion is then obtained from the micro-Doppler of that point.
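As an illustration of how micro-range and micro-Doppler follow from precession, the sketch below evaluates the widely used expressions for the cone tip A alone. It assumes (our choice, not necessarily the paper's definitions) that the LOS makes an angle `beta` with the precession axis, that the tip lies on the spin axis at a distance `h1` from the center of gravity so that spin does not move it, and the Doppler convention f = -(2/λ)·dr/dt. All names and values are hypothetical.

```python
import numpy as np


def tip_micro_motion(t, h1, theta, beta, omega_c, phi0, wavelength):
    """Micro-range r(t) and micro-Doppler f(t) of the cone tip under pure precession.

    theta: precession angle, beta: LOS angle to the precession axis (assumption),
    omega_c: precession angular velocity (rad/s), phi0: initial phase (rad).
    """
    phase = omega_c * t + phi0
    # projection of the tip position onto the LOS
    r = h1 * (np.cos(beta) * np.cos(theta) + np.sin(beta) * np.sin(theta) * np.cos(phase))
    dr_dt = -h1 * np.sin(beta) * np.sin(theta) * omega_c * np.sin(phase)
    f = -2.0 / wavelength * dr_dt          # Doppler convention f = -(2 / lambda) dr/dt
    return r, f


t = np.linspace(0.0, 2.0, 4001)
r_a, f_a = tip_micro_motion(t, h1=1.2, theta=np.deg2rad(8), beta=np.deg2rad(30),
                            omega_c=2 * np.pi * 2.0, phi0=0.0, wavelength=0.03)
```

The micro-Doppler is a sinusoid of amplitude 2·h1·omega_c·sin(theta)·sin(beta)/λ; the base-edge points B and C follow the same pattern with more involved geometry.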

Assuming that a single-frequency signal is transmitted, the radar baseband echo signal is then determined by the radial distances of these scattering points.

When the target flies at high speed, its Doppler is severely folded. In the middle of the trajectory, however, the flight path is relatively stable and the radar is in a tracking state, so the echo can be coarsely compensated using the speed measured by the radar at a given pulse. After this coarse compensation, the translational radial distance of the target is determined by the initial distance, the residual velocity v left after coarse compensation, and the first-order and second-order accelerations. The radial distance of each scattering point relative to the radar is then the sum of this translational distance and its micro-motion distance.

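Substituting this radial distance into Formula (5) gives the baseband echo signal of the target, whose micro-Doppler now contains both the translational Doppler and the micro-motion Doppler of each scattering point.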
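For reference, a commonly used form of these relations is sketched below, assuming point scatterers, a cubic translational range model, and the phase convention exp(-j4πR/λ). The symbols R_0, a_1, a_2, σ_i and r_i(t) are our own labels and may not match the paper's Formula (5) or its notation.

```latex
R_T(t) = R_0 + v\,t + \tfrac{1}{2}a_1 t^2 + \tfrac{1}{6}a_2 t^3

s(t) = \sum_{i\in\{A,B,C\}} \sigma_i \exp\!\left[-j\frac{4\pi}{\lambda}\bigl(R_T(t) + r_i(t)\bigr)\right]

f_i(t) = -\frac{2}{\lambda}\frac{d}{dt}\bigl(R_T(t) + r_i(t)\bigr)
       = -\frac{2}{\lambda}\left(v + a_1 t + \tfrac{1}{2}a_2 t^2\right) - \frac{2}{\lambda}\,\dot r_i(t)
```

Under this model the translational part of every component is the same quadratic in t, which is why a polynomial trend line of order at most two carries v, a_1 and a_2 in its zero-, first- and second-order coefficients.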

3. Target Translation Compensation Based on Template Matching

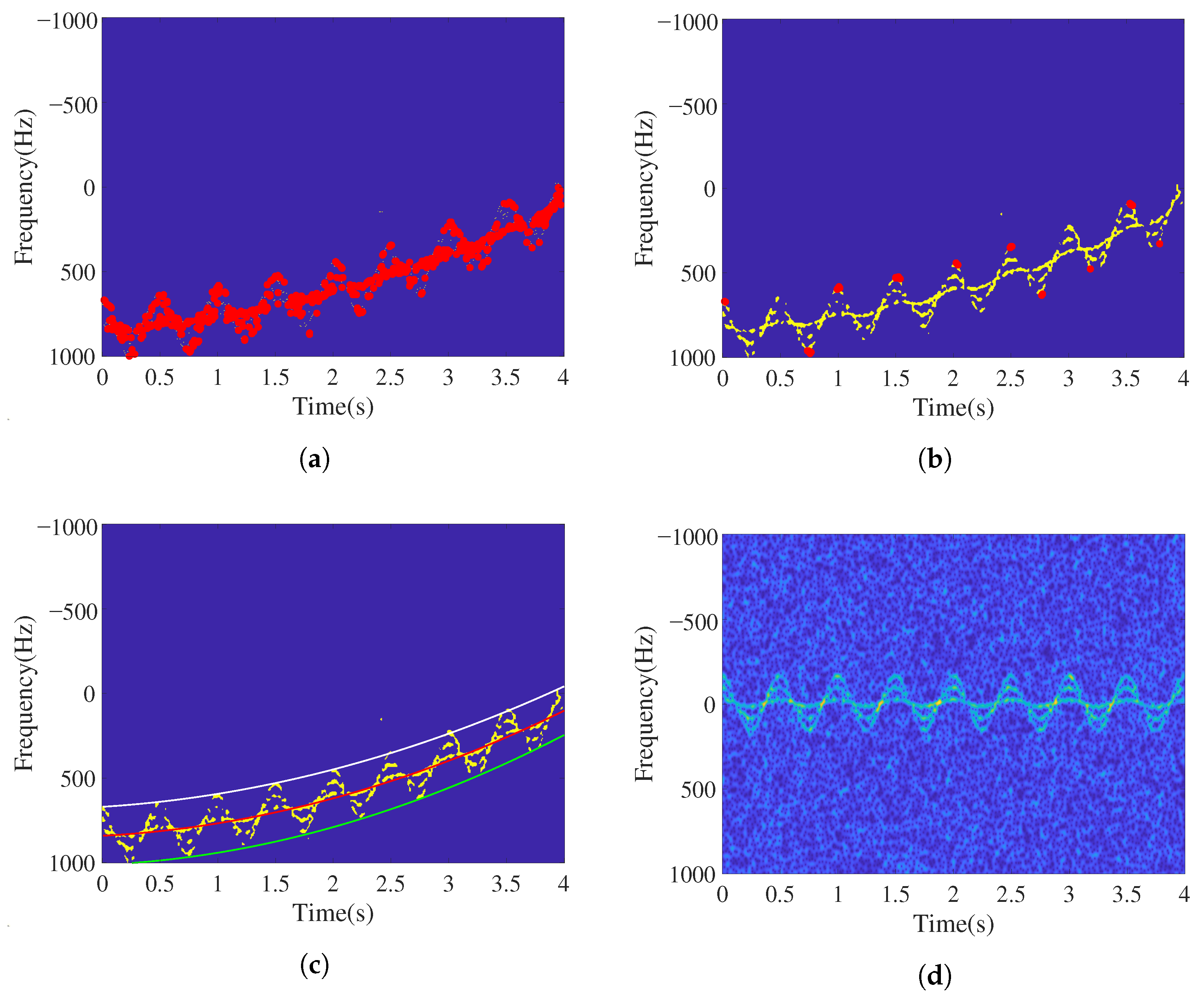

The binarized micro-Doppler signal of the target is obtained by time-frequency transformation and binarization of the baseband echo signal. Because translation makes it difficult to extract the micro-motion features of the target, the translation must be compensated effectively and accurately before these features are extracted.

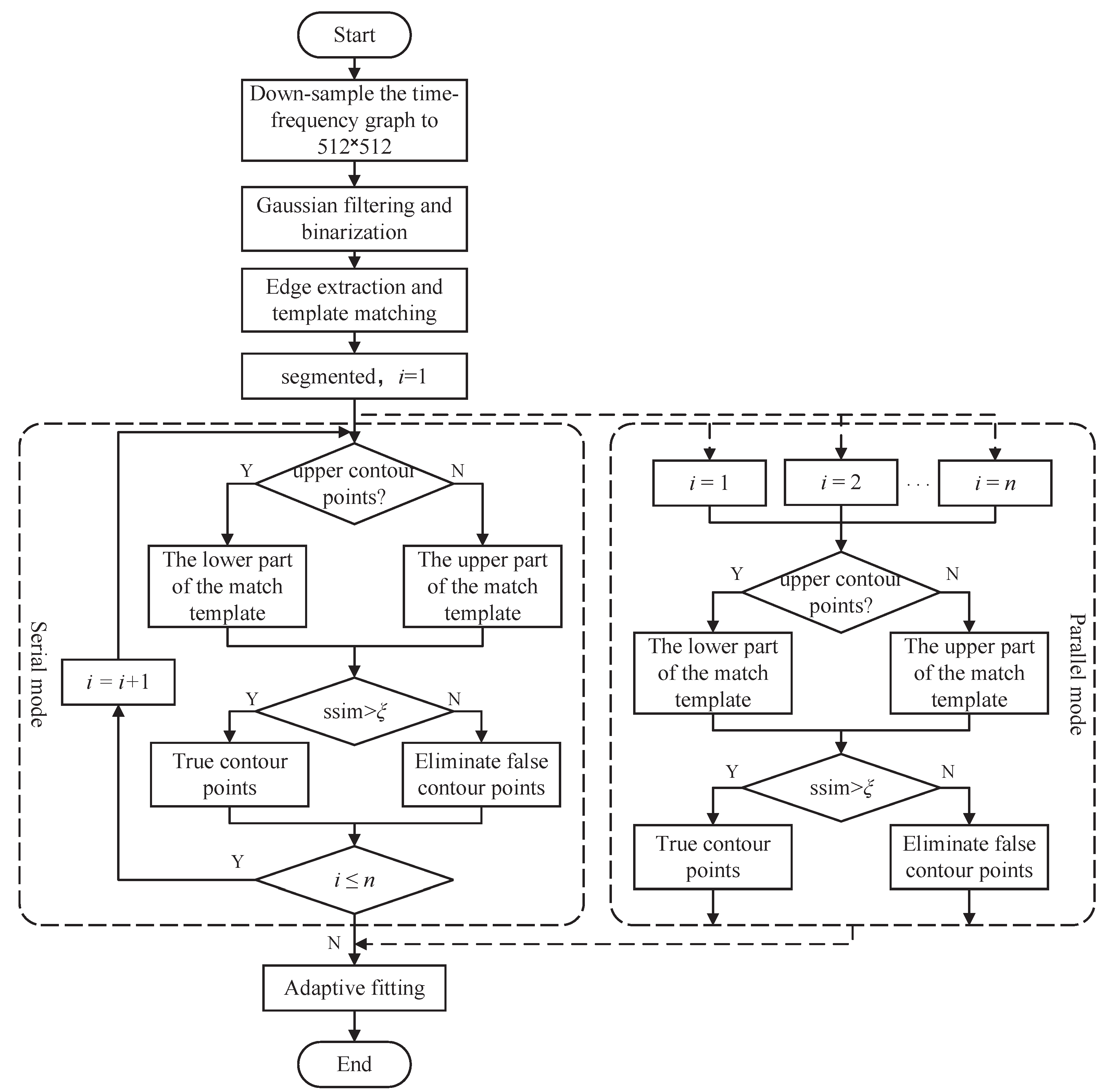

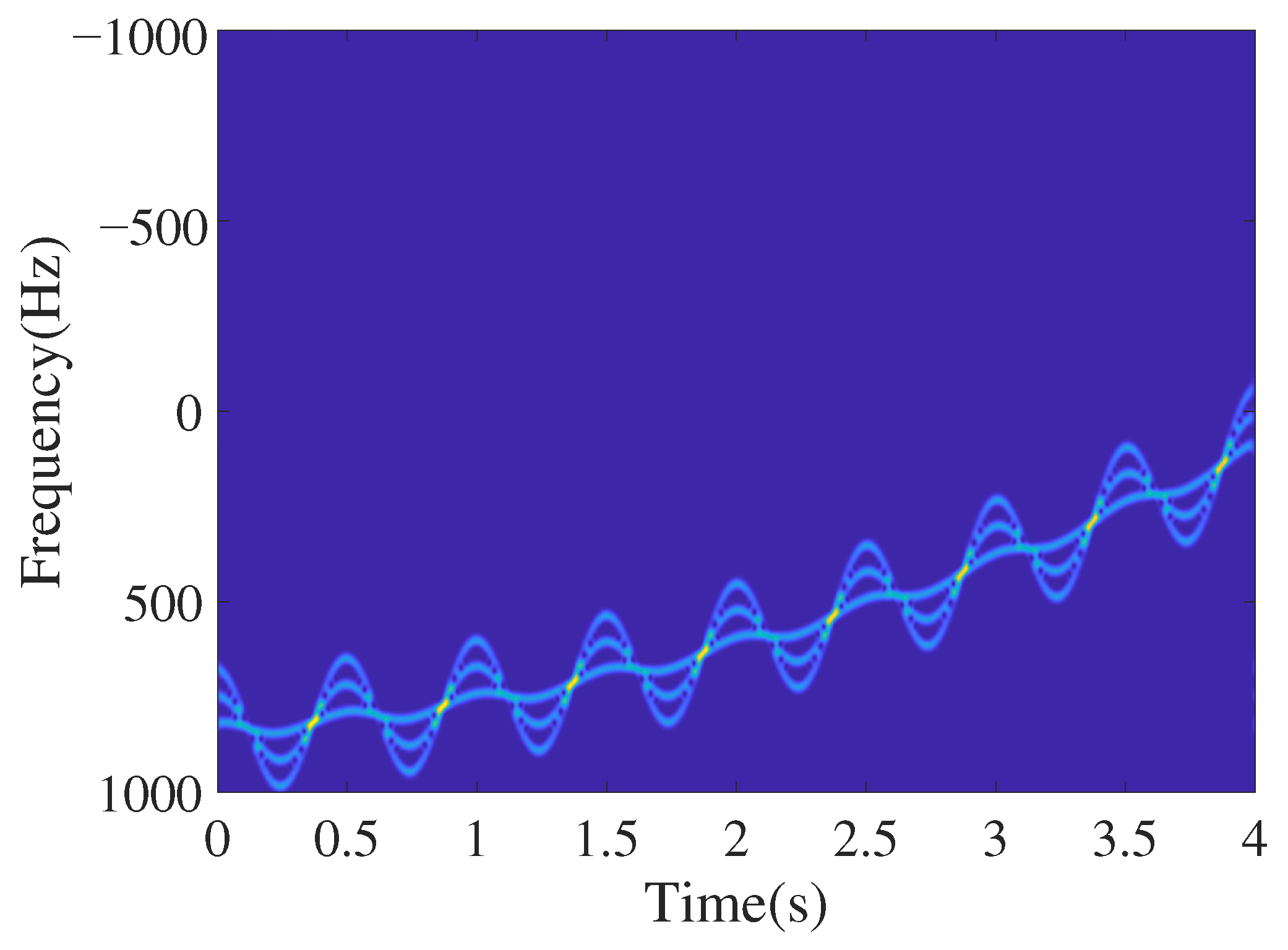

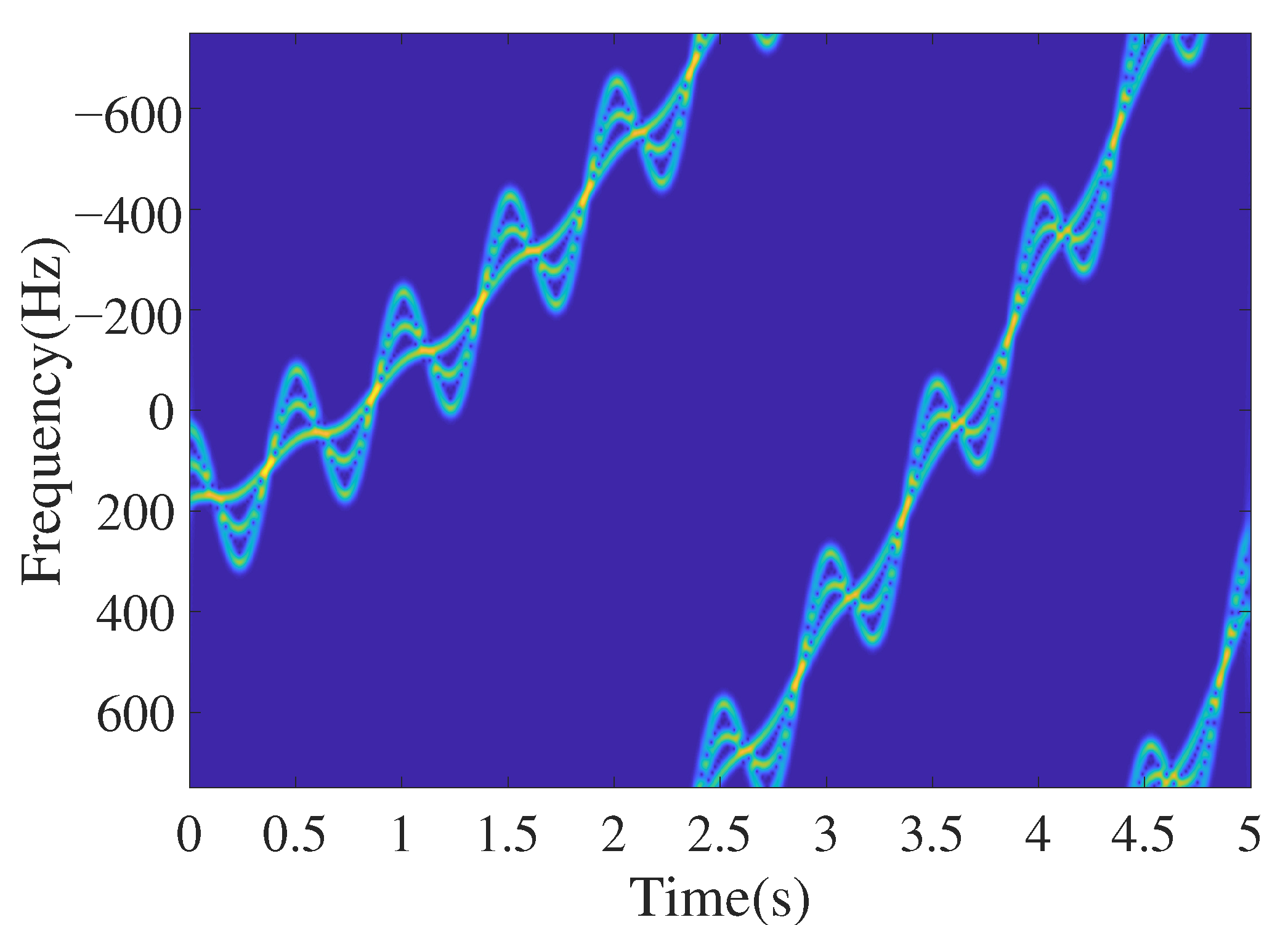

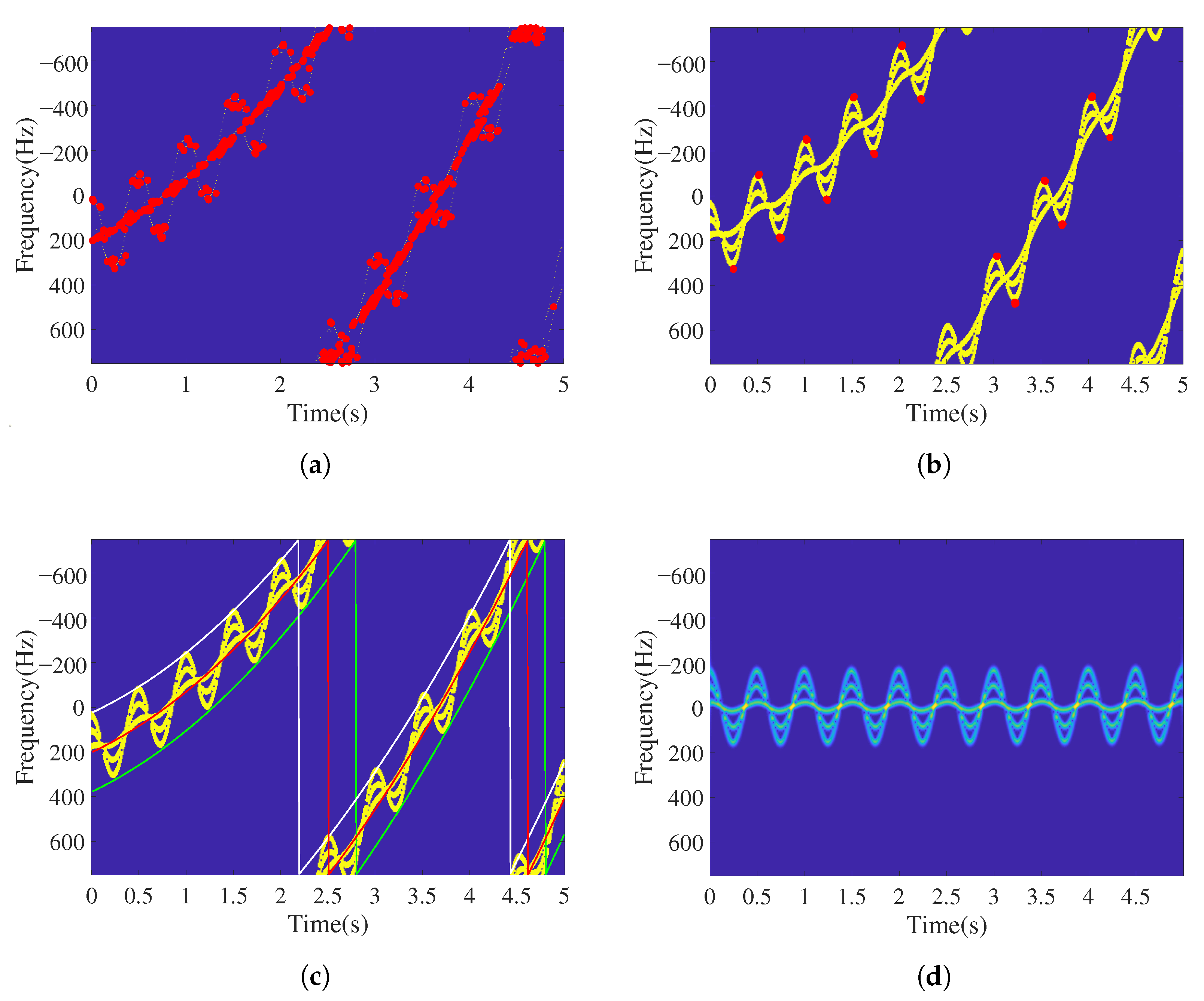

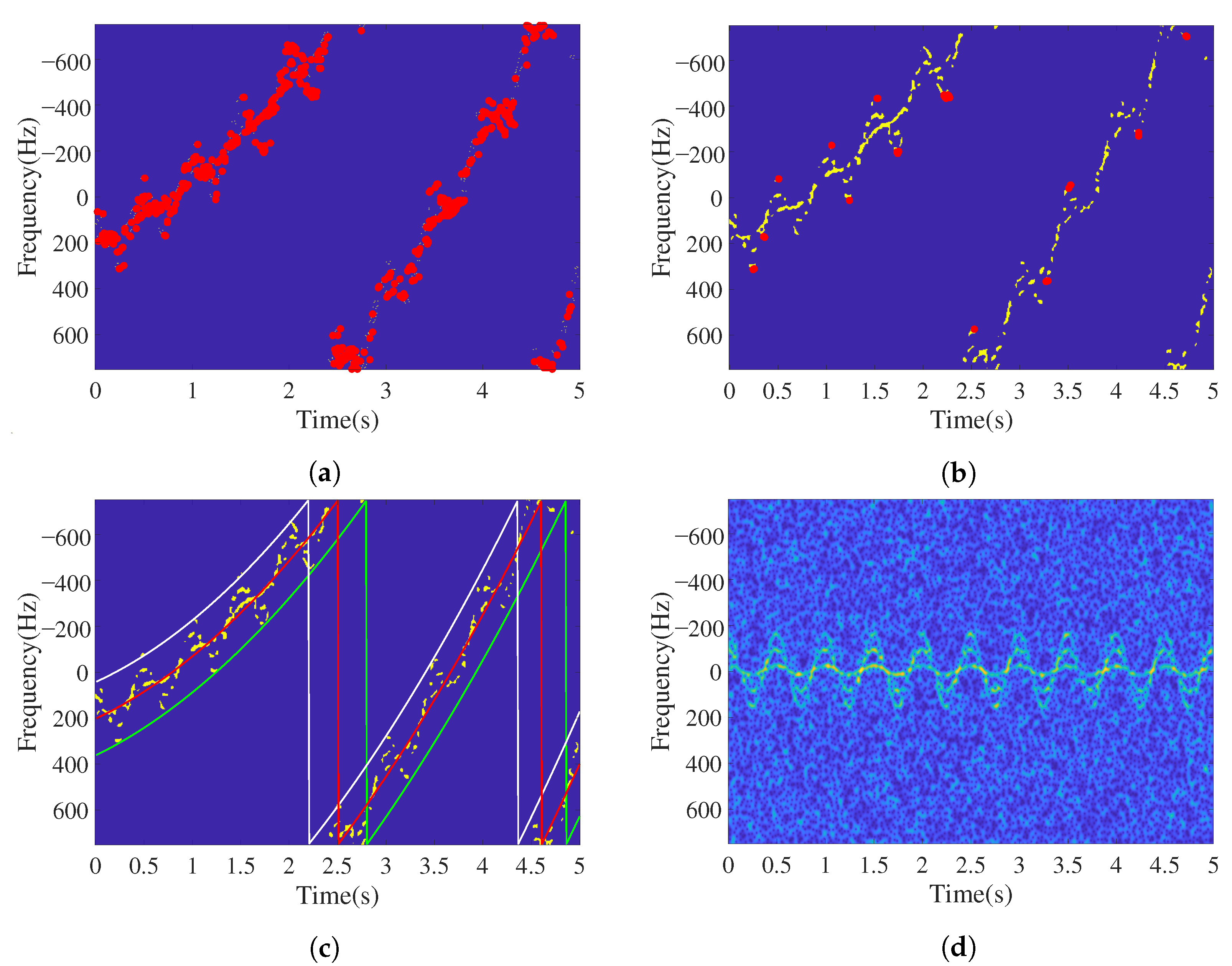

Inspection of the micro-Doppler signal shows that the intersections of the individual components, together with the outer contour of the whole signal, provide the most useful information for estimating the translational parameters. However, using the intersections requires high time-frequency resolution and a relatively low noise level, which limits its application. This paper therefore uses the outer contour of the signal to estimate the translational parameters and compensate for the translation.

To obtain the outer contour of the signal accurately, the high-precision Canny edge detection algorithm [22,23] is applied. All the contour points that reflect the target translation are then obtained by template matching. As shown in Figure 2, the local micro-Doppler contour in the white box is parabolic, and the multiple protruding points (vertices) indirectly reflect the overall translation trend of the target. This paper uses this feature to construct a matching template suitable for detecting protruding contour points, as shown in Figure 3. The matching template consists of two parabolas that are mirror images of each other in the up-down direction, and its width W and height H are determined by the sharpness of the micro-Doppler contour in the area to be matched.

The contour points obtained by template matching, which we collectively call class contour points, may be true or false, and false contour points reduce the estimation accuracy of the translational parameters. This paper therefore divides the points into segments and uses structural similarity to identify and reject false contour points. Finally, the upper and lower trend lines are fitted by an adaptive fitting method, and the translational trend line is taken as their average. The specific steps are as follows:

Step 1: The baseband echo signal is converted into a time-frequency signal by time-frequency transformation.

Step 2: The time-frequency signal is downsampled to a 512 × 512 time-frequency diagram, which is then Gaussian-filtered and binarized.
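Step 2 can be sketched with NumPy alone. The nearest-neighbor resampling, the separable Gaussian kernel truncated at 3σ, and the relative binarization threshold are illustrative assumptions rather than settings taken from this paper; the function names are hypothetical.

```python
import numpy as np


def resample_nearest(img, n_rows=512, n_cols=512):
    """Nearest-neighbor resampling of a time-frequency magnitude map to n_rows x n_cols."""
    r = np.linspace(0, img.shape[0] - 1, n_rows).astype(int)
    c = np.linspace(0, img.shape[1] - 1, n_cols).astype(int)
    return img[np.ix_(r, c)]


def gaussian_filter_2d(img, sigma):
    """Separable Gaussian smoothing with edge padding (kernel truncated at 3 sigma)."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x**2 / (2 * sigma**2))
    k /= k.sum()
    padded = np.pad(img.astype(float), radius, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def binarize(tf_map, sigma=1.5, rel_threshold=0.3):
    """Resample to 512 x 512, smooth, then keep pixels above a fraction of the maximum."""
    smoothed = gaussian_filter_2d(resample_nearest(tf_map), sigma)
    return smoothed >= rel_threshold * smoothed.max()


tf_map = np.zeros((1024, 2048))
tf_map[500:524, :] = 1.0          # a horizontal band standing in for a Doppler trace
bw = binarize(tf_map)
```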

Step 3: Canny edge detection is applied to the binary image to obtain its envelope.
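For an already binarized image, a one-pixel outline can also be obtained without a full Canny detector. The sketch below swaps in a simple morphological boundary (foreground pixels with a background 4-neighbor) as a stand-in; an off-the-shelf Canny implementation such as `skimage.feature.canny` could be used instead. `binary_boundary` is a hypothetical helper.

```python
import numpy as np


def binary_boundary(bw):
    """One-pixel outline of a binary image: foreground pixels with a background 4-neighbor.

    Stand-in for the Canny step on an already binarized image (not the Canny algorithm).
    """
    bw = np.asarray(bw, dtype=bool)
    p = np.pad(bw, 1, mode="constant", constant_values=False)
    # a pixel is interior when it and its up, down, left and right neighbors are all foreground
    interior = (p[1:-1, 1:-1] & p[:-2, 1:-1] & p[2:, 1:-1]
                & p[1:-1, :-2] & p[1:-1, 2:])
    return bw & ~interior


bw = np.zeros((20, 20), dtype=bool)
bw[5:15, 5:15] = True        # a 10 x 10 blob standing in for one micro-Doppler region
edges = binary_boundary(bw)
```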

Step 4: A matching template, as shown in Figure 3, is constructed according to the size of the time-frequency data. The template and the edge image are convolved to obtain the matching result.
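A possible template and matching step, under our own reading of Figure 3: each template is a W × H binary image holding one parabolic arc, with the cap-shaped arc for upper protruding points and its vertical mirror for lower ones. Matching is computed as correlation (equivalently, convolution with the flipped template) using NumPy's `sliding_window_view`; the function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def parabola_template(width, height, opening="down"):
    """Binary H x W template holding one parabolic arc (hypothetical reading of Figure 3).

    opening="down" gives a cap-shaped arc (vertex in the top row) for upper protruding
    points; opening="up" is its vertical mirror for lower protruding points.
    """
    tpl = np.zeros((height, width))
    x = np.linspace(-1.0, 1.0, width)
    rows = np.round((height - 1) * x**2).astype(int)   # vertex at row 0, ends at row H - 1
    tpl[rows, np.arange(width)] = 1.0
    return tpl if opening == "down" else tpl[::-1, :]


def match(edge_img, tpl):
    """Correlation score of tpl at every valid position (anchored at the window's top-left)."""
    windows = sliding_window_view(edge_img.astype(float), tpl.shape)
    return np.einsum("ijkl,kl->ij", windows, tpl)


tpl = parabola_template(15, 7)
edge = np.zeros((64, 64))
edge[20:27, 30:45] = tpl           # plant one cap-shaped contour segment
score = match(edge, tpl)
```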

Step 5: Thresholding is used to screen out the class contour points preliminarily. The threshold is set according to the structural characteristics of the matching template, and the matching result is simplified by retaining only the positions whose matching value reaches this threshold.
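Step 5 then reduces to a comparison against a fraction of the best attainable score. In this sketch the threshold is assumed to be `ratio` times the number of template pixels, and `vertex_offset` (also hypothetical) moves each hit from the window corner to the template vertex.

```python
import numpy as np


def class_contour_points(score, tpl_sum, ratio=0.9, vertex_offset=(0, 0)):
    """Keep positions whose matching score reaches ratio * tpl_sum.

    tpl_sum is the number of template pixels (the best possible score for a binary
    template); vertex_offset is the (row, col) shift from the window corner to the vertex.
    """
    rows, cols = np.nonzero(score >= ratio * tpl_sum)
    # (abscissa = time index, ordinate = frequency row index) of each class contour point
    return np.column_stack([cols + vertex_offset[1], rows + vertex_offset[0]])


score = np.array([[0.0, 5.0, 15.0],
                  [14.0, 2.0, 0.0]])
pts = class_contour_points(score, tpl_sum=15, ratio=0.9, vertex_offset=(0, 7))
```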

Step 6: The class contour points are divided into segments along the time axis, and the maximum (minimum) value in each segment is used to determine the preliminary upper (lower) contour points. The number of segments n equals the total number of upper and lower protruding points minus one. The coordinate sets of the upper and lower contour points are initially obtained segment by segment: for the i-th segment, the vertical coordinates of the class contour points whose abscissas fall in that segment's interval are collected, and a range threshold is applied to reduce the matching deviation caused by noise.
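One hedged reading of Step 6 is sketched below. Segment boundaries are evenly spaced in time, and within each segment the points whose ordinate lies within `delta` of the segment maximum (minimum) are averaged into one upper (lower) contour point, with `delta` playing the role of the range threshold. The averaging rule and all names are assumptions.

```python
import numpy as np


def preliminary_contours(x, y, n_segments, delta=2.0):
    """Per-segment upper and lower contour points (ordinate y grows with frequency)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    edges = np.linspace(x.min(), x.max(), n_segments + 1)
    upper, lower = [], []
    for i in range(n_segments):
        last = i == n_segments - 1
        # half-open intervals, except that the last segment includes its right edge
        in_seg = (x >= edges[i]) & ((x <= edges[i + 1]) if last else (x < edges[i + 1]))
        if not np.any(in_seg):
            continue
        xs, ys = x[in_seg], y[in_seg]
        top = ys >= ys.max() - delta          # points within delta of the segment maximum
        bot = ys <= ys.min() + delta          # points within delta of the segment minimum
        upper.append((xs[top].mean(), ys[top].mean()))
        lower.append((xs[bot].mean(), ys[bot].mean()))
    return np.array(upper), np.array(lower)


x = [0, 1, 2, 3, 4, 5, 6, 7]
y = [10, 1, 9, 2, 20, 3, 19, 4]
upper, lower = preliminary_contours(x, y, n_segments=2)
```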

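Step 7: Structural similarity (SSIM) [24] is computed segment by segment to eliminate false contour points. The SSIM is calculated between the neighborhood of each contour point and the corresponding matching template:

$$\mathrm{SSIM}(x,y)=[l(x,y)]^{\alpha}\,[c(x,y)]^{\beta}\,[s(x,y)]^{\gamma}$$

where

$$l(x,y)=\frac{2\mu_x\mu_y+C_1}{\mu_x^2+\mu_y^2+C_1},\quad c(x,y)=\frac{2\sigma_x\sigma_y+C_2}{\sigma_x^2+\sigma_y^2+C_2},\quad s(x,y)=\frac{\sigma_{xy}+C_3}{\sigma_x\sigma_y+C_3}$$

where $C_1$, $C_2$ and $C_3$ are constants, $\alpha$, $\beta$ and $\gamma$ are parameters used to adjust the relative importance of the three components, $\mu$ and $\sigma$ are the average gray level and standard deviation of the matching image, respectively, and $\sigma_{xy}$ is the covariance of $x$ and $y$.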

After the false contour points, whose structural similarity falls below the structural similarity threshold, are removed, the final coordinate sets of the upper and lower true contour points are obtained.
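The SSIM check of Step 7 can be sketched with NumPy. The sketch computes one global SSIM per patch in the l·c·s form of [24], with the common choices C1 = (0.01)², C2 = (0.03)² and C3 = C2/2 for data in [0, 1] and unit exponents. The patch placement (top-left corner at the point), the threshold value and the function names are hypothetical.

```python
import numpy as np


def ssim(x, y, C1=1e-4, C2=9e-4, alpha=1.0, beta=1.0, gamma=1.0):
    """Global SSIM of two equally sized patches: l^alpha * c^beta * s^gamma.

    Keep the exponents at 1 unless s is known to be non-negative (s can be < 0).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    mx, my = x.mean(), y.mean()
    sx, sy = x.std(), y.std()
    sxy = np.mean((x - mx) * (y - my))
    C3 = C2 / 2.0
    l = (2 * mx * my + C1) / (mx**2 + my**2 + C1)       # luminance term
    c = (2 * sx * sy + C2) / (sx**2 + sy**2 + C2)       # contrast term
    s = (sxy + C3) / (sx * sy + C3)                     # structure term
    return l**alpha * c**beta * s**gamma


def keep_true_points(edge_img, points, tpl, threshold=0.5):
    """Keep (row, col) points whose template-sized patch reaches the SSIM threshold."""
    h, w = tpl.shape
    kept = []
    for r, c in points:
        patch = edge_img[r:r + h, c:c + w]
        if patch.shape == tpl.shape and ssim(patch, tpl) >= threshold:
            kept.append((r, c))
    return kept


tpl = np.eye(5)                    # any binary template works for the illustration
img = np.zeros((20, 20))
img[2:7, 3:8] = tpl                # one true contour point at (2, 3)
kept = keep_true_points(img, [(2, 3), (10, 10)], tpl)
```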

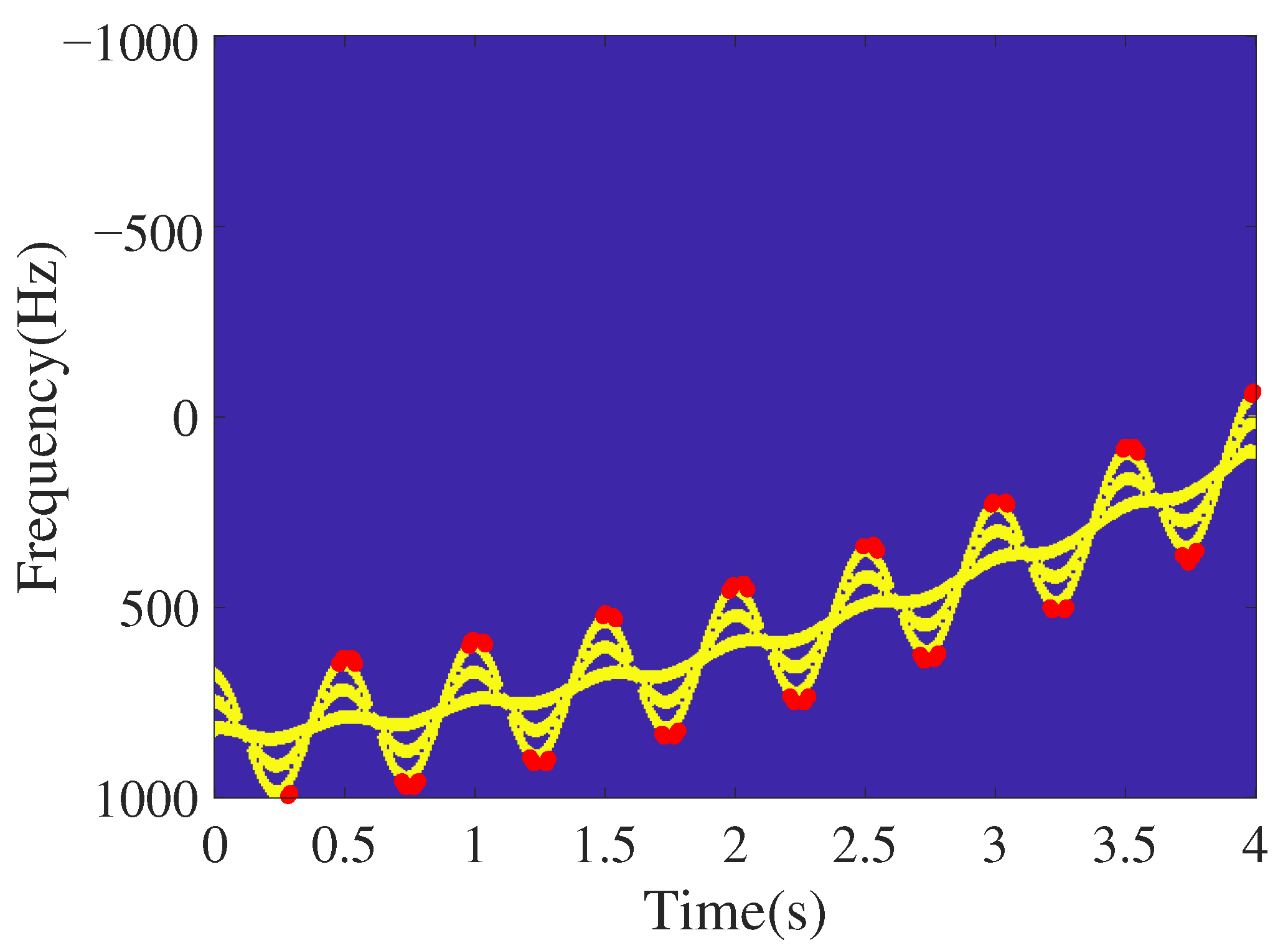

Step 8: Spectral aliasing is detected. The final contour points are sorted in ascending order of abscissa, and the ordinate difference between each pair of adjacent points is calculated. Aliasing is considered present when this difference exceeds a jump threshold, and absent otherwise; the jump threshold is generally half the height of the matching template.
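The aliasing test of Step 8 amounts to a jump detector on the sorted contour points. In the sketch below the points are (abscissa, ordinate) pixel pairs, and the threshold is half of a hypothetical template height `H`, as the step suggests.

```python
import numpy as np


def has_aliasing(points, jump_threshold):
    """Sort contour points by abscissa and flag any ordinate jump above jump_threshold."""
    pts = np.asarray(points, dtype=float)
    pts = pts[np.argsort(pts[:, 0])]            # ascending abscissa
    jumps = np.abs(np.diff(pts[:, 1]))          # ordinate difference of adjacent points
    return bool(np.any(jumps > jump_threshold))


H = 20                                          # hypothetical template height in pixels
smooth = [(20, 106), (0, 100), (10, 103)]
wrapped = [(0, 250), (10, 255), (20, 5)]        # trace leaves the top and re-enters at the bottom
```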

Step 9: The upper and lower trend lines are fitted to the contour points obtained in Steps 6 and 7. To adapt to translational motion of different orders and to determine the translational order adaptively, the fitting order k, which is the translational order, is chosen by comparing the coefficient of each order of the fitted polynomial with an effective lower bound on the coefficient magnitude.
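The adaptive order selection can be sketched as a descending search over polynomial orders. The exact condition of Step 9 is not reproduced here; the sketch assumes the rule "accept the highest order whose leading coefficient magnitude reaches `coef_floor`", and `max_order`, `coef_floor` and the function name are hypothetical.

```python
import numpy as np


def adaptive_polyfit(t, y, max_order=3, coef_floor=1e-3):
    """Fit y(t) with the highest order whose leading coefficient is still 'effective'."""
    for k in range(max_order, 0, -1):
        coefs = np.polyfit(t, y, k)          # highest power first
        if abs(coefs[0]) >= coef_floor:
            return k, coefs
    return 0, np.array([np.mean(y)])         # fall back to a constant trend


t = np.linspace(0.0, 1.0, 50)
k_lin, c_lin = adaptive_polyfit(t, 3 + 2 * t)
k_quad, c_quad = adaptive_polyfit(t, 1 + t + 4 * t**2)
```

Because the coefficient magnitudes depend on the units of t and y, such a lower bound only makes sense once the axes are normalized, for example to pixels or to [0, 1].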

The upper and lower trend lines are averaged to estimate the residual translational velocity v of the target and its first- and second-order accelerations.

Formulas (21)–(23) involve the sampling frequency, the matching template width W, the matching template height H, the observation time T, and the second-order, first-order and zero-order term coefficients of the fitted translational trend equation.

The baseband echo signal is then compensated using the estimated translational parameters.
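Mapping the fitted trend coefficients to motion parameters and removing the translational phase can be sketched under the cubic range model R_T(t) = v·t + a1·t²/2 + a2·t³/6 and the phase convention exp(-j4πR/λ). Both are assumptions. The pixel-to-physical conversion of Formulas (21)–(23), which involves the sampling frequency, W, H and T, is not reproduced, so the coefficients below are taken to be in hertz and seconds already; the function names are hypothetical.

```python
import numpy as np


def translation_from_trend(c2, c1, c0, wavelength):
    """Map a Doppler trend f(t) = c2 t^2 + c1 t + c0 (Hz) to (v, a1, a2).

    Assumes f_T(t) = -(2 / wavelength) * (v + a1 t + a2 t^2 / 2).
    """
    v = -wavelength * c0 / 2.0
    a1 = -wavelength * c1 / 2.0
    a2 = -wavelength * c2
    return v, a1, a2


def compensate(s, t, v, a1, a2, wavelength):
    """Remove the estimated translational phase by multiplying by exp(+j4π R_T(t) / wavelength)."""
    r_t = v * t + a1 * t**2 / 2.0 + a2 * t**3 / 6.0
    return s * np.exp(1j * 4.0 * np.pi * r_t / wavelength)


lam = 0.03
t = np.linspace(0.0, 1.0, 1001)
v, a1, a2 = 0.5, 2.0, -1.0
s = np.exp(-1j * 4 * np.pi * (v * t + a1 * t**2 / 2 + a2 * t**3 / 6) / lam)   # translation-only echo
est = translation_from_trend(-a2 / lam, -2 * a1 / lam, -2 * v / lam, lam)      # exact trend coefficients
s_c = compensate(s, t, *est, lam)
```

With exact coefficients the compensated translation-only echo becomes a constant; in practice the residual phase reflects the fitting error of the averaged trend line.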

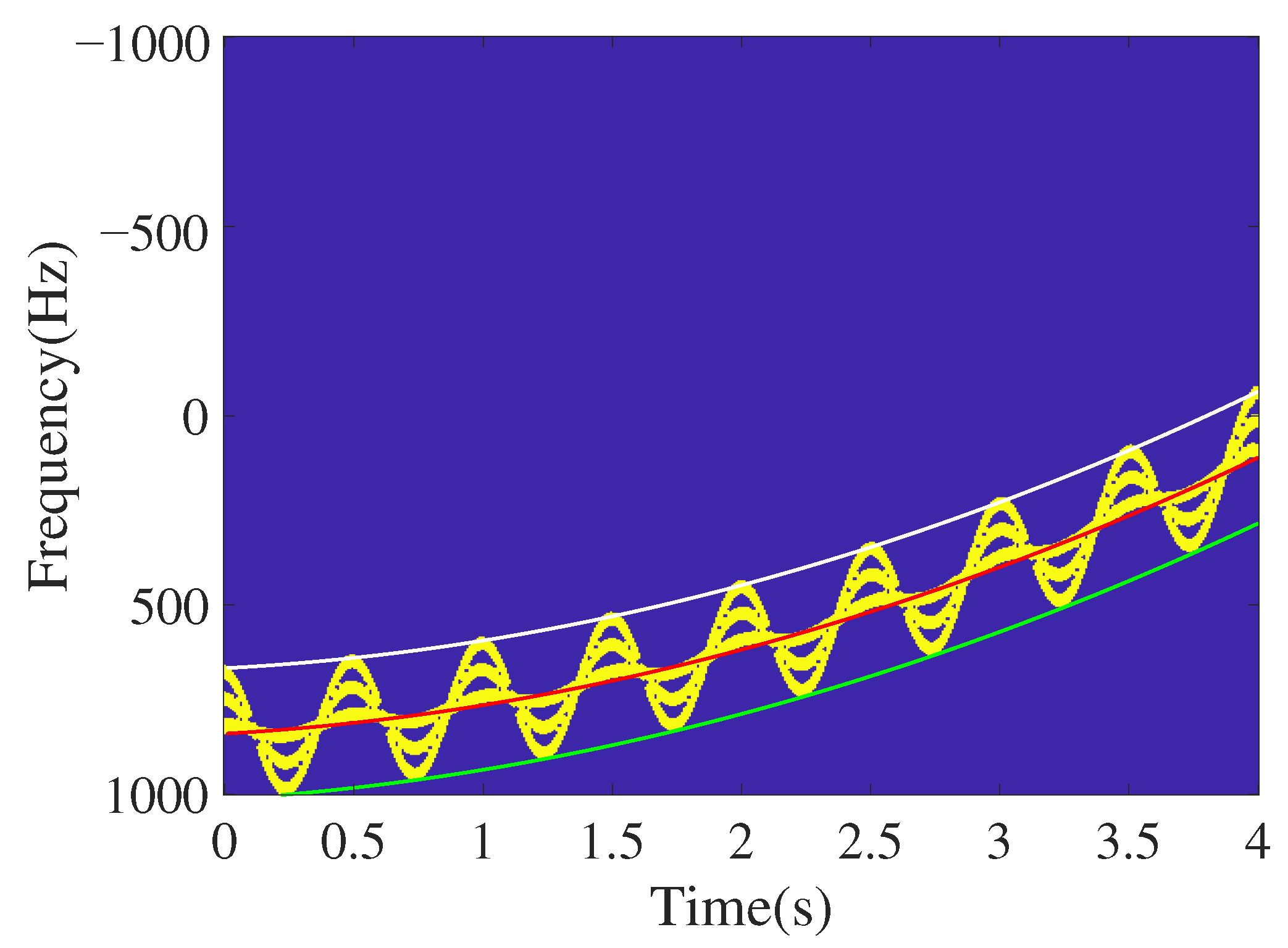

To reduce the running time, the false contour point elimination in Step 7 can be processed in parallel, because the structural similarity of each contour point is computed independently; this can greatly reduce the overall computation time.
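Because each candidate point is scored independently, the elimination loop parallelizes directly. The sketch uses `concurrent.futures.ThreadPoolExecutor` and a normalized-correlation score as a stand-in for SSIM; threads can help when the per-point work is dominated by NumPy calls, and a `ProcessPoolExecutor` is the usual alternative otherwise. The function names are hypothetical.

```python
import numpy as np
from concurrent.futures import ThreadPoolExecutor


def patch_score(edge_img, tpl, point):
    """Stand-in per-point check (normalized correlation); Step 7 would use SSIM here."""
    r, c = point
    patch = edge_img[r:r + tpl.shape[0], c:c + tpl.shape[1]].astype(float)
    if patch.shape != tpl.shape or patch.std() == 0:
        return 0.0
    return float(np.corrcoef(patch.ravel(), tpl.ravel())[0, 1])


def parallel_filter(edge_img, tpl, points, threshold=0.5, workers=4):
    """Score every candidate in a thread pool and keep those at or above threshold."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map preserves input order, so scores line up with points
        scores = list(pool.map(lambda p: patch_score(edge_img, tpl, p), points))
    return [p for p, s in zip(points, scores) if s >= threshold]


tpl = np.eye(4)
img = np.zeros((16, 16))
img[3:7, 5:9] = tpl
kept = parallel_filter(img, tpl, [(3, 5), (10, 10), (0, 0)])
```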

The algorithm flowchart is shown in Figure 4.