Advantages of Nonlinear Intensity Components for Contrast-Based Multispectral Pansharpening

Abstract

1. Introduction

2. Basics of CS and MRA Pansharpening

2.1. Notation

2.2. CS

2.3. MRA

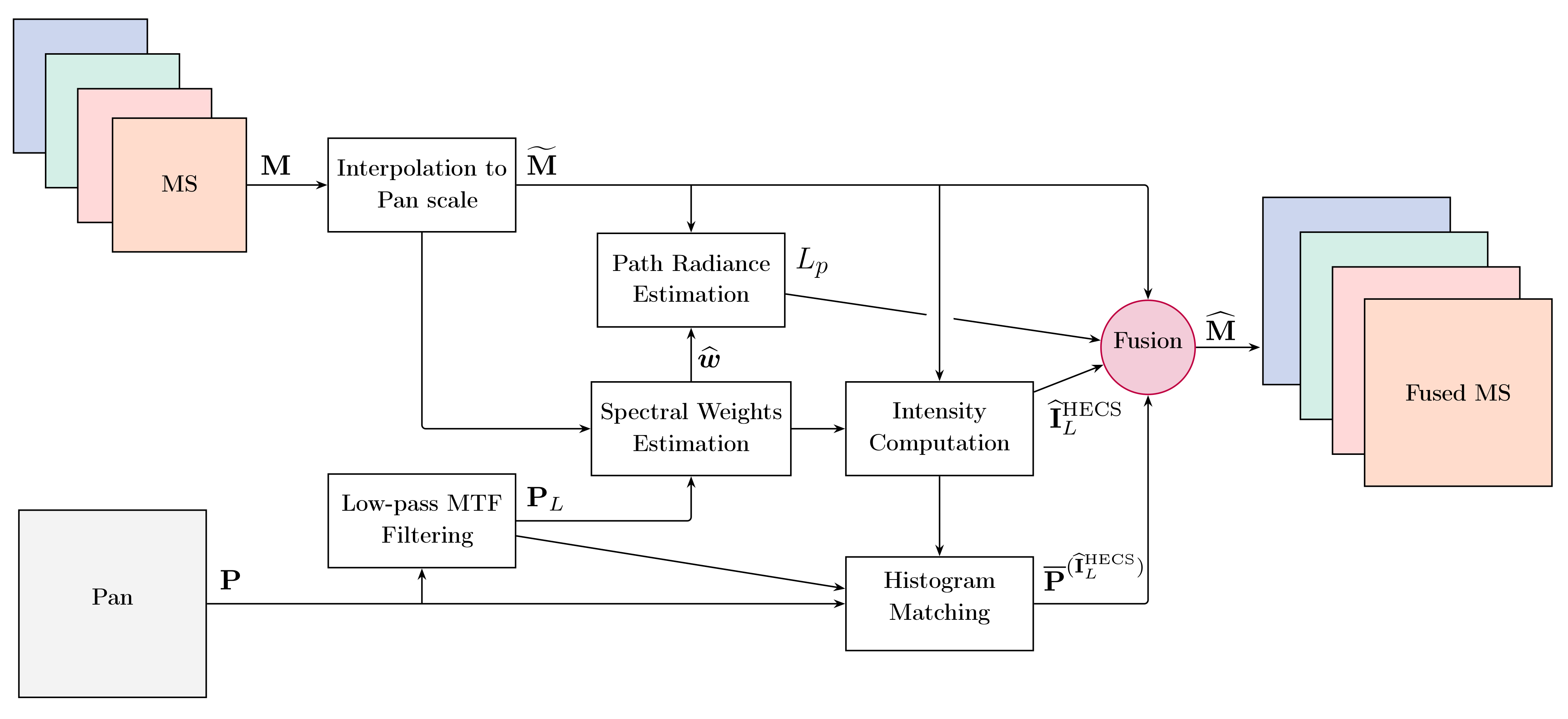

3. Pansharpening Based on Nonlinear Intensity Components

4. Haze Estimation

4.1. Definition of Haze

4.2. Shadowed Pixel Assumption

4.3. Haze Computation

5. Quality Assessment

- Consistency: the fused image, once spatially degraded to the original resolution, should be as close as possible to the original image;

- Synthesis: any low-resolution (LR) image fused by means of a high-resolution (HR) image should be as identical as possible to the ideal image that the corresponding sensor, if existent, would observe at the resolution of the HR image.

- Vector synthesis: the set of multispectral images fused by means of the HR image should be as identical as possible to the set of ideal images that the corresponding sensor, if existent, would observe at the spatial resolution of the HR image.

5.1. Reduced-Resolution Assessment

5.1.1. SAM
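As a minimal sketch of the spectral angle mapper in its commonly used form, the snippet below averages the angle between corresponding pixel spectra of a reference and a fused image. The function name `sam_degrees` and the list-of-spectra layout are assumptions made for illustration and are not taken from the article.

```python
import math


def sam_degrees(reference, fused):
    """Mean spectral angle, in degrees, between two lists of pixel spectra.

    Each spectrum is a list with one value per band (illustrative layout).
    """
    angles = []
    for v, w in zip(reference, fused):
        dot = sum(a * b for a, b in zip(v, w))
        norm = math.sqrt(sum(a * a for a in v)) * math.sqrt(sum(b * b for b in w))
        if norm == 0.0:
            continue  # the angle is undefined for an all-zero spectrum
        cosine = max(-1.0, min(1.0, dot / norm))  # guard against rounding
        angles.append(math.degrees(math.acos(cosine)))
    return sum(angles) / len(angles) if angles else 0.0
```

Because the angle ignores the length of each spectrum, a method that only rescales every interpolated pixel vector leaves SAM unchanged. This is consistent with EXP, BT and HCS sharing the same SAM value in the reduced-resolution results table.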

5.1.2. ERGAS
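The following sketch assumes the usual definition of ERGAS: 100 times the Pan-to-MS pixel size ratio, times the root mean over bands of the squared ratio between band RMSE and band mean of the reference. The function name, the flat-list band layout and the default `ratio=4` are illustrative assumptions.

```python
import math


def ergas(reference_bands, fused_bands, ratio=4):
    """ERGAS between reference and fused bands.

    Each band is a flat list of pixel values; `ratio` is the MS-to-Pan
    scale ratio (4 is assumed here for illustration).
    """
    total = 0.0
    for ref, fus in zip(reference_bands, fused_bands):
        mse = sum((r - f) ** 2 for r, f in zip(ref, fus)) / len(ref)
        mean = sum(ref) / len(ref)
        total += mse / (mean * mean)  # squared relative RMSE of this band
    return 100.0 / ratio * math.sqrt(total / len(reference_bands))
```

Lower values are better, and a perfect match with the reference gives zero, as in the GT row of the reduced-resolution results table.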

5.1.3. Multivariate UIQI
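For orientation, here is a sketch of the scalar universal image quality index of Wang and Bovik, which the multivariate versions generalize to several bands. It is computed globally over the whole band here, whereas practical implementations usually average the index over sliding windows; the function name and the handling of flat inputs are assumptions.

```python
def uiqi(x, y):
    """Global UIQI between two bands given as flat lists of pixel values."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mean_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mean_y) ** 2 for b in y) / (n - 1)
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / (n - 1)
    denom = (var_x + var_y) * (mean_x ** 2 + mean_y ** 2)
    if denom == 0.0:
        # The index is undefined for flat or zero-mean inputs; returning 1.0
        # for identical inputs and 0.0 otherwise is an arbitrary convention.
        return 1.0 if list(x) == list(y) else 0.0
    return 4.0 * cov_xy * mean_x * mean_y / denom
```

The index equals 1 only when the two bands are identical, and it drops when correlation, mean or contrast differ. For example, doubling every pixel keeps the correlation at 1 but lowers the index to 0.64.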

5.2. Full-Resolution Assessment

5.2.1. QNR

- The fusion process should not change the intra-relationships between pairs of MS bands; in other words, any change in these relationships across resolution scales is taken as an indicator of spectral distortion;

- The fusion process should not change the inter-relationships between each MS band and the Pan image; in other words, any change in the relationship between an MS band and the Pan across resolution scales is modeled as spatial distortion.
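The two assumptions above can be sketched as follows, using the standard QNR formulation. The sketch assumes that the similarity values (for instance UIQI) have already been computed; the function names, argument layout and default exponents are illustrative assumptions.

```python
def spectral_distortion(q_ms, q_fused, p=1):
    """D_lambda: change in band-to-band similarity across scales.

    q_ms[i][j] and q_fused[i][j] hold a precomputed similarity index
    between bands i and j of the original and of the fused MS image.
    """
    n = len(q_ms)
    total = sum(
        abs(q_ms[i][j] - q_fused[i][j]) ** p
        for i in range(n) for j in range(n) if i != j
    )
    return (total / (n * (n - 1))) ** (1.0 / p)


def spatial_distortion(q_ms_pan_lr, q_fused_pan, q=1):
    """D_S: change in band-to-Pan similarity across scales.

    q_ms_pan_lr[k]: index between MS band k and the degraded Pan;
    q_fused_pan[k]: index between fused band k and the full-scale Pan.
    """
    n = len(q_ms_pan_lr)
    total = sum(abs(a - b) ** q for a, b in zip(q_fused_pan, q_ms_pan_lr))
    return (total / n) ** (1.0 / q)


def qnr(d_lambda, d_s, alpha=1.0, beta=1.0):
    """Combine the two distortions into a single score in [0, 1]."""
    return (1.0 - d_lambda) ** alpha * (1.0 - d_s) ** beta
```

With unit exponents the score is simply the product of the two one's complements, so it reaches 1 only when both distortions vanish.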

5.2.2. Khan’s QNR

- Each fused MS band is spatially degraded (filtered and decimated) with its specific MTF-matched filter;

- The index between the set of spatially degraded fused MS images and the original MS dataset is computed;

- The one’s complement of the index (one minus its value) is taken to obtain a spectral distortion measure.
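The three steps above can be sketched as follows. Two simplifications are assumed and should not be read as the authors' implementation: a plain block average stands in for the MTF-matched filter of each band, and a globally computed UIQI averaged over bands stands in for the multiband index. All function names are hypothetical.

```python
def degrade(band, ratio):
    """Block-average and decimate a 2-D band (list of rows) by `ratio`.

    The box filter is a stand-in for a band-specific MTF-matched filter.
    """
    rows, cols = len(band) // ratio, len(band[0]) // ratio
    area = ratio * ratio
    return [
        [
            sum(band[r * ratio + i][c * ratio + j]
                for i in range(ratio) for j in range(ratio)) / area
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def uiqi(x, y):
    """Global UIQI between two flat lists (assumes non-flat inputs)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / (n - 1)
    vy = sum((b - my) ** 2 for b in y) / (n - 1)
    cxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    return 4.0 * cxy * mx * my / ((vx + vy) * (mx * mx + my * my))


def khan_spectral_distortion(fused_bands, ms_bands, ratio):
    """One minus the band-averaged index between degraded fused and MS."""
    scores = []
    for fused, ms in zip(fused_bands, ms_bands):
        low = degrade(fused, ratio)  # step 1: filter and decimate
        scores.append(uiqi([v for row in low for v in row],
                           [v for row in ms for v in row]))  # step 2: index
    return 1.0 - sum(scores) / len(scores)  # step 3: one's complement
```

A fused image that reproduces the original MS exactly after degradation yields a distortion of zero, which is the consistency property stated in Section 5.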

5.2.3. Hybrid QNR
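As a small worked check, the hybrid index is commonly defined as the product of the one's complements of Khan's spectral distortion and of the QNR spatial distortion. The sketch below assumes unit exponents and assumes that, in the EXP row of the full-resolution results table, 0.0938 is the QNR spatial distortion and 0.0887 is Khan's spectral distortion; the function name is hypothetical.

```python
def hqnr(d_lambda_khan, d_s_qnr, alpha=1.0, beta=1.0):
    """Hybrid QNR from Khan's spectral and QNR's spatial distortion."""
    return (1.0 - d_lambda_khan) ** alpha * (1.0 - d_s_qnr) ** beta


# EXP row of the full-resolution table (column reading is an assumption).
print(round(hqnr(0.0887, 0.0938), 4))  # 0.8258
```

Under these assumptions the result agrees with the HQNR value listed for EXP.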

6. Experimental Results and Discussion

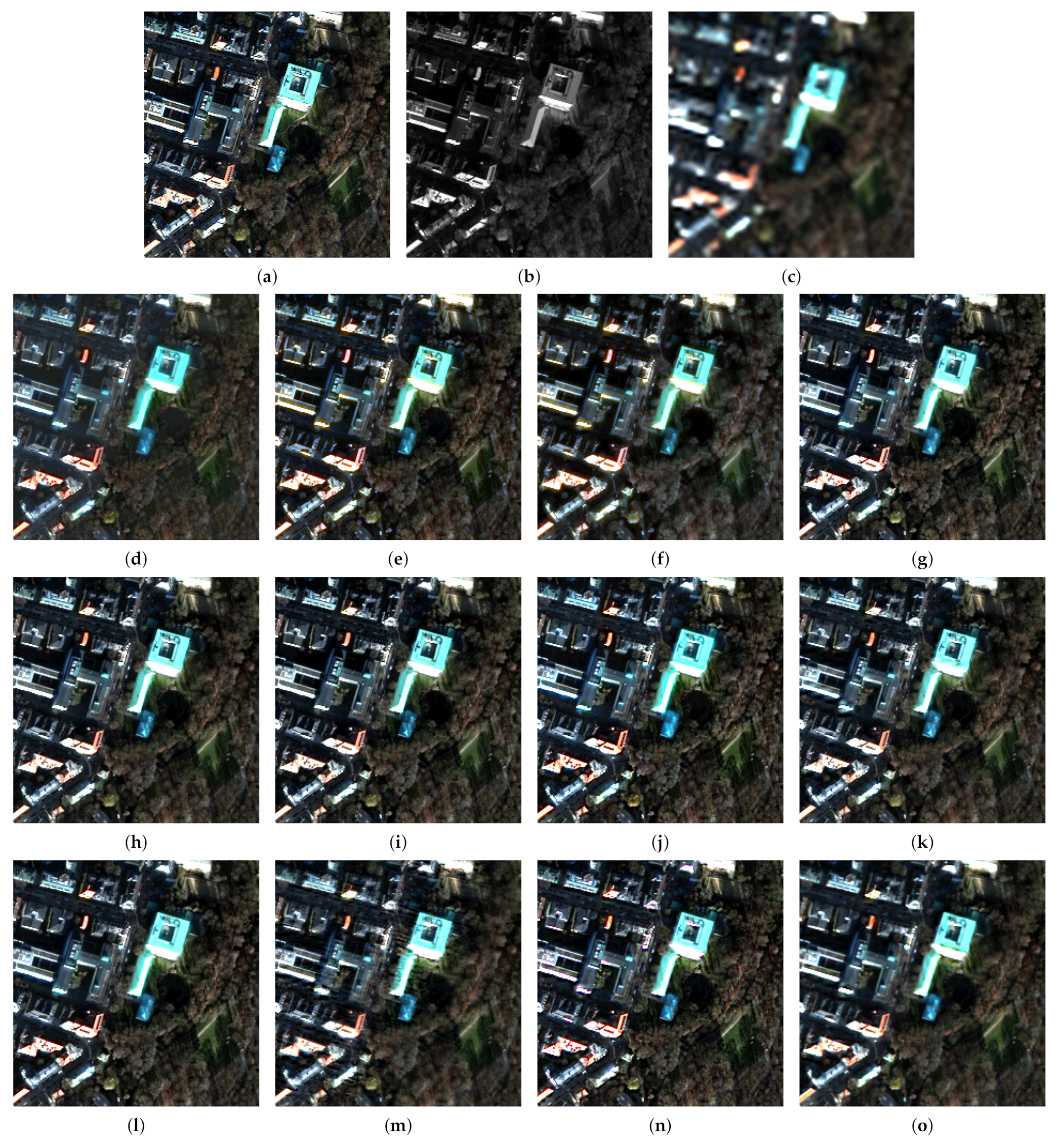

6.1. Data Sets

6.1.1. Trenton Dataset

6.1.2. Munich Dataset

6.2. Analysis of the LS Intensity Component

6.3. Simulations

- Fast fusion with hyperspherical color space (HCS) [26];

- Optimized BT with haze correction (BT-H) [15];

- The proposed method with hyper-ellipsoidal color space (HECS);

- Fusion method with band-dependent spatial-details (BDSD) injection [65];

- Additive wavelet luminance proportional with haze correction (AWLP-H) [31];

- GLP with MTF filters and full-scale detail injection modeling (MTF-GLP-FS) [66];

- Fusion based on sparse representation of spatial details (SR-D) [21];

- Fusion based on total-variation (TV) optimization [20];

- Advanced pansharpening with neural networks and fine tuning (A-PNN-FT) [22].

6.4. Discussion

7. Concluding Remarks

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Alparone, L.; Aiazzi, B.; Baronti, S.; Garzelli, A. Remote Sensing Image Fusion; CRC Press: Boca Raton, FL, USA, 2015.

- Aiazzi, B.; Alparone, L.; Arienzo, A.; Garzelli, A.; Zoppetti, C. Monitoring of changes in vegetation status through integration of time series of hyper-sharpened Sentinel-2 red-edge bands and information-theoretic textural features of Sentinel-1 SAR backscatter. In Proceedings of the Image and Signal Processing for Remote Sensing XXV, Strasbourg, France, 9–11 September 2019; Bruzzone, L., Ed.; SPIE: Bellingham, WA, USA, 2019; Volume 11155, pp. 111550Z-1–111550Z-12.

- Aiazzi, B.; Alparone, L.; Baronti, S. Information-theoretic heterogeneity measurement for SAR imagery. IEEE Trans. Geosci. Remote Sens. 2005, 43, 619–624.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A. Coherence estimation from multilook incoherent SAR imagery. IEEE Trans. Geosci. Remote Sens. 2003, 41, 2531–2539.

- D’Elia, C.; Ruscino, S.; Abbate, M.; Aiazzi, B.; Baronti, S.; Alparone, L. SAR image classification through information-theoretic textural features, MRF segmentation, and object-oriented learning vector quantization. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2014, 7, 1116–1126.

- Vivone, G.; Dalla Mura, M.; Garzelli, A.; Restaino, R.; Scarpa, G.; Ulfarsson, M.; Alparone, L.; Chanussot, J. A new benchmark based on recent advances in multispectral pansharpening: Revisiting pansharpening with classical and emerging pansharpening methods. IEEE Geosci. Remote Sens. Mag. 2021, 9, 53–81.

- Thomas, C.; Ranchin, T.; Wald, L.; Chanussot, J. Synthesis of multispectral images to high spatial resolution: A critical review of fusion methods based on remote sensing physics. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1301–1312.

- Arienzo, A.; Alparone, L.; Aiazzi, B.; Garzelli, A. Automatic fine alignment of multispectral and panchromatic images. In Proceedings of the 2020 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Waikoloa, HI, USA, 26 September–2 October 2020; pp. 9324689-228–9324689-231.

- Garzelli, A.; Nencini, F.; Alparone, L.; Baronti, S. Multiresolution fusion of multispectral and panchromatic images through the curvelet transform. In Proceedings of the 2005 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Seoul, Korea, 25–29 July 2005; pp. 2838–2841.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Carlà, R.; Garzelli, A.; Santurri, L. Sensitivity of pansharpening methods to temporal and instrumental changes between multispectral and panchromatic data sets. IEEE Trans. Geosci. Remote Sens. 2017, 55, 308–319.

- Aiazzi, B.; Alparone, L.; Garzelli, A.; Santurri, L. Blind correction of local misalignments between multispectral and panchromatic images. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1625–1629.

- Alparone, L.; Garzelli, A.; Vivone, G. Intersensor statistical matching for pansharpening: Theoretical issues and practical solutions. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4682–4695.

- Vivone, G.; Marano, S.; Chanussot, J. Pansharpening: Context-based generalized Laplacian pyramids by robust regression. IEEE Trans. Geosci. Remote Sens. 2020, 58, 6152–6167.

- Li, H.; Jing, L. Improvement of a pansharpening method taking into account haze. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2017, 10, 5039–5055.

- Lolli, S.; Alparone, L.; Garzelli, A.; Vivone, G. Haze correction for contrast-based multispectral pansharpening. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2255–2259.

- Pacifici, F.; Longbotham, N.; Emery, W.J. The importance of physical quantities for the analysis of multitemporal and multiangular optical very high spatial resolution images. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6241–6256.

- Garzelli, A.; Nencini, F. Fusion of panchromatic and multispectral images by genetic algorithms. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Denver, CO, USA, 31 July–4 August 2006; pp. 3810–3813.

- Addesso, P.; Longo, M.; Restaino, R.; Vivone, G. Sequential Bayesian methods for resolution enhancement of TIR image sequences. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2015, 8, 233–243.

- Fasbender, D.; Radoux, J.; Bogaert, P. Bayesian data fusion for adaptable image pansharpening. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1847–1857.

- Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O. A new pansharpening algorithm based on total variation. IEEE Geosci. Remote Sens. Lett. 2014, 11, 318–322.

- Vicinanza, M.R.; Restaino, R.; Vivone, G.; Dalla Mura, M.; Chanussot, J. A pansharpening method based on the sparse representation of injected details. IEEE Geosci. Remote Sens. Lett. 2015, 12, 180–184.

- Masi, G.; Cozzolino, D.; Verdoliva, L.; Scarpa, G. Pansharpening by convolutional neural networks. Remote Sens. 2016, 8, 594.

- Ma, J.; Yu, W.; Chen, C.; Liang, P.; Guo, X.; Jiang, J. Pan-GAN: An unsupervised pan-sharpening method for remote sensing image fusion. Inform. Fusion 2020, 62, 110–120.

- Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inform. Fusion 2019, 48, 11–26.

- Padwick, C.; Deskevich, M.; Pacifici, F.; Smallwood, S. WorldView-2 pan-sharpening. In Proceedings of the ASPRS 2010 Annual Conference, San Diego, CA, USA, 27 April 2010; pp. 1–14.

- Tu, T.M.; Hsu, C.L.; Tu, P.Y.; Lee, C.H. An adjustable pan-sharpening approach for IKONOS/QuickBird/GeoEye-1/WorldView-2. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2012, 5, 125–134.

- Aiazzi, B.; Alparone, L.; Arienzo, A.; Garzelli, A.; Lolli, S. Fast multispectral pansharpening based on a hyper-ellipsoidal color space. In Proceedings of the Image and Signal Processing for Remote Sensing XXV, Strasbourg, France, 9–11 September 2019; Bruzzone, L., Ed.; SPIE: Bellingham, WA, USA, 2019; Volume 11155, pp. 1115507-1–1115507-12.

- Vivone, G.; Alparone, L.; Chanussot, J.; Dalla Mura, M.; Garzelli, A.; Licciardi, G.A.; Restaino, R.; Wald, L. A critical comparison of pansharpening algorithms. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Quebec City, QC, Canada, 13–18 July 2014; pp. 191–194.

- Licciardi, G.; Vivone, G.; Dalla Mura, M.; Restaino, R.; Chanussot, J. Multi-resolution analysis techniques and nonlinear PCA for hybrid pansharpening applications. Multidim. Syst. Signal Process. 2016, 27, 807–830.

- Laben, C.A.; Brower, B.V. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. U.S. Patent 6,011,875, 4 January 2000.

- Vivone, G.; Alparone, L.; Garzelli, A.; Lolli, S. Fast reproducible pansharpening based on instrument and acquisition modeling: AWLP revisited. Remote Sens. 2019, 11, 2315.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. Advantages of Laplacian pyramids over “à trous” wavelet transforms for pansharpening of multispectral images. In Proceedings of the Image and Signal Processing for Remote Sensing XVIII, Edinburgh, UK, 24–27 September 2012; Bruzzone, L., Ed.; SPIE: Bellingham, WA, USA, 2012; Volume 8537, pp. 853704-1–853704-10.

- Aiazzi, B.; Selva, M.; Arienzo, A.; Baronti, S. Influence of the system MTF on the on-board lossless compression of hyperspectral raw data. Remote Sens. 2019, 11, 791.

- Gillespie, A.R.; Kahle, A.B.; Walker, R.E. Color enhancement of highly correlated images-II. Channel ratio and “Chromaticity” Transform techniques. Remote Sens. Environ. 1987, 22, 343–365.

- Garzelli, A.; Aiazzi, B.; Alparone, L.; Lolli, S.; Vivone, G. Multispectral pansharpening with radiative transfer-based detail-injection modeling for preserving changes in vegetation cover. Remote Sens. 2018, 10, 1308.

- Lolli, S. Is the air too polluted for outdoor activities? Check by using your photovoltaic system as an air quality monitoring device. Sensors 2021, 21, 6342.

- Vivone, G.; Arienzo, A.; Bilal, M.; Garzelli, A.; Pappalardo, G.; Lolli, S. A dark target Kalman filter algorithm for aerosol property retrievals in urban environment using multispectral images. Urban Climate 2022, 43, 101135.

- Chavez, P.S., Jr. Image-based atmospheric corrections–Revisited and improved. Photogramm. Eng. Remote Sens. 1996, 62, 1025–1036.

- Chavez, P.S., Jr. An improved dark-object subtraction technique for atmospheric scattering correction of multispectral data. Remote Sens. Environ. 1988, 24, 459–479.

- Aiazzi, B.; Alparone, L.; Barducci, A.; Baronti, S.; Pippi, I. Estimating noise and information of multispectral imagery. Optical Engin. 2002, 41, 656–668.

- Campbell, J.; Ge, C.; Wang, J.; Welton, E.; Bucholtz, A.; Hyer, E.; Reid, E.; Chew, B.; Liew, S.C.; Salinas, S.; et al. Applying advanced ground-based remote sensing in the Southeast Asian maritime continent to characterize regional proficiencies in smoke transport modeling. J. Appl. Meteorol. Climatol. 2016, 55, 3–22.

- Lolli, S.; Di Girolamo, P.; Demoz, B.; Li, X.; Welton, E. Rain evaporation rate estimates from dual-wavelength lidar measurements and intercomparison against a model analytical solution. J. Atmos. Ocean. Technol. 2017, 34, 829–839.

- Fu, Q.; Liou, K.N. On the correlated k-distribution method for radiative transfer in nonhomogeneous atmospheres. J. Atmos. Sci 1992, 49, 2139–2156.

- Lolli, S.; Di Girolamo, P. Principal component analysis approach to evaluate instrument performances in developing a cost-effective reliable instrument network for atmospheric measurements. J. Atmos. Ocean. Technol. 2015, 32, 1642–1649.

- Lolli, S.; Sauvage, L.; Loaec, S.; Lardier, M. EZ Lidar™: A new compact autonomous eye-safe scanning aerosol Lidar for extinction measurements and PBL height detection. Validation of the performances against other instruments and intercomparison campaigns. Opt. Pura Apl. 2011, 44, 33–41.

- Lolli, S.; D’Adderio, L.; Campbell, J.; Sicard, M.; Welton, E.; Binci, A.; Rea, A.; Tokay, A.; Comerón, A.; Barragan, R.; et al. Vertically resolved precipitation intensity retrieved through a synergy between the ground-based NASA MPLNET lidar measurements, surface disdrometer datasets and an analytical model solution. Remote Sens. 2018, 10, 1102.

- Wald, L.; Ranchin, T.; Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 1997, 63, 691–699.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Carlà, R. Assessment of pyramid-based multisensor image data fusion. In Proceedings of the Image and Signal Processing for Remote Sensing IV, Barcelona, Spain, 21–25 September 1998; Serpico, S.B., Ed.; SPIE: Bellingham, WA, USA, 1998; Volume 3500, pp. 237–248.

- Aiazzi, B.; Alparone, L.; Argenti, F.; Baronti, S. Wavelet and pyramid techniques for multisensor data fusion: A performance comparison varying with scale ratios. In Proceedings of the Image and Signal Processing for Remote Sensing V, Florence, Italy, 20–24 September 1999; Serpico, S.B., Ed.; SPIE: Bellingham, WA, USA, 1999; Volume 3871, pp. 251–262.

- Du, Q.; Younan, N.H.; King, R.L.; Shah, V.P. On the performance evaluation of pan-sharpening techniques. IEEE Geosci. Remote Sens. Lett. 2007, 4, 518–522.

- Alparone, L.; Aiazzi, B.; Baronti, S.; Garzelli, A.; Nencini, F.; Selva, M. Multispectral and panchromatic data fusion assessment without reference. Photogramm. Eng. Remote Sens. 2008, 74, 193–200.

- Khan, M.M.; Alparone, L.; Chanussot, J. Pansharpening quality assessment using the modulation transfer functions of instruments. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3880–3891.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Carlà, R.; Garzelli, A.; Santurri, L. Full scale assessment of pansharpening methods and data products. In Proceedings of the Image and Signal Processing for Remote Sensing XX, Amsterdam, The Netherlands, 22–25 September 2014; Bruzzone, L., Ed.; SPIE: Bellingham, WA, USA, 2014; Volume 9244, pp. 924402-1–924402-12.

- Vivone, G.; Restaino, R.; Chanussot, J. A Bayesian procedure for full resolution quality assessment of pansharpened products. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4820–4834.

- Arienzo, A.; Alparone, L.; Aiazzi, B.; Baronti, S.; Garzelli, A. Reproducibility of spectral and radiometric normalized similarity indices for multiband images. In Proceedings of the 2019 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Yokohama, Japan, 28 July–2 August 2019; pp. 8898662-839–8898662-842.

- Arienzo, A.; Aiazzi, B.; Alparone, L.; Garzelli, A. Reproducibility of pansharpening methods and quality indexes versus data formats. Remote Sens. 2021, 13, 4399.

- Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O.; Benediktsson, J.A. Quantitative quality evaluation of pansharpened imagery: Consistency versus synthesis. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1247–1259.

- Yuhas, R.H.; Goetz, A.F.H.; Boardman, J.W. Discrimination among semi-arid landscape endmembers using the Spectral Angle Mapper (SAM) algorithm. In Proceedings of the Summaries of the Third Annual JPL Airborne Geoscience Workshop, Pasadena, CA, USA, 1–5 June 1992; pp. 147–149.

- Wald, L. Data Fusion: Definitions and Architectures—Fusion of images of Different Spatial Resolutions; Les Presses de l’École des Mines: Paris, France, 2002.

- Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

- Garzelli, A.; Nencini, F. Hypercomplex quality assessment of multi-/hyper-spectral images. IEEE Geosci. Remote Sens. Lett. 2009, 6, 662–665.

- Arienzo, A.; Vivone, G.; Garzelli, A.; Alparone, L.; Chanussot, J. Full-resolution quality assessment of pansharpening: Theoretical and hands-on approaches. IEEE Geosci. Remote Sens. Mag. 2022, 10, 2–35.

- Zhou, J.; Civco, D.L.; Silander, J.A. A wavelet transform method to merge Landsat TM and SPOT panchromatic data. Int. J. Remote Sens. 1998, 19, 743–757.

- Vivone, G.; Dalla Mura, M.; Garzelli, A.; Pacifici, F. A benchmarking protocol for pansharpening: Dataset, preprocessing, and quality assessment. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2021, 14, 6102–6118.

- Garzelli, A.; Nencini, F.; Capobianco, L. Optimal MMSE Pan sharpening of very high resolution multispectral images. IEEE Trans. Geosci. Remote Sens. 2008, 46, 228–236.

- Vivone, G.; Restaino, R.; Chanussot, J. Full scale regression-based injection coefficients for panchromatic sharpening. IEEE Trans. Image Process. 2018, 27, 3418–3431.

- Aiazzi, B.; Alparone, L.; Arienzo, A.; Baronti, S.; Garzelli, A.; Santurri, L. Deployment of pansharpening for correction of local misalignments between MS and Pan. In Proceedings of the Image and Signal Processing for Remote Sensing XXIV, Berlin, Germany, 10–13 September 2018; Bruzzone, L., Ed.; SPIE: Bellingham, WA, USA, 2018; Volume 9643, pp. 1078902-1–1078902-12.

| GE-1 | Pan | B | G | R | NIR |
|---|---|---|---|---|---|
| | 0.1779 | 0.1487 | 0.1718 | 0.1619 | 0.0959 |
| | 0 | 0 | 0 | 0 | 0 |

| WV-3 | Pan | C | B | G | Y | R | RE | NIR1 | NIR2 |
|---|---|---|---|---|---|---|---|---|---|
| | 0.1365 | 0.3451 | 0.1900 | 0.1233 | 0.1764 | 0.1010 | 0.1567 | 0.0675 | 0.1164 |
| | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Trenton | B | G | R | NIR | Bias | Sum of weights | CD |
|---|---|---|---|---|---|---|---|
| Lin. | −0.0573 | 0.5384 | 0.3072 | 0.2710 | −0.0128 | 1.0593 | 0.9916 |
| Lin. w/o bias | −0.0582 | 0.5393 | 0.3071 | 0.2709 | - | 1.0591 | 0.9916 |
| Quad. | −0.1539 | 0.6661 | 0.3140 | 0.2296 | 87.8771 | 1.0558 | 0.9913 |
| Quad. w/o bias | −0.0820 | 0.5802 | 0.3327 | 0.2437 | - | 1.0746 | 0.9913 |

| Munich | C | B | G | Y | R | RE | NIR1 | NIR2 | Bias | Sum of weights | CD |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Lin. w/ bias | 0.1897 | −0.0119 | 0.0960 | 0.4025 | 0.0522 | 0.2294 | −0.0051 | 0.1955 | −3.6256 | 1.1482 | 0.9855 |
| Lin. w/o bias | −0.0121 | 0.1635 | 0.0442 | 0.3949 | 0.0624 | 0.2352 | 0.0354 | 0.1313 | - | 1.0548 | 0.9854 |
| Quad. w/ bias | 0.0701 | 0.0386 | 0.1140 | 0.3149 | 0.1195 | 0.3202 | −0.1904 | 0.5874 | −76.2715 | 1.3742 | 0.9794 |
| Quad. w/o bias | −0.0311 | 0.1496 | 0.0518 | 0.3339 | 0.1175 | 0.3132 | −0.1598 | 0.5318 | - | 1.3070 | 0.9793 |

| Method | Munich Q8 | Munich Qavg | Munich SAM | Munich ERGAS | Trenton Q4 | Trenton Qavg | Trenton SAM | Trenton ERGAS |
|---|---|---|---|---|---|---|---|---|
| GT | 1.0000 | 1.0000 | 0.0000 | 0.0000 | 1.0000 | 1.0000 | 0.0000 | 0.0000 |
| EXP | 0.6311 | 0.6354 | 4.7548 | 10.8511 | 0.5826 | 0.5894 | 6.6167 | 10.2034 |
| BT | 0.8803 | 0.8703 | 4.7548 | 5.5754 | 0.9000 | 0.8938 | 6.6167 | 5.3655 |
| GS | 0.8028 | 0.8190 | 4.2535 | 6.9518 | 0.8461 | 0.8513 | 6.2997 | 6.6388 |
| HCS | 0.8906 | 0.8633 | 4.7548 | 6.1731 | 0.8969 | 0.8909 | 6.6167 | 5.4681 |
| BT-H | 0.9236 | 0.9298 | 2.9309 | 4.2466 | 0.9025 | 0.9052 | 4.9937 | 4.9978 |
| GSA | 0.9204 | 0.9215 | 3.2007 | 4.4250 | 0.8985 | 0.8962 | 6.0420 | 5.2664 |
| HECS | 0.9287 | 0.9347 | 2.9078 | 4.1268 | 0.9066 | 0.9091 | 4.9565 | 4.9609 |
| BDSD | 0.9245 | 0.9269 | 3.2388 | 4.1748 | 0.9054 | 0.9065 | 6.0254 | 5.1267 |
| AWLP-H | 0.9154 | 0.9135 | 2.9794 | 4.3915 | 0.8928 | 0.8946 | 5.2913 | 5.2182 |
| MTF-GLP-FS | 0.9200 | 0.9210 | 3.1876 | 4.4465 | 0.9030 | 0.9005 | 6.0093 | 5.1501 |
| SR-D | 0.8936 | 0.8991 | 3.4386 | 5.3399 | 0.8915 | 0.8946 | 5.4449 | 5.3810 |
| TV | 0.9164 | 0.9190 | 3.4225 | 4.6557 | 0.7693 | 0.7711 | 6.1318 | 7.7066 |
| A-PNN-FT | 0.8747 | 0.8798 | 3.6465 | 5.8899 | 0.8857 | 0.8895 | 4.3841 | 5.4262 |

| Method | D_λ (QNR) | D_S (QNR) | QNR | D_λ (Khan) | D_S (Khan) | KQNR | HQNR | DQNR |
|---|---|---|---|---|---|---|---|---|
| EXP | 0.0000 | 0.0938 | 0.9062 | 0.0887 | 0.1844 | 0.7432 | 0.8258 | 0.8156 |
| BT | 0.0269 | 0.0924 | 0.8831 | | 0.0472 | 0.8140 | 0.7754 | 0.9272 |
| GS | 0.0171 | 0.0834 | 0.9009 | 0.1535 | 0.0688 | 0.7882 | 0.7759 | 0.9153 |
| HCS | 0.0306 | 0.0851 | 0.8869 | | 0.0418 | | 0.7802 | 0.9289 |
| BT-H | 0.0305 | 0.0830 | 0.8890 | 0.1480 | 0.0451 | 0.8136 | | 0.9258 |
| GSA | 0.0456 | 0.1023 | 0.8567 | 0.1533 | 0.0528 | 0.8020 | 0.7600 | 0.9040 |
| HECS | 0.0221 | 0.0606 | 0.9187 | 0.1592 | 0.0275 | | | 0.9510 |
| BDSD | 0.0339 | 0.0135 | 0.9530 | 0.2171 | 0.0745 | 0.7246 | 0.7723 | 0.8941 |
| AWLP-H | 0.0463 | 0.0468 | 0.9090 | 0.0525 | 0.0106 | 0.9375 | 0.9032 | 0.9436 |
| MTF-GLP-FS | 0.0727 | 0.0651 | 0.8670 | 0.0505 | 0.0102 | 0.9397 | 0.8877 | 0.9178 |
| SR-D | 0.0843 | 0.0816 | 0.8409 | 0.0314 | 0.0656 | 0.9051 | 0.8896 | 0.8556 |
| TV | 0.0233 | 0.0374 | 0.9402 | 0.0776 | 0.1015 | 0.8288 | 0.8879 | 0.8776 |
| A-PNN-FT | 0.0774 | 0.0300 | 0.8949 | 0.0629 | 0.0404 | 0.8993 | 0.9090 | 0.8853 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Arienzo, A.; Alparone, L.; Garzelli, A.; Lolli, S. Advantages of Nonlinear Intensity Components for Contrast-Based Multispectral Pansharpening. Remote Sens. 2022, 14, 3301. https://doi.org/10.3390/rs14143301