Robust Extraction of 3D Line Segment Features from Unorganized Building Point Clouds

Abstract

1. Introduction

1.1. Related Work

1.2. Contribution

- (1) A 3D line segment extraction method for building point clouds is proposed that is conceptually simple and easy to implement. It uses only the original point cloud and requires neither images nor reconstructed models;

- (2) The multiple constraints in the algorithm filter out much of the irrelevant debris, so the proposed method recovers detailed information more accurately and greatly reduces the misidentification of 3D line segments;

- (3) The proposed method transforms the problem of structure line detection from 3D space into 2D space, and most operations are carried out in 2D, which makes the algorithm computationally efficient;

- (4) The 3D line segment extraction method can be flexibly combined with other plane segmentation methods, and it can detect 2D and 3D line segments simultaneously without changing the model structure;

- (5) The proposed method works well on both high-quality TLS and low-quality RGB-D point clouds and is robust to noise.

2. Materials and Methods

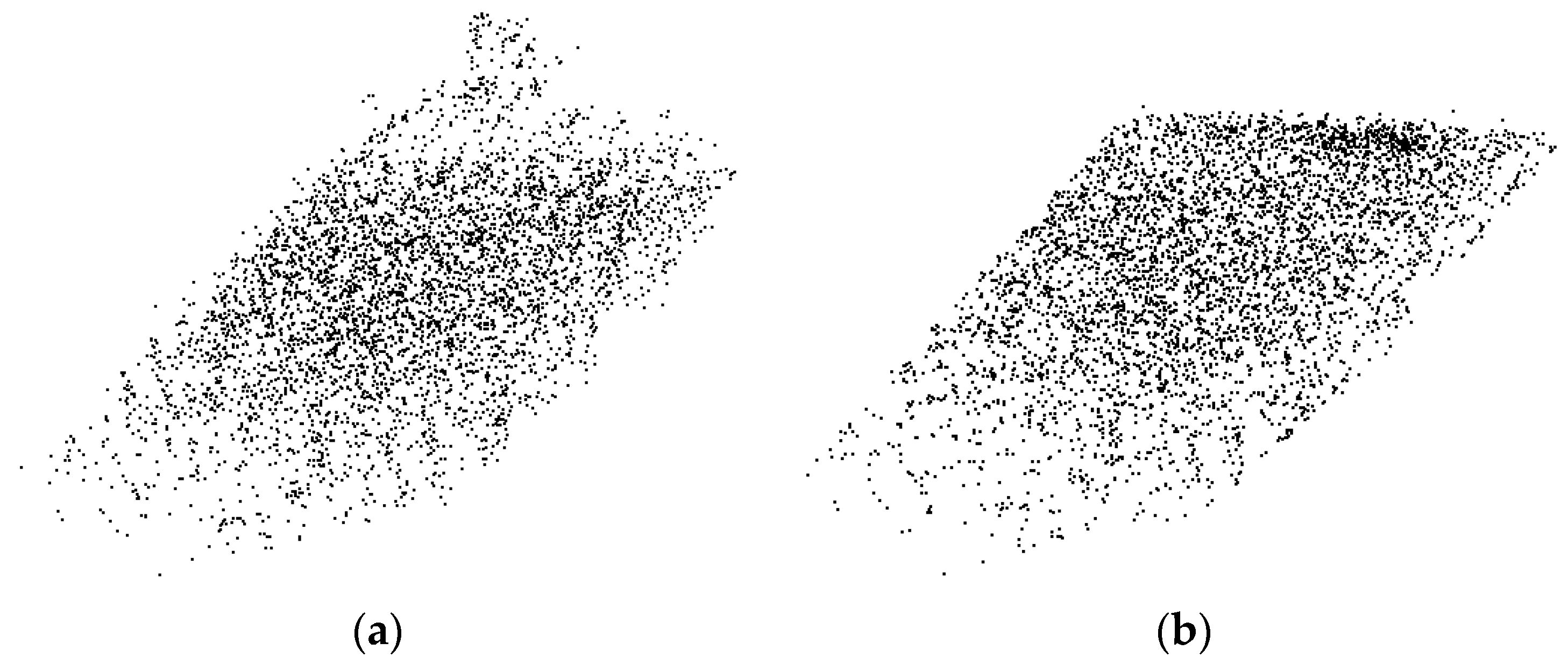

2.1. Data Pre-Processing

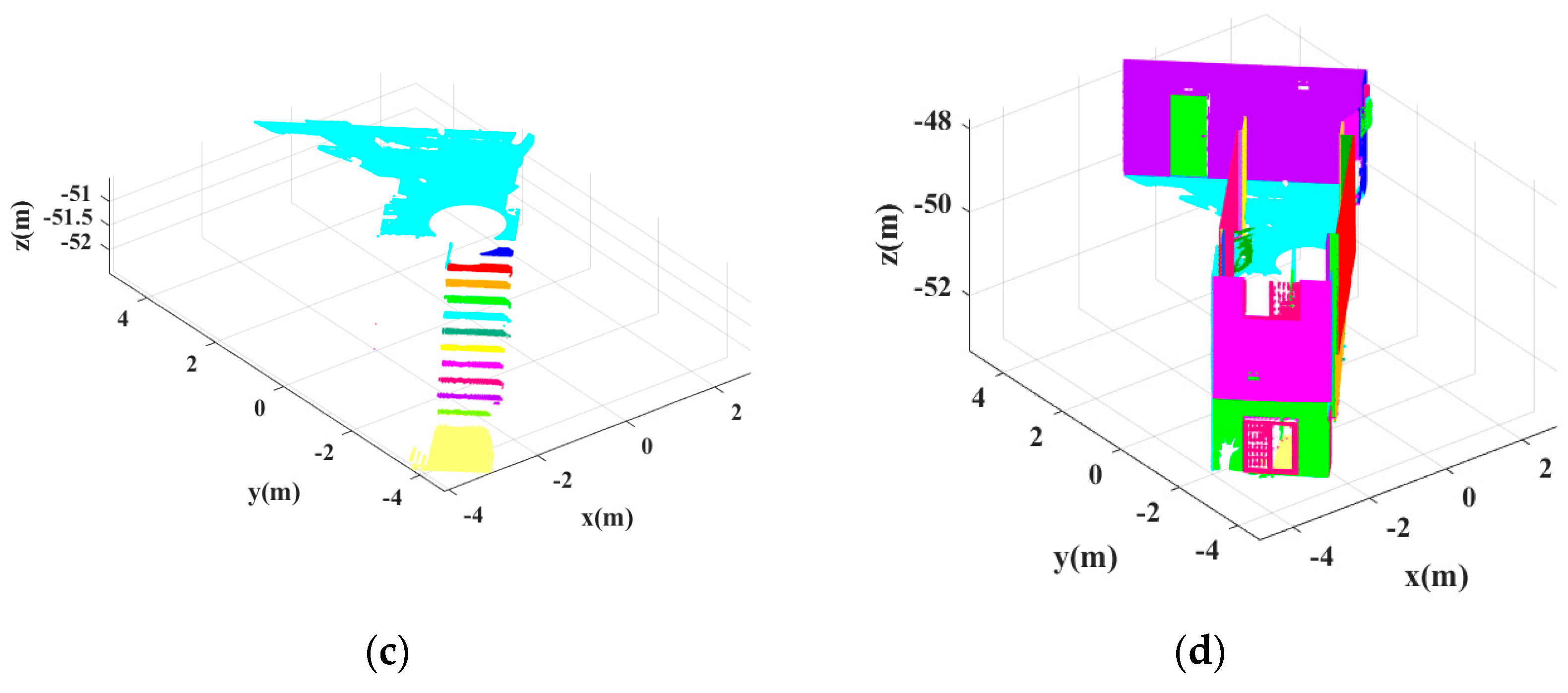

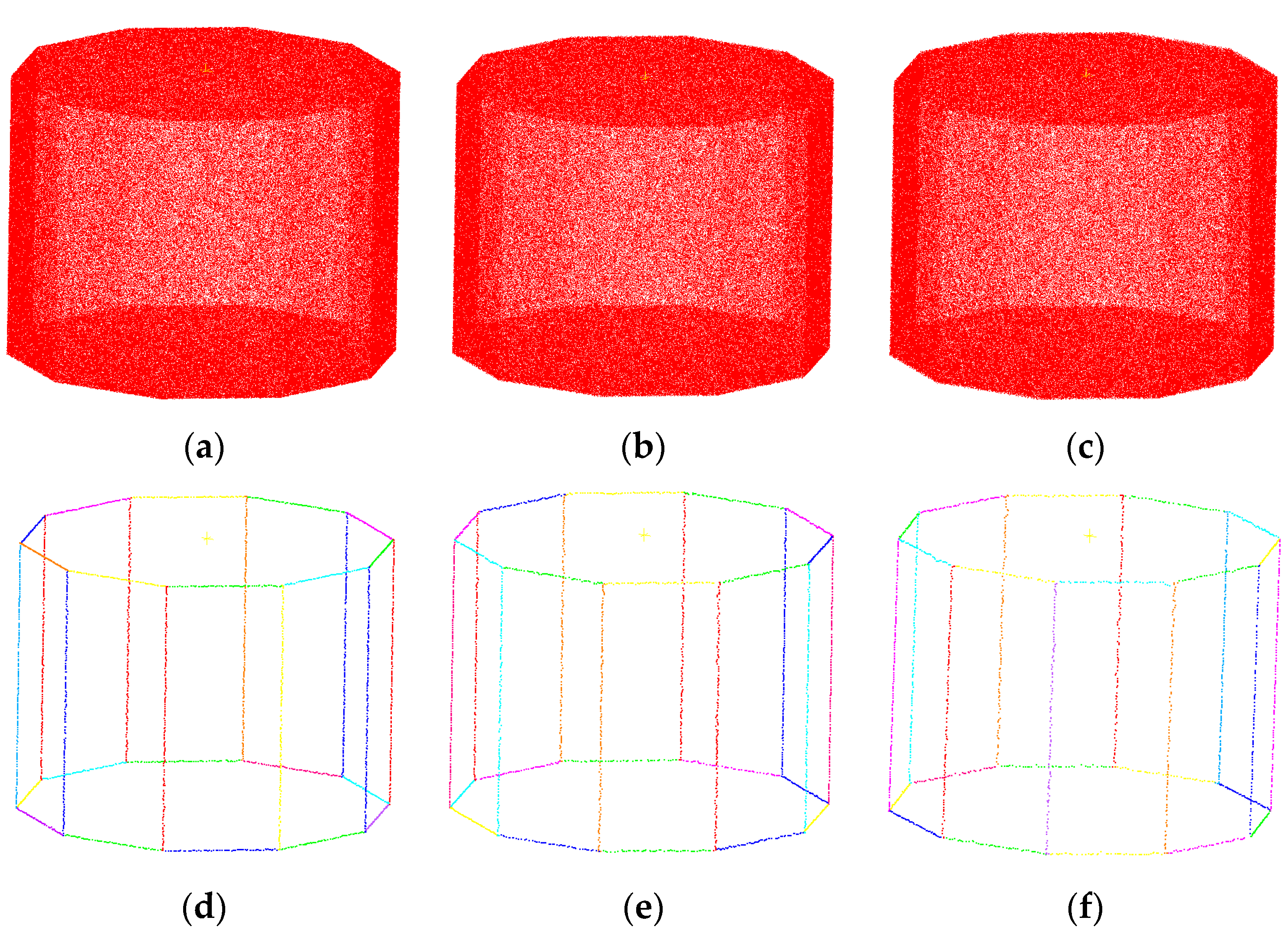

2.2. Plane Segmentation

- (1) A kd-tree is built to index the points in Pcol. An empty cluster list E and a pending queue Q are created, and every point in Pcol is added to Q;

- (2) For each point Qi in Q, a neighborhood search is performed and the neighbors found are stored in Qik. For each point in Qik, its distance dik to Qi is calculated; if dik does not exceed a specified distance threshold, the point is stored in E together with Qi;

- (3) The distances between all clusters in E are calculated according to Equation (6), and clusters that lie closer to each other than a specified threshold are merged. Merging is repeated until every inter-cluster distance exceeds this threshold;

- (4) If the clustering result is not empty, it is stored in Pseg. For each subset Psegi in Pseg, its length Lsegi and number of points Nsegi are calculated. Two thresholds are set: a density threshold, giving the minimum number of points per meter, and a length threshold, giving the minimum length of a qualified line segment. If Nsegi/Lsegi is not less than the density threshold and Lsegi is not less than the length threshold, Psegi is stored as a qualified line segment. All qualified line segments in Pseg are then removed from Pproj;

- (5) The remaining points are used as the input data for the next cycle. The iteration stops when no qualified clustering result is obtained five times in a row. In addition, to make the procedure converge as quickly as possible, the iteration is forced to end when the remaining points amount to less than a specified percentage (e.g., 5%) of the input data Pproj. The extracted qualified line segments are stored in Pqual (see Figure 7b).
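Steps (2) to (4) can be sketched as follows for a single input set of 2D points. This is a minimal illustration under stated assumptions, not the authors' code: a uniform grid stands in for the kd-tree neighborhood search, and because Equation (6) is not reproduced here, the inter-cluster distance of step (3) is assumed to be the minimum point-to-point distance (single linkage). Under that assumption, growing clusters by breadth-first search gives the same result as repeated merging. Segment length is assumed to be the extent of the points along their principal direction. The outer loop of step (5) is omitted because this section does not describe how each cycle's input is produced. All names and parameters (`radius`, `min_density`, `min_length`) are hypothetical.

```python
import math
from collections import deque


def grid_key(p, radius):
    """Cell index of a 2D point on a grid whose cell size equals the search radius."""
    return (math.floor(p[0] / radius), math.floor(p[1] / radius))


def cluster_points(points, radius):
    """Single-linkage clustering: points chained by gaps <= radius share a cluster."""
    grid = {}
    for idx, p in enumerate(points):
        grid.setdefault(grid_key(p, radius), []).append(idx)
    labels = [-1] * len(points)
    clusters = []
    for seed in range(len(points)):
        if labels[seed] != -1:
            continue
        label = len(clusters)
        labels[seed] = label
        members, queue = [seed], deque([seed])
        while queue:
            i = queue.popleft()
            cx, cy = grid_key(points[i], radius)
            # Any neighbour within `radius` lies in one of the 3 x 3 surrounding cells.
            for gx in (cx - 1, cx, cx + 1):
                for gy in (cy - 1, cy, cy + 1):
                    for j in grid.get((gx, gy), []):
                        if labels[j] == -1 and math.dist(points[i], points[j]) <= radius:
                            labels[j] = label
                            members.append(j)
                            queue.append(j)
        clusters.append([points[k] for k in members])
    return clusters


def segment_length(pts):
    """Extent of the points along their principal (largest-variance) direction."""
    n = len(pts)
    mx = sum(p[0] for p in pts) / n
    my = sum(p[1] for p in pts) / n
    sxx = sum((p[0] - mx) ** 2 for p in pts)
    syy = sum((p[1] - my) ** 2 for p in pts)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in pts)
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)  # angle of the major axis
    ux, uy = math.cos(theta), math.sin(theta)
    proj = [(p[0] - mx) * ux + (p[1] - my) * uy for p in pts]
    return max(proj) - min(proj)


def qualified_segments(points, radius, min_density, min_length):
    """Keep clusters that are long enough and dense enough (points per metre)."""
    result = []
    for seg in cluster_points(points, radius):
        length = segment_length(seg)
        if length > 0 and length >= min_length and len(seg) / length >= min_density:
            result.append(seg)
    return result
```

For example, with `radius=0.05`, `min_density=50.0` and `min_length=0.5`, a 1 m run of points spaced 1 cm apart is kept, while a 2 cm fragment and an isolated point are rejected.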

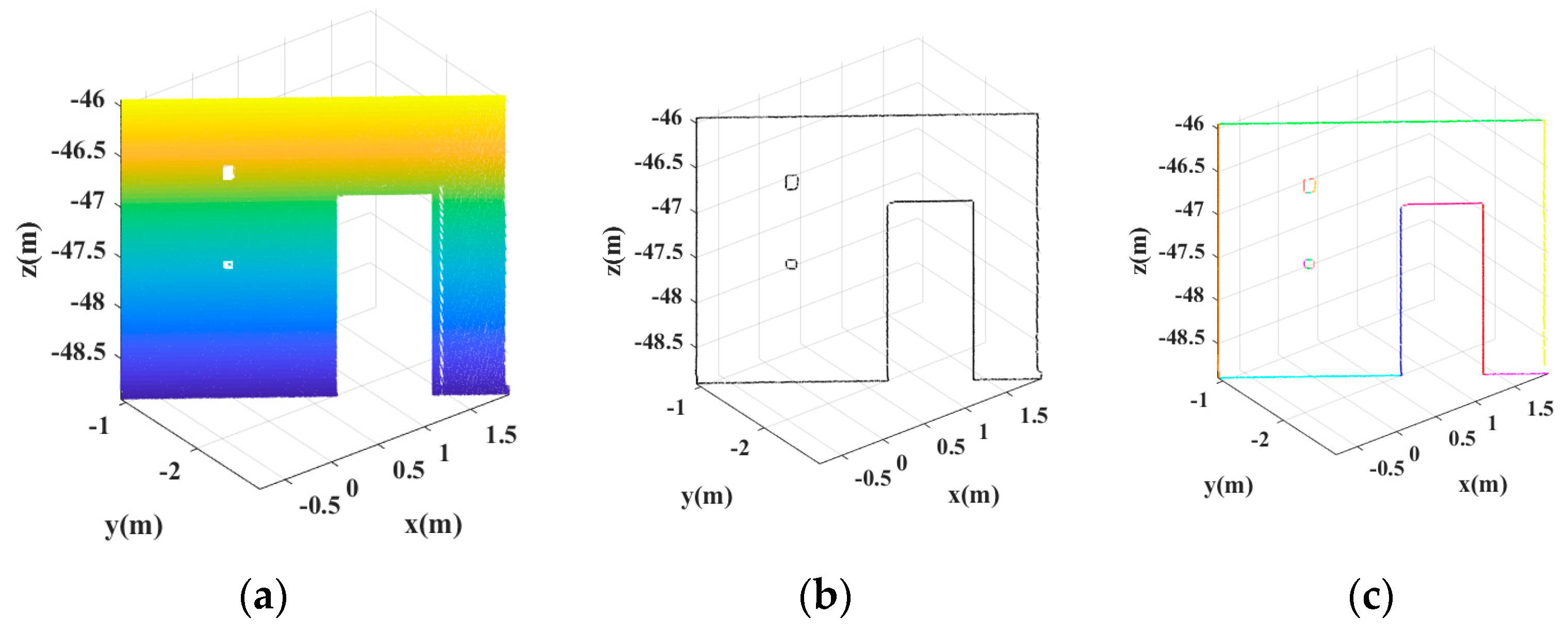

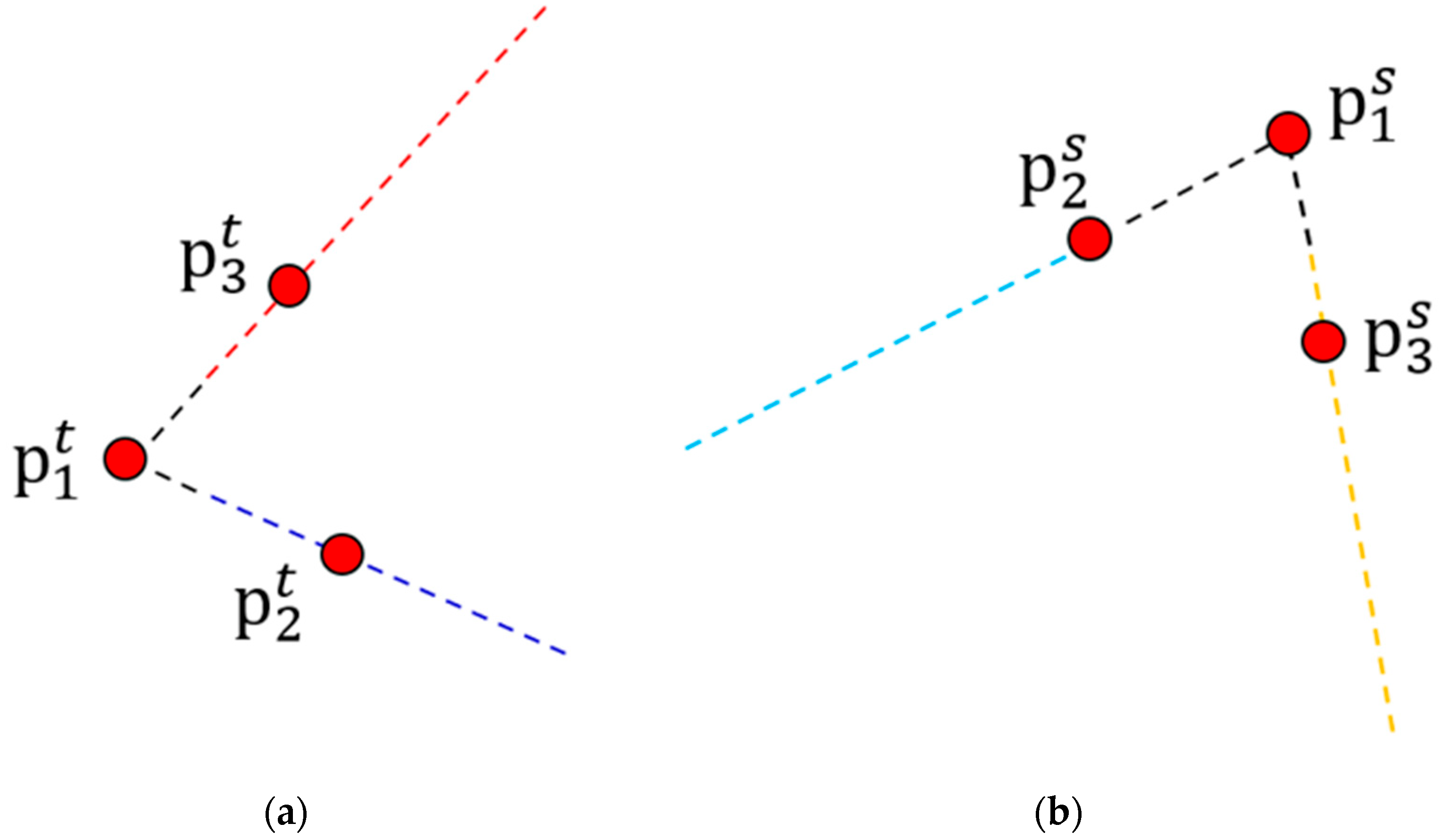

2.3. Three-Dimensional Line Segment Extraction

3. Experiments and Analysis

3.1. Parameters Setting

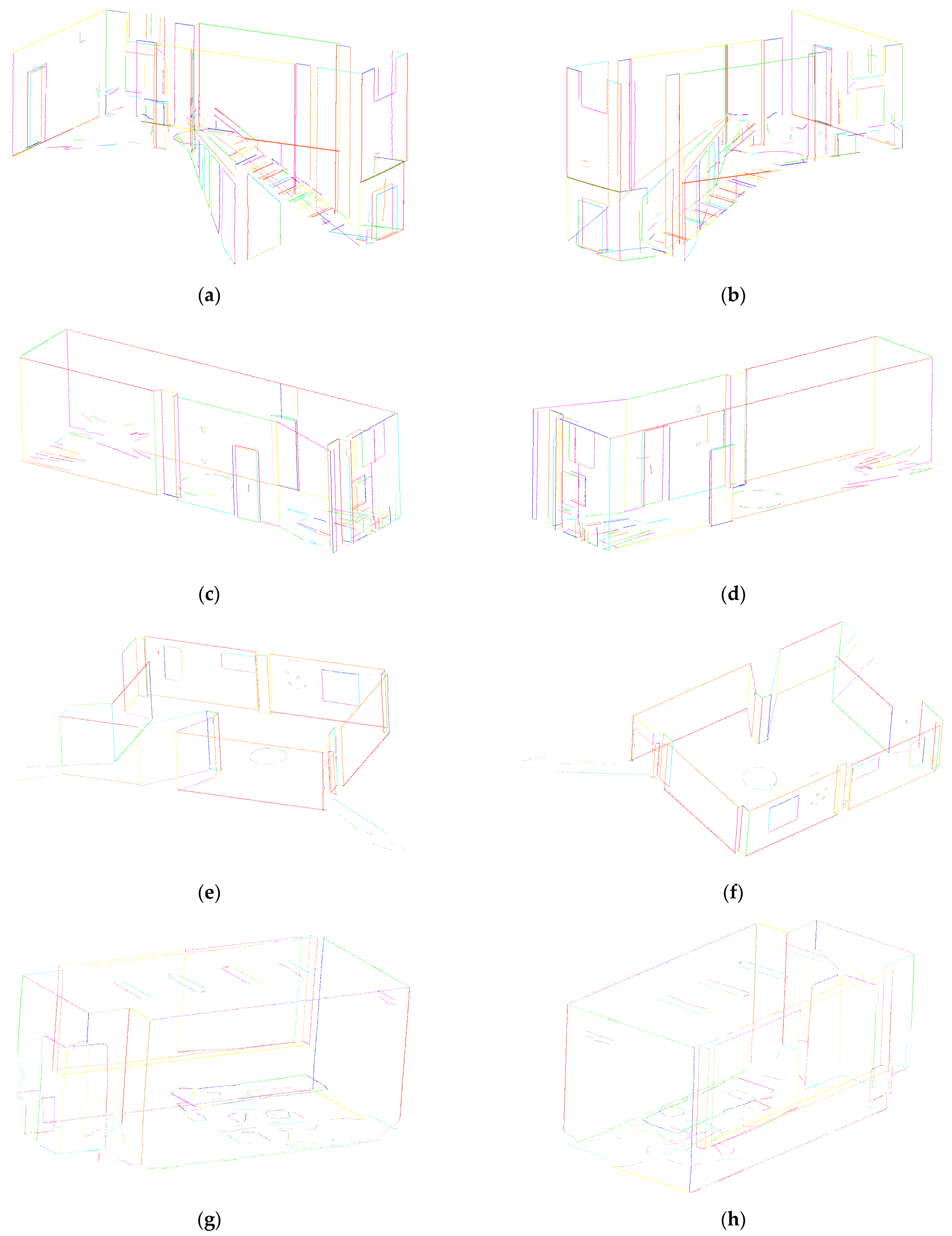

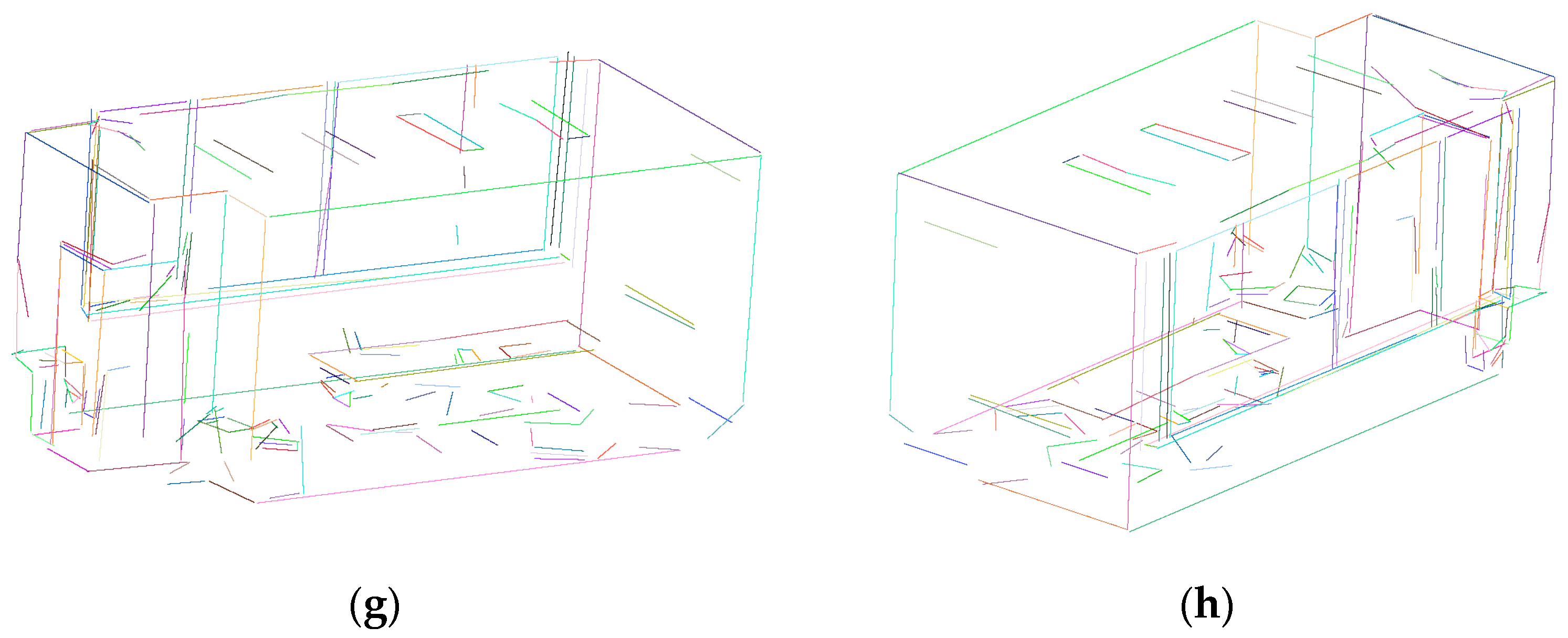

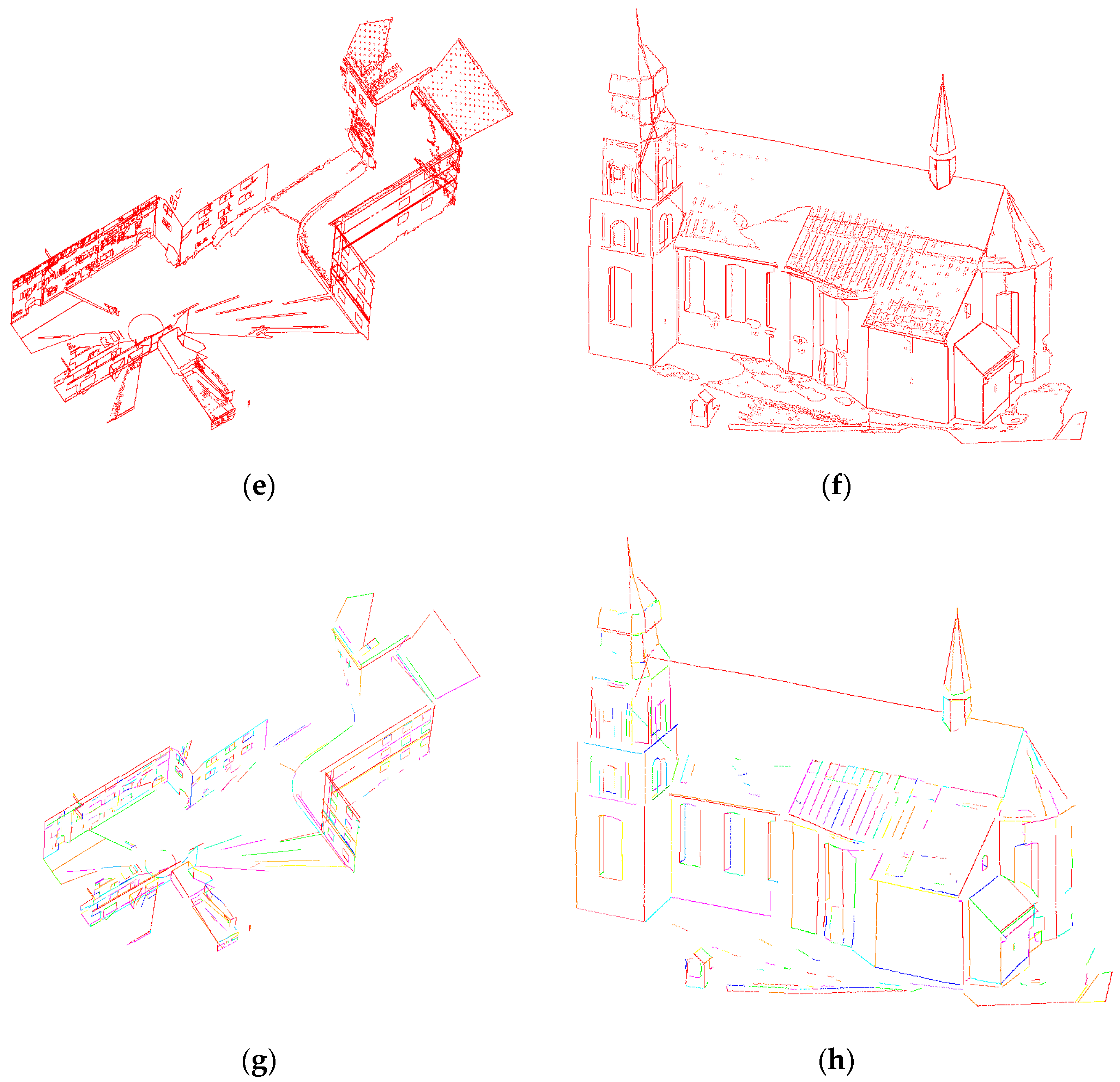

3.2. Three-Dimensional Line Segment Extraction Effect Evaluation

3.3. Application

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Lin, Y.; Wang, C.; Chen, B.; Zai, D.; Li, J. Facet segmentation-based line segment extraction for large-scale point clouds. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4839–4854.

2. Partovi, T.; Fraundorfer, F.; Bahmanyar, R.; Huang, H.; Reinartz, P. Remote sensing automatic 3-d building model reconstruction from very high-resolution stereo satellite imagery. Remote Sens. 2019, 11, 1660.

3. Pepe, M.; Costantino, D.; Alfio, V.S.; Vozza, G.; Cartellino, E. A novel method based on deep learning, GIS and geomatics software for building a 3d city model from VHR satellite stereo Imagery. ISPRS Int. J. Geo-Inf. 2021, 10, 697.

4. Yang, B.; Fang, L.; Li, J. Semi-automated extraction and delineation of 3D roads of street scene from mobile laser scanning point clouds. ISPRS J. Photogramm. Remote Sens. 2013, 79, 80–93.

5. Habib, A.; Ghanma, M.; Morgan, M.; Al-Ruzouq, R. Photogrammetric and LiDAR data registration using linear features. Photogram. Eng. Remote Sens. 2005, 71, 699–707.

6. Balali, V.; Jahangiri, A.; Machiani, S.G. Multi-class us traffic signs 3d recognition and localization via image-based point cloud model using color candidate extraction and texture-based recognition. Adv. Eng. Inform. 2017, 32, 263–274.

7. Moghadam, P.; Bosse, M.; Zlot, R. Line-based extrinsic calibration of range and image sensors. In Proceedings of the IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 4–11.

8. Gioi, R.G.; Jakubowicz, J.; Morel, J.M.; Randall, G. LSD: A fast line segment detector with a false detection control. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 722–732.

9. Almazan, E.J.; Tal, R.; Qian, Y.; Elder, J.H. MCMLSD: A Dynamic Programming Approach to Line Segment Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5854–5862.

10. Fernandes, L.A.F.; Oliveira, M.M. Real-time line detection through an improved Hough transform voting scheme. Pattern Recognit. 2008, 41, 299–314.

11. Song, B.; Li, X. Power line detection from optical images. Neurocomputing 2014, 129, 350–361.

12. Akinlar, C.; Topal, C. Edlines: A real-time line segment detector with a false detection control. Pattern Recognit. Lett. 2011, 32, 1633–1642.

13. Heijden, F.V.D. Edge and line feature extraction based on covariance models. IEEE Trans. Pattern Anal. Mach. Intell. 1995, 17, 16–33.

14. Christopher, W.; Hahmann, S.; Hagen, H. Sharp feature detection in point clouds. In Proceedings of the 2010 Shape Modeling International Conference, Washington, DC, USA, 21–23 June 2010; pp. 175–186.

15. Bazazian, D.; Casas, J.R.; Ruiz-Hidalgo, J. Fast and robust edge extraction in unorganized point clouds. In Proceedings of the 2015 International Conference on Digital Image Computing: Techniques and Applications (DICTA), New York, NY, USA, 23–25 November 2015; pp. 1–8.

16. Ioannou, Y.; Taati, B.; Harrap, R.; Greenspan, M. Difference of normals as a multi-scale operator in unorganized point clouds. In Proceedings of the 2nd International Conference on 3D Imaging, Modeling, Processing, Visualization and Transmission (3DIMPVT), Zurich, Switzerland, 13–15 October 2012; pp. 501–508.

17. Hackel, T.; Wegner, J.D.; Schindler, K. Contour Detection in Unstructured 3D Point Clouds. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1610–1618.

18. Jarvis, R.A. Computing the shape hull of points in the plane. In Proceedings of the Computer Society Conference on Pattern Recognition and Image Processing, New York, NY, USA, 6–8 June 1977; pp. 231–241.

19. Zhang, W.N.; Chen, L.W.; Xiong, Z.Y.; Zang, Y.; Li, J.; Zhao, L. Large-scale point cloud contour extraction via 3d guided multi-conditional generative adversarial network. ISPRS J. Photogramm. Remote Sens. 2020, 164, 97–105.

20. Chen, X.J.; Yu, K.G. Feature line generation and regularization from point clouds. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9779–9790.

21. Taylor, C.J.; Kriegman, D.J. Structure and motion from line segments in multiple images. IEEE Trans. Pattern Anal. Mach. Intell. 1995, 17, 1021–1032.

22. Martinec, D.; Pajdla, T. Line reconstruction from many perspective images by factorization. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 16–22 June 2003; pp. 497–502.

23. Jain, A.; Kurz, C.; Thormahlen, T.; Seidel, H.P. Exploiting global connectivity constraints for reconstruction of 3D line segments from images. In Proceedings of the Twenty-Third IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 1586–1593.

24. Lin, Y.; Wang, C.; Cheng, J.; Chen, B.; Jia, F.; Chen, Z.; Li, J. Line segment extraction for large scale unorganized point clouds. ISPRS J. Photogramm. Remote Sens. 2015, 102, 172–183.

25. Lu, X.; Liu, Y.; Li, K. Fast 3D line segment detection from unorganized point cloud. arXiv 2019, arXiv:1901.02532.

26. Sampath, A.; Shan, J. Segmentation and reconstruction of polyhedral building roofs from aerial lidar point clouds. IEEE Trans. Geosci. Remote Sens. 2010, 48, 1554–1567.

27. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

28. Saito, S.; Li, T.; Li, H. Real-time facial segmentation and performance capture from RGB input. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 244–261.

29. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.

30. Charles, R.Q.; Su, H.; Kaichun, M.; Guibas, L.J. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

31. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. Adv. Neural Inf. Process. Syst. 2017, 5099–5108. Available online: https://arxiv.org/abs/1706.02413 (accessed on 3 July 2021).

32. Zhao, B.; Hua, X.; Yu, K.; Xuan, W.; Tao, W. Indoor point cloud segmentation using iterative gaussian mapping and improved model fitting. IEEE Trans. Geosci. Remote Sens. 2020, 58, 1–18.

33. Yu, L.; Li, X.; Fu, C.; Cohen-Or, D. Ec-net: An edge-aware point set consolidation network. arXiv 2018, arXiv:1807.06010.

34. Limberger, F.A.; Oliveira, M.M. Real-time detection of planar regions in unorganized point clouds. Pattern Recognit. 2015, 48, 2043–2053.

35. Chum, O.; Matas, J. Matching with prosac progressive sample consensus. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 20–25 July 2005; pp. 220–226.

36. Ma, W.; Li, Q. An improved ball pivot algorithm-based ground filtering mechanism for lidar data. Remote Sens. 2019, 11, 1179.

37. Dong, Z.; Yang, B.; Hu, P.; Scherer, S. An efficient global energy optimization approach for robust 3D plane segmentation of point clouds. ISPRS J. Photogramm. Remote Sens. 2018, 137, 112–133.

38. Rusu, R.B.; Cousins, S. 3D is here: Point cloud library (PCL). In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 23–27 May 2011; pp. 1–4.

39. Besl, P.J.; McKay, D.N. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

40. Tao, W.; Hua, X.; Yu, K.; He, X.; Chen, X. An improved point-to-plane registration method for terrestrial laser scanning data. IEEE Access 2018, 6, 48062–48073.

41. Li, W.; Song, P. A modified ICP algorithm based on dynamic adjustment factor for registration of point cloud and CAD model. Pattern Recognit. Lett. 2015, 65, 88–94.

42. Rusu, R.B.; Blodow, N.; Beetz, M. Fast Point Feature Histograms (FPFH) for 3D registration. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009.

43. Tao, W.; Hua, X.; Wang, R.; Xu, D. Quintuple local coordinate images for local shape description. Photogramm. Eng. Remote Sens. 2020, 86, 121–132.

44. Yang, J.; Xiao, Y.; Cao, Z. Aligning 2.5D scene fragments with distinctive local geometric features and voting-based correspondences. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 714–729.

| Name | Point Number | Method | Number of Extracted Planes | Number of Extracted Lines | Number of Extracted Points | Running Time (s) |
|---|---|---|---|---|---|---|
| Staircase | 7,960,776 | Proposed | 42 | 203 | 59,925 | 418.37 |
|  |  | Lu et al. [25] | 488 | 997 | 1,204,472 | 874.19 |
| Corridor | 5,497,221 | Proposed | 20 | 155 | 16,696 | 185.89 |
|  |  | Lu et al. [25] | 191 | 656 | 711,345 | 539.40 |
| Laboratory | 2,154,851 | Proposed | 23 | 168 | 11,176 | 156.45 |
|  |  | Lu et al. [25] | 282 | 503 | 346,814 | 298.22 |
| Lounge | 1,022,584 | Proposed | 16 | 149 | 10,503 | 57.07 |
|  |  | Lu et al. [25] | 85 | 200 | 194,370 | 101.78 |

| Name | Object | Reference Width × Height (m × m) | Reference Area (m²) | Extracted Width × Height (m × m) | Extracted Area (m²) | Difference (m²) | Accuracy (%) |
|---|---|---|---|---|---|---|---|
| Staircase | Wall_1 | 5.056 × 2.759 | 13.9495 | 5.055 × 2.752 | 13.9114 | 0.0381 | 99.73 |
|  | Wall_2 | 0.285 × 2.666 | 0.7598 | 0.282 × 2.658 | 0.7496 | 0.0102 | 98.66 |
|  | Window_1 | 1.106 × 1.280 | 1.4157 | 1.085 × 1.253 | 1.3595 | 0.0562 | 96.03 |
|  | Window_2 | 1.180 × 0.905 | 1.0679 | 1.177 × 0.884 | 1.0405 | 0.0274 | 97.43 |
|  | Door | 0.752 × 1.980 | 1.4890 | 0.767 × 1.993 | 1.5286 | 0.0396 | 97.34 |
| Corridor | Wall | 10.600 × 2.976 | 31.5456 | 10.597 × 2.957 | 31.3353 | 0.2103 | 99.33 |
|  | Door | 0.801 × 1.997 | 1.5996 | 0.786 × 1.992 | 1.5657 | 0.0339 | 97.88 |
|  | Window | 0.964 × 0.976 | 0.9408 | 0.995 × 0.962 | 0.9572 | 0.0164 | 98.20 |
| Laboratory | Wall_1 | 4.396 × 2.836 | 12.4671 | 4.411 × 2.800 | 12.3508 | 0.1163 | 99.07 |
|  | Wall_2 | 6.941 × 2.843 | 19.7332 | 6.934 × 2.816 | 19.5261 | 0.2071 | 98.95 |
|  | Quadrangular | 0.586 × 2.850 | 1.5112 | 0.523 × 2.816 | 1.4728 | 0.0384 | 97.46 |
|  | Window_1 | 1.698 × 1.504 | 2.5538 | 1.714 × 1.478 | 2.5333 | 0.0205 | 99.20 |
|  | Window_2 | 1.620 × 0.766 | 1.2409 | 1.595 × 0.748 | 1.1931 | 0.0478 | 96.22 |
|  | Door | 1.593 × 2.157 | 3.426 | 1.583 × 2.117 | 3.3512 | 0.075 | 97.82 |
| Lounge | Wall | 5.762 × 3.075 | 17.7182 | 5.770 × 3.026 | 17.4600 | 0.2582 | 98.54 |
|  | Window_1 | 2.736 × 2.053 | 5.6170 | 2.748 × 2.028 | 5.5729 | 0.0441 | 99.21 |
|  | Window_2 | 2.708 × 2.086 | 5.6489 | 2.799 × 2.114 | 5.9171 | 0.2682 | 95.25 |
|  | Door | 0.869 × 2.168 | 1.8840 | 0.897 × 2.121 | 1.9025 | 0.0185 | 99.02 |
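Most rows of the area-accuracy table are consistent with computing each area as width × height and the accuracy as one minus the relative area difference, Accuracy = (1 − |A_ext − A_ref| / A_ref) × 100. This formula is inferred from the tabulated numbers rather than quoted from the paper; the small helper below (its name is hypothetical) applies it to a pair of measured rectangles.

```python
def area_accuracy(ref_w, ref_h, ext_w, ext_h):
    """Return (reference area, extracted area, |difference|, accuracy in %).

    Assumes accuracy = 100 * (1 - |A_ext - A_ref| / A_ref), a formula inferred
    from the tabulated values, not quoted from the paper. Sizes are in metres.
    """
    ref_area = ref_w * ref_h          # m^2
    ext_area = ext_w * ext_h          # m^2
    diff = abs(ext_area - ref_area)   # m^2
    return ref_area, ext_area, diff, 100.0 * (1.0 - diff / ref_area)


# Usage: the Staircase Wall_1 row (reference 5.056 x 2.759 m, extracted 5.055 x 2.752 m).
print(area_accuracy(5.056, 2.759, 5.055, 2.752))
```

Taking the absolute difference means an over-estimated area (such as Lounge Window_2) and an under-estimated one are penalized in the same way.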

| Name | Point Number | Method | Number of Contour Points | Running Time (s) |
|---|---|---|---|---|
| Staircase | 7,960,776 | Proposed | 100,330 | 333.80 |
|  |  | Bazazian et al. [15] | 117,257 | 474.66 |
|  |  | Ioannou et al. [16] | 37,151 | 4173.42 |
|  |  | SOR filter | 54,103 | 1593.49 |
| Corridor | 5,497,221 | Proposed | 32,043 | 153.82 |
|  |  | Bazazian et al. [15] | 65,417 | 325.47 |
|  |  | Ioannou et al. [16] | 24,347 | 2854.33 |
|  |  | SOR filter | 56,311 | 1096.31 |
| Laboratory | 2,154,851 | Proposed | 16,962 | 144.15 |
|  |  | Bazazian et al. [15] | 320,971 | 207.22 |
|  |  | Ioannou et al. [16] | 63,370 | 1143.68 |
|  |  | SOR filter | 192,615 | 402.50 |
| Lounge | 1,022,584 | Proposed | 18,933 | 44.82 |
|  |  | Bazazian et al. [15] | 57,979 | 59.75 |
|  |  | Ioannou et al. [16] | 14,058 | 300.01 |
|  |  | SOR filter | 883,627 | 205.94 |

| Name | Point Number | Number of Extracted Planes | Number of Extracted Line Segments | Number of Extracted Contour Points | Point Number of Extracted Lines | Running Time (s) |
|---|---|---|---|---|---|---|
| Birdfountain | 14,579,089 | 212 | 667 | 94,271 | 46,498 | 543.88 |
| Bildstein | 2,329,405 | 67 | 489 | 43,019 | 25,953 | 195.56 |

| Pair | Point Number | Reference X (m) | Reference Y (m) | Calculated X (m) | Calculated Y (m) | dx (mm) | dy (mm) | Displacement (mm) |
|---|---|---|---|---|---|---|---|---|
| Pair1 | 1 | 2.5331 | 32.1316 | 2.5338 | 32.131 | 0.7 | −0.6 | 0.9 |
|  | 2 | 1.7526 | 32.7567 | 1.7533 | 32.7561 | 0.7 | −0.6 | 0.9 |
|  | 3 | 3.16106 | 32.9099 | 3.1621 | 32.9093 | 1.0 | −0.6 | 1.2 |
| Pair2 | 4 | 3.0838 | 32.8141 | 3.0844 | 32.8129 | 0.6 | −1.2 | 1.3 |
|  | 5 | 2.4558 | 32.0359 | 2.4562 | 32.0349 | 0.4 | −1 | 1.1 |
|  | 6 | 3.8589 | 32.1824 | 3.86 | 32.1817 | 1.1 | −0.7 | 1.3 |
| Pair3 | 7 | 3.6216 | 27.6053 | 3.6192 | 27.6071 | −2.4 | 1.8 | 3.0 |
|  | 8 | 2.8399 | 28.2289 | 2.8371 | 28.2301 | −2.8 | 1.2 | 3.1 |
|  | 9 | 4.2379 | 28.3928 | 4.2377 | 28.3926 | −0.2 | −0.2 | 0.3 |
| Pair4 | 10 | 18.0089 | 16.0006 | 18.0072 | 16.0029 | −1.7 | 2.3 | 2.9 |
|  | 11 | 18.796 | 15.3838 | 18.794 | 15.3856 | −2 | 1.8 | 2.7 |
|  | 12 | 18.6282 | 16.7857 | 18.6266 | 16.7879 | −1.6 | 2.2 | 2.72 |
| Pair5 | 13 | 22.5925 | 15.9288 | 22.5951 | 15.927 | 2.6 | −1.8 | 3.2 |
|  | 14 | 23.3677 | 15.2971 | 23.37 | 15.2951 | 2.3 | −2 | 3.1 |
|  | 15 | 23.231 | 16.6985 | 23.2323 | 16.6978 | 1.3 | −0.7 | 1.5 |
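In the displacement table, dx and dy are the calculated coordinates minus the reference coordinates, converted from metres to millimetres, and the displacement column agrees with the planar Euclidean norm √(dx² + dy²) to within about 0.1 mm. That norm is an inference from the numbers, not a definition stated in this section; the helper name below is hypothetical.

```python
import math


def displacement_mm(ref_xy, calc_xy):
    """Planar offset of a calculated point from its reference, in millimetres.

    Inputs are (X, Y) in metres. dx and dy are calculated minus reference; the
    displacement is assumed to be the Euclidean norm sqrt(dx^2 + dy^2).
    """
    dx = (calc_xy[0] - ref_xy[0]) * 1000.0
    dy = (calc_xy[1] - ref_xy[1]) * 1000.0
    return dx, dy, math.hypot(dx, dy)


# Usage: point 1 of Pair1 (reference vs. calculated coordinates).
dx, dy, d = displacement_mm((2.5331, 32.1316), (2.5338, 32.131))
print(f"dx={dx:.1f} mm, dy={dy:.1f} mm, displacement={d:.1f} mm")
```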

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tian, P.; Hua, X.; Tao, W.; Zhang, M. Robust Extraction of 3D Line Segment Features from Unorganized Building Point Clouds. Remote Sens. 2022, 14, 3279. https://doi.org/10.3390/rs14143279

Tian P, Hua X, Tao W, Zhang M. Robust Extraction of 3D Line Segment Features from Unorganized Building Point Clouds. Remote Sensing. 2022; 14(14):3279. https://doi.org/10.3390/rs14143279

Chicago/Turabian Style: Tian, Pengju, Xianghong Hua, Wuyong Tao, and Miao Zhang. 2022. "Robust Extraction of 3D Line Segment Features from Unorganized Building Point Clouds" Remote Sensing 14, no. 14: 3279. https://doi.org/10.3390/rs14143279

APA Style: Tian, P., Hua, X., Tao, W., & Zhang, M. (2022). Robust Extraction of 3D Line Segment Features from Unorganized Building Point Clouds. Remote Sensing, 14(14), 3279. https://doi.org/10.3390/rs14143279