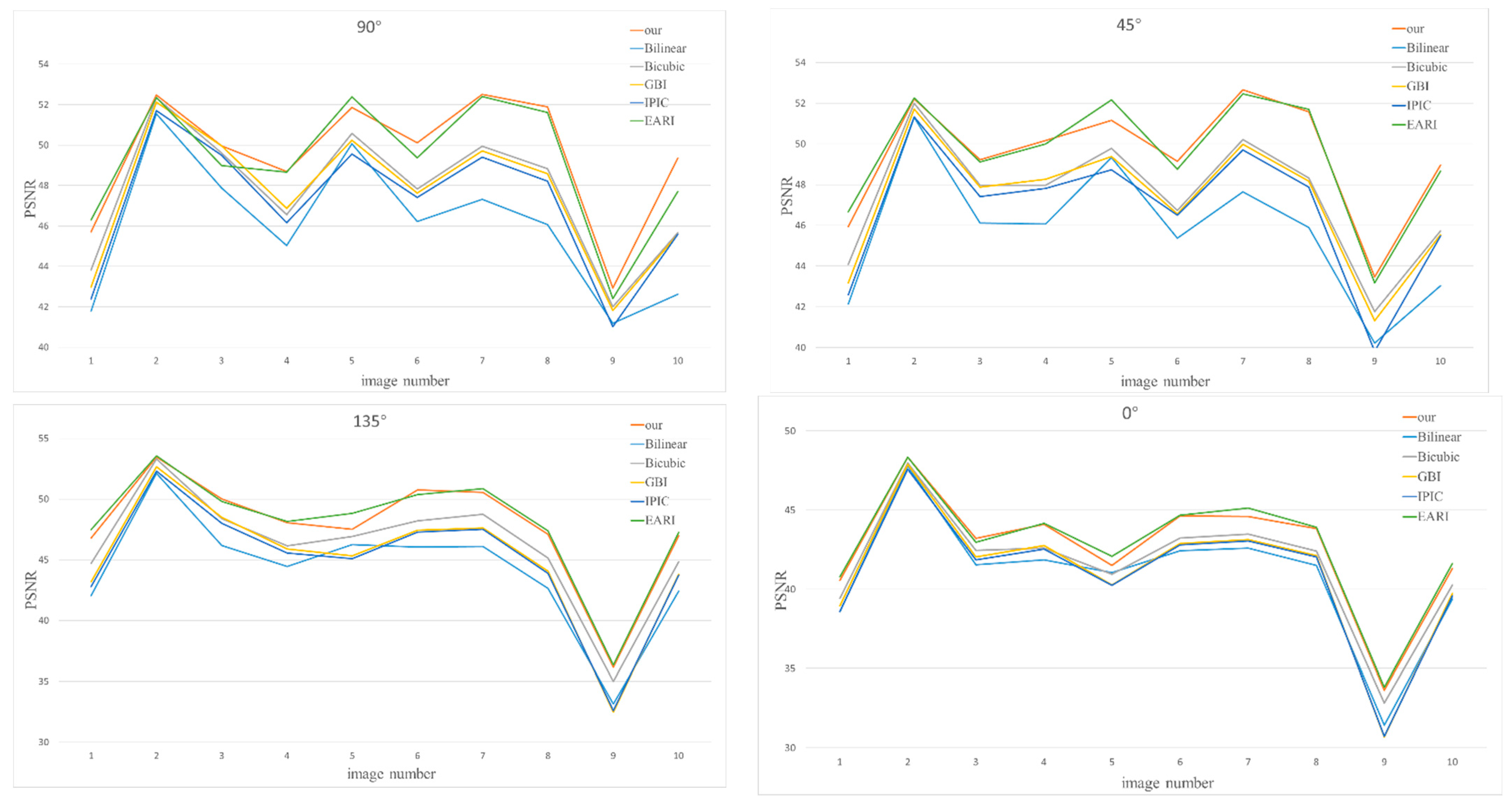

Based on the constraint relationship between polarization channels discussed in

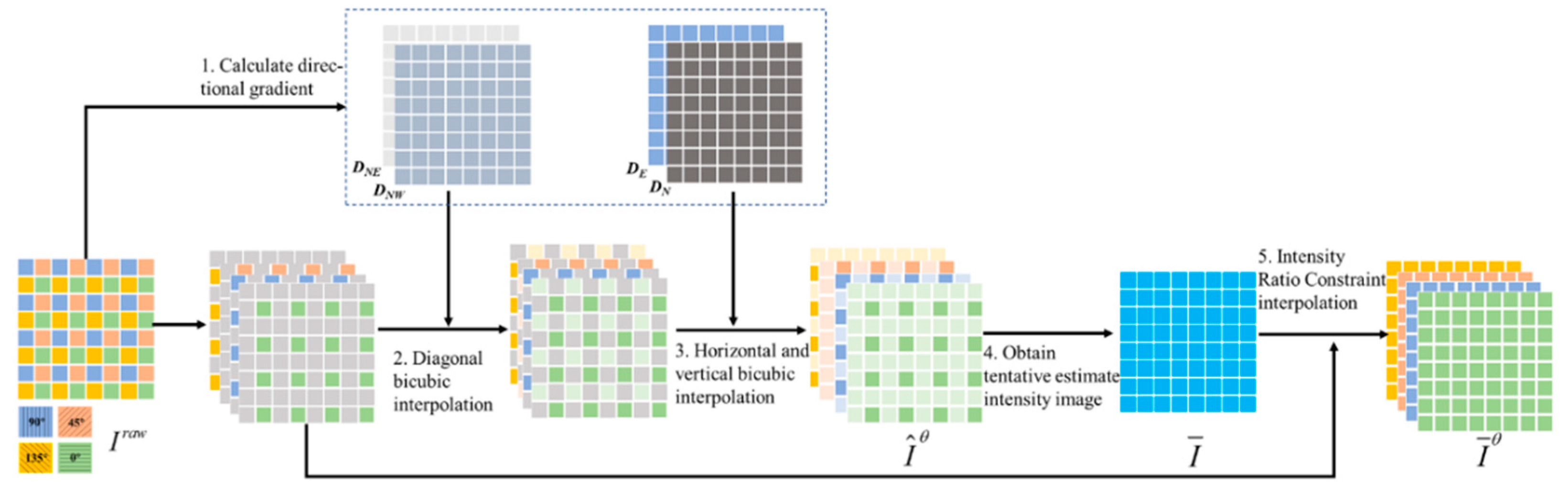

Section 3, we propose a new polarized intensity ratio constraint (PIRC) demosaicing method. Our fundamental goal is the following: the image texture and polarization state of each pixel are maintained. Thus, the proposed PIRC demosaicing method is divided into two main steps. First, the intensity image is obtained. The recovered intensity image is expected to retain the truthful image texture; thus, the relationship between neighboring pixels must be fully considered. To achieve this, a directional gradient-based method is applied to interpolate each polarization channel, a tentative estimation value of each polarization channel is obtained, and the full intensity is half of the sum of four channels. Second, based on the full intensity image, each polarization channel is recovered by a mutated guided filter method. The full process is shown in

Figure 2. In brief, we apply gradient filtering to the raw images in each channel to obtain tentative values of intensity with each polarization azimuth in any given pixel, and then apply the derived guided filtering to calculate the final estimated value.

2.2.1. Recover the Intensity Image by Image Gradient

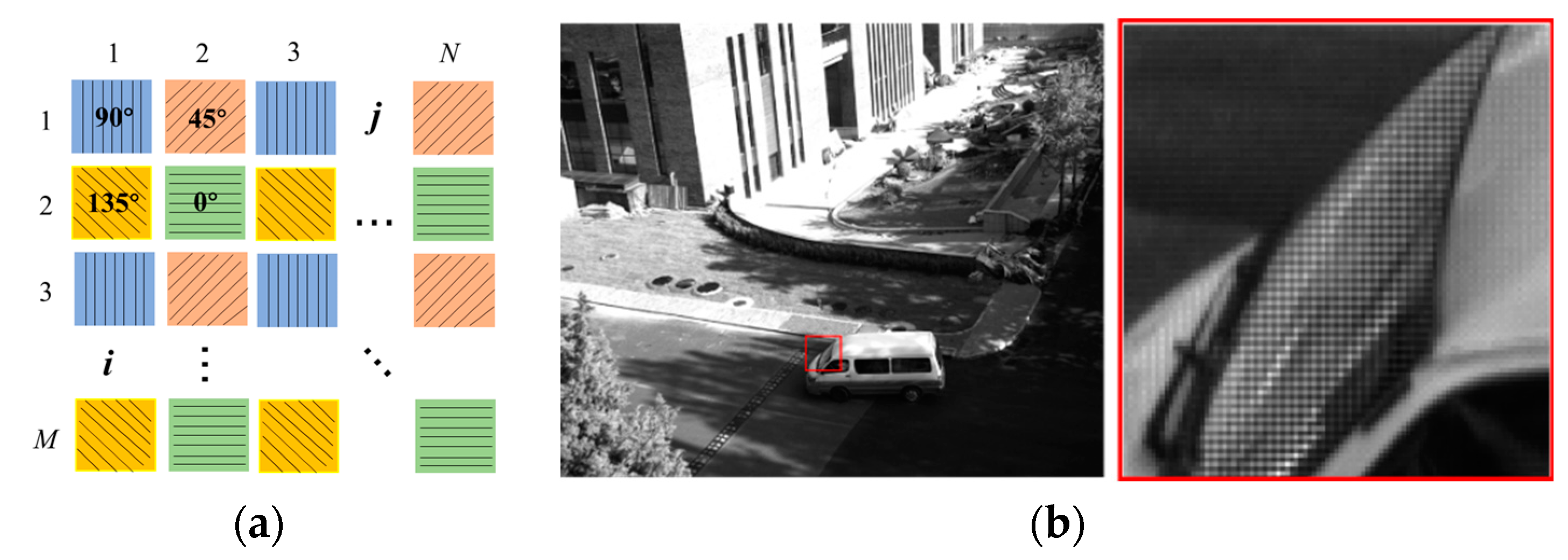

Only one of the polarization directions is detected for each pixel (i, j) of the raw image. Based on the relationship in Equation (10), if the perpendicular direction is estimated, then the full intensity $I$ at location (i, j) can be estimated. For example, when $I_{0}$ is detected at location (i, j), the full intensity $I$ can be obtained by adding the detected $I_{0}$ to the tentatively estimated $\hat{I}_{90}$. As illustrated in Figure 1, for each pixel (i, j), the four diagonally adjacent pixels have the perpendicular polarization direction, and the vertically and horizontally adjacent pixels have the two other polarization directions. To make full use of the relationship between adjacent pixels, the three nondetected polarization directions should be tentatively estimated at each pixel. Thus, for a pixel where $I_{0}$ is detected, the full intensity $I$ can be estimated by:

$$I = \frac{1}{2}\left(I_{0} + \hat{I}_{45} + \hat{I}_{90} + \hat{I}_{135}\right) \qquad (11)$$

where $\hat{I}_{45}$, $\hat{I}_{90}$, and $\hat{I}_{135}$ are the tentatively estimated polarization channel values.
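As a quick numerical check of the half-sum relation, the following Python sketch averages four channel images and compares the result with a known total intensity. The function name `full_intensity` and the synthetic channel model (an ideal linear polarizer, $I_\theta = \tfrac{1}{2} I\,[1 + p\cos(2\theta - 2\varphi)]$) are assumptions made for illustration only.

```python
import numpy as np

def full_intensity(i0, i45, i90, i135):
    """Half the sum of the four channel images (arrays of equal shape)."""
    return 0.5 * (i0 + i45 + i90 + i135)

# Synthetic channels from an assumed ideal-polarizer model:
# I_theta = 0.5 * I * (1 + p * cos(2 * (theta - phi)))
I_true, p, phi = 2.0, 0.4, np.deg2rad(20.0)
angles = (0, 45, 90, 135)
channels = [
    np.full((2, 2), 0.5 * I_true * (1 + p * np.cos(2 * (np.deg2rad(t) - phi))))
    for t in angles
]

# Each perpendicular pair sums to I_true, so half the total recovers I_true.
I_est = full_intensity(*channels)
```

Because each perpendicular pair sums to the total intensity under this model, the half-sum averages two independent estimates of $I$.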

Since the recovered intensity image should convey the actual image texture, the image edges and gradients should be fully considered during interpolation. The fundamental idea is that the interpolation should be performed along an edge and not across it. We first evaluate the gradient of the raw image in four different directions: east (the horizontal direction), northeast (the diagonal direction with positive tangent), north (the vertical direction), and northwest (the diagonal direction with negative tangent). For each pixel (i, j) of the raw image, four gradient values are calculated on a 7 × 7 window using Equation (12).
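The exact form of Equation (12) is not assumed in the following Python sketch, which only illustrates the general idea. Each directional gradient is taken here as the sum, over a 7 × 7 window, of absolute differences between pixels two steps apart (the nearest same-channel neighbors in a 2 × 2 periodic mosaic). The function name `directional_gradients`, the stride-2 differences, and the reflective border handling are all illustrative choices rather than the authors' definition.

```python
import numpy as np

def directional_gradients(raw, half=3):
    """Return four gradient maps (E, NE, N, NW) for a mosaic image.

    Assumption: a gradient is the windowed sum of absolute differences
    between same-channel pixels (stride 2) in the given direction.
    """
    raw = np.asarray(raw, dtype=float)
    h, w = raw.shape
    pad = half + 2  # window half-width plus the stride of the difference
    padded = np.pad(raw, pad, mode="reflect")
    # (row, col) offsets to the nearest same-channel pixel; north is row - 2
    steps = {"E": (0, 2), "NE": (-2, 2), "N": (-2, 0), "NW": (-2, -2)}
    grads = {}
    for name, (dr, dc) in steps.items():
        # shifted[x, y] == padded[x + dr, y + dc] for every position used below
        shifted = np.roll(padded, shift=(-dr, -dc), axis=(0, 1))
        diff = np.abs(shifted - padded)
        # accumulate the differences over the (2*half+1)^2 window of each pixel
        acc = np.zeros((h, w))
        for u in range(-half, half + 1):
            for v in range(-half, half + 1):
                acc += diff[pad + u: pad + u + h, pad + v: pad + v + w]
        grads[name] = acc
    return grads

# A horizontal ramp varies only along columns, so the N gradient vanishes.
ramp = np.tile(np.arange(12.0), (12, 1))
g = directional_gradients(ramp)
```

Under these assumptions, a small gradient in one direction indicates that the image is smooth along that direction, which is where interpolation should be performed.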

The process of tentatively estimating the nondetected polarization intensity has two steps, diagonal interpolation and vertical and horizontal interpolation:

Diagonal interpolation. At each pixel, diagonal interpolation estimates the dual value (i.e., the value of the perpendicular direction) of the detected one. If the gradient in the NE direction is larger than the gradient in the NW direction, bicubic interpolation is applied to the target pixel along the NW direction. If the gradient in the NW direction is larger than the gradient in the NE direction, bicubic interpolation is applied to the target pixel along the NE direction. If the two gradients are equal, the average of the two bicubic interpolation values is taken. Diagonal interpolation of all four polarization channels should be completed before the next step.

Vertical and horizontal interpolation. As shown in Figure 1, each polarization channel is detected in every second row and every second column. Thus, when bicubic interpolation is performed in the N and E directions for any target pixel (i, j), the required adjacent pixels have detected values in only one of the two directions. In the other direction, only the estimated values from the diagonal interpolation step above can be used. For example, when $I_{0}$ is detected at pixel (i, j), the adjacent detected $I_{90}$ values can be used in both the NE and NW directions (the diagonal directions). Thus, $\hat{I}_{90}$ can be estimated by diagonal interpolation. However, of the two remaining channels, one has no detected values in the E direction (the horizontal direction) and the other has none in the N direction (the vertical direction). Thus, in those directions, the values estimated by their own diagonal interpolations are used. If the gradient in the E direction is larger than the gradient in the N direction, bicubic interpolation is applied to the target pixel along the N direction. If the gradient in the N direction is larger than the gradient in the E direction, bicubic interpolation is applied to the target pixel along the E direction. If the two gradients are equal, the average of the two is taken.
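The selection rule shared by both interpolation steps can be sketched in Python as follows. The helper names `cubic_midpoint` and `edge_directed` are hypothetical, and the four-tap weights assume a Keys cubic convolution kernel (parameter -0.5) evaluated midway between equally spaced samples; the text only specifies bicubic interpolation, so the actual kernel may differ.

```python
import numpy as np

# Keys cubic convolution weights (a = -0.5) at the midpoint of four samples
CUBIC_MID = np.array([-1.0, 9.0, 9.0, -1.0]) / 16.0

def cubic_midpoint(samples):
    """Interpolate midway between the two central of four equally spaced samples."""
    return float(np.dot(CUBIC_MID, np.asarray(samples, dtype=float)))

def edge_directed(value_a, grad_a, value_b, grad_b):
    """Keep the estimate from the direction with the smaller gradient.

    On a tie, return the average of the two estimates.
    """
    if grad_a < grad_b:
        return value_a
    if grad_b < grad_a:
        return value_b
    return 0.5 * (value_a + value_b)

# Example: samples along the NW and NE diagonals around a target pixel
est_nw = cubic_midpoint([10.0, 12.0, 14.0, 16.0])  # smooth along NW
est_ne = cubic_midpoint([2.0, 4.0, 30.0, 32.0])    # crosses an edge along NE
value = edge_directed(est_nw, 0.5, est_ne, 8.0)    # NE gradient is larger, so NW is used
```

The same `edge_directed` call applies to the N and E pair in the second step, with the samples in one of the two directions coming from the diagonal estimates.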

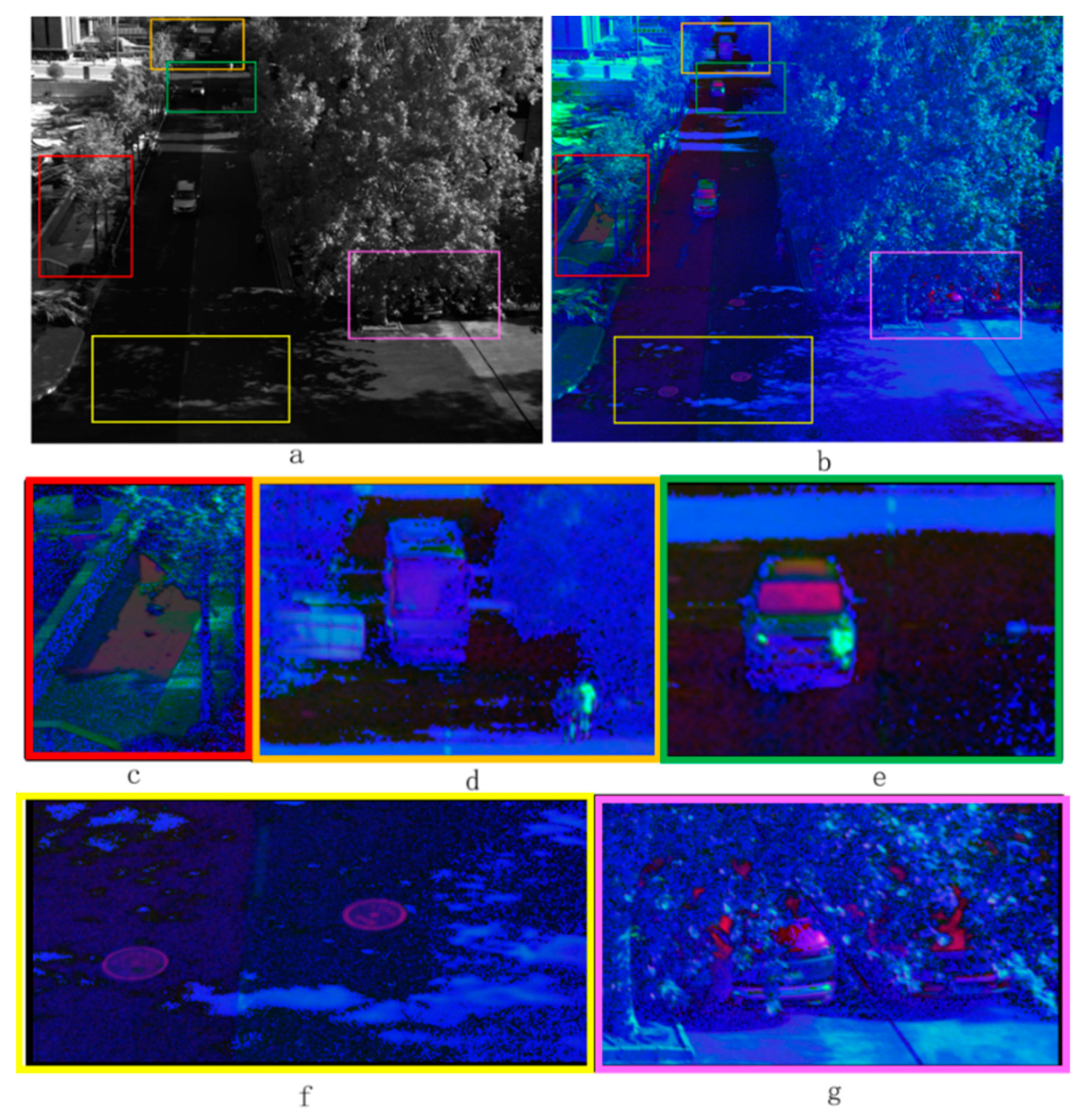

2.2.2. Interpolate Each Polarization Channel by Intensity Ratio Constraint

After obtaining the tentatively estimated intensity image $I$, each polarization channel $I_{0}$, $I_{45}$, $I_{90}$, and $I_{135}$ can be calculated. Considering the constraint of Equation (10), a method derived from the guided filter technique [30] is proposed. This method allows each polarization channel $I_{\theta}$ to adhere to the texture of the intensity image $I$. At the same time, the relationship between the channels is fully retained, which ensures the correct recovery of polarization information.

In the proposed method, the intensity image is employed as a guidance image, which is used as a reference to exploit the image structures. The input sparse polarization image is then upsampled by the derived guided filter.

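For each polarization channel image $I_{\theta}$, $\theta \in \{0^{\circ}, 45^{\circ}, 90^{\circ}, 135^{\circ}\}$, we define the filter as:

$$\hat{I}_{\theta}(i, j) = a_{p,q}\, I(i, j) + b_{p,q}, \quad \forall (i, j) \in \omega_{p,q} \qquad (13)$$

where $\hat{I}_{\theta}$ is the filtering output and $I$ is the guidance image. Equation (13) assumes that $\hat{I}_{\theta}$ is a linear transform of $I$ in a window $\omega_{p,q}$ centered at the pixel (p, q), where ($a_{p,q}$, $b_{p,q}$) are the linear coefficients assumed to be constant in $\omega_{p,q}$. A square window of radius $r$ is used, i.e., the side length is $2r + 1$. This local linear model ensures that $\hat{I}_{\theta}$ has an edge only if $I$ has an edge, because $\nabla \hat{I}_{\theta} = a_{p,q} \nabla I$. At the same time, Equation (13) is consistent with Equation (10).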

To determine the linear coefficients ($a_{p,q}$, $b_{p,q}$), we minimize the following cost function in the window $\omega_{p,q}$:

$$E(a_{p,q}, b_{p,q}) = \sum_{(i,j) \in \omega_{p,q}} M(i,j) \left[ \left( a_{p,q}\, I(i,j) + b_{p,q} - I_{\theta}(i,j) \right)^{2} + \epsilon\, b_{p,q}^{2} \right] \qquad (14)$$

where $M(i,j)$ is a binary mask at the pixel (i, j), which is one for the sampled pixels (i.e., $I_{\theta}$ has a sampled value) and zero for the others. $\epsilon$ is a regularization parameter penalizing large $b_{p,q}$ values to ensure that the bias term $b_{p,q}$ is not too large and is only used to fit the nonideal measured value described in Equation (10). Equation (10) is the physical fact we deduced. In Equation (10), the coefficients a and c (analogous to $a_{p,q}$ in Equations (13) and (14)) determine the proportion of $I_{\theta}$ in $I$, and the bias term Δ (analogous to $b_{p,q}$ in Equations (13) and (14)) only characterizes a small error. Thus, in Equation (13), the output $\hat{I}_{\theta}$ should be mainly determined by the coefficient $a_{p,q}$, and the bias term $b_{p,q}$ representing the error should be small. That is, regularizing the coefficient $b_{p,q}$ is appropriate and consistent with the physical facts. Regularizing $b_{p,q}$ instead of $a_{p,q}$ in the cost function is an important difference between our method and the original guided filter [30]. Comparative experimental results are shown in Section 3.2.

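Equation (14) has a closed-form solution. First, the partial derivatives of the cost function with respect to $a_{p,q}$ and $b_{p,q}$ are set to zero:

$$\frac{\partial E}{\partial a_{p,q}} = 2 \sum_{(i,j) \in \omega_{p,q}} M(i,j)\, I(i,j) \left( a_{p,q}\, I(i,j) + b_{p,q} - I_{\theta}(i,j) \right) = 0 \qquad (15)$$

$$\frac{\partial E}{\partial b_{p,q}} = 2 \sum_{(i,j) \in \omega_{p,q}} M(i,j) \left[ \left( a_{p,q}\, I(i,j) + b_{p,q} - I_{\theta}(i,j) \right) + \epsilon\, b_{p,q} \right] = 0 \qquad (16)$$

Then, from Equation (16), $b_{p,q}$ can be determined:

$$b_{p,q} = \frac{\bar{I}_{\theta} - a_{p,q}\, \bar{I}}{1 + \epsilon} \qquad (17)$$

where $\bar{I}$ and $\bar{I}_{\theta}$ are the mean values of $I$ and $I_{\theta}$, respectively, in the window $\omega_{p,q}$ under the mask $M$.

Finally, by incorporating Equation (17) into Equation (15), $a_{p,q}$ can be obtained:

$$a_{p,q} = \frac{(1 + \epsilon)\, \overline{I I_{\theta}} - \bar{I}\, \bar{I}_{\theta}}{(1 + \epsilon)\, \overline{I^{2}} - \bar{I}^{2}} \qquad (18)$$

where $\overline{I I_{\theta}}$ and $\overline{I^{2}}$ denote the mean values of $I I_{\theta}$ and $I^{2}$ in the same window under the mask $M$.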
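A minimal Python sketch of the closed-form solution for a single window is given below. It assumes the cost is the masked squared error plus a penalty $\epsilon b^{2}$ counted once per sampled pixel; if the penalty is weighted differently, the factor $(1 + \epsilon)$ changes accordingly. The function name `window_coefficients` and its argument names are hypothetical.

```python
import numpy as np

def window_coefficients(guide, sparse, mask, eps):
    """Closed-form (a, b) for one window.

    Assumed cost: sum over sampled pixels of (a*I + b - I_theta)^2 + eps*b^2,
    where `guide` is I, `sparse` holds I_theta at sampled pixels, and `mask`
    marks the sampled pixels.
    """
    m = np.asarray(mask, dtype=bool)
    g, s = guide[m], sparse[m]
    # means taken over the sampled pixels only
    mean_g, mean_s = g.mean(), s.mean()
    mean_gg, mean_gs = (g * g).mean(), (g * s).mean()
    a = ((1 + eps) * mean_gs - mean_g * mean_s) / ((1 + eps) * mean_gg - mean_g ** 2)
    b = (mean_s - a * mean_g) / (1 + eps)
    return a, b

# Example: the channel is exactly 30% of the guide at the sampled pixels
rng = np.random.default_rng(0)
guide = rng.uniform(0.5, 1.5, size=(5, 5))
mask = np.zeros((5, 5), dtype=bool)
mask[::2, ::2] = True
sparse = np.where(mask, 0.3 * guide, 0.0)
a, b = window_coefficients(guide, sparse, mask, eps=0.01)  # a close to 0.3, b close to 0
```

When the sampled channel is an exact fraction of the guide, the fit returns that fraction as `a` with a vanishing bias, which is the behavior the ratio constraint is meant to favor.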

At each pixel (i, j), the linear coefficients ($a_{p,q}$, $b_{p,q}$) differ among the overlapping windows $\omega_{p,q}$ that cover (i, j). Thus, the coefficients are averaged over all windows covering (i, j), giving $\bar{a}(i,j)$ and $\bar{b}(i,j)$. Equation (13) can then be rewritten as:

$$\hat{I}_{\theta}(i,j) = \bar{a}(i,j)\, I(i,j) + \bar{b}(i,j) \qquad (19)$$

Based on Equation (19), each polarization channel with polarization direction $\theta$ can be interpolated. The full steps of the algorithm are shown in Algorithm 1.

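| Algorithm 1 Polarized Intensity Ratio Constraint Demosaic for Division-of-Focal-Plane Polarimetric Image |
| --- |
| Input: RAW mosaic polarization image; |
| 1: Compute the directional gradients by Equation (12); |
| 2: Estimate the intensity image $I$ by the method in Section 2.2.1; |
| 3: Recover each polarization channel $\hat{I}_{\theta}$ by Equation (19). |
| Output: Four-channel polarization images $\hat{I}_{0}$, $\hat{I}_{45}$, $\hat{I}_{90}$, $\hat{I}_{135}$. |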
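Step 3 can be sketched over a whole image by replacing the per-window sums with box filters and then averaging the coefficients of all windows that cover each pixel. The Python code below is an illustrative sketch, not the authors' implementation: `box_sum` and `guided_upsample` are hypothetical names, the regularization is assumed to act on $b$ once per sampled pixel, windows are clipped at the image border, and the default radius and $\epsilon$ are arbitrary.

```python
import numpy as np

def box_sum(img, r):
    """Sum over a (2r+1) x (2r+1) window, with zeros outside the image."""
    h, w = img.shape
    padded = np.pad(img, r, mode="constant")
    out = np.zeros((h, w))
    for u in range(2 * r + 1):
        for v in range(2 * r + 1):
            out += padded[u:u + h, v:v + w]
    return out

def guided_upsample(guide, sparse, mask, r=2, eps=1e-3):
    """Fill one sparse channel using the intensity image as guidance."""
    mask = np.asarray(mask, dtype=float)
    n = np.maximum(box_sum(mask, r), 1.0)  # sampled pixels per window
    mean_g = box_sum(mask * guide, r) / n
    mean_s = box_sum(mask * sparse, r) / n
    mean_gg = box_sum(mask * guide * guide, r) / n
    mean_gs = box_sum(mask * guide * sparse, r) / n
    # per-window coefficients with the penalty on b (assumed form)
    denom = (1 + eps) * mean_gg - mean_g ** 2
    a = ((1 + eps) * mean_gs - mean_g * mean_s) / np.maximum(denom, 1e-12)
    b = (mean_s - a * mean_g) / (1 + eps)
    # average the coefficients of every window covering each pixel
    count = box_sum(np.ones_like(mask), r)
    a_bar = box_sum(a, r) / count
    b_bar = box_sum(b, r) / count
    return a_bar * guide + b_bar

# Example: one channel sampled at every second row and column
rng = np.random.default_rng(0)
guide = rng.uniform(0.5, 1.5, size=(8, 8))
mask = np.zeros((8, 8))
mask[::2, ::2] = 1.0
sparse = 0.4 * guide * mask
dense = guided_upsample(guide, sparse, mask)
```

In this example the sampled channel is a fixed fraction of the guide, so every window yields the same ratio and the output reproduces that fraction at the unsampled pixels as well.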