Investigating the Ability to Identify New Constructions in Urban Areas Using Images from Unmanned Aerial Vehicles, Google Earth, and Sentinel-2

Abstract

1. Introduction

2. Materials and Methods

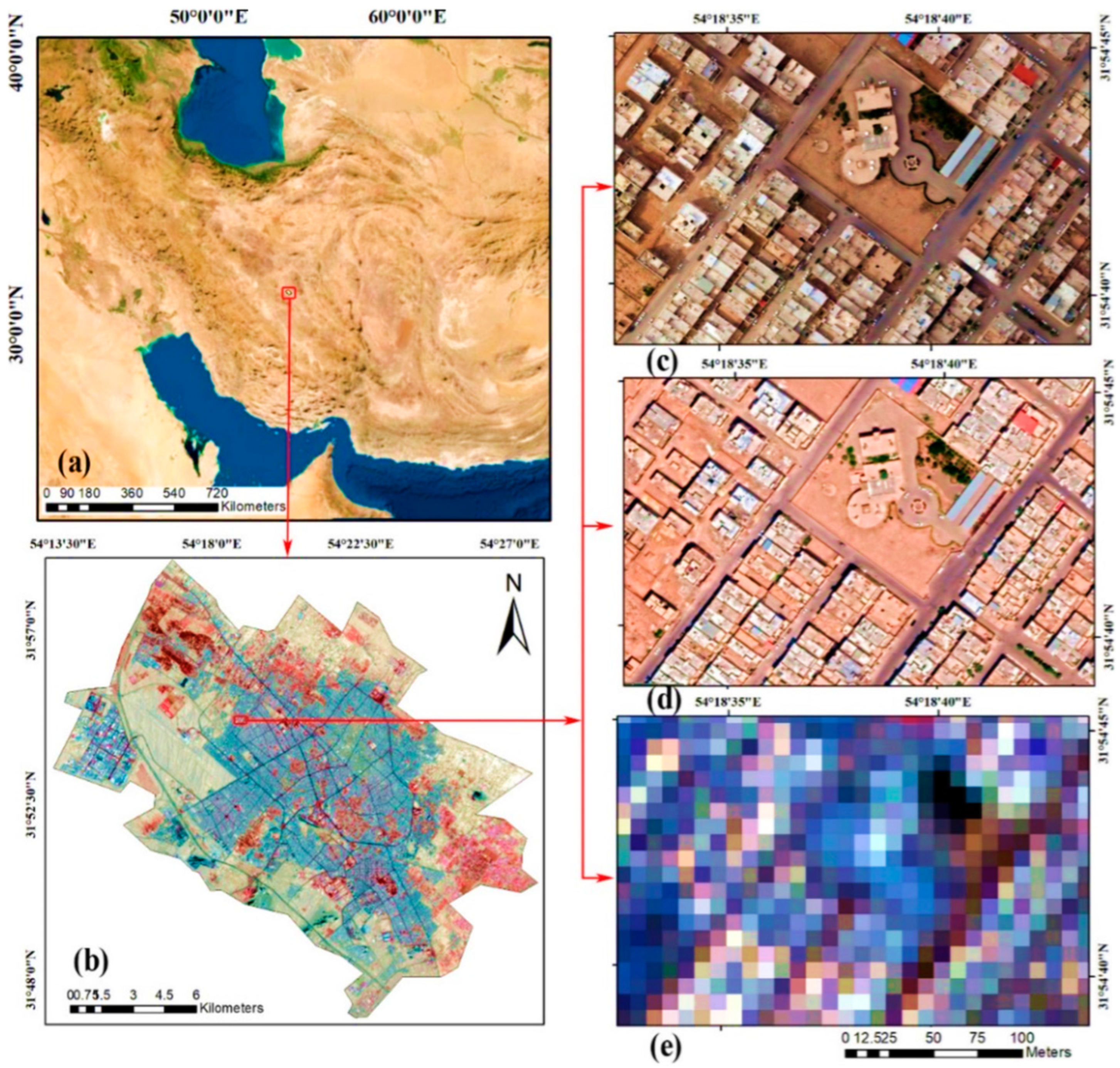

2.1. Study Area

2.2. Data Inputs

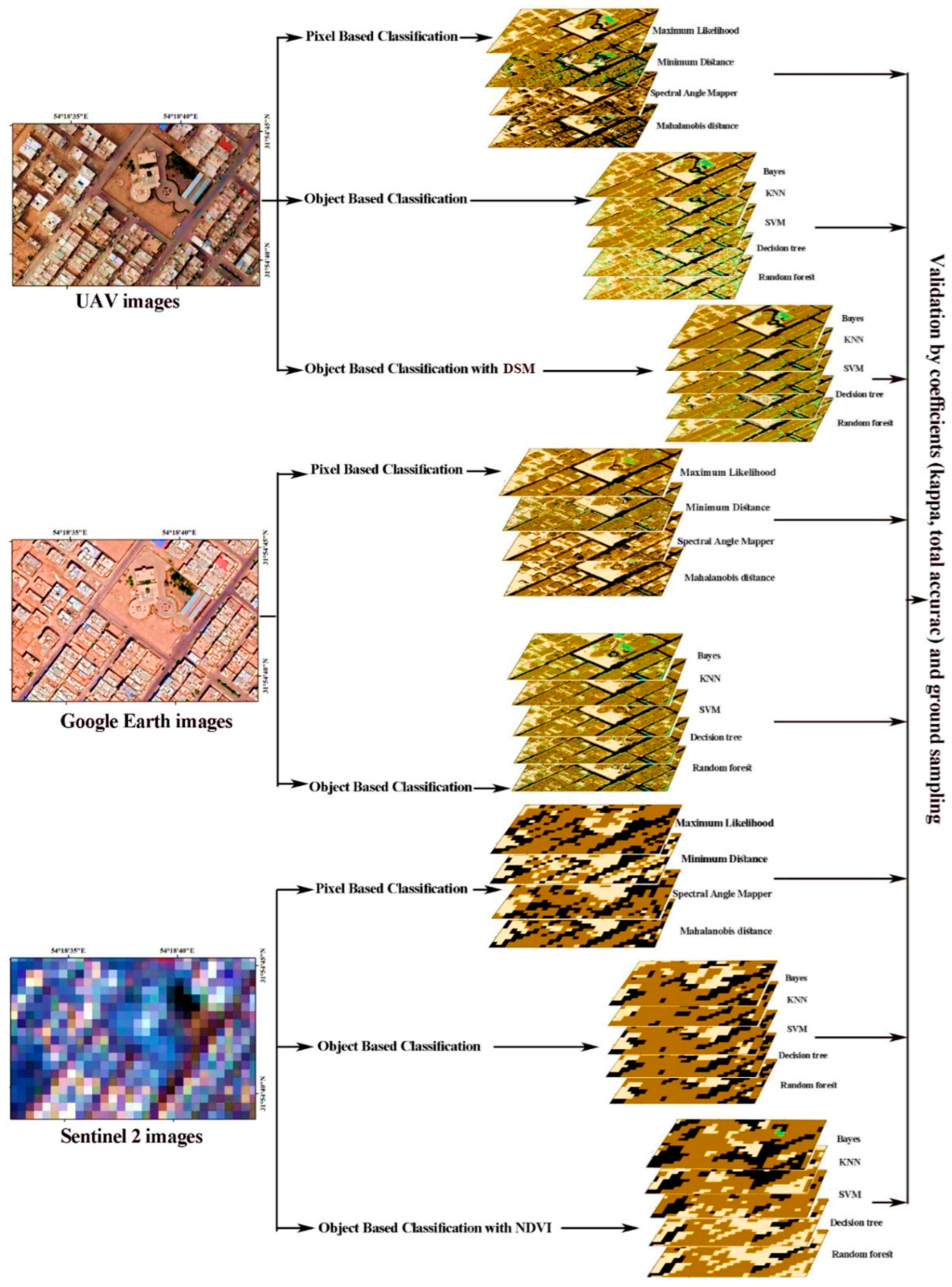

2.3. Method

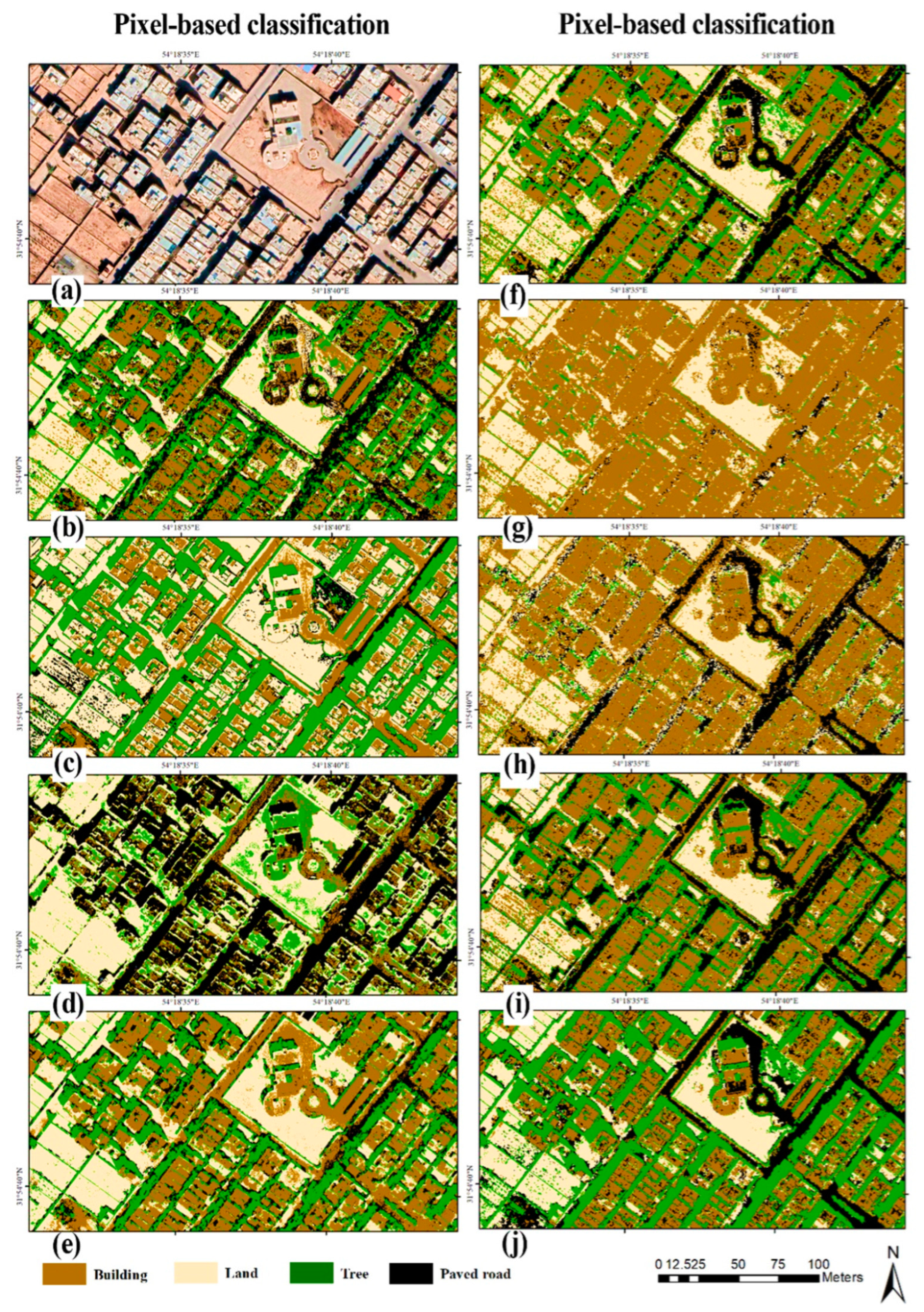

2.4. Image Classification Using Pixel-Based Methods

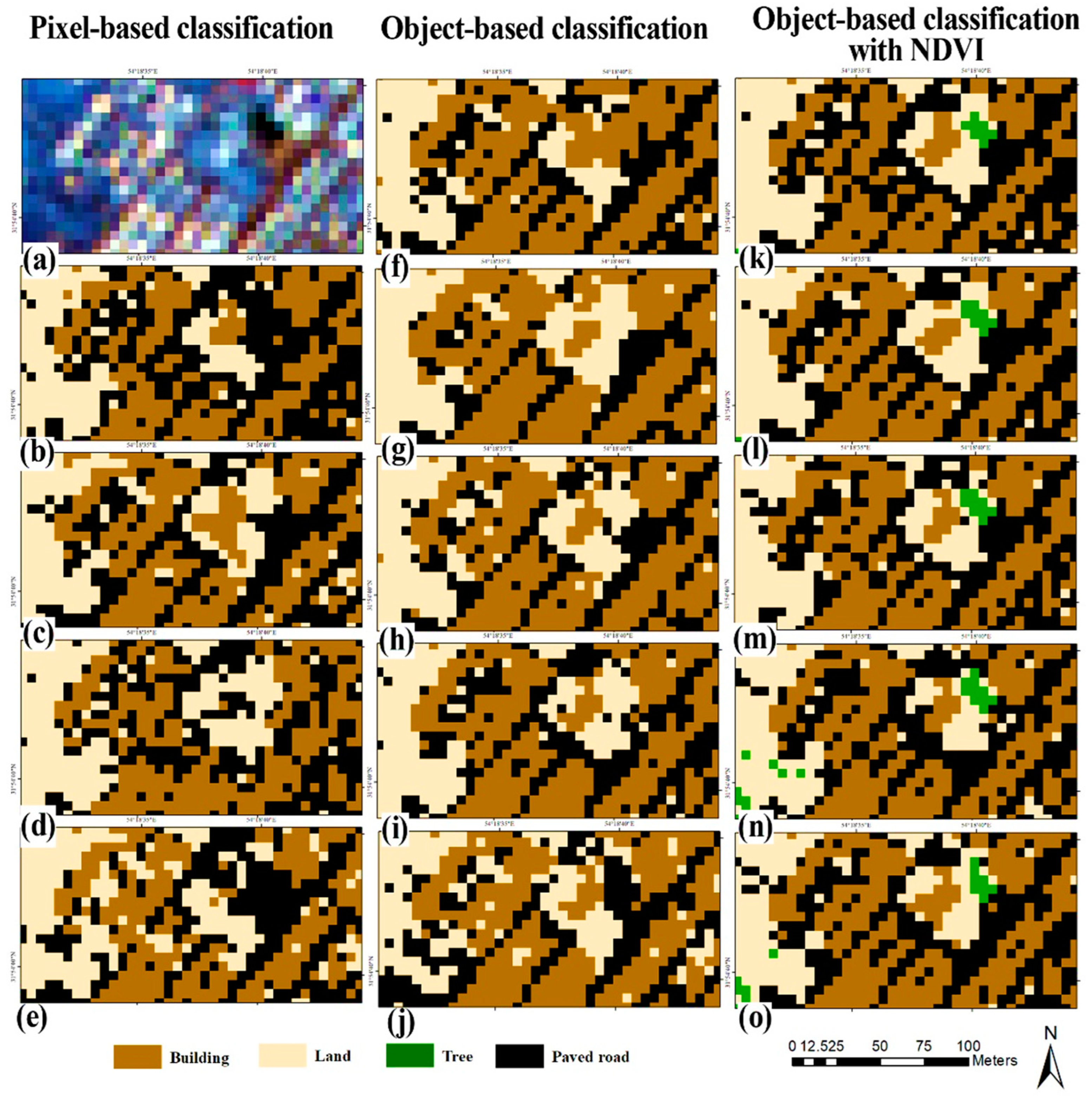

2.5. Image Classification Using Object-Based Methods

2.6. Evaluating the Accuracy of Classification

2.7. Proposed Method

3. Results and Discussion

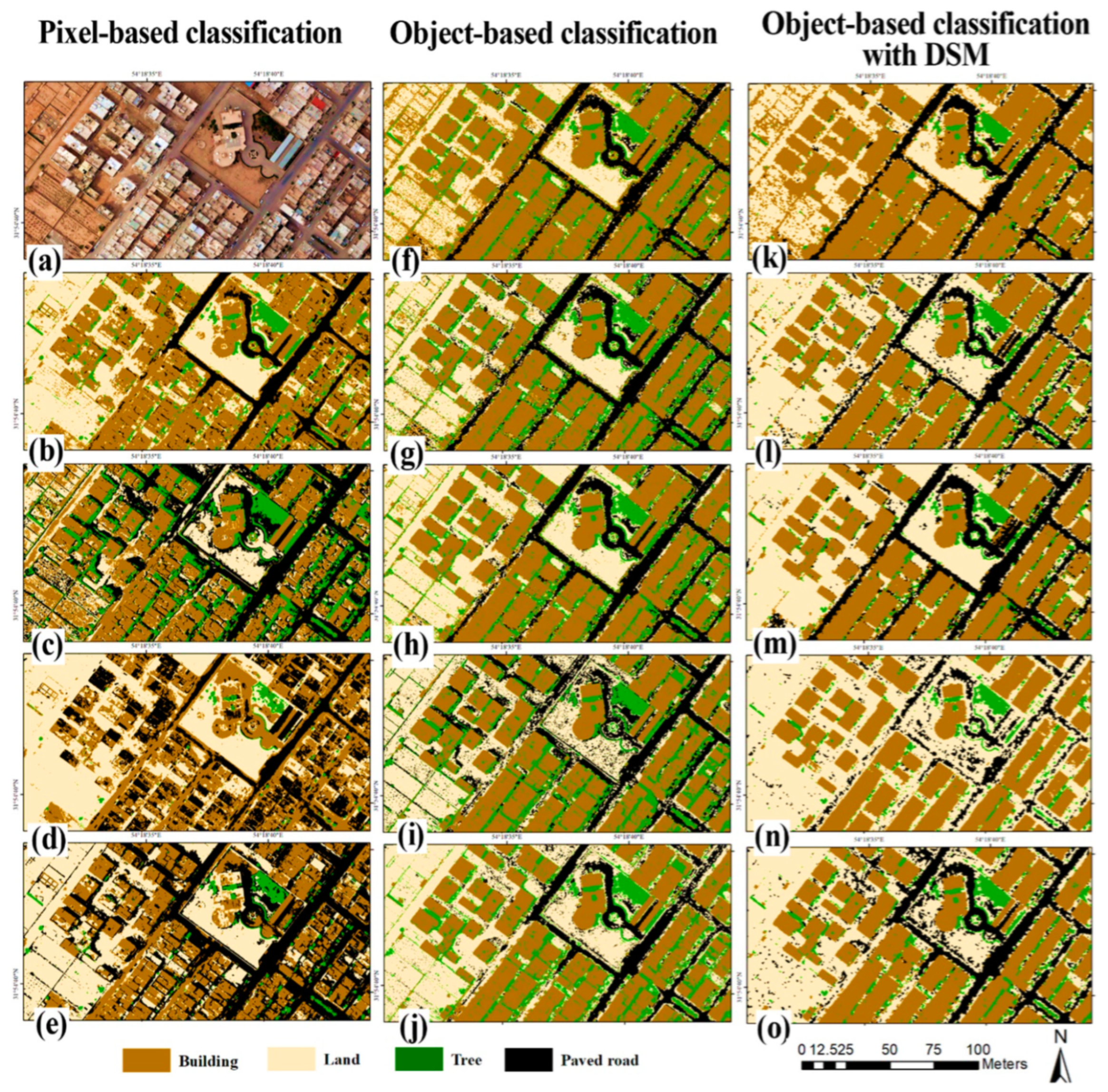

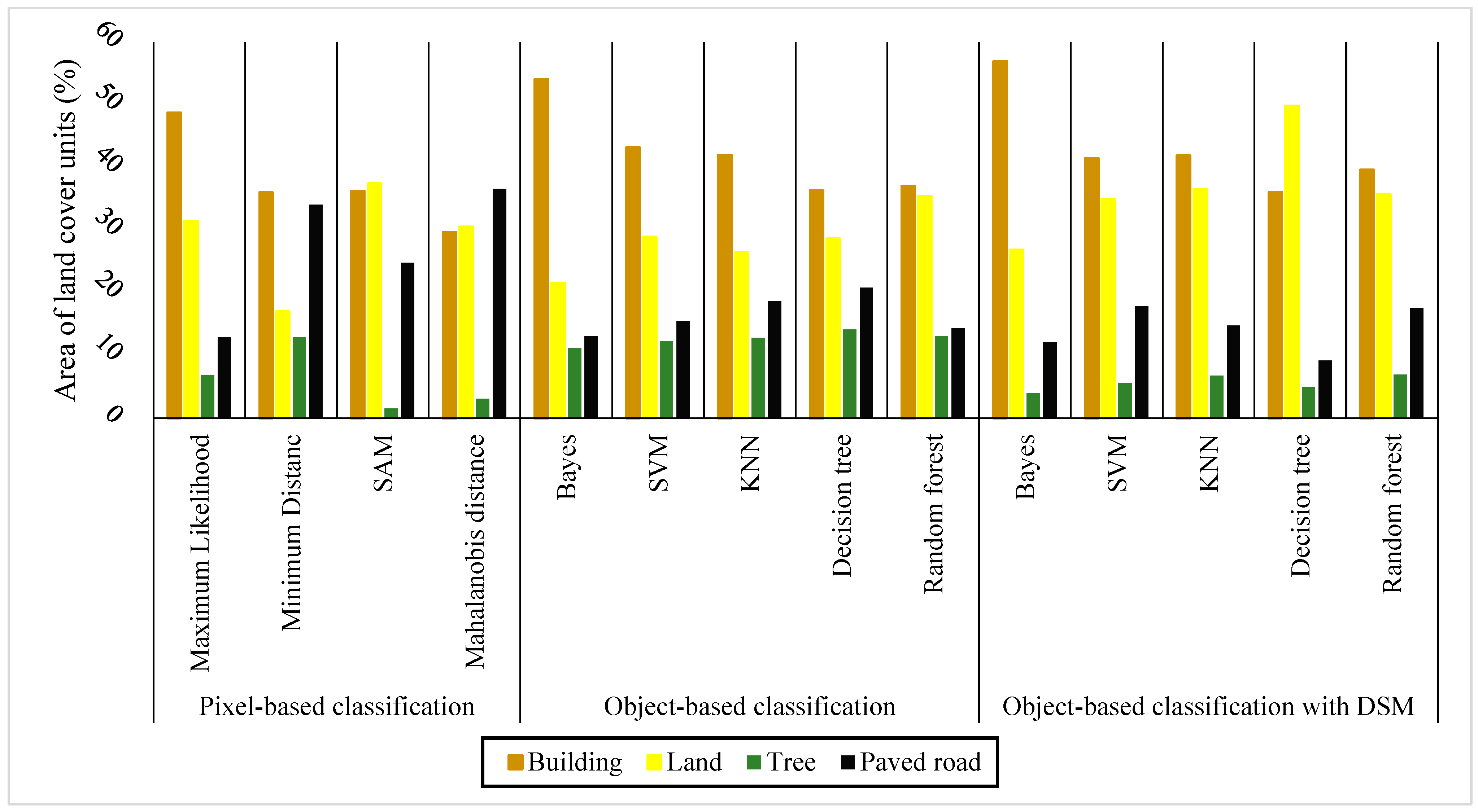

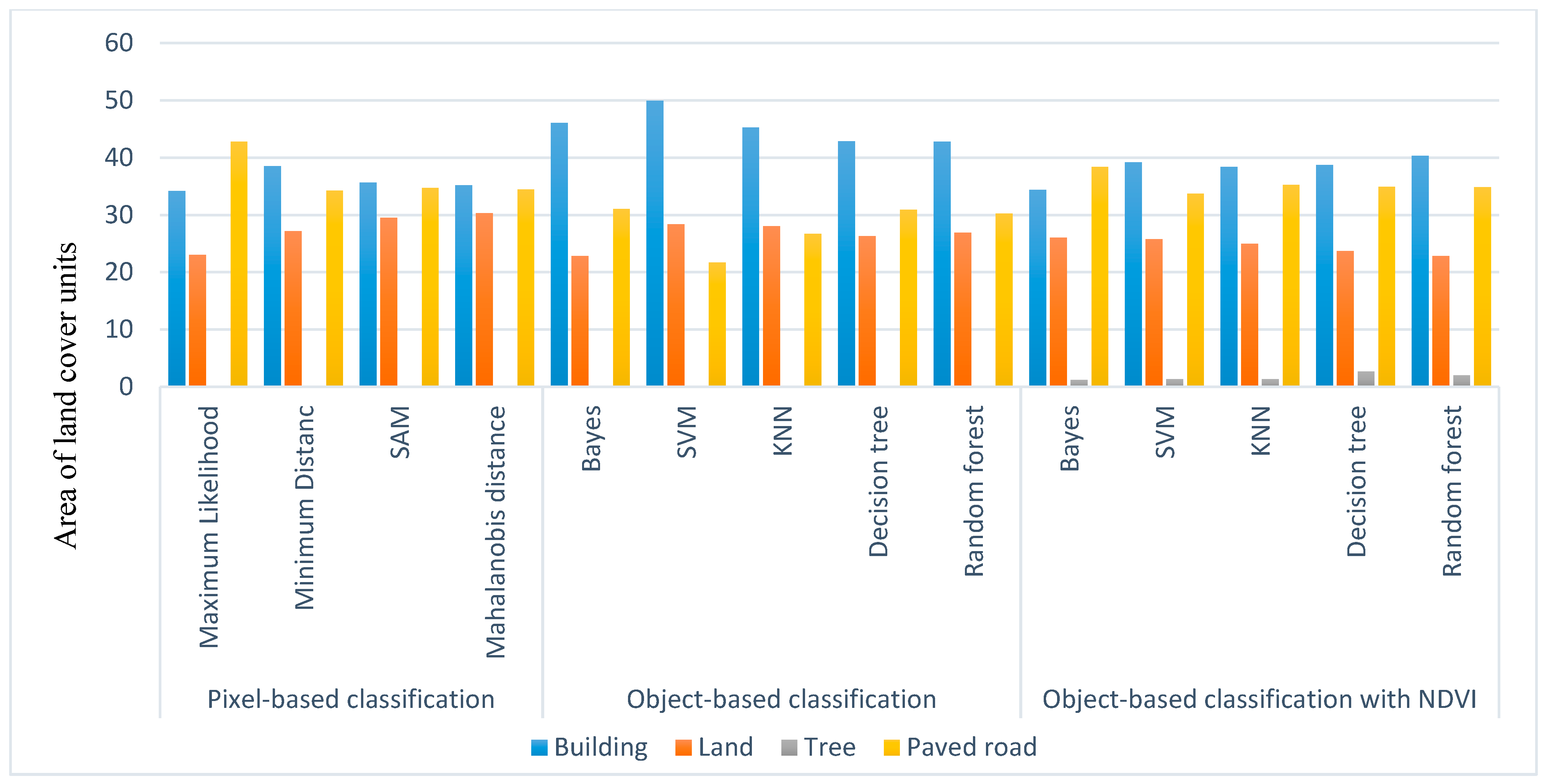

3.1. Accuracy of UAV Image Classification

3.2. Accuracy of Google Earth Image Classification

3.3. Accuracy of Sentinel-2 Image Classification

3.4. Proposed Method for Identification of New Constructions in Urban Areas

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Input images with their spectral bands and spatial resolutions.

| Image | Wavelength (µm) | Band | Spatial Resolution (m) |
|---|---|---|---|
| Sentinel-2A | 0.458–0.523 | Band 2 (Blue) | 10 |
| Sentinel-2A | 0.543–0.578 | Band 3 (Green) | 10 |
| Sentinel-2A | 0.650–0.680 | Band 4 (Red) | 10 |
| Sentinel-2A | 0.785–0.899 | Band 8 (Near infrared) | 10 |
| Google Earth | | | 1.5 |
| UAV RGB | 0.450 | Blue | 0.15 |
| UAV RGB | 0.550 | Green | 0.15 |
| UAV RGB | 0.625 | Red | 0.15 |
| DSM | - | - | 0.15 |
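
To put the spatial resolutions in the input table on a common footing, the number of finer pixels that fit inside one coarser pixel is the squared ratio of their ground sampling distances. This is simple arithmetic on the table values, assuming square pixels and no resampling:

$$
\begin{aligned}
\left(\tfrac{10\ \mathrm{m}}{0.15\ \mathrm{m}}\right)^2 &\approx 4444 && \text{UAV pixels per Sentinel-2 pixel}\\
\left(\tfrac{1.5\ \mathrm{m}}{0.15\ \mathrm{m}}\right)^2 &= 100 && \text{UAV pixels per Google Earth pixel}\\
\left(\tfrac{10\ \mathrm{m}}{1.5\ \mathrm{m}}\right)^2 &\approx 44.4 && \text{Google Earth pixels per Sentinel-2 pixel}
\end{aligned}
$$

In other words, a single 10 m Sentinel-2 pixel covers the same ground area (100 m²) as several thousand 0.15 m UAV pixels.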

Accuracy of UAV image classification.

| Approach | Classifier | Kappa Coefficient | Overall Accuracy (%) |
|---|---|---|---|
| Pixel-based classification | Maximum likelihood | 0.82 | 85 |
| | Minimum distance | 0.56 | 79 |
| | Spectral angle mapping | 0.62 | 76 |
| | Mahalanobis | 0.51 | 67 |
| Object-based classification | Bayes | 0.9 | 92 |
| | Support vector machine | 0.91 | 88 |
| | K-nearest-neighbor | 0.93 | 92 |
| | Decision tree | 0.78 | 82 |
| | Random forest | 0.83 | 76 |
| Object-based classification with DSM | Bayes | 0.94 | 93 |
| | Support vector machine | 0.95 | 94 |
| | K-nearest-neighbor | 0.97 | 94 |
| | Decision tree | 0.93 | 91 |
| | Random forest | 0.91 | 92 |
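
The kappa coefficient and overall accuracy reported in these tables are both derived from a confusion matrix of reference versus classified samples. The sketch below computes them in plain Python using the standard formulas, overall accuracy $p_o$ and Cohen's kappa $\kappa = (p_o - p_e)/(1 - p_e)$, where $p_e$ is the chance agreement from the row and column totals. The two-class matrix and its class names are hypothetical and are not data from this study.

```python
from typing import Sequence


def overall_accuracy(cm: Sequence[Sequence[int]]) -> float:
    """Share of samples on the diagonal of a square confusion matrix."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def cohen_kappa(cm: Sequence[Sequence[int]]) -> float:
    """Cohen's kappa: observed agreement corrected for chance agreement p_e."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    p_o = overall_accuracy(cm)
    row_totals = [sum(cm[i]) for i in range(n)]
    col_totals = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    # Chance agreement: sum over classes of (row share * column share).
    p_e = sum(row_totals[k] * col_totals[k] for k in range(n)) / total**2
    return (p_o - p_e) / (1 - p_e)


# Hypothetical matrix (rows = reference, columns = classified):
# class 0 = "new construction", class 1 = "other".
cm = [[45, 5],
      [10, 40]]

oa = overall_accuracy(cm)
kappa = cohen_kappa(cm)
print(f"OA = {oa:.2f}, kappa = {kappa:.2f}")
```

For this matrix $p_o = 0.85$ and $p_e = 0.5$, so $\kappa = 0.70$. Because $p_e \ge 0$, kappa computed this way can never exceed the overall accuracy expressed as a fraction.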

Accuracy of Google Earth image classification.

| Approach | Classifier | Kappa Coefficient | Overall Accuracy (%) |
|---|---|---|---|
| Pixel-based classification | Maximum likelihood | 0.75 | 80 |
| | Minimum distance | 0.41 | 53 |
| | Spectral angle mapping | 0.39 | 47 |
| | Mahalanobis | 0.56 | 60 |
| Object-based classification | Bayes | 0.56 | 53 |
| | Support vector machine | 0.23 | 37 |
| | K-nearest-neighbor | 0.83 | 79 |
| | Decision tree | 0.69 | 74 |
| | Random forest | 0.66 | 75 |
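
The four pixel-based rules compared in these tables (maximum likelihood, minimum distance, spectral angle mapping and Mahalanobis distance) differ mainly in how they score a pixel against per-class training statistics. The sketch below implements textbook versions of each with NumPy. The class names, the synthetic three-band training pixels and the equal-prior assumption are illustrative only; they are not the study's training data or software settings.

```python
import numpy as np


def class_stats(samples):
    """Per-class mean vector and covariance matrix.

    samples: dict mapping a class label to an array of shape (n_pixels, n_bands).
    """
    return {c: (x.mean(axis=0), np.cov(x, rowvar=False)) for c, x in samples.items()}


def minimum_distance(pixel, stats):
    # Nearest class mean in Euclidean distance.
    return min(stats, key=lambda c: np.linalg.norm(pixel - stats[c][0]))


def spectral_angle(pixel, stats):
    # Smallest angle between the pixel vector and each class mean spectrum.
    def angle(c):
        m = stats[c][0]
        cos = pixel @ m / (np.linalg.norm(pixel) * np.linalg.norm(m))
        return np.arccos(np.clip(cos, -1.0, 1.0))
    return min(stats, key=angle)


def mahalanobis(pixel, stats):
    # Squared distance to the class mean, scaled by that class's covariance.
    def dist(c):
        m, s = stats[c]
        d = pixel - m
        return d @ np.linalg.solve(s, d)
    return min(stats, key=dist)


def maximum_likelihood(pixel, stats):
    # Gaussian log-likelihood with equal class priors (constant terms dropped).
    def loglik(c):
        m, s = stats[c]
        d = pixel - m
        _, logdet = np.linalg.slogdet(s)
        return -0.5 * logdet - 0.5 * d @ np.linalg.solve(s, d)
    return max(stats, key=loglik)


# Hypothetical RGB training pixels for two illustrative classes.
rng = np.random.default_rng(0)
samples = {
    "built-up": rng.normal([200.0, 190.0, 180.0], 10.0, size=(50, 3)),
    "vegetation": rng.normal([60.0, 120.0, 50.0], 10.0, size=(50, 3)),
}
stats = class_stats(samples)
rules = (minimum_distance, spectral_angle, mahalanobis, maximum_likelihood)

pixel = np.array([195.0, 185.0, 175.0])
for rule in rules:
    print(rule.__name__, rule(pixel, stats))
```

Minimum distance and spectral angle use only the class means, while Mahalanobis and maximum likelihood also use each class's covariance. Spectral angle ignores vector length, so it is less sensitive to overall brightness differences between pixels of the same material.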

Accuracy of Sentinel-2 image classification.

| Approach | Classifier | Kappa Coefficient | Overall Accuracy (%) |
|---|---|---|---|
| Pixel-based classification | Maximum likelihood | 0.74 | 80 |
| | Minimum distance | 0.66 | 79 |
| | Spectral angle mapping | 0.73 | 67 |
| | Mahalanobis | 0.47 | 51 |
| Object-based classification | Bayes | 0.71 | 76 |
| | Support vector machine | 0.87 | 74 |
| | K-nearest-neighbor | 0.85 | 82 |
| | Decision tree | 0.8 | 75 |
| | Random forest | 0.69 | 73 |
| Object-based classification with NDVI | Bayes | 0.74 | 80 |
| | Support vector machine | 0.85 | 81 |
| | K-nearest-neighbor | 0.81 | 77 |
| | Decision tree | 0.76 | 83 |
| | Random forest | 0.71 | 75 |
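
NDVI, used as an additional feature in the last group of Sentinel-2 rows, combines the red (Band 4) and near-infrared (Band 8) bands listed in the input table as $\mathrm{NDVI} = (\mathrm{NIR} - \mathrm{Red})/(\mathrm{NIR} + \mathrm{Red})$. The minimal NumPy sketch below computes NDVI and then averages it over image objects. The reflectance values and segment labels are hypothetical, and averaging per object is an assumption about how such a feature can enter an object-based classifier, not a description of the software used in the study.

```python
import numpy as np


def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red), e.g. Sentinel-2 Band 8 and Band 4."""
    nir = np.asarray(nir, dtype=np.float64)
    red = np.asarray(red, dtype=np.float64)
    denom = nir + red
    out = np.zeros_like(denom)
    # Leave NDVI at 0 where both bands are 0 (e.g. no-data pixels) instead of dividing by zero.
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out


def object_mean(values, segments):
    """Mean of a per-pixel layer within each image object.

    segments: integer object IDs, assumed to run from 0 to k-1 with every ID present.
    """
    v = np.asarray(values, dtype=np.float64).ravel()
    s = np.asarray(segments).ravel()
    return np.bincount(s, weights=v) / np.bincount(s)


# Hypothetical 2 x 2 reflectance tile split into two objects (hypothetical IDs 0 and 1).
b8 = np.array([[0.40, 0.42], [0.25, 0.26]])
b4 = np.array([[0.05, 0.06], [0.20, 0.20]])
segments = np.array([[0, 0], [1, 1]])

ndvi_img = ndvi(b8, b4)
obj_ndvi = object_mean(ndvi_img, segments)
print(obj_ndvi)
```

Because NDVI is a ratio, a common scale factor applied to both bands (for example, integer-scaled reflectance) cancels out. The same `object_mean` helper could equally be applied to the UAV DSM to give each object a mean-height feature.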

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Aliabad, F.A.; Malamiri, H.R.G.; Shojaei, S.; Sarsangi, A.; Ferreira, C.S.S.; Kalantari, Z. Investigating the Ability to Identify New Constructions in Urban Areas Using Images from Unmanned Aerial Vehicles, Google Earth, and Sentinel-2. Remote Sens. 2022, 14, 3227. https://doi.org/10.3390/rs14133227