AC-LSTM: Anomaly State Perception of Infrared Point Targets Based on CNN+LSTM

Abstract

1. Introduction

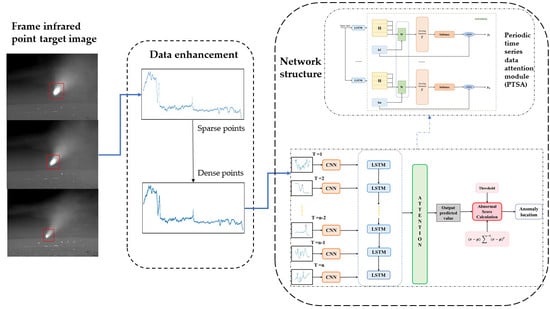

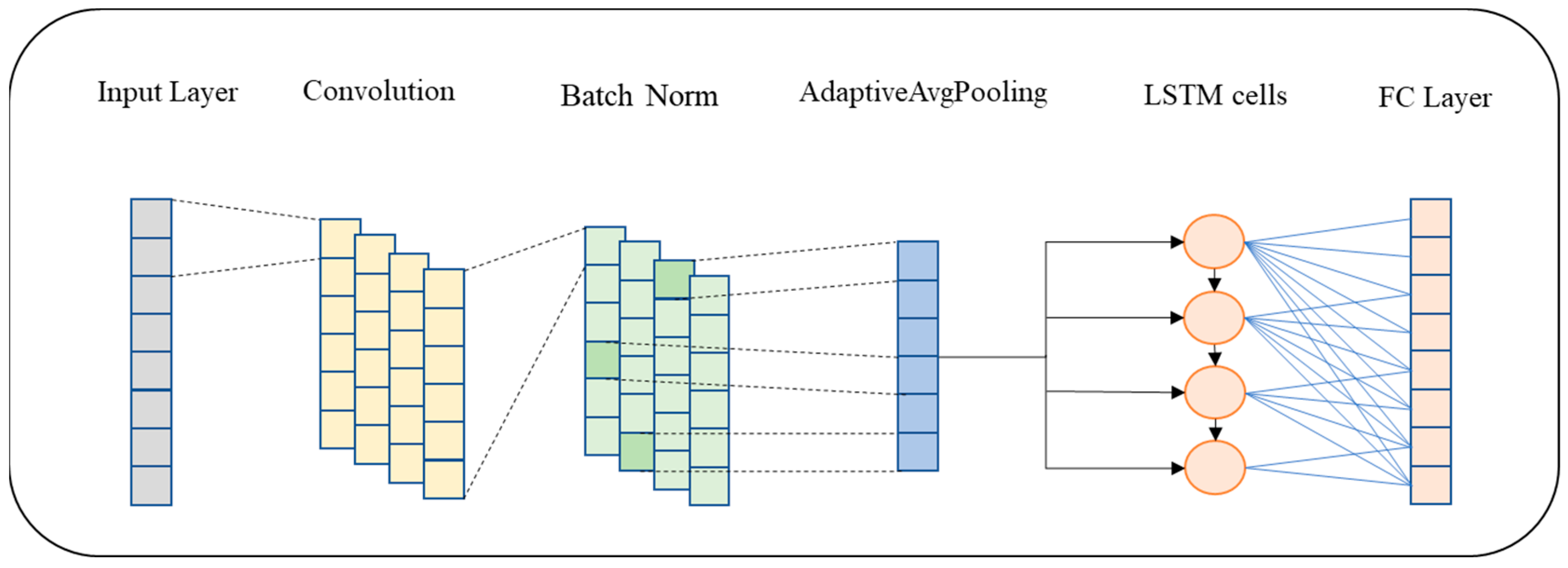

- A spatio-temporal hybrid network (CNN+LSTM), called AC-LSTM, is designed for sensing abnormal events of infrared targets. It attends not only to the abnormal temporal characteristics of the target but also to the changes in spatial features between the abnormal and normal states. It pre-processes and analyzes the target’s radiation and motion characteristics online and in real time, whereas traditional methods can only process the data afterward, so the target can be observed and handled promptly during its flight. Moreover, compared with traditional manual methods, AC-LSTM achieves higher recognition accuracy and stability.

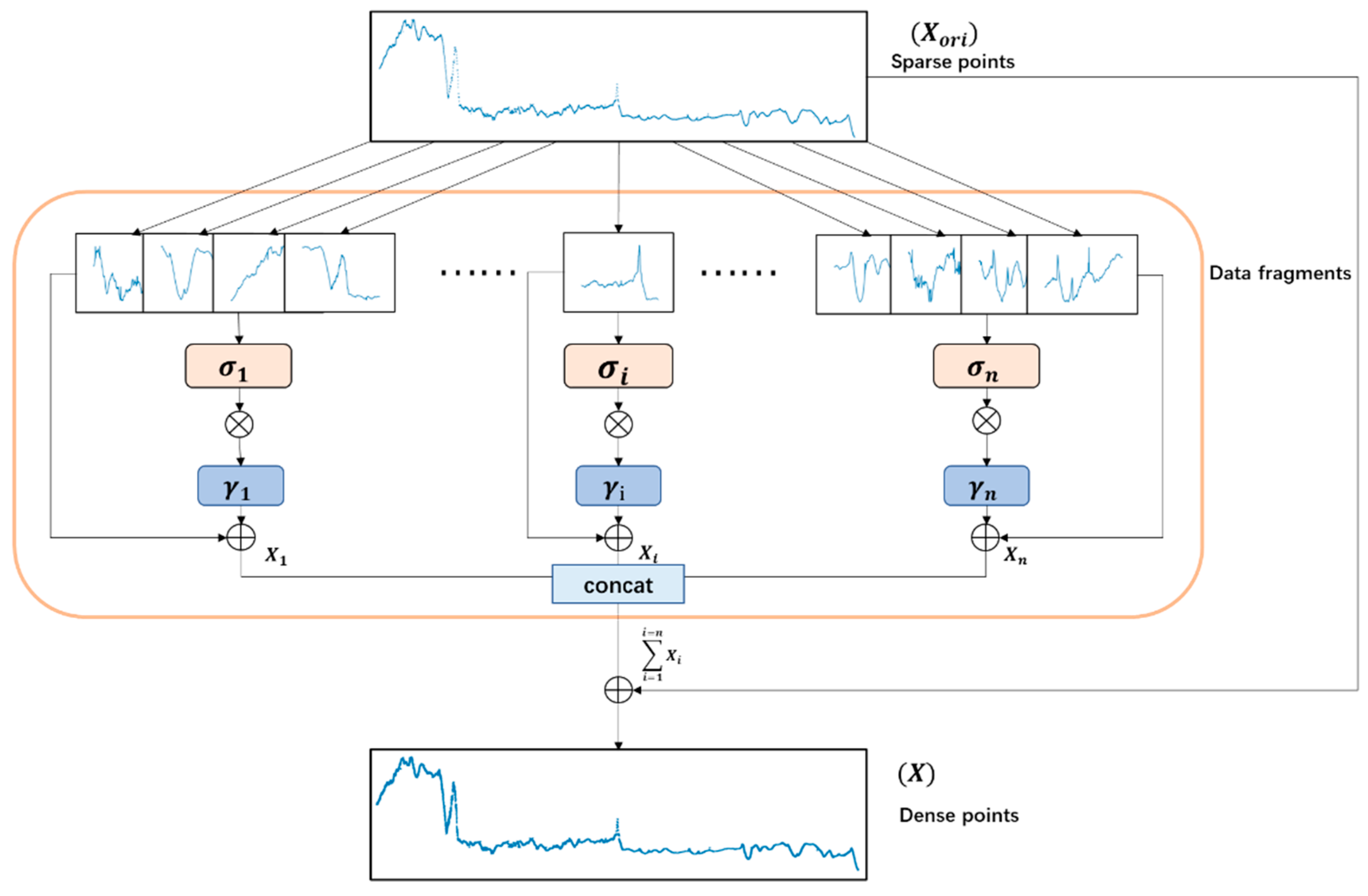

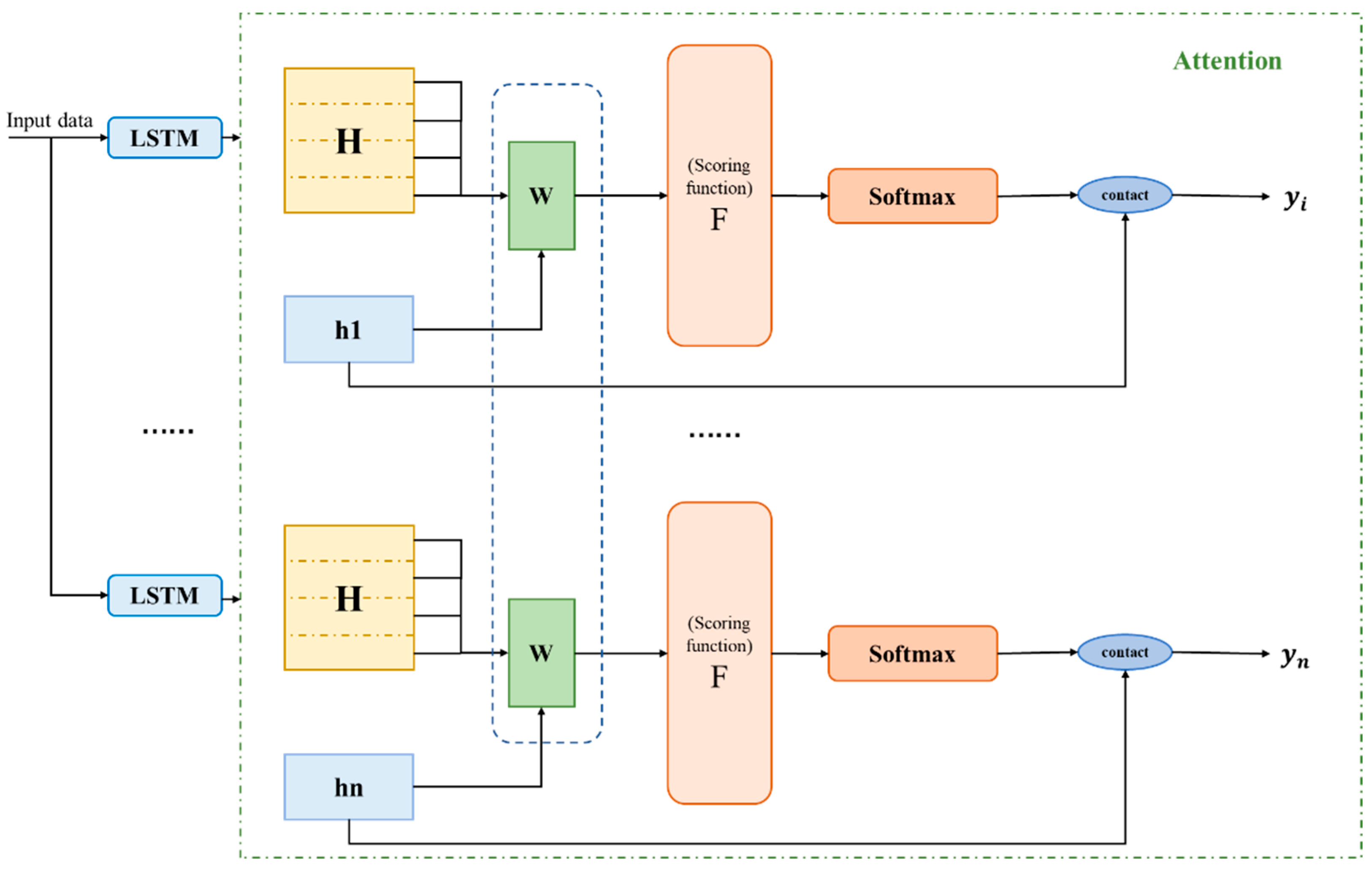

- A Periodic Time Series Data Attention (PTSA) module is proposed and incorporated into our AC-LSTM model with negligible overhead. It adaptively strengthens the “period” features, increasing the representation power of the network by exploiting the inter-batch relationship of features. Extensive experiments on real cases demonstrate that the PTSA module helps AC-LSTM better capture changes in the time-series data.

- A data expansion method is proposed to improve the generalization ability of our model. It uses a sliding time window to generate a large number of samples while keeping the overall trend of the target unchanged.
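The time-window expansion named in the last contribution can be illustrated with a short sketch. This is not the authors' implementation: the function name `sliding_window_expand`, the window length, the stride, and the toy input sequence are all assumptions chosen for the example. It only shows how overlapping windows multiply the number of training samples while each window preserves the local trend of the original sequence.

```python
import numpy as np


def sliding_window_expand(series, window, stride=1):
    """Cut a 1-D sequence into overlapping fixed-length windows.

    Hypothetical helper for illustration; `window` and `stride` are
    assumed parameters, not values taken from the article.
    """
    series = np.asarray(series, dtype=float)
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    if series.size < window:
        raise ValueError("series is shorter than the window")
    # Start index of every window that fits entirely inside the series.
    starts = range(0, series.size - window + 1, stride)
    return np.stack([series[s:s + window] for s in starts])


# Toy stand-in for a measured radiation sequence (assumed data).
radiation = np.arange(10, dtype=float)
samples = sliding_window_expand(radiation, window=4, stride=2)
print(samples.shape)  # (4, 4): four windows of length four
print(samples[1])     # second window covers indices 2..5
```

A smaller stride yields more, and more strongly overlapping, samples; a larger stride yields fewer and more independent ones.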

2. Related Work

2.1. Time Series Anomaly Detection Method

2.2. Application of CNN+LSTM Algorithm

3. The Proposed Method

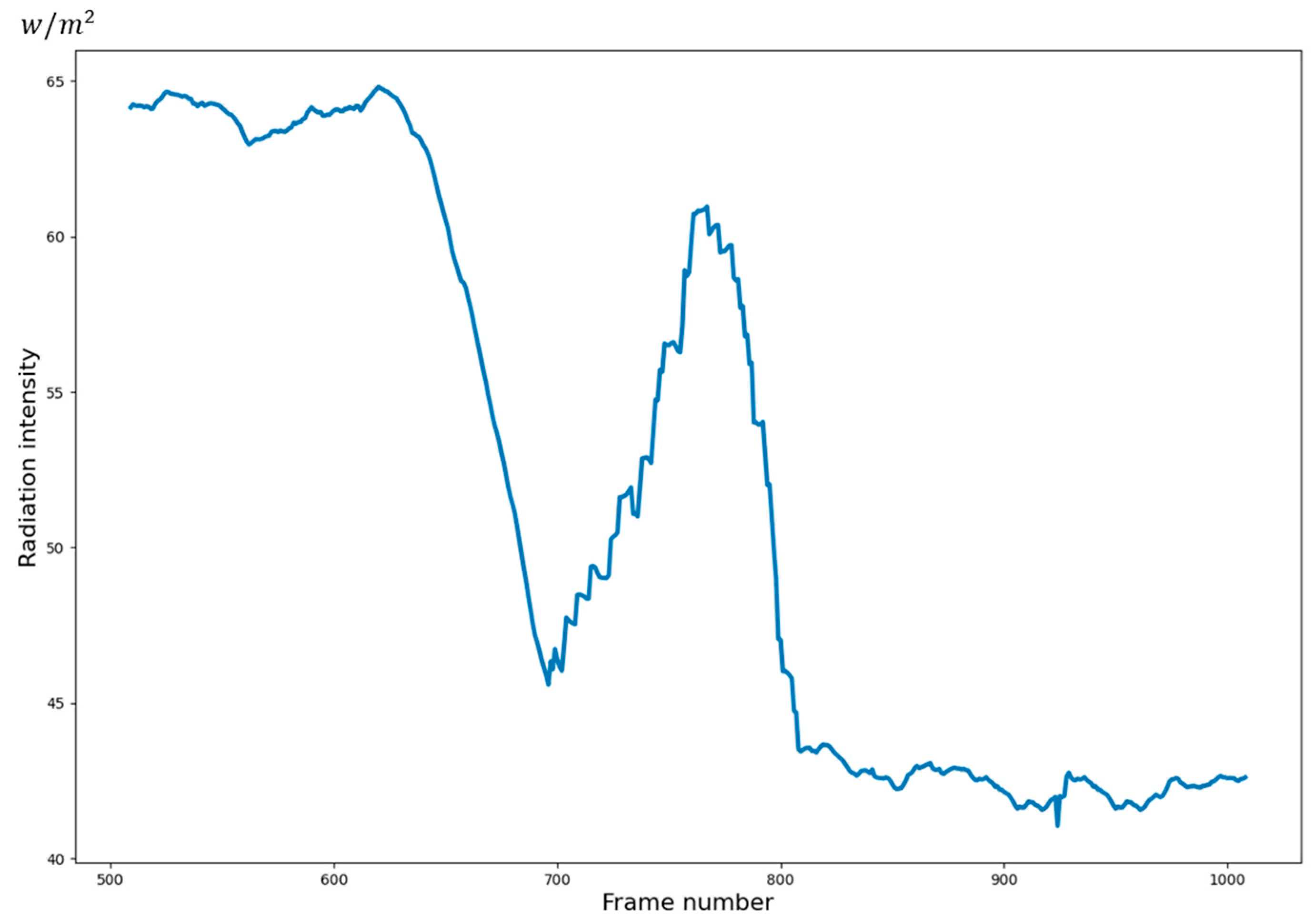

3.1. Data Enhancement

3.2. CNN-LSTM for Anomaly Detection

- Forgetting phase. The forget gate consists of a Sigmoid neural network layer and an element-wise multiplication operation. In Equation (7), $h_{t-1}$ is the previous hidden state, $x_t$ is the input data, $b_f$ is the bias, and $\sigma$ represents the Sigmoid function.

- Selective memory stage. The role of the memory gate is the opposite of that of the forget gate: it determines which parts of the newly entered information $x_t$ and $h_{t-1}$ will be retained. In Equation (9), $C_t$ is the output of the selective memory stage.

- Output phase. This phase determines what will be treated as the output of the current state. At time $t$, the signal $x_t$ is input, and the corresponding output signals are then calculated according to Equations (10) and (11).
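The three phases above can be sketched as a single LSTM time step. Because the numbered equations are not reproduced in this text, the sketch assumes the standard LSTM formulation; the stacked weight layout `W`, the variable names, and the toy dimensions are assumptions made for illustration and are not taken from the article.

```python
import numpy as np


def sigmoid(z):
    """Logistic function used by the gates."""
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One step of a standard LSTM cell (assumed formulation).

    W has shape (4 * hidden, hidden + n_in) and maps the concatenation
    [h_prev, x_t] to the four stacked gate pre-activations.
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    f = sigmoid(z[0:hidden])                 # forgetting phase: what to discard
    i = sigmoid(z[hidden:2 * hidden])        # selective memory: what to keep
    g = np.tanh(z[2 * hidden:3 * hidden])    # candidate cell content
    o = sigmoid(z[3 * hidden:4 * hidden])    # output phase: what to expose
    c_t = f * c_prev + i * g                 # element-wise cell-state update
    h_t = o * np.tanh(c_t)                   # new hidden state
    return h_t, c_t


# Run the cell over a short random sequence (toy sizes, assumed).
rng = np.random.default_rng(0)
n_in, n_hidden = 3, 4
W = rng.normal(scale=0.1, size=(4 * n_hidden, n_hidden + n_in))
b = np.zeros(4 * n_hidden)
h, c = np.zeros(n_hidden), np.zeros(n_hidden)
for x_t in rng.normal(size=(5, n_in)):
    h, c = lstm_step(x_t, h, c, W, b)
print(h.shape, c.shape)
```

Stacking the four gates in one matrix is only a convenience; separate weight matrices per gate are equivalent.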

3.3. Periodic Time Series Data Attention

4. Experiment

4.1. Experimental Setup

4.1.1. Training Details

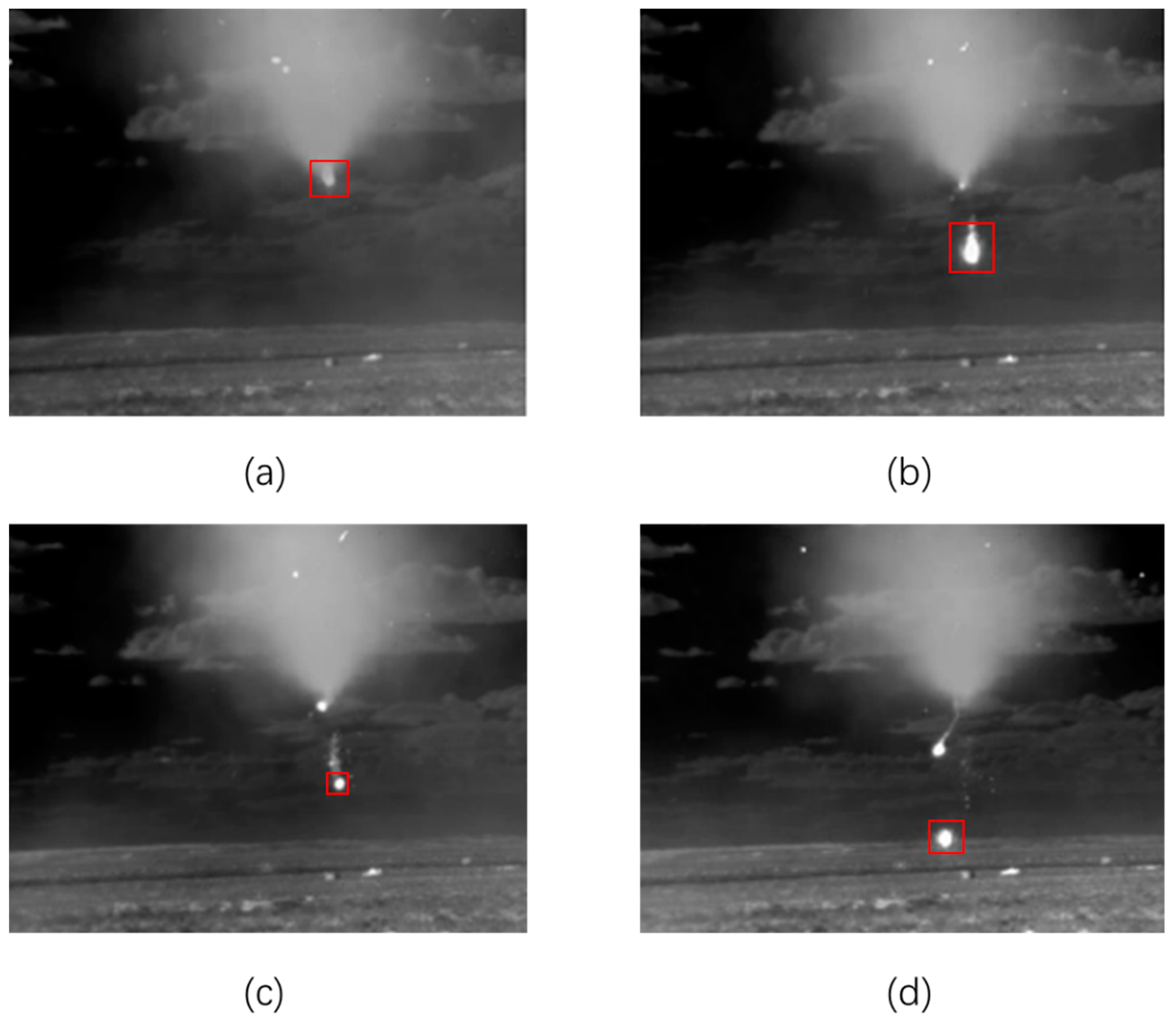

4.1.2. Datasets

4.1.3. Evaluation Metric
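The result tables in this article report precision, recall, and F1-score. As a reminder of how these quantities are commonly computed, the sketch below uses the standard point-wise definitions on binary labels. The article's exact scoring protocol is not reproduced in this text, so the function `precision_recall_f1` and the toy labels are assumptions for illustration only.

```python
def precision_recall_f1(y_true, y_pred):
    """Standard point-wise precision, recall and F1 for binary labels.

    Label 1 marks an anomalous time step, label 0 a normal one.
    Hypothetical helper; not the authors' evaluation code.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # detected anomalies
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed anomalies
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Toy labels (assumed): 3 true positives, 1 false positive, 1 false negative.
y_true = [0, 0, 1, 1, 1, 0, 0, 1]
y_pred = [0, 1, 1, 1, 0, 0, 0, 1]
print(precision_recall_f1(y_true, y_pred))  # (0.75, 0.75, 0.75)
```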

4.2. Ablation Studies

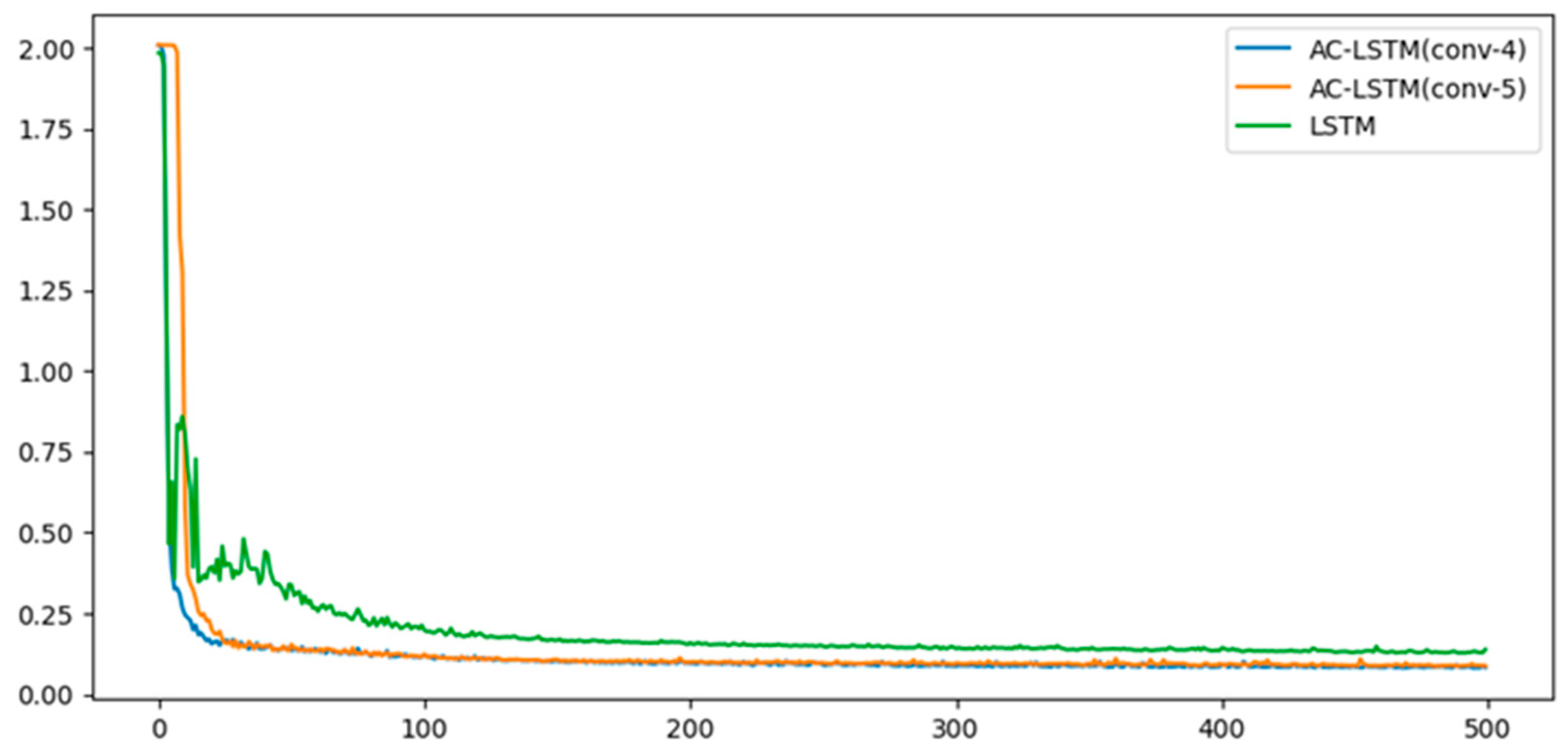

4.2.1. Performance Comparison of Model Combinations

4.2.2. Effect of Sliding Window Enhancement

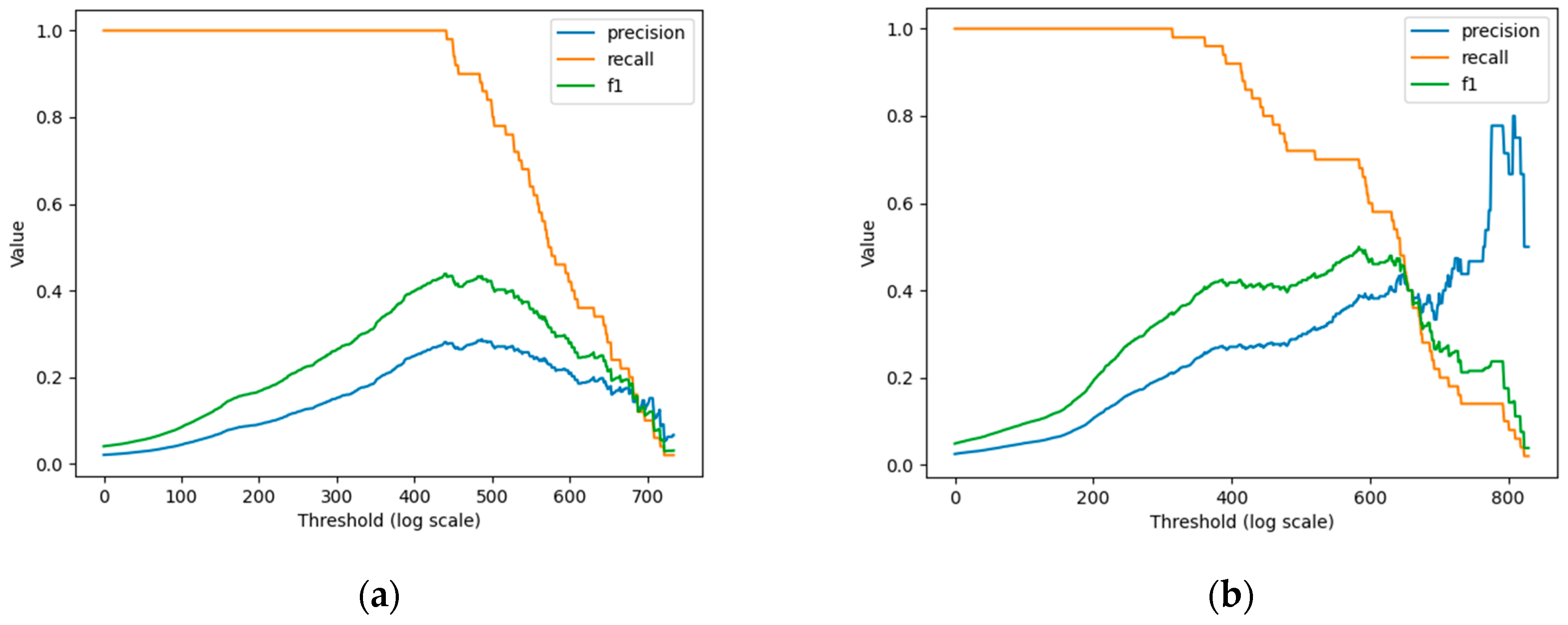

4.2.3. Effect of Periodic Time Series Data Attention (PTSA) Module

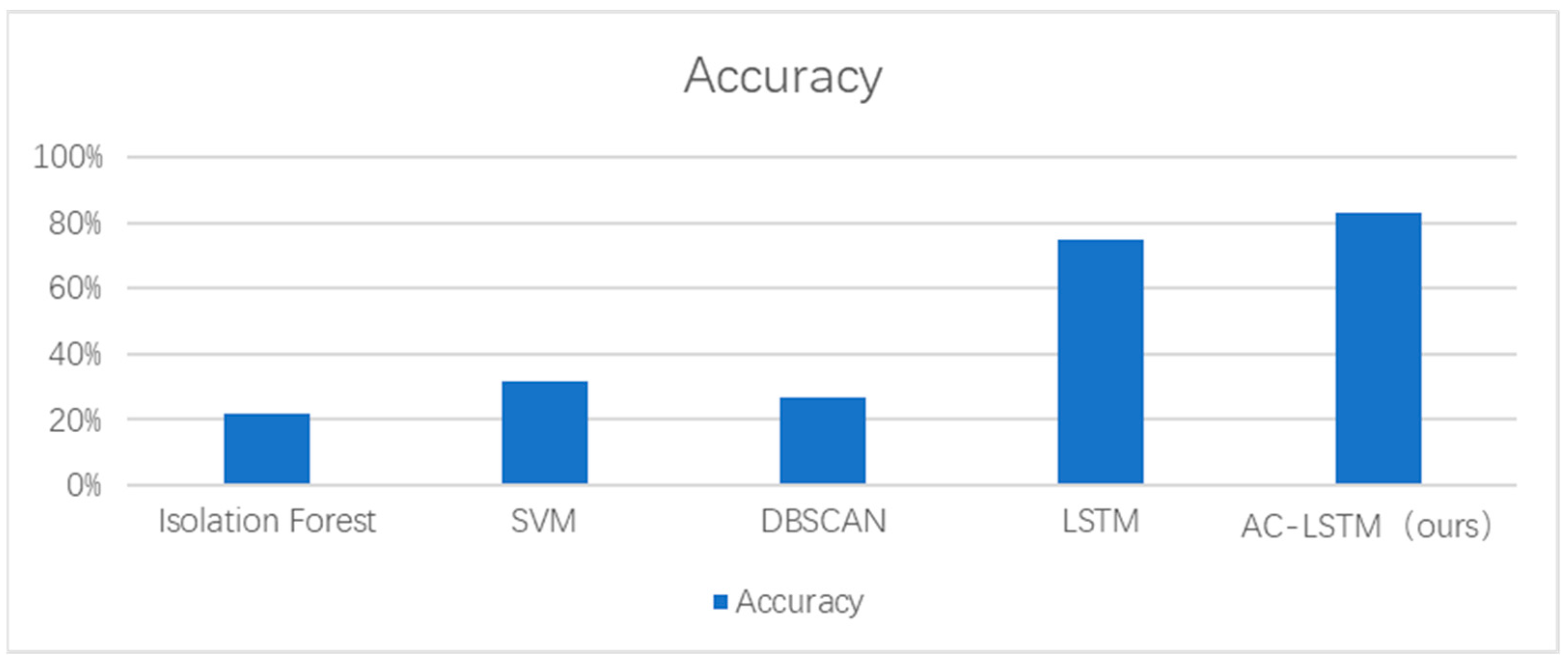

4.3. Comparison with State-of-the-Art Methods

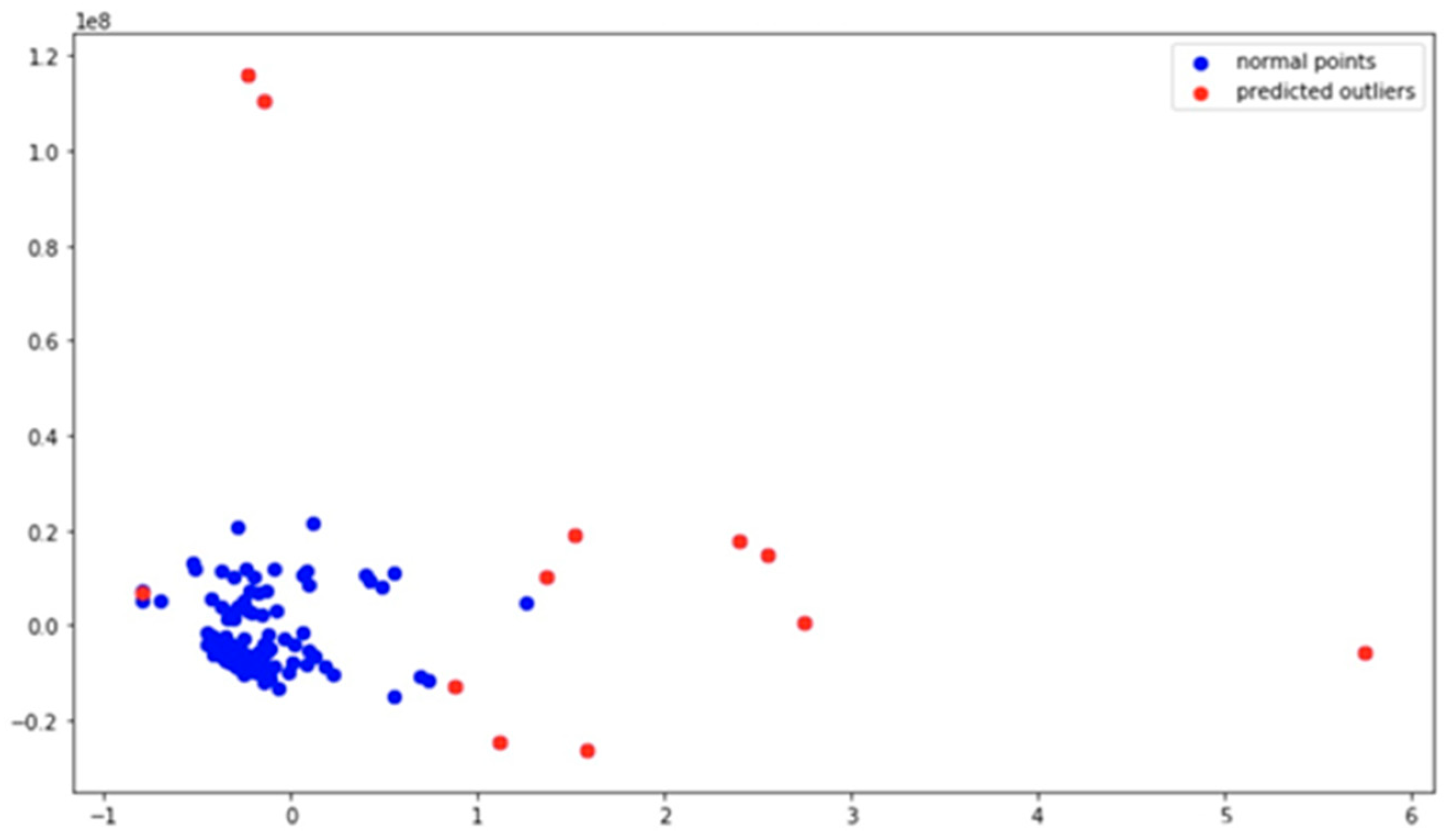

4.4. Qualitative Analysis

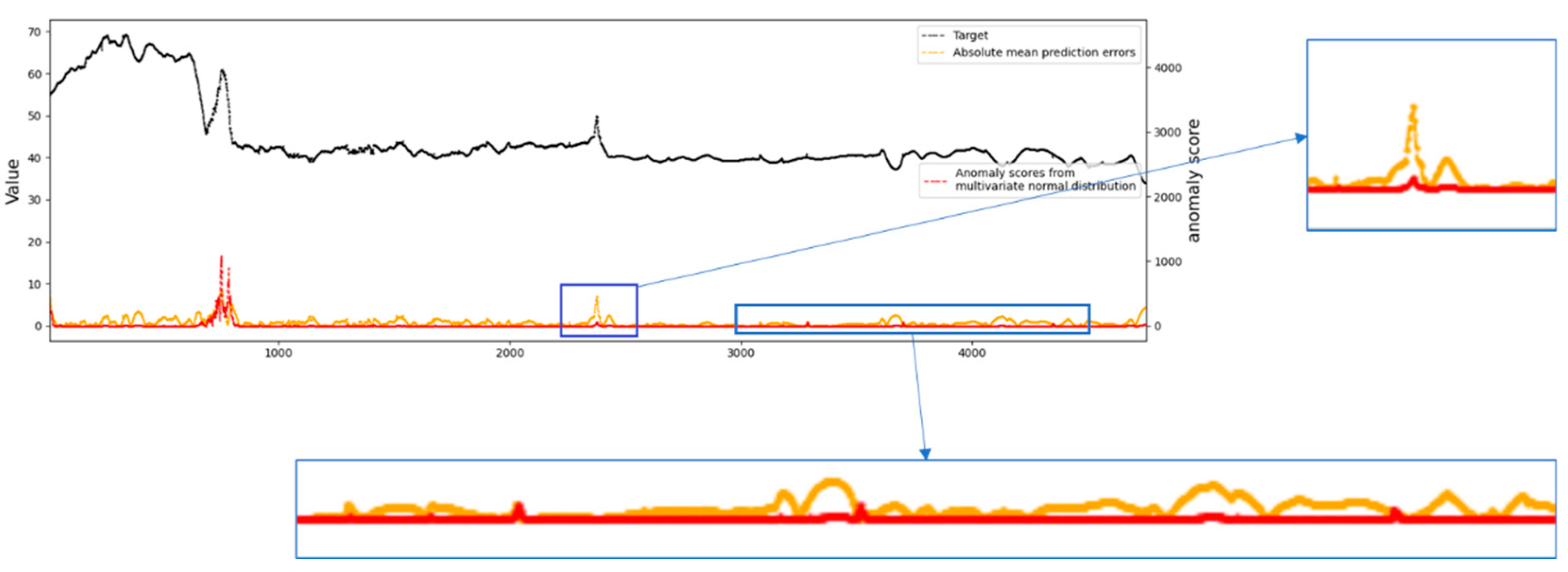

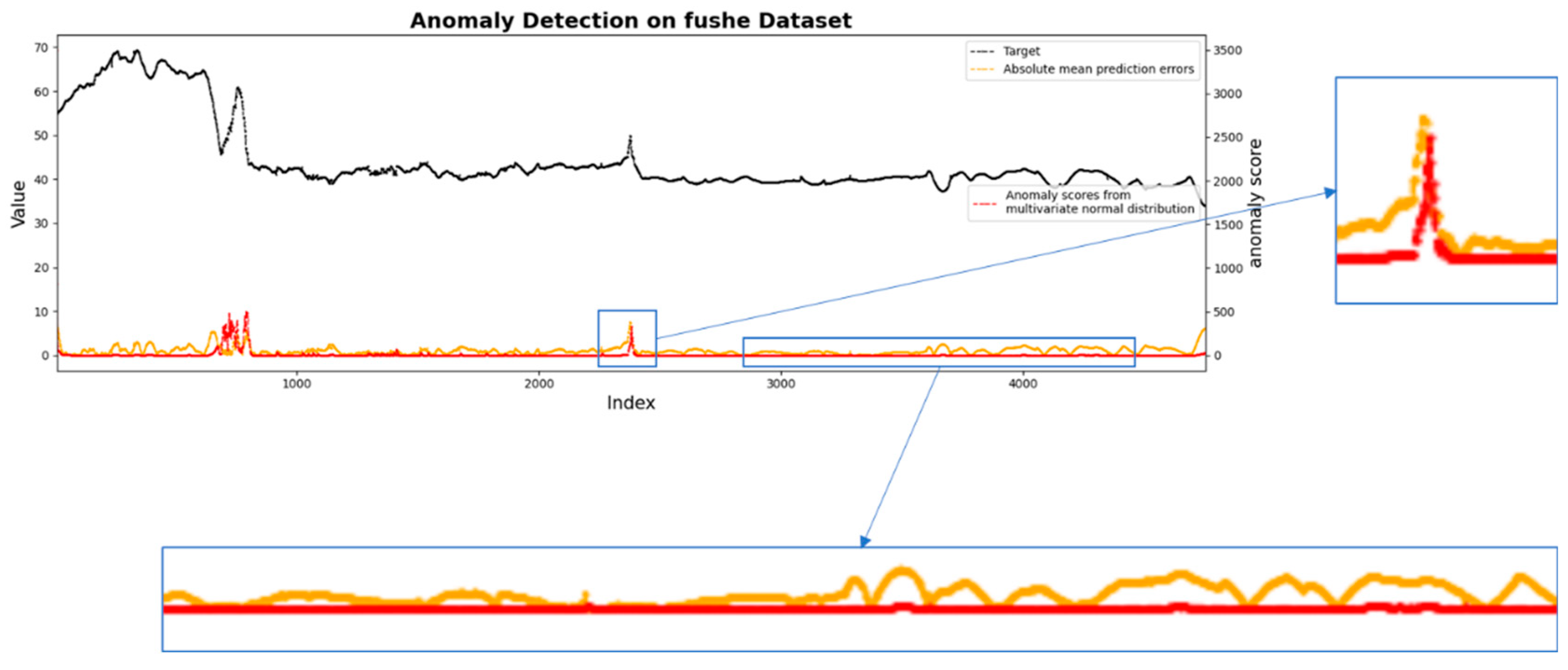

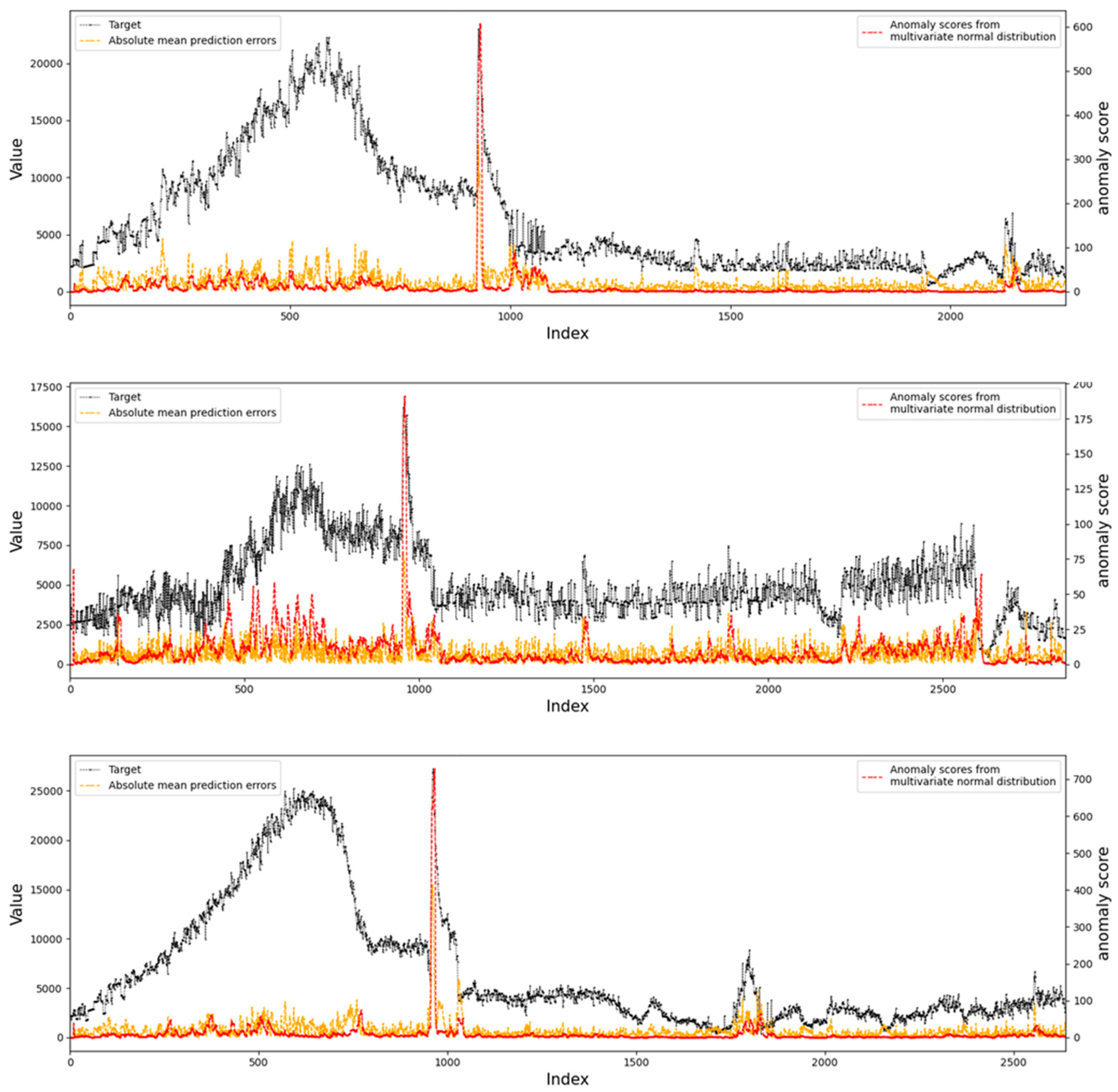

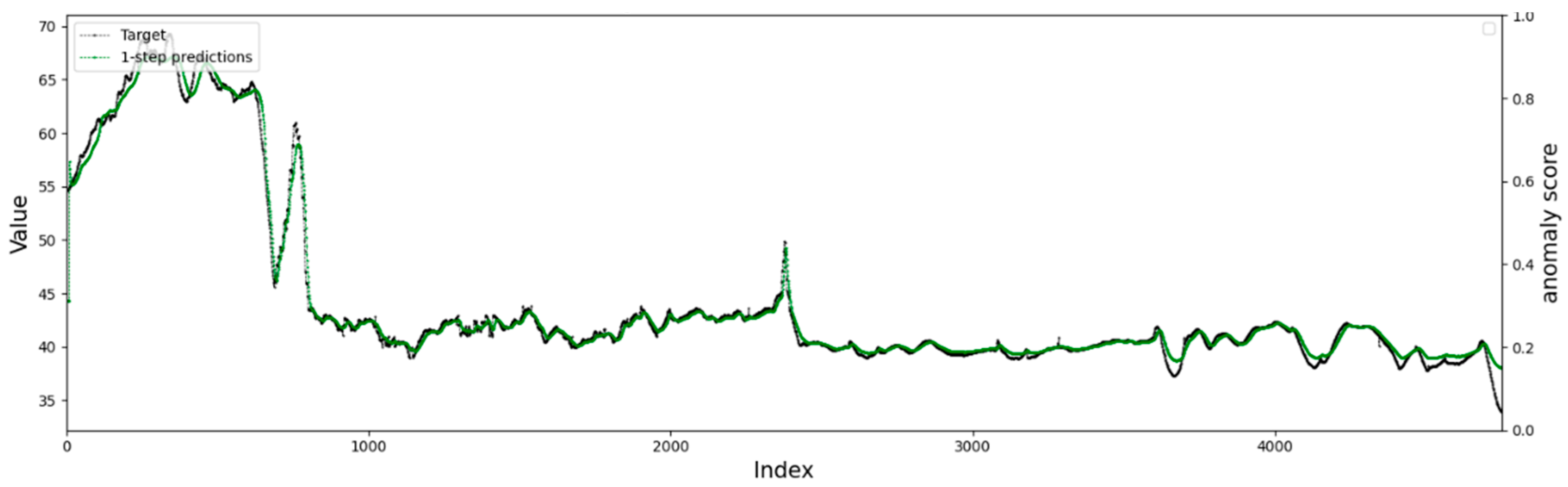

4.4.1. Visualization of Anomaly Detection Results

4.4.2. Comparison of Single-Step and Multi-Step Prediction Results

4.5. Error Analysis

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Method | Configuration | Precision | Recall | F1-Score |
|---|---|---|---|---|
| LSTM | | 0.27 | 0.9 | 0.41 |
| CNN | | 0.63 | 0.8 | 0.27 |
| LSTM+CNN | Conv(3) | 0.77 | 1.0 | 0.36 |
| LSTM+CNN | Conv(3) + BN(3) | 0.82 | 1.0 | 0.47 |
| LSTM+CNN | Conv(4) + BN(4) | 0.83 | 1.0 | 0.47 |
| LSTM+CNN | Conv(5) + BN(5) | 0.83 | 1.0 | 0.47 |

| Method | Precision | Recall | F1-Score |
|---|---|---|---|
| AC-LSTM | 0.53 | 0.8 | 0.37 |
| AC-LSTM+Long-term noise | 0.75 | 1.0 | 0.42 |
| AC-LSTM+Segmented noise | 0.83 | 1.0 | 0.47 |

| Method | Precision | Recall | F1-Score |
|---|---|---|---|
| AC-LSTM | 0.83 | 1.0 | 0.47 |
| AC-LSTM+Attention | 0.83 | 1.0 | 0.47 |
| AC-LSTM+PTSA | 0.84 | 1.0 | 0.49 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, J.; Wang, J.; Hao, Z.; Zhu, M.; Sun, H.; Wei, M.; Dong, K. AC-LSTM: Anomaly State Perception of Infrared Point Targets Based on CNN+LSTM. Remote Sens. 2022, 14, 3221. https://doi.org/10.3390/rs14133221
