1. Introduction

Synthetic aperture radar (SAR) is a microwave imaging radar system capable of high-resolution imaging [1]. As an active microwave remote-sensing technique, SAR provides day-and-night, all-weather reconnaissance and strong surface penetration, and it is widely used in environmental protection, military reconnaissance, surface mapping, disaster assessment, and other fields. In recent years, SAR technology has developed rapidly, and the resolution of SAR images has steadily improved to the sub-meter level. Compared with moderate- or low-resolution SAR images, high-resolution SAR images contain more detail and texture information and better represent the fine structural characteristics of targets [2].

As reconnaissance increasingly demands anti-spoofing, anti-jamming, anti-camouflage, and similar capabilities, a single SAR system can hardly meet these requirements. Multi-mode, multi-polarization, and multi-band SAR technology has therefore become an important development direction, and multi-band SAR technology in particular has developed rapidly [3]. Compared with a single-band SAR system, a multi-band SAR system provides richer and more diverse information that must be processed more urgently, which greatly increases the complexity of information processing. Multi-source information-fusion technology can reduce the workload of manual information processing and improve the fault tolerance and robustness of the system, making it a key technology for multi-band SAR systems [4].

In practical applications, transmission and backscattering characteristics differ between bands, so SAR images acquired in different bands have different limitations and advantages [5]. For the same scene, when the resolutions of the SAR images are equal or similar, a high-frequency SAR image shows a clearer outline of the scene and richer target detail, closer to that of an optical image. However, because high-frequency electromagnetic waves penetrate poorly, high-frequency SAR images have difficulty revealing hidden targets. Low-frequency SAR images are dim and have difficulty describing target details, but they have strong penetrating power and can better image hidden targets, such as targets concealed in forests or buried underground. Therefore, fusing SAR images from different bands to synthesize their information benefits image interpretation and is of great significance in military reconnaissance, disaster monitoring, and similar applications.

For the fusion of SAR images from different bands, image registration is a critical step, since registration accuracy directly affects the results of subsequent image processing. Image registration is the process of aligning, at the pixel or sub-pixel level, two or more images of the same scene acquired under different conditions, by different sensors, or from different viewpoints [6]. No registration method is universally applicable, so many image-registration methods have appeared since the concept was first proposed. In 2003, Barbara Zitová and Jan Flusser published a comprehensive survey of the available image-registration methods [7], analyzing them and classifying them into three main categories: intensity-based, feature-based, and transform-domain-based registration methods.

The intensity-based registration method directly uses the gray values of images to compute the spatial transformation. It usually defines a similarity-measurement criterion to measure the similarity of the gray information between images and uses a search algorithm to find the extremum of this similarity, thereby determining the transformation parameters and achieving registration [8,9]. However, this kind of method is sensitive to illumination changes and has poor noise robustness. The feature-based registration method first extracts feature information from the images (such as point, line, and area features) and uses it as the basis of registration. A matching method pairs these features, and the registration parameters between the two images are finally determined from the matching relationship. Because this method does not use the gray information of the images directly, it is relatively robust to gray differences, rotation, translation, and scale changes between images [10]. However, it is computationally expensive and has poor real-time performance. The transform-domain-based registration method relies on the fast Fourier transform (FFT) and related principles. It can estimate the rotation, scale, and translation parameters between images well and is robust to low-frequency noise in images [11,12]. It still works well when the images are acquired under different brightness conditions and has better real-time performance than other techniques [13,14,15,16,17]. However, it is limited by the properties of the corresponding transform; when the transformation between images is complex, it must be solved with a more advanced algorithm.
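As a concrete illustration of the intensity-based family described above, the sketch below uses normalized cross-correlation (NCC) as the similarity criterion and an exhaustive search over integer translations as the search algorithm. It is a minimal example under simplifying assumptions (translation only, circular shifts via `np.roll`); the function names `ncc` and `search_translation` are hypothetical and do not come from the cited works.

```python
import numpy as np


def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Normalized cross-correlation of two equally sized images (the similarity criterion)."""
    a0 = a - a.mean()
    b0 = b - b.mean()
    denom = np.sqrt((a0 * a0).sum() * (b0 * b0).sum())
    return float((a0 * b0).sum() / denom) if denom > 0 else 0.0


def search_translation(master: np.ndarray, slave: np.ndarray, max_shift: int):
    """Exhaustive search over integer shifts; returns the (dy, dx) that maximizes NCC.

    Shifts are applied circularly with np.roll, a simplifying assumption that
    avoids handling the non-overlapping border explicitly.
    """
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            # Undo a candidate shift of the slave and compare it with the master.
            candidate = np.roll(slave, shift=(-dy, -dx), axis=(0, 1))
            score = ncc(master, candidate)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift, best_score
```

The cost grows with the square of `max_shift` and with image size, which illustrates why intensity-based search is usually restricted to small parameter ranges or combined with a coarse initial estimate.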

For the same scene, SAR images acquired in different bands differ greatly in gray information. At the same time, because high-resolution SAR images contain more detail and texture information, the geometric distortions between high-resolution SAR images in different bands are more complex, which makes accurate registration difficult with traditional methods. For multi-band SAR images, Chang et al. in 2005 achieved relatively high-precision registration of low-resolution multi-band SAR images based on edge features [18]. This method can also register multi-band SAR images of large scenes by segmenting the edge features. However, it requires selecting a certain number of registration control points, which increases the time cost. Fan et al. proposed a SAR-image-registration method based on phase congruency and nonlinear diffusion combined with the scale-invariant feature transform (SIFT) [19]. Experiments on multi-band SAR images showed that this algorithm improves matching performance and achieves better accuracy than SIFT-based methods. However, because of speckle noise in SAR images, this feature-point-based method has difficulty achieving high precision. In 2014, Zhou et al. proposed an automatic image-registration method that combines global and local registration [6]. This method has distinctive characteristics and is robust in the overall registration of SAR images from different bands. However, it does not improve on the thin-plate spline it uses, so the gain in local registration accuracy is limited, and its time cost is high.

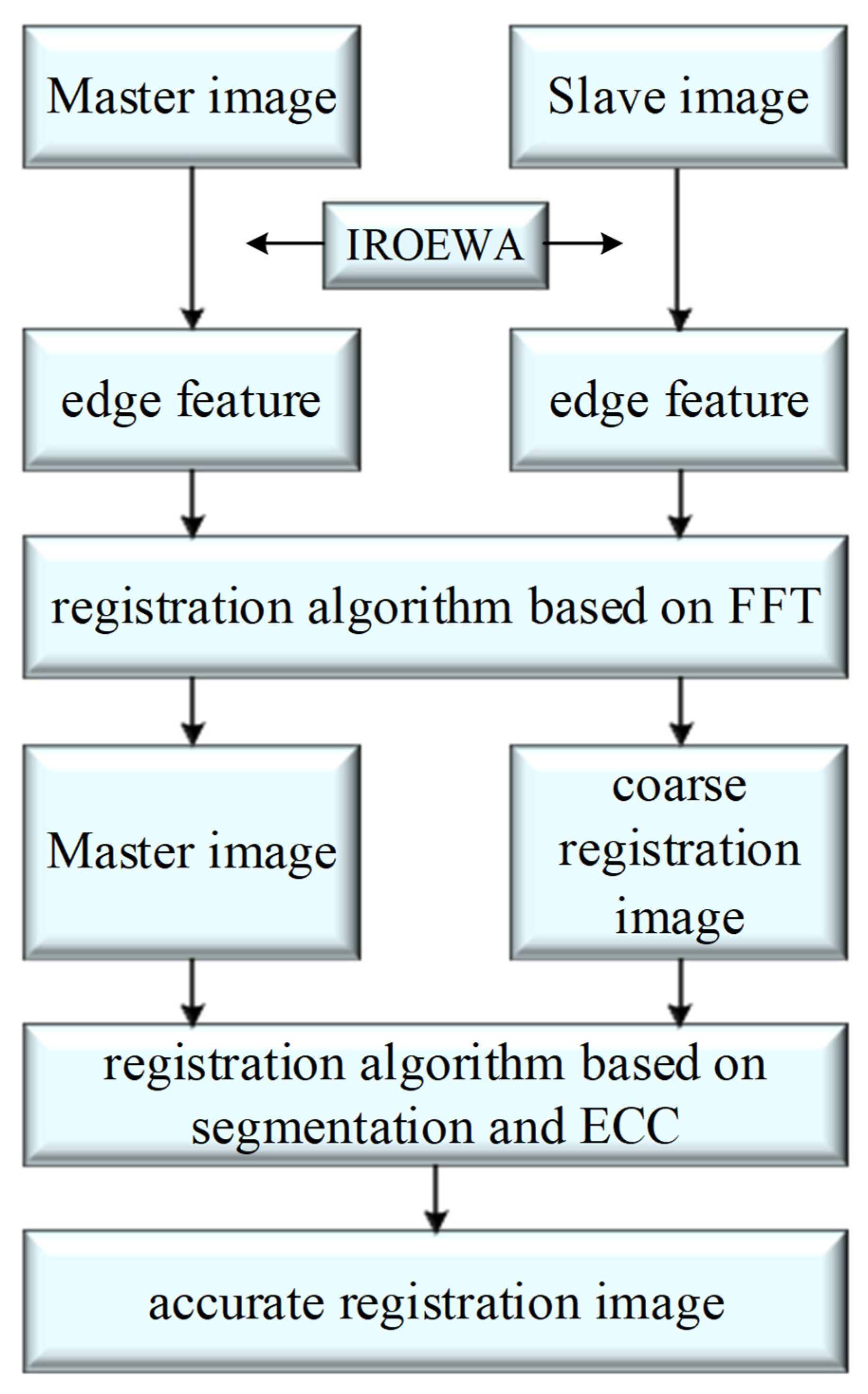

To solve the above problems, this paper proposes a registration method suitable for high-resolution SAR images from different bands. First, based on the edge features of SAR images, an FFT-based image-registration algorithm performs coarse registration of the high-resolution SAR images from different bands, correcting the global rotation, translation, and scale. Then, an image-registration algorithm based on maximizing the enhanced correlation coefficient (ECC), combined with image segmentation, corrects the local geometric distortion between the coarsely registered images, thus achieving high-precision registration of high-resolution SAR images from different bands. To make the experiments more convincing, we verify the method on independently acquired Ku-band and L-band data.

This paper is organized as follows. Section 2 introduces the basic principles of the coarse image-registration method based on edge features and FFT. Section 3 introduces the accurate image-registration method based on the ECC. Section 4 analyzes the experimental results to verify the effectiveness of the method. Section 5 summarizes the paper.

2. The Method of Coarse Image Registration Based on Edge Features and FFT

High-resolution SAR images from different bands exhibit large gray differences and geometric distortions, and the gray-level relationship between them is generally nonlinear. Accurate registration therefore requires overcoming the nonlinear difference in gray information between the images. The usual solution is to find information shared by the images and then match the positions of identical objects. Because the images depict the same scene, their edge features are highly consistent. Registering high-resolution SAR images from different bands based on edge features therefore better suppresses the interference caused by the nonlinear gray difference between images.

The FFT-based image-registration algorithm is computationally fast and simple to implement in hardware, and it also handles relatively large scaling and rotation well. However, when the transformation between images is more complex, it cannot achieve accurate registration. Because the transformation between high-resolution SAR images from different bands is more complex, achieving accurate registration directly would inevitably increase the time cost. The FFT-based registration algorithm can quickly achieve coarse registration of two images, which narrows the search range of the subsequent accurate registration and speeds it up.

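Let $I_1$ and $I_2$ be the SAR images of the same area acquired in different bands, let $f_1$ be the edge feature of $I_1$, and let $f_2$ be the edge feature of $I_2$. Taking $f_1$ and $f_2$ as the feature spaces, let $f_1$ be the reference image (master image) and $f_2$ be the image to be registered (slave image). The coordinate-transformation relationship between the images is modeled by a similarity transformation:

$$
\begin{bmatrix} x_1 \\ y_1 \end{bmatrix} = s \begin{bmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{bmatrix} \begin{bmatrix} x_2 \\ y_2 \end{bmatrix} - \begin{bmatrix} \Delta x \\ \Delta y \end{bmatrix} \tag{1}
$$

where $(x_2, y_2)$ is a point of $f_2$, $(x_1, y_1)$ is the corresponding point of $f_1$, $s$ is the scale factor, $\theta$ is the rotation angle, and $(\Delta x, \Delta y)$ denotes the translation. Then, Equation (1) can be written as

$$
f_2(x, y) = f_1\big(s(x\cos\theta + y\sin\theta) - \Delta x,\; s(-x\sin\theta + y\cos\theta) - \Delta y\big)
$$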
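The similarity model can be checked numerically. The sketch below (NumPy assumed; the helper names are hypothetical) maps a slave-image point to its master-image position following the convention of Equation (1), in which the slave coordinates are rotated and scaled and the translation is then subtracted, and it also implements the inverse mapping.

```python
import numpy as np


def slave_to_master(x2, y2, s, theta, dx, dy):
    """Map slave coordinates (x2, y2) to master coordinates with Equation (1)."""
    c, si = np.cos(theta), np.sin(theta)
    x1 = s * (c * x2 + si * y2) - dx
    y1 = s * (-si * x2 + c * y2) - dy
    return x1, y1


def master_to_slave(x1, y1, s, theta, dx, dy):
    """Inverse of Equation (1): add back the translation, then undo scale and rotation."""
    c, si = np.cos(theta), np.sin(theta)
    u = (np.asarray(x1) + dx) / s
    v = (np.asarray(y1) + dy) / s
    # The rotation [[c, si], [-si, c]] is orthogonal, so its inverse is its transpose.
    return c * u - si * v, si * u + c * v
```

For example, with a scale of 2, a rotation of 90°, and a translation of (1, 1), the slave point (1, 0) maps to the master point (-1, -3), and `master_to_slave` returns it to (1, 0) up to rounding.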

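Taking the Fourier transform of $f_1$ and $f_2$, respectively, to obtain $F_1(u, v)$ and $F_2(u, v)$, $F_1$ and $F_2$ can be related as:

$$
F_2(u, v) = s^{-2}\, e^{-j\varphi(u, v)}\, F_1\big(s^{-1}(u\cos\theta + v\sin\theta),\; s^{-1}(-u\sin\theta + v\cos\theta)\big)
$$

where $\varphi(u, v)$ is a linear phase term produced by the translation. Ignoring the multiplication factor $s^{-2}$, $M_1$ and $M_2$, which are the magnitudes of $F_1$ and $F_2$, are related by

$$
M_2(u, v) = M_1\big(s^{-1}(u\cos\theta + v\sin\theta),\; s^{-1}(-u\sin\theta + v\cos\theta)\big)
$$

Because the translation affects only the phase, the magnitudes depend only on the scale and rotation.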

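Converting the axes to the log-polar domain $(\rho, \phi)$,

$$
\rho = \log_k \sqrt{u^2 + v^2}, \qquad \phi = \arctan\frac{v}{u}
$$

where $k$ denotes the base of the logarithm. Then, $M_1$ and $M_2$ are related by

$$
M_2(\rho, \phi) = M_1(\rho - \log_k s,\; \phi - \theta)
$$

so that scaling and rotation become translations along the $\rho$ and $\phi$ axes.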

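Taking the Fourier transform of $M_1(\rho, \phi)$ and $M_2(\rho, \phi)$, respectively, to obtain $G_1(\xi, \eta)$ and $G_2(\xi, \eta)$, the cross-power spectrum of $G_1$ and $G_2$ can be defined as

$$
R(\xi, \eta) = \frac{G_1^{*}(\xi, \eta)\, G_2(\xi, \eta)}{\left| G_1^{*}(\xi, \eta)\, G_2(\xi, \eta) \right|}
$$

where $G_1^{*}$ is the complex conjugate of $G_1$, and $|\cdot|$ denotes the amplitude. The inverse Fourier transform (IFFT) of $R$ is $r$, which in the ideal case is an impulse located at the log-scale and rotation offsets:

$$
r(\rho, \phi) = \mathcal{F}^{-1}\{R(\xi, \eta)\} = \delta(\rho - \log_k s,\; \phi - \theta)
$$

where $r$ has size $M \times N$, the size of the sampled log-polar magnitude spectra.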

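Assume that the coordinate corresponding to the peak value of $r$ is $(\rho_0, \phi_0)$. According to the phase-correlation method [12], $(\rho_0, \phi_0)$, $s$, and $\theta$ are related as

$$
\rho_0 = \log_k s, \qquad \phi_0 = \theta
$$

Then, the scale factor $s$ and rotation angle $\theta$ between $f_1$ and $f_2$ can be expressed as:

$$
s = k^{\rho_0}, \qquad \theta = \phi_0
$$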
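The log-polar step can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the centered FFT magnitude is resampled with bilinear interpolation on an `n_rho` × `n_phi` grid, angles cover only [0, π) because the magnitude spectrum of a real image is centrally symmetric, and the grid sizes, the `log1p` compression, the natural-log base, and all function names are illustrative choices. Because of that symmetry, the recovered angle is ambiguous by 180°, which a practical implementation would have to resolve, for example by testing both candidates.

```python
import numpy as np


def phase_correlation_peak(a, b):
    """Peak index of IFFT(conj(A) * B / |conj(A) * B|); b is ~a circularly shifted by it."""
    cross = np.conj(np.fft.fft2(a)) * np.fft.fft2(b)
    r = np.real(np.fft.ifft2(cross / (np.abs(cross) + 1e-12)))
    return np.unravel_index(np.argmax(r), r.shape)


def log_polar_magnitude(img, n_rho=64, n_phi=180, base=np.e):
    """Sample the centered FFT magnitude of img on a log-polar grid.

    Rows: rho = log_base(radius), uniformly spaced; columns: phi in [0, pi).
    Returns the sampled array and the rho step d_rho.
    """
    mag = np.abs(np.fft.fftshift(np.fft.fft2(img)))
    cy, cx = mag.shape[0] // 2, mag.shape[1] // 2   # zero frequency after fftshift
    r_max = min(cy, cx) - 1                          # keep all samples inside the array
    d_rho = np.log(r_max) / np.log(base) / n_rho
    radius = base ** (np.arange(n_rho) * d_rho)      # radii from 1 to just below r_max
    phi = np.arange(n_phi) * np.pi / n_phi
    ys = cy + radius[:, None] * np.sin(phi)[None, :]
    xs = cx + radius[:, None] * np.cos(phi)[None, :]
    # Bilinear interpolation of mag at the (ys, xs) sample positions.
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    wy, wx = ys - y0, xs - x0
    lp = ((1 - wy) * (1 - wx) * mag[y0, x0] + (1 - wy) * wx * mag[y0, x0 + 1]
          + wy * (1 - wx) * mag[y0 + 1, x0] + wy * wx * mag[y0 + 1, x0 + 1])
    return lp, d_rho


def estimate_scale_rotation(master, slave, n_rho=64, n_phi=180, base=np.e):
    """Return (scale, angle in radians modulo pi) from log-polar phase correlation."""
    lp1, d_rho = log_polar_magnitude(master, n_rho, n_phi, base)
    lp2, _ = log_polar_magnitude(slave, n_rho, n_phi, base)
    # log1p only compresses the dynamic range; it does not change the shift.
    i0, j0 = phase_correlation_peak(np.log1p(lp1), np.log1p(lp2))
    if i0 > n_rho // 2:            # the DFT is circular: large indices are negative shifts
        i0 -= n_rho
    scale = base ** (i0 * d_rho)   # s = k ** rho0
    theta = j0 * np.pi / n_phi     # theta = phi0, ambiguous by pi
    return float(scale), float(theta)
```

For instance, `estimate_scale_rotation(img, np.rot90(img))` on a square random image recovers a scale of 1 and an angle of π/2. Practical implementations commonly also apply a high-pass filter or window to the spectrum before resampling; that is omitted here.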

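Using the calculated $s$ and $\theta$ values, let $f_2'$ denote the replica of $f_2$ that has been rescaled and rotated to compensate for the scale and rotation. At this point, only a translation remains between $f_1$ and $f_2'$:

$$
f_2'(x, y) = f_1(x - \Delta x,\; y - \Delta y)
$$

where $(x, y)$ denotes the coordinates of $f_2'$.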

Let $r'$ be the IFFT of the cross-power spectrum of $f_1$ and $f_2'$, and let $(x_0, y_0)$ be the coordinate corresponding to the peak value of $r'$. According to the phase-correlation method, the translation between $f_1$ and $f_2'$ is:

$$
\Delta x = x_0, \qquad \Delta y = y_0
$$

So far, all parameters of the similarity transformation between $f_1$ and $f_2$ have been determined.
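The translation step is ordinary phase correlation. The sketch below (NumPy; the function name is hypothetical) conjugates the master spectrum, normalizes the cross-power spectrum, takes the IFFT, and converts the peak index into a signed shift. It returns offsets in array order, (row, column), that is (Δy, Δx), and the small constant added to the denominator is an assumption to avoid division by zero.

```python
import numpy as np


def phase_correlation_shift(master: np.ndarray, slave: np.ndarray):
    """Estimate (dy, dx) such that slave(y, x) ~ master(y - dy, x - dx)."""
    f1 = np.fft.fft2(master)
    f2 = np.fft.fft2(slave)
    cross = np.conj(f1) * f2
    # Normalized cross-power spectrum; its IFFT peaks at the translation.
    r = np.real(np.fft.ifft2(cross / (np.abs(cross) + 1e-12)))
    y0, x0 = np.unravel_index(np.argmax(r), r.shape)
    h, w = r.shape
    # The DFT is circular, so peaks past the midpoint are negative shifts.
    dy = y0 - h if y0 > h // 2 else y0
    dx = x0 - w if x0 > w // 2 else x0
    return int(dy), int(dx)
```

This integer-pixel estimate is sufficient for coarse registration; sub-pixel refinement (for example, interpolating around the peak) would be an additional step not described in the text.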

The improved ratio of exponentially weighted averages (IROEWA) is an edge detector suited to SAR images. Unlike the Sobel, Prewitt, and Canny operators, which are based on an additive-noise model, IROEWA is a statistical edge detector designed for the multiplicative-noise model. Compared with the ratio of averages (ROA), which is also a statistical edge detector, IROEWA is optimized for multi-edge models [20,21]. Compared with the ratio of exponentially weighted averages (ROEWA), IROEWA can correctly obtain the direction of edge points [22]. Therefore, we use IROEWA to extract the edge features of SAR images.
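The text does not give the IROEWA formulas, so the sketch below is a simplified ROEWA-style detector rather than IROEWA itself. On each side of a pixel it forms an exponentially weighted mean (truncated to `half_width` pixels and smoothed exponentially along the edge) and takes the logarithm of the ratio of the two means instead of a symmetric ratio that discards which side is brighter, so each component keeps its sign and an edge direction can be computed with `arctan2`. Working with ratios rather than differences is what makes this family suited to multiplicative speckle. The parameters `alpha` and `half_width`, the edge padding, and the function names are illustrative assumptions.

```python
import numpy as np


def _shift(padded, pad, dy, dx, shape):
    """Crop of the padded image whose pixel (y, x) holds the original pixel (y + dy, x + dx)."""
    h, w = shape
    return padded[pad + dy: pad + dy + h, pad + dx: pad + dx + w]


def ratio_edge_gradient(img, alpha=0.5, half_width=8, eps=1e-12):
    """Log-ratio of exponentially weighted means on opposite sides of each pixel.

    Returns (gx, gy, magnitude, direction); a simplified ROEWA-style sketch.
    """
    img = np.asarray(img, dtype=float)
    pad = half_width
    p = np.pad(img, pad, mode="edge")
    offsets = np.arange(1, half_width + 1)
    side_w = np.exp(-alpha * offsets)              # one-sided weights, d = 1..W
    across = np.arange(-half_width, half_width + 1)
    across_w = np.exp(-alpha * np.abs(across))     # two-sided smoothing along the edge

    def side_mean(sign, axis):
        # Weighted mean of the half-window on the `sign` side along `axis`.
        acc = np.zeros_like(img)
        for d, wd in zip(offsets, side_w):
            for a, wa in zip(across, across_w):
                dy, dx = (a, sign * d) if axis == 1 else (sign * d, a)
                acc += wd * wa * _shift(p, pad, dy, dx, img.shape)
        return acc / (side_w.sum() * across_w.sum())

    gx = np.log((side_mean(+1, 1) + eps) / (side_mean(-1, 1) + eps))
    gy = np.log((side_mean(+1, 0) + eps) / (side_mean(-1, 0) + eps))
    return gx, gy, np.hypot(gx, gy), np.arctan2(gy, gx)
```

On a vertical step from 1 to 4, the horizontal log-ratio reaches log 4 at the boundary columns and is zero in flat regions, while the vertical component stays zero.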

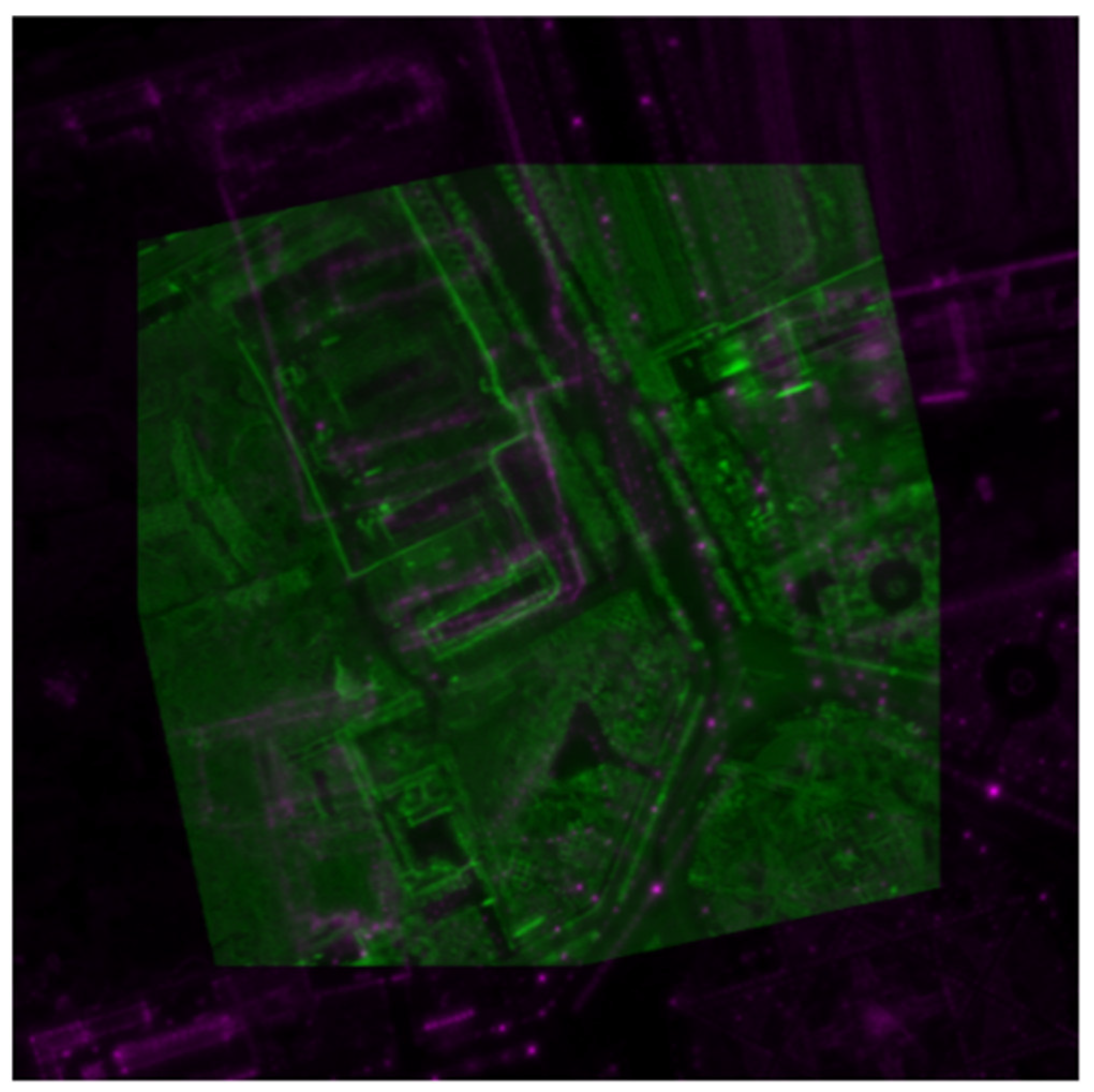

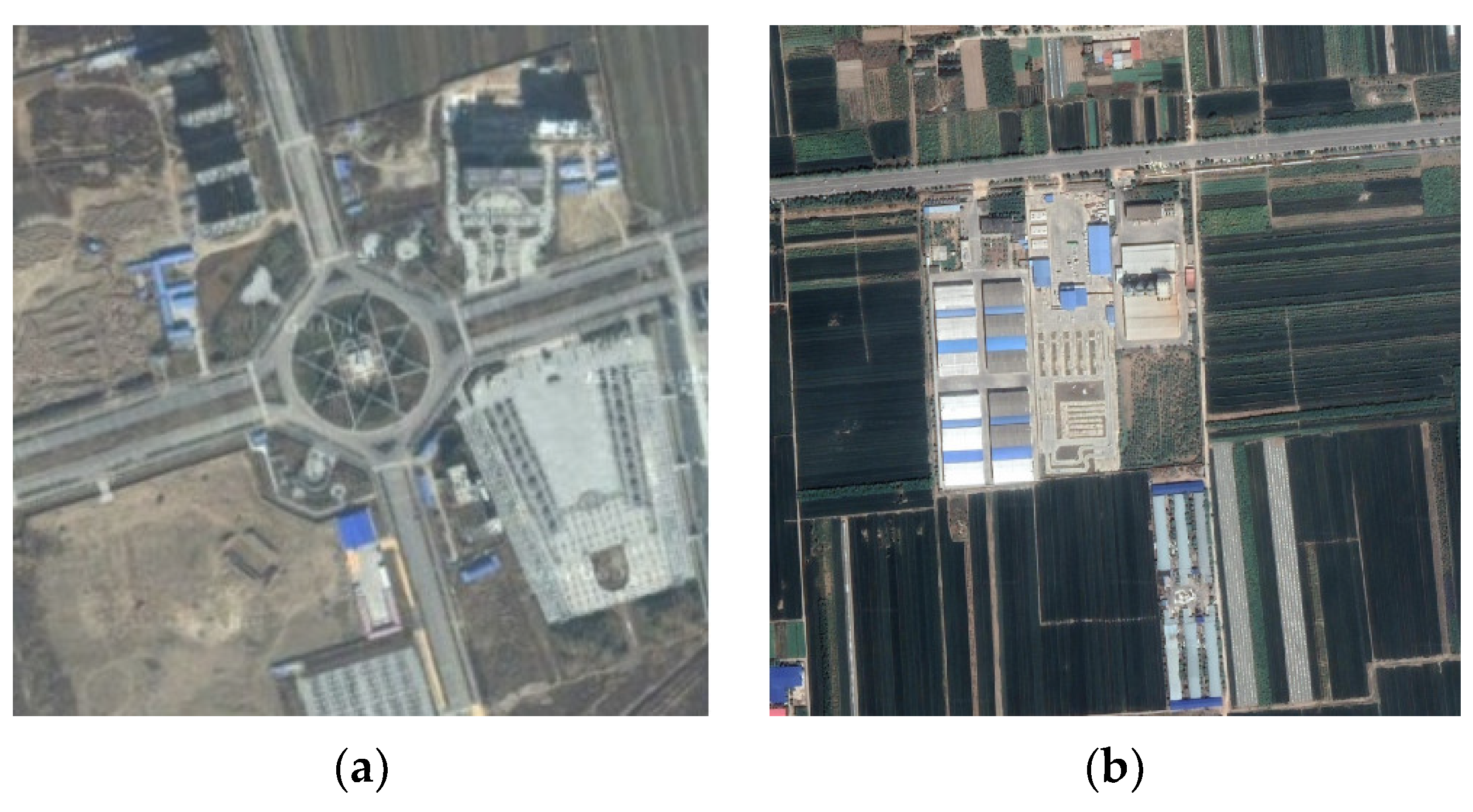

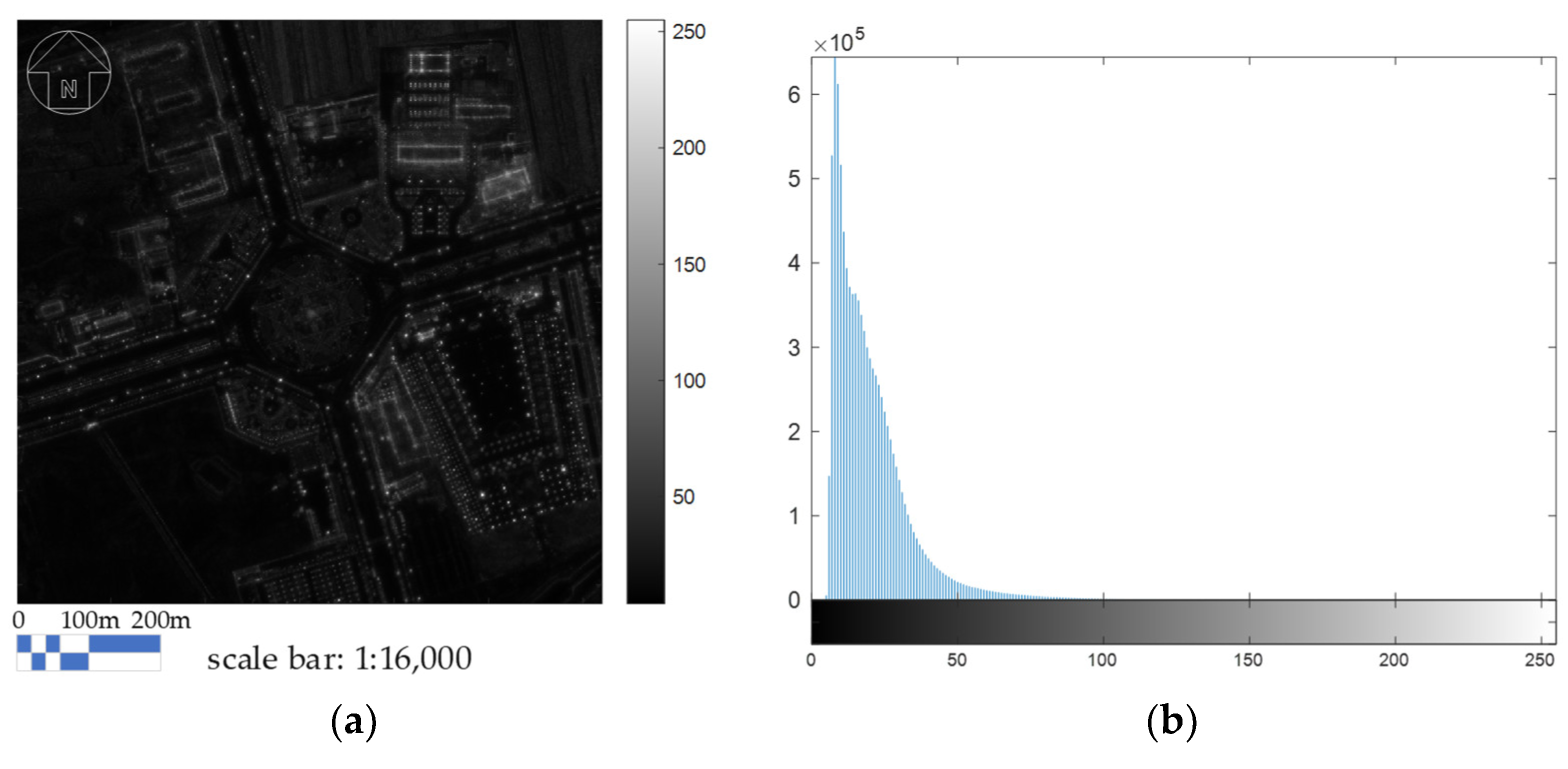

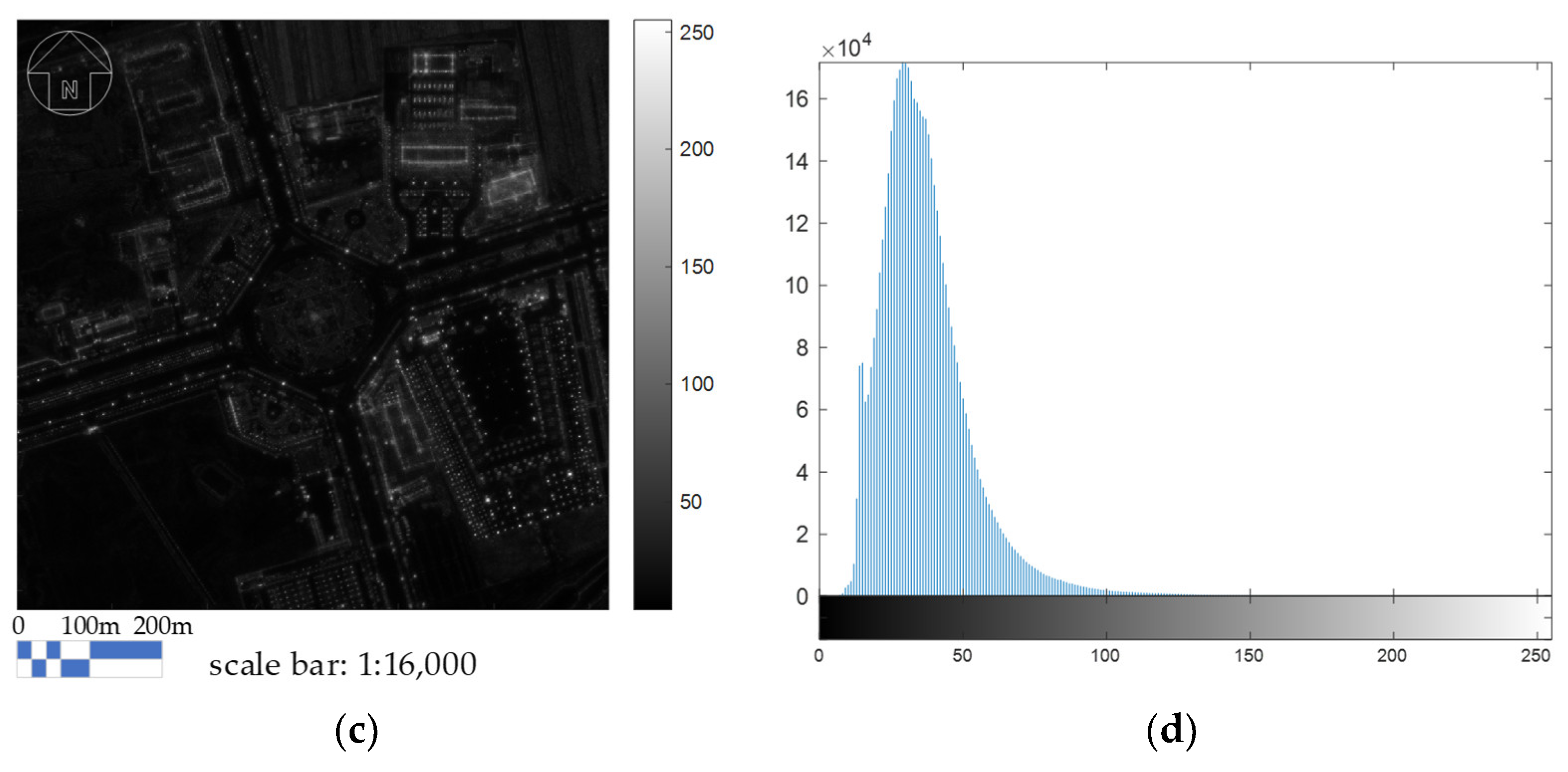

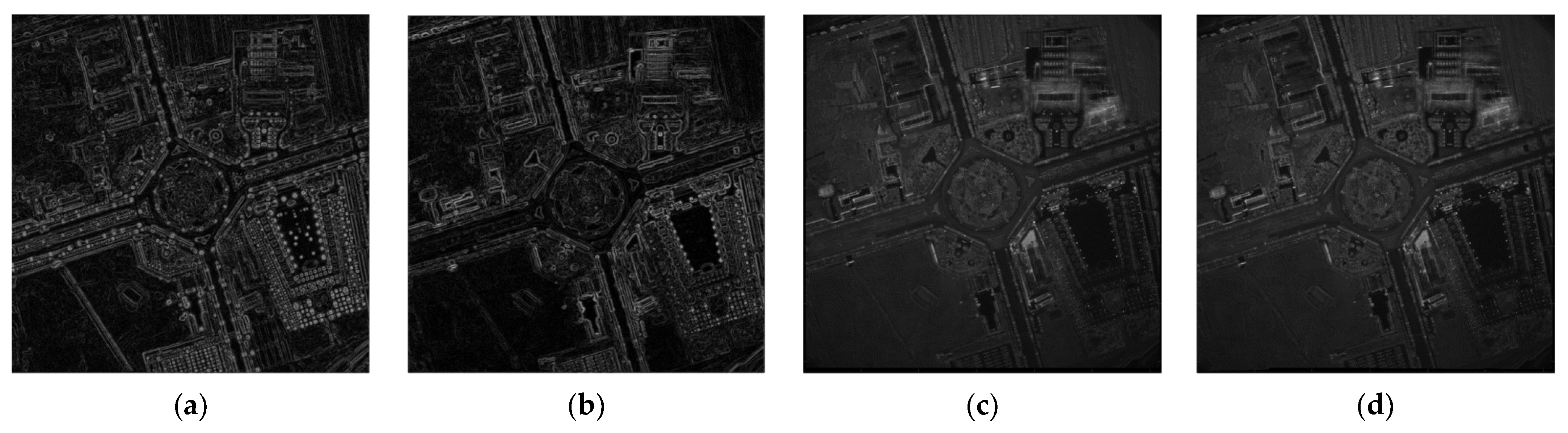

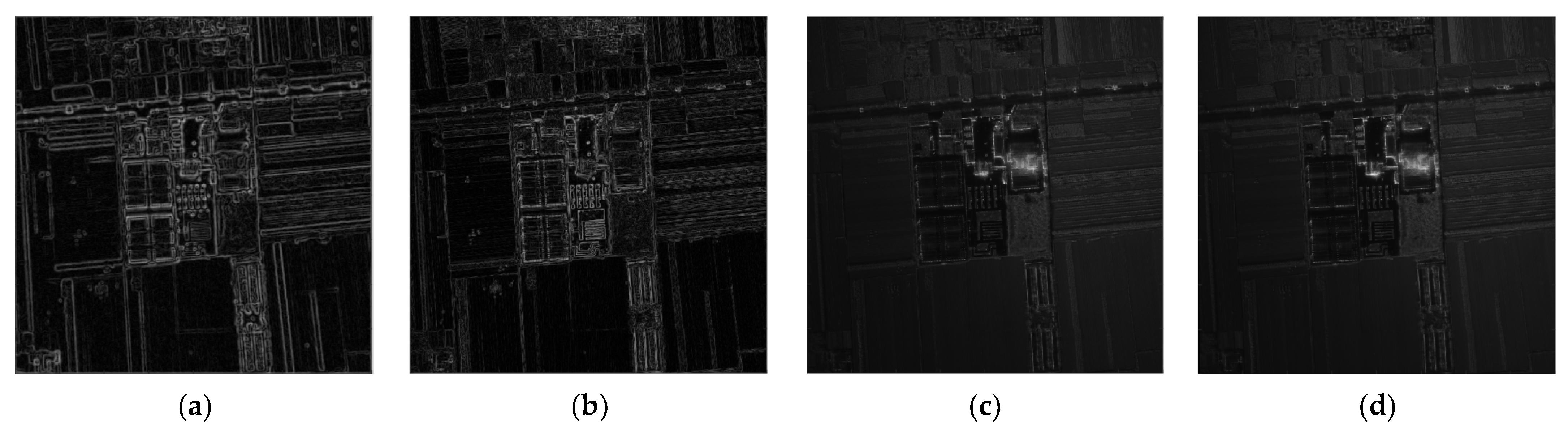

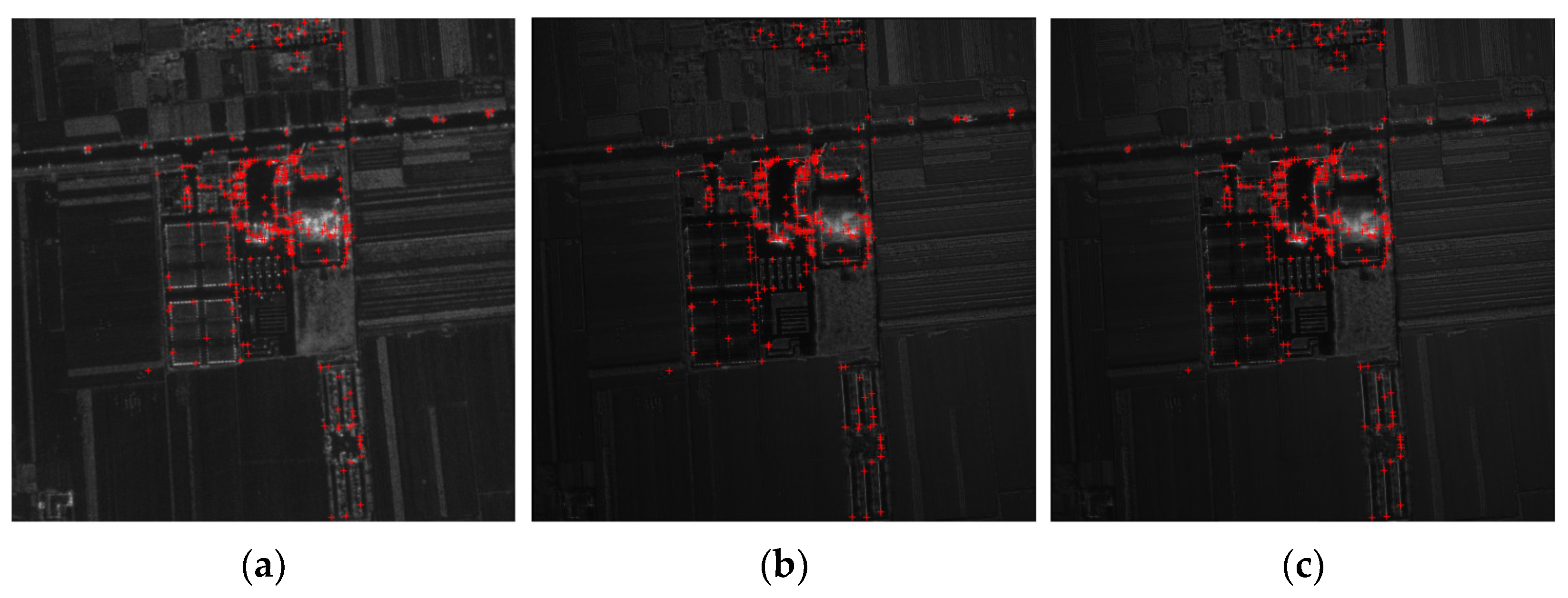

Figure 1a,c show the L-band and Ku-band SAR images, respectively, of an area at latitude 34°50′N and longitude 109°35′E, and Figure 1b,d show their gray histograms. As shown in Figure 1, there is a large gray difference between the two images. The L-band SAR image is taken as the master image and the Ku-band SAR image as the slave image. First, the gray information of the two images is used directly as the feature space, and the FFT-based image-registration algorithm is applied. As shown in Figure 2, the two images are not successfully registered. Then, following the method described in this section, IROEWA is used to extract the edge features of the L-band and Ku-band SAR images (Figure 3), and these edge features are registered with the FFT-based algorithm. As shown in Figure 4, this gives a better registration result, but the accuracy is still not high enough: in the red-dotted-line box in Figure 4b, the registration error exceeds 10 pixels.

3. Accurate Registration of Dual-Frequency SAR Images Based on ECC

As outlined in Section 2, the registration algorithm based on edge features and FFT overcomes the large gray difference between SAR images from different bands and corrects the global rotation, translation, and scaling differences between high- and low-frequency SAR images. However, the registration results show that the accuracy is still too low in some regions. This is because actual SAR images from different bands contain complex geometric distortions (such as nonlinear distortions caused by sensors, target deformation, and motion), whereas the registration algorithm based on edge features and FFT uses a global transformation model and cannot properly handle local geometric distortion [23]. Therefore, this algorithm alone has difficulty achieving high-accuracy registration of high- and low-frequency SAR images.

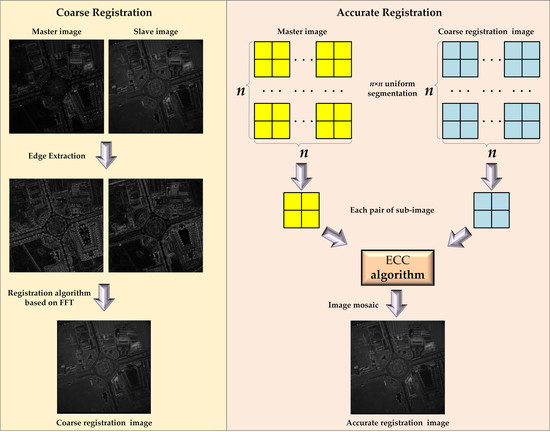

To overcome the inability of the registration algorithm based on edge features and FFT to register dual-frequency SAR images with high accuracy, we propose the registration algorithm whose flow chart is shown in Figure 5. The master and slave images are first coarsely registered using the algorithm based on edge features and FFT. Then, the master image and the coarse-registration result are registered with the ECC-based algorithm to achieve accurate registration.

3.1. Principle of the Image-Registration Algorithm Based on ECC

The enhanced correlation coefficient (ECC) is a similarity measure with good invariance to brightness, contrast, and other photometric distortions. Evangelidis et al. used the ECC as the objective function and proposed an image-registration algorithm based on maximizing it [24]. They also developed a linear iterative method to compute the optimal parameters, which handles the fact that the ECC is a nonlinear function of the transformation parameters and achieves excellent registration results with reduced computation. In addition, the algorithm is invariant to gray-level bias and gain between the master image and slave image [25].

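The mathematical expression of image registration is given in Equation (15):

$$
\min_{\mathbf{p},\,\boldsymbol{\alpha}} \left\| I_r(\mathbf{x}) - \Psi\big(I_w(\boldsymbol{\phi}(\mathbf{x};\mathbf{p})),\, \boldsymbol{\alpha}\big) \right\|_{\ell} \tag{15}
$$

where $\boldsymbol{\phi}(\mathbf{x};\mathbf{p})$ denotes the coordinate-mapping relationship with parameter vector $\mathbf{p}$; $\Psi$ denotes the brightness-transformation relationship and is parameterized by a vector of unknown parameters $\boldsymbol{\alpha}$; $I_r$ and $I_w$ denote the master image and slave image, respectively; and $\|\cdot\|_{\ell}$ denotes the $\ell$ norm, usually with $\ell = 2$ (Euclidean norm). To eliminate the influence of overall gray-level differences, the ECC-based algorithm uses the objective function $E_{\mathrm{ECC}}(\mathbf{p})$:

$$
E_{\mathrm{ECC}}(\mathbf{p}) = \left\| \frac{\bar{\mathbf{i}}_r}{\|\bar{\mathbf{i}}_r\|} - \frac{\bar{\mathbf{i}}_w(\mathbf{p})}{\|\bar{\mathbf{i}}_w(\mathbf{p})\|} \right\|^2 \tag{16}
$$

where $\|\cdot\|$ denotes the Euclidean norm, and $\bar{\mathbf{i}}_r$ and $\bar{\mathbf{i}}_w(\mathbf{p})$ denote the zero-mean versions of the master-image intensity vector $\mathbf{i}_r$ and the warped slave-image intensity vector $\mathbf{i}_w(\mathbf{p})$, obtained by subtracting the corresponding arithmetic means from $\mathbf{i}_r$ and $\mathbf{i}_w(\mathbf{p})$. Owing to this normalization, the ECC-based algorithm is, to a certain extent, unaffected by the overall gray level of the image and considers only geometric changes. Because both normalized vectors have unit length, expanding Equation (16) gives $E_{\mathrm{ECC}}(\mathbf{p}) = 2 - 2\rho(\mathbf{p})$, so minimizing $E_{\mathrm{ECC}}(\mathbf{p})$ is equivalent to maximizing the enhanced correlation coefficient $\rho(\mathbf{p})$, and the registration problem becomes finding the maximum of the enhanced correlation coefficient given in Formula (17):

$$
\rho(\mathbf{p}) = \hat{\mathbf{i}}_r^{\mathsf{T}} \frac{\bar{\mathbf{i}}_w(\mathbf{p})}{\|\bar{\mathbf{i}}_w(\mathbf{p})\|} \tag{17}
$$

where $\hat{\mathbf{i}}_r = \bar{\mathbf{i}}_r / \|\bar{\mathbf{i}}_r\|$ is a constant that denotes the normalized version of the zero-mean reference vector. In Formula (17), $\rho(\mathbf{p})$ is a nonlinear function of $\mathbf{p}$, so its maximization is a nonlinear problem. The ECC-based algorithm therefore solves it iteratively with a gradient-based approach and uses the result to correct the deformed image.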
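For a fixed warp, the ECC objective and Formula (17) reduce to a few vector operations. The sketch below (NumPy; the helper names are hypothetical) computes both. It shows that the coefficient is unchanged by a gain and offset applied to the slave image, and that the objective equals 2 − 2ρ because both normalized vectors have unit length, which is why minimizing one is equivalent to maximizing the other. It does not implement the iterative maximization of [24]; OpenCV provides an implementation of that as `cv2.findTransformECC`, although the text does not say which implementation the authors used.

```python
import numpy as np


def _unit_zero_mean(img) -> np.ndarray:
    """Flatten, subtract the arithmetic mean, and scale to unit Euclidean norm."""
    v = np.asarray(img, dtype=float).ravel()
    v = v - v.mean()
    return v / np.linalg.norm(v)


def ecc_rho(ref, warped) -> float:
    """Enhanced correlation coefficient rho for an already-warped slave image."""
    return float(_unit_zero_mean(ref) @ _unit_zero_mean(warped))


def ecc_objective(ref, warped) -> float:
    """Squared distance between the normalized zero-mean vectors (the ECC objective)."""
    d = _unit_zero_mean(ref) - _unit_zero_mean(warped)
    return float(d @ d)
```

Because the slave image enters only through its normalized zero-mean vector, any positive gain and any offset cancel out, which is the photometric invariance described above.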

The authors of [26,27] noted that the ECC-based algorithm is largely unaffected by the overall brightness of the image, focuses only on geometric changes, and can correct image deformation to a certain extent. However, for images with complex geometric distortions or large sizes, a single homography matrix estimated from the whole image cannot accurately correct misalignments caused by distortions that vary across the image. Therefore, local regions cannot be registered well.

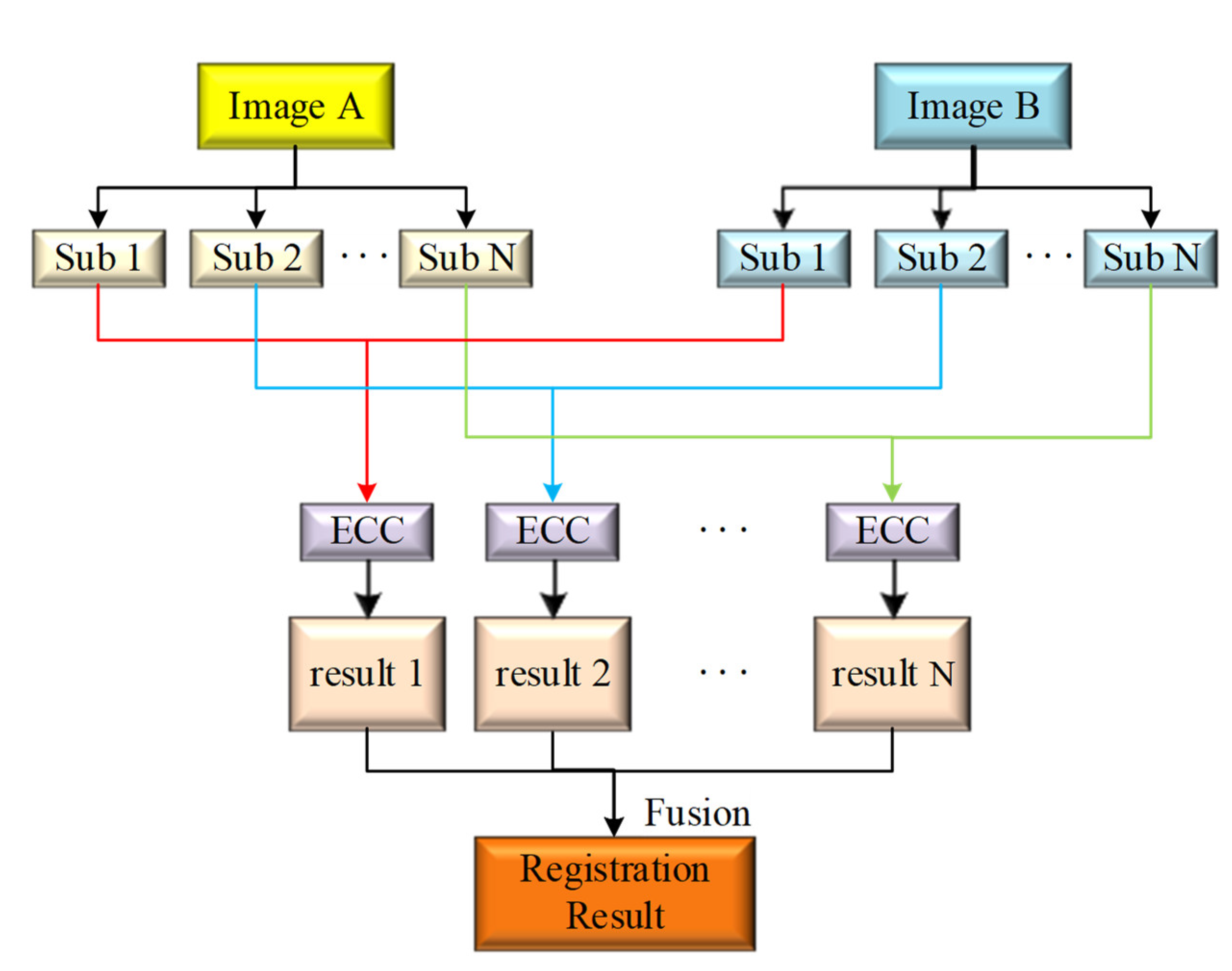

3.2. The Accurate Registration of Dual-Frequency SAR Images Based on Segmentation and ECC

To handle the complex geometric distortions between high- and low-frequency SAR images, we divide the coarse-registration image and the master image into blocks, register each pair of blocks with the ECC-based algorithm, and finally splice the registered blocks into the whole image. A schematic of the algorithm is shown in Figure 6.

For image segmentation, uniform segmentation is a simple and effective method in which the image is divided evenly into k × k sub-images. Figure 7 shows the 3 × 3 uniform blocking mode. After uniform segmentation, each sub-image has a size of n × n (the yellow area). Adjacent sub-images do not overlap, which causes misalignment at their junctions when the image mosaic is created. To solve this problem, we improve on uniform segmentation by expanding each sub-image when dividing the image, as shown by the red wireframe in Figure 7. This area has a size of d × d and is obtained by extending the yellow area by s pixels in each of the four directions, so that d = n + 2s. With this improvement, adjacent sub-images share a common area. When the mosaic is created, the yellow areas are stitched together, and the common areas serve as transitions that reduce splicing distortion.
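The overlapping block layout can be expressed as index windows. In the sketch below (NumPy; the function names and the border handling are assumptions), each core window corresponds to the yellow n × n area and each expanded window to the red d × d area enlarged by s pixels on every side, clipped at the image border; `stitch` keeps only the core of each registered block, as described above. Blending within the shared area is not shown.

```python
import numpy as np


def block_windows(h, w, k, s):
    """Core (yellow) and expanded (red) windows for a k x k uniform split with overlap s.

    Windows are (y0, y1, x0, x1) with exclusive ends. Expanded windows are clipped at
    the image border, so border blocks get less overlap (an assumption of this sketch).
    """
    ys = np.linspace(0, h, k + 1).round().astype(int).tolist()
    xs = np.linspace(0, w, k + 1).round().astype(int).tolist()
    windows = []
    for i in range(k):
        for j in range(k):
            core = (ys[i], ys[i + 1], xs[j], xs[j + 1])
            expanded = (max(ys[i] - s, 0), min(ys[i + 1] + s, h),
                        max(xs[j] - s, 0), min(xs[j + 1] + s, w))
            windows.append((core, expanded))
    return windows


def stitch(blocks, windows, shape):
    """Mosaic the registered expanded blocks, keeping only each block's core area."""
    out = np.zeros(shape, dtype=float)
    for block, (core, expanded) in zip(blocks, windows):
        cy0, cy1, cx0, cx1 = core
        ey0, ex0 = expanded[0], expanded[2]
        # Core coordinates relative to the expanded block's top-left corner.
        out[cy0:cy1, cx0:cx1] = block[cy0 - ey0:cy1 - ey0, cx0 - ex0:cx1 - ex0]
    return out
```

In the full pipeline, each expanded master/slave block pair would be registered with the ECC-based algorithm before stitching; passing the blocks through unchanged, as in a 90 × 90 image split 3 × 3 with s = 5 (interior blocks of 40 × 40, i.e. d = 30 + 2 × 5), reproduces the original image exactly.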

In addition, when the image is uniformly divided into k × k sub-images, the value of k directly affects the quality of registration. Experimental results show that too small a value of k leads to poor correction in some local areas, while too large a value increases the amount of computation and may cause sub-image pairs to be mismatched. The structural similarity index measure (SSIM) evaluates the similarity between two images by comparing their structural information [28]. In this paper, k is determined based on the SSIM. Because a large k increases computation and may cause mismatched sub-image pairs, k does not exceed 5 in this paper. The specific method for determining k is as follows:

Step 1. For each candidate value m (m ≤ 5), uniformly divide the image pair into m × m sub-images.

Step 2. Calculate the SSIM of each pair of sub-images.

Step 3. Find the minimum of the SSIM values obtained in Step 2 and denote it as $\mathrm{SSIM}_{\min}^{(m)}$.

Step 4. Compare all $\mathrm{SSIM}_{\min}^{(m)}$ values, and set k equal to the m that yields the smallest $\mathrm{SSIM}_{\min}^{(m)}$.

A smaller SSIM between a pair of sub-images indicates lower structural similarity, that is, poorer registration, which means that this pair of sub-images needs fine registration. This method therefore selects the segmentation whose worst-matching pair of sub-images has the minimum SSIM.
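Steps 1 to 4 can be sketched as below. Two simplifications are assumptions rather than details from the text: the SSIM is computed with a single global window per sub-image instead of the usual sliding Gaussian window, and the candidate values of m are taken as 2 to 5. The function names are hypothetical.

```python
import numpy as np


def global_ssim(a, b, data_range=1.0):
    """Single-window SSIM computed over the whole sub-image (a simplification)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mu_a, mu_b = a.mean(), b.mean()
    cov = ((a - mu_a) * (b - mu_b)).mean()
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (a.var() + b.var() + c2))


def choose_k(master, coarse, candidates=(2, 3, 4, 5), data_range=1.0):
    """Return the m whose worst sub-image pair has the lowest SSIM, plus all minima."""
    h, w = master.shape
    min_ssim = {}
    for m in candidates:
        # Step 1: uniform m x m split.
        ys = np.linspace(0, h, m + 1).round().astype(int)
        xs = np.linspace(0, w, m + 1).round().astype(int)
        # Step 2: SSIM of every sub-image pair.
        scores = [global_ssim(master[ys[i]:ys[i + 1], xs[j]:xs[j + 1]],
                              coarse[ys[i]:ys[i + 1], xs[j]:xs[j + 1]], data_range)
                  for i in range(m) for j in range(m)]
        min_ssim[m] = min(scores)                 # Step 3: SSIM_min^(m)
    best = min(min_ssim, key=min_ssim.get)        # Step 4: smallest SSIM_min^(m)
    return best, min_ssim
```

Returning the table of minima alongside k makes it easy to inspect how sensitive the choice is; with the cap of 5, at most four splits are evaluated.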

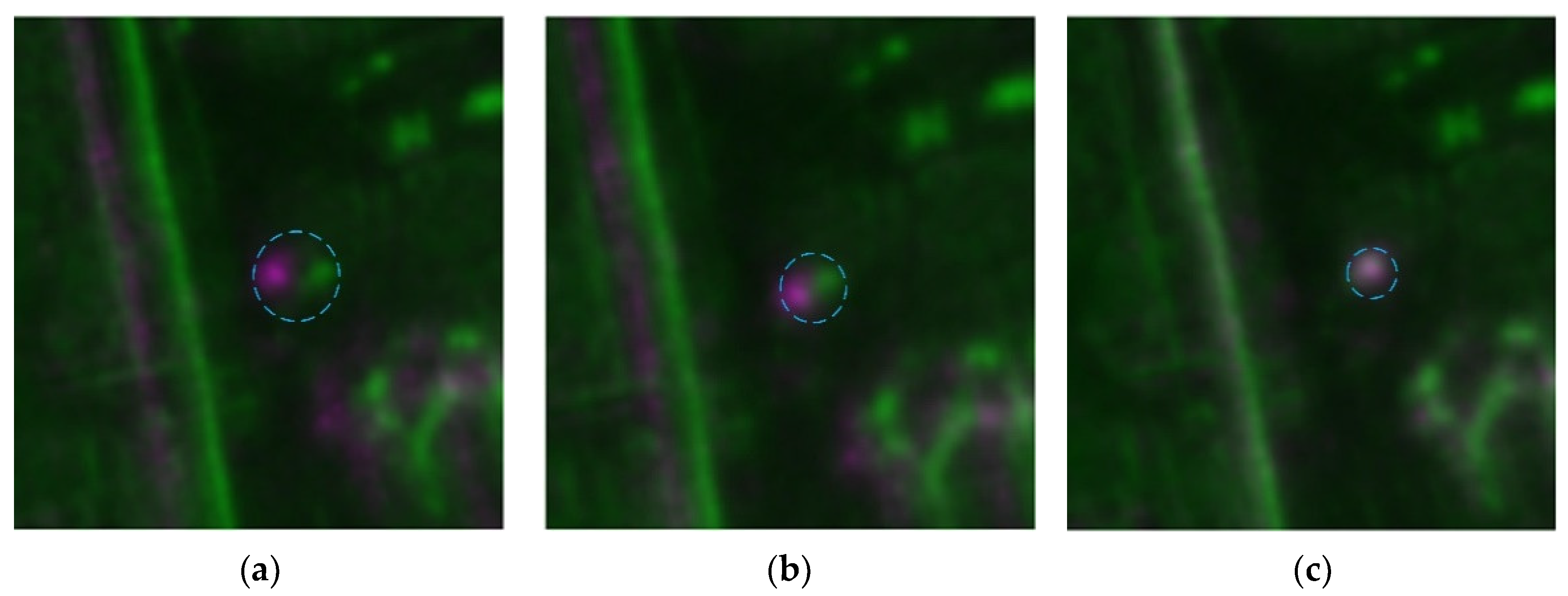

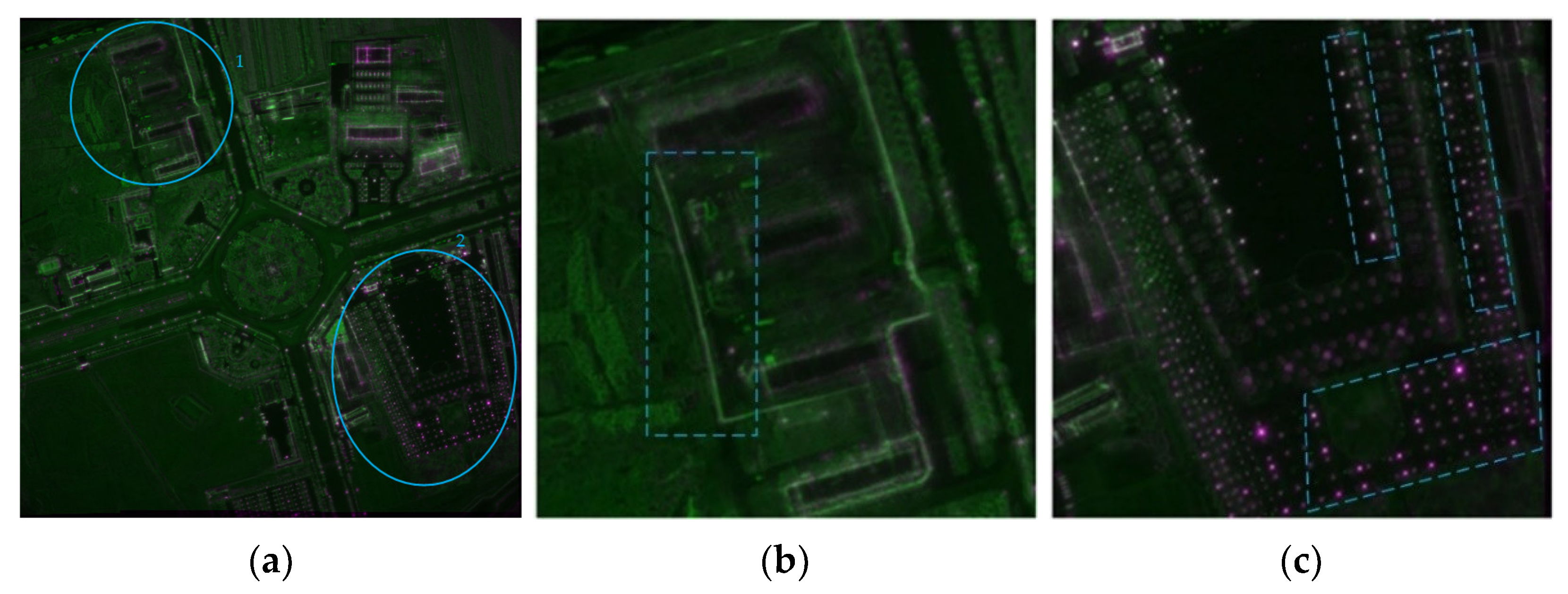

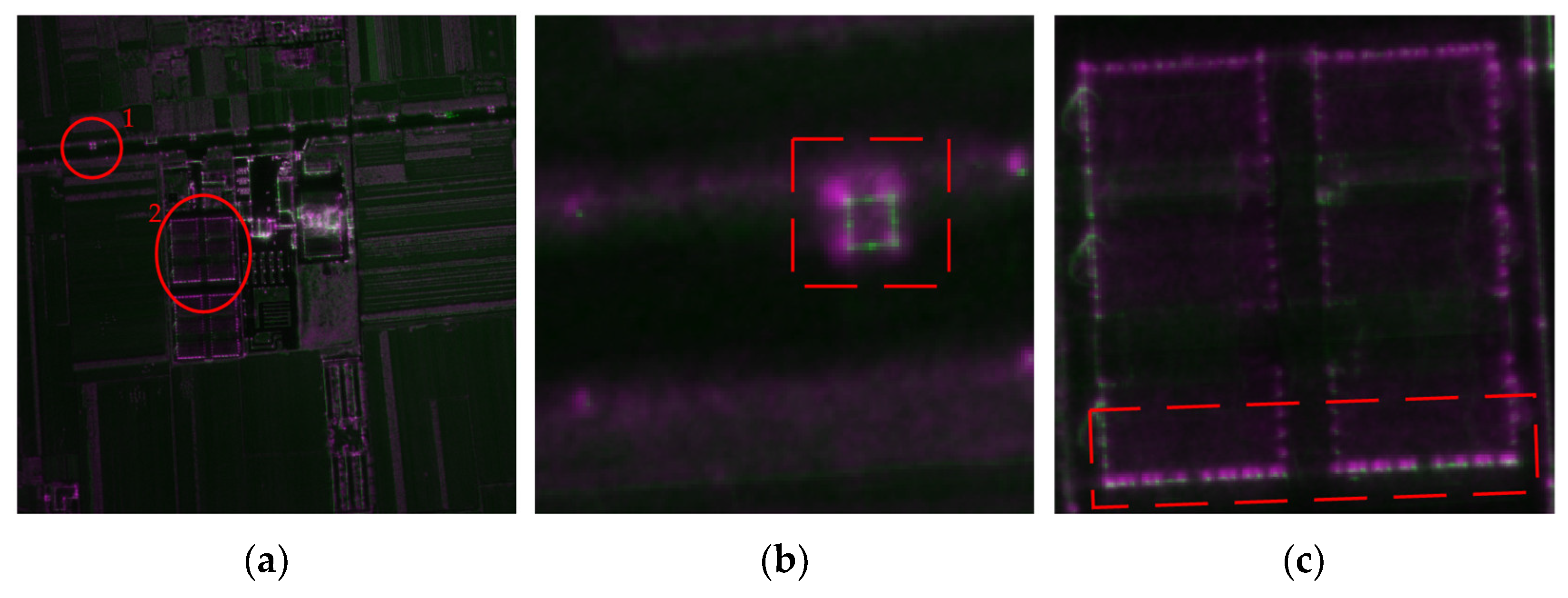

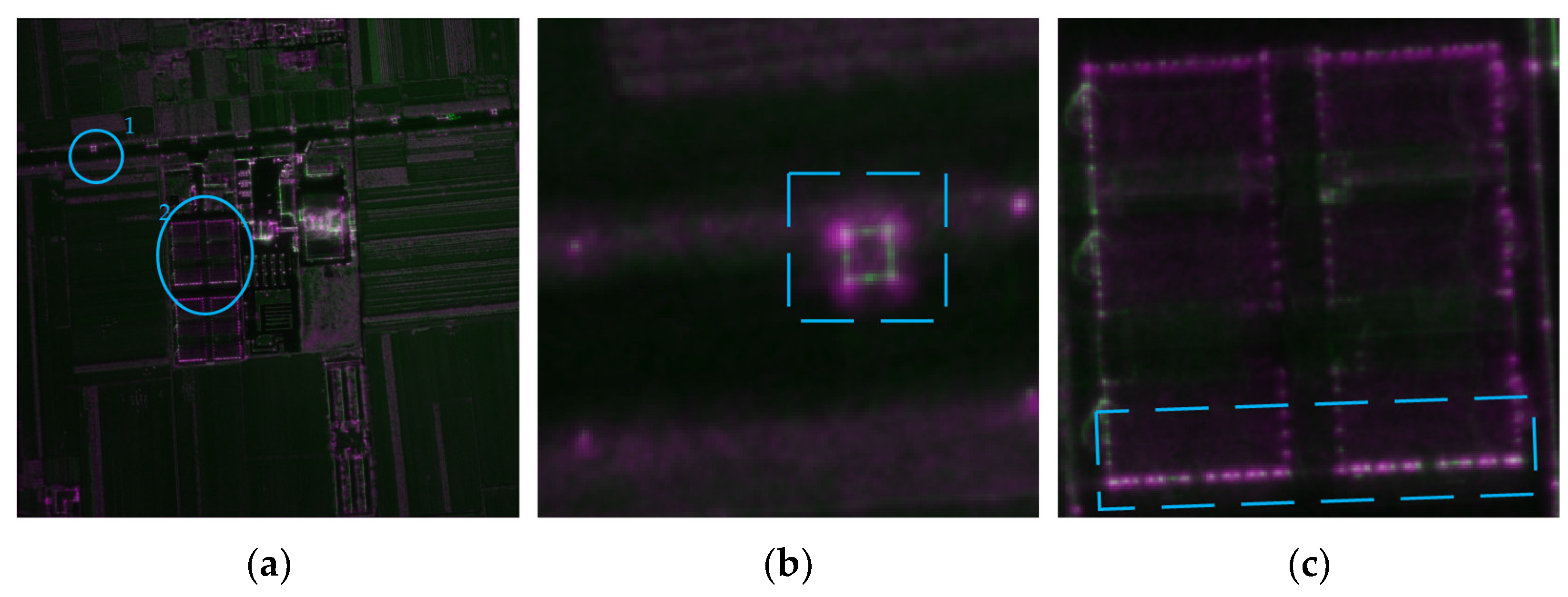

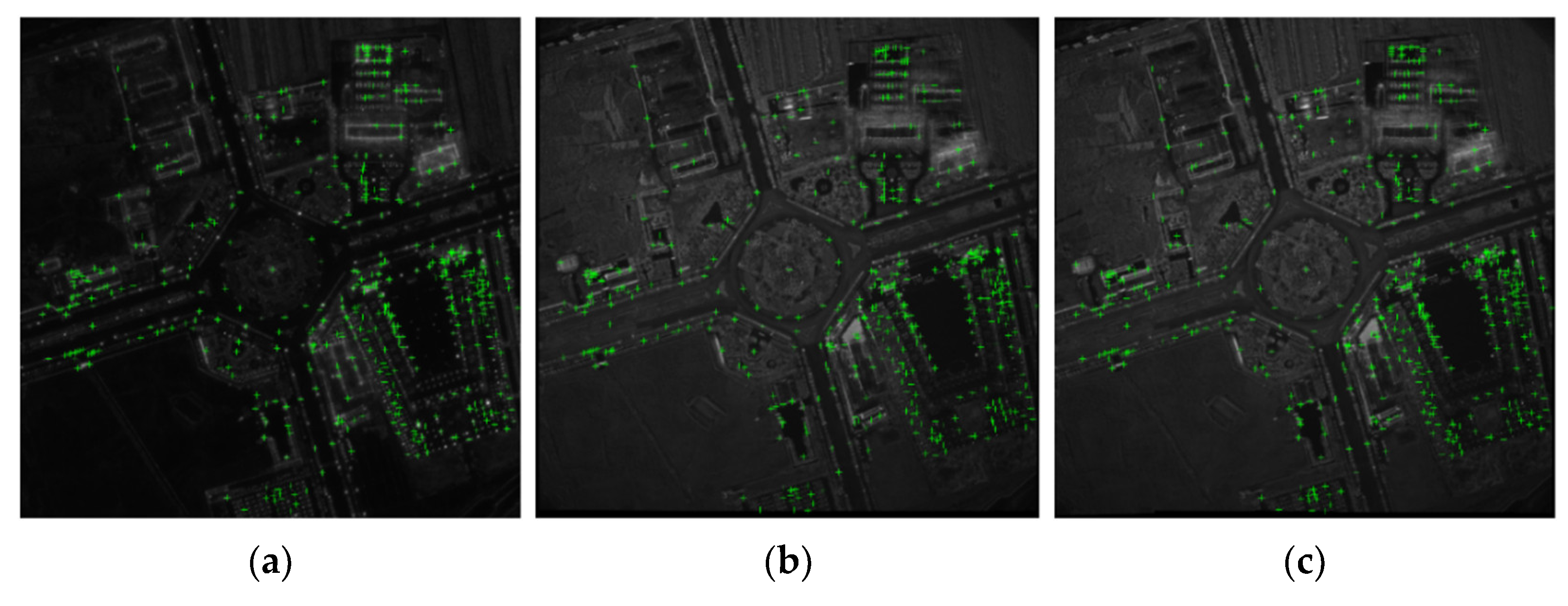

Figure 8a shows the pseudo-color superposition of the master image and the coarse-registration image. Figure 8b shows the pseudo-color superposition of the master image and the accurate-registration image obtained with the ECC-based algorithm. Figure 8c shows the pseudo-color superposition of the master image and the accurate-registration image obtained with the algorithm based on segmentation and the ECC. In the blue-dotted-line area of Figure 8, the red and green points mark the actual positions of the same target in the two images. Measured by the distance between these two points, the error of the coarse-registration image is about 16 pixels. The error of the ECC-based accurate-registration image is about 7 pixels, which shows that the ECC-based algorithm can correct the geometric distortion between images to some extent, but not accurately enough. In the accurate-registration image based on segmentation and the ECC, the two points essentially coincide, with no visible difference. These results show that the proposed algorithm based on segmentation and the ECC corrects local geometric distortion between images more effectively.