Abstract

Crop height is an essential parameter used to monitor overall crop growth, forecast crop yield, and estimate crop biomass in precision agriculture. However, individual maize segmentation, the prerequisite for precision field monitoring, is a challenging task because maize stalks are usually occluded by the leaves of adjacent plants, especially as the plants grow. In this study, we propose a novel method that combines seedling detection and clustering algorithms to segment individual maize plants from UAV-borne LiDAR and RGB images. As seedlings emerged, the images collected by an RGB camera mounted on a UAV platform were processed and used to generate a digital orthophoto map. Based on this orthophoto, the location of each maize seedling was identified by extra-green detection and morphological filtering. A seed point set was then generated and used as input for the clustering algorithm. The fuzzy C-means clustering algorithm was used to segment individual maize plants. For individual plant height estimation, we computed the difference between the maximum elevation value of the LiDAR point cloud and the average elevation value of the bare digital terrain model (DTM) at each corresponding area. Tested on two cultivars, our height estimation approach achieved R2 greater than 0.95, with root mean square errors (RMSE) of 4.55 cm, 3.04 cm, and 3.29 cm and mean absolute percentage errors (MAPE) of 3.75%, 0.91%, and 0.98% at three different growth stages, respectively. Our approach, utilizing UAV-borne LiDAR and RGB cameras, demonstrated promising performance for estimating maize height and its field position.

1. Introduction

Precision agriculture is an approach that uses large data sources in conjunction with advanced crop analytical tools [1]. It helps farmers make more informed decisions by providing precise information about crop location and growth rate [2,3]. Precision agriculture plays an increasingly important role in ensuring adequate food supply, especially as the growth of the global population has led to a higher demand for food. In precision agriculture, remote sensing with unmanned aerial vehicles (UAVs) offers high spatiotemporal resolution and high maneuverability, as well as detailed vegetation height data and multi-angular observations [4].

Crop monitoring provides an informative reference basis for precision agriculture because of its ability to assess overall crop conditions, determine irrigation schedules, and estimate terminal yield [5]. Plant height is a vital phenotypic trait governed by complex genetic mechanisms and can serve as a growth indicator [6,7]. It can be measured directly and indirectly and used to estimate the plant nitrogen usage [8] and biomass [9,10], yield [11,12,13], and quantification of lodged areas [14,15]. Therefore, a fast and accurate method to estimate the height of individual crop plants is essential to precision agriculture schemes.

Traditionally, crop height is obtained by field measurements. The conventional manual method is time-consuming, laborious, destructive, and constrained by random field sampling and human errors [16,17]. A significant amount of research has aimed to develop tools and technologies that help automate some of these manual tasks. Field phenotyping technologies, such as ultrasonic sensors, high-performance RGB cameras, and LiDAR, have been gaining popularity in recent years due to their ability to identify various crop traits in a non-destructive and high-throughput manner [18,19]. For example, the ultrasonic sensor was first used for tree canopy height and volume measurements in the late 1980s [20,21]. It is commonly used in field phenotyping because it is typically inexpensive and user-friendly. Nevertheless, it has some disadvantages, including reduced accuracy as the sensor moves farther from the object because of the larger field of view, sensitivity to temperature because sound speed changes with temperature [22], and difficulties in interpreting sensor data because of variance in measurement conditions and transducer behavior. Some work has been done using RGB cameras mounted on fixed-wing [23] and rotor-based UAVs. Alternatively, UAV imagery can be utilized to create 3D field reconstructions using structure from motion and multi-view stereo to estimate crop height. These algorithms have been applied to the height estimation of various crops including maize [12,24,25,26], sorghum [24,27,28], and wheat [29,30,31,32]. However, using optical remote sensing imagery to predict crop height has great limitations, as optical remote sensing systems can only obtain two-dimensional information; the vertical structure information related to crop height is excluded [17,33,34].

In contrast, UAV-borne LiDAR systems can be used to obtain highly accurate measurements and three-dimensional information of the crop, leading to improved estimation precision of crop structure parameters [34,35,36]. Their performance is also less likely to be influenced by changes in lighting and by wide-range measurements. Methods that use UAV LiDAR data to estimate forest inventory attributes, such as tree height, crown diameter, standing volume, and aboveground biomass [37,38,39], have recently been developed. Unfortunately, only a few studies making use of UAV LiDAR data have concerned agriculture. These few agricultural studies focused solely on plant height [14,15,34,40,41,42] and leaf area estimation [43]. Furthermore, these studies acquired the crop parameters at the group level rather than the individual crop level, failing to fully exploit the potential of 3D point clouds and to meet the needs of precision crop monitoring [44].

To acquire parameters at the individual crop level, individual plants need to be separated or segmented from the population LiDAR points. Su et al. [45] manually separated individual maize plants from the population maize LiDAR points using the cut tool in the CloudCompare software and then extracted individual phenotypic parameters. Jin et al. [44] first proposed a method that combines deep learning and regional growth algorithms to segment individual maize from terrestrial LiDAR (TLS) data. The height of the correctly segmented maize was found to be highly correlated with the manually measured height (R2 > 0.9). Individual maize segmentation is the prerequisite for precision field monitoring at the individual plant level. However, it is a challenging task because maize stalks are usually occluded by the leaves of adjacent plants, especially as the plants grow.

Currently, two main classes of individual object segmentation algorithms for LiDAR point clouds are available, both widely used in forest monitoring: CHM (canopy height model)-based methods [46] and direct point-based methods [47,48,49]. The CHM-based methods use the raster image interpolated from the LiDAR point cloud to depict the top of the forest canopy, which may lead to inherent spatial errors from the interpolated gridded height model [50]. Additionally, CHM-based methods are not suitable for homogeneous, interlocked, and blocked canopies [51]. These limitations can be overcome by point-based methods [34], such as regional growth methods [44,49,52], voxel space projection [53], normalized cut [54], and clustering feature-based methods [55,56,57]. Among them, the fuzzy clustering segmentation method has shown better performance, although the algorithm depends heavily on the choice of seed points [58,59,60]. For LiDAR-scanned maize data, it is not always feasible to choose seed points by using the maximum value within a certain range because the maximum point of an individual maize plant is often not the center of the plant [44]. The main difficulty of segmentation at the individual crop level is therefore to accurately identify individual plant locations.

This study proposes a novel approach for locating individual maize plants using a digital orthophoto map of maize seedlings. The two-dimensional coordinate information of the seedling locations is used to generate a seed point set and later input to the fuzzy C-means clustering algorithm to segment individual maize plants. Furthermore, the maximum elevation values of 3D points based on individual plant segmentation results are used for automatic height estimation.

2. Materials and Methods

2.1. Experimental Design

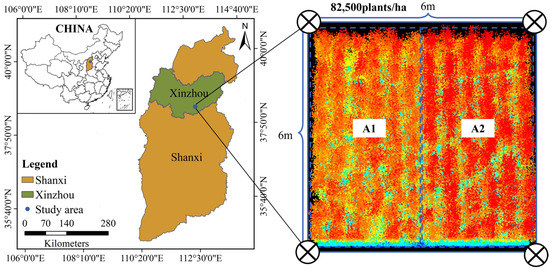

Field experiments were conducted in 2021 at the National Maize Industrial Technology System Experimental Demonstration Base, Shanxi, China (38°27′N, 112°43′E, altitude 776 m). The size of the experimental area was 6 m × 6 m, as shown in Figure 1. The plot was sown with a column spacing of 55 cm, a plant spacing of 25 cm, and a planting density of approximately 82,500 plants/ha (which corresponds to a plant density of about 8 plants/m2). Lianchuang 825 (A1) and Shaandan 650 (A2) maize cultivars were planted in 6 rows on each side. Four GCPs (ground control points) were set up and evenly distributed in the field, with two GCPs on each side. The maize was sown on 4 May, and the seedlings emerged on 19 May. The jointing stage began on 10 June and the tasseling stage on 16 July, and the maize was harvested on 12 September.

Figure 1.

Geographical position of study area.

2.2. Data Acquisition

2.2.1. UAV-Borne LiDAR and RGB Images

A DJI Matrice 600 Pro UAV with a Hummingbird Genius micro-LiDAR system (Beike Tianmai, Beijing, China) was used for acquiring 3D point clouds. The UAV LiDAR system is shown in Figure 2a, and the output point cloud data format is LAS 1.2. The main parameters are shown in Table 1. As shown in Figure 2b, a DJI Phantom 4 Pro was used to acquire UAV-RGB images and generate orthophotos.

Figure 2.

UAV platform: (a) DJI Matrice 600 Pro with Genius LiDAR system; (b) DJI Phantom 4 Pro.

Table 1.

LiDAR system parameters.

UAV-LiDAR data and RGB images were collected on 21 May (seedling stage), 18 June (jointing stage), 23 July (tasseling stage), and 17 September (mature stage), corresponding to 17, 45, 80, and 136 days after seeding. For each data acquisition, the UAV flew at 25 m above ground level with a flight speed of 3 m/s. The UAV LiDAR scans were set to 40% overlap between adjacent strips in both the forward and side directions, while the UAV RGB images had 80% overlap in both directions. The specification of the acquired UAV data is shown in Table 2, including the total number of RGB images acquired by each flight, the average ground sampling distance (GSD) of the orthophoto, the total number of LiDAR points acquired by each flight, and the point density of the LiDAR point cloud. In addition, in August 2020, LiDAR data from the same area were collected at different flight heights, ranging from 5 m to 40 m, to quantitatively analyze the influence of flight height on point density and crop height estimation.

Table 2.

Overview of captured images and LiDAR point cloud.

2.2.2. Field Data

The ground truth of the geographical location and height of each maize plant in the plot was obtained by manual measurement. The three-dimensional position of each maize plant was recorded by RTK (HITARGET-V200, Guangzhou, China) at the seedling stage. The horizontal and vertical accuracies of the RTK are ±8 mm and ±15 mm, respectively. The maize plant heights (i.e., the vertical distance from the root to the top) were measured using a leveling rod. In this study, a total of 289 maize samples were measured. The statistics of field-measured maize plant heights at three growth stages are listed in Table 3.

Table 3.

Statistics of field measurements per maize at three growth stages.

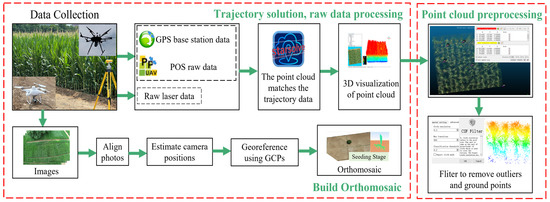

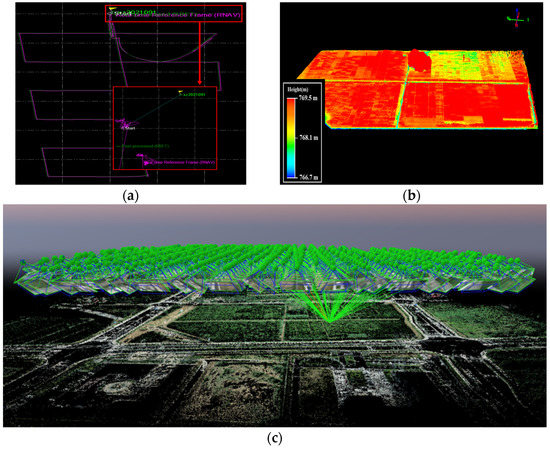

2.3. Data Preprocessing

To obtain a real maize point cloud, the data acquired by the UAV-borne LiDAR must be preprocessed. This included three tasks: data interpretation, point cloud registration, and denoising. The data interpretation consisted of two parts: (1) GPS and INS joint interpretation to obtain the trajectory, and (2) laser point cloud data interpretation, using POSPac UAV 8.3 Lite software and StarSolve software, respectively. Then, the point cloud data were exported in the LAS file format. The LiDAR point clouds at different growth stages were registered by the Align algorithm, in which five pairs of homonymous points were selected to complete the registration. Direct-pass filtering was used to remove the outliers, and cloth simulation filtering (CSF) [61] was used to remove the ground points. The registration and filtering mentioned above were carried out in CloudCompare, an open-source 3D point cloud and mesh processing software. The preprocessing of LiDAR data and the generation of digital orthophoto maps are shown in Figure 3. UAV-borne RGB images at the seedling stage were processed by the Pix4Dmapper software to generate a digital orthophoto map, following four main steps: data import, local initialization, image alignment, and generation of the orthophoto. Both the orthophoto and the LiDAR data were georeferenced to GPS coordinates, so they were registered to each other. The interface of the trajectory solution for the point cloud is shown in Figure 4a, the processed point cloud data are shown in Figure 4b, and an overview of the flight path and the camera locations where images were collected is shown in Figure 4c.

Figure 3.

Trajectory solution, raw LiDAR data processing, point cloud data preprocessing, and orthophoto generation.

Figure 4.

The processing interface: (a) Software interface for point cloud trajectory solution; (b) The processed point cloud data; (c) The flight path and camera location of where images were collected.

2.4. Individual Maize Plant Segmentation and Height Estimation Algorithms

2.4.1. Seed Point Detection Based on Digital Orthophoto Map

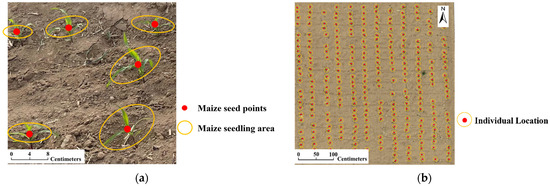

The orthophoto, generated from UAV-RGB images captured at the maize seedling stage, was used to locate individual maize plants by accurate seedling detection. The GSD of the orthophoto was less than 1 cm, and each maize seedling occupied at least five pixels. In this study, we developed an algorithm to extract seed point sets based on extra-green detection and morphological filtering. The orthophotos were taken within 15–20 days after seeding because the field scene during the seedling period is relatively simple, mainly consisting of bare soil, weeds, and seedlings. By that time, all maize seedlings had emerged with no fewer than three leaves, which ensured that all individual maize plants could be detected.

A color space conversion, as shown in Equation (1), was applied to the orthophotos to suppress the information in the blue and red bands in the RGB orthophotos. This made the contrast between seedlings and bare soil more significant by increasing the weight of the green component k, where k < 1.

Then, the Otsu thresholding algorithm was used to extract the seedling area as foreground (still containing noise and fresh green weeds) and to remove the bare soil area as background in the orthophotos. Next, a morphological filter was applied to the foreground image to erode noise and remove fresh green weeds. In detail, a 3 × 3 square structuring element was used to erode the foreground noise, as shown in Equation (2), and a circular operator of 15 cm radius was used to remove fresh green weeds, considering that weeds always grow rapidly in the field.

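Let $I$ denote an image and $E$ a structuring element (in this study, a 3 × 3 square structuring element). The erosion of $I$ by $E$ is denoted $I \ominus E$; the erosion at $(x, y)$ of an image $I$ by $E$ is given in Equation (2), where $D_I$ and $D_E$ are the domains of definition of $I$ and $E$, respectively.

$$(I \ominus E)(x, y) = \min\{\, I(x+i,\, y+j) - E(i, j) \mid (x+i,\, y+j) \in D_I,\ (i, j) \in D_E \,\} \tag{2}$$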

Finally, each extracted seedling area was fitted with a circle and its center was selected as the single plant locus. Based on the above automatically extracted seedling area centers, a maize seed point set was generated, as shown in Figure 5, with the extracted maize seed points marked in red.
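The detection chain described above (green enhancement, Otsu thresholding, 3 × 3 erosion, one location per remaining region) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it swaps in the standard excess-green index 2G − R − B rather than the k-weighted conversion of Equation (1), it omits the 15 cm circular weed filter, and it uses the region centroid in place of a fitted circle center. All function names are hypothetical.

```python
import numpy as np
from collections import deque


def excess_green(rgb):
    """Standard excess-green index 2G - R - B on chromaticity-normalised channels.
    Assumption: this stands in for the paper's k-weighted Equation (1)."""
    rgb = rgb.astype(float)
    total = rgb.sum(axis=2)
    total[total == 0] = 1.0  # avoid division by zero on black pixels
    r, g, b = (rgb[..., i] / total for i in range(3))
    return 2.0 * g - r - b


def otsu_threshold(values, bins=256):
    """Threshold that maximises the between-class variance (Otsu's method)."""
    hist, edges = np.histogram(values, bins=bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    w0 = np.cumsum(hist)              # number of pixels at or below each bin
    w1 = w0[-1] - w0                  # number of pixels above each bin
    s0 = np.cumsum(hist * centers)
    mu0 = s0 / np.maximum(w0, 1)
    mu1 = (s0[-1] - s0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    return centers[np.argmax(between)]


def erode3x3(mask):
    """Binary erosion with a 3 x 3 square structuring element."""
    padded = np.pad(mask, 1, constant_values=False)
    out = np.ones_like(mask, dtype=bool)
    h, w = mask.shape
    for dy in range(3):
        for dx in range(3):
            out &= padded[dy:dy + h, dx:dx + w]
    return out


def component_centroids(mask):
    """Centroid (row, col) of every 4-connected foreground region."""
    seen = np.zeros_like(mask, dtype=bool)
    centroids = []
    h, w = mask.shape
    for y, x in zip(*np.nonzero(mask)):
        if seen[y, x]:
            continue
        queue, pixels = deque([(y, x)]), []
        seen[y, x] = True
        while queue:
            cy, cx = queue.popleft()
            pixels.append((cy, cx))
            for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                    seen[ny, nx] = True
                    queue.append((ny, nx))
        centroids.append(tuple(np.mean(pixels, axis=0)))
    return centroids


def detect_seed_points(rgb):
    """Return one (row, col) seed point per detected green region."""
    exg = excess_green(rgb)
    mask = exg > otsu_threshold(exg.ravel())
    return component_centroids(erode3x3(mask))
```

The returned pixel coordinates would still need to be converted to map coordinates through the orthophoto georeference before they can serve as cluster centers for the LiDAR points.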

Figure 5.

The diagram of extracted maize seedling area and seed points: (a) UAV-borne RGB image; (b) Orthophoto.

2.4.2. Individual Maize Plant Segmentation Based on LiDAR Point Cloud

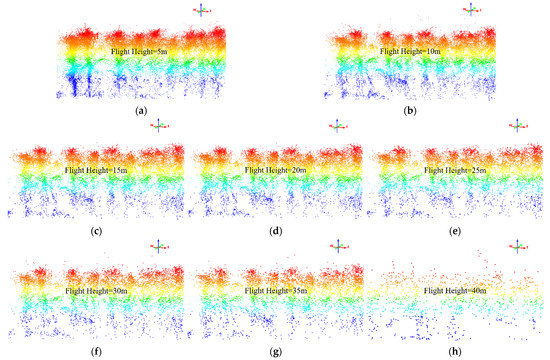

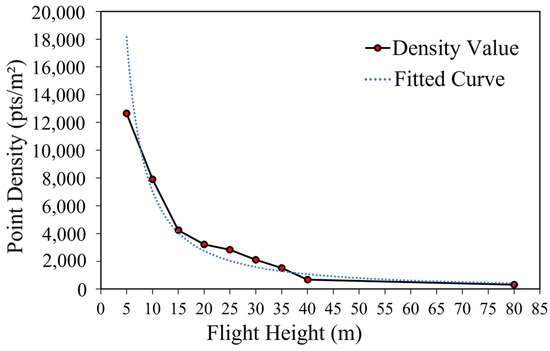

With the growth of maize plants, the maize field gradually forms a closed canopy layer of overlapping leaves. Because of this, the acquired UAV-borne LiDAR data missed some parts of the lower stalks but captured more complete information about the middle and upper layers of the maize plants [43,62]. The first task was to decide the flight height for acquiring the LiDAR point cloud. Based on field experience, the heights of maize plants were in the range of 1.5–3.5 m at the mature stage, so LiDAR data were acquired at UAV flight heights from 5 m to 40 m. A side view of the LiDAR point cloud acquired at different altitudes in one area is shown in Figure 6. Figure 6 shows that when the altitude was less than 10 m, there were many noise points at the top of the point cloud due to the downward airflow of the UAV. When the altitude was greater than 30 m, the point cloud could no longer present an obvious spatial structure of the maize plants. The difference in the quality of the point cloud data captured at altitudes of 10 m–30 m was not obvious, but the rootstalk parts of the maize plants gradually disappeared from the point cloud as the altitude increased. Considering that lower flight altitudes reduce the data collection efficiency (a smaller area is covered in each scan) and that only the plant height was extracted, without the need for complete leaf information, a flight height of 25 m was chosen to collect LiDAR point cloud data in this study. In addition, the LiDAR point cloud acquisition of 10 hectares of a maize field was completed in 15 min at the UAV height of 25 m.

Figure 6.

Side views of maize point cloud data in the same plot of different flight heights: (a) flight height = 5 m; (b) flight height = 10 m; (c) flight height = 15 m; (d) flight height = 20 m; (e) flight height = 25 m; (f) flight height = 30 m; (g) flight height = 35 m; (h) flight height = 40 m.

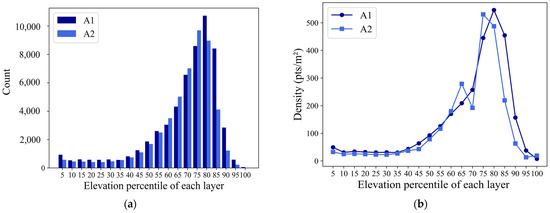

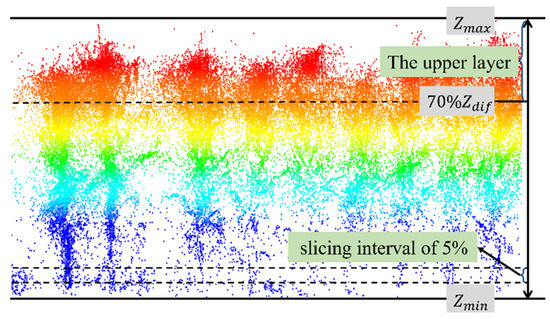

The LiDAR point cloud of the two cultivars A1 and A2 in the experimental area was sliced horizontally into 20 layers, each spanning 5% of the elevation range (the difference between the maximum and minimum LiDAR elevation), from 0% to 100%. The distributions of the number of LiDAR points and of the point density over the 20 layers for the two cultivars are shown in Figure 7. Figure 7 shows that the majority of the LiDAR points fall in the 70–80% interval of the elevation range. Therefore, the part of the maize in the 70–80% elevation interval was recorded most completely in the LiDAR points, and the use of LiDAR points in this interval was crucial for accurate segmentation of individual maize plants. In this study, the upper point cloud above 70% of the elevation range was used for individual maize plant segmentation and plant height estimation, as shown in Figure 8.
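The slicing and upper-layer selection described above can be illustrated with a short sketch. It assumes the point cloud is an (n, 3) NumPy array of x, y, z coordinates with ground points already removed; the function names and the `fraction` parameter are hypothetical.

```python
import numpy as np


def layer_counts(z, n_layers=20):
    """Count points in equal-height slices between min(z) and max(z).
    With n_layers=20 each slice spans 5% of the elevation range."""
    counts, edges = np.histogram(z, bins=n_layers, range=(z.min(), z.max()))
    return counts, edges


def upper_layer(points, fraction=0.70):
    """Keep the points whose elevation lies above `fraction` of the elevation range."""
    z = points[:, 2]
    cutoff = z.min() + fraction * (z.max() - z.min())
    return points[z >= cutoff]
```

Dividing each layer count by the plot area would give a per-layer point density comparable in spirit to Figure 7b.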

Figure 7.

Point cloud distribution at each layer (interval: 5%): (a) LiDAR point cloud distribution; (b) Point density distribution.

Figure 8.

LiDAR point cloud slicing interval with the upper layer indicated.

Using the maize plant locations obtained at the seedling stage as the initial input, the number of clusters and their initial cluster centers were determined. The upper layer of the LiDAR point cloud was clustered into individual maize plants using the fuzzy C-means (FCM) clustering algorithm based on Euclidean distance in 2D coordinates. After individual maize plant segmentation, each cluster was used to provide the local elevation maximum of each maize plant for the subsequent plant height estimation.

The FCM algorithm combines the clustering algorithm with fuzzy theory and is a data clustering method based on the optimization of an objective function [55]. Each sample point has a membership degree for each class, and for any given point, the membership degrees over all classes sum to 1. The fuzzy clustering problem can be formulated as Equation (3):

$$\min J_m(U, V) = \sum_{i=1}^{c} \sum_{j=1}^{n} u_{ij}^{m}\, d_{ij}^{2} \tag{3}$$

where $X = \{x_1, x_2, \ldots, x_n\} \subset \mathbb{R}^s$ symbolizes the LiDAR points, $s$ is the dimension of the sample space (in our case, $s = 3$), $n$ is the number of samples, $c$ is the number of clustering classes, $m > 1$ is a fuzzy factor, and $d_{ij} = \lVert x_j - v_i \rVert$ is the distance between sample point $x_j$ and the $i$th cluster center $v_i$, with $V = \{v_1, v_2, \ldots, v_c\}$ and, similarly, $v_i \in \mathbb{R}^s$. The fuzzy membership matrix $U = [u_{ij}]_{c \times n}$ appears in Equation (3), and $u_{ij}$ is the membership degree of point $x_j$ belonging to the $i$th class, which satisfies the following conditions:

$$\sum_{i=1}^{c} u_{ij} = 1, \qquad u_{ij} \in [0, 1] \tag{4}$$

The steps of FCM to segment individual maize plants are: (1) set the seed point set of maize plants detected at the seedling stage as the initial cluster centers; (2) calculate the membership degree matrix, as shown in Equation (5); (3) compute the cluster centers using Equation (6); (4) stop iterating once the change between iterations $k$ and $k + 1$ is smaller than $\varepsilon$, where $\varepsilon$ is a positive number and $k$ is the number of iterations.

$$u_{ij} = \left[ \sum_{l=1}^{c} \left( \frac{d_{ij}}{d_{lj}} \right)^{\frac{2}{m-1}} \right]^{-1} \tag{5}$$

$$v_i = \frac{\sum_{j=1}^{n} u_{ij}^{m}\, x_j}{\sum_{j=1}^{n} u_{ij}^{m}} \tag{6}$$

This procedure converges to a local minimum. As a result, each prototype is placed as close as possible to the core of its own data and, at the same time, as far as possible from the other prototypes.
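The FCM steps listed above can be sketched in NumPy as follows. This is a minimal sketch rather than the authors' code: it clusters on 2D coordinates, takes the seedling locations as initial centers, and stops when the total shift of the centers falls below `eps`, which is an assumed form of the stopping condition. The function name and default parameter values are hypothetical.

```python
import numpy as np


def seeded_fcm(xy, seeds, m=2.0, eps=1e-4, max_iter=100):
    """Fuzzy C-means on 2D coordinates, initialised from known seed points.

    xy    : (n, 2) array of LiDAR point coordinates
    seeds : (c, 2) array of initial cluster centres (seedling locations)
    Returns (centres, membership matrix of shape (c, n), hard labels).
    """
    xy = np.asarray(xy, dtype=float)
    centres = np.asarray(seeds, dtype=float).copy()
    for _ in range(max_iter):
        # d[i, j] = distance from centre i to point j, floored to avoid division by zero
        d = np.linalg.norm(centres[:, None, :] - xy[None, :, :], axis=2)
        d = np.maximum(d, 1e-12)
        # membership update: u_ij = 1 / sum_l (d_ij / d_lj)^(2 / (m - 1))
        inv = d ** (-2.0 / (m - 1.0))
        u = inv / inv.sum(axis=0, keepdims=True)
        # centre update: mean of the points weighted by u_ij^m
        w = u ** m
        new_centres = (w @ xy) / w.sum(axis=1, keepdims=True)
        shift = np.linalg.norm(new_centres - centres)
        centres = new_centres
        if shift < eps:  # assumed stopping rule: centres barely moved
            break
    return centres, u, u.argmax(axis=0)
```

Each point is finally assigned to the cluster with the largest membership. The full distance array has shape (c, n), so a large plot may need to be processed in tiles.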

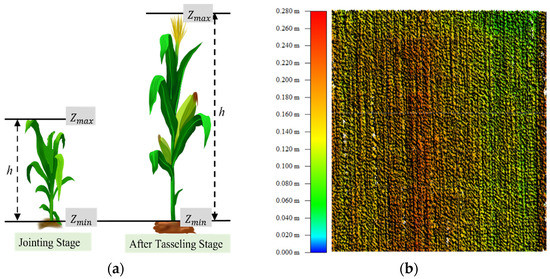

2.4.3. Extraction of Individual Maize Plant Height

Plant height, which is an important three-dimensional crop structure parameter, is closely related to crop biomass and yield. Based on the processes described in Section 2.4.1 and Section 2.4.2, it can be extracted directly by subtracting the lowest point from the highest point of each isolated plant cluster, as shown in Figure 9a. The segmented maize individuals were indexed one by one. The local maximum of each plant was extracted from the clusters of the maize point cloud, and the lowest point of each maize plant was obtained from the corresponding DTM generated at the seedling stage. The DTM was extracted from the LiDAR point cloud using the CSF filter provided in the CloudCompare software, and the classified ground points were interpolated using the Inverse Distance Weighted (IDW) method [50,63] to generate a DTM with 0.01 m resolution, as shown in Figure 9b. IDW is an interpolation method that effectively estimates the spatial distribution of attribute values and has been applied to DTM generation [50].
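The height extraction can be illustrated with a small sketch that combines IDW interpolation of the ground elevation with the cluster maximum. It is a simplification under stated assumptions: the ground elevation is interpolated directly from classified ground points at the cluster's mean horizontal position, instead of being averaged from a 0.01 m DTM raster, and the IDW power and neighbour count are hypothetical defaults.

```python
import numpy as np


def idw_elevation(ground_xyz, query_xy, power=2.0, k=8):
    """Inverse-distance-weighted ground elevation at one (x, y) location,
    using the k nearest ground points."""
    ground_xyz = np.asarray(ground_xyz, dtype=float)
    d = np.linalg.norm(ground_xyz[:, :2] - np.asarray(query_xy, dtype=float), axis=1)
    nearest = np.argsort(d)[:k]
    d, z = d[nearest], ground_xyz[nearest, 2]
    if d[0] < 1e-9:  # query coincides with a ground point
        return float(z[0])
    w = 1.0 / d ** power
    return float(np.sum(w * z) / np.sum(w))


def plant_height(cluster_xyz, ground_xyz):
    """Maximum elevation of one plant cluster minus the ground elevation beneath it."""
    cluster_xyz = np.asarray(cluster_xyz, dtype=float)
    centre_xy = cluster_xyz[:, :2].mean(axis=0)
    return float(cluster_xyz[:, 2].max() - idw_elevation(ground_xyz, centre_xy))
```

Applying `plant_height` to every cluster produced by the segmentation step yields one height estimate per plant.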

Figure 9.

(a) Diagram of maize height estimation; (b) DTM at the seedling stage.

2.5. Evaluation Metrics

The performance of the developed algorithm for locating individual maize plants was quantitatively evaluated using True Positive (TP), False Negative (FN), False Positive (FP), Recall (r), Precision (p), and F-Score (F), as shown in Equations (7)–(9), where TP is the number of detected maize plants that were real maize plants, FN is the number of real maize plants missed by the detection, and FP is the number of objects incorrectly detected as maize plants. A detection was counted as correct when the detected plant location was within 10 cm of the actual plant location in the plant candidate zone. Both recall and precision have a natural interpretation in terms of probability. Recall is the ratio of TP to the number of real maize plants, while precision is the ratio of TP to the number of detected maize plants. The F-score takes into account both accurate and missed detections. It ranges from 0 to 1, with higher values representing higher detection accuracy.
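The detection metrics can be computed as in the sketch below. It assumes the conventional definitions r = TP / (TP + FN), p = TP / (TP + FP), and F = 2pr / (p + r), and it uses a simple greedy nearest-neighbour matching with a 0.10 m tolerance; the matching strategy and the function names are illustrative assumptions, not taken from the paper.

```python
import math


def match_detections(truth, detected, tol=0.10):
    """Greedy one-to-one matching of detections to reference positions.
    A detection counts as correct when it lies within `tol` metres."""
    unmatched = list(detected)
    tp = 0
    for tx, ty in truth:
        best = min(unmatched,
                   key=lambda p: math.hypot(p[0] - tx, p[1] - ty),
                   default=None)
        if best is not None and math.hypot(best[0] - tx, best[1] - ty) <= tol:
            unmatched.remove(best)
            tp += 1
    fn = len(truth) - tp   # reference plants with no detection
    fp = len(unmatched)    # detections with no reference plant
    return tp, fn, fp


def detection_scores(tp, fn, fp):
    """Recall, precision, and F-score from the three counts."""
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    if precision + recall == 0:
        return recall, precision, 0.0
    return recall, precision, 2 * precision * recall / (precision + recall)
```

For example, 289 reference plants that are all matched with no spurious detections give recall, precision, and F-score of 1.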

The extraction accuracy of plant height was evaluated using three evaluation indicators: the coefficient of determination (R2), root mean square error (RMSE), and mean absolute percentage error (MAPE), calculated using Equations (10)–(12).

Here, $y_i$ and $\hat{y}_i$ are the observed and calculated plant heights, respectively, and $n$ is the number of measured or estimated height values.
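The three accuracy indicators can be computed as in the following sketch. It assumes the common definitions: R2 as one minus the ratio of the residual sum of squares to the total sum of squares, RMSE as the square root of the mean squared difference, and MAPE as the mean absolute difference relative to the observed height, in percent. The paper's exact form of R2 may differ, for example a squared correlation coefficient.

```python
import math


def height_metrics(observed, estimated):
    """Return (R2, RMSE, MAPE in percent) for paired height values."""
    n = len(observed)
    mean_obs = sum(observed) / n
    ss_res = sum((o - e) ** 2 for o, e in zip(observed, estimated))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    r2 = 1.0 - ss_res / ss_tot
    rmse = math.sqrt(ss_res / n)
    mape = 100.0 / n * sum(abs(o - e) / o for o, e in zip(observed, estimated))
    return r2, rmse, mape
```

RMSE is returned in the units of the input heights, so heights supplied in centimetres give an RMSE in centimetres.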

3. Results

3.1. Determination of the Optimal k Value

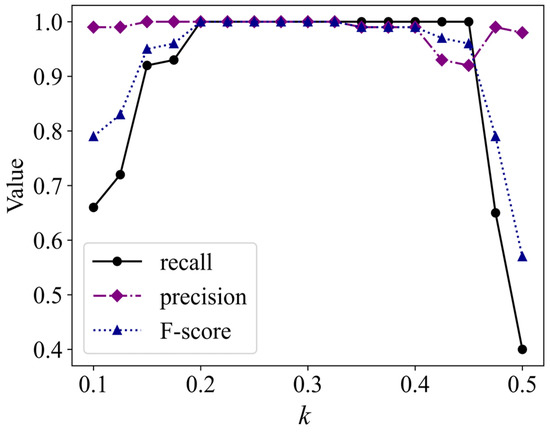

The influence of the key parameter k on the accuracy of plant seed point set extraction was analyzed by adjusting the value of the weight k controlling the green component in Equation (1). The range of k was chosen from 0.1 to 0.5. The experiments and analysis were carried out in the experimental area with the specific detection accuracy shown in Figure 10, which showed that the k value had a significant effect on the recall, precision, and F-score. Plants at the seedling stage in the field were detected correctly when k takes the value of 0.2–0.3. In this study, k = 0.25 was selected for the seed point set extraction.

Figure 10.

Location detection accuracy versus parameter k.

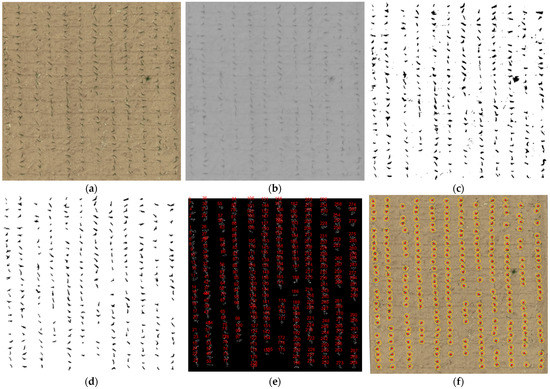

The original orthophoto of the experimental area is shown in Figure 11a. Firstly, k = 0.25 was substituted into Equation (1) for color space conversion (Figure 11b), and binary segmentation using the Otsu thresholding algorithm was applied to identify maize seedling locations, as shown in Figure 11c. Secondly, the maize seedling regions were extracted by morphological filtering, as shown in Figure 11d. Then, the number of seedlings was counted (Figure 11e), each extracted plant area was fitted with a circle, and its center was selected as the single plant locus to obtain the maize plant seed point set marked in red (Figure 11f). All 289 maize seed positions in the plot were correctly detected. The detection rate was 100% for UAV images taken after the maize seedlings had emerged with no fewer than three leaves per plant.

Figure 11.

Extraction of seed point set: (a) orthophoto, (b) image processed by color space conversion, (c) the result map of extra-green detection, (d) seedling regions, (e) the number of seedlings, (f) seed point set.

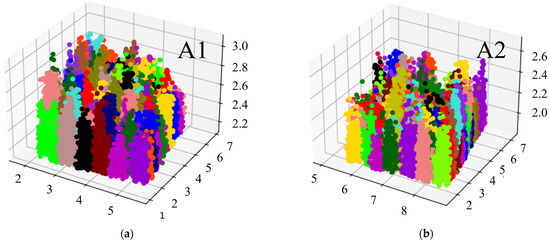

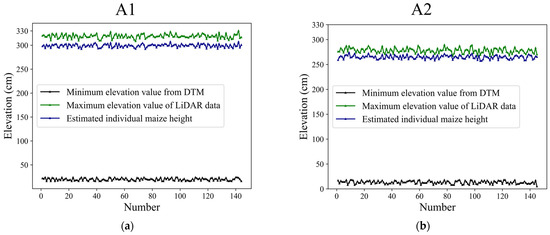

3.2. Estimation of Individual Maize Plant Height

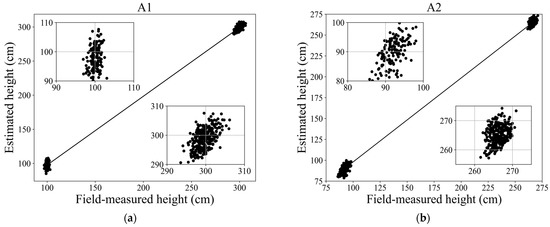

As described in Section 2.4.2, the upper point cloud collected at the mature stage was used to visualize the results of segmentation and plant height estimation. There were 144 maize plants of A1 and 145 maize plants of A2, as shown in Figure 12a,b. The difference between the maximum elevation value of the LiDAR point cloud and the average elevation value of the bare DTM at each corresponding area was computed to estimate individual maize plant height. The maximum elevation value, minimum elevation value, and the estimated heights corresponding to the local area of each maize plant are shown in Figure 13a,b.

Figure 12.

Segmentation of individual maize plants: (a) A1, (b) A2.

Figure 13.

The maximum elevation of LiDAR data, the elevation of DTM, and the estimated height: (a) A1, (b) A2.

3.3. Quantitative Analysis of Height Estimation Accuracy

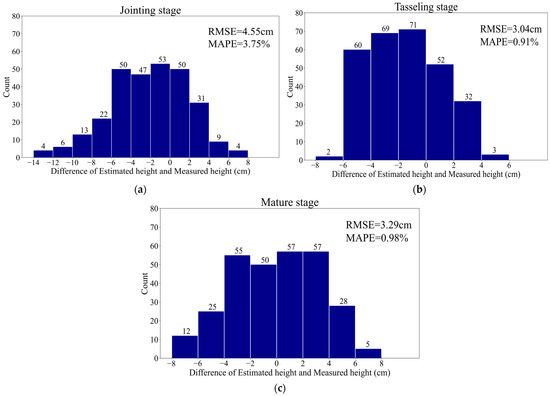

The height estimation errors of the two cultivars (A1, A2) were analyzed to assess the algorithm performance at each growth stage. The distributions of the errors for the 289 maize plants at the jointing, tasseling, and mature stages are shown in Figure 14a–c, respectively, with the horizontal axis showing the difference between the estimated height and the field-measured height (ground truth). Plant trait surveys were completed on the day of the aerial photography by researchers from the Cultivation and Physiology Research Unit of the Maize Research Institute of Shanxi Agricultural University, who provided the measured plant height data (as shown in Table 3). The accuracies of the maize plant height estimation are shown in Table 4.

Figure 14.

Maize plant estimation errors at different growth stages: (a) the jointing stage, (b) the tasseling stage, (c) the mature stage.

Table 4.

Statistics of maize height estimation accuracies at three growth stages.

Figure 15 demonstrates the correlations between estimated maize plant height and the field-measured height for the two cultivars, A1 and A2. As can be seen, a good agreement is shown between the estimated and measured maize plant height, with R2 = 0.97 for A1 (Figure 15a) and R2 = 0.96 for A2 (Figure 15b).

Figure 15.

Scatter plots of field-measured heights versus estimated heights during the entire growth period: (a) A1, (b) A2.

4. Discussion

In this study, we developed a novel approach that can locate individual maize plants and estimate their heights at different growth stages using an orthophoto and a UAV LiDAR point cloud. The maize plants were densely distributed in the experimental plot. As the leaves grew, they occluded each other, especially in the later stages of growth. The algorithm for locating individual maize plants achieved an accuracy of 100% in detecting seedlings, with a total of 289 maize plants of two cultivars. The maize plant height estimation results show that the developed approach had the lowest error (RMSE = 3.04 cm) at the tasseling stage and the highest error (RMSE = 4.55 cm) at the jointing stage. We noticed that the accuracy of the maize plant height estimation is not entirely determined by plant occlusion but also depends on the accurate extraction of elevations. In the experiments, the LiDAR point cloud showed that, at the jointing stage, the highest point of a maize plant falls on the leaves on both sides of the plant, so the maximum elevation values of the extracted individual point cloud deviate from the real highest points of the leaves. At the tasseling stage, the tassels protrude by 3–5 cm and form the real highest points, which are relatively easier to extract. Meanwhile, maize stalks at the tasseling stage are upright, have a high water content, and are less affected by the downward airflow introduced by the drone during measurement, resulting in a less noisy and more concentrated point cloud. In contrast, maize stalks at maturity are thin and prone to swaying with the wind, so the point cloud data are relatively noisy, which introduces errors in plant height measurements.

Previous studies have shown that UAV LiDAR data can be used to estimate crop height reliably. Ten Harkel et al. [42] successfully estimated crop heights of potato (R2 = 0.50, RMSE = 12 cm), sugar beet (R2 = 0.70, RMSE = 7.4 cm), and winter wheat (R2 = 0.78, RMSE = 3.4 cm) using UAV-based LiDAR data. Luo et al. [34] found that a LiDAR-derived CHM was able to predict maize height (R2 = 0.649, RMSE = 23.7 cm). Zhou et al. [14] analyzed the plant height of lodged maize and reported an estimation accuracy of R2 = 0.96 and RMSE = 13 cm. Hu et al. [15] used UAV LiDAR to obtain multi-temporal dense point clouds of the experimental area and extracted plant height to assess the self-recovery ability of maize after lodging. Their estimation accuracy for maize plant height was R2 = 0.9824 and RMSE = 6.13 cm at the tasseling stage, and R2 = 0.9470 and RMSE = 12.94 cm at the filling stage. Our estimation accuracy was comparable to that of this most recent UAV LiDAR study [15] and slightly better in terms of RMSE. Although these studies have shown that UAV LiDAR data can produce highly accurate crop height estimates, they focused on height estimation at the level of a group of plants rather than at the level of an individual plant. Jin et al. [44] proposed a method that combines deep learning and regional growth algorithms to segment individual maize plants from terrestrial LiDAR data. The R2 of the height between the automatically segmented and the manually segmented maize plants was higher than 0.9 in all cases, and the RMSE was 0.02 m in all cases. That study showed that monitoring individual plants in the field based on LiDAR data is promising. However, compared with the method of this study, the LiDAR is fixed on the ground and data can be obtained only for a small area in a single experiment, so the efficiency is low.

In our study, the individual maize plants in the field were located, and the locations were then used to estimate plant height from the UAV LiDAR point cloud at different growth stages. The results have shown that the UAV LiDAR system has the potential for efficient extraction of individual crop structure parameters in the field.
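The accuracy measures used in this comparison (R2, RMSE, and MAPE) can be sketched for paired measured and estimated heights as follows. This is a minimal illustration: it assumes that R2 is the squared Pearson correlation between the two series, which the text does not state explicitly, and the sample heights are hypothetical.

```python
import math


def rmse(measured, estimated):
    """Root mean square error of paired height values."""
    n = len(measured)
    return math.sqrt(sum((m - e) ** 2 for m, e in zip(measured, estimated)) / n)


def mape(measured, estimated):
    """Mean absolute percentage error, in percent, relative to measured values."""
    n = len(measured)
    return 100.0 * sum(abs(m - e) / m for m, e in zip(measured, estimated)) / n


def r_squared(measured, estimated):
    """Squared Pearson correlation (assumed definition of R2 for this sketch)."""
    n = len(measured)
    mean_m = sum(measured) / n
    mean_e = sum(estimated) / n
    cov = sum((m - mean_m) * (e - mean_e) for m, e in zip(measured, estimated))
    var_m = sum((m - mean_m) ** 2 for m in measured)
    var_e = sum((e - mean_e) ** 2 for e in estimated)
    return cov * cov / (var_m * var_e)


# Hypothetical one-to-one pairs of plant heights in centimetres.
measured_cm = [100.0, 150.0, 200.0]
estimated_cm = [102.0, 147.0, 201.0]
scores = (r_squared(measured_cm, estimated_cm),
          rmse(measured_cm, estimated_cm),
          mape(measured_cm, estimated_cm))
```

Each measured value is paired with the estimate for the same plant, so the scores reflect plant-level agreement rather than agreement between plot averages.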

Moreover, the results showed that the developed algorithm performed reliably in individual maize plant height estimation compared with most previous research. It is worth mentioning that our performance was calculated from a one-to-one comparison of the manually measured data and the algorithm-estimated data, whereas other research used only an average of randomly sampled crop plant heights in a field as the measured data, which limited their measurement accuracy. In the experiments, we also noticed that the UAV flight height and the LiDAR point density have a large impact on the extraction of phenotypic features of maize plants in the field. By comparing LiDAR data from multiple UAV flight heights, the optimal flight altitude for different tasks can be determined. A quantitative comparison of the point cloud density at different flight heights is presented in Figure 16. When the UAV flight height is higher than 35 m, the point density is clearly low and not suitable for accurate crop monitoring. Between 15 m and 30 m, the point density varies only slightly and is always greater than 2000 pts/m2, which is sufficient for individual maize plant location and height estimation. When the flight height is less than 15 m, the point density is high and suitable for estimating maize plant structure parameters.

Figure 16. The LiDAR point densities corresponding to different UAV flight heights.
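The point density compared across flight heights is a count of returns per unit ground area. A minimal sketch is given below; it assumes planar coordinates in metres and, when no plot area is supplied, approximates the area with the axis-aligned bounding box of the points. The function name `point_density` and the synthetic grid are hypothetical.

```python
def point_density(points_xy, area_m2=None):
    """Return LiDAR points per square metre.

    points_xy -- list of (x, y) coordinates in metres
    area_m2   -- ground area in square metres; if omitted, the area of the
                 axis-aligned bounding box of the points is used (assumption)
    """
    if not points_xy:
        raise ValueError("no points given")
    if area_m2 is None:
        xs = [x for x, _ in points_xy]
        ys = [y for _, y in points_xy]
        area_m2 = (max(xs) - min(xs)) * (max(ys) - min(ys))
    if area_m2 <= 0:
        raise ValueError("area must be positive")
    return len(points_xy) / area_m2


# Synthetic 51 x 51 grid with 2 cm spacing covering a 1 m x 1 m patch.
grid = [(i / 50, j / 50) for i in range(51) for j in range(51)]
density = point_density(grid)
```

A bounding box overestimates the area of an irregular plot, so passing the surveyed plot area as `area_m2` is preferable when it is known. A comparison such as `density > 2000` against the value reported for flight heights of 15–30 m is only illustrative.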

In summary, the experimental results have shown that the UAV remote sensing platform can be used to monitor the height of individual maize plants throughout the growth stages. In particular, the experimental plot used in this study was densely planted, which makes it a demanding test of the algorithm's performance. The accuracy of the acquired LiDAR data is important, so well-controlled data acquisition and a stable flight altitude contribute to the final performance.

5. Conclusions

In this study, the heights of maize plants were estimated in three steps. Firstly, the maize seed point set was extracted from the orthophoto generated from UAV-borne RGB images at the seedling stage. Secondly, the maize population point clouds were segmented based on the seed point set. Finally, the difference between the maximum elevation of the individual point cloud and the average elevation of the bare digital terrain model (DTM) was computed to estimate individual plant height. A comparative analysis of the plant height estimation results for three typical growth stages in the same plot led to the following conclusions.

- (1) A UAV-borne LiDAR point cloud can generate a complete and accurate DTM of a maize field at a relatively early stage of maize growth. The DTM can then be used for bare ground estimation and individual maize plant height estimation, avoiding the occlusion problem that arises as maize plants grow.

- (2) UAV-borne RGB images of the maize planting area can be captured within 15–20 days after sowing and used to generate a digital orthophoto map. Based on the orthophoto, the proposed algorithm can identify individual maize seedlings with no fewer than three leaves and locate their positions with an accuracy of 100%.

- (3) Individual maize plant height can be monitored during the entire growth period by a UAV remote sensing platform equipped with a LiDAR system and an RGB camera. Across the typical growth stages, the height estimation approach for the two cultivars achieved an R2 greater than 0.95, a mean RMSE of 3.63 cm, and a mean MAPE of 1.88%.
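The seed-based segmentation step summarized above can be illustrated with a minimal fuzzy C-means sketch. It assumes that the seedling locations initialize the cluster centres, that clustering uses planar (x, y) coordinates only, and that the fuzzifier is m = 2; these choices, the function name `fuzzy_c_means`, and the sample coordinates are illustrative assumptions rather than the exact configuration of the study.

```python
import math


def fuzzy_c_means(points, seeds, m=2.0, max_iter=100, tol=1e-6):
    """Cluster 2D points with fuzzy C-means, starting from seed centres.

    Returns (centres, labels); labels[j] is the index of the centre with
    the highest membership for points[j].
    """
    centres = [tuple(s) for s in seeds]
    power = 2.0 / (m - 1.0)
    memberships = []
    for _ in range(max_iter):
        # Membership update: u_ij is proportional to d_ij ** (-2 / (m - 1)).
        memberships = []
        for p in points:
            dists = [math.dist(p, c) for c in centres]
            if 0.0 in dists:
                # Point coincides with a centre: give it full membership there.
                row = [1.0 if d == 0.0 else 0.0 for d in dists]
            else:
                row = [d ** -power for d in dists]
            total = sum(row)
            memberships.append([v / total for v in row])
        # Centre update: mean of the points weighted by u_ij ** m.
        new_centres = []
        for i in range(len(centres)):
            weights = [row[i] ** m for row in memberships]
            wsum = sum(weights)
            new_centres.append(tuple(
                sum(w * p[k] for w, p in zip(weights, points)) / wsum
                for k in range(2)))
        shift = max(math.dist(a, b) for a, b in zip(centres, new_centres))
        centres = new_centres
        if shift < tol:
            break
    labels = [max(range(len(centres)), key=row.__getitem__)
              for row in memberships]
    return centres, labels


# Hypothetical planar coordinates (metres) of returns from two plants.
pts = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (1.0, 0.0), (0.9, 0.0), (1.0, 0.1)]
seed_points = [(0.05, 0.05), (0.95, 0.05)]
centres, labels = fuzzy_c_means(pts, seed_points)
```

Starting from the seedling positions fixes the number of clusters to the number of detected plants, so each resulting cluster can be passed to the height computation as one individual point cloud.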

The proposed algorithm for maize seedling location and individual maize plant height estimation provides an automated approach for monitoring maize growth using UAV-borne LiDAR and RGB images. The results have shown that the proposed approach has achieved reasonably high accuracy. It can be used to estimate a variety of crop growth parameters, providing a reference for scientific management and yield estimation. In the early growth stages, the seedling survival rate can be automatically detected. In the later stages of maize growth, yield estimation can be done by monitoring maize structure phenotypic parameters.

Crop field monitoring that utilizes UAV remote sensing data has great advantages because of its high efficiency. However, its performance is limited by the current data: although they give a good indication of the plant canopy structure, they do not allow complete recovery of the three-dimensional structure of the maize plants. Future work will attempt to improve the quality of the LiDAR point cloud by optimizing route settings so that the number of leaves, leaf area index, and other maize growth characteristics can be extracted.

Author Contributions

Conceptualization, F.Y., M.G., and H.W.; methodology, M.G. and X.L.; software, M.G.; validation, M.G. and F.Y.; formal analysis, M.G.; investigation, M.G.; resources, F.Y. and M.G.; data curation, F.Y., M.G., and X.L.; writing—original draft preparation, M.G. and F.Y.; writing—review and editing, H.W.; supervision, F.Y. and H.W.; project administration, F.Y.; funding acquisition, F.Y. and H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of China, grant number 61972363; the Central Government Leading Local Science and Technology Development Fund Project, grant number YDZJSX2021C008; the Postgraduate Education Innovation Project of Shanxi Province, grant numbers 2021Y612 and 2021Y609; and the Shanxi Province Key Research and Development Program Project, grant number 201903D421043.

Data Availability Statement

The original dataset used in this research is available on request from gaomindm@163.com.

Acknowledgments

The authors would like to acknowledge the support from the Cultivation and Physiology Research Unit of the Maize Research Institute of Shanxi Agricultural University; the plant trait surveys were completed by Zhengyu Guo, Zhongdong Zhang, Shuai Gong, Li Chen, and Haoyu Wang. In addition, the authors would like to acknowledge the assistance of data acquisition in the field supported by Xuan Zhang, Fan Xie, Zeliang Ma, Jialun Zhang, and Rui Wang.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| UAV | unmanned aerial vehicle |
| LiDAR | light detection and ranging |
| TSL | terrestrial LiDAR |
| FCM | fuzzy C-means |
| DTM | digital terrain model |
| CHM | canopy height model |
| GCP | ground control point |
| GSD | ground sampling distance |
| CSF | cloth simulation filtering |
| IDW | inverse distance weighted |
| RMSE | root mean square error |
| MAPE | mean absolute percentage error |

References

- Pierce, F.J.; Nowak, P. Aspects of Precision Agriculture. In Advances in Agronomy; Sparks, D.L., Ed.; Elsevier: Amsterdam, The Netherlands, 1999; pp. 1–85.

- Raj, R.; Kar, S.; Nandan, R.; Jagarlapudi, A. Precision Agriculture and Unmanned Aerial Vehicles (UAVs). In Unmanned Aerial Vehicle: Applications in Agriculture and Environment; Avtar, R., Watanabe, T., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 7–23.

- Delavarpour, N.; Koparan, C.; Nowatzki, J.; Bajwa, S.; Sun, X. A Technical Study on UAV Characteristics for Precision Agriculture Applications and Associated Practical Challenges. Remote Sens. 2021, 13, 1204.

- Maes, W.H.; Steppe, K. Perspectives for Remote Sensing with Unmanned Aerial Vehicles in Precision Agriculture. Trends Plant Sci. 2019, 24, 152–164.

- Chang, A.; Jung, J.; Maeda, M.M.; Landivar, J. Crop Height Monitoring with Digital Imagery from Unmanned Aerial System (UAS). Comput. Electron. Agric. 2017, 141, 232–237.

- Tirado, S.B.; Hirsch, C.N.; Springer, N.M. UAV-Based Imaging Platform for Monitoring Maize Growth throughout Development. Plant Direct 2020, 4, e00230.

- Xie, T.; Li, J.; Yang, C.; Jiang, Z.; Chen, Y.; Guo, L.; Zhang, J. Crop Height Estimation Based on UAV Images: Methods, Errors, and Strategies. Comput. Electron. Agric. 2021, 185, 106155.

- Eitel, J.U.H.; Magney, T.S.; Vierling, L.A.; Brown, T.T.; Huggins, D.R. LiDAR Based Biomass and Crop Nitrogen Estimates for Rapid, Non-Destructive Assessment of Wheat Nitrogen Status. Field Crops Res. 2014, 159, 21–32.

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating Biomass of Barley Using Crop Surface Models (CSMs) Derived from UAV-Based RGB Imaging. Remote Sens. 2014, 6, 10395–10412.

- Li, B.; Xu, X.; Zhang, L.; Han, J.; Bian, C.; Li, G.; Liu, J.; Jin, L. Above-Ground Biomass Estimation and Yield Prediction in Potato by Using UAV-Based RGB and Hyperspectral Imaging. ISPRS J. Photogramm. Remote Sens. 2020, 162, 161–172.

- Feng, A.; Zhang, M.; Sudduth, K.A.; Vories, E.D.; Zhou, J. Cotton Yield Estimation from UAV-Based Plant Height. Trans. ASABE 2019, 62, 393–403.

- Geipel, J.; Link, J.; Claupein, W. Combined Spectral and Spatial Modeling of Corn Yield Based on Aerial Images and Crop Surface Models Acquired with an Unmanned Aircraft System. Remote Sens. 2014, 6, 10335–10355.

- Barrero Farfan, I.D.; Murray, S.C.; Labar, S.; Pietsch, D. A Multi-Environment Trial Analysis Shows Slight Grain Yield Improvement in Texas Commercial Maize. Field Crops Res. 2013, 149, 167–176.

- Zhou, L.; Gu, X.; Cheng, S.; Yang, G.; Shu, M.; Sun, Q. Analysis of Plant Height Changes of Lodged Maize Using UAV-LiDAR Data. Agriculture 2020, 10, 146.

- Hu, X.; Sun, L.; Gu, X.; Sun, Q.; Wei, Z.; Pan, Y.; Chen, L. Assessing the Self-Recovery Ability of Maize after Lodging Using UAV-LiDAR Data. Remote Sens. 2021, 13, 2270.

- Khan, Z.; Chopin, J.; Cai, J.; Eichi, V.-R.; Haefele, S.; Miklavcic, S. Quantitative Estimation of Wheat Phenotyping Traits Using Ground and Aerial Imagery. Remote Sens. 2018, 10, 950.

- Liu, F.; Hu, P.; Zheng, B.; Duan, T.; Zhu, B.; Guo, Y. A Field-Based High-Throughput Method for Acquiring Canopy Architecture Using Unmanned Aerial Vehicle Images. Agric. For. Meteorol. 2021, 296, 108231.

- Shakoor, N.; Lee, S.; Mockler, T.C. High Throughput Phenotyping to Accelerate Crop Breeding and Monitoring of Diseases in the Field. Curr. Opin. Plant Biol. 2017, 38, 184–192.

- Wang, X.; Silva, P.; Bello, N.M.; Singh, D.; Evers, B.; Mondal, S.; Espinosa, F.P.; Singh, R.P.; Poland, J. Improved Accuracy of High-Throughput Phenotyping From Unmanned Aerial Systems by Extracting Traits Directly From Orthorectified Images. Front. Plant Sci. 2020, 11, 587093.

- Fricke, T.; Richter, F.; Wachendorf, M. Assessment of Forage Mass from Grassland Swards by Height Measurement Using an Ultrasonic Sensor. Comput. Electron. Agric. 2011, 79, 142–152.

- Lee, W.S.; Alchanatis, V.; Yang, C.; Hirafuji, M.; Moshou, D.; Li, C. Sensing Technologies for Precision Specialty Crop Production. Comput. Electron. Agric. 2010, 74, 2–33.

- Barker, J.; Zhang, N.; Sharon, J.; Steeves, R.; Wang, X.; Wei, Y.; Poland, J. Development of a Field-Based High-Throughput Mobile Phenotyping Platform. Comput. Electron. Agric. 2016, 122, 74–85.

- Ziliani, M.; Parkes, S.; Hoteit, I.; McCabe, M. Intra-Season Crop Height Variability at Commercial Farm Scales Using a Fixed-Wing UAV. Remote Sens. 2018, 10, 2007.

- Malambo, L.; Popescu, S.C.; Murray, S.C.; Putman, E.; Pugh, N.A.; Horne, D.W.; Richardson, G.; Sheridan, R.; Rooney, W.L.; Avant, R.; et al. Multitemporal Field-Based Plant Height Estimation Using 3D Point Clouds Generated from Small Unmanned Aerial Systems High-Resolution Imagery. Int. J. Appl. Earth Obs. Geoinf. 2018, 64, 31–42.

- Varela, S.; Assefa, Y.; Vara Prasad, P.V.; Peralta, N.R.; Griffin, T.W.; Sharda, A.; Ferguson, A.; Ciampitti, I.A. Spatio-Temporal Evaluation of Plant Height in Corn via Unmanned Aerial Systems. J. Appl. Remote Sens. 2017, 11, 1.

- Che, Y.; Wang, Q.; Xie, Z.; Zhou, L.; Li, S.; Hui, F.; Wang, X.; Li, B.; Ma, Y. Estimation of Maize Plant Height and Leaf Area Index Dynamics Using an Unmanned Aerial Vehicle with Oblique and Nadir Photography. Ann. Bot. 2020, 126, 765–773.

- Watanabe, K.; Guo, W.; Arai, K.; Takanashi, H.; Kajiya-Kanegae, H.; Kobayashi, M.; Yano, K.; Tokunaga, T.; Fujiwara, T.; Tsutsumi, N.; et al. High-Throughput Phenotyping of Sorghum Plant Height Using an Unmanned Aerial Vehicle and Its Application to Genomic Prediction Modeling. Front. Plant Sci. 2017, 8, 421.

- Hu, P. Estimation of Plant Height Using a High Throughput Phenotyping Platform Based on Unmanned Aerial Vehicle and Self-Calibration: Example for Sorghum Breeding. Eur. J. Agron. 2018, 95, 24–32.

- Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of Winter-Wheat above-Ground Biomass Based on UAV Ultrahigh-Ground-Resolution Image Textures and Vegetation Indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.

- Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of Winter Wheat Above-Ground Biomass Using Unmanned Aerial Vehicle-Based Snapshot Hyperspectral Sensor and Crop Height Improved Models. Remote Sens. 2017, 9, 708.

- Haghighattalab, A.; Crain, J.; Mondal, S.; Rutkoski, J.; Singh, R.P.; Poland, J. Application of Geographically Weighted Regression to Improve Grain Yield Prediction from Unmanned Aerial System Imagery. Crop Sci. 2017, 57, 2478–2489.

- Madec, S.; Baret, F.; de Solan, B.; Thomas, S.; Dutartre, D.; Jezequel, S.; Hemmerlé, M.; Colombeau, G.; Comar, A. High-Throughput Phenotyping of Plant Height: Comparing Unmanned Aerial Vehicles and Ground LiDAR Estimates. Front. Plant Sci. 2017, 8, 2002.

- Schulze-Brüninghoff, D.; Hensgen, F.; Wachendorf, M.; Astor, T. Methods for LiDAR-Based Estimation of Extensive Grassland Biomass. Comput. Electron. Agric. 2019, 156, 693–699.

- Luo, S.; Liu, W.; Zhang, Y.; Wang, C.; Xi, X.; Nie, S.; Ma, D.; Lin, Y.; Zhou, G. Maize and Soybean Heights Estimation from Unmanned Aerial Vehicle (UAV) LiDAR Data. Comput. Electron. Agric. 2021, 182, 106005.

- Cao, L.; Coops, N.C.; Sun, Y.; Ruan, H.; Wang, G.; Dai, J.; She, G. Estimating Canopy Structure and Biomass in Bamboo Forests Using Airborne LiDAR Data. ISPRS J. Photogramm. Remote Sens. 2019, 148, 114–129.

- Yang, F.; Wei, H.; Feng, P. A Hierarchical Dempster-Shafer Evidence Combination Framework for Urban Area Land Cover Classification. Measurement 2020, 151, 105916.

- Guan, H.; Su, Y.; Hu, T.; Wang, R.; Ma, Q.; Yang, Q.; Sun, X.; Li, Y.; Jin, S.; Zhang, J.; et al. A Novel Framework to Automatically Fuse Multiplatform LiDAR Data in Forest Environments Based on Tree Locations. IEEE Trans. Geosci. Remote Sens. 2020, 58, 2165–2177.

- Su, Y.; Hu, T.; Wang, Y.; Li, Y.; Dai, J.; Liu, H.; Jin, S.; Ma, Q.; Wu, J.; Liu, L.; et al. Large-Scale Geographical Variations and Climatic Controls on Crown Architecture Traits. J. Geophys. Res. Biogeosci. 2020, 125, e2019JG005306.

- Lu, J.; Wang, H.; Qin, S.; Cao, L.; Pu, R.; Li, G.; Sun, J. Estimation of Aboveground Biomass of Robinia Pseudoacacia Forest in the Yellow River Delta Based on UAV and Backpack LiDAR Point Clouds. Int. J. Appl. Earth Obs. Geoinf. 2020, 86, 102014.

- Anthony, D.; Elbaum, S.; Lorenz, A.; Detweiler, C. On Crop Height Estimation with UAVs. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 4805–4812.

- Dhami, H.; Yu, K.; Xu, T.; Zhu, Q.; Dhakal, K.; Friel, J.; Li, S.; Tokekar, P. Crop Height and Plot Estimation for Phenotyping from Unmanned Aerial Vehicles Using 3D LiDAR. arXiv 2020, arXiv:1910.14031.

- Ten Harkel, J.; Bartholomeus, H.; Kooistra, L. Biomass and Crop Height Estimation of Different Crops Using UAV-Based Lidar. Remote Sens. 2019, 12, 17.

- Lei, L.; Qiu, C.; Li, Z.; Han, D.; Han, L.; Zhu, Y.; Wu, J.; Xu, B.; Feng, H.; Yang, H.; et al. Effect of Leaf Occlusion on Leaf Area Index Inversion of Maize Using UAV–LiDAR Data. Remote Sens. 2019, 11, 1067.

- Jin, S.; Su, Y.; Gao, S.; Wu, F.; Hu, T.; Liu, J.; Li, W.; Wang, D.; Chen, S.; Jiang, Y.; et al. Deep Learning: Individual Maize Segmentation From Terrestrial Lidar Data Using Faster R-CNN and Regional Growth Algorithms. Front. Plant Sci. 2018, 9, 866.

- Su, W.; Zhu, D.; Huang, J.; Guo, H. Estimation of the Vertical Leaf Area Profile of Corn (Zea Mays) Plants Using Terrestrial Laser Scanning (TLS). Comput. Electron. Agric. 2018, 150, 5–13.

- Hyyppa, J.; Kelle, O.; Lehikoinen, M.; Inkinen, M. A Segmentation-Based Method to Retrieve Stem Volume Estimates from 3-D Tree Height Models Produced by Laser Scanners. IEEE Trans. Geosci. Remote Sens. 2001, 39, 969–975.

- Li, W.; Guo, Q.; Jakubowski, M.K.; Kelly, M. A New Method for Segmenting Individual Trees from the Lidar Point Cloud. Photogramm. Eng. Remote Sens. 2012, 78, 75–84.

- Lu, X.; Guo, Q.; Li, W.; Flanagan, J. A Bottom-up Approach to Segment Individual Deciduous Trees Using Leaf-off Lidar Point Cloud Data. ISPRS J. Photogramm. Remote Sens. 2014, 94, 1–12.

- Tao, S.; Wu, F.; Guo, Q.; Wang, Y.; Li, W.; Xue, B.; Hu, X.; Li, P.; Tian, D.; Li, C.; et al. Segmenting Tree Crowns from Terrestrial and Mobile LiDAR Data by Exploring Ecological Theories. ISPRS J. Photogramm. Remote Sens. 2015, 110, 66–76.

- Guo, Q.; Li, W.; Yu, H.; Alvarez, O. Effects of Topographic Variability and Lidar Sampling Density on Several DEM Interpolation Methods. Photogramm. Eng. Remote Sens. 2010, 76, 701–712.

- Koch, B.; Heyder, U.; Weinacker, H. Detection of Individual Tree Crowns in Airborne Lidar Data. Photogramm. Eng. Remote Sens. 2006, 72, 357–363.

- Vo, A.-V.; Truong-Hong, L.; Laefer, D.F.; Bertolotto, M. Octree-Based Region Growing for Point Cloud Segmentation. ISPRS J. Photogramm. Remote Sens. 2015, 104, 88–100.

- Wang, Y.; Weinacker, H.; Koch, B.; Stereńczak, K. Lidar Point Cloud Based Fully Automatic 3D Single Tree Modelling in Forest and Evaluations of the Procedure. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 8, 1682–1750.

- Yao, W.; Krzystek, P.; Heurich, M. Tree Species Classification and Estimation of Stem Volume and DBH Based on Single Tree Extraction by Exploiting Airborne Full-Waveform LiDAR Data. Remote Sens. Environ. 2012, 123, 368–380.

- Biosca, J.M.; Lerma, J.L. Unsupervised Robust Planar Segmentation of Terrestrial Laser Scanner Point Clouds Based on Fuzzy Clustering Methods. ISPRS J. Photogramm. Remote Sens. 2008, 63, 84–98.

- Lari, Z.; Habib, A. An Adaptive Approach for the Segmentation and Extraction of Planar and Linear/Cylindrical Features from Laser Scanning Data. ISPRS J. Photogramm. Remote Sens. 2014, 93, 192–212.

- Cai, Z.; Ma, H.; Zhang, L. A Building Detection Method Based on Semi-Suppressed Fuzzy C-Means and Restricted Region Growing Using Airborne LiDAR. Remote Sens. 2019, 11, 848.

- Xu, S.; Wang, R.; Wang, H.; Yang, R. Plane Segmentation Based on the Optimal-Vector-Field in LiDAR Point Clouds. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3991–4007.

- Luo, Z.; Mohrenschildt, M.; Habibi, S. A Probability Occupancy Grid Based Approach for Real-Time LiDAR Ground Segmentation. IEEE Trans. Intell. Transp. Syst. 2020, 21, 998–1010.

- Shao, J.; Zhang, W.; Shen, A.; Mellado, N.; Cai, S.; Luo, L.; Wang, N.; Yan, G.; Zhou, G. Seed Point Set-Based Building Roof Extraction from Airborne LiDAR Point Clouds Using a Top-down Strategy. Autom. Constr. 2021, 126, 103660.

- Zhang, W.; Qi, J.; Wan, P.; Wang, H.; Xie, D.; Wang, X.; Yan, G. An Easy-to-Use Airborne LiDAR Data Filtering Method Based on Cloth Simulation. Remote Sens. 2016, 8, 501.

- Holmgren, J.; Nilsson, M.; Olsson, H. Simulating the Effects of Lidar Scanning Angle for Estimation of Mean Tree Height and Canopy Closure. Can. J. Remote Sens. 2003, 29, 10.

- Bartier, P.M.; Keller, C.P. Multivariate Interpolation to Incorporate Thematic Surface Data Using Inverse Distance Weighting (IDW). Comput. Geosci. 1996, 22, 795–799.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).