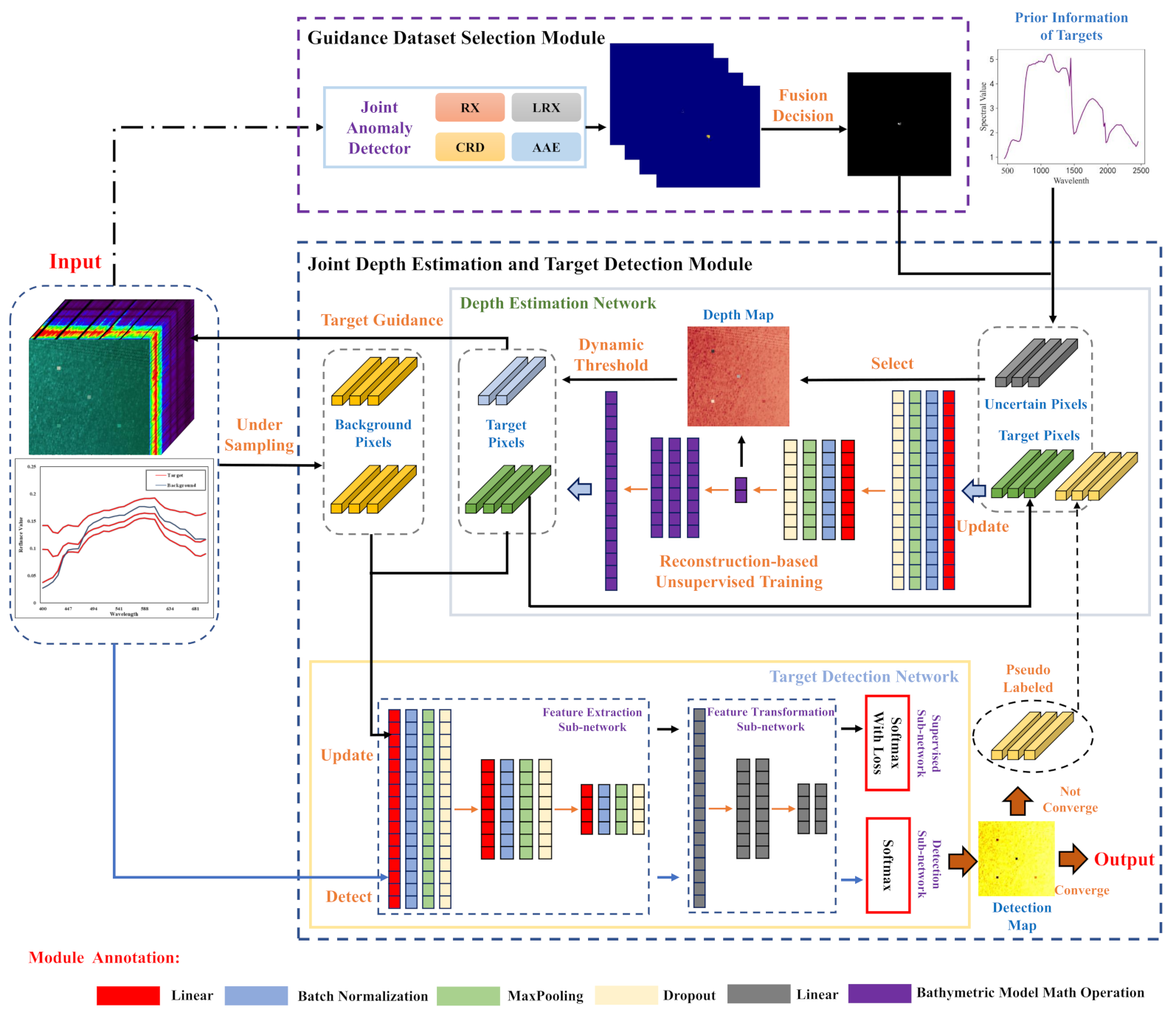

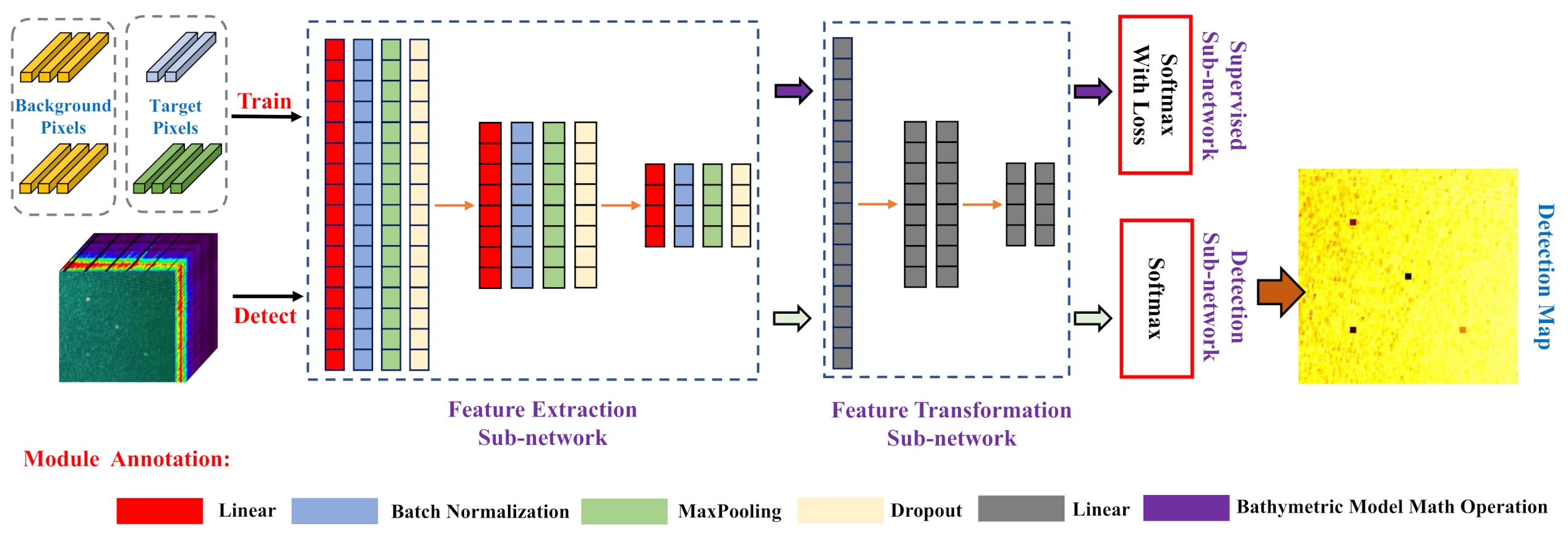

The JDETD module plays the most crucial role in SUTDF. It consists of three components, which are described in detail in the remainder of this subsection.

3.3.1. Unsupervised Autoencoder-Form Network for Depth Estimation

As mentioned above, the water-associated parameters have already been computed by IOPE-Net. Consequently, the bathymetric model in Equation (1) reduces to a simple function whose only unknown is the depth $H$. In addition, the sensor-observed spectra are given by the HSI and are therefore also known. Estimating the target depth can thus be transformed into solving a system of equations in a single variable, one equation per band. However, it is difficult to solve this system with typical mathematical convex optimization (MCO) methods. On the one hand, because the input HSI contains redundant spectral information (many bands constrain a single unknown), the rank of the coefficient matrix is smaller than that of the augmented matrix, so the system is inconsistent and MCO methods cannot find an exact real-valued solution. On the other hand, spectral variability caused by environmental factors makes it difficult for MCO methods to obtain a stable result. Moreover, previous works [24,25] have shown that MCO is time-consuming, especially on large-scale datasets.
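The rank argument can be illustrated with a toy case. In the minimal Python sketch below (our illustration, not the authors' code), each band is treated as a linear equation $a_b H = y_b$ in the single unknown $H$, with hypothetical coefficients `a` and observations `y`. A nonzero coefficient column has rank 1, while the augmented matrix has rank 2 as soon as any 2×2 minor $a_i y_j - a_j y_i$ is nonzero; in that case no exact solution exists and only an approximate (here, least-squares) estimate can be computed.

```python
from itertools import combinations


def augmented_rank_exceeds(a, y, tol=1e-12):
    """Return True if rank([a | y]) > rank(a) for a nonzero coefficient column a.

    With a single unknown, rank(a) == 1, and rank([a | y]) == 2 exactly when
    some 2x2 minor a_i*y_j - a_j*y_i is nonzero (y is not proportional to a).
    """
    return any(abs(a[i] * y[j] - a[j] * y[i]) > tol
               for i, j in combinations(range(len(a)), 2))


def least_squares_depth(a, y):
    """Closed-form least-squares solution of a * H ~= y for a scalar H."""
    return sum(ai * yi for ai, yi in zip(a, y)) / sum(ai * ai for ai in a)


# Hypothetical per-band coefficients and slightly noisy observations (true H = 2).
a = [0.5, 1.0, 1.5]
y = [1.0, 2.1, 2.9]
print(augmented_rank_exceeds(a, y))        # True: no exact solution exists
print(round(least_squares_depth(a, y), 4))
```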

Deep neural networks are a prevalent technique for solving optimization problems by learning the characteristics of a dataset, and a well-fitted network can approach the optimal solution. Because ground-truth depth information is unavailable, we propose the autoencoder-form depth estimation network shown in Figure 3. It consists of two components: an encoder and a decoder.

The encoder, which has two independent blocks, acts as the depth predictor of this network. The first block is built with a 1-D CNN and extracts mid-level, locally invariant, and discriminative features from the input spectrum while suppressing the adverse impact of spectral variability. Given an input pixel $\mathbf{x} \in \mathbb{R}^{B}$ with $B$ bands, the output of the $t$-th layer in the first block is defined as:

$$\mathbf{z}^{(t)} = h\left(\mathbf{W}^{(t)} * \mathbf{z}^{(t-1)} + \mathbf{b}^{(t)}\right), \qquad \mathbf{z}^{(0)} = \mathbf{x},$$

where $\mathbf{W}^{(t)}$ and $\mathbf{b}^{(t)}$ are the weight parameters of the $t$-th layer, $*$ denotes the convolution operation, and $h$ is the nonlinear activation function that introduces nonlinearity into the encoder. In this work, we use ReLU [32] as the activation function, since it is widely used to mitigate the vanishing gradient problem. Batch normalization and dropout are also applied in this CNN-based block to speed up convergence and reduce overfitting. The second block consists of fully connected layers: it flattens the spectral features generated by the first block and uses them to predict the depth.
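The layer equation can be illustrated with a framework-free Python sketch. It is not the authors' implementation: it assumes stride 1 and no padding, processes a single pixel, and omits batch normalization, dropout, and the fully connected block. Like most deep learning frameworks (e.g., PyTorch's `torch.nn.Conv1d`), it computes cross-correlation, which is what "convolution" conventionally means in CNNs. The helper names are hypothetical.

```python
def conv1d_valid(x, kernel):
    """'Valid' 1-D cross-correlation of sequence x with a kernel (stride 1)."""
    k = len(kernel)
    return [sum(kernel[j] * x[i + j] for j in range(k))
            for i in range(len(x) - k + 1)]


def relu(v):
    """Element-wise ReLU activation h."""
    return [max(0.0, vi) for vi in v]


def conv_layer(z_prev, kernels, biases):
    """One layer z_t = h(W_t * z_{t-1} + b_t) with several output channels.

    z_prev:  list of input channels, each a list of equal length
    kernels: kernels[o][c] is the 1-D kernel from input channel c to output o
    biases:  one scalar bias per output channel
    """
    out = []
    for kernels_o, b_o in zip(kernels, biases):
        # Sum the per-channel responses, add the bias, then apply ReLU.
        acc = None
        for channel, kern in zip(z_prev, kernels_o):
            c = conv1d_valid(channel, kern)
            acc = c if acc is None else [p + q for p, q in zip(acc, c)]
        out.append(relu([v + b_o for v in acc]))
    return out


# A toy pixel with B = 5 bands as a single input channel, two output channels.
x = [0.1, 0.3, 0.2, 0.5, 0.4]
z1 = conv_layer([x], [[[1.0, -1.0]], [[0.5, 0.5]]], [0.0, -0.3])
print(z1)
```

The first toy kernel responds to band-to-band differences and the second to local averages; stacking such layers is what lets the block learn shape features that are less sensitive to spectral variability.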

The decoder reconstructs the input spectrum from the predicted depth. Unlike a traditional decoder, it has no weight parameters and can be interpreted as a fixed transformation that embeds the bathymetric model into the depth estimation network. The decoder consists of several element-wise mathematical operation layers, which correspond to the operations of the bathymetric model in Equation (1). Passing the depth estimate through this decoder yields the reconstructed spectrum $\hat{\mathbf{x}}$. Besides reconstructing the spectrum, the decoder makes the depth estimation network model-driven and explainable: because it embeds the bathymetric model, our method rests on the same physical basis as existing research works.
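The decoder's role can be sketched as follows. Equation (1) is not reproduced in this section, so the sketch ASSUMES the widely used shallow-water form $r = r_\infty\,(1 - e^{-(k_d + k_u^c)H}) + r_B\, e^{-(k_d + k_u^b)H}$, evaluated band by band; the function and parameter names are hypothetical. The function has no trainable weights: it only maps a depth estimate to a reconstructed spectrum.

```python
import math


def bathymetric_decoder(H, r_inf, k_d, k_uc, k_ub, r_b):
    """Parameter-free decoder: reconstruct the observed spectrum from depth H.

    ASSUMPTION: Equation (1) is taken to have the common shallow-water form
        r = r_inf * (1 - exp(-(k_d + k_uc) * H)) + r_b * exp(-(k_d + k_ub) * H),
    applied element-wise per band. r_inf, k_d, k_uc, k_ub stand for the water
    parameters estimated by IOPE-Net; r_b is the prior target spectrum.
    """
    return [
        ri * (1.0 - math.exp(-(kd + kc) * H)) + rb * math.exp(-(kd + kb) * H)
        for ri, kd, kc, kb, rb in zip(r_inf, k_d, k_uc, k_ub, r_b)
    ]


# Toy 3-band water parameters and target spectrum (made-up values).
r_inf = [0.02, 0.03, 0.01]
k_d = [0.10, 0.05, 0.30]
k_uc = [0.15, 0.10, 0.40]
k_ub = [0.20, 0.12, 0.50]
r_b = [0.30, 0.35, 0.25]

shallow = bathymetric_decoder(0.0, r_inf, k_d, k_uc, k_ub, r_b)
deep = bathymetric_decoder(200.0, r_inf, k_d, k_uc, k_ub, r_b)
print(shallow, deep)
```

At $H = 0$ the reconstruction equals the target spectrum, and for large $H$ it approaches the deep-water spectrum $r_\infty$. Written with tensor operations in a framework such as PyTorch, the same expression is differentiable with respect to $H$, so gradients can flow back into the encoder.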

The objective function is one of the most important components of a DNN, since it determines how the network adjusts its parameters. In this work, we use a multi-criterion reconstruction error containing three loss terms as the objective function: the first two describe the spectral discrepancy from different aspects, and the third constrains the estimated depth.

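(1) Mean Square Error Loss: The first term is based on the $\ell_2$ norm and measures the spectral discrepancy with the Euclidean distance:

$$\mathcal{L}_{\mathrm{MSE}} = \frac{1}{B}\left\lVert \mathbf{x} - \hat{\mathbf{x}} \right\rVert_2^2,$$

where $\mathbf{x}$ and $\hat{\mathbf{x}}$ are the input and reconstructed spectra and $\lVert \cdot \rVert_2$ is the $\ell_2$ norm of a given vector. Because this term is easy to differentiate, it is readily implemented in existing deep learning frameworks (e.g., PyTorch).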

(2) Spectral Angle Loss: The second term is the spectral angle loss between the input spectrum $\mathbf{x}$ and the reconstructed spectrum $\hat{\mathbf{x}}$. This metric penalizes differences in spectral shape, and it is computed as the spectral angle between $\mathbf{x}$ and $\hat{\mathbf{x}}$:

$$\mathcal{L}_{\mathrm{SA}} = \arccos\left(\frac{\mathbf{x}^{\top}\hat{\mathbf{x}}}{\lVert \mathbf{x} \rVert_2 \, \lVert \hat{\mathbf{x}} \rVert_2}\right).$$

To unify the scales of the different loss terms, we divide the spectral angle by a constant to normalize its value range.
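The first two loss terms can be written in a few lines of plain Python. This is an illustrative sketch with hypothetical function names: it omits batching and the normalizing constant applied to the spectral angle (for nonnegative spectra the angle lies in $[0, \pi/2]$). The usage lines show why both terms are useful: a spectrum scaled by a constant has zero spectral angle but a nonzero MSE.

```python
import math


def mse_loss(x, x_hat):
    """Mean square error: (1/B) * ||x - x_hat||_2^2 over the B bands."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def spectral_angle_loss(x, x_hat, eps=1e-12):
    """Spectral angle (radians) between x and x_hat; sensitive to shape only."""
    dot = sum(a * b for a, b in zip(x, x_hat))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in x_hat))
    cos = dot / max(norm, eps)
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    return math.acos(max(-1.0, min(1.0, cos)))


x = [0.2, 0.4, 0.6]
x_scaled = [0.1, 0.2, 0.3]  # same shape, half the magnitude
print(mse_loss(x, x_scaled) > 0.0)              # True: magnitudes differ
print(spectral_angle_loss(x, x_scaled) < 1e-6)  # True: shapes agree
```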

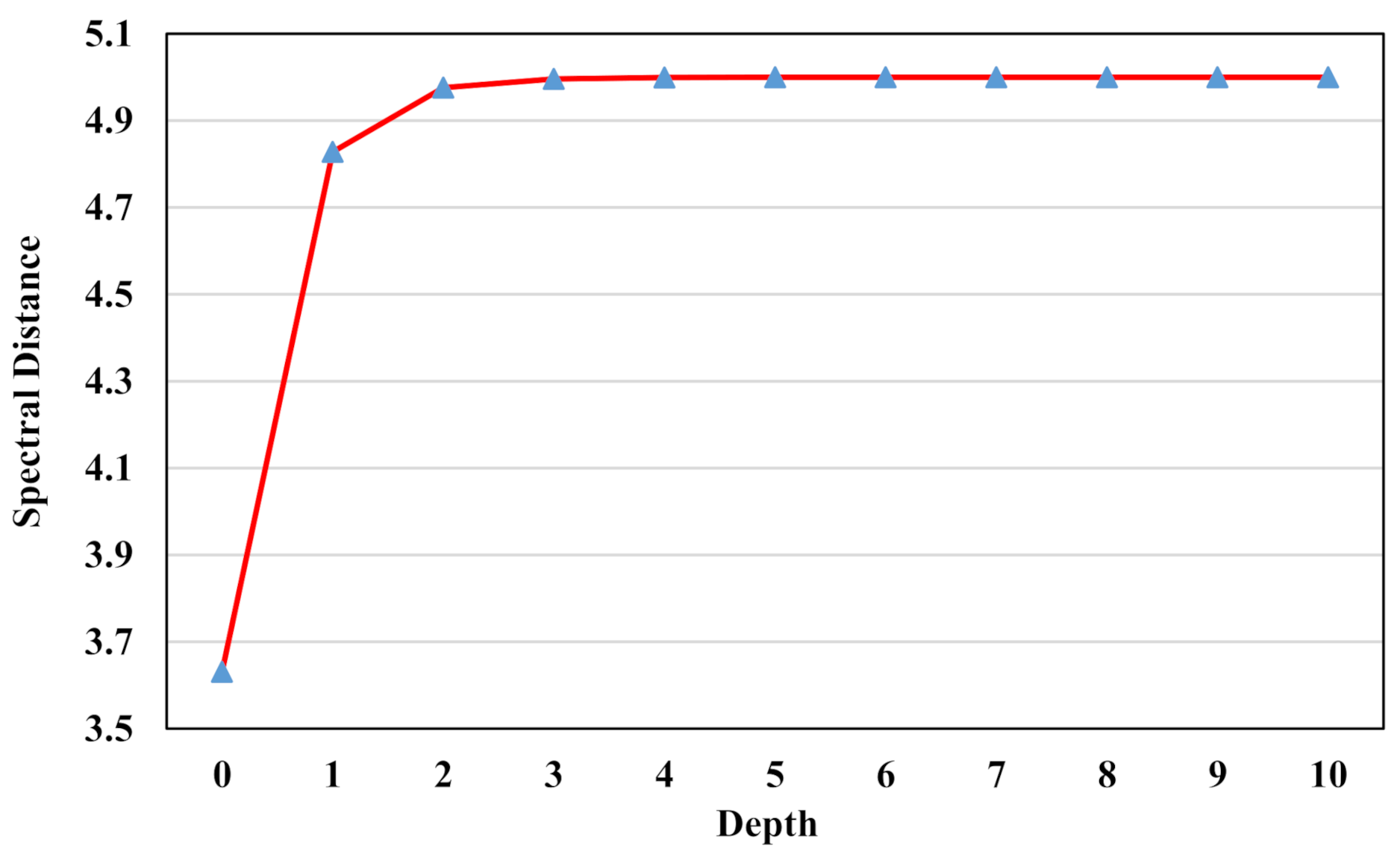

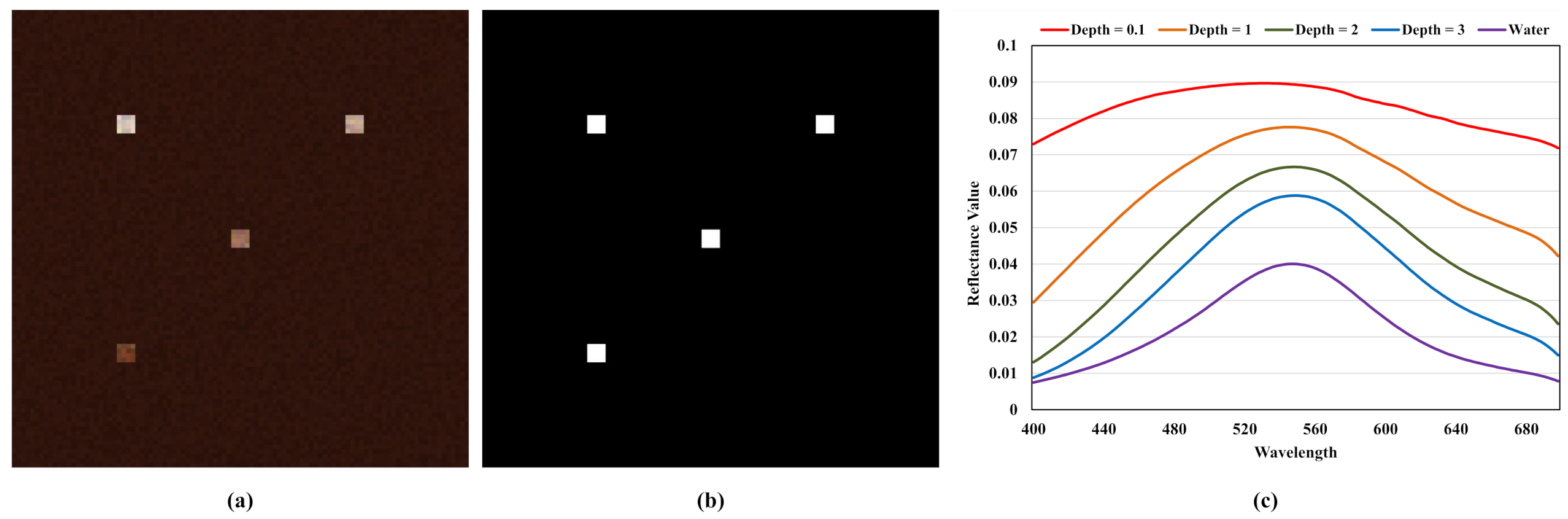

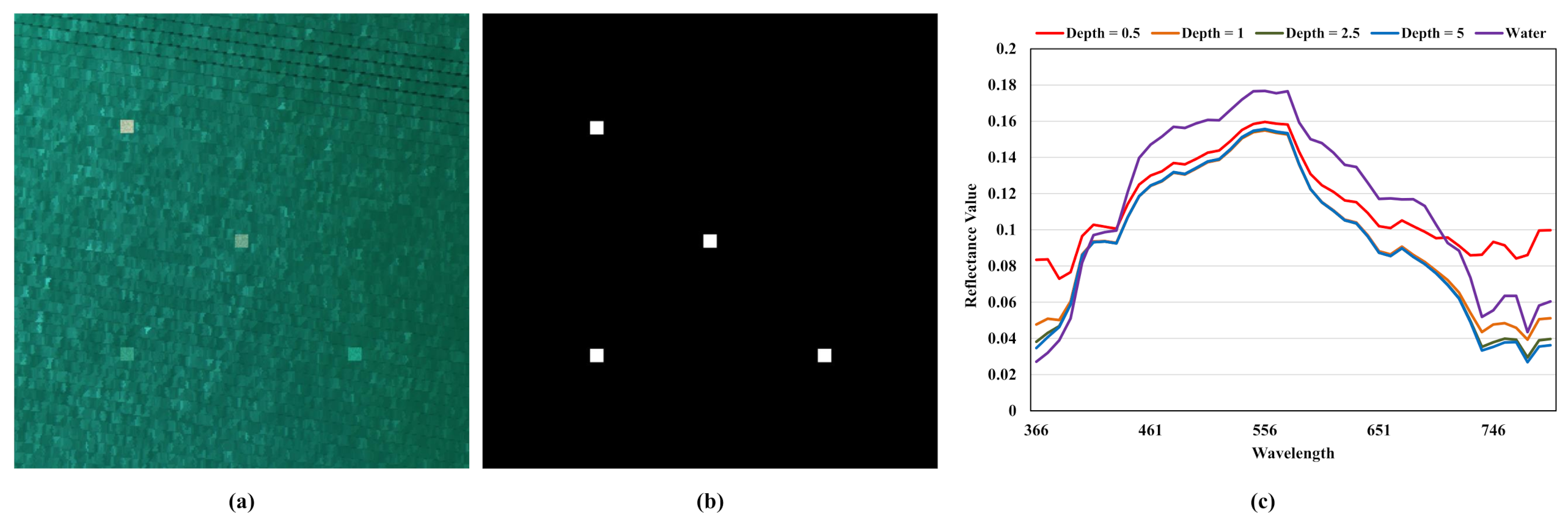

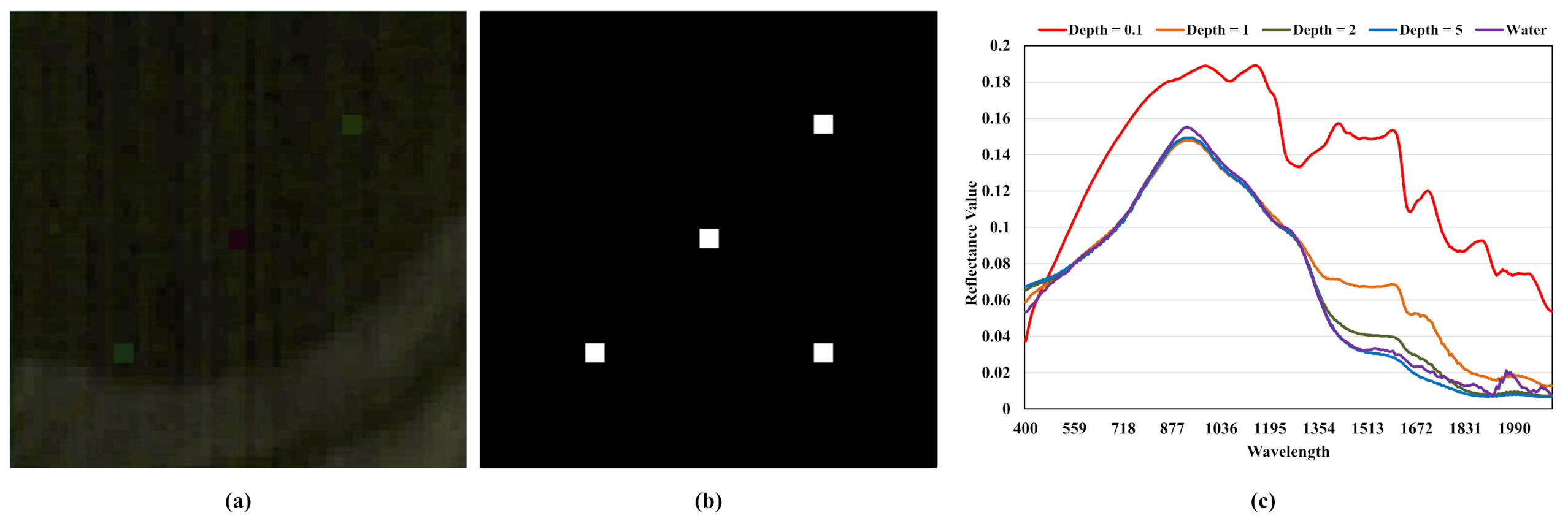

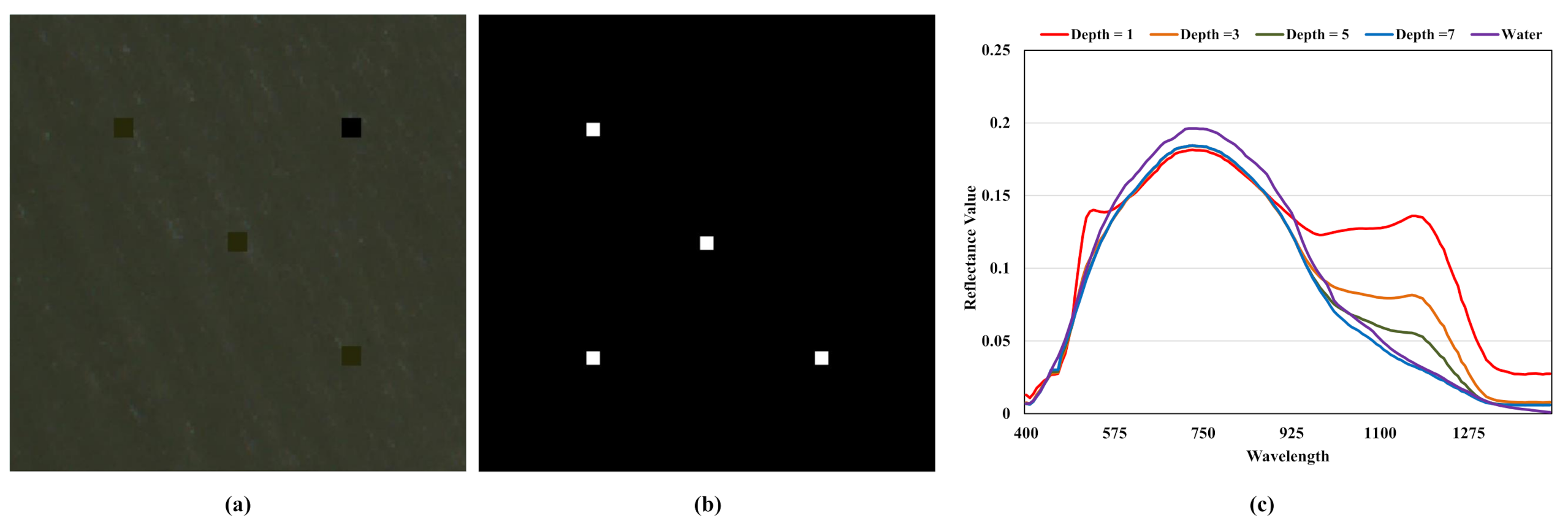

(3) Depth Value Constraint Loss: As shown in Figure 4, the spectral distance between the sensor-observed spectrum and the land-based target spectrum grows as the depth increases. However, the rate of this growth gradually decreases and finally approaches zero. In other words, once the depth exceeds a certain value, changing it no longer affects the sensor-observed spectrum. If the depth estimation network is poorly initialized and predicts a large depth, this saturation leads to the vanishing gradient problem. Moreover, depth can only be recovered when the target-associated spectrum is distinguishable from the background spectrum, yet as the depth increases, a target-associated pixel eventually becomes indistinguishable from the background pixels. Therefore, a depth value constraint loss, which restricts the depth $H$ to relatively small values, is required for the depth estimation network to converge.
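The saturation effect can be checked numerically. The sketch below ASSUMES the common single-exponential shallow-water form $r = r_\infty(1 - e^{-aH}) + r_B e^{-bH}$ per band, with $a = k_d + k_u^c$ and $b = k_d + k_u^b$ (the exact form of Equation (1) is not shown in this section), uses made-up two-band parameters, and estimates $\partial \lVert \mathbf{r}(H) - \mathbf{r}_B \rVert_2 / \partial H$ by central finite differences.

```python
import math


def observed_spectrum(H, r_inf, a, b, r_b):
    # ASSUMED single-exponential model per band (a = k_d + k_uc, b = k_d + k_ub).
    return [ri * (1 - math.exp(-ai * H)) + rb * math.exp(-bi * H)
            for ri, ai, bi, rb in zip(r_inf, a, b, r_b)]


def distance_to_target(H, r_inf, a, b, r_b):
    """Euclidean distance between the modeled observation and the target spectrum."""
    r = observed_spectrum(H, r_inf, a, b, r_b)
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(r, r_b)))


def sensitivity(H, params, step=1e-4):
    """Central finite-difference estimate of d(distance)/dH."""
    return (distance_to_target(H + step, *params)
            - distance_to_target(H - step, *params)) / (2 * step)


# Toy parameters: (r_inf, a, b, r_b) for two bands.
params = ([0.02, 0.03], [0.25, 0.15], [0.3, 0.17], [0.3, 0.35])
for H in (1.0, 10.0, 40.0):
    print(H, sensitivity(H, params))
```

With these toy parameters the sensitivity at $H = 40$ is more than two orders of magnitude smaller than at $H = 1$: a network that predicts such a large depth early in training receives almost no gradient from the reconstruction terms.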

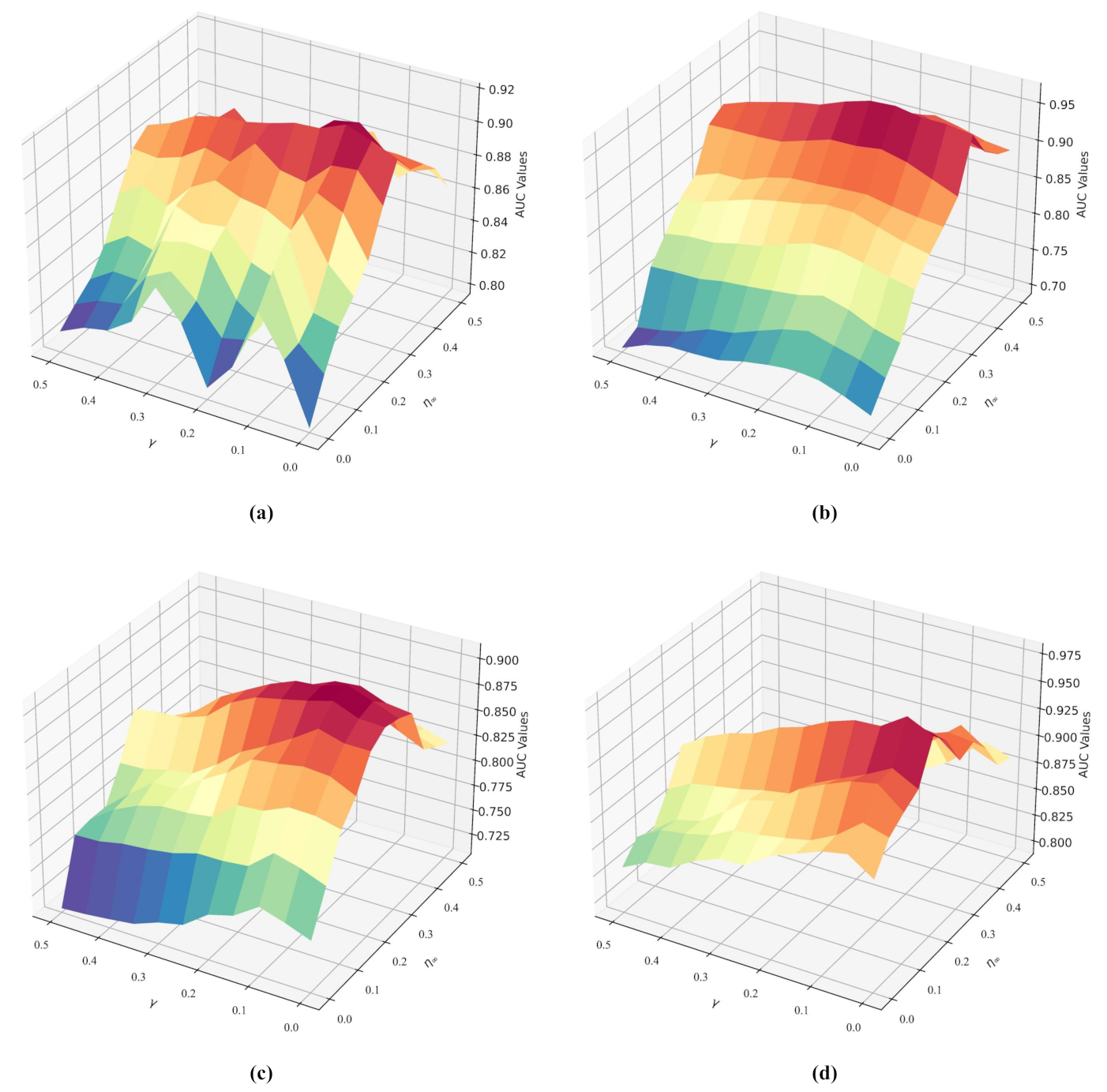

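To put everything together, the depth estimation network fits its weight parameters by minimizing the following multi-criterion objective function:

$$\mathcal{L} = \mathcal{L}_{\mathrm{MSE}} + \lambda_1 \mathcal{L}_{\mathrm{SA}} + \lambda_2 \mathcal{L}_{\mathrm{H}},$$

where $\mathcal{L}_{\mathrm{MSE}}$, $\mathcal{L}_{\mathrm{SA}}$, and $\mathcal{L}_{\mathrm{H}}$ denote the mean square error, spectral angle, and depth value constraint losses, and $\lambda_1$ and $\lambda_2$ are hyperparameters representing the importance of $\mathcal{L}_{\mathrm{SA}}$ and $\mathcal{L}_{\mathrm{H}}$ in the overall objective. Both hyperparameters are determined according to the training dataset.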

In summary, in the depth estimation network the encoder predicts the depth from spectral characteristics, and the objective function teaches the encoder how to predict the depth more precisely. Thanks to this unsupervised training manner, we can obtain the depth information without any ground truth. Moreover, this manner ensures that when the input is a background pixel, a noisy pixel, or a pixel associated with a different target, the estimated depth becomes relatively large and can be regarded as an outlier in the depth map. This helps eliminate the interference of environmental factors, so that more satisfactory depth estimation performance is achievable for a given HSI dataset.
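Why the reconstruction objective alone can identify depth without labels can be seen on a single synthetic pixel. In the sketch below, a brute-force grid search over $H$ stands in for the network's gradient-based training, and the decoder ASSUMES the common single-exponential shallow-water form (Equation (1) is not reproduced here). The depth value constraint is replaced by a HYPOTHETICAL linear penalty `lam2 * H`, since its exact form is not given in the text; the weights are arbitrary toy values.

```python
import math


def decoder(H, r_inf, a, b, r_b):
    # ASSUMED bathymetric model, element-wise per band (see lead-in).
    return [ri * (1 - math.exp(-ai * H)) + rb * math.exp(-bi * H)
            for ri, ai, bi, rb in zip(r_inf, a, b, r_b)]


def mse(x, y):
    return sum((p - q) ** 2 for p, q in zip(x, y)) / len(x)


def spectral_angle(x, y):
    dot = sum(p * q for p, q in zip(x, y))
    nx = math.sqrt(sum(p * p for p in x))
    ny = math.sqrt(sum(q * q for q in y))
    return math.acos(max(-1.0, min(1.0, dot / (nx * ny))))


def objective(H, x, params, lam1, lam2):
    x_hat = decoder(H, *params)
    # HYPOTHETICAL depth term: a plain linear penalty lam2 * H.
    return mse(x, x_hat) + lam1 * spectral_angle(x, x_hat) + lam2 * H


def estimate_depth(x, params, lam1=0.1, lam2=1e-6, h_max=20.0, step=0.01):
    """Grid search for argmin_H of the objective; no ground-truth depth is used."""
    grid = [i * step for i in range(int(round(h_max / step)) + 1)]
    return min(grid, key=lambda H: objective(H, x, params, lam1, lam2))


# Toy parameters (r_inf, a, b, r_b) for three bands.
params = ([0.02, 0.03, 0.01], [0.25, 0.15, 0.7], [0.3, 0.17, 0.9], [0.3, 0.35, 0.25])
x_obs = decoder(3.0, *params)  # synthetic, noise-free pixel with true depth 3.0
print(estimate_depth(x_obs, params))
```

For this noise-free pixel the search returns a depth at, or within one grid step of, the true value 3.0. The actual network instead learns a mapping from spectra to depth that is shared across pixels.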

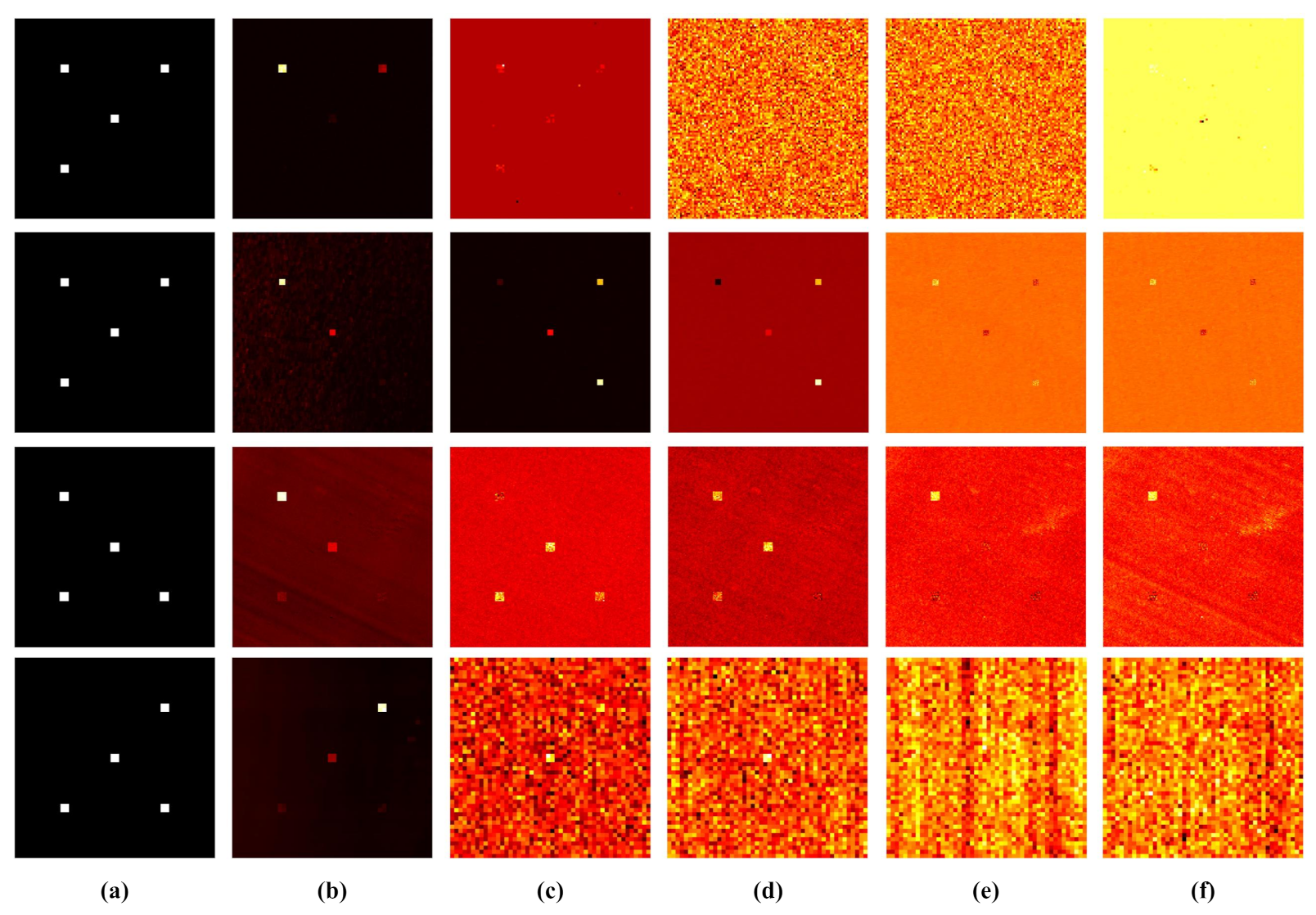

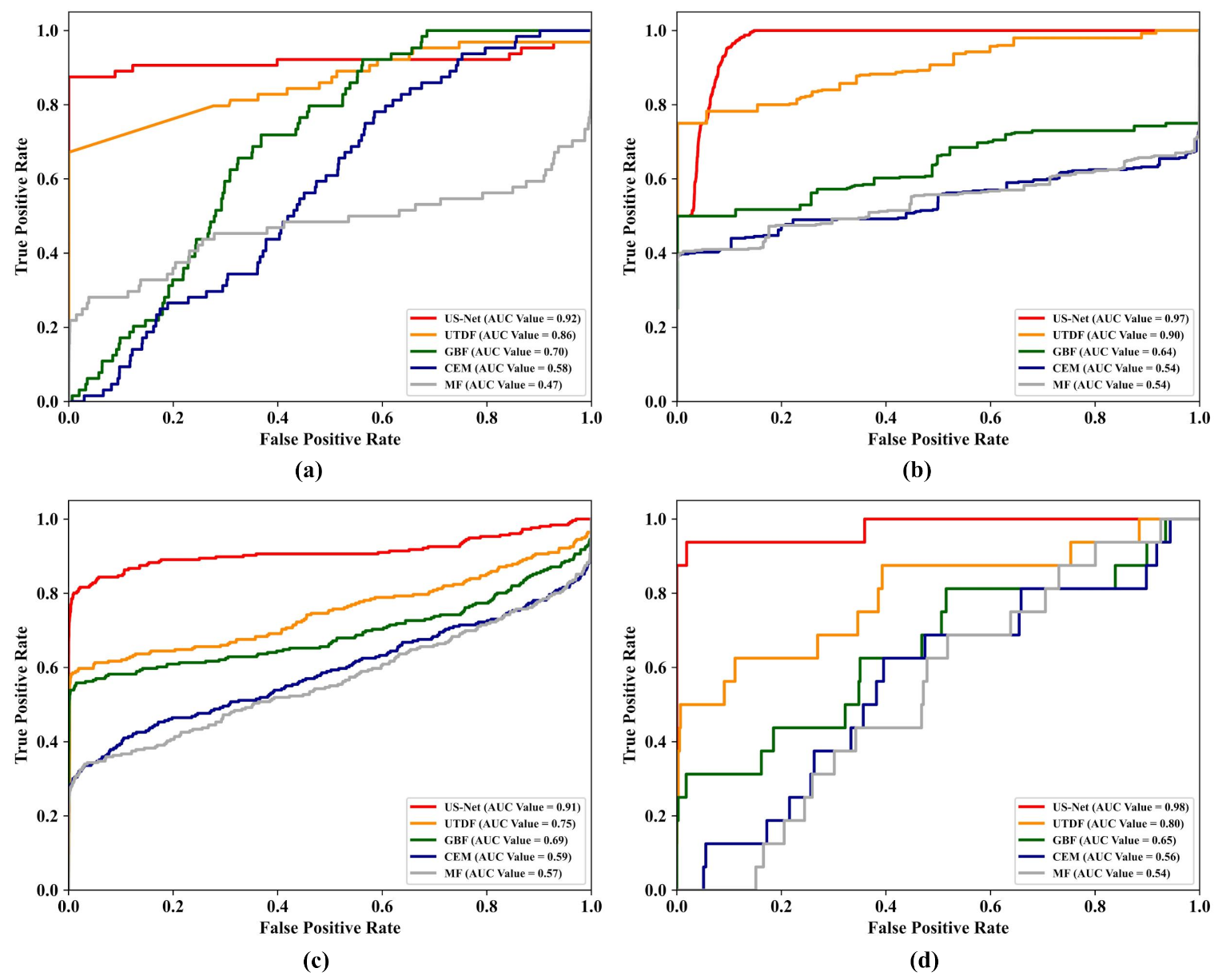

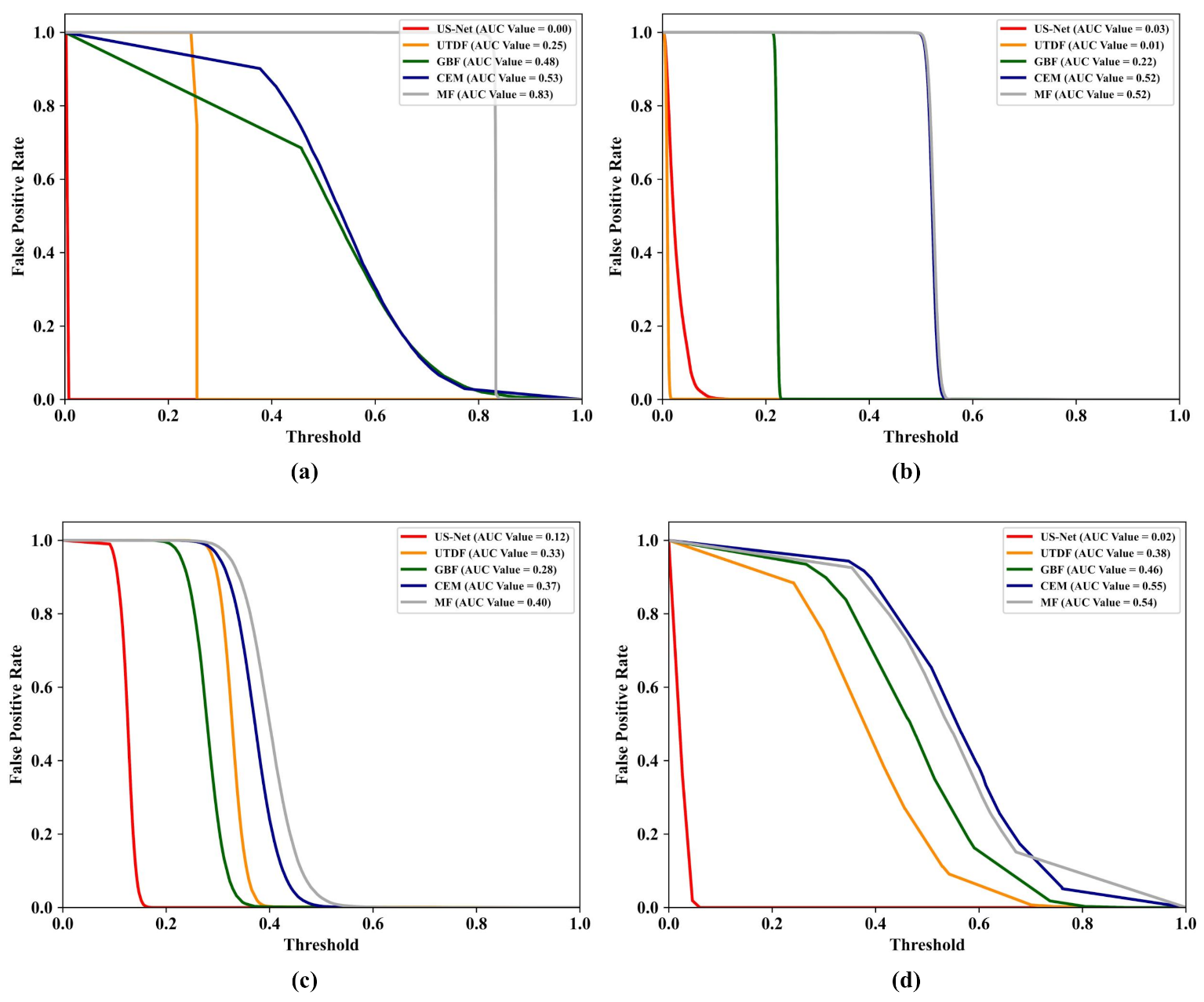

3.3.2. Binary Classifier Network for Target Detection

Underwater target detection can be viewed as a binary classification task that assigns every pixel to either the target group or the background group. Furthermore, the classification map and the detection map have the same physical meaning: both represent, for each pixel, a confidence of being target or background. Consequently, we design a binary classifier based on deep learning to perform underwater target detection. As illustrated in Figure 5, this target detection network comprises four sub-networks: a feature extraction sub-network, a feature transformation sub-network, a supervised training sub-network, and a detection sub-network.

Feature extraction sub-network. The feature extraction sub-network is a cascade of DNN blocks with different convolution strides. Each block contains a convolution layer, a pooling layer, batch normalization, dropout, and a nonlinear activation. Similar to the first block of the encoder, this sub-network refines the spectral information provided by the input HSI. It also helps reduce the number of network parameters, adapt to spectral variability, and improve the generalization ability of the target detection network.

Feature transformation sub-network. The feature transformation sub-network consists of several fully connected layers with different numbers of neurons and is used to predict the class probabilities of each pixel. It first flattens the spectral features produced by the feature extraction sub-network and then reduces their dimensionality, transforming the flattened feature vector into a two-dimensional prediction vector. To make the sub-network more expressive and stable, a nonlinear activation function and dropout are also applied between the fully connected layers.
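The feature transformation step amounts to a flatten operation followed by fully connected layers that end in two outputs. The following plain-Python sketch uses hypothetical helper names, toy sizes, and toy weights, and omits dropout, which is only active during training.

```python
def flatten(feature_maps):
    """Concatenate per-channel feature vectors into one vector."""
    return [v for channel in feature_maps for v in channel]


def dense(v, weights, biases, activation=None):
    """Fully connected layer: out_j = act(sum_i W[j][i] * v[i] + b[j])."""
    out = [sum(w * vi for w, vi in zip(row, v)) + b for row, b in zip(weights, biases)]
    return [activation(o) for o in out] if activation else out


def relu(z):
    return max(0.0, z)


# Toy sizes: 2 channels x 3 features -> 4 hidden units -> 2 outputs.
features = [[0.5, 0.0, 1.0], [0.2, 0.4, 0.0]]
hidden = dense(flatten(features),
               [[0.1] * 6, [-0.1] * 6, [0.2, 0, 0, 0, 0, 0.3], [0] * 6],
               [0.0, 0.1, 0.0, 0.0], activation=relu)
logits = dense(hidden, [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]], [0.0, 0.0])
print(len(logits))  # 2: one score per class
```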

Supervised training sub-network. The proposed DNN-based binary classifier needs to be trained in a supervised fashion. With the assistance of the depth estimation network, we can determine which pixels contain the targets of interest. However, these pixels occupy only a tiny fraction of the whole HSI, which results in a class imbalance problem. To address this problem, we first adopt an under-sampling strategy to pick out a suitable training dataset. A softmax function then transforms the prediction vector yielded by the feature transformation sub-network into a probability distribution over the classes. Next, the supervised training sub-network computes the logistic loss between the predicted probability vector and the one-hot vector of the training labels as the objective function. Finally, the target detection network is trained with this logistic loss using the stochastic gradient descent algorithm.
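The under-sampling, softmax, and logistic-loss steps can be sketched as follows. The class convention (index 0 = background, index 1 = target), the pixel identifiers, and the function names are hypothetical, and the stochastic gradient descent update itself is left to a deep learning framework.

```python
import math
import random


def undersample(target_pixels, uncertain_pixels, rng):
    """Balance classes: draw as many 'background' pixels as there are targets."""
    background = rng.sample(uncertain_pixels, k=len(target_pixels))
    data = [(p, 1) for p in target_pixels] + [(p, 0) for p in background]
    rng.shuffle(data)
    return data


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def logistic_loss(logits, label, num_classes=2):
    """Cross-entropy between softmax(logits) and the one-hot label vector."""
    probs = softmax(logits)
    one_hot = [1.0 if c == label else 0.0 for c in range(num_classes)]
    return -sum(y * math.log(p) for y, p in zip(one_hot, probs) if y > 0)


rng = random.Random(0)
targets = ["t1", "t2"]
uncertain = [f"u{i}" for i in range(100)]
train = undersample(targets, uncertain, rng)
print(len(train), sum(label for _, label in train))  # 4 2
print(round(logistic_loss([0.0, 0.0], label=1), 4))  # 0.6931
```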

Detection sub-network. The detection sub-network is the final component of the target detection network and determines the category of each testing pixel. Like the supervised training sub-network, it contains only a softmax function. However, unlike the supervised training sub-network, it receives all the pixels of the input HSI and predicts their categories, finally generating the detection map. To obtain a confident detection result, we adopt a labeling strategy based on the maximum a posteriori probability criterion:

$$\hat{y}(\mathbf{x}) = \arg\max_{i} P(i \mid \mathbf{x}, N),$$

where $\mathbf{x}$ is an input pixel, $N$ is the target detection network, and $P(i \mid \mathbf{x}, N)$ is the posterior probability of class label $i$ given the input pixel $\mathbf{x}$ and the network $N$. In effect, the detection sub-network carries out the testing process, encoding the input HSI into a detection map consisting of one-hot probability vectors.
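The maximum a posteriori labeling rule reduces to a softmax followed by an argmax per pixel. In this hypothetical sketch, class index 0 stands for background and index 1 for target. Because softmax is monotonic, its argmax equals the argmax of the raw logits; the softmax matters only when the probabilities themselves are needed.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def map_label(logits):
    """Return the one-hot vector of the class with maximum posterior probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return [1 if i == best else 0 for i in range(len(probs))]


def detection_map(logits_per_pixel):
    """Apply the MAP rule to every pixel of the (flattened) HSI."""
    return [map_label(z) for z in logits_per_pixel]


print(detection_map([[2.0, -1.0], [0.3, 0.9]]))  # [[1, 0], [0, 1]]
```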

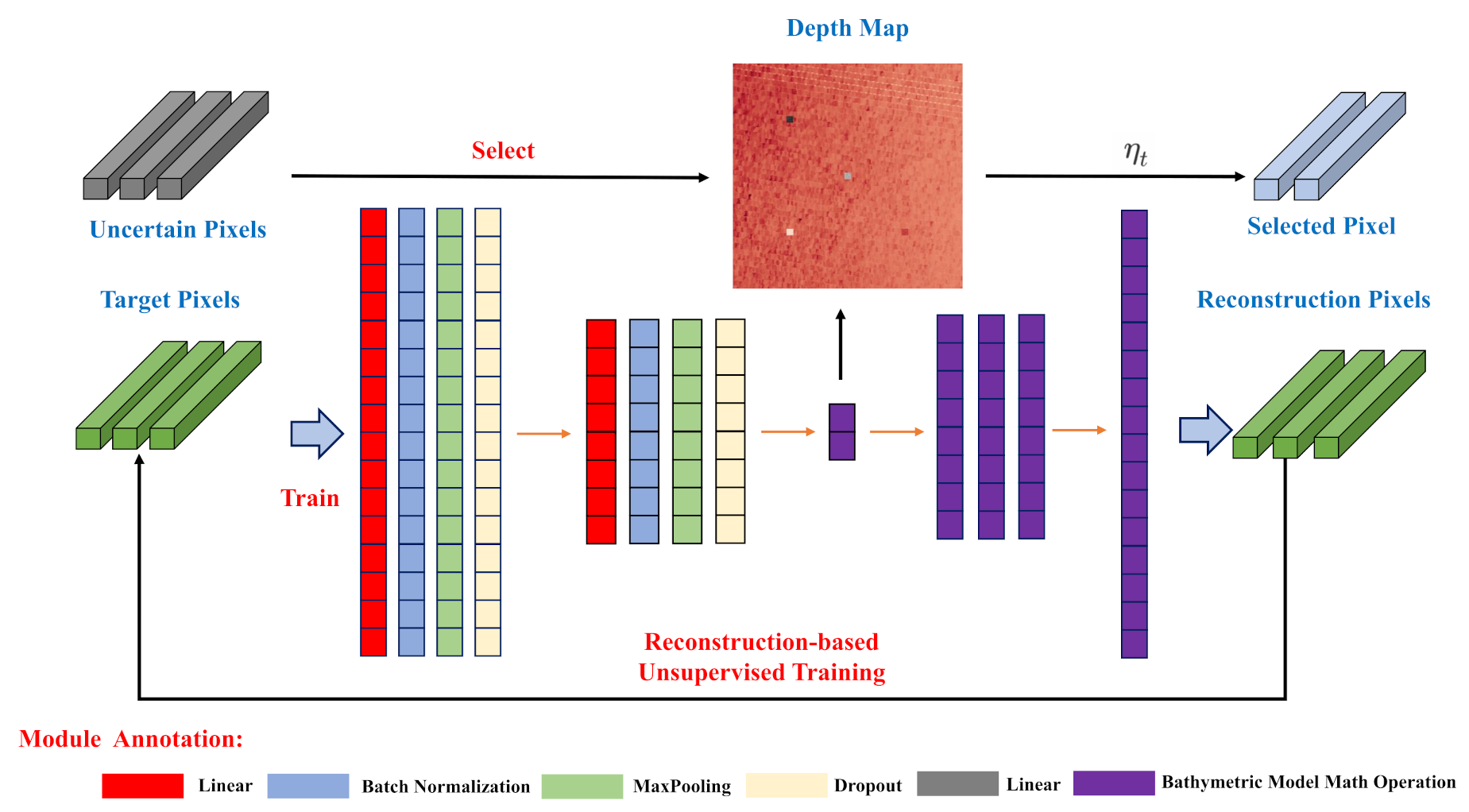

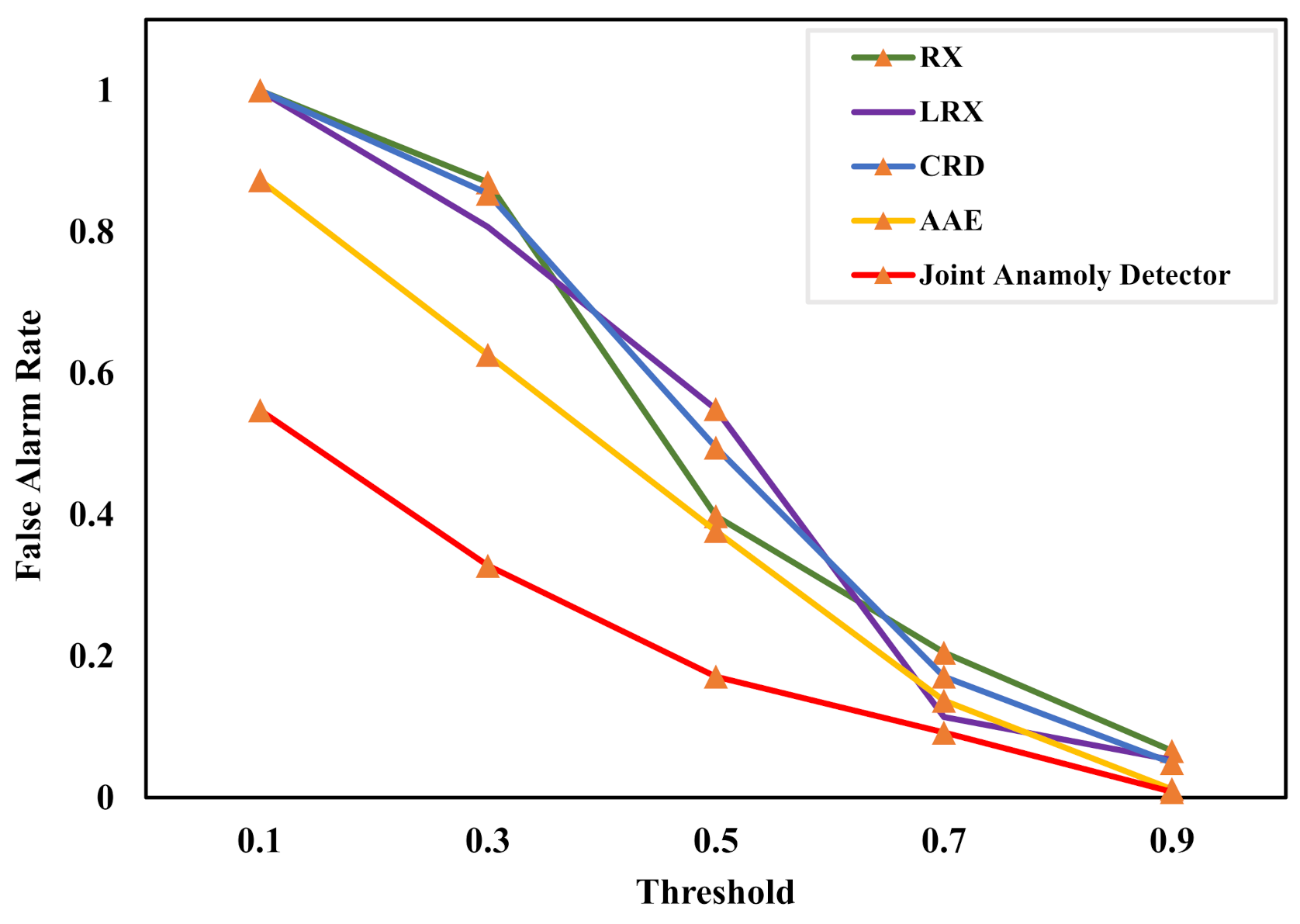

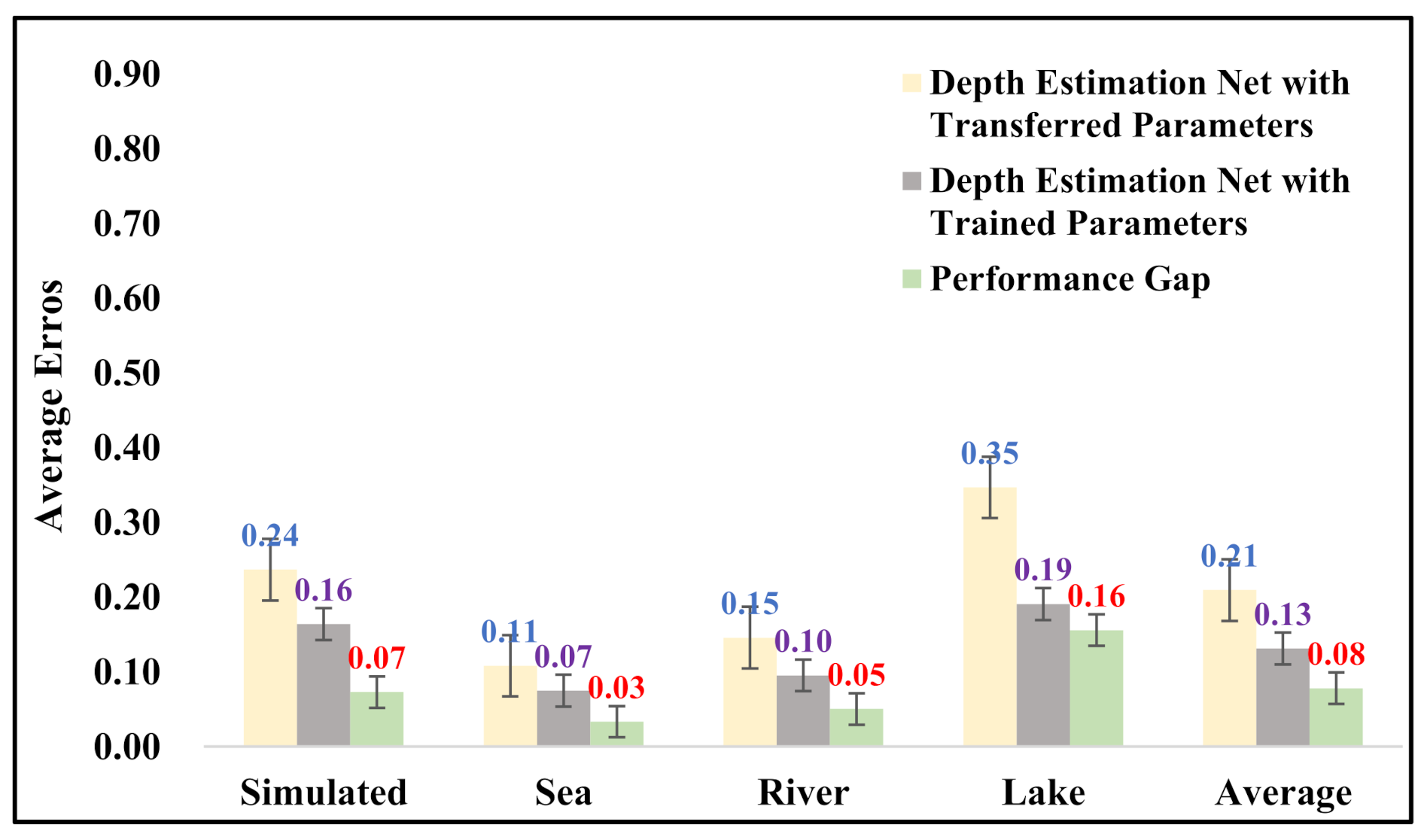

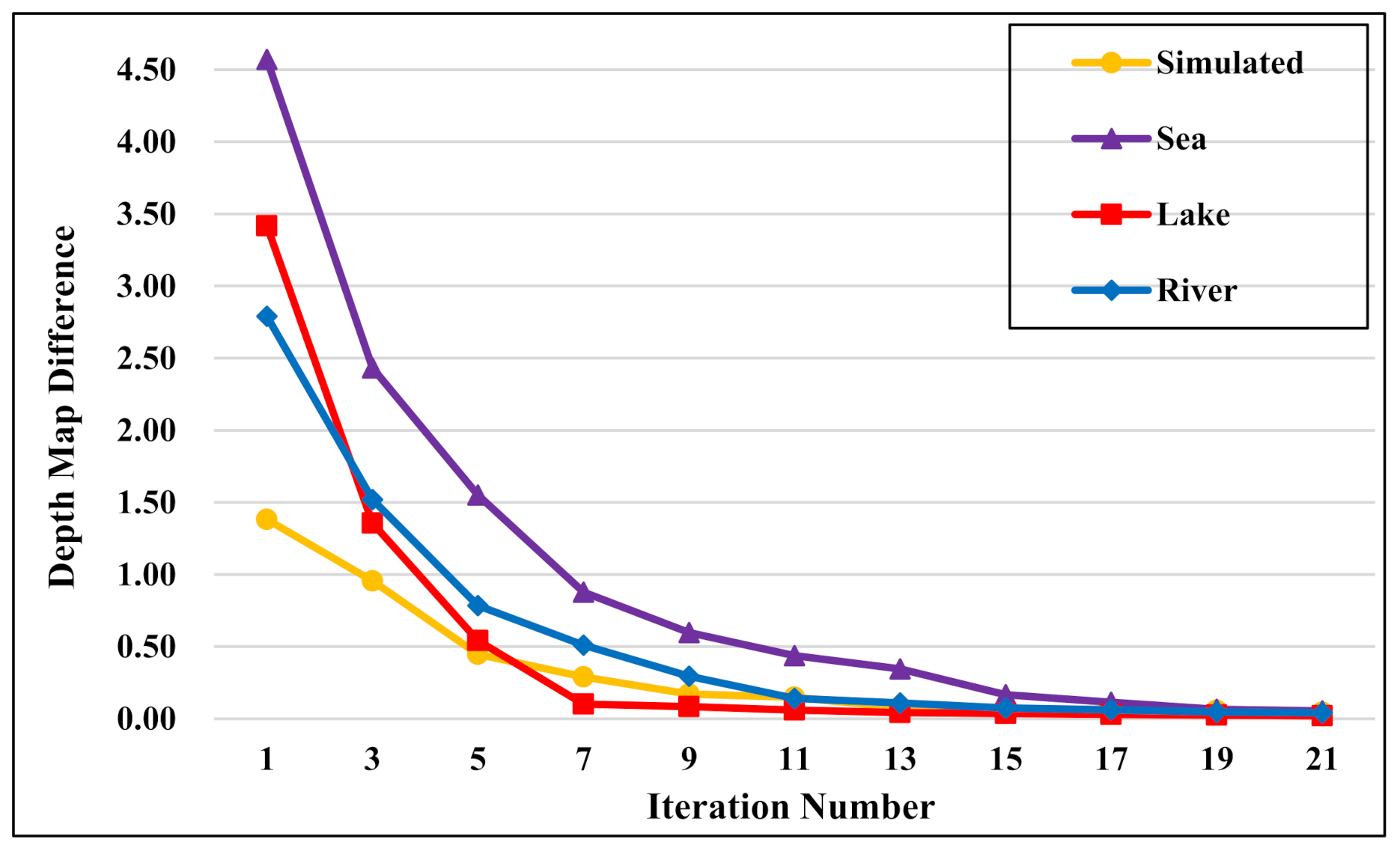

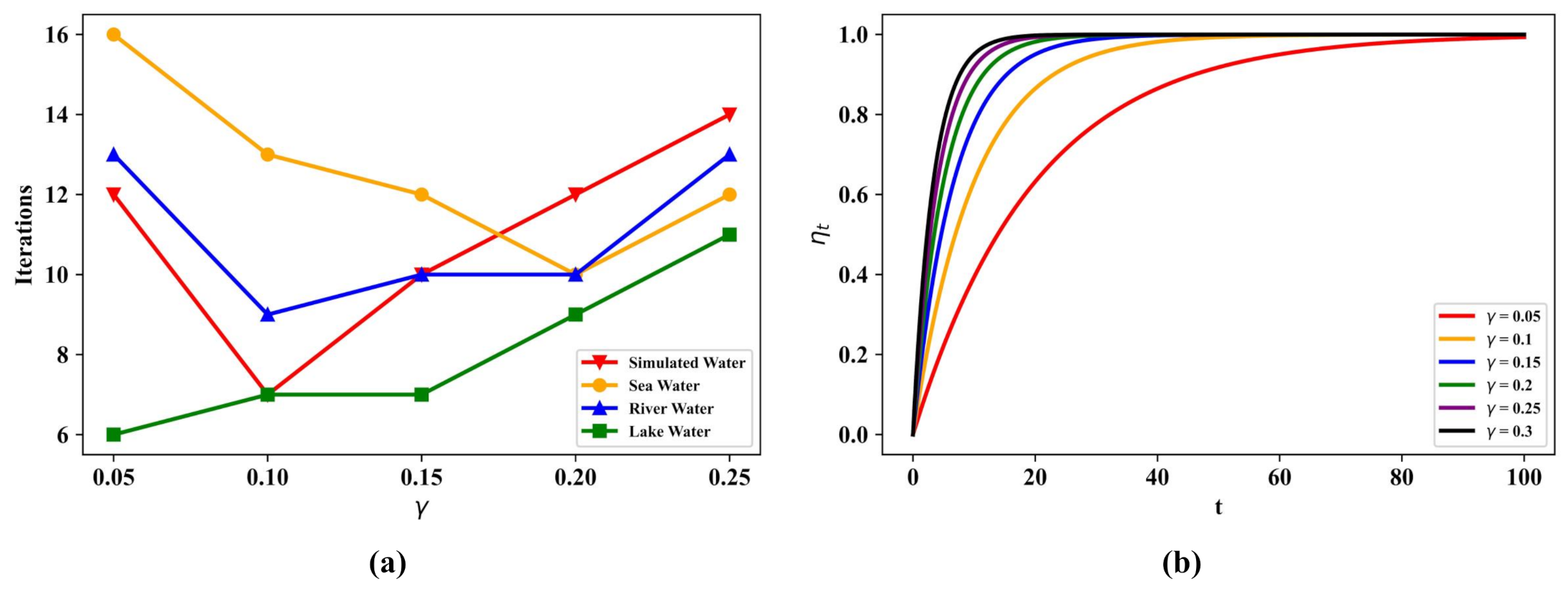

3.3.3. Self-Improving Iteration Scheme

Under ideal conditions, the two networks described above would suffice for underwater target detection. Unfortunately, with little prior information, the joint anomaly detector cannot find all the target-associated pixels. The depth estimation network and the target detection network are then not well fitted, because they are trained on biased datasets. As a result, the method as described so far can only provide a suboptimal solution to the underwater target detection problem. To tackle this dilemma, we perform joint depth estimation and underwater target detection with a self-improving iteration scheme.

DNNs possess a strong generalization capability, so a trained network can often produce correct results even for inputs not contained in its training dataset. Consequently, the testing results can be used to boost the network under certain principles [33]. However, if the testing results of one DNN are used to improve that same DNN, the iteration process is sensitive to bad examples and eventually collapses owing to error accumulation. Therefore, in this work, we let the depth estimation network and the detection network alternately boost the overall detection performance. Furthermore, the generalization ability of a DNN is limited, but we can alleviate this limitation by designing an updating scheme for the whole network based on the expectation-maximization (EM) theory. In this way, we propose a self-improving iteration scheme that improves joint depth estimation and underwater target detection simultaneously by accumulating training experience. Motivated by these perspectives, the self-improving iteration scheme is conducted as follows.

At the very beginning, the input HSI is divided into two groups: the target-associated pixel set $\mathcal{T}_0$ and the uncertain pixel set $\mathcal{U}_0$. We first consider the initial depth estimation network $D_0$, which is trained on the limited dataset $\mathcal{T}_0$. As analyzed above, the uncertain pixels can be labeled based on the depth estimation result. Compared with background pixels, target-associated pixels have two distinctive depth characteristics: (1) their depth values are relatively small, and (2) they occupy a small percentage of the image. Consequently, we label as targets the pixels whose depth values are less than a threshold $\tau$. This threshold is tied to the capability of the depth estimation network: as training experience accumulates, the network estimates depth better and can recover the depth of targets located in deeper positions. We therefore need to increase $\tau$ dynamically during the updating process, and we propose a time-varying threshold $\tau_t$ (Equation (11)) for this purpose, where $\tau_{\max}$ is the largest value of the threshold, $t$ is the iteration number, and a hyperparameter controls the growth rate. We then pick out a target-associated pixel subset $\mathcal{S}^{D}_t$ from the uncertain pixel set $\mathcal{U}_t$ by:

$$\mathcal{S}^{D}_t = \left\{ \mathbf{x} \in \mathcal{U}_t \;\middle|\; D_t(\mathbf{x}) < \tau_t \right\},$$

where $D_t(\mathbf{x})$ is the depth of an uncertain pixel $\mathbf{x}$ estimated by the depth estimation network $D_t$.

Next, the subset $\mathcal{S}^{D}_t$ is used to generate the new target-associated pixel set $\mathcal{T}_t \cup \mathcal{S}^{D}_t$ and the new uncertain pixel set $\mathcal{U}_t \setminus \mathcal{S}^{D}_t$. Meanwhile, we obtain the training data for the target detection network $N_t$ with the under-sampling strategy: a pixel subset of the same size as the new target-associated set is randomly sampled from the new uncertain set to serve as background pixels. Using this dataset, we update the target detection network $N_t$ and select a new subset of target-associated pixels $\mathcal{S}^{N}_t$ from the uncertain set according to $P(\mathrm{target} \mid \mathbf{x}, N_t)$, the probability that an uncertain pixel $\mathbf{x}$ is target-associated as computed by $N_t$. In the same way, moving $\mathcal{S}^{N}_t$ into the target-associated set yields $\mathcal{T}_{t+1}$ and $\mathcal{U}_{t+1}$, which are used to update the depth estimation network $D_{t+1}$. We repeat this process until neither the depth estimation network nor the target detection network improves any further.
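One pass of the alternating scheme can be sketched with plain Python sets. The network outputs are replaced by HYPOTHETICAL lookup tables (`depth`, `p_target`), the threshold is passed in as a constant because the schedule of Equation (11) is not reproduced here, and the detector's selection rule ASSUMES a 0.5 probability cut-off (the binary MAP rule). Retraining of the two networks is omitted, and all helper names are illustrative.

```python
import random


def select_by_depth(uncertain, depth_of, tau):
    """S^D = {x in U : D(x) < tau}: shallow estimates are labeled as targets."""
    return {x for x in uncertain if depth_of(x) < tau}


def select_by_detector(uncertain, target_prob_of, p_min=0.5):
    """S^N: uncertain pixels the detector labels as targets.

    ASSUMPTION: p_min = 0.5 (binary MAP rule); the paper's exact criterion
    is not reproduced here.
    """
    return {x for x in uncertain if target_prob_of(x) > p_min}


def move_to_targets(targets, uncertain, subset):
    """T <- T union S, U <- U minus S."""
    return targets | subset, uncertain - subset


def undersample(targets, uncertain, rng):
    """Detector training set: all targets plus an equal-size random background draw."""
    background = rng.sample(sorted(uncertain), k=min(len(targets), len(uncertain)))
    return [(x, 1) for x in sorted(targets)] + [(x, 0) for x in background]


# Toy run with hypothetical network outputs keyed by pixel id.
depth = {"a": 0.8, "b": 2.5, "c": 9.0, "d": 12.0, "e": 15.0}
p_target = {"a": 0.9, "b": 0.7, "c": 0.6, "d": 0.2, "e": 0.1}
T, U = {"seed"}, set(depth)

S_d = select_by_depth(U, depth.get, tau=3.0)
T, U = move_to_targets(T, U, S_d)        # T = {seed, a, b}
train_set = undersample(T, U, random.Random(0))
S_n = select_by_detector(U, p_target.get)
T, U = move_to_targets(T, U, S_n)        # c joins the targets
print(sorted(T), sorted(U))
```

Alternating the two selectors is the point of the design: each network labels pixels for the other, rather than retraining on its own test output.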

In summary, the complete process of the proposed framework is shown in Algorithm 1. Note that we use the network parameters from the penultimate training epoch instead of the last one, since the parameters from the last training epoch are overfitted.

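Algorithm 1. The total process of the proposed framework.

Input: HSI $\mathbf{X}$, a set of $K$ anomaly detection methods $\{A_k\}_{k=1}^{K}$, and the hyperparameters.

Output: Underwater target detection map and depth map.

1: for $k = 1, \dots, K$ do

2: Compute the result of the $k$-th anomaly detector $A_k$ according to Equation (3);

3: end for

4: Compute the joint anomaly detection result by Equation (4);

5: Construct the target-associated pixel set $\mathcal{T}_0$ and the uncertain pixel set $\mathcal{U}_0$ according to a threshold;

6: Initialize a depth estimation network $D$;

7: Initialize a target detection network $N$;

8: $t \leftarrow 0$;

9: while $D$ and $N$ do not converge do

10: Calculate the dynamic threshold $\tau_t$ by Equation (11);

11: Train $D$ with $\mathcal{T}_t$;

12: Generate a target-pixel subset $\mathcal{S}^{D}_t$ from $\mathcal{U}_t$ based on $D(\mathbf{x}) < \tau_t$;

13: Update $\mathcal{T}_t \leftarrow \mathcal{T}_t \cup \mathcal{S}^{D}_t$;

14: Update $\mathcal{U}_t \leftarrow \mathcal{U}_t \setminus \mathcal{S}^{D}_t$;

15: Establish the training data by under-sampling from $\mathcal{U}_t$ according to $\mathcal{T}_t$;

16: Train $N$ with the training data;

17: Generate a target-pixel subset $\mathcal{S}^{N}_t$ from $\mathcal{U}_t$ based on the target probabilities predicted by $N$;

18: Update $\mathcal{T}_{t+1} \leftarrow \mathcal{T}_t \cup \mathcal{S}^{N}_t$;

19: Update $\mathcal{U}_{t+1} \leftarrow \mathcal{U}_t \setminus \mathcal{S}^{N}_t$;

20: $t \leftarrow t + 1$;

21: end while

22: Calculate the depth map by feeding $\mathbf{X}$ into $D$;

23: Compute the detection map by feeding $\mathbf{X}$ into $N$.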