Abstract

In recent years, representation-based methods have attracted increasing attention in hyperspectral image (HSI) classification. Among them, the sparse representation-based classifier (SRC) and the collaborative representation-based classifier (CRC) are the two representative methods. However, SRC focuses only on sparsity and ignores the data correlation information, whereas CRC encourages grouping correlated variables together but lacks the ability of variable selection. As a result, neither SRC nor CRC produces satisfactory performance. To address these issues, this work proposes a correlation adaptive representation (CAR) and a CAR-based classifier (CARC). Specifically, the proposed CARC explores sparsity and data correlation information jointly, yielding a novel representation model that is adaptive to the structure of the dictionary. To further exploit the correlation between the test samples and the training samples, a distance-weighted Tikhonov regularization is integrated into the proposed CARC. Furthermore, to handle the small training sample problem in HSI classification, a multi-feature correlation adaptive representation-based classifier (MFCARC) and MFCARC with Tikhonov regularization (MFCART) are presented, which improve the classification performance by exploring the complementary information across multiple features. The experimental results show the superiority of the proposed methods over state-of-the-art algorithms.

1. Introduction

Hyperspectral images (HSIs) provide valuable spectral information: hyperspectral imaging sensors capture data in hundreds of narrow, contiguous bands at the same spatial location. In the past few years, HSIs have been extensively applied in various fields [1,2,3,4,5]. Among these applications, HSI classification is a crucial task for a wide variety of real-world problems, such as ecological science, mineralogy, and precision agriculture [6]. However, HSI classification still faces open challenges. For example, although the hundreds of spectral bands provide rich information, the resulting high dimensionality gives rise to the Hughes phenomenon. Additionally, because labeling samples in practice is difficult and expensive, the lack of sufficient labeled samples is another major challenge for HSI classification techniques.

In recent years, deep-learning-based techniques have aroused broad interest in the geoscience and remote sensing community. They have proven effective in HSI classification owing to their powerful ability to learn high-level, abstract features from HSI data automatically [7]. Compared with traditional classification methods, however, deep-learning-based approaches normally require more computational power and a larger number of training samples to learn the massive number of parameters in deep hierarchical networks [8]. Because the available training samples in hyperspectral data are limited, it remains challenging to achieve highly accurate HSI classification with deep learning approaches.

Currently, representation-based classification methods have been successfully applied to HSI classification, and numerous representation-based classifiers have been investigated extensively. The sparse representation-based classifier (SRC) was first proposed by Wright et al. [9] for face recognition. Later, Chen et al. [10] introduced a joint sparse representation model into HSI classification. Since then, many variants of SRC have been designed for HSI classification, such as joint SRC (JSRC) [11,12], robust SRC (RSRC) [13], and group SRC (GSRC) [14,15]. Zhang et al. [16] investigated the core principle of SRC and argued that it is the collaborative representation (CR) mechanism, rather than the $\ell_1$-norm-based sparsity constraint, that improves the final classification performance, which led to the collaborative representation-based classifier (CRC). Similar to the query-adapted technique adopted in weighted SRC (WSRC) [17], the nearest regularized subspace (NRS) [18] was presented by introducing a distance-weighted Tikhonov regularization into CRC. Subsequently, CR with Tikhonov regularization (CRT) [19] and different kernel versions of CRC and CRT [20,21,22] were introduced for HSI classification.

As discussed above, one of the crucial issues for representation-based classifiers is to find a suitable representation for each test sample and to derive discriminative representation coefficients for the subsequent classification task. Note that when SRC is applied to hyperspectral data, whether a useful sparse solution can be obtained depends mostly on the degree of coherence of the dictionary [23]. Specifically, when the samples from the same subspace within the dictionary are highly correlated, SR tends to select one atom at random and overlook the other correlated atoms, so SR suffers from instability. Besides, owing to the sparsity constraint, SR lacks the ability to reveal the correlation structure of the dictionary [24]. On the other hand, when CRC is applied to HSI classification, CR tends to group correlated samples together but lacks the ability of sample selection, which can introduce between-class interference and result in poor classification performance.

Given that both SR and CR have intrinsic limitations, several attempts have been made to balance SR and CR for better performance. Li et al. [25] presented a fused representation-based classifier (FRC) by combining SR and CR in the spectral residual domain, and Gan et al. [26] developed a kernel version of FRC (KFRC) to attain this balance in the kernel residual domain. Liu et al. [27] extended KFRC from the spectral kernel residual domain to the composite kernel with ideal regularization (CKIR)-based residual domain to further enhance class separability. Although these fusion-based classifiers have been shown to outperform the individual representation-based classifiers, they still cannot perform sample selection and group correlated samples simultaneously. As a remedy, the elastic net representation (ENR) model [24] was proposed to encourage both sparsity and the grouping effect via a combination of the LASSO and ridge regression penalties. Inspired by the elastic net, several ENR-based classification methods have been developed for HSIs to take full advantage of SRC and CRC [28,29,30]. However, the ENR model benefits from both the $\ell_1$-norm and the $\ell_2$-norm at the cost of having two regularization parameters to tune. In addition, the ENR model is blind to the exact correlation structure of the data and thus fails to balance SR and CR adaptively according to the precise correlation structure of the dictionary.

To tackle the above-mentioned issues, in this paper, a correlation adaptive representation (CAR) solution and CAR-based classifier (CARC) are proposed by exploring the precise correlation structure of the dictionary effectively. Specifically, we introduce a data correlation adaptive penalty by utilizing the advantage of the correlation adaptive behavior and thus make the model adaptive to the correlation structure of the dictionary. Different from ENR-based classifiers, the proposed CARC is capable of performing sample selection and grouping correlated samples jointly with a single regularization parameter to be tuned. By capturing the correlation structure of data samples, the CARC is able to balance SR and CR adaptively, producing more discriminative representation coefficients for the classification task.

Moreover, as an effective solution to the small training sample problem in HSI classification, the multi-task representation mechanism has been employed to integrate the discriminative capabilities of complementary features and thereby augment the training samples [31,32]. This has led to a new trend of exploiting the complementarity of multiple features for HSI classification [33]. Zhang et al. [34] and Jia et al. [35] proposed a multi-feature joint SRC (MF-JSRC) and a 3-D Gabor cube selection-based multitask joint SRC model for hyperspectral data, respectively. Fang et al. [36] presented a multi-feature-based adaptive sparse representation (MFASR) method to exploit the correlations among features. He et al. [37,38] introduced a class-oriented multitask learning-based classifier and a kernel low-rank multitask learning (KL-MTL) method to handle multiple features. To deal with the nonlinear distribution of multiple features, Gan et al. [39] developed a multiple feature kernel SRC for HSI classification. Although these multi-feature classification algorithms employ the multi-task representation mechanism to improve classification accuracy, they still rely on traditional representation models, whose intrinsic limitations lead to unsatisfactory performance.

In this paper, a multi-feature correlation adaptive representation-based classifier (MFCARC) is proposed to enhance the classification accuracy in small-sample situations by employing the multi-task representation mechanism. More importantly, the distance-weighted Tikhonov regularization is introduced into MFCARC, yielding the multi-feature correlation adaptive representation with Tikhonov regularization (MFCART) classifier, which achieves better performance. The main contributions are summarized as follows.

- A new representation-based classifier, CARC, is proposed that can perform sample selection and group correlated samples together simultaneously. It overcomes the intrinsic limitations of the traditional SRC and CRC methods and balances SR and CR adaptively according to the precise correlation structure of the dictionary.

- A dissimilarity-weighted Tikhonov regularization is integrated into the MFCARC framework to incorporate locality structure information and effectively encode the correlation between the test sample and the training samples, revealing the true geometry of the feature space and thus further improving class separability.

- A multi-task representation strategy is incorporated into CARC and into a correlation adaptive representation with Tikhonov regularization (CART) classifier, respectively, enhancing the classification accuracy in small-sample situations.

The rest of this paper is organized as follows. Section 2 briefly introduces the extraction of multiple features and the conventional representation-based classifiers. In Section 3, the proposed MFCARC and MFCART algorithms are described. The experimental results on real hyperspectral data sets are presented in Section 4. Section 5 discusses the results, and conclusions are drawn in Section 6.

2. Preliminaries

2.1. Multiple Feature Extraction

To provide a comprehensive description of 3-D HSI cubes, we extract four complementary spectral-spatial features from HSIs, i.e., the spectral value feature, the Gabor texture feature, the differential morphological profile (DMP) feature, and the local binary pattern (LBP) feature. The description and extraction of the four features are introduced as follows.

2.1.1. Spectral Value Feature

The spectral value feature captures the detailed spectral information. The spectral feature of each hyperspectral pixel is a vector of $B$ elements, where each element is the spectral value in one band and $B$ denotes the total number of spectral bands.

2.1.2. Gabor Texture Feature

The Gabor wavelet filter has been extensively used in HSI analysis owing to its ability to provide a global description of spatial texture information [40,41]. As an orientation-dependent bandpass filter, its impulse response is defined by an elliptical Gaussian envelope modulated by a complex plane wave. In a 2-D coordinate system, the Gabor filter can be defined as

$$g_{\lambda,\theta,\psi,\sigma,\gamma}(x,y)=\exp\left(-\frac{x'^2+\gamma^2y'^2}{2\sigma^2}\right)\exp\left(i\left(2\pi\frac{x'}{\lambda}+\psi\right)\right)$$

with the rotated coordinates

$$x'=x\cos\theta+y\sin\theta,\qquad y'=-x\sin\theta+y\cos\theta$$

Here, $\lambda$ denotes the wavelength of the sinusoidal factor, $\theta$ represents the orientation separation angle of the Gabor kernels, and $\psi$ is the phase offset of the Gabor function. The real and imaginary components of the Gabor filter are obtained when $\psi=0$ and $\psi=\pi/2$, respectively. $\gamma$ is the spatial aspect ratio, which specifies the ellipticity of the Gabor function, and $\sigma$ denotes the standard deviation of the Gaussian envelope. For a given wavelength $\lambda$ and spatial frequency bandwidth $b$, $\sigma$ can be calculated as

$$\sigma=\frac{\lambda}{\pi}\sqrt{\frac{\ln 2}{2}}\,\frac{2^{b}+1}{2^{b}-1}$$

Subsequently, the designed Gabor filter is used to extract the Gabor texture feature by applying the 2-D Gabor wavelet transform to the selected principal components (PCs) of the HSI. By stacking the Gabor features calculated from each PC, the final Gabor feature of each hyperspectral pixel is obtained.
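The following Python sketch illustrates the standard Gabor filter described in this subsection: it computes $\sigma$ from the wavelength and bandwidth, samples the complex kernel on a small grid, and stacks the response magnitudes over several orientations and PC images. The function names, the kernel half-width of 3, the wavelength of 4 pixels, $\gamma=0.5$, $b=1$, and the four orientations are illustrative assumptions rather than the settings of Table 4, and taking the magnitude of the complex response is one common choice assumed here.

```python
import cmath
import math


def gabor_sigma(wavelength: float, bandwidth: float) -> float:
    """Std. dev. of the Gaussian envelope for a wavelength and a bandwidth b (octaves)."""
    ratio = (2.0 ** bandwidth + 1.0) / (2.0 ** bandwidth - 1.0)
    return wavelength / math.pi * math.sqrt(math.log(2.0) / 2.0) * ratio


def gabor_kernel(half, wavelength, theta, psi=0.0, gamma=0.5, bandwidth=1.0):
    """Complex Gabor kernel sampled on a (2*half+1) x (2*half+1) grid (rows = y)."""
    sigma = gabor_sigma(wavelength, bandwidth)
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            xr = x * math.cos(theta) + y * math.sin(theta)   # rotated coordinate x'
            yr = -x * math.sin(theta) + y * math.cos(theta)  # rotated coordinate y'
            envelope = math.exp(-(xr ** 2 + gamma ** 2 * yr ** 2) / (2.0 * sigma ** 2))
            row.append(envelope * cmath.exp(1j * (2.0 * math.pi * xr / wavelength + psi)))
        kernel.append(row)
    return kernel


def gabor_feature(pcs, r, c, wavelength=4.0, n_orient=4, half=3):
    """Stack |filter response| at pixel (r, c) over orientations and PC images.

    The response is computed as a windowed correlation with zero padding."""
    feats = []
    for pc in pcs:
        for j in range(n_orient):
            kernel = gabor_kernel(half, wavelength, math.pi * j / n_orient)
            acc = 0j
            for dy in range(-half, half + 1):
                for dx in range(-half, half + 1):
                    rr, cc = r + dy, c + dx
                    if 0 <= rr < len(pc) and 0 <= cc < len(pc[0]):
                        acc += pc[rr][cc] * kernel[dy + half][dx + half]
            feats.append(abs(acc))
    return feats
```

With three PC images and four orientations, `gabor_feature` returns a 12-dimensional vector per pixel; a practical implementation would filter whole images with an optimized convolution routine instead of looping per pixel.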

2.1.3. Differential Morphological Profile (DMP)

As an effective tool for extracting structural information from HSIs, morphological profiles (MPs) [42] are constructed by applying a series of morphological opening and closing operators with a group of structuring elements (SEs) of increasing sizes. By calculating the slopes of the MPs, the DMP is obtained to represent shape information. DMP features are extracted from several PCs of the HSI; specifically, we apply the DMP to the selected PCs sequentially to yield the DMP feature.

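Let $\gamma_R^{r}(I_j)$ and $\phi_R^{r}(I_j)$ denote the morphological opening and closing by reconstruction, respectively, using a disk-shaped SE of radius $r$ on the selected PC image $I_j$, where $I_j$ represents the $j$th PC of the HSI. The MPs are defined over a series of SEs with increasing sizes:

$$\Pi_\gamma(I_j)=\left\{\gamma_R^{r_i}(I_j)\right\}_{i=0}^{n},\qquad \Pi_\phi(I_j)=\left\{\phi_R^{r_i}(I_j)\right\}_{i=0}^{n}$$

where $r_i$ denotes the radius of the $i$th disk-shaped SE, with $\gamma_R^{r_0}(I_j)=\phi_R^{r_0}(I_j)=I_j$. According to the definition of opening and closing by reconstruction, we have $\gamma_R^{r_i}(I_j)\le\gamma_R^{r_{i-1}}(I_j)$ and $\phi_R^{r_i}(I_j)\ge\phi_R^{r_{i-1}}(I_j)$ for $i=1,\dots,n$. Subsequently, the DMPs can be calculated as

$$\Delta_\gamma^{i}(I_j)=\left|\gamma_R^{r_i}(I_j)-\gamma_R^{r_{i-1}}(I_j)\right|,\qquad \Delta_\phi^{i}(I_j)=\left|\phi_R^{r_i}(I_j)-\phi_R^{r_{i-1}}(I_j)\right|$$

where $i=1,\dots,n$. To represent both bright and dark features in the PC image, the DMP feature descriptor of each PC image is obtained by concatenating $\Delta_\gamma(I_j)$ and $\Delta_\phi(I_j)$ into a vector.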
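A minimal pure-Python sketch of the DMP computation for a single PC image is given below. It assumes grayscale opening by reconstruction implemented as an erosion with a disk-shaped SE followed by geodesic reconstruction by dilation (8-connectivity), and obtains closing by reconstruction through duality. All function names are hypothetical, and a practical implementation would rely on an optimized image-processing library.

```python
def disk_offsets(radius):
    """Offsets (dy, dx) of a disk-shaped structuring element."""
    return [(dy, dx) for dy in range(-radius, radius + 1)
            for dx in range(-radius, radius + 1) if dy * dy + dx * dx <= radius * radius]


def _erode(img, offsets):
    """Grayscale erosion; pixels outside the image are ignored."""
    h, w = len(img), len(img[0])
    return [[min(img[r + dy][c + dx] for dy, dx in offsets
                 if 0 <= r + dy < h and 0 <= c + dx < w)
             for c in range(w)] for r in range(h)]


def _reconstruct_by_dilation(marker, mask):
    """Grow the marker under the mask (3x3 neighbourhood) until it stops changing."""
    h, w = len(mask), len(mask[0])
    square = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    cur = [row[:] for row in marker]
    while True:
        nxt = [[min(mask[r][c], max(cur[r + dy][c + dx] for dy, dx in square
                                   if 0 <= r + dy < h and 0 <= c + dx < w))
                for c in range(w)] for r in range(h)]
        if nxt == cur:
            return cur
        cur = nxt


def opening_by_reconstruction(img, radius):
    return _reconstruct_by_dilation(_erode(img, disk_offsets(radius)), img)


def closing_by_reconstruction(img, radius):
    # Duality: closing(I) = -opening(-I)
    neg = [[-v for v in row] for row in img]
    return [[-v for v in row] for row in opening_by_reconstruction(neg, radius)]


def dmp_feature(img, radii, r, c):
    """DMP descriptor of pixel (r, c): absolute differences between successive
    openings and between successive closings (index 0 is the image itself)."""
    opens = [img] + [opening_by_reconstruction(img, s) for s in radii]
    closes = [img] + [closing_by_reconstruction(img, s) for s in radii]
    d_open = [abs(opens[i][r][c] - opens[i - 1][r][c]) for i in range(1, len(opens))]
    d_close = [abs(closes[i][r][c] - closes[i - 1][r][c]) for i in range(1, len(closes))]
    return d_open + d_close
```

For a bright isolated pixel, the opening part of the descriptor responds while the closing part stays zero, and the reverse holds for a dark pixel, which is why both halves are concatenated.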

2.1.4. Local Binary Pattern (LBP)

The LBP operator is a powerful tool for characterizing local spatial texture. As an effective texture feature extraction approach, LBP has been employed to extract rotation-invariant features for HSI classification [43]. By thresholding the circular neighborhood of a center pixel, each neighboring pixel is assigned a binary label (“0” or “1”). For a center pixel with gray value $g_c$, its $P$ neighboring pixels, with gray values $g_p$ ($p=0,\dots,P-1$), are located on a circle of radius $R$ centered at the center pixel. Given the number of surrounding neighbors $P$, the LBP code of the center pixel can be expressed as

$$\mathrm{LBP}_{P,R}(g_c)=\sum_{p=0}^{P-1}s(g_p-g_c)\,2^{p}$$

where the function $s(x)=1$ if $x\ge0$ and $s(x)=0$ if $x<0$. By concatenating all the binary values computed in a clockwise direction, a binary code is obtained that reflects the texture orientation and smoothness in a local region. For HSI data, each PC image can be regarded as a gray-scale image, so we apply the LBP operator to each selected PC to obtain the LBP codes. Afterwards, an LBP histogram is computed over a local patch around the center pixel to serve as the LBP feature. By concatenating the LBP features from all the selected PCs, the final LBP feature is obtained.
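The sketch below computes the LBP code $\sum_{p} s(g_p-g_c)\,2^{p}$ of a pixel, sampling the $P$ circular neighbors with bilinear interpolation, and builds a local histogram. To obtain a rotation-invariant descriptor, it assumes the common rotation-invariant uniform ("riu2") mapping with $P+2$ bins; whether [43] uses exactly this mapping, as well as the patch size, are assumptions, and the function names are illustrative.

```python
import math


def _bilinear(img, y, x):
    """Bilinear interpolation written so that a constant patch is reproduced exactly."""
    y0, x0 = math.floor(y), math.floor(x)
    y1, x1 = min(y0 + 1, len(img) - 1), min(x0 + 1, len(img[0]) - 1)
    fy, fx = y - y0, x - x0
    top = img[y0][x0] + fx * (img[y0][x1] - img[y0][x0])
    bottom = img[y1][x0] + fx * (img[y1][x1] - img[y1][x0])
    return top + fy * (bottom - top)


def lbp_code(img, r, c, P=8, R=1.0):
    """LBP_{P,R} code of pixel (r, c); assumes the sampling circle lies inside the image."""
    gc = img[r][c]
    code = 0
    for p in range(P):
        angle = 2.0 * math.pi * p / P
        # rows grow downwards, so increasing angle walks clockwise on screen
        y = round(r + R * math.sin(angle), 10)  # rounding removes ~1e-16 drift
        x = round(c + R * math.cos(angle), 10)
        if _bilinear(img, y, x) >= gc:          # s(g_p - g_c) = 1
            code |= 1 << p
    return code


def riu2(code, P=8):
    """Rotation-invariant uniform mapping: number of 1-bits if at most two 0/1
    transitions occur around the circle, otherwise the catch-all label P + 1."""
    bits = [(code >> p) & 1 for p in range(P)]
    transitions = sum(bits[p] != bits[(p + 1) % P] for p in range(P))
    return sum(bits) if transitions <= 2 else P + 1


def lbp_histogram(img, r, c, half=2, P=8, R=1.0):
    """Histogram of riu2 labels over the (2*half+1)^2 patch centred at (r, c)."""
    hist = [0] * (P + 2)
    for rr in range(r - half, r + half + 1):
        for cc in range(c - half, c + half + 1):
            hist[riu2(lbp_code(img, rr, cc, P, R), P)] += 1
    return hist
```

Concatenating `lbp_histogram` outputs over the selected PC images then gives one LBP feature vector per pixel, mirroring the description above.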

2.2. Representation-Based Classification

Representation-based classification has been successfully applied to HSI classification tasks. Suppose that a dictionary $D=[d_1,d_2,\dots,d_N]\in\mathbb{R}^{B\times N}$ contains $N$ training samples from $C$ classes, where $d_i\in\mathbb{R}^{B}$ denotes the $i$th training sample with $B$ spectral bands. For class label $c$ ($c=1,2,\dots,C$), the corresponding training subset is denoted as $D_c\in\mathbb{R}^{B\times N_c}$, where $N_c$ denotes the number of training samples belonging to the $c$th class, i.e., $\sum_{c=1}^{C}N_c=N$. For a test sample $y\in\mathbb{R}^{B}$, representation-based models aim to approximate the test sample by a linear combination of all available training samples in the dictionary $D$. The approximation of the test sample $y$ can be represented as

$$y=D\alpha+\varepsilon \tag{10}$$

where $\alpha\in\mathbb{R}^{N}$ denotes the coefficient vector for reconstructing the test sample $y$ and $\varepsilon$ represents the residual error term. The class label of the test sample $y$ is then determined according to the minimum residual criterion, i.e.,

$$\mathrm{class}(y)=\arg\min_{c=1,\dots,C} r_c(y)=\arg\min_{c=1,\dots,C}\left\|y-D_c\alpha_c\right\|_2 \tag{11}$$

where $\alpha_c$ denotes the coefficients associated with the $c$th class and $r_c(y)$ represents the residual between the sample $y$ and its class-wise approximation.

According to sparse representation theory, only a few atoms of the dictionary $D$ are used to represent the test sample. The objective of SRC adds a sparsity constraint to a least-squares framework to force the reconstruction coefficients to be sparse. The sparse coefficients can then be obtained by solving the following optimization problem:

$$\hat{\alpha}=\arg\min_{\alpha}\left\|y-D\alpha\right\|_2\quad\text{s.t.}\quad\left\|\alpha\right\|_0\le L \tag{12}$$

where $L$ denotes the sparsity level, i.e., the maximum number of nonzero entries in $\alpha$. Problem (12) is known to be non-deterministic polynomial-time hard (NP-hard). Therefore, a convex relaxation replaces the $\ell_0$-norm with the $\ell_1$-norm of $\alpha$. The relaxed problem is convex and can be efficiently solved by fast algorithms, such as Nesterov’s algorithm (NESTA) [44] and the augmented Lagrange multiplier (ALM) method [45].
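As an illustration of the convex relaxation, the sketch below solves the Lagrangian form $\min_\alpha \frac{1}{2}\|y-D\alpha\|_2^2+\lambda\|\alpha\|_1$ with the iterative shrinkage-thresholding algorithm (ISTA). ISTA is swapped in here only because it is short; it is neither NESTA nor ALM, and the function name and iteration count are hypothetical.

```python
import math


def src_ista(D, y, lam, n_iter=500):
    """Minimise 0.5 * ||y - D a||_2^2 + lam * ||a||_1 by ISTA; D is B x N."""
    B, N = len(D), len(D[0])
    # Step size 1/L, where L must bound the largest eigenvalue of D^T D;
    # the squared Frobenius norm of D is a simple, safe choice.
    L = sum(v * v for row in D for v in row)
    a = [0.0] * N
    for _ in range(n_iter):
        resid = [y[i] - sum(D[i][j] * a[j] for j in range(N)) for i in range(B)]
        # gradient step: a + D^T (y - D a) / L
        z = [a[j] + sum(D[i][j] * resid[i] for i in range(B)) / L for j in range(N)]
        # soft thresholding, the proximal operator of (lam / L) * ||.||_1
        a = [math.copysign(max(abs(v) - lam / L, 0.0), v) for v in z]
    return a
```

Coefficients whose contribution to the fit is smaller than the threshold are driven exactly to zero, which is the sample-selection behavior of SR discussed in this paper.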

Distinct from SR, CRC represents the test sample $y$ via a linear combination of all the training samples in the dictionary $D$ and imposes an $\ell_2$-norm regularization term on the representation coefficient vector $\alpha$:

$$\hat{\alpha}=\arg\min_{\alpha}\left\|y-D\alpha\right\|_2^2+\lambda\left\|\alpha\right\|_2^2 \tag{13}$$

where $\lambda$ is the regularization parameter that balances the residual term and the regularization term. The analytical solution to (13) is

$$\hat{\alpha}=\left(D^{T}D+\lambda I\right)^{-1}D^{T}y \tag{14}$$

After obtaining $\hat{\alpha}$, the label of the test sample $y$ is predicted according to (11).
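The closed-form CRC solution $(D^{T}D+\lambda I)^{-1}D^{T}y$ and the minimum residual rule can be sketched in plain Python as follows. The small Gaussian-elimination solver stands in for a numerical linear-algebra library, the dictionary is stored as a $B\times N$ list of rows, and all names are illustrative.

```python
import math


def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense system A x = b."""
    n = len(A)
    M = [list(A[i]) + [b[i]] for i in range(n)]
    for k in range(n):
        piv = max(range(k, n), key=lambda i: abs(M[i][k]))
        M[k], M[piv] = M[piv], M[k]
        for i in range(k + 1, n):
            f = M[i][k] / M[k][k]
            for j in range(k, n + 1):
                M[i][j] -= f * M[k][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def crc_coefficients(D, y, lam):
    """Closed-form CRC coefficients (D^T D + lam I)^{-1} D^T y; D is B x N."""
    B, N = len(D), len(D[0])
    G = [[sum(D[i][p] * D[i][q] for i in range(B)) + (lam if p == q else 0.0)
          for q in range(N)] for p in range(N)]
    Dty = [sum(D[i][p] * y[i] for i in range(B)) for p in range(N)]
    return solve(G, Dty)


def min_residual_label(D, labels, y, alpha):
    """Minimum residual rule: argmin_c ||y - D_c alpha_c||_2."""
    best, best_r = None, math.inf
    for c in sorted(set(labels)):
        idx = [j for j, lab in enumerate(labels) if lab == c]
        r = math.sqrt(sum((y[i] - sum(D[i][j] * alpha[j] for j in idx)) ** 2
                          for i in range(len(y))))
        if r < best_r:
            best, best_r = c, r
    return best
```

Because all atoms share the same ridge penalty, the coefficients are dense; the class decision therefore relies entirely on the class-wise residuals.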

3. Proposed Methods

3.1. Correlation Adaptive Representation-Based Classification (CARC)

As an effective tool for HSI classification, SRC can perform sample selection automatically owing to the nature of the $\ell_1$-norm. However, SRC suffers from instability when the samples within the training dictionary are highly correlated. In hyperspectral data, the spectral signatures of different samples are strongly correlated, which leads to unsatisfactory performance. On the other hand, owing to the $\ell_2$-norm, CR yields stable results through its ability to group correlated samples. Nevertheless, CRC lacks the ability of sample selection and thus produces a dense representation of hyperspectral data, which can introduce between-class interference and lead to unsatisfactory performance.

Essentially, an ideal representation model should promote sparsity by involving the relevant class and leaving the remaining classes uninvolved in the representation. At the same time, it should not overemphasize sparsity; rather, it should encourage all the samples within the relevant training subset to contribute to the representation. To give the representation model the ability to perform sample selection and group correlated samples simultaneously, the trace Lasso [46] is employed as a correlation regularizer that adaptively captures the correlation structure of the data samples. By imposing the trace norm on the representation coefficient $\alpha$ together with the dictionary $D$, the correlation regularizer is written as $\|D\,\mathrm{Diag}(\alpha)\|_*$, where $\mathrm{Diag}(\alpha)$ converts the coefficient vector $\alpha$ into a diagonal matrix whose diagonal entries are the elements of $\alpha$. The trace norm, also known as the nuclear norm and denoted as $\|X\|_*$, is the sum of all the singular values of the matrix $X$. Because the dictionary $D$ enters the regularizer, the correlation information is encoded into the model, which makes the model adaptive to the correlation structure of the dictionary. We then propose the following correlation adaptive representation model:

$$\hat{\alpha}=\arg\min_{\alpha}\left\|y-D\alpha\right\|_2^2+\lambda\left\|D\,\mathrm{Diag}(\alpha)\right\|_* \tag{15}$$

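where $\lambda$ is a trade-off parameter balancing the effects of the two terms. We now discuss the adaptive behavior of the model in detail. Consider first the scenario in which all the samples within the dictionary are uncorrelated and have unit norm, i.e., $D^{T}D=I$. Then the regularization term can be rewritten as

$$\left\|D\,\mathrm{Diag}(\alpha)\right\|_*=\mathrm{tr}\left(\left(\mathrm{Diag}(\alpha)D^{T}D\,\mathrm{Diag}(\alpha)\right)^{1/2}\right)=\mathrm{tr}\left(\left|\mathrm{Diag}(\alpha)\right|\right)=\left\|\alpha\right\|_1 \tag{16}$$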

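Obviously, the correlation adaptive representation model then reduces to the $\ell_1$-regularized model of SRC. Consider another scenario in which all the samples within the dictionary are strongly correlated: suppose that all the training atoms are identical to the first atom $d_1$ and that all the columns of $D$ have unit norm, i.e., $D=d_1\mathbf{1}^{T}$ with $\|d_1\|_2=1$, where $\mathbf{1}$ denotes an all-ones vector. Then the regularization term can be reformulated as

$$\left\|D\,\mathrm{Diag}(\alpha)\right\|_*=\left\|d_1\alpha^{T}\right\|_*=\left\|d_1\right\|_2\left\|\alpha\right\|_2=\left\|\alpha\right\|_2 \tag{17}$$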

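In this case, the correlation adaptive representation model behaves like CRC, since the penalty becomes the $\ell_2$-norm of $\alpha$. In more general scenarios, when all the columns of $D$ have unit norm, the regularization term satisfies the following property [46]:

$$\left\|\alpha\right\|_2\le\left\|D\,\mathrm{Diag}(\alpha)\right\|_*\le\left\|\alpha\right\|_1 \tag{18}$$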

This indicates that the regularizer interpolates between the $\ell_1$-norm and the $\ell_2$-norm depending on the correlation among samples. Owing to this adaptive property of the correlation adaptive penalty, the proposed representation model can balance SRC and CRC adaptively according to the precise correlation structure of the dictionary.
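To make the interpolation property concrete, the sketch below evaluates $\|D\,\mathrm{Diag}(\alpha)\|_*$ numerically, obtaining the singular values as square roots of the eigenvalues of $X^{T}X$ computed with cyclic Jacobi rotations. The helper names are hypothetical, and the eigen-solver is a plain-Python stand-in for a library SVD.

```python
import math


def sym_eigvals(A, sweeps=60):
    """Eigenvalues of a symmetric matrix by cyclic Jacobi rotations."""
    n = len(A)
    A = [row[:] for row in A]
    for _ in range(sweeps):
        if sum(A[i][j] ** 2 for i in range(n) for j in range(n) if i != j) < 1e-24:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(A[p][q]) < 1e-300:
                    continue
                theta = (A[q][q] - A[p][p]) / (2.0 * A[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A J (columns p and q)
                    A[k][p], A[k][q] = c * A[k][p] - s * A[k][q], s * A[k][p] + c * A[k][q]
                for k in range(n):  # A <- J^T A (rows p and q)
                    A[p][k], A[q][k] = c * A[p][k] - s * A[q][k], s * A[p][k] + c * A[q][k]
    return [A[i][i] for i in range(n)]


def trace_lasso(D, alpha):
    """||D Diag(alpha)||_*: sum of the singular values of D Diag(alpha)."""
    B, N = len(D), len(alpha)
    X = [[D[i][j] * alpha[j] for j in range(N)] for i in range(B)]
    # the singular values of X are the square roots of the eigenvalues of X^T X
    G = [[sum(X[k][i] * X[k][j] for k in range(B)) for j in range(N)] for i in range(N)]
    return sum(math.sqrt(max(ev, 0.0)) for ev in sym_eigvals(G))
```

For example, with $\alpha=(1,-2,3)$, an identity dictionary gives $6=\|\alpha\|_1$, three identical unit-norm atoms $(0.6,0.8)^{T}$ give approximately $\sqrt{14}=\|\alpha\|_2$, and partially correlated unit-norm atoms give a value in between.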

Although the alternating direction method (ADM) [47] can be adopted to solve the convex optimization problem (15), it may slow down convergence by introducing several auxiliary variables corresponding to the non-smooth terms [48]. In this paper, we therefore use the iteratively reweighted least squares (IRLS) method [49,50], which avoids introducing auxiliary variables. The commonly used variational formulation of the nuclear norm [51] is given as follows:

$$\left\|X\right\|_*=\frac{1}{2}\inf_{Q\succ0}\left(\mathrm{tr}\left(X^{T}Q^{-1}X\right)+\mathrm{tr}(Q)\right) \tag{19}$$

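The trace norm of the matrix $X$ admits the above variational form, and the infimum is reached at $Q=(XX^{T})^{1/2}$. Subsequently, with $X=D\,\mathrm{Diag}(\alpha)$, the optimization problem (15) is reformulated as

$$\min_{\alpha,\,Q\succ0}\left\|y-D\alpha\right\|_2^2+\frac{\lambda}{2}\left(\mathrm{tr}\left(\mathrm{Diag}(\alpha)D^{T}Q^{-1}D\,\mathrm{Diag}(\alpha)\right)+\mathrm{tr}(Q)\right) \tag{20}$$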

The detailed mathematical derivation from (15) to (20) is provided in Appendix A. Problem (20) is jointly convex in $\alpha$ and $Q$, and an alternating minimization algorithm (Algorithm 1) is adopted to optimize it. However, the convergence of the alternating minimization cannot be guaranteed, because the infimum over $Q$ could be achieved at a non-invertible $Q$. To handle this issue, a commonly used strategy [52] is to add a smoothing term $\frac{\lambda\mu}{2}\mathrm{tr}(Q^{-1})$ to (20), which gives

$$\min_{\alpha,\,Q\succ0}\left\|y-D\alpha\right\|_2^2+\frac{\lambda}{2}\left(\mathrm{tr}\left(\mathrm{Diag}(\alpha)D^{T}Q^{-1}D\,\mathrm{Diag}(\alpha)\right)+\mathrm{tr}(Q)+\mu\,\mathrm{tr}\left(Q^{-1}\right)\right) \tag{21}$$

where $\mu$ is a smoothing parameter. The added term makes the objective function smooth and keeps the matrix $Q$ nonsingular. To obtain the optimal $\alpha$ and $Q$, the alternating minimization algorithm minimizes the objective with respect to $\alpha$ and $Q$ in two alternating steps, detailed as follows:

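Update $\alpha$: Fix $Q$ and update $\alpha$ by solving the following problem:

$$\min_{\alpha}\left\|y-D\alpha\right\|_2^2+\frac{\lambda}{2}\alpha^{T}W\alpha,\qquad W=\mathrm{Diag}\left(d_1^{T}Q^{-1}d_1,\dots,d_N^{T}Q^{-1}d_N\right) \tag{22}$$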

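This is a least-squares problem penalized by a reweighted $\ell_2$-norm, i.e., $\sum_{i}w_i\alpha_i^2$ with $w_i=d_i^{T}Q^{-1}d_i$. Taking the partial derivative of the objective in (22) with respect to $\alpha$ and setting it to zero gives the closed-form solution

$$\alpha=\left(D^{T}D+\frac{\lambda}{2}W\right)^{-1}D^{T}y \tag{23}$$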

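Update $Q$: Fix $\alpha$ and update $Q$ by solving the following problem:

$$\min_{Q\succ0}\mathrm{tr}\left(Q^{-1}\left(D\,\mathrm{Diag}(\alpha)^{2}D^{T}+\mu I\right)\right)+\mathrm{tr}(Q) \tag{24}$$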

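The term $\mu\,\mathrm{tr}(Q^{-1})$ keeps the $Q$-iterates of the algorithm at a certain distance from the boundary of the cone of positive semidefinite matrices [53]. The optimal solution of $Q$ is given by

$$Q=\left(D\,\mathrm{Diag}(\alpha)^{2}D^{T}+\mu I\right)^{1/2} \tag{25}$$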

The complete procedure of the proposed CARC algorithm is listed in Algorithm 1. It has been shown that problem (15) always has a unique minimum [46]; therefore, the proposed CARC is expected to be much more stable than SRC.

| Algorithm 1 CARC |

| Input: Training dictionary $D$, test sample $y$, and the regularization parameter λ. |

| Initialization: Q = I, , , ρ, maxiter, t = 0. |

| while not converged and t < maxiter do |

| Step 1: Fix Q and update α according to (23). |

| Step 2: Fix α and update Q according to (25). |

| Step 3: Update the parameter by . |

| Step 4: Check the convergence conditions and . |

| end while |

| Output: The class label of the sample y. |
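A compact sketch of the alternating updates of Algorithm 1 is given below, assuming the smoothed objective with the α-update $\alpha=(D^{T}D+\frac{\lambda}{2}W)^{-1}D^{T}y$, $w_i=d_i^{T}Q^{-1}d_i$, and the Q-update $Q=(D\,\mathrm{Diag}(\alpha)^{2}D^{T}+\mu I)^{1/2}$. Step 3 of Algorithm 1 updates a parameter using ρ; this sketch instead keeps μ fixed, which is an assumption, as are the values of μ, λ, the iteration limit, the tolerance, and all function names.

```python
import math


def jacobi_eigh(A, sweeps=60):
    """Symmetric eigen-decomposition by cyclic Jacobi rotations; eigenvectors are the columns of V."""
    n = len(A)
    A = [row[:] for row in A]
    V = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        if sum(A[i][j] ** 2 for i in range(n) for j in range(n) if i != j) < 1e-24:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(A[p][q]) < 1e-300:
                    continue
                theta = (A[q][q] - A[p][p]) / (2.0 * A[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A J and V <- V J (columns p and q)
                    A[k][p], A[k][q] = c * A[k][p] - s * A[k][q], s * A[k][p] + c * A[k][q]
                    V[k][p], V[k][q] = c * V[k][p] - s * V[k][q], s * V[k][p] + c * V[k][q]
                for k in range(n):  # A <- J^T A (rows p and q)
                    A[p][k], A[q][k] = c * A[p][k] - s * A[q][k], s * A[p][k] + c * A[q][k]
    return [A[i][i] for i in range(n)], V


def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(A)
    M = [list(A[i]) + [b[i]] for i in range(n)]
    for k in range(n):
        piv = max(range(k, n), key=lambda i: abs(M[i][k]))
        M[k], M[piv] = M[piv], M[k]
        for i in range(k + 1, n):
            f = M[i][k] / M[k][k]
            for j in range(k, n + 1):
                M[i][j] -= f * M[k][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def carc_coefficients(D, y, lam, mu=1e-3, max_iter=50, tol=1e-8):
    """Alternate the alpha-step and the Q-step; D is B x N (columns are atoms)."""
    B, N = len(D), len(D[0])
    Qinv = [[1.0 if i == j else 0.0 for j in range(B)] for i in range(B)]  # Q = I
    DtD = [[sum(D[k][i] * D[k][j] for k in range(B)) for j in range(N)] for i in range(N)]
    Dty = [sum(D[k][i] * y[k] for k in range(B)) for i in range(N)]
    alpha = [0.0] * N
    for _ in range(max_iter):
        # alpha-step: reweighted ridge with w_i = d_i^T Q^{-1} d_i
        w = [sum(D[p][i] * Qinv[p][q] * D[q][i] for p in range(B) for q in range(B))
             for i in range(N)]
        A = [[DtD[i][j] + (0.5 * lam * w[i] if i == j else 0.0) for j in range(N)]
             for i in range(N)]
        new_alpha = solve(A, Dty)
        # Q-step: Q = (D Diag(alpha)^2 D^T + mu I)^{1/2}; only Q^{-1} is needed
        M = [[sum(D[p][i] * new_alpha[i] ** 2 * D[q][i] for i in range(N))
              + (mu if p == q else 0.0) for q in range(B)] for p in range(B)]
        ev, V = jacobi_eigh(M)
        Qinv = [[sum(V[p][k] * V[q][k] / math.sqrt(ev[k]) for k in range(B))
                 for q in range(B)] for p in range(B)]
        change = max(abs(a - b) for a, b in zip(new_alpha, alpha))
        alpha = new_alpha
        if change < tol:
            break
    return alpha


def carc_classify(D, labels, y, lam=0.1):
    """Minimum-residual label using the CARC coefficients."""
    alpha = carc_coefficients(D, y, lam)

    def residual(c):
        return math.sqrt(sum(
            (y[r] - sum(D[r][j] * alpha[j] for j in range(len(alpha)) if labels[j] == c)) ** 2
            for r in range(len(y))))

    return min(sorted(set(labels)), key=residual)
```

Atoms lying in directions that the current representation barely uses receive large weights $w_i$ and are shrunk in the next α-step, which is how the reweighting realizes sample selection while correlated atoms share similar weights.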

3.2. Multi-Feature Correlation Adaptive Representation-Based Classification (MFCARC)

The proposed CARC model uses only the spectral feature for classification. To alleviate the problem caused by the lack of sufficient training samples, better performance can potentially be achieved by combining a set of diverse and complementary features in the representation model. Therefore, we incorporate the multi-task representation strategy into the CARC model and propose the MFCARC method for HSI classification. Suppose each pixel in the hyperspectral data is represented by $K$ features. For each feature modality index $k$ ($k=1,\dots,K$), $D^{k}$ denotes the training dictionary associated with the $k$th feature, and $y^{k}$ denotes the $k$th feature of the test sample $y$. Based on multi-task learning theory, the proposed MFCARC model is formulated as:

$$\min_{\{\alpha^{k}\}}\sum_{k=1}^{K}\left(\left\|y^{k}-D^{k}\alpha^{k}\right\|_2^2+\lambda\left\|D^{k}\,\mathrm{Diag}(\alpha^{k})\right\|_*\right) \tag{26}$$

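where $\lambda$ is the regularization parameter used to trade off the two terms, $\alpha^{k}$ denotes the representation coefficients of $y^{k}$ over the dictionary $D^{k}$, and $A=[\alpha^{1},\dots,\alpha^{K}]$ represents the ensemble of the coefficient vectors. Because the objective decouples across features, each $\alpha^{k}$ is optimized by Algorithm 1. After obtaining $\alpha^{k}$ for all $k$, the label of the test sample is determined according to the minimum residual criterion, i.e.,

$$\mathrm{class}(y)=\arg\min_{c=1,\dots,C}\sum_{k=1}^{K}\left\|y^{k}-D_c^{k}\alpha_c^{k}\right\|_2 \tag{27}$$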

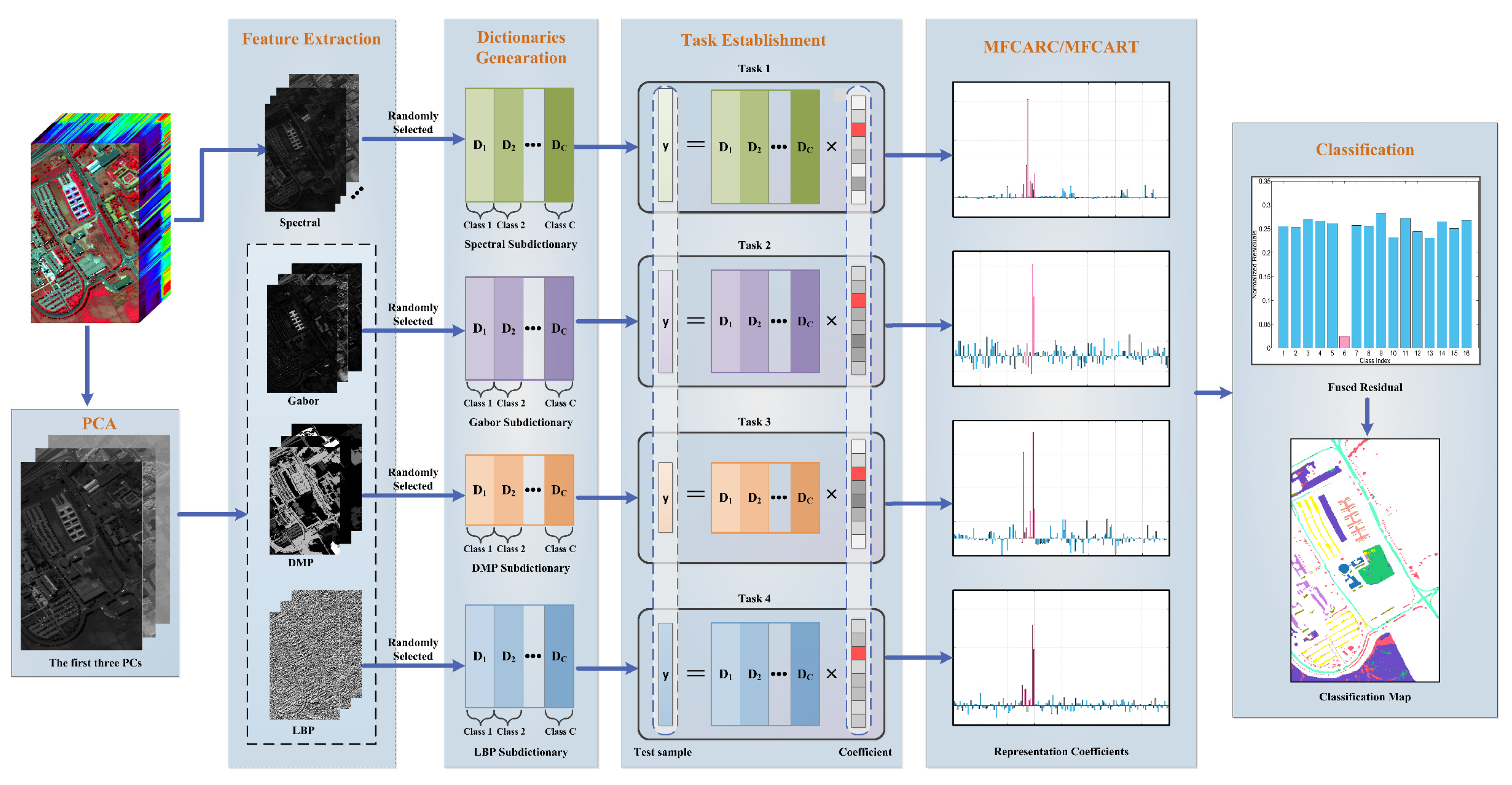

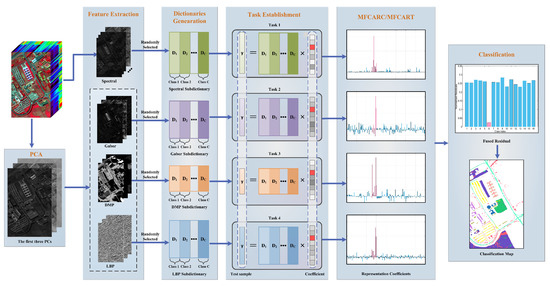

where $D_c^{k}$ denotes the subdictionary of the $k$th feature for class $c$, and $\alpha_c^{k}$ is the subset of $\alpha^{k}$ associated with the $c$th class. Finally, the class label of the test sample $y$ is determined as the class with the lowest reconstruction error accumulated over all $K$ tasks. The procedure of the proposed MFCARC is summarized in Algorithm 2, and an illustration of the proposed MFCARC is depicted in Figure 1.

| Algorithm 2 MFCARC |

| Input: Multi-feature dictionaries $\{D^{k}\}_{k=1}^{K}$, test sample $\{y^{k}\}_{k=1}^{K}$, and the regularization parameter λ. |

| Initialization: Q = I, , , ρ, maxiter, t = 0. |

| for k = 1 to K do |

| while not converged and t < maxiter do |

| Step 1: Fix $Q^{k}$ and update $\alpha^{k}$ according to (23). |

| Step 2: Fix $\alpha^{k}$ and update $Q^{k}$ according to (25). |

| Step 3: Update the parameter by |

| Step 4: Check the convergence conditions and . |

| end while |

| Calculate the representation residual of the test sample y for the kth modality of the feature according to (11). |

| end for |

| Calculate the class label class(y) for the test sample y according to (27). |

| Output: The class label of the sample y. |

Figure 1.

Schematic illustration of the proposed multi-feature correlation adaptive representation-based classifier (MFCARC) and MFCARC with Tikhonov regularization (MFCART) algorithms.
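The multi-feature decision rule of MFCARC accumulates class-wise residuals over the $K$ features and picks the class with the smallest total. The sketch below assumes that the per-feature coefficients $\alpha^{k}$ have already been computed (for example, by a CARC solver), that all features share the same training pixels and labels, and that the un-squared $\ell_2$ residual is accumulated; the function name is hypothetical.

```python
import math


def multi_feature_label(dicts, labels, ys, alphas):
    """Return argmin_c sum_k ||y^k - D^k_c alpha^k_c||_2.

    dicts[k]: B_k x N dictionary of feature k (columns are the N training pixels),
    ys[k]: k-th feature of the test pixel, alphas[k]: its N coefficients,
    labels: class label of each of the N training pixels (shared by all features)."""
    totals = {}
    for D, y, a in zip(dicts, ys, alphas):
        for c in set(labels):
            idx = [j for j, lab in enumerate(labels) if lab == c]
            err = math.sqrt(sum((y[i] - sum(D[i][j] * a[j] for j in idx)) ** 2
                                for i in range(len(y))))
            totals[c] = totals.get(c, 0.0) + err
    return min(sorted(totals), key=lambda c: totals[c])
```

A feature that weakly favors the wrong class can be outvoted by features that clearly favor the right one, which is the complementarity the multi-task strategy relies on.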

3.3. Multi-Feature Correlation Adaptive Representation-Based Classification with Tikhonov Regularization (MFCART)

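Considering that the relationship between the test sample and the training samples is also informative for representation, we integrate the distance-weighted Tikhonov regularization into the MFCARC framework to obtain a more accurate representation model, the multi-feature correlation adaptive representation with Tikhonov regularization (MFCART) classifier. It is formulated as

$$\min_{\{\alpha^{k}\}}\sum_{k=1}^{K}\left(\left\|y^{k}-D^{k}\alpha^{k}\right\|_2^2+\lambda\left\|D^{k}\,\mathrm{Diag}(\alpha^{k})\right\|_*+\beta\left\|\Gamma^{k}\alpha^{k}\right\|_2^2\right) \tag{28}$$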

where the regularization parameters $\lambda$ and $\beta$ control the contributions of the correlation regularizer and the distance-weighted regularizer, respectively. $\Gamma^{k}$ represents the Tikhonov matrix that measures the distance between the test sample $y^{k}$ and the training atoms of the dictionary $D^{k}$, i.e.,

$$\Gamma^{k}=\mathrm{Diag}\left(\left\|y^{k}-d_1^{k}\right\|_2,\dots,\left\|y^{k}-d_N^{k}\right\|_2\right) \tag{29}$$

where $d_i^{k}$ denotes the $i$th atom of the dictionary $D^{k}$ associated with the $k$th feature. Similar to elastic net representation [28,29,30], the proposed MFCART integrates two regularization terms into the objective function to combine the merits of the correlation regularizer and the distance-weighted regularizer. Specifically, both the correlation information within the training dictionary and the correlation information between the test sample and the dictionary atoms are encoded into the model. Therefore, the proposed MFCART not only performs sample selection and groups correlated samples together, but also lets the training samples that are more similar to the test sample receive larger representation coefficients. Additionally, by introducing the dissimilarity-weighted regularization term into the objective function, the proposed MFCART incorporates locality structure information that reveals the true geometry of the feature space, and thus has an advantage over MFCARC in producing more discriminative representation coefficients.

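To optimize the representation coefficients $\alpha^{k}$ and the matrices $Q^{k}$, (28) is reformulated as

$$\min_{\{\alpha^{k},\,Q^{k}\succ0\}}\sum_{k=1}^{K}\left(\left\|y^{k}-D^{k}\alpha^{k}\right\|_2^2+\frac{\lambda}{2}\left(\mathrm{tr}\left(\mathrm{Diag}(\alpha^{k})(D^{k})^{T}(Q^{k})^{-1}D^{k}\mathrm{Diag}(\alpha^{k})\right)+\mathrm{tr}(Q^{k})+\mu\,\mathrm{tr}\left((Q^{k})^{-1}\right)\right)+\beta\left\|\Gamma^{k}\alpha^{k}\right\|_2^2\right) \tag{30}$$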

To obtain the optimal $\alpha^{k}$ and $Q^{k}$, the alternating minimization algorithm is employed to minimize (30). The detailed optimization steps are as follows.

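Update $\alpha^{k}$: Fix $Q^{k}$ and update $\alpha^{k}$ by solving the following problem:

$$\min_{\alpha^{k}}\left\|y^{k}-D^{k}\alpha^{k}\right\|_2^2+\frac{\lambda}{2}(\alpha^{k})^{T}W^{k}\alpha^{k}+\beta\left\|\Gamma^{k}\alpha^{k}\right\|_2^2 \tag{31}$$

where $W^{k}=\mathrm{Diag}\left((d_1^{k})^{T}(Q^{k})^{-1}d_1^{k},\dots,(d_N^{k})^{T}(Q^{k})^{-1}d_N^{k}\right)$.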

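Taking the partial derivative of the objective in (31) with respect to $\alpha^{k}$ and setting it to zero yields the closed-form solution

$$\alpha^{k}=\left((D^{k})^{T}D^{k}+\frac{\lambda}{2}W^{k}+\beta(\Gamma^{k})^{T}\Gamma^{k}\right)^{-1}(D^{k})^{T}y^{k} \tag{32}$$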

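Update $Q^{k}$: Fix $\alpha^{k}$ and update $Q^{k}$ by solving the following problem:

$$\min_{Q^{k}\succ0}\mathrm{tr}\left((Q^{k})^{-1}\left(D^{k}\mathrm{Diag}(\alpha^{k})^{2}(D^{k})^{T}+\mu I\right)\right)+\mathrm{tr}(Q^{k}) \tag{33}$$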

As described earlier, the optimal solution for $Q^{k}$ is

$$Q^{k}=\left(D^{k}\mathrm{Diag}(\alpha^{k})^{2}(D^{k})^{T}+\mu I\right)^{1/2} \tag{34}$$
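For a single feature $k$, the α-update of MFCART differs from that of CARC only by the diagonal Tikhonov term. The sketch below builds the diagonal of $\Gamma^{k}$ from Euclidean distances and solves $\alpha^{k}=((D^{k})^{T}D^{k}+\frac{\lambda}{2}W^{k}+\beta(\Gamma^{k})^{T}\Gamma^{k})^{-1}(D^{k})^{T}y^{k}$ for fixed reweighting factors $w_i=(d_i^{k})^{T}(Q^{k})^{-1}d_i^{k}$. This scaling of the reweighted term, and the function names, are assumptions made for illustration.

```python
import math


def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(A)
    M = [list(A[i]) + [b[i]] for i in range(n)]
    for k in range(n):
        piv = max(range(k, n), key=lambda i: abs(M[i][k]))
        M[k], M[piv] = M[piv], M[k]
        for i in range(k + 1, n):
            f = M[i][k] / M[k][k]
            for j in range(k, n + 1):
                M[i][j] -= f * M[k][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def tikhonov_diag(D, y):
    """Diagonal of the Tikhonov matrix: ||y - d_i||_2 for every atom (column) d_i of D."""
    return [math.sqrt(sum((y[r] - D[r][i]) ** 2 for r in range(len(y))))
            for i in range(len(D[0]))]


def mfcart_alpha_step(D, y, w, lam, beta):
    """alpha = (D^T D + (lam/2) Diag(w) + beta Gamma^T Gamma)^{-1} D^T y for one feature,
    with the trace-norm reweighting factors w_i = d_i^T Q^{-1} d_i held fixed."""
    B, N = len(D), len(D[0])
    g = tikhonov_diag(D, y)
    A = [[sum(D[k][i] * D[k][j] for k in range(B))
          + ((0.5 * lam * w[i] + beta * g[i] ** 2) if i == j else 0.0)
          for j in range(N)] for i in range(N)]
    Dty = [sum(D[k][i] * y[k] for k in range(B)) for i in range(N)]
    return solve(A, Dty)
```

Atoms far from the test sample receive a larger diagonal penalty $\beta\|y^{k}-d_i^{k}\|_2^2$, so their coefficients shrink relative to those of nearby atoms.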

The complete procedure of the proposed MFCART is described in Algorithm 3. Also, the diagram of the proposed MFCART algorithm is depicted in Figure 1.

| Algorithm 3 MFCART |

| Input: Multi-feature dictionaries $\{D^{k}\}_{k=1}^{K}$, test sample $\{y^{k}\}_{k=1}^{K}$, and the regularization parameters λ and β. |

| Initialization: Q = I, , , ρ, maxiter, t = 0. |

| for k = 1 to K do |

| while not converged and t < maxiter do |

| Step 1: Fix $Q^{k}$ and update $\alpha^{k}$ according to (32). |

| Step 2: Fix $\alpha^{k}$ and update $Q^{k}$ according to (34). |

| Step 3: Update the parameter by |

| Step 4: Check the convergence conditions and . |

| end while |

| Calculate the representation residual of the test sample y for the kth modality of the feature according to (11). |

| end for |

| Calculate the class label class(y) for the test sample y according to (27). |

| Output: The class label of the sample y. |

4. Results

In this section, we examine the effectiveness of the proposed methods on three real hyperspectral data sets: the Indian Pines data set, the University of Pavia data set, and the Salinas data set. State-of-the-art classification algorithms are used as benchmarks, including kernel sparse representation classification (KSRC) [54], multiscale adaptive sparse representation classification (MASR) [55], collaborative representation classification with Tikhonov regularization (CRT) [19], kernel fused representation classification via the composite kernel with ideal regularization (KFRC-CKIR) [27], multiple feature sparse representation classification (MF-SRC) [34], multiple feature joint sparse representation classification (MF-JSRC) [34], and multi-feature-based adaptive sparse representation classification (MFASR) [36]. To further demonstrate the effectiveness of the proposed methods, the classification performance of several recent deep-learning-based classifiers is also compared, including two convolutional neural networks (2-D CNN and 3-D CNN) [56], a spatial prior generalized fuzziness extreme learning machine autoencoder (GFELM-AE)-based active learning method [57], a spatial–spectral convolutional long short-term memory (ConvLSTM) 2-D neural network (SSCL2DNN) [58], and a spatial–spectral ConvLSTM 3-D neural network (SSCL3DNN) [58]. In addition, four widely used metrics, namely the overall accuracy (OA), the average accuracy (AA), the kappa coefficient, and the class-specific accuracy (CA), are used for evaluation.
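For reference, the four metrics can be computed from true and predicted labels as sketched below, with the kappa coefficient taken as Cohen's kappa computed from the row and column totals of the confusion matrix. The function name is hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Return OA, AA, Cohen's kappa, and a dict of class-specific accuracies (CA)."""
    n = len(y_true)
    oa = sum(t == p for t, p in zip(y_true, y_pred)) / n
    ca = {}
    for c in sorted(set(y_true)):
        idx = [i for i, t in enumerate(y_true) if t == c]
        ca[c] = sum(y_pred[i] == c for i in idx) / len(idx)
    aa = sum(ca.values()) / len(ca)
    # expected chance agreement from the row/column totals of the confusion matrix
    classes = set(y_true) | set(y_pred)
    pe = sum(y_true.count(c) * y_pred.count(c) for c in classes) / (n * n)
    kappa = (oa - pe) / (1.0 - pe)
    return oa, aa, kappa, ca
```

OA weights every test pixel equally, whereas AA averages the class-specific accuracies, so AA is more sensitive to errors on small classes.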

4.1. Hyperspectral Data Sets

To evaluate the effectiveness of the proposed methods, three public HSI data sets, i.e., Indian Pines, University of Pavia, and Salinas, are used. All of them are available online (http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes, accessed on 10 January 2020).

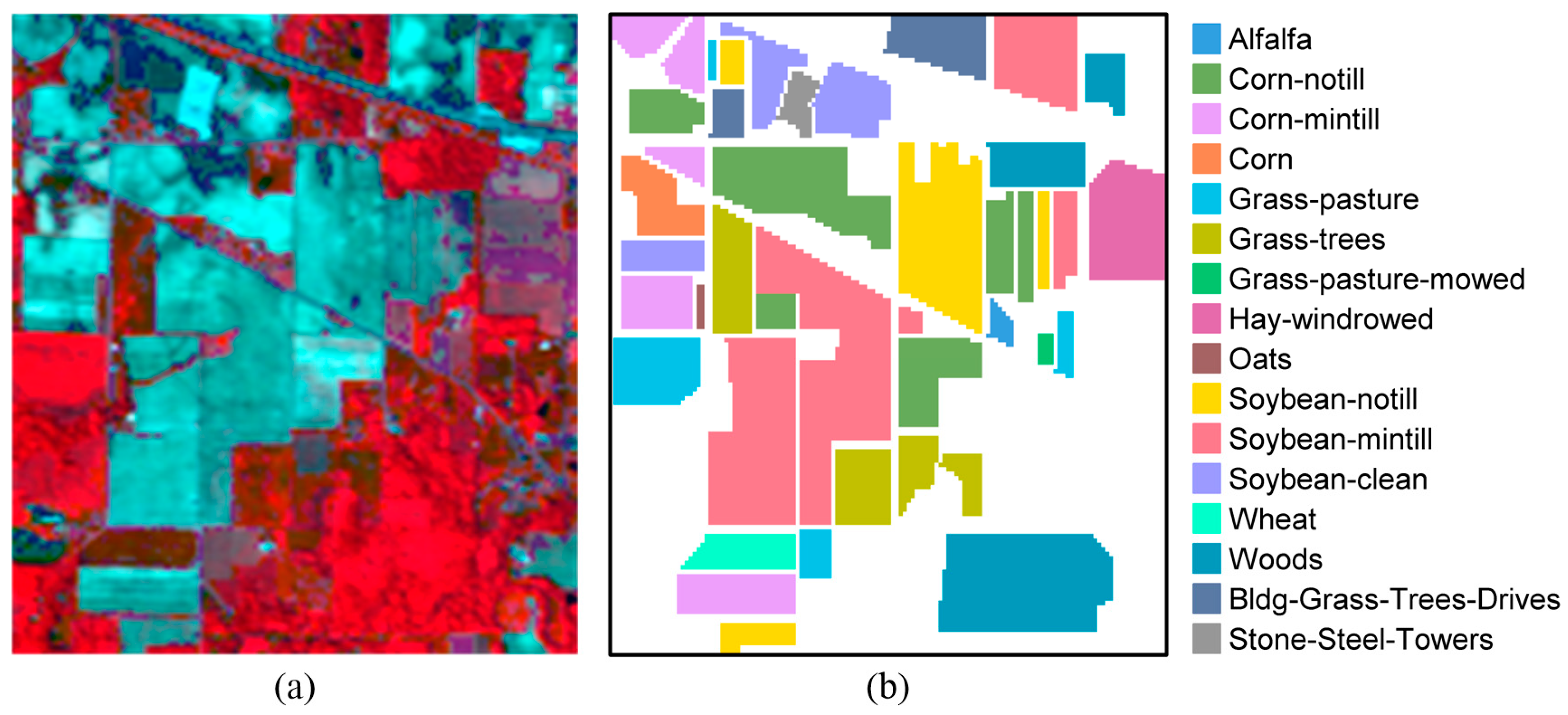

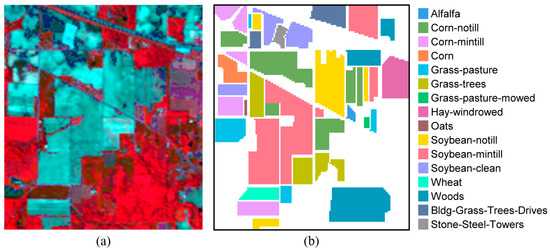

4.1.1. Indian Pines Data Set

This data set was gathered by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor over agricultural regions of the Indian Pines test site in northwestern Indiana. The spatial size of the data set is 145 × 145 pixels. Considering the effect of water absorption, 20 water absorption bands are removed, leaving 200 spectral bands for HSI classification. The scene contains 16 land-cover classes. The ground-truth classes and the number of labeled samples are detailed in Table 1. The false-color composite image and the corresponding ground-truth map are shown in Figure 2. Because our work mainly concerns the small-sample situation, ten samples from each class are randomly selected to constitute the dictionary, and the remaining samples are used for testing.

Table 1.

Sixteen ground-truth classes of the Indian Pines data set.

Figure 2.

(a) False-color image and (b) ground truth map of the Indian Pines data set.

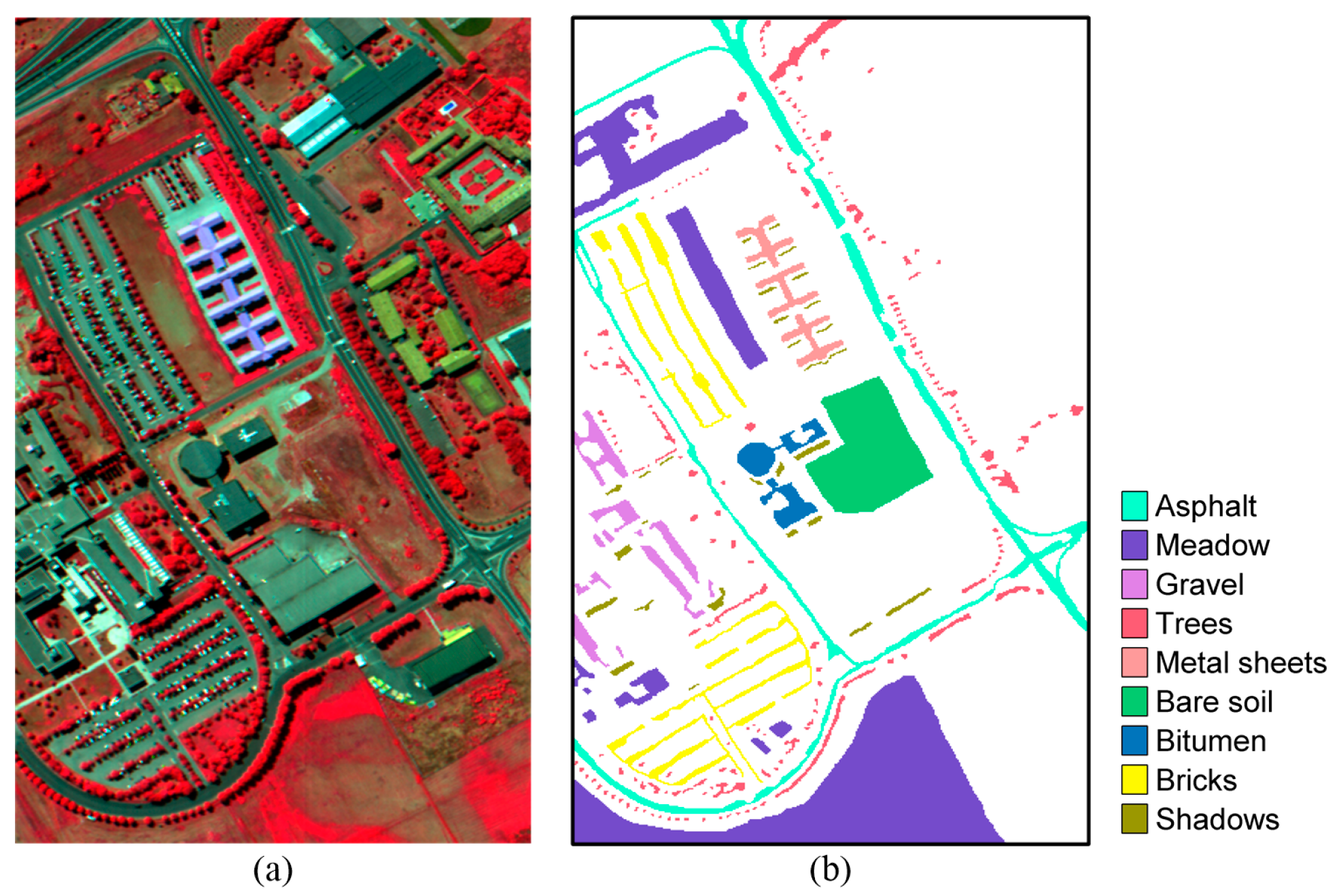

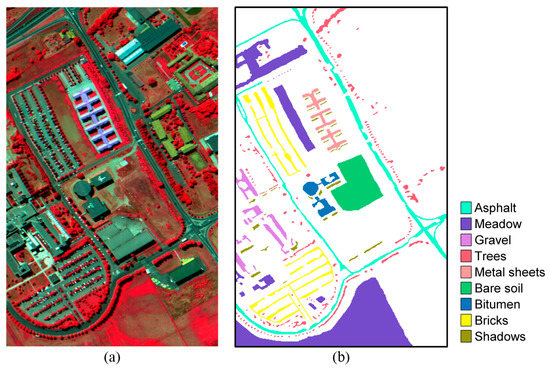

4.1.2. University of Pavia Data Set

This data set was acquired by the Reflective Optics System Imaging Spectrometer (ROSIS) sensor over the urban area of the University of Pavia, northern Italy. The original data set contains 610 × 340 pixels and 115 spectral bands; 103 bands are retained for analysis after 12 noisy bands are removed. The nine ground-truth classes and the number of labeled samples in each class are listed in Table 2. Figure 3 displays the false-color image and the ground-truth map. Ten labeled samples per class are randomly selected for training, and the rest are used for testing.

Table 2.

Nine ground-truth classes of the Pavia University data set.

Figure 3.

(a) False-color image and (b) ground truth map of the Pavia University data set.

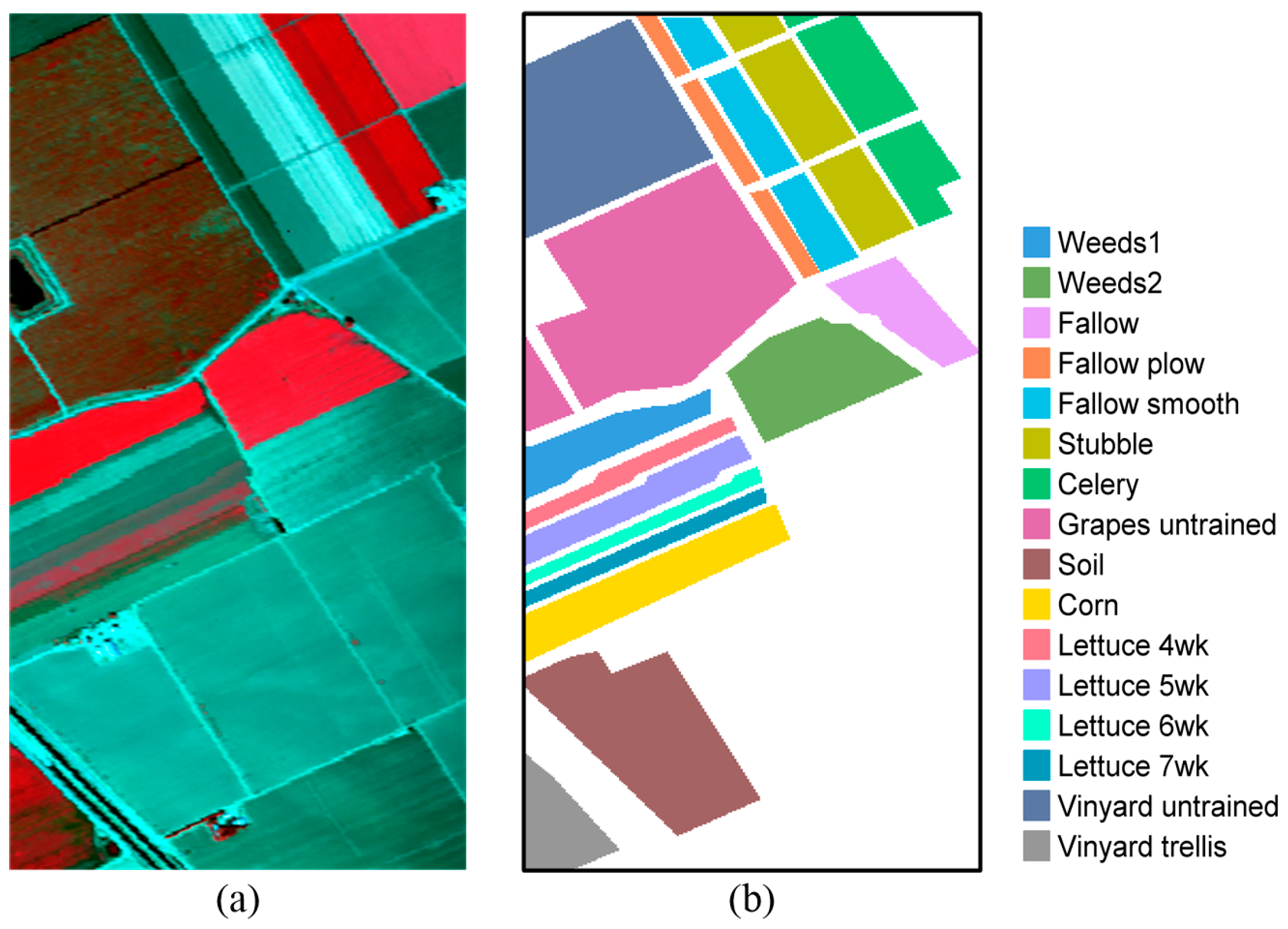

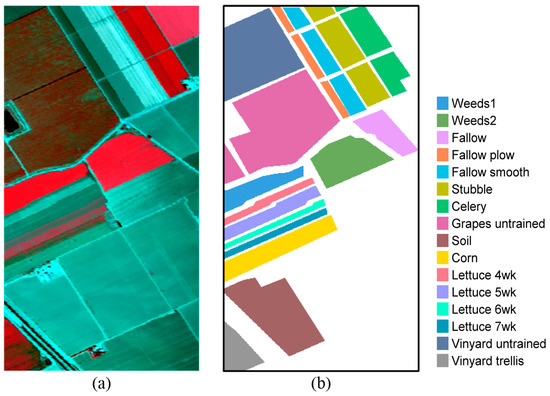

4.1.3. Salinas Data Set

This data set was also collected by the AVIRIS sensor, over Salinas Valley, CA, USA. It contains 512 × 217 pixels and 224 spectral bands. As with the Indian Pines data set, 20 water absorption bands are removed, and the remaining 204 bands are used for HSI classification. The labeled samples of the 16 ground-truth classes are described in detail in Table 3. The false-color image and the corresponding ground-truth map are shown in Figure 4. Likewise, ten labeled samples per class are chosen at random as the training dictionary, and the remaining samples form the testing set.

Table 3.

Sixteen ground-truth classes of the Salinas data set.

Figure 4.

(a) False-color image and (b) ground truth map of the Salinas data set.

4.2. Experimental Setting

4.2.1. Feature Extraction

The proposed multi-feature representation methods use four features extracted from the hyperspectral data: the spectral feature, the Gabor texture feature, the LBP feature, and the DMP shape feature. As described in Section 2.1, the Gabor, LBP, and DMP features are extracted from the first three principal components of the HSI obtained by principal component analysis (PCA). The parameter values used for the feature descriptors are listed in Table 4.
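The PCA step that precedes the spatial descriptors can be sketched in a few lines of Python. The sketch assumes the data are stored as an (H, W, B) array and obtains the components from an SVD of the mean-centered pixel matrix; the function name is hypothetical, and the Gabor, LBP, and DMP descriptors themselves (with the parameters in Table 4) are not reproduced here.

```python
import numpy as np

def first_principal_components(cube, k=3):
    """Project an (H, W, B) hyperspectral cube onto its first k principal components.

    Returns an (H, W, k) array of component images, which would then be passed
    to spatial descriptors such as Gabor, LBP or DMP.
    """
    h, w, b = cube.shape
    X = cube.reshape(-1, b).astype(np.float64)  # one row per pixel (a copy)
    X -= X.mean(axis=0)                         # center each band
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(X, full_matrices=False)
    scores = X @ vt[:k].T                       # pixel scores on the first k PCs
    return scores.reshape(h, w, k)
```

Using the SVD of the centered data avoids forming the B × B covariance matrix explicitly; for a few hundred bands either route is inexpensive.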

Table 4.

Parameter setting for multiple feature descriptors.

4.2.2. Parameter Tuning

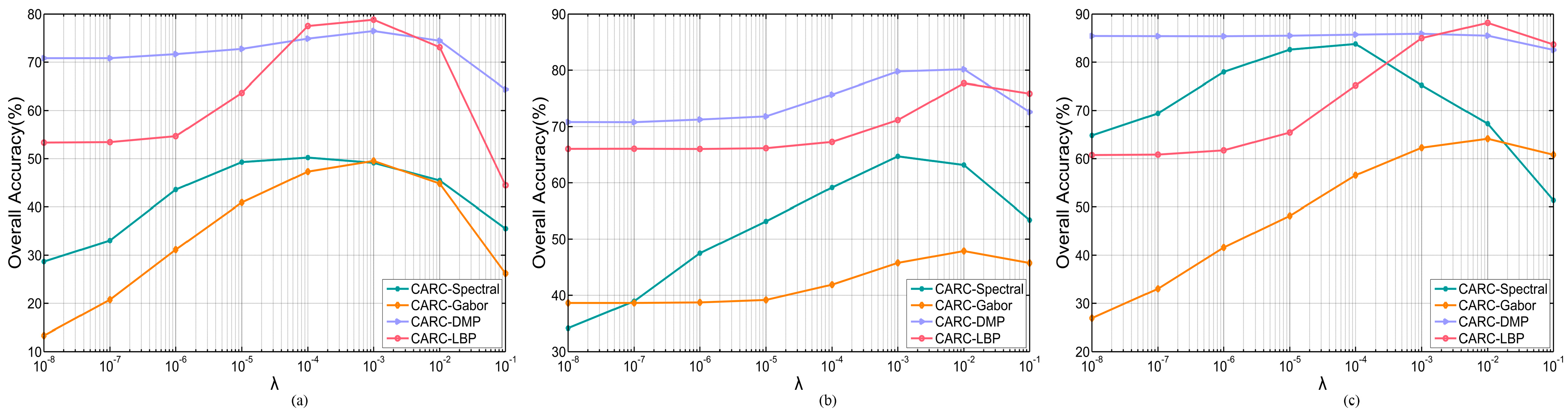

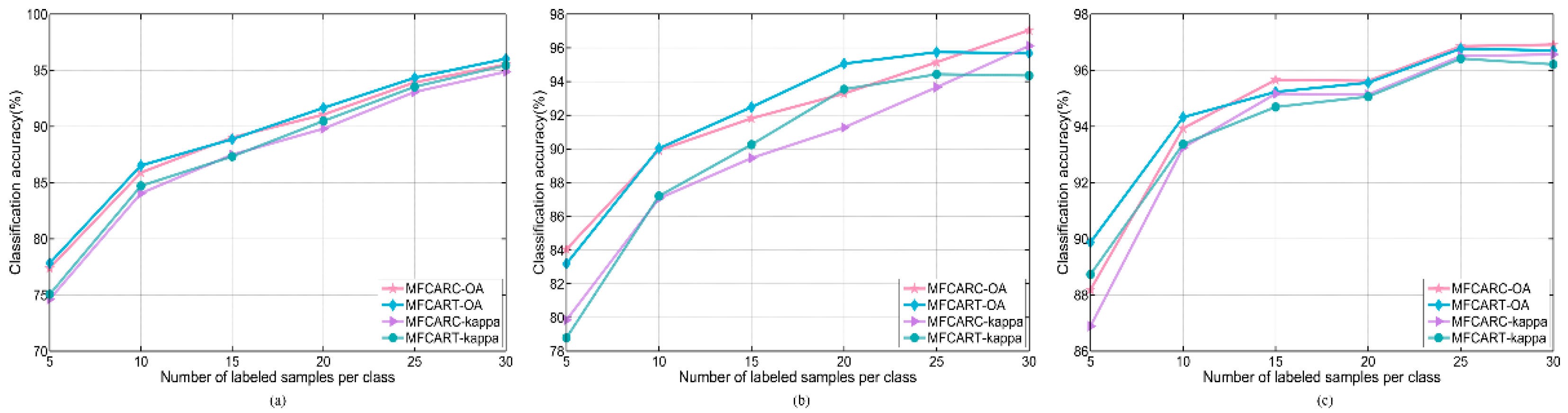

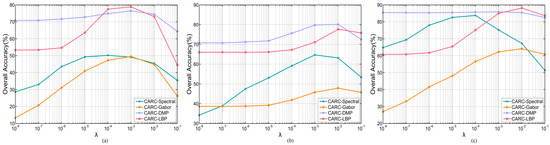

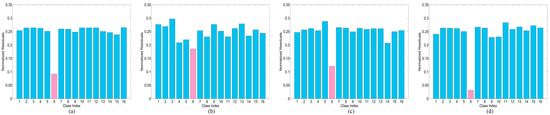

The impact of the two regularization parameters λ and β on the classification performance of the proposed algorithms needs to be investigated on the three hyperspectral data sets. The range of the parameter λ in the proposed MFCARC and MFCART algorithms is set as “”. For the parameter β in MFCART, the candidate set is “1, 5, 10”. Because the value of λ used in the single-feature-based CARC methods (i.e., CARC-Spectral, CARC-Gabor, CARC-DMP, and CARC-LBP) strongly influences the performance of the proposed multi-feature-based representation methods, we examine the effect of λ on CARC with each of the four features separately. Figure 5 shows how the overall accuracy of the single-feature-based CARC methods varies with λ on the three data sets. The overall accuracy generally increases markedly as λ grows and then decreases after reaching its maximum.

Figure 5.

Classification accuracy versus varying λ for the proposed MFCARC and MFCART methods. (a) Indian Pines. (b) Pavia University. (c) Salinas.

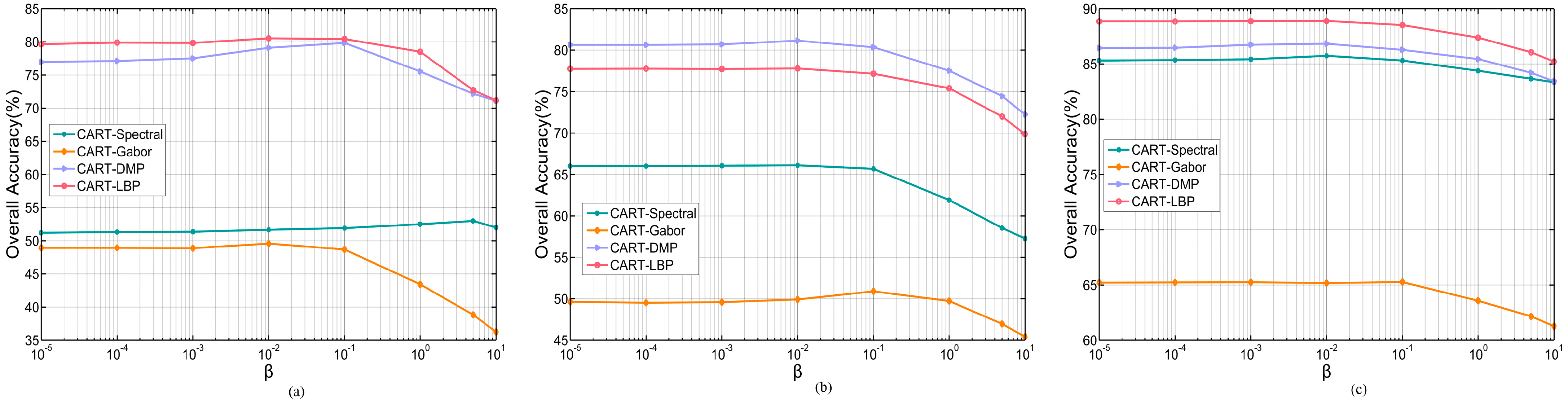

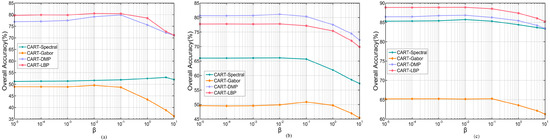

The influence of the regularization parameter β on the classification performance of MFCART is also evaluated on all three data sets. As illustrated in Figure 6, the single-feature-based CART methods generally achieve better performance when β ranges from to, except for CART-Spectral, which achieves its best performance at β = 5 on the Indian Pines data set. Table 5 summarizes the optimal values of λ and β for MFCARC and MFCART on the three data sets.
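To illustrate what β controls, the sketch below solves a representation problem with only a distance-weighted Tikhonov penalty, using the diagonal matrix Γ_y = diag(‖y − x_1‖₂, …, ‖y − x_n‖₂) of CRT [19]. We assume that the Tikhonov term in MFCART has this form and is weighted by β; the correlation-adaptive term is deliberately omitted, so this is a simplified illustration rather than MFCART itself, and the function name is hypothetical.

```python
import numpy as np

def tikhonov_representation(X, y, beta):
    """Closed-form solution of min_w ||y - X w||^2 + beta * ||Gamma_y w||^2.

    X: (d, n) dictionary with one training sample per column; y: (d,) test sample.
    Gamma_y = diag(||y - x_i||_2), so atoms far from y are penalized more (CRT-style).
    Requires X^T X + beta * Gamma_y^2 to be invertible (e.g. beta > 0).
    """
    gamma = np.linalg.norm(X - y[:, None], axis=0)  # distance from y to each atom
    A = X.T @ X + beta * np.diag(gamma**2)
    return np.linalg.solve(A, X.T @ y)
```

Because the penalty on each coefficient grows with the distance between the test sample and that atom, increasing β shifts the representation toward training samples close to y; for very large β, all coefficients shrink toward zero.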

Figure 6.

Classification accuracy versus varying β for the proposed MFCART method. (a) Indian Pines. (b) Pavia University. (c) Salinas.

Table 5.

Optimal parameters for the proposed methods.

4.3. Classification Results

First, the single-feature variants of the proposed CARC, i.e., CARC-Spectral, CARC-Gabor, CARC-DMP, and CARC-LBP, are compared with the proposed MFCARC and MFCART in Table 6. All proposed methods are run with the optimal parameters listed in Table 5. For the Salinas data set, ten labeled samples per class are chosen at random for training, and the rest are used for testing. MFCARC and MFCART outperform the single-feature-based CARC methods, which demonstrates the effectiveness of combining multiple complementary features in the multi-task representation model. Among the single features, DMP and LBP perform best, indicating stronger class separability.

Table 6.

Classification accuracy (%) of the proposed CARC and CART with different single features and multiple features for the Salinas data set.

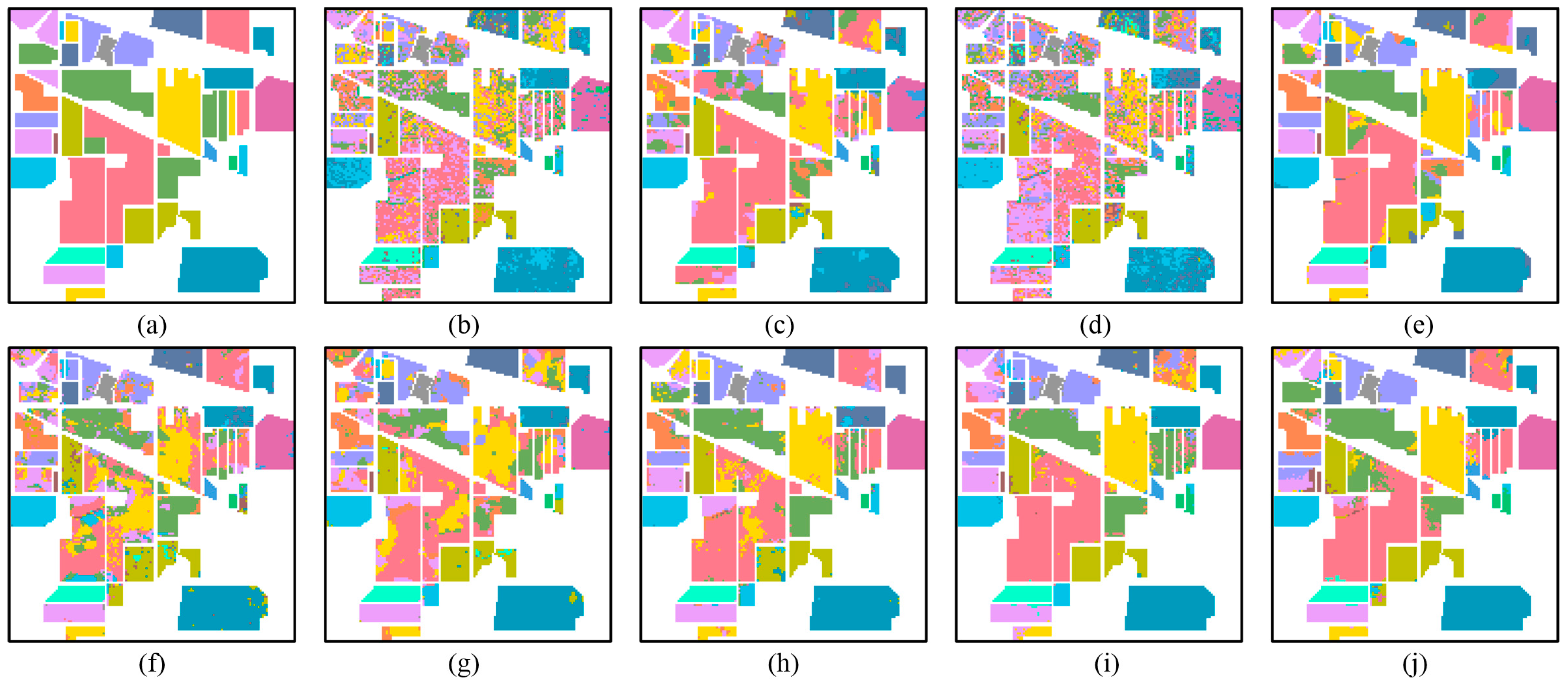

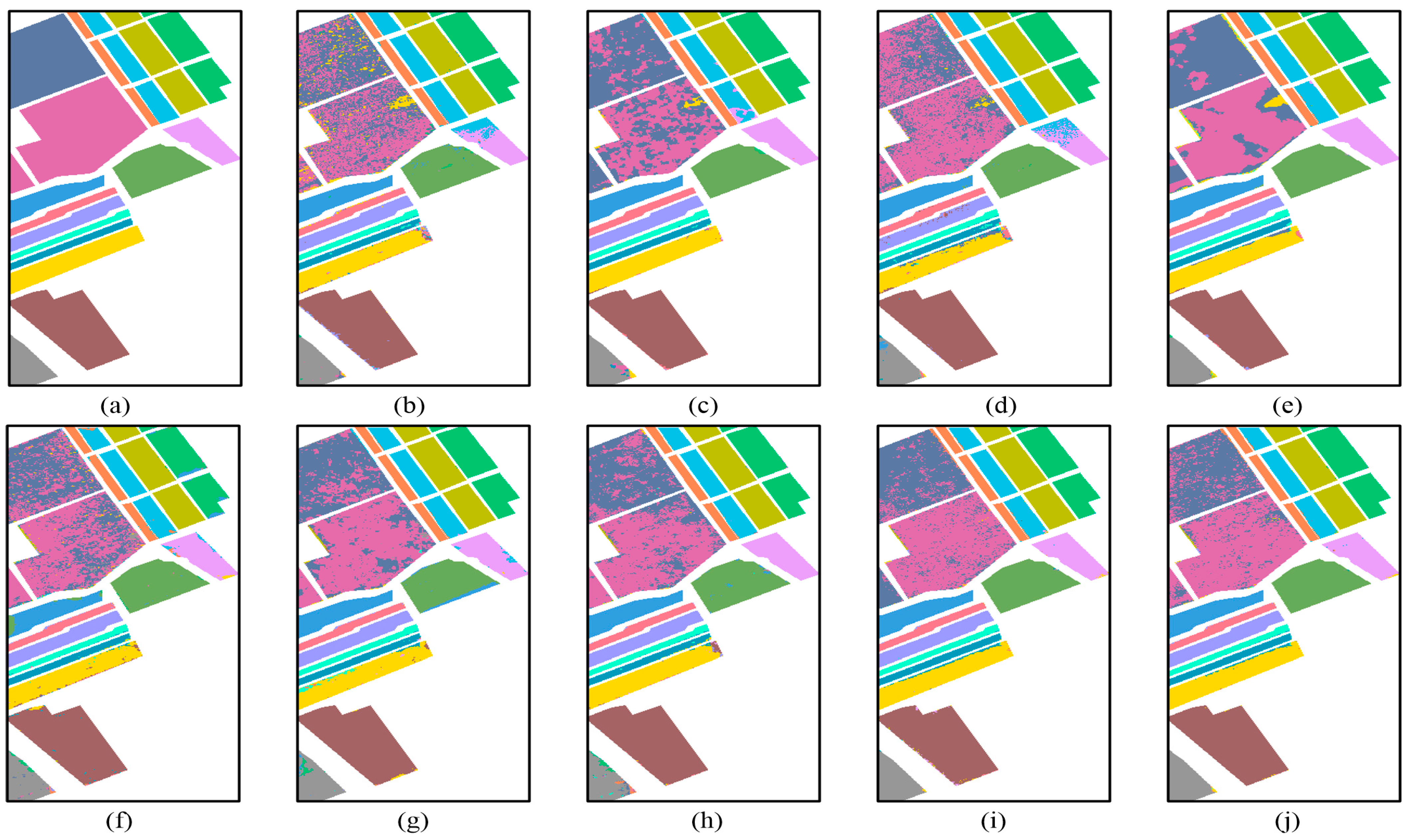

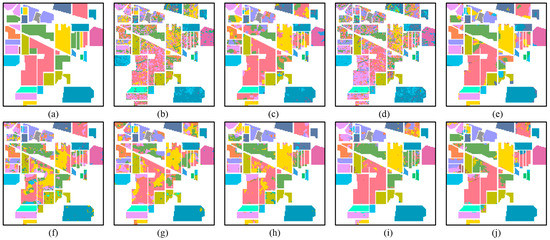

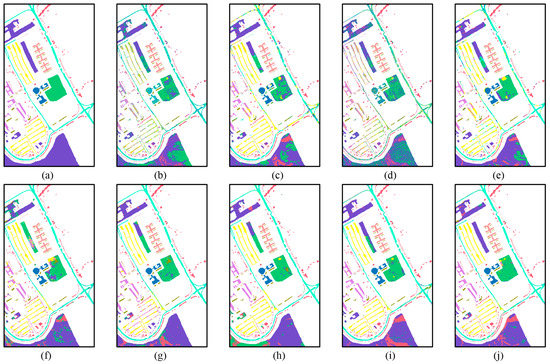

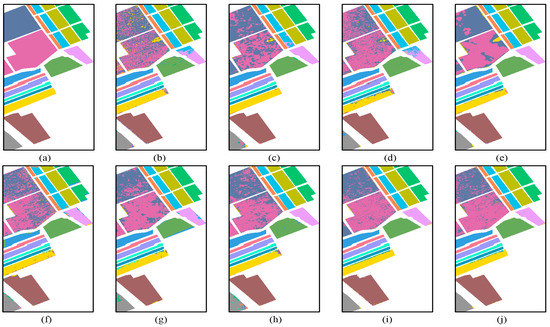

Subsequently, we compare the classification performance of the proposed MFCARC and MFCART methods with that of KSRC, MASR, CRT, KFRC-CKIR, MF-SRC, MF-JSRC, and MFASR. To reduce the effect of random sampling, every experiment is repeated ten times, and the mean and standard deviation of OA, AA, CA, and the kappa coefficient are reported. Table 7 lists the results of the proposed methods and their competitors on the Indian Pines data set. As Table 7 shows, the proposed methods improve OA by approximately 20% over the state-of-the-art KSRC, CRT, and MF-SRC. They also outperform the multi-feature-based classifiers MF-SRC, MF-JSRC, and MFASR. The classification maps generated by the proposed and comparative methods are shown in Figure 7b–j; the proposed methods perform well both in large homogeneous regions and in regions containing small objects.

Table 7.

Classification accuracy (%) of different classifiers for the Indian Pines data set.

Figure 7.

Ground truth and classification maps for the Indian Pines data set. (a) Ground truth. (b) Kernel sparse representation classification (KSRC). (c) Multiscale adaptive sparse representation classification (MASR). (d) Collaborative representation classification with Tikhonov regularization (CRT). (e) Kernel fused representation classification via the composite kernel with ideal regularization (KFRC-CKIR). (f) Multiple feature sparse representation classification (MF-SRC). (g) Multiple feature joint sparse representation classification (MF-JSRC). (h) Multi-feature based adaptive sparse representation (MFASR). (i) Multi-feature correlation adaptive representation-based classifier (MFCARC). (j) MFCARC with Tikhonov regularization (MFCART).

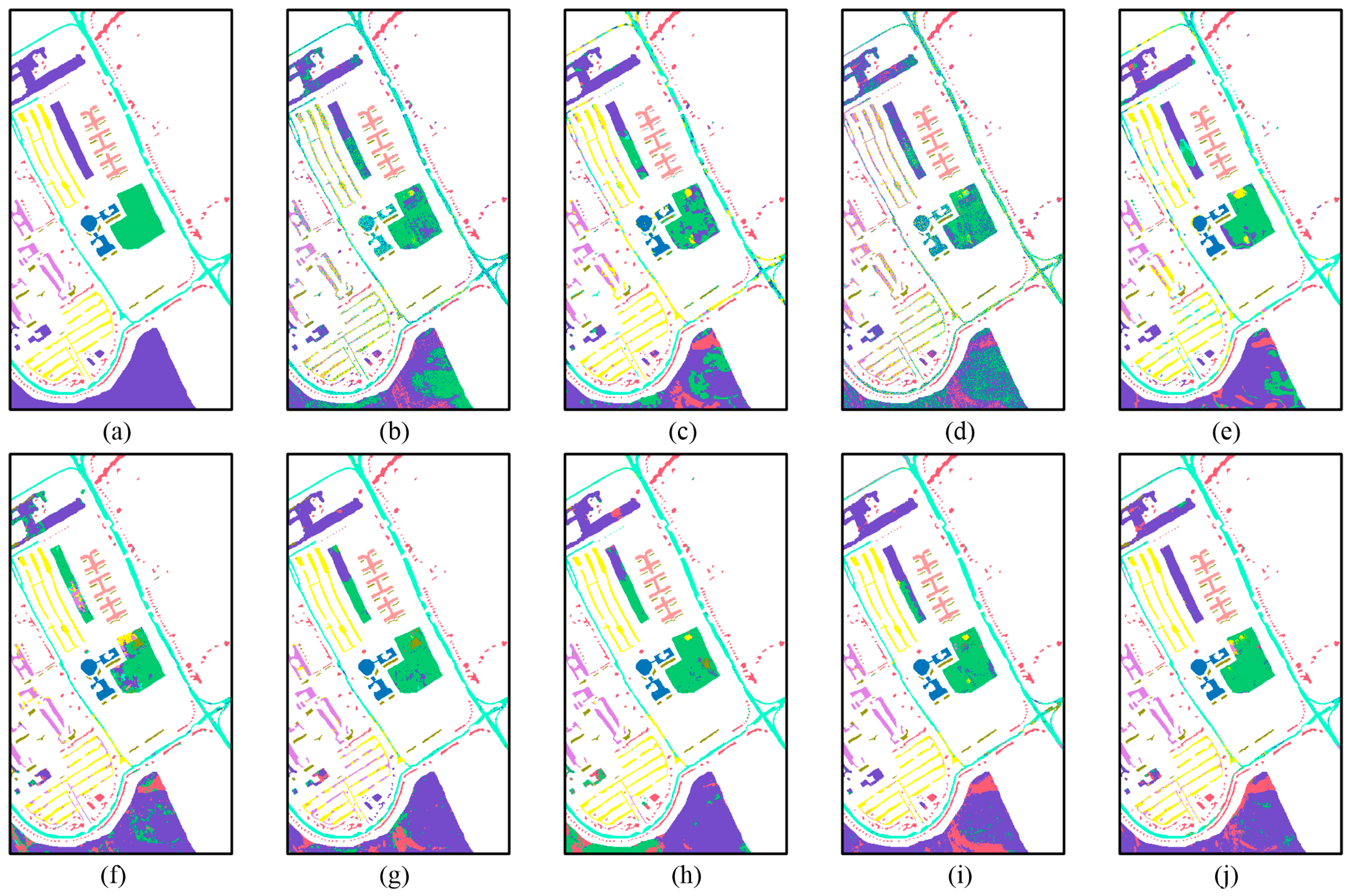

The detailed classification performance on the University of Pavia data set is given in Table 8, and the classification maps are shown in Figure 8b–j. The proposed methods achieve the best performance, with OA reaching about 90%. The improvement over the multi-feature-based methods is substantial: our methods improve OA by nearly 11%, 8%, and 6% compared with MF-SRC, MF-JSRC, and MFASR, respectively. As illustrated in Figure 8, our methods also produce more accurate classification maps than the other classifiers.

Table 8.

Classification accuracy (%) of different classifiers for the University of Pavia data set.

Figure 8.

Ground truth and classification maps for the University of Pavia data set. (a) Ground truth. (b) KSRC. (c) MASR. (d) CRT. (e) KFRC-CKIR. (f) MF-SRC. (g) MF-JSRC. (h) MFASR. (i) MFCARC. (j) MFCART.

The classification performance on the Salinas data set is listed in Table 9, and the classification maps generated by the different classifiers are shown in Figure 9b–j. As Table 9 shows, the proposed MFCARC and MFCART methods outperform the other algorithms. Two classes of the Salinas data are particularly difficult to distinguish, namely class 8 (Grapes untrained) and class 15 (Vinyard untrained), because they are spatially adjacent and have very similar spectral reflectance curves. The classification maps show that the proposed methods still produce satisfactory results. For class 15, MFCART improves the accuracy by nearly 6% over MFCARC, which validates the effectiveness of MFCART.

Table 9.

Classification accuracy (%) of different classifiers for the Salinas data set.

Figure 9.

Ground truth and classification maps for the Salinas data set. (a) Ground truth. (b) KSRC. (c) MASR. (d) CRT. (e) KFRC-CKIR. (f) MF-SRC. (g) MF-JSRC. (h) MFASR. (i) MFCARC. (j) MFCART.

To further validate the effectiveness of the proposed methods, we compare them with several recent deep-learning-based classifiers. The results on the Indian Pines and University of Pavia data sets are reported in Table 10 and Table 11, respectively. As these tables show, the proposed methods outperform the deep-learning-based models on both data sets. Specifically, compared with the state-of-the-art GFELM-AE, SSCL2DNN, and SSCL3DNN algorithms, MFCART obtains OA gains of 25.93%, 26.76%, and 16.07% on the Indian Pines data set. On the University of Pavia data set, MFCARC reaches an accuracy of 90.59%, an improvement of 46.29%, 32.09%, and 9.48% over the 2-D CNN, 3-D CNN, and SSCL3DNN methods, respectively. These results demonstrate the effectiveness and superiority of the proposed MFCARC and MFCART methods.

Table 10.

Classification performance of different methods over the Indian Pines data set.

Table 11.

Classification performance of different methods over the University of Pavia data set.

5. Discussion

5.1. Coefficients Distribution

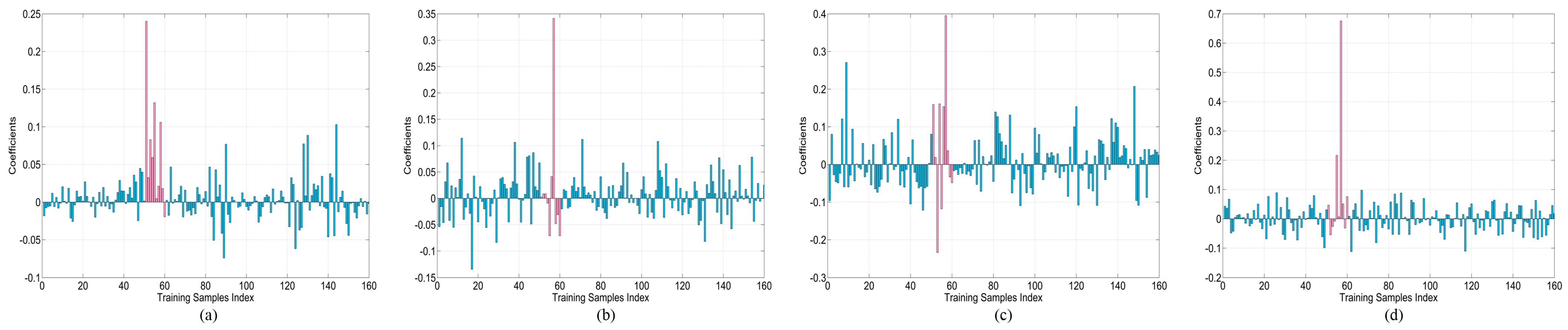

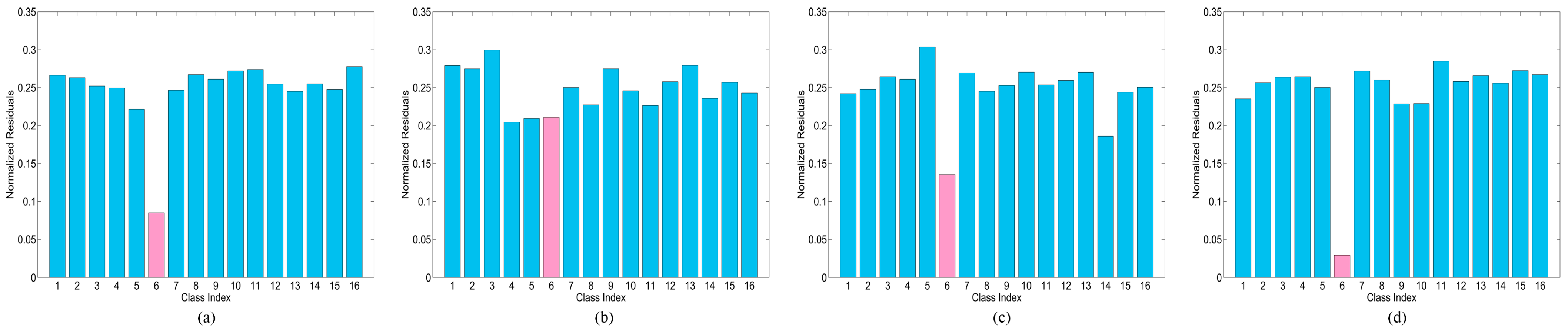

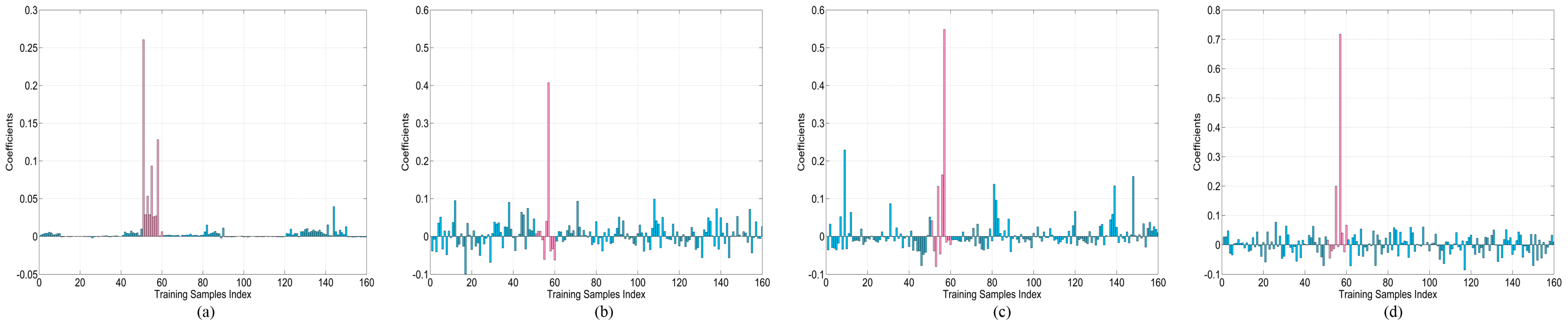

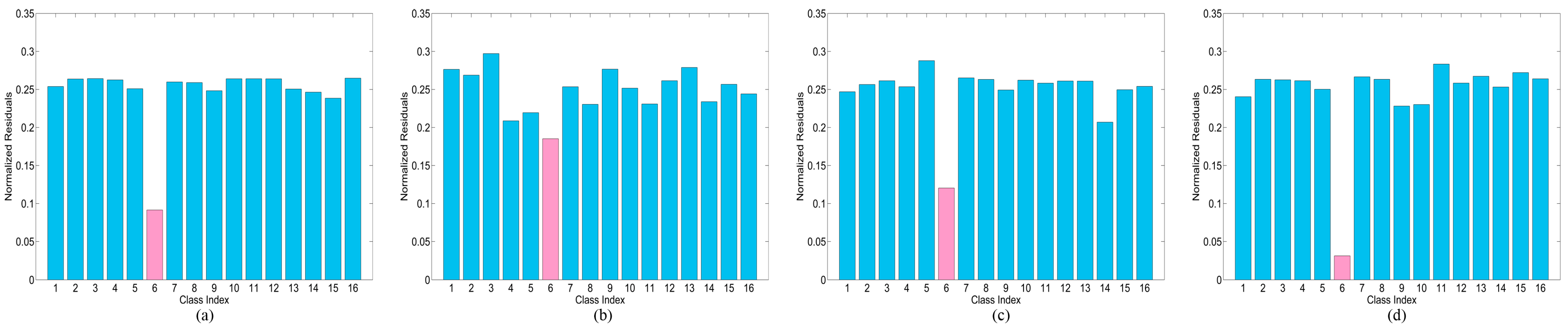

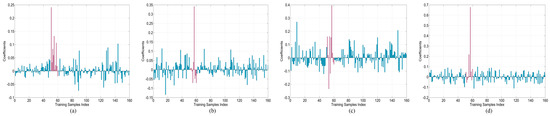

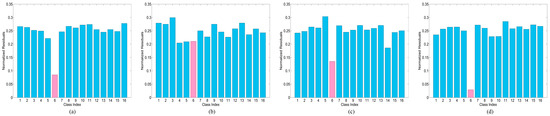

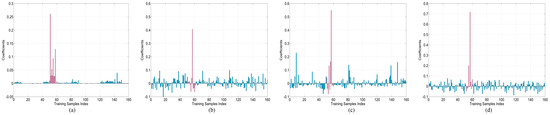

To validate the effectiveness of the proposed MFCARC and MFCART algorithms, the distributions of the representation coefficients over the training samples and the corresponding reconstruction residuals of MFCARC and MFCART are displayed in Figure 10, Figure 11, Figure 12, and Figure 13. Specifically, 10 training samples per class are randomly chosen to construct the training dictionary, and a sample belonging to class 6 (i.e., grass/trees) of the Indian Pines data set is selected as the test sample. As illustrated in Figure 10, for all four features the larger coefficients are concentrated in the class to which the test sample belongs. However, the coefficients obtained with the Gabor feature lead to a wrong prediction, assigning the test sample to class 4, as shown in Figure 11b. Figure 12 illustrates the coefficient distributions obtained by MFCART with the four features. The largest coefficients lie in the class of the test sample, and they are much larger than those of MFCARC. Moreover, the coefficients in the remaining classes are much smaller or even zero; this property is most prominent for the Spectral feature. Such a coefficient distribution is therefore close to that of the ideal representation model mentioned earlier. Figure 13 shows that the coefficients obtained by MFCART with each of the four features assign the test sample to the correct class. Comparing Figure 11 and Figure 13, the test sample that MFCARC misclassified with the Gabor feature is correctly classified by MFCART with the Gabor feature. This demonstrates the effectiveness and superiority of MFCART, which takes the correlation between the test sample and the training samples into consideration.
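The residual-based decision behind Figures 11 and 13 can be sketched as follows. This Python sketch assumes the usual multi-task rule of SRC-type classifiers, in which each class keeps only its own coefficients and the squared reconstruction residuals are summed over the features before taking the minimum; the exact fusion used by MFCARC and MFCART is defined in the method section, and the function name and argument layout are hypothetical.

```python
import numpy as np

def classify_by_residual(dicts, tests, coefs, atom_labels):
    """Assign the class whose atoms reconstruct the test sample best over all features.

    dicts: list of (d_k, n) dictionaries, one per feature (e.g. spectral, Gabor, DMP, LBP)
    tests: list of (d_k,) test vectors; coefs: list of (n,) coefficient vectors
    atom_labels: (n,) class label of every dictionary atom, shared by all features
    Returns the predicted label and the per-class summed squared residuals.
    """
    classes = np.unique(atom_labels)
    residuals = np.zeros(len(classes))
    for X, y, w in zip(dicts, tests, coefs):
        for j, c in enumerate(classes):
            w_c = np.where(atom_labels == c, w, 0.0)  # keep only class-c coefficients
            residuals[j] += np.linalg.norm(y - X @ w_c) ** 2
    return classes[np.argmin(residuals)], residuals
```

With a single feature this reduces to the familiar SRC/CRC rule; summing over features lets a feature with a clear class-wise residual gap outweigh one, such as Gabor in this example, whose residuals point to the wrong class.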

Figure 10.

Representation coefficients of a test sample from class 6 in the Indian Pines data set using 10 training samples per class for the MFCARC algorithm. (a) Spectral. (b) Gabor. (c) Differential morphological profile (DMP). (d) Local binary pattern (LBP).

Figure 11.

Reconstruction residuals of a test sample from class 6 in the Indian Pines data set using 10 training samples per class for the MFCARC algorithm. (a) Spectral. (b) Gabor. (c) DMP. (d) LBP.

Figure 12.

Representation coefficients of a test sample from class 6 in the Indian Pines data set using 10 training samples per class for the MFCART algorithm. (a) Spectral. (b) Gabor. (c) DMP. (d) LBP.

Figure 13.

Reconstruction residuals of a test sample from class 6 in the Indian Pines data set using 10 training samples per class for the MFCART algorithm. (a) Spectral. (b) Gabor. (c) DMP. (d) LBP.

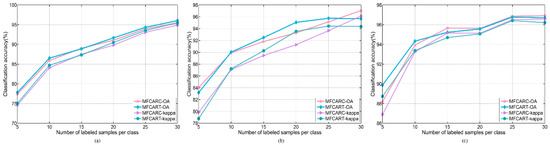

5.2. Influence of Dictionary Size

Figure 14a–c shows the influence of the training dictionary size on the OA and the kappa coefficient for the Indian Pines, University of Pavia, and Salinas data sets. The number of training samples per class is varied over a set of candidate values. Figure 14 shows that the dictionary size strongly affects the classification performance of the proposed MFCARC and MFCART methods. Specifically, when the training dictionary contains few samples, the performance on the Indian Pines data set is relatively poor, mainly because so few atoms are insufficient to capture the correlation structure of the data accurately. As the dictionary grows, the OA and the kappa coefficient of both proposed methods increase on all three data sets.

Figure 14.

Classification accuracy versus the number of training samples per class. (a) Indian Pines. (b) Pavia University. (c) Salinas.

5.3. Computing Time

To evaluate the computational cost of the classification algorithms, all experiments are implemented in MATLAB on a workstation with a 2.60 GHz CPU and 16 GB of RAM. The execution times are listed in Table 12. As Table 12 shows, CRT and MF-SRC are the two fastest classifiers, each running in less than ten seconds. In comparison, the proposed algorithms are much slower because of the iterative process involved in their optimization. Specifically, for MFCARC and MFCART, the dominant computational cost comes from learning the representation coefficients by solving the correlation-regularized problem in the multiple-feature-based subspace, which is done with the Iteratively Reweighted Least Squares (IRLS) method. Although MFCART takes more time than MFCARC owing to the distance-weighted Tikhonov regularization, it achieves higher classification accuracy. In return for the extra cost, both proposed algorithms outperform the other state-of-the-art classifiers in the small-sample-size situation.
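As a rough illustration of why the IRLS solver dominates the run time, the Python sketch below assumes that the correlation-adaptive term is the trace lasso [46], λ‖X Diag(w)‖_*, optionally plus a CRT-style distance-weighted Tikhonov term weighted by β, and that IRLS uses the variational form of the nuclear norm from Appendix A. Each iteration requires an eigendecomposition of a d × d matrix and an n × n linear solve, repeated for every test pixel (and, in a multi-feature setting, for each feature), whereas CRT needs a single closed-form solve. The function name `car_irls`, the smoothing constant `eps`, the ridge initialization, and the fixed iteration count are our assumptions, not the authors' MATLAB implementation.

```python
import numpy as np

def car_irls(X, y, lam, beta=0.0, n_iter=100, eps=1e-6):
    """Hypothetical IRLS sketch for
        min_w ||y - X w||^2 + lam * ||X diag(w)||_* + beta * ||Gamma_y w||^2,
    assuming the CAR term is the trace lasso and Gamma_y = diag(||y - x_i||_2).

    X: (d, n) dictionary whose columns are training samples; y: (d,) test sample.
    beta = 0 gives the CARC-style model; eps smooths the nuclear norm.
    """
    d, n = X.shape
    XtX, Xty = X.T @ X, X.T @ y
    gamma2 = np.linalg.norm(X - y[:, None], axis=0) ** 2   # squared distances to y
    w = np.linalg.solve(XtX + lam * np.eye(n), Xty)         # ridge (CRC-like) start
    for _ in range(n_iter):
        # Variational form: ||M||_* = 0.5 * min_S [tr(M^T S^-1 M) + tr(S)],
        # attained at S = (M M^T)^(1/2); here M = X diag(w), smoothed by eps * I.
        M = X * w                                            # scales column i by w_i
        evals, evecs = np.linalg.eigh(M @ M.T + eps * np.eye(d))
        S_inv = (evecs / np.sqrt(evals)) @ evecs.T
        # With S fixed, the penalty is 0.5 * lam * sum_i w_i^2 * x_i^T S^-1 x_i.
        dw = np.einsum("ij,ij->j", X, S_inv @ X)
        # Weighted ridge step: (X^T X + lam/2 diag(dw) + beta diag(gamma^2)) w = X^T y.
        w = np.linalg.solve(XtX + 0.5 * lam * np.diag(dw) + beta * np.diag(gamma2), Xty)
    return w
```

Two limiting cases show the adaptive behavior. With an orthonormal dictionary the trace-lasso term reduces to the ℓ1 norm, so `car_irls(np.eye(2), np.array([3.0, 0.1]), lam=2.0)` returns approximately [2, 0], the soft-thresholded (SRC-like) solution. With two identical atoms it reduces to an ℓ2 penalty and splits the weight equally between them, the grouping behavior associated with CRC.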

Table 12.

Execution time (in seconds) for the classification of the Indian Pines data set.

6. Conclusions

In this paper, a new representation-based classifier, CARC, is proposed to perform sample selection and group correlated samples simultaneously. Owing to its adaptive regularization, CARC balances between SRC and CRC according to the correlation structure of the dictionary, which overcomes the intrinsic limitations of traditional SR- and CR-based methods in HSI classification. In addition, by taking the correlation between the test sample and the training samples into consideration, CART is developed so that the representation model better reflects the geometry of the feature space. Moreover, to address the small-training-sample problem, multi-feature representation frameworks built on CARC and CART, namely MFCARC and MFCART, are constructed; they yield more accurate representation models for hyperspectral data when labeled samples are scarce. Experimental results show that MFCARC and MFCART outperform state-of-the-art algorithms.

However, the proposed methods also have certain limitations. Training samples are used directly as dictionary atoms, and the dictionary is constructed by random sampling. Since a compact and representative dictionary benefits sample representation, our future research will focus on designing discriminative dictionaries and integrating dictionary learning to further improve classification performance when training samples are scarce.

Author Contributions

Conceptualization, G.L. and L.G.; methodology, G.L.; software, G.L.; writing—original draft preparation, G.L.; writing—review and editing, G.L. and L.G.; supervision, L.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Project of Science and Technology Department of Henan Province in China (No. 212102210106).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

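We follow the commonly used variational formulation of the nuclear norm [51] to reformulate problem (15). According to the variational form of the trace norm of a matrix X, i.e., $\|X\|_* = \frac{1}{2}\min_{S \succ 0}\left[\operatorname{tr}(X^{\top}S^{-1}X) + \operatorname{tr}(S)\right]$, it yields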

where , and Based on the cyclic property of the trace of a product of three matrices, i.e., $\operatorname{tr}(ABC) = \operatorname{tr}(BCA) = \operatorname{tr}(CAB)$, it leads to (A2)

Since is a diagonal matrix with its i-th diagonal entry being and assume that the diagonal elements of the matrix are i.e., , (A2) is further written into (A3), i.e.,

Thus, the problem (15) is rewritten as

References

- Zhang, N.; Yang, G.; Pan, Y.; Yang, X.; Chen, L.; Zhao, C. A review of advanced technologies and development for hyperspectral-based plant disease detection in the past three decades. Remote Sens. 2020, 12, 3188.

- Lv, M.; Fowler, J.E.; Jing, L. Spatial functional data analysis for the spatial-spectral classification of hyperspectral imagery. IEEE Geosci. Remote Sens. Lett. 2018, 16, 1–5.

- Liu, N.; Li, W.; Tao, R.; Fowler, J.E. Wavelet-domain low-rank/group-sparse destriping for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 10310–10321.

- Huang, H.; Chen, M.; Duan, Y. Dimensionality reduction of hyperspectral image using spatial-spectral regularized sparse hypergraph embedding. Remote Sens. 2019, 11, 1039.

- Hennessy, A.; Clarke, K.; Lewis, M. Hyperspectral classification of plants: A review of waveband selection generalisability. Remote Sens. 2020, 12, 113.

- Ghamisi, P.; Maggiori, E.; Li, S.; Souza, R.; Tarabalka, Y.; Moser, G.; De Giorgi, A.; Fang, L.; Chen, Y.; Chi, M.; et al. New frontiers in spectral-spatial hyperspectral image classification: The latest advances based on mathematical morphology, Markov random fields, segmentation, sparse representation, and deep learning. IEEE Geosci. Remote Sens. Mag. 2018, 6, 10–43.

- Audebert, N.; Le Saux, B.; Lefèvre, S. Deep learning for classification of hyperspectral data: A comparative review. IEEE Geosci. Remote Sens. Mag. 2019, 7, 159–173.

- Li, S.; Song, W.; Fang, L.; Chen, Y.; Ghamisi, P.; Benediktsson, J.A. Deep learning for hyperspectral image classification: An overview. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6690–6709.

- Wright, J.; Yang, A.Y.; Ganesh, A.; Sastry, S.S.; Ma, Y. Robust face recognition via sparse representation. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 210–227.

- Chen, Y.; Nasrabadi, N.M.; Tran, T.D. Hyperspectral image classification using dictionary-based sparse representation. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3973–3985.

- Zhang, H.; Li, J.; Huang, Y.; Zhang, L. A nonlocal weighted joint sparse representation classification method for hyperspectral imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2056–2065.

- Hu, S.; Peng, J.; Fu, Y.; Li, L. Kernel joint sparse representation based on self-paced learning for hyperspectral image classification. Remote Sens. 2019, 11, 1114.

- Li, C.; Ma, Y.; Mei, X.; Liu, C.; Ma, J. Hyperspectral image classification with robust sparse representation. IEEE Geosci. Remote Sens. Lett. 2016, 13, 641–645.

- Zhang, X.; Song, Q.; Gao, Z.; Zheng, Y.; Weng, P.; Jiao, L.C. Spectral-spatial feature learning using cluster-based group sparse coding for hyperspectral image classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 4142–4159.

- Yu, H.; Gao, L.; Liao, W.; Zhang, B. Group sparse representation based on nonlocal spatial and local spectral similarity for hyperspectral imagery classification. Sensors 2018, 18, 1695.

- Zhang, L.; Yang, M.; Feng, X. Sparse representation or collaborative representation: Which helps face recognition? In Proceedings of the 2011 International Conference on Computer Vision, Institute of Electrical and Electronics Engineers, Barcelona, Spain, 3–6 November 2011; pp. 471–478.

- Fan, Z.; Ni, M.; Zhu, Q.; Liu, E. Weighted sparse representation for face recognition. Neurocomputing 2015, 151, 304–309.

- Li, W.; Tramel, E.W.; Prasad, S.; Fowler, J.E. Nearest regularized subspace for hyperspectral classification. IEEE Trans. Geosci. Remote Sens. 2014, 52, 477–489.

- Li, W.; Du, Q.; Xiong, M. Kernel collaborative representation with Tikhonov regularization for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 2014, 12, 1–5.

- Du, P.; Gan, L.; Xia, J.; Wang, D. Multikernel adaptive collaborative representation for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4664–4677.

- Li, W.; Zhang, Y.; Liu, N.; Du, Q.; Tao, R. Structure-aware collaborative representation for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7246–7261.

- Tu, B.; Zhou, C.; Liao, X.; Zhang, G.; Peng, Y. Spectral-spatial hyperspectral classification via structural-kernel collaborative representation. IEEE Geosci. Remote Sens. Lett. 2020, 1–5.

- Iordache, M.-D.; Bioucas-Dias, J.M.; Plaza, A. Sparse unmixing of hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2014–2039.

- Zou, H.; Hastie, T. Regularization and variable selection via the elastic net. J. R. Stat. Soc. Ser. B 2005, 67, 301–320.

- Li, W.; Du, Q.; Zhang, F.; Hu, W. Hyperspectral image classification by fusing collaborative and sparse representations. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 4178–4187.

- Gan, L.; Du, P.; Xia, J.; Meng, Y. Kernel fused representation-based classifier for hyperspectral imagery. IEEE Geosci. Remote Sens. Lett. 2017, 14, 684–688.

- Liu, G.; Qi, L.; Tie, Y.; Ma, L. Hyperspectral image classification using kernel fused representation via a spatial-spectral composite kernel with ideal regularization. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1422–1426.

- Bian, X.; Chen, C.; Xu, Y.; Du, Q. Robust hyperspectral image classification by multi-layer spatial-spectral sparse representations. Remote Sens. 2016, 8, 985.

- Soomro, B.N.; Xiao, L.; Huang, L.; Soomro, S.H.; Molaei, M. Bilayer elastic net regression model for supervised spectral-spatial hyperspectral image classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 4102–4116.

- Soomro, B.N.; Xiao, L.; Molaei, M.; Huang, L.; Lian, Z.; Soomro, S.H. Local and nonlocal context-aware elastic net representation-based classification for hyperspectral images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2922–2939.

- Gao, L.; Qi, L.; Chen, E.; Guan, L. Discriminative multiple canonical correlation analysis for information fusion. IEEE Trans. Image Process. 2018, 27, 1951–1965.

- Gao, L.; Zhang, R.; Qi, L.; Chen, E.; Guan, L. The labeled multiple canonical correlation analysis for information fusion. IEEE Trans. Multimed. 2019, 21, 375–387.

- Su, H.; Zhao, B.; Du, Q.; Du, P.; Xue, Z. Multifeature dictionary learning for collaborative representation classification of hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2467–2484.

- Zhang, E.; Zhang, X.; Liu, H.; Jiao, L. Fast multifeature joint sparse representation for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1397–1401.

- Jia, S.; Hu, J.; Xie, Y.; Shen, L.; Jia, X.; Li, Q. Gabor cube selection based multitask joint sparse representation for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3174–3187.

- Fang, L.; Wang, C.; Li, S.; Benediktsson, J.A. Hyperspectral image classification via multiple-feature-based adaptive sparse representation. IEEE Trans. Instrum. Meas. 2017, 66, 1646–1657.

- He, Z.; Li, J.; Liu, L.; Liu, K.; Zhuo, L. Fast three-dimensional empirical mode decomposition of hyperspectral images for class-oriented multitask learning. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6625–6643.

- He, Z.; Wang, Y.; Hu, J. Joint sparse and low-rank multitask learning with Laplacian-like regularization for hyperspectral classification. Remote Sens. 2018, 10, 322.

- Gan, L.; Xia, J.; Du, P.; Chanussot, J. Multiple feature kernel sparse representation classifier for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5343–5356.

- Li, W.; Du, Q. Gabor-filtering-based nearest regularized subspace for hyperspectral image classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 1012–1022.

- Jia, S.; Shen, L.; Li, Q. Gabor feature-based collaborative representation for hyperspectral imagery classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1118–1129.

- Lv, Z.Y.; Zhang, P.; Benediktsson, J.A.; Shi, W.Z. Morphological profiles based on differently shaped structuring elements for classification of images with very high spatial resolution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4644–4652.

- Li, W.; Chen, C.; Su, H.; Du, Q. Local binary patterns and extreme learning machine for hyperspectral imagery classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3681–3693.

- Becker, S.; Bobin, J.; Candès, E.J. NESTA: A fast and accurate first-order method for sparse recovery. SIAM J. Imaging Sci. 2011, 4, 1–39.

- Yang, J.; Zhang, Y. Alternating direction algorithms for ℓ1-problems in compressive sensing. SIAM J. Sci. Comput. 2011, 33, 250–278.

- Grave, E.; Obozinski, G.R.; Bach, F.R. Trace lasso: A trace norm regularization for correlated designs. Adv. Neural Inf. Process. Syst. 2011, arXiv:1109.1990v1.

- Lin, Z.; Chen, M.; Ma, Y. The Augmented Lagrange Multiplier Method for Exact Recovery of Corrupted Low-Rank Matrices; UIUC Tech. Rep. UILU-ENG-09-2215; Department of Electrical and Computer Engineering UIUC: Champaign, IL, USA, 2009.

- Lu, C.; Lin, Z.; Yan, S. Smoothed low rank and sparse matrix recovery by iteratively reweighted least squares minimization. IEEE Trans. Image Process. 2015, 24, 646–654.

- Daubechies, I.; Devore, R.; Fornasier, M.; Güntürk, C.S. Iteratively reweighted least squares minimization for sparse recovery. Commun. Pure Appl. Math. 2010, 63, 1–38.

- Mohan, K.; Fazel, M. Iterative reweighted algorithms for matrix rank minimization. J. Mach. Learn. Res. 2012, 13, 3441–3473.

- Argyriou, A.; Evgeniou, T.; Pontil, M. Multi-task feature learning. Adv. Neural Inf. Process. Syst. 2007.

- Bach, F.; Jenatton, R.; Mairal, J.; Obozinski, G. Optimization with sparsity-inducing penalties. Found. Trends Mach. Learn. 2011, 4, 1–106.

- Argyriou, A.; Evgeniou, T.; Pontil, M. Convex multi-task feature learning. Mach. Learn. 2008, 73, 243–272.

- Zhang, L.; Zhou, W.-D.; Chang, P.-C.; Liu, J.; Yan, Z.; Wang, T.; Li, F.-Z. Kernel sparse representation-based classifier. IEEE Trans. Signal Process. 2011, 60, 1684–1695.

- Fang, L.; Li, S.; Kang, X.; Benediktsson, J.A. Spectral-spatial hyperspectral image classification via multiscale adaptive sparse representation. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7738–7749.

- Chen, Y.; Jiang, H.; Li, C.; Jia, X.; Ghamisi, P. Deep feature extraction and classification of hyperspectral images based on convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6232–6251.

- Ahmad, M.; Shabbir, S.; Oliva, D.; Mazzara, M.; Distefano, S. Spatial-prior generalized fuzziness extreme learning machine autoencoder-based active learning for hyperspectral image classification. Optik 2020, 206, 163712.

- Hu, W.-S.; Li, H.-C.; Pan, L.; Li, W.; Tao, R.; Du, Q. Spatial-spectral feature extraction via deep ConvLSTM neural networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4237–4250.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).