AgroShadow: A New Sentinel-2 Cloud Shadow Detection Tool for Precision Agriculture

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area and Data Retrieval

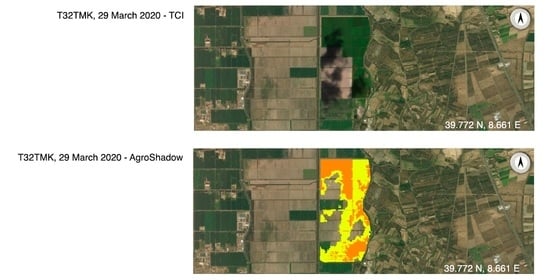

2.2. The AgroShadow Tool

- a threshold of B2/B11 < 1.5 to discriminate soil from water pixels;

- a k-means clustering of the soil moisture values;

- pixels with a classified value ≤ 0 are detected as cloud;

- pixels with a classified value ≥ 1 are flagged as possible shadow, snow or flooded condition;

- a threshold of TCI > 200 to distinguish snow pixels from shadow and flooded conditions;

- a detected area whose 5-pixel neighbouring buffer has soil moisture > 0.6 is classified as a flooded condition;

- a detected area whose 5-pixel neighbouring buffer has soil moisture ≤ 0.6 is classified as shadow.
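
The rule cascade listed above can be illustrated with a small NumPy sketch. This is a hypothetical, simplified illustration and not the authors' implementation. It assumes that a dimensionless soil moisture map `sm` is already available, that the k-means "classified value" of a pixel is the centre of its cluster (k = 3 here), that `tci` is a single 8-bit brightness band, and that the 5-pixel buffer is a square ring around each connected candidate region whose mean soil moisture is compared with 0.6. All function names, class codes and the synthetic scene are invented for the example.

```python
import numpy as np

# Hypothetical class codes used only in this sketch
CLEAR, WATER, CLOUD, SNOW, FLOODED, SHADOW = range(6)


def kmeans_1d(values, k=3, iters=50):
    """Lloyd's k-means on a 1-D array; returns each value's cluster centre.

    Assumption: the cluster centre is used as the pixel's 'classified value'.
    """
    centres = np.quantile(values, np.linspace(0.0, 1.0, k))  # deterministic initialisation
    for _ in range(iters):
        labels = np.argmin(np.abs(values[:, None] - centres[None, :]), axis=1)
        new = np.array([values[labels == j].mean() if np.any(labels == j) else centres[j]
                        for j in range(k)])
        if np.allclose(new, centres):
            break
        centres = new
    labels = np.argmin(np.abs(values[:, None] - centres[None, :]), axis=1)
    return centres[labels]


def dilate(mask, r):
    """Square dilation by r pixels, built from shifted copies of a zero-padded mask."""
    h, w = mask.shape
    padded = np.pad(mask, r, constant_values=False)
    out = np.zeros_like(mask)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out |= padded[dy:dy + h, dx:dx + w]
    return out


def label_regions(mask):
    """8-connected component labelling; returns (label image, number of regions)."""
    labels = np.zeros(mask.shape, dtype=int)
    h, w = mask.shape
    count = 0
    for y, x in zip(*np.nonzero(mask)):
        if labels[y, x]:
            continue
        count += 1
        labels[y, x] = count
        stack = [(y, x)]
        while stack:
            cy, cx = stack.pop()
            for ny in range(max(cy - 1, 0), min(cy + 2, h)):
                for nx in range(max(cx - 1, 0), min(cx + 2, w)):
                    if mask[ny, nx] and not labels[ny, nx]:
                        labels[ny, nx] = count
                        stack.append((ny, nx))
    return labels, count


def agroshadow_sketch(b2, b11, sm, tci, k=3, buffer_px=5, sm_flood=0.6):
    out = np.full(sm.shape, CLEAR, dtype=int)
    soil = (b2 / b11) < 1.5                  # B2/B11 < 1.5: soil, otherwise water
    out[~soil] = WATER
    classified = np.zeros(sm.shape)
    classified[soil] = kmeans_1d(sm[soil], k)
    out[soil & (classified <= 0)] = CLOUD
    candidate = soil & (classified >= 1)     # possible shadow, snow or flooding
    snow = candidate & (tci > 200)           # bright candidates are snow
    out[snow] = SNOW
    regions, n = label_regions(candidate & ~snow)
    for i in range(1, n + 1):
        region = regions == i
        ring = dilate(region, buffer_px) & ~region & soil  # neighbouring buffer
        wet = ring.any() and sm[ring].mean() > sm_flood    # assumed: mean over the buffer
        out[region] = FLOODED if wet else SHADOW
    return out


def demo_scene():
    """Synthetic 20 x 20 scene: cloud, shadow, flooded patch, snow patch, water corner."""
    n = 20
    b2 = np.full((n, n), 0.05)
    b11 = np.full((n, n), 0.20)
    b11[18:20, 0:2] = 0.02       # B2/B11 = 2.5 -> water
    sm = np.full((n, n), 0.30)
    sm[9:20, 9:20] = 0.65        # wet soil surrounding the flooded patch
    sm[2:5, 2:5] = -0.2          # cloud
    sm[2:5, 12:15] = 1.2         # shadow over ordinary soil
    sm[14:17, 14:17] = 1.2       # flooded patch inside wet soil
    sm[14:17, 2:5] = 1.2         # snow patch
    tci = np.full((n, n), 100)
    tci[14:17, 2:5] = 230
    return b2, b11, sm, tci


result = agroshadow_sketch(*demo_scene())
```

In the synthetic scene both the shadow and the flooded patch reach the same soil moisture (1.2), so only the buffer test separates them. The shadow is surrounded by ordinary soil (buffer mean about 0.33), whereas the flooded patch is surrounded by wet soil (buffer mean 0.65).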

2.3. Sen2Cor Classification

2.4. MAJA Classification

3. Results and Discussion

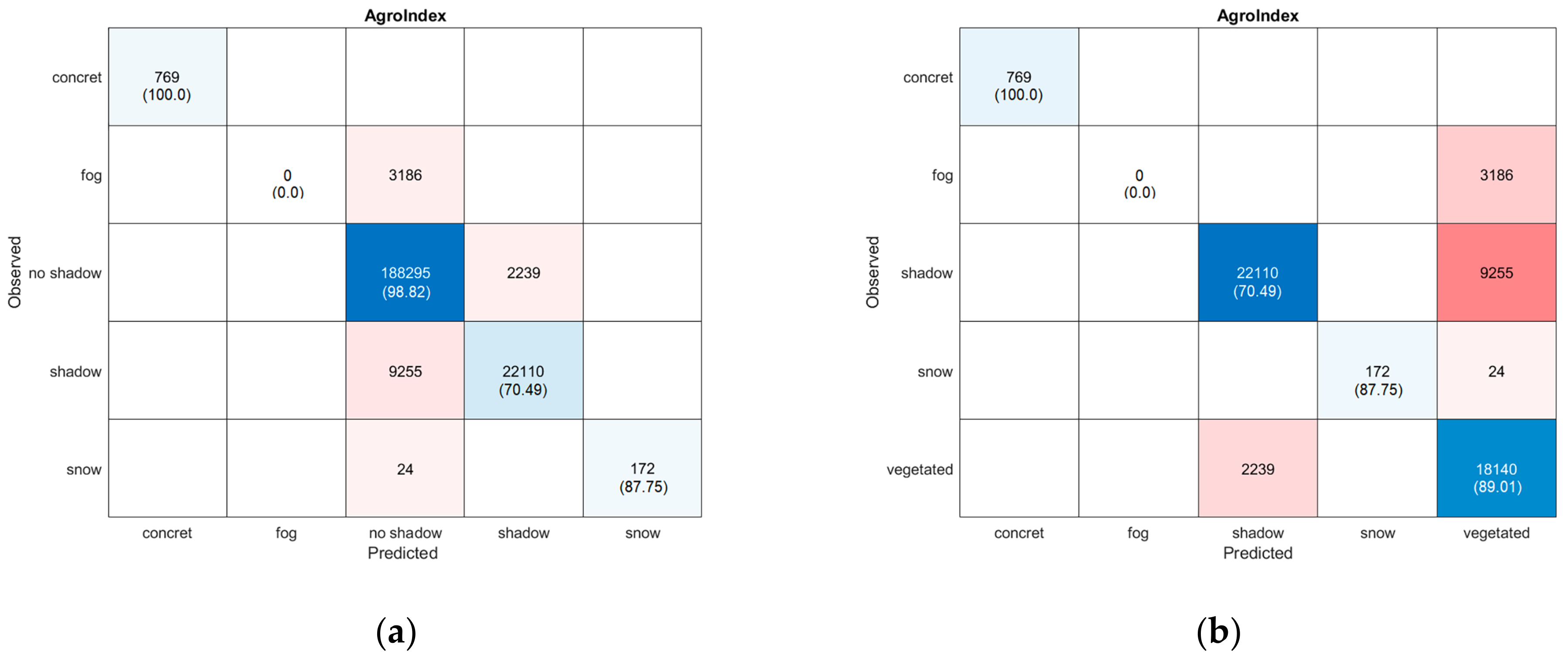

3.1. AgroShadow Tool Validation

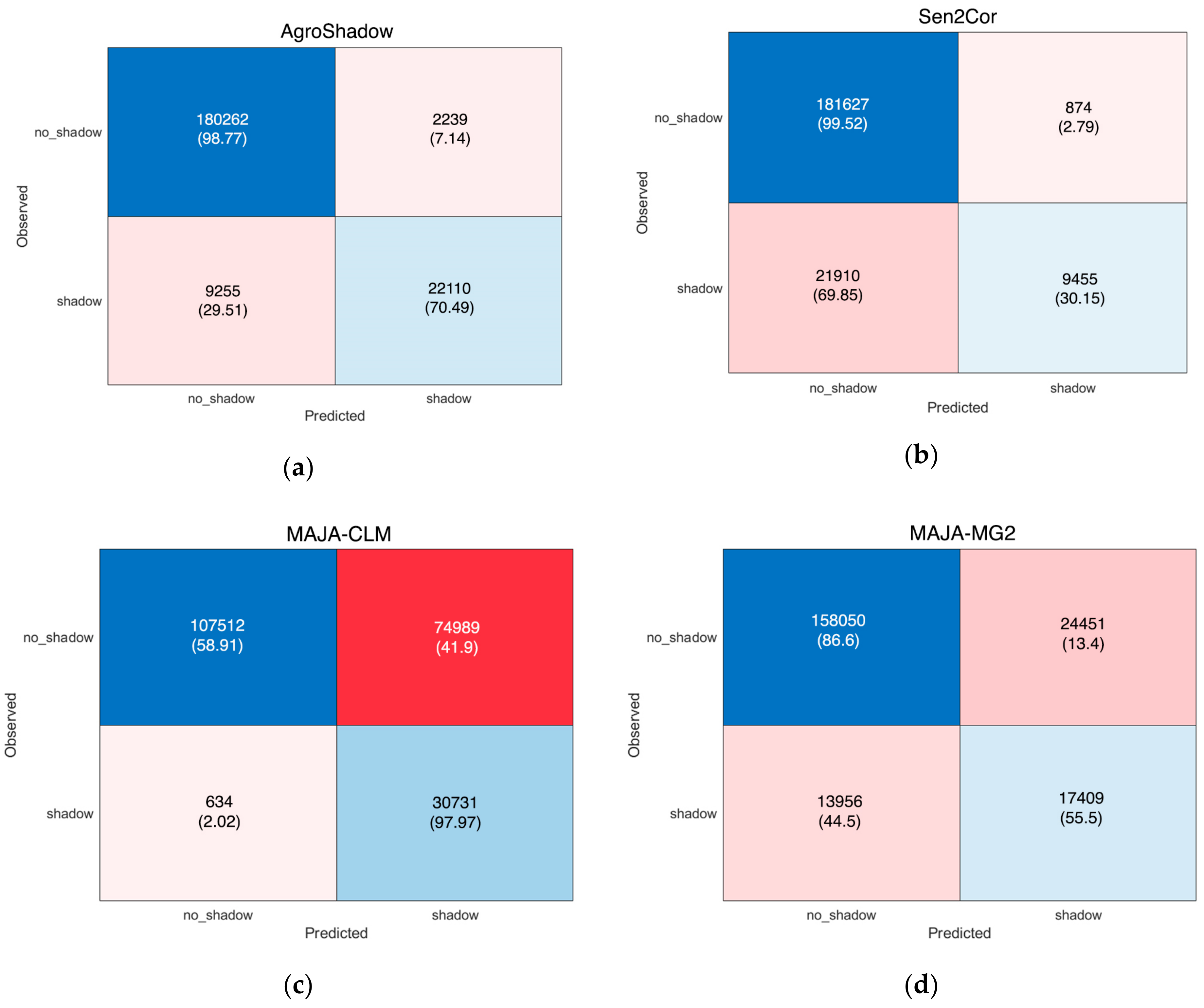

3.2. Comparison with Sen2Cor and MAJA Tools

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Study Areas | Lat | Lon | Land Use | Fields Info | Height AMSL (m) | Area (ha) | N. of Scenes | Tiles | DOY |
|---|---|---|---|---|---|---|---|---|---|
| Sondrio | 46.34 | 10.33 | MNV | Snow/Mountain | 1590 | 5.46 | 1 | T32TPS | 77 |
| Palmanova | 45.85 | 13.26 | CI | Irrigated area/Plain | 7 | 198.49 | 1 | T33TUL | 164 * |
| Brescia | 45.5 | 10.18 | CI | Close to urban area/Plain | 113 | 38.07 | 2 | T32TNR | 124 *, 184 * |
| Vercelli | 45.34 | 8.3 | CI | Rice/Flooding/Plain | 150 | 127.32 | 3 | T32TMR | 105, 165, 247 * |
| Vicenza | 45.26 | 11.52 | CI | Alluvial plain | 12 | 133.99 | 2 | T32TPR | 164 *, 299 * |
| Piacenza | 45.07 | 10.03 | CI | Close to the river/Plain | 35 | 120.52 | 2 | T32TNQ | 124 *, 189 * |
| Alessandria | 44.79 | 8.84 | CI | Close to river/Plain/Test clear sky | 178 | 61.36 | 1 | T32TMQ | 187 |
| Bologna | 44.58 | 11.35 | CI | Close to urban area/Plain | 24 | 32.73 | 2 | T32TPQ | 194 *, 219 * |
| Ravenna | 44.41 | 12.29 | CI | Close to river and sea | 0 | 111.18 | 2 | T32TQQ | 164 *, 254 * |
| Pesaro | 43.86 | 12.83 | MC | Close to industrial area/steep slope | 49–140 | 29.88 | 2 | T33TUJ | 121 *, 206 * |
| Grosseto | 42.88 | 11.05 | CR | Dry land/surrounded by hills | 11 | 101.96 | 2 | T32TPN | 239 *, 274 * |
| Tuscania | 42.41 | 11.84 | CR | Smooth hill | 167 | 33.53 | 3 | T32TQN | 164 *, 291 *, 296 * |
| Avezzano | 42 | 13.57 | MC | Large endorheic lake/Plateau | 651 | 113.94 | 1 | T33TUG | 96 * |
| Foggia | 41.36 | 15.6 | CR | Dry land/Plain | 88 | 79.11 | 1 | T33TWF | 128 * |
| Caserta | 41.02 | 13.99 | CR | Plain | 0 | 80.54 | 1 | T33TVF | 118 * |
| Oristano | 40 | 8.57 | CR | Close to river/wet area/Plain | 0 | 39.43 | 3 | T32TMK | 169 *, 247 *, 282 * |
| Cretto di Burri | 37.79 | 12.97 | CBA | Land art/Slope/Concrete | 417 | 7.84 | 1 | T33SUB | 208 |
| Enna | 37.57 | 14.35 | CR | Hilly/Slope | 435 | 24.36 | 3 | T33SVB | 118 *, 158 *, 218 * |

| Classes | Error | Accuracy | Precision | Recall | Specificity | False Positive Rate | F Score |
|---|---|---|---|---|---|---|---|
| Shadow | 0.051 | 0.949 | 0.908 | 0.705 | 0.988 | 0.012 | 0.794 |
| Snow | 0.000 | 1.000 | 1.000 | 0.878 | 1.000 | 0.000 | 0.935 |
| Concrete | 0.000 | 1.000 | 1.000 | 1.000 | 1.000 | 0.000 | 1.000 |
| Fog | 0.014 | 0.986 | 0.000 | 0.000 | 1.000 | 0.000 | 0.000 |
| No Shadow | 0.065 | 0.935 | 0.938 | 0.988 | 0.649 | 0.351 | 0.962 |
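
The scores in the table above follow the usual binary confusion-matrix definitions: error = 1 - accuracy, false positive rate = 1 - specificity, and the F score is the harmonic mean of precision and recall (for the Shadow row, 2 × 0.908 × 0.705 / (0.908 + 0.705) ≈ 0.794). The minimal sketch below shows how each column can be derived from one class's counts of true/false positives and negatives. The table reports only the derived scores, so the counts used in the example are hypothetical, as are the function and class names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scores:
    error: float
    accuracy: float
    precision: float
    recall: float
    specificity: float
    false_positive_rate: float
    f_score: float


def safe_ratio(num, den):
    """Return num/den, or 0.0 when the denominator is zero (e.g. a class never detected)."""
    return num / den if den else 0.0


def f_score(precision, recall):
    """Harmonic mean of precision and recall (F1 score)."""
    return safe_ratio(2 * precision * recall, precision + recall)


def scores_from_counts(tp, fp, tn, fn):
    """Derive the table's columns from one class's confusion-matrix counts."""
    total = tp + fp + tn + fn
    precision = safe_ratio(tp, tp + fp)
    recall = safe_ratio(tp, tp + fn)
    return Scores(
        error=safe_ratio(fp + fn, total),
        accuracy=safe_ratio(tp + tn, total),
        precision=precision,
        recall=recall,
        specificity=safe_ratio(tn, tn + fp),
        false_positive_rate=safe_ratio(fp, fp + tn),
        f_score=f_score(precision, recall),
    )


# Consistency check against the Shadow row: precision 0.908, recall 0.705
print(round(f_score(0.908, 0.705), 3))  # 0.794
```

Returning 0.0 for an empty denominator mirrors rows such as Fog, where no pixel was detected (no true or false positives) and precision, recall and F score are all reported as 0.000.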

| Tools | Error | Accuracy | Precision | Recall | Specificity | False Positive Rate | F Score | N° of Missed Scenes |
|---|---|---|---|---|---|---|---|---|
| AgroShadow | 0.054 | 0.946 | 0.908 | 0.705 | 0.988 | 0.012 | 0.794 | 3 |
| Sen2Cor | 0.107 | 0.893 | 0.915 | 0.301 | 0.995 | 0.005 | 0.454 | 11 |
| MAJA-CLM | 0.354 | 0.646 | 0.291 | 0.980 | 0.589 | 0.411 | 0.448 | 3 |
| MAJA-MG2 | 0.180 | 0.820 | 0.416 | 0.555 | 0.866 | 0.134 | 0.475 | 12 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Magno, R.; Rocchi, L.; Dainelli, R.; Matese, A.; Di Gennaro, S.F.; Chen, C.-F.; Son, N.-T.; Toscano, P. AgroShadow: A New Sentinel-2 Cloud Shadow Detection Tool for Precision Agriculture. Remote Sens. 2021, 13, 1219. https://doi.org/10.3390/rs13061219