1. Introduction

In recent years, there has been great interest in two very different technologies that have proven to be complementary: unmanned aerial vehicles (UAVs) and hyperspectral imaging. Their combination extends the range of applications in which they have been independently used up to now. On the one hand, UAVs have been employed in a great variety of uses due to the advantages that these vehicles have over traditional platforms, such as satellites or manned aircraft. Among these advantages are the high spatial and spectral resolutions that can be reached in images obtained from UAVs, the revisit time, and a relatively low economic cost. The monitoring of road traffic [1,2,3,4], search and rescue operations [5,6,7], and security and surveillance [8,9] are some examples of civil applications that have adopted UAV-based technologies. On the other hand, hyperspectral imaging technology has also gained considerable popularity due to the large amount of information that this kind of image provides, which is especially valuable for applications that need to detect or distinguish different kinds of materials that may look very similar in a traditional digital image. Accordingly, it can be inferred that combining these two technologies by mounting hyperspectral cameras onto drones would bring important advances in fields like real-time target detection and tracking [10].

Despite the benefits that can be obtained by combining UAVs and hyperspectral technology, it is necessary to take into account that both also have important limitations. UAVs have a flight autonomy that is limited by the capacity of their batteries, a restriction that gets worse as the weight carried by the drone increases with additional elements, such as cameras, a gimbal, an on-board PC, or sensors. As for hyperspectral technology, analyzing the large quantity of data provided by this kind of camera in order to draw conclusions for the targeted application implies a high computational cost and makes it difficult to achieve real-time performance. So far, two main approaches have been followed in state-of-the-art hyperspectral UAV-based applications:

Storing the acquired data in non-volatile memory and processing it off-board once back in the laboratory. The main advantages of this approach are its simplicity and the possibility of processing the data without any limitation in terms of computational resources. However, it is not viable for applications that require a real-time response, and it does not allow the user to visualize the acquired data during the mission. For instance, following this strategy, the drone could fly over a region, scan it with the hyperspectral camera, and store all of the acquired data on a solid state drive (SSD); once the drone lands, it is powered off and the disk is removed to extract and process the data [11,12,13]. However, if, for any reason, the acquisition parameters were not correctly configured or the data were not captured as expected, this will not be detected until the mission has finished and the data have been extracted for analysis.

Processing the acquired hyperspectral data on-board. This is a more challenging approach due to the high data acquisition rate of the hyperspectral sensors as well as the limited computational resources available on-board. Its main advantage is that the acquired data could potentially be analysed in real-time or near real-time, so that decisions could be taken on the fly according to the obtained results. However, the data analysis methods that can be executed on-board are very limited [10,14,15]. Additionally, since the data are fully processed on-board and never transmitted to the ground station during the mission, this approach cannot be used for any supervised or semi-supervised application in which an operator is required to visualize the acquired data or its results and take decisions accordingly. Some examples of this kind of application are surveillance and search and rescue missions.

This work focuses on providing an alternative solution for a UAV-based hyperspectral acquisition platform to achieve greater flexibility and adaptability to a larger number of applications. Specifically, the platform consists of a visible and near-infrared (VNIR) camera, the Specim FX10 pushbroom scanner [16], mounted on a DJI Matrice 600 [17], which also carries an NVIDIA Jetson board as on-board computer. A detailed description of this platform can be found in [10]. Two different Jetson boards have been used in the experiments, either of which can be installed in the platform as the on-board computer: the Jetson Nano [18] and the Jetson Xavier NX [19]. They are quite similar in shape and weight, although the Jetson Xavier NX has a higher computational capability as well as a higher price.

The main goal of this work is to provide a working solution that allows the acquired hyperspectral data to be rapidly downloaded to the ground station. Once the data are in the ground station, they can be processed with low latency, and both the hyperspectral data and the results obtained after the analysis can be visualized by an operator in real-time. This is advantageous for many applications that involve a supervised or semi-supervised procedure, such as defense, surveillance, or search and rescue missions. Currently, these kinds of applications are mostly carried out using RGB first-person-view cameras [1,2], but they do not take advantage of the possibilities offered by the extra information provided by hyperspectral sensors.

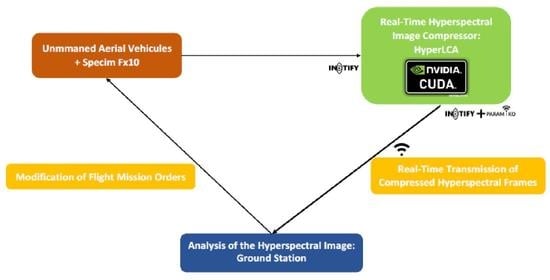

Figure 1 graphically describes the targeted solution. To achieve this goal, it is assumed that a Wireless Local Area Network (WLAN) based on the 802.11 standard, established by the Institute of Electrical and Electronics Engineers (IEEE), will be available during the flight, and that both the on-board computer and the ground station will be connected to it in order to use it as the data downlink. A transmission is considered to be real-time when the acquisition frame rate and the transmission frame rate reach the same value, although there may be some latency between the capturing process and the transmission one.

According to our experience in the flight missions carried out up to now, the frame rate of the Specim FX10 camera mounted on our flying platform is usually set to a value between 100 and 200 frames per second (FPS), so these are the targeted scenarios to be tested in this work. The Specim FX10 camera produces hyperspectral frames of 1024 pixels with up to 224 spectral bands each, storing them using 12 or 16 bits per pixel per band (bpppb). This means that the acquisition data rate goes from 32.8 MB/s (100 FPS and 12 bpppb) to 87.5 MB/s (200 FPS and 16 bpppb). These values suggest the necessity of compressing the hyperspectral data before its transmission to the ground station in order to achieve real-time performance. For that purpose, the Lossy Compression Algorithm for Hyperspectral Image Systems (HyperLCA) has been chosen. This specific compressor has been selected because it offers several advantages with respect to other state-of-the-art solutions. On the one hand, it presents a low computational complexity and a high level of parallelism in comparison with other transform-based compression approaches. On the other hand, the HyperLCA compressor is able to achieve high compression ratios while preserving the relevant information for the subsequent hyperspectral applications. Additionally, the HyperLCA compressor independently compresses blocks of hyperspectral pixels without any spatial alignment required, which makes it especially advantageous for compressing hyperspectral data captured by pushbroom or whiskbroom sensors and provides a natural error-resilience behaviour. All of these advantages were analyzed in depth in [20].
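The acquisition data rates quoted above follow directly from the frame geometry. The short sketch below reproduces the arithmetic; the function names are illustrative only, and the quoted figures are matched when one megabyte is taken as 2^20 bytes, which is an assumption about the unit convention.

```python
def frame_bytes(pixels=1024, bands=224, bits=12):
    """Size in bytes of one raw pushbroom frame (one line of pixels, all bands)."""
    return pixels * bands * bits / 8


def data_rate_mib_s(fps, bits, pixels=1024, bands=224):
    """Raw acquisition rate in MiB/s, assuming 1 MiB = 2**20 bytes."""
    return fps * frame_bytes(pixels, bands, bits) / 2**20


low = data_rate_mib_s(fps=100, bits=12)    # slowest, most compact configuration
high = data_rate_mib_s(fps=200, bits=16)   # fastest, largest configuration
print(f"{low:.1f} MiB/s to {high:.1f} MiB/s")  # prints "32.8 MiB/s to 87.5 MiB/s"
```

A single 12-bit frame therefore occupies 344,064 bytes and a 16-bit frame 458,752 bytes, which is the per-frame payload that the downlink would have to sustain 100 to 200 times per second without compression.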

The data compression process reduces the amount of data to be downloaded to the ground station, which makes real-time data transmission viable. However, it is also necessary to achieve real-time performance in the compression process. To do so, the HyperLCA compressor has been parallelized taking advantage of the LPGPU included in the Jetson boards, as was done in [21]. However, multiple additional optimizations with respect to the implementation presented in [21] have been developed in this work to make the entire system work as expected, encompassing the capturing process, the on-board data storage, the hyperspectral data compression, and the compressed data transmission.

The document is structured as follows. Section 2 contains the description of the algorithm used in this work for the compression of the hyperspectral data. Section 3 presents the on-board computers that have been tested in this work. The proposed design for carrying out the real-time compression and transmission of the acquired hyperspectral data is fully described in Section 4.1. Section 4.2 contains the data and resources that are used for the experiments and Section 5 describes the experiments and the obtained results. Finally, the obtained conclusions are summarized in Section 7.

2. Description of the HyperLCA Algorithm

As previously stated, the HyperLCA compressor is used in this work to compress the hyperspectral data on-board, reducing its volume before it is transmitted to the ground station. This compressor is a lossy transform-based algorithm that presents several advantages that satisfy the needs of this particular application very well. The most relevant are [20]:

It is able to achieve high compression ratios with reasonably good rate-distortion performance while keeping the relevant information for the subsequent hyperspectral image analysis. This allows the data volume to be transmitted to be considerably reduced without decreasing the quality of the results obtained by analyzing the decompressed data in the ground station.

It has a reasonably low computational cost and it is highly parallelizable. This allows the LPGPU available on-board to be used to speed up the compression process in order to achieve real-time compression.

It permits independently compressing each hyperspectral frame as it is captured. This makes it possible to compress and transmit the acquired frames in a pipelined way, enabling the entire system to operate continuously in a streaming fashion, with just some latency between the acquired frame and the frame received in the ground station.

It permits setting the minimum desired compression ratio in advance, making it possible to fix the maximum data rate to be transmitted prior to the mission, thus ensuring that it will not saturate the downlink.
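The last point can be illustrated with a small calculation. The sketch below assumes that the compressor always meets the configured minimum compression ratio, so that the compressed stream never exceeds the raw acquisition rate divided by that ratio; the function name is hypothetical, and the raw rate used is the upper figure quoted in the introduction.

```python
def max_compressed_rate(raw_rate_mb_s, min_cr):
    """Upper bound on the downlink rate when at least min_cr is guaranteed."""
    if min_cr <= 0:
        raise ValueError("the compression ratio must be positive")
    return raw_rate_mb_s / min_cr


RAW_RATE = 87.5  # MB/s at 200 FPS and 16 bpppb, as quoted in the introduction

for cr in (12, 20):
    bound = max_compressed_rate(RAW_RATE, cr)
    print(f"CR >= {cr}: at most {bound:.2f} MB/s on the downlink")
```

Fixing the minimum ratio before take-off therefore turns the downlink budget into a known quantity that can be compared against the expected WLAN throughput.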

The compression process within the HyperLCA algorithm consists of three main compression stages: a spectral transform, a preprocessing stage, and an entropy coding stage. In this work, each compression stage independently processes one single hyperspectral frame at a time. The spectral transform sequentially selects the most different pixels of the hyperspectral frame using orthogonal projection techniques. The set of selected pixels is then used for projecting this frame, obtaining a spectrally decorrelated and compressed version of the data. The preprocessing stage is executed after the spectral transform to adapt the output data so that it can be entropy coded more efficiently. Finally, the entropy coding stage manages the codification of the extracted vectors using a Golomb–Rice coding strategy. In addition to these three compression stages, there is one extra initialization stage, which carries out the operations that are used for initializing the compression process according to the introduced parameters.

Figure 2 graphically shows these compression stages, as well as the data that the HyperLCA compressor shares between them.

2.1. HyperLCA Input Parameters

The HyperLCA algorithm needs three input parameters in order to be configured:

Minimum desired compression ratio (CR), defined as the ratio between the number of bits in the original image and the number of bits of the compressed data.

Block size (BS), which indicates the number of hyperspectral pixels in a single hyperspectral frame.

Number of bits used for scaling the projection vectors (Nbits). This value determines the precision and dynamic range to be used for representing the values of the V vectors.

2.2. HyperLCA Initialization

Once the compressor has been correctly configured, its initialization stage is carried out. This initialization stage consists of determining the number of pixel vectors and projection vectors (p_max) to be extracted for each hyperspectral frame, as shown in Equation (1), where DR refers to the number of bits per pixel per band of the hyperspectral image to be compressed and Nb refers to the number of spectral bands. The extracted pixel vectors are referred to as P and the projection vectors are referred to as V in the rest of this manuscript.

The p_max value is used as an input of the HyperLCA transform, which is the most relevant part of the HyperLCA compression process. The compression that is achieved within this process directly depends on the number of selected pixels, p_max. Selecting more pixels provides better decompressed images, but lower compression ratios.
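The trade-off between the number of extracted vectors and the compression ratio can be made concrete with a simple bit budget. The sketch below is a derivation under stated assumptions, not necessarily the exact expression of Equation (1): it assumes that a compressed frame carries the centroid (Nb values of DR bits), plus, for each extracted pixel, one pixel vector (Nb values of DR bits) and one projection vector (BS values of Nbits bits), and it ignores the header and any gain from the entropy coder. The name p_max denotes here the number of extracted pixel and projection vectors; the function name is hypothetical.

```python
import math


def p_max(cr, bs, n_bits, dr, nb):
    """Largest number of extracted vectors whose bit cost still respects cr.

    Assumed cost model (not taken from the source):
      raw frame         = dr * nb * bs bits
      centroid          = dr * nb bits
      each extra vector = dr * nb + n_bits * bs bits
    """
    raw_bits = dr * nb * bs
    centroid_bits = dr * nb
    per_vector_bits = dr * nb + n_bits * bs
    budget = raw_bits / cr - centroid_bits   # bits left once the centroid is stored
    return max(0, math.floor(budget / per_vector_bits))


# 1024-pixel frames with 224 bands and 16 bits per pixel per band
print(p_max(cr=20, bs=1024, n_bits=12, dr=16, nb=224))
print(p_max(cr=12, bs=1024, n_bits=8, dr=16, nb=224))
```

Under this cost model a lower CR or a smaller Nbits leaves room for more vectors, which agrees with the statement that selecting more pixels improves the decompressed image at the expense of the compression ratio.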

2.3. HyperLCA Transform

For each hyperspectral frame, the HyperLCA Transform calculates the average pixel, also called the centroid. Subsequently, the centroid pixel is used by the HyperLCA Transform to select the first pixel as the one most different from the average. This is an unmixing-like strategy used in the HyperLCA compressor for selecting the most different pixels of the data set in order to perfectly preserve them through the compression–decompression process. This proves very useful for many remote sensing applications, such as anomaly detection, spectral unmixing, or even hyperspectral and multispectral image fusion, as demonstrated in [22,23,24].

The HyperLCA Transform provides most of the compression ratio obtained by the HyperLCA compressor and also most of its flexibility and advantages. Additionally, it is the only lossy part of the HyperLCA compression process. Algorithm 1 describes, in detail, the process followed by the HyperLCA Transform for a single hyperspectral frame. The pseudocode assumes that the hyperspectral frame is stored as a matrix, M, with the hyperspectral pixels placed in columns. The first step of the HyperLCA Transform consists of calculating the centroid pixel, c, and subtracting it from each pixel of M, obtaining the centralized frame. The transform modifies the values of this centralized frame in each iteration.

Figure 3 graphically describes the overall process that is followed by the HyperLCA Transform for compressing a single hyperspectral frame. As shown in this figure, the process mainly consists of three steps that are sequentially repeated. First, the brightest pixel in the centralized frame, which is the pixel with the most remaining information, is selected (lines 2 to 7 of Algorithm 1). After doing so, a projection vector is calculated as the projection of the centralized frame in the direction spanned by the selected pixel (lines 8 to 10 of Algorithm 1). Finally, the information of the frame that can be represented with the extracted pixel and projection vectors is subtracted from the centralized frame, as shown in line 11 of Algorithm 1.

Accordingly, the centralized frame contains the information that is not representable with the already selected pixels, P, and V vectors. Hence, the values of the centralized frame in a particular iteration, i, would be the information lost in the compression–decompression process if no more pixels and V vectors are extracted.

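| Algorithm 1 HyperLCA transform. |

| Inputs: |

| M = [r_1, r_2, ..., r_Np], p_max |

| Outputs: |

| P = [e_1, e_2, ..., e_pmax], V = [v_1, v_2, ..., v_pmax] |

| Declarations: |

| c; {Centroid pixel.} |

| P = [e_1, e_2, ..., e_pmax]; {Extracted pixels.} |

| V = [v_1, v_2, ..., v_pmax]; {Projected image vectors.} |

| C = [x_1, x_2, ..., x_Np]; {Centralized version of M.} |

| Algorithm: |

| 1: {Centroid calculation and frame centralization (c, C)} |

| 2: for i = 1 to p_max do |

| 3: for j = 1 to Np do |

| 4: b_j = x_j^T · x_j |

| 5: end for |

| 6: j_max = argmax(b_j) |

| 7: e_i = r_jmax |

| 8: q = x_jmax |

| 9: u = x_jmax / b_jmax |

| 10: v_i = u^T · C |

| 11: C = C − q · v_i |

| 12: end for |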
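The three repeated steps described above (select the brightest pixel of the centralized frame, project the frame onto it, subtract the represented information) can be sketched in NumPy as follows. This is a floating-point illustration under assumptions, not the reference implementation: the function name and the returned residual are illustrative, the frame is assumed to be stored with one pixel per column, and the integer arithmetic of the real compressor is not modelled.

```python
import numpy as np


def hyperlca_transform(M, p_max):
    """Floating-point sketch of the transform for one frame.

    M: array of shape (bands, pixels), one hyperspectral pixel per column.
    Returns the centroid c, the extracted pixels P (one per column), the
    projection vectors V (one per row) and the final residual C.
    """
    M = np.asarray(M, dtype=np.float64)
    c = M.mean(axis=1)                       # centroid pixel
    C = M - c[:, None]                       # centralized frame
    P = np.zeros((M.shape[0], p_max))
    V = np.zeros((p_max, M.shape[1]))
    for i in range(p_max):
        b = np.einsum("ij,ij->j", C, C)      # brightness (squared norm) per pixel
        j = int(np.argmax(b))                # pixel with most remaining information
        P[:, i] = M[:, j]                    # keep the original pixel
        if b[j] == 0.0:                      # nothing left to represent
            continue
        q = C[:, j].copy()
        u = q / b[j]
        V[i] = u @ C                         # projection of every pixel onto q
        C -= np.outer(q, V[i])               # remove the represented information
    return c, P, V, C


rng = np.random.default_rng(0)
frame = rng.random((8, 32))                  # toy frame: 8 bands, 32 pixels
c, P, V, residual = hyperlca_transform(frame, p_max=3)
print(np.abs(V).max() <= 1.0 + 1e-12, np.linalg.norm(residual))
```

Because the selected pixel is always the one with the largest norm, every element of V lies between −1 and 1, and the residual column of the selected pixel becomes zero, which is consistent with the selected pixels being preserved through the process.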

2.4. HyperLCA Preprocessing

This stage is crucial in the HyperLCA compression algorithm to adapt the HyperLCA Transform output data for being entropy coded in a more efficient way. This compression stage encompasses two different parts.

2.4.1. Scaling V Vectors

After executing the HyperLCA Transform, the resulting V vectors contain the projection of the frame pixels onto the space spanned by the orthogonal projection vector selected in each iteration. This results in floating-point values of the V vector elements between −1 and 1. These values need to be represented using an integer data type for their codification. Hence, the V vectors can be easily scaled in order to fully exploit the dynamic range available according to the Nbits used for representing these vectors and to avoid losing too much precision in the conversion, as shown in Equation (2). After doing so, the scaled V vectors are rounded to the closest integer values.
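One plausible way of carrying out this scaling is sketched below. It is an assumption rather than a transcription of Equation (2): the values are shifted from [−1, 1] to [0, 2] and multiplied by 2^(Nbits−1) − 1, so that the result fits in Nbits unsigned bits. The function names are hypothetical.

```python
import numpy as np


def scale_v(v, n_bits):
    """Map values in [-1, 1] to non-negative integers that fit in n_bits bits."""
    v = np.asarray(v, dtype=np.float64)
    if np.any(np.abs(v) > 1.0):
        raise ValueError("V elements are expected to lie in [-1, 1]")
    factor = 2 ** (n_bits - 1) - 1           # assumed scaling factor
    return np.rint((v + 1.0) * factor).astype(np.int64)


def unscale_v(scaled, n_bits):
    """Approximate inverse of scale_v, as a decompressor would apply it."""
    factor = 2 ** (n_bits - 1) - 1
    return np.asarray(scaled, dtype=np.float64) / factor - 1.0


v = np.array([-1.0, -0.25, 0.0, 0.5, 1.0])
scaled = scale_v(v, n_bits=12)
print(scaled, np.abs(unscale_v(scaled, 12) - v).max())
```

With this convention the rounding error of each element is bounded by half a quantization step, that is, 0.5 / (2^(Nbits−1) − 1), which shows why a larger Nbits preserves more precision at the cost of more bits per projection value.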

2.4.2. Error Mapping

The entropy coding stage takes advantage of the redundancies within the data to assign the shortest word length to the most common values in order to achieve higher compression ratios. In order to improve the effectiveness of this stage, the output vectors of the HyperLCA Transform, after the preprocessing of the V vectors, are independently and losslessly processed to represent their values using only positive integer values that are closer to zero than the original ones, using the same dynamic range. To do this, the HyperLCA algorithm makes use of the prediction error mapper described in the Consultative Committee for Space Data Systems (CCSDS) recommended standard for lossless multispectral and hyperspectral image compression [25].
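The idea behind such a mapper can be illustrated with a simplified variant. The sketch below is not the exact mapper of the CCSDS standard, whose details are not reproduced here; it assumes that each sample is predicted by the previous sample of the same vector (with the first sample predicted as the minimum value), and it folds the signed prediction error into a non-negative integer that stays within the original dynamic range. All function names are hypothetical.

```python
def map_error(sample, pred, s_min, s_max):
    """Fold the signed error (sample - pred) into a non-negative integer."""
    delta = sample - pred
    theta = min(pred - s_min, s_max - pred)  # room available on the tighter side
    if 0 <= delta <= theta:
        return 2 * delta                     # small positive errors: even codes
    if -theta <= delta < 0:
        return 2 * (-delta) - 1              # small negative errors: odd codes
    return theta + abs(delta)                # only one sign is possible out here


def unmap_error(mapped, pred, s_min, s_max):
    """Inverse of map_error: recover the original sample."""
    theta = min(pred - s_min, s_max - pred)
    if mapped > 2 * theta:
        magnitude = mapped - theta
        delta = magnitude if pred - s_min <= s_max - pred else -magnitude
    elif mapped % 2 == 0:
        delta = mapped // 2
    else:
        delta = -((mapped + 1) // 2)
    return pred + delta


def map_vector(values, s_min, s_max):
    """Map a whole vector using the previous sample as predictor (assumption)."""
    out, pred = [], s_min
    for s in values:
        out.append(map_error(s, pred, s_min, s_max))
        pred = s
    return out


print(map_vector([2047, 2050, 2046, 2046], 0, 4094))  # prints [2047, 6, 7, 0]
```

Slowly varying vectors are thus turned into sequences of small non-negative integers, which is the kind of input for which a Golomb–Rice coder produces short codewords.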

2.5. HyperLCA Entropy Coding and Bitstream Generation

The last stage of the HyperLCA compressor corresponds to a lossless entropy coding strategy. The HyperLCA algorithm makes use of the Golomb–Rice algorithm [26], where each single output vector is independently coded.
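For reference, a textbook Golomb–Rice code with parameter k can be sketched as follows. How the HyperLCA compressor chooses k for each vector and the exact bit conventions of its bitstream are not described in this section, so the unary convention used here (ones terminated by a zero) and the function names are assumptions.

```python
def rice_encode(n, k):
    """Encode a non-negative integer as unary quotient plus k-bit remainder."""
    if n < 0 or k < 0:
        raise ValueError("n and k must be non-negative")
    quotient = n >> k
    bits = "1" * quotient + "0"              # unary part, terminated by a zero
    if k:
        bits += format(n & ((1 << k) - 1), f"0{k}b")
    return bits


def rice_decode(bits, k):
    """Decode one codeword; returns (value, number of bits consumed)."""
    quotient = bits.index("0")
    start = quotient + 1
    remainder = int(bits[start:start + k], 2) if k else 0
    return (quotient << k) | remainder, start + k


codeword = rice_encode(9, 2)
print(codeword, rice_decode(codeword, 2))  # prints "11001 (9, 5)"
```

Small values produce short codewords, which is why the error mapping stage tries to concentrate the data around zero before this coder is applied.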

Finally, the outputs of the aforementioned compression stages are packaged in the order that they are produced, generating the compressed bitstream. The first part of the bitstream consists of a header that includes all of the necessary information to correctly decompress the data.

The detailed description of the coding methodology followed by the HyperLCA compressor as well as the exact structure of the compressed bitstream can be found in [20].

3. On-Board Computers

The on-board computer is one of the key elements of the entire acquisition platform, as described in [10]. It is in charge of managing the overall mission, controlling the UAV actions and flying parameters (direction, speed, altitude, etc.), as well as the data acquisition, controlling all of the sensors available on-board, including the Specim FX10 hyperspectral camera. To do so, two different NVIDIA Jetson boards have been separately tested in this work, the Jetson Nano [18] and the Jetson Xavier NX [19]. These two boards offer many connection interfaces that facilitate the integration of all the necessary devices into the system, while providing a high computational capability in relation to their reduced size, weight, and power consumption. Additionally, their physical characteristics are generally better than those of the Jetson TK1, the board originally used in the first version of our UAV capturing platform, described in [10]. This is a remarkable point when taking into account the restrictions in terms of available space and load weight in the drone.

Table 1 summarizes the main characteristics of the Jetson TK1, whereas Table 2 and Table 3 summarize the main characteristics of the two Jetson boards tested in this work.

In addition to the management of the entire mission and acquisition processes, the on-board computer is also used in this work for carrying out the necessary operations to compress and transmit the acquired hyperspectral data to the ground station in real-time. To do so, the compression process, which is the most computationally demanding one, has been accelerated by taking advantage of the LPGPUs integrated into these Jetson boards.

By default, both the Jetson Nano and the Jetson Xavier NX are prepared for running the operating system from a Secure Digital (SD) card. However, this presents limitations in terms of memory speed as well as in the maximum amount of data that can be stored. To overcome this issue, an external SSD connected through a USB3.0 port has been used in the Jetson Nano. The Jetson Xavier NX is already prepared to integrate a Non-Volatile Memory Express (NVMe) 2.0 SSD, keeping the system more compact and efficient. However, this memory was not yet available during this work and, hence, all of the experiments executed in the Jetson Xavier NX were carried out using just the SD card memory.

Additionally, a WiFi antenna is required in order to connect to the WLAN and transmit the compressed hyperspectral data to the ground station. While the Jetson Xavier NX already integrates one, the Jetson Nano does not. Hence, an external USB2.0 TP-Link TL-WN722N WiFi antenna [27] has been included in the experiments that were carried out using the Jetson Nano.

5. Results

Several experiments have been carried out in this work to evaluate the performance of the proposed design for real-time hyperspectral data compression and transmission. First, the transmission speed that could be achieved without compressing the acquired hyperspectral frames is analyzed in Section 5.1. The obtained results verify that data compression is necessary for achieving a real-time transmission. Secondly, the maximum compression speed that can be obtained without parallelizing the compression process using the available GPU has been tested in Section 5.2. The obtained results demonstrate the necessity of parallelizing the process using the available GPU to achieve real-time performance. Finally, the results that can be obtained with the proposed design, taking advantage of the different levels of parallelism and the available GPU, are tested in Section 5.3, demonstrating its suitability for real-time hyperspectral data compression and transmission.

In the following Table 4, Table 5, Table 6 and Table 7, the simulated capturing speed and the maximum compression and transmission speeds are referred to as Capt, Comp, and Trans, respectively. In addition, the WiFi signal strength is named Signal in the aforementioned tables of results, a value that is directly obtained from the WLAN interface during the transmission process.

5.1. Maximum Transmission Speed without Compression

First of all, the maximum transmission speed that could be achieved if the acquired frames were not compressed has been tested. To do so, the targeted capturing speed has been set to 100 FPS, since it is the lowest frame rate typically used in our flight missions. Table 4 displays the obtained results.

As can be observed, when independently transmitting each frame (Packing = 1), the transmission speed is too low in relation to the capturing speed, even when capturing at 100 FPS. When transmitting five frames at a time, the transmission speed increases, but it is still very low. It can also be observed that, when using the uint12-bil format, the transmission speed is approximately 25 percent faster than when using the uint16-bip one. This makes sense, since the amount of data per file when using the uint12-bil format is 25 percent lower than with the uint16-bip one. These results verify the necessity of compressing the acquired hyperspectral data before transmitting it to the ground station.

Additionally, it can also be observed in the results that the Jetson Xavier NX is able to achieve a higher transmission rate than the Jetson Nano, even though the Jetson Nano has a higher WiFi signal strength. This could be due to the fact that the Jetson Nano is using an external TP-Link TL-WN722N WiFi antenna that is connected through a USB2.0 interface, while the WiFi antenna used by the Jetson Xavier NX is integrated in the board.

5.2. Maximum Compression Speed without GPU Implementation

Once the necessity of compressing the acquired hyperspectral data has been demonstrated, the necessity of speeding up this process by means of a parallel implementation is tested. To do so, the compression process within the HyperLCA algorithm has been serially executed on the CPU integrated in both boards in order to evaluate the compression speed. The capturing speed has been set to the minimum value used in our missions (100 FPS), as done in Section 5.1. The compression parameters have been set to the least restrictive values typically used for the compression within the HyperLCA algorithm (CR = 20 and Nbits = 12). From the possible combinations of input parameters for the HyperLCA compressor, these values should lead to the fastest compression results according to the analysis done in [21], where the HyperLCA compressor was implemented on Jetson TK1 and Jetson TX2 development boards. Additionally, the data format used for this experiment is uint16-bip, since this is the data format for which the reference version of the HyperLCA compressor is prepared. By using this data format, the execution of extra data format transformations that would lead to slower results is avoided.

Table 5 displays the obtained results.

The compression speed achieved without speeding up the process is too low and far from real-time performance, as can be observed in Table 5. This demonstrates the necessity of a parallel implementation that takes advantage of the LPGPUs integrated in the Jetson boards for increasing the compression speed. It can also be observed that the Jetson Xavier NX offers a better performance than the Jetson Nano.

5.3. Proposed Design Evaluation

In this last experiment, the performance of the proposed design has been evaluated for both the Jetson Xavier NX and the Jetson Nano boards. The capturing speed has been set to the minimum and maximum values that are typically used in our flying missions, which are 100 FPS and 200 FPS, respectively. Two different combinations of compression parameters have been tested. The first one, CR = 20 and Nbits = 12, corresponds to the least restrictive scenario and should lead to the fastest compression results according to [21]. Similarly, the second one, CR = 12 and Nbits = 8, corresponds to the most restrictive case and should lead to the slowest compression results, as demonstrated in [21]. Furthermore, both the uint16-bip and uint12-bil data formats have been tested. Finally, the compressed frames have been packed in two different ways, individually and in groups of 5. All of the obtained results are displayed in Table 6.

The Jetson Xavier NX is always capable of compressing more than 100 frames per second, regardless of the configuration used, as observed in Table 6. However, it is only capable of achieving 200 FPS in the compression in the least restrictive scenario, which is uint12-bil, CR = 20, Nbits = 12 and Packing = 5. Additionally, when capturing at 200 FPS, there are other scenarios in which real-time compression is almost achieved, producing compression speeds up to 190 FPS or 188 FPS. On the other hand, the Jetson Nano presents a poorer compression performance, only achieving real-time compression speed in the least restrictive scenario.

Regarding the transmission speed, it can be observed that, when packing the frames in groups of five, the transmission speed is always the same as the compression speed, except in the two fastest compression scenarios, in which the compression speed reaches 200 and 188 FPS while the transmission speed stays at 185 and 162 FPS, respectively. This may be due to the fact that the WiFi signal strength is not high enough (36/100 and 39/100) in these two tests. Nevertheless, two additional tests have been carried out for these particular situations with the Jetson Xavier NX, whose results are displayed in Table 7. A real-time transmission is achieved when increasing the packing size to 10 frames, as shown in this table. It can also be observed that real-time compression has been achieved in these two tests. This may be due to the fact that a lower number of files are being written and read by the operating system. This suggests that a faster performance could be obtained in the Jetson Xavier NX using a solid state drive (SSD) instead of the SD card that has been used so far in these experiments.

Additionally, the time at which each hyperspectral frame has been captured, compressed, and transmitted in these last two experiments is graphically displayed in Figure 8. Figure 8a shows the results that were obtained for the uint12-bil data format, while Figure 8b shows the values that were obtained for the uint16-bip one. In these two graphs, it can be observed how, on average, the slope of the line representing the captured frames (in blue), the line representing the compressed frames (in orange), and the line representing the transmitted frames (in green) is the same, indicating real-time performance. The effect of packing the frames in groups of 10 can also be observed in the transmission line. Finally, Figure 8a also shows the effect of a short reduction in the transmission speed due to a lower WiFi signal quality. As can be observed in this figure, the transmission process is temporarily delayed and so is the compression one, in order to prevent writing new data to the shared memory before the existing data have been processed. After a while, both the connection and the overall process are fully recovered, demonstrating the benefits of the proposed methodology previously described in Section 4.1.
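The back-pressure behaviour observed in Figure 8a, in which a slow transmission temporarily stalls the compression, is characteristic of producer and consumer stages connected through bounded buffers. The sketch below illustrates that generic mechanism with Python threads and queues; it is not the design of Section 4.1, which is not reproduced here, and the stage functions, buffer size, and packing logic are all assumptions made for illustration.

```python
import queue
import threading


def run_pipeline(n_frames, packing=10, buffer_slots=4):
    """Capture -> compress -> transmit with bounded buffers between stages."""
    captured = queue.Queue(maxsize=buffer_slots)
    compressed = queue.Queue(maxsize=buffer_slots)
    sent = []                                    # packs "delivered" to the ground

    def capture():
        for frame_id in range(n_frames):
            captured.put(frame_id)               # blocks while the buffer is full
        captured.put(None)                       # end-of-stream marker

    def compress():
        while (frame_id := captured.get()) is not None:
            compressed.put(frame_id)             # stalls if transmission lags
        compressed.put(None)

    def transmit():
        pack = []
        while (frame_id := compressed.get()) is not None:
            pack.append(frame_id)
            if len(pack) == packing:             # send frames in groups
                sent.append(tuple(pack))
                pack = []
        if pack:                                 # flush the last partial group
            sent.append(tuple(pack))

    threads = [threading.Thread(target=stage)
               for stage in (capture, compress, transmit)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sent


packs = run_pipeline(25, packing=10)
print([len(p) for p in packs])  # prints [10, 10, 5]
```

Because each put call blocks when its buffer is full, a slow downstream stage automatically delays the upstream one instead of letting unprocessed data be overwritten, and the pipeline resumes at full speed once the bottleneck disappears.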

Finally, all of the obtained results demonstrate that packing the compressed frames reduces the communication overhead and accelerates the transmission process. It can also be observed that using the data in uint12-bil format accelerates the compression process, since less data are transferred through the shared memory, demonstrating the benefits of the developed kernels for allowing the compression process to be adapted to any input data format.

In general, the results that were obtained for the Jetson Xavier NX demonstrate that the proposed design can be used for compressing and transmitting hyperspectral images in real-time at most of the acquisition frame rates typically used in our flying missions and using different configuration parameters for the compression. Additionally, it could potentially be faster if a solid state drive (SSD) were used instead of the SD card that was used in the experiments. Regarding the Jetson Nano, real-time performance was achieved only in the least restrictive scenarios.

6. Discussion

The main goal of this work is to transmit the hyperspectral data captured by a UAV-based acquisition platform to a ground station in such a way that they can be analyzed and/or visualized in real time, so that decisions can be made during the flight. The experiments conducted in Section 5.1 show that the acquired hyperspectral data must be compressed in order to achieve real-time transmission. Nevertheless, achieving real-time compression on board this kind of platform is not an easy task, given the high data rate produced by the hyperspectral sensors and the limited computational resources available on board. This has been experimentally verified in Section 5.2. Consequently, the compression algorithm must meet several requirements: a low computational cost, a high level of parallelism that allows the LPGPUs available on board to be exploited to speed up the compression process, the ability to guarantee high compression ratios, and error resilience to ensure that the compressed data can be delivered as expected. These requirements are very similar to those found in the space environment, where the acquired hyperspectral data must be rapidly compressed on board the satellite to save transmission bandwidth and storage space, using limited computational resources and ensuring error-resilient behavior.
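
The need for compression can be made concrete with a simple rate budget. The Python sketch below computes the raw data rate of a pushbroom sensor and the minimum compression ratio needed to fit a given downlink. The function names and all numeric values in the usage example are hypothetical and chosen only for illustration; they are not measurements from this work.

```python
def raw_data_rate_mbps(fps, pixels_per_line, bands, bits_per_sample):
    """Raw data rate in megabits per second for a pushbroom sensor
    that captures `fps` lines (frames) per second."""
    return fps * pixels_per_line * bands * bits_per_sample / 1e6


def min_compression_ratio(raw_mbps, link_mbps):
    """Smallest compression ratio that lets the link keep up with the sensor."""
    if link_mbps <= 0:
        raise ValueError("link throughput must be positive")
    return raw_mbps / link_mbps


# Hypothetical example: 100 lines/s, 1024 pixels per line, 160 bands,
# 12 bits per sample, and an effective WLAN throughput of 20 Mbit/s.
raw = raw_data_rate_mbps(fps=100, pixels_per_line=1024, bands=160, bits_per_sample=12)
ratio = min_compression_ratio(raw, link_mbps=20.0)
```

With these assumed values, the sensor produces roughly 197 Mbit/s, so the data would have to be compressed by a factor of about 10 before the link could sustain real-time transmission, and that is before accounting for protocol overhead or drops in signal quality.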

The HyperLCA compressor has been selected in this work to compress the acquired hyperspectral data, since it was originally developed for the space environment and satisfies all of the aforementioned requirements [20,28]. This compressor has been tested in previous works against those proposed by the Consultative Committee for Space Data Systems (CCSDS) in their Recommended Standards (Blue Books) [25]. Specifically, in [20], it was compared with the Karhunen–Loeve transform-based approach described in the CCSDS 122.1-B-1 standard (Spectral Preprocessing Transform for Multispectral and Hyperspectral Image Compression) [30] as the best spectral transform for decorrelating hyperspectral data in terms of accuracy. The results shown in [20] indicate that the HyperLCA transform is able to achieve a similar decorrelation performance, but at a much lower computational cost and with extra advantages, such as higher levels of parallelism and error-resilient behavior. In [20], the HyperLCA compressor was also tested against the lossless prediction-based approach proposed in the CCSDS 123.0-B-1 standard (Lossless Multispectral and Hyperspectral Image Compression) [31]. As expected, the compression ratios that can be achieved by a lossless approach are far below those required by the application targeted in this work. The CCSDS has recently published a new version of this prediction-based algorithm that can behave not only as a lossless solution, but also as a near-lossless one in order to achieve higher compression ratios. This new solution has been published as the CCSDS 123.0-B-2 standard (Low-Complexity Lossless and Near-Lossless Multispectral and Hyperspectral Image Compression) [32], and it represents a very interesting alternative to be considered in future works.

On the other hand, although the main goal of this research work is to present a new methodology for transmitting hyperspectral information from a UAV to a ground station in real time, the subsequent hyperspectral imaging applications have always been taken into account during the development process. This means that, while lossy compression is necessary to meet the compression ratios imposed by the acquisition data rates and the transmission bandwidth, the quality of the hyperspectral data received at the ground station has to be good enough to preserve the desired performance in the targeted applications. As previously described in this work, the HyperLCA compressor was developed following an unmixing-like strategy in which the most different pixels present in each image block are perfectly preserved through the compression-decompression process. As a result, most of the information lost in the compression process corresponds to the image noise, while the relevant information is preserved, as demonstrated in [20,29]. In [20], the impact of the compression-decompression process within the HyperLCA algorithm was tested when using the decompressed images for hyperspectral linear unmixing, classification, and anomaly detection, demonstrating that the use of this compressor does not negatively affect the obtained results. This specific study was carried out using well-known hyperspectral datasets and algorithms, such as the Pavia University data set coupled with the Support Vector Machine (SVM) classifier, or the Rochester Institute of Technology (RIT) and the World Trade Center (WTC) images coupled with the Orthogonal Subspace Projection Reed-Xiaoli (OSPRX) anomaly detector. The work presented in [29] carries out a similar study, limited to anomaly detection, but using the hyperspectral data collected by the acquisition platform used in this work and with exactly the same configurations in both the acquisition and compression stages. Specifically, the data used in this work, as described in Section 4.2, are a reduced subset of the hyperspectral data used in [29].
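
The idea of preserving the most different pixels of a block can be sketched as follows. This Python example is a simplified illustration of an unmixing-like selection based on successive orthogonal projections; it is not the HyperLCA implementation, and it omits quantization, entropy coding, and the LPGPU kernels. The function names `dot` and `select_and_project` are hypothetical.

```python
def dot(u, v):
    """Inner product of two equally sized vectors."""
    return sum(a * b for a, b in zip(u, v))


def select_and_project(pixels, n_select):
    """Pick the most different pixels of a block, one at a time.

    pixels: list of spectra, each a list of floats (one value per band).
    Returns the indices of the selected pixels and the residual that is
    left after removing the information spanned by them.
    """
    n_bands = len(pixels[0])
    centroid = [sum(p[b] for p in pixels) / len(pixels) for b in range(n_bands)]
    residual = [[p[b] - centroid[b] for b in range(n_bands)] for p in pixels]
    selected = []
    for _ in range(n_select):
        # Brightness: squared norm of what is still unexplained per pixel.
        brightness = [dot(r, r) for r in residual]
        j = max(range(len(residual)), key=brightness.__getitem__)
        if brightness[j] == 0.0:
            break  # nothing left to represent
        q = residual[j][:]  # copy, because residual[j] is updated below
        selected.append(j)
        # Remove from every pixel its component along q.
        for r in residual:
            w = dot(q, r) / brightness[j]
            for b in range(n_bands):
                r[b] -= w * q[b]
    return selected, residual
```

In this sketch, the residual of every selected pixel becomes exactly zero, which means that those pixels are fully represented by the centroid and the selected directions, while the remaining residual holds the part of the block that would be discarded.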

Finally, all of this work has been developed under the assumption that a Wireless Local Area Network (WLAN), based on the 802.11 standard, will be available during the flight, and that both the on-board computer and the ground station will be connected to it to use it as the data downlink. Further research is needed to increase the availability and range of this kind of network, or to integrate the proposed solution with other wireless transmission technologies, in order to make it available to a wider range of remote sensing applications.

7. Conclusions

In this paper, a design for the compression and transmission of hyperspectral data acquired from a UAV to a ground station has been proposed so that the data can be analyzed and/or visualized by an operator in real time. This opens the possibility of taking advantage of the spectral information collected by the hyperspectral sensors for supervised or semi-supervised applications, such as defense, surveillance, or search and rescue missions.

The proposed design assumes that a WLAN will be available during the flight, and that both the on-board computer and the ground station will be connected to it to use it as the data downlink. This design can work with different input data formats, which allows it to be used with most of the hyperspectral sensors available on the market. Additionally, it preserves the original hyperspectral data in a non-volatile memory, which brings two additional advantages. On the one hand, if the connection is lost for a while during the mission, the information is not lost, and the process resumes once the connection is recovered, guaranteeing that all of the acquired data are transmitted to the ground station. On the other hand, once the mission finishes and the drone lands, the original uncompressed hyperspectral data can be extracted from the non-volatile memory if a more detailed analysis is required.
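
The recovery behavior after a connection loss can be illustrated with a small simulation. In the following Python sketch, which is not the implementation used in this work, the data stay in storage until they are delivered, so a failed send is simply retried from the same position. The class `FlakyLink`, the function `transmit_all`, and the `max_retries` parameter are hypothetical names introduced for this example.

```python
class FlakyLink:
    """Simulated downlink that fails on chosen send attempts."""

    def __init__(self, fail_on):
        self.fail_on = set(fail_on)  # 1-based attempt numbers that fail
        self.attempts = 0
        self.received = []

    def send(self, chunk):
        self.attempts += 1
        if self.attempts in self.fail_on:
            raise ConnectionError("link down")
        self.received.append(chunk)


def transmit_all(stored_chunks, link, max_retries=5):
    """Send every stored chunk in order, retrying after connection errors."""
    next_index = 0  # first chunk that has not been delivered yet
    retries = 0
    while next_index < len(stored_chunks):
        try:
            link.send(stored_chunks[next_index])
        except ConnectionError:
            retries += 1
            if retries > max_retries:
                raise
            continue  # the chunk is still in storage: retry the same one
        next_index += 1
        retries = 0
    return next_index
```

Because the position only advances after a successful send, every chunk reaches the receiver exactly once and in order, regardless of how many attempts fail in between.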

The entire design has been tested using two different boards from NVIDIA that integrate LPGPUs: the Jetson Xavier NX and the Jetson Nano. The LPGPU has been used to accelerate the compression process, which is required to reduce the volume of data to be transmitted. The compression has been carried out using the HyperLCA algorithm, which achieves high compression ratios with a relatively good rate-distortion performance and at a reduced computational cost.

Multiple experiments have been carried out to test the performance of all the stages that make up the proposed design for both the Jetson Xavier NX and the Jetson Nano boards. The results obtained for the Jetson Xavier NX demonstrate that the proposed design can be used for compressing and transmitting hyperspectral images in real time at most of the acquisition frame rates typically used in our flying missions, using different configuration parameters for the compression. Additionally, it could potentially be faster if a solid-state drive (SSD) were used instead of the SD card that was used in these experiments. On the other hand, when using the Jetson Nano, real-time performance was achieved only in the least restrictive scenarios.

Future research lines may include optimizing the proposed design to reduce its computational burden, so that it performs more efficiently, especially when the on-board computer is a board with more limited computational resources.