A Hybrid Deep Learning-Based Spatiotemporal Fusion Method for Combining Satellite Images with Different Resolutions

Abstract

1. Introduction

- (1) HDLSFM has a minimal input requirement: one fine–coarse image pair plus a coarse image at the prediction date. Compared with deep learning (DL)-based spatiotemporal fusion (STF) methods, which use at least two fine–coarse image pairs, HDLSFM is more applicable in areas with severe cloud contamination, where cloud-free image pairs are scarce.

- (2) HDLSFM can predict complex land surface temporal changes, including both phenological change (PC) and landcover change (LC).

- (3) HDLSFM is robust to radiation differences and to the time interval between the prediction date and the base date. This makes it effective for generating fused time-series data from a limited number of fine–coarse image pairs.
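To make the input requirement in (1) concrete, the sketch below implements the simplest prediction that uses exactly those three inputs: it adds the coarse-resolution temporal difference to the fine image at the base date. This is a generic linear baseline, not HDLSFM. The function names, the integer `scale` factor and the nearest-neighbour upsampling are illustrative assumptions, and the example runs on synthetic data.

```python
import numpy as np


def upsample_nearest(coarse, scale):
    """Nearest-neighbour upsampling of a 2-D coarse band onto the fine grid."""
    return np.repeat(np.repeat(coarse, scale, axis=0), scale, axis=1)


def one_pair_baseline(fine_t1, coarse_t1, coarse_t2, scale):
    """Hypothetical one-pair baseline: F(t2) = F(t1) + upsample(C(t2) - C(t1)).

    fine_t1:   fine-resolution band at the base date, shape (H, W)
    coarse_t1: coarse band at the base date, shape (H // scale, W // scale)
    coarse_t2: coarse band at the prediction date, same shape as coarse_t1
    scale:     ASSUMED integer ratio of coarse to fine pixel size
    """
    fine_t1 = np.asarray(fine_t1, dtype=float)
    delta = np.asarray(coarse_t2, dtype=float) - np.asarray(coarse_t1, dtype=float)
    change = upsample_nearest(delta, scale)
    if change.shape != fine_t1.shape:
        raise ValueError("fine grid must be exactly `scale` times the coarse grid")
    return fine_t1 + change


# Synthetic example: an 8 x 8 fine band and 2 x 2 coarse pixels (scale = 4)
rng = np.random.default_rng(0)
fine_t1 = rng.uniform(0.0, 0.3, size=(8, 8))
# Simulate each coarse pixel as the mean of its 4 x 4 block of fine pixels
coarse_t1 = fine_t1.reshape(2, 4, 2, 4).mean(axis=(1, 3))
coarse_t2 = coarse_t1 + 0.05  # uniform reflectance increase between the two dates
pred = one_pair_baseline(fine_t1, coarse_t1, coarse_t2, scale=4)
print(pred.shape)
```

Because the change signal comes only from the coarse images, this baseline spreads each coarse-pixel change uniformly over the fine pixels inside it and cannot localize a landcover change smaller than one coarse pixel.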

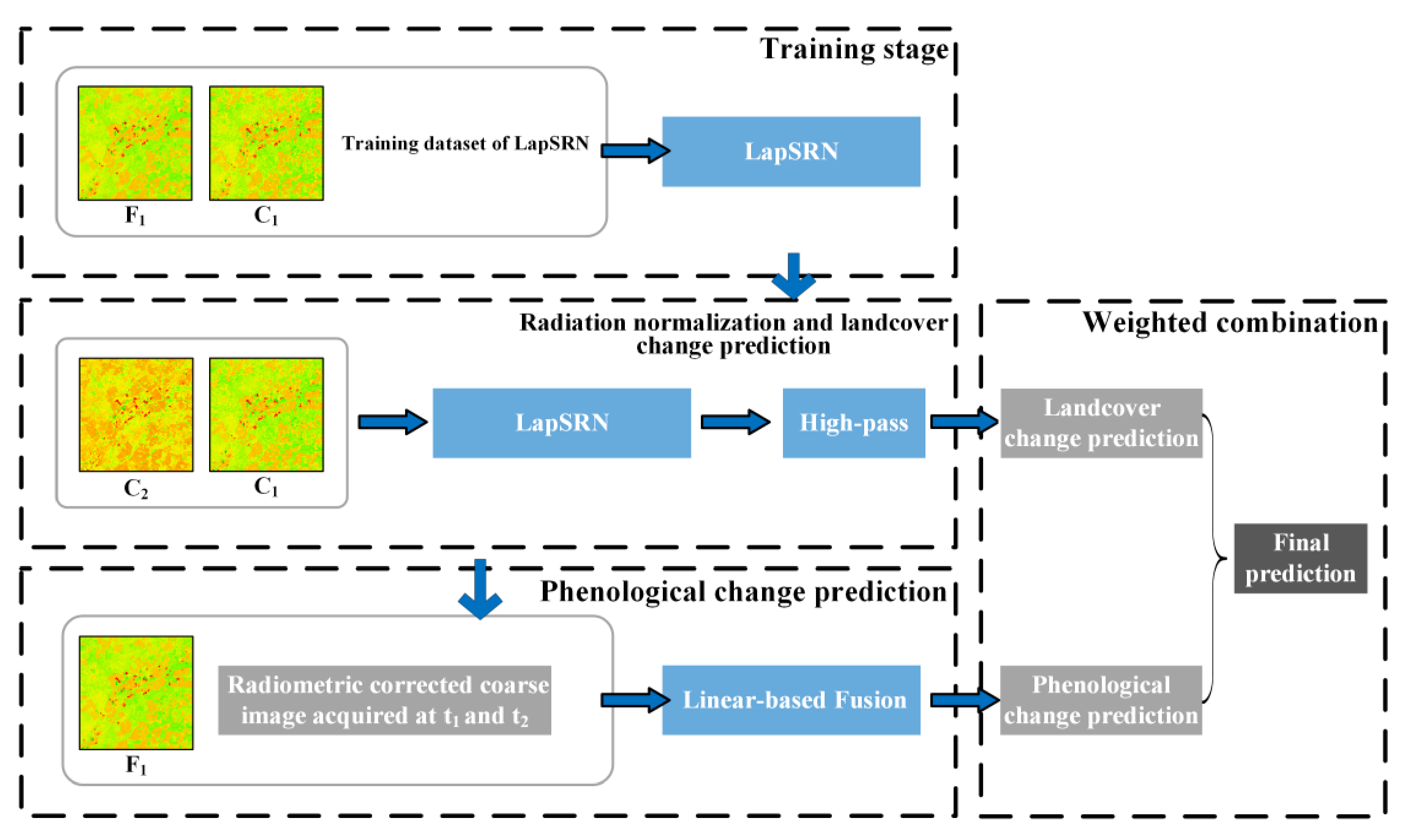

2. Methods

2.1. Radiation Normalization and Landcover Change Prediction

2.1.1. Radiation Normalization

2.1.2. Landcover Change Prediction

2.1.3. Integration of Radiation Normalization and Landcover Change Prediction

2.2. Linear-Based Fusion for Phenological Prediction

2.3. Combination of Linear- and Deep Learning-Based STF

3. Experimental Setup and Datasets

3.1. Experimental Setup

3.1.1. Experiment I: Effectiveness of HDLSFM in Landcover Change Prediction

3.1.2. Experiment II: Effectiveness of HDLSFM in Phenological Change Prediction

3.1.3. Experiment III: Effectiveness of HDLSFM in Generating Fused Time-Series Data

3.1.4. Experiment IV: Effectiveness of HDLSFM on Other Types of Satellite Images

3.2. Comparison and Evaluation Strategy

4. Experimental Results

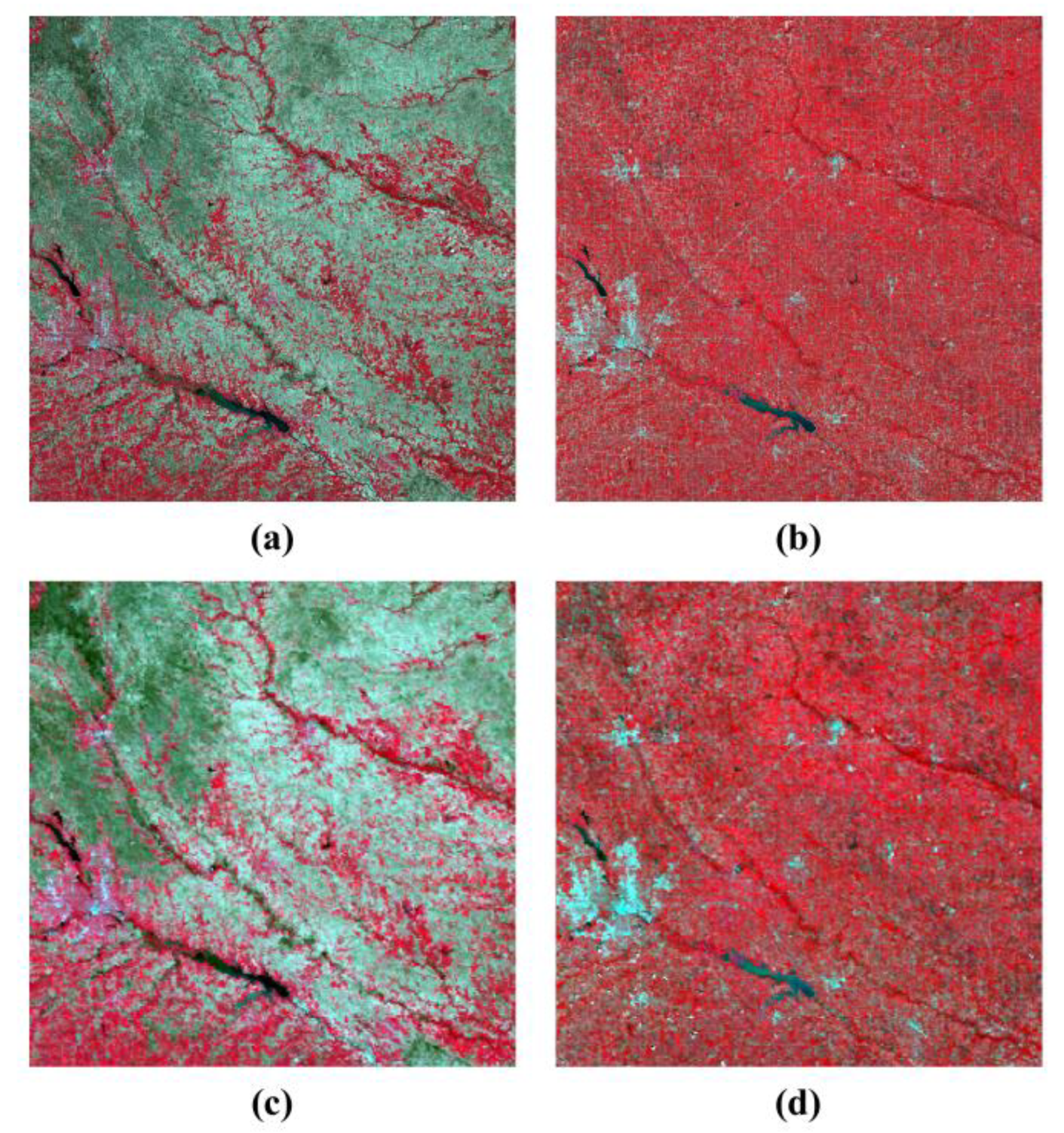

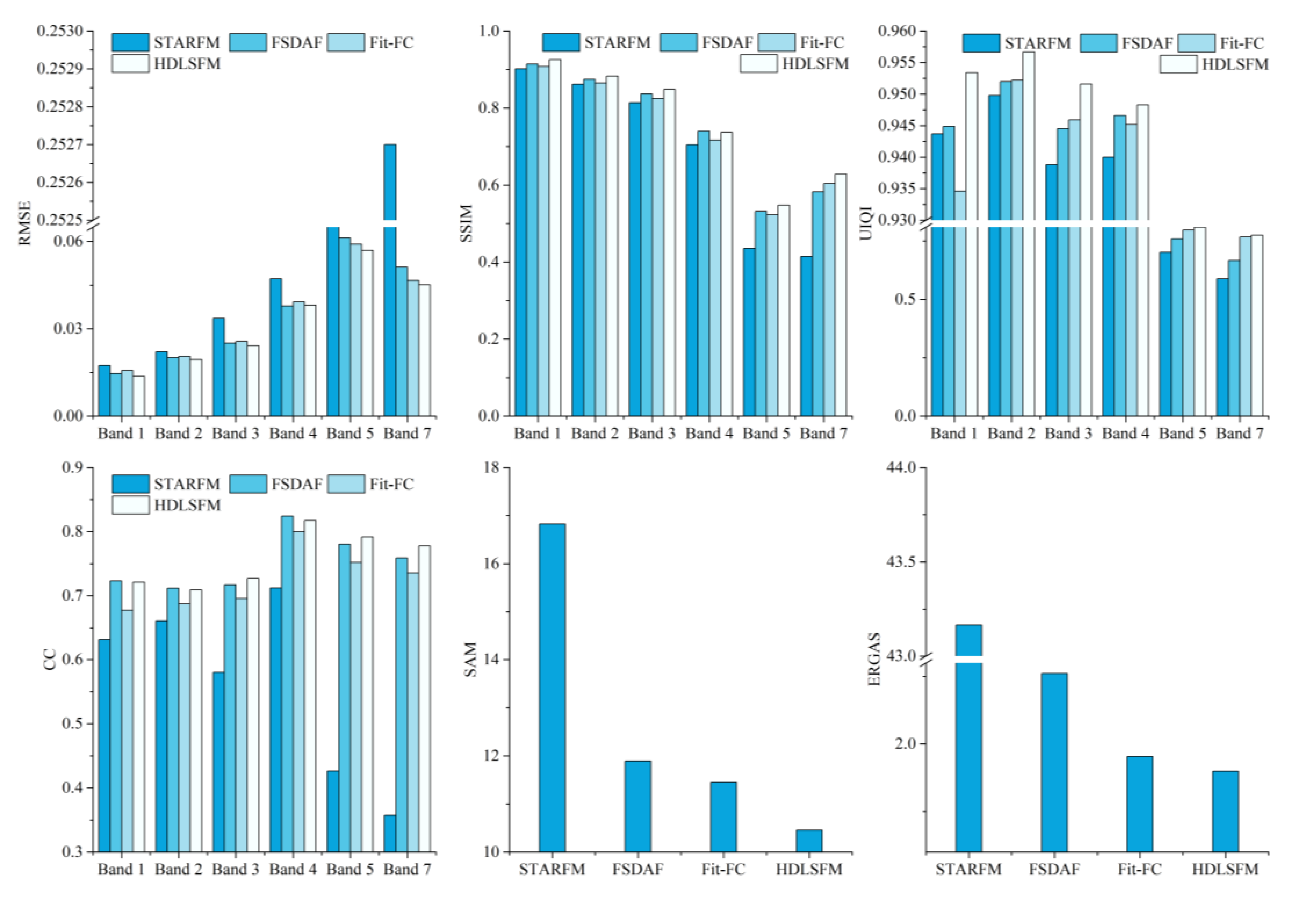

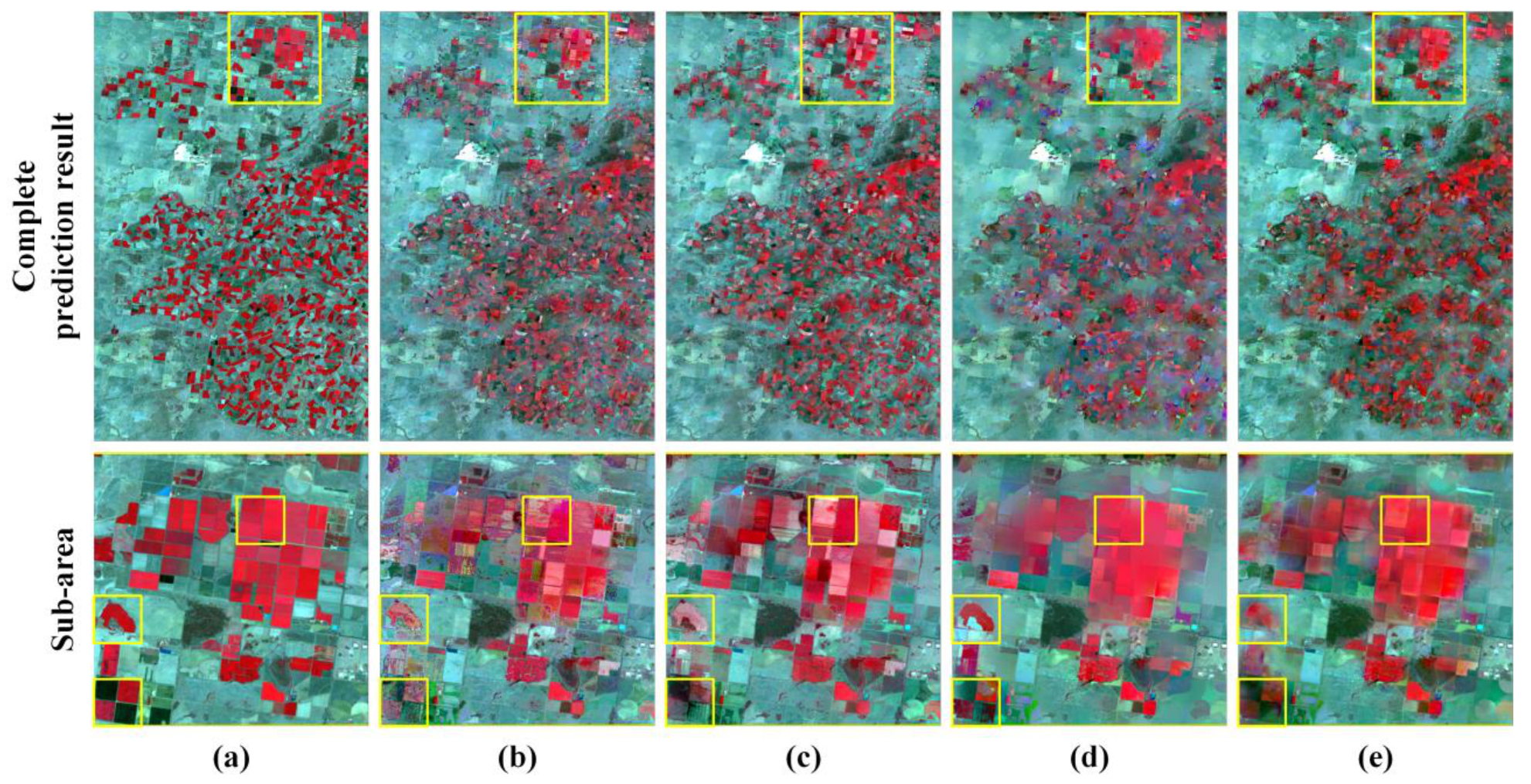

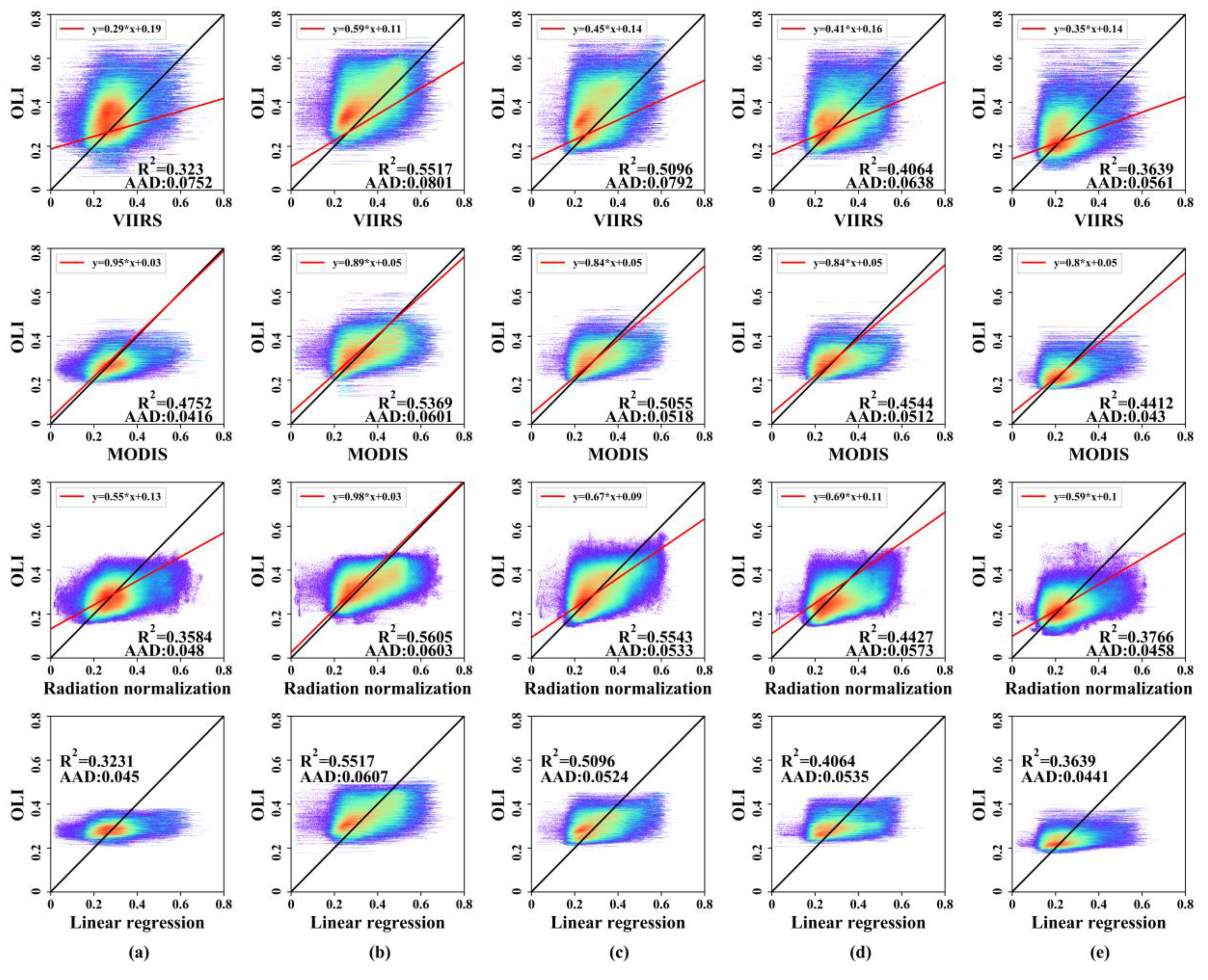

4.1. Experiment I

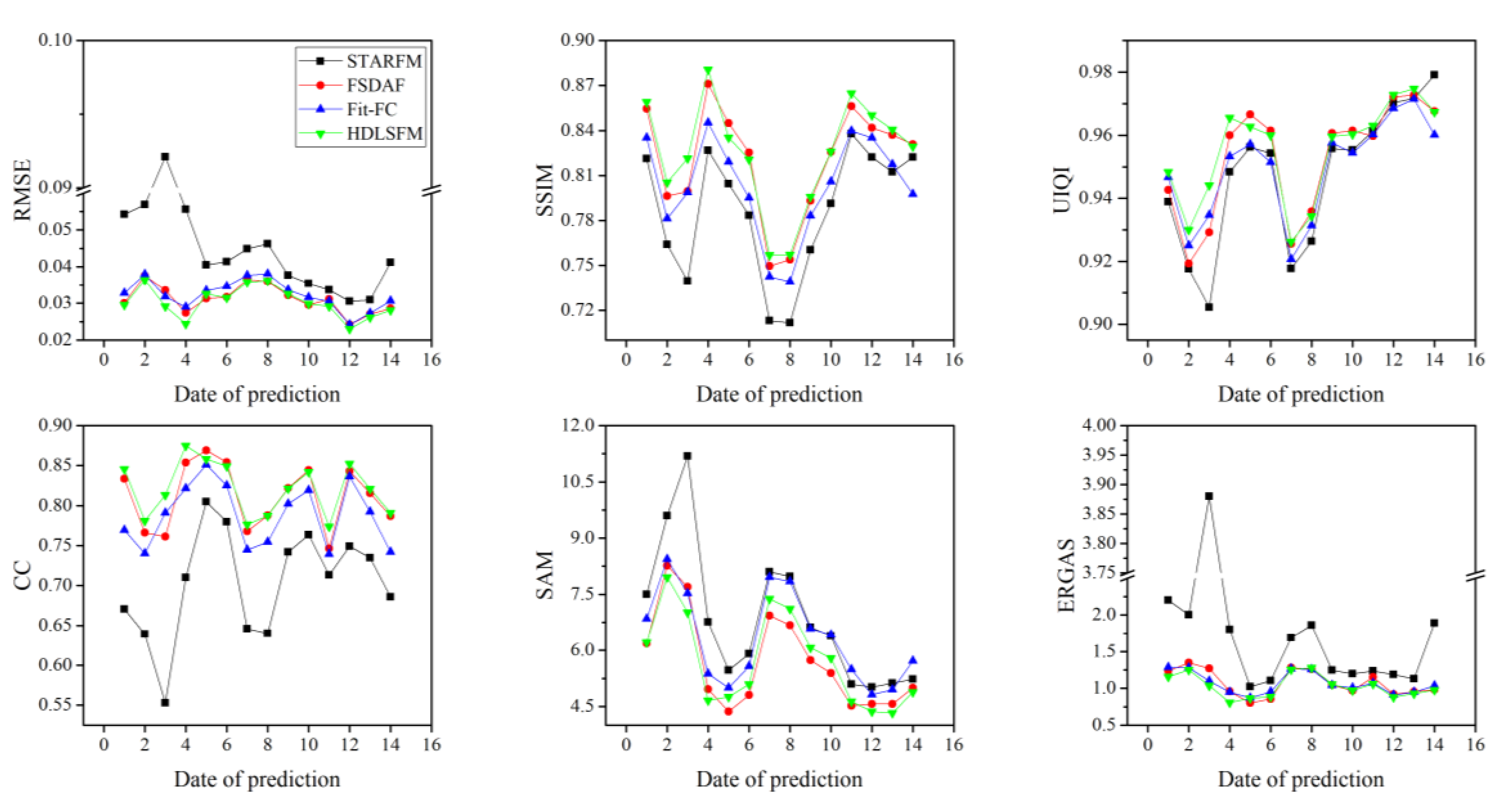

4.2. Experiment II

4.3. Experiment III

4.4. Experiment IV

5. Discussion

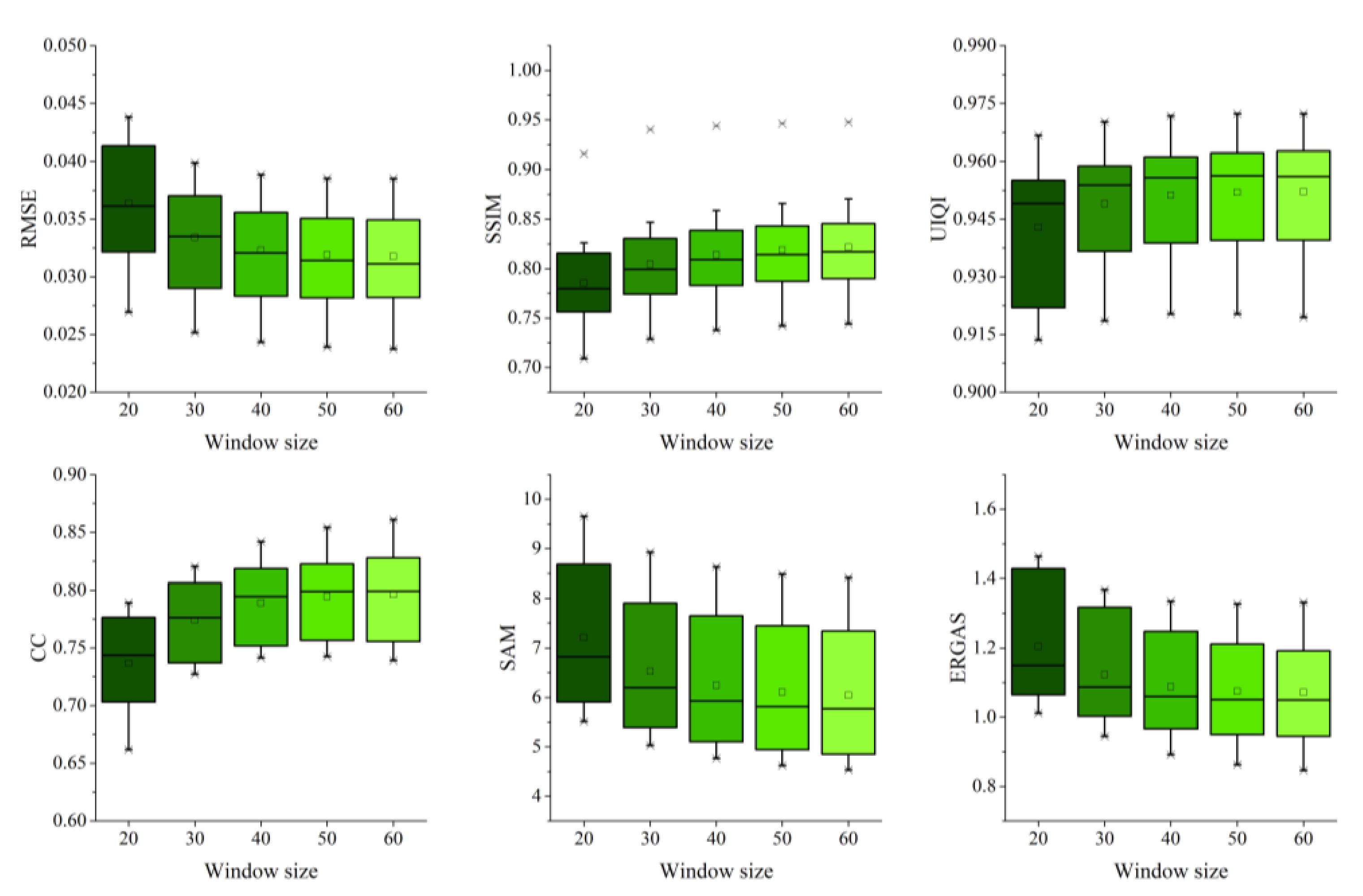

5.1. Prediction Performance Sensitivity to Moving Window Size

5.2. High-Pass Modulation in Spatial Detail Reconstruction

5.3. Fusion of LC Prediction with PC Prediction

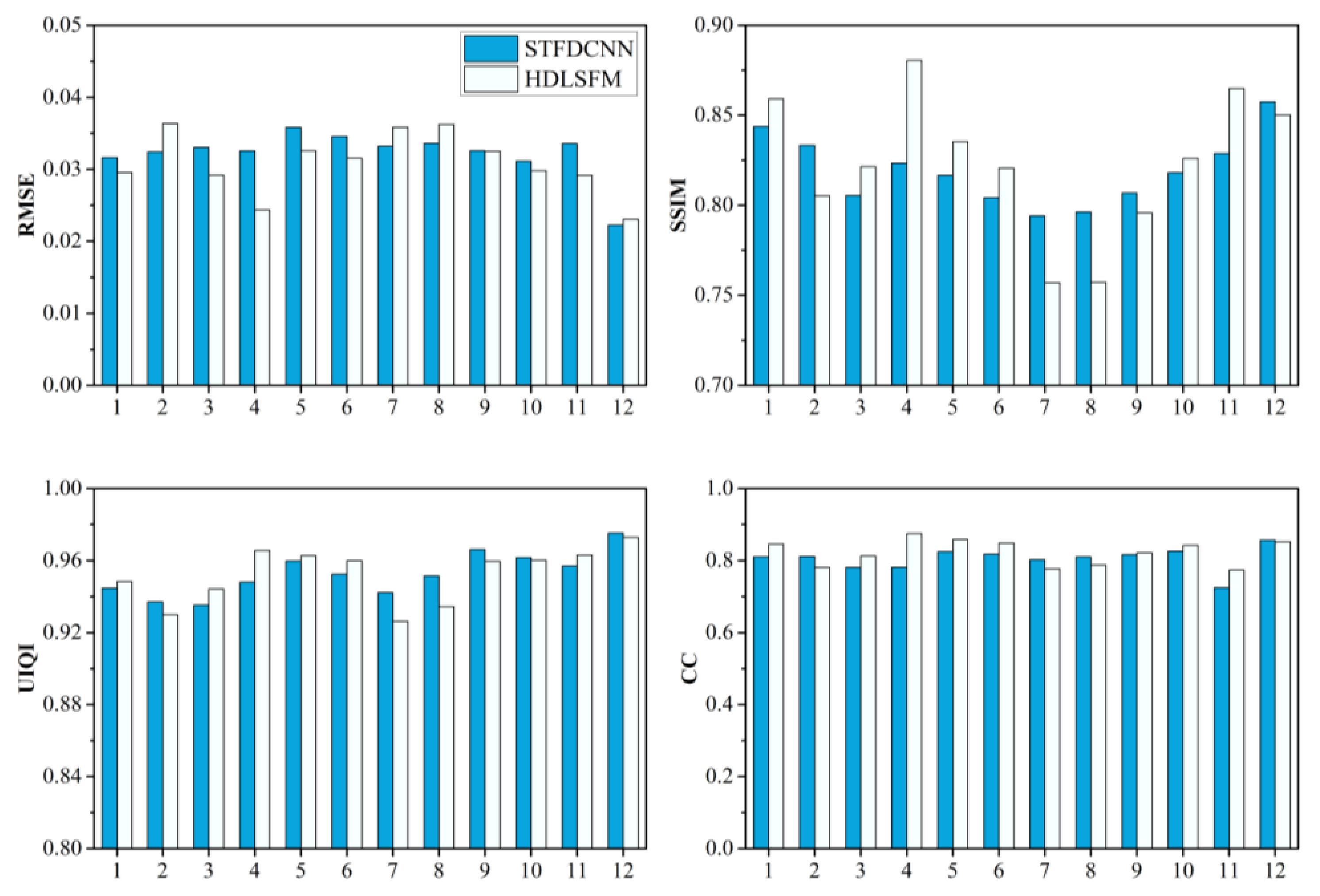

5.4. Comparison with Other DL-Based STF Methods

5.5. The Applicability of HDLSFM

5.6. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Reference Date | Function in HDLSFM | Function in STFDCNN |
|---|---|---|
| 16 April 2004 | Prior image | Prior image |
| 2 May 2004 | Reference image | Reference image |
| 5 July 2004 | Reference image | Reference image |
| 6 August 2004 | Reference image | Reference image |
| 22 August 2004 | Reference image | Reference image |
| 25 October 2004 | Reference image | Reference image |
| 26 November 2004 | Reference image | Reference image |
| 12 December 2004 | Reference image | Reference image |
| 28 December 2004 | Reference image | Reference image |
| 13 January 2005 | Reference image | Reference image |
| 29 January 2005 | Reference image | Reference image |
| 14 February 2005 | Reference image | Reference image |
| 2 March 2005 | Reference image | Reference image |
| 3 April 2005 | -- | Prior image |

References

- Wang, Z.; Schaaf, C.B.; Sun, Q.; Kim, J.; Erb, A.M.; Gao, F.; Román, M.O.; Yang, Y.; Petroy, S.; Taylor, J.R.; et al. Monitoring land surface albedo and vegetation dynamics using high spatial and temporal resolution synthetic time series from Landsat and the MODIS BRDF/NBAR/albedo product. Int. J. Appl. Earth Obs. Geoinf. 2017, 59, 104–117.

- Suess, S.; van der Linden, S.; Okujeni, A.; Griffiths, P.; Leitão, P.J.; Schwieder, M.; Hostert, P. Characterizing 32 years of shrub cover dynamics in southern Portugal using annual Landsat composites and machine learning regression modeling. Remote Sens. Environ. 2018, 219, 353–364.

- Zhang, X.; Wang, J.; Henebry, G.M.; Gao, F. Development and evaluation of a new algorithm for detecting 30 m land surface phenology from VIIRS and HLS time series. ISPRS J. Photogramm. Remote Sens. 2020, 161, 37–51.

- Arévalo, P.; Olofsson, P.; Woodcock, C.E. Continuous monitoring of land change activities and post-disturbance dynamics from Landsat time series: A test methodology for REDD+ reporting. Remote Sens. Environ. 2019.

- Hamunyela, E.; Brandt, P.; Shirima, D.; Do, H.T.T.; Herold, M.; Roman-Cuesta, R.M. Space-time detection of deforestation, forest degradation and regeneration in montane forests of Eastern Tanzania. Int. J. Appl. Earth Obs. Geoinf. 2020, 88, 102063.

- Interdonato, R.; Ienco, D.; Gaetano, R.; Ose, K. DuPLO: A DUal view Point deep Learning architecture for time series classification. ISPRS J. Photogramm. Remote Sens. 2019, 149, 91–104.

- Ghrefat, H.A.; Goodell, P.C. Land cover mapping at Alkali Flat and Lake Lucero, White Sands, New Mexico, USA using multi-temporal and multi-spectral remote sensing data. Int. J. Appl. Earth Obs. Geoinf. 2011, 13, 616–625.

- Jia, D.; Gao, P.; Cheng, C.; Ye, S. Multiple-feature-driven co-training method for crop mapping based on remote sensing time series imagery. Int. J. Remote Sens. 2020, 41, 8096–8120.

- Lees, K.J.; Quaife, T.; Artz, R.R.E.; Khomik, M.; Clark, J.M. Potential for using remote sensing to estimate carbon fluxes across northern peatlands—A review. Sci. Total Environ. 2018, 615, 857–874.

- Gao, F.; Masek, J.; Schwaller, M.; Hall, F. On the blending of the Landsat and MODIS surface reflectance: Predicting daily Landsat surface reflectance. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2207–2218.

- Gao, F.; Hilker, T.; Zhu, X.; Anderson, M.; Masek, J.; Wang, P.; Yang, Y. Fusing Landsat and MODIS Data for Vegetation Monitoring. IEEE Geosci. Remote Sens. Mag. 2015, 3, 47–60.

- Zhu, X.; Helmer, E.H.; Gao, F.; Liu, D.; Chen, J.; Lefsky, M.A. A flexible spatiotemporal method for fusing satellite images with different resolutions. Remote Sens. Environ. 2016, 172, 165–177.

- Senf, C.; Leitão, P.J.; Pflugmacher, D.; van der Linden, S.; Hostert, P. Mapping land cover in complex Mediterranean landscapes using Landsat: Improved classification accuracies from integrating multi-seasonal and synthetic imagery. Remote Sens. Environ. 2015, 156, 527–536.

- Jia, K.; Liang, S.; Zhang, N.; Wei, X.; Gu, X.; Zhao, X.; Yao, Y.; Xie, X. Land cover classification of finer resolution remote sensing data integrating temporal features from time series coarser resolution data. ISPRS J. Photogramm. Remote Sens. 2014, 93, 49–55.

- Chen, B.; Chen, L.; Huang, B.; Michishita, R.; Xu, B. Dynamic monitoring of the Poyang Lake wetland by integrating Landsat and MODIS observations. ISPRS J. Photogramm. Remote Sens. 2018, 139, 75–87.

- Wang, C.; Lei, S.; Elmore, J.A.; Jia, D.; Mu, S. Integrating Temporal Evolution with Cellular Automata for Simulating Land Cover Change. Remote Sens. 2019, 11, 301.

- Jia, D.; Wang, C.; Lei, S. Semisupervised GDTW kernel-based fuzzy c-means algorithm for mapping vegetation dynamics in mining region using normalized difference vegetation index time series. J. Appl. Remote Sens. 2018, 12, 016028.

- Li, Y.; Huang, C.; Hou, J.; Gu, J.; Zhu, G.; Li, X. Mapping daily evapotranspiration based on spatiotemporal fusion of ASTER and MODIS images over irrigated agricultural areas in the Heihe River Basin, Northwest China. Agric. For. Meteorol. 2017, 244–245, 82–97.

- Ke, Y.; Im, J.; Park, S.; Gong, H. Spatiotemporal downscaling approaches for monitoring 8-day 30m actual evapotranspiration. ISPRS J. Photogramm. Remote Sens. 2017, 126, 79–93.

- Ma, Y.; Liu, S.; Song, L.; Xu, Z.; Liu, Y.; Xu, T.; Zhu, Z. Estimation of daily evapotranspiration and irrigation water efficiency at a Landsat-like scale for an arid irrigation area using multi-source remote sensing data. Remote Sens. Environ. 2018, 216, 715–734.

- Shen, H.; Huang, L.; Zhang, L.; Wu, P.; Zeng, C. Long-term and fine-scale satellite monitoring of the urban heat island effect by the fusion of multi-temporal and multi-sensor remote sensed data: A 26-year case study of the city of Wuhan in China. Remote Sens. Environ. 2016, 172, 109–125.

- Xia, H.; Chen, Y.; Li, Y.; Quan, J. Combining kernel-driven and fusion-based methods to generate daily high-spatial-resolution land surface temperatures. Remote Sens. Environ. 2019, 224, 259–274.

- Weng, Q.; Fu, P.; Gao, F. Generating daily land surface temperature at Landsat resolution by fusing Landsat and MODIS data. Remote Sens. Environ. 2014, 145, 55–67.

- Quan, J.; Zhan, W.; Ma, T.; Du, Y.; Guo, Z.; Qin, B. An integrated model for generating hourly Landsat-like land surface temperatures over heterogeneous landscapes. Remote Sens. Environ. 2018, 206, 403–423.

- Ghosh, R.; Gupta, P.K.; Tolpekin, V.; Srivastav, S.K. An enhanced spatiotemporal fusion method – Implications for coal fire monitoring using satellite imagery. Int. J. Appl. Earth Obs. Geoinf. 2020, 88, 102056.

- Houborg, R.; McCabe, M.F.; Gao, F. A Spatio-Temporal Enhancement Method for medium resolution LAI (STEM-LAI). Int. J. Appl. Earth Obs. Geoinf. 2016, 47, 15–29.

- Li, Z.; Huang, C.; Zhu, Z.; Gao, F.; Tang, H.; Xin, X.; Ding, L.; Shen, B.; Liu, J.; Chen, B.; et al. Mapping daily leaf area index at 30 m resolution over a meadow steppe area by fusing Landsat, Sentinel-2A and MODIS data. Int. J. Remote Sens. 2018, 39, 9025–9053.

- Kimm, H.; Guan, K.; Jiang, C.; Peng, B.; Gentry, L.F.; Wilkin, S.C.; Wang, S.; Cai, Y.; Bernacchi, C.J.; Peng, J.; et al. Deriving high-spatiotemporal-resolution leaf area index for agroecosystems in the U.S. Corn Belt using Planet Labs CubeSat and STAIR fusion data. Remote Sens. Environ. 2020, 239, 111615.

- Long, D.; Bai, L.; Yan, L.; Zhang, C.; Yang, W.; Lei, H.; Quan, J.; Meng, X.; Shi, C. Generation of spatially complete and daily continuous surface soil moisture of high spatial resolution. Remote Sens. Environ. 2019, 233, 111364.

- Guo, S.; Sun, B.; Zhang, H.K.; Liu, J.; Chen, J.; Wang, J.; Jiang, X.; Yang, Y. MODIS ocean color product downscaling via spatio-temporal fusion and regression: The case of chlorophyll-a in coastal waters. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 340–361.

- Zhu, X.; Chen, J.; Gao, F.; Chen, X.; Masek, J.G. An enhanced spatial and temporal adaptive reflectance fusion model for complex heterogeneous regions. Remote Sens. Environ. 2010, 114, 2610–2623.

- Wang, Q.; Atkinson, P.M. Spatio-temporal fusion for daily Sentinel-2 images. Remote Sens. Environ. 2018, 204, 31–42.

- Kwan, C.; Budavari, B.; Gao, F.; Zhu, X. A Hybrid Color Mapping Approach to Fusing MODIS and Landsat Images for Forward Prediction. Remote Sens. 2018, 10, 520.

- Cheng, Q.; Liu, H.; Shen, H.; Wu, P.; Zhang, L. A Spatial and Temporal Nonlocal Filter-Based Data Fusion Method. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4476–4488.

- Ping, B.; Meng, Y.; Su, F. An Enhanced Linear Spatio-Temporal Fusion Method for Blending Landsat and MODIS Data to Synthesize Landsat-Like Imagery. Remote Sens. 2018, 10.

- Zhu, X.; Cai, F.; Tian, J.; Williams, T.K. Spatiotemporal Fusion of Multisource Remote Sensing Data: Literature Survey, Taxonomy, Principles, Applications, and Future Directions. Remote Sens. 2018, 10.

- Zhukov, B.; Oertel, D.; Lanzl, F.; Reinhackel, G. Unmixing-based multisensor multiresolution image fusion. IEEE Trans. Geosci. Remote Sens. 1999, 37, 1212–1226.

- Zurita-Milla, R.; Clevers, J.G.P.W.; Schaepman, M.E. Unmixing-Based Landsat TM and MERIS FR Data Fusion. IEEE Geosci. Remote Sens. Lett. 2008, 5, 453–457.

- Wu, M.; Niu, Z.; Wang, C.; Wu, C.; Wang, L. Use of MODIS and Landsat time series data to generate high-resolution temporal synthetic Landsat data using a spatial and temporal reflectance fusion Model. J. Appl. Remote Sens. 2012, 6, 063507.

- Wu, M.; Huang, W.; Niu, Z.; Wang, C. Generating Daily Synthetic Landsat Imagery by Combining Landsat and MODIS Data. Sensors 2015, 15, 24002–24025.

- Maselli, F.; Rembold, F. Integration of LAC and GAC NDVI data to improve vegetation monitoring in semi-arid environments. Int. J. Remote Sens. 2002, 23, 2475–2488.

- Liu, X.; Deng, C.; Chanussot, J.; Hong, D.; Zhao, B. StfNet: A Two-Stream Convolutional Neural Network for Spatiotemporal Image Fusion. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6552–6564.

- Zhou, J.; Chen, J.; Chen, X.; Zhu, X.; Qiu, Y.; Song, H.; Rao, Y.; Zhang, C.; Cao, X.; Cui, X. Sensitivity of six typical spatiotemporal fusion methods to different influential factors: A comparative study for a normalized difference vegetation index time series reconstruction. Remote Sens. Environ. 2021, 252, 112130.

- Liu, M.; Yang, W.; Zhu, X.; Chen, J.; Chen, X.; Yang, L.; Helmer, E.H. An Improved Flexible Spatiotemporal DAta Fusion (IFSDAF) method for producing high spatiotemporal resolution normalized difference vegetation index time series. Remote Sens. Environ. 2019, 227, 74–89.

- Li, X.; Foody, G.M.; Boyd, D.S.; Ge, Y.; Zhang, Y.; Du, Y.; Ling, F. SFSDAF: An enhanced FSDAF that incorporates sub-pixel class fraction change information for spatio-temporal image fusion. Remote Sens. Environ. 2020, 237, 111537.

- Guo, D.; Shi, W.; Hao, M.; Zhu, X. FSDAF 2.0: Improving the performance of retrieving land cover changes and preserving spatial details. Remote Sens. Environ. 2020, 248, 111973.

- Huang, B.; Song, H. Spatiotemporal Reflectance Fusion via Sparse Representation. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3707–3716.

- Song, H.; Liu, Q.; Wang, G.; Hang, R.; Huang, B. Spatiotemporal Satellite Image Fusion Using Deep Convolutional Neural Networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 821–829.

- Wu, B.; Huang, B.; Zhang, L. An Error-Bound-Regularized Sparse Coding for Spatiotemporal Reflectance Fusion. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6791–6803.

- Wei, J.; Wang, L.; Liu, P.; Song, W. Spatiotemporal Fusion of Remote Sensing Images with Structural Sparsity and Semi-Coupled Dictionary Learning. Remote Sens. 2017, 9, 21.

- Wei, J.; Wang, L.; Liu, P.; Chen, X.; Li, W.; Zomaya, A.Y. Spatiotemporal Fusion of MODIS and Landsat-7 Reflectance Images via Compressed Sensing. IEEE Trans. Geosci. Remote Sens. 2017, 55, 7126–7139.

- Zheng, Y.; Song, H.; Sun, L.; Wu, Z.; Jeon, B. Spatiotemporal Fusion of Satellite Images via Very Deep Convolutional Networks. Remote Sens. 2019, 11.

- Jia, D.; Song, C.; Cheng, C.; Shen, S.; Ning, L.; Hui, C. A Novel Deep Learning-Based Spatiotemporal Fusion Method for Combining Satellite Images with Different Resolutions Using a Two-Stream Convolutional Neural Network. Remote Sens. 2020, 12.

- Li, Y.; Li, J.; He, L.; Chen, J.; Plaza, A. A new sensor bias-driven spatio-temporal fusion model based on convolutional neural networks. Sci. China Inf. Sci. 2020, 63, 140302.

- Tan, Z.; Di, L.; Zhang, M.; Guo, L.; Gao, M. An Enhanced Deep Convolutional Model for Spatiotemporal Image Fusion. Remote Sens. 2019, 11.

- Lai, W.; Huang, J.; Ahuja, N.; Yang, M. Fast and Accurate Image Super-Resolution with Deep Laplacian Pyramid Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 2599–2613.

- Yang, L.; Song, J.; Han, L.; Wang, X.; Wang, J. Reconstruction of High-Temporal- and High-Spatial-Resolution Reflectance Datasets Using Difference Construction and Bayesian Unmixing. Remote Sens. 2020, 12.

- Chander, G.; Hewison, T.J.; Fox, N.; Wu, X.; Xiong, X.; Blackwell, W.J. Overview of Intercalibration of Satellite Instruments. IEEE Trans. Geosci. Remote Sens. 2013, 51, 1056–1080.

- Roy, D.P.; Ju, J.; Lewis, P.; Schaaf, C.; Gao, F.; Hansen, M.; Lindquist, E. Multi-temporal MODIS–Landsat data fusion for relative radiometric normalization, gap filling, and prediction of Landsat data. Remote Sens. Environ. 2008, 112, 3112–3130.

- Sun, Y.; Zhang, H.; Shi, W. A spatio-temporal fusion method for remote sensing data using a linear injection model and local neighbourhood information. Int. J. Remote Sens. 2019, 40, 2965–2985.

- Emelyanova, I.V.; McVicar, T.R.; Van Niel, T.G.; Li, L.T.; van Dijk, A.I.J.M. Assessing the accuracy of blending Landsat–MODIS surface reflectances in two landscapes with contrasting spatial and temporal dynamics: A framework for algorithm selection. Remote Sens. Environ. 2013, 133, 193–209.

- Chen, Y.; Cao, R.; Chen, J.; Zhu, X.; Zhou, J.; Wang, G.; Shen, M.; Chen, X.; Yang, W. A New Cross-Fusion Method to Automatically Determine the Optimal Input Image Pairs for NDVI Spatiotemporal Data Fusion. IEEE Trans. Geosci. Remote Sens. 2020, 58, 5179–5194.

- Zhai, H.; Huang, F.; Qi, H. Generating High Resolution LAI Based on a Modified FSDAF Model. Remote Sens. 2020, 12.

- Li, P.; Ke, Y.; Wang, D.; Ji, H.; Chen, S.; Chen, M.; Lyu, M.; Zhou, D. Human impact on suspended particulate matter in the Yellow River Estuary, China: Evidence from remote sensing data fusion using an improved spatiotemporal fusion method. Sci. Total Environ. 2021, 750, 141612.

- Yang, H.; Xi, C.; Zhao, X.; Mao, P.; Wang, Z.; Shi, Y.; He, T.; Li, Z. Measuring the Urban Land Surface Temperature Variations Under Zhengzhou City Expansion Using Landsat-Like Data. Remote Sens. 2020, 12.

- Zhang, L.; Weng, Q.; Shao, Z. An evaluation of monthly impervious surface dynamics by fusing Landsat and MODIS time series in the Pearl River Delta, China, from 2000 to 2015. Remote Sens. Environ. 2017, 201, 99–114.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

- Khan, M.M.; Alparone, L.; Chanussot, J. Pansharpening Quality Assessment Using the Modulation Transfer Functions of Instruments. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3880–3891.

- Yuhas, R.H.; Goetz, A.F.H.; Boardman, J.W. Discrimination Among Semi-arid Landscape Endmembers Using the Spectral Angle Mapper (SAM) Algorithm. In Proceedings of the Annual JPL Airborne Earth Science Workshop, Pasadena, CA, USA, 1–5 June 1992; pp. 147–149.

- Song, H.; Huang, B. Spatiotemporal Satellite Image Fusion Through One-Pair Image Learning. IEEE Trans. Geosci. Remote Sens. 2013, 51, 1883–1896.

- Chen, B.; Huang, B.; Xu, B. A hierarchical spatiotemporal adaptive fusion model using one image pair. Int. J. Digit. Earth 2017, 10, 639–655.

- Zhao, Y.; Huang, B.; Song, H. A robust adaptive spatial and temporal image fusion model for complex land surface changes. Remote Sens. Environ. 2018, 208, 42–62.

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

| Known Fine Image Date | Prediction | Prediction Date |
|---|---|---|
| 8 October 2001 | 1st | 17 October 2001 |
| | 2nd | 2 November 2001 |
| 4 December 2001 | 3rd | 9 November 2001 |
| | 4th | 25 November 2001 |
| | 5th | 5 January 2002 |
| | 6th | 12 January 2002 |
| 11 April 2002 | 7th | 13 February 2002 |
| | 8th | 22 February 2002 |
| | 9th | 10 March 2002 |
| | 10th | 17 March 2002 |
| | 11th | 2 April 2002 |
| | 12th | 18 April 2002 |
| | 13th | 27 April 2002 |
| | 14th | 4 May 2002 |

| Sensor Identification | Function |
|---|---|
| LC080300342019060201T1-SC20200325041150 | Known image at base date |
| LC080300342019072001T1-SC20200325041156 | Prediction |
| LC080300342019090601T1-SC20200325041203 | Prediction |
| LC080300342019092201T1-SC20200325041142 | Prediction |
| LC080300342019100801T1-SC20200325041147 | Prediction |

| Index | Band | STARFM | FSDAF | Fit-FC | HDLSFM |
|---|---|---|---|---|---|
| RMSE (root mean square error) | Band 1 | 0.0185 | 0.0160 | 0.0193 | 0.0163 |
| | Band 2 | 0.0234 | 0.0223 | 0.0249 | 0.0233 |
| | Band 3 | 0.0298 | 0.0262 | 0.0297 | 0.0264 |
| | Band 4 | 0.0493 | 0.0358 | 0.0397 | 0.0353 |
| | Band 5 | 0.2684 | 0.0630 | 0.0652 | 0.0584 |
| | Band 7 | 0.3117 | 0.0536 | 0.0546 | 0.0457 |
| SSIM (structural similarity) | Band 1 | 0.8895 | 0.9041 | 0.8702 | 0.9096 |
| | Band 2 | 0.8447 | 0.8624 | 0.8255 | 0.8634 |
| | Band 3 | 0.8077 | 0.8344 | 0.7899 | 0.8385 |
| | Band 4 | 0.7142 | 0.7505 | 0.6997 | 0.7604 |
| | Band 5 | 0.3856 | 0.4993 | 0.4456 | 0.5014 |
| | Band 7 | 0.3748 | 0.5544 | 0.5381 | 0.5743 |
| UIQI (universal image quality index) | Band 1 | 0.9355 | 0.9375 | 0.9058 | 0.9388 |
| | Band 2 | 0.9424 | 0.9437 | 0.9352 | 0.9430 |
| | Band 3 | 0.9426 | 0.9456 | 0.9373 | 0.9487 |
| | Band 4 | 0.9326 | 0.9382 | 0.9326 | 0.9420 |
| | Band 5 | 0.6452 | 0.6983 | 0.7349 | 0.7487 |
| | Band 7 | 0.5297 | 0.5834 | 0.7031 | 0.7121 |
| CC (correlation coefficient) | Band 1 | 0.6120 | 0.6958 | 0.6356 | 0.6988 |
| | Band 2 | 0.7010 | 0.7308 | 0.6610 | 0.7127 |
| | Band 3 | 0.6551 | 0.7353 | 0.6453 | 0.7348 |
| | Band 4 | 0.6541 | 0.8224 | 0.7540 | 0.8190 |
| | Band 5 | 0.4170 | 0.7421 | 0.6310 | 0.7527 |
| | Band 7 | 0.3789 | 0.7062 | 0.5213 | 0.7386 |
| SAM (spectral angle mapper) | | 20.4060 | 13.0858 | 13.8030 | 11.7314 |
| ERGAS (erreur relative global adimensionnelle de synthèse) | | 24.1511 | 2.8994 | 2.4629 | 2.2766 |
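The accuracy indices in these tables are standard image-quality measures. The NumPy sketch below computes five of them under stated assumptions: UIQI is computed globally over a band rather than in sliding windows, SAM is the per-pixel spectral angle in degrees averaged over the image, and ERGAS takes a `ratio` parameter for the fine-to-coarse pixel size ratio (30/500 in the example is an assumed Landsat/MODIS value). The paper's exact conventions may differ, and the data are synthetic. SSIM is omitted here; scikit-image provides it as `skimage.metrics.structural_similarity`.

```python
import numpy as np


def rmse(pred, ref):
    """Root mean square error of one band."""
    pred, ref = np.asarray(pred, float), np.asarray(ref, float)
    return float(np.sqrt(np.mean((pred - ref) ** 2)))


def cc(pred, ref):
    """Pearson correlation coefficient of one band."""
    return float(np.corrcoef(np.ravel(pred), np.ravel(ref))[0, 1])


def uiqi(pred, ref):
    """Universal image quality index (Wang and Bovik form).

    ASSUMPTION: computed once over the whole band, not in sliding windows.
    """
    x = np.ravel(pred).astype(float)
    y = np.ravel(ref).astype(float)
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    return float(4 * cov * mx * my / ((x.var() + y.var()) * (mx**2 + my**2)))


def sam(pred, ref):
    """Mean spectral angle in degrees; inputs shaped (bands, rows, cols).

    ASSUMPTION: no pixel has an all-zero spectrum (norm would be zero).
    """
    p = np.asarray(pred, float)
    r = np.asarray(ref, float)
    p = p.reshape(p.shape[0], -1)
    r = r.reshape(r.shape[0], -1)
    cos = np.sum(p * r, axis=0) / (np.linalg.norm(p, axis=0) * np.linalg.norm(r, axis=0))
    return float(np.degrees(np.mean(np.arccos(np.clip(cos, -1.0, 1.0)))))


def ergas(pred, ref, ratio):
    """ERGAS = 100 * ratio * sqrt(mean_k (RMSE_k / mean(ref_k))^2).

    ASSUMPTION: `ratio` is fine pixel size / coarse pixel size.
    """
    p = np.asarray(pred, float)
    r = np.asarray(ref, float)
    terms = [(rmse(p[k], r[k]) / r[k].mean()) ** 2 for k in range(r.shape[0])]
    return float(100.0 * ratio * np.sqrt(np.mean(terms)))


# Usage with synthetic six-band reflectance (not the paper's images)
rng = np.random.default_rng(42)
ref = rng.uniform(0.05, 0.4, size=(6, 50, 50))
pred = ref + rng.normal(0.0, 0.01, size=ref.shape)
per_band_rmse = [rmse(pred[k], ref[k]) for k in range(6)]
print(per_band_rmse[0], cc(pred[0], ref[0]), uiqi(pred[0], ref[0]))
print(sam(pred, ref), ergas(pred, ref, ratio=30 / 500))
```

RMSE, CC and UIQI are reported per band, while SAM and ERGAS summarize all bands in one value, which matches the layout of the tables.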

| Index | Band | STARFM | FSDAF | Fit-FC | HDLSFM |
|---|---|---|---|---|---|
| RMSE | Band 1 | 0.0335 | 0.0194 | 0.0173 | 0.0156 |
| | Band 2 | 0.0280 | 0.0275 | 0.0235 | 0.0211 |
| | Band 3 | 0.0884 | 0.0438 | 0.0350 | 0.0343 |
| | Band 4 | 0.0547 | 0.0525 | 0.0508 | 0.0493 |
| | Band 5 | 0.0777 | 0.0610 | 0.0612 | 0.0561 |
| | Band 7 | 0.0825 | 0.0544 | 0.0514 | 0.0499 |
| SSIM | Band 1 | 0.8553 | 0.8446 | 0.8747 | 0.8884 |
| | Band 2 | 0.8087 | 0.7970 | 0.8381 | 0.8549 |
| | Band 3 | 0.6506 | 0.6612 | 0.7350 | 0.7502 |
| | Band 4 | 0.6299 | 0.6517 | 0.6886 | 0.6961 |
| | Band 5 | 0.5719 | 0.6149 | 0.6560 | 0.6592 |
| | Band 7 | 0.5706 | 0.6245 | 0.6597 | 0.6624 |
| UIQI | Band 1 | 0.8960 | 0.8705 | 0.8964 | 0.9155 |
| | Band 2 | 0.9260 | 0.9096 | 0.9294 | 0.9443 |
| | Band 3 | 0.8546 | 0.8497 | 0.8930 | 0.8996 |
| | Band 4 | 0.9642 | 0.9643 | 0.9653 | 0.9690 |
| | Band 5 | 0.9362 | 0.9444 | 0.9458 | 0.9544 |
| | Band 7 | 0.8929 | 0.8989 | 0.9151 | 0.9178 |
| CC | Band 1 | 0.3996 | 0.6186 | 0.6840 | 0.7413 |
| | Band 2 | 0.5326 | 0.5609 | 0.6503 | 0.7318 |
| | Band 3 | 0.3679 | 0.6097 | 0.7446 | 0.7604 |
| | Band 4 | 0.7515 | 0.7738 | 0.7870 | 0.8045 |
| | Band 5 | 0.6763 | 0.7888 | 0.7692 | 0.8090 |
| | Band 7 | 0.6691 | 0.8152 | 0.8226 | 0.8345 |
| SAM | | 9.1292 | 8.0004 | 8.1169 | 7.5171 |
| ERGAS | | 2.4817 | 1.5588 | 1.3590 | 1.2855 |

| Index | Band | LGC Site, Com-1 | LGC Site, Com-2 | CI Site, Com-1 | CI Site, Com-2 |
|---|---|---|---|---|---|
| RMSE | Band 1 | 0.0137 | 0.0142 | 0.0143 | 0.0145 |
| | Band 2 | 0.0195 | 0.0201 | 0.0169 | 0.0171 |
| | Band 3 | 0.0242 | 0.0248 | 0.0259 | 0.0260 |
| | Band 4 | 0.0381 | 0.0377 | 0.0498 | 0.0497 |
| | Band 5 | 0.0569 | 0.0576 | 0.0479 | 0.0479 |
| | Band 7 | 0.0451 | 0.0455 | 0.0483 | 0.0484 |
| SSIM | Band 1 | 0.9258 | 0.9202 | 0.9098 | 0.9068 |
| | Band 2 | 0.8828 | 0.8746 | 0.872 | 0.8693 |
| | Band 3 | 0.8486 | 0.8391 | 0.7597 | 0.7561 |
| | Band 4 | 0.7369 | 0.7377 | 0.5028 | 0.5029 |
| | Band 5 | 0.5482 | 0.5465 | 0.5426 | 0.5422 |
| | Band 7 | 0.6289 | 0.6227 | 0.5232 | 0.5226 |
| UIQI | Band 1 | 0.9534 | 0.9506 | 0.9464 | 0.9438 |
| | Band 2 | 0.9567 | 0.9544 | 0.9636 | 0.9629 |
| | Band 3 | 0.9516 | 0.9492 | 0.9088 | 0.9073 |
| | Band 4 | 0.9483 | 0.9488 | 0.9845 | 0.9845 |
| | Band 5 | 0.8089 | 0.8065 | 0.9694 | 0.9694 |
| | Band 7 | 0.7746 | 0.7704 | 0.9083 | 0.9087 |
| CC | Band 1 | 0.7210 | 0.7016 | 0.6295 | 0.6186 |
| | Band 2 | 0.7091 | 0.6917 | 0.6400 | 0.6299 |
| | Band 3 | 0.7271 | 0.7128 | 0.5740 | 0.5672 |
| | Band 4 | 0.8173 | 0.8199 | 0.6148 | 0.6149 |
| | Band 5 | 0.7915 | 0.786 | 0.5644 | 0.5649 |
| | Band 7 | 0.7777 | 0.7769 | 0.5099 | 0.5088 |
| SAM | | 10.4542 | 10.7636 | 7.9944 | 8.018 |
| ERGAS | | 1.8990 | 1.9349 | 1.5754 | 1.5824 |

| | STARFM | FSDAF | Fit-FC | HDLSFM |
|---|---|---|---|---|
| Experiment I | 302 | 2503 | 7650 | 30,450 |
| Experiment II | 1441 | 6003 | 18,804 | 46,100 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jia, D.; Cheng, C.; Song, C.; Shen, S.; Ning, L.; Zhang, T. A Hybrid Deep Learning-Based Spatiotemporal Fusion Method for Combining Satellite Images with Different Resolutions. Remote Sens. 2021, 13, 645. https://doi.org/10.3390/rs13040645
