Random Forest Classification of Inundation Following Hurricane Florence (2018) via L-Band Synthetic Aperture Radar and Ancillary Datasets

Abstract

1. Introduction

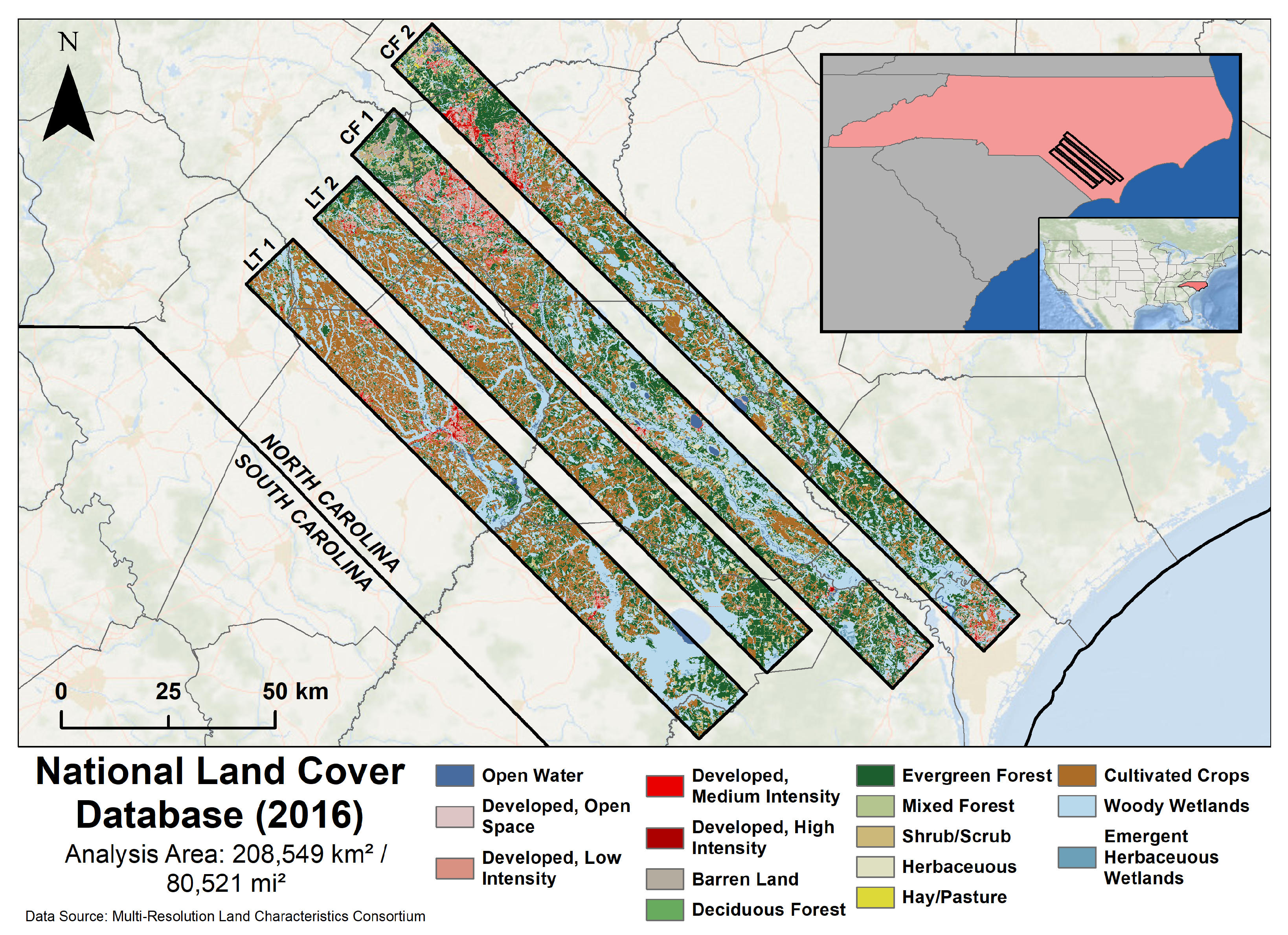

2. Study Area and Materials

2.1. Study Area and Event Background

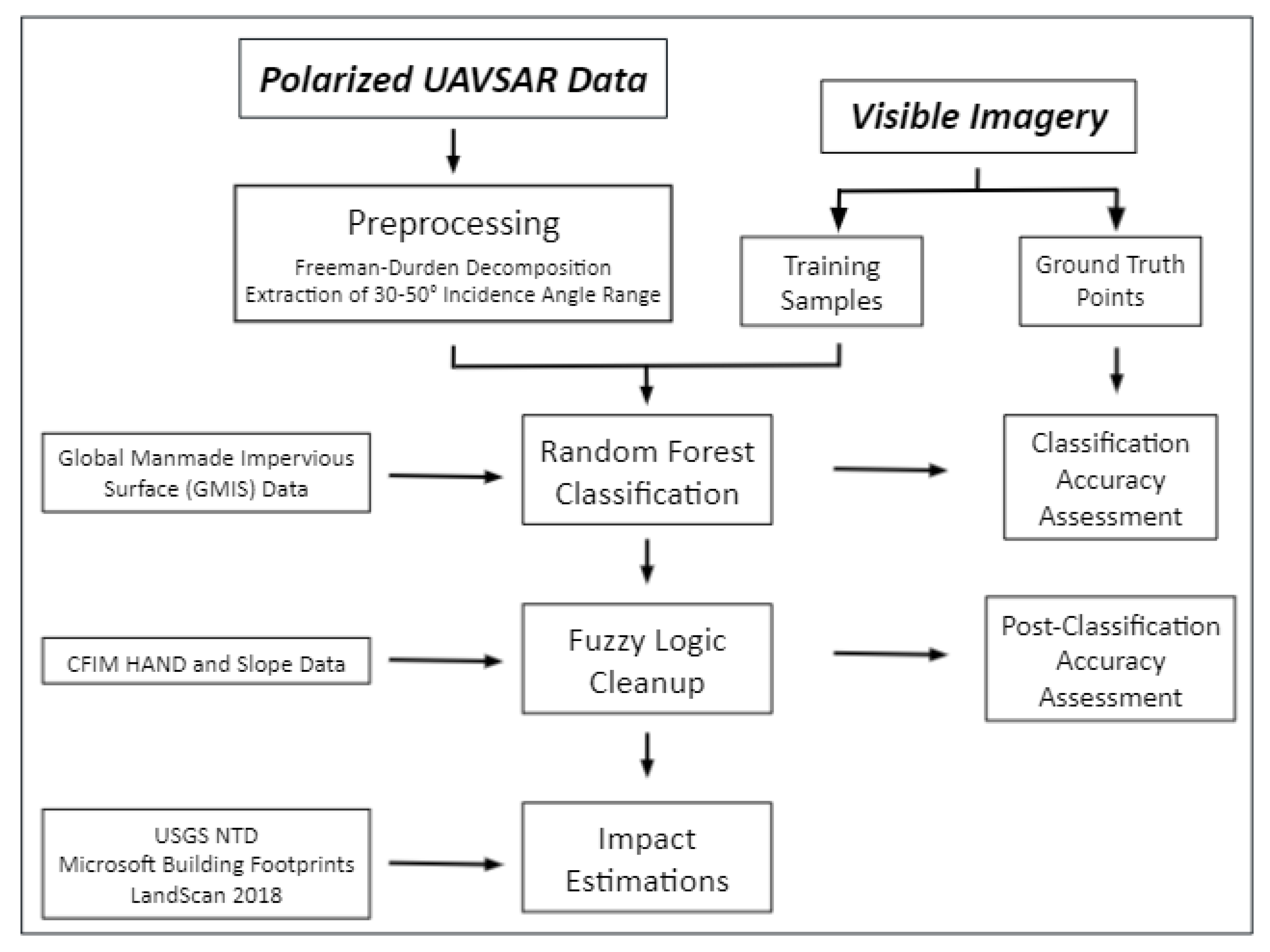

2.2. Datasets and Preprocessing

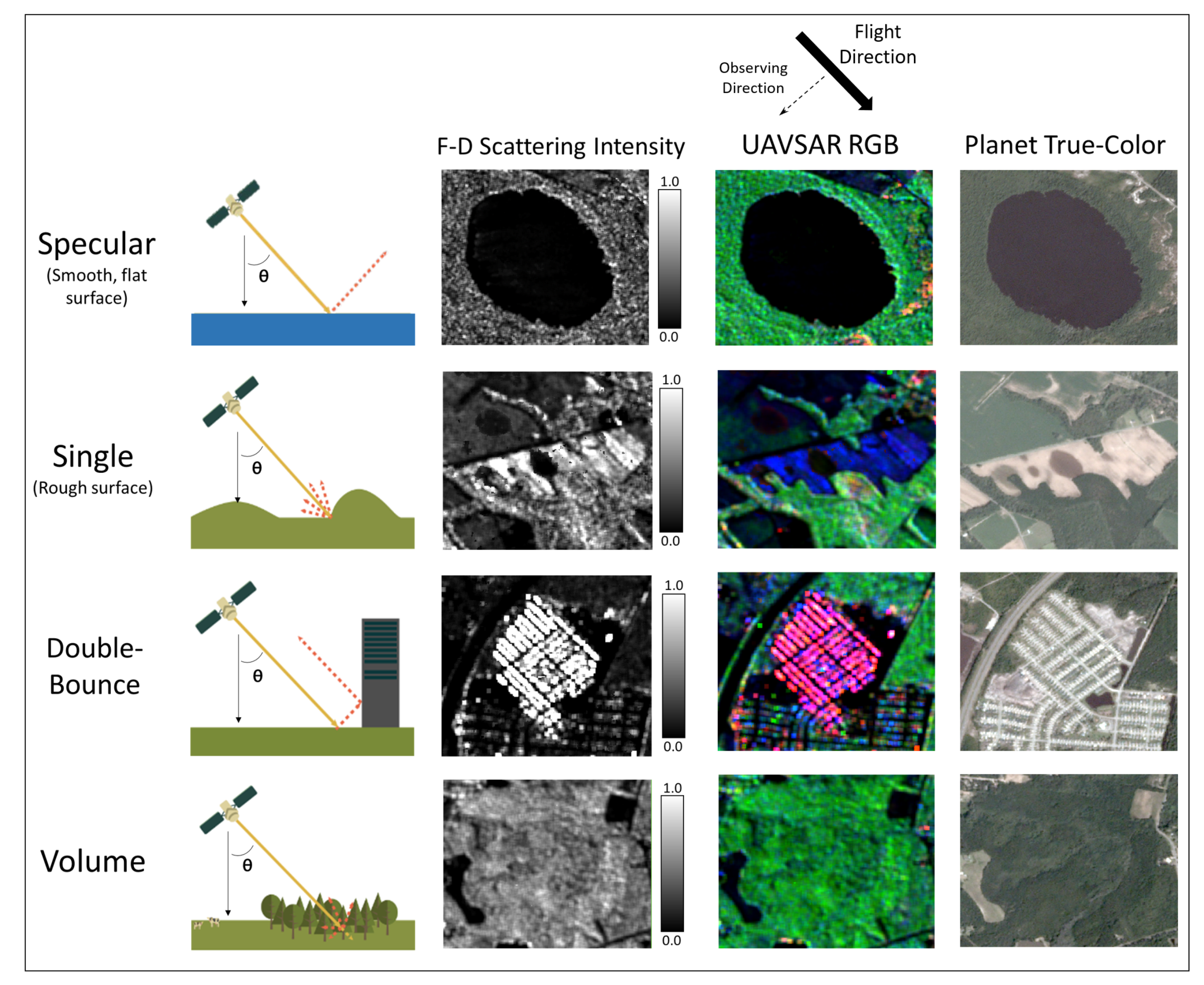

2.2.1. UAVSAR Data

2.2.2. UAVSAR Preprocessing

2.2.3. Visible Imagery

2.2.4. Ancillary Datasets

3. Methods

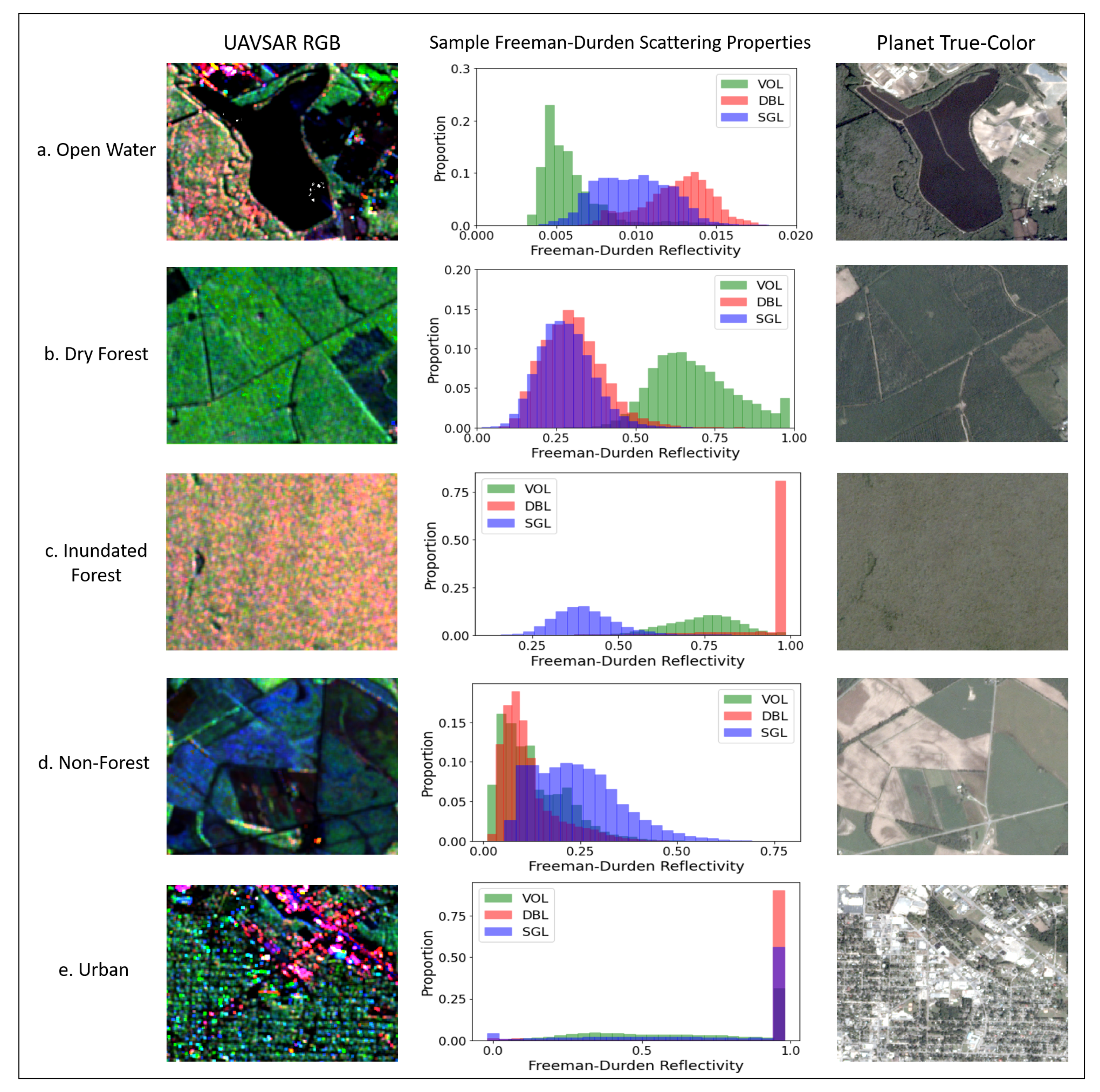

3.1. Random Forest Classification

3.1.1. Class Determination and Training Sample Gathering

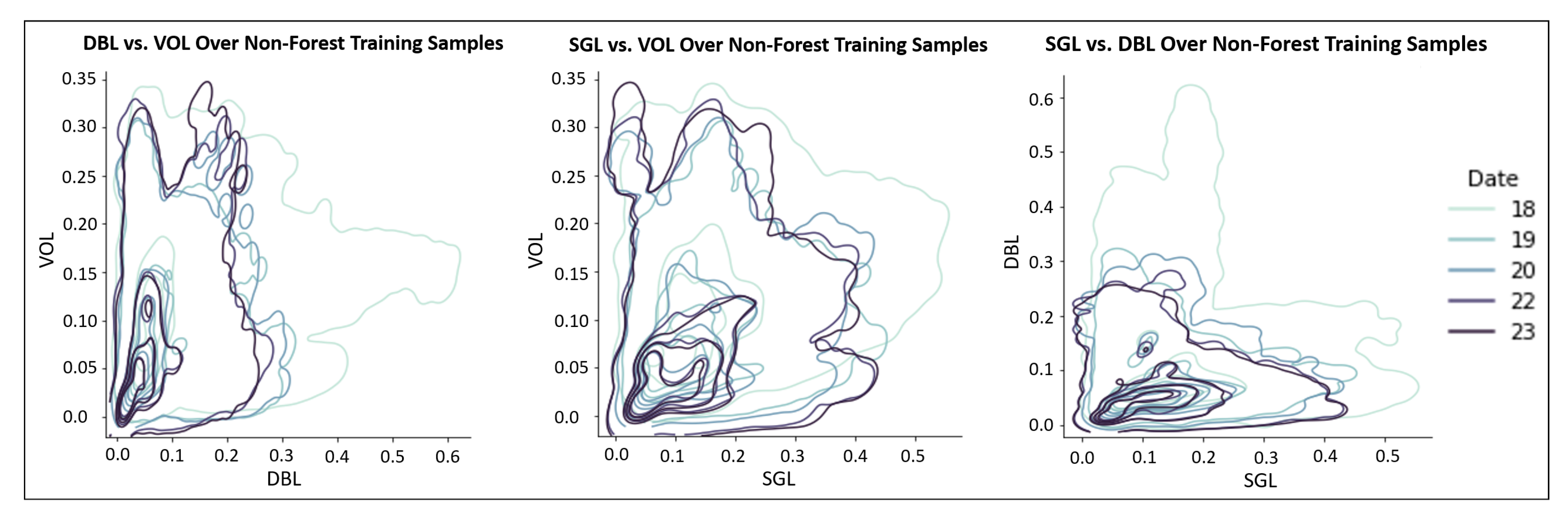

3.1.2. Classification and Accuracy Assessment

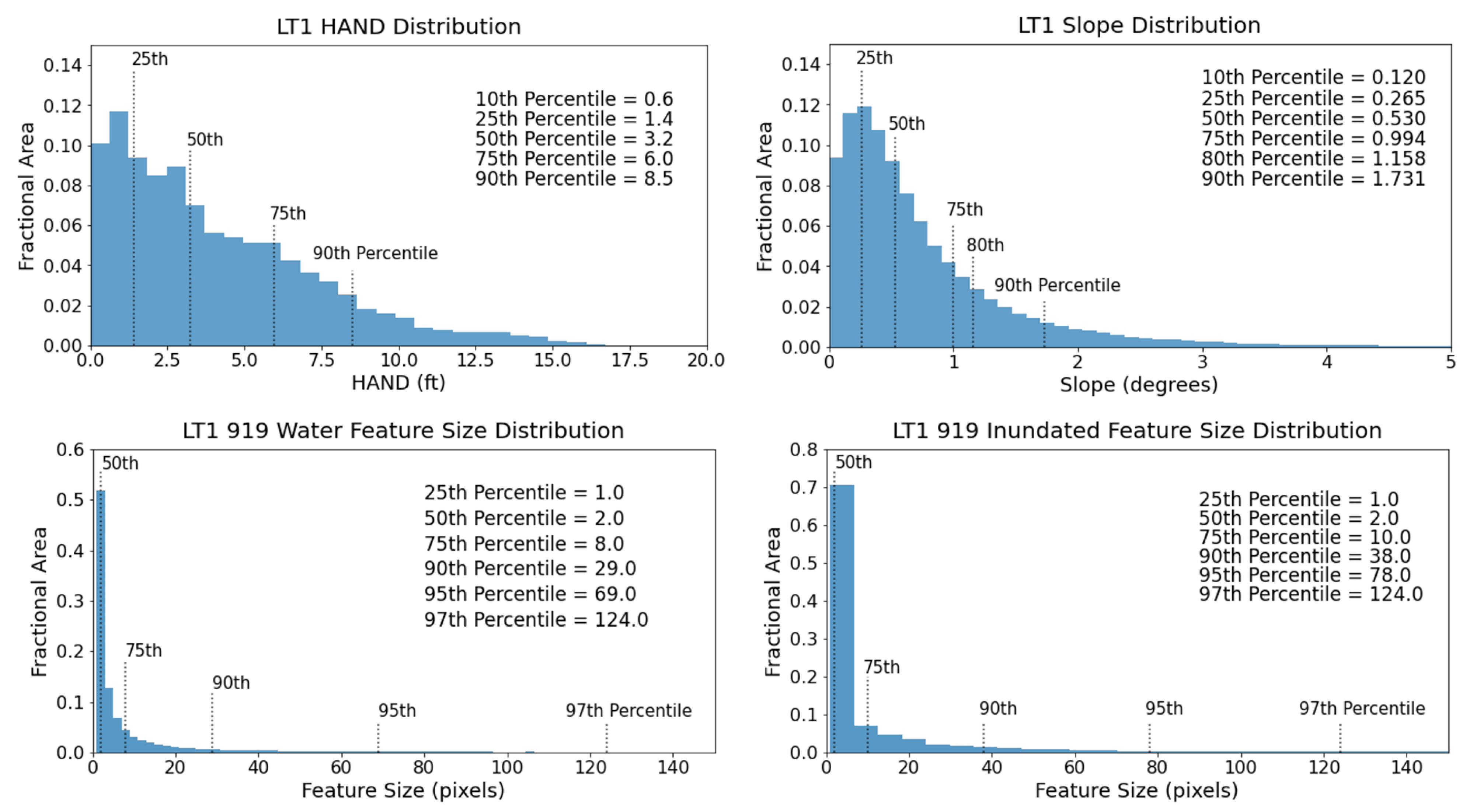

3.1.3. Post-Classification

4. Results

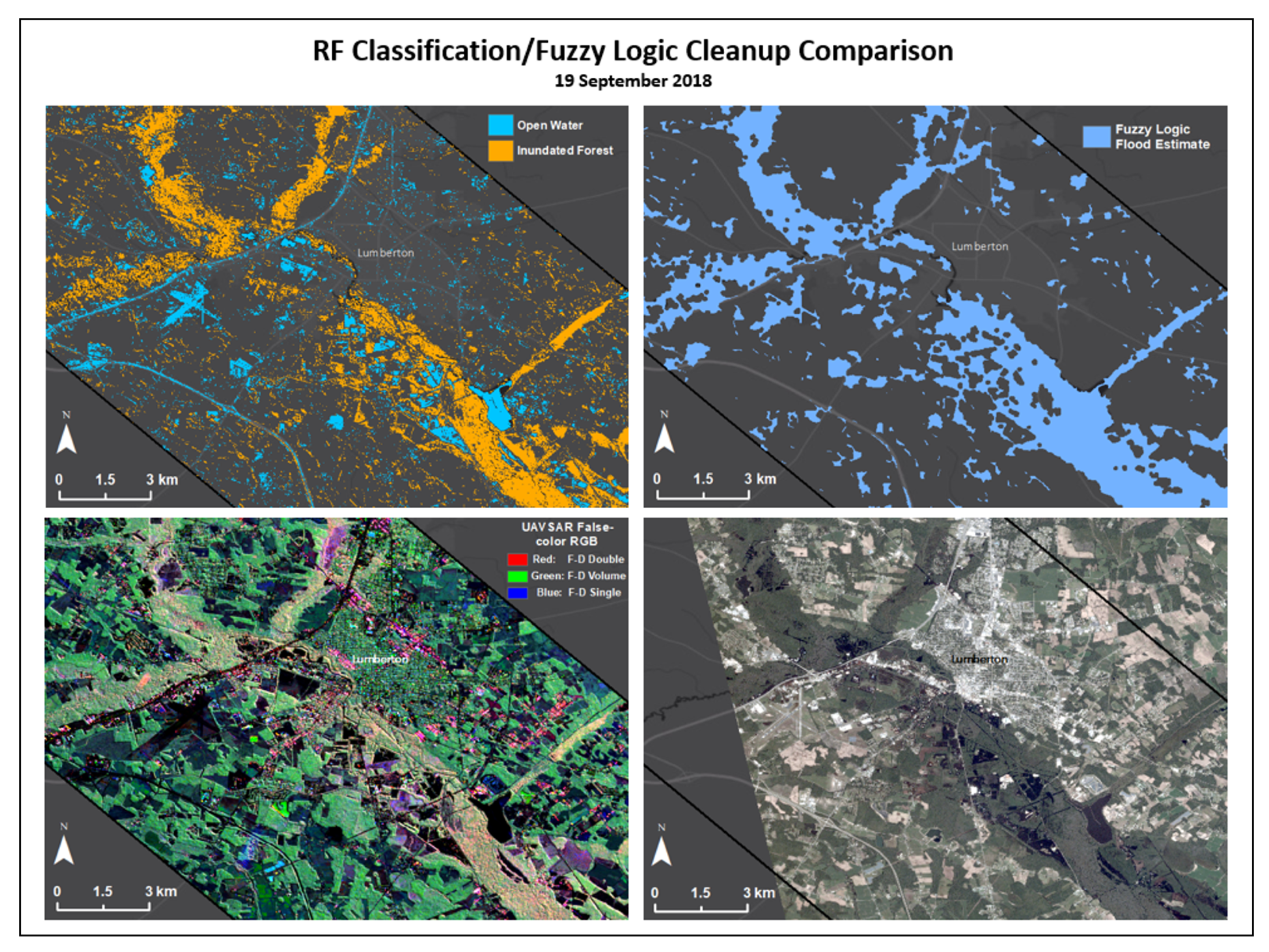

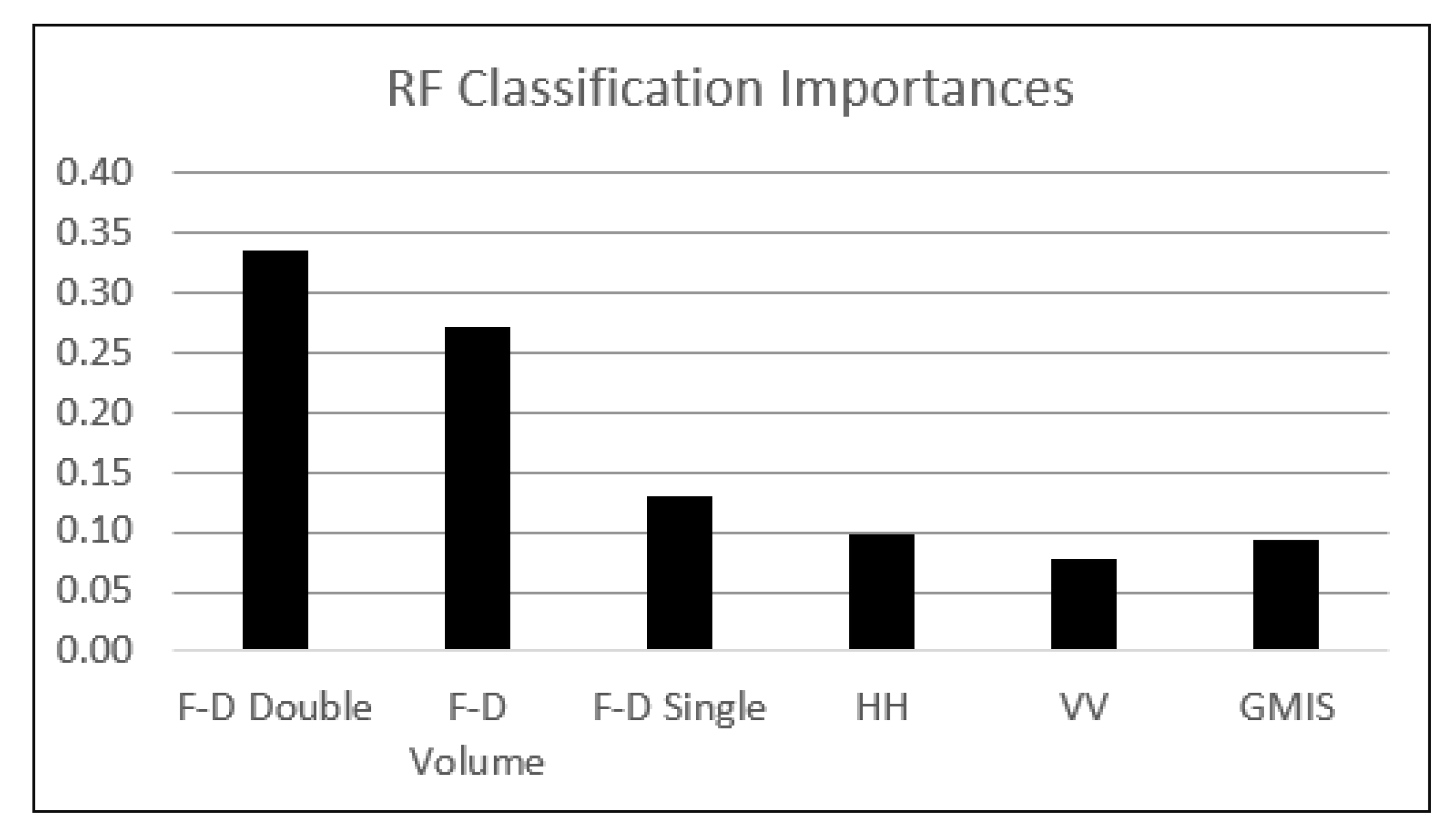

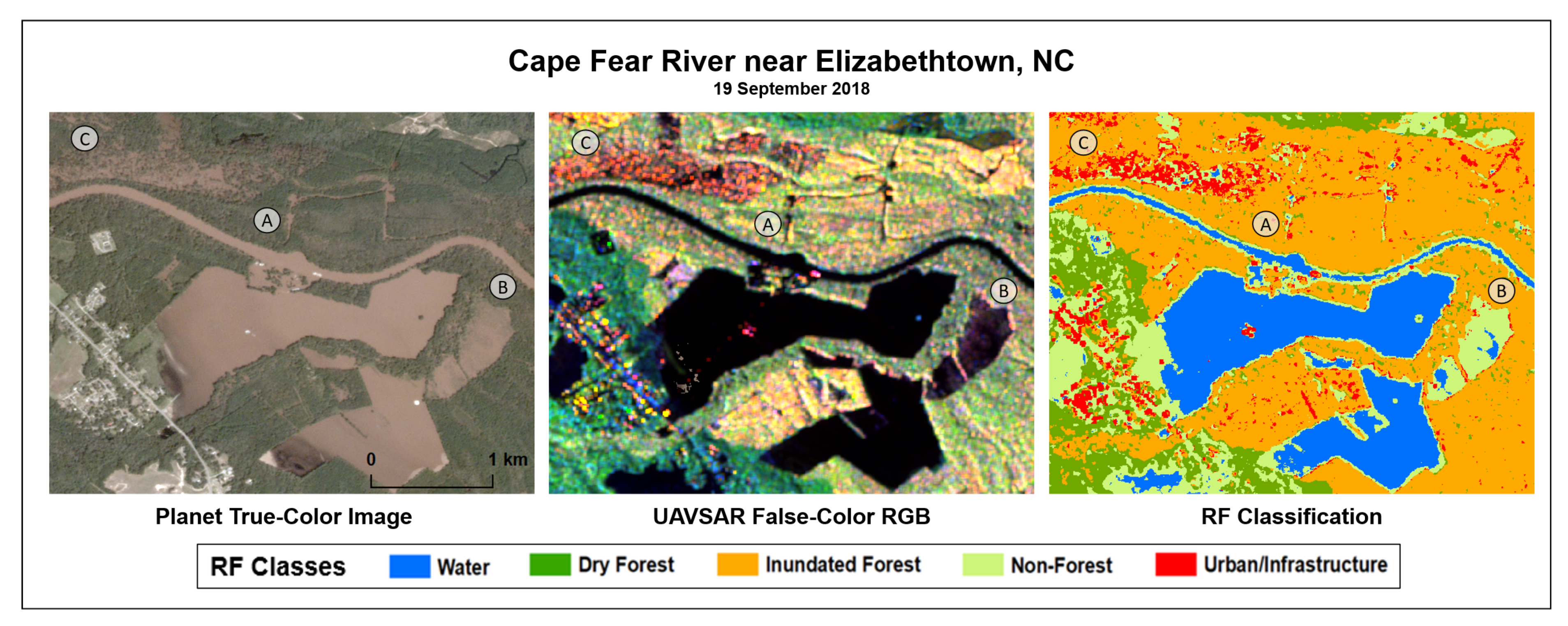

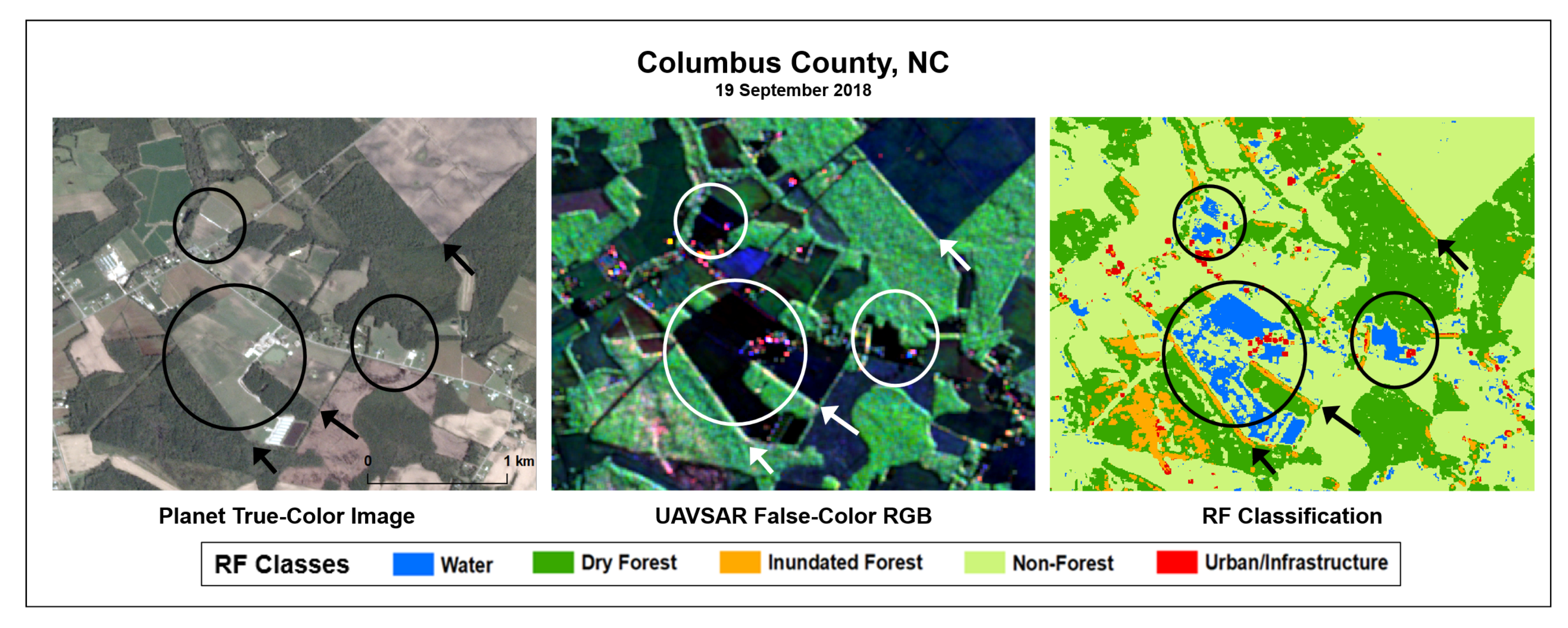

4.1. RF Classification

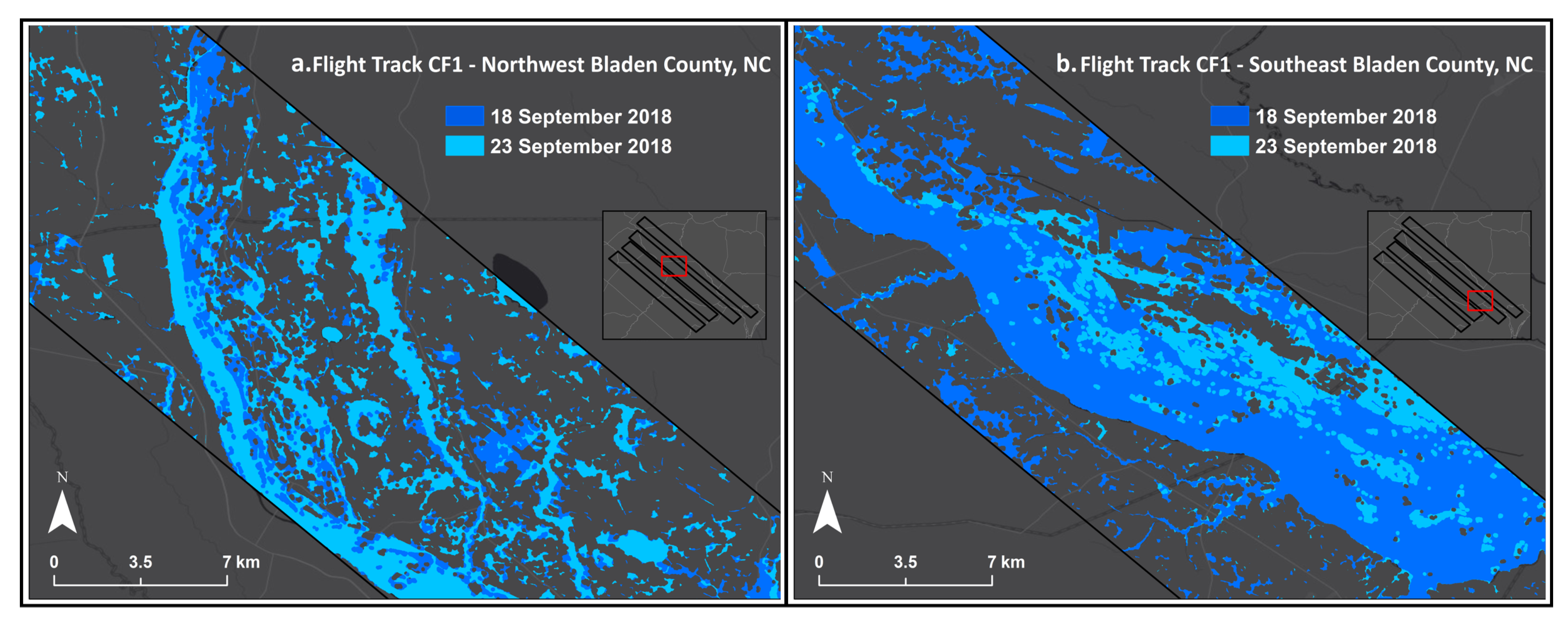

4.2. Post-Classification

4.3. Societal Impacts

5. Discussion

5.1. Classifier Performance

5.1.1. Areas of Underprediction

5.1.2. Areas of Overprediction

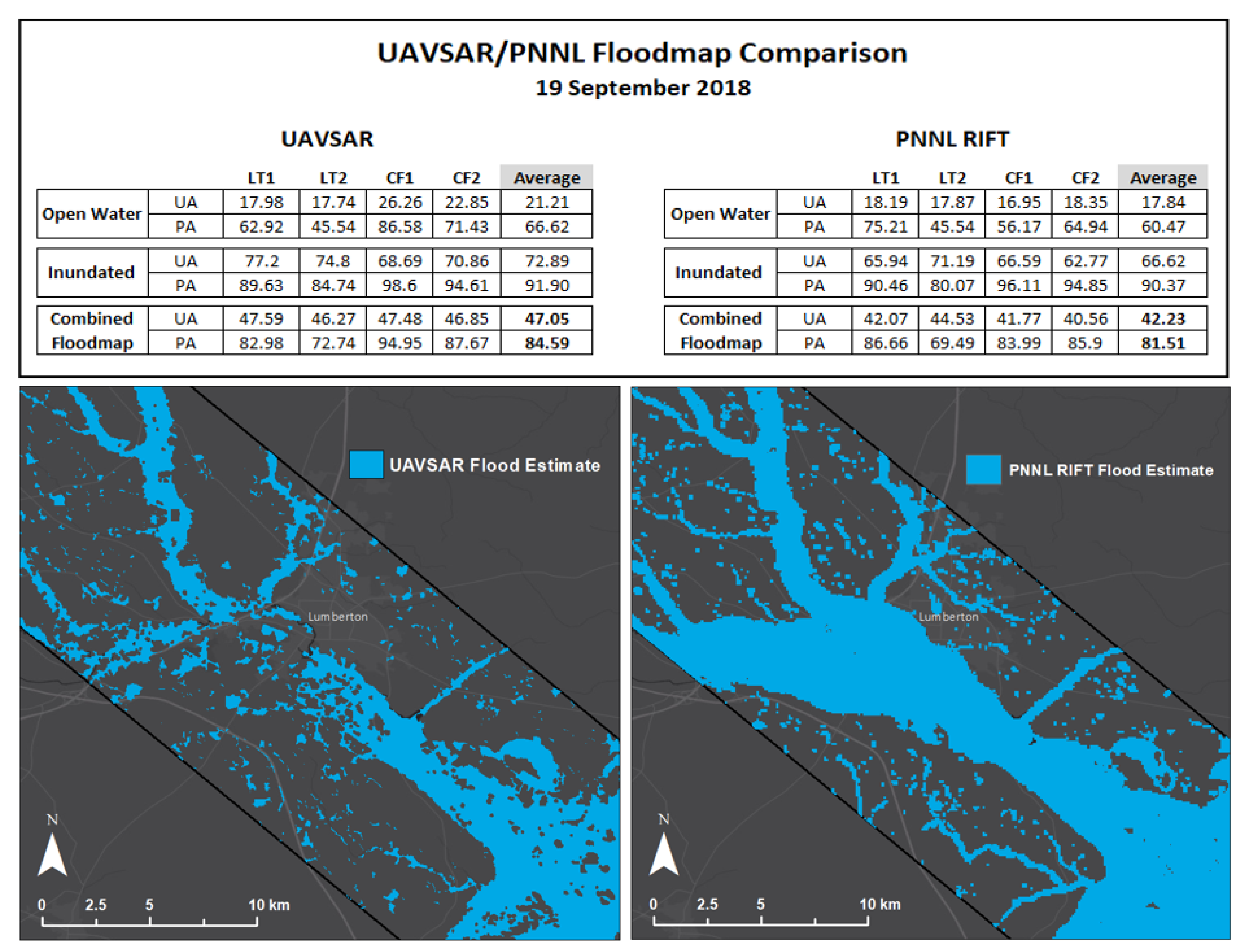

5.2. Comparison to Current Operational Product

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Class | Area Fraction | Number of Pixels | Target Training Pixels | Actual Training Pixels |
|---|---|---|---|---|
| Water | 0.015 | 2,095,824 | 6417 | 6421 |
| Dry Forest | 0.176 | 10,554,317 | 26,408 | 26,400 |
| Inun. Forest | 0.377 | 15,554,317 | 38,254 | 38,253 |
| Non-Forest | 0.348 | 13,879,889 | 34,676 | 34,710 |
| Urban | 0.083 | 7,506,615 | 17,646 | 17,720 |
| Total | 1.000 | 49,360,182 | 123,401 | 123,504 |

| Class | Water | Dry Forest | Inundated Forest | Non-Forest | Urban | Total |
|---|---|---|---|---|---|---|
| W (Pixels) | 761,957 | 8,704,984 | 18,636,794 | 17,186,411 | 4,088,181 | 49,378,327 |
| | 0.800 | 0.750 | 0.900 | 0.750 | 0.750 | - |
| S | 0.775 | 0.707 | 0.894 | 0.707 | 0.707 | - |
| Olofsson Method Truth Pixels | 2771 | 3466 | 7185 | 4948 | 2775 | 21,144 |
| UAVSAR Resolution Adjustment | 554 | 693 | 1437 | 990 | 555 | 4229 |
| Buffered Truth Points | 55 | 69 | 144 | 99 | 56 | 423 |
| Actual Truth Pixels | 623 | 688 | 1432 | 991 | 565 | 4299 |

| Metric (%) | 18 September | 19 September | 20 September | 22 September | 23 September | Event Average |
|---|---|---|---|---|---|---|
| Overall Accuracy (OA) | 86.36 | 87.57 | 89.37 | 86.71 | 88.90 | 87.67 |
| Open Water UA | 87.87 | 87.51 | 85.00 | 79.29 | 84.81 | 85.65 |
| Open Water PA | 68.77 | 80.23 | 90.20 | 91.34 | 91.40 | 82.14 |
| Dry Forest UA | 88.69 | 88.52 | 90.31 | 88.74 | 86.96 | 88.74 |
| Dry Forest PA | 93.20 | 92.77 | 93.59 | 93.45 | 93.26 | 93.21 |
| Inun. Forest UA | 93.90 | 94.82 | 95.14 | 96.06 | 95.60 | 94.91 |
| Inun. Forest PA | 90.63 | 87.87 | 91.79 | 88.76 | 89.67 | 89.78 |
| Non-Forest UA | 74.46 | 78.92 | 85.21 | 79.17 | 84.32 | 79.74 |
| Non-Forest PA | 90.11 | 91.82 | 87.96 | 82.29 | 89.45 | 89.01 |
| Urban UA | 86.51 | 86.13 | 85.60 | 84.60 | 89.04 | 86.31 |
| Urban PA | 77.66 | 78.76 | 79.04 | 73.95 | 77.42 | 77.70 |
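The table above reports overall accuracy (OA) together with user's accuracy (UA) and producer's accuracy (PA) for each class. As a reminder of the standard definitions, the following minimal sketch computes all three from a confusion matrix. The 3-class matrix is hypothetical and invented purely for illustration; it is not the study's data, and the function name `accuracy_metrics` and the row/column convention are assumptions of this example.

```python
def accuracy_metrics(cm):
    """Return (OA, UA list, PA list) in percent.

    ASSUMED convention: cm[i][j] is the number of samples mapped as class i
    whose reference (truth) class is j. Every class must have at least one
    mapped and one reference sample, otherwise a division by zero occurs.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))

    # Overall accuracy: share of all samples on the diagonal.
    oa = 100.0 * correct / total
    # User's accuracy: diagonal divided by the row (mapped-class) total.
    ua = [100.0 * cm[i][i] / sum(cm[i]) for i in range(n)]
    # Producer's accuracy: diagonal divided by the column (reference-class) total.
    pa = [100.0 * cm[i][i] / sum(cm[r][i] for r in range(n)) for i in range(n)]
    return oa, ua, pa


# HYPOTHETICAL confusion matrix for three unnamed classes.
cm = [
    [45, 5, 0],
    [5, 40, 5],
    [0, 15, 35],
]

oa, ua, pa = accuracy_metrics(cm)
print(f"OA = {oa:.2f}")
print("UA =", [f"{v:.2f}" for v in ua])
print("PA =", [f"{v:.2f}" for v in pa])
```

A low UA for a class indicates commission error (pixels mapped to that class that belong elsewhere), while a low PA indicates omission error (reference pixels of that class that were mapped as something else).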

| Metric (%) | 18 September | 19 September | 20 September | 22 September | 23 September | Event Average |
|---|---|---|---|---|---|---|
| Open Water UA | 21.24 | 21.21 | 23.53 | 23.17 | 24.81 | 22.42 |
| Open Water PA | 62.96 | 66.62 | 81.32 | 72.55 | 83.34 | 71.52 |
| Inun. Forest UA | 73.88 | 72.89 | 69.83 | 70.49 | 67.62 | 71.52 |
| Inun. Forest PA | 95.10 | 91.90 | 97.15 | 92.82 | 94.83 | 94.31 |
| Floodmap UA | 47.56 | 47.05 | 46.68 | 46.83 | 46.21 | 46.97 |
| Floodmap PA | 85.33 | 84.59 | 92.63 | 86.84 | 91.48 | 87.61 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Melancon, A.M.; Molthan, A.L.; Griffin, R.E.; Mecikalski, J.R.; Schultz, L.A.; Bell, J.R. Random Forest Classification of Inundation Following Hurricane Florence (2018) via L-Band Synthetic Aperture Radar and Ancillary Datasets. Remote Sens. 2021, 13, 5098. https://doi.org/10.3390/rs13245098
