Scale-Adaptive Adversarial Patch Attack for Remote Sensing Image Aircraft Detection

Abstract

1. Introduction

- We propose an adversarial attack method for aircraft detection in remote sensing images (RSIs). It hides the aircraft's decision features from the object detector and pushes the confidence of the corresponding bounding boxes below the detection threshold, so that the detector misses the aircraft.

- The adversarial patch size adapts to the scale of each aircraft, so the attack remains effective against object detectors despite the variation in aircraft scale across RSIs.

- The adversarial patches generated by our attack method transfer across different datasets and models.
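
The second contribution, a patch whose size follows the aircraft scale, can be illustrated with a minimal sketch. It is not the authors' implementation: the helper names (`patch_side`, `resize_nearest`, `apply_patch`, `surviving_detections`), the scale factor `alpha`, the square-root sizing rule, and the 0.5 threshold are all assumptions made for illustration, and images are plain nested lists so that the example has no dependencies.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale image as rows of pixel values


def patch_side(box_w: float, box_h: float, alpha: float = 0.3) -> int:
    """Side of the square patch, proportional to the bounding-box scale.

    alpha is a hypothetical scale factor, not a value taken from the paper.
    """
    return max(1, round(alpha * (box_w * box_h) ** 0.5))


def resize_nearest(patch: Image, side: int) -> Image:
    """Resize a square patch to side x side with nearest-neighbour sampling."""
    src = len(patch)
    return [[patch[r * src // side][c * src // side] for c in range(side)]
            for r in range(side)]


def apply_patch(image: Image, patch: Image, box: Tuple[int, int, int, int],
                alpha: float = 0.3) -> Image:
    """Paste a rescaled copy of `patch` at the centre of box = (x, y, w, h)."""
    x, y, w, h = box
    side = patch_side(w, h, alpha)
    scaled = resize_nearest(patch, side)
    top, left = y + (h - side) // 2, x + (w - side) // 2
    out = [row[:] for row in image]  # leave the input image untouched
    for r in range(side):
        for c in range(side):
            rr, cc = top + r, left + c
            if 0 <= rr < len(out) and 0 <= cc < len(out[0]):
                out[rr][cc] = scaled[r][c]
    return out


def surviving_detections(confidences: List[float],
                         threshold: float = 0.5) -> List[float]:
    """Boxes the detector still reports; the attack aims to empty this list."""
    return [c for c in confidences if c >= threshold]
```

With these assumptions, a large aircraft receives a proportionally larger patch than a small one, and an attack counts as successful for a given aircraft once its box confidence no longer appears in `surviving_detections`.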

2. Materials and Methods

2.1. Related Work

2.2. Method

2.2.1. Patch-Noobj Framework

2.2.2. Patch Applier

2.2.3. Detector

2.2.4. Attach Patch and Optimize Patch

3. Results

3.1. Databases and Evaluation Metrics

3.2. Experimental Details

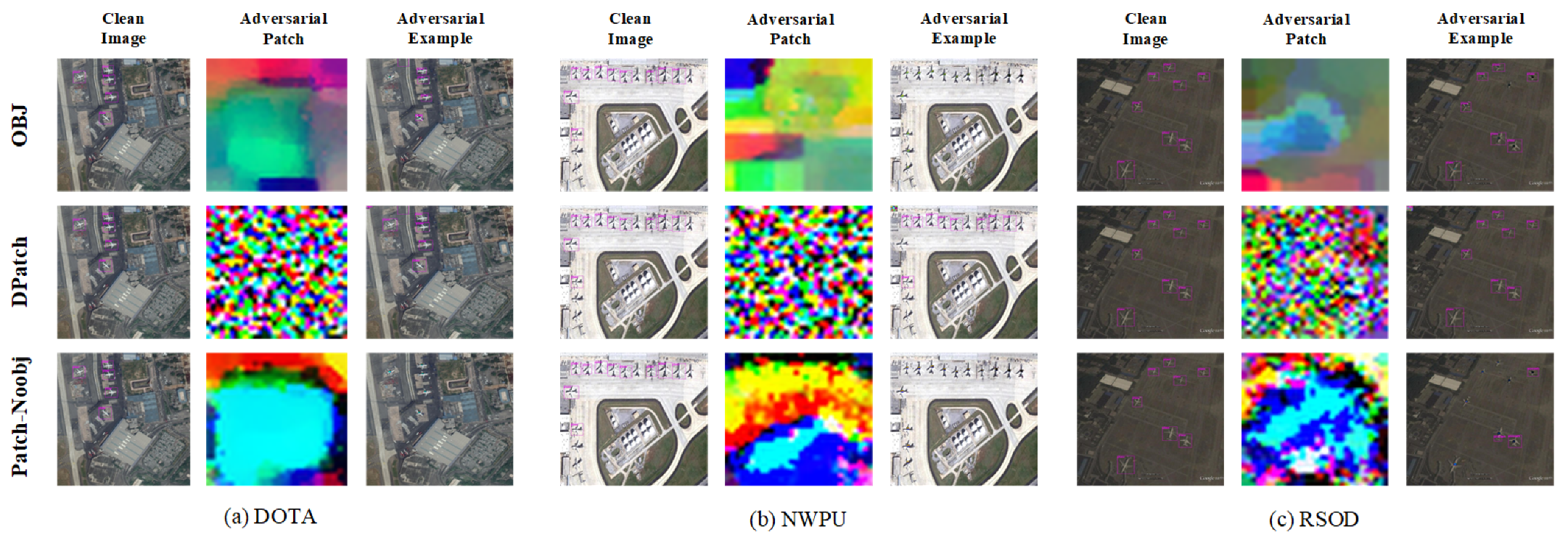

3.3. Patch-Noobj Attack

3.4. Attack Transferability

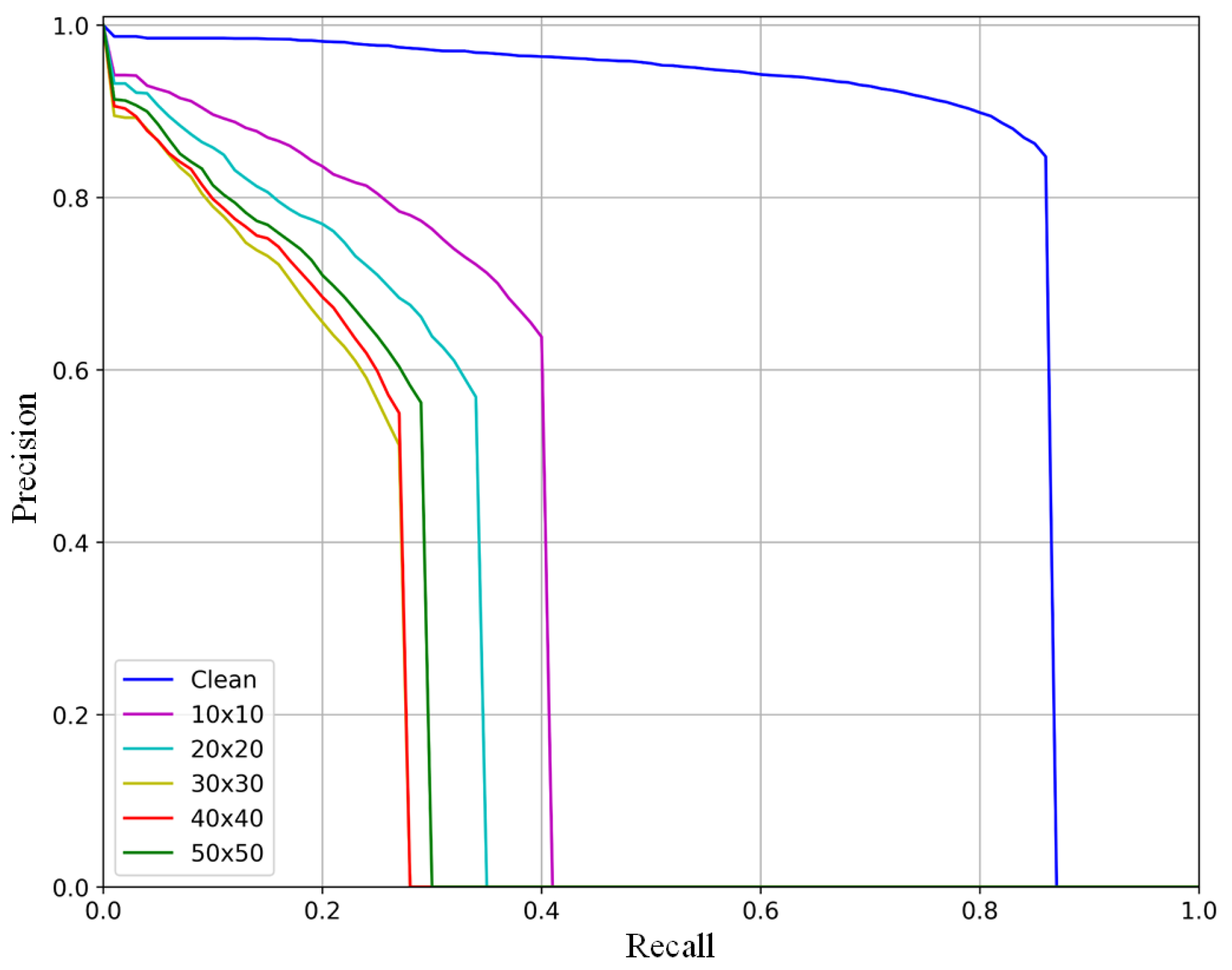

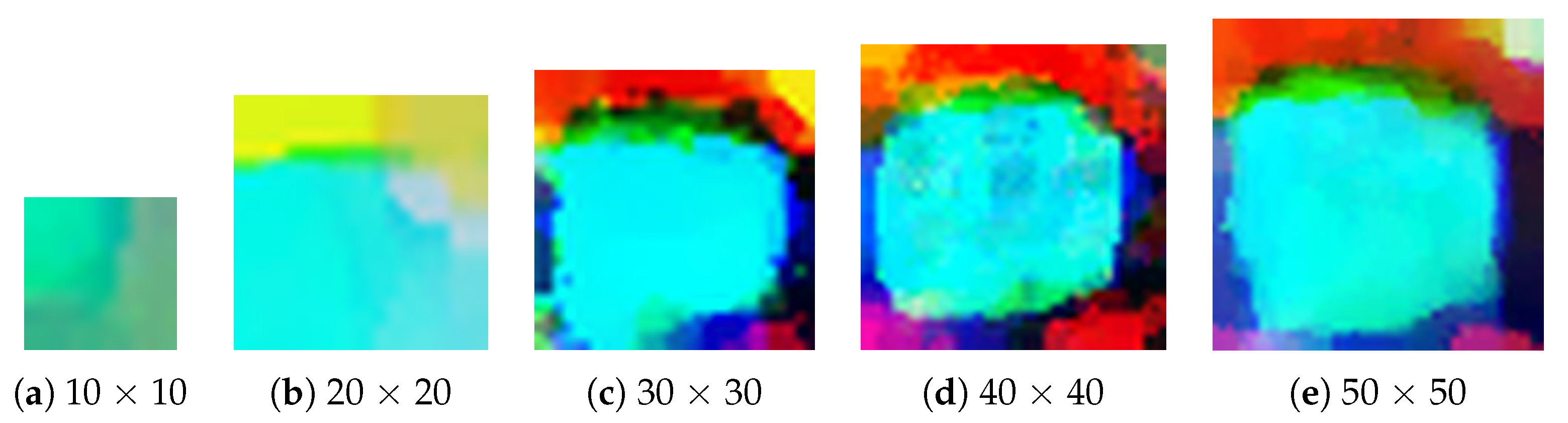

3.5. Attack Performance for Different Size Patches

3.6. Why Patch-Noobj Works

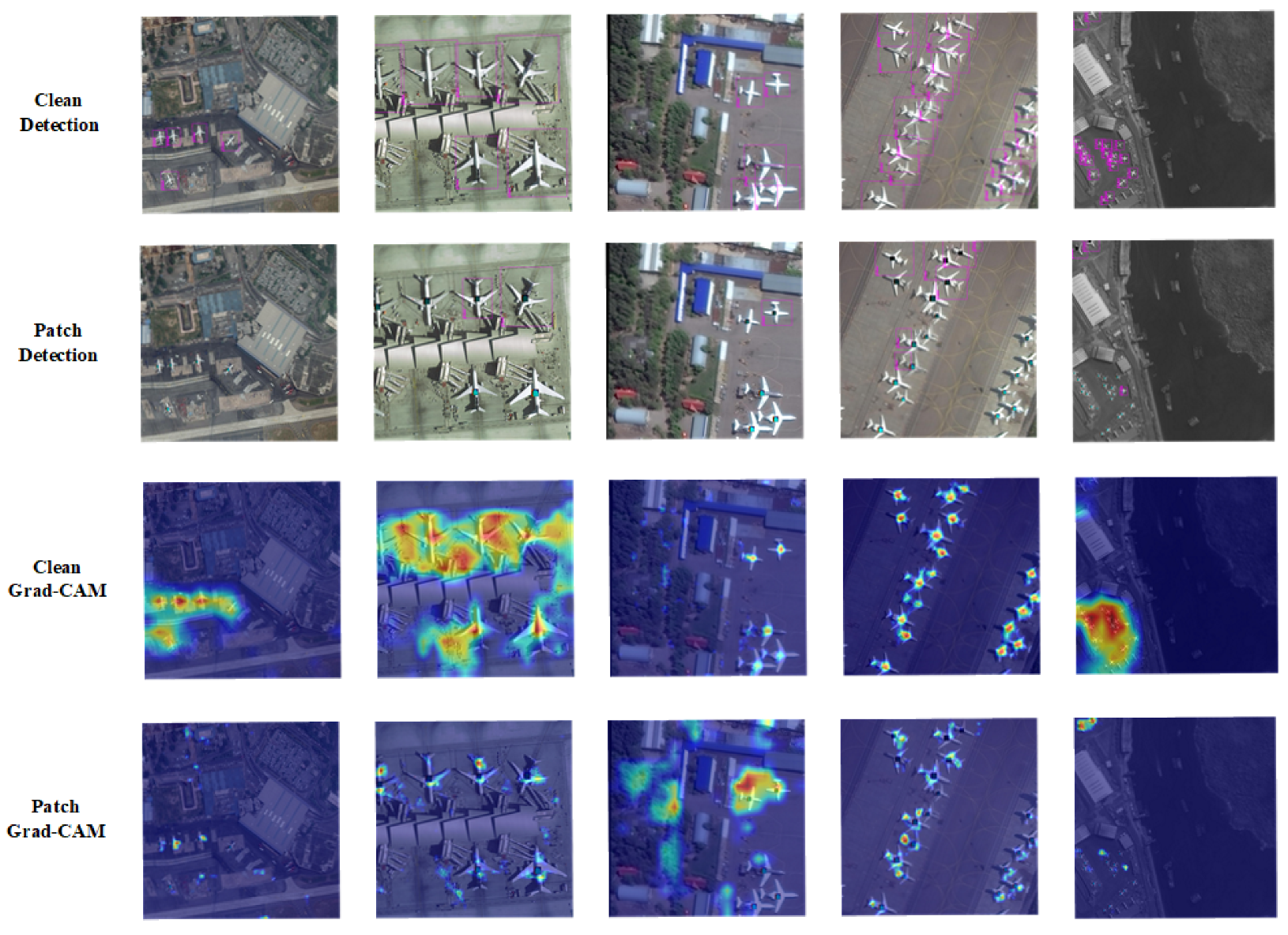

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | Method | AP (Clean) | AP (Patch) | Recall (Clean) | Recall (Patch) |
|---|---|---|---|---|---|
| DOTA | OBJ | 0.931 | 0.654 | 0.978 | 0.728 |
| DOTA | DPatch | 0.931 | 0.914 | 0.978 | 0.970 |
| DOTA | Ours | 0.931 | 0.449 | 0.978 | 0.516 |
| NWPU | OBJ | 0.881 | 0.727 | 0.882 | 0.745 |
| NWPU | DPatch | 0.881 | 0.851 | 0.882 | 0.860 |
| NWPU | Ours | 0.881 | 0.642 | 0.882 | 0.667 |
| RSOD | OBJ | 0.920 | 0.783 | 0.949 | 0.819 |
| RSOD | DPatch | 0.920 | 0.839 | 0.949 | 0.881 |
| RSOD | Ours | 0.920 | 0.718 | 0.949 | 0.745 |

| Dataset | Method | Plane AP (Clean) | Plane AP (Patch) | Other Classes AP (Clean) | Other Classes AP (Patch) |
|---|---|---|---|---|---|
| DOTA | OBJ | 0.931 | 0.654 | 0.570 | 0.562 |
| DOTA | DPatch | 0.931 | 0.914 | 0.570 | 0.565 |
| DOTA | Ours | 0.931 | 0.449 | 0.570 | 0.556 |
| NWPU | OBJ | 0.881 | 0.727 | 0.918 | 0.883 |
| NWPU | DPatch | 0.881 | 0.851 | 0.918 | 0.896 |
| NWPU | Ours | 0.881 | 0.642 | 0.918 | 0.885 |

| Source Dataset | Target Dataset | Clean | Adversarial Patch | Decrease (↓) |
|---|---|---|---|---|
| DOTA | DOTA | 0.931 | 0.449 | 0.482 ↓ |
| NWPU | DOTA | 0.931 | 0.631 | 0.300 ↓ |
| RSOD | DOTA | 0.931 | 0.591 | 0.340 ↓ |
| DOTA | NWPU | 0.881 | 0.736 | 0.145 ↓ |
| NWPU | NWPU | 0.881 | 0.642 | 0.239 ↓ |
| RSOD | NWPU | 0.881 | 0.762 | 0.119 ↓ |
| DOTA | RSOD | 0.920 | 0.784 | 0.136 ↓ |
| NWPU | RSOD | 0.920 | 0.765 | 0.155 ↓ |
| RSOD | RSOD | 0.920 | 0.718 | 0.202 ↓ |
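
The Decrease column in the cross-dataset table above is the clean AP on the target dataset minus the AP after the patch is applied. The short sketch below recomputes it from the tabulated values and, for each target dataset, reports which source dataset yields the largest drop. The names `CLEAN_AP`, `PATCHED_AP`, `ap_decrease`, and `best_source` are illustrative and do not come from the paper.

```python
# Clean AP per target dataset and patched AP per (source, target) pair,
# copied from the cross-dataset transfer table.
CLEAN_AP = {"DOTA": 0.931, "NWPU": 0.881, "RSOD": 0.920}
PATCHED_AP = {
    ("DOTA", "DOTA"): 0.449, ("NWPU", "DOTA"): 0.631, ("RSOD", "DOTA"): 0.591,
    ("DOTA", "NWPU"): 0.736, ("NWPU", "NWPU"): 0.642, ("RSOD", "NWPU"): 0.762,
    ("DOTA", "RSOD"): 0.784, ("NWPU", "RSOD"): 0.765, ("RSOD", "RSOD"): 0.718,
}


def ap_decrease(source: str, target: str) -> float:
    """AP drop on `target` caused by a patch trained on `source`."""
    return round(CLEAN_AP[target] - PATCHED_AP[(source, target)], 3)


def best_source(target: str) -> str:
    """Source dataset whose patch lowers AP on `target` the most."""
    return max(CLEAN_AP, key=lambda source: ap_decrease(source, target))


for target in CLEAN_AP:
    source = best_source(target)
    print(f"{target}: strongest patch from {source}, "
          f"decrease {ap_decrease(source, target):.3f}")
```

On these numbers the patch trained on the target dataset itself gives the largest decrease in every case, while patches from the other two datasets still lower the AP by at least 0.119.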

| Dataset | Model | Clean | Adversarial Patch | Decrease (↓) |
|---|---|---|---|---|
| DOTA | YOLOv5 | 0.972 | 0.737 | 0.235 ↓ |
| DOTA | Faster R-CNN | 0.815 | 0.610 | 0.205 ↓ |
| NWPU | YOLOv5 | 0.906 | 0.632 | 0.274 ↓ |
| NWPU | Faster R-CNN | 0.709 | 0.557 | 0.152 ↓ |
| RSOD | YOLOv5 | 0.928 | 0.724 | 0.204 ↓ |
| RSOD | Faster R-CNN | 0.720 | 0.506 | 0.214 ↓ |

| Source Dataset | Target Dataset | Model | Clean | Adversarial Patch | Decrease (↓) |
|---|---|---|---|---|---|
| DOTA | NWPU | YOLOv5 | 0.906 | 0.679 | 0.227 ↓ |
| DOTA | NWPU | Faster R-CNN | 0.709 | 0.566 | 0.143 ↓ |
| DOTA | RSOD | YOLOv5 | 0.920 | 0.765 | 0.155 ↓ |
| DOTA | RSOD | Faster R-CNN | 0.720 | 0.508 | 0.212 ↓ |
| NWPU | DOTA | YOLOv5 | 0.972 | 0.845 | 0.127 ↓ |
| NWPU | DOTA | Faster R-CNN | 0.815 | 0.676 | 0.139 ↓ |
| NWPU | RSOD | YOLOv5 | 0.920 | 0.703 | 0.217 ↓ |
| NWPU | RSOD | Faster R-CNN | 0.720 | 0.521 | 0.199 ↓ |
| RSOD | DOTA | YOLOv5 | 0.972 | 0.812 | 0.160 ↓ |
| RSOD | DOTA | Faster R-CNN | 0.815 | 0.609 | 0.206 ↓ |
| RSOD | NWPU | YOLOv5 | 0.906 | 0.663 | 0.243 ↓ |
| RSOD | NWPU | Faster R-CNN | 0.709 | 0.555 | 0.154 ↓ |

| Patch Size (pixels) | AP | Recall |
|---|---|---|
| 10 × 10 | 0.623 | 0.690 |
| 20 × 20 | 0.548 | 0.609 |
| 30 × 30 | 0.449 | 0.516 |
| 40 × 40 | 0.477 | 0.542 |
| 50 × 50 | 0.490 | 0.546 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lu, M.; Li, Q.; Chen, L.; Li, H. Scale-Adaptive Adversarial Patch Attack for Remote Sensing Image Aircraft Detection. Remote Sens. 2021, 13, 4078. https://doi.org/10.3390/rs13204078
