Abstract

Unmanned aerial vehicle (UAV) hyperspectral remote sensing technologies have unique advantages in the non-contact, high-precision quantitative analysis of water surface non-point source concentrations. Improving the accuracy of non-point source detection remains a difficult engineering problem. To facilitate water surface remote sensing, imaging, and spectral analysis, a UAV-based hyperspectral imaging remote sensing system was designed. A prototype was built, and laboratory calibration and a joint air–ground water quality monitoring campaign were performed. The UAV hyperspectral imaging remote sensing system comprised a light, small UAV platform, a spectral scanning hyperspectral imager, and a data acquisition and control unit. The spectral principle of the hyperspectral imager is based on new high-performance acousto-optic tunable filter (AOTF) technology. During laboratory calibration, the spectral calibration of the imaging spectrometer and the image preprocessing used in data acquisition were completed. In the UAV air–ground joint experiment, hyperspectral data cubes were obtained for typical water bodies of the Yangtze River mainstream, the Three Gorges demonstration area, and the Poyang Lake demonstration area, and geometric registration was completed. Thus, a large field-of-view mosaic and water radiation calibration were realized. A chlorophyll-a (Chl-a) sensor was used to measure the actual water control points, and 11 traditional Chl-a sensitive spectrum selection algorithms were analyzed and compared. A random forest (RF) algorithm was used to establish a prediction model relating water surface spectral reflectance to water quality parameter concentration. Compared with the back propagation neural network, partial least squares, and PSO-LSSVM algorithms, the RF algorithm predicted Chl-a with significantly improved accuracy. The determination coefficient of the training samples was 0.84, the root mean square error was 3.19 μg/L, and the mean absolute percentage error was 5.46%. The established Chl-a inversion model was applied to UAV hyperspectral remote sensing images. The predicted Chl-a distribution agreed with the field observation results, indicating that the UAV-borne hyperspectral remote sensing water quality monitoring system based on AOTF is a promising remote sensing imaging spectral analysis tool for water.

1. Introduction

Rapid developments of light unmanned aerial vehicles (UAVs), small hyperspectral imagers, and related instruments have facilitated the translation of UAV hyperspectral imaging system concepts into reality [1]. Hyperspectral imaging remote sensing technologies based on light UAVs are a combination of UAV, imaging spectrum, and remote sensing technologies, which possess unique advantages with regard to temporal, spatial, and spectral resolution [2]. Thus, they have gradually become research hotspots, in addition to having numerous potential applications.

The spectrum generation mode of a spectrometer in the imaging spectrometer system directly affects the performance, structural complexity, mass, and volume of the imaging spectrometer [3]. The imaging modes of UAV spectrometers mainly include pushbroom, snapshot, spatial scanning, and spectral scanning [4].

In particular, pushbroom spectral imaging technologies are most commonly applied to airborne hyperspectral imaging systems [5]. Pushbroom spectrometers are line-scanning imaging instruments: one axis of the two-dimensional (2D) sensor records the spatial information, whereas the other axis records the spectral information [6]. Typical UAV pushbroom imaging spectrometers include the Headwall Nano Hyperspec [7,8], ITRES hyperspectral sensor systems [9], and HySpex hyperspectral cameras [10]. In UAV airborne hyperspectral missions, the accuracy of a pushbroom hyperspectral cube is a function of sensor frame rate, relative flight speed, sensor altitude, and position information [2]. Consequently, to obtain an accurate data cube, pushbroom sensors usually require a stable UAV platform to ensure uniform movement in a straight line, and a global navigation satellite system/inertial measurement unit (IMU) module to record accurate altitude and position information.

The working principle of the snapshot-type spectral imager is to capture a spectral data cube in one snapshot and use a large-area detector array to record millions of pixels at the same time, without any spatial scanning or moving parts [11]. The common airborne imagers include BaySpec OCI-D2000 [12] and Cubert UHD185 [13]. The core advantages of snapshot imaging are that the data acquisition time is short, and the spectral data cube is captured continuously at a video frame rate. Because it is non-scanning imaging, snapshot imaging has a relatively strict image geometry structure and can avoid artifacts introduced by motion.

In 2014, Sascha Grusche introduced a spatial spectral scanning technology [2,14], in which each frame in the scanning process can be regarded as a diagonal slice of the entire hyperspectral cube. Another spatial spectral scanning spectrometer is an imaging spectrometer based on linear variable filter technology [15]. In addition, there are spatial spectral scanning spectrometers with a built-in pushbroom scanning platform, such as the IMEC SNAPSCAN series imagers [16], which obtain spectral information of specific spectral segments through linear scanning. Generally, spatial spectral scanning technologies combine the high spatial resolution of pushbroom spectral imaging with the rapid data acquisition of snapshot imaging.

The spectral scanning spectral imager is a type of frame-based imager. Its spectral channel and the number of spatial pixels are adjustable. The whole data cube can be captured by exposing each band in turn. Spectral scanning spectral imaging is either realized by a filter wheel [17], acousto-optic tunable filter (AOTF), liquid crystal tunable filter (LCTF) [18], or Fabry–Perot interferometer (FPI) [19]. The response/switching times of the various approaches range from 1 s for the filter wheel, to 50 to 500 ms for the LCTF and mechanically tuned Fabry–Perot, and to 10 to 50 μs for the AOTF [20]. Typical products include SENOP Rikola [21], which is a typical example of a spectral scanning instrument based on tunable FPI and is widely used in hyperspectral remote sensing performed by UAVs.

Image distortion and motion artifacts, which considerably influence the accuracy of target recognition, are the main challenges in applying spectral scanning imaging spectrometers to remote sensing [22]. In AOTF-based UAV hyperspectral cameras, the distortion and motion artifacts observed in the imaging process have three major causes. (1) There are optical system design errors and assembly errors of the spectral imager [23], as is the case in ordinary cameras. (2) In remote sensing flight tests, the positions and altitudes of sensors change due to airflow disturbance and UAV vibration, resulting in spatial position deviation between spectral segments, so that different spectral bands cannot be completely matched in the original data cube; therefore, registration correction should be considered during post-processing. (3) The diffraction of beams in AOTF crystals caused by ultrasonic waves varies with wavelength [24]. This diffraction problem is specific to the AOTF spectral camera, and there is no dedicated mathematical model to resolve it; however, AOTF crystals are calibrated before they leave the factory, so this issue is outside the scope of this paper.

Due to their adaptability, UAV spectral remote sensing technologies can facilitate water quality monitoring based on a wide range of parameters. The parameters and targets commonly monitored by UAV-borne spectral remote sensing of water bodies include Chl-a, suspended matter concentration, turbidity, transparency, total nitrogen, total phosphorus, algae, aquatic vegetation, reservoir and river siltation, urban black and smelly water bodies, and microbes in water. For example, based on the Nemerow index and gradient enhanced decision tree regression, the spatial distribution map of the Nemerow comprehensive pollution index and its technical feasibility in pollution source monitoring were introduced in [25]. In [26], which addressed high spatial resolution monitoring of phycocyanin and Chl-a from aerial hyperspectral images, four ground and air monitoring activities were performed to measure the water surface reflectance, and the effects of different optical methods on the spatial distribution and concentration of phycocyanin (PC), chlorophyll-a (Chl-a), and total suspended solids (TSS) were studied. In [27], a UAV was used to monitor submerged aquatic vegetation in rivers. The results show that UAV optical remote sensing technology can effectively monitor algae and submerged aquatic vegetation in shallow rivers with low turbidity and good optical transmission. In [28], airborne hyperspectral data were used to assess suspended particulate matter and aquatic vegetation in shallow and turbid lakes. Another study [29] examined airborne hyperspectral imaging with the AisaFENIX sensor under changing cloud cover over a small inland water body. A popular semi-analytical band ratio algorithm was used to retrieve the Chl-a concentration in turbid inland water, and its accuracy was close to that obtained with the measured reflectance. In [30], a supervised machine learning (ML) algorithm was trained to predict the concentrations of TSS and Chl-a in two water bodies using Sentinel 2 spectral images, UAV data at different spatial resolutions, and laboratory analysis data. In [31], a low-cost unmanned airborne spectral camera was used to monitor reservoir sedimentation. In [32], a preliminary study was conducted on the evaluation of microbial water quality in irrigation ponds based on UAV imaging.

Methods commonly used to establish water quality parameter prediction models include regression algorithms, such as partial least squares (PLS) and extreme gradient boosting (XGBoost) regression; artificial neural network methods, such as the back propagation (BP) neural network and the convolutional neural network (CNN); and machine learning methods, such as support vector regression (SVR), the least squares support vector machine (LSSVM), and the random forest (RF) algorithm. For example, in [33], partial least squares regression and remote sensing inversion models were applied to laboratory chemical oxygen demand (COD) standard solutions and to actual field water bodies, respectively, using both the COD-sensitive spectral segments and the full spectrum, to analyze the COD spectral characteristics of a water body. Moreover, using near infrared (NIR) data collected by UAV, a fuzzy regression model was established to analyze the water quality of Sanchun dam reservoir in Japan [34]. In [35], chlorophyll-a and total suspended solids were retrieved using iterative stepwise elimination partial least squares (ISE-PLS) regression based on field hyperspectral measurements in irrigation ponds in Higashihiroshima, Japan. In [36], UAV remote sensing with the XGBoost regression algorithm was proposed for quantitative inversion of urban river transparency. In [37], the concentration of suspended solids in reservoirs and rivers was detected using an unmanned airborne spectrometer, and the inversion model of suspended solids concentration was established using a particle swarm optimization algorithm. In [38], the classical machine learning method support vector regression (SVR) was used to estimate the global chlorophyll-a concentration from medium resolution imaging spectrometer data, in comparison with the proposed CNN method. In [39], a method was proposed to determine the correlation between total suspended solids and dissolved organic matter in water using spectral imaging and an artificial neural network. In [40], prediction models of total nitrogen concentration were established from UAV hyperspectral remote sensing and ground monitoring data using twelve machine learning algorithms, and the spatial heterogeneity of total nitrogen concentration in four sensitive areas of the Miyun reservoir was analyzed. In [41], machine learning algorithms for retrieving water quality indicators in case-II waters were compared in a case study of Hong Kong; models based on artificial neural network (ANN), random forest (RF), cubist regression (CB), and support vector regression (SVR) were established to predict the concentrations of suspended solids (SS), chlorophyll-a (Chl-a), and turbidity in the coastal waters of Hong Kong. In [42,43,44], a pixel-by-pixel matching algorithm based on UAV images was proposed to study the empirical model of water quality monitoring.

To meet the needs of spectral imaging analysis of water quality parameters in water remote sensing, this paper introduces a UAV hyperspectral remote sensing system based on AOTF and its application in water quality monitoring. First, the construction and calibration of the hyperspectral imager system for the UAV are introduced. Second, the remote sensing data acquisition control and data processing flow of the system are described, and the geometric registration, radiation calibration, field-of-view splicing, and concentration inversion of the collected data are introduced in detail. Then, air–ground joint water quality monitoring experiments in the Three Gorges demonstration area and Poyang Lake demonstration area are introduced, including remote sensing imaging of the water bodies; in situ sampling tests of water quality parameters; measurement of water-leaving reflectance; and image registration, mosaicking, and radiation calibration of the remote sensing data. Taking the water quality parameter Chl-a as an example, this study analyzes and compares 11 traditional Chl-a sensitive spectrum selection algorithms, and then compares the water quality parameter concentration prediction models established by a conventional regression algorithm, a neural network algorithm, and a machine learning algorithm. Finally, the application of the system is analyzed, and the existing problems and application potential of the AOTF-based UAV hyperspectral remote sensing system in water remote sensing imaging spectral analysis are briefly discussed.

2. Materials and Methods

2.1. UAV Hyperspectral Imaging System

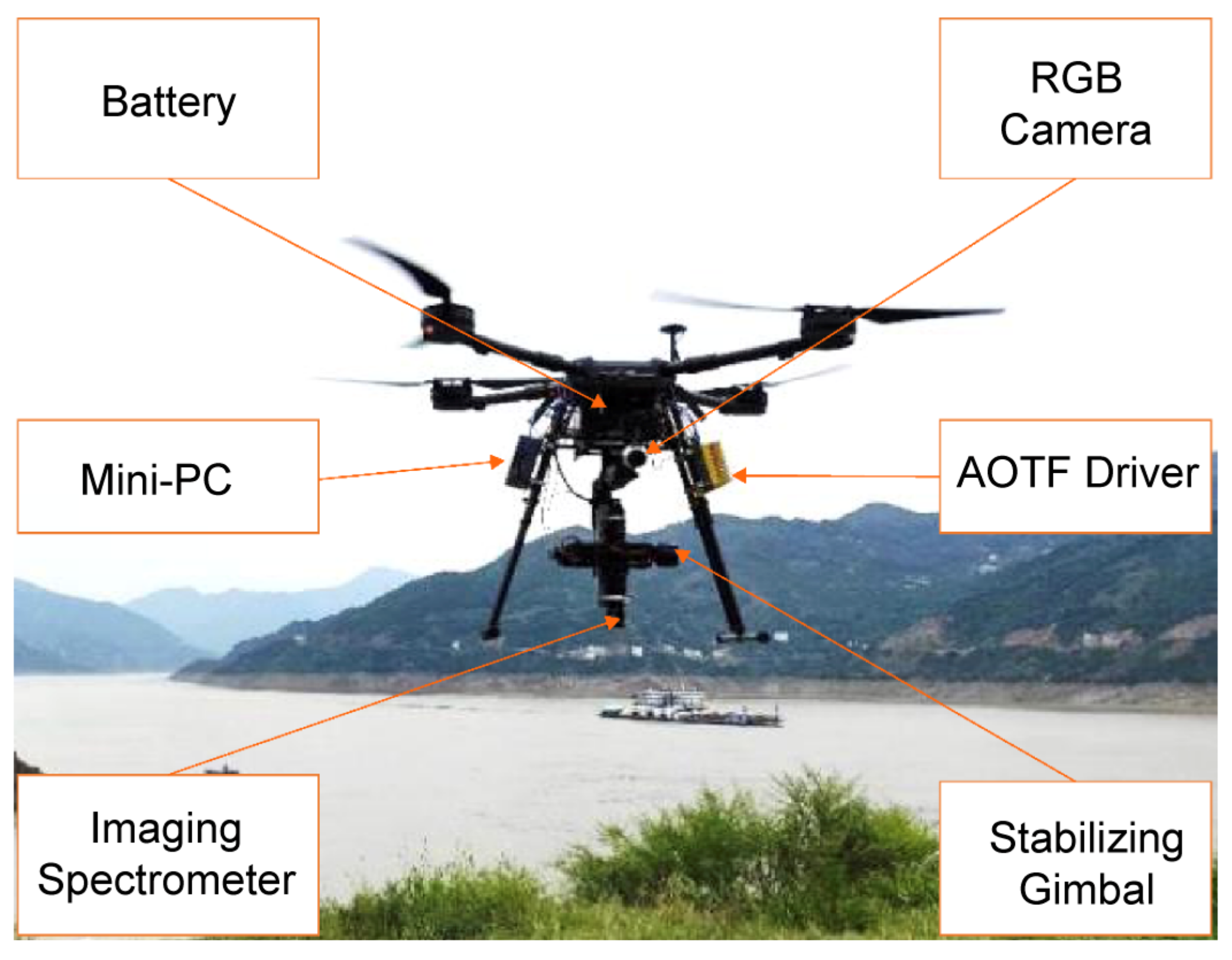

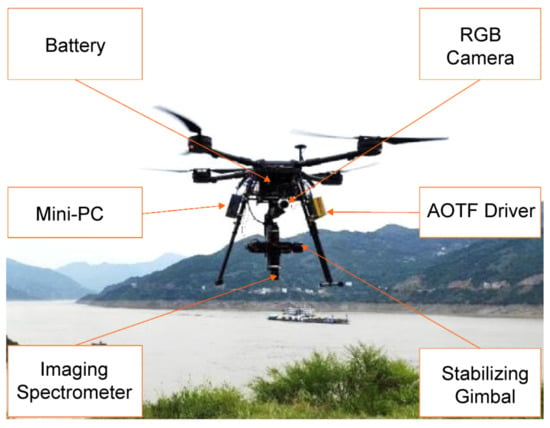

The UAV hyperspectral imaging remote sensing system based on AOTF consists of a light UAV, a spectral scanning imaging spectrometer based on AOTF (detailed in Section 2.1.1), a pan–tilt system for the spectrometer, a high-definition RGB camera, a mini-PC for data recording and control, batteries, cables, and other components. Figure 1 shows the system used, and Table A1 in Appendix A lists the main technical specifications of selected components of the UAV hyperspectral system.

Figure 1. Photograph of UAV hyperspectral imaging system.

For the light UAV platform, a customized DJI Wind 4 flight platform was adopted [45]; its symmetrical motor wheelbase was 1050 mm, the overall weight (excluding battery) was 7.3 kg, the maximum takeoff weight was 24.5 kg, the maximum flight speed was 14 m/s, and the maximum hovering time (single battery) was 28 min. However, considering the duration of the ascent and descent phases and the safety buffer, the duration of a flight plan generally did not exceed 20 min.

The pan/tilt/zoom (PTZ) system of the spectrometer is a Ronin-MX Gimbal Stabilizer [46]. Its built-in IMU accurately measures the PTZ attitude information and feeds it back to a 32-bit digital signal processor (DSP) customized by DJI. The DSP computes the stabilization action within milliseconds and drives three brushless motors so that the angle jitter of the PTZ is controlled within ±0.02°. Consequently, even if the three axes of the pan–tilt are in motion, the Ronin-MX can still ensure that the spectrometer obtains a stable and smooth picture.

The RGB camera is a DJI Zenmuse X3 PTZ camera. It supports video recording at up to 4K and 30 fps (60 Mbps) in ultra-high definition and at 1080p and 60 fps in high definition, as well as still photographs of up to 12 megapixels. Its lens has nine elements, including two aspheric elements, and it uses a 1/2.3-inch CMOS sensor behind a 94° wide-angle fixed-focus lens designed to avoid picture distortion. The camera has a three-axis pan–tilt system that rotates through 360° without occlusion.

The mini-PC is an Intel NUC Mini-PC with an i5-7260U processor, a 2.2 GHz–3.2 GHz main frequency, a 4 MB cache, a 15 W thermal design power, 32 GB of memory, and a 200 GB SSD, which meets the storage requirements for the data cubes of 500 waypoints.

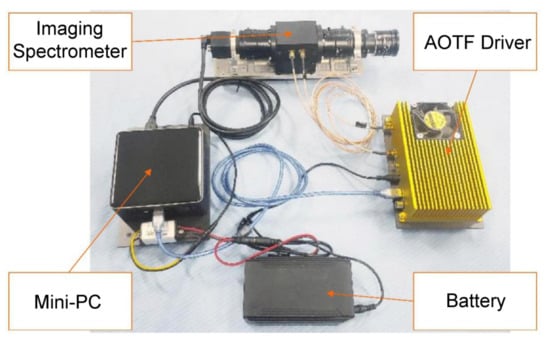

2.1.1. Hyperspectral Imager

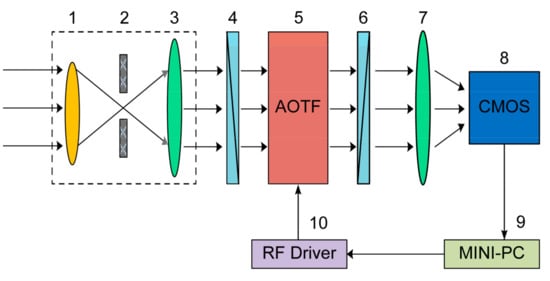

The merits of the AOTF include a small size, light weight, lack of moving parts, flexible wavelength selection, and strong environmental adaptability [20,47,48,49], which make it suitable as a light-splitting device in a high-resolution imaging spectrometer for aerial remote sensing [50]. The AOTF hyperspectral imager provides a novel spectral scanning imaging method. In the design of the system, the aperture diaphragm and the camera focal plane are placed in a conjugate imaging optical path, as shown in Figure A1. The entire system comprises the optical system, the diffraction element, the orthogonal polarizers, the radio frequency (RF) driver and control, and the detector [20]. The optical system design should meet the imaging and diffraction requirements. The diffraction design should meet the Bragg diffraction efficiency requirements; that is, the diffracted energy must be concentrated in the positive and negative first orders. The orthogonal polarization design should provide a high extinction ratio and eliminate zero-order light and stray light. The RF driver should meet the requirements of RF driving and synchronous control. A large-area array CMOS detector is used, and spectrum data acquisition is completed by the detector under RF driver control through software and hardware design. The specific workflow of the AOTF hyperspectral imager is as follows [51].

The control computer sends instructions to the RF signal driver to generate a sine wave RF signal with a specific frequency. The RF signal is then transmitted to the ultrasonic transducer of the AOTF module, which converts it into an ultrasonic signal. The birefringence of the AOTF crystal (a TeO2 crystal is used in this system) is altered by the ultrasonic waves. Simultaneously, the target and background radiation incident on the front objective and collimator is converted into parallel light and then transmitted to the orthogonal polarizer and the TeO2 crystal. The incident light is then divided into first-order positive light, first-order negative light, and zero-order white light. These beams are converged by the second imaging lens; the first-order positive light enters the detector for imaging, while the other light does not enter the detector. Finally, the CMOS detector completes the single-band 2D data acquisition, and repeating this process for each band yields the hyperspectral data cube [52].

The core optical path structure of the AOTF hyperspectral imaging system spectrometer is illustrated in Figure A1 in Appendix A. Table A2 in Appendix A shows the spectrometer design integration materials based on AOTF technology.

The incident beam passes through the front objective lens (1), the aperture diaphragm (2), and the collimating lens (3), is then incident on the surface of the linear polarizer (4), and after polarization reaches the surface of the AOTF module. Acousto-optic interaction in the AOTF module generates a diffracted beam. After passing through a linear polarizer (6), the beam is focused by a secondary imaging lens (7) onto the CMOS detector target, and the data are recorded and processed by the mini-PC. The polarization direction of the linear polarizer (4) is parallel to the acousto-optic interaction plane of the AOTF and perpendicular to the polarization direction of the linear polarizer (6). The purpose of the linear polarizer (6) is to filter out the zero-order transmitted light.

There are two optimizations in the design of the imaging spectrometer. First, because the AOTF module has a first-order deflection angle of 2.17°, the optical axis is not perpendicular to the imaging plane of the detector, which causes aberration. Therefore, in the structural design of the optical path that follows the AOTF crystal, the secondary imaging lens assembly is deflected by a certain angle to offset the influence of the first-order deflection angle of the AOTF module. Second, because the separation angle of the AOTF module is 4° and the linear polarizer (6) cannot completely eliminate the zero-order transmitted light, the first-order diffracted light overlaps with the zero-order transmitted light. An aperture diaphragm in the optical system therefore separates the first-order diffracted light from the zero-order transmitted light completely before they reach the imaging plane of the CMOS detector, and limiting the window of the large-area array CMOS detector ensures that only the first-order diffracted monochromatic light is received. Figure 2 shows the spectrometer used.

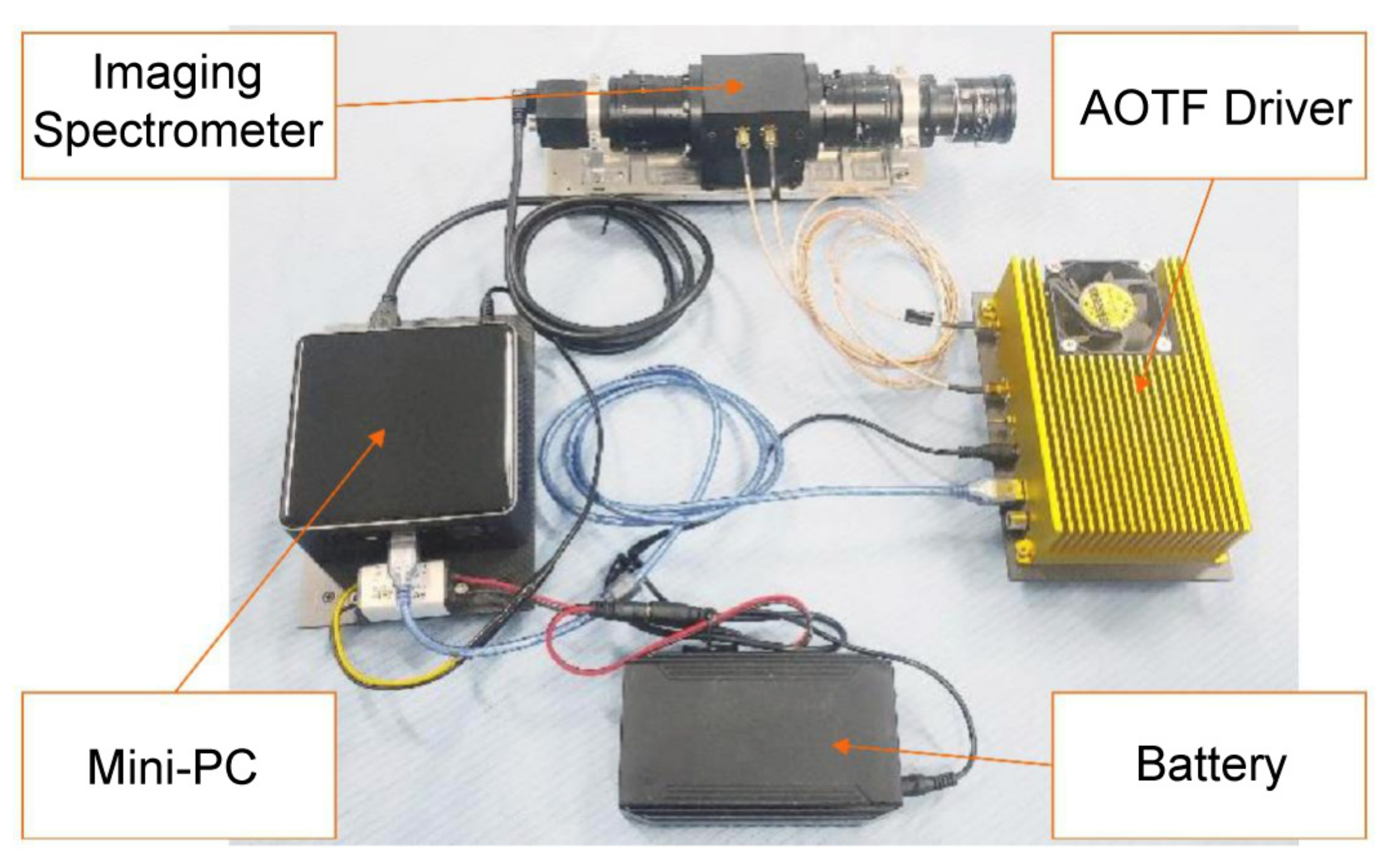

Figure 2. Components of the AOTF imaging system: AOTF imaging spectrometer, AOTF driver, mini-PC, and LiPo battery.

2.1.2. Spectral Resolution of Spectral Imager

According to the working principle of an AOTF, the tuning relationship of the non-collinear acousto-optic filter is expressed as follows [53,54]:

$$\lambda = \frac{v_a \, \Delta n}{f_a} \sqrt{\sin^4 \theta_i + \sin^2 2\theta_i}$$

where $\lambda$ is the diffraction wavelength, $v_a$ is the ultrasonic wave velocity, $\theta_i$ is the angle between the incident light and the optical axis of the crystal, $f_a$ is the ultrasonic frequency, and $\Delta n$ is the birefringence of the interaction medium. The tuning relationship reflects the one-to-one correspondence between the driving frequency and the diffraction wavelength of the AOTF. By changing the frequency of the RF signal, and thereby the frequency of the acoustic wave, the spectral wavelength can be switched within a certain spectral range.
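The correspondence between drive frequency and diffracted wavelength can be sketched numerically. The snippet below is a minimal illustration of the standard non-collinear tuning relation, wavelength = (acoustic velocity × birefringence / frequency) × sqrt(sin⁴θ + sin²2θ). The numerical values for the acoustic velocity, birefringence, and incidence angle are placeholder assumptions chosen only for illustration, not parameters of the instrument described here, and the birefringence is treated as constant although in a real crystal it varies with wavelength.

```python
import math

# Placeholder crystal parameters (assumptions for illustration only).
V_A_M_S = 650.0      # acoustic velocity in the crystal, m/s
DELTA_N = 0.15       # birefringence, treated as wavelength-independent
THETA_I_DEG = 20.0   # angle between incident light and optical axis, degrees


def geometry_factor(theta_i_deg):
    """sqrt(sin^4(theta) + sin^2(2*theta)) from the tuning relation."""
    theta = math.radians(theta_i_deg)
    return math.sqrt(math.sin(theta) ** 4 + math.sin(2.0 * theta) ** 2)


def wavelength_nm(freq_mhz, theta_i_deg=THETA_I_DEG,
                  delta_n=DELTA_N, v_a=V_A_M_S):
    """Diffracted wavelength (nm) for a given RF drive frequency (MHz)."""
    wavelength_m = v_a * delta_n / (freq_mhz * 1e6) * geometry_factor(theta_i_deg)
    return wavelength_m * 1e9


def frequency_mhz(target_nm, theta_i_deg=THETA_I_DEG,
                  delta_n=DELTA_N, v_a=V_A_M_S):
    """RF drive frequency (MHz) needed to select a given wavelength (nm)."""
    freq_hz = v_a * delta_n / (target_nm * 1e-9) * geometry_factor(theta_i_deg)
    return freq_hz / 1e6


# Drive-frequency table for a 400-1000 nm scan in 5 nm steps.
bands_nm = list(range(400, 1001, 5))
tuning_table = [(wl, frequency_mhz(wl)) for wl in bands_nm]
```

Because the relation is inversely proportional in frequency, shorter wavelengths require higher drive frequencies, and a scan over the spectral range reduces to stepping through a precomputed frequency table such as `tuning_table`.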

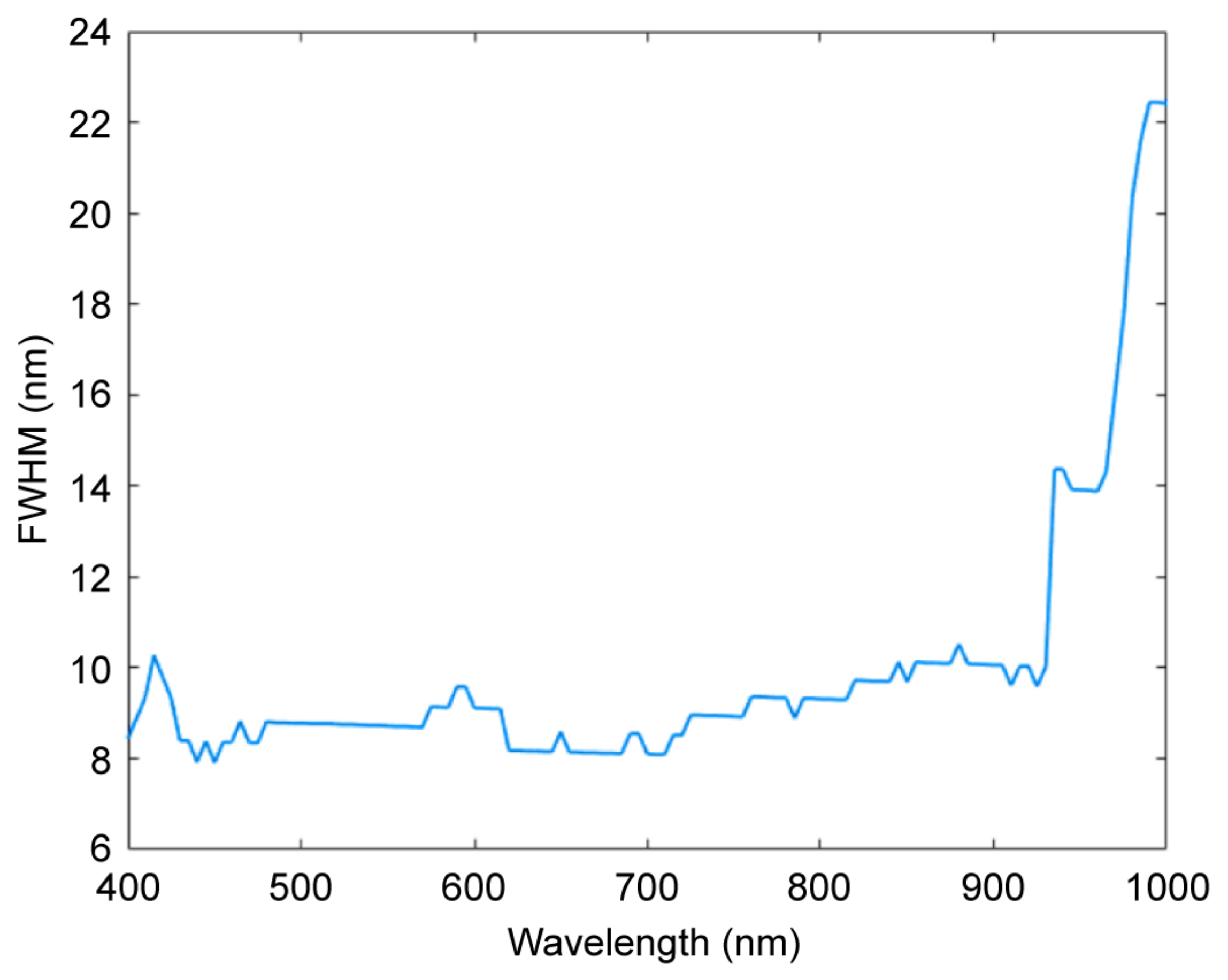

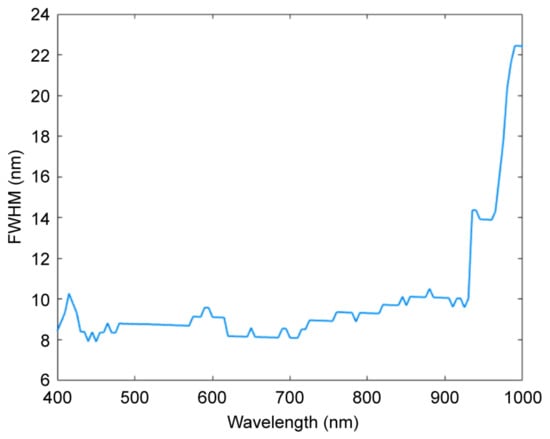

Based on the core optical structure of the spectrometer, the CMOS detector was replaced by an optical fiber spectrometer, and the RF driver was controlled by a mini-PC. The spectral performance of the AOTF module of the spectrometer was measured with a step size of 5 nm from 400–1000 nm. Figure 3 shows the spectral resolution test results of 121 processed spectral bands measured using an optical fiber spectrometer. The full width at half maximum was used to represent the spectral resolution of the spectrometer.

Figure 3. Determination of spectral resolution of spectral imager.
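As a hedged illustration of how a full width at half maximum can be extracted from one measured passband, the sketch below locates the two half-maximum crossings by linear interpolation. The function name `fwhm_nm` and the synthetic Gaussian passband are assumptions made for the example; the processing actually applied to the fiber spectrometer data is not specified in the text.

```python
import numpy as np


def fwhm_nm(wavelengths_nm, intensity):
    """Full width at half maximum of a single-peaked spectrum.

    The half-maximum crossings on both sides of the peak are located
    by linear interpolation between neighbouring samples.
    """
    wl = np.asarray(wavelengths_nm, dtype=float)
    y = np.asarray(intensity, dtype=float)
    y = y - y.min()                       # remove a constant baseline
    half = y.max() / 2.0
    above = np.where(y >= half)[0]        # samples at or above half maximum
    left, right = above[0], above[-1]
    if left == 0 or right == len(y) - 1:
        raise ValueError("peak is truncated by the sampled range")
    # Rising edge: y[left-1] < half <= y[left]
    x_left = np.interp(half, [y[left - 1], y[left]], [wl[left - 1], wl[left]])
    # Falling edge: y[right+1] < half <= y[right]
    x_right = np.interp(half, [y[right + 1], y[right]], [wl[right + 1], wl[right]])
    return float(x_right - x_left)


# Synthetic passband centred at 550 nm with sigma = 2 nm (illustrative only).
grid = np.linspace(540.0, 560.0, 201)
passband = np.exp(-0.5 * ((grid - 550.0) / 2.0) ** 2)
width = fwhm_nm(grid, passband)
```

For a Gaussian passband the result should be close to 2·sqrt(2·ln 2)·σ, which gives a quick sanity check before applying the function to each of the measured bands.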

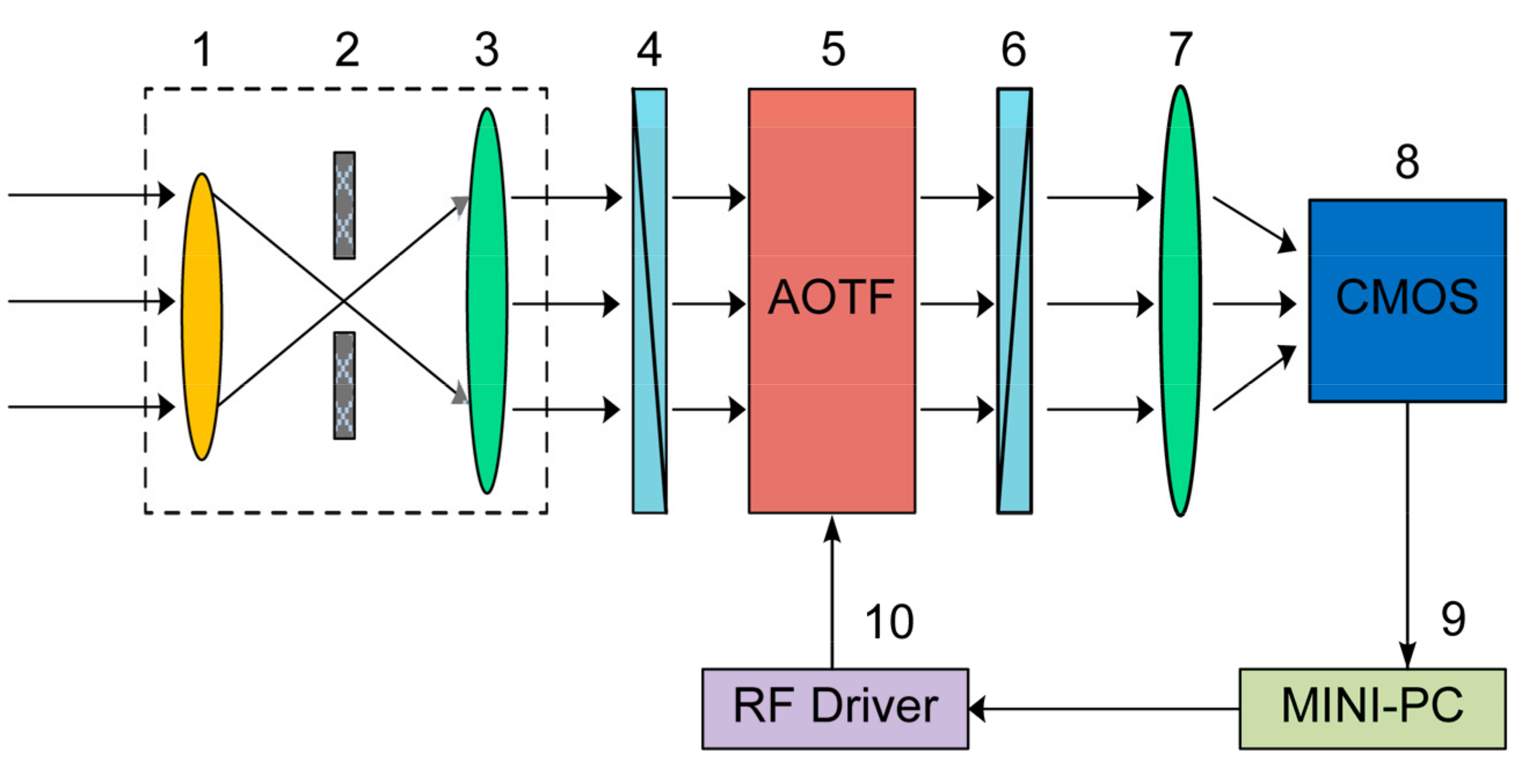

2.2. Remote Sensing Data Acquisition Control

According to the working area of remote sensing data collection, the flight route was planned and the waypoints on the route were selected using DJI ground flight control software. When selecting the flight route and waypoints, the duration of the flight plan must be considered. In addition, waypoints must be set according to the ground field of view, which is calculated from the focal length of the imaging objective lens of the spectrometer and the aperture diaphragm. The ground fields of view of adjacent waypoints must overlap to facilitate the splicing of spectral data into a large field of view. After the route and waypoints are determined, the actions to be undertaken at each waypoint are set, including triggering the RGB camera exposure, triggering the imaging spectrometer to begin scanning, and the UAV hovering time needed to complete the acquisition of the spectrometer data cube. Subsequently, KML files containing the route and waypoint data are generated using the ground flight control software and imported into the UAV flight control software. Reflectance targets must be laid on the ground at points corresponding to the flight route or waypoints to facilitate subsequent radiation correction. The hyperspectral remote sensing data can then be collected according to the UAV remote sensing data acquisition process shown in Figure A2 in Appendix A.
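The waypoint planning constraints described above can be sketched with simple geometry. The example assumes a nadir-looking imager over flat ground, so that the ground footprint is 2·h·tan(FOV/2). The altitude, field of view, overlap fraction, hover time, and cruise speed are hypothetical values chosen for illustration; only the 20 min flight-plan limit comes from the text.

```python
import math


def ground_footprint_m(altitude_m, fov_deg):
    """Width of the ground field of view for a nadir-looking imager."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def waypoint_spacing_m(altitude_m, fov_deg, overlap):
    """Distance between adjacent waypoints for a given overlap fraction."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return ground_footprint_m(altitude_m, fov_deg) * (1.0 - overlap)


def max_waypoints(flight_budget_s, hover_s, spacing_m, speed_m_s):
    """Number of waypoints that fit in the flight plan.

    Each waypoint is charged its hover time plus the transit time to the
    next waypoint; ascent and descent are assumed to be excluded from the
    budget already.
    """
    per_waypoint_s = hover_s + spacing_m / speed_m_s
    return int(flight_budget_s // per_waypoint_s)


# Hypothetical mission parameters (assumptions for illustration).
spacing = waypoint_spacing_m(altitude_m=100.0, fov_deg=10.0, overlap=0.3)
n_waypoints = max_waypoints(flight_budget_s=20 * 60, hover_s=30.0,
                            spacing_m=spacing, speed_m_s=5.0)
```

Because a spectral scanning imager must hover while every band is exposed, the hover time rather than the transit time usually dominates the per-waypoint cost in this kind of estimate.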

The specific data acquisition procedure is as follows:

- (1) Before the implementation of the remote sensing flight test, check the system link and power it on, log in to the control computer mini-PC, start the remote sensing data acquisition control software, link the AOTF-based hyperspectral imager and AOTF RF controller, and complete the ground photographing self-test.

- (2) Initiate parameter configuration: according to the weather conditions of the flight test, set the integration time and gain step length of the spectral camera, and then set the spectral range and spectral segment number of the AOTF spectrometer in flight.

- (3) Import the preset route and waypoint information into the UAV flight control software, including longitude and latitude, altitude, yaw angle, and hover time, and start the UAV to perform flight tasks.

- (4) After the UAV reaches the waypoint, it triggers the RGB camera to carry out an image acquisition over a large field of view; simultaneously, the trigger command is transmitted to the spectrometer acquisition control program.

- (5) At the waypoint, the spectrometer acquisition control program controls the AOTF driver to turn off the RF drive signal and carry out dark background image acquisition.

- (6) According to the data acquisition parameter configuration, the spectrometer acquisition control program conducts high-speed data acquisition at each wavelength. In addition, in the process of data acquisition at each wavelength, the distortion model is used to carry out real-time correction, and the hyperspectral data cube is stored in a specific data structure.

- (7) Whether data acquisition of the last waypoint in the route has been carried out is assessed. If not, the flight proceeds to the next waypoint and repeats step (4); if completed, flight data acquisition is terminated, and the UAV returns to the ground automatically.
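The control flow of steps (4) to (7) can be sketched as a simple loop. The code below is a simulation only: `SimulatedSpectrometer` and its methods are a hypothetical stand-in for the imager and RF driver, not the interface of the actual acquisition software, and the RGB trigger and real-time distortion correction are omitted.

```python
import numpy as np


class SimulatedSpectrometer:
    """Hypothetical stand-in for the AOTF imager and its RF driver."""

    def __init__(self, shape=(4, 4), seed=0):
        self.shape = shape
        self.rng = np.random.default_rng(seed)
        self.rf_on = False
        self.wavelength_nm = None

    def rf_off(self):
        """Switch the RF drive signal off (no diffracted light)."""
        self.rf_on = False

    def tune(self, wavelength_nm):
        """Switch the RF drive on at the frequency for this wavelength."""
        self.rf_on = True
        self.wavelength_nm = wavelength_nm

    def grab_frame(self):
        """Return one 2D frame: dark level, plus signal when RF is on."""
        dark = self.rng.normal(10.0, 0.5, self.shape)
        return dark + 100.0 if self.rf_on else dark


def acquire_waypoint(spec, wavelengths_nm):
    """Steps (5) and (6): dark frame first, then one frame per band."""
    spec.rf_off()
    dark = spec.grab_frame()
    frames = []
    for wl in wavelengths_nm:
        spec.tune(wl)
        frames.append(spec.grab_frame())
    return dark, np.stack(frames)        # cube shape: (bands, rows, cols)


def fly_route(waypoints, spec, wavelengths_nm):
    """Steps (4) and (7): visit each waypoint in turn until the last one."""
    return {wp: acquire_waypoint(spec, wavelengths_nm) for wp in waypoints}


cubes = fly_route(["WP1", "WP2"], SimulatedSpectrometer(),
                  range(400, 1001, 5))
dark_wp1, cube_wp1 = cubes["WP1"]
```

Storing the dark frame alongside each cube keeps the later dark-background subtraction tied to the conditions at the same waypoint.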

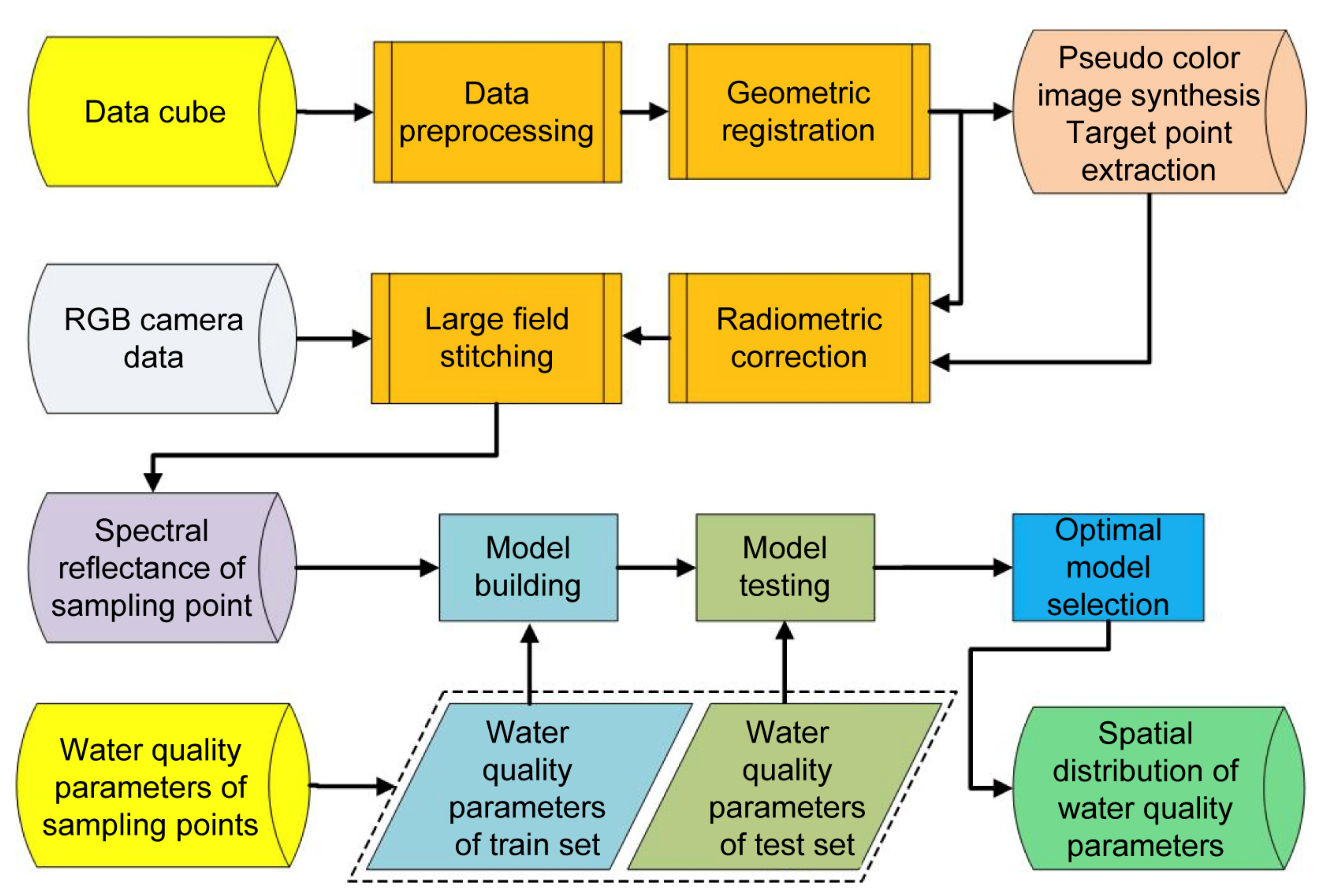

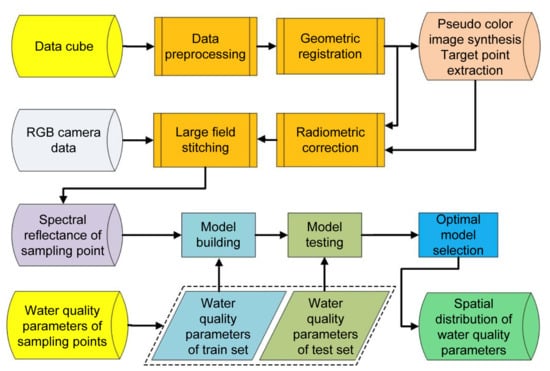

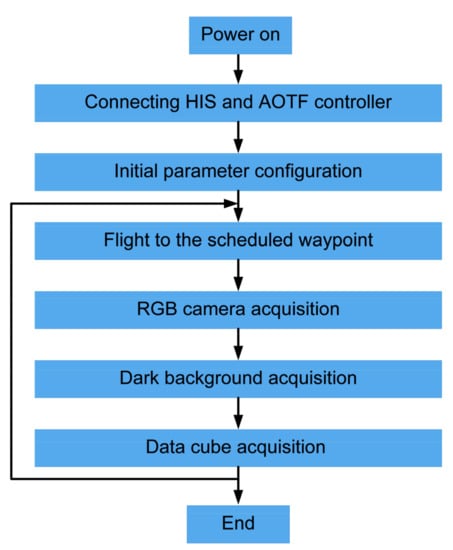

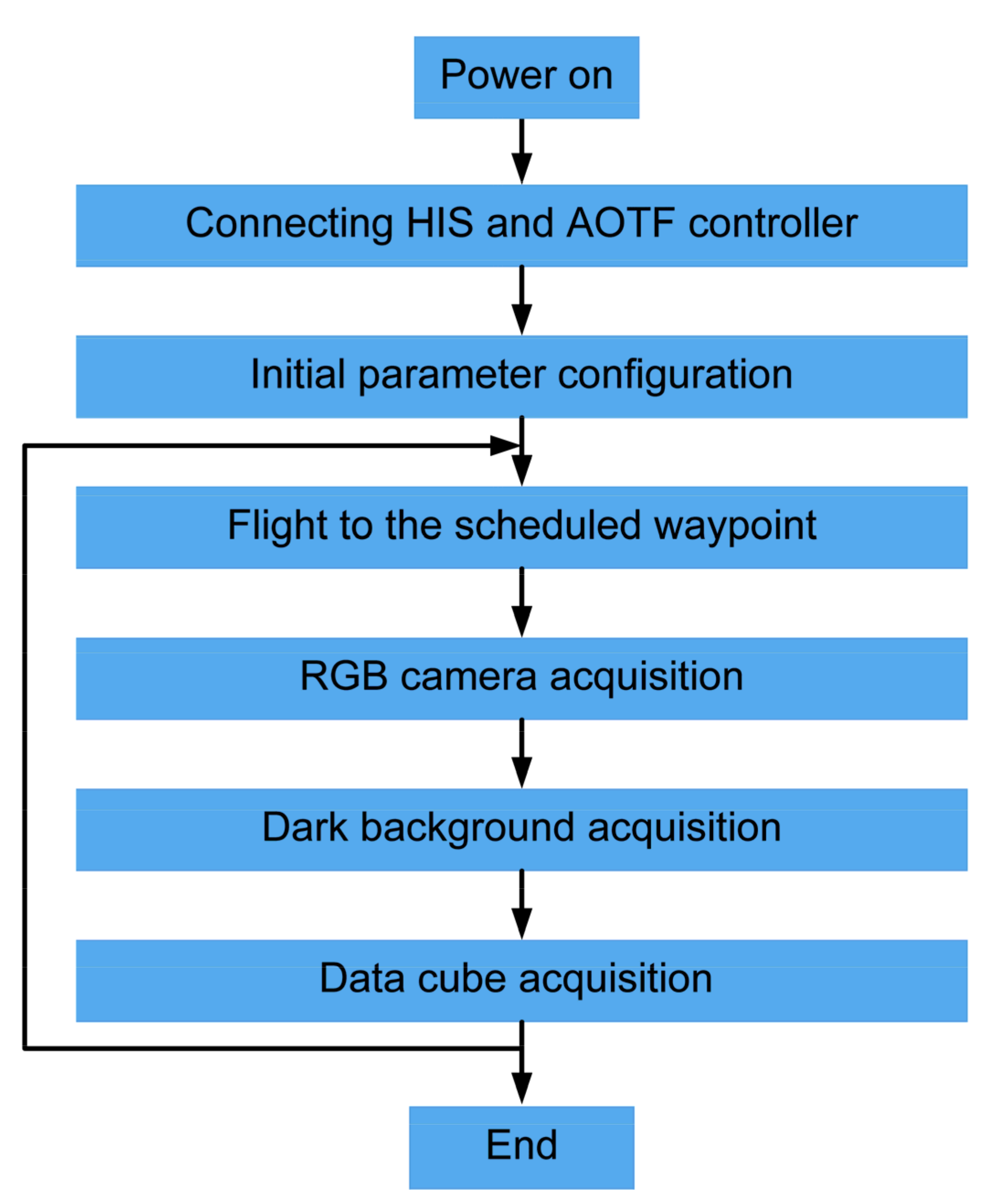

2.3. Data Processing Workflow

Following the data acquisition as elaborated in Section 2.2, data processing is performed according to the workflow illustrated in Figure 4.

Figure 4. Data processing flow chart.

First, the data cube collected by the UAV at a single navigation point is preprocessed, which includes subtracting the collected dark background image from the two-dimensional image of each band to obtain the spectral data cube with the dark background removed, and then nonuniformity correction is carried out on the spectral data cube.

Second, the geometric registration algorithm is used to register spectral data to eliminate registration errors of different spectral segments caused by airflow and UAV vibration in remote sensing flight tests.

Third, appropriate spectral segments (480, 530, and 650 nm in this paper) are selected to form a pseudo-color fusion image for the extraction of target points on the ground.

Fourth, the relative radiation calibration is completed using the hyperspectral data cube with the dark background removed and the image coordinates of the calibration target board identified in the pseudo-color image, and the reflectivity hyperspectral data cube is obtained.

Fifth, with the aid of the images acquired by the RGB camera, the reflectivity hyperspectral data cube is used to complete the spectrum extraction at the sampling points and the wide field-of-view data mosaic.

Sixth, the water quality parameters of the sampling points and the extracted spectral reflectance of the sampling points are divided into modeling and test sets, the inversion model of the water quality parameters is established, and the model test is completed.

Finally, according to the concentration inversion model, the optimal model is selected and applied to the wide field-of-view spectral data to obtain the concentration of various parameters in the water and draw a spatial distribution map of water quality parameters.
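The third and fourth steps of this workflow can be sketched as follows, under stated assumptions. The relative calibration is illustrated with a single reference target of known reflectance, so that each band is scaled by the ratio of the known reflectance to the mean target signal; the channel assignment of 650, 530, and 480 nm to red, green, and blue is likewise an assumption. The function names and the synthetic scene are hypothetical.

```python
import numpy as np


def pseudo_color(cube, wavelengths_nm, rgb_nm=(650, 530, 480)):
    """Compose an RGB preview from the bands nearest the requested wavelengths."""
    wl = np.asarray(wavelengths_nm, dtype=float)
    idx = [int(np.argmin(np.abs(wl - w))) for w in rgb_nm]
    rgb = np.stack([cube[i] for i in idx], axis=-1).astype(float)
    peak = rgb.max()
    return rgb / peak if peak > 0 else rgb


def to_reflectance(cube, target_mask, target_reflectance):
    """Scale a dark-subtracted cube to reflectance using one reference target.

    cube: (bands, rows, cols); target_mask: boolean (rows, cols) marking the
    target pixels; target_reflectance: scalar or per-band array.
    """
    cube = np.asarray(cube, dtype=float)
    target_dn = cube[:, target_mask].mean(axis=1)      # mean target signal per band
    scale = np.asarray(target_reflectance, dtype=float) / target_dn
    return cube * scale[:, None, None]


# Synthetic scene: water at reflectance 0.3, a 2 x 2 target at 0.5.
rng = np.random.default_rng(1)
wavelengths = np.arange(400, 1001, 5)
true_reflectance = np.full((5, 5), 0.3)
true_reflectance[0:2, 0:2] = 0.5
target_mask = true_reflectance == 0.5
illumination = rng.uniform(500.0, 1500.0, size=wavelengths.size)
scene_cube = illumination[:, None, None] * true_reflectance

preview = pseudo_color(scene_cube, wavelengths)
reflectance_cube = to_reflectance(scene_cube, target_mask, 0.5)
```

In practice the target mask would be drawn on the pseudo-color preview, which is why the composite is generated before the calibration step.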

2.3.1. Data Preprocessing

Numerous types of information exist in the dark background, such as dark noise, stray light, and interference from zero-order diffracted light caused by the limited extinction ratio of the polarizer in the spectrometer. The dark background must therefore be removed from the original data cube, as follows. The dark background image is collected after the AOTF is turned off. Then, the dark background image is subtracted from the 2D image of each band in the data cube collected by the UAV at a single waypoint. The resulting spectral data cube, with the dark background removed, has improved contrast and clarity in the 2D image of each spectral band.
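A minimal sketch of the dark-background subtraction is shown below. The function name is hypothetical, and clipping negative differences to zero is an assumption of this example rather than a documented step of the processing chain.

```python
import numpy as np


def remove_dark_background(cube, dark_frame):
    """Subtract one dark frame from every band of a (bands, rows, cols) cube.

    Inputs are converted to float first so that unsigned integer detector
    counts cannot wrap around when the dark level exceeds the signal.
    """
    cube = np.asarray(cube, dtype=np.float64)
    dark = np.asarray(dark_frame, dtype=np.float64)
    if cube.shape[1:] != dark.shape:
        raise ValueError("dark frame must match the spatial size of the cube")
    return np.clip(cube - dark[None, :, :], 0.0, None)


# Example with 16-bit detector counts (synthetic values).
raw_cube = np.full((3, 2, 2), 150, dtype=np.uint16)
raw_cube[0] = 100                                   # one band darker than the dark frame
dark_image = np.full((2, 2), 120, dtype=np.uint16)
clean_cube = remove_dark_background(raw_cube, dark_image)
```

The float conversion matters: subtracting two `uint16` arrays directly would turn a slightly negative difference into a very large positive count.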

In addition, flat-field correction of the spectral data cube is carried out [55,56] to eliminate the influence on the measurement results of uneven illumination, dark current inside the detector, the uneven spatial distribution of the AOTF diffraction efficiency, differing pixel responses of the CMOS detector, and differing transmittance of the optical lens at different positions.

In the present study, a typical two-point correction algorithm [57] was used to correct the nonuniformity of the data cube collected using the spectral imager, and the nonuniformity correction coefficients $G_{ij}$ and $O_{ij}$ were obtained. At a specific wavelength, the mathematical model of the two-point correction method is as follows:

$$\bar{Y}(\varphi) = G_{ij} X_{ij}(\varphi) + O_{ij}$$

where $\bar{Y}(\varphi)$ is the average gray value of all pixels on the detector target surface when the radiance is $\varphi$; $X_{ij}(\varphi)$ is the actual gray value of the pixel in row $i$ and column $j$ of the detector without correction, after removing dark noise and random noise; $G_{ij}$ represents the gain of the pixel in row $i$ and column $j$ of the detector; and $O_{ij}$ represents the offset of the pixel in row $i$ and column $j$ of the detector. The nonuniformity correction coefficients $G_{ij}$ and $O_{ij}$ can be derived by selecting two images with different radiances.
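As a sketch of how the per-pixel gain and offset follow from two flat-field images, the example below solves the two linear equations obtained by requiring every pixel to map onto the frame mean at both radiance levels. The function names and the synthetic detector response are assumptions for illustration; pixels whose response does not change between the two levels (for example, dead pixels) would cause a division by zero and need separate handling.

```python
import numpy as np


def two_point_coefficients(flat_low, flat_high):
    """Per-pixel gain and offset from two uniform-radiance images.

    Requiring gain * X + offset to equal the frame mean at both radiance
    levels gives
        gain   = (mean_high - mean_low) / (X_high - X_low)
        offset = mean_low - gain * X_low
    """
    low = np.asarray(flat_low, dtype=float)
    high = np.asarray(flat_high, dtype=float)
    gain = (high.mean() - low.mean()) / (high - low)
    offset = low.mean() - gain * low
    return gain, offset


def apply_two_point(frame, gain, offset):
    """Apply the nonuniformity correction to one frame."""
    return gain * np.asarray(frame, dtype=float) + offset


# Synthetic detector with a different linear response at every pixel.
rng = np.random.default_rng(2)
true_gain = rng.uniform(0.8, 1.2, (4, 6))
true_offset = rng.uniform(-5.0, 5.0, (4, 6))


def respond(radiance):
    """Raw detector frame for a spatially uniform radiance level."""
    return true_gain * radiance + true_offset


flat_low, flat_high = respond(100.0), respond(400.0)
gain, offset = two_point_coefficients(flat_low, flat_high)
corrected_mid = apply_two_point(respond(250.0), gain, offset)
```

For a detector that responds linearly, a uniform scene at any intermediate radiance becomes spatially uniform after correction, which is what `corrected_mid` demonstrates.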

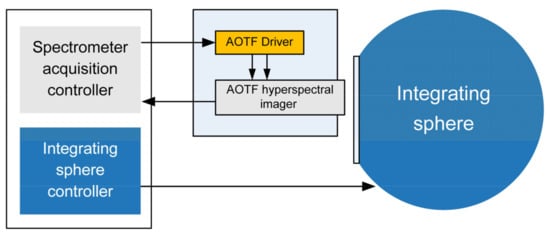

Generally, an integrating sphere is used as a uniform radiation source in the flat-field correction process. An Xth-2000 large aperture integrating sphere of Labsphere (Sutton, NH, USA) was used in the present study. Figure A3 in Appendix A presents a schematic diagram of laboratory calibration.

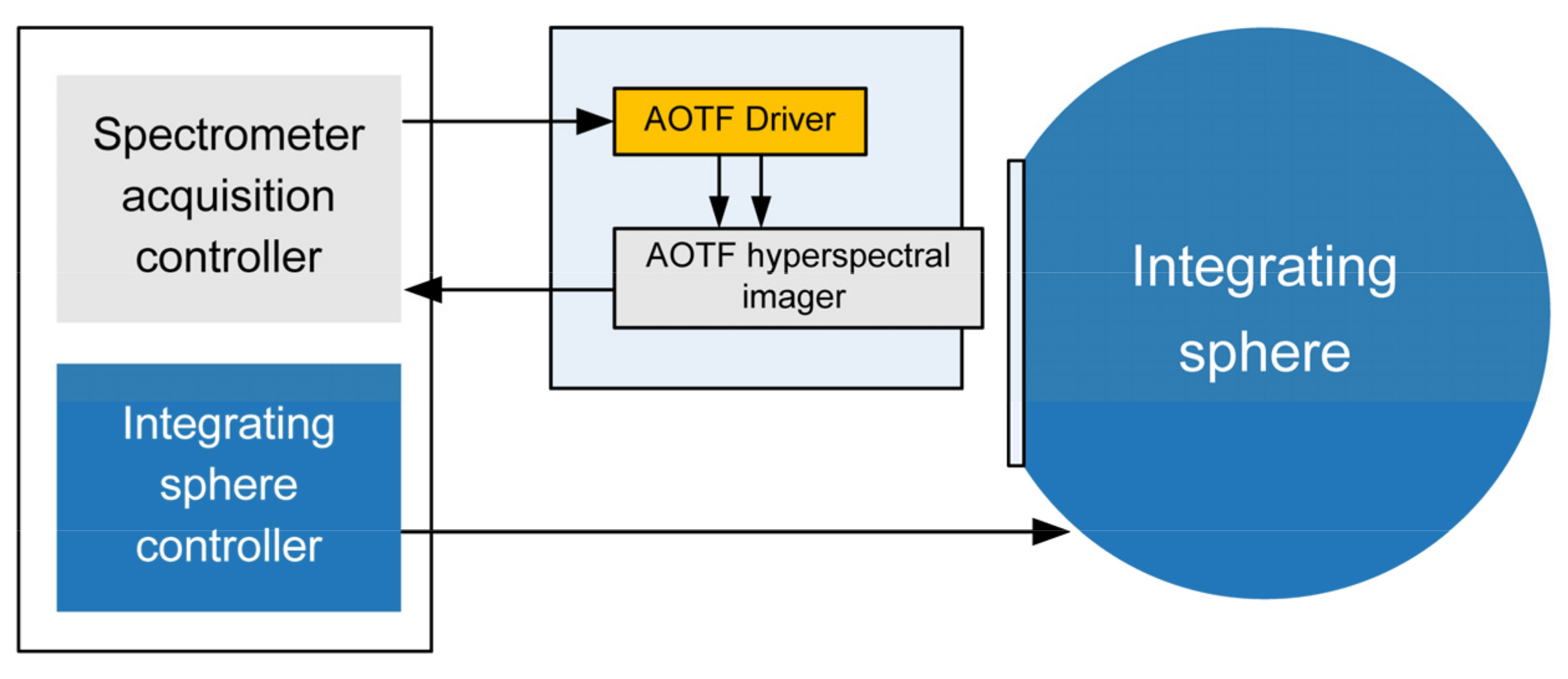

2.3.2. Geometric Registration

The data cube of the AOTF spectrometer is acquired by frame scanning. Owing to the inevitable air flow and UAV vibration during the UAV flight test, the images of different spectral segments shift relative to one another, which makes it impossible to extract an accurate spectral curve for ground objects [58]. Therefore, geometric registration of the collected data cube is required.

Fortunately, in the frame scanning imaging spectrometer based on AOTF, each frame is a complete 2D surface feature image. Geometric registration can be carried out using the point feature, line segment feature, edge, contour, closed area, and other features of the 2D surface feature image [59]. In addition, the phase difference caused by the drift of diffracted light in the AOTF spectrometer can be improved following geometric registration.

In geometric registration, the image of a single spectral band can be represented using a two-dimensional matrix. If $I_1(x, y)$ and $I_2(x, y)$ represent the gray values of the images with wavelengths of $\lambda_1$ and $\lambda_2$, respectively, at point $(x, y)$, then the geometric registration relationship between images $I_1$ and $I_2$ can be expressed as follows [60]:

$$I_2(x, y) = g\big(I_1(f(x, y))\big) \tag{5}$$

where $f$ represents the 2D geometric transformation function, and $g$ represents the one-dimensional gray-scale transformation function.

The main objective of registration is to find the optimal spatial transformation relationship $f$ and gray transformation relationship $g$, so that the two images can achieve the best alignment. Spatial transformation is the premise of gray level transformation, and in some cases, it is not necessary to determine the gray level transformation. Consequently, it is essential to determine the spatial transformation relation $f$. Therefore, Equation (5) can be simplified as follows:

$$I_2(x, y) = I_1(f(x, y))$$

According to the above description of geometric registration, combined with the spectral data cube collected by the spectral imager, a schematic of any two spectral bands is illustrated in Figure A4 in Appendix A [60].

The concrete realization of geometric registration is as follows. First, the reference image and the image to be registered are preprocessed using Gaussian low-pass filtering. Next, the partial volume interpolation method is used to calculate the joint histogram [61], from which the mutual information of the two images is calculated. The Powell search algorithm [62] is then used to determine whether the current transformation parameters are optimal under the maximum mutual information criterion. If they are not, the search continues: spatial geometric transformation, joint histogram calculation, mutual information calculation, and optimization are repeated until parameters that meet the accuracy requirements are found.
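The core of this loop, scoring a candidate transformation by mutual information and keeping the best one, can be sketched in a few lines. The sketch below makes several simplifying assumptions that differ from the procedure described above: the transformation is restricted to integer pixel shifts, the joint histogram uses plain binning rather than partial volume interpolation, Gaussian prefiltering is omitted, and an exhaustive search over a small window stands in for the Powell optimizer. All function names are hypothetical.

```python
import math
import random


def mutual_information(a, b, bins=8, max_value=256):
    """Mutual information of two equally long gray-value sequences."""
    joint = [[0] * bins for _ in range(bins)]
    for x, y in zip(a, b):
        joint[x * bins // max_value][y * bins // max_value] += 1
    n = len(a)
    pa = [sum(row) / n for row in joint]
    pb = [sum(joint[i][j] for i in range(bins)) / n for j in range(bins)]
    mi = 0.0
    for i in range(bins):
        for j in range(bins):
            if joint[i][j]:
                pij = joint[i][j] / n
                mi += pij * math.log(pij / (pa[i] * pb[j]))
    return mi


def overlap_pixels(fixed, moving, dx, dy):
    """Paired gray values where fixed[y][x] meets moving[y - dy][x - dx]."""
    h, w = len(fixed), len(fixed[0])
    a, b = [], []
    for y in range(max(0, dy), min(h, h + dy)):
        for x in range(max(0, dx), min(w, w + dx)):
            a.append(fixed[y][x])
            b.append(moving[y - dy][x - dx])
    return a, b


def register_shift(fixed, moving, max_shift=3):
    """Integer (dx, dy) that maximizes mutual information (exhaustive search)."""
    best, best_mi = (0, 0), -1.0
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            a, b = overlap_pixels(fixed, moving, dx, dy)
            mi = mutual_information(a, b)
            if mi > best_mi:
                best, best_mi = (dx, dy), mi
    return best


# Synthetic test pair: the second "band" is an intensity-inverted copy of
# the first, displaced by one column and two rows.
rng = random.Random(0)
fixed = [[rng.randrange(256) for _ in range(24)] for _ in range(24)]
moving = [[255 - fixed[(y + 2) % 24][(x + 1) % 24] for x in range(24)]
          for y in range(24)]
shift = register_shift(fixed, moving)
```

The inverted intensities in the synthetic pair illustrate why mutual information suits band-to-band registration: it rewards any consistent gray-level relationship between the two images, not only equal brightness.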

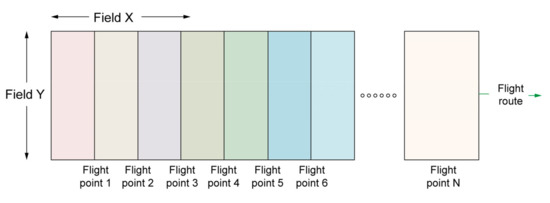

2.3.3. Field-of-View Splicing

To cover a large spatial range with a spectral scanning imaging spectrometer, a data cube was first acquired directly above one imaging field of view, and further data cubes were then acquired after moving to the top of each adjacent field of view. Finally, the multiple fields of view were spliced. Large field-of-view stitching requires the following steps: first, feature points are extracted from each image; second, the feature points are matched and image registration is carried out; third, one image is copied to the corresponding position in the other image; finally, the overlapping boundary is processed. For the hyperspectral data cube, the splicing is carried out band by band, one spectral band at a time. As illustrated in Figure A5 in Appendix A, there are several waypoints on the UAV flight route, and the data cube within the field of view is collected at each waypoint.

In the present study, the flight altitude of the UAV was set to 100 m, and the aperture size of the field of view was 10 mm × 3.2 mm. To ensure a high imaging spatial resolution, the focal length of the front objective lens was 12 mm, so that the ground field of view was 83 m × 26 m. To meet the basic conditions of field-of-view splicing, two adjacent fields of view must have more than a half of each of their fields overlapping according to the relevant technical specifications for aerial photography [63,64,65]. Generally, the course overlap rate is 53–65%, while the side overlap rate is 15–40%. In practical applications, the heading and side overlap rates should be appropriately increased to ensure the quality of the image mosaic in a large field of view with relatively few surface features. Therefore, when setting the waypoint, the distance between the two waypoints should not be greater than 15 m.
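The footprint figures above follow from similar triangles: ground extent = altitude × aperture size / focal length. The sketch below reproduces that arithmetic and adds a generic overlap calculation for a given waypoint spacing. It assumes a simple pinhole model and flat terrain, and the function names are illustrative.

```python
def ground_footprint(altitude_m, focal_mm, aperture_mm):
    """Ground extent (m) imaged through a field stop of the given size."""
    return altitude_m * aperture_mm / focal_mm


def overlap_ratio(footprint_m, spacing_m):
    """Fraction of one footprint shared with the next along the spacing axis."""
    return max(0.0, 1.0 - spacing_m / footprint_m)


long_side = ground_footprint(100, 12, 10)     # roughly 83 m
short_side = ground_footprint(100, 12, 3.2)   # roughly 27 m

# Overlap for a 15 m waypoint spacing along each footprint side.
overlap_long = overlap_ratio(long_side, 15)
overlap_short = overlap_ratio(short_side, 15)
```

Which footprint side lies along the flight direction is not stated in the text, so the sketch reports the overlap for both sides rather than choosing one.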

2.3.4. Radiation Calibration

During operation of the hyperspectral imager, the relationship between the acquired image data and the target radiance is affected by the irradiance of the object, transmittance of the optical system, spectral efficiency, quantum efficiency of the detector, electronic system, and other factors [66]. Hyperspectral imagers therefore require radiometric calibration [67,68] to establish the relationship between the radiance at the entrance pupil of the imager and the output image data. According to this relationship, the image data can be converted into radiance data, and the reflectivity of the target can be further calculated by combining the imaging conditions. This paper introduces the calibration of hyperspectral cameras in the laboratory and in the field.

In laboratory calibration, even for a full field of view and an input target of uniform brightness, the output image may be uneven owing to the different photosensitive responses of the pixels of the CMOS detector, dark current, and other factors. A set of detector response parameters that restores spatial consistency is therefore required, namely the nonuniformity correction parameters in Section 2.3.1. These parameters are used to correct the nonuniformity at each wavelength of the spectral camera and to reduce the image color difference and quantitative error caused by the nonuniform response of the spectrometer.

For UAV water remote sensing applications, to obtain the remote sensing reflectance of water from the airborne spectral imager, a ground spectroradiometer should be used to measure the water-leaving radiance at the control points on the water surface. When direct solar reflection is avoided and surface foam is ignored or avoided, the radiance relationship of water measured by the spectrometer is as follows.

When the hyperspectral imager is mounted on the UAV platform, the radiance at the entrance pupil of the spectrometer can be expressed as follows [69]:

$$L_{sw} = L_w + r\,L_{sky}$$

where $L_{sw}$ is the radiance at the entrance pupil; $L_w$ is the water-leaving radiance; $L_{sky}$ is the diffuse sky radiance, which carries no water information and must therefore be removed; and $r$ is the reflectivity of the air–water boundary for sky light, which depends on factors such as solar position, observation geometry, and wind speed and direction. Under normal geometric observation conditions, the value of $r$ is chosen according to the state of the water surface, with separate values for a calm water surface, a wind speed of 5 m/s, and a wind speed of 10 m/s.

Second, it is necessary to obtain the total incident irradiance on the water surface, which can be obtained by measuring the standard target plate [70]:

$$E_d(0^+) = \frac{\pi L_p}{\rho_p}$$

where $E_d(0^+)$ is the total incident irradiance on the water surface; $L_p$ is the radiance of the standard plate measured by the spectrometer; and $\rho_p$ is the reflectivity of the standard plate, which is usually chosen so that the instrument is in the same state when observing the water body and the standard plate.

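Then, the following formula is used to calculate the proximal remote sensing reflectance of the water body [25]:

$$R_{rs} = \frac{L_w}{E_d(0^+)}$$

where $L_w$ is the water-leaving radiance and $E_d(0^+)$ is the total incident irradiance on the water surface.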
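The three steps of this above-water measurement chain can be sketched per wavelength as follows: remove the reflected sky light from the measured radiance, derive the incident irradiance from the reference plate, and take the ratio. The function and variable names are assumptions made for illustration, and every numeric input below, including the interface reflectivity `r`, is a placeholder rather than a measured or recommended value.

```python
import math


def water_leaving_radiance(l_sw, l_sky, r):
    """Measured radiance minus the sky light reflected at the surface."""
    return l_sw - r * l_sky


def downwelling_irradiance(l_p, rho_p):
    """Incident irradiance from the radiance of a plate of reflectivity rho_p."""
    return math.pi * l_p / rho_p


def remote_sensing_reflectance(l_sw, l_sky, l_p, rho_p, r):
    """Ratio of water-leaving radiance to incident irradiance (per steradian)."""
    return (water_leaving_radiance(l_sw, l_sky, r)
            / downwelling_irradiance(l_p, rho_p))


# Placeholder single-band values in consistent radiance units.
rrs = remote_sensing_reflectance(l_sw=1.2, l_sky=10.0, l_p=20.0,
                                 rho_p=0.3, r=0.025)
```

In practice the same calculation would be repeated for every spectral band, giving one reflectance value per band at each control point.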

Finally, the corresponding relationship between the near-end remote sensing reflectance measured by the water surface control point and the remote sensing data cube collected by the UAV is established to complete the radiometric correction of the UAV remote sensing system and obtain the correction coefficient. The specific corresponding relationship is as follows [37]:

2.3.5. Water Quality Parameter Inversion

Hyperspectral images of the study area were acquired with the UAV hyperspectral imager, and in situ sampling and analysis of water quality parameters were conducted at ground sampling points. A concentration prediction model was established using the hyperspectral reflectance data cube and the in situ water quality parameters. In this study, a prediction model of Chl-a concentration was established from the data cube collected by the UAV hyperspectral imager, and the optimal prediction model was then applied to the UAV hyperspectral image to obtain the spatial distribution map of the water quality parameter.

For the hyperspectral data cube, the method introduced in Section 2.3.4 was used to extract the spectral reflectance of the water surface at the sampling points. There were multiple ground sampling points in this study, and the water quality at the ground sampling points was sampled in situ and tested for analysis. Then, the content gradient method [71] was applied to select the modeling set and the test set at the ratio of 1:1 for the sampling points.
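One common reading of a concentration-gradient split is to sort the samples by measured concentration and assign them alternately to the two sets, so that both sets span the full concentration range. The sketch below follows that reading, which is an assumption here; the cited method [71] may differ in detail, and the sample identifiers and values are invented for illustration.

```python
def gradient_split(samples, key):
    """Sort samples by concentration and alternate them between two sets."""
    ordered = sorted(samples, key=key)
    return ordered[0::2], ordered[1::2]


# Hypothetical (point id, Chl-a in ug/L) pairs.
samples = [("p1", 30.1), ("p2", 26.4), ("p3", 34.0), ("p4", 28.9)]
modeling_set, test_set = gradient_split(samples, key=lambda s: s[1])
```

With an even number of samples this yields the 1:1 ratio used in the study; with an odd number the modeling set receives the extra sample.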

Band selection identifies the bands most relevant to a water quality parameter by analyzing the correlation between the in situ measurements at the sampling points and the spectral reflectance at those points; the result is the sensitive band for that parameter. The sensitive band can be a single band, double band, or multi-band [72,73,74]. Because only a small number of samples were collected from the ground in this study, the bands used by traditional algorithms were taken as candidates, and the best band combination was sought among them. To avoid differences in the correlation coefficient caused by different modeling environments, Pearson’s correlation coefficient was used uniformly. The specific sensitive bands and algorithms are shown in Table 1 below.

Table 1.

Sensitive bands and brief descriptions of 11 Chl-a inversion algorithms.

To establish the water quality parameter prediction model, we employed various methods, such as the partial least squares (PLS) algorithm [33], neural network methods (e.g., BP) [38,85], machine learning methods (e.g., least squares support vector machine based on particle swarm optimization algorithm (PSO–LSSVM)) [37,86], and the random forest (RF) algorithm [87,88,89,90].

The spectral reflectance of the test set was input into the developed model to obtain the predicted water quality parameters, which were then compared with those measured by the sensor. In this way, the model was verified. The water quality parameter prediction model was evaluated using the determination coefficient R2, root mean square error (RMSE), and mean absolute percentage error (MAPE).

A small R2 together with a large RMSE and MAPE indicates that the accuracy of the model is poor. Conversely, a large R2 together with a small RMSE and MAPE indicates that the accuracy of the model is high.
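For reference, the three metrics can be computed as in the sketch below. It assumes the usual definitions (R2 as one minus the ratio of residual to total sum of squares, MAPE expressed in percent), which may differ in detail from the formulas used by the authors; the sample values are illustrative only.

```python
import math


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def rmse(y_true, y_pred):
    """Root mean square error, in the units of the measurements."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
                     / len(y_true))


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (y_true must be nonzero)."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


# Illustrative Chl-a values in ug/L.
measured = [26.0, 30.0, 34.0]
predicted = [27.0, 30.0, 33.0]
scores = (r_squared(measured, predicted),
          rmse(measured, predicted),
          mape(measured, predicted))
```

RMSE keeps the units of the measurement, whereas R2 and MAPE are dimensionless, which is why the three are usually reported together.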

After the optimal model is determined, the full field-of-view image in the optimal band is assembled according to the field-of-view mosaic method in Section 2.3.3, and the concentration of the water quality parameter over the whole water area is calculated. Image processing or geographic information processing software is then used to produce a spatial distribution map of the water quality parameter.

3. Experiments and Analysis

3.1. Study Sites and Surveys

The experiments were carried out under the "Beautiful China" Strategic Pilot A Special Project of the Chinese Academy of Sciences, in combination with its sub-project on air–sky–ground three-dimensional monitoring of the water environment and water ecology of the Yangtze River mainstream. Several field flight tests were carried out on the whole system to improve its robustness. According to the data acquisition and control process described in Section 2.2, two air–ground joint experiments were conducted to demonstrate the data acquisition and processing results.

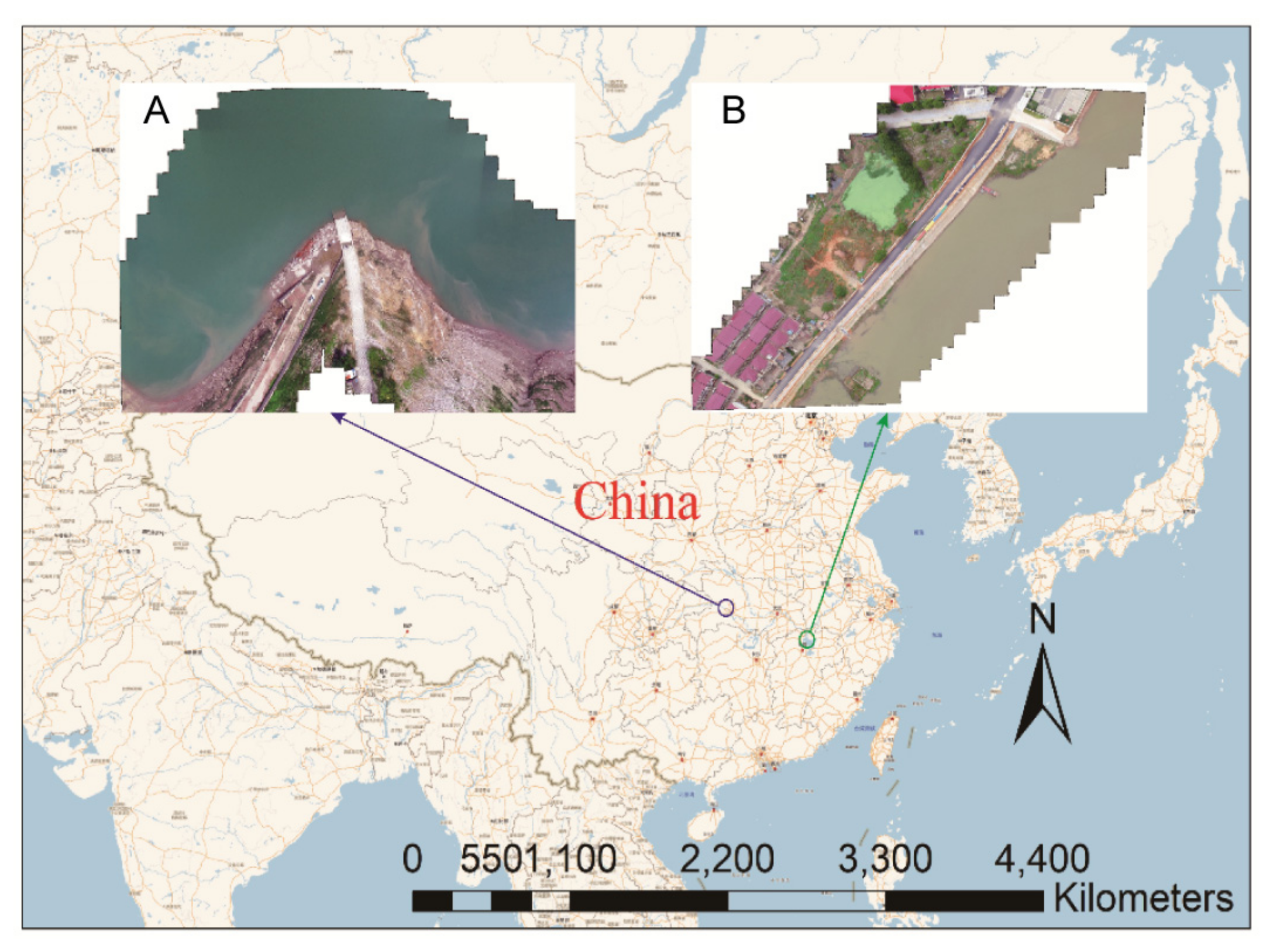

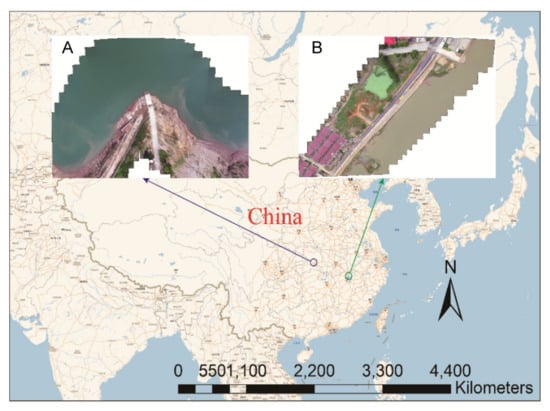

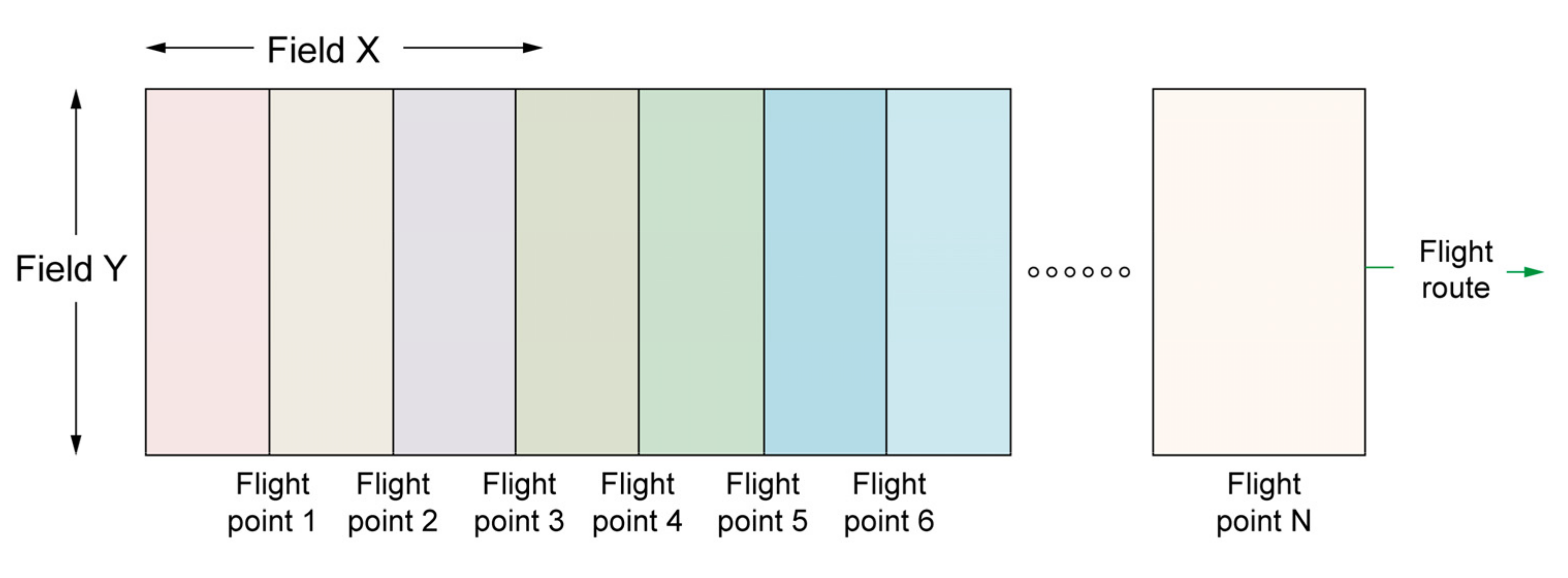

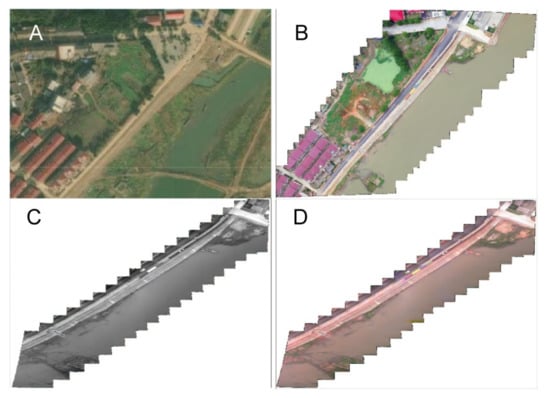

On 29 May 2021, at around 13:00, with clear weather and a light breeze, an experiment was carried out in Guojiaba Town, Zigui County, Yichang City, Hubei Province, China (30°57′21.4″ N, 110°44′58.6″ E), located on the mainstream of the Yangtze River. Figure 5 shows experimental point A.

Figure 5.

Air–ground joint experimental sites. (A) Experimental site of the Three Gorges demonstration area. (B) Experimental site of the Poyang Lake demonstration area.

At about 14:00 on 4 June 2021, with clear weather and no wind, an experiment was carried out in Wucheng Town, Yongxiu County, Jiujiang City, Jiangxi Province, China (29°11′12.5″ N, 116°0′46.3″ E), on a tributary of the Yangtze River beside Poyang Lake, the largest freshwater lake in China. Figure 5 shows experimental point B.

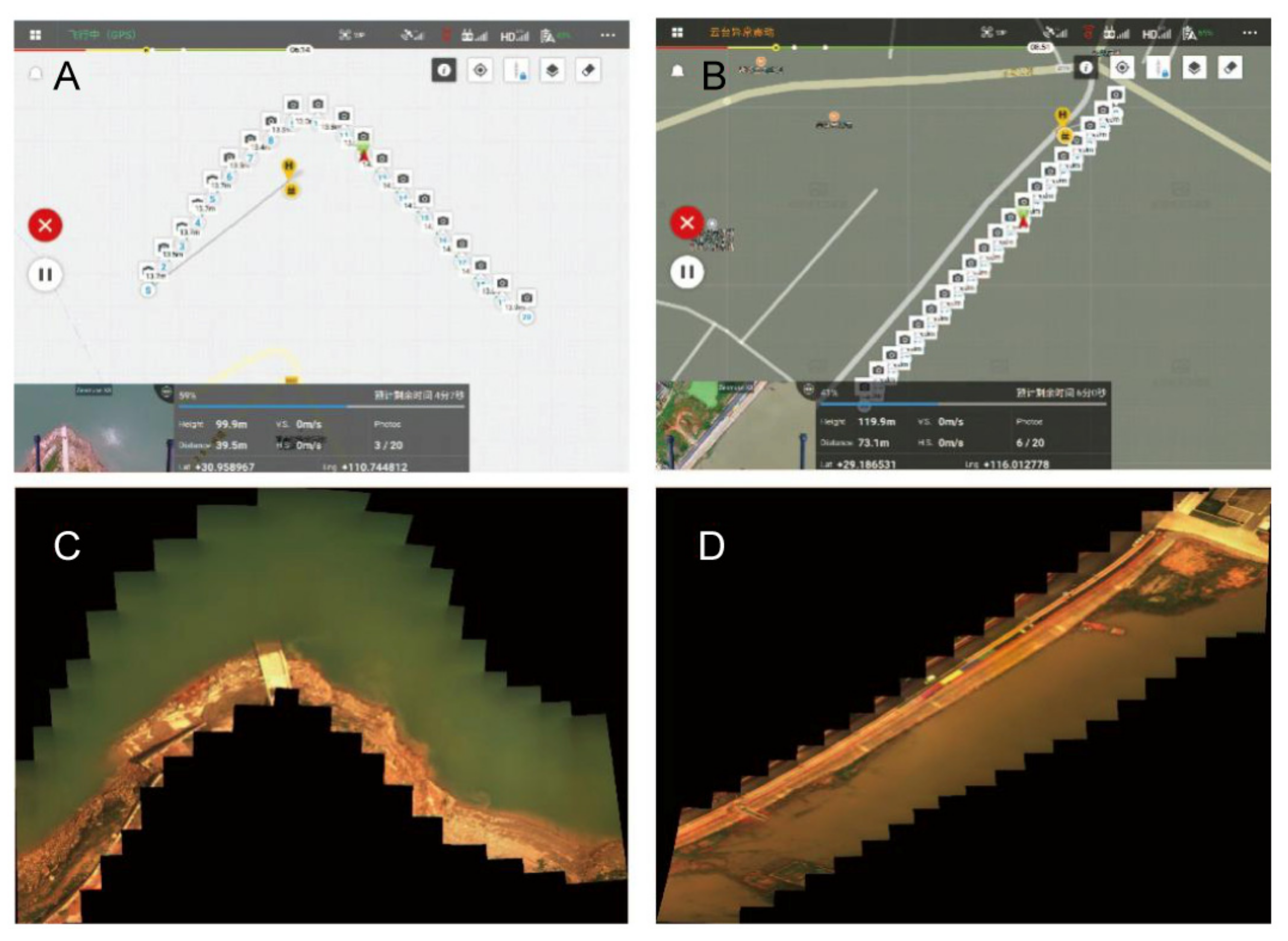

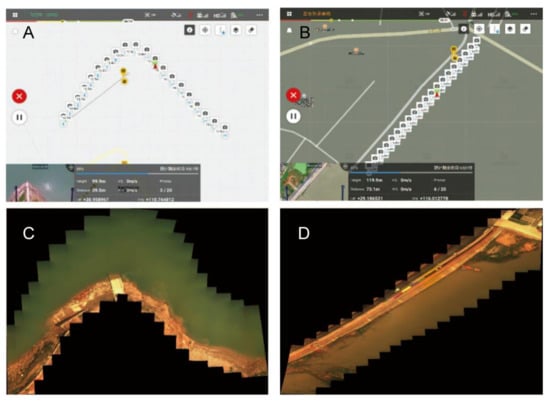

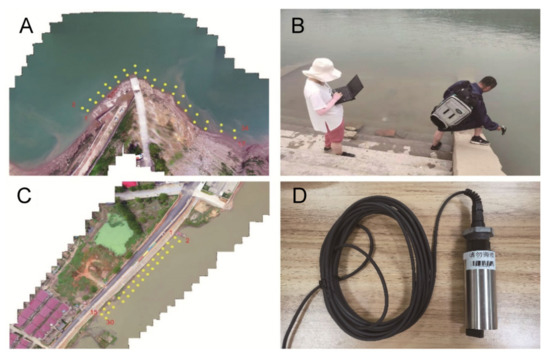

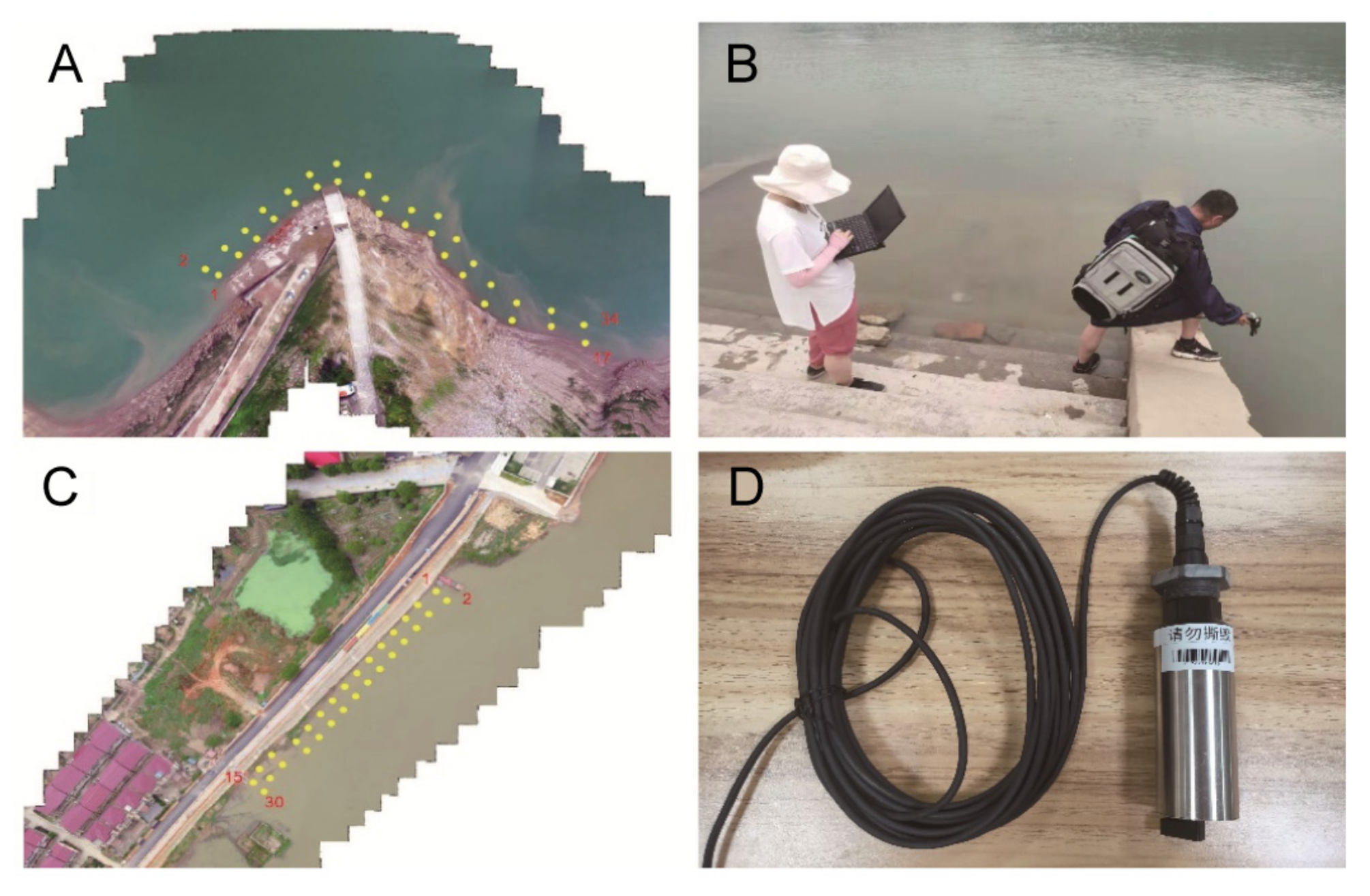

In the field water remote sensing experiment of the Three Gorges demonstration area, a camera lens with a focal length of 16 mm was used, the flying height of the UAV was set at 100 m, and the theoretical resolution of the image on the ground was 0.045 m. In the field water remote sensing experiment of the Poyang Lake demonstration area, a camera lens with a focal length of 16 mm was used, the UAV flight height was set at 120 m, and the theoretical resolution of the image on the ground was 0.038 m. In Figure 6, panels A and C show the planned route and navigation points and the composite color image, respectively, of the Guojiaba UAV flight experiment in the Three Gorges demonstration area. Panels B and D show the planned route and navigation points and the composite color image, respectively, of the Wucheng Town UAV flight experiment in the Poyang Lake demonstration area. Further information is provided in Figure A6 and Figure A7 in Appendix A.

Figure 6.

(A) The planned route and navigation points of the Guojiaba UAV flight experiment. (B) The planned route and navigation points of the Wucheng Town UAV flight experiment. (C) Color image of Guojiaba synthesized at 480, 540, and 650 nm. (D) Color image of Wucheng Town synthesized at 480, 540, and 650 nm.

Ground sampling and testing at 34 and 30 points were completed in Guojiaba and Wucheng, respectively, for model establishment and verification of data processing. Figure A8 in Appendix A shows the field sampling and field testing at the ground sampling points. A DCH500-S chlorophyll-a sensor was used to measure the water quality parameter. After in situ testing and sampling analysis, the Chl-a measurements were statistically analyzed, and the results are shown in Table 2. The coefficient of variation (CV) in Table 2 is the ratio of the standard deviation to the mean and reflects the degree of dispersion of the samples relative to their mean.
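The CV can be computed directly from the measured concentrations, as in the short sketch below. It uses the sample standard deviation (n − 1 denominator), which is an assumption because the text does not say which estimator was used, and the values are illustrative rather than taken from Table 2.

```python
import statistics


def coefficient_of_variation(values):
    """Sample standard deviation divided by the mean (dimensionless)."""
    return statistics.stdev(values) / statistics.mean(values)


# Illustrative Chl-a readings in ug/L.
cv = coefficient_of_variation([28.0, 30.0, 32.0])
```

Because the CV is dimensionless, it allows the spread of Chl-a at the two sites to be compared even though their mean concentrations differ.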

Table 2.

Measured statistical values of water quality parameters.

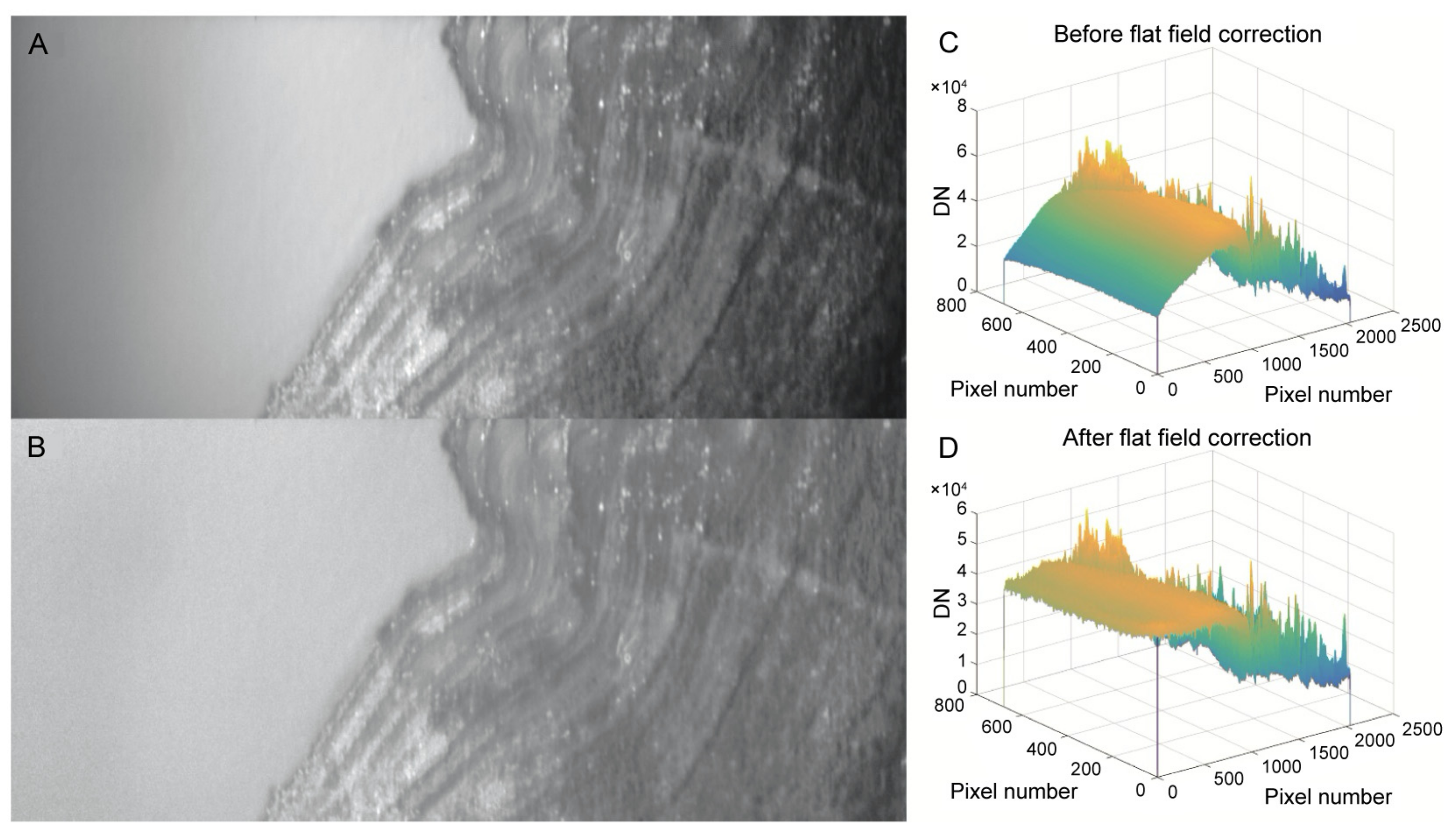

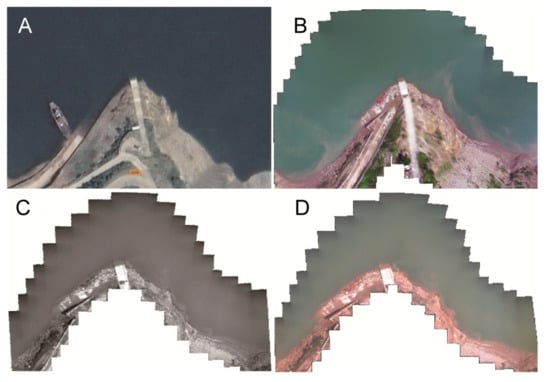

3.2. Data Preprocessing Results

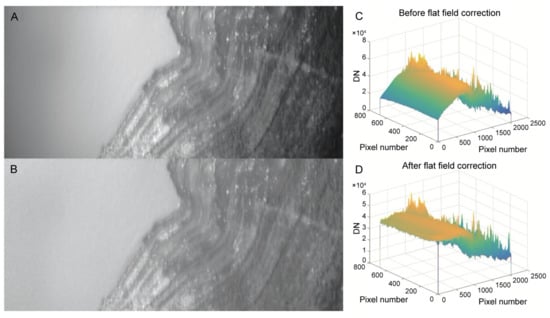

The premise of spectral radiometric calibration of the spectral imager is that all pixels in the same spectral channel respond uniformly when imaging a uniform object, so nonuniformity correction is carried out first. The method in Section 2.3.1 is used to perform nonuniformity correction on the data cube collected by the spectral imager. Figure 7 shows a comparison of the effects of a single spectrum in a data cube before and after the nonuniformity correction. In Figure 7C,D, the X and Y axes represent the coordinates of the pixel point, and the Z axis represents the DN value of the pixel.

Figure 7.

Comparison before and after nonuniformity correction. (A) is the uncorrected original image of a single band at the navigation point, and (C) is the corresponding 3D surface plot. (B) is the corrected image of the same single band at the navigation point, and (D) is the corresponding 3D surface plot.

Figure 7A shows that, before correction, the gray level of the water on the left of the single-band image varies strongly. The three-dimensional surface plot (C) likewise shows that the DN value of the water surface increases toward the shore, ranging from 20,000 to more than 40,000, which is not consistent with the actual situation. After correction (Figure 7B), the water surface on the left varies smoothly. The three-dimensional surface plot (D) shows that the DN value of the water surface no longer changes significantly, remaining essentially in the range of 35,000 to 40,000, which is consistent with the actual situation.

Before nonuniformity correction, the background collected when the AOTF is turned off must be subtracted from the whole data cube, which improves the signal-to-noise ratio and the contrast of each band image. However, when the AOTF is turned on, the zero-order diffracted light in some bands cannot be completely eliminated by the second linear polarizer because the extinction ratio of the linear polarizer differs between bands. This residual light affects the spectral purity of single-band images and remains a problem to be solved.

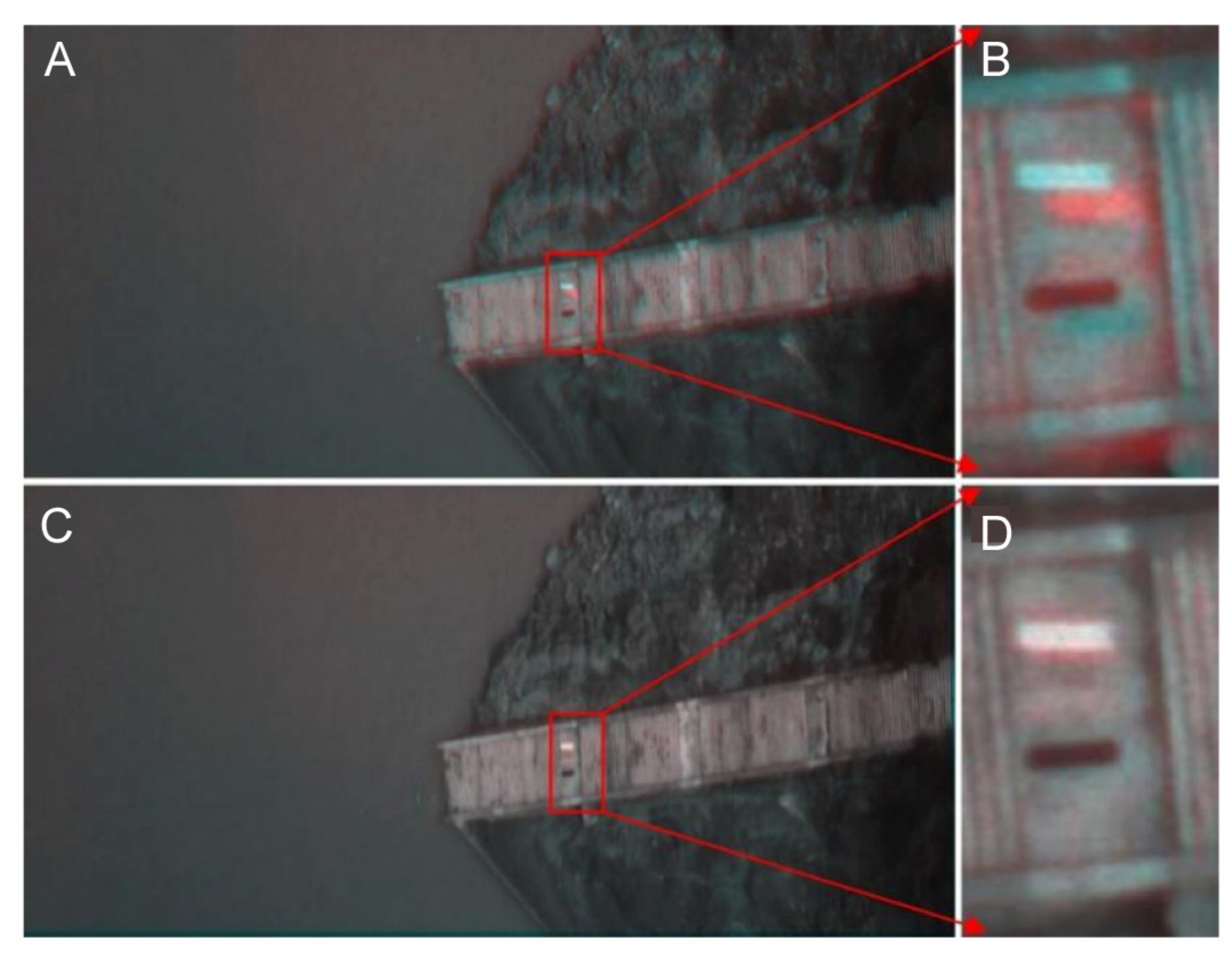

3.3. Image Registration Results

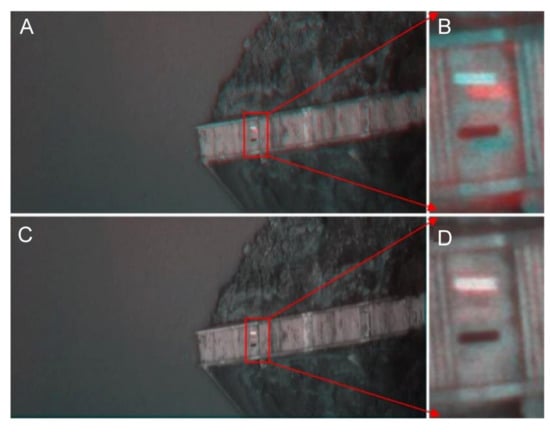

According to the image registration method introduced in Section 2.3.2, in a single data cube, a specific spectral segment is selected as a fixed image and the remaining images are selected as moving images to complete the registration of the whole data cube in turn. In Figure 8, a comparison of the effects of two band images without geometric registration and completed geometric registration is shown. The spectral bands selected in A and C are both 650 nm and 515 nm.

Figure 8.

Renderings without geometric registration (A,B) and after completed registration (C,D).

During the flight, the UAV is affected by airflow and by its own vibration, so that, at a given waypoint, the images of different spectral bands drift irregularly, as shown in Figure 8A,B. The experiments showed that waiting for around 3–5 s after the UAV arrives at a waypoint before starting spectral data collection improves stability and reduces the registration workload. In addition, the regular drift caused by the different diffraction angles of different wavelengths can be reduced by image registration.

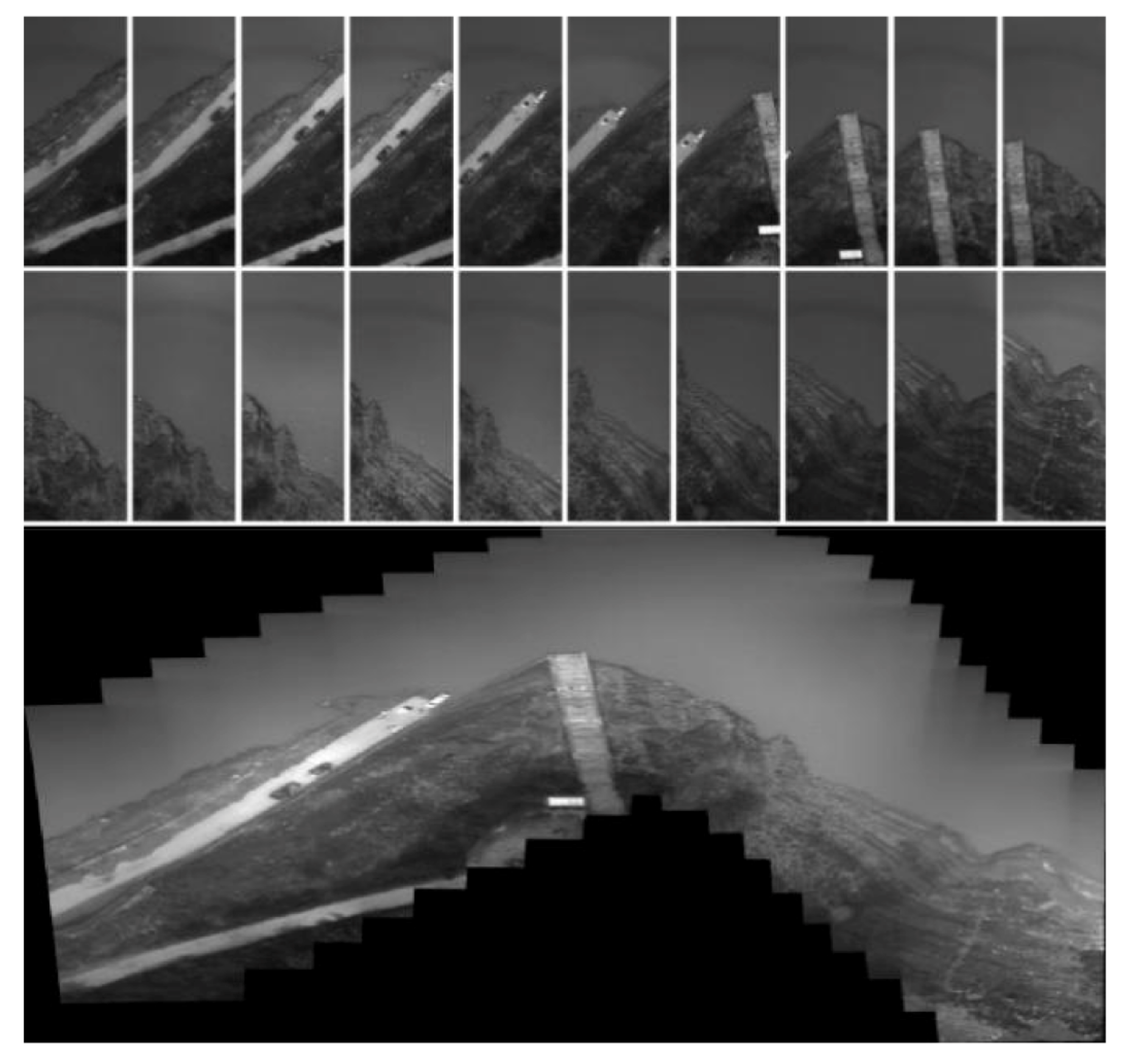

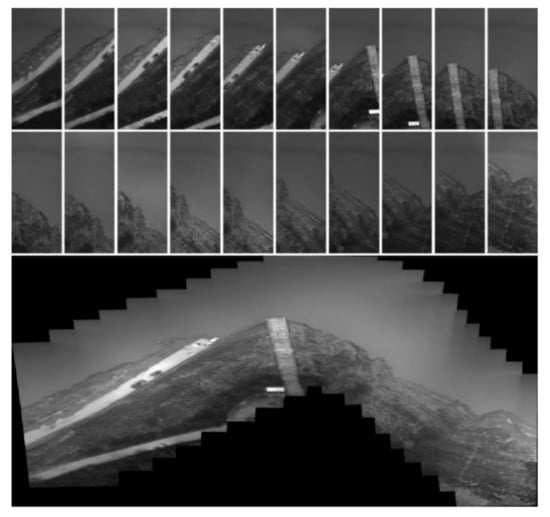

3.4. Image Mosaic Results

As shown in Figure 9, in the Guojiaba test, images at a wavelength of 625 nm were acquired at 20 waypoints on the route, giving 20 fields of view in total, from which a large field-of-view mosaic map was completed.

Figure 9.

Images at 625 nm and field-of-view mosaic of 20 waypoints on the route.

For the field-of-view mosaic, we applied the image feature-based large field-of-view mosaic method described in Section 2.3.3. Because the water surface offers relatively few features between two navigation points, stitching is challenging. Consequently, surface features of the water bank need to be included in the route planning to compensate for the lack of water-surface features. Experiment 2 was an improvement over Experiment 1 in this respect. However, the main way to address the problem is large field-of-view splicing based on geographical coordinates [17], which requires the GPS geographic coordinates of the routes and waypoints.

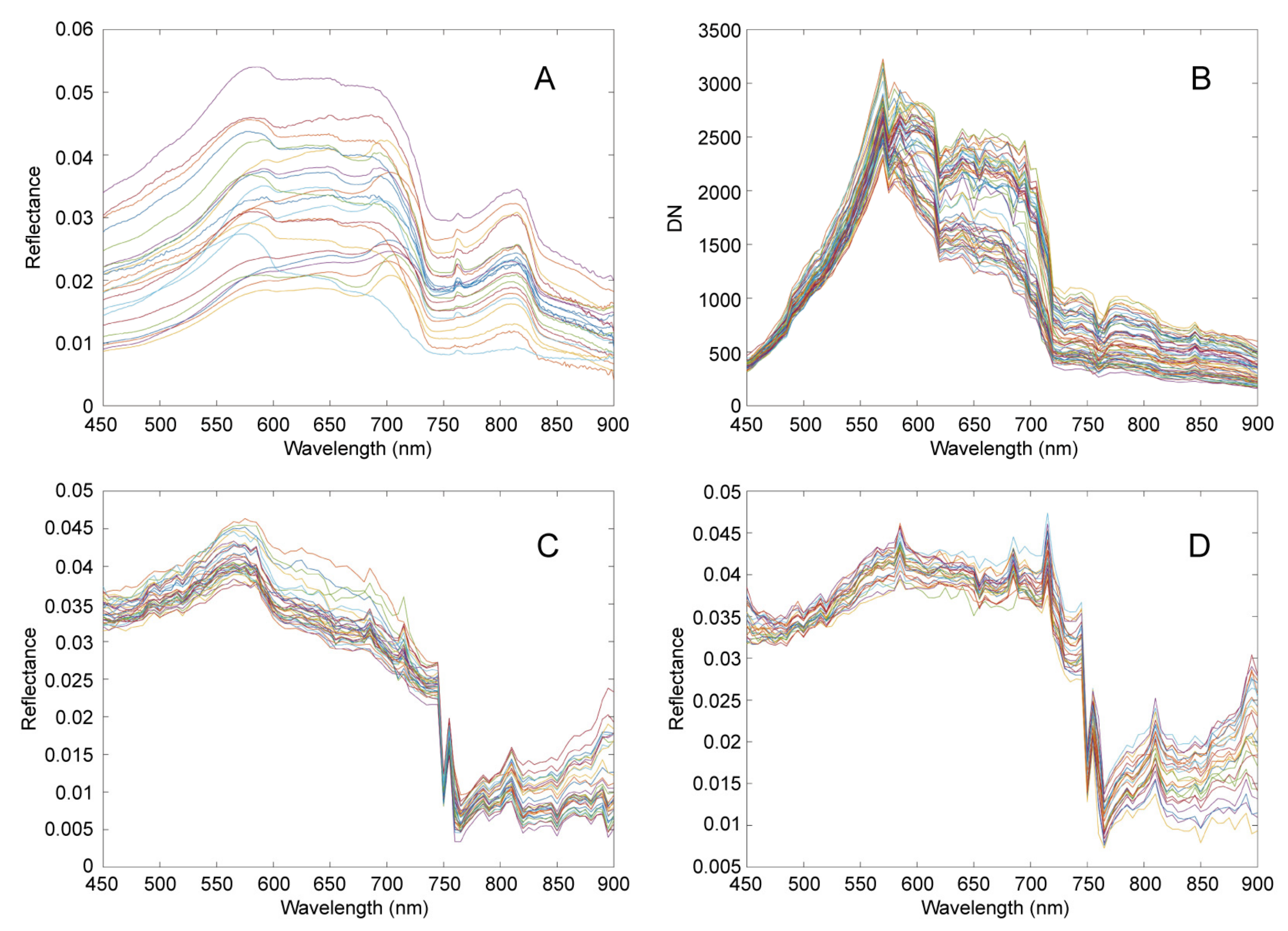

3.5. Radiation Calibration Results

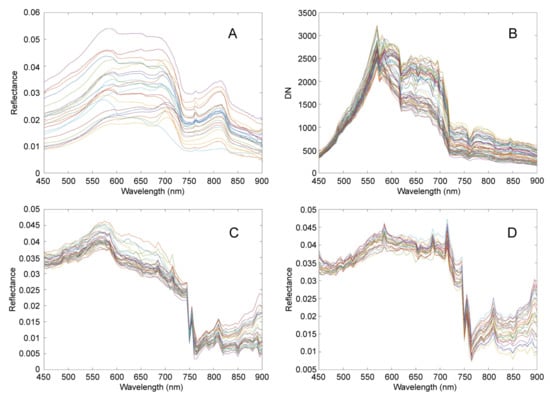

The hyperspectral remote sensing data obtained in the UAV flight experiments were calibrated according to the radiation calibration principle in Section 2.3.4. First, the water-leaving reflectance at the near-shore control points was obtained using the ASD spectrometer. Then, the radiance of the ground control points collected by the UAV was converted into the corresponding water-leaving reflectance spectral curves. The radiation calibration results are shown in Figure 10.

Figure 10.

Radiation calibration results. (A) is the water-leaving reflectance curve of the near-shore ground control points calculated using ASD data. (B) is the spectral curve of the 64 control points at the two experimental sites collected by the spectrometer. (C) is the water-leaving reflectance curve converted from the control point data collected by the spectrometer at the Poyang Lake experimental site. (D) is the water-leaving reflectance curve converted from the control point data collected by the spectrometer at the Three Gorges experimental site.

As can be seen from Figure 10A, the changes in spectral characteristics of the water body are as follows: in the range of 450–550 nm, the spectral reflectance of water presents an upward trend; in the range of 550–600 nm, the spectral reflectance of water presents a peak; in the range of 600–660 nm, the peak is relatively stable; in the range of 680–700 nm, there is a peak; and in the range of 700–750 nm, the spectral reflectance curve of water shows a fast-decreasing trend. Another peak appears at 760 nm, then slowly rises to 800 nm, and then slowly decreases. According to the comprehensive analysis, the variation trend of spectral curves in different regions and under different water quality conditions is generally the same, but the values of the reflection peak and absorption peak are different, which is caused by the difference in water quality parameter concentration at different sampling points.

Owing to the spectral scanning characteristics of the AOTF spectrometer, the experiment scanned the range from 450 nm to 900 nm with a step size of 5 nm, so each spectral curve had only 91 spectral bands, as shown in Figure 10B. Because of this small number of bands, the converted water-leaving reflectance curves shown in Figure 10C,D have poorer continuity and smoothness than the curve in Figure 10A.
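The band count follows directly from the scan settings, as the short check below shows. The helper name is illustrative; the nearest-band lookup simply shows which sampled band lies closest to an arbitrary wavelength such as 623 nm.

```python
def band_centers(start_nm, stop_nm, step_nm):
    """Band centers of a scan that includes both end points."""
    return list(range(start_nm, stop_nm + 1, step_nm))


bands = band_centers(450, 900, 5)
band_count = len(bands)  # (900 - 450) / 5 + 1

# Sampled band closest to a wavelength that is not on the 5 nm grid.
nearest_to_623 = min(bands, key=lambda b: abs(b - 623))
```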

An AOTF with a wide spectral range (400–1000 nm) requires two sets of driving signals, 149–80.1 MHz and 79.3–45.8 MHz, with 80 MHz as the boundary between the high- and low-frequency driving signals. This boundary causes an abrupt jump in the spectral curve at 623 nm, as shown in Figure 10B. Unfortunately, no correction was made before the two air–ground joint experiments. There are two correction methods: one is to adjust the amplitude of the AOTF drive signal so that the diffraction efficiency transitions smoothly at 623 nm, and the other is to adjust the integration time and gain of the detector so that the DN value transitions smoothly at 623 nm.

During radiation calibration, using two targets gives a more accurate field radiation calibration than using a single white target. In the test, because the diffraction efficiency of the AOTF differs between wavelengths and the quantum response of the detector differs as well, the DN values of the three selected reflectivity targets (5, 30, and 60%) easily saturated under different weather conditions. Once a target is saturated, reflectivity correction cannot be carried out. The number of targets with different reflectivities can therefore be increased in later work. The saturation may also arise because the dynamic range of the selected detector is too small. A useful attempt would be to merge each pixel with its adjacent pixels in both the spectral and spatial domains, so that the SNR is slightly improved.

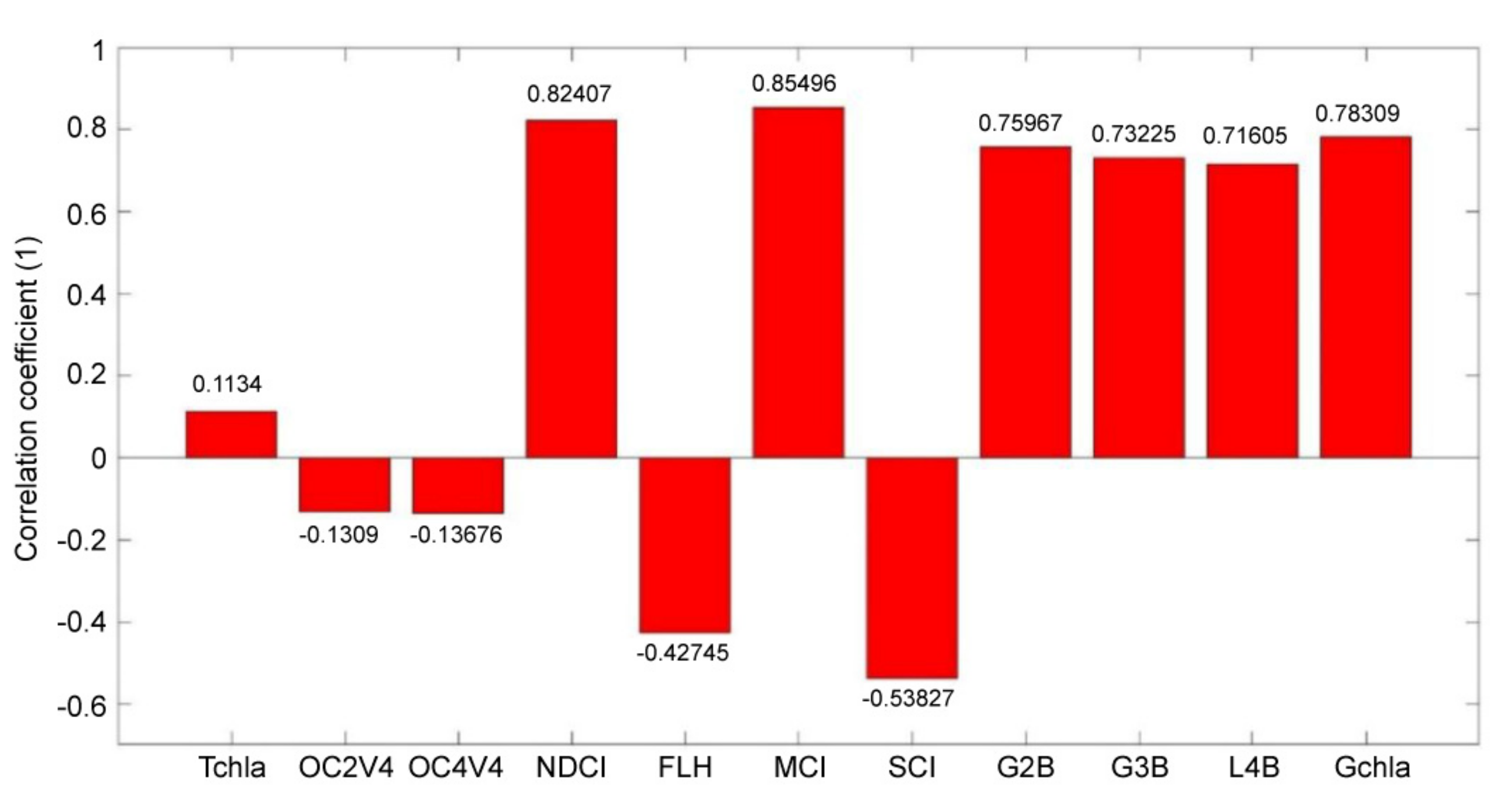

3.6. Inversion Results of Water Quality Parameters

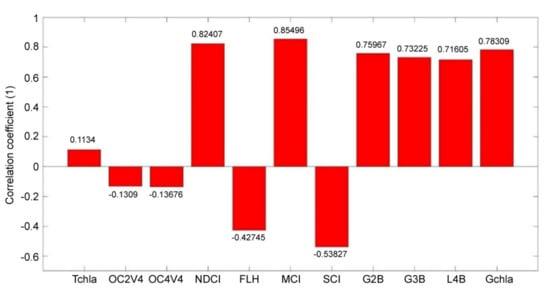

In the spectrometer, both the diffraction efficiency of the AOTF and the quantum response efficiency of the detector are low in the ranges of 400–500 nm and 900–1000 nm. Therefore, when searching for sensitive bands for concentration inversion, mainly the range of 500–900 nm is considered. The water quality parameter bands of 11 traditional algorithms were taken as candidates to find the best combination of bands. For each of the 11 algorithms, the Pearson correlation coefficient between its Chl-a characteristic spectral bands and the Chl-a concentration at the ground control points was calculated. The comparison results are shown in Figure 11 below.

Figure 11.

Correlation coefficient between Chl-a concentration and combinations of sensitive bands in 11 traditional algorithms.

A comparison of the 11 algorithms in Figure 11 shows that the Chl-a characteristic spectral bands selected by the MCI algorithm have the maximum correlation coefficient, 0.85496, with the Chl-a concentration at the ground control points. Therefore, the combination of bands in the MCI algorithm is selected in this study.
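The selection step amounts to computing one Pearson coefficient per candidate band combination and keeping the candidate with the largest absolute value. A minimal sketch is given below. The candidate names and all numbers are invented for illustration and do not correspond to the 11 algorithms in Table 1 or to the measured data.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def best_candidate(candidates, chl_a):
    """Return the candidate whose index values correlate best with Chl-a."""
    scores = {name: pearson(values, chl_a)
              for name, values in candidates.items()}
    best = max(scores, key=lambda name: abs(scores[name]))
    return best, scores


# Hypothetical spectral-index values at four control points.
chl_a = [26.0, 28.0, 31.0, 34.0]
candidates = {
    "index_a": [0.10, 0.12, 0.15, 0.18],
    "index_b": [0.30, 0.10, 0.25, 0.20],
}
best, scores = best_candidate(candidates, chl_a)
```

Ranking by absolute value treats strongly negative correlations as equally informative, which is a design choice of this sketch rather than something stated in the text.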

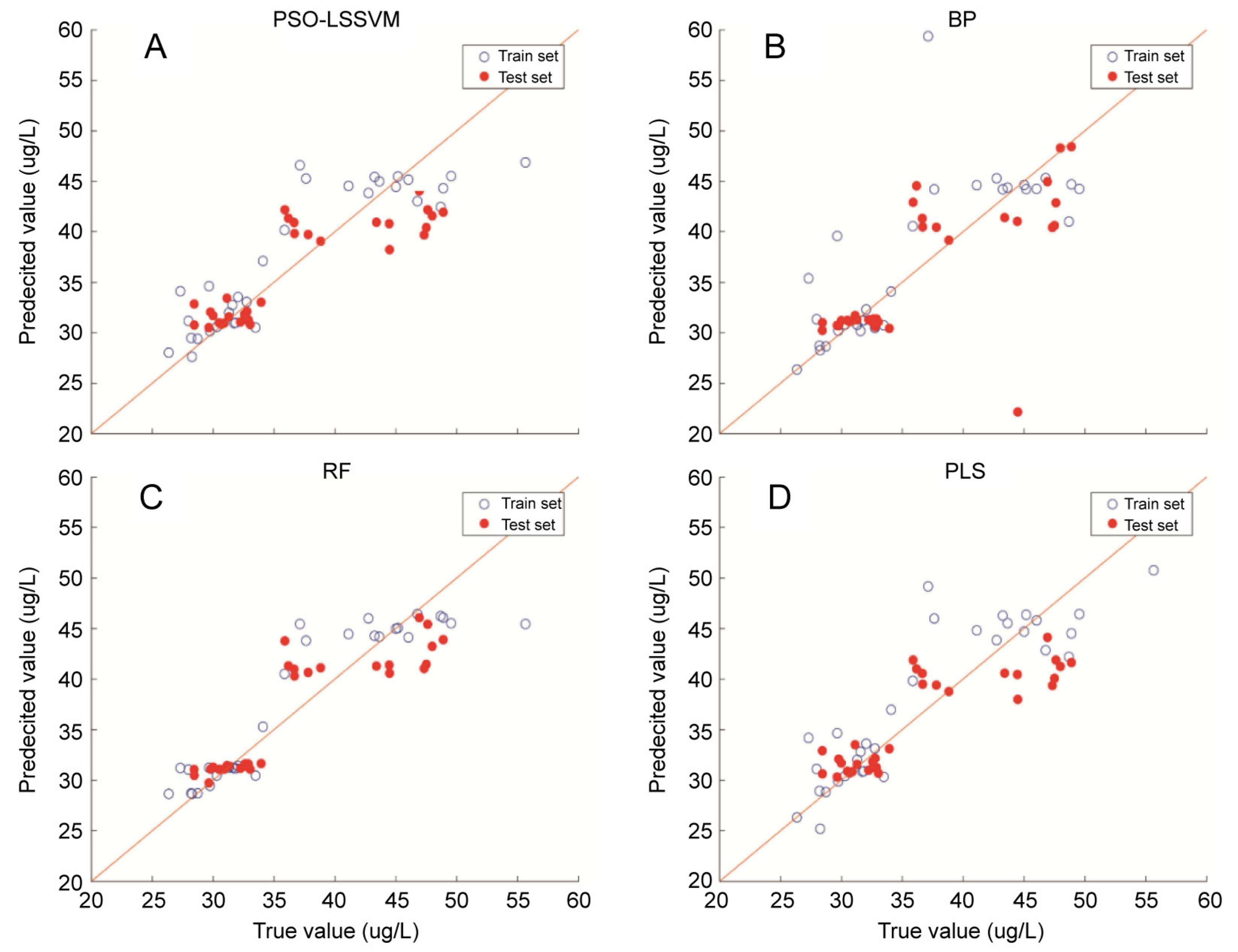

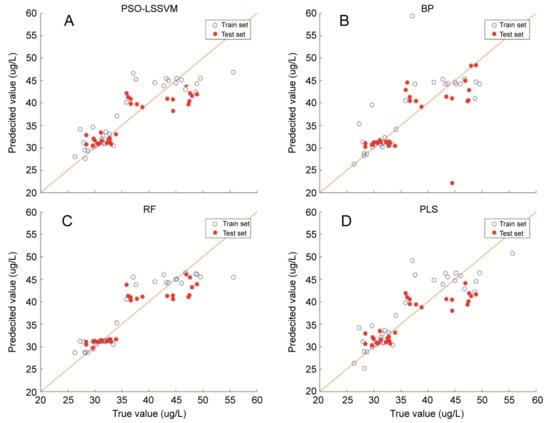

The combination of bands in the MCI algorithm and the concentration of Chl-a at the ground control points were used as input, and the control points were divided into modeling sets and test sets. The PLS method, artificial neural network (BP neural network), and machine learning (PSO-LSSVM and RF) methods were used to establish the water quality parameter prediction model. The determination coefficient (R2), RMSE, and MAPE were used for evaluation. The specific modeling results are shown in Table 3 below.

Table 3.

Comparison of the different methods used to build the models for the dataset.

The inversion results of the four modeling methods for the training and test data sets are shown in Figure 12. The predicted and true values of all samples are distributed fairly evenly along the diagonal, indicating good inversion results. The predicted and true values of the random forest (RF) algorithm are the most concentrated on the diagonal line.

Figure 12.

Relationship between the predicted and measured values of Chl-a concentration for the training set and the test set of each model. (A) The PSO-LSSVM algorithm. (B) BP neural network algorithm. (C) RF algorithm. (D) Partial least squares algorithm.

LSSVM uses equality constraints instead of the traditional inequality constraints to solve regression and classification problems, which considerably reduces the complexity of calculation and improves the calculation speed. In the LSSVM model, the regularization parameter (γ) and the kernel parameter (σ) strongly influence the prediction accuracy of the model. The magnitudes of γ and σ determine the fitting error and fitting time; therefore, the optimization of γ and σ is the key to model establishment. PSO has the advantages of simple operation, few parameters, and a short fitting time; thus, PSO was used to optimize the key parameters of LSSVM. The optimized parameters in this study are γ = 1.5212 and σ² = 5.8704. Although the PSO-LSSVM algorithm was adopted in this study to predict the concentration of Chl-a, its overall performance was not as good as that of PSO-LSSVM in predicting the total suspended matter in water in previous studies [37].

The BP neural network is trained using the error back propagation algorithm. The BP network can learn and store a large number of input/output relations, and it is one of the most widely used neural network models at present. Its adaptability and its abilities of self-learning and distributed processing have been applied successfully in water quality evaluation. In this study, parameter adjustment led to a BP neural network with 20 hidden layers, one output layer, a maximum of 10,000 training iterations, and a global minimum error of 0.00000000001. However, the BP neural network has some inherent shortcomings, such as local minima and slow convergence. As shown in Figure 12B, several predicted values deviate considerably from the true values and do not lie near the diagonal.

The RF algorithm is one of the most commonly used algorithms at present. It is favored by many researchers for its fast training speed and high accuracy. In the algorithm, multiple prediction models are generated at the same time, and the prediction results of the individual models are combined to improve the prediction accuracy. The RF algorithm randomly samples both the observations and the variables to generate a large number of decision trees. Bootstrap sampling is carried out for each tree, and error estimation is carried out using the out-of-bag sample data. The RF algorithm was therefore used in this study to verify its practicability in predicting Chl-a. After numerous attempts, the best modeling results were obtained with NumTrees = 100 and the other parameters kept at their defaults. The comparison in Table 3 shows that, for both the training set and the test set, the determination coefficient (R2), RMSE, and MAPE are superior to those of the other three methods.
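The two ingredients named above, bootstrap sampling for each tree and error estimation on the out-of-bag samples, can be illustrated with a deliberately reduced sketch. It is not a full random forest and not the implementation used in the study: each "tree" is a single-split regression stump on one feature, no random variable selection is performed, and the data are synthetic. Only the ensemble size of 100 mirrors the NumTrees setting in the text.

```python
import random


def fit_stump(xs, ys):
    """One-split regression tree on a single feature (illustrative learner)."""
    mean_all = sum(ys) / len(ys)
    best = (float("inf"), None, mean_all, mean_all)
    for t in sorted(set(xs))[1:]:
        left = [y for x, y in zip(xs, ys) if x < t]
        right = [y for x, y in zip(xs, ys) if x >= t]
        ml, mr = sum(left) / len(left), sum(right) / len(right)
        sse = (sum((y - ml) ** 2 for y in left)
               + sum((y - mr) ** 2 for y in right))
        if sse < best[0]:
            best = (sse, t, ml, mr)
    _, t, ml, mr = best
    return lambda x: ml if (t is None or x < t) else mr


def bagged_oob(xs, ys, num_trees=100, seed=0):
    """Bagged stumps plus an out-of-bag RMSE estimate."""
    rng = random.Random(seed)
    n = len(xs)
    models, oob_sum, oob_count = [], [0.0] * n, [0] * n
    for _ in range(num_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample
        model = fit_stump([xs[i] for i in idx], [ys[i] for i in idx])
        models.append(model)
        for i in set(range(n)) - set(idx):          # out-of-bag samples
            oob_sum[i] += model(xs[i])
            oob_count[i] += 1
    errors = [(oob_sum[i] / oob_count[i] - ys[i]) ** 2
              for i in range(n) if oob_count[i]]
    oob_rmse = (sum(errors) / len(errors)) ** 0.5
    return (lambda x: sum(m(x) for m in models) / len(models)), oob_rmse


# Synthetic data: a spectral index (x) against Chl-a in ug/L (y).
xs = [0.1 * i for i in range(10)]
ys = [20.0 + 10.0 * x for x in xs]
predict, oob_rmse = bagged_oob(xs, ys, num_trees=100, seed=0)
```

Each sample is scored only by the trees that never saw it during training, so the out-of-bag RMSE behaves like a built-in validation error without a separate hold-out set.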

PLS combines the advantages of principal component analysis, canonical correlation analysis, and multiple linear regression analysis. Therefore, the PLS method is widely used in the inversion of water quality parameters. The fitting process of PLS involves no parameter adjustment and directly performs multiple linear regression. Table 3 and Figure 12 show that, for predicting the concentration of Chl-a in this study, PLS did not perform as well as the neural network and machine learning methods.

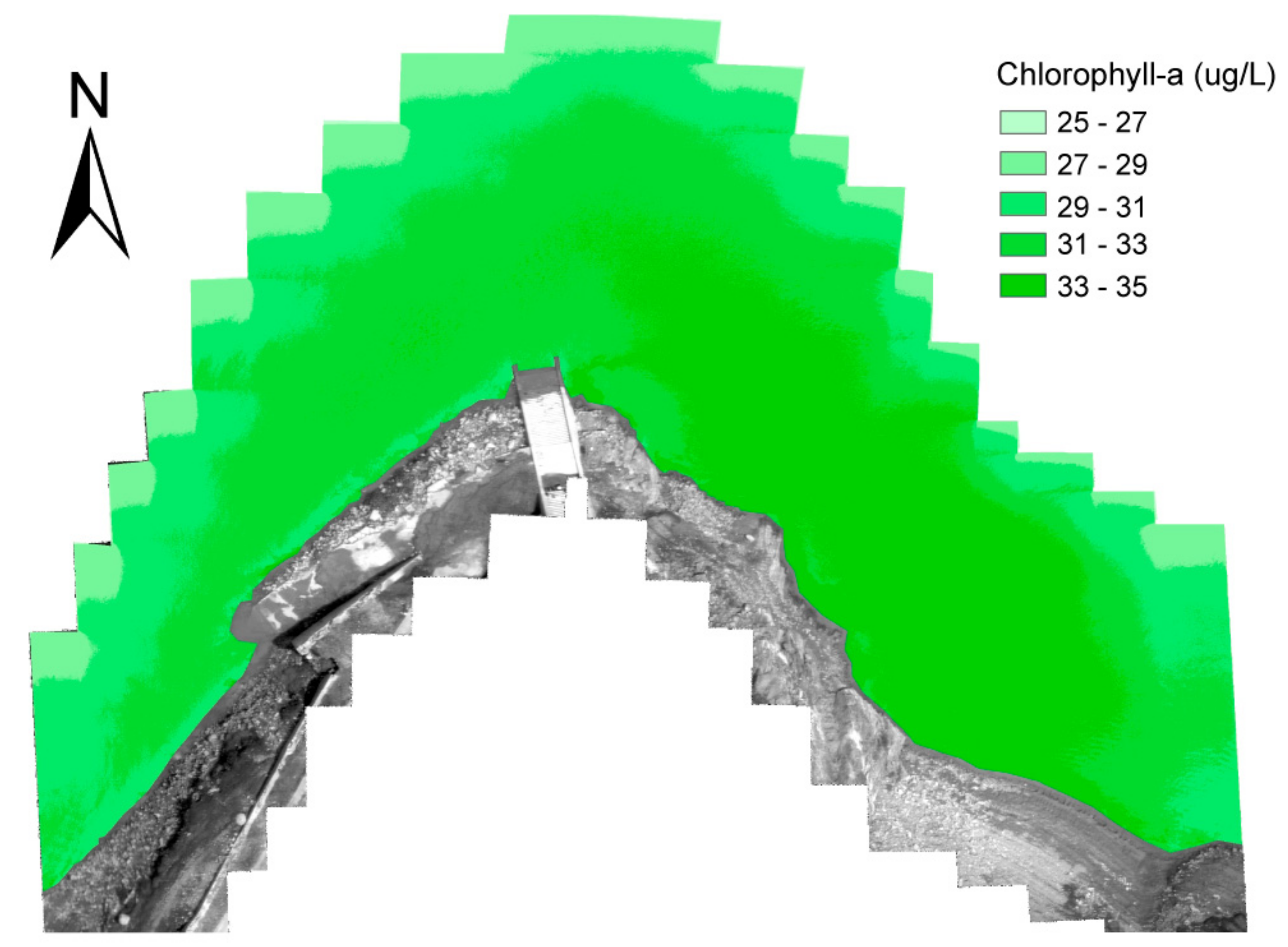

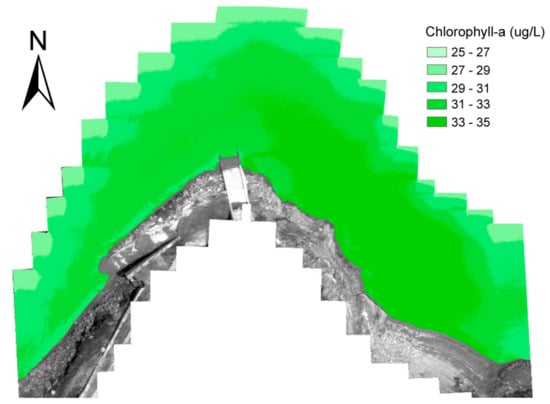

Figure 13 shows the results of Chl-a inversion of UAV Airborne Hyperspectral Images in the Three Gorges demonstration area using the RF algorithm. Because the working mode of the spectrometer on the UAV is the route and waypoint mode, there are obvious field-of-view stitching traces on the image. Therefore, the inversion results only reflect the distribution trend of water quality parameters and do not consider the prediction results of a single pixel. According to the inversion results, the maximum value of Chl-a in the Three Gorges demonstration area is 35 μg/L, and the minimum value is 25 μg/L, which is basically consistent with the sensor test results (Chl-a max = 34.09 μg/L, Chl-a min = 26.35 μg/L).

Figure 13.

Spatial distribution of Chl-a in Three Gorges demonstration area.

Figure 13 also shows a further pattern. The Chl-a prediction results of the remote sensing images are basically consistent with the sensor test results, and the overall Chl-a concentration on the left (west) side of the experimental area is lower than that on the right (east) side. This is because the Guojiaba experimental site is a ferry terminal, with ferries on the west side carrying pedestrians and cars. The coming and going of ferries increases the turbidity of the water in the west, thus affecting the concentration distribution of Chl-a.

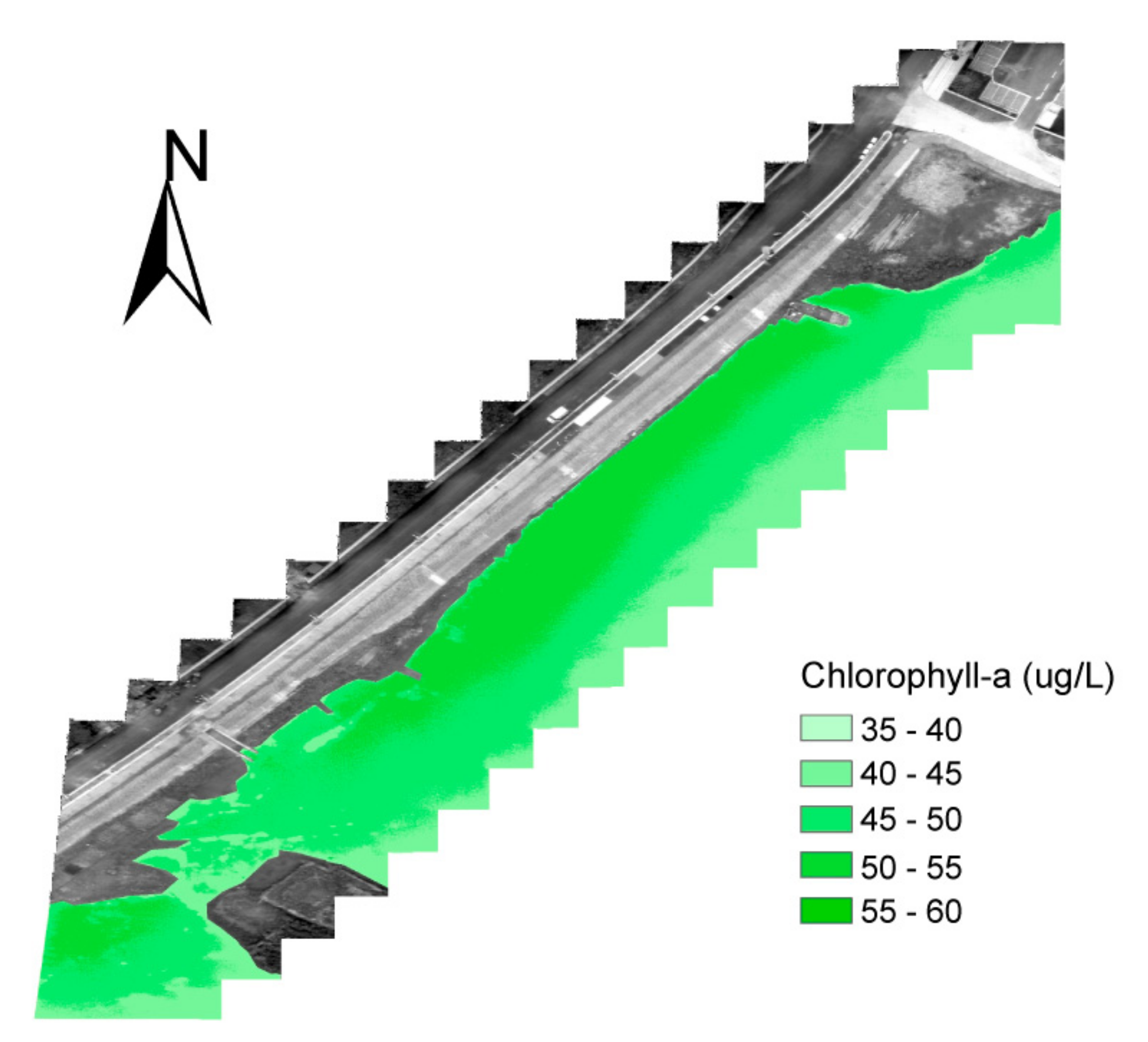

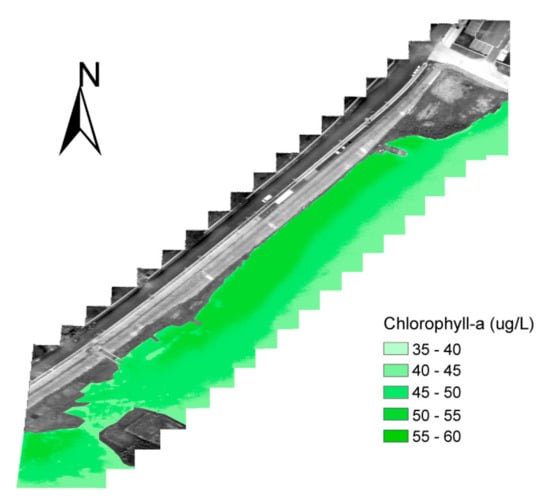

Figure 14 shows the results of Chl-a inversion of UAV Airborne Hyperspectral Images in the Poyang Lake demonstration area using the RF algorithm. According to the inversion results, the maximum value of Chl-a in the Poyang Lake demonstration area is 60 μg/L, and the minimum value is 35 μg/L, which is basically consistent with the sensor test results (Chl-a max = 55.65 μg/L, Chl-a min = 35.86 μg/L).

Figure 14.

Spatial distribution of Chl-a in the experimental sites of Poyang Lake.

In the second experiment, the experimental site is close to Wucheng Town living area. It can be seen from the figure that the drainage outlets of rainwater and domestic wastewater in the living area are in the experimental area. Therefore, this part of the water body is rich in nutrients, and the overall Chl-a concentration is higher than that in the Three Gorges demonstration area.

From the perspective of concentration inversion, the two air–ground joint experiments made it possible to distinguish the characteristics of the two water areas. Based on the water quality parameter values at the ground control points, the concentration inversion of water quality parameters of the target water body was completed using machine learning. The concentrations of water quality parameters in the two waters differed considerably, which was beneficial for establishing the water quality inversion model. Therefore, in later work, as many different water bodies as possible should be selected for experiments to accumulate water quality parameter inversion samples.

4. Conclusions

The rapid development of UAV remote sensing technologies has offered novel tools with potential applications in the monitoring and conservation of aquatic ecosystems and aquatic environments. To facilitate water remote sensing imaging and spectral analysis activities, we designed a novel simple and reproducible UAV hyperspectral imaging remote sensing system based on AOTF.

The hyperspectral imager adopts spectral-scanning AOTF technology. Given the imaging characteristics of AOTF spectral scanning, the UAV flies in route and waypoint mode and uses waypoint triggers to acquire the hyperspectral data cubes. This approach can serve as a reference acquisition mode for UAV spectral-scanning hyperspectral imagers in remote sensing applications.
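The waypoint-triggered acquisition mode can be sketched as two nested loops: the UAV hovers at each waypoint, and the filter is stepped through the bands while the detector grabs one frame per band. The code below is a simulation under stated assumptions, not the control software of the system. The names `Waypoint`, `acquire_cube`, `fly_route`, and the `fake_*` stand-ins for the flight controller, AOTF driver, and camera are all hypothetical, as are the coordinates and altitude. The band list reuses the 480, 540, and 650 nm wavelengths mentioned in the appendix figure captions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence, Tuple

Frame = List[List[int]]  # stand-in for one 2-D image from the detector


@dataclass(frozen=True)
class Waypoint:
    lat: float
    lon: float
    alt_m: float


def acquire_cube(
    tune: Callable[[float], None],
    capture: Callable[[], Frame],
    wavelengths_nm: Sequence[float],
) -> Dict[float, Frame]:
    """Sweep the filter through the bands, grabbing one frame per band."""
    cube: Dict[float, Frame] = {}
    for wavelength in wavelengths_nm:
        tune(wavelength)              # set the passband (hypothetical driver call)
        cube[wavelength] = capture()  # one full-frame image at this band
    return cube


def fly_route(
    route: Sequence[Waypoint],
    hover_at: Callable[[Waypoint], None],
    tune: Callable[[float], None],
    capture: Callable[[], Frame],
    wavelengths_nm: Sequence[float],
) -> List[Tuple[Waypoint, Dict[float, Frame]]]:
    """Visit each waypoint, hover, and record one data cube there."""
    cubes = []
    for waypoint in route:
        hover_at(waypoint)  # arrival at the waypoint triggers the acquisition
        cubes.append((waypoint, acquire_cube(tune, capture, wavelengths_nm)))
    return cubes


# Simulated hardware so the sketch runs without a UAV or a spectrometer.
log: List[str] = []
state = {"wavelength": 0.0}


def fake_hover(waypoint: Waypoint) -> None:
    log.append(f"hover {waypoint.lat:.4f},{waypoint.lon:.4f}")


def fake_tune(wavelength_nm: float) -> None:
    state["wavelength"] = wavelength_nm


def fake_capture() -> Frame:
    return [[int(state["wavelength"])]]  # 1x1 "image" tagged with its band


route = [Waypoint(29.19, 116.00, 100.0), Waypoint(29.19, 116.01, 100.0)]
bands = [480.0, 540.0, 650.0]
cubes = fly_route(route, fake_hover, fake_tune, fake_capture, bands)
print(len(cubes), "cubes,", len(cubes[0][1]), "bands each")
```

Passing the hardware operations in as callables keeps the acquisition logic independent of any particular flight controller or camera SDK, so the same loop can be exercised on the ground with simulated devices.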

Through two joint air–ground experiments in the Three Gorges demonstration area and the Poyang Lake demonstration area of the Yangtze River, the hyperspectral imager, the remote sensing data acquisition control, and the data processing workflow of the AOTF-based UAV hyperspectral remote sensing water quality monitoring system were tested. The stability of the system and the feasibility of its application in water quality monitoring were preliminarily demonstrated.

The limitations of this system are as follows:

- (1) The instability of water surface fluctuation may affect the results of water quality detection.

- (2) The sample space of remote sensing imaging water quality detection is too narrow, which affects the stability and accuracy of the water quality parameter inversion model.

- (3) The water area that can be monitored in a single UAV flight is limited.

In view of the limitations of the system, the following investigations should be conducted.

First, the UAV hyperspectral imaging remote sensing system must be optimized, especially the performance of the AOTF-based hyperspectral imager, the data acquisition control, and the data processing. Specifically, the optimization should address the optical path, phase difference suppression, data acquisition, data preprocessing, geometric correction, waypoint image mosaicking, and radiometric correction. Once the system has been optimized, the influence of water surface fluctuation on the water quality detection results should be investigated.

Second, different types of water bodies, such as inland rivers, artificial ponds, and natural lakes, can be surveyed to increase the number of UAV water quality remote sensing sorties and hence the number of samples. In this way, a better water quality parameter inversion model can be established that adapts to the conditions of different waters.

Finally, the wavelength-tunable nature of the AOTF can be fully exploited by scanning and capturing only the characteristic spectral bands of the monitored water quality parameters. This would reduce the acquisition time at each waypoint and allow more waypoints and flight routes per sortie, which could make monitoring of large water areas possible. The AOTF-based spectral imaging remote sensing system therefore has great potential in water quality monitoring.
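The gain from scanning only selected bands can be estimated with simple arithmetic: the time spent at a waypoint scales with the number of bands captured. The sketch below illustrates this scaling. Every number in it (band counts, exposure, per-band overhead, hover budget) is a hypothetical placeholder and not a specification of the system, and transit time between waypoints is ignored.

```python
def waypoint_time_ms(n_bands: int, exposure_ms: int, overhead_ms: int) -> int:
    """Time needed at one waypoint: every band costs exposure plus overhead."""
    return n_bands * (exposure_ms + overhead_ms)


def waypoints_per_budget(
    budget_ms: int, n_bands: int, exposure_ms: int, overhead_ms: int
) -> int:
    """Number of complete waypoint acquisitions that fit in the hover budget."""
    return budget_ms // waypoint_time_ms(n_bands, exposure_ms, overhead_ms)


# All values below are assumptions chosen for illustration.
FULL_SWEEP_BANDS = 121     # e.g. a dense sweep over the full tuning range
SELECTED_BANDS = 10        # only bands characteristic of the target parameter
EXPOSURE_MS = 50           # per-band exposure
OVERHEAD_MS = 10           # per-band tuning, readout, and storage
HOVER_BUDGET_MS = 600_000  # total time available for hovering in one flight

full = waypoints_per_budget(
    HOVER_BUDGET_MS, FULL_SWEEP_BANDS, EXPOSURE_MS, OVERHEAD_MS
)
selected = waypoints_per_budget(
    HOVER_BUDGET_MS, SELECTED_BANDS, EXPOSURE_MS, OVERHEAD_MS
)
print(f"full sweep: {full} waypoints, selected bands: {selected} waypoints")
```

Integer milliseconds are used so that the division is exact. In practice, transit between waypoints and battery limits would reduce both counts, but the ratio between them still reflects the reduction in bands.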

Author Contributions

Conceptualization, H.L., T.Y. and Z.Z.; methodology, H.L., X.L. and J.Z.; supervision, S.X. and B.Q.; formal analysis, H.L., J.L. and Z.T.; writing—original draft preparation, H.L., T.Y. and X.W.; writing—review and editing, B.H. and X.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Class A Plan of the Major Strategic Pilot Project of the Chinese Academy of Sciences: XDA23040101; National Natural Science Foundation of China: 61872286; Key R&D Program of Shaanxi Province of China: 2020ZDLGY04-05 and S2021-YF-YBSF-0094; National Key R&D Program of China: 2017YFC1403700; National Natural Science Foundation of China: 11727806; “Light of the West” Project of the Chinese Academy of Sciences: XAB2017B25.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Acknowledgments

We are grateful to the Three Gorges Hydrological Bureau of the Yangtze River Water Conservancy Commission and the Institute of Geography and Resources Sciences of the Chinese Academy of Sciences for their assistance with the joint water quality survey. We would like to thank the northwest general agent of DJI UAV for help with UAV modification, spectrometer mounting, and UAV flights. We also thank the anonymous reviewers for their valuable comments.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Appendix A

Table A1.

Main technical indexes of some components of hyperspectral UAV system.

| Component | Parameter | Specification |
|---|---|---|
| UAV (WIND4) | Dimensions (mm) | 1668 (L) × 1518 (W) × 727 (H) |
| | Weight (without batteries) | 7.3 kg |
| | Max. takeoff weight | 24.5 kg |
| | Max. speed | 50 km/h (no wind) |
| | Hovering accuracy | Vertical: ±0.5 m, horizontal: ±1.5 m |
| | Max. wind resistance | 8 m/s |
| | Max. service ceiling above sea level | 2500 m by 2170R propellers; 4500 m by 2195 propellers |
| | Operating temperature | −10 to 40 °C |
| | Max. transmission distance | 3.5 km |
| Gimbal (DJI Ronin-MX 3-Axis Gimbal Stabilizer) | Rotation range | pitch: −150°–270°; roll: −110°–110°; yaw: 360° |
| | Follow speed | pitch: 100°/s; roll: 30°/s; yaw: 200°/s |
| | Stabilization accuracy | ±0.02° |
| | Weight | 2.15 kg |
| | Load capacity | 4.5 kg |
| RGB Camera | Resolution | 4000 × 2250 |
| | Aperture value | f/2.8 |
| | Focal length | 4 mm |
| | Weight | 80 g |
| MINI-PC | CPU | i5-7260U |
| | Hard disk capacity | 200 GB |
| | Weight | 680 g |

Table A2.

Spectrometer design integration materials based on acousto-optic tunable filter (AOTF) technology.

| Component | Parameter | Specification | Component | Parameter | Specification |
|---|---|---|---|---|---|
| AOTF filter (SGL30-V-12LE) | Wavelength | 400~1000 nm | Objective lens (V1228-MPY) | Focal length | 12 mm |
| | FWHM | ≤8 nm | | Image plane | 1.1″ |
| | Diffraction efficiency | ≥75% | | Aperture | F2.8–F16.0 |
| | Separation angle | ≥4° | | Weight | 98 g |
| | Aperture angle | ≥3.6° | Collimating lens (V5014-MP) | Focal length | 50 mm |
| | Primary deflection angle | ≥2.17° | | Image plane | 1″ |
| | Optical aperture | 12 mm × 12 mm | | Aperture | F1.4–F16.0 |
| | Electric power | ≤4 W | | Weight | 200 g |
| | Weight | 200 g | Linear polarizer (R5000490667) | Wavelength range | 300~2700 nm |
| AOTF driver | Frequency range | 43~156 MHz | | Extinction ratio | >800:1 |
| | Output power | 2.5 W | | Size | 25.4 mm |
| | Input voltage | 24 V | CMOS camera (MV-CA050-20UM) | Detector | PYTHON5000 |
| | Frequency stability | 10 ppm | | Pixel size | 4.8 μm × 4.8 μm |
| | Frequency resolution | 0.1 MHz | | Resolution | 2592 × 2048 |
| | Interface | USB 2.0 | | Interface | USB 3.0 |
| | Weight | 100 g | | Weight | 60 g |

Figure A1.

Core optical structure of spectrometer. The core optical structure of the acousto-optic tunable filter (AOTF) hyperspectral imaging system spectrometer is shown in Figure 2: (1) front objective lens; (2) aperture diaphragm; (3) collimating lens; (4) linear polarizer; (5) AOTF composed of TeO2 crystal and piezoelectric transducer; (6) linear polarizer; (7) secondary imaging lens; (8) CMOS detector; (9) mini-PC control and data acquisition system; and (10) RF driver.

Figure A2.

UAV remote sensing data acquisition process.

Figure A3.

Spectrometer non-uniformity test platform.

Figure A4.

Flow chart of spectral data registration.

Figure A5.

Field of view splicing diagram.

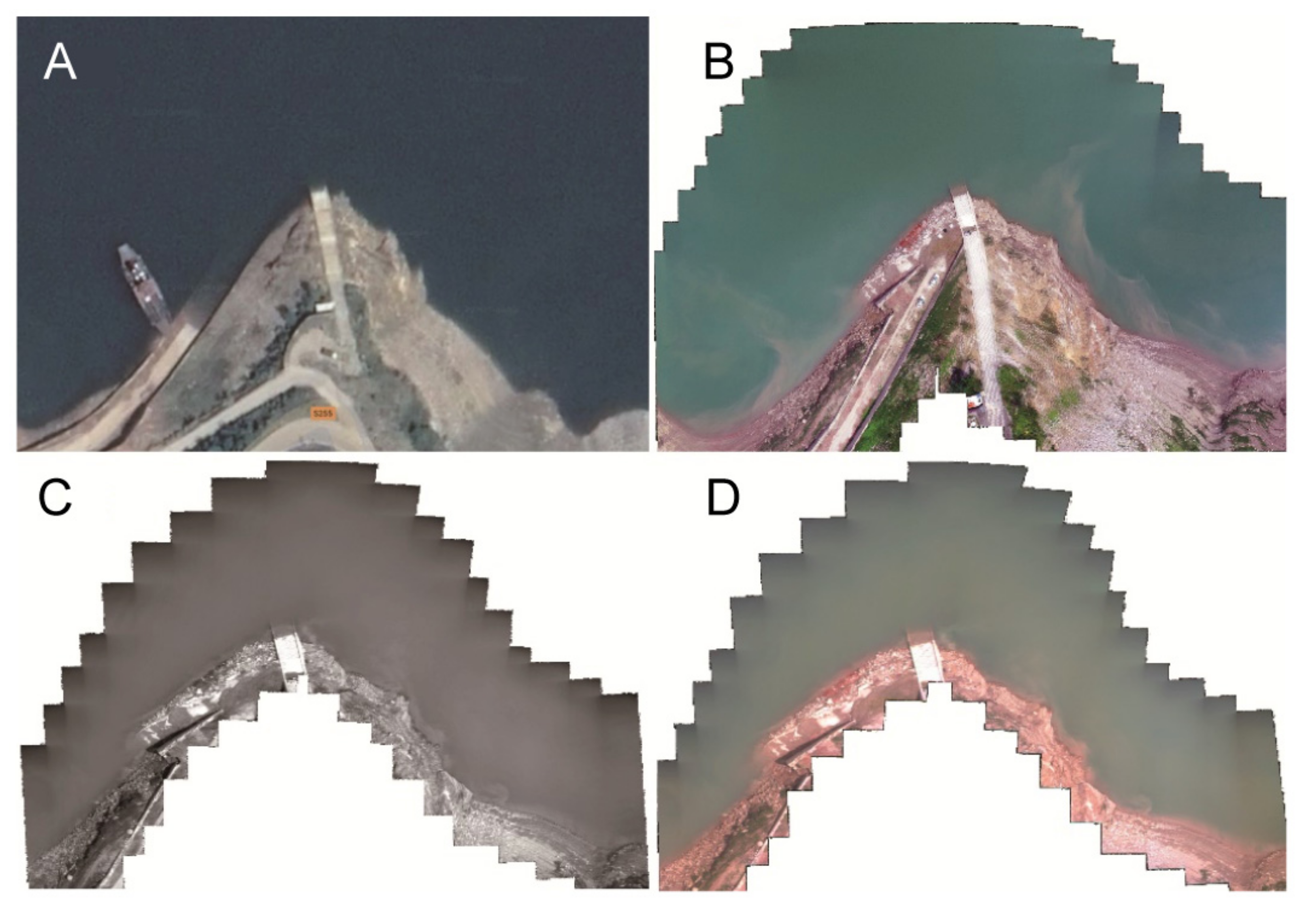

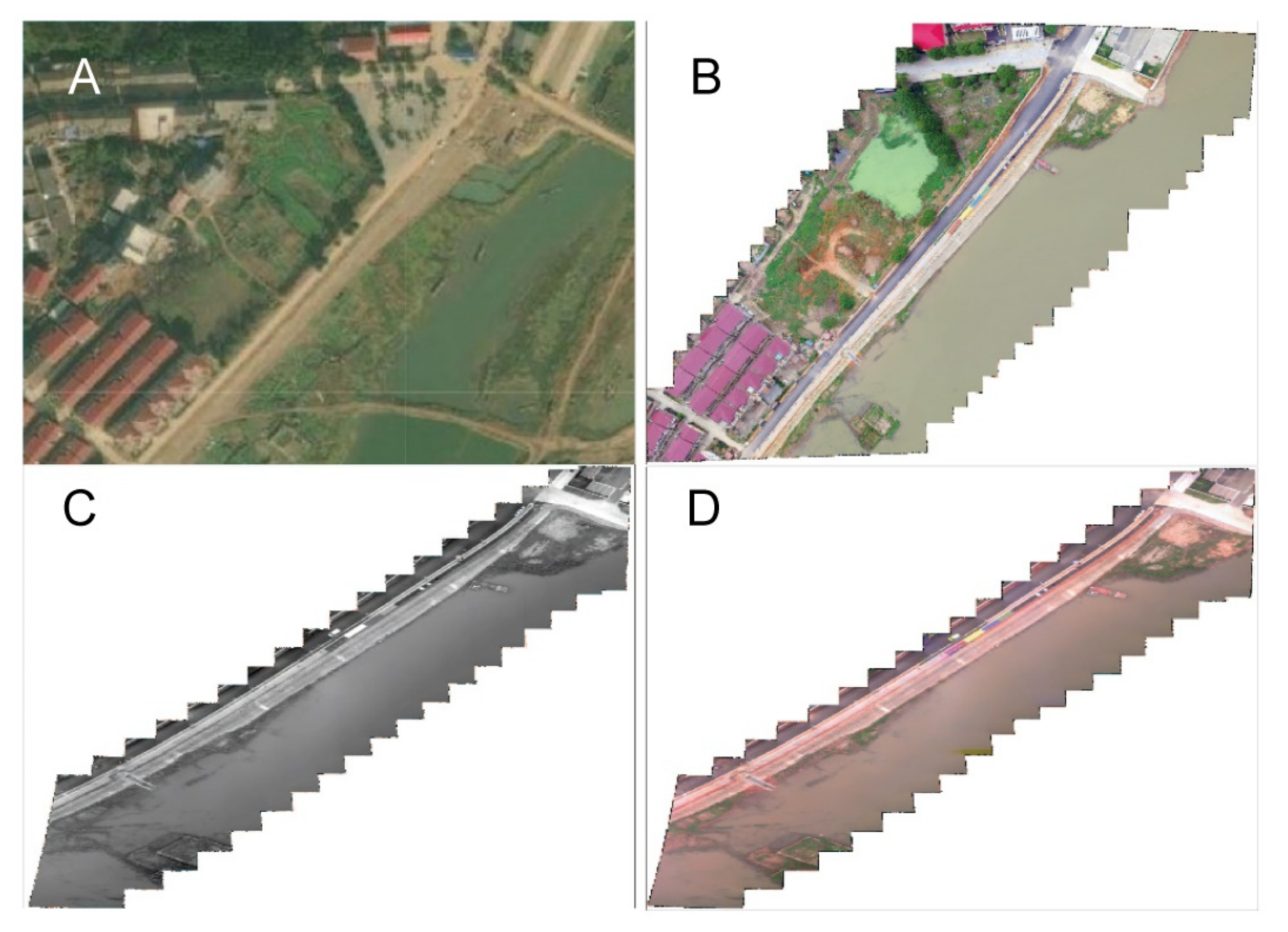

Figure A6.

Experimental site in Guojiaba. (A) Satellite image obtained from Google Earth on 23 September 2020. (B) Image taken by the UAV panchromatic camera. (C) Single-band image at 625 nm obtained from the UAV spectrometer. (D) Color image obtained from the 480, 540, and 650 nm bands.

Figure A7.

Experimental site of Poyang Lake. (A) Satellite image obtained from Google Earth on 23 September 2020. (B) Image taken by the UAV panchromatic camera. (C) Single-band image at 540 nm obtained from the UAV spectrometer. (D) Color image obtained from the 480, 540, and 650 nm bands.

Figure A8.

(A) Three Gorges demonstration area and ground control points. (B) Reflectance measurement of the near end of ground control points in the Three Gorges demonstration area. (C) Poyang Lake demonstration area and ground control points. (D) DCH500-S Chl-a sensor.
