A Task-Driven Invertible Projection Matrix Learning Algorithm for Hyperspectral Compressed Sensing

Abstract

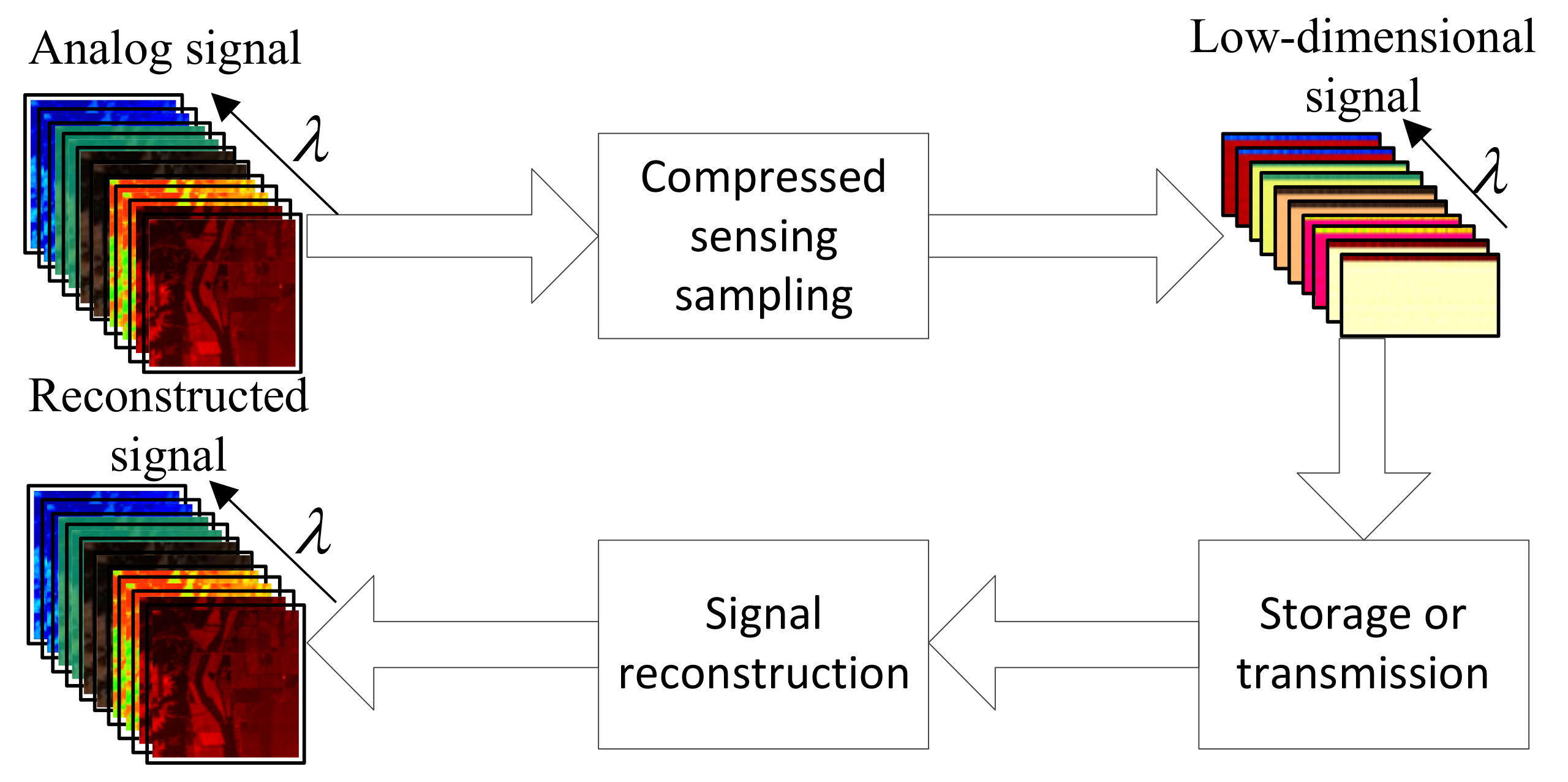

1. Introduction

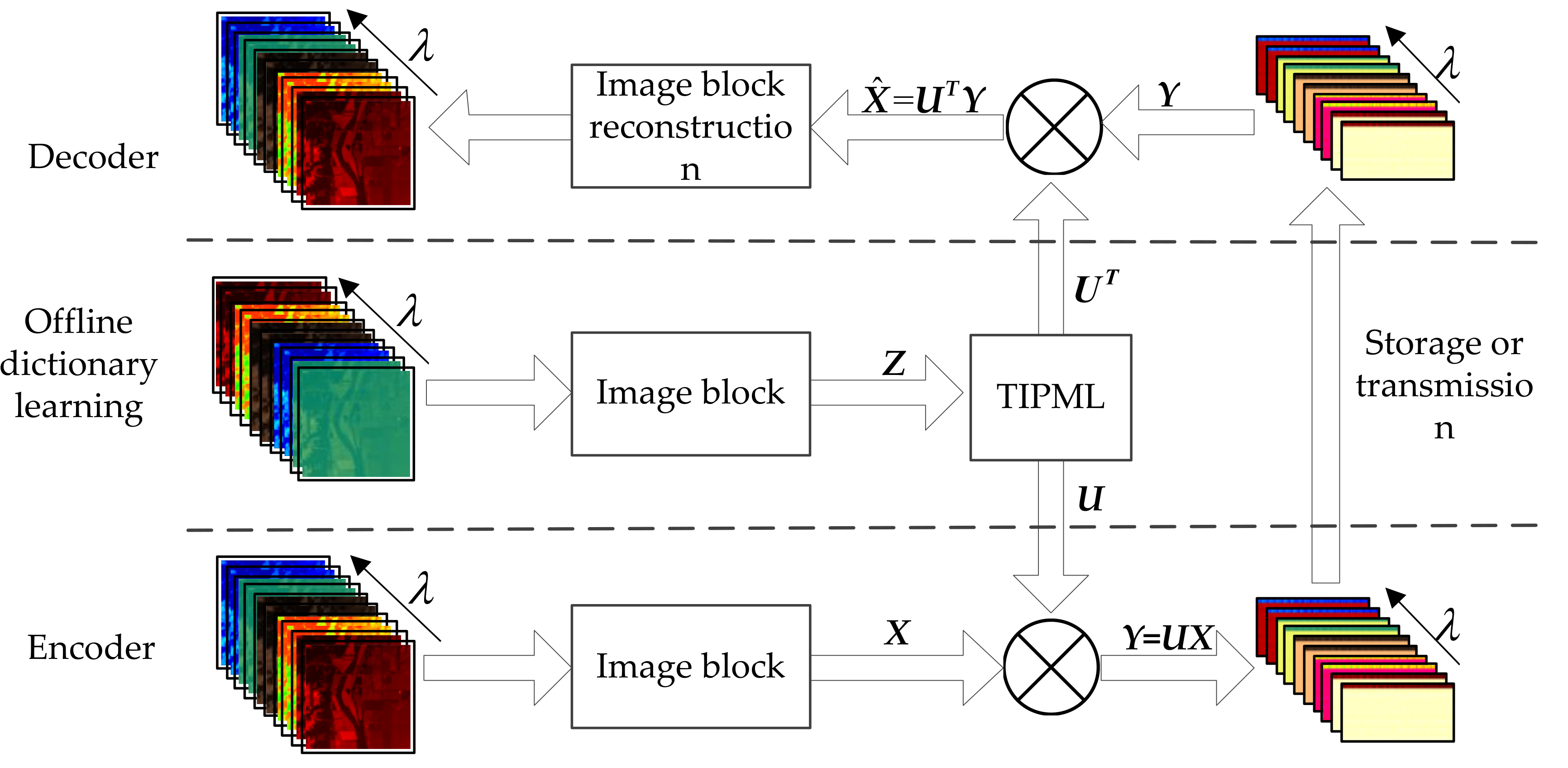

2. Principles and Methods

2.1. Constraints on Invertible Projection Transformation

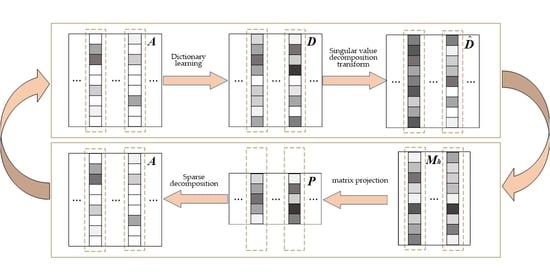

2.2. Singularity Transformation

- (1) The representation coefficients of signal x under dictionary D are sufficiently sparse;

- (2) the first h singular values of dictionary D are sufficiently large, and the last l singular values are sufficiently small but nonzero [16].
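The second condition can be inspected numerically. The snippet below is a minimal sketch, not part of the original method: the helper name `singular_value_profile` and the tolerance `tol` are hypothetical, and because the text does not quantify "sufficiently large" or "sufficiently small", the function only reports the gap between the first h singular values and the rest. It assumes h is smaller than the number of singular values of D.

```python
import numpy as np

def singular_value_profile(D, h, tol=1e-12):
    """Summarize how the singular values of D split at index h.

    Hypothetical helper: it reports the smallest of the first h singular
    values, the largest of the remaining ones, their ratio, and whether
    every remaining singular value is nonzero (above tol).
    """
    s = np.linalg.svd(D, compute_uv=False)  # sorted in descending order
    head, tail = s[:h], s[h:]
    return {
        "head_min": float(head.min()),
        "tail_max": float(tail.max()),
        "gap": float(head.min() / tail.max()),
        "tail_nonzero": bool(tail.min() > tol),
    }

# Toy dictionary with two dominant and two small, nonzero singular values.
D_toy = np.diag([5.0, 4.0, 0.1, 0.05])
profile = singular_value_profile(D_toy, h=2)
```

A large `gap` together with `tail_nonzero == True` corresponds to the situation described in condition (2); what counts as large enough depends on the application.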

2.3. Task-Driven Invertible Projection Matrix Learning

| Algorithm 1: TIPML |

| Input: Training data set X, number of iterations T, singular value transformation parameters t and r, dimension h of the low-dimensional dictionary P, signal sparsity K, and dimension N of the data columns. Output: Projection matrix U. |

| 1: Initialization: Split the data set X into data columns $x_i$ (N × 1), i = 1, 2, …, n, where i is the index of the data column $x_i$, so that $X = [x_1, x_2, \ldots, x_n]$ with $x_i \in \mathbb{R}^N$. The initial dictionary D is set to a DCT dictionary, $D = [d_1, d_2, \ldots, d_n]$ with $d_i \in \mathbb{R}^N$. |

| 2: Repeat |

| 3: Apply the singular value transformation to dictionary D: D = D(t, r). |

| 4: Singular value decomposition: $D = M \Lambda V^T$. |

| 5: Calculate the low-dimensional dictionary: $P = M_h^T D$. |

| 6: Based on the low-dimensional dictionary P, use the OMP algorithm to sparsely code the data set X, giving the sparse coefficients $A = [a_1, a_2, \ldots, a_n]$; set the dictionary atom index to j = 1. |

| 7: Repeat |

| 8: The error is calculated: . |

| 9: The error is decomposed by SVD (rank-1 decomposition) into: . |

| 10: Update dictionary: $d_j = u$; update sparse coefficient: $a_j = \lambda v$. |

| 11: j = j + 1 |

| 12: Until j > n |

| 13: Until is big enough, or reach the maximum number of iterations T. |

| 14: Calculate the projection matrix: $U = M_h^T$. |
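The loop structure of Algorithm 1 can be sketched in NumPy as follows. This is an illustrative sketch under several assumptions, not the authors' implementation. Step 3 is skipped because the transformation D(t, r) is not defined in this text. The data are projected with $M_h^T$ before OMP coding against P. Steps 8 to 10 are filled in with the standard K-SVD rank-1 atom update. The stopping rule is reduced to a fixed number of iterations T. The projection matrix is taken from an SVD of the final dictionary. All function names (`dct_dictionary`, `omp`, `tipml_sketch`) and the parameter `n_atoms` are hypothetical.

```python
import numpy as np

def dct_dictionary(N, n):
    """Overcomplete DCT-style dictionary: n unit-norm atoms of length N."""
    k = np.arange(n)
    t = np.arange(N)[:, None]
    D = np.cos(np.pi * (t + 0.5) * k / n)
    return D / np.linalg.norm(D, axis=0)

def omp(P, y, K):
    """Plain orthogonal matching pursuit: approximate y with at most K atoms of P."""
    norms = np.linalg.norm(P, axis=0) + 1e-12
    residual, support = y.copy(), []
    a = np.zeros(P.shape[1])
    for _ in range(K):
        j = int(np.argmax(np.abs(P.T @ residual) / norms))
        if j in support:
            break
        support.append(j)
        coef, *_ = np.linalg.lstsq(P[:, support], y, rcond=None)
        residual = y - P[:, support] @ coef
    a[support] = coef
    return a

def tipml_sketch(X, h, K, T, n_atoms):
    """Sketch of the Algorithm 1 loop (step 3 omitted, see lead-in)."""
    N = X.shape[0]
    D = dct_dictionary(N, n_atoms)                        # step 1
    for _ in range(T):                                    # steps 2 and 13
        M, _, _ = np.linalg.svd(D, full_matrices=False)   # step 4
        Mh = M[:, :h]
        P = Mh.T @ D                                      # step 5
        Y = Mh.T @ X                                      # assumption: code projected data
        A = np.column_stack([omp(P, y, K) for y in Y.T])  # step 6
        for j in range(n_atoms):                          # steps 7 to 12
            users = np.nonzero(A[j])[0]                   # columns that use atom j
            if users.size == 0:
                continue
            A[j, users] = 0.0
            E = X[:, users] - D @ A[:, users]             # error without atom j
            u, s, vt = np.linalg.svd(E, full_matrices=False)
            D[:, j] = u[:, 0]                             # d_j = u
            A[j, users] = s[0] * vt[0]                    # a_j = lambda * v
    M, _, _ = np.linalg.svd(D, full_matrices=False)
    return M[:, :h].T                                     # step 14: U = M_h^T

rng = np.random.default_rng(0)
X_demo = rng.standard_normal((16, 40))                    # synthetic stand-in data
U_demo = tipml_sketch(X_demo, h=4, K=3, T=2, n_atoms=32)
print(U_demo.shape)  # (4, 16)
```

Because U consists of the first h left singular vectors, its rows are orthonormal, so $U U^T = I_h$ in this sketch.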

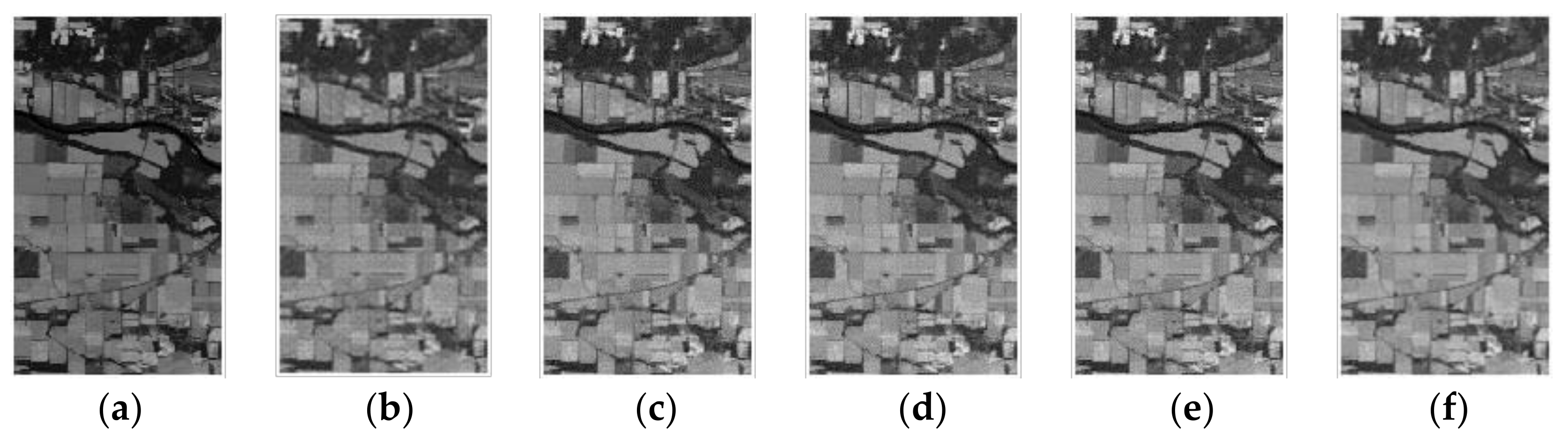

3. Simulation Experiment and Results

4. Conclusions

- (1) Derived the equivalent model of the invertible projection model theoretically, which converts the complex invertible projection training model into a coupled dictionary training model;

- (2) proposed a task-driven invertible projection matrix learning algorithm for invertible projection model training;

- (3) based on the task-driven invertible projection matrix learning algorithm, established a compressed sensing algorithm with strong real-time performance and high reconstruction accuracy.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Parente, M.; Kerekes, J.; Heylen, R. A Special Issue on Hyperspectral Imaging [From the Guest Editors]. IEEE Geosci. Remote Sens. Mag. 2019, 7, 6–7.

- Vohland, M.; Jung, A. Hyperspectral Imaging for Fine to Medium Scale Applications in Environmental Sciences. Remote Sens. 2020, 12, 2962.

- Saari, H.; Aallos, V.-V.; Akujärvi, A.; Antila, T.; Holmlund, C.; Kantojärvi, U.; Mäkynen, J.; Ollila, J. Novel Miniaturized Hyperspectral Sensor for UAV and Space Applications. In Proceedings of the SPIE 7474, Sensors, Systems, and Next-Generation Satellites XIII, 74741M, SPIE Remote Sensing, Berlin, Germany, 31 August–3 September 2009.

- Renhorn, I.G.E.; Axelsson, L. High spatial resolution hyperspectral camera based on exponentially variable filter. Opt. Eng. 2019, 58, 103106.

- Pu, H.; Lin, L.; Sun, D.-W. Principles of Hyperspectral Microscope Imaging Techniques and Their Applications in Food Quality and Safety Detection: A Review. Compr. Rev. Food Sci. Food Saf. 2019, 18, 853–866.

- Wang, L.; Lu, K.; Liu, P. Compressed Sensing of a Remote Sensing Image Based on the Priors of the Reference Image. IEEE Geosci. Remote Sens. Lett. 2015, 12, 736–740.

- Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306.

- Hsu, C.; Lin, C.; Kao, C.; Lin, Y. DCSN: Deep Compressed Sensing Network for Efficient Hyperspectral Data Transmission of Miniaturized Satellite. IEEE Trans. Geosci. Remote Sens. 2020.

- Biondi, F. In Compressed Sensing Radar-New Concepts of Incoherent Continuous Wave Transmissions; IEEE: Piscataway, NJ, USA, 2015; pp. 204–208.

- Candes, E.J.; Romberg, J.; Tao, T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 2006, 52, 489–509.

- Candes, E.J.; Tao, T. Near-Optimal Signal Recovery from Random Projections: Universal Encoding Strategies. IEEE Trans. Inf. Theory 2006, 52, 5406–5425.

- Xue, H.; Sun, L.; Ou, G. Speech reconstruction based on compressed sensing theory using smoothed L0 algorithm. In Proceedings of the 2016 8th International Conference on Wireless Communications & Signal Processing (WCSP), Yangzhou, China, 13–15 October 2016; pp. 1–4.

- Luo, H.; Zhang, N.; Wang, Y. Modified Smoothed Projected Landweber Algorithm for Adaptive Block Compressed Sensing Image Reconstruction. In Proceedings of the 2018 International Conference on Audio, Language and Image Processing (ICALIP), Shanghai, China, 16–17 July 2018; pp. 430–434.

- Matin, A.; Dai, B.; Huang, Y.; Wang, X. Ultrafast Imaging with Optical Encoding and Compressive Sensing. J. Light. Technol. 2019, 761–768.

- Dao, P.; Li, X.; Griffin, A. Quantitative Comparison of EEG Compressed Sensing using Gabor and K-SVD Dictionaries. In Proceedings of the 2018 IEEE 23rd International Conference on Digital Signal Processing (DSP), Shanghai, China, 19–21 November 2018; pp. 1–5.

- Sana, F.; Katterbauer, K.; Al-Naffouri, T. Enhanced recovery of subsurface geological structures using compressed sensing and the Ensemble Kalman filter. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 3107–3110.

- Dehkordi, R.; Khosravi, H.; Ahmadyfard, A. Single image super-resolution based on sparse representation using dictionaries trained with input image patches. IET Image Process. 2020, 14, 1587–1593.

- Silong, Z.; Yuanxiang, L.; Xian, W. Nonlinear dimensionality reduction method based on dictionary learning. Acta Autom. Sin. 2016, 42, 1065–1076.

- Vehkaperä, M.; Kabashima, Y.; Chatterjee, S. Analysis of Regularized LS Reconstruction and Random Matrix Ensembles in Compressed Sensing. IEEE Trans. Inf. Theory 2016, 62, 2100–2124.

- Ziran, W.; Huachuang, W.; Jianlin, Z. Structural optimization of measurement matrix in image reconstruction based on compressed sensing. In Proceedings of the 2017 7th IEEE International Conference on Electronics Information and Emergency Communication (ICEIEC), Macau, China, 21–23 July 2017; pp. 223–227.

- Zhang, W.; Tan, C.; Xu, Y. Electrical Resistance Tomography Image Reconstruction Based on Modified OMP Algorithm. IEEE Sens. J. 2019, 19, 5723–5731.

- Qian, Y.; Zhu, D.; Yu, X. SAR high-resolution imaging from missing raw data using StOMP. J. Eng. 2019, 2019, 7347–7351.

- Davenport, M.; Needell, D.; Wakin, M. Signal Space CoSaMP for Sparse Recovery with Redundant Dictionaries. IEEE Trans. Inf. Theory 2013, 59, 6820–6829.

- Chen, S.; Feng, C.; Xu, X. Micro-motion feature extraction of narrow-band radar target based on ROMP. J. Eng. 2019, 2019, 7860–7863.

- Trevisi, M.; Akbari, A.; Trocan, M. Compressive Imaging Using RIP-Compliant CMOS Imager Architecture and Landweber Reconstruction. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 387–399.

- Wei, X. Reconstructible Nonlinear Dimensionality Reduction via Joint Dictionary Learning. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 175–189.

- Shufang, X. Theory and Method of Matrix Calculation; Peking University Press: Beijing, China, 1995; p. 7.

- Baumgardner, M.F.; Biehl, L.L.; Landgrebe, D.A. 220 Band AVIRIS Hyperspectral Image Data Set: 12 June 1992 Indian Pine Test Site 3. In Purdue University Research Repository; Purdue University: West Lafayette, IN, USA, 2015.

- Lee, C.; Youn, S.; Jeong, T.; Lee, E.; Serra-Sagristà, J. Hybrid Compression of Hyperspectral Images Based on PCA with Pre-Encoding Discriminant Information. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1491–1495.

| Methods | Sampling Rate 0.1 | Sampling Rate 0.2 | Sampling Rate 0.3 | Sampling Rate 0.4 | Sampling Rate 0.5 |
|---|---|---|---|---|---|
| Ours | 61.20 | 63.39 | 64.17 | 64.86 | 65.63 |
| PCA | 54.09 | 56.15 | 57.84 | 59.46 | 61.10 |
| KSVD-StOMP | 51.06 | 54.10 | 59.21 | 60.87 | 61.51 |
| KSVD-OMP | 31.37 | 28.30 | 26.93 | 27.08 | 27.20 |
| KSVD-GOMP | 33.85 | 30.31 | 29.02 | 28.61 | 28.74 |
| KSVD-GROMP | 34.39 | 33.59 | 33.57 | 35.04 | 35.72 |
| KSVD-CoSaMP | 48.70 | 49.61 | 50.30 | 50.18 | 50.26 |
| DCT-SPL | 38.27 | 46.83 | 54.10 | 58.81 | 60.70 |
| DCT-StOMP | 30.44 | 30.97 | 31.52 | 32.17 | 32.96 |
| CDL-StOMP | 60.67 | 63.06 | 63.33 | 63.33 | 63.33 |
| CDL-OMP | 60.67 | 63.06 | 63.33 | 63.33 | 63.33 |
| CDL-CoSaMP | 48.79 | 49.93 | 49.94 | 49.94 | 49.94 |
| CDL-GROMP | 14.83 | 22.90 | 28.39 | 33.27 | 37.21 |
| CDL-GOMP | 9.19 | 13.73 | 17.85 | 21.60 | 24.74 |

| Methods | Sampling Rate 0.1 | Sampling Rate 0.2 | Sampling Rate 0.3 | Sampling Rate 0.4 | Sampling Rate 0.5 |
|---|---|---|---|---|---|
| Ours | 1.17 × 10⁻⁸ | 1.16 × 10⁻⁸ | 1.18 × 10⁻⁸ | 1.18 × 10⁻⁸ | 1.14 × 10⁻⁸ |
| PCA | 1.20 × 10⁻⁸ | 1.19 × 10⁻⁸ | 1.22 × 10⁻⁸ | 1.21 × 10⁻⁸ | 1.18 × 10⁻⁸ |
| KSVD-StOMP | 8.39 × 10⁻⁴ | 1.16 × 10⁻⁸ | 1.19 × 10⁻⁸ | 1.18 × 10⁻⁸ | 1.15 × 10⁻⁸ |
| KSVD-OMP | 1.45 × 10⁻¹ | 6.06 × 10⁻³ | 5.03 × 10⁻⁴ | 1.18 × 10⁻⁸ | 1.32 × 10⁻³ |
| KSVD-GOMP | 5.34 × 10⁻² | 1.15 × 10⁻⁸ | 4.82 × 10⁻³ | 1.18 × 10⁻⁸ | 1.97 × 10⁻³ |
| KSVD-GROMP | 1.22 × 10⁻³ | 1.16 × 10⁻⁸ | 3.19 × 10⁻² | 1.17 × 10⁻⁸ | 1.07 × 10⁻² |
| KSVD-CoSaMP | 1.16 × 10⁻⁸ | 1.16 × 10⁻⁸ | 1.19 × 10⁻⁸ | 1.16 × 10⁻⁸ | 1.15 × 10⁻⁸ |
| DCT-SPL | 1.14 × 10⁻⁸ | 1.18 × 10⁻⁸ | 1.15 × 10⁻⁸ | 1.17 × 10⁻⁸ | 1.15 × 10⁻⁸ |
| CDL-StOMP | 1.17 × 10⁻⁸ | 1.16 × 10⁻⁸ | 1.19 × 10⁻⁸ | 1.17 × 10⁻⁸ | 1.15 × 10⁻⁸ |
| CDL-OMP | 1.17 × 10⁻⁸ | 1.16 × 10⁻⁸ | 1.19 × 10⁻⁸ | 1.17 × 10⁻⁸ | 1.15 × 10⁻⁸ |
| CDL-CoSaMP | 1.65 × 10⁻³ | 7.16 × 10⁻¹ | 1.18 × 10⁻⁸ | 1.75 × 10⁻² | 8.31 × 10⁻² |
| CDL-GROMP | 4.55 × 10⁻⁴ | 1.20 × 10⁻⁴ | 1.32 × 10⁻³ | 9.83 × 10⁻⁴ | 2.88 × 10⁻⁴ |
| CDL-GOMP | 4.55 × 10⁻⁴ | 1.20 × 10⁻⁴ | 1.32 × 10⁻³ | 9.83 × 10⁻⁴ | 2.88 × 10⁻⁴ |

| Methods | Sampling Rate 0.1 | Sampling Rate 0.2 | Sampling Rate 0.3 | Sampling Rate 0.4 | Sampling Rate 0.5 |
|---|---|---|---|---|---|
| Ours | 0.3 | 0.3 | 0.4 | 0.4 | 0.4 |
| PCA | 0.3 | 0.4 | 0.4 | 0.4 | 0.4 |
| KSVD-StOMP | 210.8 | 443.7 | 667.1 | 934.6 | 1235.0 |
| KSVD-OMP | 264.9 | 401.8 | 545.3 | 594.0 | 613.5 |
| KSVD-GOMP | 101.7 | 170.4 | 206.2 | 246.8 | 252.3 |
| KSVD-GROMP | 84.6 | 131.7 | 181.0 | 186.7 | 189.3 |
| KSVD-CoSaMP | 302.7 | 527.7 | 597.3 | 829.7 | 877.4 |
| DCT-SPL | 354.8 | 355.1 | 354.8 | 357.0 | 359.1 |
| DCT-StOMP | 199.4 | 437.6 | 732.2 | 1085.2 | 1517.4 |
| CDL-StOMP | 228.2 | 503.3 | 763.0 | 1054.0 | 1384.2 |
| CDL-OMP | 209.5 | 462.7 | 696.1 | 955.6 | 1261.4 |
| CDL-CoSaMP | 341.3 | 553.0 | 635.0 | 895.7 | 943.9 |
| CDL-GROMP | 75.5 | 111.8 | 150.4 | 153.0 | 157.3 |
| CDL-GOMP | 167.7 | 253.3 | 317.4 | 365.8 | 375.5 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Dai, S.; Liu, W.; Wang, Z.; Li, K. A Task-Driven Invertible Projection Matrix Learning Algorithm for Hyperspectral Compressed Sensing. Remote Sens. 2021, 13, 295. https://doi.org/10.3390/rs13020295
