Deep Learning for Feature-Level Data Fusion: Higher Resolution Reconstruction of Historical Landsat Archive

Abstract

Simple Summary


1. Introduction

2. Materials and Methods

2.1. Theoretical Basis

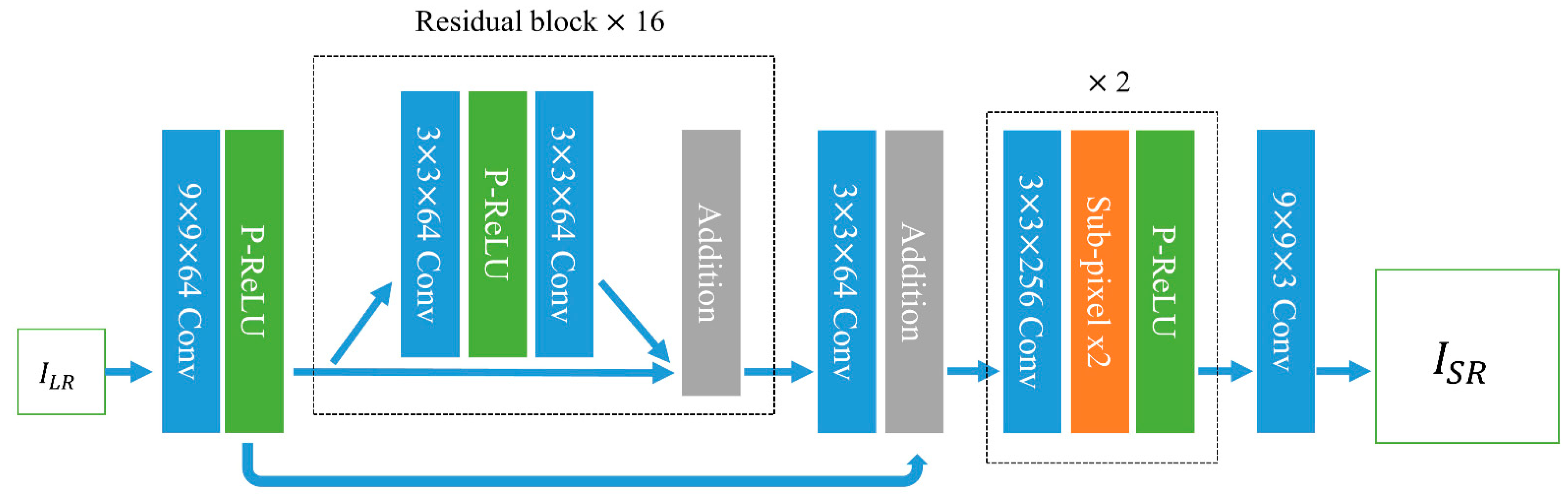

2.1.1. Deep Convolutional Neural Networks

2.1.2. Generative Adversarial Networks

2.1.3. Loss Function

2.2. Implementation

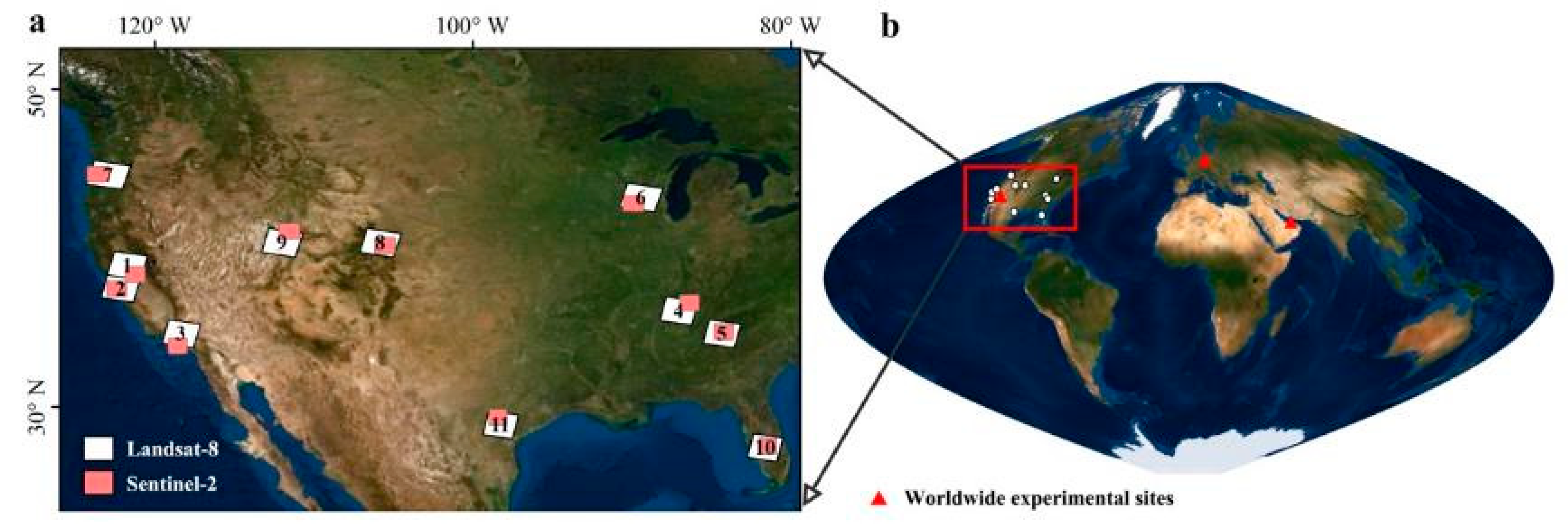

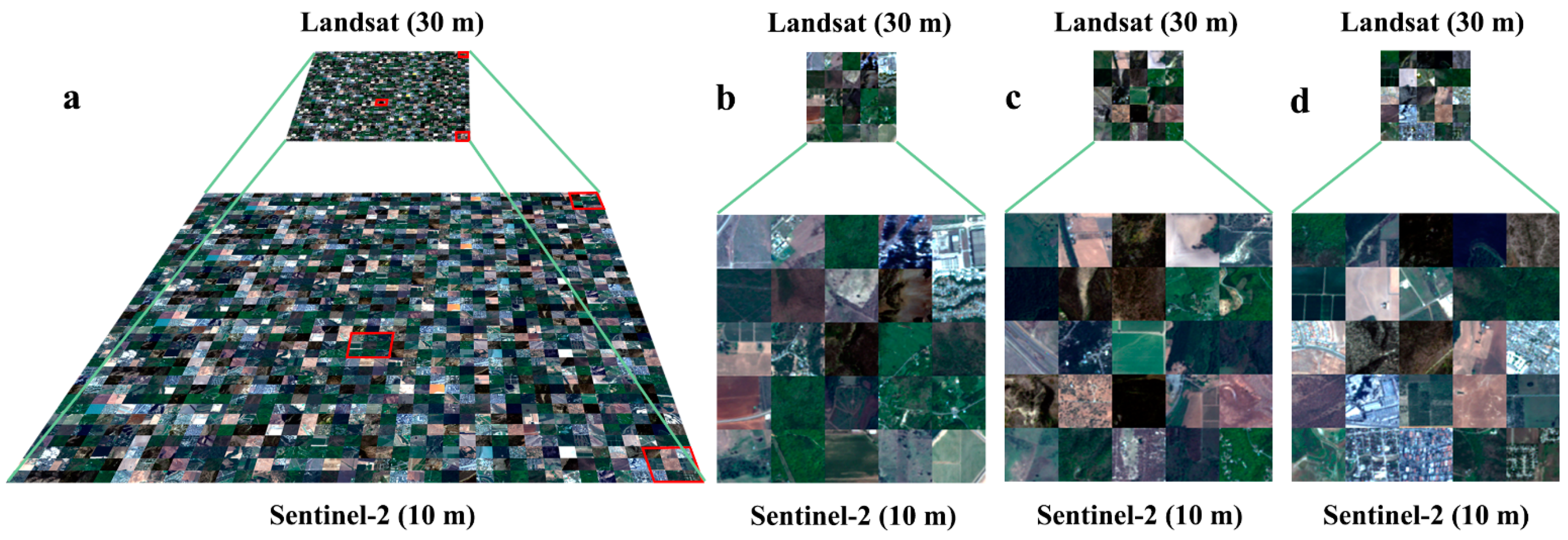

2.3. Landsat and Sentinel-2 Datasets

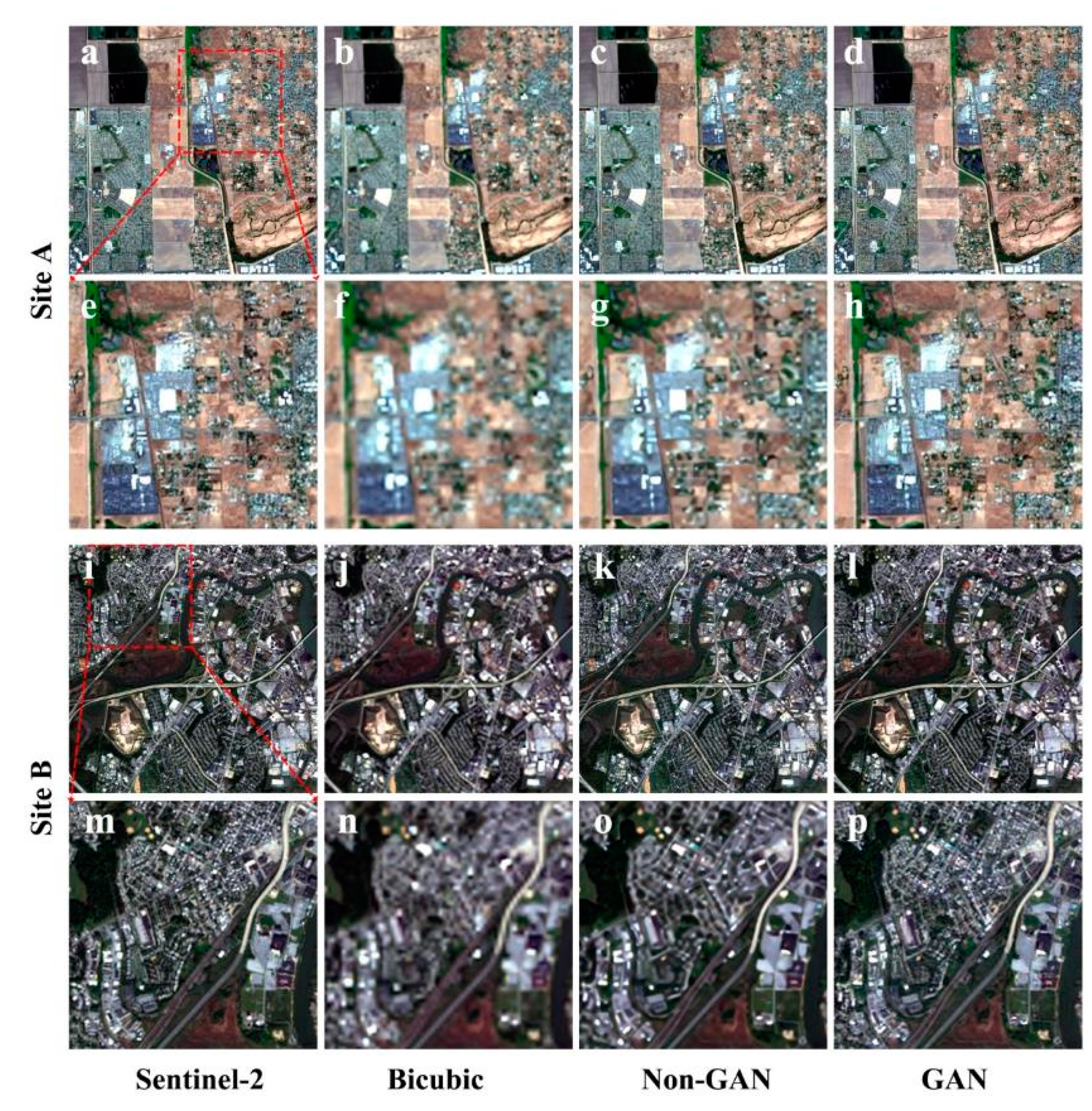

3. Experimental Tests and Results

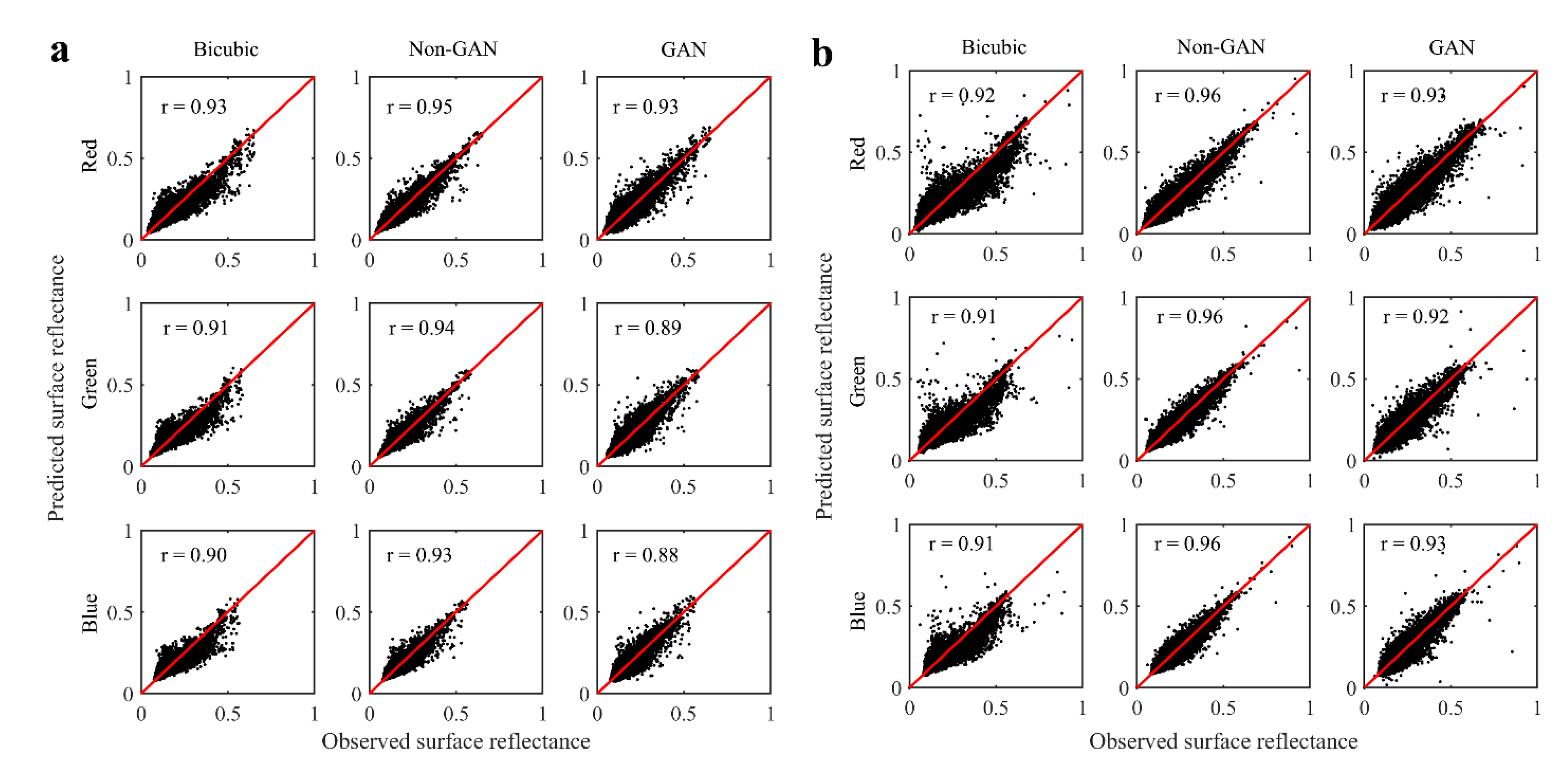

3.1. Tests with Simulated Data

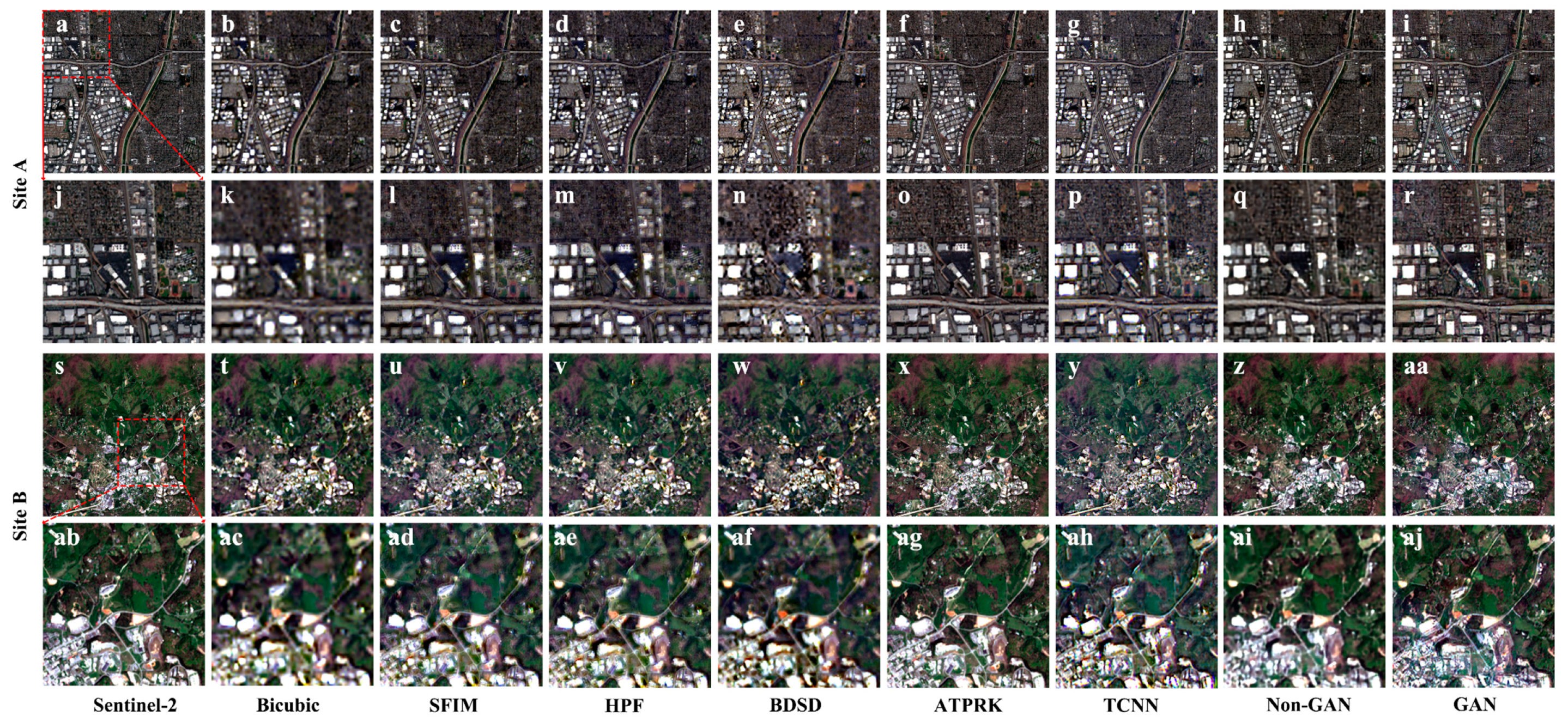

3.2. Tests with Real Data

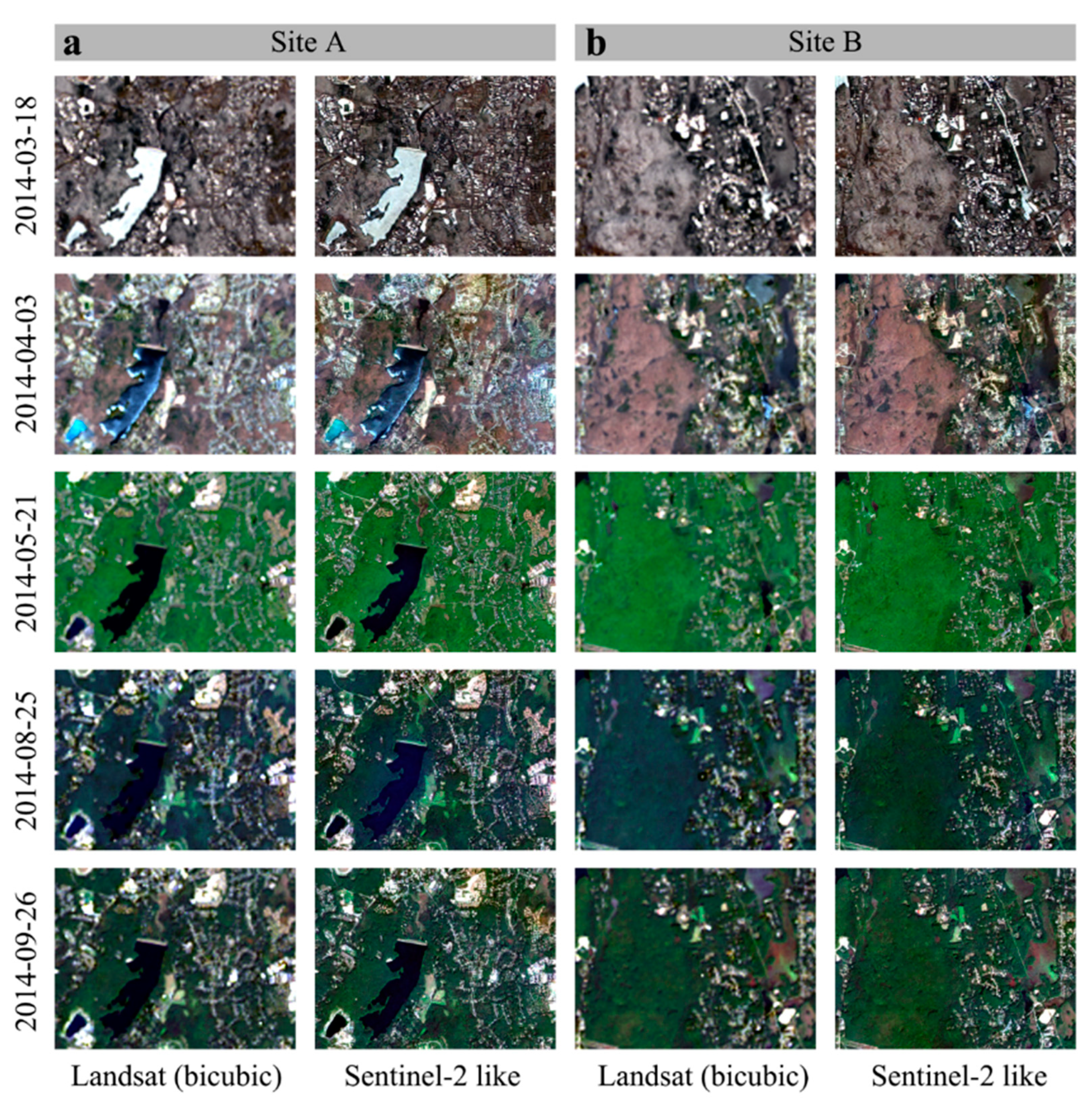

3.3. Tests with Time-Series Data

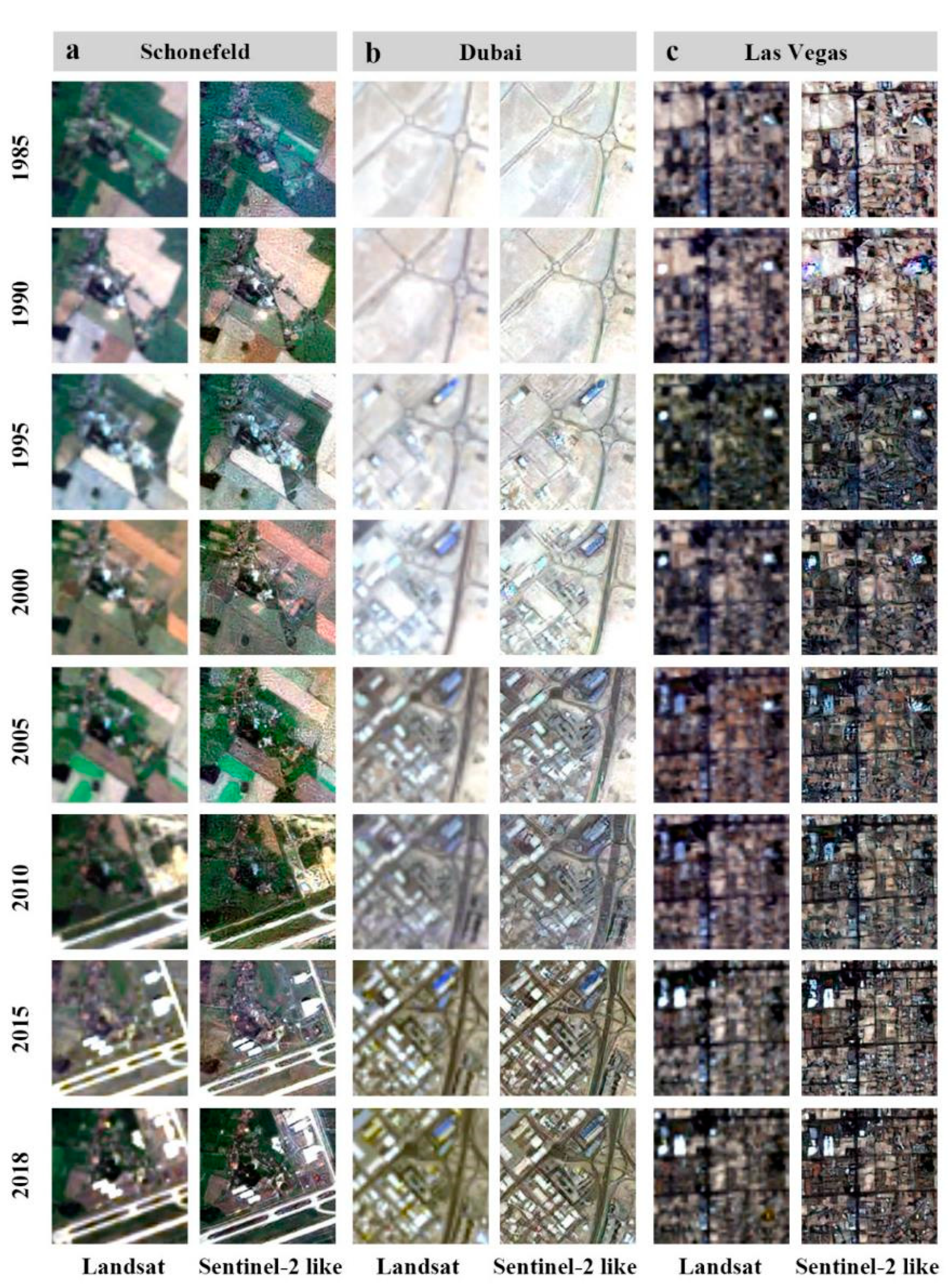

3.4. Reconstruction of 10 m Historical Landsat Archive

4. Discussion

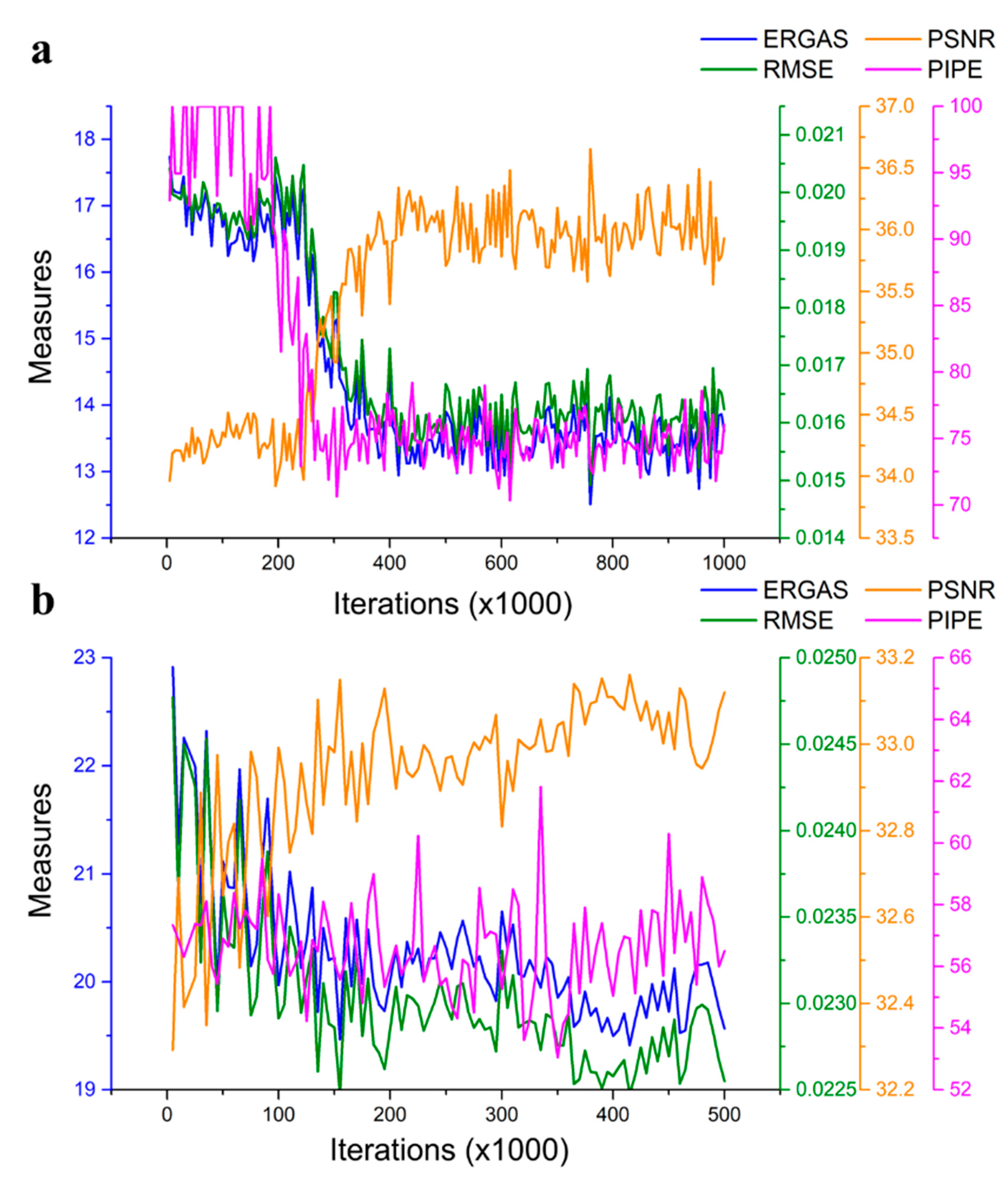

4.1. Training and Tuning of Deep Learning Models

4.2. Future Prospect

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Pair | Landsat-8 scene (path/row) | Landsat-8 date | Sentinel-2 tile | Sentinel-2 date |
|---|---|---|---|---|
| #1 | 044033 | 2016-07-13 | 10SFH | 2016-07-14 |
| #2 | 044034 | 2016-07-13 | 10SEG | 2016-07-14 |
| #3 | 041036 | 2017-12-18 | 11SLT | 2017-12-15 |
| #4 | 021035 | 2018-04-29 | 16SEF | 2018-04-28 |
| #5 | 019036 | 2018-05-01 | 16SGD | 2018-04-30 |
| #6 | 024030 | 2018-07-07 | 15TYH | 2018-07-08 |
| #7 | 046029 | 2018-08-18 | 10TDQ | 2018-08-19 |
| #8 | 034032 | 2018-09-15 | 13TDE | 2018-09-14 |
| #9 | 038032 | 2018-11-14 | 12TVL | 2018-11-14 |
| #10 | 016041 | 2018-12-06 | 17RML | 2018-12-05 |
| #11 | 027040 | 2019-01-01 | 14RNT | 2019-01-05 |
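Each pair matches a Landsat-8 scene, identified by its six-digit WRS-2 path/row code, with a Sentinel-2 MGRS tile. The short sketch below is an illustration and not part of the original workflow: it parses the path/row codes and computes the acquisition gap in days for each pair. The helper names `split_path_row` and `gap_days` are hypothetical.

```python
from datetime import date

# (pair, Landsat-8 path/row, Landsat-8 date, Sentinel-2 tile, Sentinel-2 date),
# transcribed from the table above.
PAIRS = [
    (1, "044033", "2016-07-13", "10SFH", "2016-07-14"),
    (2, "044034", "2016-07-13", "10SEG", "2016-07-14"),
    (3, "041036", "2017-12-18", "11SLT", "2017-12-15"),
    (4, "021035", "2018-04-29", "16SEF", "2018-04-28"),
    (5, "019036", "2018-05-01", "16SGD", "2018-04-30"),
    (6, "024030", "2018-07-07", "15TYH", "2018-07-08"),
    (7, "046029", "2018-08-18", "10TDQ", "2018-08-19"),
    (8, "034032", "2018-09-15", "13TDE", "2018-09-14"),
    (9, "038032", "2018-11-14", "12TVL", "2018-11-14"),
    (10, "016041", "2018-12-06", "17RML", "2018-12-05"),
    (11, "027040", "2019-01-01", "14RNT", "2019-01-05"),
]


def split_path_row(scene):
    """Split a six-digit WRS-2 identifier such as '044033' into (path, row)."""
    return int(scene[:3]), int(scene[3:])


def gap_days(l8_date, s2_date):
    """Absolute number of days between the Landsat-8 and Sentinel-2 acquisitions."""
    return abs((date.fromisoformat(l8_date) - date.fromisoformat(s2_date)).days)


# Acquisition gap for every pair, keyed by pair number.
gaps = {pair: gap_days(l8, s2) for pair, _, l8, _, s2 in PAIRS}

for pair, scene, l8, tile, s2 in PAIRS:
    path, row = split_path_row(scene)
    print(f"#{pair}: path {path}, row {row}, tile {tile}, gap {gaps[pair]} day(s)")
```

Applied to the table values, the gaps range from 0 days (pair #9) to 4 days (pair #11).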

| Image | Massachusetts (Scene: 012031) | California (Scene: 043034) |
|---|---|---|
| #1 | 2014-03-18 | 2014-01-22 |
| #2 | 2014-04-03 | 2014-02-23 |
| #3 | 2014-05-21 | 2014-03-11 |
| #4 | 2014-08-25 | 2014-04-28 |
| #5 | 2014-09-26 | 2014-05-14 |
| #6 | | 2014-06-15 |
| #7 | | 2014-07-01 |
| #8 | | 2014-08-18 |
| #9 | | 2014-09-03 |
| #10 | | 2014-10-05 |

| Measures (↑ higher is better, ↓ lower is better) | Bicubic | Non-GAN | GAN |
|---|---|---|---|
| QI (↑) | 0.647 ± 0.060 | 0.775 ± 0.051 | 0.669 ± 0.066 |
| PSNR (↑) | 39.197 ± 3.600 | 41.977 ± 3.987 | 39.391 ± 3.856 |
| RMSE (↓) | 0.012 ± 0.005 | 0.009 ± 0.004 | 0.012 ± 0.005 |
| ERGAS (↓) | 12.212 ± 3.973 | 9.027 ± 3.294 | 11.903 ± 4.266 |
| NIQE (↓) | 5.995 ± 0.507 | 4.839 ± 0.776 | 2.911 ± 0.497 |
| PIQE (↓) | 78.008 ± 8.498 | 61.687 ± 9.048 | 24.509 ± 14.898 |
| BRISQUE (↓) | 52.079 ± 3.413 | 40.553 ± 5.194 | 25.257 ± 7.568 |
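QI, PSNR, RMSE, and ERGAS compare a reconstructed image against a reference image. The sketch below shows textbook forms of these four measures; it is a minimal illustration under stated assumptions, not the authors' evaluation code. It assumes reflectance scaled to [0, 1] (so PSNR uses a peak of 1.0), computes QI once over the whole image rather than over the sliding windows of the original Wang and Bovik index, and takes `ratio` in ERGAS as the fine-to-coarse pixel-size ratio (for example 10/30 for 10 m output from 30 m Landsat input). The function names are hypothetical. NIQE, PIQE, and BRISQUE are no-reference measures (they score an image without a reference) and are more involved to implement, so they are not reproduced here.

```python
import numpy as np


def rmse(ref, est):
    """Root-mean-square error over all pixels (and bands)."""
    ref = np.asarray(ref, dtype=np.float64)
    est = np.asarray(est, dtype=np.float64)
    return float(np.sqrt(np.mean((ref - est) ** 2)))


def psnr(ref, est, peak=1.0):
    """Peak signal-to-noise ratio in dB; `peak` assumes reflectance in [0, 1]."""
    err = rmse(ref, est)
    return float("inf") if err == 0 else float(20.0 * np.log10(peak / err))


def qi(ref, est):
    """Universal image quality index computed once over the whole image
    (a simplification of the sliding-window form)."""
    x = np.asarray(ref, dtype=np.float64).ravel()
    y = np.asarray(est, dtype=np.float64).ravel()
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(ddof=1), y.var(ddof=1)
    cov = np.cov(x, y)[0, 1]  # sample covariance, also normalized by N - 1
    return float(4.0 * cov * mx * my / ((vx + vy) * (mx ** 2 + my ** 2)))


def ergas(ref, est, ratio):
    """ERGAS for arrays shaped (bands, rows, cols).

    `ratio` is the fine-to-coarse pixel-size ratio, e.g. 10 / 30.
    """
    ref = np.asarray(ref, dtype=np.float64)
    est = np.asarray(est, dtype=np.float64)
    # Per-band RMSE normalized by the mean of the reference band.
    terms = [(rmse(r, e) / r.mean()) ** 2 for r, e in zip(ref, est)]
    return float(100.0 * ratio * np.sqrt(np.mean(terms)))


if __name__ == "__main__":
    # Synthetic 4-band "reflectance" image and a noisy prediction of it.
    rng = np.random.default_rng(0)
    reference = rng.uniform(0.05, 0.4, size=(4, 64, 64))
    prediction = reference + rng.normal(0.0, 0.01, size=reference.shape)
    print("RMSE ", rmse(reference, prediction))
    print("PSNR ", psnr(reference, prediction))
    print("QI   ", qi(reference, prediction))
    print("ERGAS", ergas(reference, prediction, 10 / 30))
```

Because the tables report means over many test images, a PSNR computed from a mean RMSE will not exactly match the reported mean PSNR.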

| Measures (↑ higher is better, ↓ lower is better) | Bicubic | SFIM | HPF | BDSD | ATPRK | TCNN | Non-GAN | GAN |
|---|---|---|---|---|---|---|---|---|
| QI (↑) | 0.34 ± 0.10 | 0.65 ± 0.19 | 0.63 ± 0.10 | 0.57 ± 0.15 | 0.85 ± 0.07 | 0.54 ± 0.10 | 0.65 ± 0.14 | 0.37 ± 0.15 |
| PSNR (↑) | 29.71 ± 1.72 | 30.23 ± 1.54 | 30.26 ± 1.53 | 30.43 ± 1.37 | 31.43 ± 1.38 | 29.80 ± 1.83 | 41.50 ± 6.03 | 37.14 ± 5.75 |
| RMSE (↓) | 0.04 ± 0.01 | 0.04 ± 0.01 | 0.03 ± 0.01 | 0.03 ± 0.01 | 0.03 ± 0.01 | 0.04 ± 0.01 | 0.01 ± 0.01 | 0.02 ± 0.02 |
| ERGAS (↓) | 76.97 ± 30.19 | 75.30 ± 30.90 | 74.83 ± 30.80 | 73.63 ± 31.33 | 71.06 ± 31.73 | 74.00 ± 27.24 | 12.03 ± 15.09 | 18.66 ± 14.99 |
| NIQE (↓) | 6.43 ± 0.81 | 4.45 ± 0.71 | 4.44 ± 0.66 | 4.50 ± 1.46 | 3.36 ± 0.75 | 3.79 ± 0.59 | 5.37 ± 0.86 | 3.40 ± 0.70 |
| PIQE (↓) | 87.11 ± 11.85 | 20.89 ± 11.03 | 30.36 ± 11.55 | 50.92 ± 24.69 | 29.85 ± 11.74 | 31.39 ± 11.84 | 64.68 ± 12.42 | 28.62 ± 11.37 |
| BRISQUE (↓) | 52.63 ± 3.46 | 30.45 ± 6.04 | 35.09 ± 6.32 | 42.71 ± 10.35 | 31.28 ± 6.43 | 31.70 ± 5.11 | 44.25 ± 4.37 | 30.27 ± 6.33 |
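Because the measures point in different directions (higher is better for QI and PSNR, lower is better for the other five), picking the best-scoring method per row by eye is error-prone. The sketch below does it mechanically from the mean values in the comparison table above. It ignores the ± spreads, which overlap for several methods, so it is a reading aid rather than a significance test; `best_method` is a hypothetical helper.

```python
# Mean values transcribed from the comparison table above (± spreads ignored).
METHODS = ["Bicubic", "SFIM", "HPF", "BDSD", "ATPRK", "TCNN", "Non-GAN", "GAN"]
MEANS = {
    "QI": [0.34, 0.65, 0.63, 0.57, 0.85, 0.54, 0.65, 0.37],
    "PSNR": [29.71, 30.23, 30.26, 30.43, 31.43, 29.80, 41.50, 37.14],
    "RMSE": [0.04, 0.04, 0.03, 0.03, 0.03, 0.04, 0.01, 0.02],
    "ERGAS": [76.97, 75.30, 74.83, 73.63, 71.06, 74.00, 12.03, 18.66],
    "NIQE": [6.43, 4.45, 4.44, 4.50, 3.36, 3.79, 5.37, 3.40],
    "PIQE": [87.11, 20.89, 30.36, 50.92, 29.85, 31.39, 64.68, 28.62],
    "BRISQUE": [52.63, 30.45, 35.09, 42.71, 31.28, 31.70, 44.25, 30.27],
}
HIGHER_IS_BETTER = {"QI", "PSNR"}


def best_method(metric):
    """Return every method tied for the best mean on `metric`."""
    values = MEANS[metric]
    pick = max if metric in HIGHER_IS_BETTER else min
    best = pick(values)
    return [m for m, v in zip(METHODS, values) if v == best]


for metric in MEANS:
    print(f"{metric}: {', '.join(best_method(metric))}")
```

On these means, Non-GAN ranks first for PSNR, RMSE, and ERGAS, ATPRK for QI and NIQE, SFIM for PIQE, and GAN for BRISQUE.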

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, B.; Li, J.; Jin, Y. Deep Learning for Feature-Level Data Fusion: Higher Resolution Reconstruction of Historical Landsat Archive. Remote Sens. 2021, 13, 167. https://doi.org/10.3390/rs13020167
