Comparison of UAS-Based Structure-from-Motion and LiDAR for Structural Characterization of Short Broadacre Crops

Abstract

1. Introduction

2. Materials and Methods

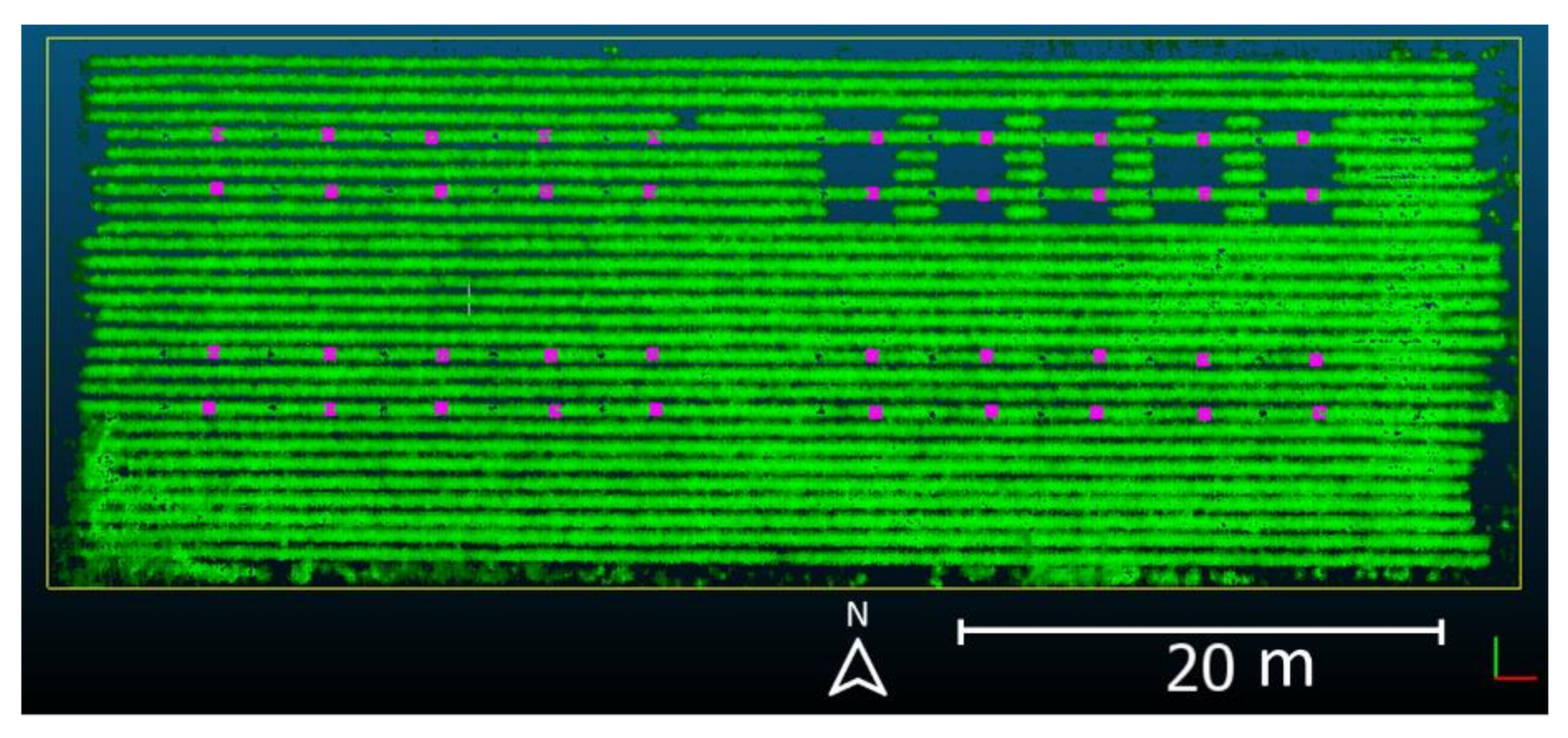

2.1. Study Site and Data Collection

2.2. Processing of the UAS-Based 3D Point Cloud Data

2.2.1. SfM and LiDAR Point Cloud Preprocessing

2.2.2. Derivation of Digital Models

2.2.3. Calculating CH and RW from Vegetation Sample Points

2.3. Comparing Methods and Evaluation Metrics

2.3.1. Preliminary Comparisons

2.3.2. Derived Products Comparison

- Select the “core” points, which are essentially a subsampled version of the original point cloud;

- For each core point, define a normal vector by fitting a plane to its neighbors within a user-defined diameter, termed the “normal scale”;

- Given each core point and its normal vector, define a cylinder from the user-defined projection scale and cylinder depth, oriented along the normal direction. The intersection of each of the two clouds with this cylinder yields two subsets of points; and

- Project both subsets onto the axis of the cylinder, i.e., the normal vector, to obtain two distributions of distances. The distance between the means (or medians) of the two distributions is the local distance.
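The four steps above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the CloudCompare M3C2 plugin or the authors' processing chain: the function and parameter names (`m3c2_distances`, `normal_scale`, `proj_scale`, `max_depth`) are hypothetical; normals are fitted by PCA to the first cloud only and flipped to point towards +z; `proj_scale` is treated as the cylinder diameter and `max_depth` as the total cylinder length centred on the core point; and core points are taken by naive decimation rather than spatial subsampling.

```python
import numpy as np


def estimate_normal(cloud, core, normal_scale):
    """Unit normal of a plane fitted (PCA) to the points of `cloud` inside a
    sphere of diameter `normal_scale` centred on `core`, oriented towards +z.
    Assumption: normals come from the first cloud only."""
    nbrs = cloud[np.linalg.norm(cloud - core, axis=1) <= normal_scale / 2]
    if len(nbrs) < 3:
        return None  # too few neighbours to fit a plane
    _, eigvecs = np.linalg.eigh(np.cov(nbrs.T))  # eigenvalues in ascending order
    normal = eigvecs[:, 0]  # direction of least variance = plane normal
    return normal if normal[2] >= 0 else -normal


def project_in_cylinder(cloud, core, normal, proj_scale, max_depth):
    """Signed positions along `normal` of the points inside a cylinder of
    diameter `proj_scale` and total length `max_depth` centred on `core`
    (hypothetical parameter conventions)."""
    rel = cloud - core
    along = rel @ normal                                   # axial coordinate
    radial = np.linalg.norm(rel - np.outer(along, normal), axis=1)
    inside = (radial <= proj_scale / 2) & (np.abs(along) <= max_depth / 2)
    return along[inside]


def m3c2_distances(cloud1, cloud2, core_points, normal_scale, proj_scale,
                   max_depth, use_median=False):
    """Local distance (cloud2 minus cloud1) at each core point: difference of
    the means (or medians) of the two projected subsets; NaN if undefined."""
    stat = np.median if use_median else np.mean
    dist = np.full(len(core_points), np.nan)
    for k, core in enumerate(core_points):
        normal = estimate_normal(cloud1, core, normal_scale)
        if normal is None:
            continue
        d1 = project_in_cylinder(cloud1, core, normal, proj_scale, max_depth)
        d2 = project_in_cylinder(cloud2, core, normal, proj_scale, max_depth)
        if d1.size and d2.size:
            dist[k] = stat(d2) - stat(d1)
    return dist


# Synthetic example: a flat 1 m x 1 m grid and the same grid raised by 0.1 m
xs = np.linspace(0.0, 1.0, 21)
gx, gy = np.meshgrid(xs, xs)
ground = np.column_stack([gx.ravel(), gy.ravel(), np.zeros(gx.size)])
raised = ground + np.array([0.0, 0.0, 0.1])
core = ground[::50]  # naive decimation standing in for core-point subsampling
d = m3c2_distances(ground, raised, core, normal_scale=0.3, proj_scale=0.2,
                   max_depth=1.0)
print(np.round(d, 3))
```

Every core point in this toy case should report a local distance of about 0.1 m; `use_median=True` gives the median-based variant named in the last step. The neighbour searches are brute force, so for real SfM or LiDAR clouds a spatial index such as `scipy.spatial.cKDTree` would be the natural replacement.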

3. Results

3.1. Preliminary Comparison of the Point Clouds

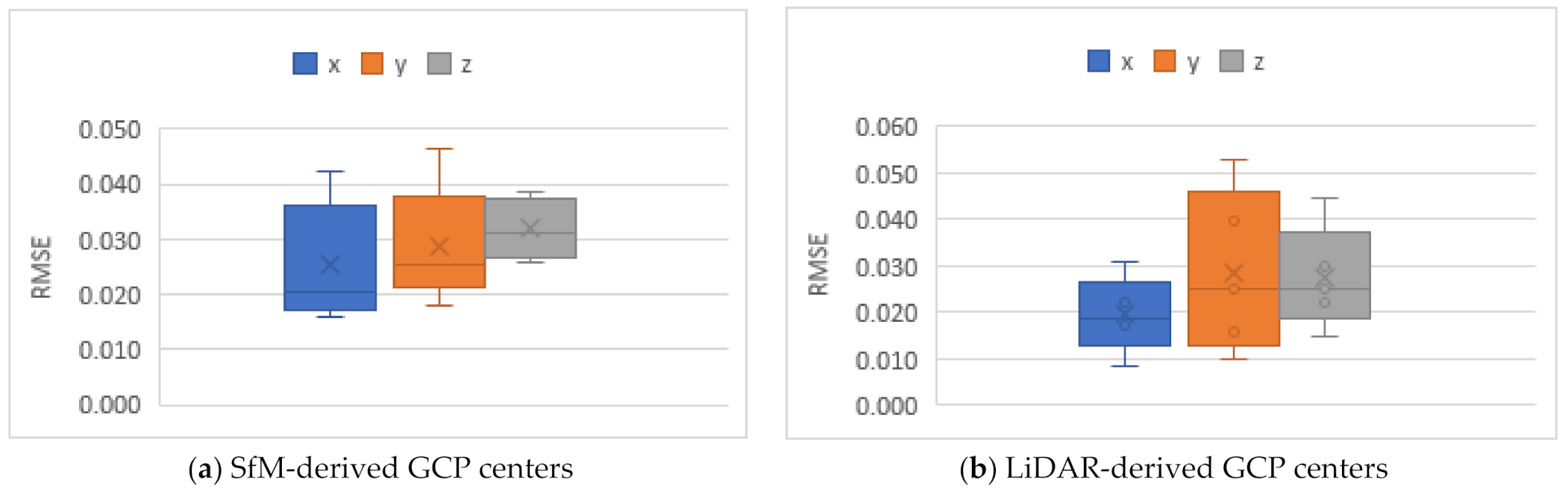

3.1.1. Absolute Accuracy of GCPs

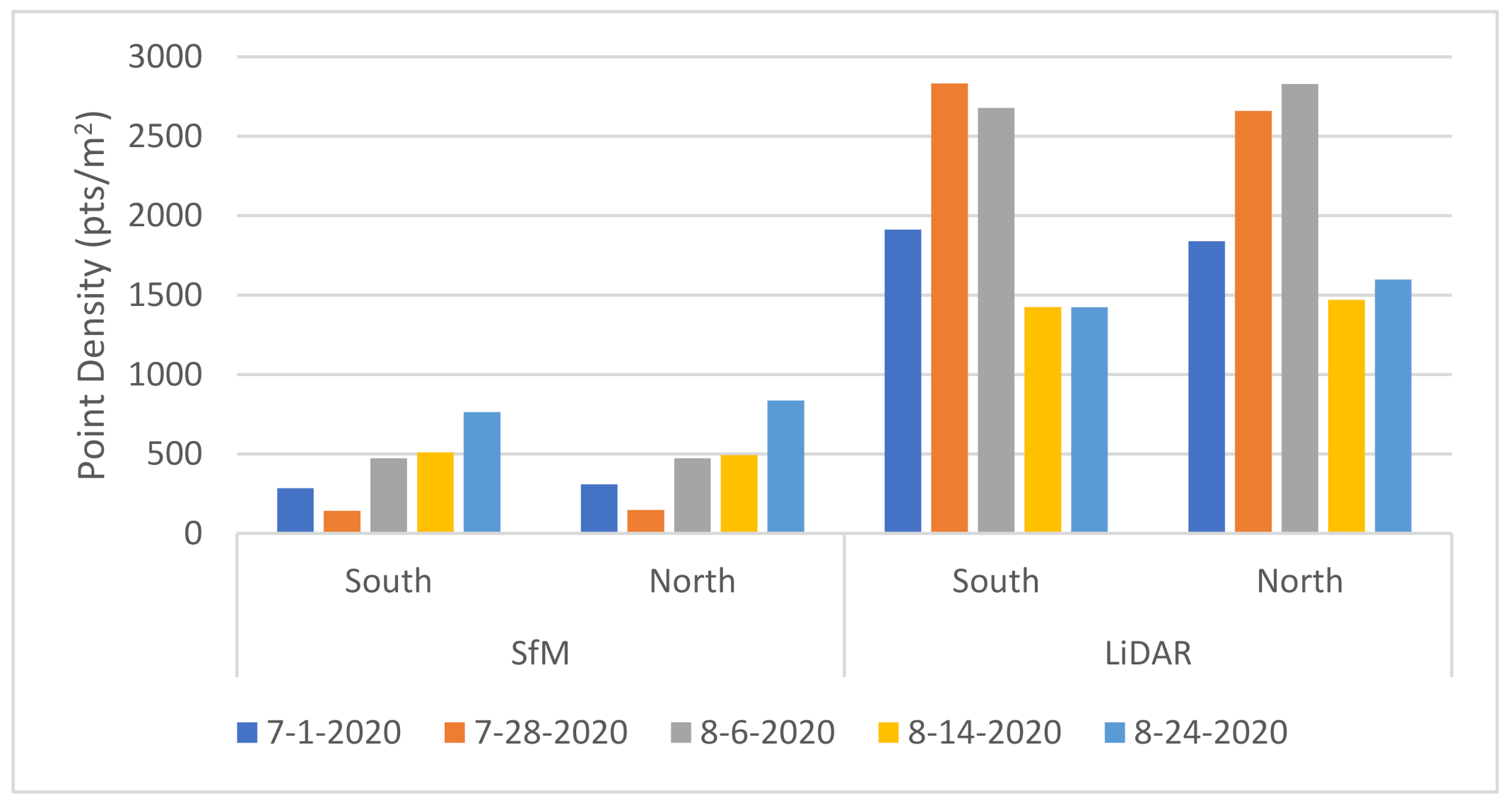

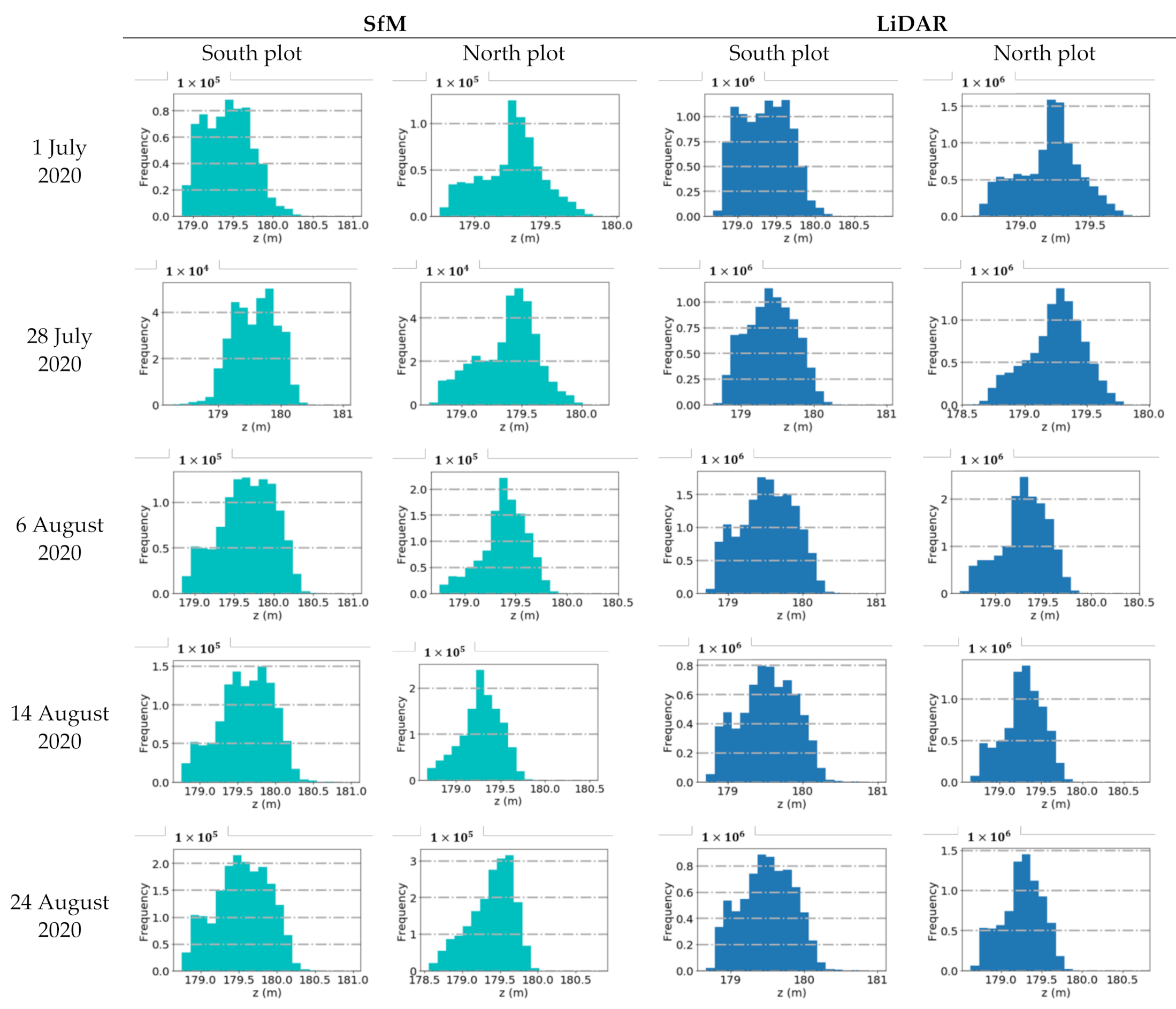

3.1.2. Average Density and Histograms of the z Coordinate

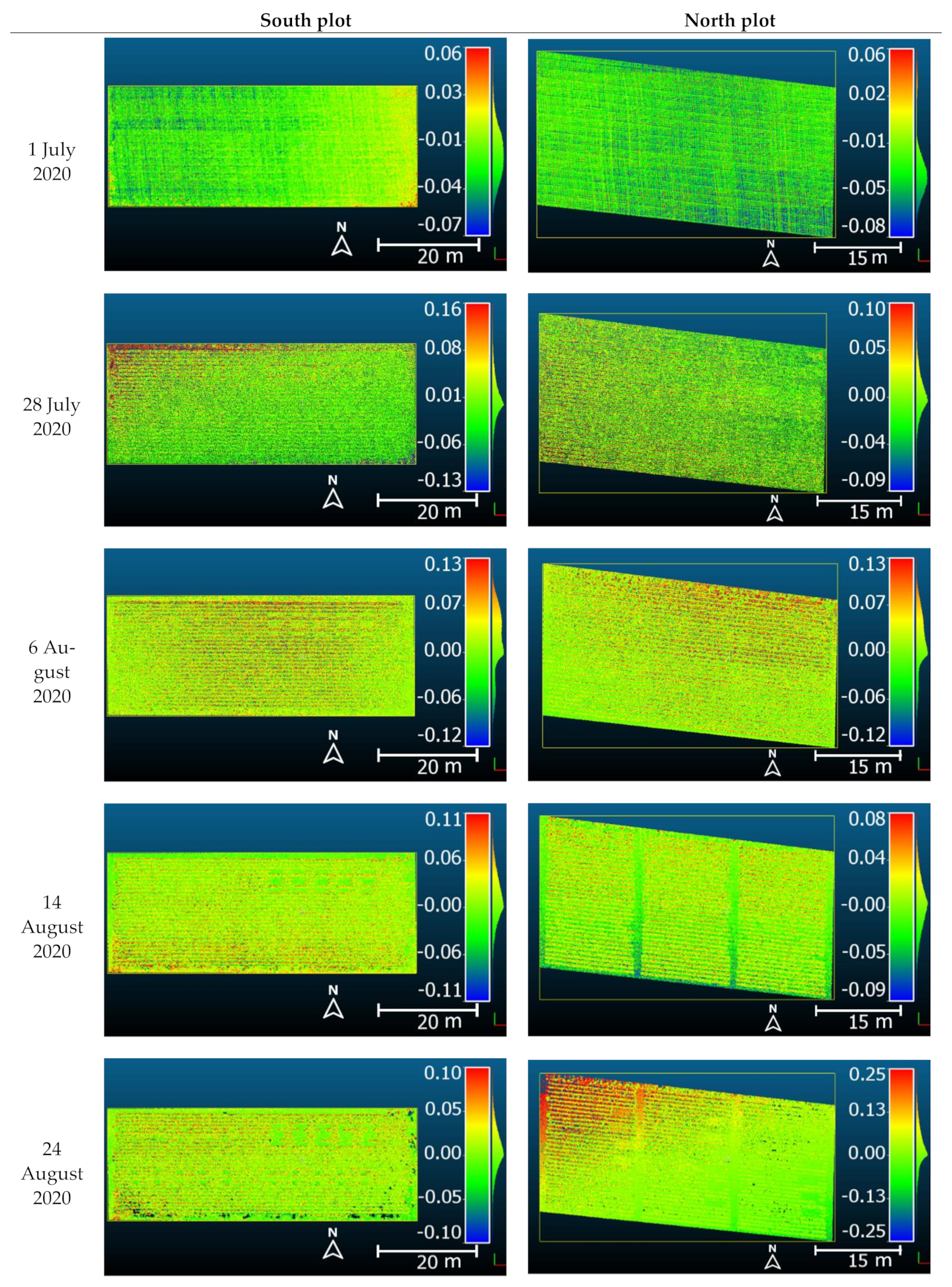

3.2. C2M Distance Map Derived from the Preprocessed Point Clouds

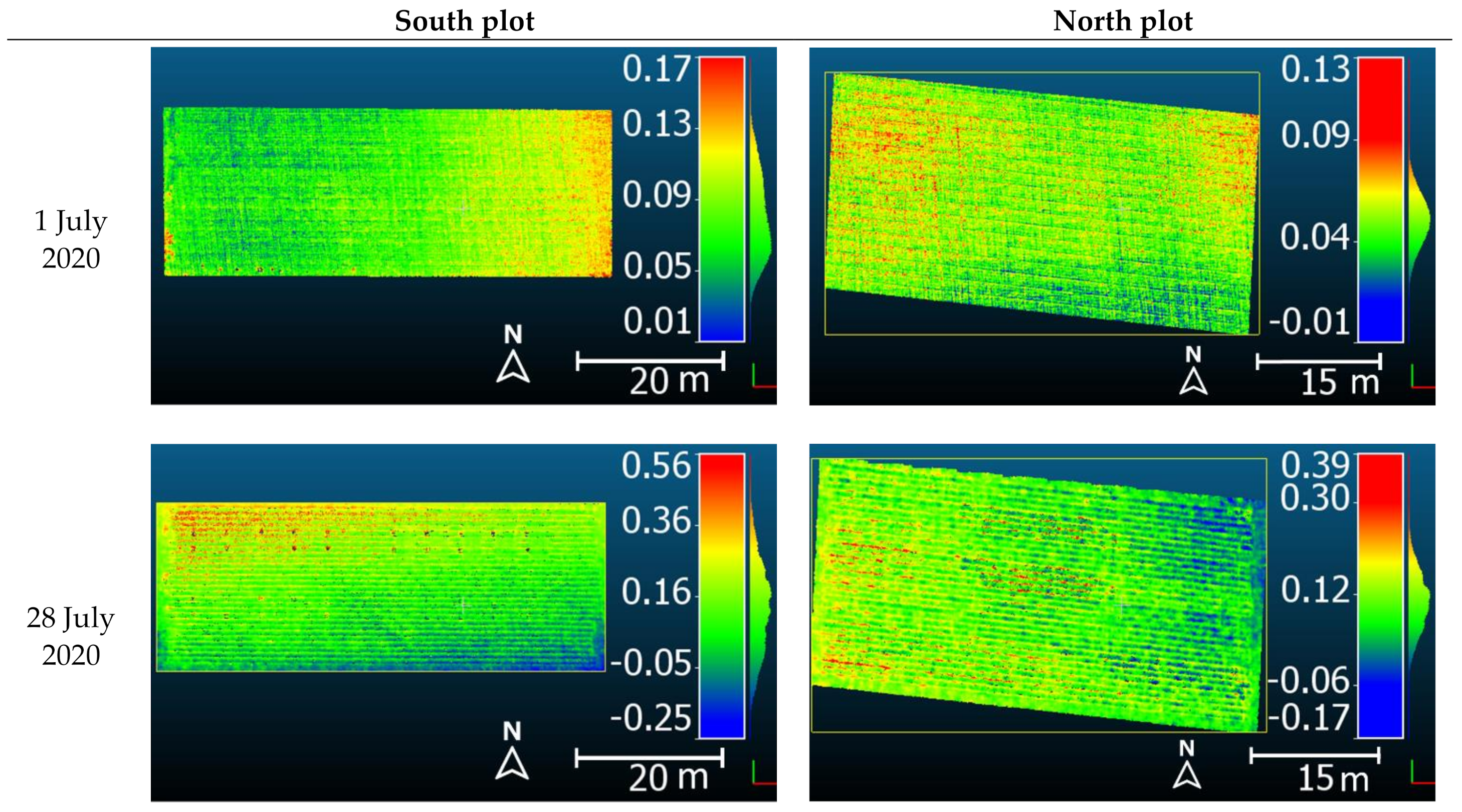

3.3. M3C2 Distance Map Derived from the DEMs and the CHMs

3.4. Comparison of Sampled Cross-Sections in Point Clouds

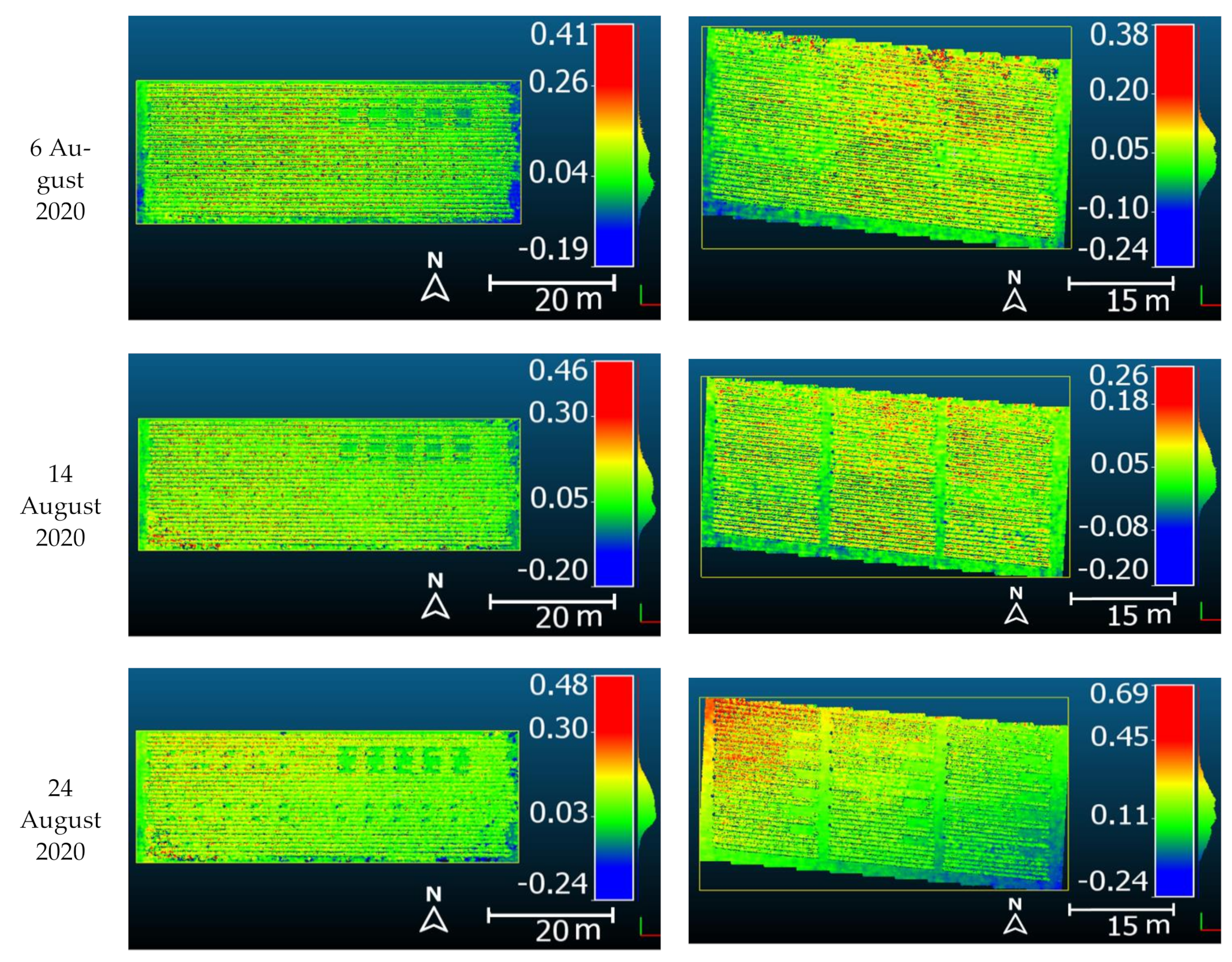

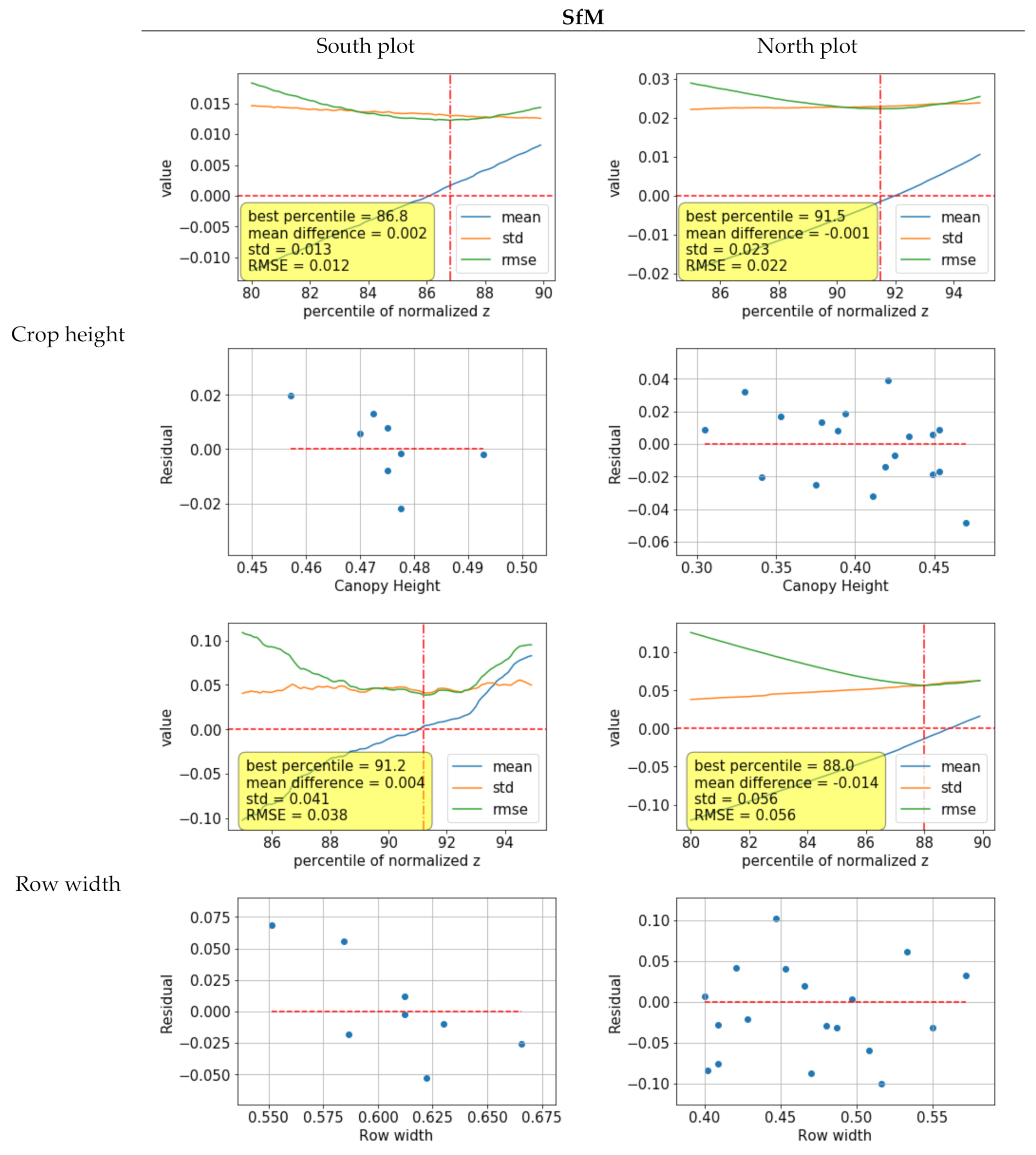

3.5. Comparison of the Sampled CH and RW

4. Discussion

4.1. Qualitative and Quantitative Comparisons of the Two Modalities

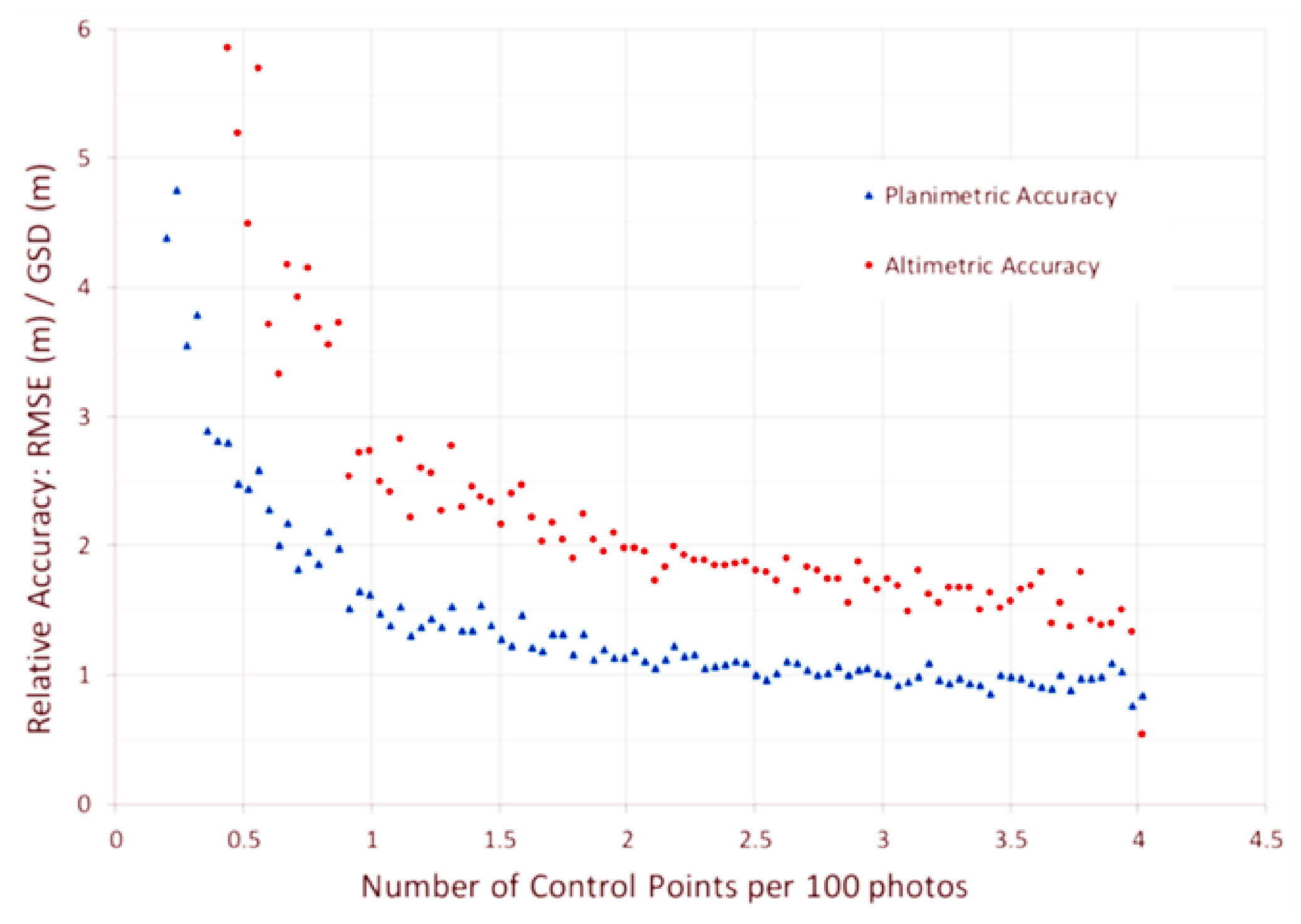

4.2. Importance of the GCPs

4.3. Limitations and Future Research

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Data Set Number | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Date | 1 July 2020 | 28 July 2020 | 6 August 2020 | 14 August 2020 | 24 August 2020 |
| Flight altitude (m) | 25 | 25 | 22 | 25 | 25 |
| Flight speed (m/s) | 3 | 2 | 2 | 2 | 2 |
| Flight line spacing (m) | 6.6 | 5 | 5 | 5 | 5 |
| Ground sample distance (m/pixel) | 0.017 | 0.017 | 0.015 | 0.017 | 0.017 |
| Snap bean growth stage | Bare ground | Budding | Eight days before full bloom | Full bloom; 10 days before harvest | Ready for harvest |
| Number of images for structure-from-motion (SfM) | 671 | 590 | 566 | 606 | 617 |
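The ground sample distance row is consistent with the pinhole relation GSD = H·p/f (flight altitude H, detector pixel pitch p, focal length f), under which GSD scales linearly with altitude for a fixed camera. The short check below takes the 25 m flights as the reference and rescales to each altitude; the function name is hypothetical and no camera specifications are assumed.

```python
# Flight altitudes (m) and reported GSD (m/pixel), transcribed from the table above
altitudes_m = [25, 25, 22, 25, 25]
reported_gsd_m = [0.017, 0.017, 0.015, 0.017, 0.017]


def scaled_gsd(ref_gsd_m, ref_alt_m, alt_m):
    """Rescale a reference GSD to another altitude, assuming the same camera.

    Pinhole model: GSD = H * p / f, so GSD is proportional to altitude H.
    """
    return ref_gsd_m * alt_m / ref_alt_m


# Use the 25 m flights (0.017 m/pixel) as the reference; round like the table
predicted = [round(scaled_gsd(0.017, 25, h), 3) for h in altitudes_m]
print(predicted)
print(predicted == reported_gsd_m)
```

The 22 m flight on 6 August rescales to roughly 0.01496 m/pixel, which rounds to the tabulated 0.015 m/pixel.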

| Date | MD South (m) | MD North (m) | St. Dev of the difference, South (m) | St. Dev of the difference, North (m) | RMSE South (m) | RMSE North (m) |
|---|---|---|---|---|---|---|
| 1 July 2020 | −0.01 | −0.03 | 0.02 | 0.03 | 0.02 | 0.04 |
| 28 July 2020 | 0.01 | 0.01 | 0.04 | 0.03 | 0.04 | 0.03 |
| 6 August 2020 | 0.02 | 0.02 | 0.04 | 0.04 | 0.05 | 0.05 |
| 14 August 2020 | 0.01 | 0 | 0.03 | 0.03 | 0.03 | 0.03 |
| 24 August 2020 | 0.01 | 0.03 | 0.03 | 0.07 | 0.03 | 0.08 |

| Date | MD South (m) | MD North (m) | St. Dev South (m) | St. Dev North (m) | RMSE South (m) | RMSE North (m) |
|---|---|---|---|---|---|---|
| 1 July 2020 | 0.08 | 0.05 | 0.02 | 0.01 | 0.08 | 0.05 |
| 28 July 2020 | 0.12 | 0.10 | 0.13 | 0.06 | 0.18 | 0.12 |
| 6 August 2020 | 0.04 | 0.05 | 0.05 | 0.05 | 0.07 | 0.07 |
| 14 August 2020 | 0.07 | 0.04 | 0.06 | 0.05 | 0.09 | 0.06 |
| 24 August 2020 | 0.07 | 0.14 | 0.07 | 0.11 | 0.10 | 0.17 |
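In the two MD / St. Dev / RMSE tables above, each RMSE should approximately equal the square root of MD² + St. Dev², since mean squared error decomposes into squared bias plus variance (exactly when St. Dev is the population standard deviation, approximately with the n−1 sample form). The check below treats MD as the mean difference (bias), which is an assumption, and allows 0.01 m for the rounding in the source.

```python
import math

# (MD, St. Dev, RMSE) in metres, transcribed from the two tables above,
# South and North columns pooled row by row; the source rounds to 0.01 m.
first_table = [
    (-0.01, 0.02, 0.02), (-0.03, 0.03, 0.04),
    (0.01, 0.04, 0.04), (0.01, 0.03, 0.03),
    (0.02, 0.04, 0.05), (0.02, 0.04, 0.05),
    (0.01, 0.03, 0.03), (0.00, 0.03, 0.03),
    (0.01, 0.03, 0.03), (0.03, 0.07, 0.08),
]
second_table = [
    (0.08, 0.02, 0.08), (0.05, 0.01, 0.05),
    (0.12, 0.13, 0.18), (0.10, 0.06, 0.12),
    (0.04, 0.05, 0.07), (0.05, 0.05, 0.07),
    (0.07, 0.06, 0.09), (0.04, 0.05, 0.06),
    (0.07, 0.07, 0.10), (0.14, 0.11, 0.17),
]


def implied_rmse(md, sd):
    """RMSE implied by bias (MD) and spread (SD): sqrt(MD^2 + SD^2)."""
    return math.hypot(md, sd)


TOLERANCE_M = 0.01  # allowance for the 0.01 m rounding in the source tables
worst = max(abs(implied_rmse(md, sd) - rmse)
            for md, sd, rmse in first_table + second_table)
print(f"largest deviation: {worst:.3f} m")
```

All twenty rows stay within the rounding allowance; the largest gap (about 0.008 m) comes from the North column on 24 August 2020 in the second table, where the inputs carry the most rounding.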

| Variable | Method | Best Percentile South (%) | Best Percentile North (%) | Mean South (m) | Mean North (m) | St. Dev South (m) | St. Dev North (m) | RMSE South (m) | RMSE North (m) | RMSE Average (m) |
|---|---|---|---|---|---|---|---|---|---|---|
| CH | SfM | 86.8 | 91.5 | 0.002 | −0.001 | 0.013 | 0.023 | 0.012 | 0.022 | 0.017 |
| CH | LiDAR | 98.4 | 99.2 | −0.001 | −0.003 | 0.014 | 0.03 | 0.013 | 0.029 | 0.021 |
| RW | SfM | 91.2 | 88 | 0.004 | −0.014 | 0.041 | 0.056 | 0.038 | 0.056 | 0.047 |
| RW | LiDAR | 95.7 | 95.7 | −0.001 | −0.007 | 0.062 | 0.051 | 0.058 | 0.05 | 0.054 |
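The last column of the CH/RW table sits under the RMSE group and matches (South + North)/2 of the RMSE values in every row, so it appears to be the mean RMSE over the two sites. The check below confirms this arithmetic on the transcribed values and picks the modality with the lower mean RMSE for each trait; it is a reading aid only and ignores the differing St. Dev and best-percentile values.

```python
# RMSE (m) transcribed from the CH/RW table above: (South, North, Average)
rmse = {
    ("CH", "SfM"): (0.012, 0.022, 0.017),
    ("CH", "LiDAR"): (0.013, 0.029, 0.021),
    ("RW", "SfM"): (0.038, 0.056, 0.047),
    ("RW", "LiDAR"): (0.058, 0.050, 0.054),
}

# Does each Average entry equal the mean of the South and North RMSE?
averages_match = all(
    abs((south + north) / 2 - avg) < 1e-9 for south, north, avg in rmse.values()
)

# Modality with the lower average RMSE for each trait
best = {
    trait: min(("SfM", "LiDAR"), key=lambda method: rmse[(trait, method)][2])
    for trait in ("CH", "RW")
}
print(averages_match, best)
```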

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, F.; Hassanzadeh, A.; Kikkert, J.; Pethybridge, S.J.; van Aardt, J. Comparison of UAS-Based Structure-from-Motion and LiDAR for Structural Characterization of Short Broadacre Crops. Remote Sens. 2021, 13, 3975. https://doi.org/10.3390/rs13193975


Chicago/Turabian style: Zhang, Fei, Amirhossein Hassanzadeh, Julie Kikkert, Sarah Jane Pethybridge, and Jan van Aardt. 2021. "Comparison of UAS-Based Structure-from-Motion and LiDAR for Structural Characterization of Short Broadacre Crops" Remote Sensing 13, no. 19: 3975. https://doi.org/10.3390/rs13193975
