Extracting Canopy Closure by the CHM-Based and SHP-Based Methods with a Hemispherical FOV from UAV-LiDAR Data in a Poplar Plantation

Abstract

1. Introduction

2. Materials and Methods

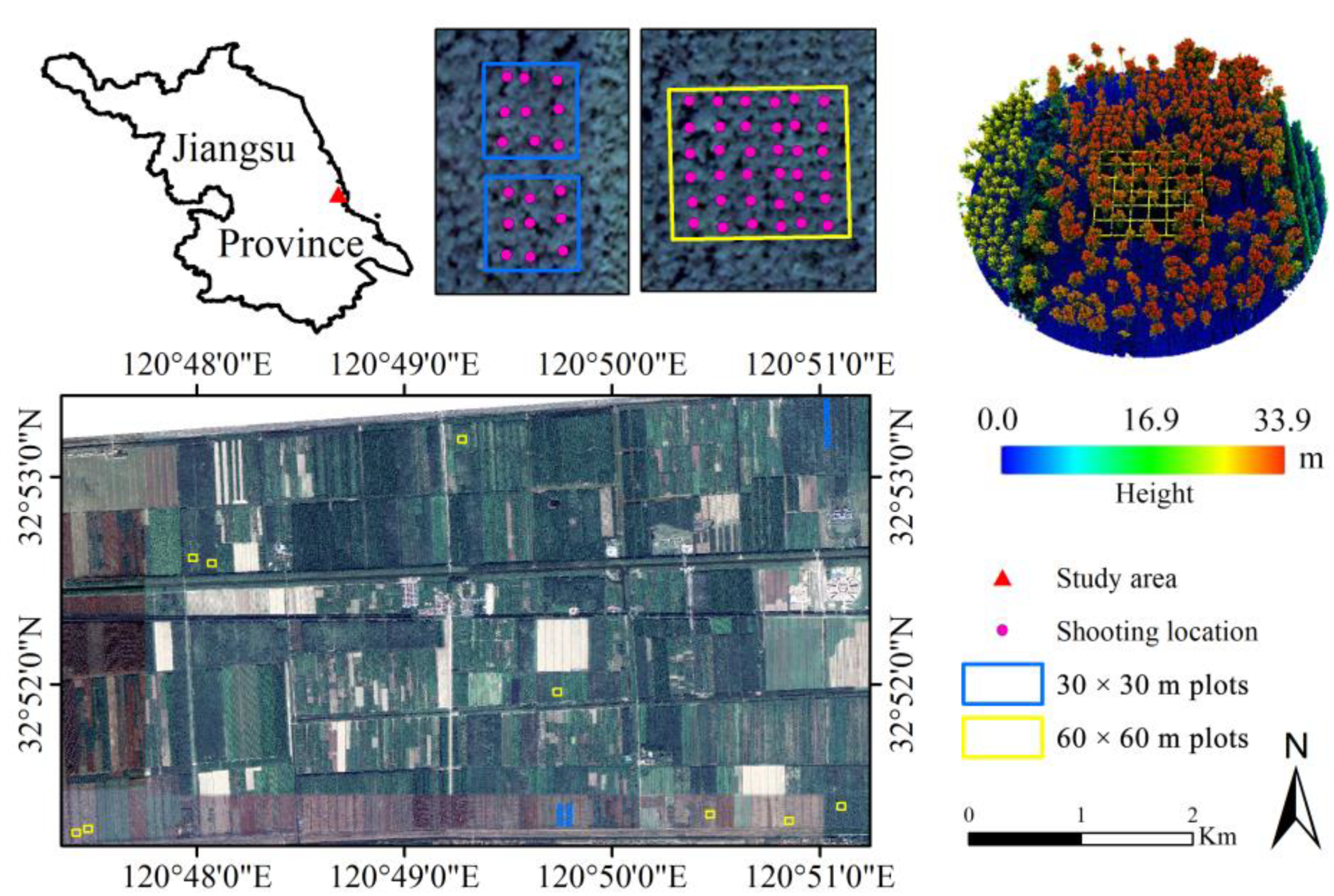

2.1. Study Area

2.2. Field Design and Field Data Collection

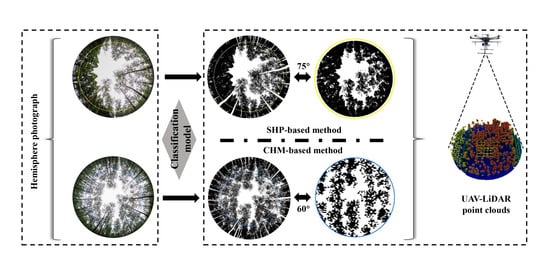

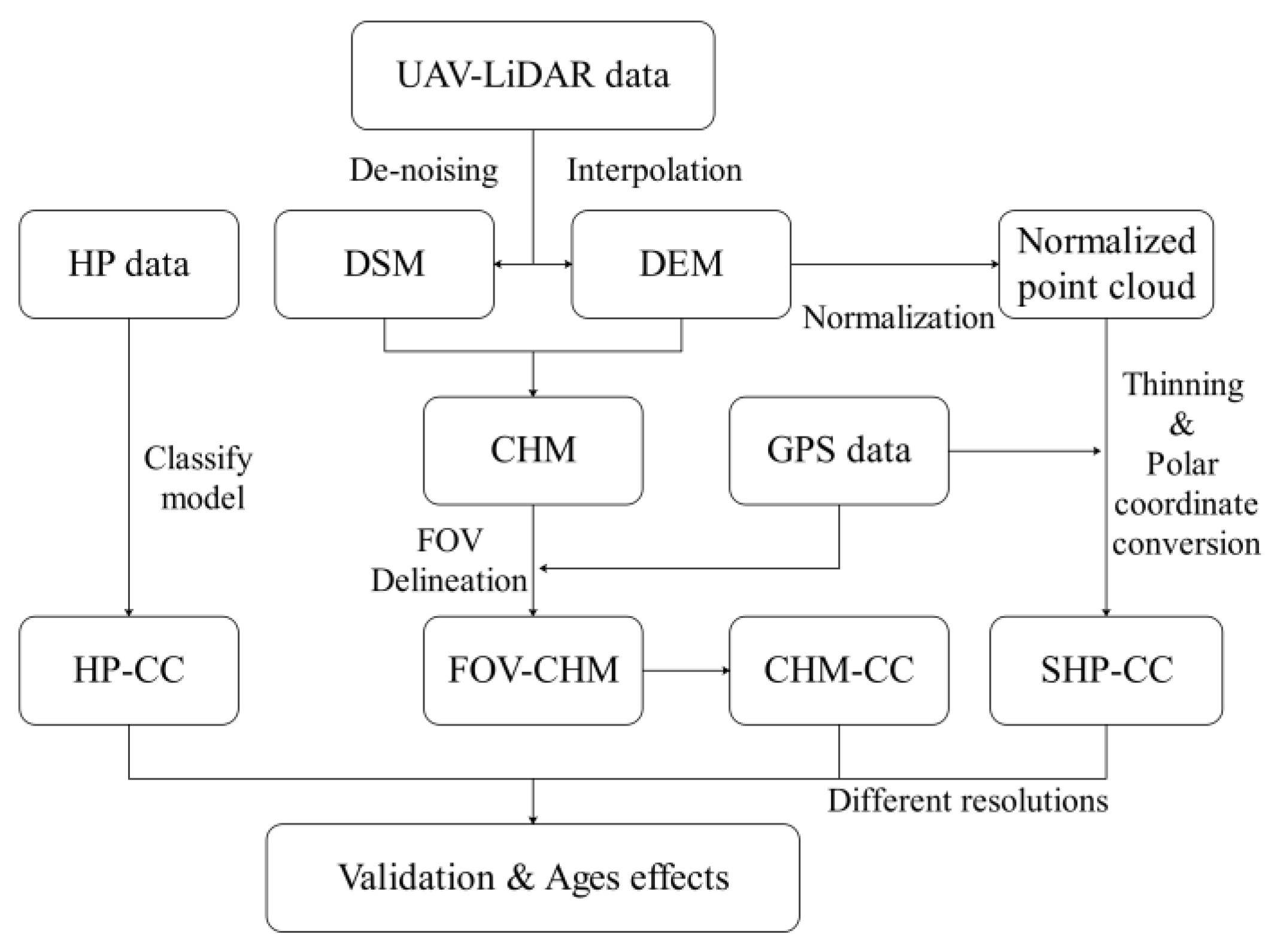

2.3. Methodology

2.3.1. Preprocessing of UAV-LiDAR Point Cloud Data

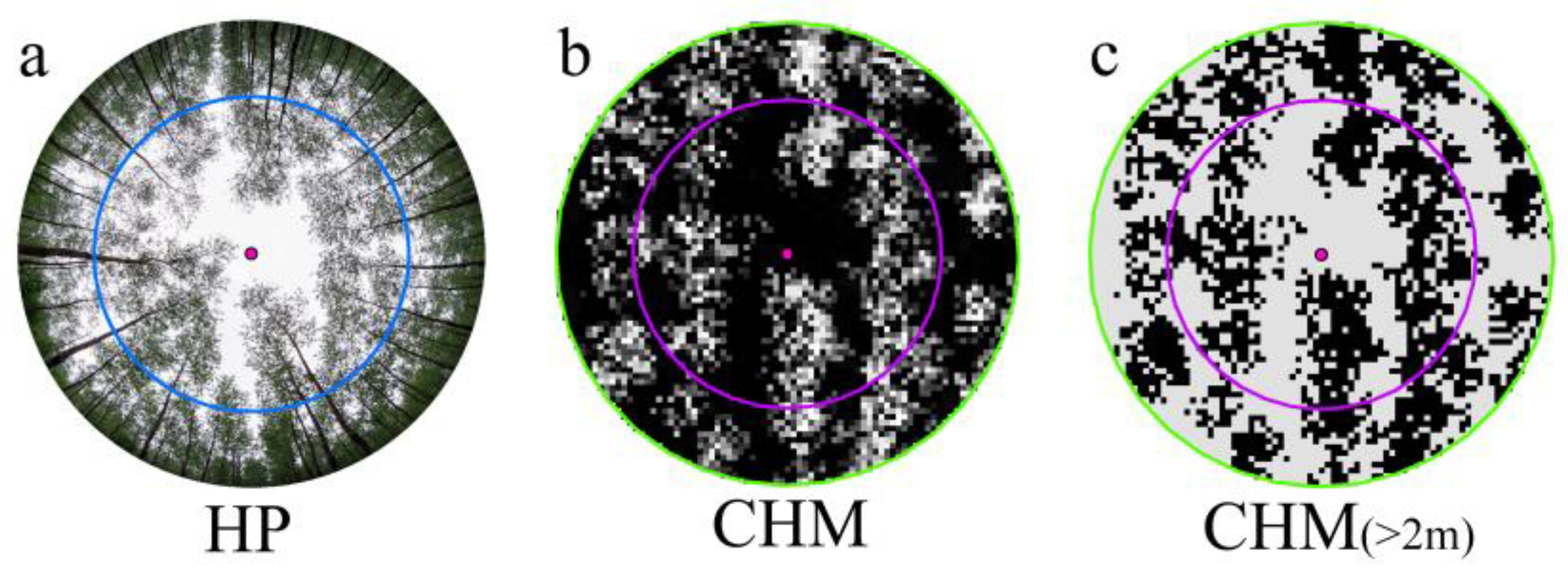

2.3.2. Preprocessing of HP and FOV Delineation

2.3.3. CC Extraction by the CHM-Based Method with a Hemispherical FOV

2.3.4. Estimation of CC with a SHP-Based Method

2.3.5. A New Semi-Automated Classification Method for CC Extraction from HP

2.3.6. Validation and Accuracy Assessment of CC Extraction from UAV-LiDAR Point Cloud Data

3. Results

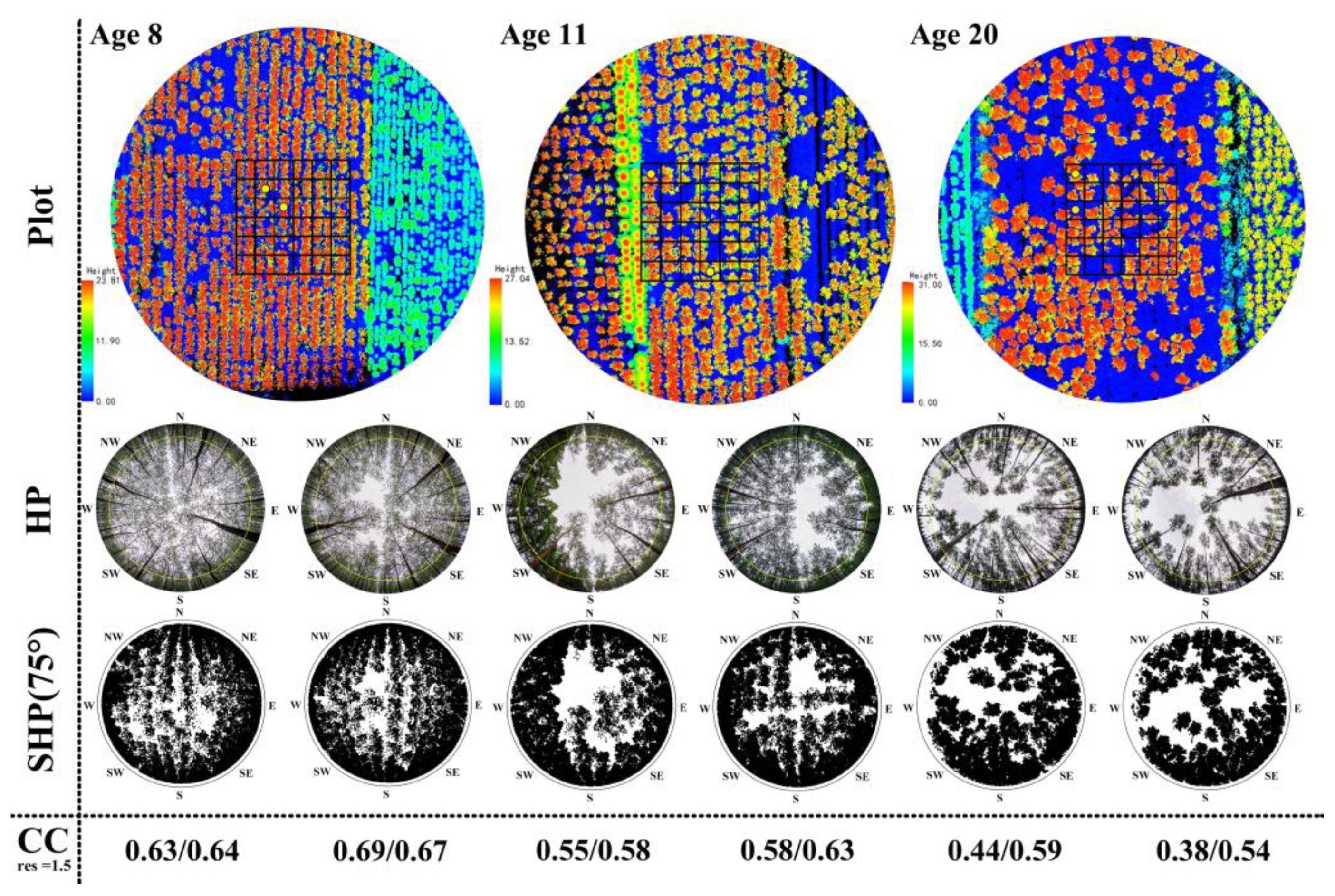

3.1. The Extracted CC from UAV-LiDAR Data in Different Poplar Plots

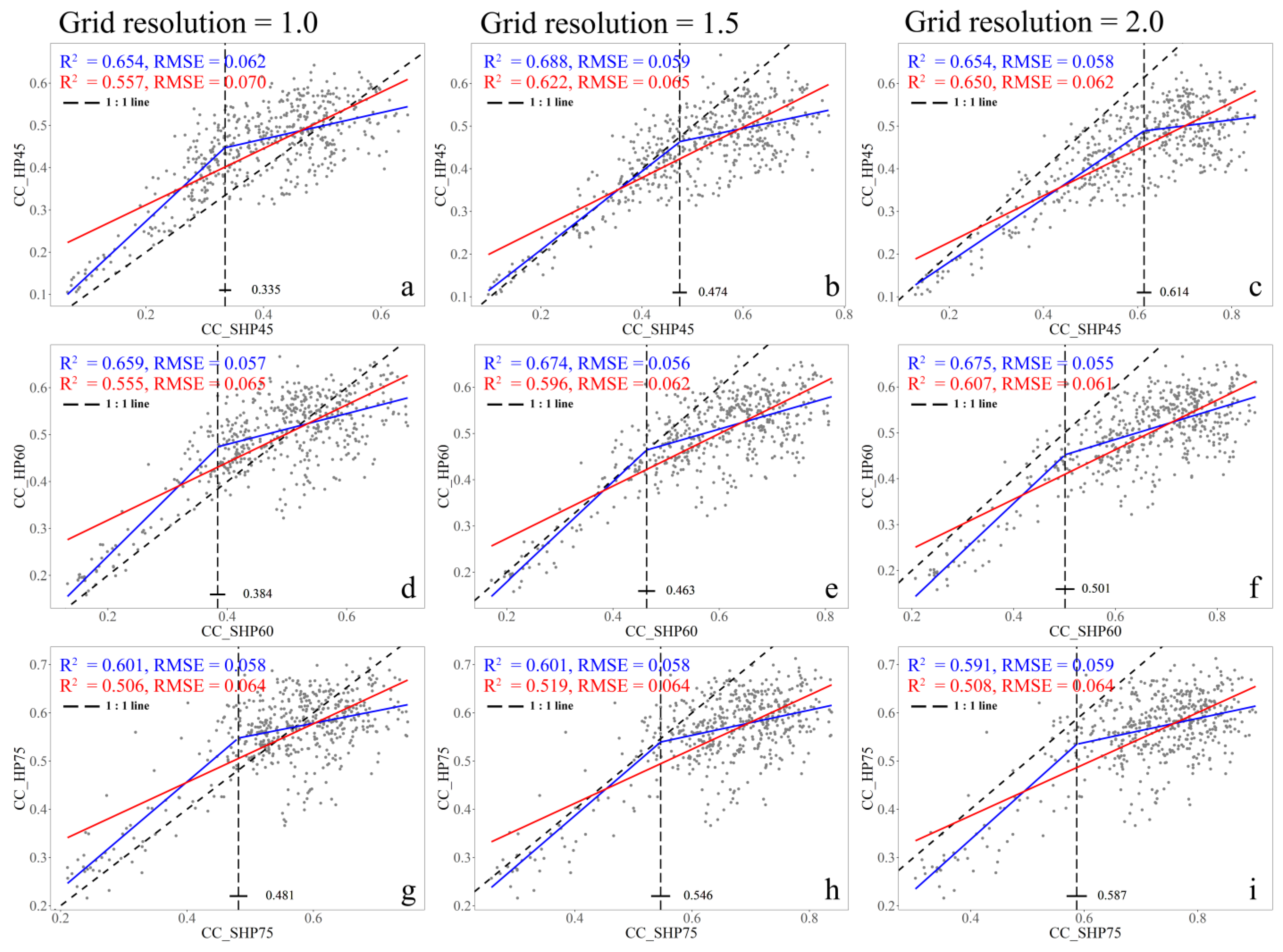

3.2. Validation of CC Extraction by the CHM-Based Method with the Extraction Results from HP

3.3. Validation of CC Extraction by the SHP-Based Method with the Extraction Results from HP

4. Discussion

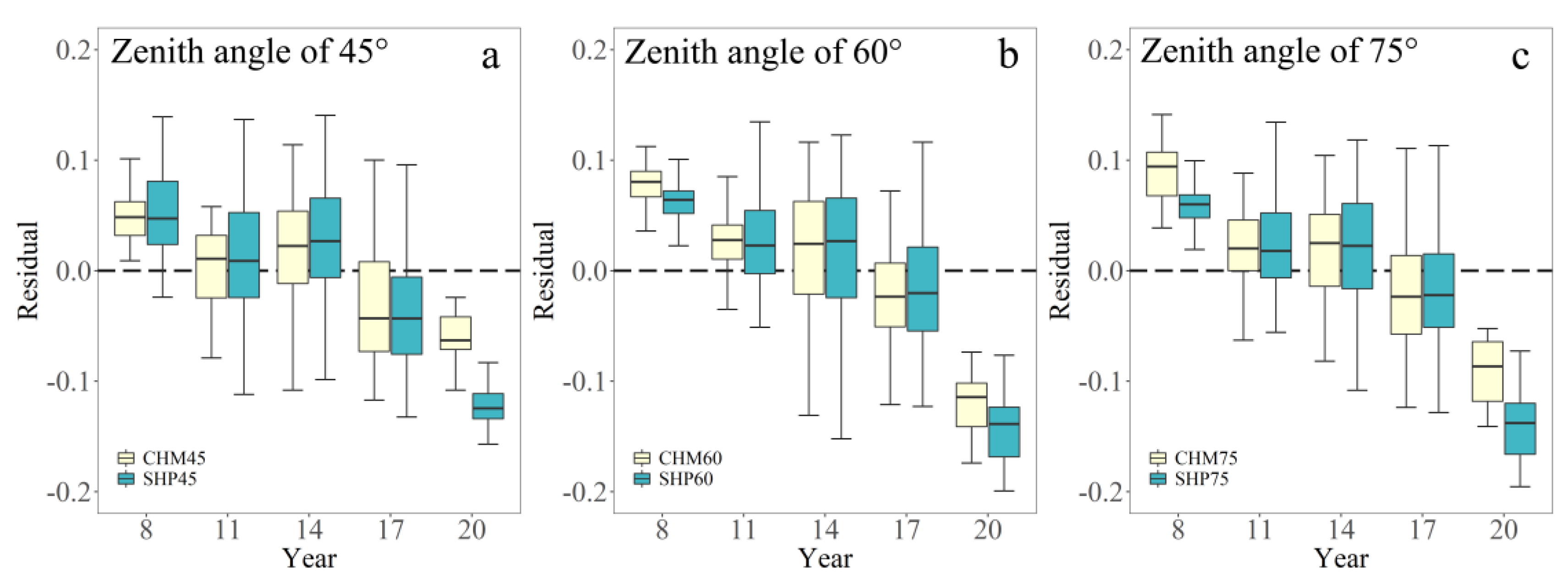

4.1. The Advantages and Disadvantages of CC Extraction from UAV-LiDAR Data by CHM-Based and SHP-Based Methods with a Hemispherical FOV

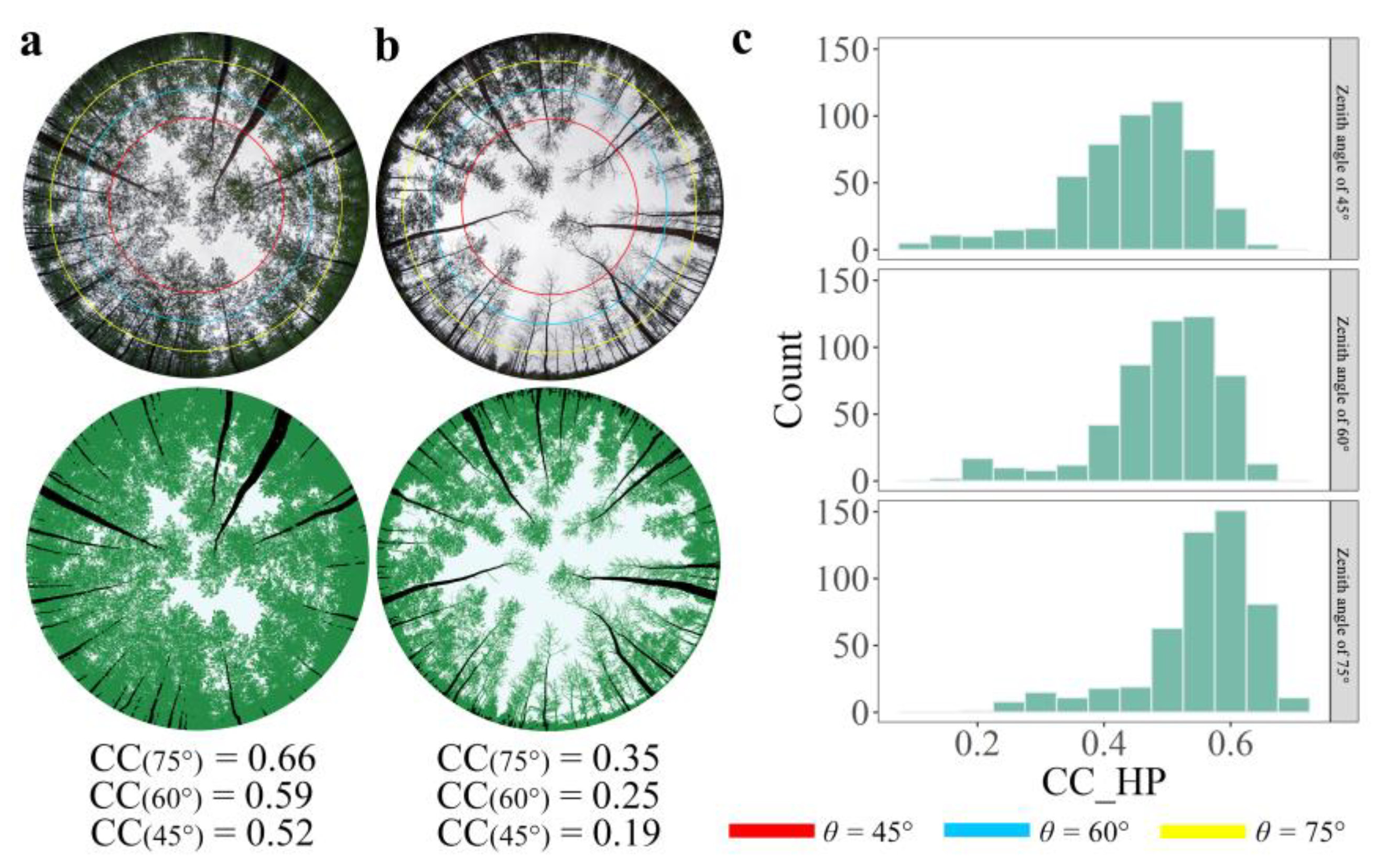

4.2. The Accuracy of the Validation Data (CC Extraction from HP)

4.3. Stand Age Effects on the Model Accuracy for CC Extraction

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Stand Age (yrs) | Average Height (m) | Average Branch Height (m) | Planting Spacing (m) | Point Cloud Density (pts·m⁻²) | Plot Size (m) |
|---|---|---|---|---|---|
| 8 | 21.3 | 9.0 | 4 × 6 | 76 | 60 × 60 |
| 11 | 23.7 | 10.0 | 4 × 8 | 77 | 60 × 60 |
| 12 | 24.8 | 12.5 | 3 × 5 | 53 | 30 × 30 |
| 14 | 24.4 | 12.0 | 3 × 8 | 72 | 60 × 60 |
| 16 | 28.8 | 13.5 | 6 × 5 | 77 | 30 × 30 |
| 17 | 28.5 | 14.5 | 6 × 5 | 84 | 60 × 60 |
| 20 | 32.2 | 17.0 | 5 × 6 | 140 | 60 × 60 |

| CHM Pixel Size | R² (Zenith Angle 45°) | RMSE (Zenith Angle 45°) | R² (Zenith Angle 60°) | RMSE (Zenith Angle 60°) | R² (Zenith Angle 75°) | RMSE (Zenith Angle 75°) |
|---|---|---|---|---|---|---|
| 0.5 m | 0.751 | 0.053 | 0.707 | 0.053 | 0.490 | 0.066 |
| 2.0 m | 0.706 | 0.057 | 0.679 | 0.055 | 0.467 | 0.067 |
| 5.0 m | 0.634 | 0.064 | 0.670 | 0.056 | 0.445 | 0.069 |

|  | R² (Zenith Angle 45°) | RMSE (Zenith Angle 45°) | R² (Zenith Angle 60°) | RMSE (Zenith Angle 60°) | R² (Zenith Angle 75°) | RMSE (Zenith Angle 75°) |
|---|---|---|---|---|---|---|
| 2 | 0.751 | 0.053 | 0.707 | 0.053 | 0.490 | 0.066 |
| 3 | 0.707 | 0.057 | 0.679 | 0.055 | 0.589 | 0.059 |

| FOV | r | p | F |
|---|---|---|---|
| 0–45° | 0.951 | 0.983 | 0.967 |
| 0–60° | 0.896 | 0.984 | 0.938 |
| 0–75° | 0.901 | 0.986 | 0.942 |
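The r, p, and F columns in the table above are numerically consistent with F being the harmonic mean of r and p. The sketch below checks this under the assumption that r is recall, p is precision, and F is the F-score; that reading is inferred from the tabulated values, not stated in the table itself, and the function name `f_score` is illustrative.

```python
def f_score(recall: float, precision: float) -> float:
    """Harmonic mean of recall and precision (assumed definition of F)."""
    if recall + precision == 0:
        return 0.0  # avoid division by zero when both inputs are zero
    return 2 * recall * precision / (recall + precision)


# (r, p) pairs copied from the table, keyed by field of view.
rows = {
    "0-45": (0.951, 0.983),
    "0-60": (0.896, 0.984),
    "0-75": (0.901, 0.986),
}

for fov, (r, p) in rows.items():
    # Rounded to three decimals for comparison with the F column.
    print(f"FOV {fov} deg: F = {f_score(r, p):.3f}")
```

Rounded to three decimals, the computed values can be compared directly against the F column for each FOV.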

| Poplar Plantations | HP (45°–60°) | HP (60°–75°) | HP (Mean) | SHP (45°–60°) | SHP (60°–75°) | SHP (Mean) | CHM (45°–60°) | CHM (60°–75°) | CHM (Mean) |
|---|---|---|---|---|---|---|---|---|---|
| 4 | 0.095 | 0.127 | 0.111 | 0.195 | 0.117 | 0.156 | −0.003 | 0.045 | 0.021 |
| 7 | 0.161 | 0.175 | 0.168 | 0.236 | 0.181 | 0.209 | −0.102 | −0.019 | −0.060 |
| 10 | 0.115 | 0.089 | 0.102 | 0.155 | 0.095 | 0.125 | −0.003 | 0.008 | 0.003 |
| 13 | 0.121 | 0.100 | 0.111 | 0.111 | 0.053 | 0.082 | 0.003 | 0.003 | 0.003 |
| 16 | 0.110 | 0.098 | 0.104 | 0.125 | 0.084 | 0.104 | −0.013 | −0.016 | −0.015 |
| all years | 0.121 | 0.118 | 0.119 | 0.164 | 0.106 | 0.135 | −0.024 | 0.004 | −0.010 |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pu, Y.; Xu, D.; Wang, H.; An, D.; Xu, X. Extracting Canopy Closure by the CHM-Based and SHP-Based Methods with a Hemispherical FOV from UAV-LiDAR Data in a Poplar Plantation. Remote Sens. 2021, 13, 3837. https://doi.org/10.3390/rs13193837
