License Plate Image Reconstruction Based on Generative Adversarial Networks

Abstract

1. Introduction

Related Work

2. GAN

2.1. GAN Principle
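For reference, the adversarial game introduced by Goodfellow et al. (listed in the references) is usually written as the value function below. Here D(x) is the discriminator's estimated probability that x is a real sample, G maps a noise vector z drawn from the prior p_z to a synthetic sample, and p_data is the distribution of real images. D is trained to maximize V and G to minimize it. For a fixed G the optimal discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)), so the global optimum of the game is reached when p_g = p_data and D outputs 1/2 everywhere. In super-resolution GANs such as SRGAN, G receives a low-resolution image instead of z, and the objective optimized in this paper may combine this adversarial term with other losses.

```latex
\min_{G}\max_{D} V(D,G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right]
```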

2.2. Reasons for Using GAN

3. Network Structure

3.1. Generative Model

3.1.1. Residual Dense Network
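A minimal NumPy sketch of the residual dense block (RDB) described by Zhang et al. (listed in the references): every layer receives the concatenation of the block input and all earlier layer outputs (dense connections), a projection fuses the concatenated features back to the input width (local feature fusion), and the block input is added to the result (local residual learning). Assumptions: per-pixel 1×1 projections stand in for the 3×3 convolutions of the original RDB, and the names `residual_dense_block` and `init_params`, the growth rate and the layer count are hypothetical. The configuration used in this paper's generator may differ.

```python
import numpy as np


def conv1x1(x, weight, bias):
    """Per-pixel linear map (C_in, H, W) -> (C_out, H, W); stand-in for a learned 3x3 conv."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]


def relu(x):
    return np.maximum(x, 0.0)


def residual_dense_block(x, params):
    """Dense connections, local feature fusion and local residual learning (RDB-style)."""
    features = [x]
    for weight, bias in params["layers"]:
        # Each layer sees the block input concatenated with every earlier layer output.
        stacked = np.concatenate(features, axis=0)
        features.append(relu(conv1x1(stacked, weight, bias)))
    # Local feature fusion: project all concatenated features back to the input width.
    fused = conv1x1(np.concatenate(features, axis=0), *params["fusion"])
    # Local residual learning: add the block input back.
    return x + fused


def init_params(c0, growth, n_layers, rng):
    """Hypothetical random initialisation; c0 = input channels, growth = channels added per layer."""
    layers = []
    for i in range(n_layers):
        c_in = c0 + i * growth
        layers.append((0.1 * rng.standard_normal((growth, c_in)), np.zeros(growth)))
    c_total = c0 + n_layers * growth
    fusion = (0.1 * rng.standard_normal((c0, c_total)), np.zeros(c0))
    return {"layers": layers, "fusion": fusion}


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((8, 6, 10))  # 8 feature maps of size 6x10
    params = init_params(c0=8, growth=4, n_layers=3, rng=rng)
    y = residual_dense_block(x, params)
    print(y.shape)  # (8, 6, 10)
```

Because the fused output is added to the input, the block always preserves the input shape, which is what allows many such blocks to be stacked in a generator.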

3.1.2. Progressive Upsampling
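Progressive upsampling enlarges the image in several smaller steps (for example, two ×2 stages for a ×4 factor) rather than in a single jump. A minimal sketch, assuming each stage uses the sub-pixel convolution (pixel shuffle) of Shi et al., which also underlies the ESPCN baseline in the comparison tables; the upsampling operator actually used by this generator may differ. `pixel_shuffle`, `upsample_x2` and `progressive_x4` are hypothetical names, and a random 1×1 channel projection stands in for each stage's learned convolution.

```python
import numpy as np


def pixel_shuffle(x, r):
    """Depth-to-space rearrangement (C*r*r, H, W) -> (C, H*r, W*r)."""
    c, h, w = x.shape
    if c % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    oc = c // (r * r)
    # Input channel oc*r*r + i*r + j becomes output pixel (h*r + i, w*r + j),
    # the same ordering as torch.nn.PixelShuffle.
    return x.reshape(oc, r, r, h, w).transpose(0, 3, 1, 4, 2).reshape(oc, h * r, w * r)


def upsample_x2(x, weight):
    """One x2 stage: expand channels 4x with a 1x1 projection, then pixel-shuffle."""
    expanded = np.einsum("oc,chw->ohw", weight, x)  # (4C, H, W)
    return pixel_shuffle(expanded, 2)               # (C, 2H, 2W)


def progressive_x4(x, rng):
    """x4 upsampling as two successive x2 stages (hypothetical random weights)."""
    c = x.shape[0]
    for _ in range(2):
        weight = rng.standard_normal((4 * c, c))
        x = upsample_x2(x, weight)
    return x


if __name__ == "__main__":
    print(pixel_shuffle(np.arange(4).reshape(4, 1, 1), 2).tolist())  # [[[0, 1], [2, 3]]]
    lr = np.ones((3, 8, 16))  # hypothetical 3-channel 8x16 low-resolution plate crop
    print(progressive_x4(lr, np.random.default_rng(0)).shape)  # (3, 32, 64)
```

Splitting ×4 into two ×2 stages lets each stage work on a moderately enlarged feature map, instead of predicting 16 output pixels per input pixel at once.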

3.2. Discriminative Model

3.3. Loss Function

4. Experimental Results

4.1. Experiment

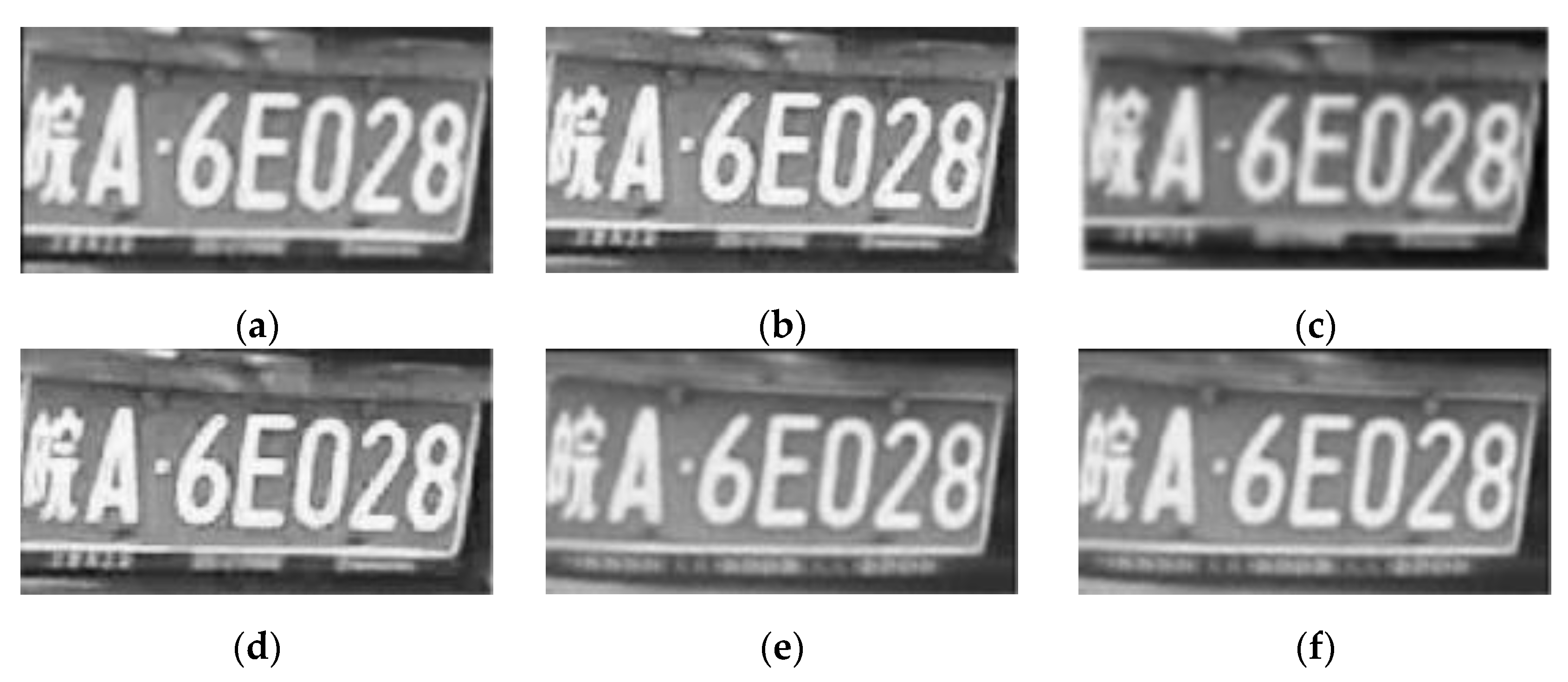

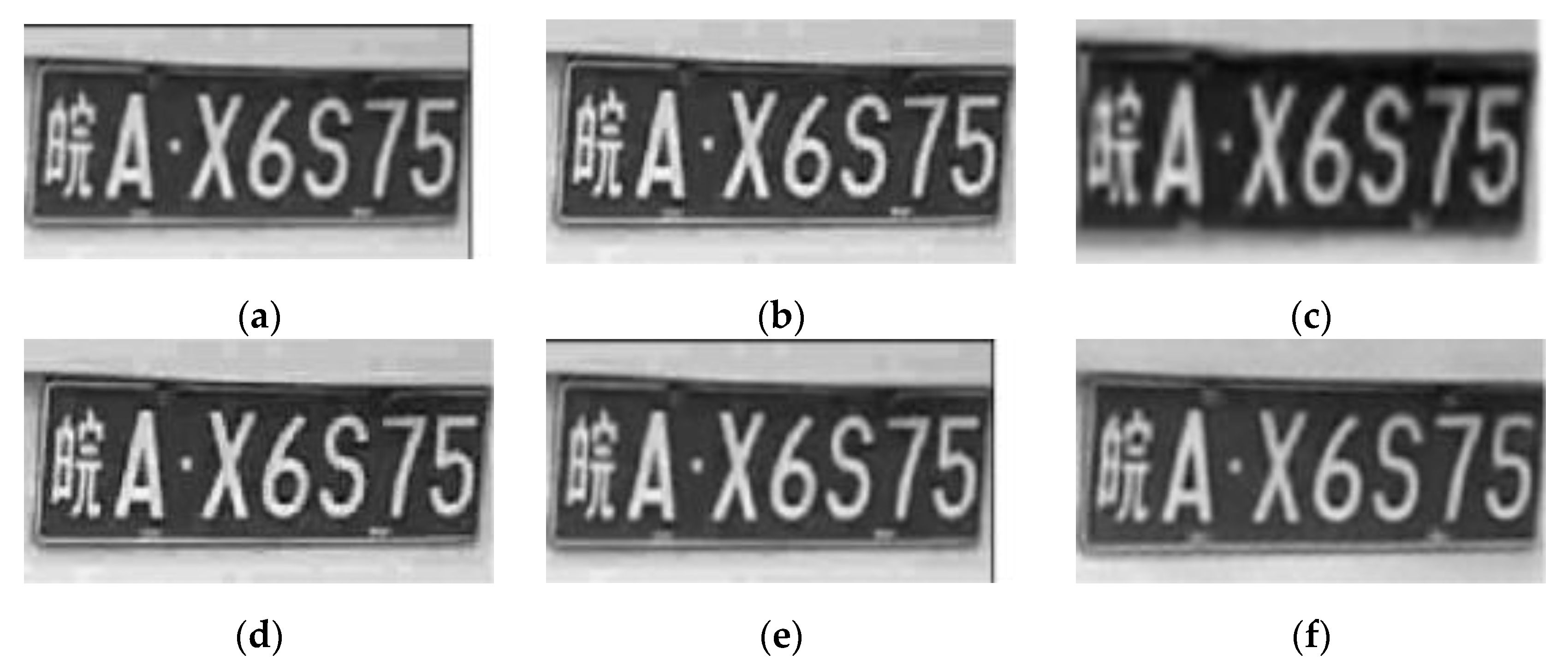

4.2. Experimental Result

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhou, F.; Yang, W.; Liao, Q. Interpolation-based image super-resolution using multisurface fitting. IEEE Trans. Image Process. 2012, 21, 3312–3318.

- Lin, Z.; Shum, H.Y. Fundamental limits of reconstruction-based superresolution algorithms under local translation. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 83–97.

- Lian, Q.S.; Zhang, W. Image super-resolution algorithms based on sparse representation of classified image patches. Dianzi Xuebao 2012, 40, 920–925.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1646–1654.

- Kim, J.; Lee, J.K.; Lee, K.M. Deeply-recursive convolutional network for image super-resolution. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1637–1645.

- Zhang, Y.L.; Tian, Y.P.; Kong, Y.B.; Zhong, B.; Fu, Y. Residual dense network for image super-resolution. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 43, 2472–2481.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z. Photo-realistic single image super-resolution using a generative adversarial network. arXiv e-prints 2016, 11, 1–14.

- Fu, K.; Peng, J.; Zhang, H.; Wang, X.; Jiang, F. Image super-resolution based on generative adversarial networks: A brief review. Comput. Mater. Contin. 2020, 64, 1977–1997.

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Loy, C.C. ESRGAN: Enhanced super-resolution generative adversarial networks. In Proceedings of the 2018 European Conference on Computer Vision Workshops, Munich, Germany, 8–14 September 2018; pp. 63–79.

- Chen, Y.; Liu, L.; Phonevilay, V.; Gu, K.; Xia, R.; Xie, J.; Zhang, Q.; Yang, K. Image super-resolution reconstruction based on feature map attention mechanism. Appl. Intell. 2021, 51, 4367–4380.

- Guo, L.L.; Woźniak, M. An image super-resolution reconstruction method with single frame character based on wavelet neural network in internet of things. Mob. Netw. Appl. 2021, 26, 390–403.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the 2014 Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Ratliff, L.J.; Burden, S.A.; Sastry, S.S. Characterization and computation of local Nash equilibria in continuous games. In Proceedings of the 2013 Annual Allerton Conference on Communication, Control, and Computing, Monticello, IL, USA, 2–4 October 2013; pp. 917–924.

- Lai, C.; Chen, Y.; Wang, T.; Liu, J.; Wang, Q.; Du, Y.; Feng, Y. A machine learning approach for magnetic resonance image-based mouse brain modeling and fast computation in controlled cortical impact. Med. Biol. Eng. Comput. 2020, 58, 2835–2844.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 1125–1134.

- Xu, Z.; Wei, Y.; Meng, A.; Lu, N.; Huang, H.; Ying, C.; Huang, L. Towards End-to-End License Plate Detection and Recognition: A Large Dataset and Baseline. In Proceedings of the 2018 European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 261–277.

- Keys, R.G. Cubic convolution interpolation for digital image processing. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 1153–1160.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the 2014 European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 184–199.

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

- Kwon, H.; Yoon, H.; Park, K.W. Multi-targeted backdoor: Indentifying backdoor attack for multiple deep neural networks. IEICE Trans. Inf. Syst. 2020, 103, 883–887.

| Dataset | Evaluation Metric | Bicubic | SRCNN | ESPCN | SRGAN | Our Algorithm |
|---|---|---|---|---|---|---|
| CCPD | PSNR | 22.45 | 24.75 | 28.70 | 26.37 | 26.08 |
| CCPD | SSIM | 0.71 | 0.8 | 0.76 | 0.79 | 0.77 |
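PSNR in these comparisons is conventionally computed from the mean squared error (MSE) between the ground-truth high-resolution image and the reconstruction: PSNR = 10 · log10(MAX² / MSE), in dB, with MAX = 255 for 8-bit images. A minimal NumPy sketch, assuming 8-bit inputs of identical shape; the colour space behind the reported values (RGB or luminance only) is an assumption here, and `psnr` is a hypothetical helper name.

```python
import numpy as np


def psnr(reference: np.ndarray, reconstructed: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    ref = reference.astype(np.float64)
    rec = reconstructed.astype(np.float64)
    mse = np.mean((ref - rec) ** 2)  # mean squared error over all pixels and channels
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


if __name__ == "__main__":
    hr = np.full((32, 96, 3), 100, dtype=np.uint8)  # hypothetical ground-truth plate image
    sr = hr + 1                                     # every pixel off by one grey level
    print(round(psnr(hr, sr), 2))  # 48.13
```

For routine evaluation, scikit-image provides the same metric as `skimage.metrics.peak_signal_noise_ratio`.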

| Figure | Bicubic PSNR | Bicubic SSIM | SRCNN PSNR | SRCNN SSIM | ESPCN PSNR | ESPCN SSIM | SRGAN PSNR | SRGAN SSIM | Our Algorithm PSNR | Our Algorithm SSIM |
|---|---|---|---|---|---|---|---|---|---|---|
| 6 | 23.72 | 0.75 | 20.06 | 0.82 | 27.06 | 0.82 | 25.92 | 0.87 | 26.12 | 0.67 |
| 7 | 25.53 | 0.84 | 24.16 | 0.65 | 25.73 | 0.87 | 23.09 | 0.88 | 24.67 | 0.78 |
| 8 | 24.25 | 0.85 | 22.02 | 0.61 | 28.75 | 0.85 | 28.55 | 0.87 | 26.89 | 0.87 |
| 9 | 22.57 | 0.79 | 21.58 | 0.75 | 28.16 | 0.69 | 26.55 | 0.89 | 27.08 | 0.64 |
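SSIM compares luminance, contrast and structure between two images: SSIM(x, y) = ((2μₓμᵧ + C₁)(2σₓᵧ + C₂)) / ((μₓ² + μᵧ² + C₁)(σₓ² + σᵧ² + C₂)), with C₁ = (0.01 · MAX)² and C₂ = (0.03 · MAX)². The standard definition of Wang et al. evaluates this inside local (typically 11×11 Gaussian) windows and averages the results; the sketch below is a deliberate simplification that evaluates it once over the whole image, so it will not reproduce the tabulated values. `global_ssim` is a hypothetical name.

```python
import numpy as np


def global_ssim(x: np.ndarray, y: np.ndarray, max_value: float = 255.0) -> float:
    """Single-window SSIM over the whole image (simplified; no sliding Gaussian window)."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (0.01 * max_value) ** 2  # stabilises the luminance term
    c2 = (0.03 * max_value) ** 2  # stabilises the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


if __name__ == "__main__":
    hr = np.arange(64, dtype=np.float64).reshape(8, 8) * 3  # hypothetical ground truth
    print(global_ssim(hr, hr))        # identical images score 1
    print(global_ssim(hr, 255 - hr))  # inverted structure scores far lower
```

The windowed version used in practice is available as `skimage.metrics.structural_similarity`.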

| Algorithm | Bicubic | SRCNN | ESPCN | SRGAN | Our Algorithm |
|---|---|---|---|---|---|
| Time | 0.023 | 0.063 | 0.068 | 0.089 | 0.060 |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, M.; Liu, L.; Wang, F.; Li, J.; Pan, J. License Plate Image Reconstruction Based on Generative Adversarial Networks. Remote Sens. 2021, 13, 3018. https://doi.org/10.3390/rs13153018